IE 585 Competitive Network I: Hamming Net & Self-Organizing Map


Competitive Nets
- Unsupervised: MAXNET, Hamming Net, Mexican Hat Net, Self-Organizing Map (SOM), Adaptive Resonance Theory (ART)
- Supervised: Learning Vector Quantization (LVQ), Counterpropagation

Clustering Net
- The number of input neurons equals the dimension of the input vectors.
- Each output neuron represents a cluster, so the number of output neurons limits the number of clusters that can be formed.
- The weight vector of an output neuron serves as the representative of the input patterns that the net has placed in that cluster.
- The weight vector of the winning neuron is adjusted.

Winner-Take-All
- The squared Euclidean distance is used to determine which weight vector is closest to a pattern vector.
- Only the neuron with the smallest distance from the input vector is allowed to update its weights.
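The winner selection described above can be sketched in a few lines of plain Python. This is a minimal illustration, assuming weight vectors stored as lists. The helper names `squared_distance` and `winner` and the example weights are hypothetical and do not come from the slides.

```python
def squared_distance(x, w):
    """Squared Euclidean distance between input x and weight vector w."""
    return sum((xi - wi) ** 2 for xi, wi in zip(x, w))


def winner(x, weights):
    """Index of the output neuron whose weight vector is closest to x.

    Ties go to the lowest index (an arbitrary choice for this sketch).
    """
    distances = [squared_distance(x, w) for w in weights]
    return min(range(len(weights)), key=distances.__getitem__)


# Illustrative weights for two output neurons (hypothetical values).
weights = [[0.2, 0.6, 0.5, 0.9], [0.8, 0.4, 0.7, 0.3]]
print(winner([1, 1, 0, 0], weights))  # 1
```

Dropping the square root does not change which neuron wins, because the square root is monotonic. The squared distance is therefore sufficient for the comparison.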

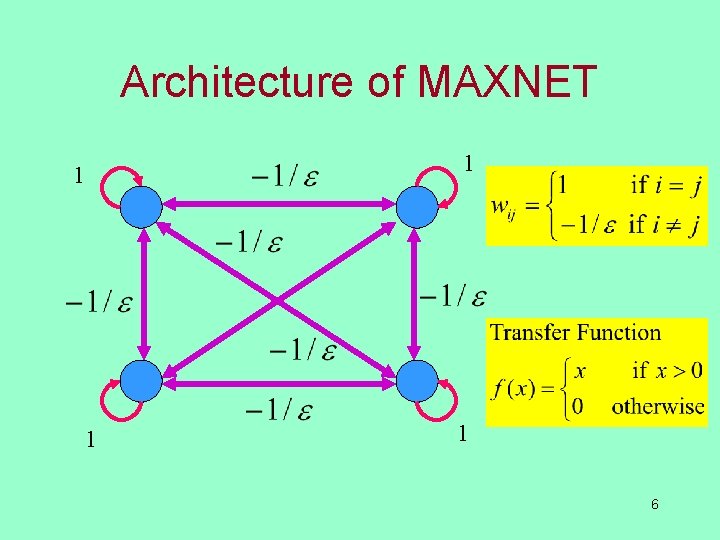

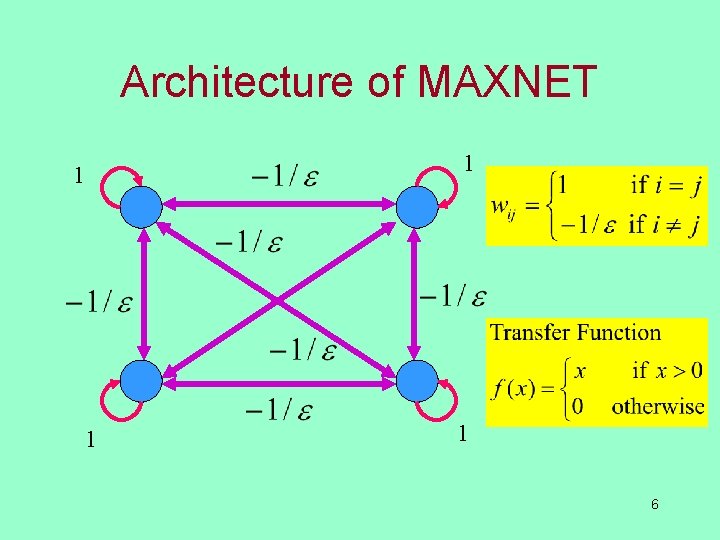

MAXNET
- Developed by Lippmann, 1987
- Can be used as a subnet to pick the node whose input is the largest
- Completely interconnected (including self-connections)
- Symmetric weights
- No training: the weights are fixed

Architecture of MAXNET (figure: self-connections with weight 1)

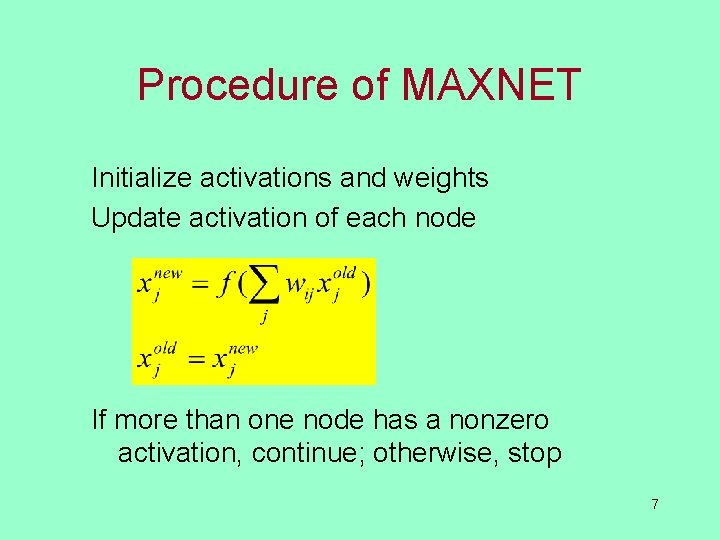

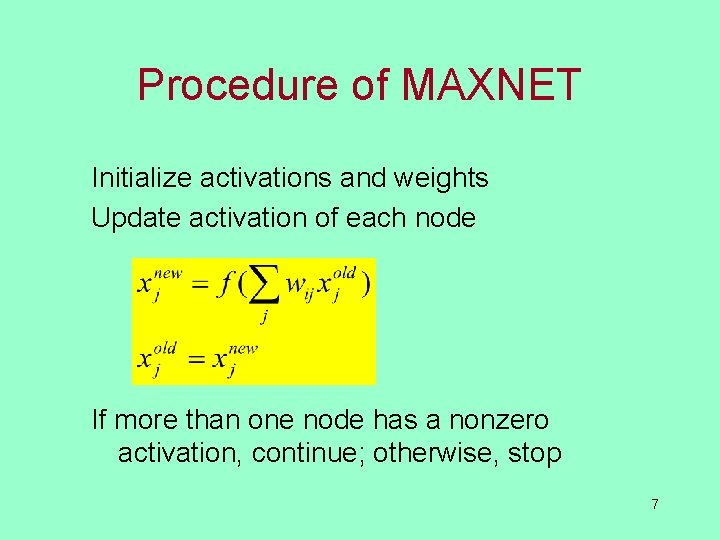

Procedure of MAXNET
1. Initialize the activations and weights.
2. Update the activation of each node.
3. If more than one node has a nonzero activation, continue (return to step 2); otherwise, stop.
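The update equation for step 2 is not in the text, so this sketch assumes the standard MAXNET formulation. Each self-connection has weight +1. Every other connection has weight −ε, with 0 < ε < 1/m for m nodes. The activation function is f(x) = max(x, 0). Under these assumptions the update is a_j(new) = f(a_j(old) − ε Σ_{k≠j} a_k(old)). The function name `maxnet` and the `max_iter` guard are hypothetical additions.

```python
def maxnet(activations, epsilon, max_iter=1000):
    """Iterate MAXNET until at most one node keeps a positive activation.

    Fixed weights: +1 on each self-connection, -epsilon between distinct nodes.
    """
    m = len(activations)
    if not 0 < epsilon < 1 / m:
        raise ValueError("expected 0 < epsilon < 1/m")
    a = [float(v) for v in activations]
    for _ in range(max_iter):
        if sum(1 for v in a if v > 0) <= 1:
            break  # at most one nonzero activation: stop
        total = sum(a)
        # a_j(new) = f(a_j(old) - epsilon * sum_{k != j} a_k(old)), f(x) = max(x, 0)
        a = [max(0.0, v - epsilon * (total - v)) for v in a]
    return a


result = maxnet([0.2, 0.4, 0.6, 0.8], epsilon=0.2)
print(result.index(max(result)))  # 3
```

The `max_iter` guard is needed because exactly tied activations shrink together and never separate, so without it the loop would not terminate on such inputs.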

Hamming Net
- Developed by Lippmann, 1987
- A maximum likelihood classifier, used to determine which of several exemplar vectors is most similar to an input vector
- The exemplar vectors determine the weights of the net
- The measure of similarity between the input vector and a stored exemplar vector is $n - \mathrm{HD}$, where $n$ is the number of components and HD is the Hamming distance between the vectors (equivalently, the number of components in which the two vectors agree)
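This derivation assumes bipolar vectors (components ±1), which is how the Hamming net is usually presented. Under that assumption, the similarity $n - \mathrm{HD}$ can be computed with an ordinary dot product, which explains why the exemplars can serve directly as weights. Here $\mathbf{e}(j)$ denotes the $j$-th exemplar and $e_i(j)$ its $i$-th component. Agreeing components contribute $+1$ to the dot product and disagreeing components contribute $-1$:

$$
\begin{aligned}
\mathbf{x}\cdot\mathbf{e}(j) &= (n - \mathrm{HD}) - \mathrm{HD}\\
n - \mathrm{HD} &= \tfrac{1}{2}\,\mathbf{x}\cdot\mathbf{e}(j) + \tfrac{n}{2}
= b_j + \sum_{i=1}^{n} x_i\, w_{ij},
\qquad w_{ij} = \tfrac{e_i(j)}{2},\quad b_j = \tfrac{n}{2}
\end{aligned}
$$

A unit with weights $w_{ij} = e_i(j)/2$ and bias $b_j = n/2$ therefore outputs exactly $n - \mathrm{HD}$, the number of components on which $\mathbf{x}$ and $\mathbf{e}(j)$ agree.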

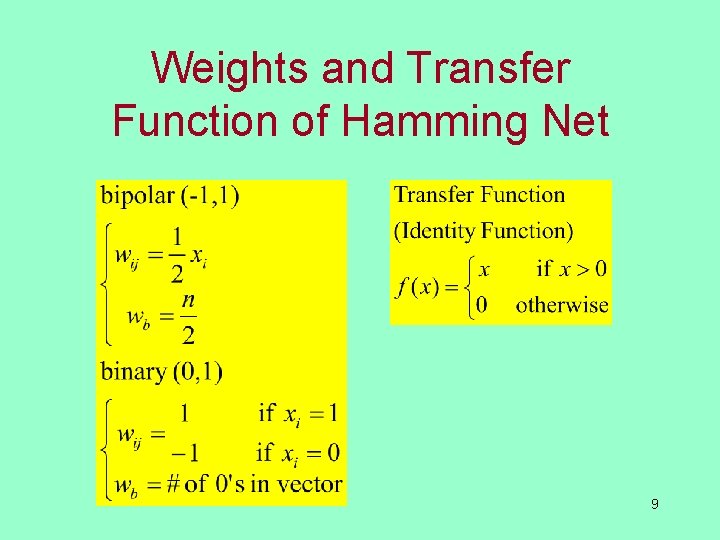

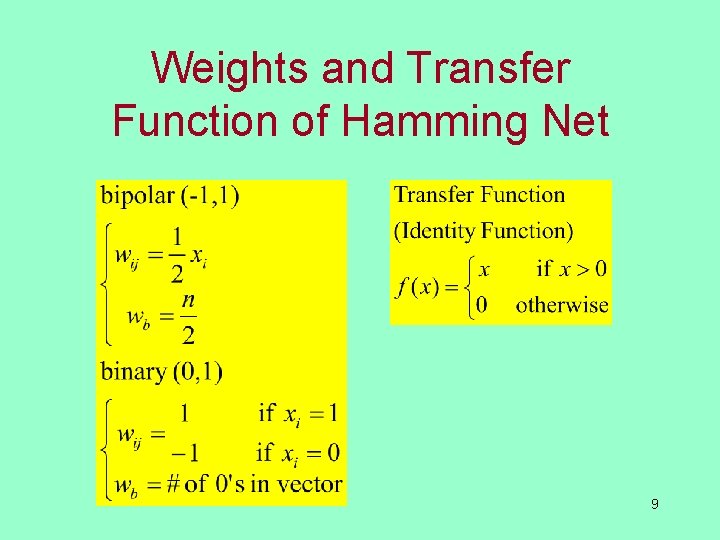

Weights and Transfer Function of Hamming Net

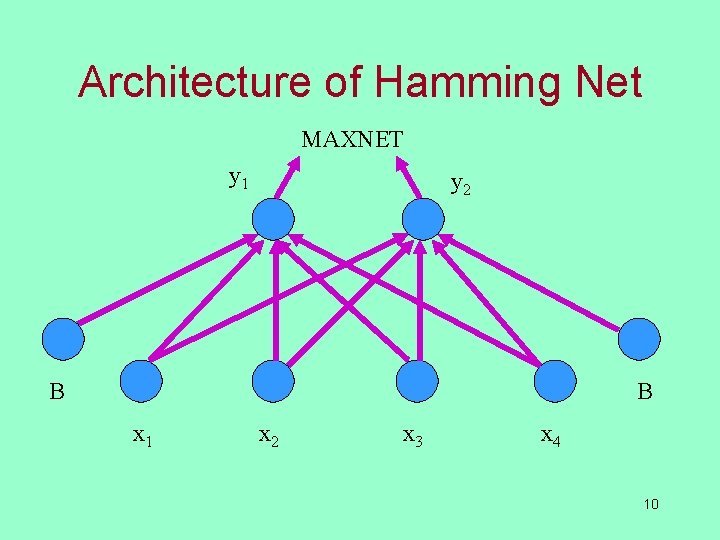

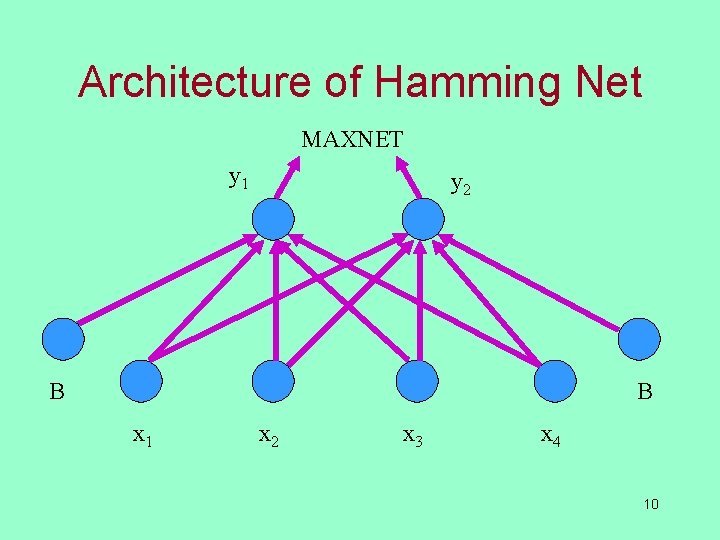

Architecture of Hamming Net (figure: input units $x_1$ to $x_4$, units $y_1$ and $y_2$ with bias $B$, followed by a MAXNET)

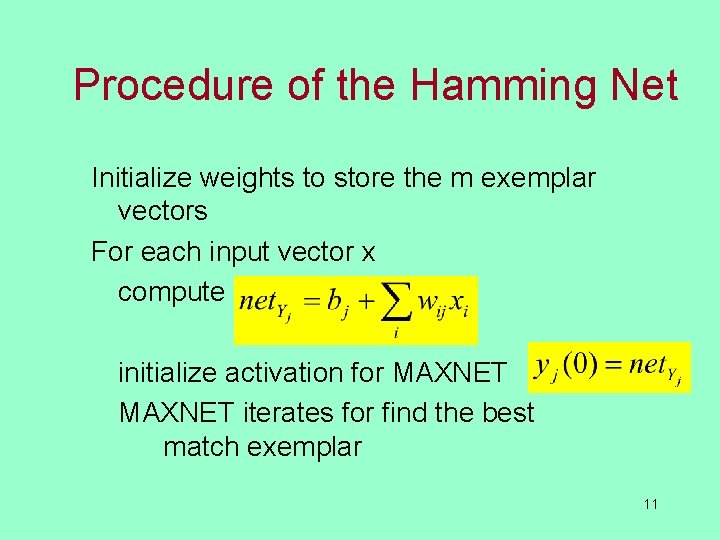

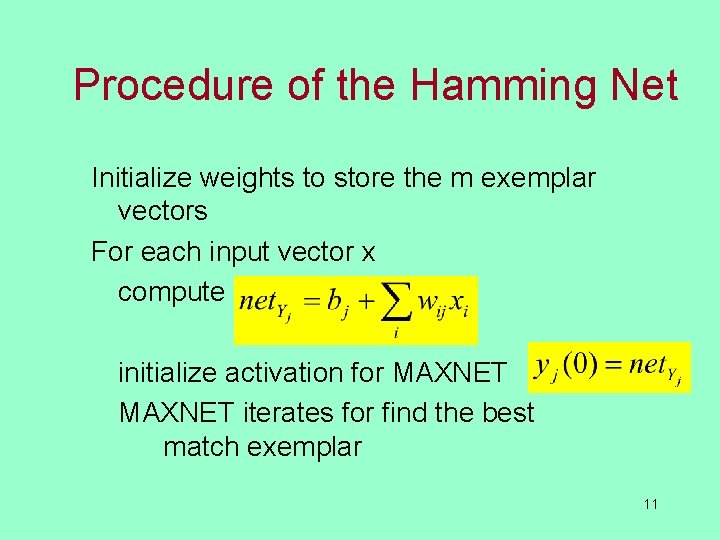

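Procedure of the Hamming Net
1. Initialize the weights to store the $m$ exemplar vectors.
2. For each input vector $\mathbf{x}$:
   - compute the net input $y_{\text{in},j}$ to each unit $j = 1, \ldots, m$;
   - initialize the MAXNET activations with these net inputs, $y_j(0) = y_{\text{in},j}$;
   - let MAXNET iterate to find the best-matching exemplar.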
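The procedure above can be sketched end to end under a few assumptions. The inputs and exemplars are assumed bipolar. The weights are taken as $e_i(j)/2$ with bias $n/2$. MAXNET is assumed to use a self-weight of +1 and mutual weight −ε, and ε defaults to a hypothetical 1/(2m), which lies inside (0, 1/m). The function `hamming_net` and the example vectors are illustrative only and do not come from the slides.

```python
def hamming_net(x, exemplars, epsilon=None, max_iter=1000):
    """Return (net inputs y_in, indices of surviving MAXNET nodes) for bipolar x."""
    n, m = len(x), len(exemplars)
    # Weights w_ij = e_i(j) / 2 and bias b_j = n / 2, so y_in_j = n - HD(x, e(j)).
    weights = [[ei / 2 for ei in e] for e in exemplars]
    bias = n / 2
    y_in = [bias + sum(xi * wij for xi, wij in zip(x, w)) for w in weights]

    # MAXNET layer: fixed weights +1 (self) and -epsilon (all other nodes).
    if epsilon is None:
        epsilon = 1 / (2 * m)  # hypothetical default inside 0 < epsilon < 1/m
    a = list(y_in)  # y_j(0) = y_in_j
    for _ in range(max_iter):
        if sum(1 for v in a if v > 0) <= 1:
            break
        total = sum(a)
        a = [max(0.0, v - epsilon * (total - v)) for v in a]
    winners = [j for j, v in enumerate(a) if v > 0]
    return y_in, winners


exemplars = [[1, -1, -1, -1], [-1, -1, -1, 1]]
y_in, winners = hamming_net([1, 1, -1, -1], exemplars)
print(y_in, winners)  # [3.0, 1.0] [0]
```

The input differs from the first exemplar in one position and from the second in three, so the net inputs are 4 − 1 = 3 and 4 − 3 = 1, and MAXNET keeps only the first node. If two exemplars tie, more than one index may remain after `max_iter` steps.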

Hamming Net Example

Mexican Hat Net
- Developed by Kohonen, 1989
- Positive weights to “cooperative” neighboring neurons (the nearest ones)
- Negative weights to “competitive” neighboring neurons (those farther out)
- No connections to far-away neurons
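The slide gives only the sign pattern of the connections. This sketch makes several assumptions about the rest. The neurons lie in a 1-D row. Neighbors within radius `r1` get weight `c1 > 0`, neighbors out to radius `r2` get weight `c2 < 0`, and there is no connection beyond `r2`. The activation is a ramp clipped to `[0, x_max]`. All parameter values and the function name `mexican_hat_step` are illustrative choices, not values from the slides.

```python
def mexican_hat_step(x, c1=0.6, c2=-0.4, r1=1, r2=2, x_max=2.0):
    """One synchronous update of a 1-D Mexican hat net (hypothetical parameters)."""
    n = len(x)
    new = []
    for i in range(n):
        total = 0.0
        # Only neurons within radius r2 are connected; farther ones contribute nothing.
        for k in range(-r2, r2 + 1):
            j = i + k
            if 0 <= j < n:
                weight = c1 if abs(k) <= r1 else c2  # cooperative vs competitive
                total += weight * x[j]
        new.append(min(x_max, max(0.0, total)))  # ramp activation
    return new


signal = [0.0, 0.5, 0.8, 1.0, 0.8, 0.5, 0.0]
print([round(v, 2) for v in mexican_hat_step(signal)])
# [0.0, 0.38, 1.06, 1.16, 1.06, 0.38, 0.0]
```

The edges of the bump shrink and the center grows, which is the contrast-enhancing effect that the positive-near, negative-farther weight pattern produces.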

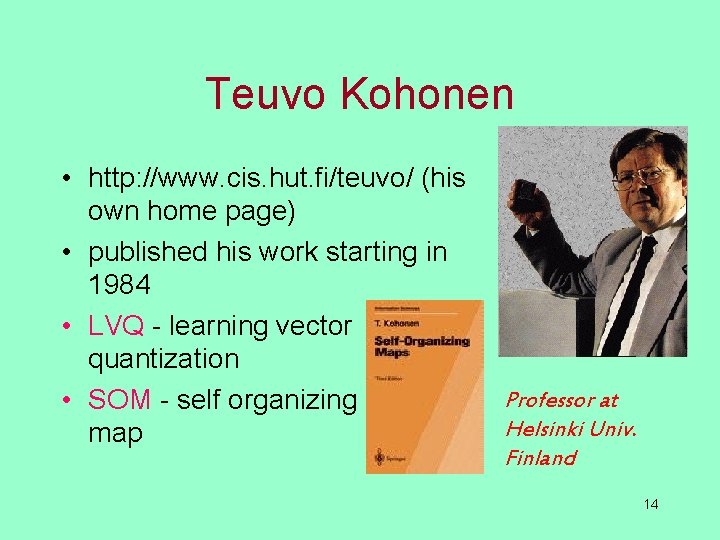

Teuvo Kohonen
- http://www.cis.hut.fi/teuvo/ (his own home page)
- Published his work starting in 1984
- LVQ: learning vector quantization
- SOM: self-organizing map
- Professor at Helsinki University of Technology, Finland

SOM
- Also called Topology-Preserving Maps or Self-Organizing Feature Maps (SOFM)
- “Winner-take-all” learning (also called competitive learning):
  - the winner is the neuron with the minimum Euclidean distance
  - learning takes place only for the winner
  - the final weights lie at the centroids of the clusters
- Continuous inputs; continuous or 0/1 (winner-take-all) outputs
- No bias; fully connected
- Used for data mining and exploration
- A supervised version exists

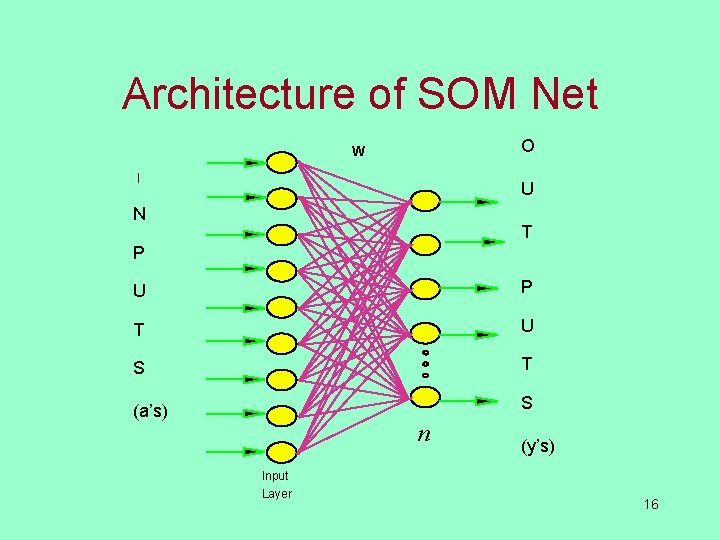

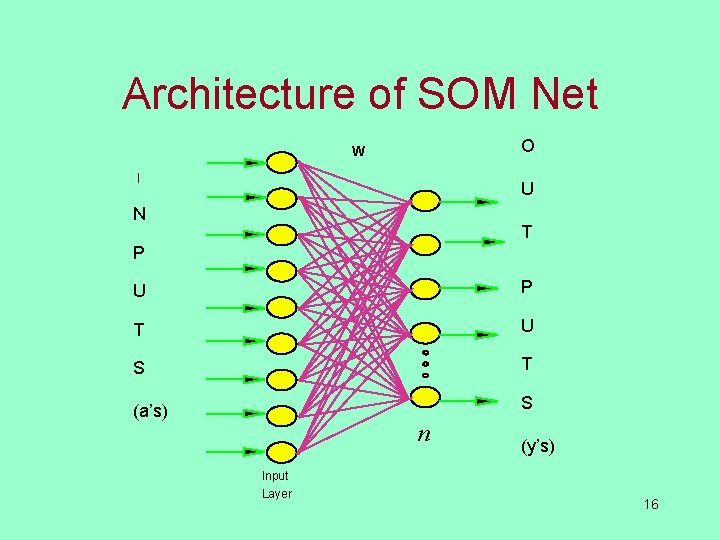

Architecture of SOM Net (figure: $n$ inputs (a’s) fully connected through weights $W$ to the outputs (y’s))

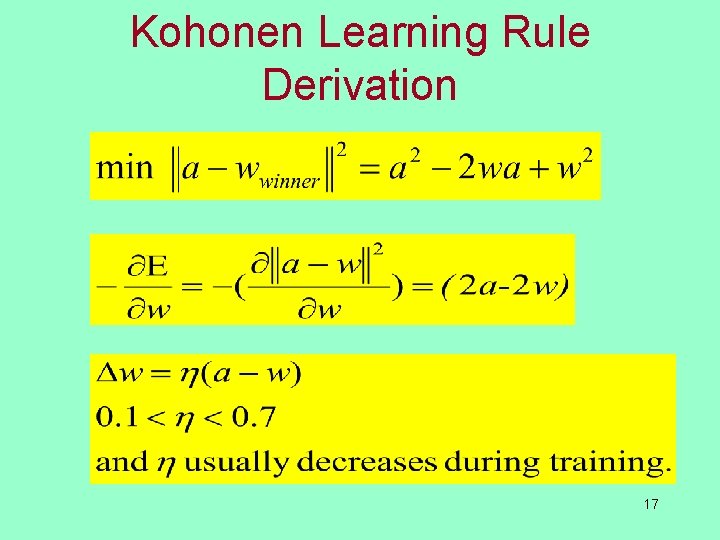

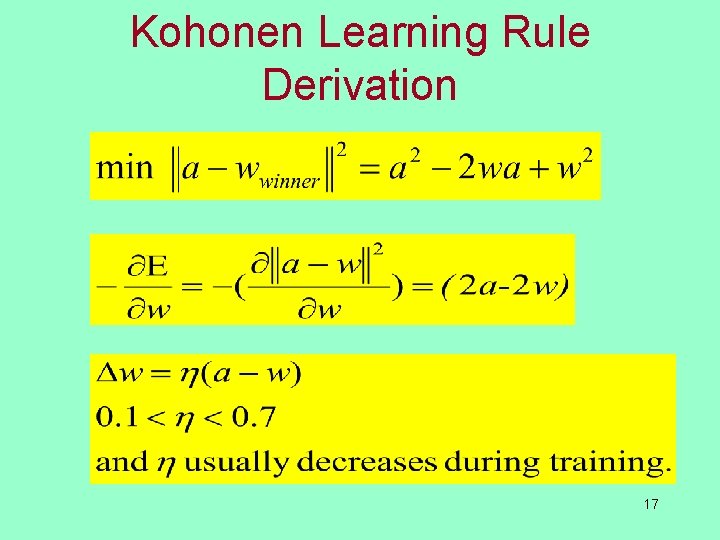

Kohonen Learning Rule Derivation

Kohonen Learning
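One common way to motivate the Kohonen rule is gradient descent on the squared distance between the input $\mathbf{a}$ and the winner's weight vector $\mathbf{w}_j$, with learning rate $\alpha$. The slides' own derivation is in the images and may proceed differently; this is a sketch of the idea:

$$
\begin{aligned}
E_j &= \tfrac{1}{2}\,\lVert \mathbf{a} - \mathbf{w}_j \rVert^2 = \tfrac{1}{2}\sum_{i} (a_i - w_{ij})^2\\
\frac{\partial E_j}{\partial w_{ij}} &= -(a_i - w_{ij})\\
\Delta w_{ij} &= -\alpha\,\frac{\partial E_j}{\partial w_{ij}} = \alpha\,(a_i - w_{ij})\\
\mathbf{w}_j^{\text{new}} &= \mathbf{w}_j^{\text{old}} + \alpha\,(\mathbf{a} - \mathbf{w}_j^{\text{old}}) \qquad \text{(winner } j \text{ only)}
\end{aligned}
$$

Each update moves the winner a fraction $\alpha$ of the way toward the input. Over many presentations, the weight vector therefore settles near the centroid of the inputs it wins.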

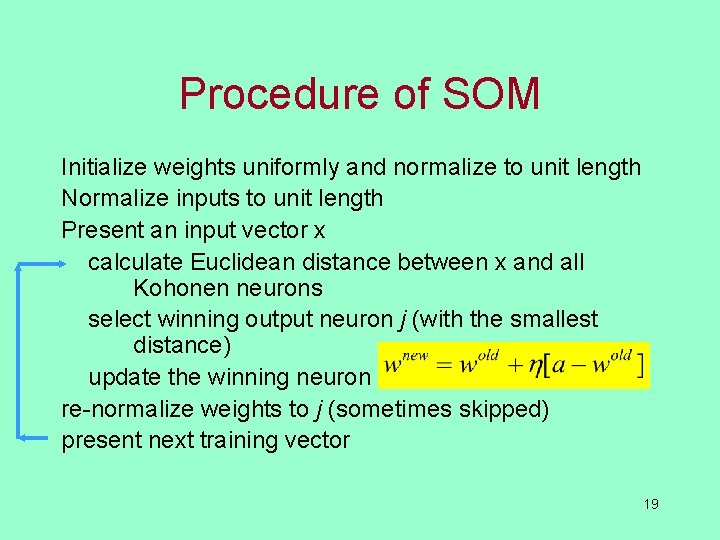

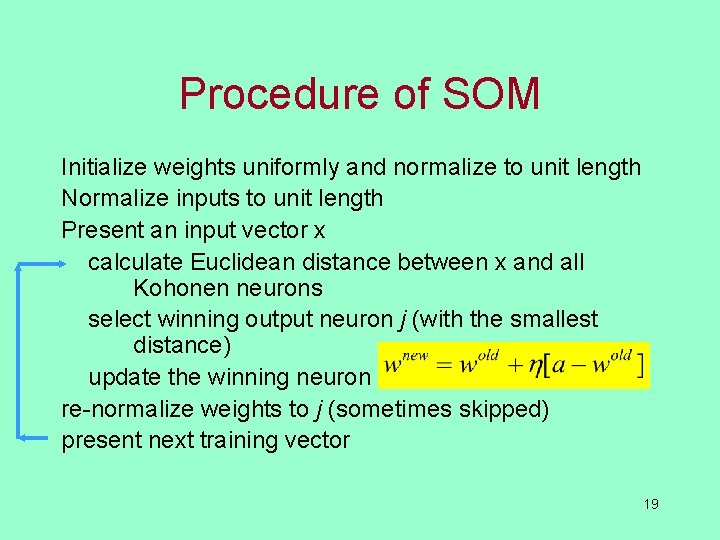

Procedure of SOM
1. Initialize the weights uniformly and normalize them to unit length.
2. Normalize the inputs to unit length.
3. Present an input vector $\mathbf{x}$.
4. Calculate the Euclidean distance between $\mathbf{x}$ and all Kohonen neurons.
5. Select the winning output neuron $j$ (the one with the smallest distance).
6. Update the winning neuron.
7. Re-normalize the weights of neuron $j$ (sometimes skipped).
8. Present the next training vector.
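A plain-Python sketch of the procedure above follows. It assumes several things the slides leave open. "Initialize uniformly" is read as uniform random components in [−1, 1] before normalization. The update is the Kohonen rule $\mathbf{w} \leftarrow \mathbf{w} + \alpha(\mathbf{x} - \mathbf{w})$. The learning rate shrinks by a hypothetical factor `alpha_decay` after each pass. The names `normalize` and `train_som` and all default values are illustrative, not from the source.

```python
import math
import random


def normalize(v):
    """Scale v to unit length (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(vi * vi for vi in v))
    return [vi / norm for vi in v] if norm > 0 else list(v)


def train_som(inputs, n_outputs, alpha=0.5, alpha_decay=0.95,
              epochs=100, seed=0, renormalize=True):
    """Winner-take-all SOM training (no neighborhood), following the steps above."""
    rng = random.Random(seed)
    dim = len(inputs[0])
    # Step 1: random uniform weights, normalized to unit length.
    weights = [normalize([rng.uniform(-1.0, 1.0) for _ in range(dim)])
               for _ in range(n_outputs)]
    # Step 2: normalize the inputs.
    data = [normalize(x) for x in inputs]
    for _ in range(epochs):
        for x in data:  # Steps 3 and 8: present each training vector in turn
            # Steps 4-5: squared Euclidean distances and the winning neuron.
            dists = [sum((xi - wi) ** 2 for xi, wi in zip(x, w)) for w in weights]
            j = min(range(n_outputs), key=dists.__getitem__)
            # Step 6: move only the winner toward x.
            weights[j] = [wi + alpha * (xi - wi) for xi, wi in zip(x, weights[j])]
            # Step 7: optional re-normalization of the winner.
            if renormalize:
                weights[j] = normalize(weights[j])
        alpha *= alpha_decay  # hypothetical learning-rate schedule
    return weights


weights = train_som([[1, 1, 0, 0], [0, 0, 0, 1], [1, 0, 0, 0], [0, 0, 1, 1]], n_outputs=2)
```

With purely random initialization, one neuron can end up winning every input while another never wins. The "conscience" mechanism later in these slides is one remedy for this.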

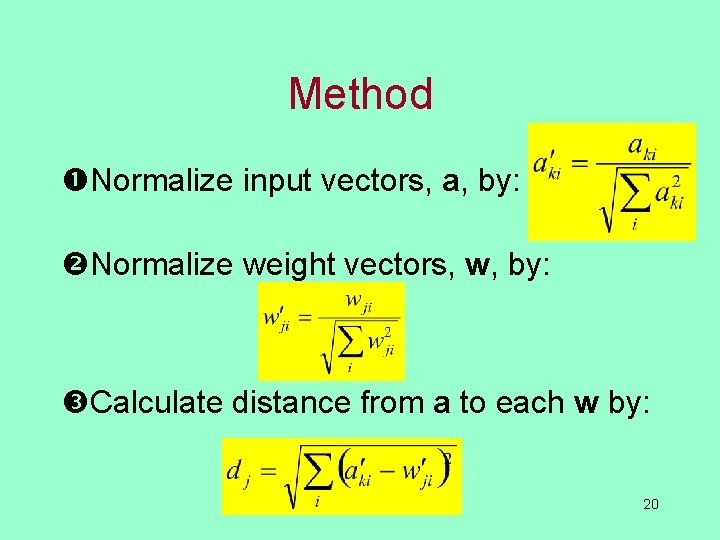

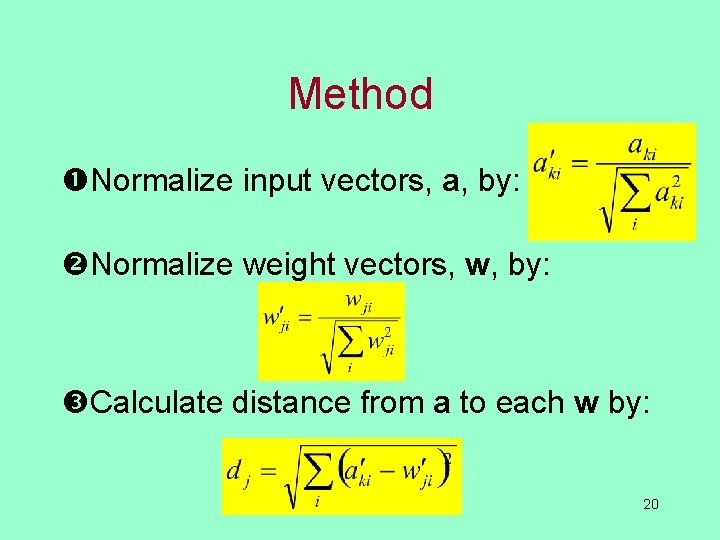

Method Normalize input vectors, a, by: Normalize weight vectors, w, by: Calculate distance from a to each w by: 20

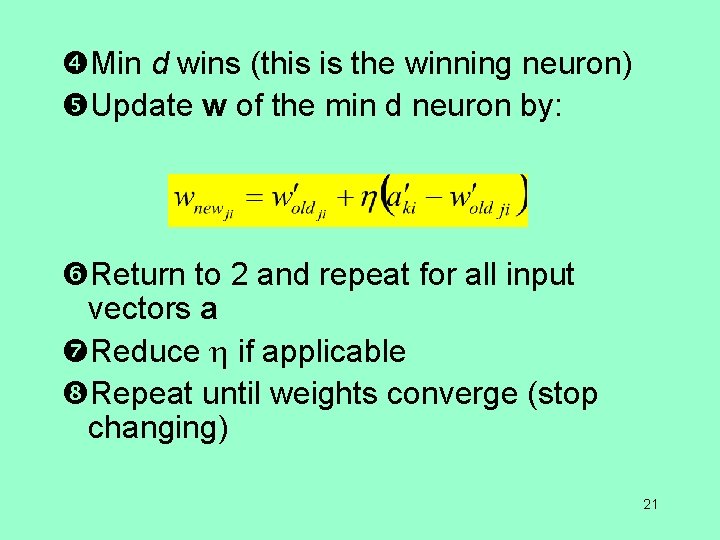

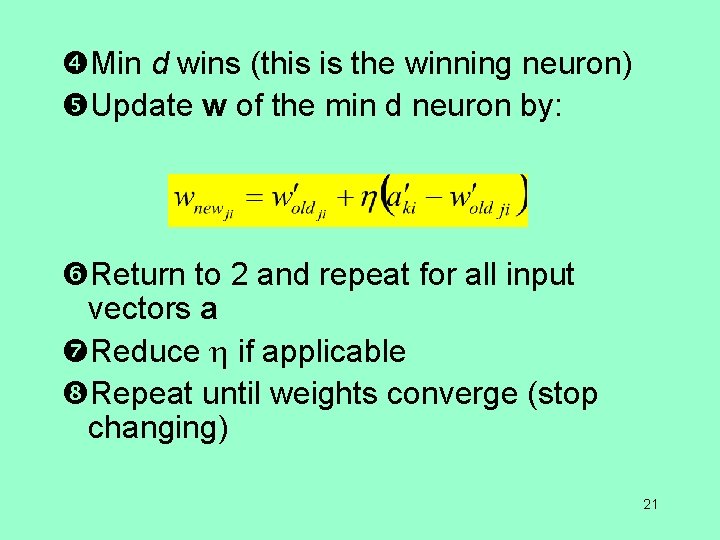

Min d wins (this is the winning neuron) Update w of the min d neuron by: Return to 2 and repeat for all input vectors a Reduce if applicable Repeat until weights converge (stop changing) 21
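The normalization steps matter because, once both vectors have unit length, the distance depends only on their dot product. In the expansion below, $\hat{\mathbf{a}}$ and $\hat{\mathbf{w}}_j$ are the normalized input and weight vectors and $d_j$ is the Euclidean distance between them:

$$
\begin{aligned}
\hat{\mathbf{a}} &= \frac{\mathbf{a}}{\lVert \mathbf{a}\rVert}, \qquad
\hat{\mathbf{w}}_j = \frac{\mathbf{w}_j}{\lVert \mathbf{w}_j\rVert}\\
d_j^2 = \lVert \hat{\mathbf{a}} - \hat{\mathbf{w}}_j \rVert^2
&= \lVert \hat{\mathbf{a}}\rVert^2 - 2\,\hat{\mathbf{a}}\cdot\hat{\mathbf{w}}_j + \lVert \hat{\mathbf{w}}_j\rVert^2
= 2 - 2\,\hat{\mathbf{a}}\cdot\hat{\mathbf{w}}_j
\end{aligned}
$$

The neuron with the minimum distance is therefore the one with the largest dot product $\hat{\mathbf{a}}\cdot\hat{\mathbf{w}}_j$. This is why the winner can also be viewed as the neuron with the largest net input.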

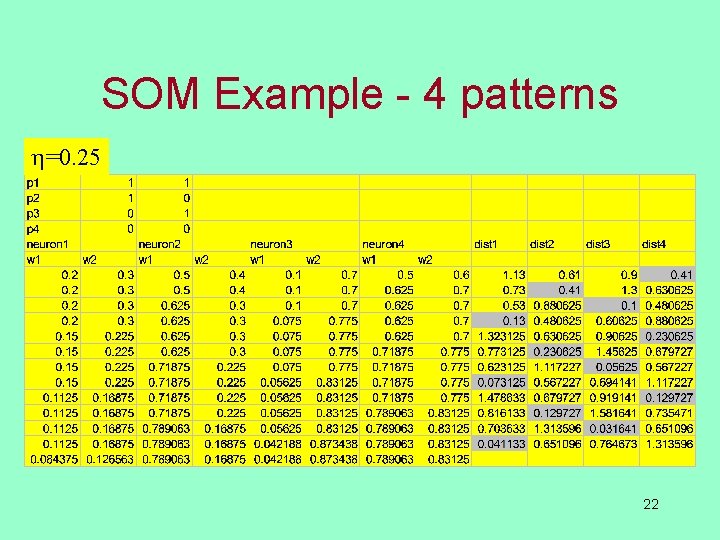

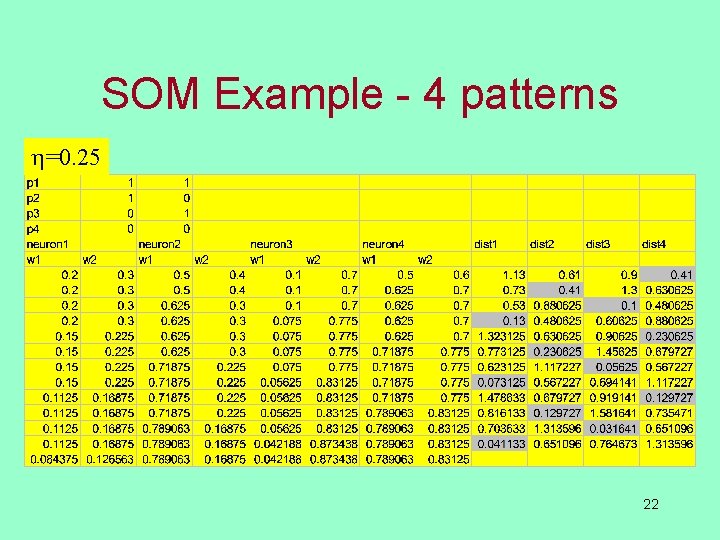

SOM Example: 4 patterns, $\alpha = 0.25$

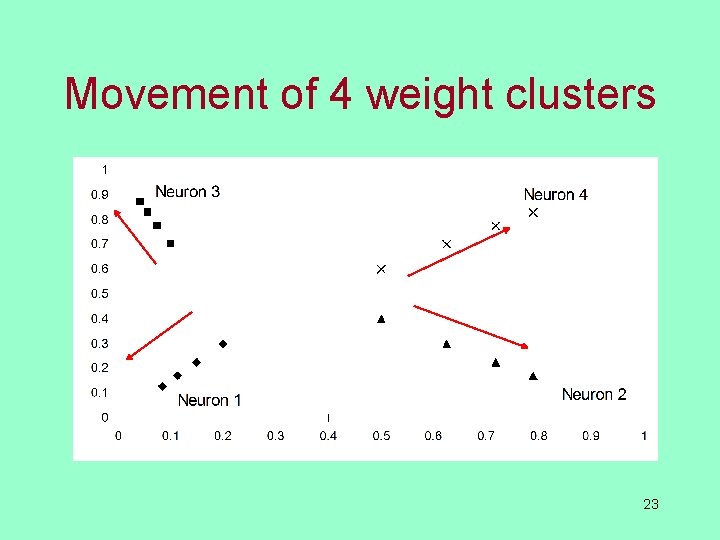

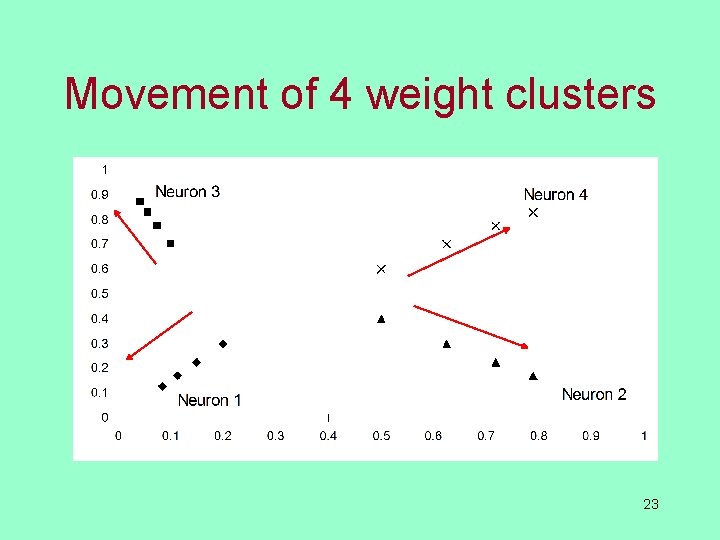

Movement of 4 weight clusters

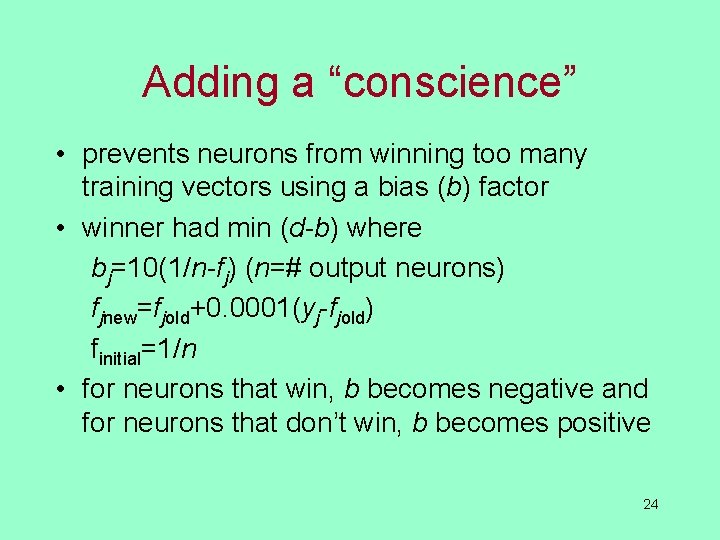

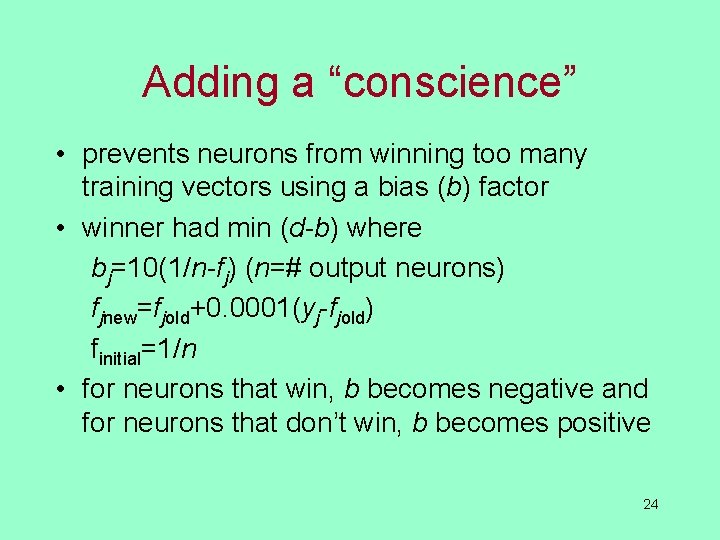

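Adding a “conscience”
- Prevents a neuron from winning too many training vectors by using a bias factor $b$.
- The winner is the neuron with the minimum $d_j - b_j$, where:
  - $b_j = 10\,(1/n - f_j)$, with $n$ the number of output neurons;
  - $f_j^{\text{new}} = f_j^{\text{old}} + 0.0001\,(y_j - f_j^{\text{old}})$, with $y_j = 1$ for a winning neuron and $0$ otherwise;
  - $f_j^{\text{initial}} = 1/n$.
- For neurons that keep winning, $f_j$ rises above $1/n$ and $b_j$ becomes negative, which makes them harder to pick. For neurons that do not win, $b_j$ becomes positive, which makes them easier to pick.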
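The conscience mechanism can be sketched directly from the formulas above, using the slide's constants 10 and 0.0001. Two details are assumptions. First, $d_j$ is taken to be the squared Euclidean distance. Second, $y_j$ is set to 1 for the neuron that wins this biased competition, because the slide does not say which competition defines $y_j$. The function name `conscience_winner` is hypothetical.

```python
def conscience_winner(x, weights, freqs, c=10.0, beta=0.0001):
    """Pick the winner by min(d_j - b_j) and return (winner, updated f_j).

    Assumes squared Euclidean d_j and y_j = 1 for the biased winner.
    """
    n = len(weights)
    dists = [sum((xi - wi) ** 2 for xi, wi in zip(x, w)) for w in weights]
    biases = [c * (1.0 / n - f) for f in freqs]  # b_j = 10 (1/n - f_j)
    j = min(range(n), key=lambda k: dists[k] - biases[k])
    # f_j(new) = f_j(old) + 0.0001 (y_j - f_j(old))
    new_freqs = [f + beta * ((1.0 if k == j else 0.0) - f)
                 for k, f in enumerate(freqs)]
    return j, new_freqs


# Neuron 0 is closer, but it has been winning far more than its 1/n share.
j, freqs = conscience_winner([0.1, 0.1], [[0.0, 0.0], [1.0, 1.0]], [0.9, 0.1])
print(j)  # 1
```

Without the bias, neuron 0 would win (0.02 vs. 1.62). The conscience term of −4 versus +4 hands the input to the neglected neuron instead.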

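Supervised Version
- Same as above, except:
  - if the winning neuron is “correct”, use the same weight update: $\mathbf{w}^{\text{new}} = \mathbf{w}^{\text{old}} + \alpha\,(\mathbf{a} - \mathbf{w}^{\text{old}})$;
  - if the winning neuron is “incorrect”, move it away from the input: $\mathbf{w}^{\text{new}} = \mathbf{w}^{\text{old}} - \alpha\,(\mathbf{a} - \mathbf{w}^{\text{old}})$.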
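The two supervised update cases reduce to a sign flip on the learning rate, as in this minimal sketch. It assumes each output neuron carries a class label and that a winner counts as "correct" when its label equals the input's target class, which matches the usual LVQ-style reading of this slide. The function name `supervised_update` and its parameters are hypothetical.

```python
def supervised_update(x, weights, labels, target, alpha):
    """One supervised competitive step; returns the index of the winning neuron."""
    dists = [sum((xi - wi) ** 2 for xi, wi in zip(x, w)) for w in weights]
    j = min(range(len(weights)), key=dists.__getitem__)
    # +alpha pulls a correct winner toward x; -alpha pushes an incorrect one away.
    step = alpha if labels[j] == target else -alpha
    weights[j] = [wi + step * (xi - wi) for xi, wi in zip(x, weights[j])]
    return j


weights = [[0.0, 0.0], [1.0, 1.0]]
j = supervised_update([0.2, 0.0], weights, labels=["A", "B"], target="A", alpha=0.5)
print(j, weights[0])
```

Only the winner moves in either case. An incorrect winner is pushed away, so the input has a better chance of being won by a neuron of the correct class next time.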