IBM Research Cell Broadband Engine BE Processor Tutorial

IBM Research Cell Broadband Engine (BE) Processor Tutorial Michael Perrone Manager, Cell Solutions Dept. © 2006 IBM Corporation

Outline
§ Cell Overview
§ Cell Architecture
§ Cell Programming

Cell BE Processor Overview
§ IBM, SCEI/Sony, Toshiba Alliance formed in 2000
§ Design Center opened in March 2001
§ Based in Austin, Texas
§ ~$400 M investment
§ February 7, 2005: First technical disclosures
§ Designed for Sony PlayStation 3
  – Commodity processor
§ Cell is an extension to the IBM Power family of processors
§ Sets new performance standards for computation & bandwidth
§ High affinity to HPC workloads
  – Seismic processing, FFT, BLAS, etc.

Peak GFLOPs (Cell SPEs only)
[Bar chart comparing Freescale, PPC 970, AMD DC, Intel SC, and Cell; clock labels 1.5 GHz, 2.2 GHz, 3.6 GHz, 3.0 GHz]

Peak GFLOPS (PPE & SPEs)
1st Generation Cell (peak floating point operations per second)
§ Single Precision Floating Point (SP FP)
  – 230 SP GFLOPS per BE @ 3.2 GHz:
    • 25.6 GFLOPS per SPE, 8 SPEs per BE chip, 8 x 25.6 = 204.8 GFLOPS
    • 25.6 GFLOPS from the PU/VMX, 1 PU per BE chip
§ Double Precision Floating Point (DP FP)
  – 21.0 DP GFLOPS per BE @ 3.2 GHz:
    • 1.83 DP GFLOPS per SPE, 8 SPEs per BE chip, 8 x 1.83 = 14.6 GFLOPS
    • 6.4 DP GFLOPS per PU, 1 PU per BE chip

| Other architectures for reference (2005) | Peak SP FP | Peak DP FP | Net delta (Cell advantage) |
|---|---|---|---|
| Intel IA32 @ 4 GHz (SSE3) | 32 GFLOPS | 16 GFLOPS | (4 GHz): 7.2x SP & 1.3x DP |
| Intel IA64 @ 3 GHz | 12 GFLOPS | 12 GFLOPS | (3 GHz): 19x SP & 1.7x DP |
| AMD Opteron @ 3 GHz (SSE) | 24 GFLOPS | 12 GFLOPS | (3 GHz): 9.6x SP & 1.7x DP |
| Power5 @ 3 GHz | 12 GFLOPS | 12 GFLOPS | (3 GHz): 19x SP & 1.7x DP |
| Power4 @ 2.5 GHz | 10 GFLOPS | 10 GFLOPS | (3 GHz): 23x SP & 2.1x DP |
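The peak figures on this slide follow from simple arithmetic, sketched below. The per-SPE figure of 25.6 GFLOPS is reproduced under the assumption (not stated on the slide) of 4 single-precision lanes, each completing one multiply-add (2 flops) per cycle; the PPE and double-precision figures are taken from the slide as given. All names are illustrative.

```python
CLOCK_GHZ = 3.2
NUM_SPES = 8


def peak_gflops(clock_ghz, simd_lanes, flops_per_lane_per_cycle=2):
    """Peak GFLOPS of one SIMD unit: clock x lanes x flops per lane per cycle.

    The default of 2 flops per lane assumes one multiply-add per cycle.
    """
    return clock_ghz * simd_lanes * flops_per_lane_per_cycle


# Single precision: 4 x 32-bit lanes in a 128-bit register (assumption).
spe_sp = peak_gflops(CLOCK_GHZ, simd_lanes=4)
ppe_sp = 25.6                       # PU/VMX figure quoted on the slide
chip_sp = NUM_SPES * spe_sp + ppe_sp

# Double precision: per-unit figures quoted on the slide.
chip_dp = NUM_SPES * 1.83 + 6.4

# "Cell advantage" over a reference part is the ratio of peak figures.
advantage_vs_ia32_sp = chip_sp / 32.0
advantage_vs_ia32_dp = chip_dp / 16.0

print(f"SP peak per chip: {chip_sp:.1f} GFLOPS")
print(f"DP peak per chip: {chip_dp:.1f} GFLOPS")
print(f"vs IA32 @ 4 GHz: {advantage_vs_ia32_sp:.1f}x SP, {advantage_vs_ia32_dp:.1f}x DP")
```

The 8 SPEs contribute 204.8 GFLOPS and the PPE another 25.6, which is where the rounded "230 SP GFLOPS" comes from.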

Cell BE Architecture
§ Combines multiple high performance processors in one chip
§ 9 cores, 10 threads
§ A 64-bit Power Architecture™ core (PPE)
§ 8 Synergistic Processor Elements (SPEs) for data-intensive processing
§ Current implementation: roughly 10 times the performance of Pentium for computationally intensive tasks
§ Clock: 3.2 GHz (measured at >4 GHz in lab)

| | Cell | Pentium D |
|---|---|---|
| Peak I/O BW | 75 GB/s | ~6.4 GB/s |
| Peak SP Performance | ~230 GFLOPS | ~30 GFLOPS |
| Area | 221 mm² | 206 mm² |
| Total Transistors | 234 M | ~230 M |

Cell BE Processor Features
§ Heterogeneous multi-core system architecture
  – Power Processor Element for control tasks
  – Synergistic Processor Elements for data-intensive processing
§ Synergistic Processor Element (SPE) consists of
  – Synergistic Processor Unit (SPU)
  – Synergistic Memory Flow Control (SMF)
    • Data movement and synchronization
    • Interface to high-performance Element Interconnect Bus
[Block diagram: SPEs (SPU/SXU, LS, SMF), PPE (PPU/PXU, L1, L2; 64-bit Power Architecture with VMX), MIC to Dual XDR™, and BIC to FlexIO™, all attached to the EIB (up to 96 B/cycle); links labeled 16 B/cycle, 16 B/cycle (2x), and 32 B/cycle]

Power Processor Element
§ PPE handles operating system and control tasks
  – 64-bit Power Architecture™ with VMX
  – In-order, 2-way hardware simultaneous multi-threading (SMT)
  – Coherent Load/Store with 32 KB I & D L1 and 512 KB L2

Element Interconnect Bus
§ EIB data ring for internal communication
  – Four 16-byte data rings, supporting multiple transfers
  – 96 B/cycle peak bandwidth
  – Over 100 outstanding requests

Element Interconnect Bus
§ Coherent SMP Bus
  – Supports over 100 outstanding requests
  – Address Collision Detection
§ High Bandwidth
  – Operates at ½ processor frequency
  – Up to 96 Bytes/cycle: over 300 GB/s at 3.2 GHz processor
    • 8 Bytes/cycle master and 8 Bytes/cycle slave per element port
    • 12 Element ports
§ Modular Design for Scalability
  – Physical modularity for flexibility
§ Independent Command/Address and Data Networks
§ Split Command / Data Transactions
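As a rough check of the bandwidth bullets, the sketch below reproduces the "over 300 GB/s" figure. It assumes that the "Bytes/cycle" numbers are counted per processor cycle (the slide does not say so explicitly, and it also notes the bus itself runs at half the processor frequency), and that the 96 B/cycle peak corresponds to 12 element ports at 8 bytes per cycle each. The function name is hypothetical.

```python
ELEMENT_PORTS = 12
BYTES_PER_CYCLE_PER_PORT = 8      # per direction (master or slave), from the slide


def eib_peak_gb_per_s(processor_ghz, bytes_per_cycle):
    """Peak bandwidth in GB/s, assuming bytes_per_cycle is per processor cycle."""
    return processor_ghz * bytes_per_cycle


# Assumption: the aggregate peak is one 8-byte transfer per port per cycle.
aggregate_bytes_per_cycle = ELEMENT_PORTS * BYTES_PER_CYCLE_PER_PORT
peak = eib_peak_gb_per_s(3.2, aggregate_bytes_per_cycle)

print(f"{aggregate_bytes_per_cycle} B/cycle -> {peak:.1f} GB/s at 3.2 GHz")
```

Under these assumptions the result is 307.2 GB/s, consistent with the slide's "over 300 GB/s".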

Bus Interface Controller

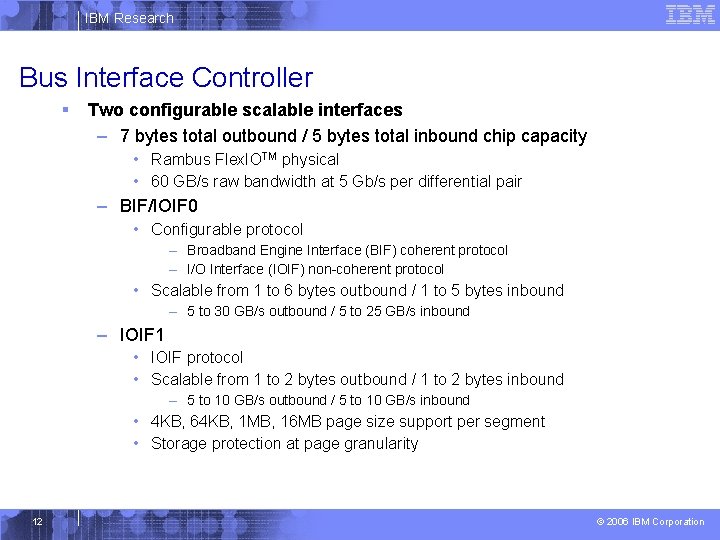

Bus Interface Controller
§ Two configurable scalable interfaces
  – 7 bytes total outbound / 5 bytes total inbound chip capacity
    • Rambus FlexIO™ physical
    • 60 GB/s raw bandwidth at 5 Gb/s per differential pair
  – BIF/IOIF0
    • Configurable protocol
      – Broadband Engine Interface (BIF) coherent protocol
      – I/O Interface (IOIF) non-coherent protocol
    • Scalable from 1 to 6 bytes outbound / 1 to 5 bytes inbound
      – 5 to 30 GB/s outbound / 5 to 25 GB/s inbound
  – IOIF1
    • IOIF protocol
    • Scalable from 1 to 2 bytes outbound / 1 to 2 bytes inbound
      – 5 to 10 GB/s outbound / 5 to 10 GB/s inbound
    • 4 KB, 64 KB, 1 MB, 16 MB page size support per segment
    • Storage protection at page granularity
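The bandwidth ranges on this slide are consistent with each byte-wide lane carrying 5 GB/s. The sketch below shows that arithmetic, assuming one differential pair per bit (8 pairs per byte lane), which the slide implies but does not state. Names are illustrative.

```python
GBIT_PER_S_PER_PAIR = 5
PAIRS_PER_BYTE_LANE = 8           # assumption: one differential pair per bit


def link_gb_per_s(byte_lanes):
    """Raw bandwidth in GB/s of a link that is `byte_lanes` bytes wide."""
    gbit_per_s = byte_lanes * PAIRS_PER_BYTE_LANE * GBIT_PER_S_PER_PAIR
    return gbit_per_s / 8


total_raw = link_gb_per_s(7 + 5)          # 7 bytes outbound + 5 bytes inbound
ioif0_out_max = link_gb_per_s(6)          # BIF/IOIF0, widest outbound setting
ioif0_in_max = link_gb_per_s(5)           # BIF/IOIF0, widest inbound setting
ioif1_max = link_gb_per_s(2)              # IOIF1, widest setting either way

print(total_raw, ioif0_out_max, ioif0_in_max, ioif1_max)
```

With 12 byte lanes in total this gives the 60 GB/s raw figure, and the per-interface maxima match the 30, 25, and 10 GB/s limits listed above.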

Cell BE Processor Can Support Many Systems
§ Game console systems
§ Blades
§ HDTV
§ Home media servers
§ HPC
§ …
[Diagrams: example configurations of one or more Cell BE processors, each with XDR™ memory, connected through IOIF, IOIF0/IOIF1, BIF, or a switch (SW)]

Dual XDR™ Controller (25.6 GB/s @ 3.2 Gbps)

Synergistic Processor Element
§ SPE provides computational performance
  – Dual issue, up to 16-way 128-bit SIMD
  – Dedicated resources: 128-bit RF, 256 KB Local Store
  – Each can be dynamically configured to protect resources
  – Dedicated DMA engine: up to 16 outstanding requests

SPE Highlights
§ Dual-issue, in-order
  – Even: float, fixed, byte
  – Odd: shuffle, L/S, channel, branch
§ Direct programmer control
  – DMA/DMA-list (no cache)
  – Branch hint (no branch prediction)
§ VMX-like SIMD dataflow
  – Broad set of operations
  – Graphics SP-Float
  – IEEE DP-Float
§ Unified register file
  – 128 entry x 128 bit
§ 256 KB Local Store
  – Combined Instruction, Data & Stack
  – 16 B/cycle L/S bandwidth
  – 128 B/cycle DMA bandwidth
[Die photo: 14.5 mm² (90 nm SOI)]

SIMD “Cross-Element” Instructions
§ VMX and SPE architectures include “cross-element” instructions
  – shifts and rotates
  – permutes / shuffles
§ Permute / Shuffle Example
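The figure for the permute / shuffle example did not survive extraction. As a stand-in, here is a simplified software model of a byte shuffle: each byte of a 16-byte pattern selects one byte from the 32-byte concatenation of two source vectors. This is a sketch only; the function name is hypothetical, and the special pattern encodings that real hardware shuffle instructions define (for producing constant bytes) are deliberately not modeled.

```python
def shuffle_bytes(a, b, pattern):
    """Simplified byte shuffle over two 16-byte vectors.

    Pattern byte value 0..15 selects a byte of `a`, 16..31 selects a byte
    of `b`. Only the low 5 bits of each pattern byte are used here.
    """
    if not (len(a) == len(b) == len(pattern) == 16):
        raise ValueError("all operands must be 16 bytes long")
    source = bytes(a) + bytes(b)
    return bytes(source[p & 0x1F] for p in pattern)


a = bytes(range(0x00, 0x10))
b = bytes(range(0x10, 0x20))

# Reverse the byte order of `a` (for example, an endianness swap of a quadword).
reverse_pattern = bytes(range(15, -1, -1))
reversed_a = shuffle_bytes(a, b, reverse_pattern)

# Interleave the first eight bytes of `a` and `b`.
merge_pattern = bytes([0, 16, 1, 17, 2, 18, 3, 19, 4, 20, 5, 21, 6, 22, 7, 23])
merged = shuffle_bytes(a, b, merge_pattern)

print(reversed_a.hex())
print(merged.hex())
```

Because the pattern is itself data, the same operation covers byte reversal, interleaving, broadcasting one element, and gathering unaligned fields.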

Software Management of SPE Memory
§ An SPE has load/store & instruction-fetch access only to its local store
  – Movement of data and code into and out of SPE local store is via DMA
§ DMA transfers
  – 1-, 2-, 4-, 8-, and 16-byte naturally aligned transfers
  – 16-byte through 16-KB block transfers (16-byte aligned)
    • 128 B alignment is preferable
§ DMA queues
  – each SPE has a 16-element DMA command queue
§ DMA tags
  – each DMA command is tagged with a 5-bit identifier
  – same identifier can be used for multiple commands
  – tags used for polling status or waiting on completion of DMA commands
§ DMA lists
  – a single DMA command can cause execution of a list of transfer requests (in LS)
  – lists implement scatter-gather functions
  – a list can contain up to 2 K transfer requests
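The size and alignment rules above can be expressed as a small planning helper. This is a host-side sketch in Python, not SDK code: the function names are hypothetical, and it assumes block transfers must be a multiple of 16 bytes in size (the slide only says "16-byte aligned"). It checks a single transfer against the rules and splits a larger region into commands of at most 16 KB.

```python
MAX_DMA_BYTES = 16 * 1024          # largest single block transfer
SMALL_SIZES = (1, 2, 4, 8)         # naturally aligned small transfers
NUM_TAGS = 1 << 5                  # 5-bit tag identifier -> 32 tag values
QUEUE_DEPTH = 16                   # DMA command queue entries per SPE


def is_valid_dma(local_addr, main_addr, size):
    """Check one transfer against the size and alignment rules on the slide."""
    if size in SMALL_SIZES:
        return local_addr % size == 0 and main_addr % size == 0
    # Assumption: block sizes are multiples of 16 bytes.
    return (16 <= size <= MAX_DMA_BYTES and size % 16 == 0
            and local_addr % 16 == 0 and main_addr % 16 == 0)


def split_transfer(local_addr, main_addr, total_bytes):
    """Split a large region into (local, main, size) commands of <= 16 KB each."""
    commands = []
    offset = 0
    while offset < total_bytes:
        size = min(MAX_DMA_BYTES, total_bytes - offset)
        commands.append((local_addr + offset, main_addr + offset, size))
        offset += size
    return commands


commands = split_transfer(0x0, 0x10000, 40 * 1024)
for local, main, size in commands:
    print(f"LS 0x{local:05x} <- EA 0x{main:08x}, {size} bytes")
```

A 40 KB region becomes three commands (16 KB, 16 KB, 8 KB), which fits comfortably in the 16-entry queue and can share one tag so that a single wait covers all of them.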

Cell Programming
[Block diagram repeated from "Cell BE Processor Features": SPEs, PPE, MIC, and BIC attached to the EIB]

High-Level Programming View
§ Code Generation
  – Write PPE & SPE code
    • Individually
    • With OpenMP pragmas (not in current SDK)
    • Single-source compiler in progress...
  – Compile and link into single executable
§ Run-Time Flow
  – Run executable on PPE
  – PPE creates memory map(s)
  – PPE spawns SPE threads
    • PPE pointer to SPE code in main memory
    • DMA code to SPE
    • SPE runs code independently

Typical CELL Software Development Flow
§ Algorithm complexity study
§ Data traffic analysis
§ Control/Compute partitioning of the algorithm
§ Develop PPE control code & PPE scalar code
§ Port PPE scalar code to SPE scalar code
  – SPE thread creation
  – Communication & synchronization
  – DMA code for data movement
  – Overlay management (if needed)
§ Transform SPE scalar code to SPE SIMD code
  – Implement with vector variables using vector intrinsics
  – Latency handling: multi-buffering, loop unrolling, etc.
§ Re-balance the computation / data movement
§ Other optimization considerations
  – PPE SIMD, system bottlenecks, load balancing
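The "multi-buffering" step above refers to issuing the transfer for the next block before computing on the current one, so that data movement overlaps computation. The sketch below models only the ordering of operations with two buffers; it runs sequentially in Python, whereas on an SPE the fetch would be an asynchronous DMA followed by a wait on its tag before the buffer is used. The `fetch` and `compute` callables and all names are hypothetical.

```python
def double_buffered(blocks, fetch, compute):
    """Process `blocks` using two buffers.

    The fetch for block i+1 is issued before block i is computed, which is
    the ordering that lets a real asynchronous transfer hide its latency.
    """
    results = []
    if not blocks:
        return results
    buffers = [None, None]
    buffers[0] = fetch(blocks[0])                 # prime the first buffer
    for i in range(len(blocks)):
        current = i % 2
        if i + 1 < len(blocks):
            # On an SPE: start the DMA get for the next block here.
            buffers[1 - current] = fetch(blocks[i + 1])
        # On an SPE: wait for the current buffer's DMA tag, then compute.
        results.append(compute(buffers[current]))
    return results


log = []


def logged_fetch(block_id):
    log.append(f"fetch {block_id}")
    return [block_id] * 4                         # stand-in for transferred data


def logged_compute(data):
    log.append(f"compute {data[0]}")
    return sum(data)


totals = double_buffered([0, 1, 2], logged_fetch, logged_compute)
print(log)
print(totals)
```

The printed log shows each fetch issued one step ahead of the compute that consumes it; with only one buffer the two would strictly alternate and the transfer latency would be fully exposed.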

Programming Models
1. Application Specific Accelerators
§ Acceleration provided by O/S services
§ Application independent of accelerators (platform fixed)
[Diagram: Application Specific Acceleration Model, SPC Accelerated Subsystems. A PowerPC application on the PPE calls an O/S service such as mpeg_encode() on a BE-aware OS (Linux), with a parameter area in system memory. SPUs, each with Local Store and MFC, act as accelerators for OpenGL, encrypt/decrypt, TCP offload, data encryption, data decryption, realtime MPEG encoding and decoding, and compression/decompression]

Programming Models
2. Function Offload
§ SPE function provided by libraries
§ Predetermined functions
§ Application calls standard libraries
§ Single source compilation
§ SPE working set fits in Local Store
§ O/S handles SPE allocation
[Diagrams: multi-stage pipeline and parallel stages, each showing the Power Processor (PPE), system memory, and SPUs with Local Store and MFC]

Programming Models
3. Computational Acceleration
§ User created RPC libraries
§ User acceleration routines
§ User compiles SPE code
§ Local Data
  – Data and parameters passed in call
§ Global Data
  – Data and parameters passed in call
  – SPE code manages global data
[Diagram, local data: PPE puts text, static data, and parameters; SPE executes; PPE gets results]
[Diagram, global data: PPE puts initial text, static data, and parameters; SPE independently stages text & intermediate data transfers while executing; SPE puts results in system memory]

Ideal Cell Software
§ Structured
  – Easier for memory fetch & SIMD operations
  – Data prefetch possible
  – Non-branchy instruction pipeline
  – Data more tolerant, but has the same caution
§ Multiple Operations on Data
  – Many operations on same data before reloading
§ Easy to Parallelize and SIMD
  – Little or no collective communication required
  – No global or shared memory or nested loops
§ Compute Intense
  – Determined by ops per byte
§ Fits Streaming Model
  – Small computation kernel through which you stream a large body of data
  – Algorithms that fit Graphics Processing Units
  – GPUs are being used for more than just graphics today thanks to PCI Express

Target Areas
§ Data Manipulation
  – Digital Media
  – Image processing
  – Video processing
  – Visualization of output
  – Compression/decompression
  – Encryption/decryption
  – DSP
  – Audio processing, language translation?
§ Graphics
  – Transformation between domains (viewpoint transformation; time vs space; 2D vs 3D)
  – Lighting
  – Ray Tracing / Ray casting
§ Floating Point Intensive Applications (SP)
  – Single precision Physics
  – Single precision HPC
  – Sonar
§ Pattern Matching
  – Bioinformatics
  – String manipulation (search engine)
  – Parsing, transformation, translation (XSLT)
  – Audio processing, language translation?
  – Filtering & Pruning
§ Offload Engines
  – TCP/IP
  – Compiler for gaming applications
  – Network Security, Virus Scan and Intrusion

Cell High Affinity Workloads
§ Cell excels at processing of rich media content in the context of broad connectivity
  – Digital content creation (games and movies)
  – Game playing and game serving
  – Distribution of dynamic, media rich content
  – Imaging and image processing
  – Image analysis (e.g. video surveillance)
  – Next-generation physics-based visualization
  – Video conferencing
  – Streaming applications (codecs etc.)
  – Physical simulation & science
§ Cell is an excellent match for any applications that require:
  – Parallel processing
  – Real time processing
  – Graphics content creation or rendering
  – Pattern matching
  – High-performance SIMD capabilities

Non Ideal Software
§ Branchy data
  – Instruction “branchiness” may be partially mitigated through different methods (e.g. calculating both sides of the branch and using select)
§ Not structured
  – Not SIMD friendly
§ Pointer indirection or multiple levels of pointer indirection
  – Fetching becomes hard
§ Data load granularity less than 16 bytes
  – BW performance degradation
  – DMA <128 Byte
  – SPE to local store is 16 Byte
§ Not easily parallelized
§ Tightly coupled algorithms requiring synchronization
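The mitigation mentioned under "Branchy data" (calculating both sides of the branch and using select) can be illustrated with plain integer arithmetic. The sketch below models 32-bit unsigned values: a comparison produces an all-ones or all-zeros mask, and a bitwise select picks between two already-computed results without a conditional jump. The helper names are hypothetical; on SIMD hardware the same pattern is applied to every element of a vector at once.

```python
MASK32 = 0xFFFFFFFF


def greater_than_mask(a, b):
    """Return 0xFFFFFFFF if a > b, else 0, without an if statement."""
    return -(a > b) & MASK32


def select(mask, if_clear, if_set):
    """Bitwise select: take bits of if_set where mask is 1, else if_clear."""
    return ((if_clear & ~mask) | (if_set & mask)) & MASK32


def clamp_to_limit(values, limit):
    """Branch-free equivalent of: v if v <= limit else limit."""
    return [select(greater_than_mask(v, limit), v, limit) for v in values]


clamped = clamp_to_limit([1, 50, 200, 100, 4000000000], 100)
print(clamped)
```

Both candidate results exist before the select runs, so the cost is a little redundant work in exchange for a predictable instruction stream, which suits a core that relies on branch hints rather than branch prediction.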

BACKUP SLIDES

Node-Level Parallelism (e.g., MPI)
[Diagram: original parallelism, hyper parallelism, and nested parallelism, mapped onto PPE and SPE]

Additional Information
§ http://www-306.ibm.com/chips/techlib.nsf/products/Cell
  – Cell Broadband Engine Architecture V1.0
  – Synergistic Processor Unit (SPU): Instruction Set Architecture V1.0
  – SPU Application Binary Interface: Specification V1.3
  – SPU Assembly Language: Specification V1.2
  – SPU C/C++ Language: Extensions V2.0
§ http://www.research.ibm.com/cell

(c) Copyright International Business Machines Corporation 2005. All Rights Reserved. Printed in the United States April 2005.

The following are trademarks of International Business Machines Corporation in the United States, or other countries, or both. IBM Logo Power Architecture

Other company, product and service names may be trademarks or service marks of others.

All information contained in this document is subject to change without notice. The products described in this document are NOT intended for use in applications such as implantation, life support, or other hazardous uses where malfunction could result in death, bodily injury, or catastrophic property damage. The information contained in this document does not affect or change IBM product specifications or warranties. Nothing in this document shall operate as an express or implied license or indemnity under the intellectual property rights of IBM or third parties. All information contained in this document was obtained in specific environments, and is presented as an illustration. The results obtained in other operating environments may vary.

While the information contained herein is believed to be accurate, such information is preliminary, and should not be relied upon for accuracy or completeness, and no representations or warranties of accuracy or completeness are made.

THE INFORMATION CONTAINED IN THIS DOCUMENT IS PROVIDED ON AN "AS IS" BASIS. In no event will IBM be liable for damages arising directly or indirectly from any use of the information contained in this document.

IBM Microelectronics Division, 1580 Route 52, Bldg. 504, Hopewell Junction, NY 12533-6351
The IBM home page is http://www.ibm.com
The IBM Microelectronics Division home page is http://www.chips.ibm.com

A Change in thinking…
“What we have in the Cell chip is a ½ speed JS20 (chip) that can sprint to as much as 50-100x performance increases when properly leveraged…
…if you can get past the memory and programming optimization issues - and these really aren’t much tougher than traditional graphics programming or even device driver development or HPC applications…
…and if you can get a proper hardware design around it.”

SXU ISA – Floating Point
§ Single precision is extended range
  – range is 1.2E-38 to 6.8E38
§ Single precision does not implement full IEEE standard, e.g.:
  – truncation toward zero is the only supported rounding mode
  – NaN is not supported as an operand nor produced as a result
  – denorms are not supported and are treated as zero
  – except for denorms, NaNs, and infinities, format is same as IEEE
§ Double precision is IEEE standard
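Two of the single-precision deviations listed above, truncation toward zero and denorms treated as zero, can be modeled on a host with ordinary IEEE floats. The sketch below converts a Python float to a 32-bit value with those two behaviors. It is an illustration under assumptions, not a bit-exact model of the hardware: the function names are hypothetical, only finite inputs within the normal IEEE single range are handled, and the extended range and the absence of NaN and infinity are not modeled.

```python
import struct


def float_to_bits(value):
    """Bit pattern of `value` after rounding to IEEE single (round to nearest)."""
    return struct.unpack("<I", struct.pack("<f", value))[0]


def bits_to_float(bits):
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def to_spe_style_single(x):
    """Round x to single precision toward zero and flush denormals to zero."""
    bits = float_to_bits(x)
    nearest = bits_to_float(bits)
    if abs(nearest) > abs(x):
        # Round-to-nearest went away from zero: step back one unit in the
        # last place. Decrementing the bit pattern shrinks the magnitude
        # for either sign, because the sign is the top bit.
        bits -= 1
    if (bits & 0x7F800000) == 0:
        # Exponent field of zero means zero or a denormal: keep only the sign.
        bits &= 0x80000000
    return bits_to_float(bits)


print(hex(float_to_bits(0.1)))                        # round to nearest
print(hex(float_to_bits(to_spe_style_single(0.1))))   # truncated toward zero
print(to_spe_style_single(1e-40))                     # denormal input
```

The value 0.1 rounds up to bit pattern 0x3dcccccd under round-to-nearest but truncates to 0x3dcccccc, a one-unit difference in the last place that can accumulate over long single-precision computations.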

SXU ISA Differences with VMX
§ In VMX, not SPU
  – saturating math
  – sum-across
  – log2 and 2^x
  – ceil and floor
  – complete byte instructions
§ In SPU, not VMX
  – immediate operands
  – double precision floating point
  – sum of absolute difference
  – count ones in bytes
  – count leading zeros
  – equivalence
  – nand
  – or complement
  – extend sign
  – gather bits
  – form select mask
  – integer multiply and accumulate
  – multiply subtract
  – multiply float
  – shuffle byte special conditions
  – carry and borrow generate
  – sum bytes across
  – extended shift range

FFT Performance
§ 16 M-point, complex, single-precision FFT (+PPE)
  – Power5 @ 1.65 GHz: 1.55 GFLOPS
  – Cell @ 3.2 GHz: 46.8 GFLOPS (20% eff.)
§ 64 K-point, complex, single-precision FFT (all in LS)
  – Cell @ 3.0 GHz: 109 GFLOPS (57% eff.)
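The efficiency percentages are achieved GFLOPS divided by a peak figure. The slide does not say which peak is used for each case; the sketch below shows one reading that reproduces both numbers, assuming the 16 M-point case is measured against the full-chip peak at 3.2 GHz (8 SPEs plus PPE) and the 64 K-point case against the 8 SPEs alone at 3.0 GHz. The 8 flops per cycle per unit is likewise an assumption (4 lanes, one multiply-add each).

```python
FLOPS_PER_CYCLE_PER_UNIT = 8      # assumption: 4 SP lanes x multiply-add


def peak_sp_gflops(clock_ghz, units):
    """Peak single-precision GFLOPS for `units` SIMD units at `clock_ghz`."""
    return clock_ghz * FLOPS_PER_CYCLE_PER_UNIT * units


def efficiency(achieved_gflops, peak_gflops):
    return achieved_gflops / peak_gflops


# 16 M-point FFT: assume peak counts 8 SPEs + 1 PPE at 3.2 GHz.
eff_16m = efficiency(46.8, peak_sp_gflops(3.2, units=9))

# 64 K-point FFT, all in local store: assume peak counts 8 SPEs at 3.0 GHz.
eff_64k = efficiency(109.0, peak_sp_gflops(3.0, units=8))

# Speedup of Cell over the Power5 figure quoted for the 16 M-point case.
speedup_vs_power5 = 46.8 / 1.55

print(f"{eff_16m:.0%}, {eff_64k:.0%}, {speedup_vs_power5:.1f}x")
```

The gap between 20% and 57% fits the slide's distinction between a transform that must stream through main memory and one that fits entirely in local store.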

Single-SPE: Single Precision MatrixMultiply Performance (SGEMM)

| | CPI | Dual Issue | Channel Stalls | Other Stalls | # of Used Registers | GFLOPs | Effic’y |
|---|---|---|---|---|---|---|---|
| Original (scalar) | 1.05 | 26.1% | 11.4% | 26.3% | 47 | 0.42 | 1.6% |
| SIMD optimized | 0.711 | 40.3% | 3.0% | 9.8% | 60 | 10.96 | 42.8% |
| SIMD double buf’d | 0.711 | 41.4% | 2.6% | 10.2% | 65 | 11.12 | 43.4% |
| Optimized code | 0.508 | 80.1% | 0.2% | 0.4% | 69 | 25.12 | 98.1% |

§ Performance improved significantly with optimizations and tunings by
  – taking advantage of data level parallelism using SIMD
  – double buffering for concurrent data transfers and computation
  – optimizing dual issue rate, instruction scheduling, etc.

Parallel MatrixMultiply Performance (Single Precision)
§ Achieved near-linear scalability on 8 SPUs with close to 100% compute efficiency
§ Performed 8x better than a Pentium 4 with SSE3 at 3.2 GHz, assuming it can achieve its peak single-precision floating point capability

Crypto Performance – SPE vs. IA32

| Function | SPE (Gb/s) | IA-32 (Gb/s) | SPE advantage |
|---|---|---|---|
| AES ECB Encrypt - 128 bit key | 1.93 | 0.96 | 2.00 |
| AES CBC Encrypt - 128 bit key | 0.75 | 0.91 | 0.82 |
| AES ECB Decrypt - 128 bit key | 1.41 | 0.97 | 1.45 |
| AES CBC Decrypt - 128 bit key | 1.41 | 0.91 | 1.56 |
| DES - encrypt ECB | 0.46 | 0.40 | 1.16 |
| TDES - encrypt ECB | 0.16 | 0.12 | 1.31 |
| MD5 | 2.30 | 2.68 | 0.86 |
| SHA-1 | 1.98 | 0.85 | 2.35 |
| SHA-256 | 0.80 | 0.49 | 1.65 |

§ Optimization efforts in loop unrolling, register-based table lookup, dual issue rate, bit permutation, byte shuffling, etc.
  – More registers give SPE significant advantage in using aggressive loop unrolling and in table lookup
  – Performance of a single SPE is better than an IA32 at the same frequency (up to 2.35x) in most cases

Transform-Light Performance on a Single SPE

| Unrolled Loops | G5 2 GHz (Mvtx/sec) | G5 2.7 GHz (Scaled) (Mvtx/sec) | SPE 3.2 GHz (Mvtx/sec) | SPE Advantage |
|---|---|---|---|---|
| 1 | 82.95 | 112 | 139.44 | 1.25 |
| 2 | 94.8 | 128 | 155.92 | 1.22 |
| 4 | 89.47 | 120 | 208.48 | 1.73 |
| 8 | 58.45 | 79 | 217.2 | 2.75 |

§ Optimization of transform-light has been focused on loop unrolling, branch avoidance, dual issue rate, streaming data, etc.
  – More registers give SPE significant advantage with aggressive loop unrolling
  – an SPE can perform 1.7x better than a best of breed G5 with VMX

MPEG2 Decoder Performance on a Single SPE

| | # of Cycles | # of Inst. | CPI | Effective | # of Used Registers | Frames/s @3.2 GHz |
|---|---|---|---|---|---|---|
| CIF (1 Mbps) | 63.4 M | 51.9 M | 1.22 | 1.42 | 126 | 1514 |
| SDTV (5 Mbps) | 263 M | 220 M | 1.2 | 1.38 | 126 | 365 |
| SDTV (8 Mbps) | 324 M | 290 M | 1.12 | 1.27 | 126 | 296 |
| HDTV (18 Mbps) | 1.25 G | 1.01 G | 1.24 | 1.46 | 126 | 77 |

* Note: Results from simulator, ~10% error in SPCsim results.
§ VLD (Variable Length Decoding) in decoder is very branchy
  – needs a lot of optimization to run well on an SPE
§ MC (Motion Compensation) and IDCT deal with very structured 8-bit/16-bit data
  – can be highly optimized to take advantage of SPE’s 8-way/16-way SIMD capability
§ Pentium 4 w/SSE2 at 3.06 GHz can decode 310 frames/s (SDTV)
  – Each SPE can perform very much the same as a Pentium 4 w/SSE2 running at the same frequency
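The table's columns are related by simple arithmetic, sketched below. CPI is cycles divided by instructions. The frames/s column is reproduced only if each cycle count covers a 30-frame clip; that clip length is an inference from the numbers, not something the slide states, so it is marked as an assumption in the code.

```python
CLOCK_HZ = 3.2e9
FRAMES_PER_CLIP = 30              # assumption inferred from the table values

# (stream, total cycles, total instructions) as quoted in the table
streams = [
    ("CIF (1 Mbps)", 63.4e6, 51.9e6),
    ("SDTV (5 Mbps)", 263e6, 220e6),
    ("SDTV (8 Mbps)", 324e6, 290e6),
    ("HDTV (18 Mbps)", 1.25e9, 1.01e9),
]


def cycles_per_instruction(cycles, instructions):
    return cycles / instructions


def frames_per_second(cycles, clock_hz=CLOCK_HZ, frames=FRAMES_PER_CLIP):
    """Decode rate if `cycles` is the cost of decoding `frames` frames."""
    return clock_hz * frames / cycles


for name, cycles, instructions in streams:
    cpi = cycles_per_instruction(cycles, instructions)
    fps = frames_per_second(cycles)
    print(f"{name}: CPI {cpi:.2f}, {fps:.0f} frames/s")
```

For the 5 Mbps SDTV stream this gives about 365 frames/s at 3.2 GHz; scaled to 3.06 GHz that is roughly 349 frames/s, the same order as the 310 frames/s quoted for the Pentium 4.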
