IBM HPC Services and Big Data Challenges
Isabel Schwerdtfeger, Leading Solution Sales Professional, schwerdtfeger@de.ibm.com
HUF 2016 – New York, August 29, 2016

Agenda
§ Drivers of HPC industry
§ HPC as a Service - HPC Cloud
§ Data center challenges and innovative solutions
§ Recent developments with Aspera and HPSS
§ Future outlook
© "The Big Apple" NYC, Ogilvy and Mather, New York City, 1976

Current drivers of HPC industry
§ Democratization of HPC – high commoditization, high adoption rates from commercial firms
§ Need for high energy efficiency for rising petaflops and exaflops
§ Scalable system software that is power and failure aware
§ Data management software that can handle the “3 Vs” of data: volume, velocity, and variety
Overall efficiency at low cost needs to be achieved

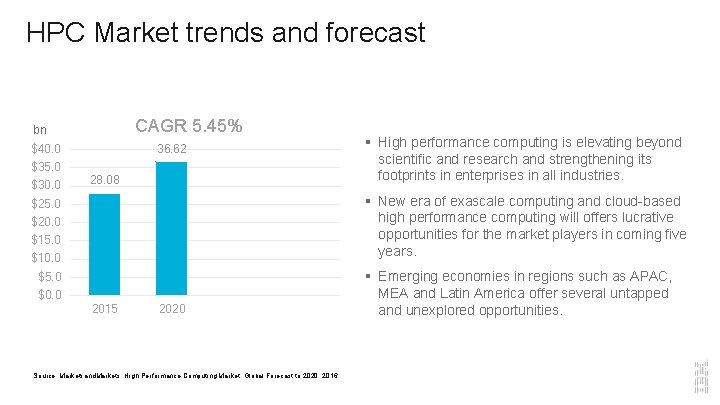

HPC Market trends and forecast
[Chart: HPC market size, $28.08 bn in 2015 to $36.62 bn in 2020, CAGR 5.45%]
§ High performance computing is moving beyond scientific and research use and strengthening its footprint in enterprises in all industries.
§ The new era of exascale computing and cloud-based high performance computing will offer lucrative opportunities for market players in the coming five years.
§ Emerging economies in regions such as APAC, MEA and Latin America offer several untapped and unexplored opportunities.
Source: MarketsandMarkets, High Performance Computing Market, Global Forecast to 2020, 2016
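The growth rate on this slide can be cross-checked from the two market sizes. The sketch below applies the standard compound annual growth rate formula to the 2015 and 2020 figures; the function name `cagr` is chosen here for illustration and does not come from the source.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes from the slide, in billions of US dollars.
rate = cagr(start_value=28.08, end_value=36.62, years=5)
print(f"Implied CAGR 2015-2020: {rate:.2%}")
```

The result is consistent with the 5.45% stated in the chart.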

HPC operation models
On Premise
§ Best price purchasing of hardware and software
§ Usage of hardware solution with lowest energy consumption to reduce power costs, e.g. direct water cooling, innovative data center concepts
§ Reliance on standard HW/SW maintenance to support on-site or remote
Off Premise
§ Model (1): Private HPC Cloud with self-owned hardware in own data center financed over 36, 48, or 60 months
§ Model (2): Dedicated HPC Private Cloud from a remote data center with annual payments at flat rates
§ Model (3): Public HPC Cloud resources as “IaaS”, “SaaS”, “PaaS” from Cloud providers, e.g. SoftLayer, AWS, others for peak workloads with a monthly fee

High Performance Computing Services
Support new Cognitive solutions: Integration of HPC system environments to cognitive, AI, and advanced analytics with its key data into leading solutions.
Services are Cloud enabled: HPC services support all types of IT sourcing options, from on premise and hybrid to cloud deployments.
The focus is on Industries: Experts support leading research institutes, government organizations and industry clients of all sizes.

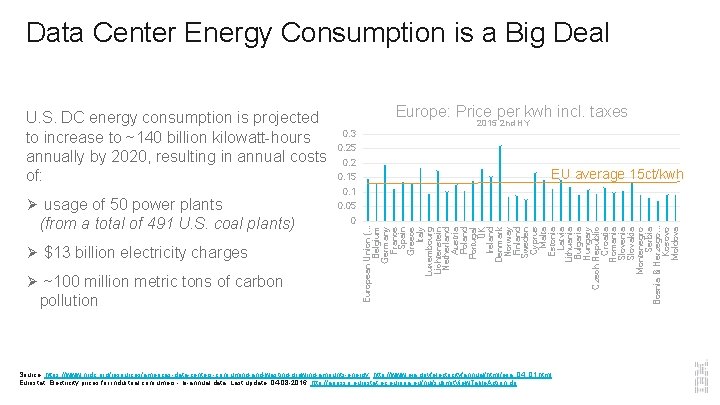

Data Center Energy Consumption is a Big Deal
U.S. DC energy consumption is projected to increase to ~140 billion kilowatt-hours annually by 2020, resulting in annual costs of:
Ø usage of 50 power plants (from a total of 491 U.S. coal plants)
Ø $13 billion electricity charges
Ø ~100 million metric tons of carbon pollution
[Chart: Europe, price per kWh incl. taxes, 2nd half-year 2015, by country; EU average 15 ct/kWh]
Source: https://www.nrdc.org/resources/americas-data-centers-consuming-and-wasting-growing-amounts-energy, http://www.eia.gov/electricity/annual/html/epa_04_01.html, Eurostat, Electricity prices for industrial consumers - bi-annual data, Last update: 04-08-2016, http://appsso.eurostat.ec.europa.eu/nui/submitViewTableAction.do
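The projected figures can be related to each other with simple division. The sketch below derives the average electricity price and the per-plant output that the slide's totals imply; these derived values are back-of-the-envelope results, not numbers stated in the source, and the function names are illustrative.

```python
# Projected U.S. figures for 2020, as quoted on the slide.
ANNUAL_ENERGY_KWH = 140e9   # ~140 billion kWh per year
ANNUAL_COST_USD = 13e9      # $13 billion electricity charges
POWER_PLANTS = 50           # equivalent number of power plants


def implied_price_usd_per_kwh(cost_usd: float, energy_kwh: float) -> float:
    """Average price per kWh implied by a total cost and a total consumption."""
    return cost_usd / energy_kwh


def energy_per_plant_kwh(energy_kwh: float, plants: int) -> float:
    """Annual energy attributed to each plant if the load is split evenly."""
    return energy_kwh / plants


price = implied_price_usd_per_kwh(ANNUAL_COST_USD, ANNUAL_ENERGY_KWH)
per_plant = energy_per_plant_kwh(ANNUAL_ENERGY_KWH, POWER_PLANTS)
print(f"Implied average price: {price * 100:.1f} US cents/kWh")
print(f"Implied output per plant: {per_plant / 1e9:.1f} billion kWh/year")
```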

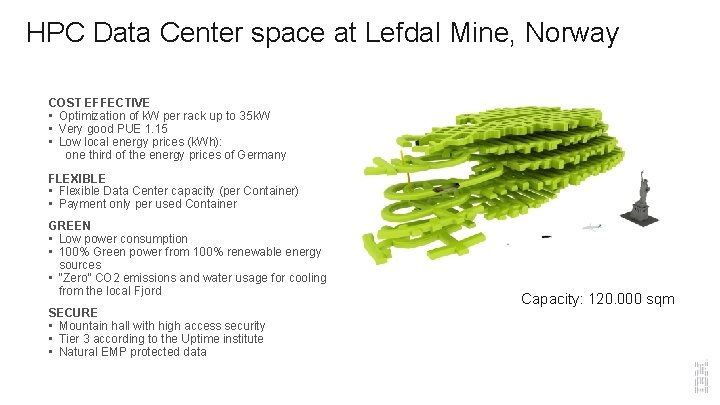

HPC Data Center space at Lefdal Mine, Norway
LEADING DATACENTER SOLUTION - EUROPE
COST EFFECTIVE
• Optimization of kW per rack up to 35 kW
• Very good PUE 1.15
• Low local energy prices (kWh): one third of the energy prices of Germany
FLEXIBLE
• Flexible Data Center capacity (per Container)
• Payment only per used Container
GREEN
• Low power consumption
• 100% Green power from 100% renewable energy sources
• “Zero” CO2 emissions and water usage for cooling from the local Fjord
SECURE
• Mountain hall with high access security
• Tier 3 according to the Uptime Institute
• Natural EMP protected data
Capacity: 120,000 sqm
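To make the PUE and energy-price points concrete, the sketch below estimates the annual electricity cost of one fully loaded 35 kW rack. PUE is the ratio of total facility energy to IT equipment energy, so facility power is IT power times PUE. The prices are assumptions for illustration only: 0.15 per kWh is borrowed from the EU average quoted earlier in the deck, and 0.05 per kWh simply stands in for "one third"; the constant 100% load is also an assumption.

```python
HOURS_PER_YEAR = 8760


def facility_power_kw(it_power_kw: float, pue: float) -> float:
    """Total facility power for a given IT load (PUE = facility / IT energy)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_power_kw * pue


def annual_cost(it_power_kw: float, pue: float, price_per_kwh: float) -> float:
    """Yearly electricity cost, assuming the rack runs at constant full load."""
    return facility_power_kw(it_power_kw, pue) * HOURS_PER_YEAR * price_per_kwh


# Illustrative prices (assumptions, not quotes from the source).
baseline = annual_cost(it_power_kw=35, pue=1.15, price_per_kwh=0.15)
low_price = annual_cost(it_power_kw=35, pue=1.15, price_per_kwh=0.05)
print(f"Facility power per rack: {facility_power_kw(35, 1.15):.2f} kW")
print(f"Annual cost at 0.15/kWh: {baseline:,.0f}")
print(f"Annual cost at 0.05/kWh: {low_price:,.0f}")
```

Because cost is linear in both PUE and price, a site that cuts the price to one third cuts the electricity bill to one third at the same PUE.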

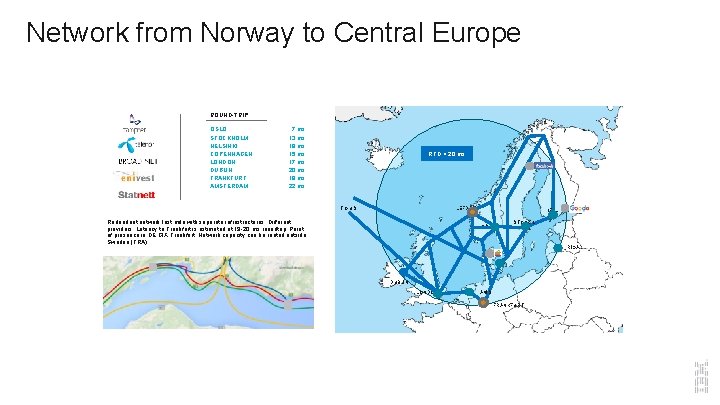

Network from Norway to Central Europe
LATENCY – NORDICS / EUROPE, ROUND-TRIP
Oslo 7 ms, Stockholm 13 ms, Helsinki 19 ms, Copenhagen 15 ms, London 17 ms, Dublin 20 ms, Frankfurt 19 ms, Amsterdam 22 ms
Redundant network last mile with separate infrastructures. Different providers.
Latency to Frankfurt is estimated at 19-20 ms roundtrip. Point of presence in DE-CIX Frankfurt.
Network capacity can be routed outside Sweden (FRA).
[Map: network routes from Lefdal to Helsinki, Oslo, Stockholm, Riga, Dublin, London, Amsterdam and Frankfurt; labels "RTD < 20 ms" and "TO US"]

Containerized Solution for optimized efficiency and support CONTAINERS

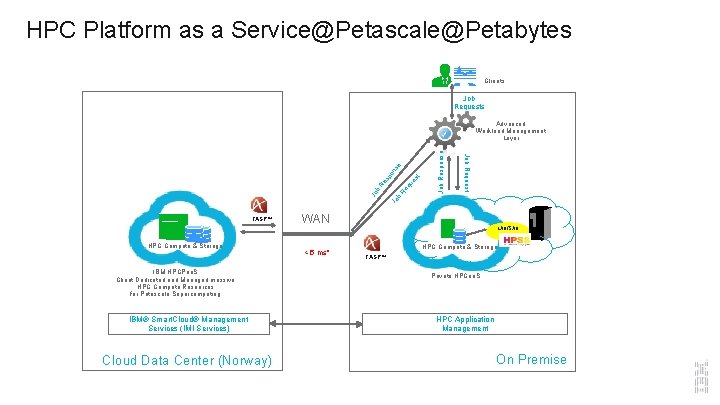

HPC Platform as a Service @Petascale @Petabytes
[Diagram: Clients send job requests to IBM HPCPaaS and receive job responses. IBM HPCPaaS: client dedicated and managed massive HPC compute resources for petascale supercomputing, operated with IBM® SmartCloud® Management Services (IMI Services) in the Cloud Data Center (Norway), with HPC Compute & Storage and FASP™. An Advanced Workload Management Layer routes job requests and responses over the WAN (20 ms*) to the cloud and over LAN/SAN (< 5 ms*) to the on-premise side. On Premise: Private HPCaaS, HPC Application Management, HPC Compute & Storage, FASP™.]

Challenges with TCP and alternative technologies
§ Distance degrades conditions on all networks
– Latency (or Round Trip Times) increases
– Packet loss increases
– Fast networks are just as prone to degradation
§ TCP performance degrades severely with distance
– TCP was designed for LANs and does not perform well over distance
– Throughput bottlenecks are severe as latency & packet loss increase
§ TCP does not scale with bandwidth
– TCP designed for low bandwidth
– Adding more bandwidth does not improve throughput
§ Alternative technologies
– TCP-based – Network latency & packet loss must be low to work well
– UDP blasters – Inefficient use of bandwidth leads to congestion
– Modified TCP – Does not scale well on high-speed networks
– Data caching – Inappropriate for many large file transfer workflows
– Data compression – Time consuming & impractical for some file types
Note: Table displays throughput degradation of TCP transfers on a 1 Gbps network as estimated round trip time and packet loss increase with distance.
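The dependence of TCP throughput on latency and loss can be illustrated with the widely cited Mathis approximation for a single standard TCP flow: throughput ≈ C · MSS / (RTT · √p), with C ≈ √(3/2). This model is not taken from the slides; it is a textbook estimate used here as a sketch, and the MSS, RTT and loss values below are assumed for illustration.

```python
import math


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float,
                          link_bps: float = 1e9) -> float:
    """Approximate steady-state throughput of one TCP flow (Mathis model).

    The estimate is capped at the link rate, since a flow cannot exceed it.
    """
    if rtt_s <= 0 or not 0 < loss_rate < 1:
        raise ValueError("rtt_s must be > 0 and loss_rate in (0, 1)")
    c = math.sqrt(3 / 2)  # constant for standard AIMD congestion control
    estimate = c * mss_bytes * 8 / (rtt_s * math.sqrt(loss_rate))
    return min(estimate, link_bps)


# Assumed scenarios on a 1 Gbps link with a 1460-byte MSS.
for rtt_ms, loss in [(1, 0.0001), (20, 0.001), (100, 0.01)]:
    bps = mathis_throughput_bps(1460, rtt_ms / 1000, loss)
    print(f"RTT {rtt_ms:>3} ms, loss {loss:.2%}: ~{bps / 1e6:,.1f} Mbps")
```

Under this model, doubling the round trip time halves the achievable throughput regardless of how much link bandwidth is available, which matches the slide's point that adding bandwidth alone does not help.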

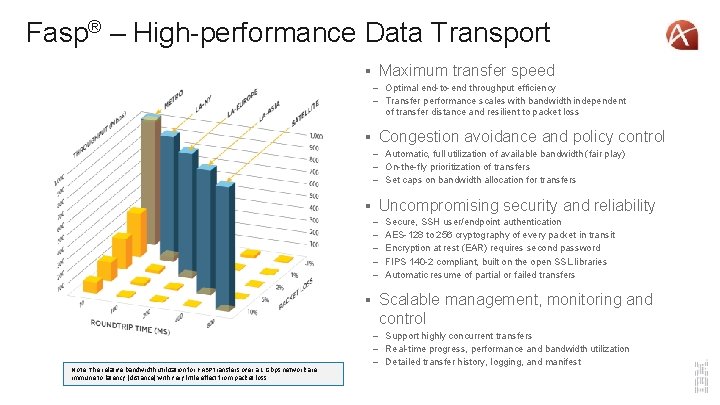

Fasp® – High-performance Data Transport
§ Maximum transfer speed
– Optimal end-to-end throughput efficiency
– Transfer performance scales with bandwidth independent of transfer distance and resilient to packet loss
§ Congestion avoidance and policy control
– Automatic, full utilization of available bandwidth (fair play)
– On-the-fly prioritization of transfers
– Set caps on bandwidth allocation for transfers
§ Uncompromising security and reliability
– Secure, SSH user/endpoint authentication
– AES-128 to AES-256 encryption of every packet in transit
– Encryption at rest (EAR) requires second password
– FIPS 140-2 compliant, built on the OpenSSL libraries
– Automatic resume of partial or failed transfers
§ Scalable management, monitoring and control
– Support highly concurrent transfers
– Real-time progress, performance and bandwidth utilization
– Detailed transfer history, logging, and manifest
Note: The relative bandwidth utilization for FASP transfers over a 1 Gbps network is immune to latency (distance), with very little effect from packet loss.

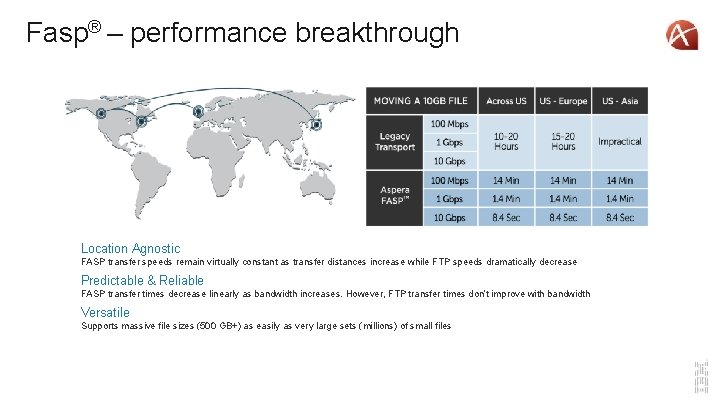

Fasp® – performance breakthrough
Location Agnostic: FASP transfer speeds remain virtually constant as transfer distances increase, while FTP speeds decrease dramatically.
Predictable & Reliable: FASP transfer times decrease in proportion as bandwidth increases, whereas FTP transfer times do not improve with bandwidth.
Versatile: Supports massive file sizes (500 GB+) as easily as very large sets (millions) of small files.
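The scaling claim can be expressed as a simple ideal-case calculation: if a transport keeps the link fully utilized, transfer time is file size divided by bandwidth. The sketch below computes that lower bound; the `utilization` parameter is a hypothetical knob for modelling a transport that only achieves a fraction of the link rate, and none of the resulting times are measurements from the source.

```python
def transfer_time_s(size_bytes: float, bandwidth_bps: float,
                    utilization: float = 1.0) -> float:
    """Ideal transfer time for a file at a given effective link utilization."""
    if bandwidth_bps <= 0 or not 0 < utilization <= 1:
        raise ValueError("bandwidth must be > 0 and utilization in (0, 1]")
    return size_bytes * 8 / (bandwidth_bps * utilization)


FILE_SIZE = 500e9  # a 500 GB file, the size mentioned on the slide

for gbps in (1, 10, 100):
    seconds = transfer_time_s(FILE_SIZE, gbps * 1e9)
    print(f"{gbps:>3} Gbps: {seconds / 60:,.1f} minutes at full utilization")
```

A transport whose utilization stays near 1.0 over distance sees times fall tenfold for each tenfold bandwidth increase, while one whose utilization drops as the link gets faster sees little or no gain.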

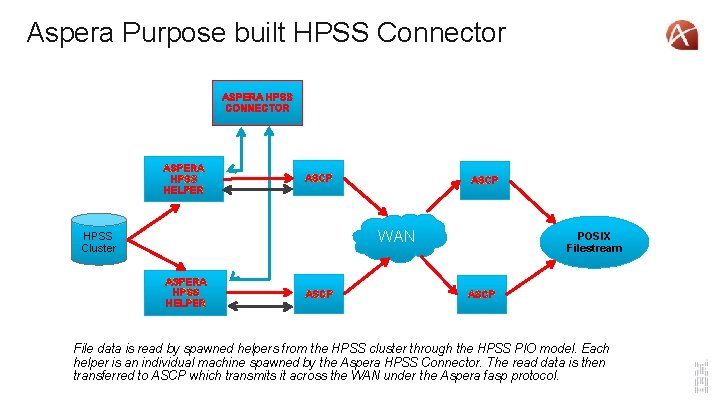

Aspera HPSS Connector
• The IBM Aspera HPSS Connector is designed for cluster-to-cluster transfers of data using the HPSS PIO interface.
• Provides for accelerated transfers within LAN/WAN environments utilizing the Aspera fasp protocol.
• This is a full stack solution which optimizes how data is read from disk as well as how data is transmitted across the WAN.

Aspera Purpose built HPSS Connector
[Diagram: HPSS Cluster → Aspera HPSS Helpers → Aspera HPSS Connector → POSIX filestream → ASCP → WAN → ASCP]
File data is read by spawned helpers from the HPSS cluster through the HPSS PIO model. Each helper is an individual machine spawned by the Aspera HPSS Connector. The read data is then transferred to ASCP, which transmits it across the WAN under the Aspera fasp protocol.
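The data flow described above, with several helpers reading in parallel and a single ordered stream handed to the transport, can be sketched generically. The code below is only an illustration of that pattern: it does not use the HPSS PIO API or ascp, and `read_stripe`, `stream_in_order` and `transfer` are hypothetical names. Threads stand in for the spawned helpers, an in-memory byte string stands in for the HPSS file, and a `BytesIO` sink stands in for the transport process.

```python
import io
from concurrent.futures import ThreadPoolExecutor
from typing import Iterator


def read_stripe(source: bytes, offset: int, length: int) -> bytes:
    """Hypothetical helper: read one byte range of the source file."""
    return source[offset:offset + length]


def stream_in_order(source: bytes, stripe_size: int,
                    helpers: int) -> Iterator[bytes]:
    """Read stripes with parallel helpers and yield them in offset order."""
    offsets = range(0, len(source), stripe_size)
    with ThreadPoolExecutor(max_workers=helpers) as pool:
        # Executor.map returns results in input order, so the consumer
        # sees one sequential stream even though reads run concurrently.
        yield from pool.map(
            lambda off: read_stripe(source, off, stripe_size), offsets)


def transfer(source: bytes, stripe_size: int = 4, helpers: int = 3) -> bytes:
    """Feed the ordered stream into a sink that stands in for the transport."""
    sink = io.BytesIO()
    for chunk in stream_in_order(source, stripe_size, helpers):
        sink.write(chunk)
    return sink.getvalue()


data = b"example payload read in parallel stripes"
assert transfer(data) == data
```

The design choice illustrated is the separation of concerns: parallel reads hide storage latency, while the transport only ever sees an ordinary sequential stream.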

Future outlook
§ Vendors in this market are continuously developing new technologies and solutions to boost performance and reduce overall utility cost.
§ The major focus areas are exascale computing, hot water cooling, networking technologies, and embedded GPUs and processors.
§ The HPC market is no longer limited to on-premise deployments.
§ The battle for HPC is between Intel and NVIDIA for the massive number crunching and data moving work that is the hallmark of HPC.
Sources: Executive Summary, MarketsandMarkets, High Performance Computing Market, Global Forecast to 2020, 2016; V. Natoli, Why 2016 Is the Most Important Year in HPC in Over Two Decades, https://www.hpcwire.com/2016/08/23/2016-important-year-hpc-two-decades/

Thank you
Isabel Schwerdtfeger / schwerdtfeger@de.ibm.com / +49-170-6357251 / IBM Germany
