I 256 Applied Natural Language Processing Marti Hearst

- Slides: 32

I 256: Applied Natural Language Processing Marti Hearst Sept 18, 2006

Why do puns make us groan?

- He drove his expensive car into a tree and found out how the Mercedes bends.
- Isn't the Grand Canyon just gorges?

Why do puns make us groan?

- Time flies like an arrow.
- Fruit flies like a banana.

Predicting Next Words

One reason puns make us groan is that they play on our assumptions about what the next word will be. They also exploit:

- homonymy: same sound, different spelling and meaning (bends, Benz; gorges, gorgeous)
- polysemy: same spelling, different meaning

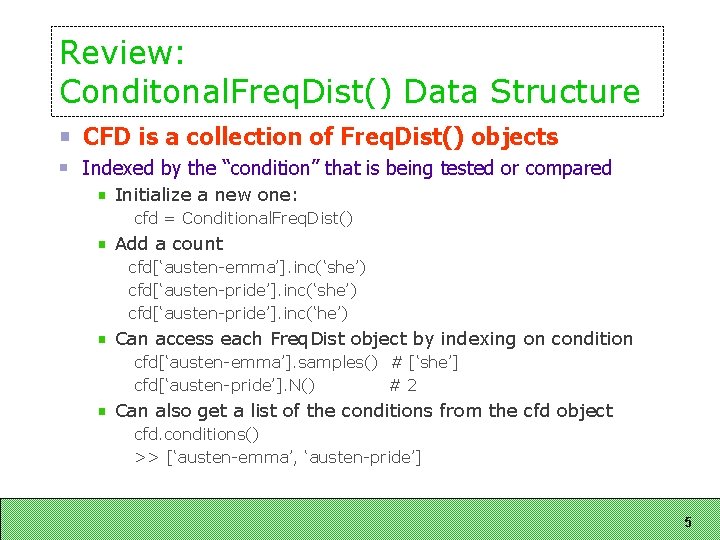

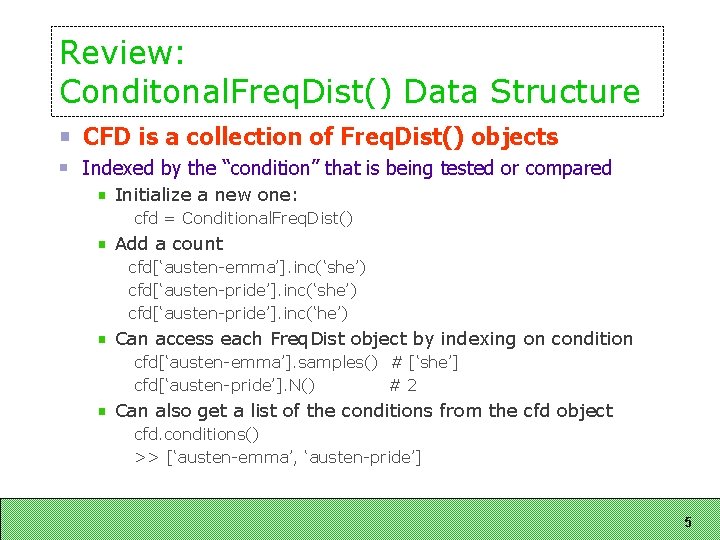

Review: ConditionalFreqDist() Data Structure

A CFD is a collection of FreqDist() objects, indexed by the "condition" that is being tested or compared.

Initialize a new one:

    cfd = ConditionalFreqDist()

Add a count:

    cfd['austen-emma'].inc('she')
    cfd['austen-pride'].inc('he')

Access each FreqDist object by indexing on its condition:

    cfd['austen-emma'].samples()   # ['she']
    cfd['austen-pride'].N()        # 1 (one count added above)

Get a list of the conditions from the cfd object:

    cfd.conditions()               # ['austen-emma', 'austen-pride']
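
The nltk_lite calls on this slide date from 2006. As a rough stand-in that runs with only the standard library, the same structure can be sketched as a `defaultdict` of `Counter` objects. The mapping of `samples()`, `N()`, and `conditions()` onto plain Python expressions is an assumption made for illustration, not the library's implementation.

```python
from collections import Counter, defaultdict

# Stand-in for a conditional frequency distribution:
# one Counter (frequency distribution) per condition.
cfd = defaultdict(Counter)

# Add a count under each condition.
cfd["austen-emma"]["she"] += 1
cfd["austen-pride"]["he"] += 1

samples = list(cfd["austen-emma"])           # plays the role of samples()
total = sum(cfd["austen-pride"].values())    # plays the role of N()
conditions = sorted(cfd)                     # plays the role of conditions()

print(samples, total, conditions)
```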

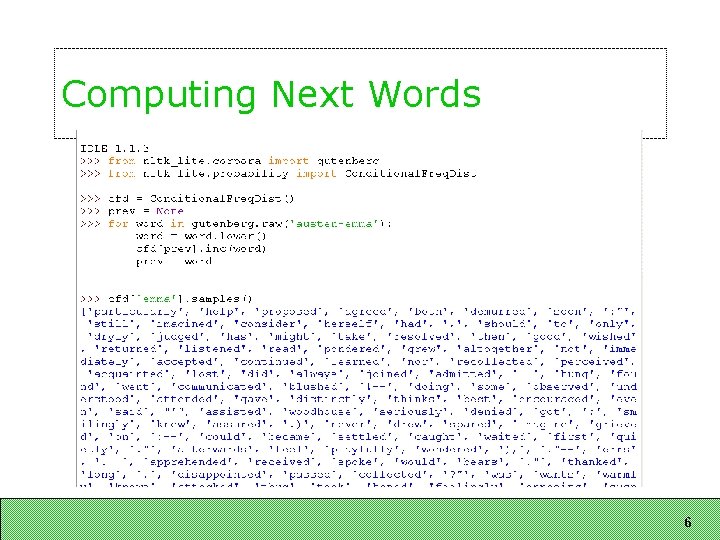

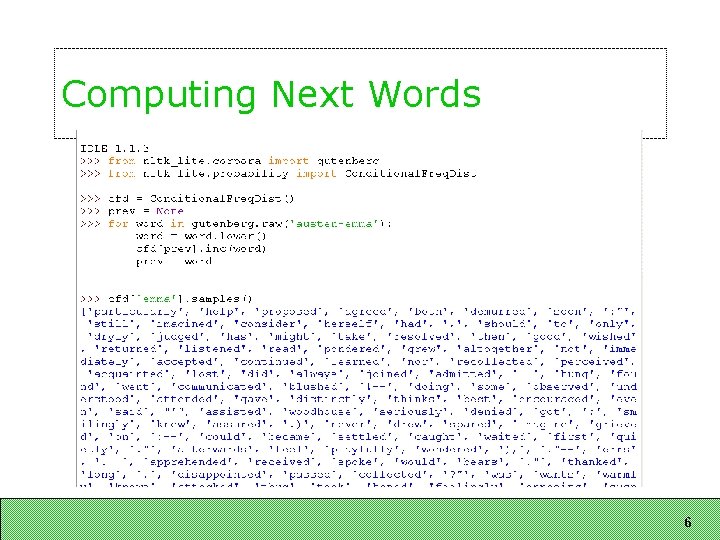

Computing Next Words

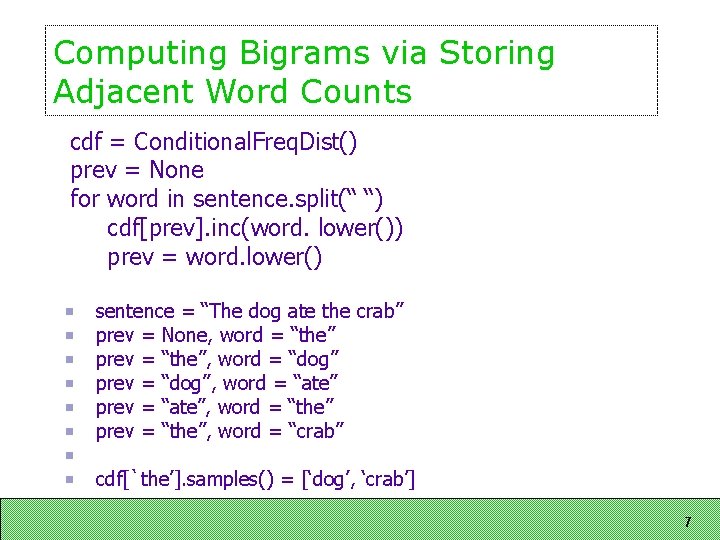

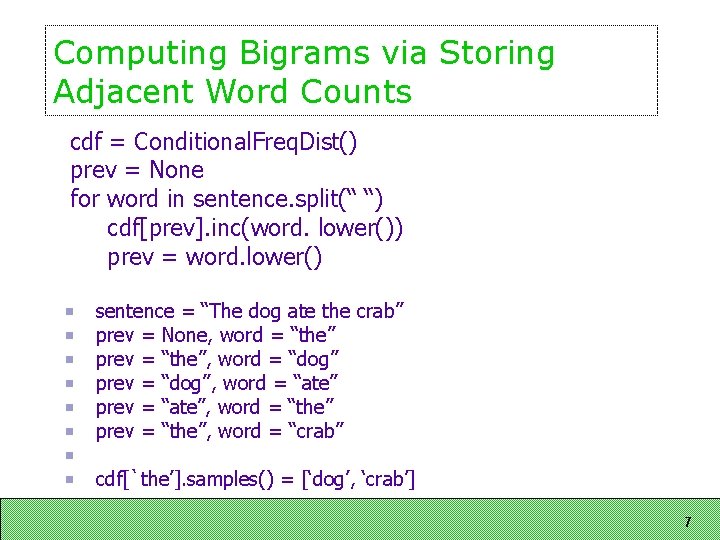

Computing Bigrams via Storing Adjacent Word Counts

    cfd = ConditionalFreqDist()
    sentence = "The dog ate the crab"
    prev = None
    for word in sentence.split(" "):
        # count this word under the condition "previous word"
        cfd[prev].inc(word.lower())
        prev = word.lower()

Trace for sentence = "The dog ate the crab":

- prev = None, word = "the"
- prev = "the", word = "dog"
- prev = "dog", word = "ate"
- prev = "ate", word = "the"
- prev = "the", word = "crab"

Result: cfd['the'].samples() = ['dog', 'crab']
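
A minimal runnable sketch of the same loop, using the standard library in place of nltk_lite. The helper name `count_bigrams` is hypothetical, and `defaultdict(Counter)` is again only a stand-in for the conditional frequency distribution.

```python
from collections import Counter, defaultdict

def count_bigrams(sentence):
    """Map each word (None at the sentence start) to a Counter of the words that follow it."""
    cfd = defaultdict(Counter)
    prev = None
    for word in sentence.split():
        word = word.lower()
        cfd[prev][word] += 1   # count `word` under the condition `prev`
        prev = word
    return cfd

cfd = count_bigrams("The dog ate the crab")
print(sorted(cfd["the"]))   # the words seen after "the"
```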

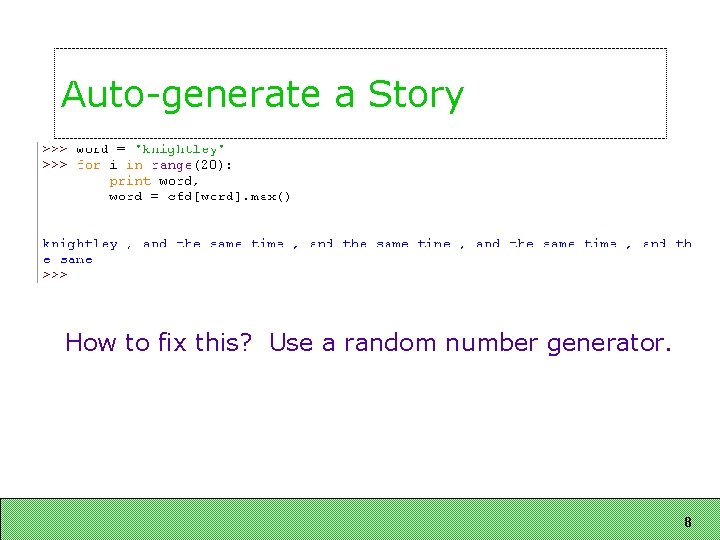

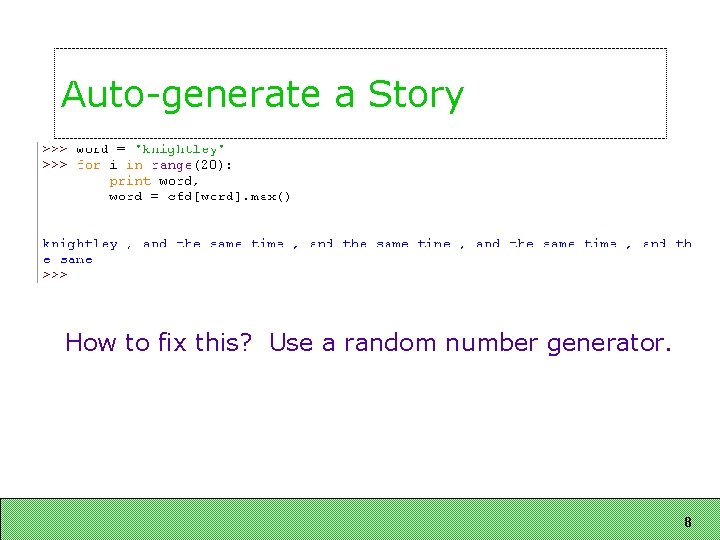

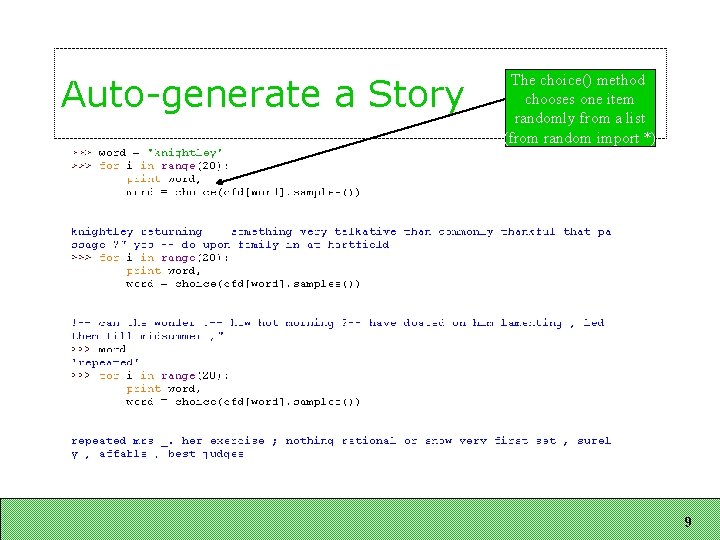

Auto-generate a Story

How to fix this? Use a random number generator.

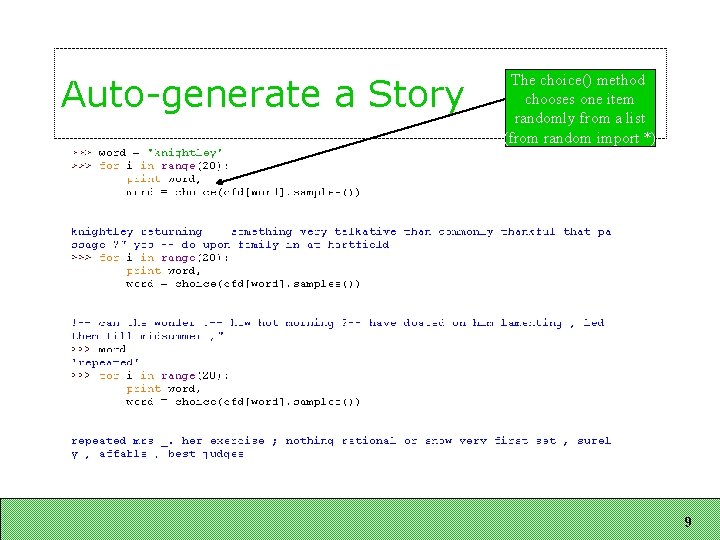

Auto-generate a Story

The choice() function from the random module chooses one item at random from a list (from random import *)
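
One way this might look in practice: a sketch that builds a successor list for each word and lets `choice()` pick the next word. The training text and the helper names `build_successors` and `generate` are made up for illustration. A seeded `random.Random` is used only so that runs are repeatable.

```python
import random
from collections import defaultdict

def build_successors(text):
    """Map each word to the list of words observed right after it (repeats kept)."""
    successors = defaultdict(list)
    words = text.lower().split()
    for prev, word in zip(words, words[1:]):
        successors[prev].append(word)
    return successors

def generate(successors, start, length, rng):
    """Walk the bigram table, choosing each next word at random."""
    story = [start]
    for _ in range(length - 1):
        options = successors.get(story[-1])
        if not options:          # dead end: no observed successor
            break
        story.append(rng.choice(options))
    return story

text = "the dog ate the crab and the crab ate the dog"  # hypothetical training text
successors = build_successors(text)
story = generate(successors, "the", 8, random.Random(0))
print(" ".join(story))
```

Because repeats are kept in each successor list, frequent continuations are proportionally more likely to be chosen.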

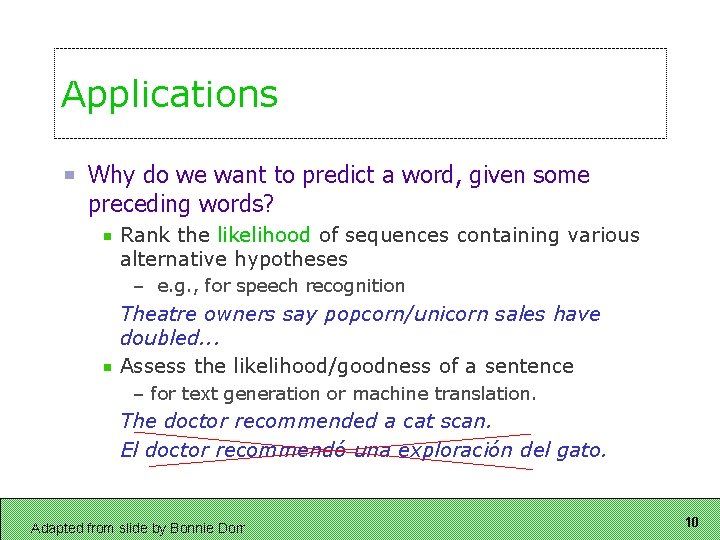

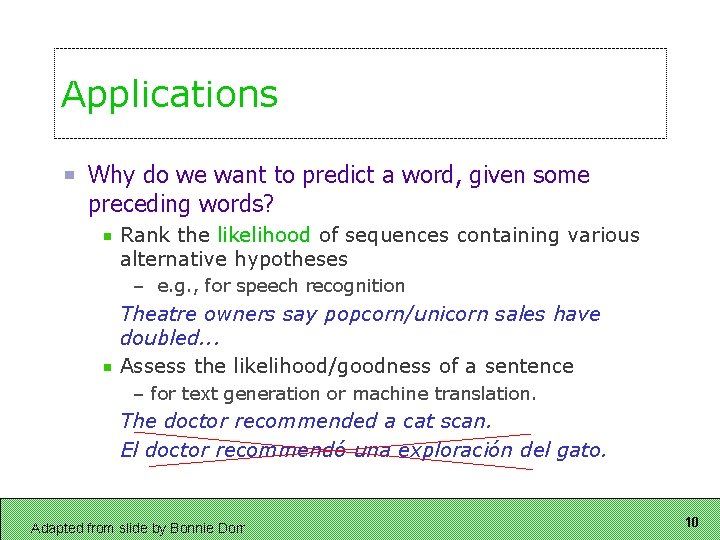

Applications

Why do we want to predict a word, given some preceding words?

- Rank the likelihood of sequences containing various alternative hypotheses, e.g., for speech recognition:
  Theatre owners say popcorn/unicorn sales have doubled...
- Assess the likelihood/goodness of a sentence, for text generation or machine translation:
  The doctor recommended a cat scan.
  El doctor recomendó una exploración del gato.

Adapted from slide by Bonnie Dorr
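
As a rough illustration of ranking alternative hypotheses, the sketch below scores two candidate word sequences with bigram counts. The tiny corpus is invented, and add-one smoothing is an assumption introduced here so that unseen bigrams do not score zero. The slide does not prescribe a scoring method.

```python
from collections import Counter

# Hypothetical toy corpus; a real system would use far more text.
corpus = ("theatre owners say popcorn sales rose . "
          "popcorn sales have doubled . "
          "ticket sales have doubled .").split()

unigrams = Counter(corpus)
bigrams = Counter(zip(corpus, corpus[1:]))
vocab_size = len(unigrams)

def bigram_prob(prev, word):
    """Add-one smoothed estimate of P(word | prev)."""
    return (bigrams[(prev, word)] + 1) / (unigrams[prev] + vocab_size)

def sequence_score(words):
    """Product of smoothed bigram probabilities over the sequence."""
    score = 1.0
    for prev, word in zip(words, words[1:]):
        score *= bigram_prob(prev, word)
    return score

candidates = [
    "owners say popcorn sales have doubled".split(),
    "owners say unicorn sales have doubled".split(),
]
best = max(candidates, key=sequence_score)
print(" ".join(best))
```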

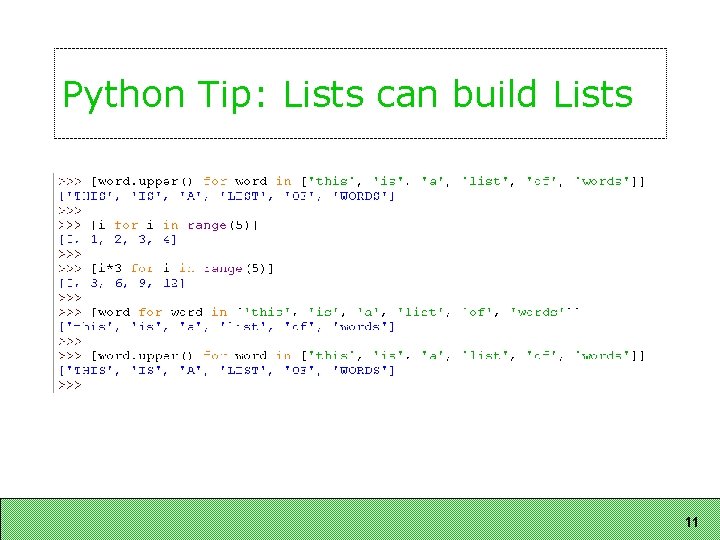

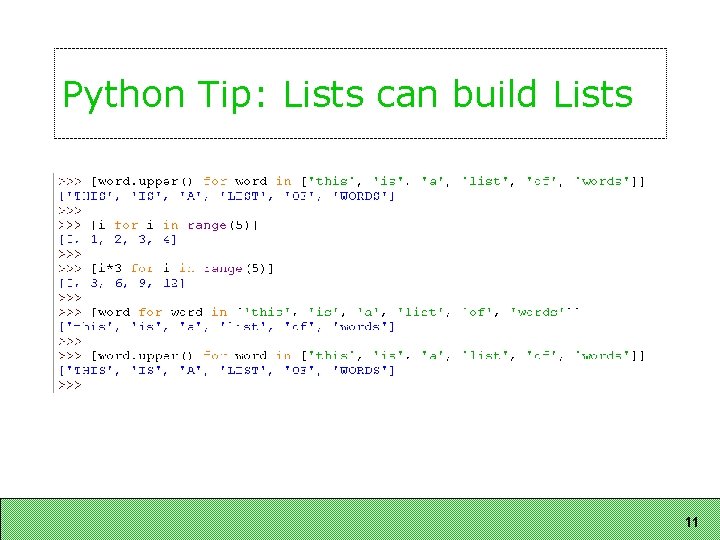

Python Tip: Lists can build Lists

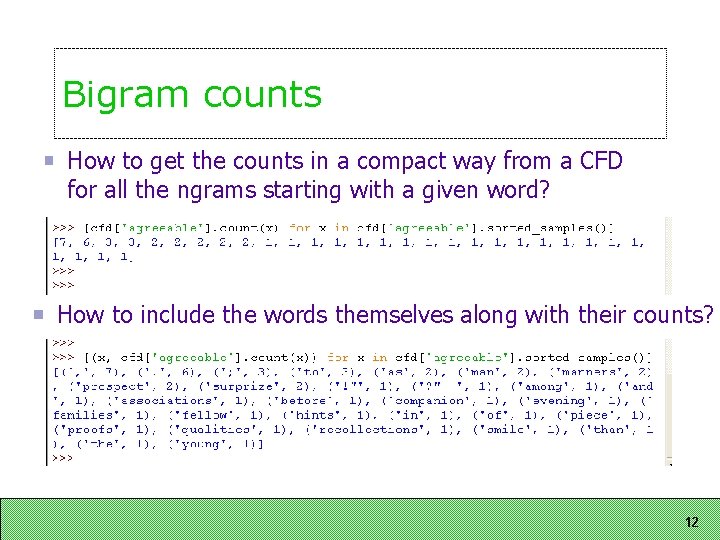

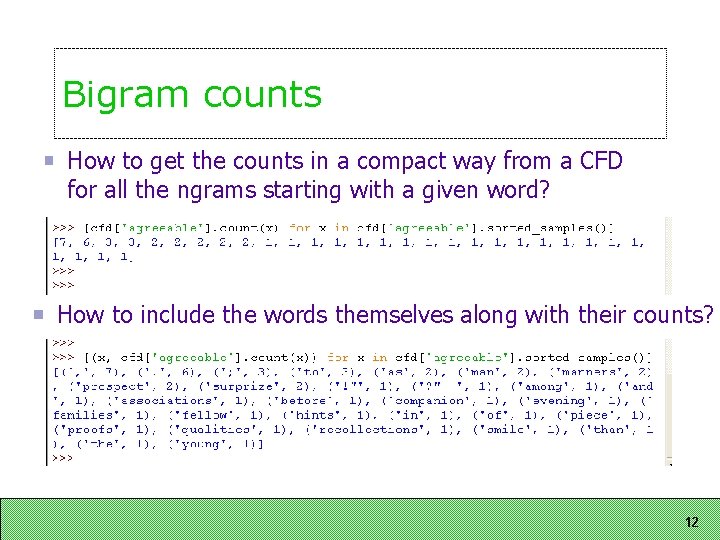

Bigram counts

- How to get the counts in a compact way from a CFD for all the ngrams starting with a given word?
- How to include the words themselves along with their counts?
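
One possible answer, sketched with list comprehensions over a standard-library stand-in for the CFD. The sample text is hypothetical, and the slide's own solution is in the image, so this is not necessarily the same code.

```python
from collections import Counter, defaultdict

# Build bigram counts: condition = previous word, sample = next word.
cfd = defaultdict(Counter)
words = "the dog ate the crab and the dog slept".split()  # hypothetical text
for prev, word in zip(words, words[1:]):
    cfd[prev][word] += 1

# Counts only, for every bigram that starts with "the".
counts = [cfd["the"][w] for w in cfd["the"]]

# The following words together with their counts.
pairs = [(w, cfd["the"][w]) for w in cfd["the"]]

print(counts)
print(pairs)
```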

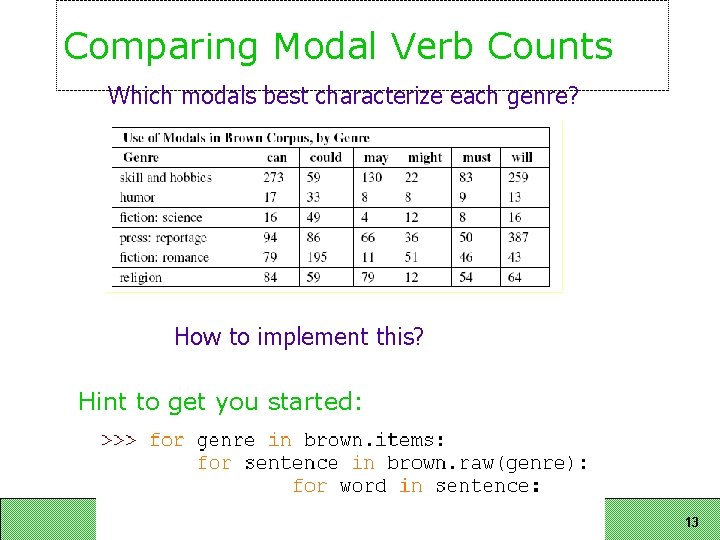

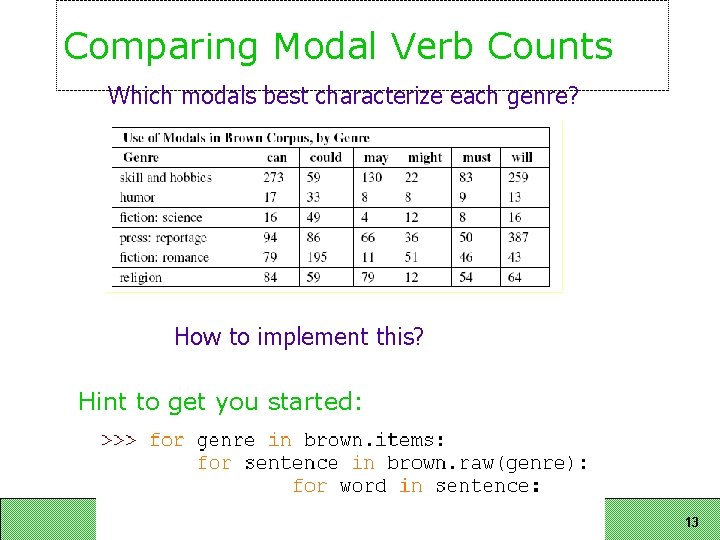

Comparing Modal Verb Counts

Which modals best characterize each genre? How to implement this? Hint to get you started:
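
A sketch of one way to approach the exercise, with the genre as the condition and the modal verb as the sample. The modal list and the two genre snippets are invented for illustration. The exercise itself presumably draws on a real tagged corpus.

```python
from collections import Counter, defaultdict

MODALS = ["can", "could", "may", "might", "must", "will"]  # illustrative list

# Hypothetical stand-ins for genre-labelled texts.
genre_texts = {
    "news": "the council will vote and the mayor may resign",
    "romance": "she could not stay and he might never return",
}

# Condition = genre, sample = modal verb.
cfd = defaultdict(Counter)
for genre, text in genre_texts.items():
    for word in text.lower().split():
        if word in MODALS:
            cfd[genre][word] += 1

for genre in genre_texts:
    print(genre, [(m, cfd[genre][m]) for m in MODALS])
```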

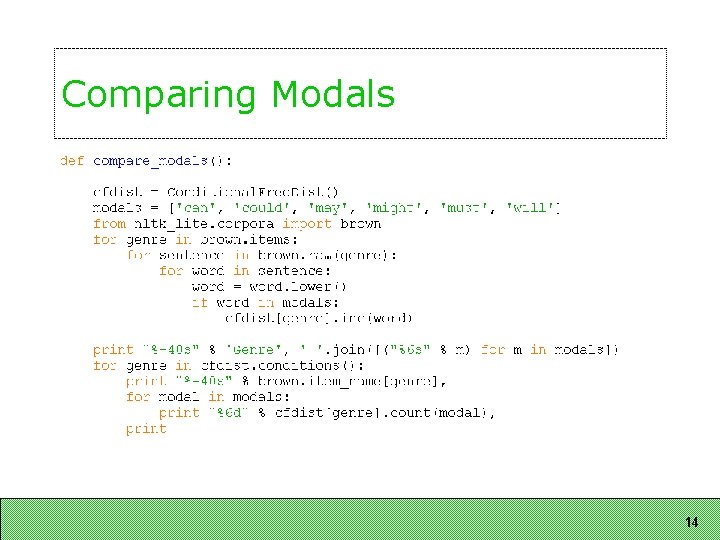

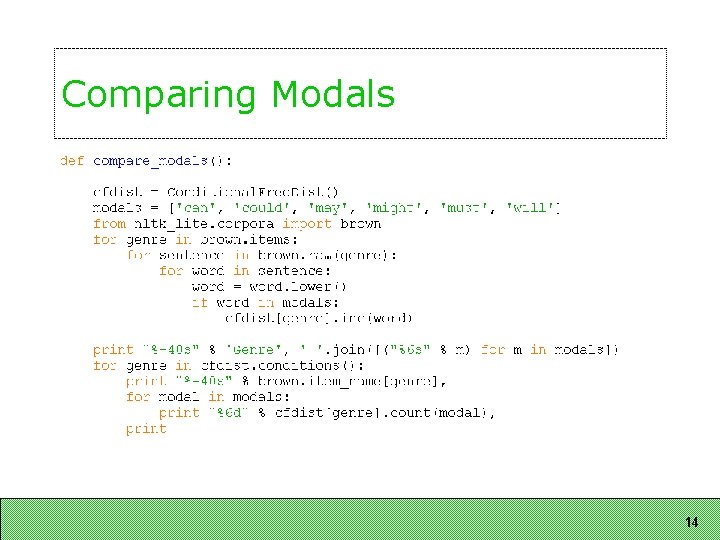

Comparing Modals

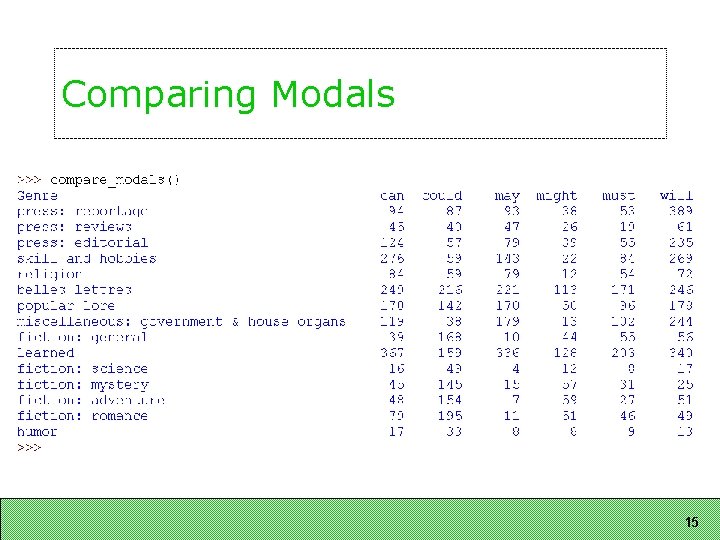

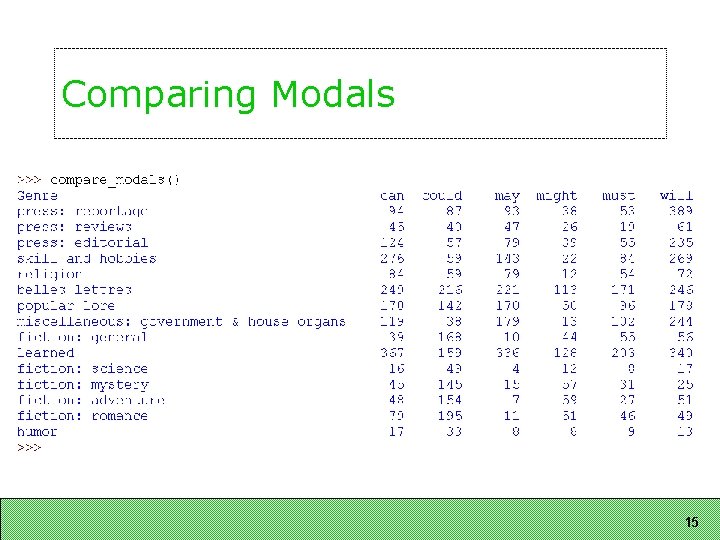

Comparing Modals

Part-of-Speech Tagging

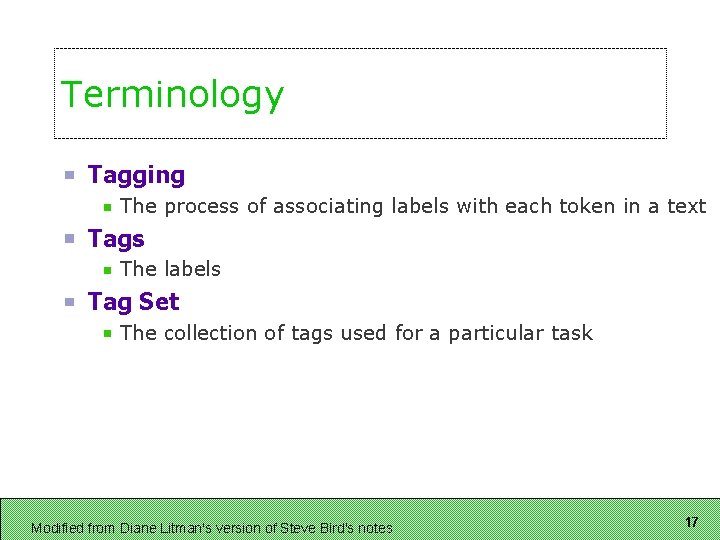

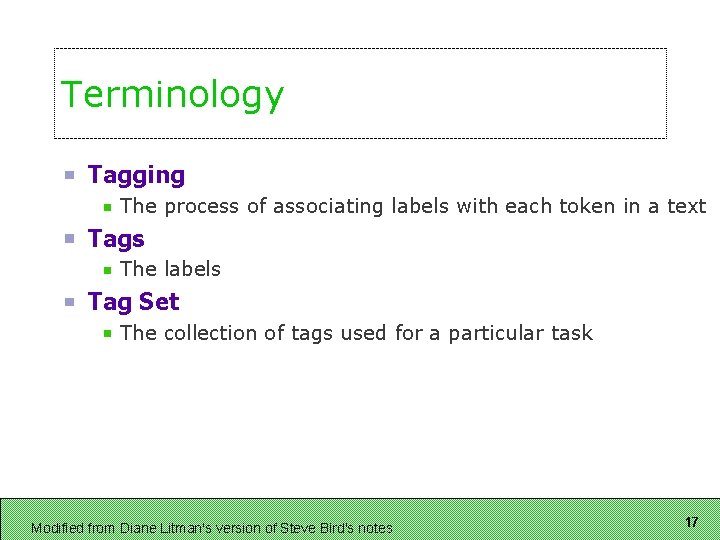

Terminology

- Tagging: the process of associating labels with each token in a text
- Tags: the labels
- Tag set: the collection of tags used for a particular task

Modified from Diane Litman's version of Steve Bird's notes

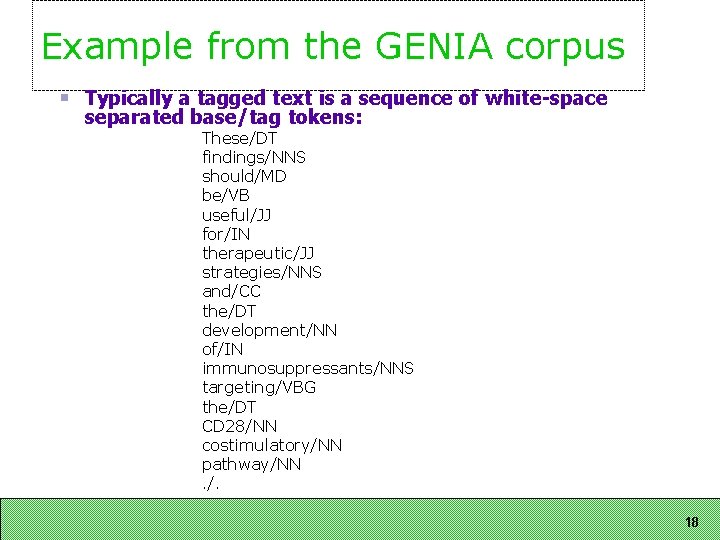

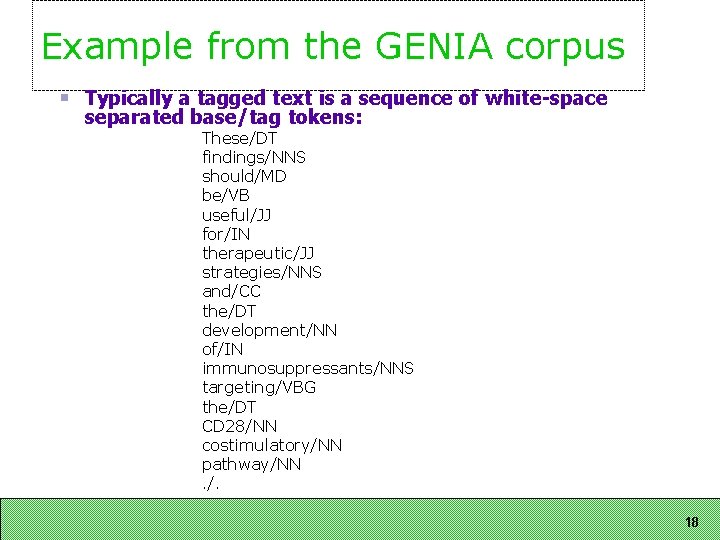

Example from the GENIA corpus

Typically a tagged text is a sequence of white-space separated base/tag tokens:

These/DT findings/NNS should/MD be/VB useful/JJ for/IN therapeutic/JJ strategies/NNS and/CC the/DT development/NN of/IN immunosuppressants/NNS targeting/VBG the/DT CD28/NN costimulatory/NN pathway/NN ./.
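
A small sketch of reading this format back into (word, tag) pairs. The function name `parse_tagged` is hypothetical. Splitting on the last slash is a choice made here so that a token such as `./.` still yields a word and a tag.

```python
def parse_tagged(text):
    """Split whitespace-separated 'word/TAG' tokens into (word, tag) tuples."""
    pairs = []
    for token in text.split():
        # rpartition splits on the LAST "/", so "./." becomes (".", ".")
        word, _, tag = token.rpartition("/")
        pairs.append((word, tag))
    return pairs

tagged = parse_tagged("These/DT findings/NNS should/MD be/VB useful/JJ ./.")
words = [word for word, tag in tagged]
tags = [tag for word, tag in tagged]
print(tagged)
```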

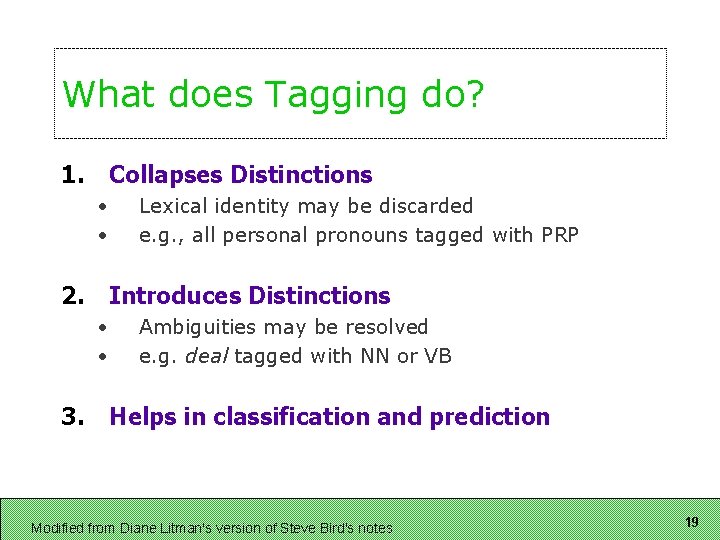

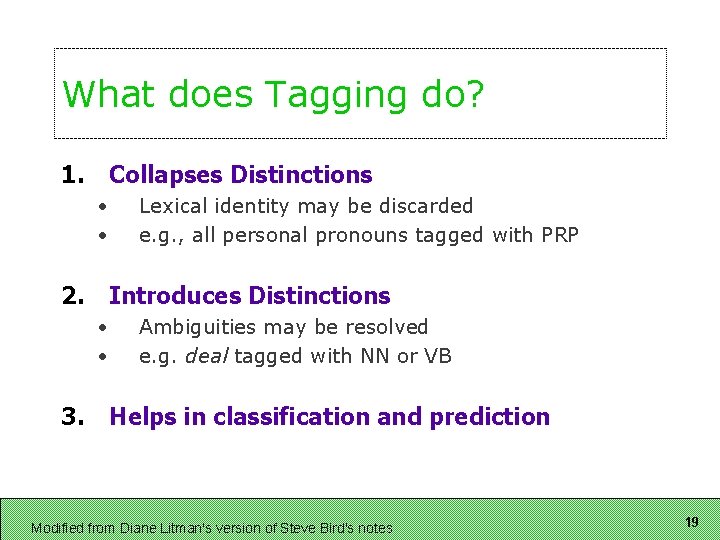

What does Tagging do?

1. Collapses distinctions
   - Lexical identity may be discarded
   - e.g., all personal pronouns tagged with PRP
2. Introduces distinctions
   - Ambiguities may be resolved
   - e.g., deal tagged with NN or VB
3. Helps in classification and prediction

Modified from Diane Litman's version of Steve Bird's notes

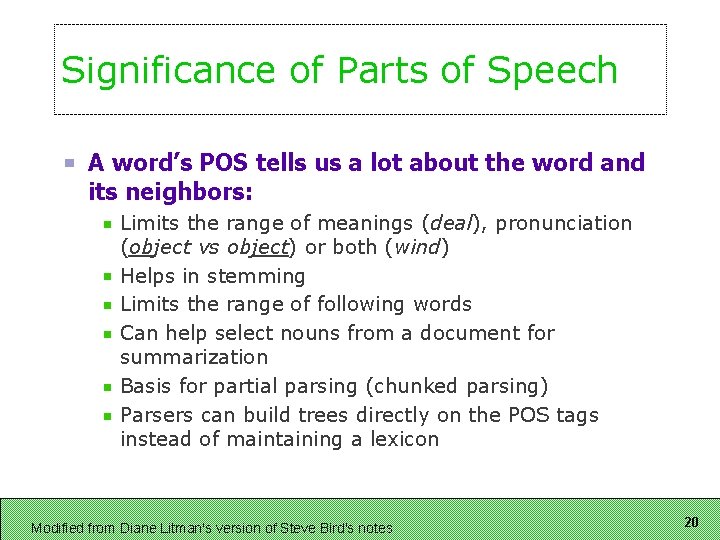

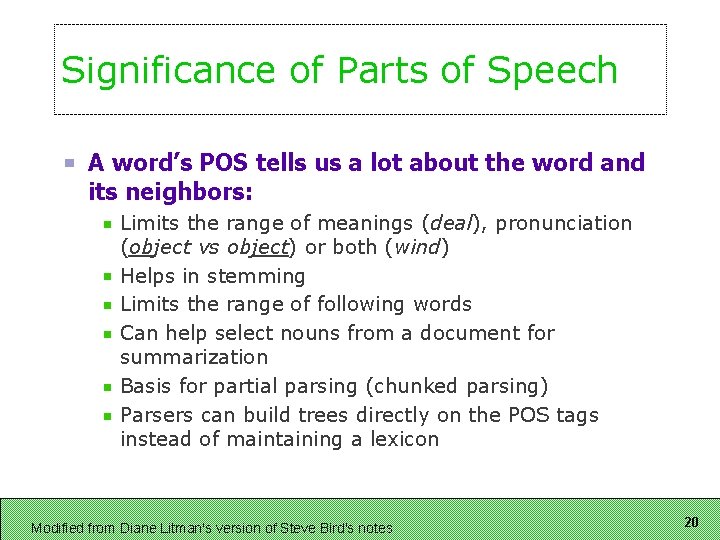

Significance of Parts of Speech

A word's POS tells us a lot about the word and its neighbors:

- Limits the range of meanings (deal), pronunciation (OBject vs. obJECT), or both (wind)
- Helps in stemming
- Limits the range of following words
- Can help select nouns from a document for summarization
- Basis for partial parsing (chunked parsing)
- Parsers can build trees directly on the POS tags instead of maintaining a lexicon

Modified from Diane Litman's version of Steve Bird's notes

Choosing a tagset

The choice of tagset greatly affects the difficulty of the problem. Need to strike a balance between:

- Getting better information about context
- Making it possible for classifiers to do their job

Slide modified from Massimo Poesio's

Some of the best-known Tagsets

- Brown corpus: 87 tags (more when tags are combined)
- Penn Treebank: 45 tags
- Lancaster UCREL C5 (used to tag the BNC): 61 tags
- Lancaster C7: 145 tags

Slide modified from Massimo Poesio's

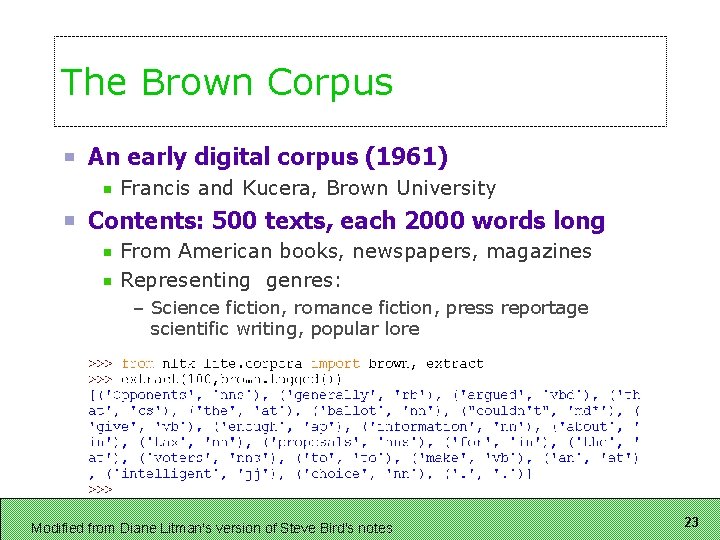

The Brown Corpus

- An early digital corpus (1961)
- Francis and Kucera, Brown University
- Contents: 500 texts, each 2000 words long
- From American books, newspapers, magazines
- Representing genres: science fiction, romance fiction, press reportage, scientific writing, popular lore

Modified from Diane Litman's version of Steve Bird's notes

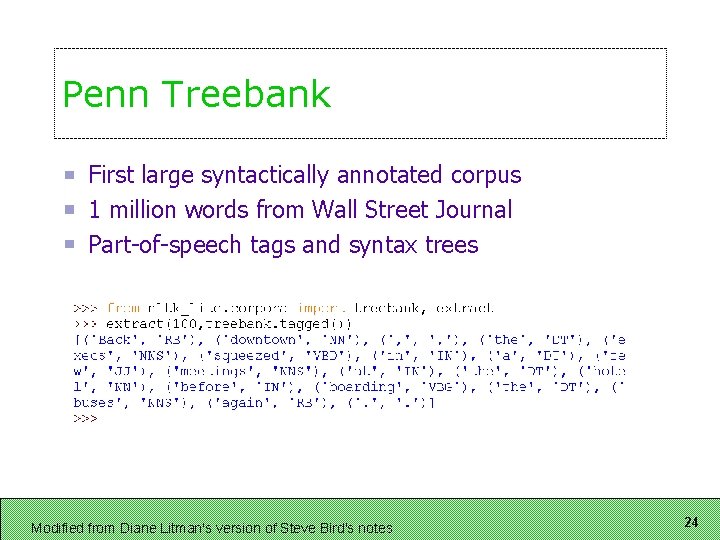

Penn Treebank

- First large syntactically annotated corpus
- 1 million words from Wall Street Journal
- Part-of-speech tags and syntax trees

Modified from Diane Litman's version of Steve Bird's notes

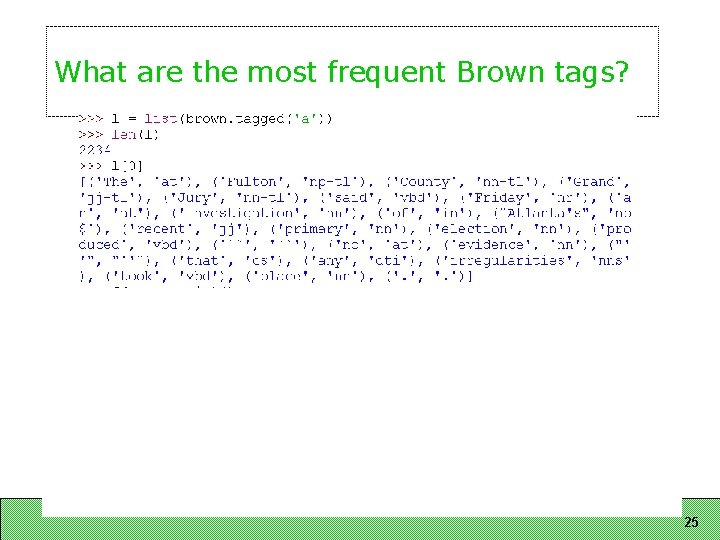

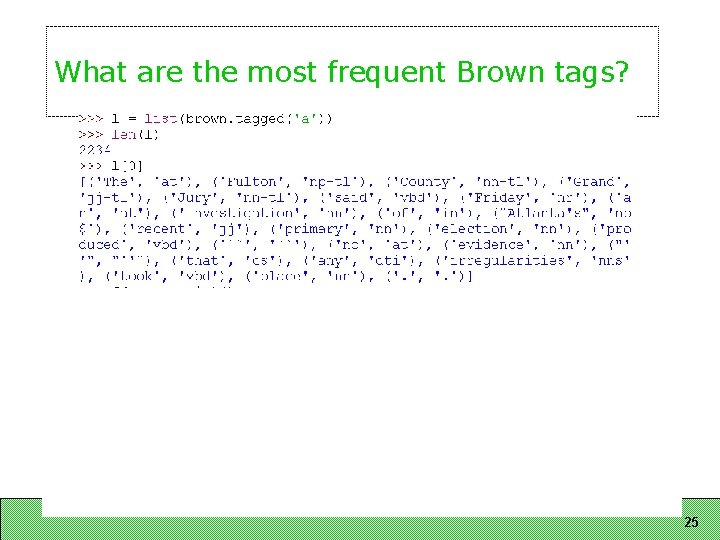

What are the most frequent Brown tags?

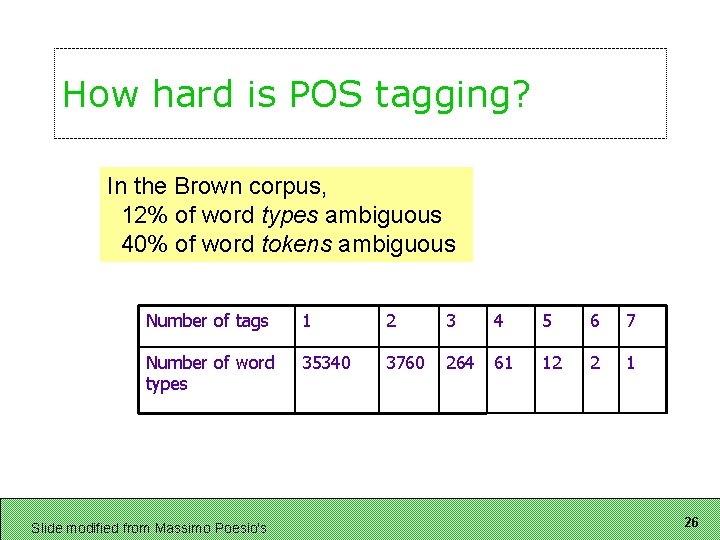

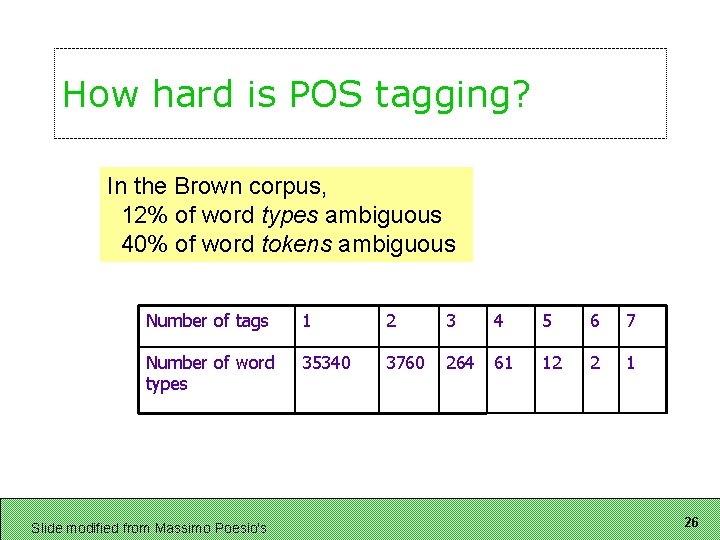

How hard is POS tagging?

In the Brown corpus:

- 12% of word types are ambiguous
- 40% of word tokens are ambiguous

| Number of tags | Number of word types |
|---|---|
| 1 | 35340 |
| 2 | 3760 |
| 3 | 264 |
| 4 | 61 |
| 5 | 12 |
| 6 | 2 |
| 7 | 1 |

Slide modified from Massimo Poesio's

Tagging methods

- Hand-coded
- Statistical taggers
- Brill (transformation-based) tagger

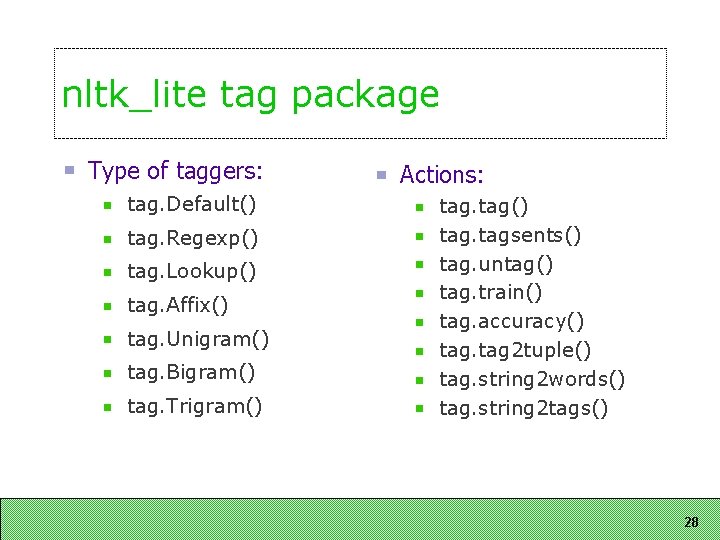

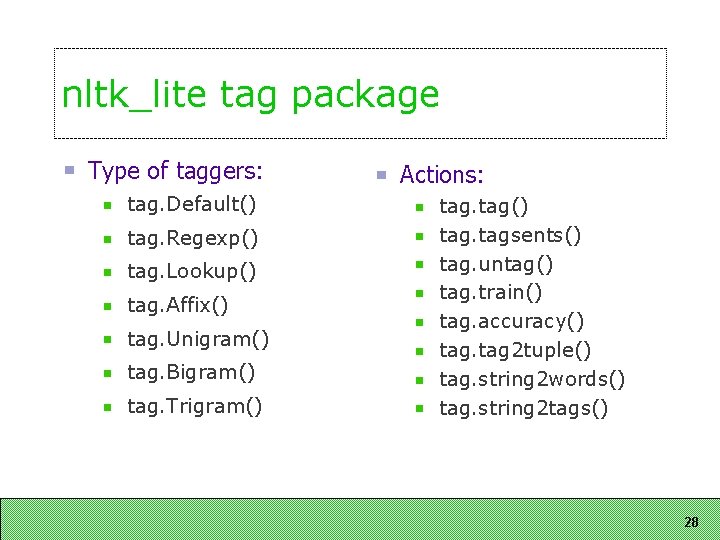

nltk_lite tag package

Types of taggers:

- tag.Default()
- tag.Regexp()
- tag.Lookup()
- tag.Affix()
- tag.Unigram()
- tag.Bigram()
- tag.Trigram()

Actions:

- tag()
- tagsents()
- tag.untag()
- tag.train()
- tag.accuracy()
- tag2tuple()
- tag.string2words()
- tag.string2tags()

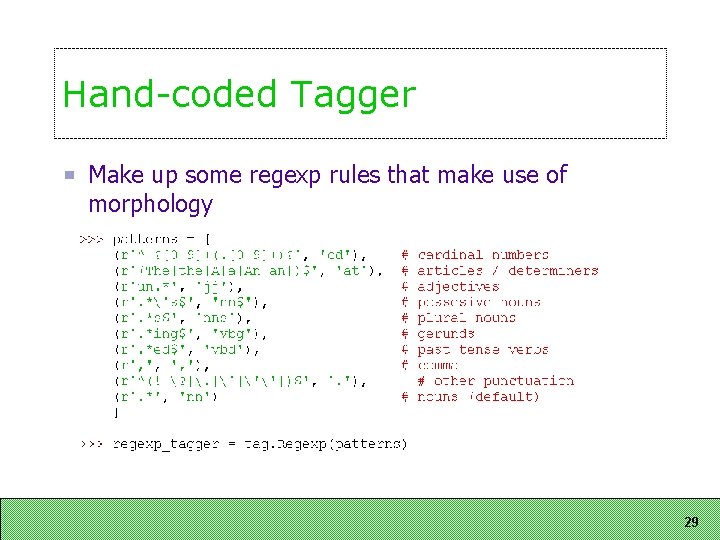

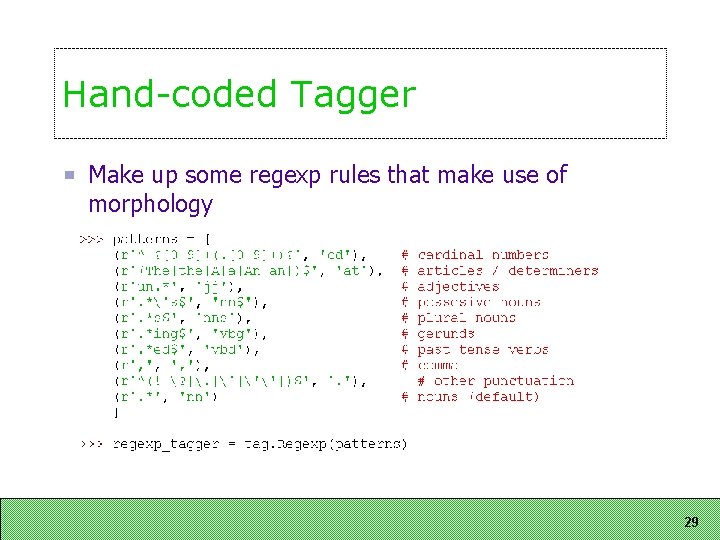

Hand-coded Tagger

Make up some regexp rules that make use of morphology
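
A minimal sketch of such a tagger using only Python's `re` module. The suffix rules, the Brown-style tags chosen for them, and the name `regexp_tag` are illustrative assumptions. They are not the rules shown in the slide's image, and this is not the nltk_lite tag.Regexp() API.

```python
import re

# Ordered (pattern, tag) rules; the first pattern that matches wins.
RULES = [
    (re.compile(r".*ing$"), "VBG"),               # gerunds: running
    (re.compile(r".*ed$"), "VBD"),                # simple past: barked
    (re.compile(r".*ly$"), "RB"),                 # adverbs: loudly
    (re.compile(r".*s$"), "NNS"),                 # plural nouns: dogs
    (re.compile(r"^[0-9]+(\.[0-9]+)?$"), "CD"),   # cardinal numbers: 42
    (re.compile(r".*"), "NN"),                    # default: noun
]

def regexp_tag(tokens):
    """Tag each token with the tag of the first rule whose pattern matches."""
    tagged = []
    for token in tokens:
        for pattern, tag in RULES:
            if pattern.match(token):
                tagged.append((token, tag))
                break
    return tagged

result = regexp_tag("the dogs barked loudly while running 42".split())
print(result)
```

Rules this crude mis-tag many words (here "the" and "while" fall through to NN), which is why the output is worth comparing against a hand-tagged corpus.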

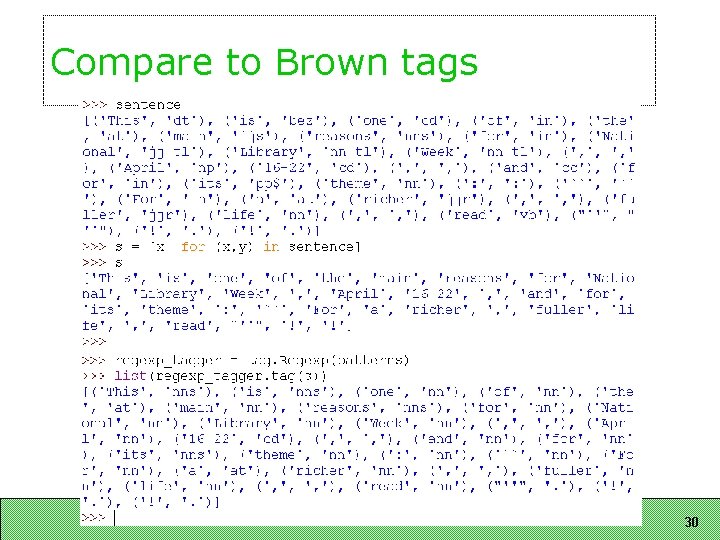

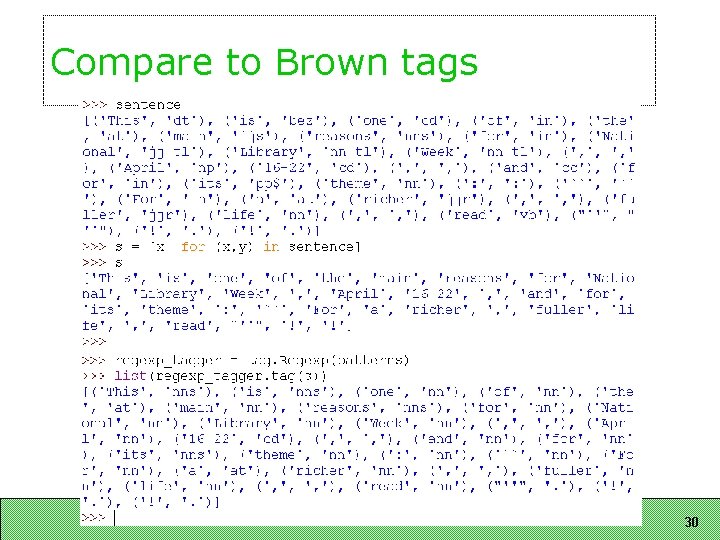

Compare to Brown tags

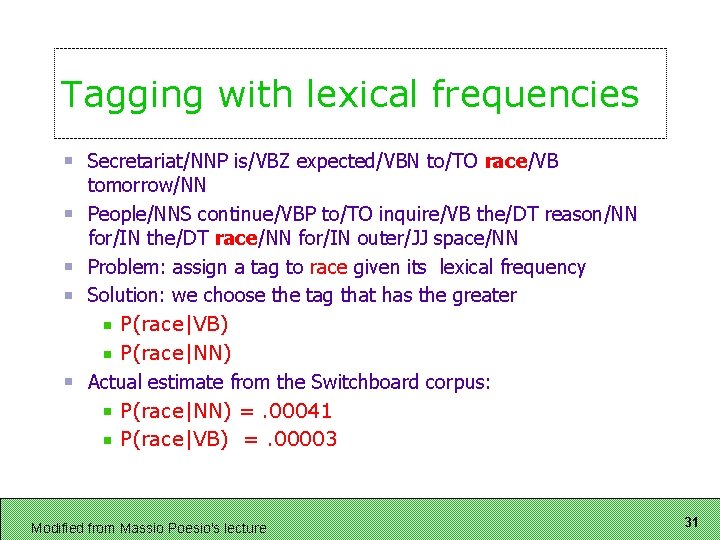

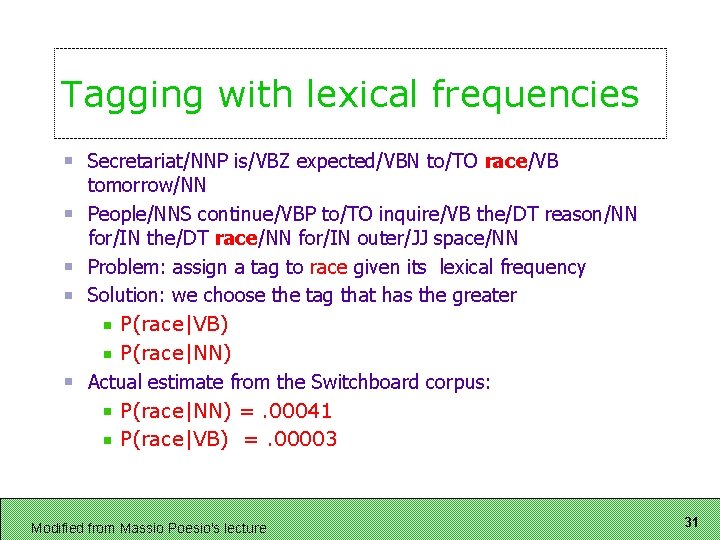

Tagging with lexical frequencies

- Secretariat/NNP is/VBZ expected/VBN to/TO race/VB tomorrow/NN
- People/NNS continue/VBP to/TO inquire/VB the/DT reason/NN for/IN the/DT race/NN for/IN outer/JJ space/NN

Problem: assign a tag to race given its lexical frequency.

Solution: choose the tag with the greater likelihood, P(race|VB) or P(race|NN).

Actual estimates from the Switchboard corpus:

- P(race|NN) = .00041
- P(race|VB) = .00003

Modified from Massimo Poesio's lecture
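
The decision rule on this slide amounts to taking the tag with the largest estimate. A minimal sketch using the two Switchboard figures quoted above (the variable names are hypothetical):

```python
# Estimates of P(race | tag) quoted on the slide.
likelihoods = {"NN": 0.00041, "VB": 0.00003}

# Pick the tag whose estimate is largest.
best_tag = max(likelihoods, key=likelihoods.get)
print(best_tag)
```

Because this rule ignores context, it gives race the same tag in both example sentences, including "to/TO race/VB", where the correct tag is VB.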

Next Time

- N-gram taggers
- Training, testing, and determining accuracy
- The Brill tagger