HyperSight: Boosting Distant 3D Vision on a Single Dual-camera Smartphone

Zifan Liu¹, Hongzi Zhu¹, Junchi Chen¹, Shan Chang² and Lili Qiu³

¹ Shanghai Jiao Tong University, China; ² Donghua University, China; ³ University of Texas at Austin, USA

Motivation

- The depth and 3D coordinate information around a smartphone and its user can be of great interest.
- Dual cameras are now popular on smartphones.

Pinhole Model of a Camera

- A general camera can be characterized by a simple pinhole camera model plus lens distortions.

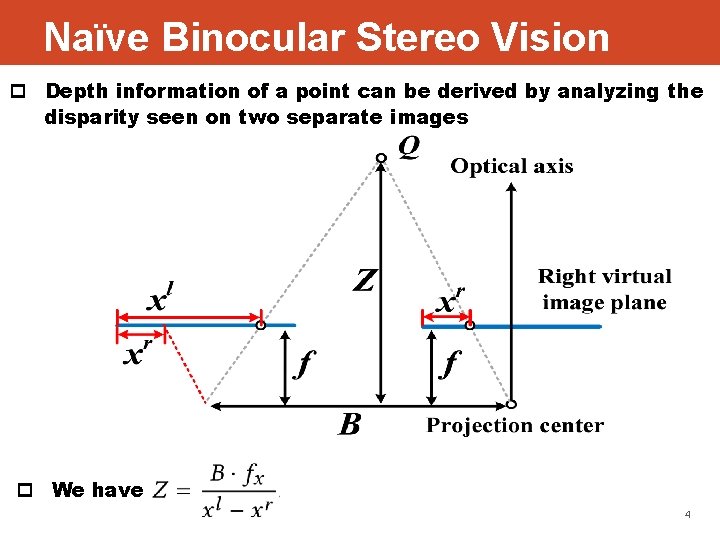

Naïve Binocular Stereo Vision

- Depth information of a point can be derived by analyzing the disparity seen on two separate images.
- We have the relation shown in the figure.

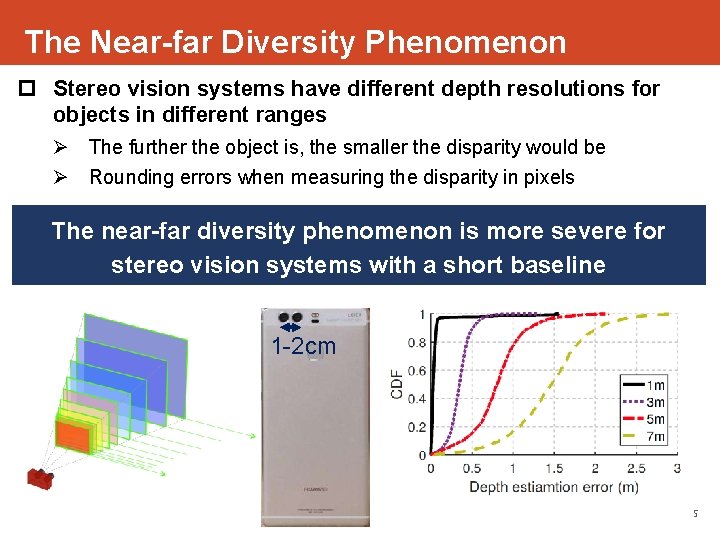
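
The slide's formula survives only in the figure, so the following is a minimal sketch of the textbook relation for a rectified stereo pair, Z = f·B/d, not necessarily the exact notation used on the slide. The function name and the numbers (a focal length of 1000 pixels and a 5-pixel disparity) are assumptions for illustration; the 2.5 cm baseline is the short baseline mentioned later in the evaluation.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point from its disparity in a rectified stereo pair: Z = f * B / d.

    focal_px     -- focal length expressed in pixels (assumed value below)
    baseline_m   -- distance between the two camera centers, in meters
    disparity_px -- horizontal shift of the point between the two images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical example: 1000 px focal length, 2.5 cm baseline, 5 px disparity.
print(depth_from_disparity(1000.0, 0.025, 5.0))
```

Because depth is inversely proportional to disparity, halving the disparity doubles the estimated depth.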

The Near-far Diversity Phenomenon

- Stereo vision systems have different depth resolutions for objects in different ranges.
  - The farther the object is, the smaller the disparity.
  - Rounding errors arise when the disparity is measured in pixels.
- The near-far diversity phenomenon is more severe for stereo vision systems with a short baseline (1-2 cm).

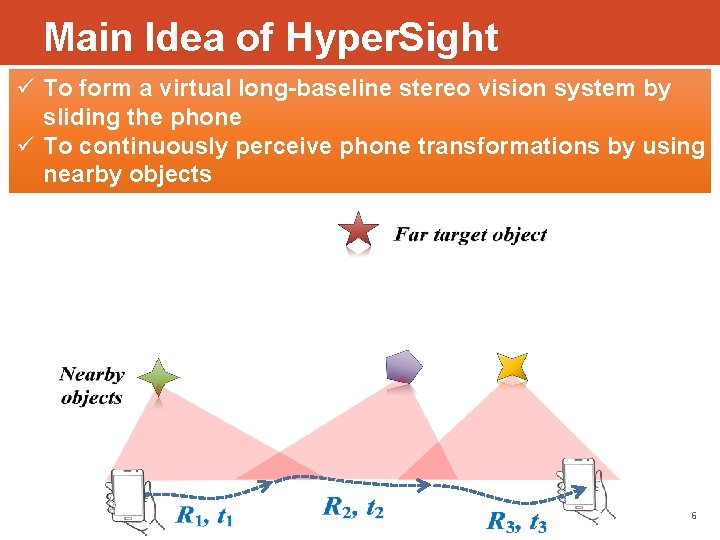
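
To make the rounding effect concrete, here is a small sketch that rounds the ideal disparity to a whole pixel and recomputes the depth. It assumes the standard relation Z = f·B/d and a hypothetical focal length of 1000 pixels; the function names are illustrative and the numbers are not measurements from the slides.

```python
def quantized_depth(true_depth_m: float, focal_px: float, baseline_m: float) -> float:
    """Depth recovered after the disparity has been rounded to a whole pixel."""
    ideal_disparity = focal_px * baseline_m / true_depth_m
    measured = round(ideal_disparity)  # disparity is measured in whole pixels
    if measured == 0:
        return float("inf")  # the point is too far to produce any measurable disparity
    return focal_px * baseline_m / measured


def relative_error(true_depth_m: float, focal_px: float, baseline_m: float) -> float:
    """Relative depth error caused purely by disparity rounding."""
    estimate = quantized_depth(true_depth_m, focal_px, baseline_m)
    return abs(estimate - true_depth_m) / true_depth_m


FOCAL_PX = 1000.0  # assumed focal length in pixels
for depth in (1.1, 7.0):
    for baseline in (0.02, 0.205):
        err = relative_error(depth, FOCAL_PX, baseline)
        print(f"depth={depth} m, baseline={baseline} m, relative error={err:.4f}")
```

With the 2 cm baseline the far point has a disparity of only about 3 pixels, so a rounding step of a fraction of a pixel is a large share of the measurement. The longer baseline spreads the same depth over roughly 29 pixels.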

Main Idea of HyperSight

- Form a virtual long-baseline stereo vision system by sliding the phone.
- Continuously perceive phone transformations by using nearby objects.

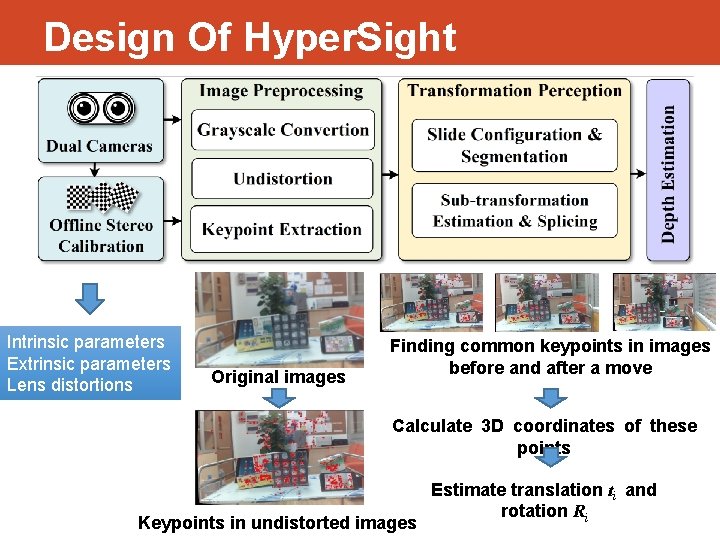

Design of HyperSight

Diagram labels: intrinsic parameters, extrinsic parameters and lens distortions; original images; keypoints in undistorted images; finding common keypoints in images before and after a move; calculating the 3D coordinates of these points; estimating translation t_i and rotation R_i.

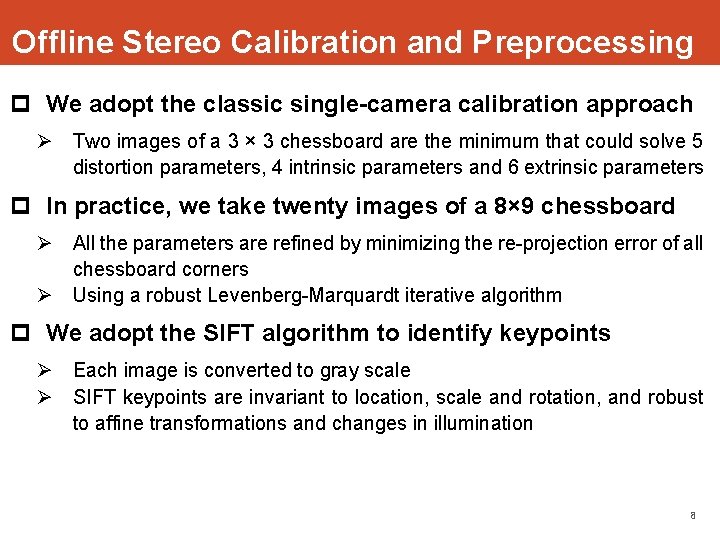

Offline Stereo Calibration and Preprocessing

- We adopt the classic single-camera calibration approach.
  - Two images of a 3 × 3 chessboard are the minimum needed to solve for 5 distortion parameters, 4 intrinsic parameters and 6 extrinsic parameters.
- In practice, we take twenty images of an 8 × 9 chessboard.
  - All the parameters are refined by minimizing the re-projection error of all chessboard corners.
  - The refinement uses a robust Levenberg-Marquardt iterative algorithm.
- We adopt the SIFT algorithm to identify keypoints.
  - Each image is converted to grayscale.
  - SIFT keypoints are invariant to location, scale and rotation, and robust to affine transformations and changes in illumination.

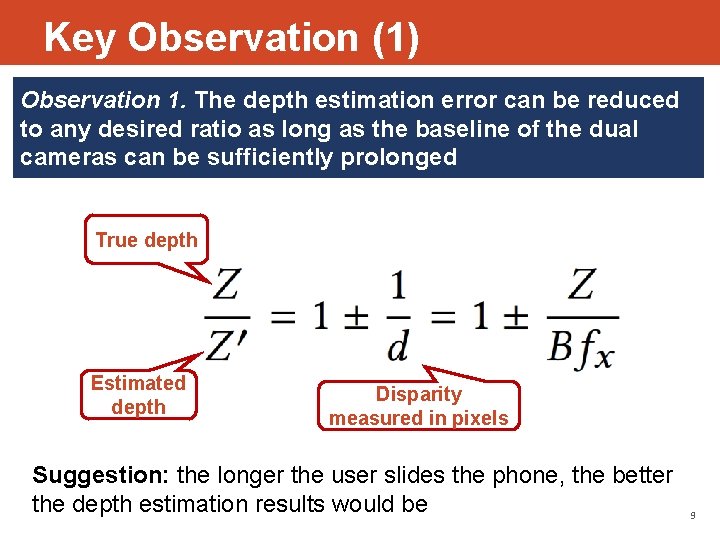

Key Observation (1)

Observation 1. The depth estimation error can be reduced to any desired ratio as long as the baseline of the dual cameras can be sufficiently prolonged.

Equation labels: true depth; estimated depth; disparity measured in pixels.

Suggestion: the longer the user slides the phone, the better the depth estimation results.

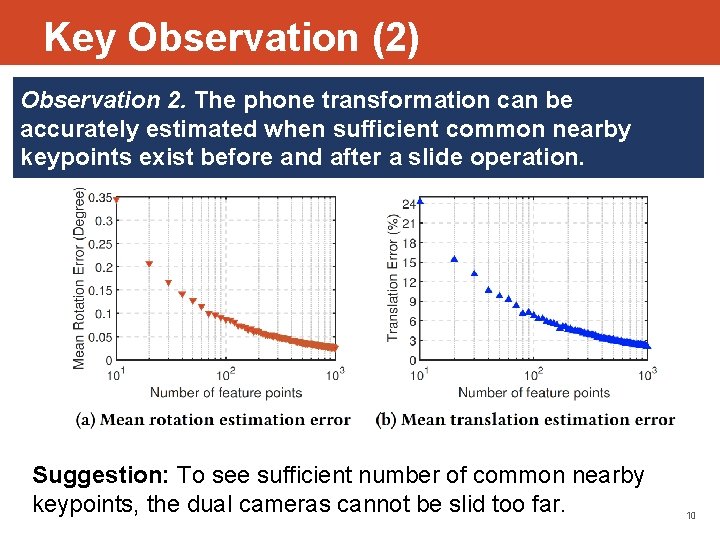
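
The equation on this slide is only available in the figure. As a hedged sketch of why Observation 1 holds, assume the standard relation between depth $Z$, focal length $f$ (in pixels), baseline $B$ and disparity $d$, and let $\epsilon$ be the error made when the disparity is measured in whole pixels (the symbols $f$, $B$, $d$ and $\epsilon$ are assumptions, not the slide's notation):

$$
Z = \frac{fB}{d}, \qquad
\hat{Z} = \frac{fB}{d + \epsilon}, \qquad
\frac{|\hat{Z} - Z|}{Z} = \frac{|\epsilon|}{d + \epsilon} = \frac{|\epsilon|}{fB/Z + \epsilon}
\quad (d + \epsilon > 0).
$$

For a fixed target depth $Z$ and a bounded measurement error $|\epsilon|$, the right-hand side tends to zero as $B$ grows, so under this model a long enough baseline brings the relative depth error below any chosen ratio.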

Key Observation (2)

Observation 2. The phone transformation can be accurately estimated when sufficient common nearby keypoints exist before and after a slide operation.

Suggestion: to see a sufficient number of common nearby keypoints, the dual cameras cannot be slid too far.

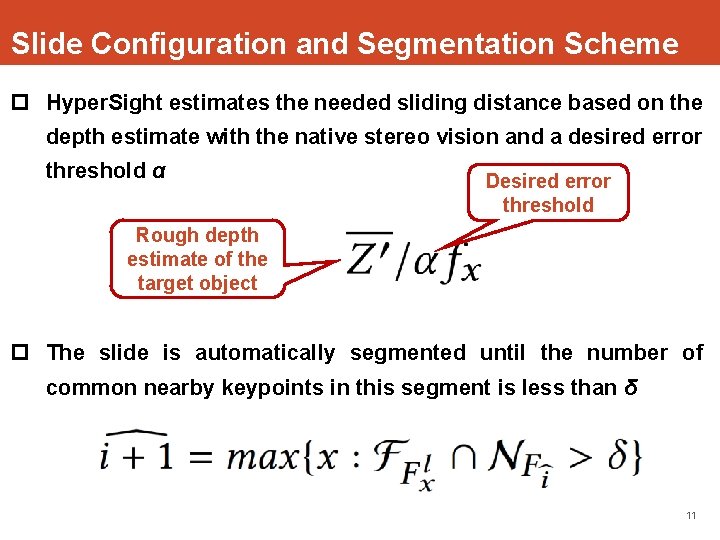

Slide Configuration and Segmentation Scheme

- HyperSight estimates the needed sliding distance based on the depth estimate from the native stereo vision and a desired error threshold α.
  - Equation labels: desired error threshold; rough depth estimate of the target object.
- The slide is automatically split into segments; each segment is extended until the number of common nearby keypoints in it drops below δ.
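
The exact formula for the sliding distance is in the figure and is not reproduced here. The sketch below shows one plausible way to turn a rough depth estimate and a threshold α into a required baseline, assuming the relative depth error is approximately ε·Z/(f·B) for a disparity error of ε pixels. The function name, the half-pixel error and the 1000-pixel focal length are all assumptions for illustration.

```python
def required_baseline(rough_depth_m: float, alpha: float, focal_px: float,
                      disparity_error_px: float = 0.5) -> float:
    """Smallest baseline B (meters) such that eps * Z / (f * B) <= alpha.

    rough_depth_m      -- rough depth estimate Z from the native stereo pair
    alpha              -- desired relative error threshold, e.g. 0.02 for 2%
    focal_px           -- focal length in pixels (assumed known from calibration)
    disparity_error_px -- assumed disparity measurement error (half a pixel)
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must be a ratio between 0 and 1")
    return disparity_error_px * rough_depth_m / (focal_px * alpha)


# Hypothetical example: a target roughly 5 m away with alpha = 2%.
print(required_baseline(5.0, 0.02, 1000.0))
```

Under this model the required baseline grows linearly with the target depth and inversely with α, which matches the intuition that farther targets and tighter thresholds need longer slides.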

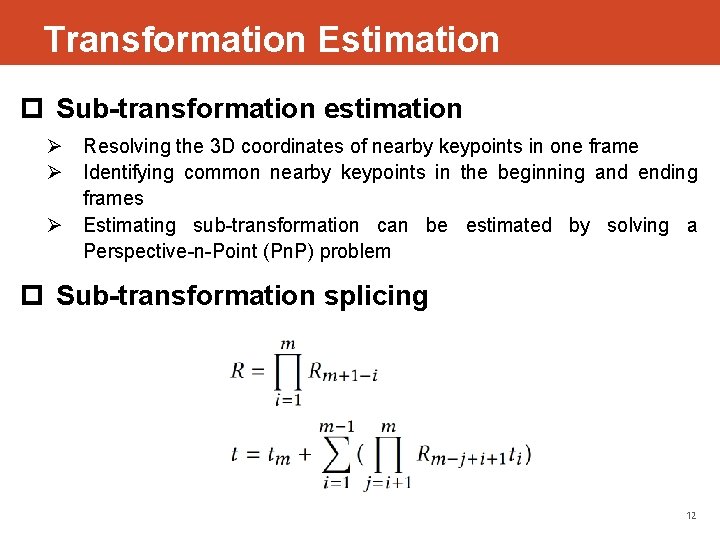
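
One way to read the segmentation rule is as a greedy loop: keep extending the current segment while its first frame still shares at least δ nearby keypoints with the candidate end frame. The sketch below assumes keypoints have already been matched across frames and are represented by integer IDs; `segment_slide`, `frames` and `delta` are hypothetical names, not taken from the HyperSight implementation.

```python
def segment_slide(frames: list[set[int]], delta: int) -> list[tuple[int, int]]:
    """Split a slide into segments given the nearby-keypoint IDs seen in each frame.

    Returns (start, end) frame-index pairs. A segment is extended while its start
    frame and the next candidate end frame share at least `delta` keypoints.
    Adjacent frames always form a segment, even if they share fewer than `delta`.
    """
    segments = []
    start = 0
    last = len(frames) - 1
    while start < last:
        end = start + 1
        # Extend the segment as long as the next frame still shares enough keypoints.
        while end < last and len(frames[start] & frames[end + 1]) >= delta:
            end += 1
        segments.append((start, end))
        start = end  # the next segment begins where this one ended
    return segments


# Hypothetical slide: each frame sees 10 keypoints and loses 2 of them per step.
frames = [set(range(2 * i, 2 * i + 10)) for i in range(5)]
print(segment_slide(frames, delta=6))
```

A larger δ yields shorter segments with more shared keypoints per segment, at the cost of more sub-transformations to estimate.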

Transformation Estimation

- Sub-transformation estimation
  - Resolving the 3D coordinates of nearby keypoints in one frame
  - Identifying common nearby keypoints in the beginning and ending frames
  - Estimating the sub-transformation by solving a Perspective-n-Point (PnP) problem
- Sub-transformation splicing

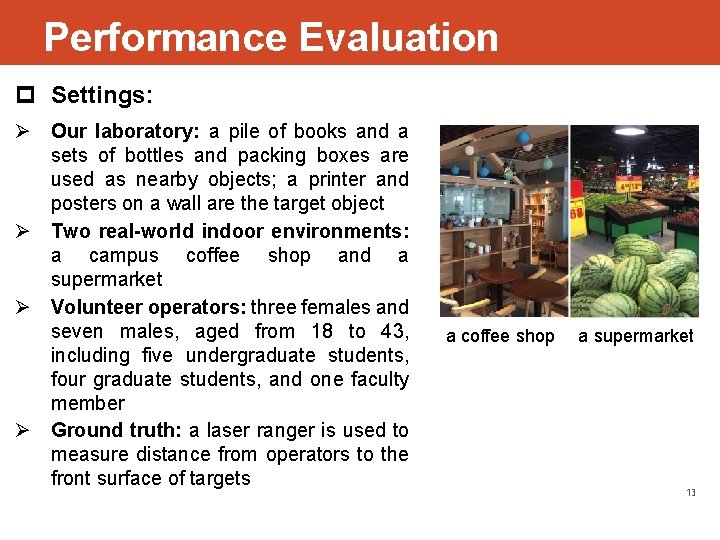
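
Splicing can be illustrated as composing rigid transformations. The sketch below assumes each sub-transformation (R_i, t_i) maps coordinates from the previous frame to the next, X_i = R_i·X_{i-1} + t_i; this convention and the helper names are assumptions, and the PnP step that would produce each (R_i, t_i) is not shown.

```python
Matrix = list[list[float]]
Vector = list[float]


def mat_mul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(a: Matrix, v: Vector) -> Vector:
    """Product of a 3x3 matrix and a 3-vector."""
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def splice(sub_transforms: list[tuple[Matrix, Vector]]) -> tuple[Matrix, Vector]:
    """Compose sub-transformations, given in slide order, into one overall (R, t)."""
    rotation: Matrix = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    translation: Vector = [0.0, 0.0, 0.0]
    for r_i, t_i in sub_transforms:
        # X_i = R_i (R X_0 + t) + t_i = (R_i R) X_0 + (R_i t + t_i)
        translation = [a + b for a, b in zip(mat_vec(r_i, translation), t_i)]
        rotation = mat_mul(r_i, rotation)
    return rotation, translation


# Hypothetical example: two identical steps, each a 90-degree rotation about z
# followed by a shift of one unit along x.
rot_z_90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
R, t = splice([(rot_z_90, [1.0, 0.0, 0.0]), (rot_z_90, [1.0, 0.0, 0.0])])
print(R, t)
```

Because errors in each sub-transformation carry through the composition, the quality of the spliced result depends on every segment having enough common keypoints.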

Performance Evaluation

- Settings:
  - Our laboratory: a pile of books and a set of bottles and packing boxes are used as nearby objects; a printer and posters on a wall are the target objects.
  - Two real-world indoor environments: a campus coffee shop and a supermarket.
  - Volunteer operators: three females and seven males, aged from 18 to 43, including five undergraduate students, four graduate students, and one faculty member.
  - Ground truth: a laser rangefinder is used to measure the distance from operators to the front surface of targets.

Photo labels: a coffee shop; a supermarket.

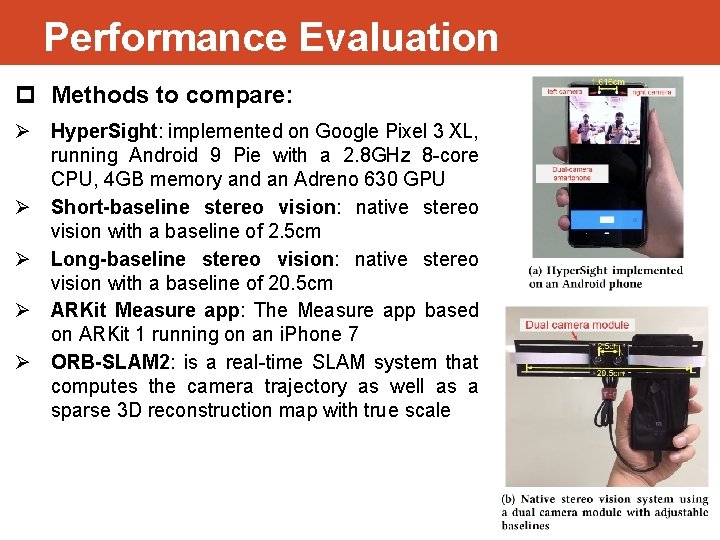

Performance Evaluation

- Methods to compare:
  - HyperSight: implemented on a Google Pixel 3 XL running Android 9 Pie, with a 2.8 GHz 8-core CPU, 4 GB of memory and an Adreno 630 GPU.
  - Short-baseline stereo vision: native stereo vision with a baseline of 2.5 cm.
  - Long-baseline stereo vision: native stereo vision with a baseline of 20.5 cm.
  - ARKit Measure app: the Measure app based on ARKit 1 running on an iPhone 7.
  - ORB-SLAM2: a real-time SLAM system that computes the camera trajectory as well as a sparse 3D reconstruction map with true scale.

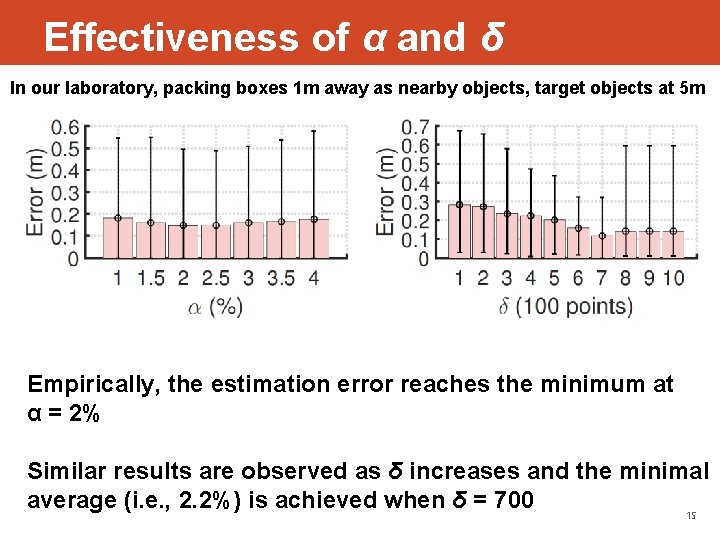

Effectiveness of α and δ

- In our laboratory, packing boxes 1 m away serve as nearby objects, and the target objects are at 5 m.
- Empirically, the estimation error reaches its minimum at α = 2%.
- Similar results are observed as δ increases, and the minimal average error (i.e., 2.2%) is achieved when δ = 700.

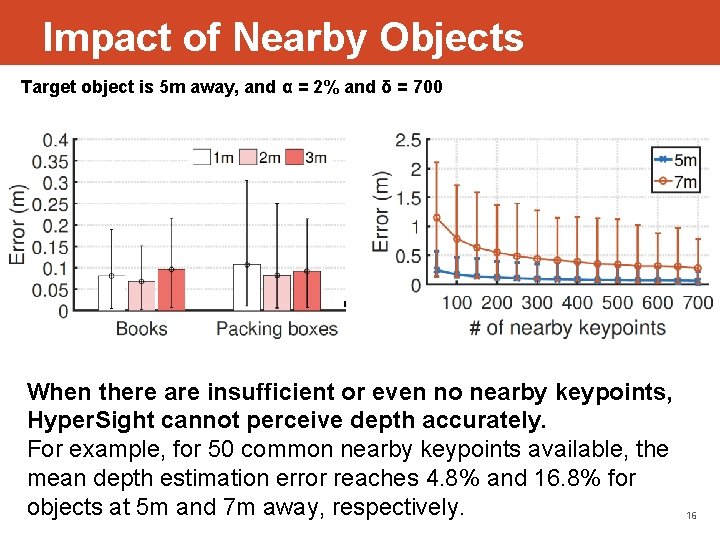

Impact of Nearby Objects

- The target object is 5 m away, with α = 2% and δ = 700.
- When there are insufficient or even no nearby keypoints, HyperSight cannot perceive depth accurately. For example, with 50 common nearby keypoints available, the mean depth estimation error reaches 4.8% and 16.8% for objects 5 m and 7 m away, respectively.

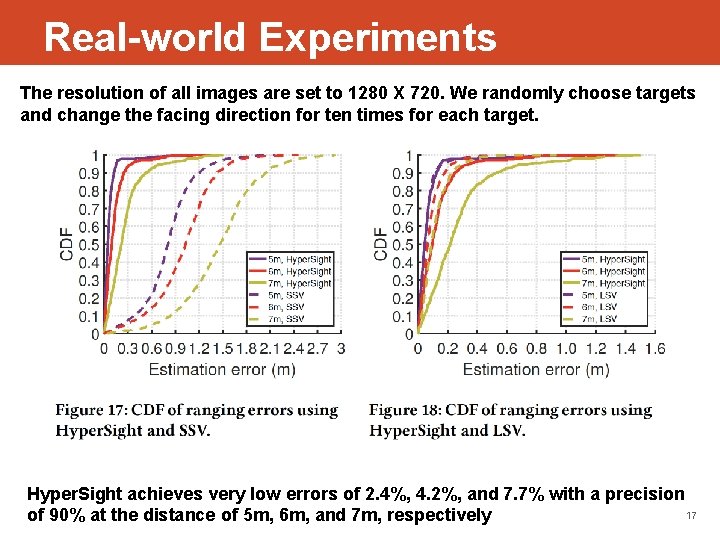

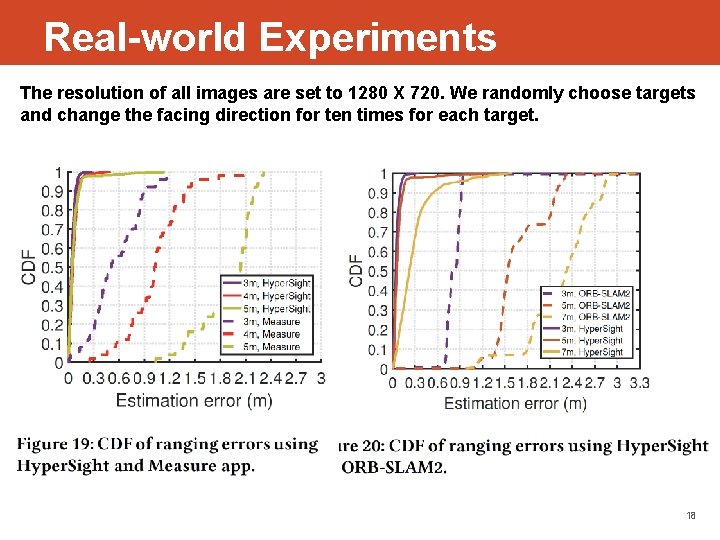

Real-world Experiments

- The resolution of all images is set to 1280 × 720.
- We randomly choose targets and change the facing direction ten times for each target.
- HyperSight achieves low errors of 2.4%, 4.2%, and 7.7% with a precision of 90% at distances of 5 m, 6 m, and 7 m, respectively.

Conclusion

- HyperSight overcomes the low accuracy of the native short-baseline dual cameras when ranging a distant object.
- HyperSight is implemented as software on COTS dual-camera devices.
- HyperSight needs to see nearby objects in order to track the movement of the phone.
- HyperSight fits best in static scenarios where the target and most objects are not moving.
- HyperSight requires both nearby and far objects to have sufficient identified keypoints, which further limits its use in scenes with plain colors and textures.

Thanks! Q&A

hongzi@sjtu.edu.cn

For more details, please visit http://lion.sjtu.edu.cn
