Hybrid Pipeline Structure for Self Organizing Learning Array

ISNN 2007: The 4th International Symposium on Neural Networks
Yinyin Liu¹, Ding Mingwei², Janusz A. Starzyk¹
¹ School of Electrical Engineering & Computer Science, Ohio University, USA
² Ross University

Outline
• RC systems design of SOLAR
• Dimensionality reduction
• Input selection, weighting
• Pipeline structure
• Experimental results
• Conclusions
(Figure: brain diagram labeled with motor cortex, pars opercularis, somatosensory cortex, sensory associative cortex, visual associative cortex, Broca's area, visual cortex, primary auditory cortex, Wernicke's area)

Intelligence: AI's holy grail (image from Pattie Maes, MIT Media Lab)
• "…Perhaps the last frontier of science – its ultimate challenge – is to understand the biological basis of consciousness and the mental process by which we perceive, act, learn and remember…" from Principles of Neural Science by E. R. Kandel et al. E. R. Kandel won the Nobel Prize in 2000 for his work on the physiological basis of memory storage in neurons.
• "…The question of intelligence is the last great terrestrial frontier of science…" from Jeff Hawkins, On Intelligence. Jeff Hawkins founded the Redwood Neuroscience Institute, devoted to brain research. He co-founded Palm Computing and Handspring Inc.

How can we design intelligence?
• We need to know how
• We need means to implement it
• We need resources to build and sustain its operation

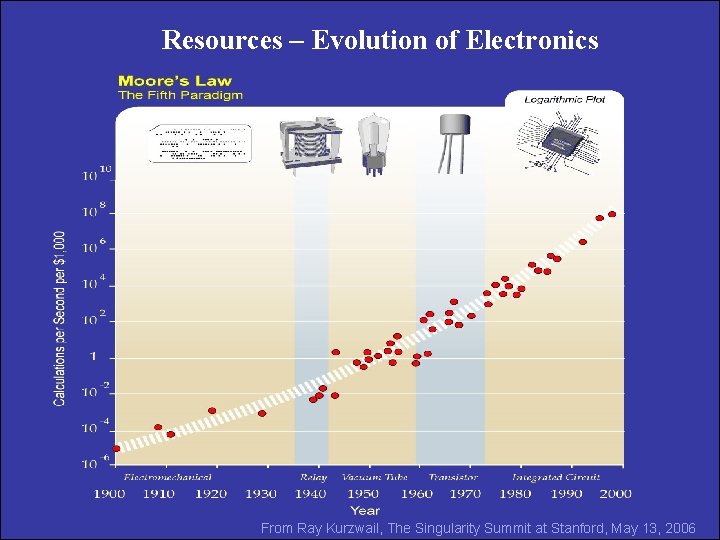

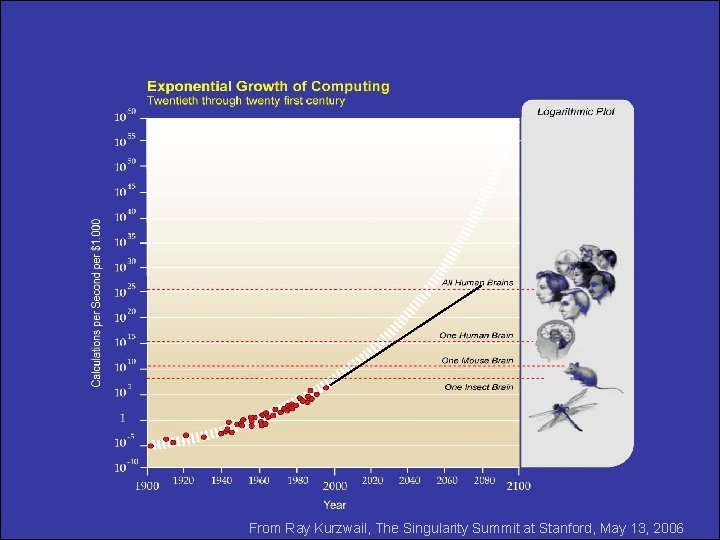

Resources – Evolution of Electronics (from Ray Kurzweil, The Singularity Summit at Stanford, May 13, 2006)

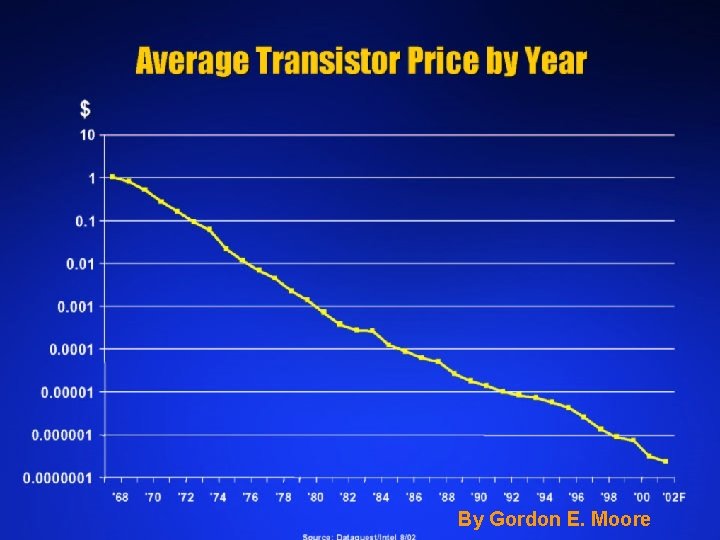

By Gordon E. Moore

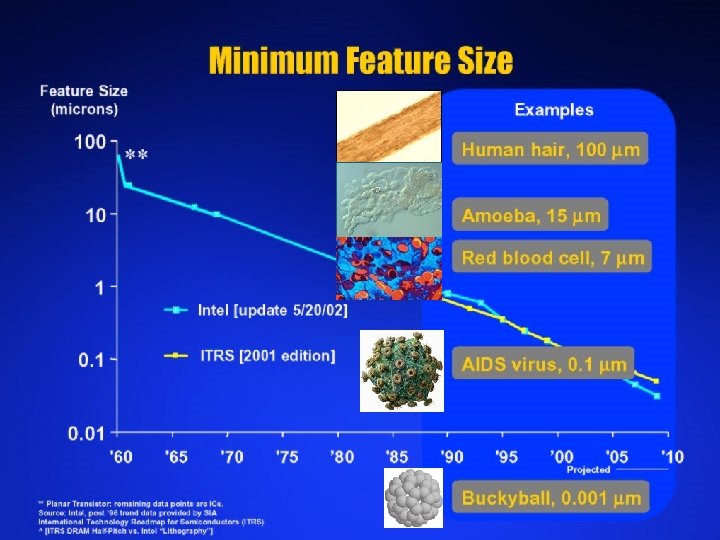


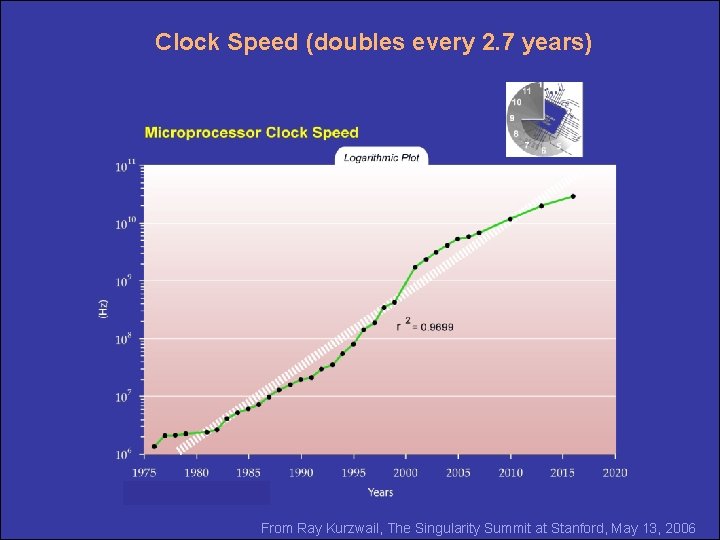

Clock Speed (doubles every 2.7 years). From Ray Kurzweil, The Singularity Summit at Stanford, May 13, 2006
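As a quick illustration of what a 2.7-year doubling period implies (the 10-year horizon is an arbitrary choice for this example), the growth factor after T years is:

```latex
\text{growth}(T) = 2^{T/2.7}, \qquad
\text{growth}(10\ \text{years}) = 2^{10/2.7} \approx 2^{3.70} \approx 13
```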

From Ray Kurzweil, The Singularity Summit at Stanford, May 13, 2006


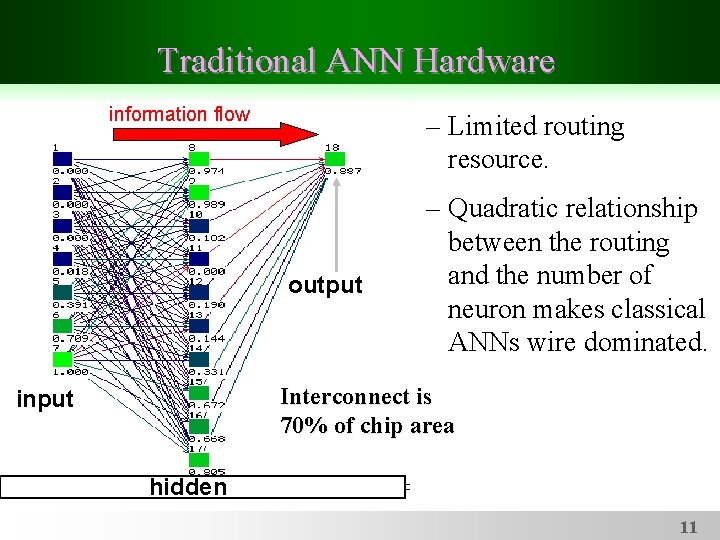

Traditional ANN Hardware (Figure: information flow from the input layer through the hidden layer to the output layer)
– Limited routing resources.
– The quadratic relationship between routing and the number of neurons makes classical ANNs wire-dominated: interconnect takes up 70% of the chip area.
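The quadratic-versus-linear argument can be made concrete by counting links. The sketch below is illustrative only: it compares a fully connected three-layer network of 3n neurons with a hypothetical 3 × n array of the same size in which every node reads a fixed number of local neighbours (the fan-in of 4 is an assumption, not a SOLAR parameter).

```python
def full_connections(layer_sizes):
    """Point-to-point links between consecutive, fully connected layers."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


def local_connections(rows, cols, fan_in):
    """Links in a rows x cols array where each node reads `fan_in` neighbours.

    Assumes every node has exactly `fan_in` inputs (edge effects ignored).
    """
    return rows * cols * fan_in


for n in (10, 100, 1000):
    # Both networks contain 3n neurons: n input, n hidden, n output
    # versus a 3 x n locally connected array with a hypothetical fan-in of 4.
    print(n, full_connections([n, n, n]), local_connections(3, n, 4))
```

Doubling n quadruples the fully connected link count but only doubles the local one; at n = 1000 the fully connected network needs 2,000,000 links versus 12,000 for the local array.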

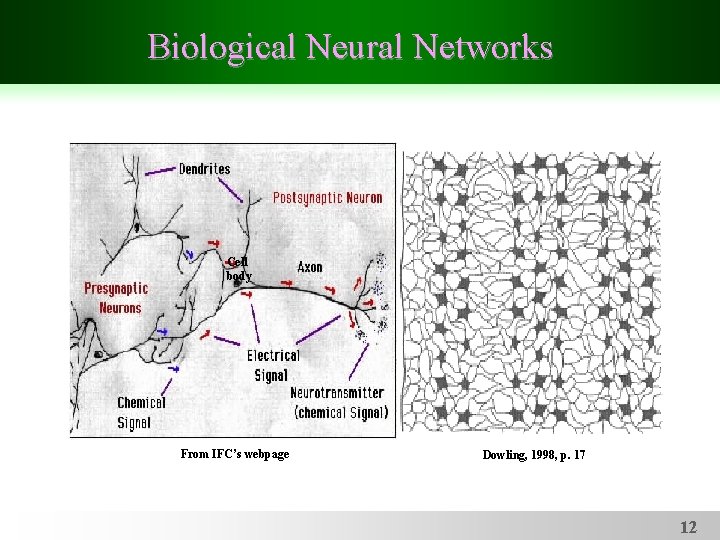

Biological Neural Networks (Figure: neuron with labeled cell body; from IFC's webpage; Dowling, 1998, p. 17)

Sparse Structure
• The 10^12 neurons in the human brain are sparsely connected
• On average, each neuron is connected to other neurons through about 10^4 synapses
• Sparse structure enables efficient computation and saves energy and cost
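A back-of-the-envelope calculation with the figures on this slide shows just how sparse this is: compare the approximate number of synapses with the number of possible neuron pairs.

```latex
\text{synapses} \approx 10^{12} \times 10^{4} = 10^{16}, \qquad
\text{possible pairs} \approx \left(10^{12}\right)^{2} = 10^{24}, \qquad
\text{connection density} \approx \frac{10^{16}}{10^{24}} = 10^{-8}
```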

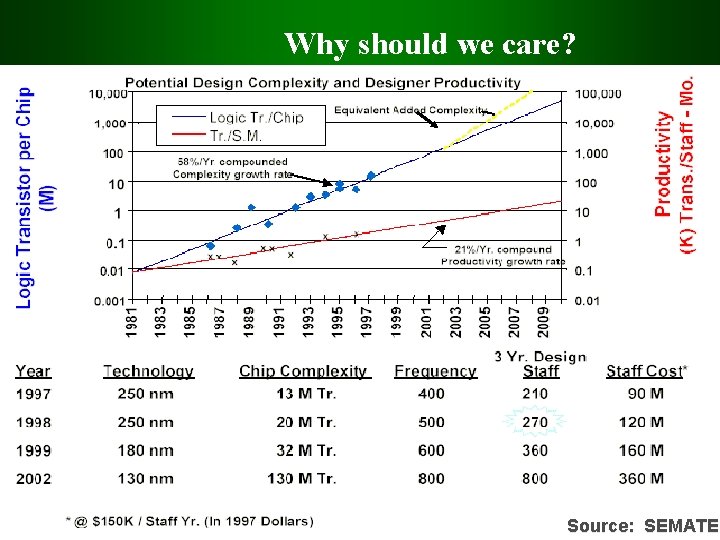

Why should we care? (Source: SEMATECH)

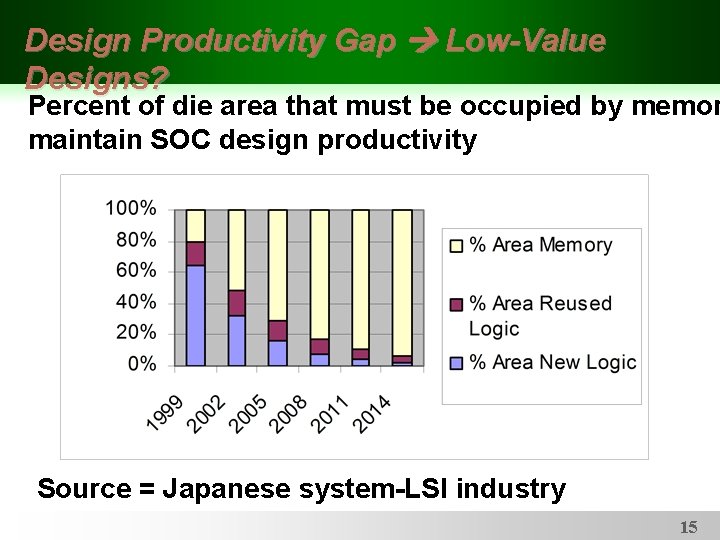

Design Productivity Gap
• Low-value designs?
• Percent of die area that must be occupied by memory to maintain SoC design productivity (source: Japanese system-LSI industry)


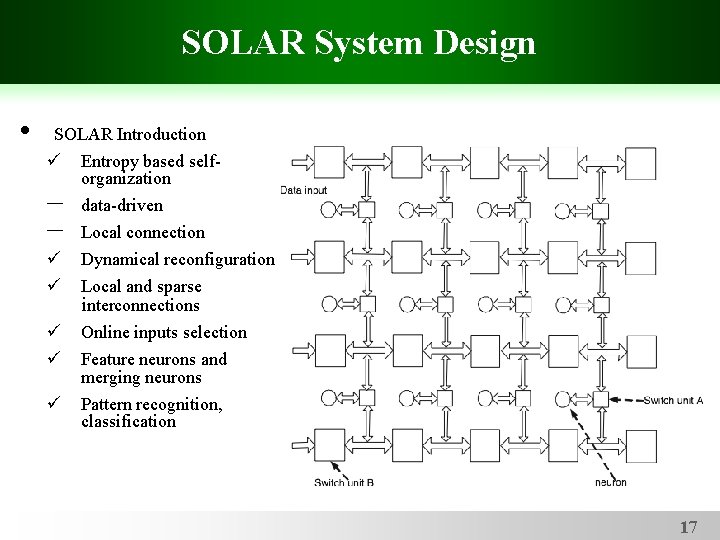

SOLAR System Design
• SOLAR introduction:
– Entropy-based self-organization
– Data-driven
– Local connections
– Dynamic reconfiguration
– Local and sparse interconnections
– Online input selection
– Feature neurons and merging neurons
– Pattern recognition, classification
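To illustrate entropy-based, online input selection, here is a minimal sketch in which a single neuron looks at a handful of candidate inputs and picks the input and threshold that most reduce class entropy. It uses information gain, as in decision-tree learning, as a stand-in criterion; the exact entropy measure SOLAR uses is not given on this slide, and the function names and toy data are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(values, labels, threshold):
    """Entropy reduction obtained by splitting samples at `threshold`."""
    left = [y for x, y in zip(values, labels) if x < threshold]
    right = [y for x, y in zip(values, labels) if x >= threshold]
    n = len(labels)
    remainder = (len(left) / n) * entropy(left) + (len(right) / n) * entropy(right)
    return entropy(labels) - remainder


def select_input(candidates, labels):
    """Return (input name, threshold, gain) with the largest information gain.

    `candidates` maps each locally available input to its per-sample values,
    modelling a neuron that can only choose among nearby connections.
    """
    best = None
    for name, values in candidates.items():
        for t in sorted(set(values)):
            gain = information_gain(values, labels, t)
            if best is None or gain > best[2]:
                best = (name, t, gain)
    return best


labels = ["a", "a", "b", "b"]
candidates = {"x0": [0.1, 0.9, 0.2, 0.8], "x1": [0.1, 0.2, 0.8, 0.9]}
print(select_input(candidates, labels))
```

Here input x1 separates the two classes perfectly at threshold 0.8, so it wins with a gain of 1 bit, while x0 reaches only about 0.31 bits.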

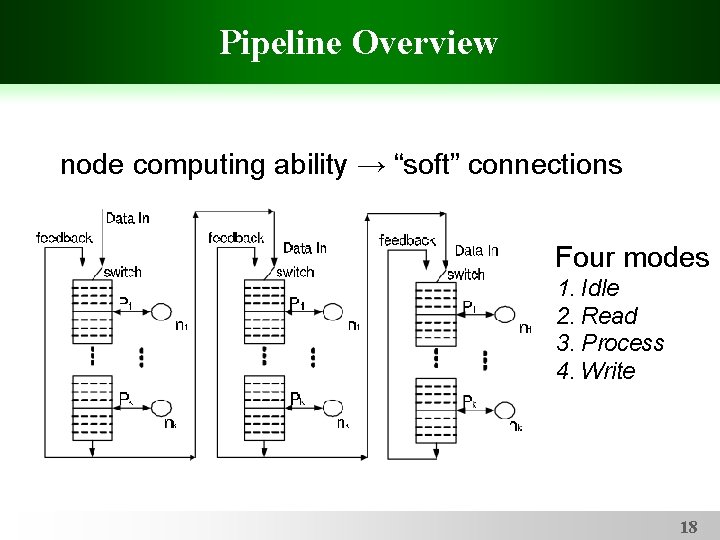

Pipeline Overview
• Node computing ability → "soft" connections
• Four modes:
1. Idle
2. Read
3. Process
4. Write
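The four node modes can be modelled as a small state machine. This sketch assumes that a node waits in Idle until its input data is ready and then steps through Read, Process, and Write once per clock before returning to Idle. The slide lists only the modes, so the transition rule and the names `Mode`, `next_mode`, and `trace` are hypothetical.

```python
from enum import Enum


class Mode(Enum):
    IDLE = 1
    READ = 2
    PROCESS = 3
    WRITE = 4


# Assumed fixed sequence once a node has been triggered.
_AFTER = {Mode.READ: Mode.PROCESS, Mode.PROCESS: Mode.WRITE, Mode.WRITE: Mode.IDLE}


def next_mode(mode, data_ready):
    """Advance one clock. A node leaves IDLE only when its input data is ready."""
    if mode is Mode.IDLE:
        return Mode.READ if data_ready else Mode.IDLE
    return _AFTER[mode]


def trace(data_ready_per_clock):
    """Return the node's mode at each clock, starting from IDLE."""
    mode, history = Mode.IDLE, []
    for ready in data_ready_per_clock:
        history.append(mode)
        mode = next_mode(mode, ready)
    return history


print([m.name for m in trace([False, True, False, False, False])])
```

Running the trace prints `['IDLE', 'IDLE', 'READ', 'PROCESS', 'WRITE']`: the node idles until data arrives at the second clock, then spends one clock in each remaining mode.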

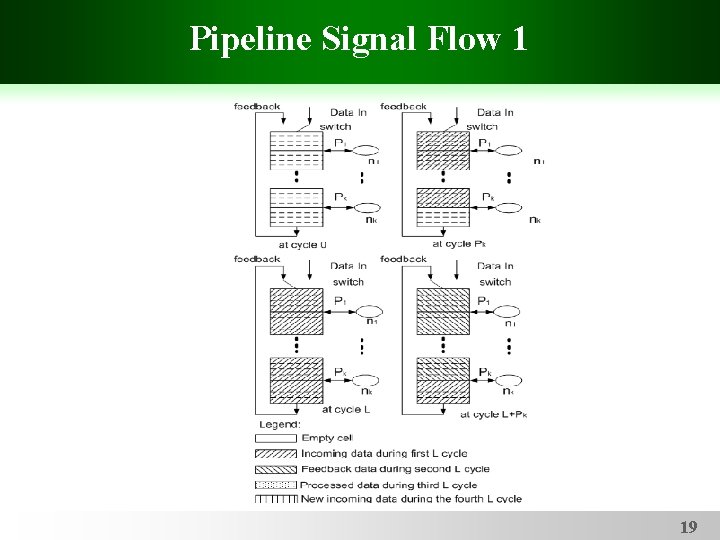

Pipeline Signal Flow 1

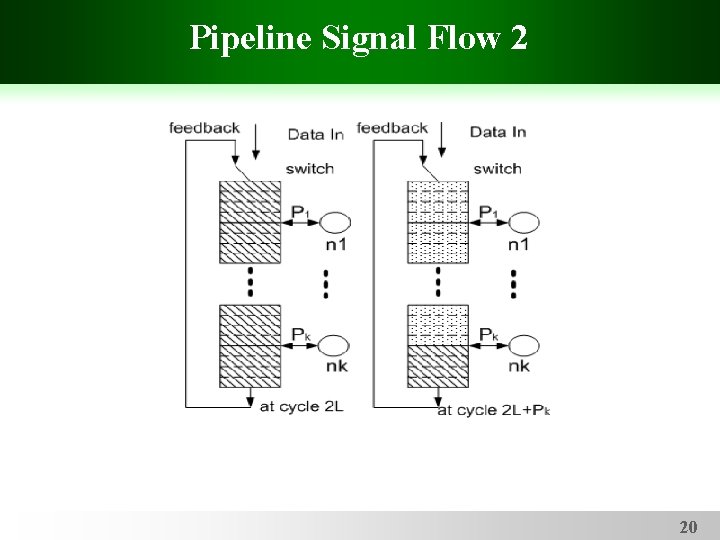

Pipeline Signal Flow 2

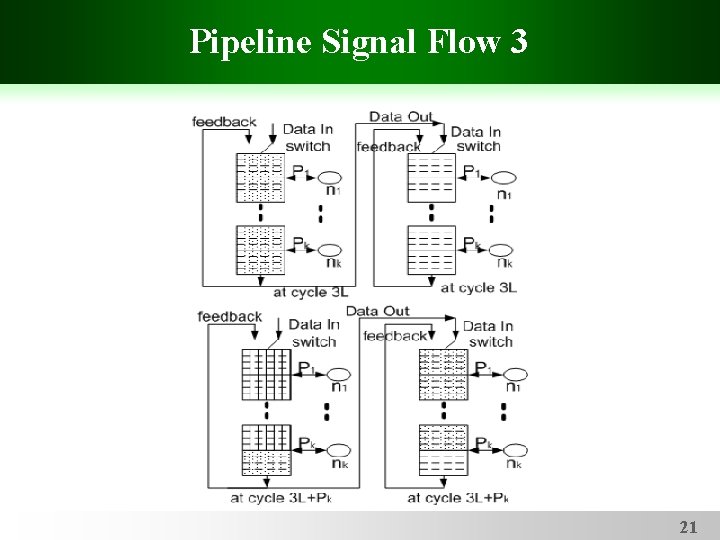

Pipeline Signal Flow 3

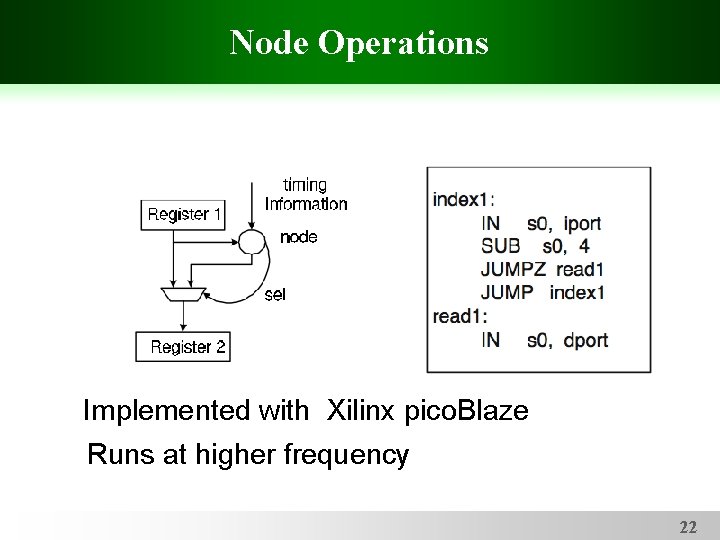

Node Operations
• Implemented with a Xilinx PicoBlaze
• Runs at a higher frequency


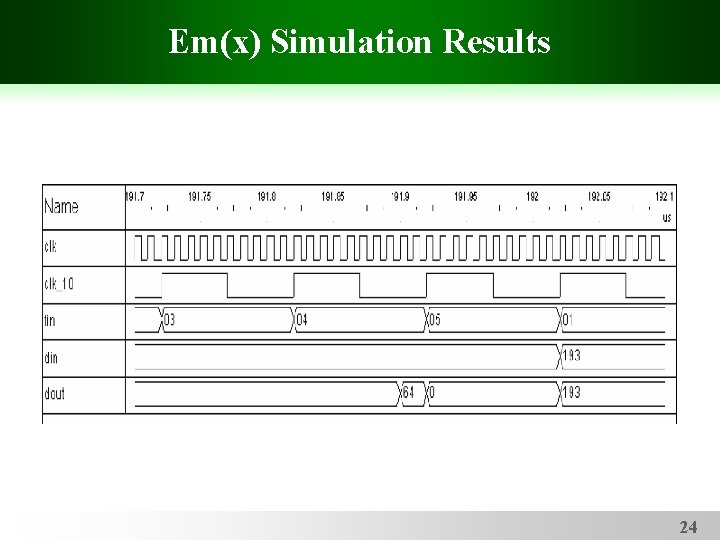

Simulation Results (Figure: Em(x))

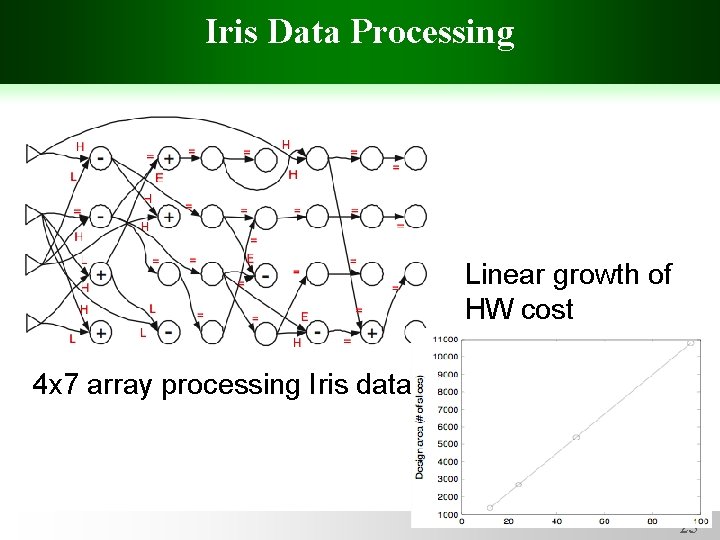

Iris Data Processing
• Linear growth of hardware cost
• 4 × 7 array processing the Iris data

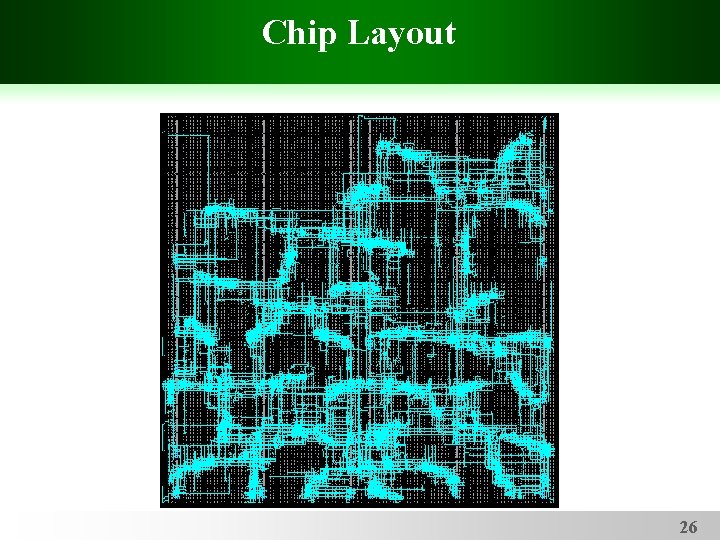

Chip Layout

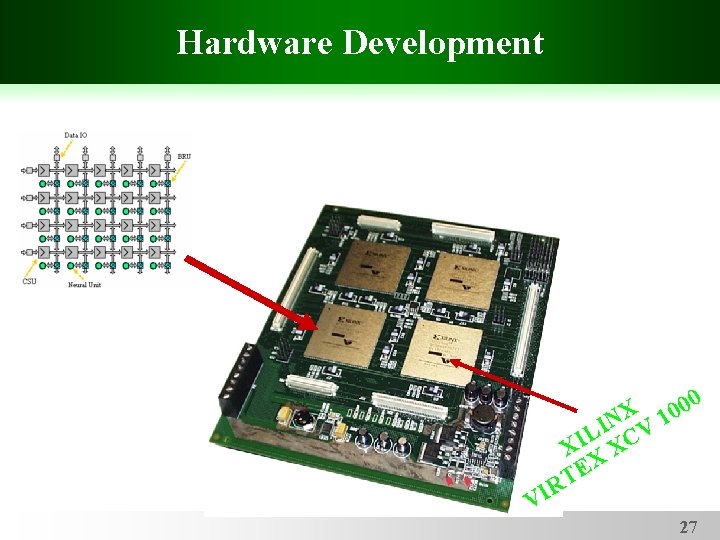

Hardware Development

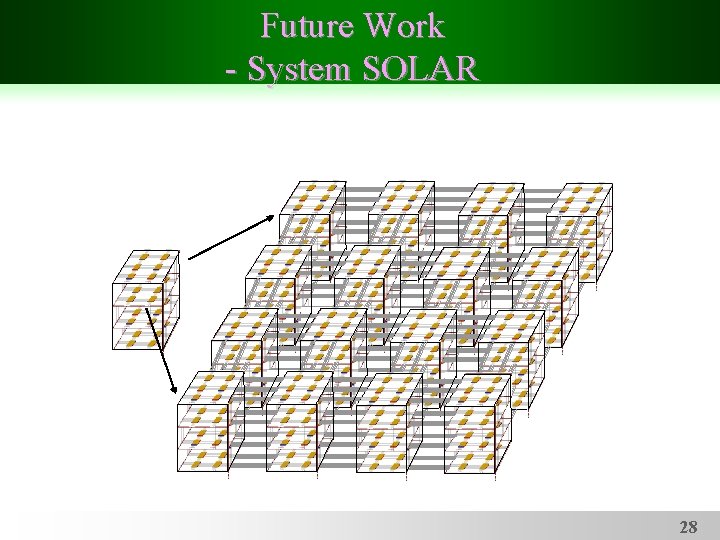

Future Work: System SOLAR

Conclusions & Future Work
• Sparse coding built into sparsely connected networks
• WTA (winner-take-all) scheme: local competition accomplishes global competition using primary and secondary layers, enabling efficient hardware implementation
• OTA scheme: local competition reduces neuronal activity
• OTA redundant coding: more reliable and robust
• WTA & OTA: learning memory for developing machine intelligence
Future work:
• Introducing temporal sequence learning
• Building a motor pathway on such learning memory
• Combining with a goal-creation pathway to build an intelligent machine
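The WTA idea of reaching a global decision through purely local competition can be sketched as a two-level tournament. This sketch assumes the primary layer is split into fixed-size groups of neighbouring neurons, each of which keeps only its strongest member, and that a secondary layer then compares the group winners. The grouping scheme and function names are hypothetical; the slide does not specify how the primary and secondary layers are wired.

```python
def local_winners(activations, group_size):
    """Primary layer: each group of neighbouring neurons keeps its strongest member.

    Returns an (index, activation) pair for every group's winner.
    """
    winners = []
    for start in range(0, len(activations), group_size):
        group = activations[start:start + group_size]
        offset = max(range(len(group)), key=lambda i: group[i])
        winners.append((start + offset, group[offset]))
    return winners


def hierarchical_wta(activations, group_size):
    """Secondary layer: compete among local winners to find the global winner."""
    winners = local_winners(activations, group_size)
    return max(winners, key=lambda w: w[1])[0]


acts = [0.2, 0.9, 0.1, 0.4, 0.95, 0.3, 0.5]
print(hierarchical_wta(acts, 3))
```

With groups of three, the local winners are neurons 1, 4, and 6, and the secondary layer selects neuron 4, which is also the global maximum. Each comparison involves only a small group, so the wiring stays local. Ties resolve to the lowest index at both levels, which keeps the result identical to a global arg-max.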
