Human Organizational Performance H O P Bob Edwards

Human & Organizational Performance – H.O.P. Bob Edwards, M.Eng.

The Complex Adaptive Environment: The more complex our organization or process is, the more error-prone it is... and the less tolerant of error it becomes. (Conklin, 2012)

H.O.P. helps us improve our operational learning... it’s not like a traditional program...

H.O.P. improves our traditional programs by increasing our collaboration & operational learning.

Works hand in hand with our Safety and Quality Management System’s approach:
- VPP (4 Elements)
- ANSI Z10 (PDCA)
- OHSAS 18001 (Risk Based & OE)
- Upcoming ISO 45001 (Similar to Z10)
- Internal System (i.e. Framework)
- ISO 9001 (Quality)

Our Goal with H.O.P. is to become a lot less surprised by human error and failure... and instead, become a lot more interested in learning!

Through improved operational learning, our organizations are becoming more Reliable and Resilient!

History of Human Performance

Nuclear Power Plants

Aviation Safety

Automotive Safety

General Industry

The Principles of Human Performance 1. People make errors 2. Error-likely situations are predictable 3. Individual behaviors are influenced 4. Operational upsets can be avoided 5. Our response to failure matters.

Todd Conklin, Ph.D.

Implementation Process 1. Leadership Commitment 2. Selected & Trained Pilot Sites 3. Implemented Learning Teams 4. Developed Advocates / Coaches 5. Created Centers of Excellence 6. Shared Success Stories

HOP - GE Businesses

HOP – Other Companies

The Principles of Human Performance 1. People make errors 2. Error-likely situations are predictable 3. Individual behaviors are influenced 4. Operational upsets can be avoided 5. Our response to failure matters

Was this Kenny’s FAULT?

What about an injured firefighter?

To err is human... to forgive is Divine. (Alexander Pope, 1688-1744) …and neither are Marine Corps Policy!

Free Billy!

Blame is very common but not helpful at all!

The Principles of Human Performance 1. People make errors 2. Error-likely situations are predictable 3. Individual behaviors are influenced 4. Operational upsets can be avoided 5. Our response to failure matters

“Mistakes arise directly from the way the mind handles information, not through stupidity or carelessness.” (Edward de Bono, Ph.D.)

Error Frequencies Simple math error with self-check: 3 in 100 (P. L. Clemens, 2002)

Error Frequencies Inspector oversight of operator: 1 in 10 (P. L. Clemens, 2002)

Error Frequencies High stress / dangerous activity: 3 in 10 (P. L. Clemens, 2002)

Error Tolerance is Strongly Situational: A batting average of .330 is worthy of the Hall of Fame... would that be acceptable... for a concert pianist? (P. L. Clemens, 2002)

Operational Errors Immediate results

Organizational Errors Delayed results

Robots vs. Humans

Error is not a Choice… Error is not Violation.

Deviation Types • Intentional Deviation (Rule Breaking) • Unintentional Deviation (Error) • Normalized Deviation (Common)

HOP is NOT the absence of rules or discipline. HOP is the notion that if you depend on a person doing something 100% right 100% of the time… you will be disappointed... A LOT. (Baker, 2014)

The Texting Trash Hauler

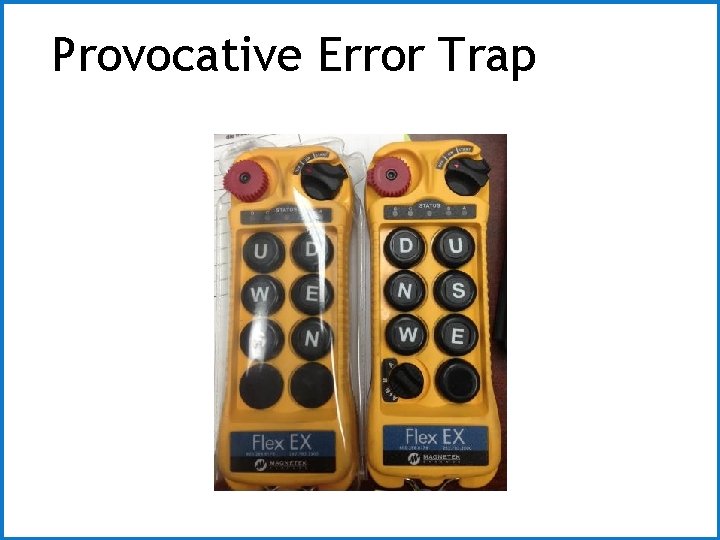

HOP Terminology • Error Traps • Provocative Error Traps • Trigger • Latent Conditions

Error Trap

Provocative Error Trap

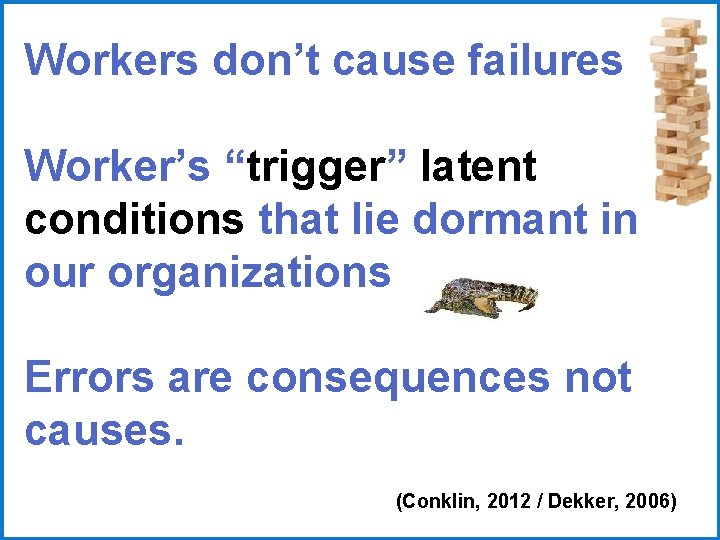

Workers don’t cause failures. Workers “trigger” latent conditions that lie dormant in our organizations. Errors are consequences, not causes. (Conklin, 2012 / Dekker, 2006)

Error is common... Being surprised by it doesn’t make things better.

The Principles of Human Performance 1. People make errors 2. Error-likely situations are predictable 3. Individual behaviors are influenced 4. Operational upsets can be avoided 5. Our response to failure matters

People Are As Safe As They Need To Be, Without Being Overly Safe… In Order To Get Their Job Done. (Conklin)

Drivers Are As Safe As They Need To Be, Without Being Overly Safe… In Order To Get To Their Destination. (Edwards)

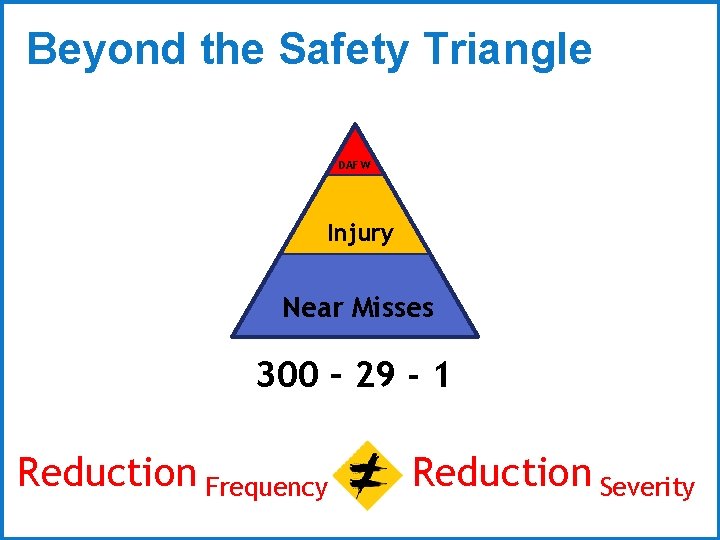

Beyond the Safety Triangle [Figure: safety triangle with levels DAFW, Injury and Near Misses in the ratio 300 – 29 – 1; labels: Frequency Reduction, Severity Reduction]

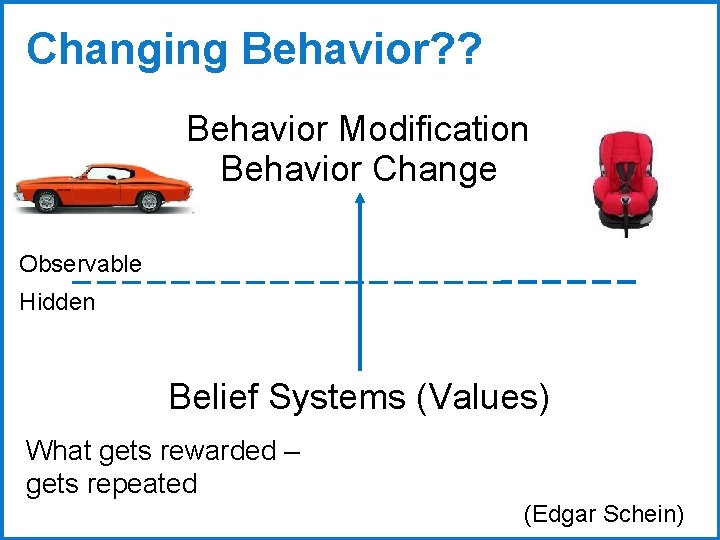

Changing Behavior?? [Figure labels: Behavior Modification, Behavior Change, Observable, Hidden, Belief Systems (Values)] What gets rewarded gets repeated. (Edgar Schein)

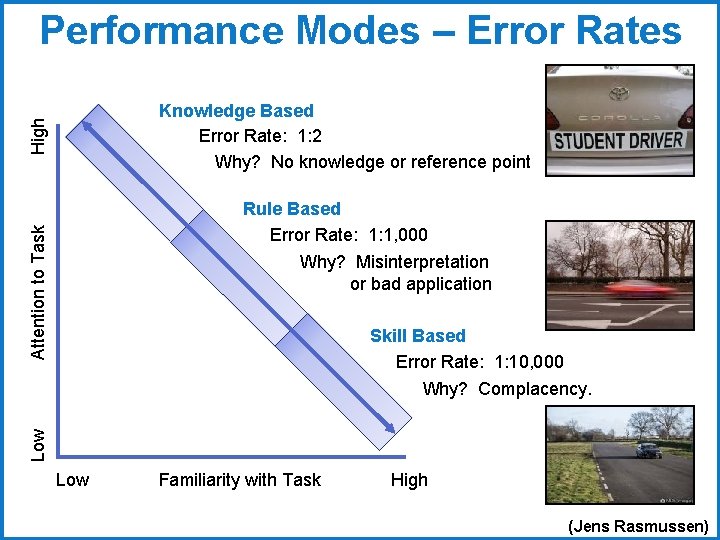

Performance Modes – Error Rates (Jens Rasmussen)
- Knowledge Based: Error Rate 1:2. Why? No knowledge or reference point.
- Rule Based: Error Rate 1:1,000. Why? Misinterpretation or bad application.
- Skill Based: Error Rate 1:10,000. Why? Complacency.
[Figure axes: Attention to Task (High to Low); Familiarity with Task (Low to High)]

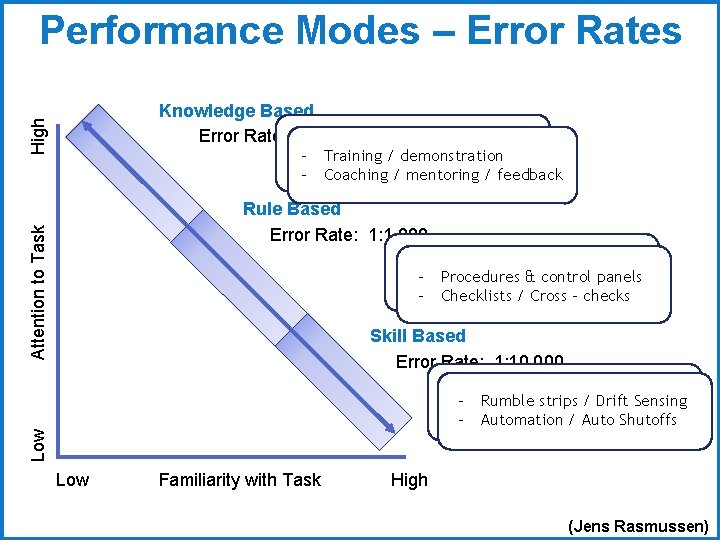

Performance Modes – Error Rates (Jens Rasmussen)
- Knowledge Based (Error Rate 1:2): Inadequate knowledge; no knowledge; no reference point. Defenses: Training / demonstration; Coaching / mentoring / feedback.
- Rule Based (Error Rate 1:1,000): Misapplication of good rules; application of bad rules; failure to apply a good rule. Defenses: Procedures & control panels; Checklists / Cross-checks.
- Skill Based (Error Rate 1:10,000): Omissions; slips / trips / lapses; complacency. Defenses: Rumble strips / Drift Sensing; Automation / Auto Shutoffs (how?).
[Figure axes: Attention to Task (High to Low); Familiarity with Task (Low to High)]
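A rough way to see what these error rates mean over a working day is sketched below in Python. It only restates the three rates quoted above; the function names and the treatment of every task as an independent trial are illustrative assumptions, not part of Rasmussen's model.

```python
# Error rates per task by performance mode, as quoted on the slide.
ERROR_RATES = {
    "knowledge-based": 1 / 2,
    "rule-based": 1 / 1_000,
    "skill-based": 1 / 10_000,
}


def expected_errors(mode: str, tasks: int) -> float:
    """Average number of errors expected over `tasks` tasks in the given mode."""
    return ERROR_RATES[mode] * tasks


def prob_at_least_one_error(mode: str, tasks: int) -> float:
    """Chance of one or more errors, ASSUMING each task is an independent trial."""
    return 1 - (1 - ERROR_RATES[mode]) ** tasks


if __name__ == "__main__":
    for mode in ERROR_RATES:
        print(mode, expected_errors(mode, 1_000), prob_at_least_one_error(mode, 1_000))
```

Even in skill-based mode, where the rate looks tiny, enough repetitions make at least one error more likely than not, which is why the defense has to fit the mode rather than rely on the person.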

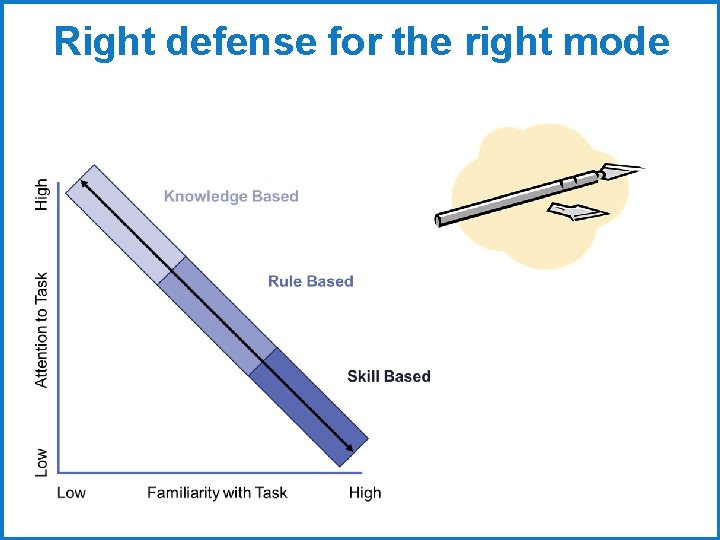

Right defense for the right mode

The Principles of Human Performance 1. People make errors 2. Error-likely situations are predictable 3. Individual behaviors are influenced 4. Operational upsets can be avoided 5. Our response to failure matters

Great performance is not the absence of errors... it’s the presence of defenses. (Conklin, 2012)

Procedures are important… but they are not sufficient to create safety. Our organizations have become complex webs of procedures that are incomplete and difficult. (Conklin)

Defenses • Types of Defenses • Strength of Defenses • Layers of Defense • Sustainability of Defenses

Hierarchy of Controls??
• Elimination
• Substitution
• Engineering Controls
• Administrative Controls
• Behavior (Cultural)
• PPE
Not so focused on it being a hierarchy. More focused on ownership and effectiveness.

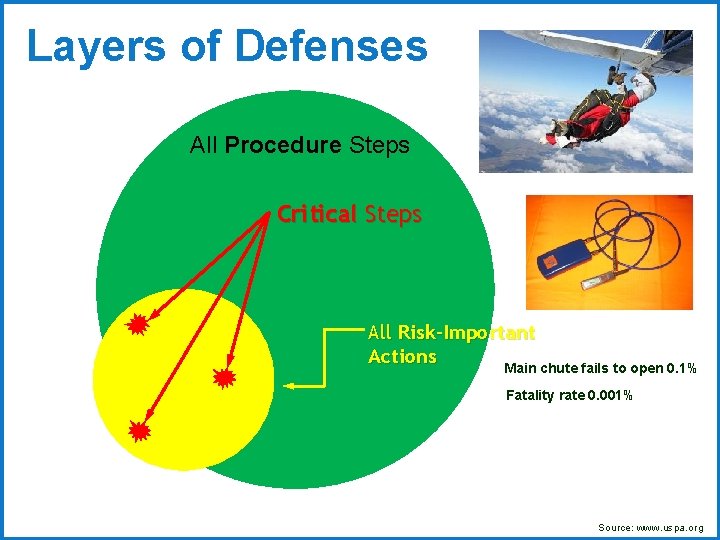

Layers of Defenses [Figure labels: All Procedure Steps, Critical Steps, All Risk-Important Actions] Main chute fails to open: 0.1%. Fatality rate: 0.001%. Source: www.uspa.org
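One way to read the two skydiving numbers: when layers of defense fail independently, the chance that all of them fail together is the product of their individual failure rates. The Python sketch below is only an illustration of that arithmetic. The 1% failure rate for a second layer is a hypothetical value chosen so the product lands on the 0.001% quoted above; it is not a USPA statistic, and real layers are rarely fully independent.

```python
from math import prod


def all_layers_fail(failure_probs):
    """Probability that every layer fails, ASSUMING the layers fail independently."""
    return prod(failure_probs)


MAIN_CHUTE_FAILURE = 0.001          # 0.1%, from the slide
HYPOTHETICAL_BACKUP_FAILURE = 0.01  # assumed for illustration only

one_layer = all_layers_fail([MAIN_CHUTE_FAILURE])
two_layers = all_layers_fail([MAIN_CHUTE_FAILURE, HYPOTHETICAL_BACKUP_FAILURE])

print(f"one layer: {one_layer:.3%}, two layers: {two_layers:.3%}")
```

The point of the sketch is the shape of the result, not the exact figures: each additional independent layer multiplies the residual risk down, which leaves space for a mistake at any single layer.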

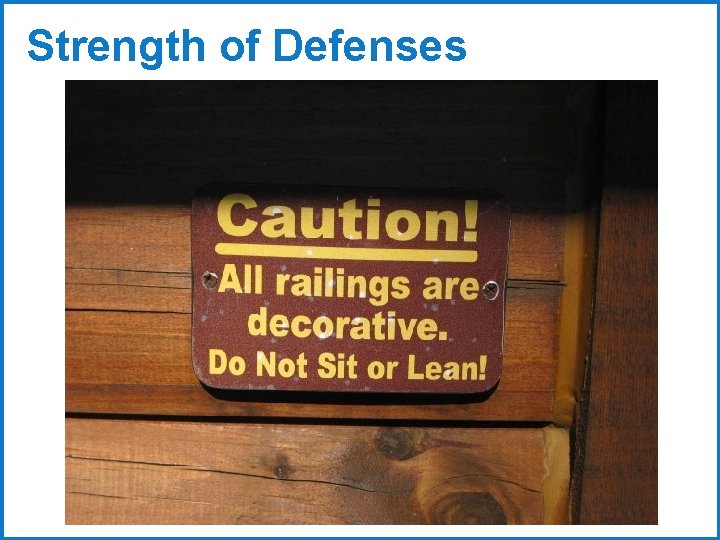

Strength of Defenses

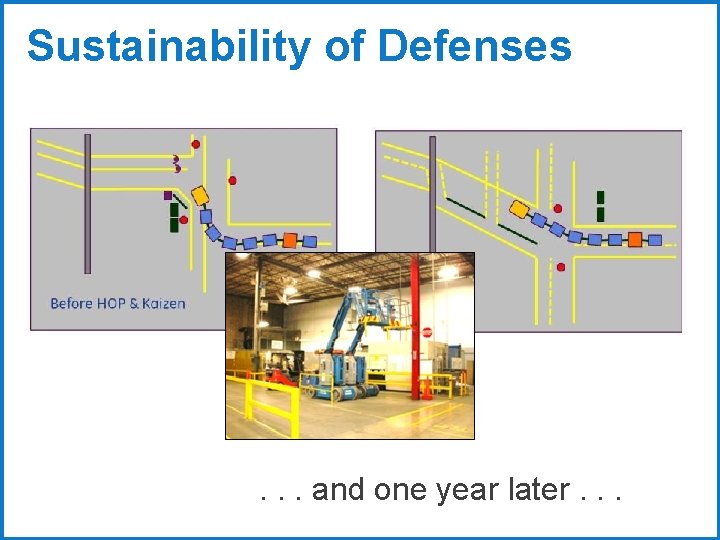

Sustainability of Defenses... and one year later...

We want to make it easier to do right than to do wrong!

The Principles of Human Performance 1. People make errors 2. Error-likely situations are predictable 3. Individual behaviors are influenced 4. Operational upsets can be avoided 5. Our response to failure matters

Workers Are Masters of Complex Adaptive Behavior… (Conklin)

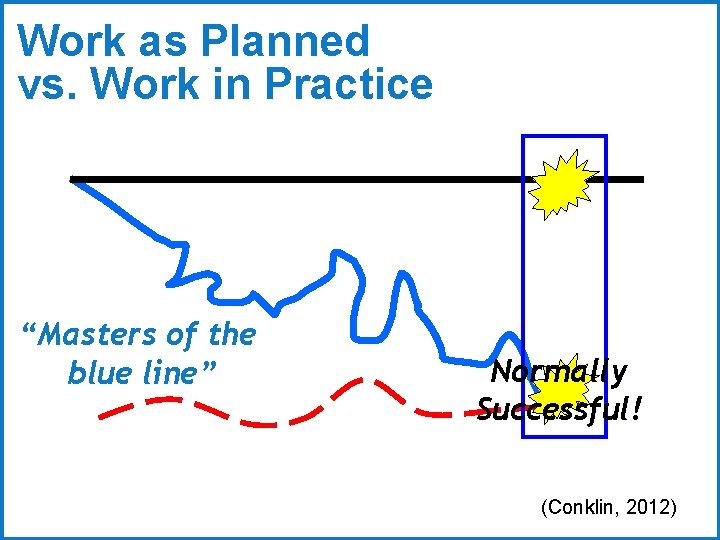

Work as Planned vs. Work in Practice “Masters of the blue line” Normally Successful! (Conklin, 2012)

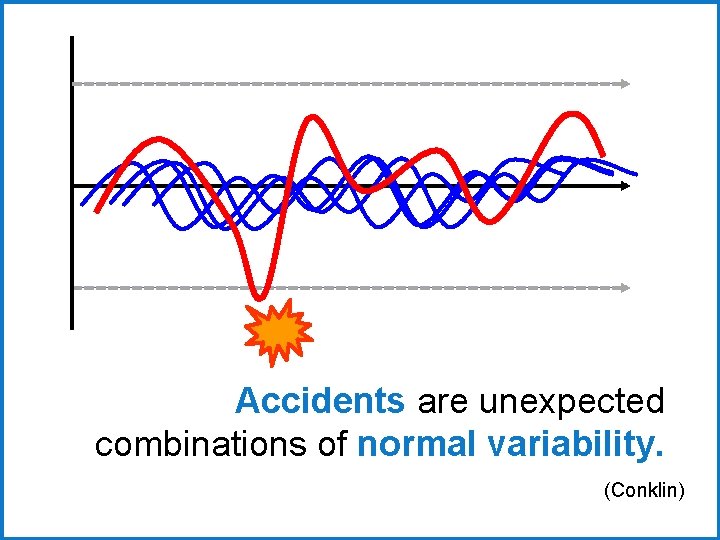

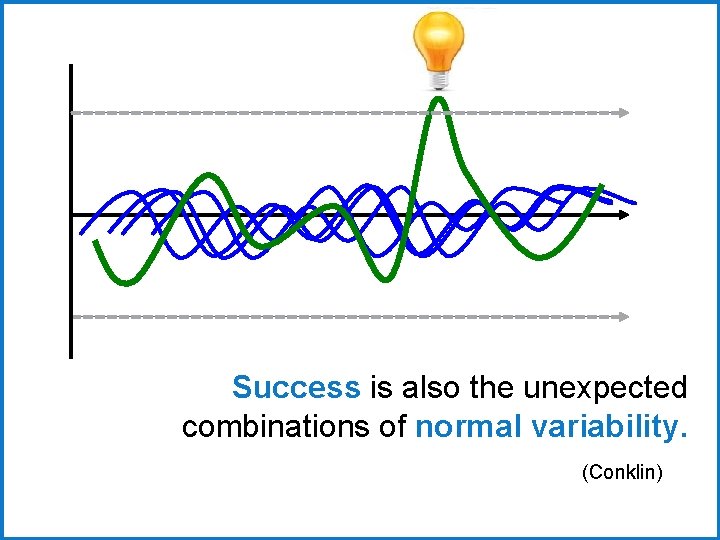

Accidents are unexpected combinations of normal variability. (Conklin)

Success is also the unexpected combinations of normal variability. (Conklin)

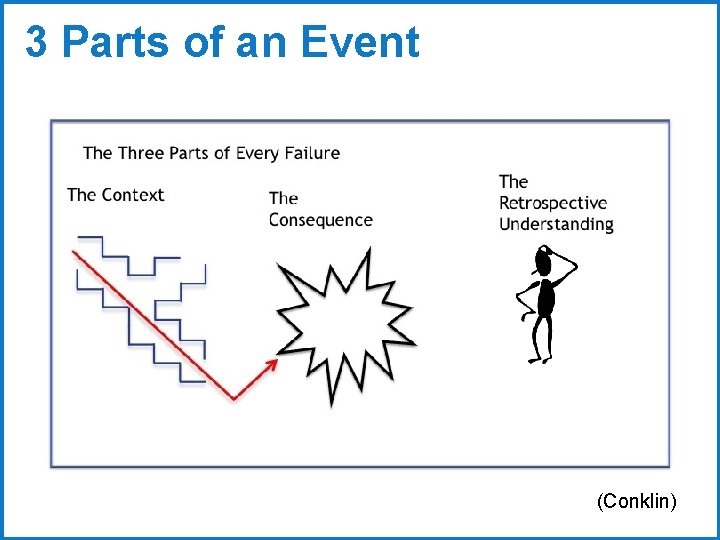

3 Parts of an Event (Conklin)

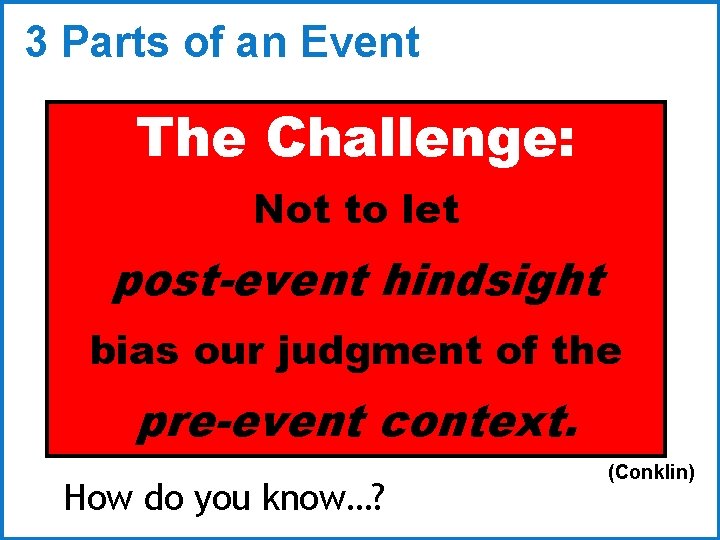

3 Parts of an Event The Challenge: Not to let post-event hindsight bias our judgment of the pre-event context. How do you know…? (Conklin)

“Underneath every seemingly obvious, simple story of error, there is a second deeper story. A more complicated story... a story about the system in which people work.” (Dekker, 2006)

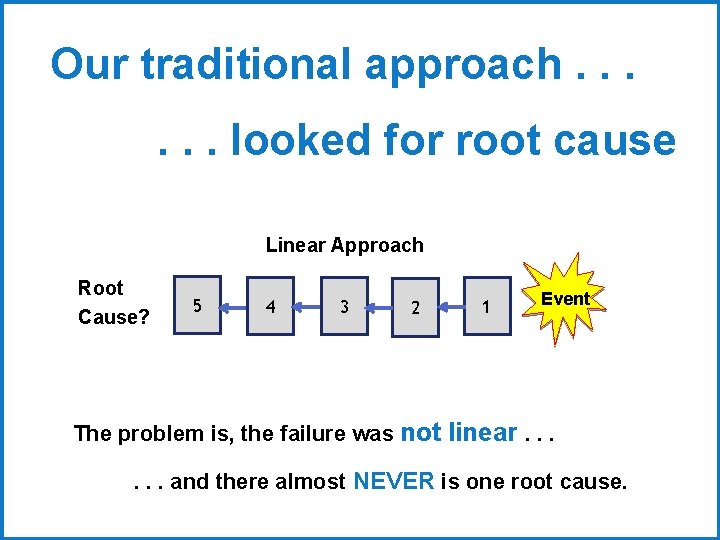

Our traditional approach... looked for root cause. Linear Approach: Root Cause? – 5 – 4 – 3 – 2 – 1 – Event. The problem is, the failure was not linear... and there almost NEVER is one root cause.

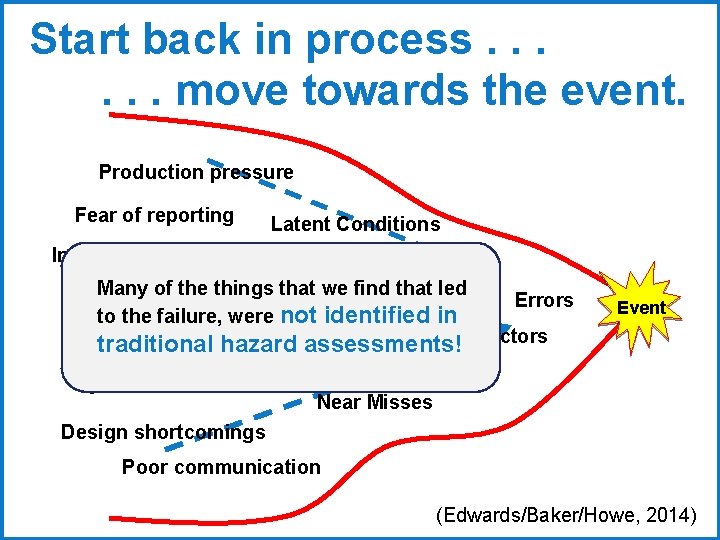

Start back in process... move towards the event. Many of the things that we find that led to the failure were not identified in traditional hazard assessments!
[Figure labels: Production pressure, Fear of reporting, Latent Conditions, Inadequate defenses, System Weaknesses, Resource constraints, Errors, Hazards & Risks, Flawed processes, Local Factors, Normal Variability, System deficiencies, Near Misses, Design shortcomings, Poor communication, Event]
(Edwards/Baker/Howe, 2014)

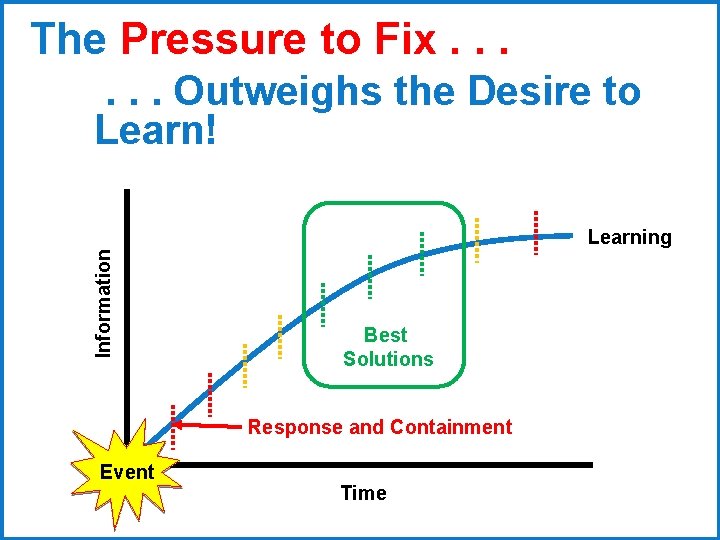

The Pressure to Fix... Outweighs the Desire to Learn! [Figure: Information plotted against Time after an Event; labels: Response and Containment, Learning, Best Solutions]

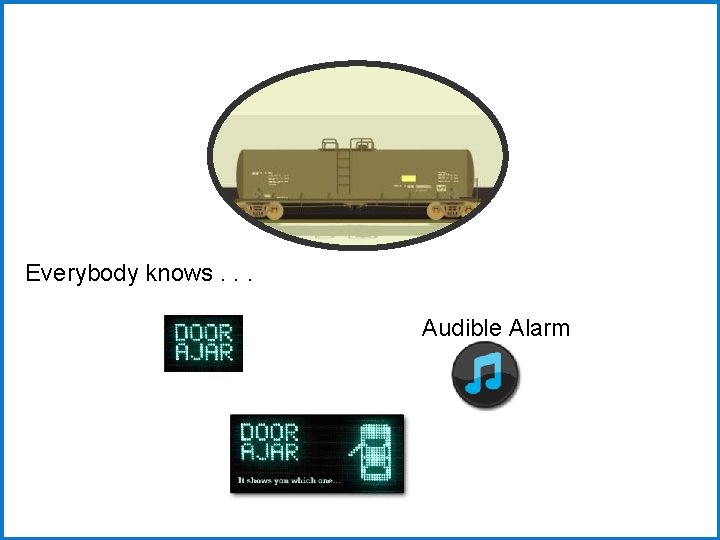

Everybody knows... Audible Alarm

“...blame is the enemy of understanding.” (Andrew Hopkins)

Beyond Taylorism
• Break the concept that the planner is smarter than the worker
• Bring the worker and planner together to create the plan
• Anticipate that human error will occur
• Expect operational drift
• Understand why the drift occurs
• Learn from “masters of the blue line”
• Learn from failure
• Learn from success

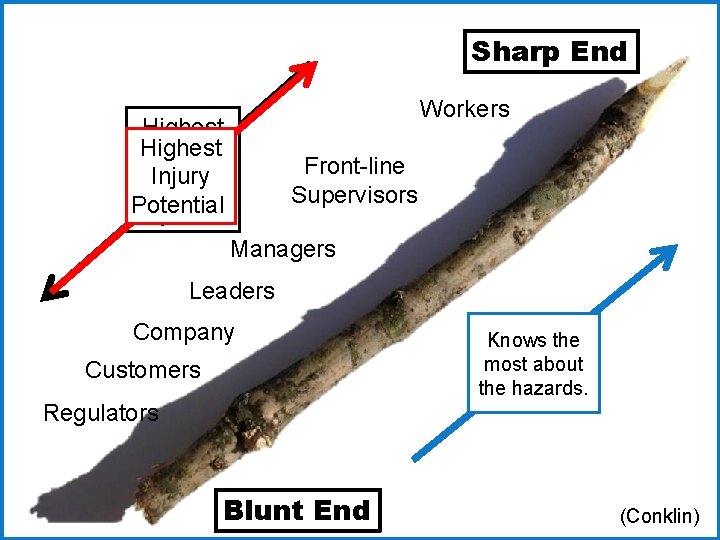

Sharp End / Blunt End [Figure levels: Workers, Front-line Supervisors, Managers, Leaders, Company, Customers, Regulators; labels: Highest Influence Over System, Injury Potential, Knows the most about the hazards] (Conklin)

Operational Learning

… you want to understand why it made sense for people to do what they did … in their context (not yours!) … (Dekker, 2006)

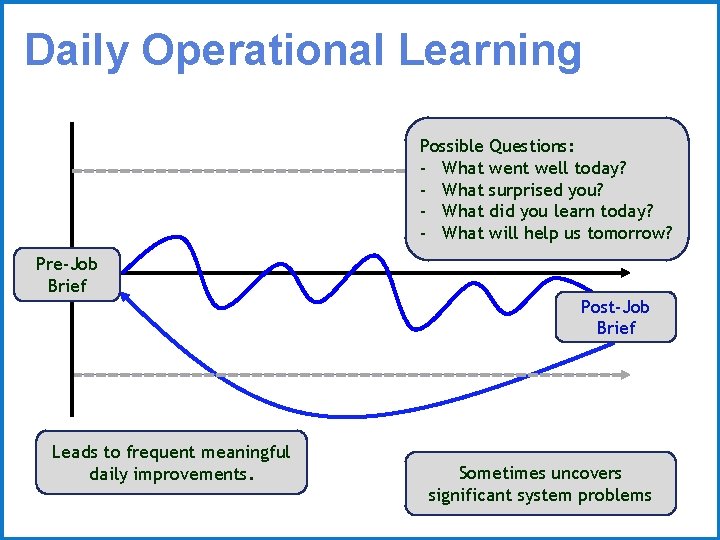

Daily Operational Learning (Pre-Job Brief / Post-Job Brief)
Possible Questions:
- What went well today?
- What surprised you?
- What did you learn today?
- What will help us tomorrow?
Leads to frequent meaningful daily improvements. Sometimes uncovers significant system problems.

Event Response • Respond seriously and deliberately to events, near misses and good catches • Remember, events are “Information Rich!” • Promote a culture of learning & collaboration • Learn before taking action • Reduce operational complexity • Fix processes and systems – NOT people.

How do we do this? • Pull the right people together • Give them time and space to learn and discover • Create an open dialog (Trust) • Ask meaningful questions • Listen and Learn (Be interested) • Empower the team to help solve the problems.

Shift the question from “why”... to “how”. (Conklin)

Operational Learning
• Not an investigation
• Not worried about collusion
• Not focused on the “one true story”
• Not focused on the one “root cause?”
• It is the story as each person saw the event
• It is the story of complexity
• It is the story of normal variability and coupling
• It is the story of how work gets done

The Learning Team Process ✓ Determine need for Learning Team ✓ 1st Session – Learning Mode only ✓ Provide “Soak Time” ✓ 2nd Session – Start in Learning Mode ✓ Define defenses / build new ones ✓ Tracking actions & criteria for closure ✓ Tell the story.

Learning Team Session One... is to learn and discover... and not to fix!

Sample questions for Session 1 - How far back in the process should we start? - Tell me about your work. How hard is it to get things done? - How doable are your procedures? - Do you have the right tools? - What were the conditions leading up to the event? - What other near misses have you seen in this area? - What worked well? What failed or went wrong? - Where else could a similar event happen? - What else should I know? Who should this be shared with? - How did the employee’s actions (or inaction) make sense in their context? (not yours!) - Who else should we invite to the next session?

Wall of Discovery

Soak Time • At least overnight (if at all possible) • Allows time to process learnings • Allows time to go look • Allows time to study and research

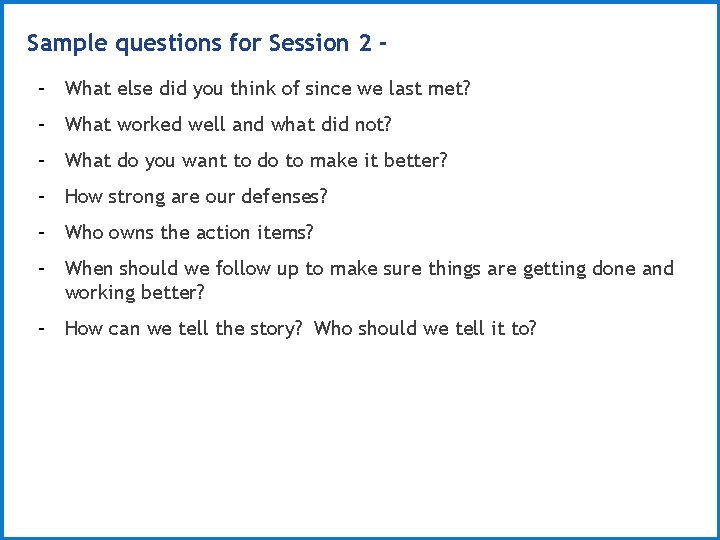

Sample questions for Session 2 - What else did you think of since we last met? - What worked well and what did not? - What do you want to do to make it better? - How strong are our defenses? - Who owns the action items? - When should we follow up to make sure things are getting done and working better? - How can we tell the story? Who should we tell it to?

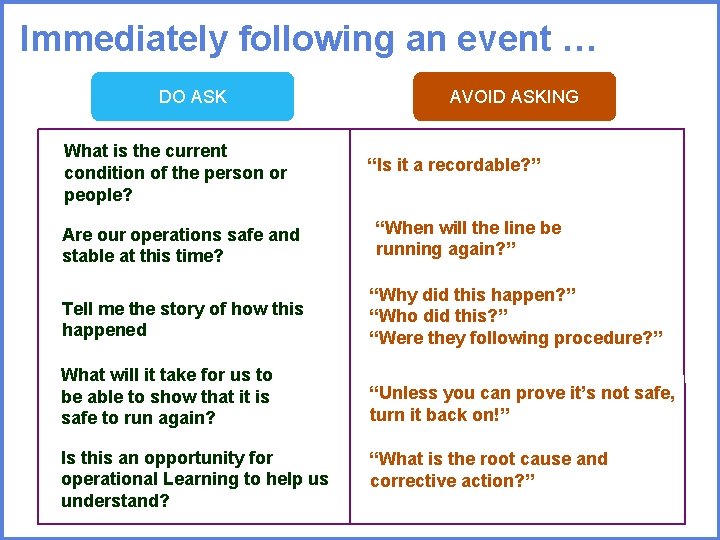

Immediately following an event …

| DO ASK | AVOID ASKING |
| --- | --- |
| What is the current condition of the person or people? | “Is it a recordable?” |
| Are our operations safe and stable at this time? | “When will the line be running again?” |
| Tell me the story of how this happened | “Why did this happen?” “Who did this?” “Were they following procedure?” |
| What will it take for us to be able to show that it is safe to run again? | “Unless you can prove it’s not safe, turn it back on!” |
| Is this an opportunity for operational Learning to help us understand? | “What is the root cause and corrective action?” |

When do we need to learn?
• Post-event (Injury/Quality/Operations)
• Near Miss or Close Call
• Good Catch
• Interesting Successes
• High Risk Operations
• Challenging Design Problems
• Anytime you can’t explain something

Bias and other Obstacles
• Hindsight Bias
• Group Think
• Confirmation Bias
• Counter Factual
• Similarity Bias
• Need for Blame
• Admission of Blame
• Irrationality
• Bounded Rationality
• Fundamental Attribution Error
• Irrational Escalation Bias (Sunk Cost)

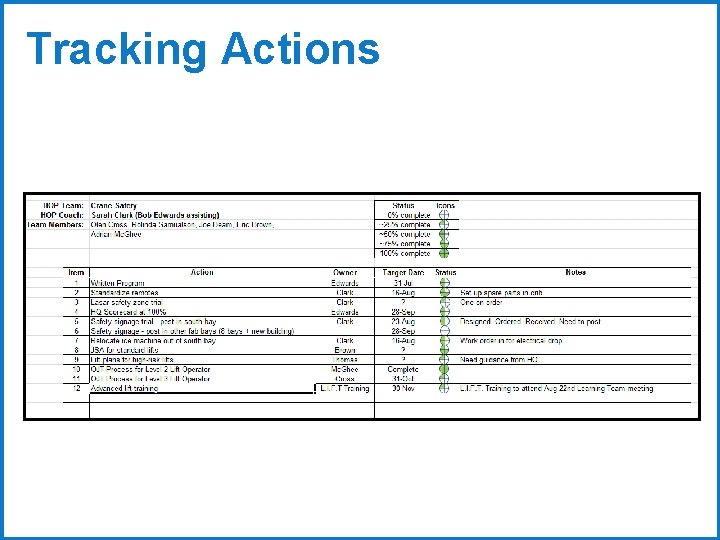

Tracking Actions

Learning Team Closure Criteria: Continue the effort with the Learning Team until the Team has implemented sufficient levels of defenses to satisfy: ✓ The Learning Team ✓ The affected employees ✓ Management... AND... ✓ We have communicated our story.

We can NOT engineer out the possibility of every mistake… we cannot error-proof the world. We CAN build layers of defenses to build in space for mistakes.

Learning from success...

Safety Defined: Safety is not the freedom from risk... it is the freedom from unacceptable risk. (M. Bidez, 2013)

When we believe we know the answer... we stop asking questions... we stop listening... we stop learning!

The power to ask the right questions... comes from acknowledging that you don’t know the right answer.

Workplaces and organizations are easier to manage than the minds of individual workers. You cannot change the human condition, but you can change the conditions under which people work. (Dr. James Reason)

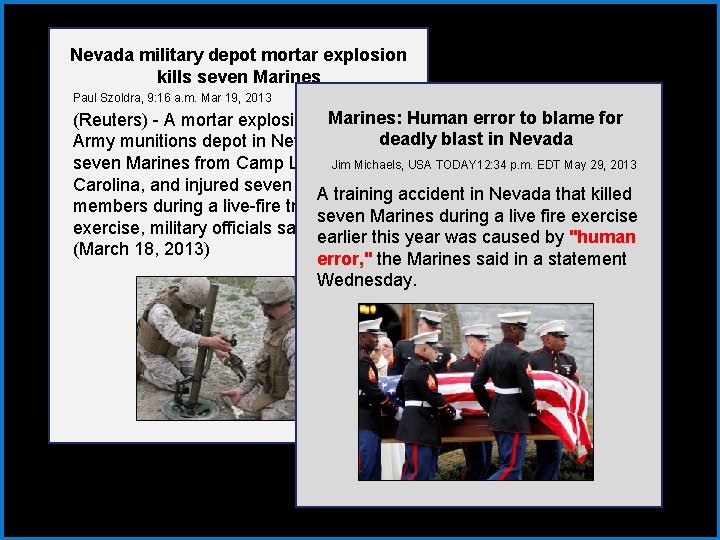

Nevada military depot mortar explosion kills seven Marines. Paul Szoldra, 9:16 a.m. Mar 19, 2013. (Reuters) - A mortar explosion at a U.S. Army munitions depot in Nevada killed seven Marines from Camp Lejeune, North Carolina, and injured seven other service members during a live-fire training exercise, military officials said on Tuesday. (March 18, 2013)

Marines: Human error to blame for deadly blast in Nevada. Jim Michaels, USA TODAY, 12:34 p.m. EDT May 29, 2013. A training accident in Nevada that killed seven Marines during a live fire exercise earlier this year was caused by "human error," the Marines said in a statement Wednesday.

“I have never been especially impressed by the heroics of people convinced they are about to change the world. I am more awed by those who struggle to make one small difference.” (Ellen Goodman)

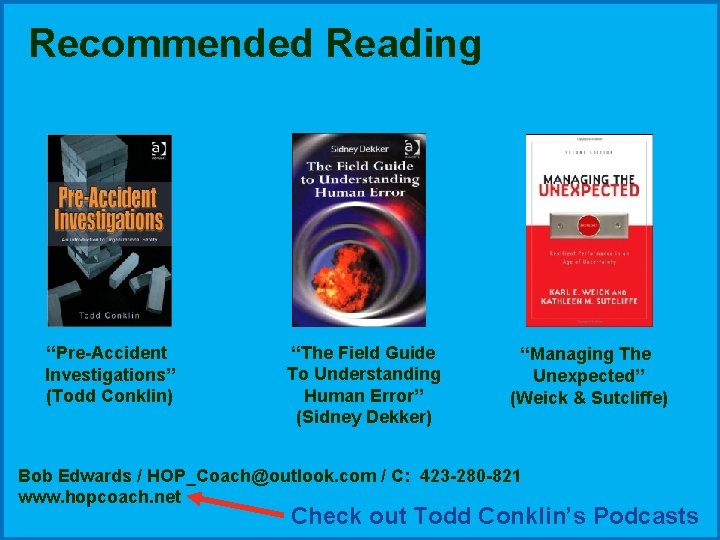

Recommended Reading
“Pre-Accident Investigations” (Todd Conklin)
“The Field Guide To Understanding Human Error” (Sidney Dekker)
“Managing The Unexpected” (Weick & Sutcliffe)
Bob Edwards / HOP_Coach@outlook.com / C: 423-280-821 / www.hopcoach.net
Check out Todd Conklin’s Podcasts
