Human-Computer Interaction: Evaluation Techniques

- Slides: 86


What is evaluation?
- The role of evaluation:
  - We still need to assess our designs and test our systems to ensure that they actually behave as we expect and meet user requirements.

What is evaluation? (Cont.)
- Evaluation should not be thought of as a single phase in the design process.
- Evaluation should occur throughout the design life cycle, with its results feeding back into modifications to the design.
- It is not usually possible to perform extensive experimental testing continuously throughout the design, but analytical and informal techniques can and should be used.

Broad headings on evaluation techniques
- We will consider evaluation techniques under two broad headings:
  - Evaluation through expert analysis.
  - Evaluation through user participation.

Goals of Evaluation
- Evaluation has three main goals:
  - To assess the extent and accessibility of the system's functionality.
  - To assess the user's experience of the interaction.
  - To identify specific problems with the system.

Goals of Evaluation (Cont.)
1. To assess the extent and accessibility of the system's functionality:
   - The system's functionality is important in that it must accord with the users' requirements.
   - The design of the system should therefore enable users to perform their intended tasks more easily.
   - Using the system must match the user's expectations of the task.

Goals of Evaluation (Cont.)
2. To assess the user's experience of the interaction:
   - This includes considering aspects such as:
     - How easy the system is to learn.
     - Its usability.
     - The user's satisfaction with it.
     - The user's enjoyment and emotional response.

Goals of Evaluation (Cont.)
3. To identify specific problems with the system:
   - Aspects of the design that produce unexpected results and so cause confusion amongst users.
   - Problems related to both the functionality and the usability of the design.

Objectives of User Interface Evaluation
- Key objective of both UI design and evaluation: "Minimize malfunctions".
- Key reason for focusing on evaluation: without it, the designer would be working "blindfolded".
  - Designers would not really know whether they are solving customers' problems in the most productive way.

Evaluation Techniques
- Evaluation:
  - Tests the usability and functionality of the system.
  - Evaluates both design and implementation.
  - Should be considered at all stages in the design life cycle.
- But for evaluation to give feedback to designers, we must understand why a malfunction occurs.
- Malfunction analysis:
  - Determine why a malfunction occurs.
  - Determine how to eliminate malfunctions.

Overview of Interface Evaluation Methods
- Three types of methods:
  - Passive evaluation.
  - Active evaluation.
  - Predictive evaluation (usability inspections).
- All types of methods are useful for optimal results and are used in parallel.
- All attempt to prevent malfunctions.

1. Passive evaluation
- Performed while prototyping, in a test.
- Does not actively seek malfunctions.
  - Only finds them when they happen to occur.
  - Infrequent malfunctions may not be found.
- Generally requires realistic use of a system.
- Users become frustrated with malfunctions.

1. Passive evaluation (Cont.)
Gathering information:
a) Problem report monitoring:
   - Users should have an easy way to register their frustrations and suggestions.
   - Best if integrated with the software.

1. Passive evaluation (Cont.)
b) Automatic software logs:
   - Can gather much data about usage:
     - Command frequency.
     - Error frequency.
     - Undone operations (a sign of malfunctions).
   - Logs can record:
     - Just keystrokes and mouse clicks.
     - Full details of the interaction.
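As a rough illustration of what an automatic log can yield, the sketch below counts command frequency, error frequency and undone operations. The log format ("<user> <event> <command>" with events `cmd`, `error` and `undo`) and the sample data are assumptions made up for this example, not part of the slides.

```python
from collections import Counter

# Hypothetical log format (an assumption): "<user> <event> <command>",
# where <event> is "cmd", "error" or "undo". Sample data is invented.
SAMPLE_LOG = """\
u1 cmd open
u1 cmd save
u1 error save
u1 undo save
u2 cmd open
u2 cmd print
u2 undo print
u2 cmd open
"""


def summarise(log_text: str) -> dict[str, Counter]:
    """Count command frequency, error frequency and undone operations."""
    counts = {"cmd": Counter(), "error": Counter(), "undo": Counter()}
    for line in log_text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        _user, event, command = line.split()
        counts[event][command] += 1
    return counts


summary = summarise(SAMPLE_LOG)
print("Command frequency:", summary["cmd"].most_common())
print("Errors:", dict(summary["error"]))
# Frequently undone commands are candidate malfunction hot spots.
print("Undone operations:", dict(summary["undo"]))
```

Counting undos per command, rather than just in total, points the designer at *which* part of the interface users back out of.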

1. Passive evaluation (Cont.)
c) Questionnaires:
   - Useful to obtain statistical data from large numbers of users.
     - Proper statistical methods are needed to analyze the results.
   - Gather subjective data about the importance of malfunctions.
     - Less frequent malfunctions may be more important.
     - Users can prioritize needed improvements.
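A minimal sketch of the statistical step, assuming users rate how important each problem is on a 1 (unimportant) to 5 (critical) scale; the problem names and ratings are invented for illustration. Ranking by mean rating lets users' priorities, not just malfunction frequency, drive the list of improvements.

```python
from statistics import mean, stdev

# Invented example data (an assumption): importance ratings on a 1-5 scale.
responses = {
    "slow search": [5, 4, 5, 4, 5],
    "unclear icons": [3, 2, 4, 3, 3],
    "no undo in editor": [5, 5, 5, 5, 4],
}


def prioritise(ratings: dict[str, list[int]]) -> list[tuple[str, float, float]]:
    """Return (problem, mean, standard deviation), most important first."""
    rows = [(name, mean(vals), stdev(vals)) for name, vals in ratings.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


for name, avg, sd in prioritise(responses):
    print(f"{name:20s} mean={avg:.2f} sd={sd:.2f}")
```

The standard deviation is reported alongside the mean so that a problem some users rate as critical and others ignore is not hidden behind an average score.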

Questionnaires
- A set of fixed questions given to users.
- Limit on the number of questions.
  - Very hard to phrase questions well.
  - Questions can be closed- or open-ended.
- Advantages:
  - Quick, and reaches a large user group.

Open-ended questions
- Open-ended questions are those that solicit additional information from the inquirer.
- Examples:
  - How may/can I help you?
  - Where have you looked already?
  - What aspect are you looking for?
  - What kind of information are you looking for?
  - What would you like to know about [topic]?
  - When you say [topic], what do you mean?

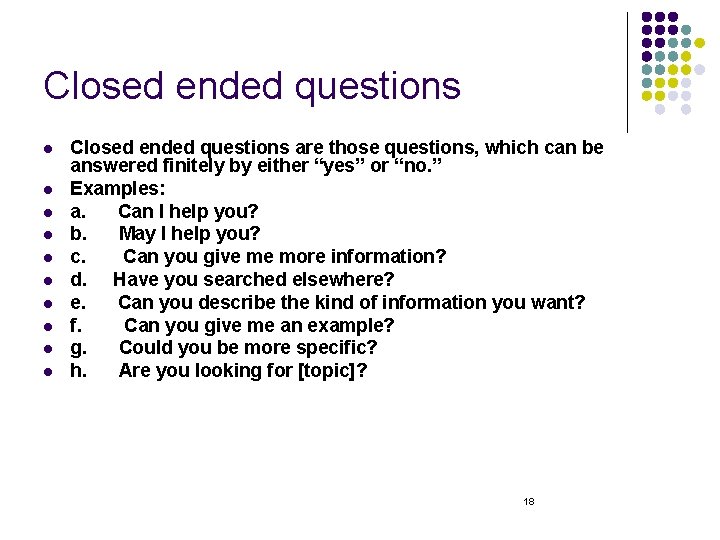

Closed-ended questions
- Closed-ended questions are those that can be answered finitely, by either "yes" or "no".
- Examples:
  a. Can I help you?
  b. May I help you?
  c. Can you give me more information?
  d. Have you searched elsewhere?
  e. Can you describe the kind of information you want?
  f. Can you give me an example?
  g. Could you be more specific?
  h. Are you looking for [topic]?

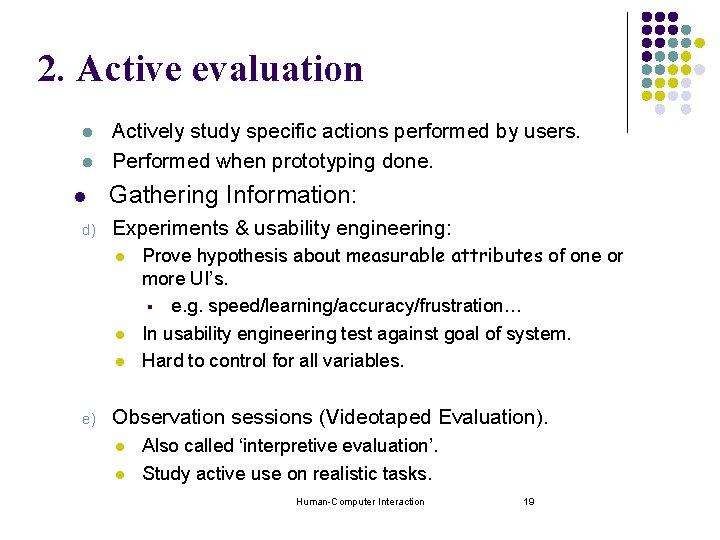

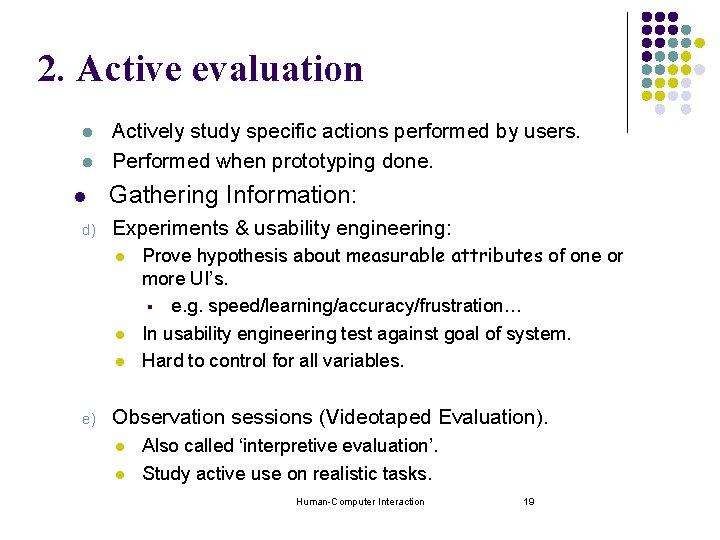

2. Active evaluation
- Actively studies specific actions performed by users.
- Performed when prototyping is done.
Gathering information:
d) Experiments & usability engineering:
   - Prove hypotheses about measurable attributes of one or more UIs.
     - e.g. speed, learning, accuracy, frustration…
   - In usability engineering, test against the goals of the system.
   - Hard to control for all variables.
e) Observation sessions (videotaped evaluation):
   - Also called ‘interpretive evaluation’.
   - Study active use on realistic tasks.

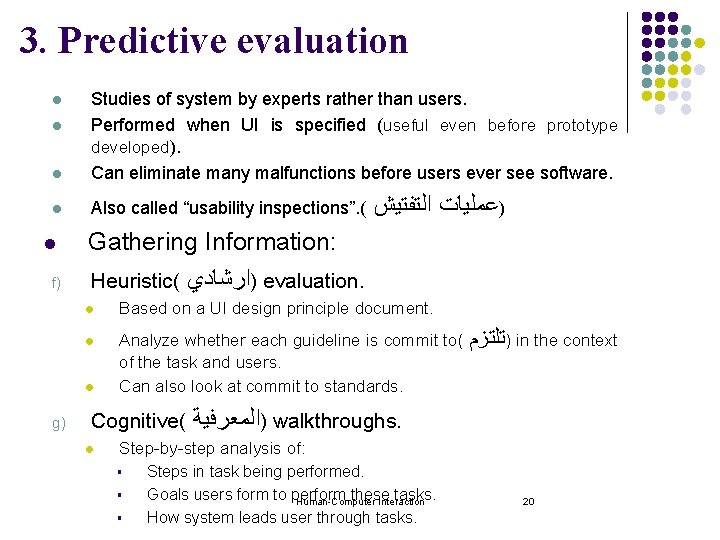

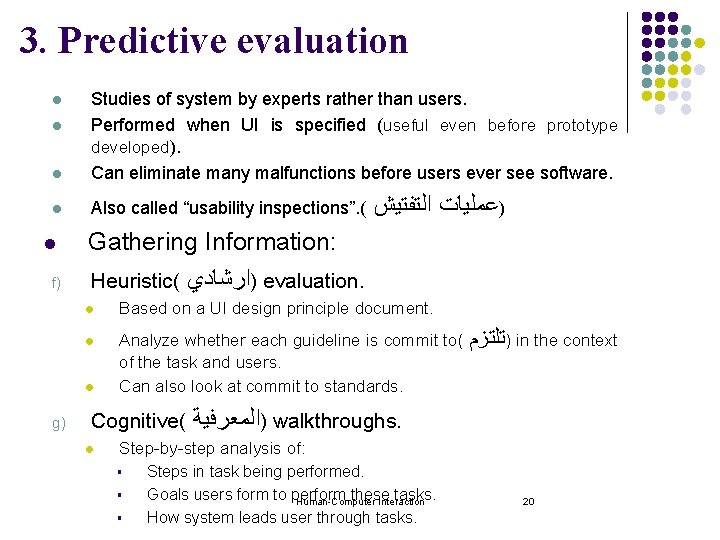

3. Predictive evaluation
- Studies of the system by experts rather than users.
- Performed when the UI is specified (useful even before a prototype is developed).
- Can eliminate many malfunctions before users ever see the software.
- Also called "usability inspections".
Gathering information:
f) Heuristic evaluation:
   - Based on a UI design principles document.
   - Analyze whether each guideline is adhered to in the context of the task and users.
   - Can also check adherence to standards.
g) Cognitive walkthroughs:
   - Step-by-step analysis of:
     - The steps in the task being performed.
     - The goals users form to perform these tasks.
     - How the system leads the user through the tasks.

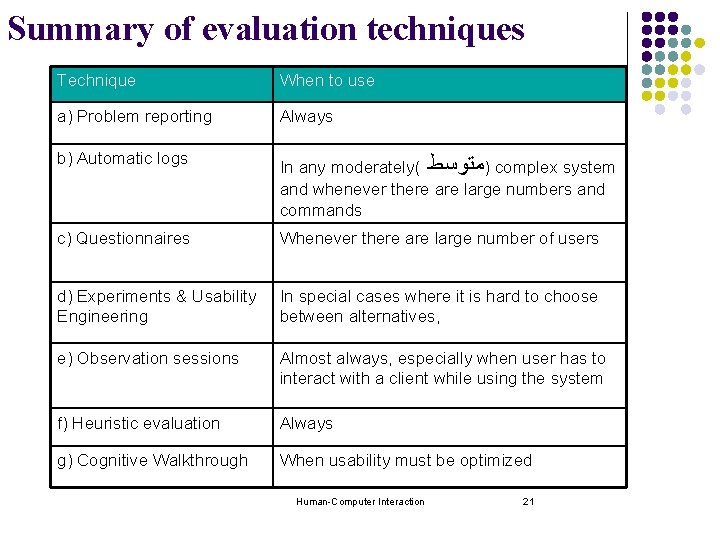

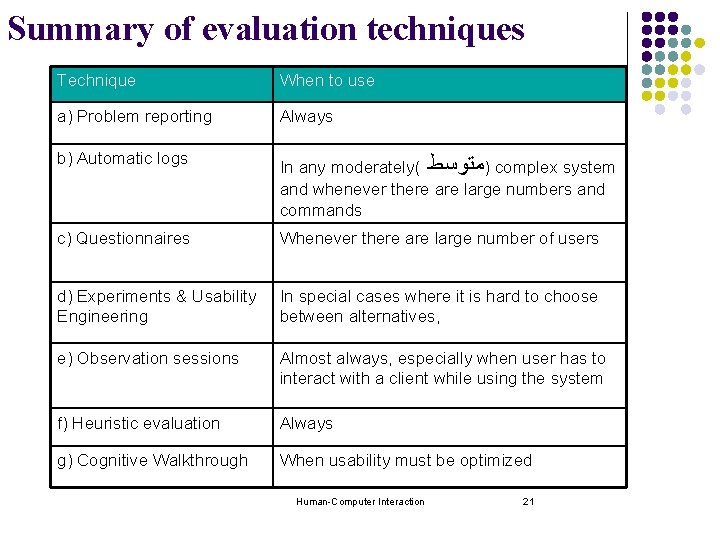

Summary of evaluation techniques (technique: when to use)
- a) Problem reporting: always.
- b) Automatic logs: in any moderately complex system, and whenever there are large numbers of commands.
- c) Questionnaires: whenever there are large numbers of users.
- d) Experiments & usability engineering: in special cases where it is hard to choose between alternatives.
- e) Observation sessions: almost always, especially when the user has to interact with a client while using the system.
- f) Heuristic evaluation: always.
- g) Cognitive walkthrough: when usability must be optimized.

Evaluating Designs
- Evaluation should occur throughout the design process.
- These methods can be used at any stage in the development process, from a design specification, through storyboards and prototypes, to full implementations, which makes them flexible evaluation approaches.

1. Videotaped Evaluation
- A software engineer studies users who are actively using the user interface:
  - To observe what problems they have.
  - The sessions are videotaped.
  - Can be done in the user's environment.
- Activities of the user:
  - Preferably talks to him/herself as if alone in a room.
- This process is called ‘co-operative’ evaluation when the software engineer and the user talk to each other.

1. Videotaped Evaluation (Cont.)
- The importance of video:
  - With it, ‘you can see what you want to see from the system’.
  - You can analyze it repeatedly, looking for different problems.
- Tips for using video:
  - Several cameras are useful.
  - Software is available to help analyse video by dividing it into segments and labelling the segments.

2. Experiments
1. Pick a set of subjects (users):
   - A good mix, to avoid biases.
   - A sufficient number to get statistical significance (so that random happenings do not affect the results).
2. Pick variables to test:
   - Variables are manipulated to produce different conditions:
     - There should not be too many.
     - They should not affect each other too much.
     - Make sure there are no hidden variables.
3. Develop a hypothesis:
   - A prediction of the outcome.
   - The aim of the experiment is to show that this prediction is correct.

Variables
- Independent variable (IV):
  - Characteristic changed to produce different conditions, e.g. interface style, number of menu items…
- Dependent variable (DV):
  - Characteristic measured in the experiment, e.g. time taken, number of errors.
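To make the IV/DV distinction and the hypothesis step concrete, here is a small sketch with invented data: the independent variable is interface style (menu vs command line) and the dependent variable is task time in seconds. It computes Welch's t statistic by hand; choosing Welch's test (which does not assume equal variances) is an illustrative choice, not something the slides prescribe.

```python
from math import sqrt
from statistics import mean, variance

# Invented data (illustrative only).
# IV: interface style; DV: time taken to complete the task, in seconds.
times = {
    "menu": [41.0, 38.5, 44.2, 40.1, 39.7, 42.3],
    "command": [47.9, 45.2, 50.3, 44.8, 49.1, 46.6],
}


def welch_t(a: list[float], b: list[float]) -> float:
    """Welch's t statistic for the difference between two independent means."""
    standard_error = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / standard_error


# Hypothesis: the menu interface is faster, so t should be clearly negative.
t = welch_t(times["menu"], times["command"])
print(f"menu={mean(times['menu']):.1f}s command={mean(times['command']):.1f}s t={t:.2f}")
```

Turning t into a p-value needs the t distribution; SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same Welch test and returns both values.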

3. Heuristic Evaluation
- Developed by Jakob Nielsen and Rolf Molich in the early 1990s.
- Helps find usability problems in a UI design.
  - Each heuristic is based on a UI guideline.
  - Usability criteria (heuristics) are identified.
  - The design is examined by experts to see whether these are violated.

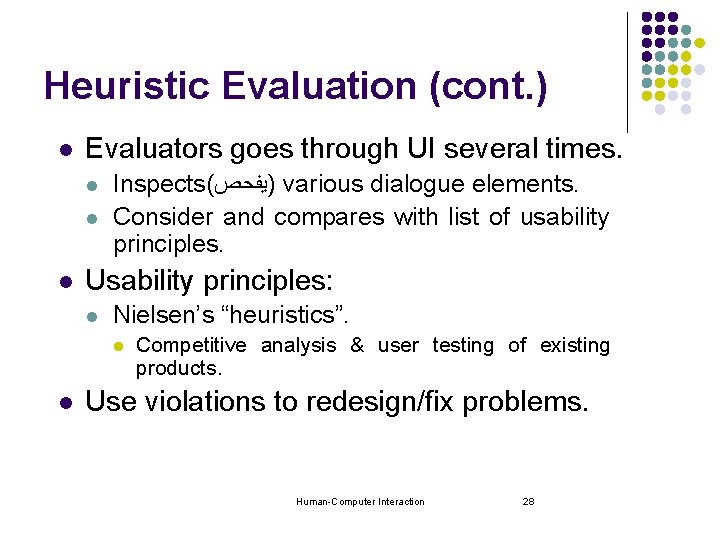

Heuristic Evaluation (Cont.)
- Evaluators go through the UI several times:
  - Inspect various dialogue elements.
  - Compare them with a list of usability principles.
- Usability principles:
  - Nielsen's "heuristics".
  - Competitive analysis and user testing of existing products.
- Use violations to redesign/fix problems.

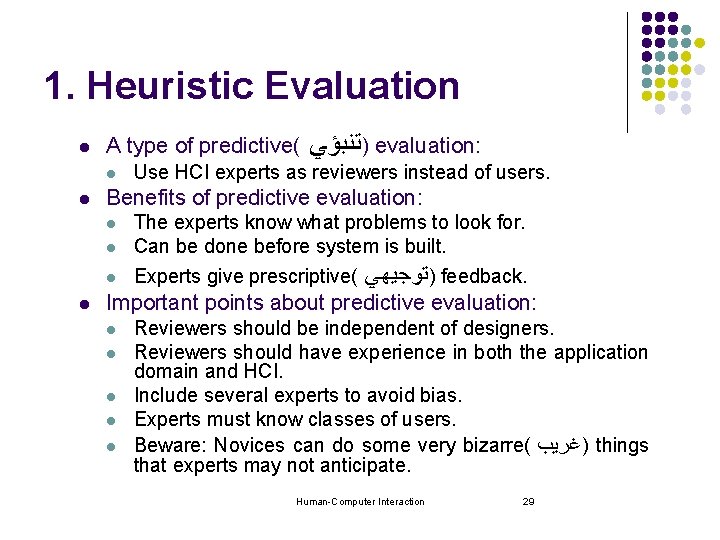

1. Heuristic Evaluation
- A type of predictive evaluation:
  - Uses HCI experts as reviewers instead of users.
- Benefits of predictive evaluation:
  - The experts know what problems to look for.
  - Can be done before the system is built.
  - Experts give prescriptive feedback.
- Important points about predictive evaluation:
  - Reviewers should be independent of the designers.
  - Reviewers should have experience in both the application domain and HCI.
  - Include several experts to avoid bias.
  - Experts must know the classes of users.
  - Beware: novices can do some very bizarre things that experts may not anticipate.

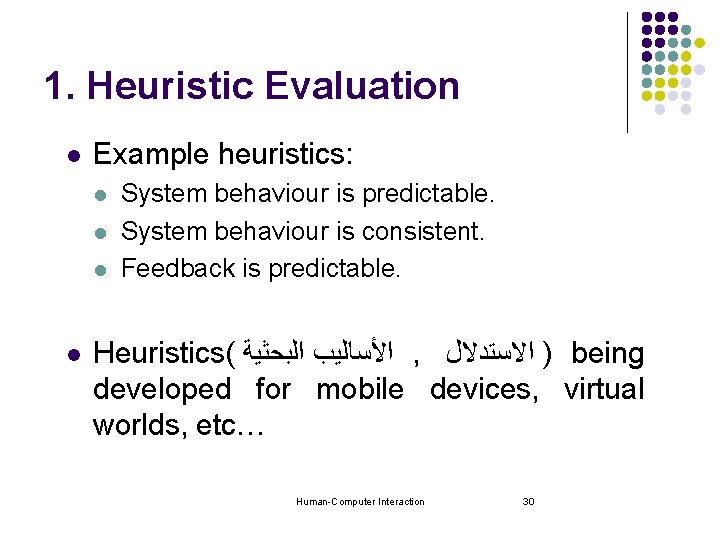

1. Heuristic Evaluation
- Example heuristics:
  - System behaviour is predictable.
  - System behaviour is consistent.
  - Feedback is predictable.
- Heuristics are being developed for mobile devices, virtual worlds, etc.

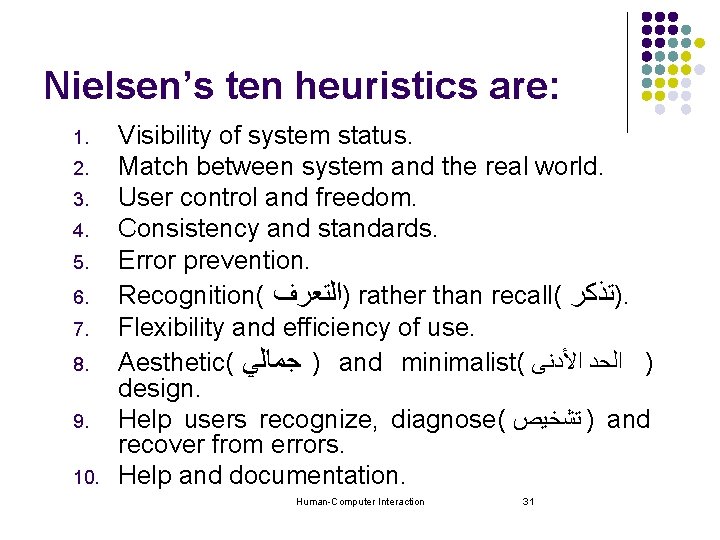

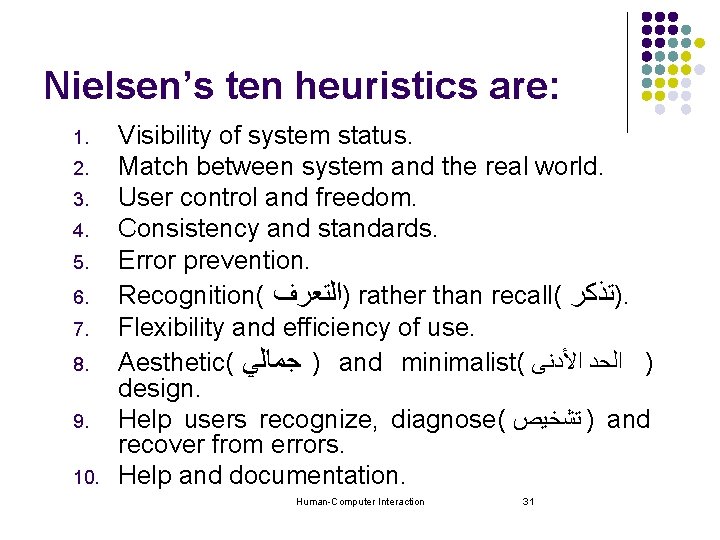

Nielsen's ten heuristics are:
1. Visibility of system status.
2. Match between system and the real world.
3. User control and freedom.
4. Consistency and standards.
5. Error prevention.
6. Recognition rather than recall.
7. Flexibility and efficiency of use.
8. Aesthetic and minimalist design.
9. Help users recognize, diagnose and recover from errors.
10. Help and documentation.
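Because several independent experts should review the design, their findings have to be merged. The sketch below groups distinct problems under Nielsen's ten heuristics and records how many evaluators reported each one. The evaluator names and problems are hypothetical; the merging procedure is an illustrative assumption, not a prescribed part of heuristic evaluation.

```python
from collections import defaultdict

NIELSEN_HEURISTICS = [
    "Visibility of system status",
    "Match between system and the real world",
    "User control and freedom",
    "Consistency and standards",
    "Error prevention",
    "Recognition rather than recall",
    "Flexibility and efficiency of use",
    "Aesthetic and minimalist design",
    "Help users recognize, diagnose and recover from errors",
    "Help and documentation",
]

# Hypothetical findings: evaluator -> list of (heuristic number, problem).
findings = {
    "evaluator A": [(1, "no progress bar on upload"), (4, "OK/Cancel order varies")],
    "evaluator B": [(1, "no progress bar on upload"), (9, "'Error 25' message")],
    "evaluator C": [(4, "OK/Cancel order varies"), (6, "must retype order number")],
}


def merge(findings):
    """Group distinct problems by heuristic and record who found each one."""
    merged = defaultdict(lambda: defaultdict(set))
    for evaluator, items in findings.items():
        for number, problem in items:
            merged[number][problem].add(evaluator)
    return merged


for number, problems in sorted(merge(findings).items()):
    print(f"H-{number}: {NIELSEN_HEURISTICS[number - 1]}")
    for problem, who in problems.items():
        print(f"    {problem} (found by {len(who)} of {len(findings)})")
```

Problems found by only one evaluator are a reminder of why the slides recommend several experts: no single reviewer spots everything.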

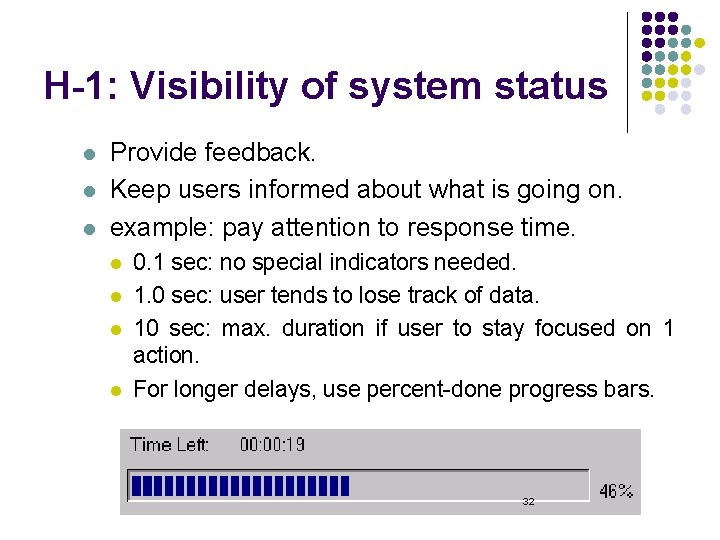

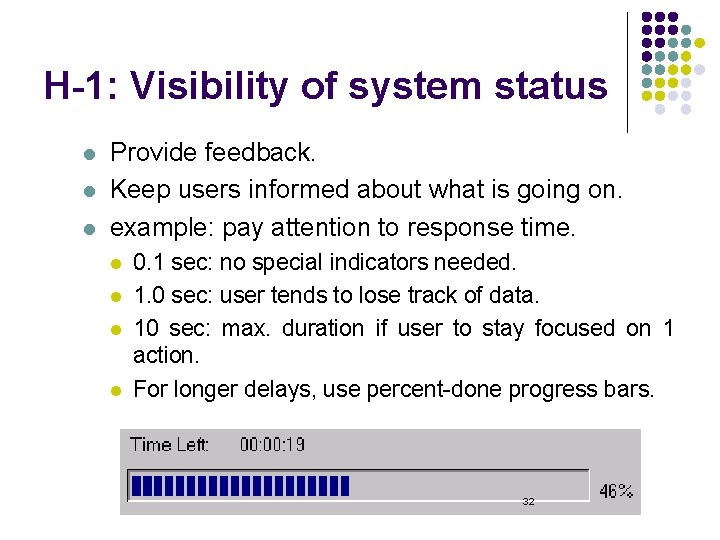

H-1: Visibility of system status
- Provide feedback.
- Keep users informed about what is going on.
- Example: pay attention to response time.
  - 0.1 sec: no special indicators needed.
  - 1.0 sec: the user notices the delay and tends to lose the feeling of operating directly on the data.
  - 10 sec: maximum duration for the user to stay focused on one action.
  - For longer delays, use percent-done progress bars.
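The response-time thresholds above can be read as a simple decision rule. This sketch maps an expected delay to a kind of feedback; using a busy cursor between 1 and 10 seconds is an assumption borrowed from the later "dealing with long delays" slide, and the returned labels are illustrative.

```python
def feedback_for(expected_seconds: float) -> str:
    """Choose a feedback style from the expected delay (H-1 thresholds)."""
    if expected_seconds <= 1.0:
        # Up to 0.1 s feels instantaneous; up to 1 s the delay is noticed,
        # but no special indicator is needed.
        return "none"
    if expected_seconds <= 10.0:
        # The user can still stay focused on the action (assumed: busy cursor).
        return "busy cursor"
    # Beyond about 10 s, show how much work is done.
    return "percent-done progress bar"


for delay in (0.05, 0.8, 3, 30):
    print(delay, "->", feedback_for(delay))
```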

H-1: Visibility of system status
- Continuously inform the user about:
  - What the system is doing.
  - How it is interpreting the user's input.
- The user should always be aware of what is going on.
- Example (figure): after "> Do it" with no feedback, the user wonders "What's it doing?"; after "> Do it" followed by "This will take 5 minutes...", the user thinks "Time for coffee."

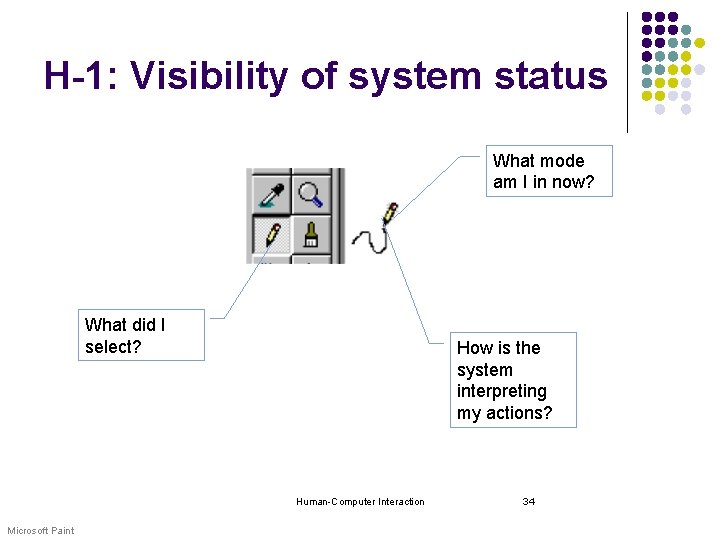

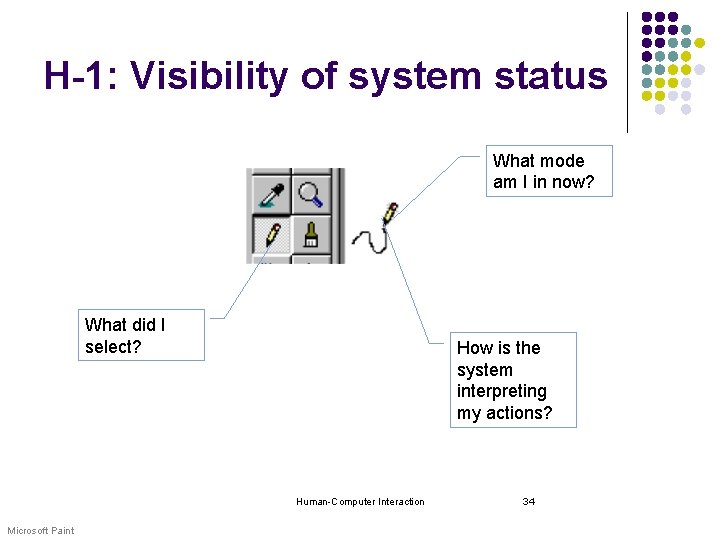

H-1: Visibility of system status
- What mode am I in now?
- What did I select?
- How is the system interpreting my actions?
(Example: Microsoft Paint)

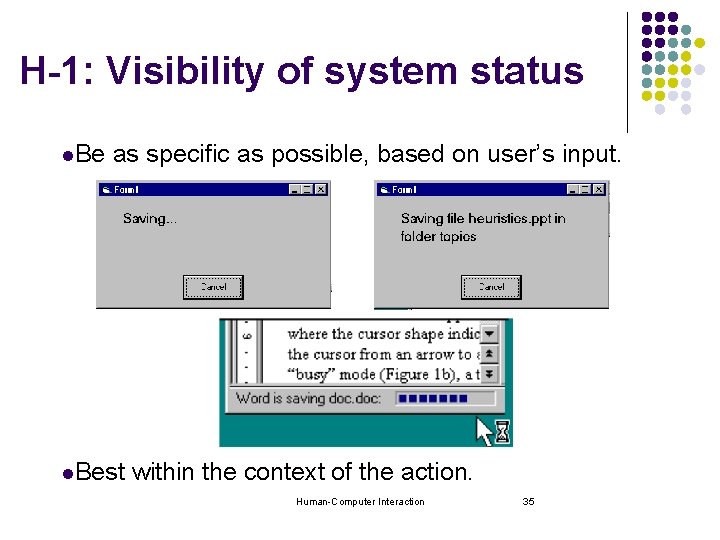

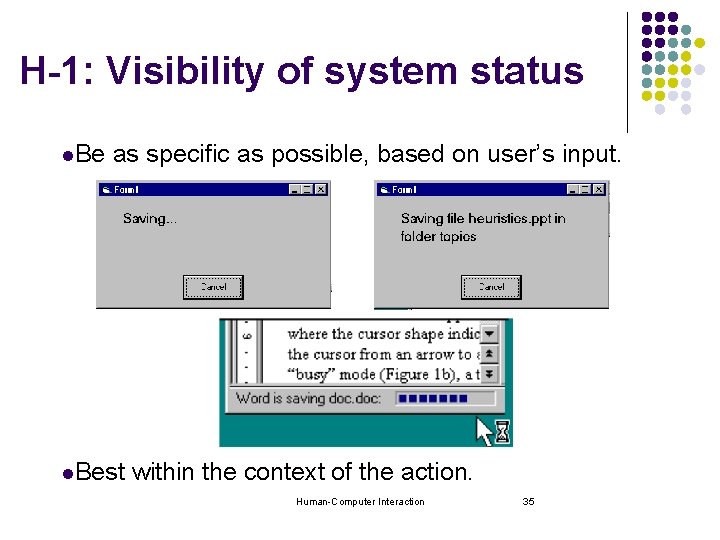

H-1: Visibility of system status
- Be as specific as possible, based on the user's input.
- Best within the context of the action.

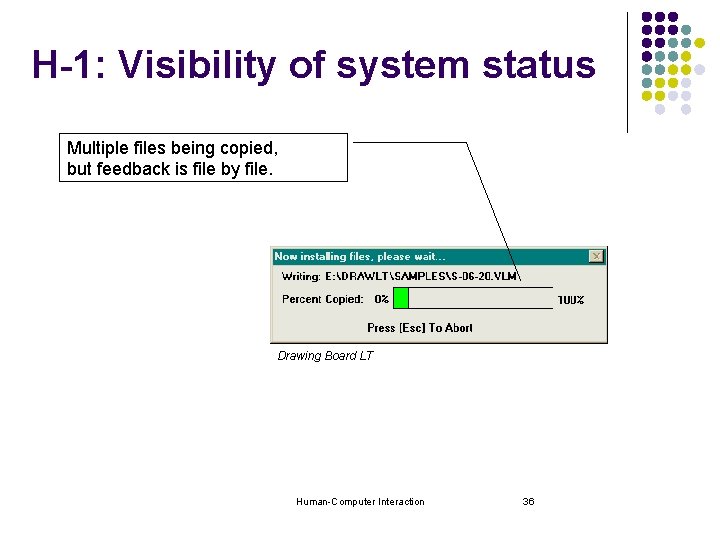

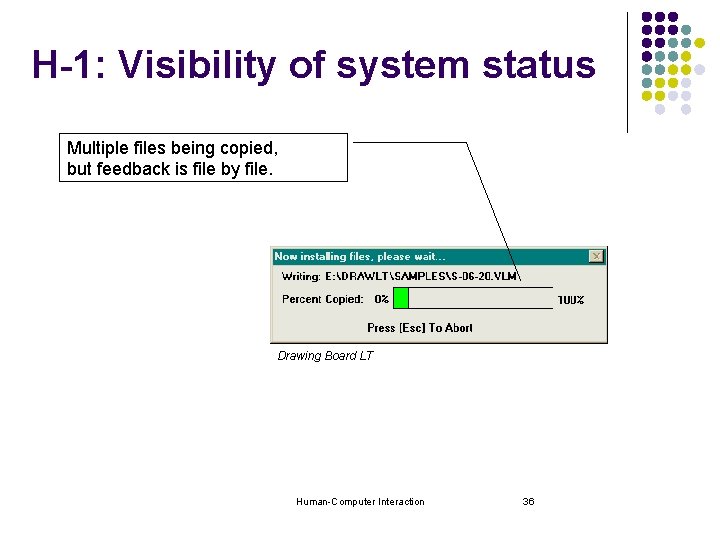

H-1: Visibility of system status
- Multiple files are being copied, but feedback is given file by file. (Example: Drawing Board LT)

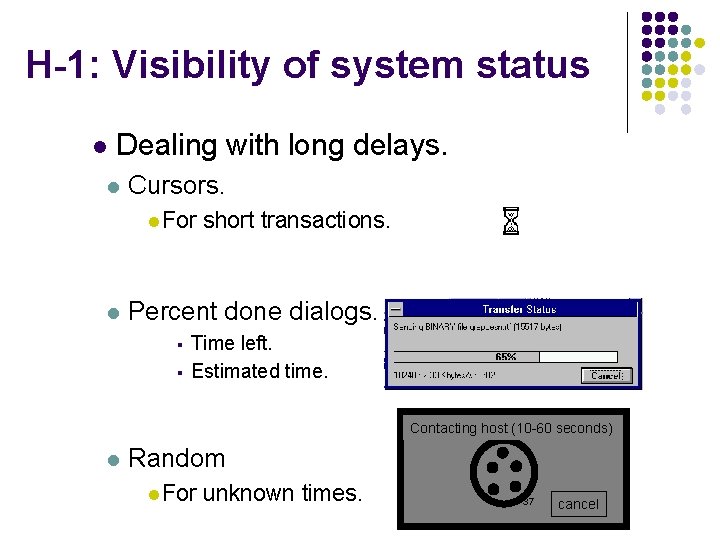

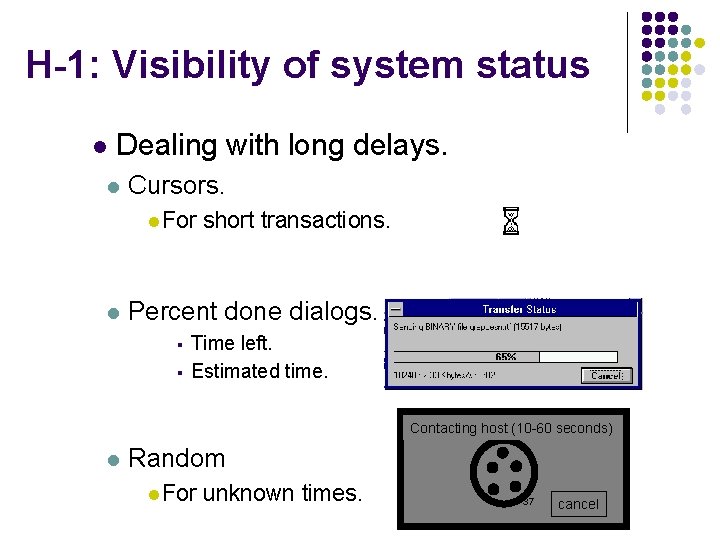

H-1: Visibility of system status
- Dealing with long delays:
  - Cursors: for short transactions.
  - Percent-done dialogs: time left, estimated time.
  - Random (indeterminate) indicators: for unknown times, e.g. "Contacting host (10-60 seconds)".
  - Cancel.
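A percent-done dialog needs a time-left estimate. A minimal sketch, assuming the work done so far is a fair guide to the rest (constant rate); `total` should cover the whole operation (for example, all files being copied) rather than one file at a time, which was the problem in the file-by-file example.

```python
def progress_text(done: int, total: int, elapsed_s: float) -> str:
    """Percent done plus a time-left estimate, assuming a constant rate so far."""
    if total <= 0:
        raise ValueError("total must be positive")
    pct = 100 * done / total
    if done == 0:
        # No rate is known yet, so do not guess a time.
        return f"{pct:.0f}% done, estimating time left..."
    remaining_s = elapsed_s * (total - done) / done
    return f"{pct:.0f}% done, about {remaining_s:.0f} s left"


print(progress_text(25, 100, 10.0))
```

For example, 25 of 100 items done after 10 seconds gives "25% done, about 30 s left".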

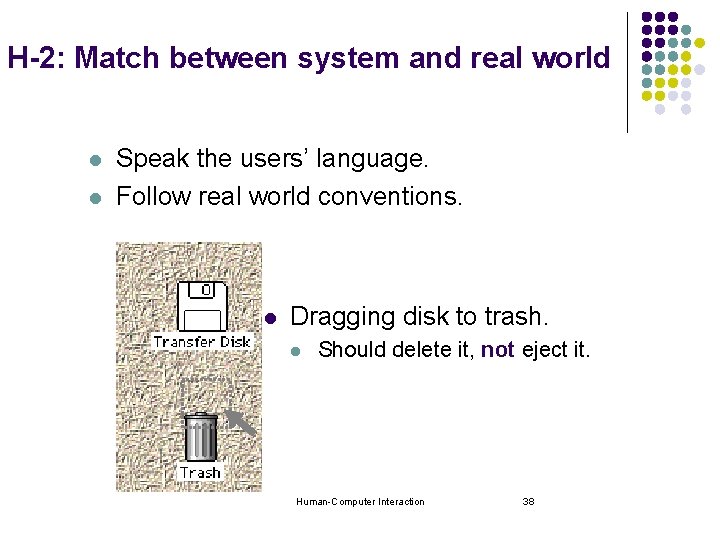

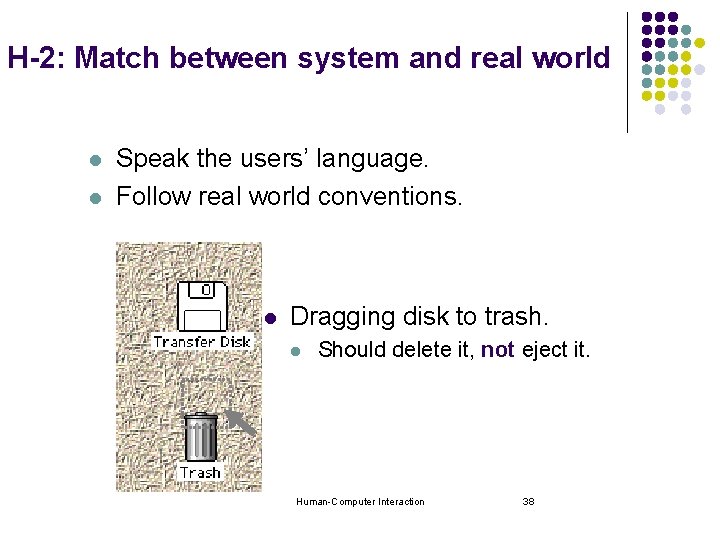

H-2: Match between system and real world
- Speak the users' language.
- Follow real-world conventions.
  - Dragging a disk to the trash should delete it, not eject it.

H-2: Match between system and real world
- User: "My program gave me the message Rstrd Info. What does it mean?"
- Helper: "That's restricted information."
- User: "But surely you can tell me!!!"
- Helper: "No, no… Rstrd Info stands for 'Restricted Information'."
- User: "Hmm… but what does it mean???"
- Helper: "It means the program is too busy to let you log on."
- User: "Ok, I'll take a coffee."

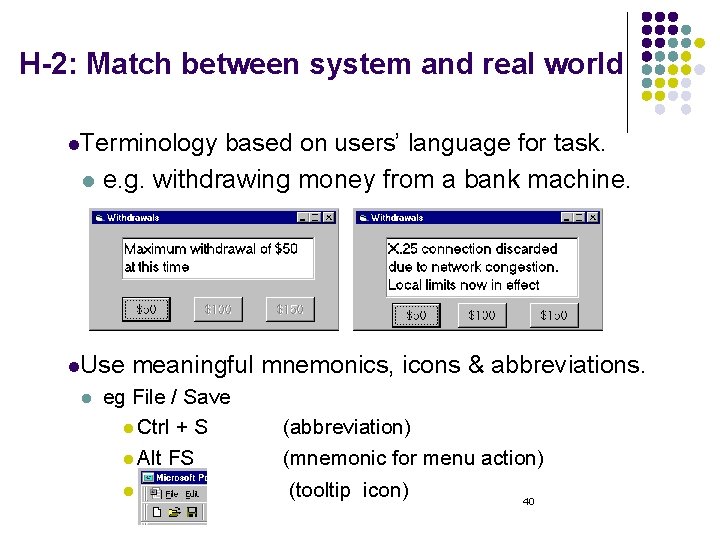

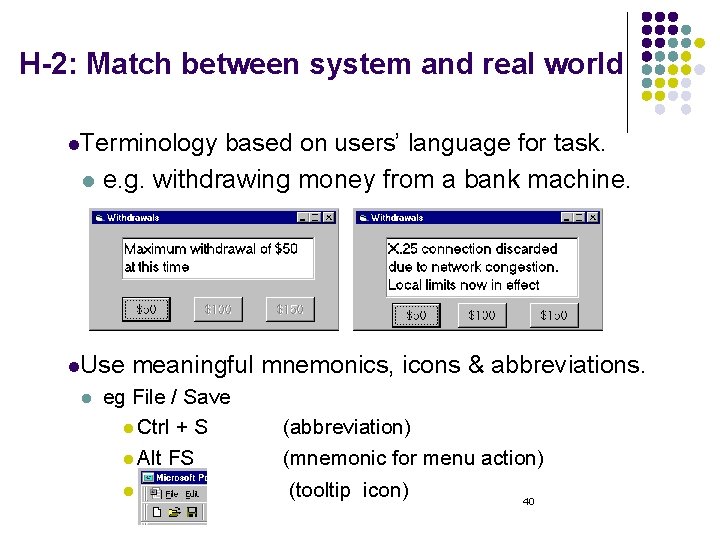

H-2: Match between system and real world
- Terminology based on the users' language for the task.
  - e.g. withdrawing money from a bank machine.
- Use meaningful mnemonics, icons and abbreviations.
  - e.g. File / Save:
    - Ctrl + S (abbreviation).
    - Alt F S (mnemonic for menu action).
    - Save icon (tooltip icon).

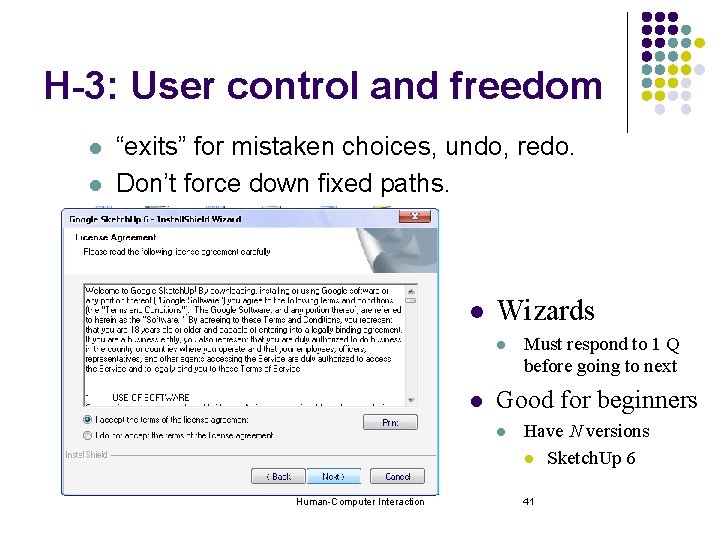

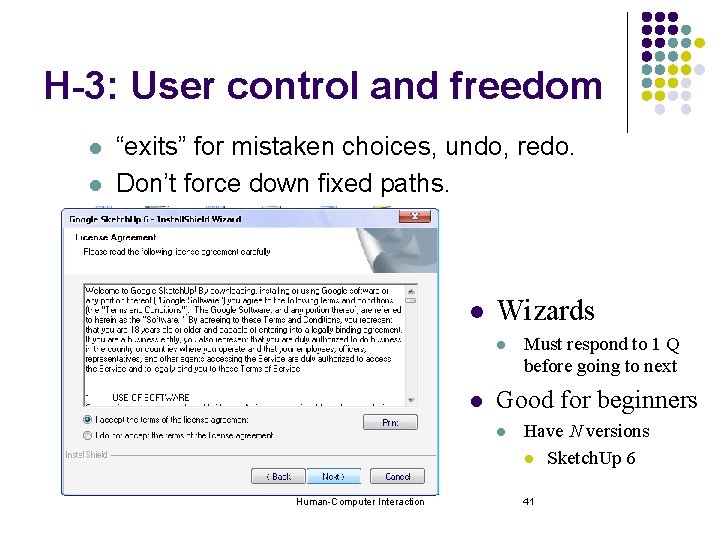

H-3: User control and freedom
- "Exits" for mistaken choices; undo, redo.
- Don't force users down fixed paths.
  - Wizards:
    - Must respond to one question before going to the next.
    - Good for beginners.
    - Have N versions.
  - (Example: SketchUp 6)

H-3: User control and freedom
- How do I get out of this?

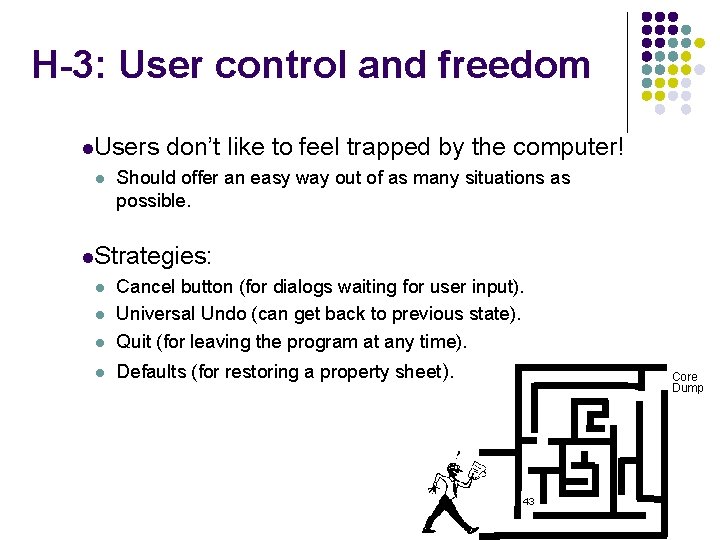

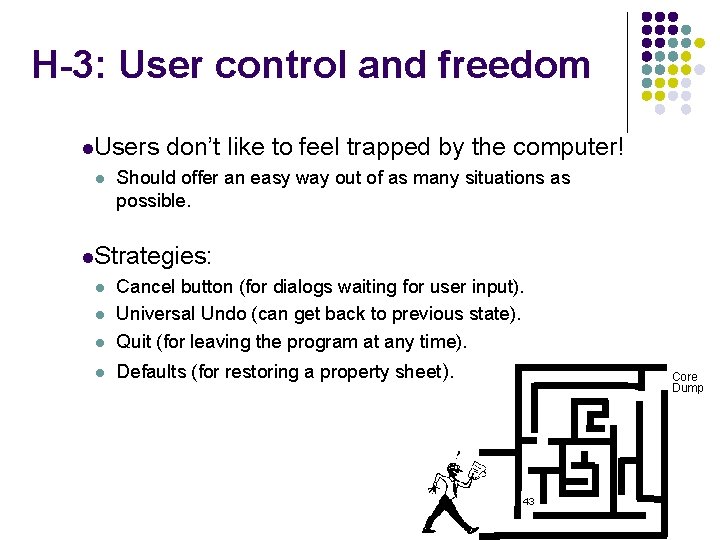

H-3: User control and freedom
- Users don't like to feel trapped by the computer!
  - Should offer an easy way out of as many situations as possible.
- Strategies:
  - Cancel button (for dialogs waiting for user input).
  - Universal Undo (can get back to the previous state).
  - Quit (for leaving the program at any time).
  - Defaults (for restoring a property sheet).
(Figure: "Core Dump")
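Universal undo can be sketched with two stacks of states. This version stores whole snapshots, which is one simple strategy assumed here for illustration (real editors often record commands instead); `UndoHistory` is a hypothetical name.

```python
class UndoHistory:
    """Universal undo/redo over snapshots of a document's state."""

    def __init__(self, initial):
        self._undo = []  # earlier states, most recent last
        self._redo = []  # states that were undone, available for redo
        self.state = initial

    def apply(self, new_state):
        """Record a new state after a user action."""
        self._undo.append(self.state)
        self._redo.clear()  # a new action discards the redo branch
        self.state = new_state

    def undo(self):
        """Go back one state; does nothing if there is nothing to undo."""
        if self._undo:
            self._redo.append(self.state)
            self.state = self._undo.pop()
        return self.state

    def redo(self):
        """Re-apply an undone state; does nothing if there is nothing to redo."""
        if self._redo:
            self._undo.append(self.state)
            self.state = self._redo.pop()
        return self.state


history = UndoHistory("")
history.apply("a")
history.apply("ab")
print(history.undo(), history.redo())  # back to "a", then forward to "ab"
```

Making undo and redo harmless no-ops at the ends of the history means users can always press them without fear of being trapped or hitting an error.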

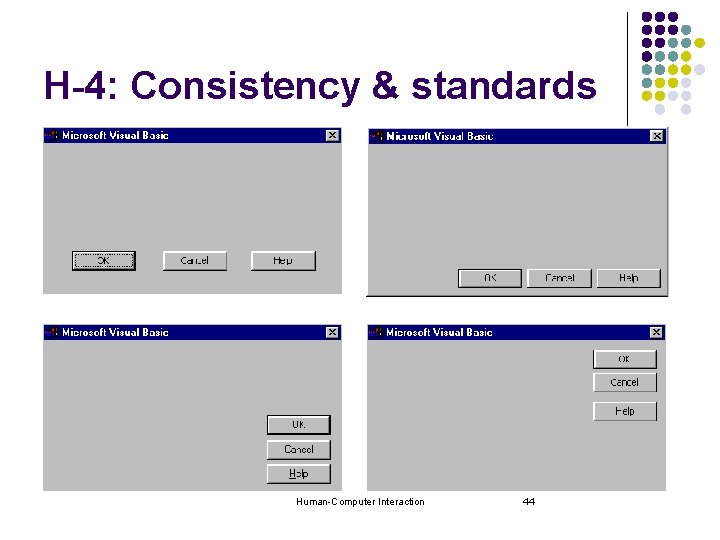

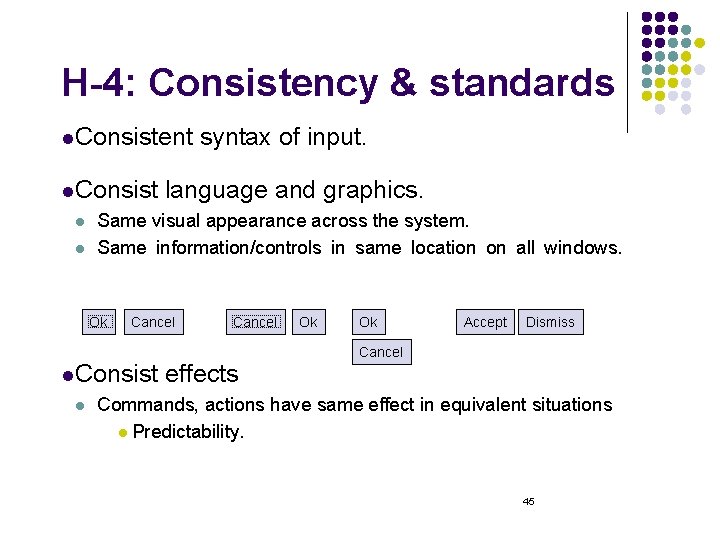

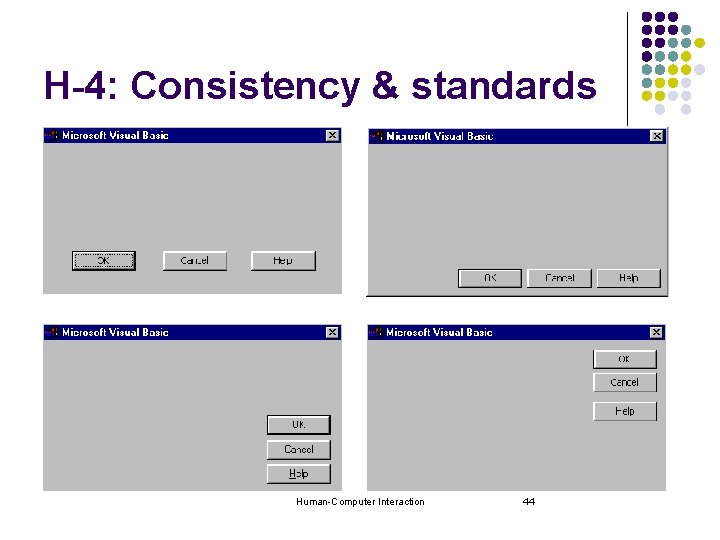

H-4: Consistency & standards

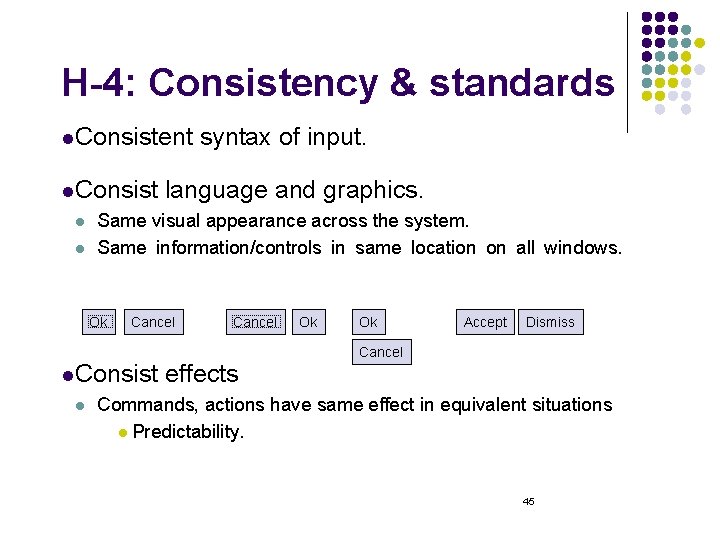

H-4: Consistency & standards
- Consistent language and graphics:
  - Same visual appearance across the system.
  - Same information/controls in the same location on all windows.
- Consistent effects:
  - Commands and actions have the same effect in equivalent situations.
  - Predictability.
- Consistent syntax of input.
(Figure: inconsistent button sets such as "Ok / Cancel", "Cancel / Ok" and "Ok / Accept / Dismiss / Cancel")

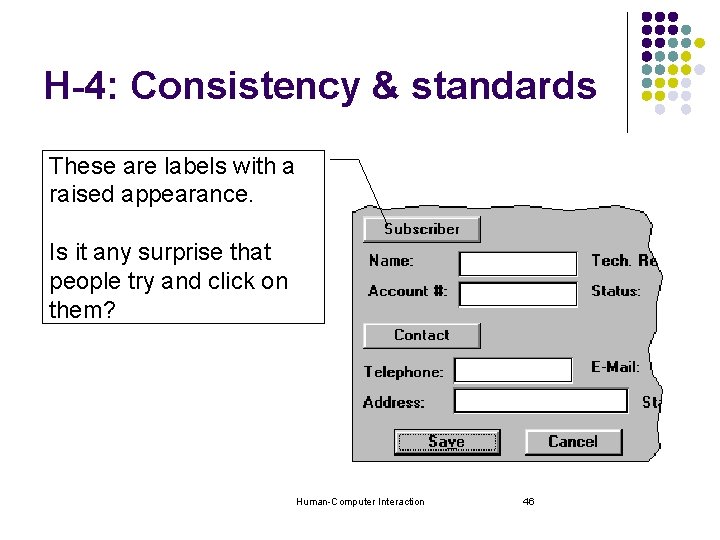

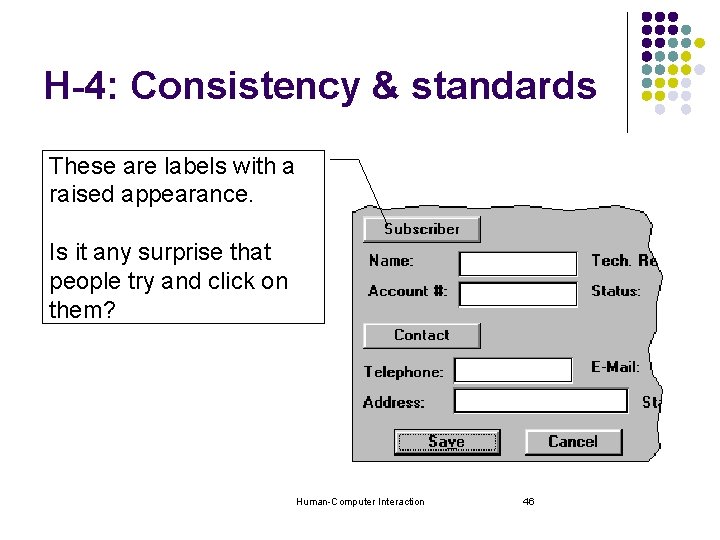

H-4: Consistency & standards
- These are labels with a raised appearance. Is it any surprise that people try to click on them?

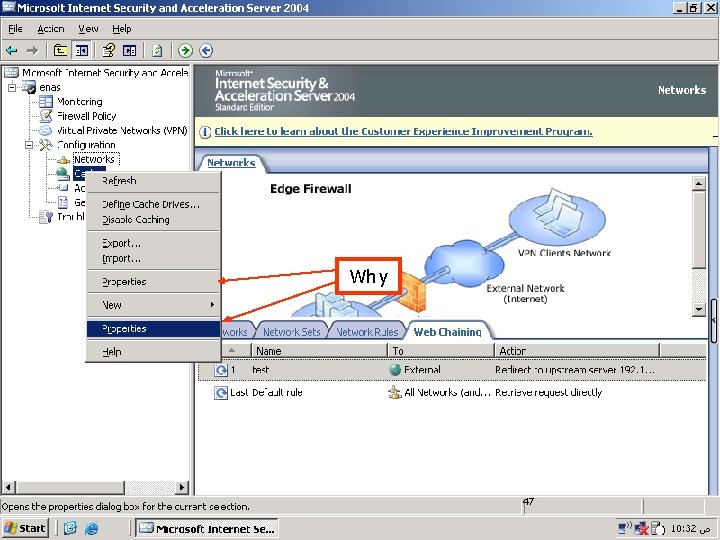

Why?

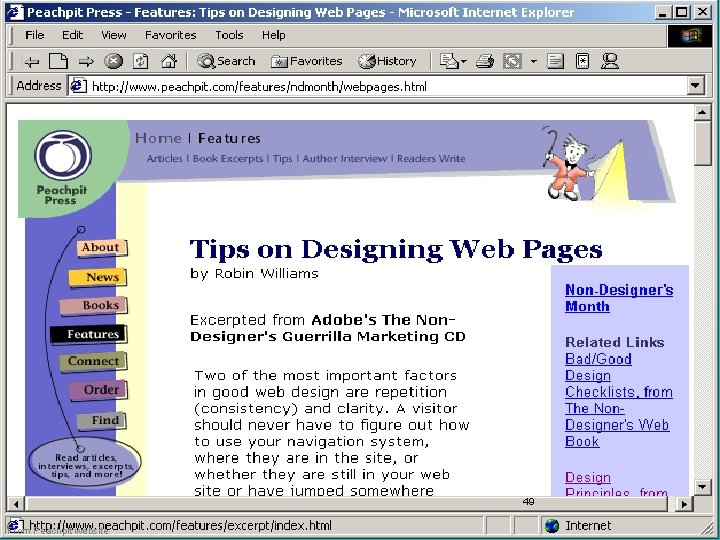

(From the Peachpit website)


H-5: Error prevention
- Make it difficult to make errors.
- Even better than a good error message is a careful design that prevents a problem from occurring in the first place.

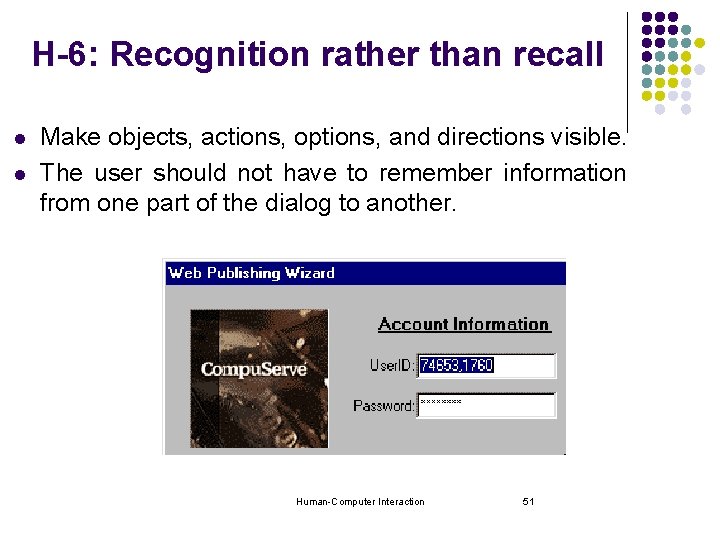

H-6: Recognition rather than recall
- Make objects, actions, options, and directions visible.
- The user should not have to remember information from one part of the dialog to another.

H-6: Recognition rather than recall
- Computers are good at remembering; people are not!
- Promote recognition over recall:
  - Menus, icons and choice dialog boxes rather than commands and field formats.
  - Relies on visibility of objects to the user.

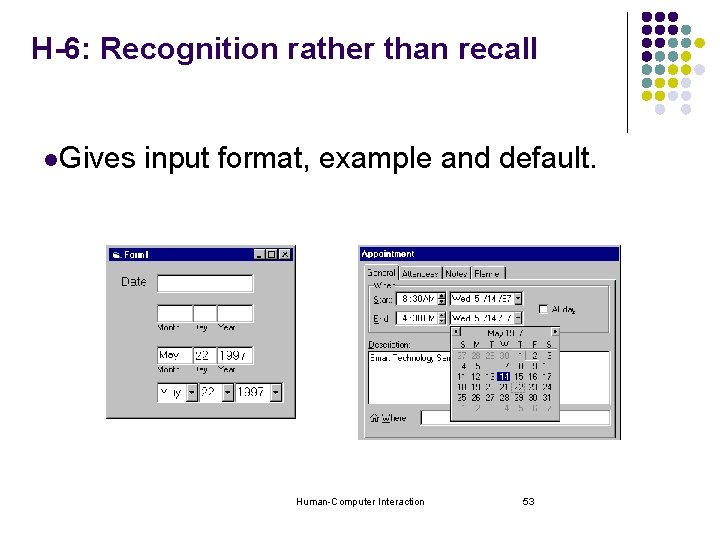

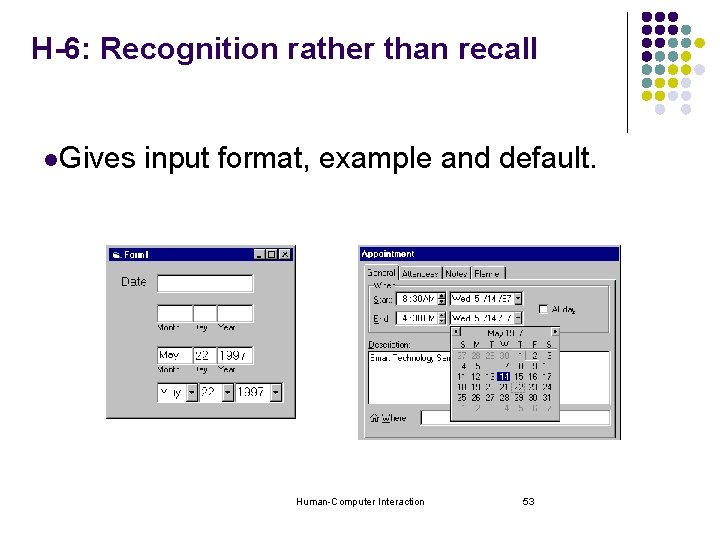

H-6: Recognition rather than recall
- Give the input format, an example and a default.
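A sketch of a text field that follows this advice: the prompt shows the format, an example and the default, and empty input accepts the default, so nothing has to be recalled. The DD/MM/YYYY format and the function names are assumptions for illustration.

```python
from datetime import date, datetime

DATE_FORMAT = "%d/%m/%Y"  # assumed input format for this example


def date_prompt(default: date) -> str:
    """Label showing the expected format, an example and the default value."""
    return f"Date (DD/MM/YYYY, e.g. 23/02/2008) [{default.strftime(DATE_FORMAT)}]: "


def parse_date(text: str, default: date) -> date:
    """Empty input accepts the default; otherwise parse the shown format."""
    text = text.strip()
    if not text:
        return default
    return datetime.strptime(text, DATE_FORMAT).date()


today = date(2008, 2, 23)
print(date_prompt(today))
print(parse_date("", today), parse_date("01/03/2008", today))
```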

H-7: Flexibility and efficiency of use
- Accelerators for experts:
  - e.g. keyboard shortcuts.
- Allow users to tailor frequent actions:
  - e.g. macros.
  - Customized user profiles on the web.

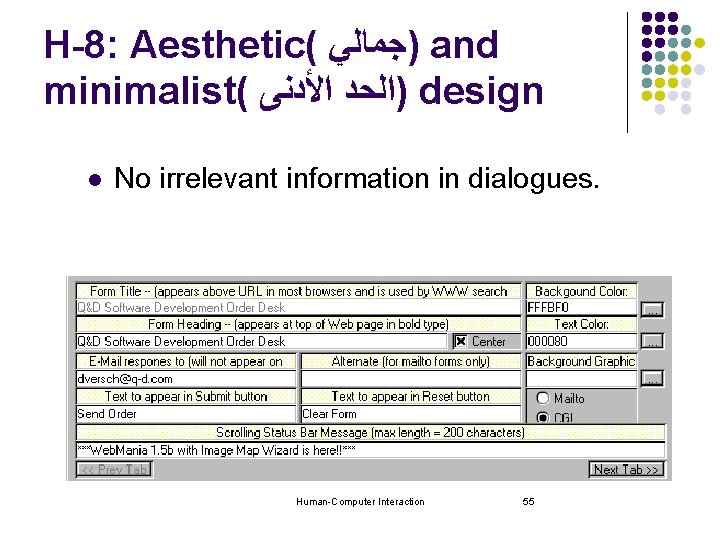

H-8: Aesthetic and minimalist design
- No irrelevant information in dialogues.

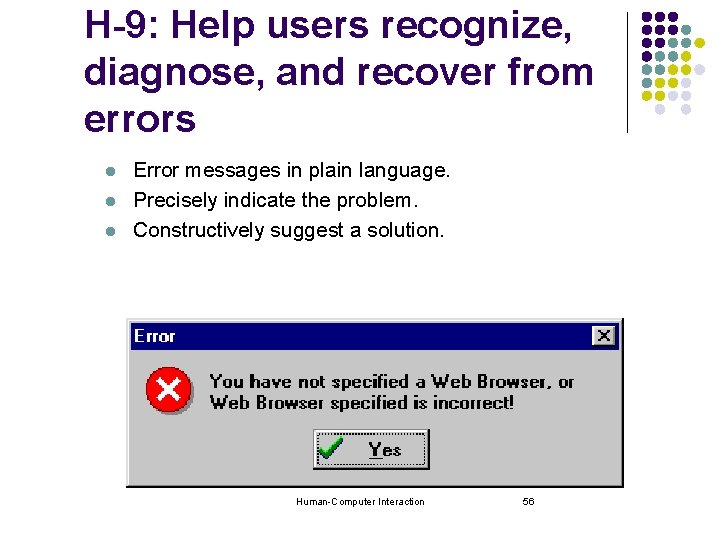

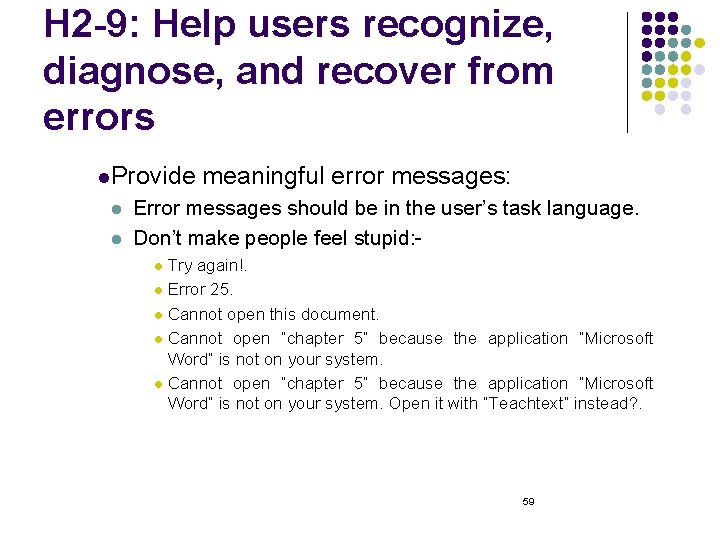

H-9: Help users recognize, diagnose, and recover from errors
- Error messages in plain language.
- Precisely indicate the problem.
- Constructively suggest a solution.

H-9: Help users recognize, diagnose, and recover from errors
- People will make errors!
- Errors we make:
  - Mistakes: conscious actions lead to an error instead of the correct solution.
  - Slips: unconscious behaviour gets misdirected en route to satisfying a goal.

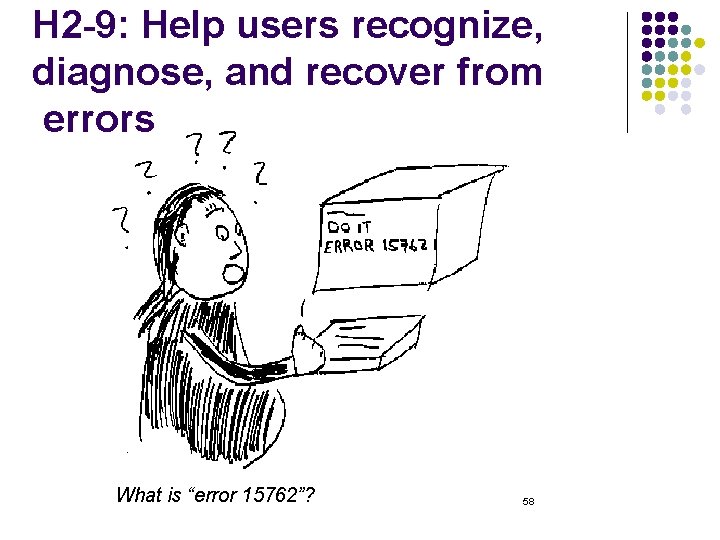

H-9: Help users recognize, diagnose, and recover from errors
- What is “error 15762”?

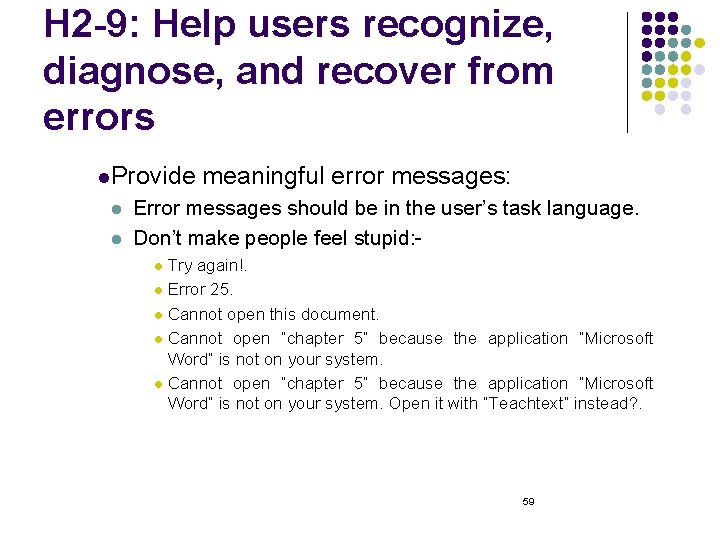

H-9: Help users recognize, diagnose, and recover from errors
- Provide meaningful error messages:
  - Error messages should be in the user's task language.
  - Don't make people feel stupid.
- Examples, from worst to best:
  - Try again!
  - Error 25.
  - Cannot open this document.
  - Cannot open “chapter 5” because the application “Microsoft Word” is not on your system. Open it with “TeachText” instead?
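The best message above says what failed, why, and what the user can do next. A minimal sketch of building such a message; the function name and the `alternatives` parameter (applications that could open the file instead) are hypothetical.

```python
def cannot_open_message(document: str, needed_app: str, alternatives: list[str]) -> str:
    """Error message in the user's task language: what failed, why, what next."""
    message = (
        f'Cannot open "{document}" because the application '
        f'"{needed_app}" is not on your system.'
    )
    if alternatives:
        # Constructively suggest a solution instead of just reporting failure.
        message += f' Open it with "{alternatives[0]}" instead?'
    return message


print(cannot_open_message("chapter 5", "Microsoft Word", ["TeachText"]))
```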

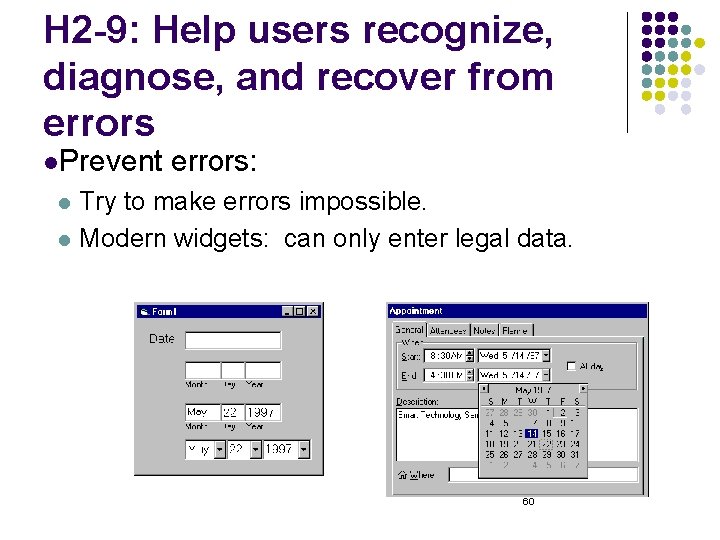

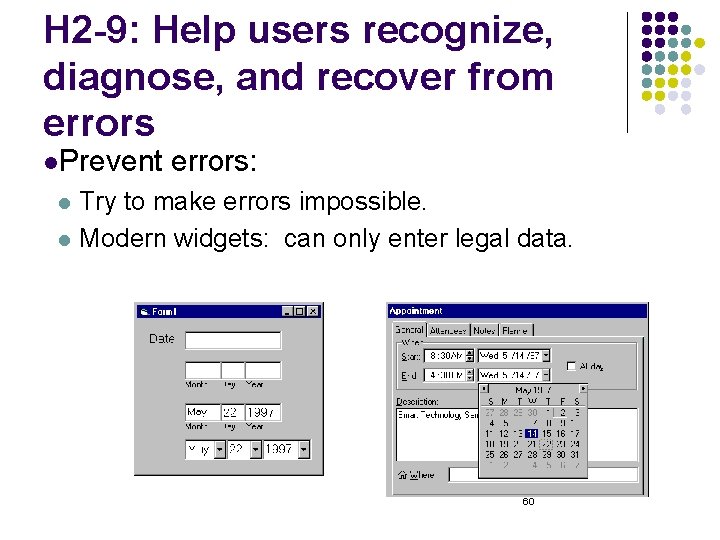

H-9: Help users recognize, diagnose, and recover from errors
- Prevent errors:
  - Try to make errors impossible.
  - Modern widgets: can only enter legal data.
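A widget that "can only enter legal data" can be sketched as a numeric spinner that clamps to its range instead of accepting a bad value and reporting an error afterwards. `Spinner` is a hypothetical class, not a real toolkit API.

```python
class Spinner:
    """A numeric field that can only hold legal values (illustrative widget)."""

    def __init__(self, low: int, high: int, value: int):
        if not low <= value <= high:
            raise ValueError("initial value out of range")
        self.low, self.high, self.value = low, high, value

    def step(self, delta: int) -> int:
        # Clamp to the legal range, so an illegal value can never be entered.
        self.value = max(self.low, min(self.high, self.value + delta))
        return self.value


month = Spinner(1, 12, 11)
print(month.step(5), month.step(-20))  # stays within 1..12
```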

H-10: Help and documentation
- Easy to search.
- Focused on the user's task.
- Lists concrete steps to carry out.
- Not too large.

H-10: Help and documentation
- Help is not a replacement for bad design!
- Simple systems:
  - Use minimal instructions.
- Most other systems:
  - Simple things should be simple.
  - Provide a learning path for advanced features.
(Figure: "Volume 37: A user's guide to...")

Documentation and how it is used
- Many users do not read manuals.
- Usually used when users are in some kind of panic.
- Paper manuals are unavailable in many businesses!
  - e.g. a single copy locked away in the system administrator's office.
- Online documentation is better.
- Online help should be specific to the current context.
- Sometimes used for quick reference:
  - e.g. a list of shortcuts...

2. Cognitive Walkthrough
- Proposed by Polson and colleagues.
- Evaluates a design on how well it supports the user in learning a task.
- Focuses on ease of learning.
- Usually performed by an expert in cognitive psychology.
- The expert is told the assumptions about the user population, context of use and task details.
- The expert ‘walks through’ the design to identify potential problems using psychological principles.

2. Cognitive Walkthrough
- Walkthroughs require a detailed review of a sequence of actions.
- In the cognitive walkthrough, the sequence of actions refers to the steps that an interface will require a user to perform in order to accomplish some task.

A walkthrough needs four things:
1. A specification or prototype of the system.
   - It doesn't have to be complete, but it should be fairly detailed.
2. A description of the task the user is to perform on the system.
3. A written list of the actions needed to complete the task with the proposed system.
4. An indication of who the users are and what kind of experience and knowledge the evaluators can assume about them.

Four Questions
The evaluator will answer these questions:
1. Is the effect of the action the same as the user's goal at that point?
2. Will users see that the action is available?
3. Once users have found the correct action, will they know it is the one they need?
4. After the action is taken, will users understand the feedback they get?
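One way to keep a walkthrough organized is to record a yes/no answer to each of the four questions for every action and then list every "no" as a potential usability problem. The record layout below is an assumption for illustration; the sample answers anticipate the VCR example later in these slides, where question 3 fails for UA 1.

```python
from dataclasses import dataclass

QUESTIONS = (
    "Is the effect of the action the same as the user's goal at that point?",
    "Will users see that the action is available?",
    "Once users have found the correct action, will they know it is the one they need?",
    "After the action is taken, will users understand the feedback they get?",
)


@dataclass
class StepReview:
    action: str
    answers: tuple[bool, bool, bool, bool]  # one yes/no answer per question
    notes: str = ""


def failure_stories(reviews: list[StepReview]) -> list[str]:
    """List every (action, question) answered 'no': potential usability problems."""
    problems = []
    for review in reviews:
        for question, ok in zip(QUESTIONS, review.answers):
            if not ok:
                problems.append(f"{review.action}: {question} {review.notes}".strip())
    return problems


reviews = [
    StepReview("UA 1: Press the 'timed record' button", (True, True, False, True),
               "Clock icon may be read as 'change the time'."),
    StepReview("UA 2: Press digits 1 2 0 0", (True, True, True, True)),
]
for story in failure_stories(reviews):
    print(story)
```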

Cognitive Walkthrough
- For each task, the walkthrough considers:
  - What impact will the interaction have on the user?
  - What processes are required?
  - What learning problems may occur?
- The analysis focuses on goals and knowledge:
  - Does the design lead the user to generate the correct goals?

Steps of a Cognitive Walkthrough
- Define inputs.
- Convene analysts.
- Step through action sequences for each task.
- Record important information.
- Modify the UI.

Define Inputs - Example
- Task: move an application to a new folder or drive.
- Who: Win 2003 user.
- Interface: Win 2003 desktop.
  - Folder containing the desired app is open.
  - Destination folder/drive is visible.
- Action sequence...

Action Sequence
- Move the mouse to the app icon.
- Right mouse button down on the app icon:
  - Result: the app icon highlights.
  - Failure: the user may not know that the right mouse button is the proper one to use.
  - Success?: highlighting shows something happened, but was it the right thing?

Action Sequence (cont'd)
- Release mouse button:
  - Result: a menu appears: Cut, Copy, Create Shortcut, Cancel.
  - Success: the user is prompted for the next action.
- Move mouse to "Cut":
  - Result: the selection highlights.
  - Success: standard GUI menu interaction.

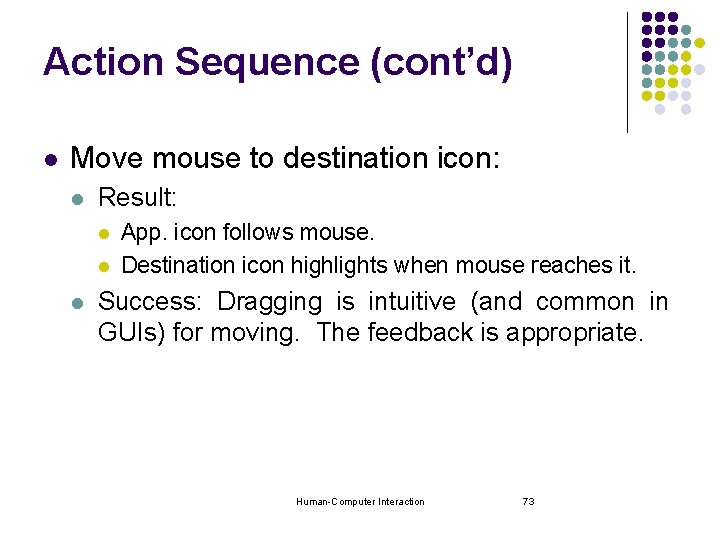

Action Sequence (cont'd)
- Move mouse to destination icon:
  - Result:
    - The app icon follows the mouse.
    - The destination icon highlights when the mouse reaches it.
  - Success: dragging is intuitive (and common in GUIs) for moving. The feedback is appropriate.

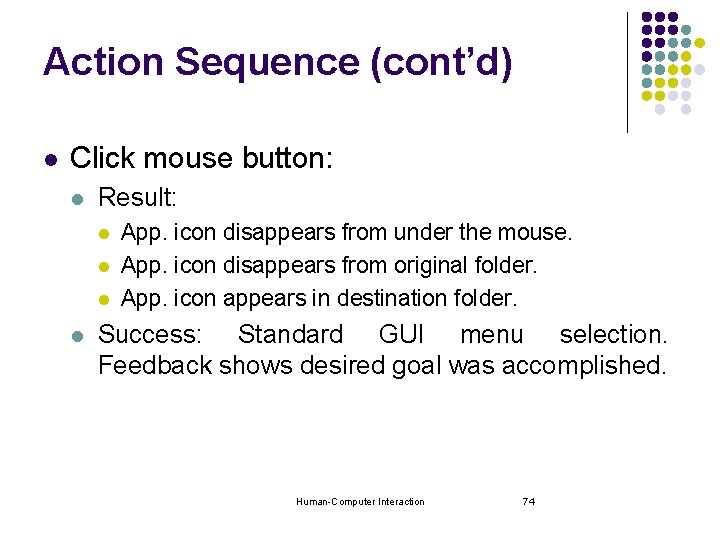

Action Sequence (cont'd)
- Click mouse button:
  - Result:
    - The app icon disappears from under the mouse.
    - The app icon disappears from the original folder.
    - The app icon appears in the destination folder.
  - Success: standard GUI menu selection. The feedback shows the desired goal was accomplished.

Cognitive Walkthrough Example:
- Step 1: identify the task.
- Step 2: identify the action sequence for the task.
  - User action: press the ‘timed record’ button.
  - System display: the display moves to timer mode. A flashing cursor appears after ‘start’.
- Step 3: perform the walkthrough.
  - For each action, answer the following questions:
    - Is the effect of the action the same as the user's goal at that point?
    - Will users see that the action is available?
    - Once users have found the correct action, will they know it is the one they need?
    - After the action is taken, will users understand the feedback they get?
  - This might find a potential usability problem relating to the icon on the ‘timed record’ button.

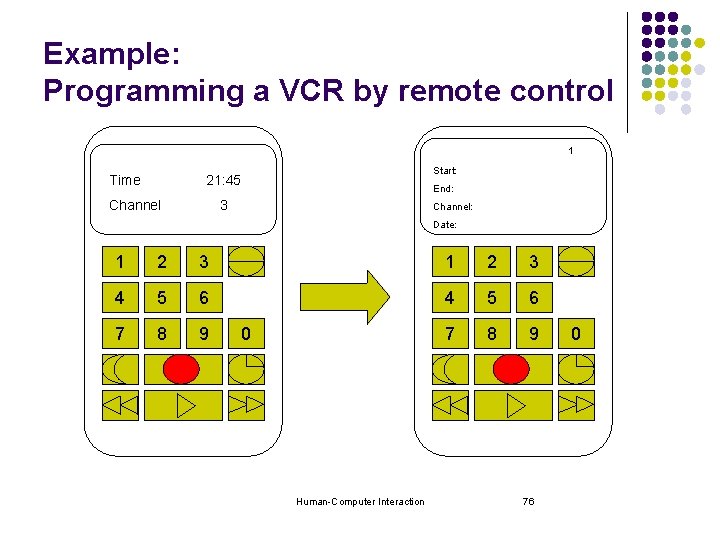

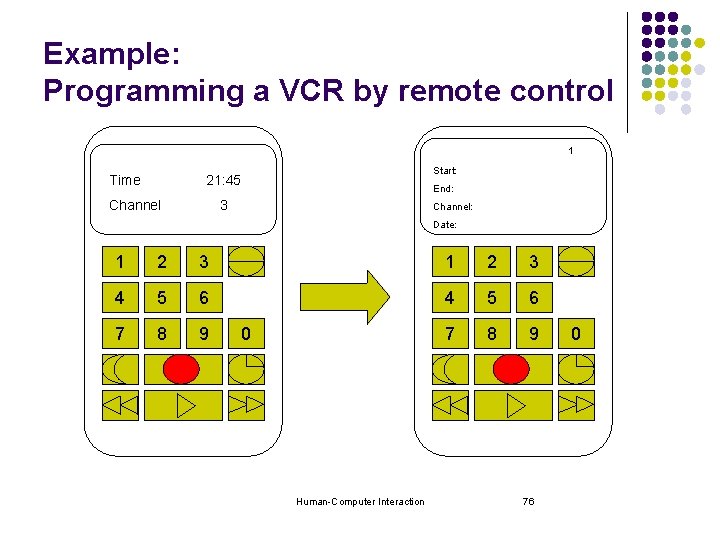

Example: Programming a VCR by remote control
(Figure: remote control with digit keys 0-9 and a display showing Time 21:45, Channel 3, and the fields Start, End, Channel and Date.)

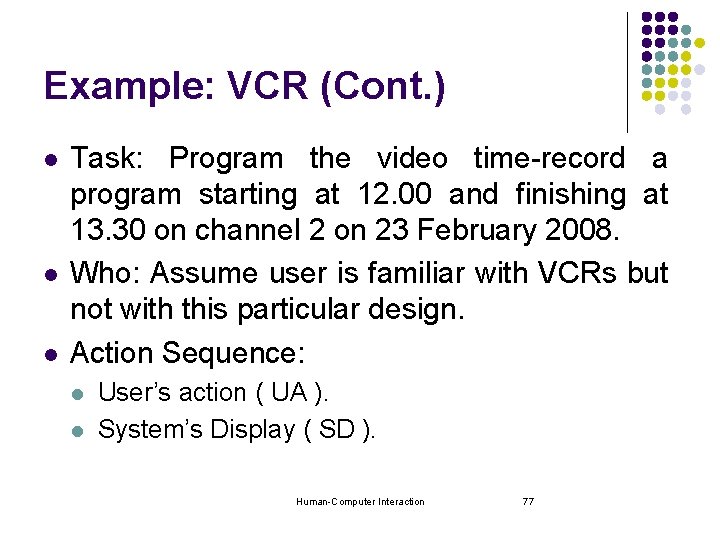

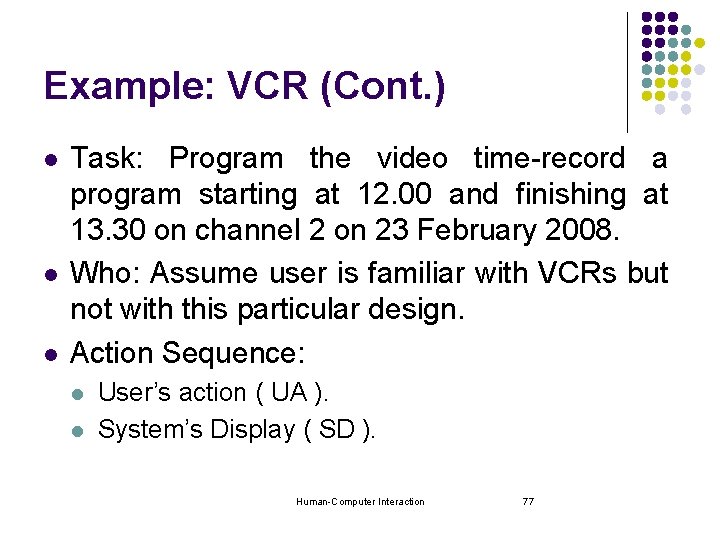

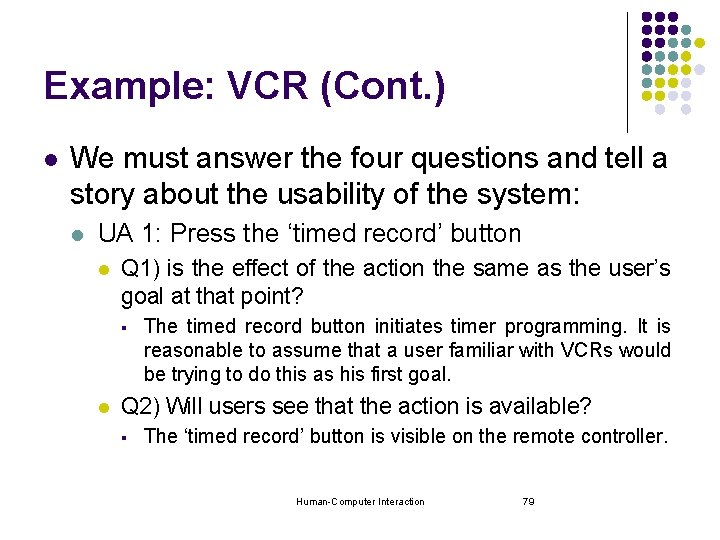

Example: VCR (Cont.)
- Task: program the video to time-record a program starting at 12.00 and finishing at 13.30 on channel 2 on 23 February 2008.
- Who: assume the user is familiar with VCRs but not with this particular design.
- Action sequence:
  - User's action (UA).
  - System's display (SD).

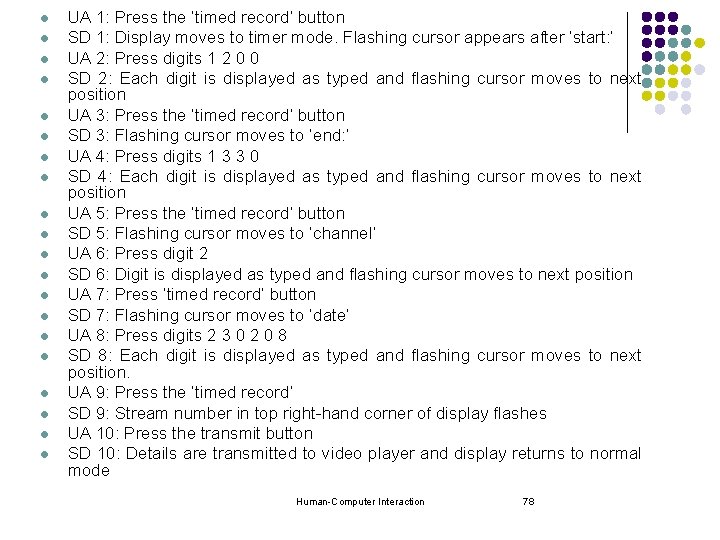

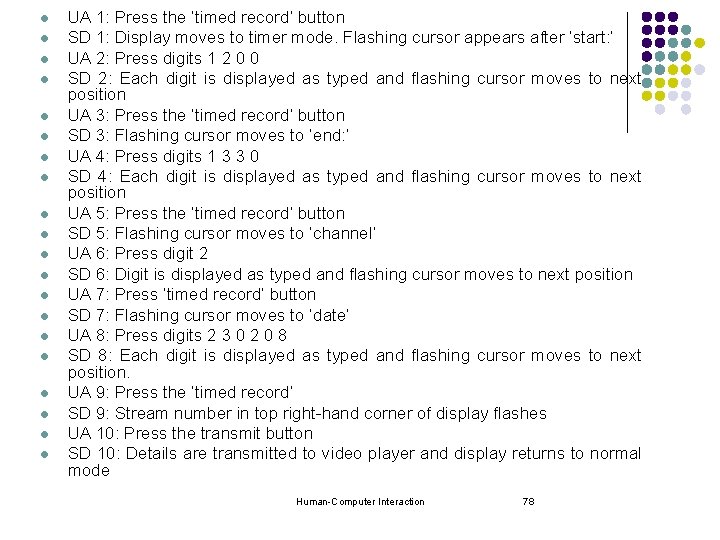

- UA 1: Press the ‘timed record’ button.
- SD 1: Display moves to timer mode. A flashing cursor appears after ‘start:’.
- UA 2: Press digits 1 2 0 0.
- SD 2: Each digit is displayed as typed and the flashing cursor moves to the next position.
- UA 3: Press the ‘timed record’ button.
- SD 3: Flashing cursor moves to ‘end:’.
- UA 4: Press digits 1 3 3 0.
- SD 4: Each digit is displayed as typed and the flashing cursor moves to the next position.
- UA 5: Press the ‘timed record’ button.
- SD 5: Flashing cursor moves to ‘channel’.
- UA 6: Press digit 2.
- SD 6: The digit is displayed as typed and the flashing cursor moves to the next position.
- UA 7: Press the ‘timed record’ button.
- SD 7: Flashing cursor moves to ‘date’.
- UA 8: Press digits 2 3 0 2 0 8.
- SD 8: Each digit is displayed as typed and the flashing cursor moves to the next position.
- UA 9: Press the ‘timed record’ button.
- SD 9: The stream number in the top right-hand corner of the display flashes.
- UA 10: Press the transmit button.
- SD 10: Details are transmitted to the video player and the display returns to normal mode.
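Writing the action sequence as a small state machine is one way to check that the UA/SD pairs are complete and consistent before walking through them. The class below is a hypothetical simulation of the remote's behaviour as described in the sequence, not the real device's logic. It also makes visible that the single ‘timed record’ button is overloaded: it enters timer mode, advances between fields and finishes programming.

```python
class VcrRemote:
    """Simulates the timed-record sequence UA 1 to UA 10 (hypothetical model)."""

    FIELDS = ("start", "end", "channel", "date")

    def __init__(self):
        self.mode = "normal"
        self.field_index = None
        self.values = {name: "" for name in self.FIELDS}
        self.transmitted = None

    def press_timed_record(self):
        if self.mode == "normal":
            self.mode, self.field_index = "timer", 0  # SD 1: cursor after 'start:'
        elif self.mode == "timer" and self.field_index < len(self.FIELDS) - 1:
            self.field_index += 1  # SD 3, 5, 7: cursor moves to the next field
        elif self.mode == "timer":
            self.mode = "ready"  # SD 9: stream number flashes

    def press_digits(self, digits: str):
        if self.mode == "timer":
            self.values[self.FIELDS[self.field_index]] += digits  # SD 2, 4, 6, 8

    def press_transmit(self):
        if self.mode == "ready":
            self.transmitted = dict(self.values)  # SD 10: details sent
            self.mode, self.field_index = "normal", None


remote = VcrRemote()
for digits in ("1200", "1330", "2", "230208"):
    remote.press_timed_record()  # UA 1, 3, 5, 7
    remote.press_digits(digits)  # UA 2, 4, 6, 8
remote.press_timed_record()  # UA 9
remote.press_transmit()  # UA 10
print(remote.transmitted)
```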

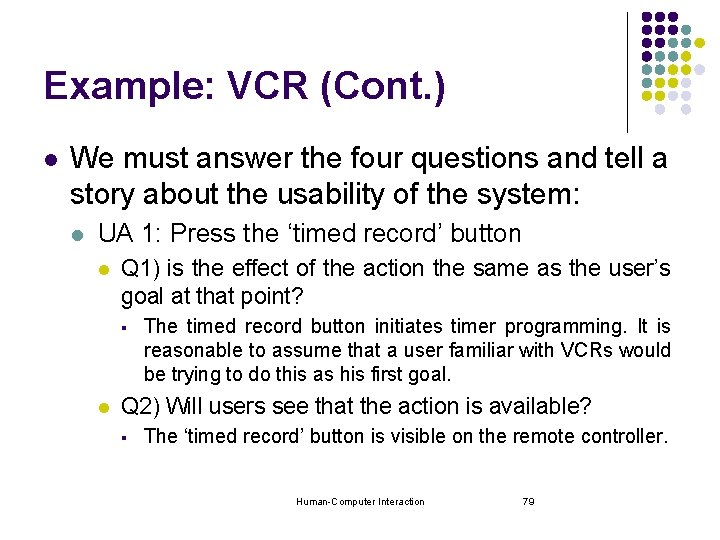

Example: VCR (Cont.)
- We must answer the four questions and tell a story about the usability of the system:
- UA 1: Press the ‘timed record’ button.
  - Q1) Is the effect of the action the same as the user's goal at that point?
    - The timed record button initiates timer programming. It is reasonable to assume that a user familiar with VCRs would be trying to do this as their first goal.
  - Q2) Will users see that the action is available?
    - The ‘timed record’ button is visible on the remote control.

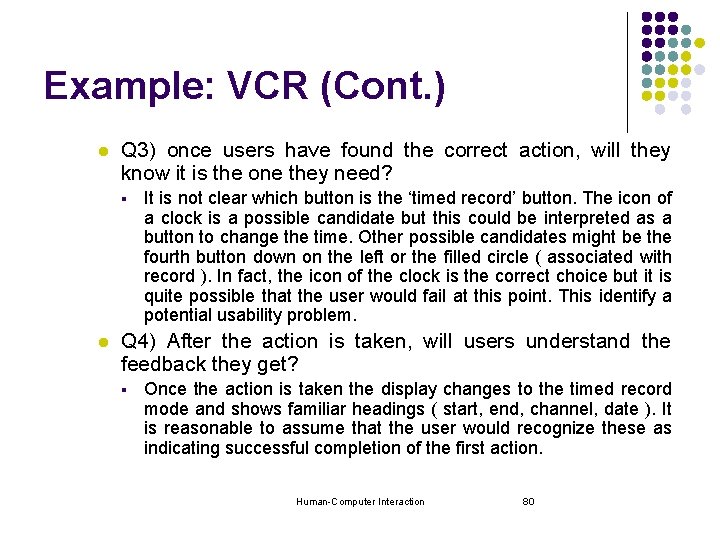

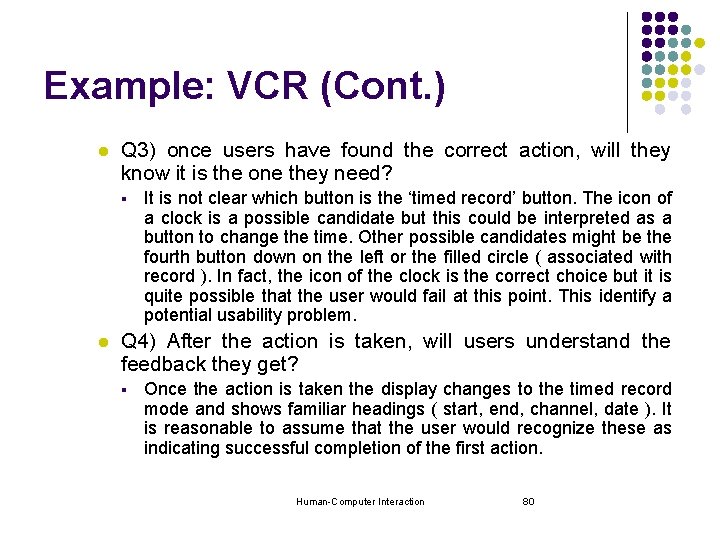

Example: VCR (Cont.)
  - Q3) Once users have found the correct action, will they know it is the one they need?
    - It is not clear which button is the ‘timed record’ button. The icon of a clock is a possible candidate, but it could be interpreted as a button to change the time. Other possible candidates might be the fourth button down on the left or the filled circle (associated with record). In fact, the icon of the clock is the correct choice, but it is quite possible that the user would fail at this point. This identifies a potential usability problem.
  - Q4) After the action is taken, will users understand the feedback they get?
    - Once the action is taken, the display changes to timed record mode and shows familiar headings (start, end, channel, date). It is reasonable to assume that the user would recognize these as indicating successful completion of the first action.

Evaluation through user participation
- Some of the techniques we have considered so far concentrate on evaluating a design or system through analysis by the designer, or an expert evaluator, rather than testing with actual users.
- User participation in evaluation tends to occur in the later stages of development, when there is at least a working prototype of the system in place.

Styles of evaluation
- There are two distinct evaluation styles:
  - Those performed under laboratory conditions.
  - Those conducted in the work environment, or ‘in the field’.

Laboratory Studies
- In the first type of evaluation study, users are taken out of their normal work environment to take part in controlled tests, often in a specialist usability laboratory.
- This approach has a number of benefits and disadvantages.
- A well-equipped usability laboratory may contain sophisticated audio/visual recording and analysis facilities.

Laboratory Studies (Cont.)
- There are some situations where laboratory observation is the only option:
  - e.g. if the system is to be located in a dangerous or remote location, such as a space station.
- Some very constrained single-user tasks may be adequately performed in a laboratory.
- We may want to manipulate the context in order to uncover problems or observe less-used procedures.
- We may want to compare alternative designs within a controlled context.

Field Studies
- The second type of evaluation takes the designer or evaluator out into the user's work environment in order to observe the system in action.
- High levels of ambient noise, greater levels of movement and constant interruptions, such as phone calls, all make field observation difficult.

Field Studies (Cont.)
- The very ‘open’ nature of this situation means that you will observe interactions between systems and between individuals that would have been missed in a laboratory study.
- The context is retained, and you are seeing the user in their ‘natural environment’.