
Human behaviour analysis and interpretation based on the video modality: postures, facial expressions and head movements

A. Benoit, L. Bonnaud, A. Caplier, N. Eveno, V. Girondel, Zakia Hammal, M. Rombaut

Introduction
The « looking at people » domain covers the automatic analysis and interpretation of human actions (gestures, behaviour, expressions…). It needs low-level information (a video analysis step answering « how are things? ») and high-level interpretation (data fusion answering « what is happening? »).

Applications
• multimodal interactions and interfaces (cf. the SIMILAR NoE)
• mixed reality systems
• smart rooms
• smart surveillance systems (hypovigilance detection, detection of distress situations: elderly people surveillance, bus surveillance)
• e-learning assistance

Outline
1. Global posture recognition (which posture?)
2. Facial expressions recognition (which expression?)
3. Head motion analysis and interpretation (which head motion?)
4. Conclusion

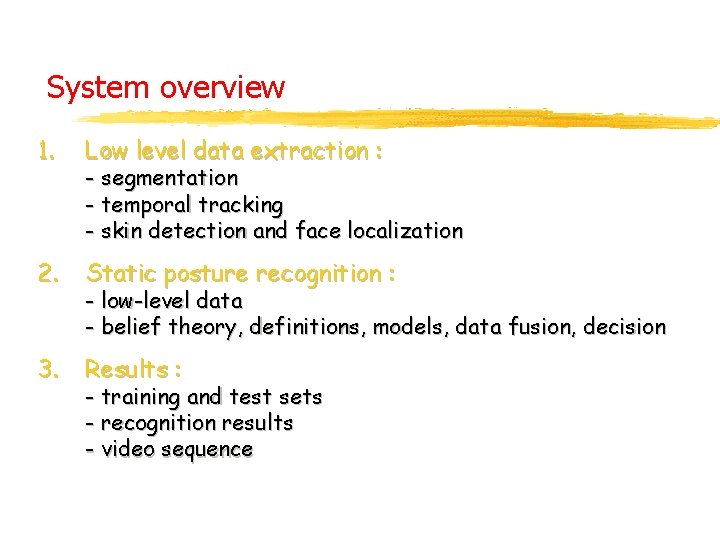

System overview
1. Low-level data extraction: segmentation, temporal tracking, skin detection and face localization, low-level data
2. Static posture recognition: belief theory (definitions, models, data fusion, decision)
3. Results: training and test sets, recognition results, video sequence

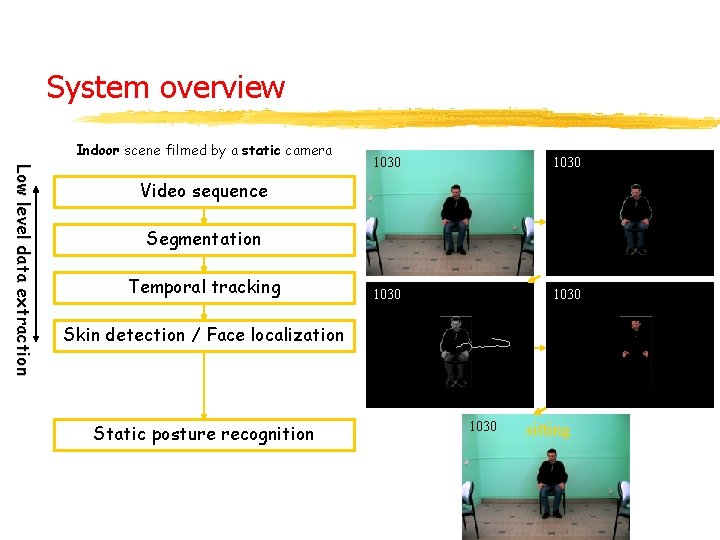

System overview
Indoor scene filmed by a static camera. Processing chain: video sequence -> low-level data extraction (segmentation, temporal tracking, skin detection / face localization) -> static posture recognition (example: frame 1030, recognized posture: sitting).

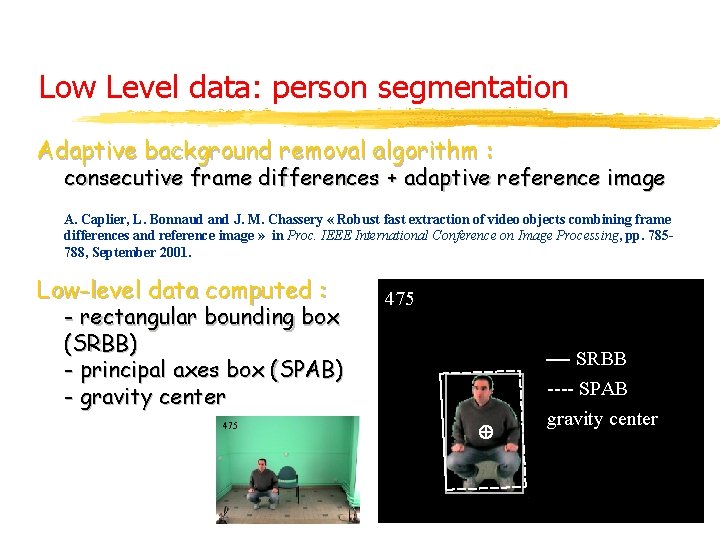

Low-level data: person segmentation
Adaptive background removal algorithm: consecutive frame differences combined with an adaptive reference image (A. Caplier, L. Bonnaud and J. M. Chassery, « Robust fast extraction of video objects combining frame differences and reference image », in Proc. IEEE International Conference on Image Processing, pp. 785-788, September 2001).
Low-level data computed:
- rectangular bounding box (SRBB)
- principal axes box (SPAB)
- gravity center
(Figure: frame 475 with the SRBB, the SPAB and the gravity center.)
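The segmentation step and the low-level data above can be sketched in NumPy. This is a simplified illustration, not the cited algorithm: the thresholds `t_diff` and `t_ref`, the update rate `alpha`, the OR combination of the two tests and the running-average update are all assumptions; the principal axes box is computed here from the eigenvectors of the pixel-coordinate covariance.

```python
import numpy as np


def segment(frame, prev_frame, reference, t_diff=15.0, t_ref=25.0, alpha=0.05):
    """Simplified foreground detection (hypothetical thresholds and update rate).

    A pixel is foreground if it differs from the previous frame or from the
    adaptive reference image; the reference is updated only on background pixels.
    """
    frame = np.asarray(frame, dtype=np.float64)
    moving = np.abs(frame - prev_frame) > t_diff      # consecutive frame difference
    changed = np.abs(frame - reference) > t_ref       # difference with the reference image
    mask = moving | changed
    # Running-average update of the reference, restricted to background pixels.
    new_reference = np.where(mask, reference, (1.0 - alpha) * reference + alpha * frame)
    return mask, new_reference


def low_level_data(mask):
    """Return the rectangular bounding box, gravity center and principal axes box."""
    rows, cols = np.nonzero(mask)
    srbb = tuple(int(v) for v in (rows.min(), cols.min(), rows.max(), cols.max()))
    pts = np.stack([rows, cols], axis=1).astype(np.float64)
    center = pts.mean(axis=0)                         # gravity center (row, col)
    # Principal axes: eigenvectors of the covariance of the pixel coordinates.
    _, axes = np.linalg.eigh(np.cov(pts, rowvar=False))
    proj = (pts - center) @ axes                      # coordinates in the axes frame
    spab = (axes, proj.min(axis=0), proj.max(axis=0)) # axes and extents along each axis
    return srbb, center, spab


# Usage on a synthetic frame: a bright 10 x 4 "person" on a black background.
background = np.zeros((20, 30))
frame = background.copy()
frame[5:15, 10:14] = 200.0
mask, reference = segment(frame, background, background)
srbb, center, spab = low_level_data(mask)
print(srbb, center)   # SRBB (5, 10, 14, 13), gravity center (9.5, 11.5)
```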

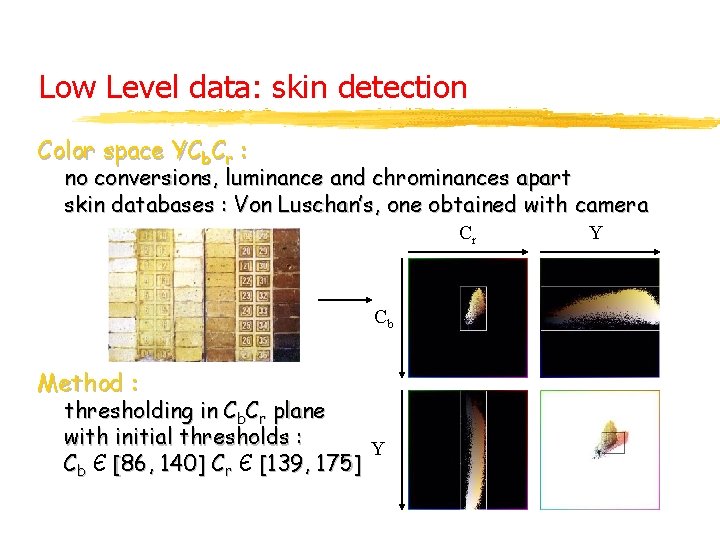

Low-level data: skin detection
Color space YCbCr: no conversion needed, luminance and chrominance are separated.
Skin databases: Von Luschan's, and one acquired with the camera.
Method: thresholding in the CbCr plane with the initial thresholds Cb ∈ [86, 140] and Cr ∈ [139, 175].
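A minimal sketch of the CbCr thresholding, using the initial intervals quoted above. Treating the bounds as inclusive and ignoring Y entirely are assumptions; the function name `skin_mask` is hypothetical.

```python
import numpy as np

# Initial skin intervals quoted on the slide (8-bit Cb and Cr values).
CB_RANGE = (86, 140)
CR_RANGE = (139, 175)


def skin_mask(cb, cr, cb_range=CB_RANGE, cr_range=CR_RANGE):
    """Label a pixel as skin when its (Cb, Cr) pair lies in both intervals.

    The luminance Y is deliberately ignored; bounds are treated as inclusive.
    """
    cb = np.asarray(cb)
    cr = np.asarray(cr)
    return ((cb >= cb_range[0]) & (cb <= cb_range[1])
            & (cr >= cr_range[0]) & (cr <= cr_range[1]))


# Usage: two pixels; only the first one falls inside both intervals.
print(skin_mask([[100, 50]], [[150, 150]]))
```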

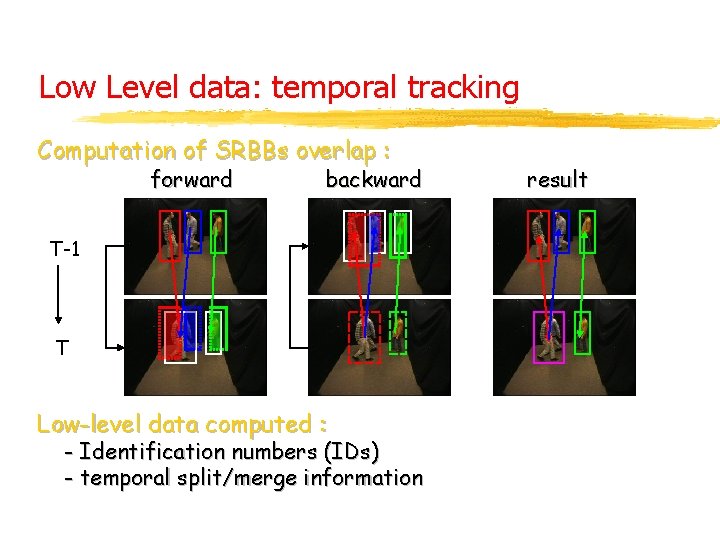

Low-level data: temporal tracking
Computation of the overlap between SRBBs, forward and backward, between frames T-1 and T.
Low-level data computed:
- identification numbers (IDs)
- temporal split/merge information
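The forward/backward overlap idea can be sketched as follows. Assumptions: boxes are `(top, left, bottom, right)` tuples, any positive intersection counts as an overlap, a one-to-one match keeps its ID, and any other case (new object, split part, merge result) receives a fresh ID. The names `overlap` and `track` are hypothetical.

```python
def overlap(a, b):
    """Intersection area of two SRBBs given as (top, left, bottom, right), inclusive."""
    h = min(a[2], b[2]) - max(a[0], b[0]) + 1
    w = min(a[3], b[3]) - max(a[1], b[1]) + 1
    return max(h, 0) * max(w, 0)


def track(prev_boxes, boxes, next_id):
    """Match the SRBBs of frame T with those of frame T-1.

    prev_boxes: {id: box} at T-1; boxes: list of boxes at T; next_id: first free ID.
    Returns ({id: box} at T, list of split/merge events, updated next_id).
    """
    # Backward pass: which T-1 boxes does each T box overlap?
    backward = [[pid for pid, pb in prev_boxes.items() if overlap(b, pb) > 0] for b in boxes]
    # Forward pass: which T boxes does each T-1 box overlap?
    forward = {pid: [j for j, b in enumerate(boxes) if overlap(b, pb) > 0]
               for pid, pb in prev_boxes.items()}
    ids, events = {}, []
    for j, parents in enumerate(backward):
        if len(parents) == 1 and len(forward[parents[0]]) == 1:
            ids[j] = parents[0]                 # one-to-one match: keep the ID
        else:
            ids[j] = next_id                    # new object, split part or merge result
            next_id += 1
            if len(parents) > 1:
                events.append(("merge", tuple(parents), ids[j]))
    for pid, children in forward.items():
        if len(children) > 1:
            events.append(("split", pid, tuple(ids[j] for j in children)))
    return {ids[j]: b for j, b in enumerate(boxes)}, events, next_id


# Usage: two people at T-1 whose boxes merge into a single box at T.
current, events, next_id = track({1: (0, 0, 10, 10), 2: (12, 0, 20, 10)}, [(0, 0, 20, 10)], 3)
print(current, events)   # {3: (0, 0, 20, 10)} [('merge', (1, 2), 3)]
```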

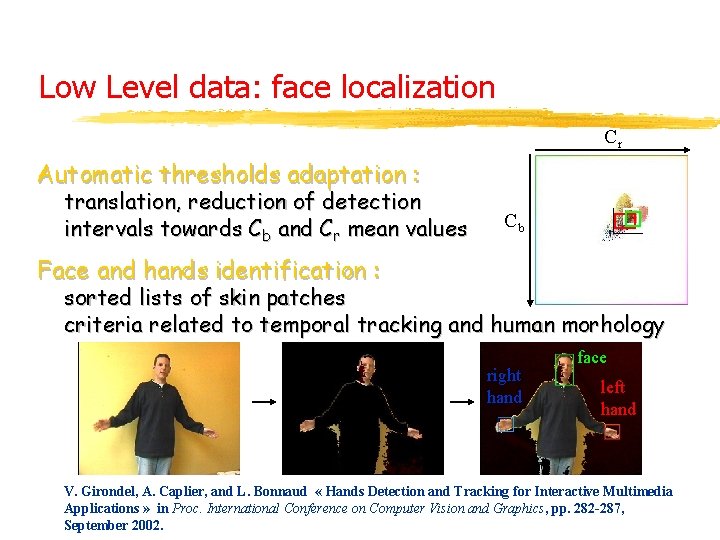

Low-level data: face localization
Automatic threshold adaptation: translation and reduction of the detection intervals towards the Cb and Cr mean values.
Face and hands identification: sorted lists of skin patches, with criteria related to temporal tracking and human morphology (labels: face, right hand, left hand).
V. Girondel, A. Caplier and L. Bonnaud, « Hands Detection and Tracking for Interactive Multimedia Applications », in Proc. International Conference on Computer Vision and Graphics, pp. 282-287, September 2002.
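One way to read "translation and reduction of the detection intervals towards the mean values" is sketched below. The full re-centring on the mean, the shrink factor and the minimum width are hypothetical choices, not the published rule.

```python
import numpy as np


def adapt_interval(interval, skin_values, shrink=0.9, min_width=10.0):
    """Hypothetical adaptation of one chrominance detection interval.

    The interval is translated so that it is centred on the mean of the values
    currently detected as skin, and its width is reduced by `shrink`
    (without going below `min_width`).
    """
    lo, hi = interval
    mean = float(np.mean(skin_values))
    half_width = max((hi - lo) * shrink, min_width) / 2.0
    return (mean - half_width, mean + half_width)


# Usage: adapt the initial Cb interval to the Cb values of the detected skin patches.
cb_interval = adapt_interval((86, 140), np.array([100, 110, 120]))
print(cb_interval)   # centred on 110, width 48.6
```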


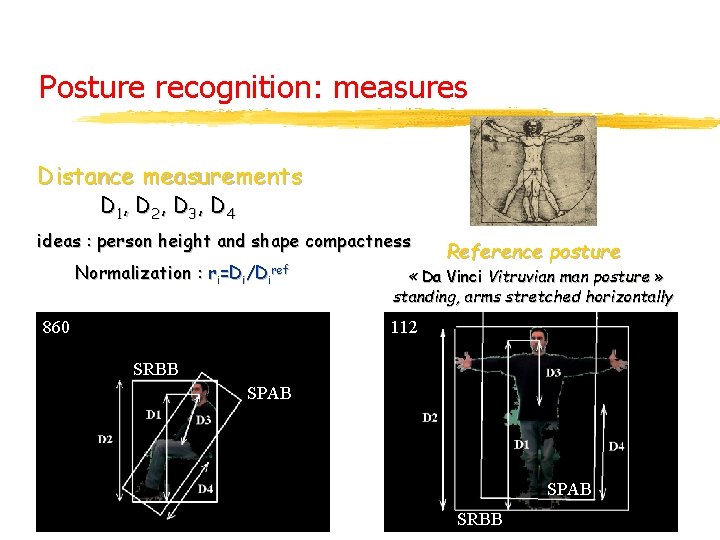

Posture recognition: measures
Distance measurements D1, D2, D3, D4, defined on the SRBB and SPAB (ideas: person height and shape compactness).
Normalization: $r_i = D_i / D_i^{\mathrm{ref}}$, where the reference posture is the « Da Vinci Vitruvian man posture »: standing, arms stretched horizontally.

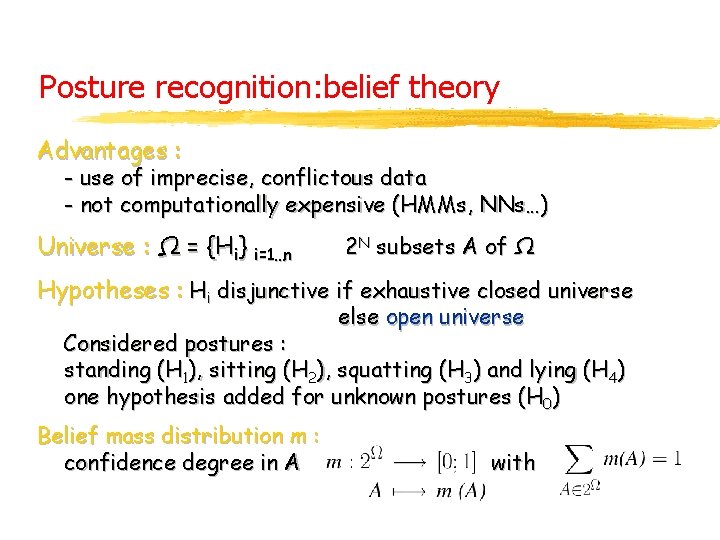

Posture recognition: belief theory
Advantages:
- handles imprecise and conflicting data
- not computationally expensive (compared with HMMs, NNs…)
Universe: $\Omega = \{H_i\}_{i=1 \ldots N}$, with $2^N$ subsets $A$ of $\Omega$.
Hypotheses: the $H_i$ are mutually exclusive; if they are also exhaustive, the universe is closed, otherwise it is open.
Considered postures: standing ($H_1$), sitting ($H_2$), squatting ($H_3$) and lying ($H_4$); one hypothesis is added for unknown postures ($H_0$).
Belief mass distribution $m$: $m(A)$ is the confidence degree in $A$, with $\sum_{A \subseteq \Omega} m(A) = 1$.

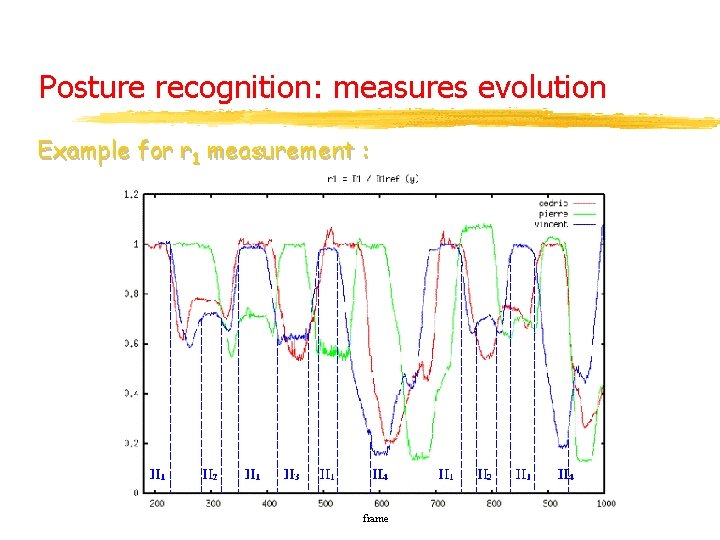

Posture recognition: measures evolution
Example: evolution of the $r_1$ measurement over the frames of a sequence.

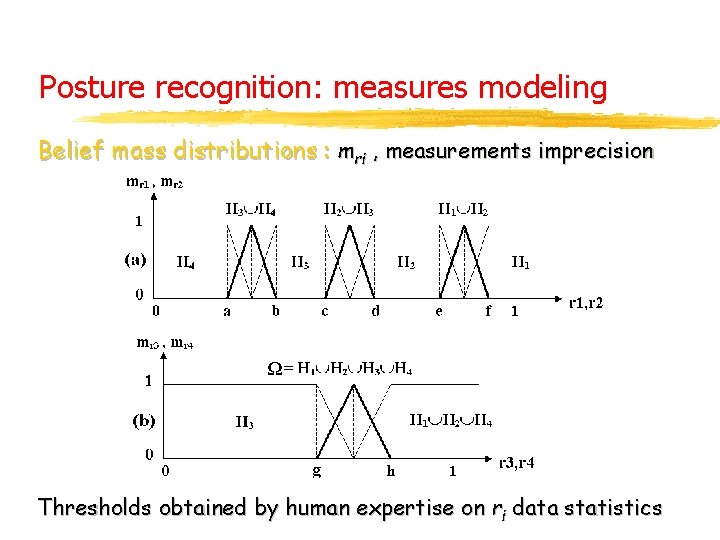

Posture recognition: measures modeling
Belief mass distributions $m_{r_i}$ model the imprecision of the measurements. The thresholds are obtained by human expertise on statistics of the $r_i$ data.

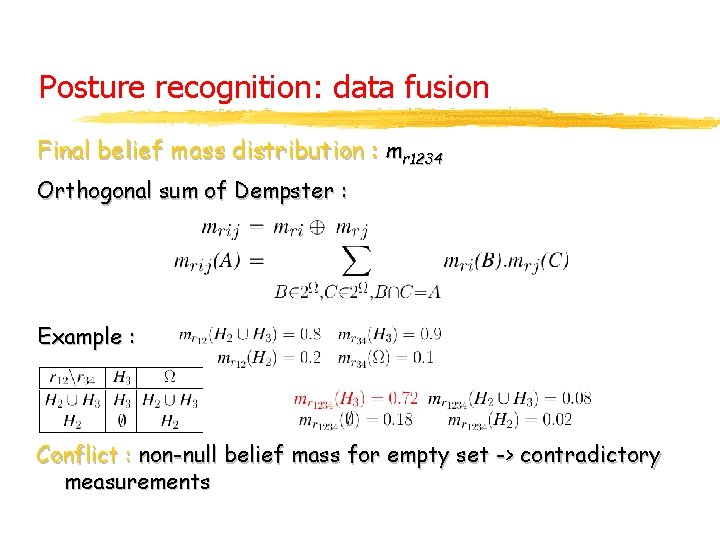

Posture recognition: data fusion
The final belief mass distribution $m_{r_{1234}}$ is obtained by combining $m_{r_1}, \ldots, m_{r_4}$ with Dempster's orthogonal sum (figure: example).
Conflict: a non-null belief mass on the empty set reveals contradictory measurements.
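A minimal sketch of the fusion step, assuming the unnormalized form of the orthogonal sum (the product $m_1(B)\,m_2(C)$ goes to $B \cap C$, and whatever lands on the empty set is kept as conflict, as described above). Mass distributions are dictionaries keyed by frozensets of hypotheses; the example masses are invented for illustration. Since this combination is associative and commutative, the four measurements can be fused pairwise with `reduce`.

```python
from functools import reduce
from itertools import product


def conjunctive_sum(m1, m2):
    """Combine two mass distributions keyed by frozensets of hypotheses.

    The product m1(B) * m2(C) is given to B & C. No normalization is applied,
    so the mass that lands on the empty set measures the conflict.
    """
    out = {}
    for (b, mb), (c, mc) in product(m1.items(), m2.items()):
        a = b & c
        out[a] = out.get(a, 0.0) + mb * mc
    return out


def fuse(*masses):
    """Fuse any number of distributions (e.g. m_r1 ... m_r4) pairwise."""
    return reduce(conjunctive_sum, masses)


# Hypothetical distributions for two measurements (H1 = standing, H2 = sitting).
H1, H2 = frozenset({"H1"}), frozenset({"H2"})
m_r1 = {H1: 0.7, H1 | H2: 0.3}
m_r2 = {H1: 0.6, H2: 0.4}
fused = fuse(m_r1, m_r2)
print(fused)  # masses: {H1}: 0.60, {H2}: 0.12, {} (conflict): 0.28, up to rounding
```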


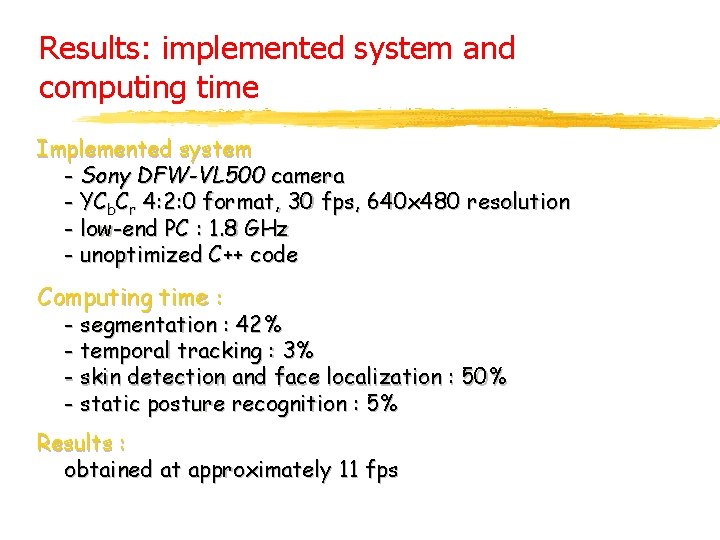

Results: implemented system and computing time
Implemented system:
- Sony DFW-VL500 camera
- YCbCr 4:2:0 format, 30 fps, 640 × 480 resolution
- low-end PC: 1.8 GHz
- unoptimized C++ code
Computing time:
- segmentation: 42%
- temporal tracking: 3%
- skin detection and face localization: 50%
- static posture recognition: 5%
Results are obtained at approximately 11 fps.
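Assuming the percentages are shares of the per-frame processing time at the reported 11 fps, the time budget per frame is about 91 ms, of which roughly 45 ms go to skin detection and face localization and 38 ms to segmentation. The arithmetic:

```python
# Per-stage share of the processing time, as listed on the slide.
shares = {
    "segmentation": 0.42,
    "temporal tracking": 0.03,
    "skin detection and face localization": 0.50,
    "static posture recognition": 0.05,
}
fps = 11.0                      # overall throughput reported on the slide
frame_ms = 1000.0 / fps         # time budget per processed frame
per_stage_ms = {stage: share * frame_ms for stage, share in shares.items()}

for stage, ms in per_stage_ms.items():
    print(f"{stage}: {ms:.1f} ms")
print(f"total: {frame_ms:.1f} ms per frame")
```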

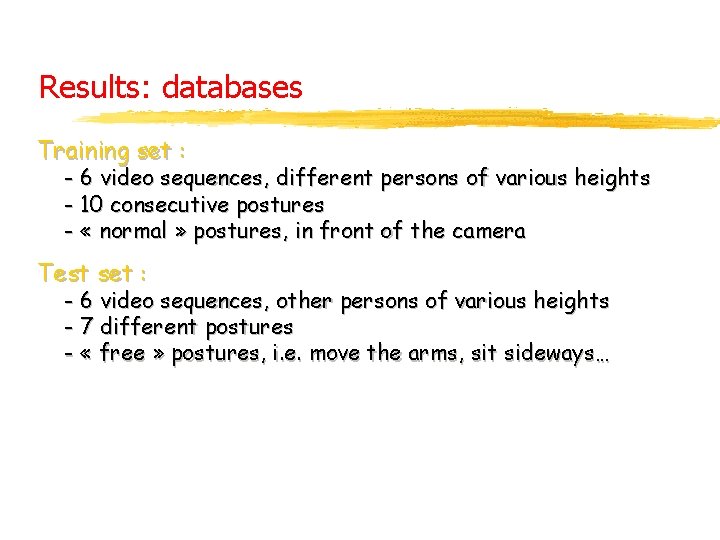

Results: databases
Training set:
- 6 video sequences, different persons of various heights
- 10 consecutive postures
- « normal » postures, in front of the camera
Test set:
- 6 video sequences, other persons of various heights
- 7 different postures
- « free » postures, i.e. moving the arms, sitting sideways…

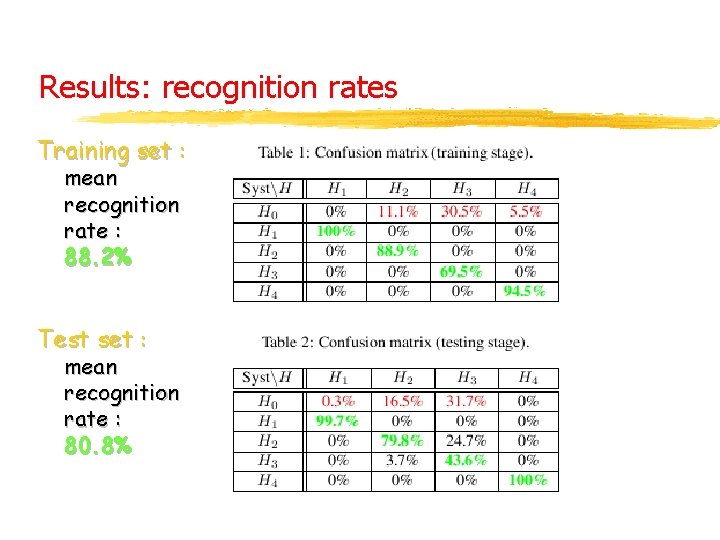

Results: recognition rates
- Training set: mean recognition rate of 88.2%
- Test set: mean recognition rate of 80.8%

Results: video example


Facial expressions analysis
1. Assumptions
2. Facial features segmentation: low-level data
3. Facial expressions recognition: high-level interpretation
4. Facial expression recognition based on audio: a step towards a multimodal system

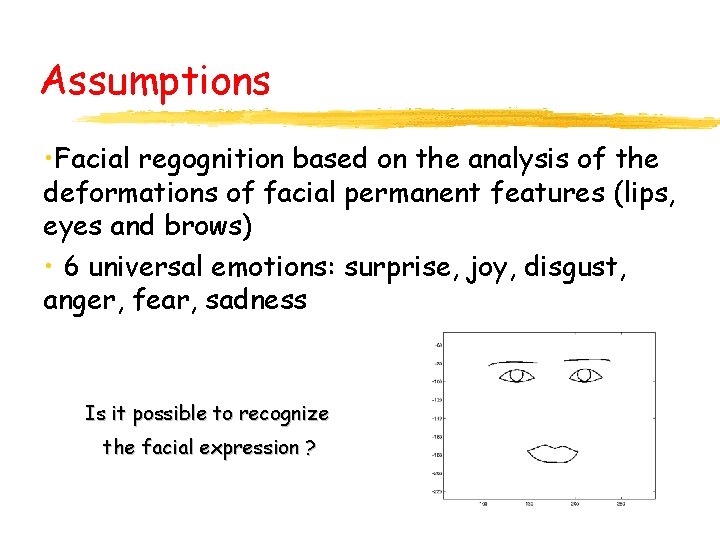

Assumptions
• Facial expression recognition based on the analysis of the deformations of the permanent facial features (lips, eyes and brows)
• 6 universal emotions: surprise, joy, disgust, anger, fear, sadness
Is it possible to recognize the facial expression?

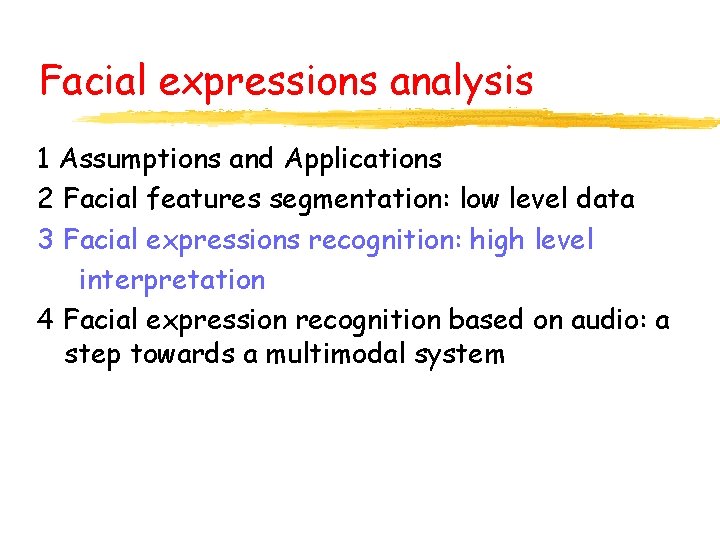

Facial expressions analysis
1. Assumptions and Applications
2. Facial features segmentation: low-level data
3. Facial expressions recognition: high-level interpretation
4. Facial expression recognition based on audio: a step towards a multimodal system

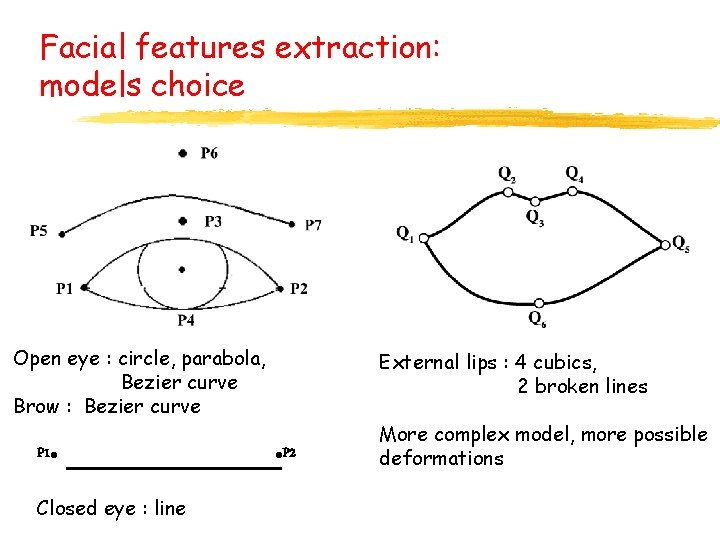

Facial features extraction: models choice
- Open eye: circle, parabola, Bézier curve
- Brow: Bézier curve
- Closed eye: line
- External lips: 4 cubics, 2 broken lines
The more complex the model, the more deformations it allows.
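To illustrate the Bézier model used for the brow, here is a De Casteljau evaluation of a Bézier curve. The slide does not say how many control points are used; the three-point (quadratic) brow below is a hypothetical example, and moving its middle control point is enough to bend the curve.

```python
def bezier_point(control_points, t):
    """Evaluate a Bézier curve at t in [0, 1] with De Casteljau's algorithm.

    Works for any number of control points (3 for a quadratic curve,
    4 for a cubic one).
    """
    pts = [(float(x), float(y)) for x, y in control_points]
    while len(pts) > 1:
        # Linear interpolation between consecutive points, one level at a time.
        pts = [((1 - t) * x0 + t * x1, (1 - t) * y0 + t * y1)
               for (x0, y0), (x1, y1) in zip(pts, pts[1:])]
    return pts[0]


def bezier_curve(control_points, samples=20):
    """Sample the curve as a polyline of samples + 1 points."""
    return [bezier_point(control_points, i / samples) for i in range(samples + 1)]


# Hypothetical brow: two end points and one middle control point (image coordinates, y down).
brow = [(0, 10), (15, 0), (30, 10)]
print(bezier_point(brow, 0.5))      # (15.0, 5.0)
```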

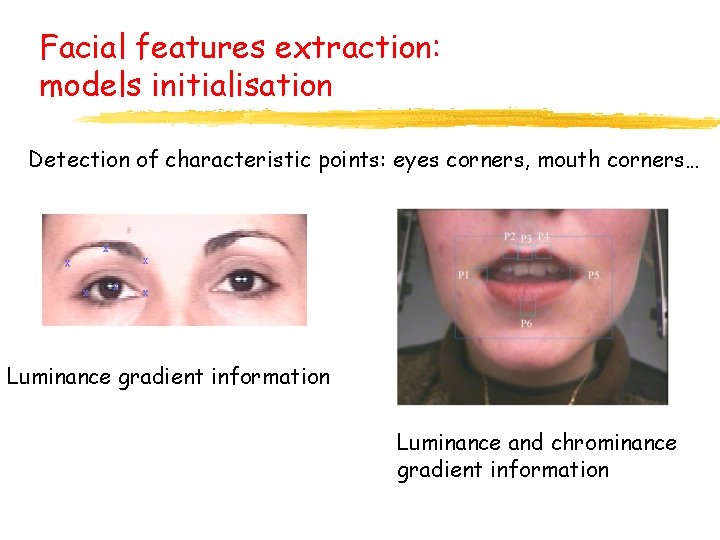

Facial features extraction: models initialisation
Detection of characteristic points (eye corners, mouth corners…) using luminance gradient information, or luminance and chrominance gradient information.

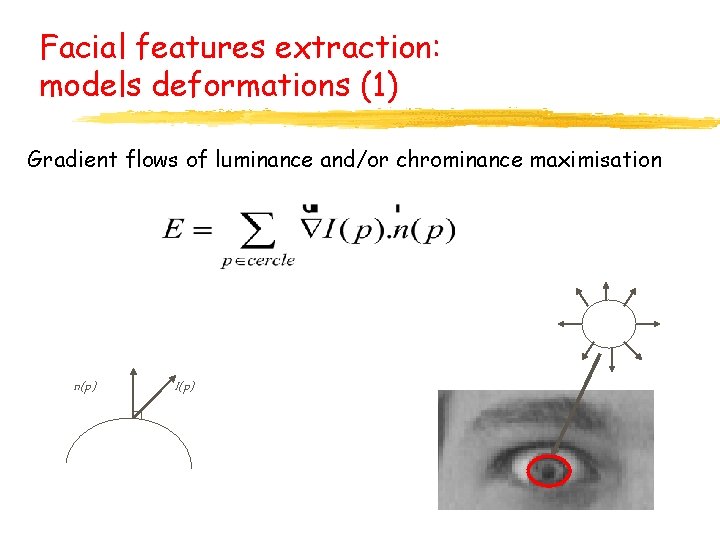

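Facial features extraction: models deformations (1)
The models are deformed so as to maximise the gradient flow of luminance and/or chrominance through the model contour $C$: $\Phi = \int_{C} \nabla I(p) \cdot n(p)\, dp$, where $I$ is the luminance or chrominance image and $n(p)$ the unit normal to $C$ at point $p$.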

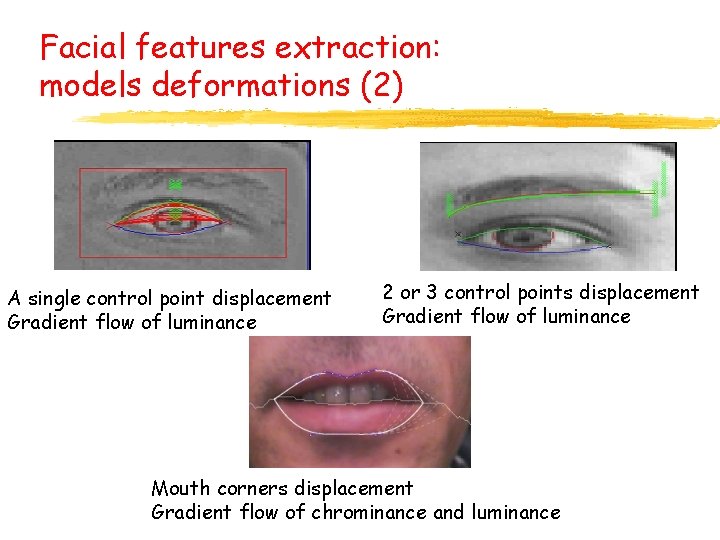

Facial features extraction: models deformations (2)
- displacement of a single control point, using the gradient flow of luminance
- displacement of 2 or 3 control points, using the gradient flow of luminance
- displacement of the mouth corners, using the gradient flow of chrominance and luminance
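The single control point case can be sketched as a search that moves one point of a polyline model and keeps the position maximising a discretized gradient flow. Assumptions: the flow is averaged over segments and sampled at each segment's rounded midpoint, the normal is $(-dy, dx)/\lVert\cdot\rVert$ (pointing down for a left-to-right segment, since $y$ grows downwards), and only vertical displacements are tried. The names `gradient_flow` and `displace_point` are hypothetical.

```python
import numpy as np


def gradient_flow(image, curve):
    """Mean flow of the image gradient through a polyline [(x, y), ...].

    Each segment contributes grad(I) . n sampled at its rounded midpoint,
    with n the unit normal (-dy, dx) / length.
    """
    gy, gx = np.gradient(image.astype(np.float64))   # derivatives along rows (y), then columns (x)
    flows = []
    for (x0, y0), (x1, y1) in zip(curve, curve[1:]):
        dx, dy = x1 - x0, y1 - y0
        length = np.hypot(dx, dy)
        if length == 0:
            continue
        nx, ny = -dy / length, dx / length
        xm, ym = int(round((x0 + x1) / 2)), int(round((y0 + y1) / 2))
        flows.append(gx[ym, xm] * nx + gy[ym, xm] * ny)
    return float(np.mean(flows))


def displace_point(image, curve, index, offsets):
    """Move curve[index] vertically by each offset and keep the best position."""
    best_curve, best_flow = list(curve), -np.inf
    x, y = curve[index]
    for dy in offsets:
        candidate = list(curve)
        candidate[index] = (x, y + dy)
        flow = gradient_flow(image, candidate)
        if flow > best_flow:
            best_curve, best_flow = candidate, flow
    return best_curve, best_flow


# Synthetic image: dark upper half, bright lower half (horizontal edge at y = 10).
img = np.zeros((20, 20))
img[10:, :] = 255.0
curve, flow = displace_point(img, [(2, 10), (10, 13), (17, 10)], 1, range(-5, 6))
print(curve[1], flow)   # (10, 10) 127.5
```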

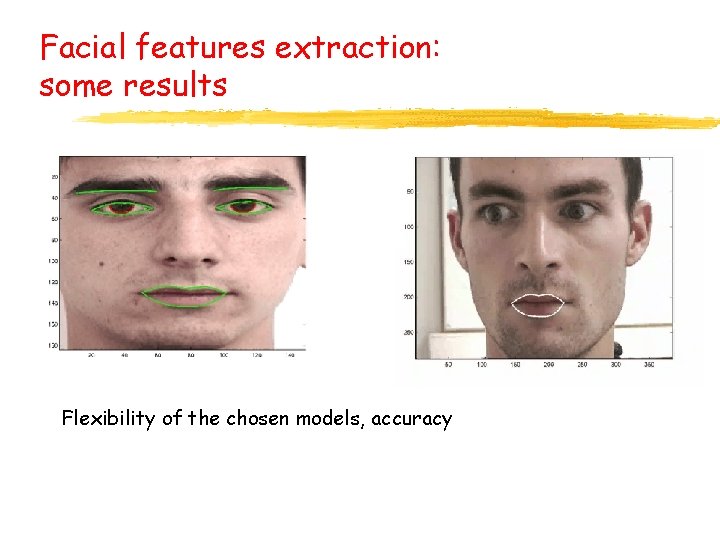

Facial features extraction: some results
The chosen models are flexible and the extraction is accurate.


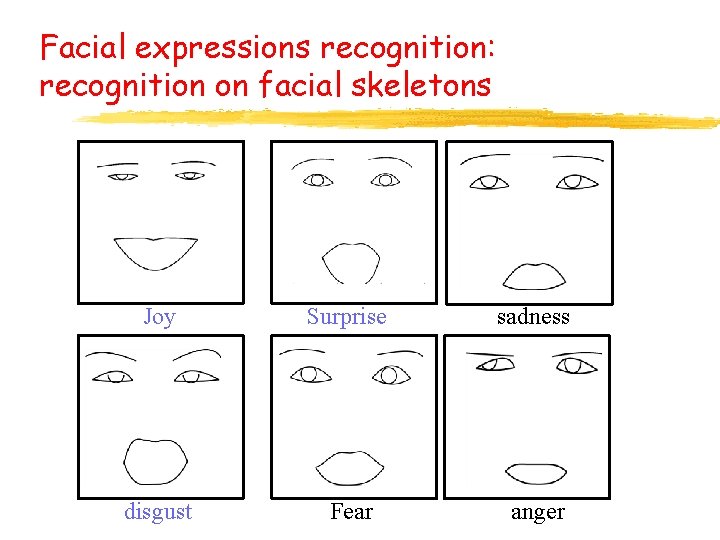

Facial expressions recognition: recognition on facial skeletons
Examples: joy, surprise, sadness, disgust, fear, anger.

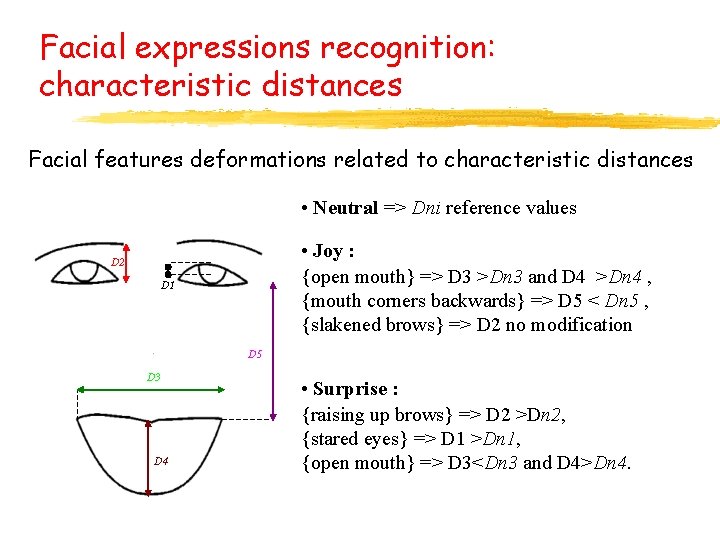

Facial expressions recognition: characteristic distances
Facial feature deformations are related to five characteristic distances D1 to D5.
• Neutral => reference values Dni
• Joy: {open mouth} => D3 > Dn3 and D4 > Dn4; {mouth corners pulled back} => D5 < Dn5; {slackened brows} => D2 unchanged
• Surprise: {raised brows} => D2 > Dn2; {wide-open eyes} => D1 > Dn1; {open mouth} => D3 < Dn3 and D4 > Dn4

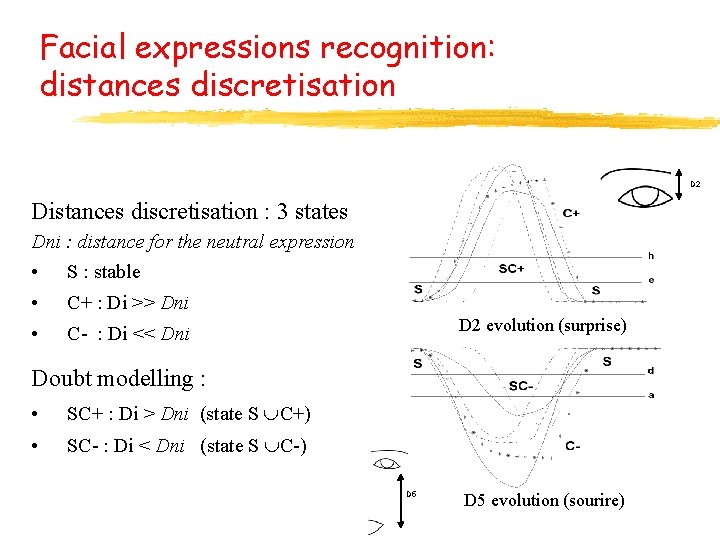

Facial expressions recognition: distances discretisation
Each distance is discretised into 3 states, with Dni the distance for the neutral expression:
• S: stable (Di ≈ Dni)
• C+: Di >> Dni
• C-: Di << Dni
Doubt modelling:
• SC+: Di > Dni (doubt between S and C+)
• SC-: Di < Dni (doubt between S and C-)
Figures: evolution of D2 (surprise) and of D5 (smile).

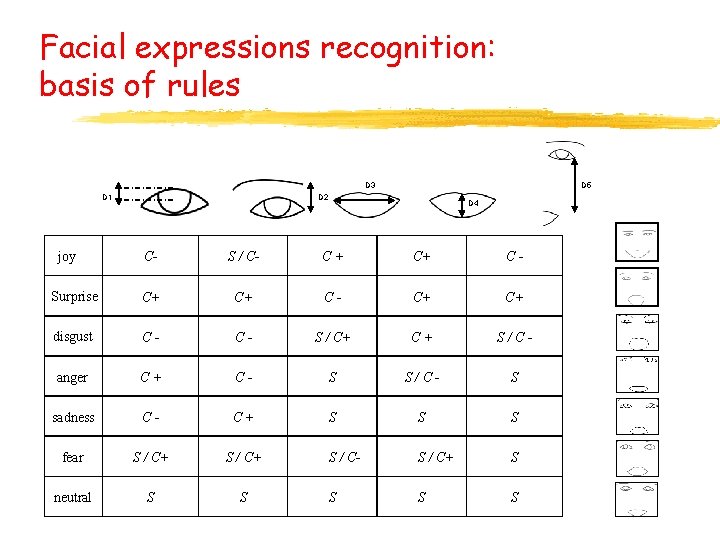

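Facial expressions recognition: basis of rules (accepted states of D1 to D5 for each expression)

| Expression | D1 | D2 | D3 | D4 | D5 |
|---|---|---|---|---|---|
| joy | C- | S/C- | C+ | C+ | C- |
| surprise | C+ | C+ | C- | C+ | C+ |
| disgust | C- | C- | S/C+ | C+ | S/C- |
| anger | C+ | C- | S | S/C- | S |
| sadness | C- | C+ | S | S | S |
| fear | S/C+ | S/C- | S/C+ | S | ? |
| neutral | S | S | S | S | S |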

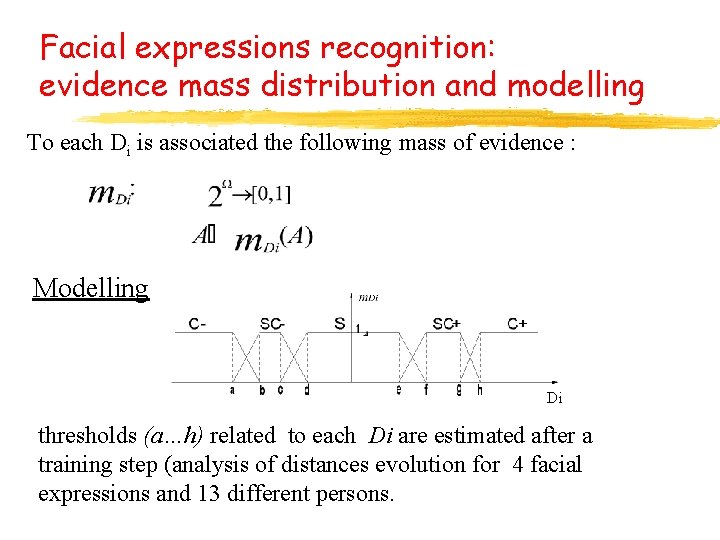

Facial expressions recognition: evidence mass distribution and modelling
A mass of evidence over the symbolic states is associated with each Di (figure).
Modelling: the thresholds (a…h) of each Di are estimated after a training step (analysis of the distance evolution for 4 facial expressions and 13 different persons).
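The mass figure is not reproduced here. One plausible shape, assumed below, is piecewise-linear in the measured distance: the mass moves from C- to SC- on [a, b], from SC- to S on [c, d], from S to SC+ on [e, f] and from SC+ to C+ on [g, h], with a single state receiving all the mass in between. The threshold values in the usage line are invented; only the use of eight thresholds a…h comes from the slide.

```python
# Symbolic states ordered from "much smaller" to "much larger" than the neutral value;
# "SC-" and "SC+" are the doubt states {S, C-} and {S, C+}.
STATES = ("C-", "SC-", "S", "SC+", "C+")


def state_masses(x, thresholds):
    """Piecewise-linear mass over the symbolic states of one distance.

    x: the measured distance (in the same unit as the thresholds).
    thresholds: strictly increasing (a, b, c, d, e, f, g, h).
    """
    t = list(thresholds)
    if len(t) != 8 or any(p >= q for p, q in zip(t, t[1:])):
        raise ValueError("expected 8 strictly increasing thresholds")
    for k in range(4):
        lo, hi = t[2 * k], t[2 * k + 1]
        if x <= lo:
            return {STATES[k]: 1.0}                   # plateau: one state gets all the mass
        if x < hi:
            w = (x - lo) / (hi - lo)                  # linear transition between two states
            return {STATES[k]: 1.0 - w, STATES[k + 1]: w}
    return {STATES[4]: 1.0}


# Hypothetical thresholds for a distance expressed as a ratio Di / Dni.
T = (0.80, 0.85, 0.90, 0.95, 1.05, 1.10, 1.15, 1.20)
print(state_masses(1.00, T))    # {'S': 1.0}
print(state_masses(1.075, T))   # S and SC+ share the mass (about 0.5 each)
```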

Facial expressions recognition: method principle (1)
• Measurement of the distances Di and determination of their symbolic states
• Computation of the mass distribution for each Di state
• Using the basis of rules, computation of the evidence mass of each expression for each Di
• Combination of the evidence mass distributions, in order to take all the measures into account before taking a decision
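The steps above can be sketched end to end on a reduced rules table (joy, surprise and neutral only). Assumptions: a state set such as {S, C+} is mapped to the set of expressions whose rule for that distance accepts at least one of its states, the combination is the unnormalized orthogonal sum, and no decision rule is applied. All names and the example masses are hypothetical.

```python
from functools import reduce
from itertools import product

# Subset of the rules table: accepted symbolic states for D1..D5.
RULES = {
    "joy":      [{"C-"}, {"S", "C-"}, {"C+"}, {"C+"}, {"C-"}],
    "surprise": [{"C+"}, {"C+"}, {"C-"}, {"C+"}, {"C+"}],
    "neutral":  [{"S"}, {"S"}, {"S"}, {"S"}, {"S"}],
}


def states(*names):
    """Shorthand for a frozenset of symbolic states."""
    return frozenset(names)


def to_expressions(i, state_masses):
    """Turn a mass over state sets of D_(i+1) into a mass over expression sets."""
    out = {}
    for st, m in state_masses.items():
        # Expressions whose rule for this distance accepts at least one of the states.
        key = frozenset(e for e, row in RULES.items() if row[i] & st)
        out[key] = out.get(key, 0.0) + m
    return out


def combine(m1, m2):
    """Orthogonal sum without normalization: mass on the empty set = conflict."""
    out = {}
    for (b, mb), (c, mc) in product(m1.items(), m2.items()):
        out[b & c] = out.get(b & c, 0.0) + mb * mc
    return out


def recognize(all_state_masses):
    """Map each distance to expression masses, then combine all of them."""
    per_distance = [to_expressions(i, sm) for i, sm in enumerate(all_state_masses)]
    return reduce(combine, per_distance)


# Hypothetical measurements: D2 is split between C+ and the doubt state {S, C+}.
measures = [
    {states("C+"): 1.0},
    {states("C+"): 0.8, states("S", "C+"): 0.2},
    {states("C-"): 1.0},
    {states("C+"): 1.0},
    {states("C+"): 1.0},
]
print(recognize(measures))   # all the mass goes to {surprise}
```

In this sketch, an expression that no rule describes shows up as mass on the empty set; reading a large conflict as a hint of the reject class is an interpretation, not something the slides specify.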

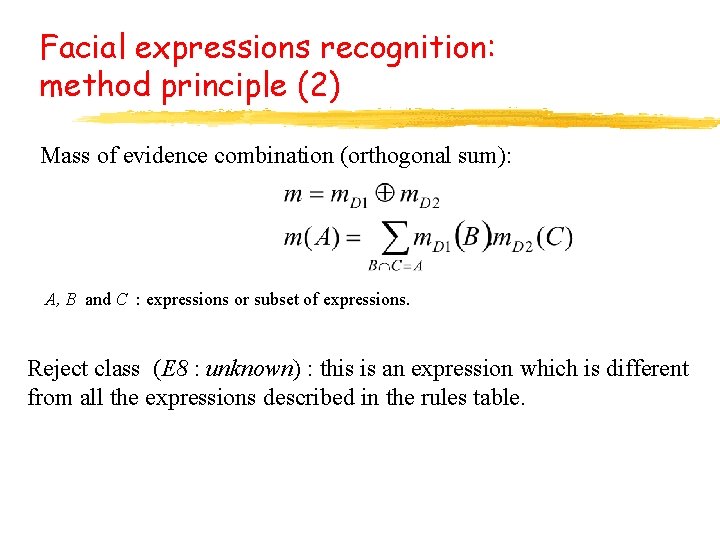

Facial expressions recognition: method principle (2)
Mass of evidence combination (orthogonal sum): $m_{12}(A) = (m_1 \oplus m_2)(A) = \sum_{B \cap C = A} m_1(B)\, m_2(C)$, where $A$, $B$ and $C$ are expressions or subsets of expressions.
Reject class (E8: unknown): an expression different from all the expressions described in the rules table.

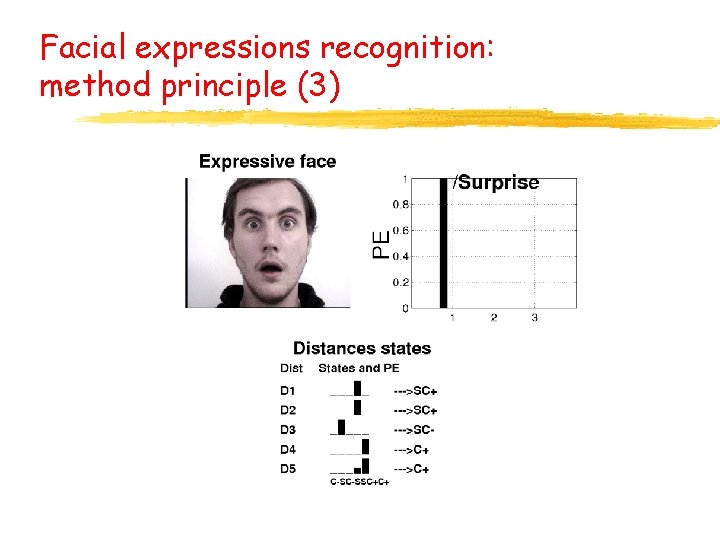

Facial expressions recognition: method principle (3)

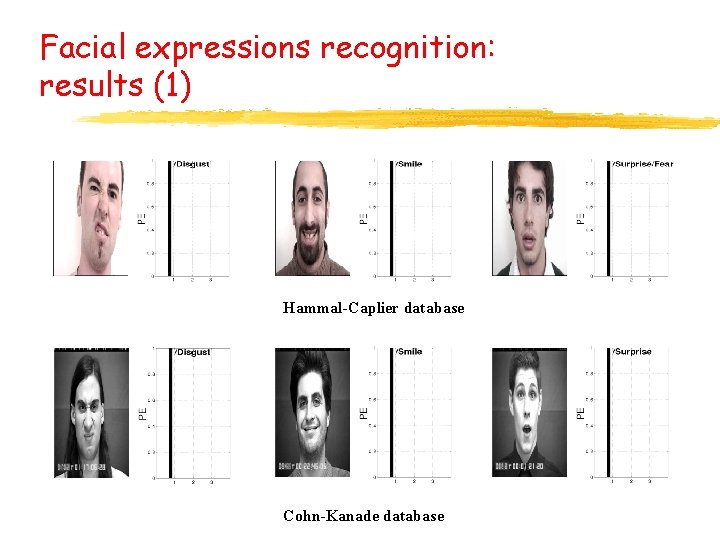

Facial expressions recognition: results (1)
Examples on the Hammal-Caplier database and on the Cohn-Kanade database.

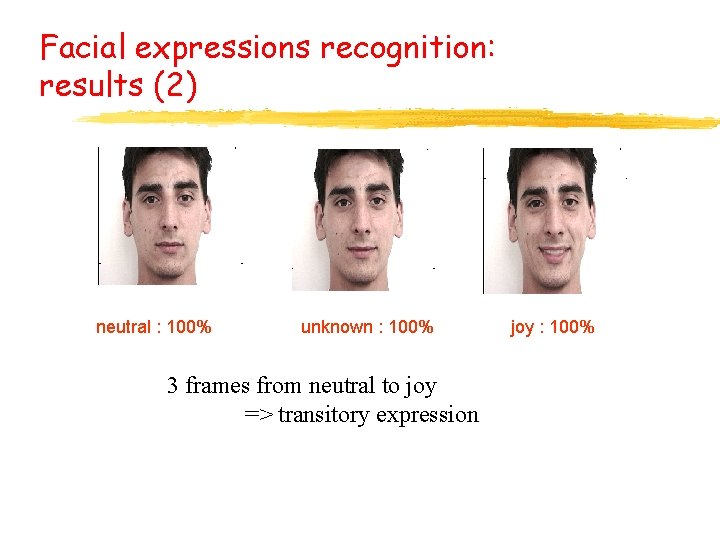

Facial expressions recognition: results (2)
neutral: 100%; unknown: 100% (the 3 frames of the transition from neutral to joy => transitory expression); joy: 100%

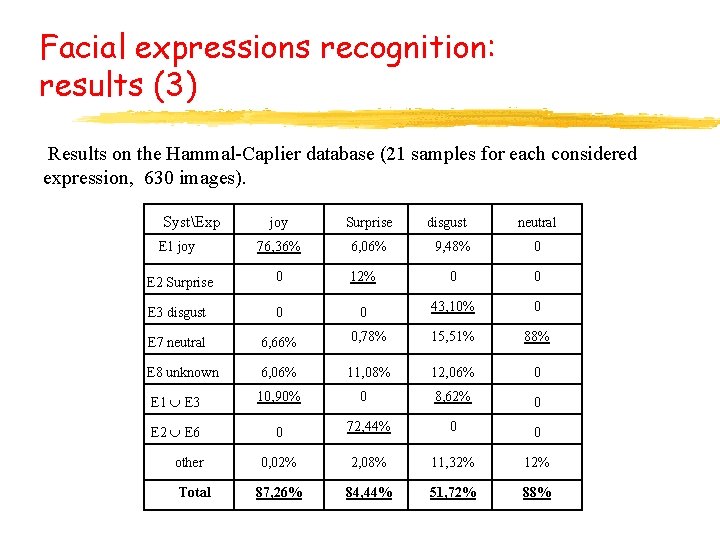

Facial expressions recognition: results (3)
Results on the Hammal-Caplier database (21 samples for each considered expression, 630 images): confusion matrix between the system output (rows: E1 joy, E2 surprise, E3 disgust, E7 neutral, E8 unknown, doubt classes E1∪E3 and E2∪E6, other, total) and the expert labels (columns: joy, surprise, disgust, neutral). Main figures:
- joy: 76.36% classified as E1 and 10.90% as E1∪E3 (total 87.26%)
- surprise: 72.44% classified as E2 and 12% as E2∪E6 (total 84.44%)
- disgust: 43.10% classified as E3 and 8.62% as E1∪E3 (total 51.72%)
- neutral: 88% classified as E7 (total 88%)


Facial expressions analysis based on audio (collaboration with Mons University)
Idea: characterization of expressions in the speech signal, using statistical speech features such as speech rate, SPI, energy and pitch.
Problem: the expression classes are different. After the preliminary study, 2 classes suitable for speech were kept: active (joy, surprise, anger) and passive (neutral, sadness).
Perspectives: definition of a multimodal system for facial expression recognition.


Head motion interpretation
1. Introduction
2. Head motion estimation: biological modelling
3. Examples of head motion interpretation

Introduction
Idea: global head motions such as nods, and local facial motions such as blinking, are involved in the human-to-human communication process.
Aim: automatic analysis and interpretation of such “gestures”.


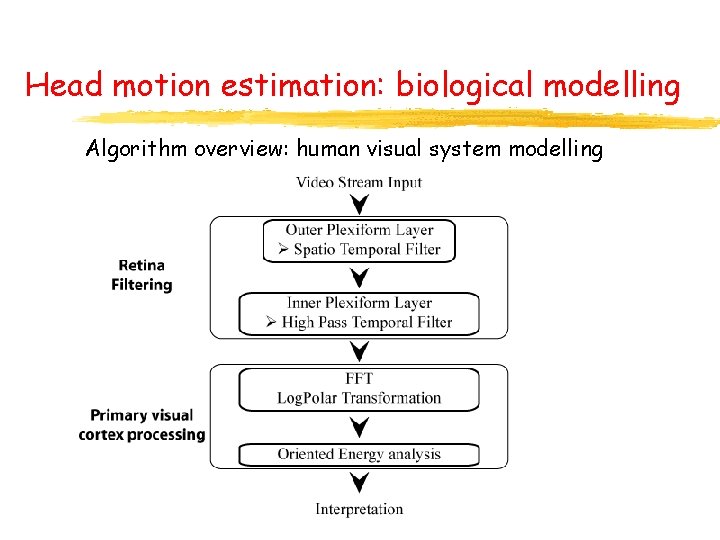

Head motion estimation: biological modelling
Algorithm overview: modelling of the human visual system.

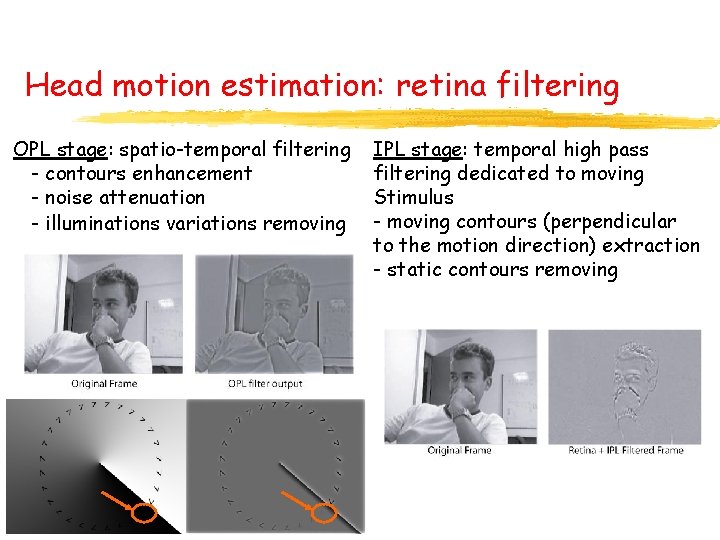

Head motion estimation: retina filtering
OPL stage: spatio-temporal filtering
- contour enhancement
- noise attenuation
- removal of illumination variations
IPL stage: temporal high-pass filtering dedicated to moving stimuli
- extraction of moving contours (perpendicular to the motion direction)
- removal of static contours
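A crude stand-in for the two retina stages, only meant to reproduce the qualitative behaviour listed above; it is not the retina model itself. Assumptions: the OPL stage is approximated by a difference of a small and a large box blur (a band-pass filter), the IPL stage by a first-order recursive temporal high-pass filter, and the parameters `a`, `r_small` and `r_large` are hypothetical.

```python
import numpy as np


def box_blur(img, r):
    """Separable mean filter of radius r with edge padding."""
    kernel = np.ones(2 * r + 1) / (2 * r + 1)
    padded = np.pad(img, r, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


class RetinaSketch:
    """Two-stage approximation (not the authors' filters).

    OPL: small blur minus large blur, which enhances contours, attenuates pixel
         noise and removes slow illumination changes.
    IPL: first-order temporal high-pass filter, which cancels static contours
         and keeps the moving ones.
    """

    def __init__(self, a=0.7, r_small=1, r_large=4):
        self.a, self.r_small, self.r_large = a, r_small, r_large
        self.prev_in = None
        self.prev_out = None

    def step(self, frame):
        frame = np.asarray(frame, dtype=np.float64)
        opl = box_blur(frame, self.r_small) - box_blur(frame, self.r_large)
        if self.prev_in is None:
            ipl = np.zeros_like(opl)
        else:
            # y[t] = a * (y[t-1] + x[t] - x[t-1])
            ipl = self.a * (self.prev_out + opl - self.prev_in)
        self.prev_in, self.prev_out = opl, ipl
        return ipl


# Usage: a vertical bar that stays still, then moves two pixels to the right.
still = np.zeros((32, 32))
still[:, 10:12] = 1.0
moved = np.zeros((32, 32))
moved[:, 12:14] = 1.0
retina = RetinaSketch()
retina.step(still)
print(np.abs(retina.step(still)).max())       # 0.0: static contours are removed
print(np.abs(retina.step(moved)).max() > 0)   # True: the moving contour responds
```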

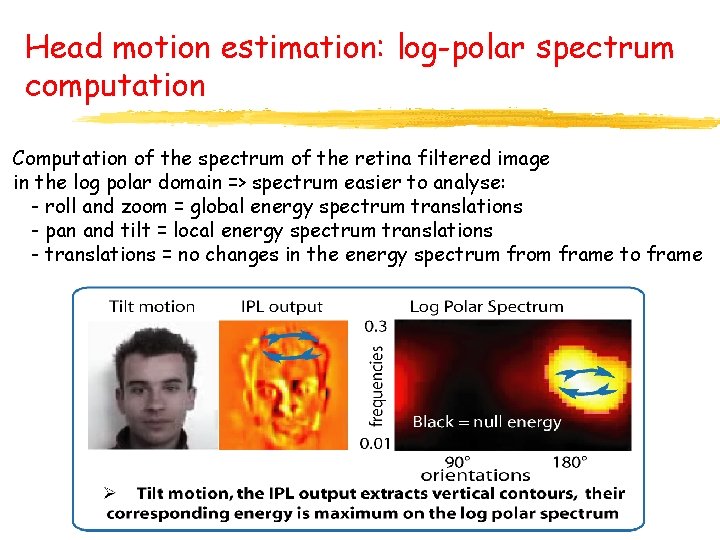

Head motion estimation: log-polar spectrum computation
The spectrum of the retina-filtered image is computed in the log-polar domain, where it is easier to analyse:
- roll and zoom = global translations of the energy spectrum
- pan and tilt = local translations of the energy spectrum
- translations = no change in the energy spectrum from frame to frame

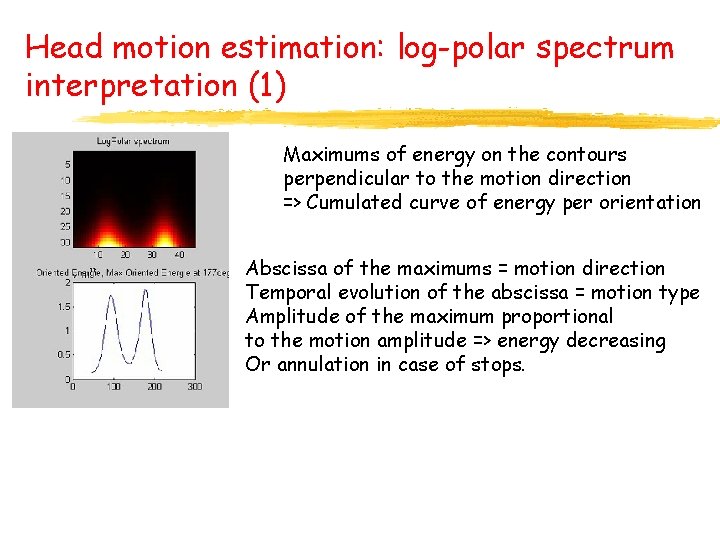

Head motion estimation: log-polar spectrum interpretation (1)
The energy is maximal on the contours perpendicular to the motion direction => cumulated curve of energy per orientation:
- abscissa of the maximums = motion direction
- temporal evolution of the abscissa = motion type
- amplitude of the maximum proportional to the motion amplitude => the energy decreases, or vanishes when the motion stops.
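The log-polar spectrum and the cumulated energy per orientation can be sketched with NumPy's FFT. Assumptions: nearest-neighbour sampling on 32 log-spaced radii × 64 orientations in [0, π) (the amplitude spectrum of a real image is symmetric), and a raw synthetic image as input instead of a retina-filtered one. Vertical contours, which a horizontal motion would highlight, put their energy near orientation 0.

```python
import numpy as np


def log_polar_spectrum(img, n_rho=32, n_theta=64):
    """Amplitude spectrum sampled on a log-polar grid (nearest neighbour).

    Rows index log-spaced radii from 1 to r_max, columns index orientations
    in [0, pi).
    """
    h, w = img.shape
    spec = np.abs(np.fft.fftshift(np.fft.fft2(img)))   # zero frequency at the centre
    cy, cx = h // 2, w // 2
    r_max = min(cy, cx) - 1
    rho = np.exp(np.linspace(0.0, np.log(r_max), n_rho))
    theta = np.linspace(0.0, np.pi, n_theta, endpoint=False)
    ys = np.rint(cy + rho[:, None] * np.sin(theta)[None, :]).astype(int)
    xs = np.rint(cx + rho[:, None] * np.cos(theta)[None, :]).astype(int)
    return spec[ys, xs], theta


def energy_per_orientation(lp):
    """Cumulated energy per orientation: sum of squared amplitudes over the radii."""
    return (lp ** 2).sum(axis=0)


# Synthetic contours: intensity varying along x, i.e. vertical stripes.
x = np.arange(64)
stripes = np.tile(np.cos(2 * np.pi * 8 * x / 64), (64, 1))
lp, theta = log_polar_spectrum(stripes)
k = int(np.argmax(energy_per_orientation(lp)))
print(np.degrees(theta[k]))   # close to 0 (or 180) degrees: horizontal frequency axis
```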

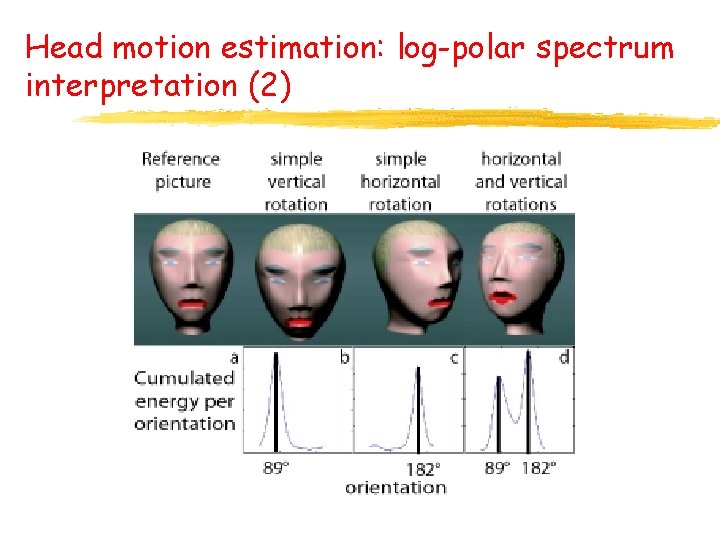

Head motion estimation: log-polar spectrum interpretation (2)

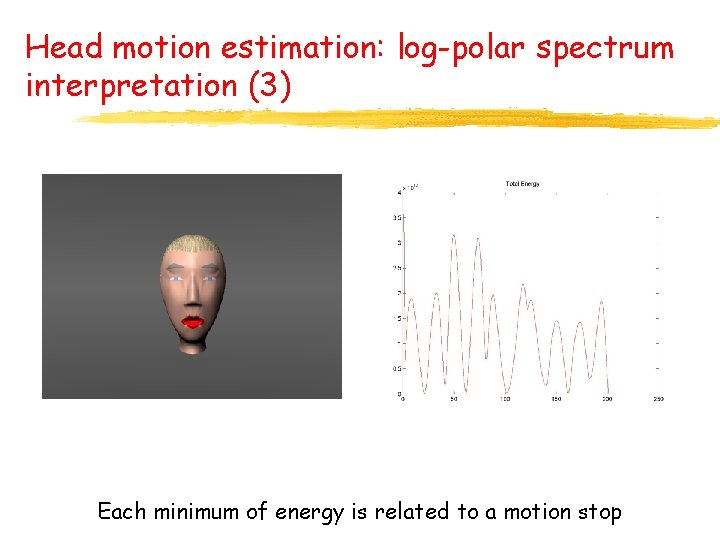

Head motion estimation: log-polar spectrum interpretation (3)
Each minimum of energy is related to a motion stop.

Head motion estimation: log-polar spectrum interpretation (4)
To summarize, properties of the retina-filtered energy spectrum in the log-polar domain:
• energy maximums are associated with the contours perpendicular to the motion direction
• no motion = no energy
• orientation of the energy maximums = motion direction
• « movements » of the energy maximums = motion type


Head nods of approbation or negation (1)
Goal: recognition of head nods of approbation and negation (« I am still with you »).
Idea: detection of periodic head motions:
• approbation: periodic head tilting
• negation: periodic head panning
Approach: put a biological head motion detector on the face bounding box and monitor all the head movements.
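A hypothetical sketch of the periodicity test, built on the spectrum properties summarized above: a frame whose total energy falls below a threshold is treated as a motion stop, the dominant motion direction labels each motion segment as vertical (tilt) or horizontal (pan), and several consecutive segments on the same axis are read as a nod. The stop threshold, the 45° split and `min_repeats=3` are assumptions.

```python
def motion_segments(directions, energies, stop_threshold):
    """Split a sequence into motion segments separated by stops.

    directions: per-frame dominant motion direction in degrees (0 = horizontal,
    90 = vertical); energies: per-frame total energy. A frame whose energy is
    below stop_threshold is treated as a stop.
    """
    segments, current = [], None
    for d, e in zip(directions, energies):
        if e < stop_threshold:                 # energy minimum: the motion stops
            if current is not None:
                segments.append(current)
                current = None
            continue
        axis = "vertical" if 45 <= d % 180 < 135 else "horizontal"
        if current is not None and axis != current:
            segments.append(current)           # direction change without a stop
        current = axis
    if current is not None:
        segments.append(current)
    return segments


def interpret_nod(segments, min_repeats=3):
    """Approbation if the last segments are all vertical, negation if all horizontal."""
    tail = segments[-min_repeats:]
    if len(tail) == min_repeats and len(set(tail)) == 1:
        return "approbation" if tail[0] == "vertical" else "negation"
    return None


# Usage: three vertical motions separated by two stops (down, up, down).
energy = [1, 1, 1, 0, 0, 1, 1, 1, 0, 0, 1, 1, 1]
print(interpret_nod(motion_segments([90] * 13, energy, stop_threshold=0.5)))   # approbation
```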

Head nods of approbation or negation (2)

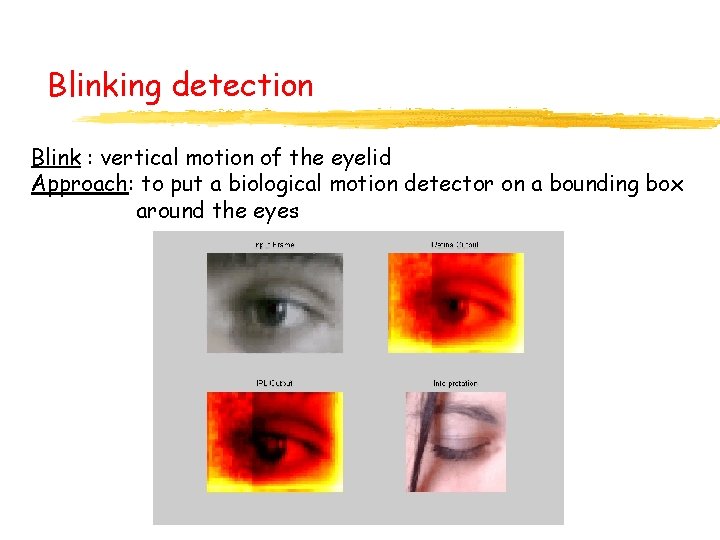

Blinking detection
Blink: vertical motion of the eyelid.
Approach: put a biological motion detector on a bounding box around the eyes.

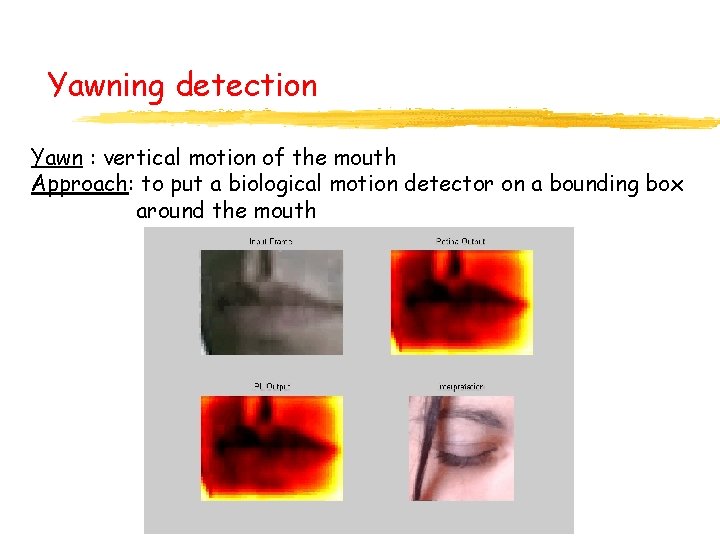

Yawning detection
Yawn: vertical motion of the mouth.
Approach: put a biological motion detector on a bounding box around the mouth.

Hypovigilance detection
Hypovigilance: short or long eye closures, multiple head rotations, frequent yawning.
Approach: combine the information coming from the 3 biological motion detectors.


Conclusion
1. Human activity analysis and recognition based on video and image data
2. Unified approaches: extraction of low-level data and fusion process for high-level semantic interpretation
3. Correlation with applications, e.g. eNTERFACE Workshop project 4 on driver attention level detection
