Huffman Coding

- Slides: 11

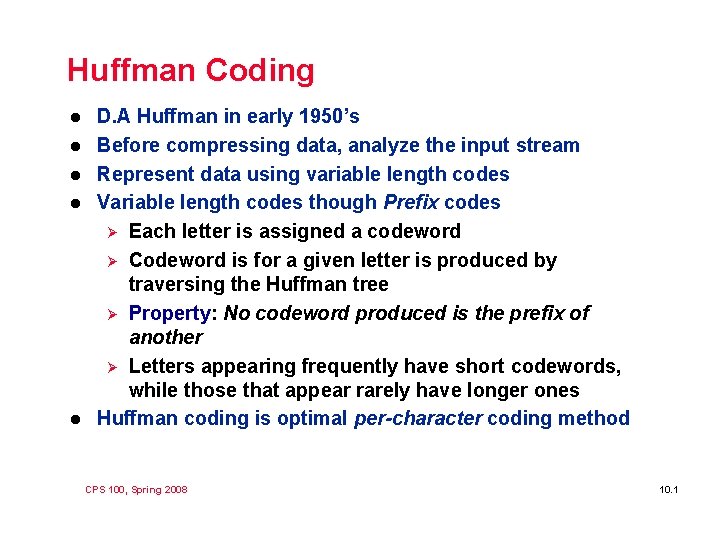

- D. A. Huffman, early 1950s
- Before compressing data, analyze the input stream
- Represent data using variable-length codes
- Variable-length codes through prefix codes
  - Each letter is assigned a codeword
  - The codeword for a given letter is produced by traversing the Huffman tree
  - Property: no codeword produced is the prefix of another
  - Letters appearing frequently have short codewords, while those that appear rarely have longer ones
- Huffman coding is an optimal per-character coding method

CPS 100, Spring 2008

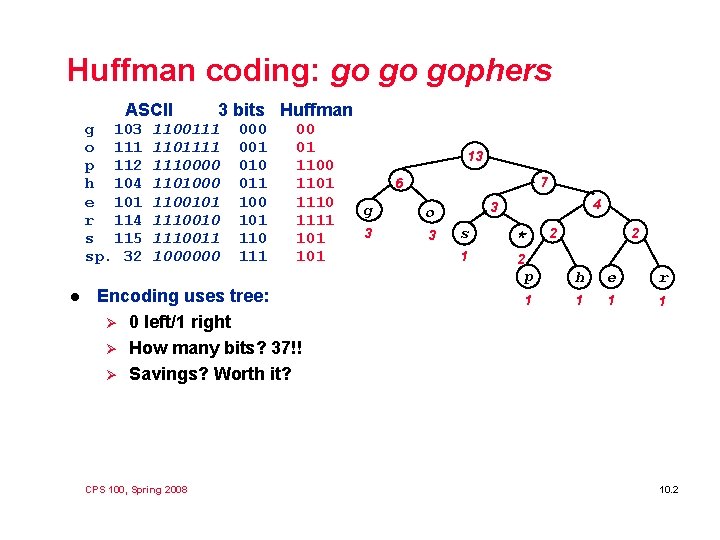

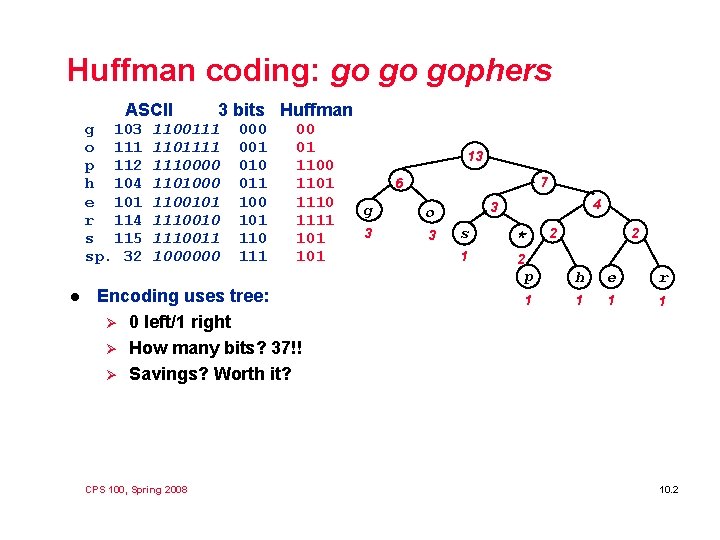

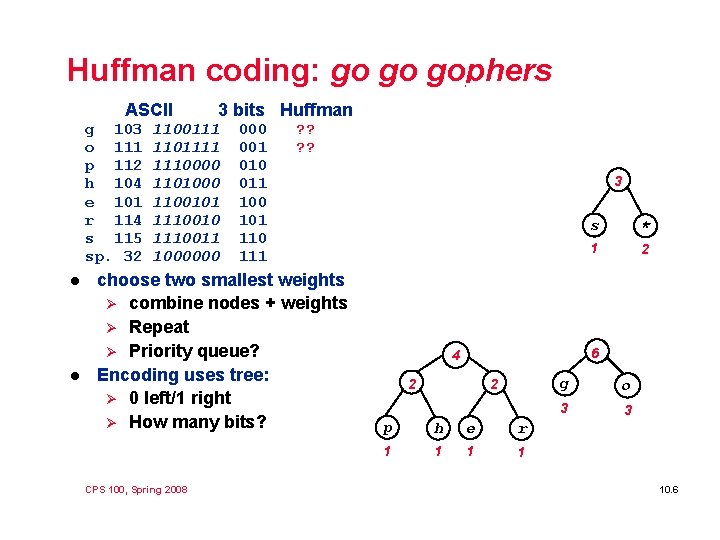

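Huffman coding: go go gophers

| Char | ASCII | ASCII (7-bit binary) | 3 bits | Huffman |
|---|---|---|---|---|
| g | 103 | 1100111 | 000 | 00 |
| o | 111 | 1101111 | 001 | 01 |
| p | 112 | 1110000 | 010 | 1100 |
| h | 104 | 1101000 | 011 | 1101 |
| e | 101 | 1100101 | 100 | 1110 |
| r | 114 | 1110010 | 101 | 1111 |
| s | 115 | 1110011 | 110 | 100 |
| sp. | 32 | 0100000 | 111 | 101 |

- Encoding uses tree:
  - 0 left / 1 right
  - How many bits? 37!! (g and o: 3 × 2 each; space: 2 × 3; s: 1 × 3; p, h, e, r: 1 × 4 each; the 3-bit code needs 13 × 3 = 39)
  - Savings? Worth it?

Tree (weights in parentheses, \* is the space): the root (13) has a left child (6) with leaves g (3) and o (3), and a right child (7). Node (7) has a left child (3) with leaves s (1) and \* (2), and a right child (4). Node (4) has children (2) with leaves p (1) and h (1), and (2) with leaves e (1) and r (1).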
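
The bit counts for the go go gophers example can be checked with a short program. This is a minimal sketch, not course code: the class and method names are hypothetical, and the codewords for s and the space (100 and 101) are read off the tree with 0 for left and 1 for right. Swapping those two would not change the total.

```java
import java.util.Map;

class GophersBitCount {
    // Codewords for "go go gophers" (assumed assignment, see the note above).
    static Map<Character, String> gophersCodes() {
        return Map.of(
            'g', "00", 'o', "01", 'p', "1100", 'h', "1101",
            'e', "1110", 'r', "1111", 's', "100", ' ', "101");
    }

    // Total encoded size: the sum of the codeword lengths of every character.
    static int encodedBits(String message, Map<Character, String> codes) {
        int bits = 0;
        for (char ch : message.toCharArray()) {
            bits += codes.get(ch).length();
        }
        return bits;
    }

    public static void main(String[] args) {
        String message = "go go gophers";
        System.out.println("7-bit ASCII: " + 7 * message.length());
        System.out.println("3-bit fixed: " + 3 * message.length());
        System.out.println("Huffman:     " + encodedBits(message, gophersCodes()));
    }
}
```

For this 13-character message the three encodings take 91, 39, and 37 bits, so the saving over the 3-bit code is small here. The table (or tree) also has to be stored with the compressed data, which is part of the "worth it?" question.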

Coding/Compression/Concepts

- We can use a fixed-length encoding, e.g., ASCII or 3 bits per character
  - What is the minimum number of bits needed to represent N values?
  - Repercussions for representing genomic data (a, c, g, t)?
- We can use a variable-length encoding, e.g., Huffman
  - How do we decide on lengths? How do we decode?
  - Values for Morse code encodings, why?
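
For the fixed-length question, N distinct values need the smallest b with 2^b ≥ N, that is, ⌈log2 N⌉ bits. The sketch below computes it with integer arithmetic; the class and method names are hypothetical.

```java
class FixedLengthBits {
    // Smallest number of bits b such that 2^b >= n (ceil(log2 n)).
    static int bitsNeeded(int n) {
        if (n < 1) {
            throw new IllegalArgumentException("n must be at least 1");
        }
        int bits = 0;
        while ((1L << bits) < n) {
            bits++;
        }
        return bits;
    }

    public static void main(String[] args) {
        System.out.println("a, c, g, t (4 values): " + bitsNeeded(4) + " bits");
        System.out.println("8 distinct characters: " + bitsNeeded(8) + " bits");
        System.out.println("128 ASCII values:      " + bitsNeeded(128) + " bits");
    }
}
```

With four nucleotides, 2 bits per base suffice, compared with 8 bits if each base is stored as a one-byte character.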

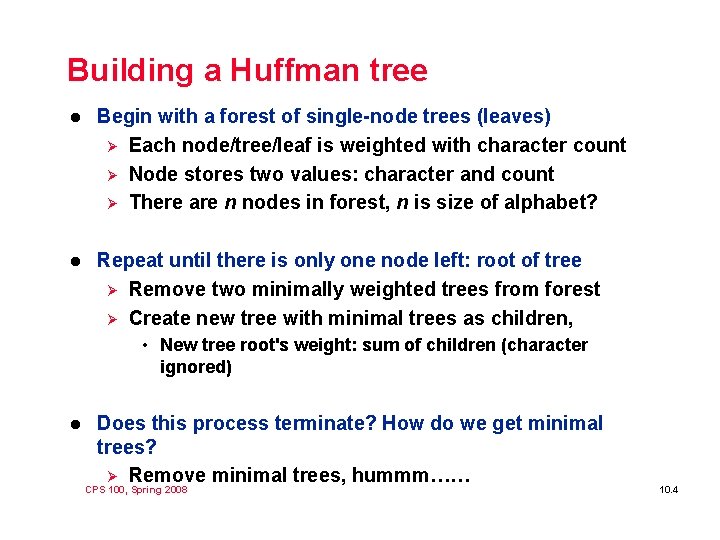

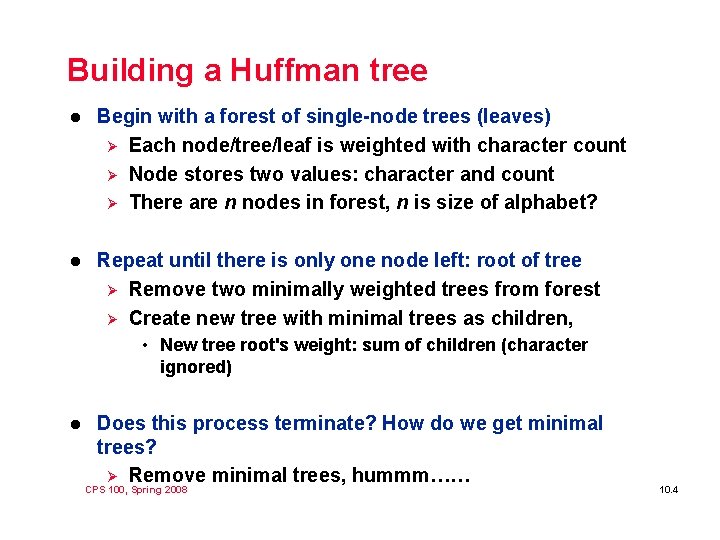

Building a Huffman tree

- Begin with a forest of single-node trees (leaves)
  - Each node/tree/leaf is weighted with its character count
  - Node stores two values: character and count
  - There are n nodes in the forest; n is the size of the alphabet?
- Repeat until there is only one node left: the root of the tree
  - Remove the two minimally weighted trees from the forest
  - Create a new tree with the minimal trees as children
    - New tree root's weight: sum of children (character ignored)
- Does this process terminate? How do we get minimal trees?
  - Remove minimal trees, hmmm…

Mary Shaw

- Software engineering and software architecture
  - Tools for constructing large software systems
  - Development is a small piece of total cost; maintenance is larger and depends on well-designed and developed techniques
- Interests: computer science, programming, curricula, canoeing, and health-care costs
- ACM Fellow, Alan Perlis Professor of Compsci at CMU

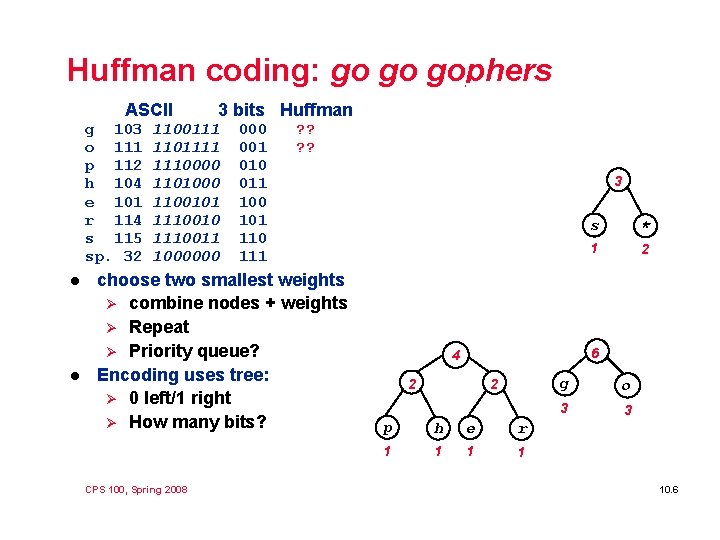

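Huffman coding: go go gophers

| Char | ASCII | ASCII (7-bit binary) | 3 bits | Huffman |
|---|---|---|---|---|
| g | 103 | 1100111 | 000 | ? |
| o | 111 | 1101111 | 001 | ? |
| p | 112 | 1110000 | 010 | ? |
| h | 104 | 1101000 | 011 | ? |
| e | 101 | 1100101 | 100 | ? |
| r | 114 | 1110010 | 101 | ? |
| s | 115 | 1110011 | 110 | ? |
| sp. | 32 | 0100000 | 111 | ? |

- Choose the two smallest weights
  - Combine nodes + weights
  - Repeat
  - Priority queue?
- Encoding uses tree:
  - 0 left / 1 right
  - How many bits?

Tree-building stages shown on the slide (\* is the space): the initial forest is g 3, o 3, p 1, h 1, e 1, r 1, s 1, \* 2. Next, p + h and e + r are combined into trees of weight 2, and s + \* into a tree of weight 3. Then the two weight-2 trees are combined into a tree of weight 4, and g + o into a tree of weight 6.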

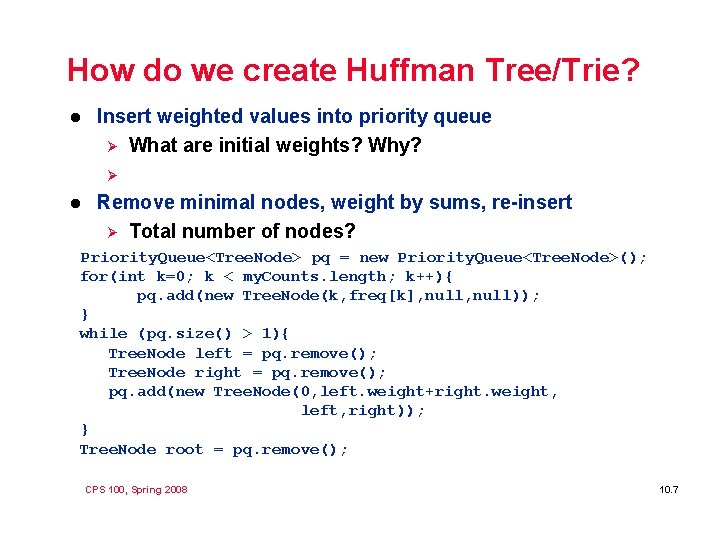

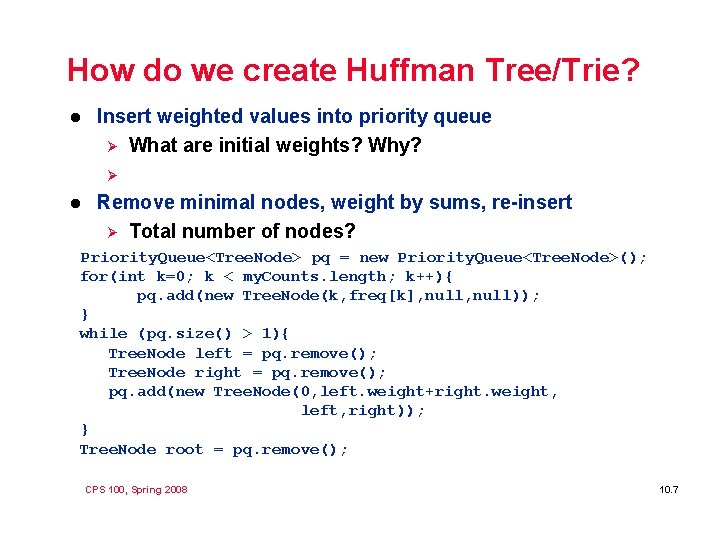

How do we create Huffman Tree/Trie?

- Insert weighted values into priority queue
  - What are initial weights? Why?
- Remove minimal nodes, weight by sums, re-insert
  - Total number of nodes?

```java
import java.util.PriorityQueue;

// TreeNode is the course-provided class, assumed to be Comparable by weight.
// freq[k] holds the count of character value k.
PriorityQueue<TreeNode> pq = new PriorityQueue<TreeNode>();
for (int k = 0; k < freq.length; k++) {
    // One leaf per character value, weighted by its count.
    pq.add(new TreeNode(k, freq[k], null, null));
}
while (pq.size() > 1) {
    // Remove the two minimal trees and re-insert their combination.
    TreeNode left = pq.remove();
    TreeNode right = pq.remove();
    pq.add(new TreeNode(0, left.weight + right.weight, left, right));
}
TreeNode root = pq.remove();
```
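
The priority-queue loop can be run end to end on the go go gophers example. The following is a self-contained sketch under stated assumptions: `Node` is a hypothetical stand-in for the course's `TreeNode`, only characters that actually occur get a leaf, and the input is assumed to be non-empty.

```java
import java.util.Map;
import java.util.PriorityQueue;
import java.util.TreeMap;

class HuffmanBuildSketch {
    // Hypothetical stand-in for TreeNode, ordered by weight.
    static class Node implements Comparable<Node> {
        final char ch;
        final int weight;
        final Node left, right;

        Node(char ch, int weight, Node left, Node right) {
            this.ch = ch;
            this.weight = weight;
            this.left = left;
            this.right = right;
        }

        boolean isLeaf() {
            return left == null && right == null;
        }

        @Override
        public int compareTo(Node other) {
            return Integer.compare(weight, other.weight);
        }
    }

    // Builds a Huffman tree for a non-empty text.
    static Node build(String text) {
        Map<Character, Integer> counts = new TreeMap<>();
        for (char c : text.toCharArray()) {
            counts.merge(c, 1, Integer::sum);
        }
        PriorityQueue<Node> pq = new PriorityQueue<>();
        for (Map.Entry<Character, Integer> e : counts.entrySet()) {
            pq.add(new Node(e.getKey(), e.getValue(), null, null));
        }
        while (pq.size() > 1) {
            Node left = pq.remove();
            Node right = pq.remove();
            pq.add(new Node('\0', left.weight + right.weight, left, right));
        }
        return pq.remove();
    }

    // Encoded size in bits: each leaf contributes weight * depth.
    static int totalBits(Node node, int depth) {
        if (node.isLeaf()) {
            return node.weight * depth;
        }
        return totalBits(node.left, depth + 1) + totalBits(node.right, depth + 1);
    }

    public static void main(String[] args) {
        Node root = build("go go gophers");
        System.out.println("root weight: " + root.weight);
        System.out.println("encoded bits: " + totalBits(root, 0));
    }
}
```

Ties between equal weights may be broken differently from the slide, so individual codewords can differ, but the total encoded length is the same for every Huffman tree built from these counts. Each pass of the loop removes two trees and adds one, so a forest of n leaves ends as a single tree with 2n - 1 nodes.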

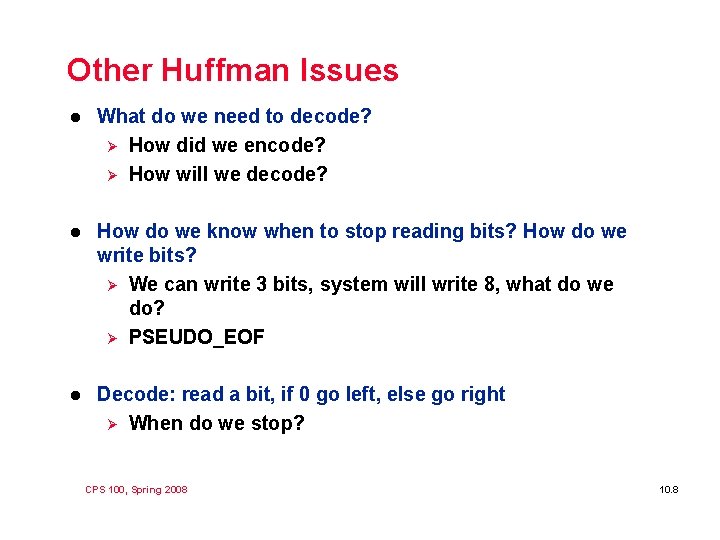

Other Huffman Issues

- What do we need to decode?
  - How did we encode?
  - How will we decode?
- How do we know when to stop reading bits? How do we write bits?
  - We can write 3 bits, but the system will write 8; what do we do?
  - PSEUDO_EOF
- Decode: read a bit; if 0 go left, else go right
  - When do we stop?
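
The decode loop and the role of PSEUDO_EOF can be sketched as follows. Everything here is an illustrative assumption rather than the course's code: bits are given as a string of '0' and '1' characters instead of coming from a bit stream, `PSEUDO_EOF` is given the hypothetical value 256 (outside the byte range), and the three-leaf tree is made up for the example.

```java
class HuffmanDecodeSketch {
    static final int PSEUDO_EOF = 256; // hypothetical marker value

    static class Node {
        final int value;
        final Node left, right;

        Node(int value) {
            this(value, null, null);
        }

        Node(int value, Node left, Node right) {
            this.value = value;
            this.left = left;
            this.right = right;
        }

        boolean isLeaf() {
            return left == null && right == null;
        }
    }

    // Walk the tree one bit at a time: 0 goes left, 1 goes right.
    // Stop when the PSEUDO_EOF leaf is reached; later bits are padding.
    static String decode(Node root, String bits) {
        StringBuilder out = new StringBuilder();
        Node current = root;
        for (int i = 0; i < bits.length(); i++) {
            current = (bits.charAt(i) == '0') ? current.left : current.right;
            if (current.isLeaf()) {
                if (current.value == PSEUDO_EOF) {
                    return out.toString();
                }
                out.append((char) current.value);
                current = root;
            }
        }
        throw new IllegalStateException("ran out of bits before PSEUDO_EOF");
    }

    // Example tree with codes a = 0, b = 10, PSEUDO_EOF = 11.
    static Node sampleTree() {
        Node bOrEof = new Node(-1, new Node('b'), new Node(PSEUDO_EOF));
        return new Node(-1, new Node('a'), bOrEof);
    }

    public static void main(String[] args) {
        // "aba" plus PSEUDO_EOF is 6 bits; two padding bits fill the byte.
        System.out.println(decode(sampleTree(), "01001100"));
    }
}
```

The message "aba" followed by the end marker takes 6 bits, but a file holds whole bytes, so two padding bits are written as well. Because the decoder returns as soon as it reaches the PSEUDO_EOF leaf, the padding is never interpreted as data.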

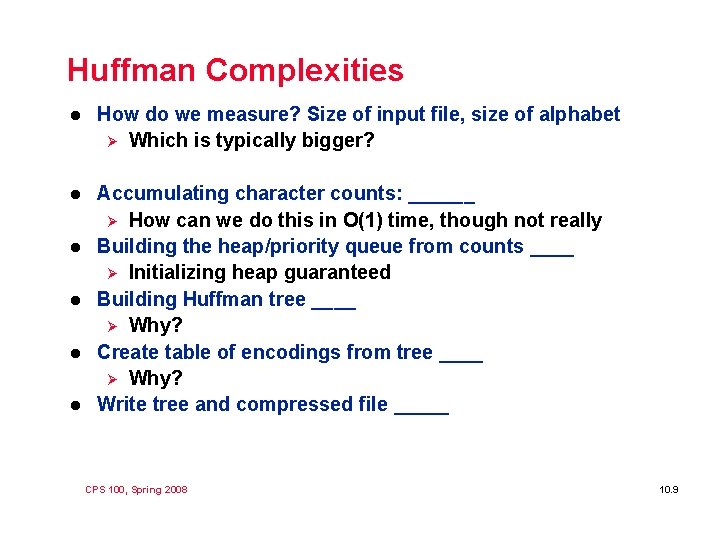

Huffman Complexities

- How do we measure? Size of input file, size of alphabet
  - Which is typically bigger?
- Accumulating character counts: ______
  - How can we do this in O(1) time, though not really
- Building the heap/priority queue from counts: ____
  - Initializing heap guaranteed
- Building Huffman tree: ____
  - Why?
- Create table of encodings from tree: ____
  - Why?
- Write tree and compressed file: _____

Writing code out to file

- How do we go from characters to encodings?
  - Build Huffman tree
  - Root-to-leaf path generates encoding
- Need a way of writing bits out to file
  - Platform dependent?
  - Complicated to write bits and read them back in the same order
- See the BitInputStream and BitOutputStream classes
  - Depend on each other; bit ordering preserved
- How do we know bits come from a compressed file?
  - Store a magic number
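
The step from tree to encodings (each root-to-leaf path generates one codeword) can be sketched with a recursive traversal. This is an illustration with hypothetical names: the tree is the go go gophers tree built by hand, and codewords are kept as strings rather than written with the BitOutputStream class, whose API is not shown here.

```java
import java.util.Map;
import java.util.TreeMap;

class HuffmanCodeTableSketch {
    static class Node {
        final char ch;
        final Node left, right;

        Node(char ch) { // leaf
            this.ch = ch;
            this.left = null;
            this.right = null;
        }

        Node(Node left, Node right) { // internal node, character ignored
            this.ch = '\0';
            this.left = left;
            this.right = right;
        }
    }

    // Append 0 when going left and 1 when going right; record the path at each leaf.
    static void collect(Node node, String path, Map<Character, String> table) {
        if (node.left == null && node.right == null) {
            table.put(node.ch, path);
            return;
        }
        collect(node.left, path + "0", table);
        collect(node.right, path + "1", table);
    }

    static Map<Character, String> codeTable(Node root) {
        Map<Character, String> table = new TreeMap<>();
        collect(root, "", table);
        return table;
    }

    // The go go gophers tree, assembled by hand.
    static Node gophersTree() {
        Node go = new Node(new Node('g'), new Node('o'));
        Node sSpace = new Node(new Node('s'), new Node(' '));
        Node ph = new Node(new Node('p'), new Node('h'));
        Node er = new Node(new Node('e'), new Node('r'));
        Node pher = new Node(ph, er);
        return new Node(go, new Node(sSpace, pher));
    }

    public static void main(String[] args) {
        codeTable(gophersTree()).forEach(
            (ch, code) -> System.out.println("'" + ch + "' -> " + code));
    }
}
```

The traversal visits every node once, so building the table is linear in the size of the tree. Looking up a character's codeword afterwards is a single map access instead of a search through the tree.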

Other methods

- Adaptive Huffman coding
- Lempel-Ziv algorithms
  - Build the coding table on the fly while reading the document
  - Coding table changes dynamically
  - Protocol between encoder and decoder so that everyone is always using the right coding scheme
  - Works well in practice (compress, gzip, etc.)
- More complicated methods
  - Burrows-Wheeler (bunzip2)
  - PPM statistical methods