Huffman Coding (Gonzalez et al. 2008)

- Slides: 21

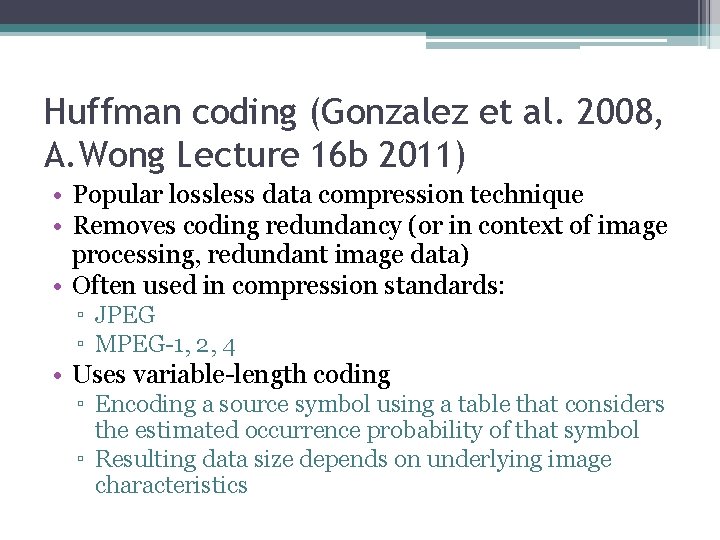

Huffman coding (Gonzalez et al. 2008; A. Wong, Lecture 16b, 2011)
• Popular lossless data compression technique
• Removes coding redundancy (in image processing, redundant image data)
• Often used in compression standards:
  ▫ JPEG
  ▫ MPEG-1, 2, 4
• Uses variable-length coding
  ▫ Each source symbol is encoded with a table built from that symbol's estimated probability of occurrence, so frequent symbols get shorter codes
  ▫ The resulting data size depends on the underlying image characteristics

Steps
1) Determine the histogram of the image
2) Construct the Huffman tree
3) Encode the image using the codes generated from the Huffman tree

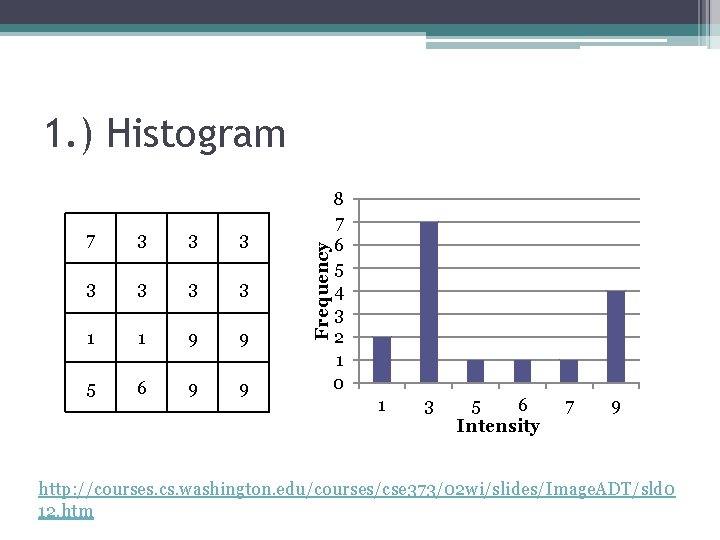

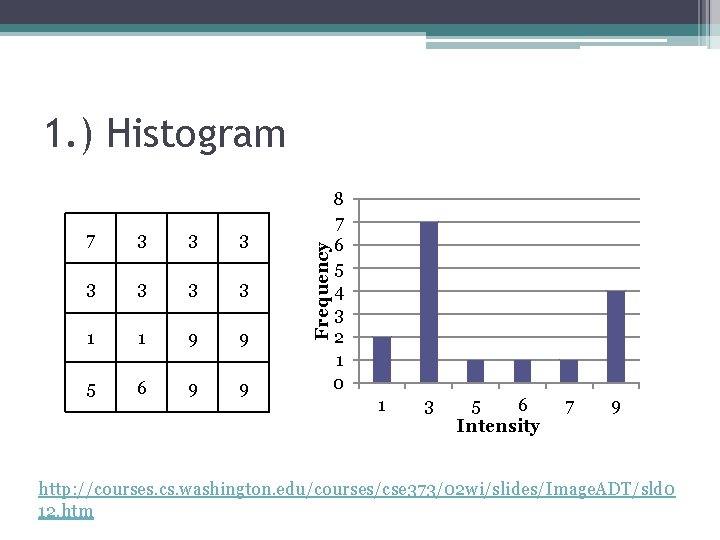

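1) Histogram

Example image: 16 pixels with intensities 1, 3, 5, 6, 7 and 9 (pixel layout shown in the slide figure). Source: http://courses.cs.washington.edu/courses/cse373/02wi/slides/Image.ADT/sld012.htm

| Intensity | Frequency |
|---|---|
| 1 | 2 |
| 3 | 7 |
| 5 | 1 |
| 6 | 1 |
| 7 | 1 |
| 9 | 4 |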
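Step 1 can be sketched in Python with `collections.Counter`. The slide's pixel layout did not survive extraction, so the `pixels` list below is a hypothetical ordering chosen only so that its counts match the histogram table; `histogram` is an illustrative helper name, not from the slides.

```python
from collections import Counter

# Hypothetical 16-pixel image: the ORDER is made up; only the counts
# (seven 3s, four 9s, two 1s, one each of 5, 6, 7) match the slide.
pixels = [3, 3, 3, 3, 3, 3, 3, 9, 9, 9, 9, 1, 1, 5, 6, 7]

def histogram(values):
    """Return {intensity: count}, ordered by intensity."""
    counts = Counter(values)
    return dict(sorted(counts.items()))

hist = histogram(pixels)
print(hist)  # {1: 2, 3: 7, 5: 1, 6: 1, 7: 1, 9: 4}
```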

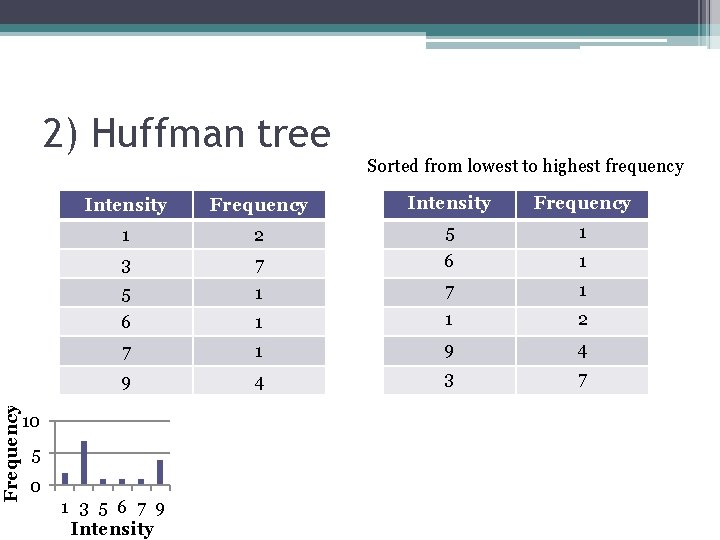

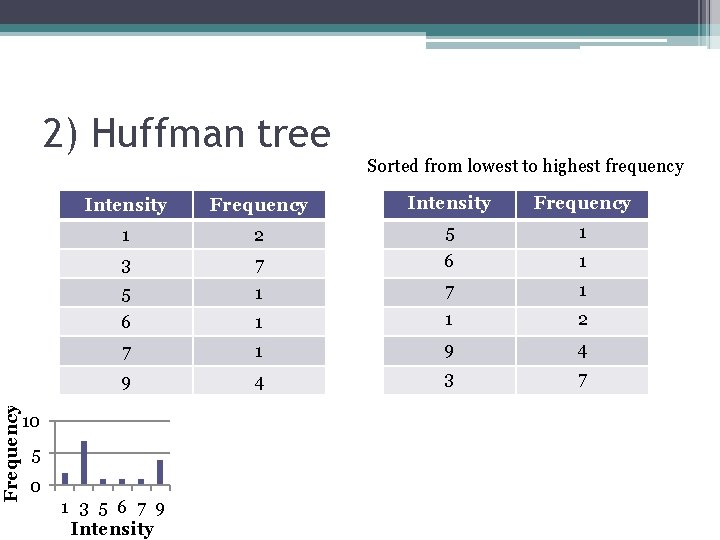

2) Huffman tree

Sort the intensities from lowest to highest frequency:

| Intensity | Frequency |
|---|---|
| 5 | 1 |
| 6 | 1 |
| 7 | 1 |
| 1 | 2 |
| 9 | 4 |
| 3 | 7 |

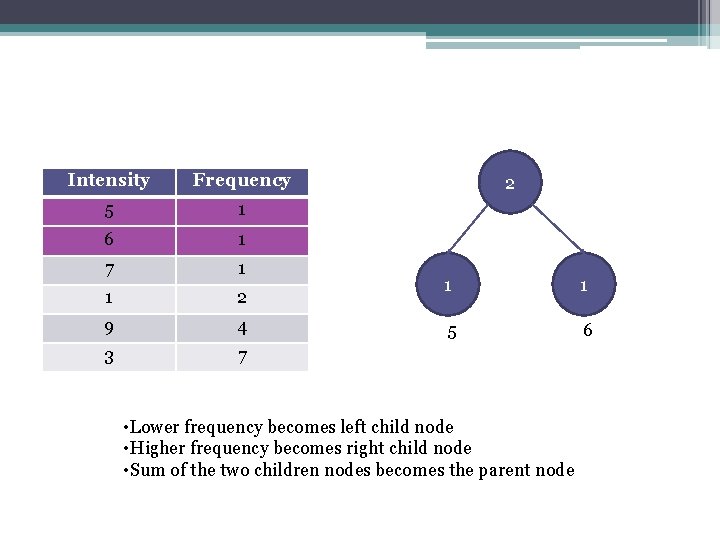

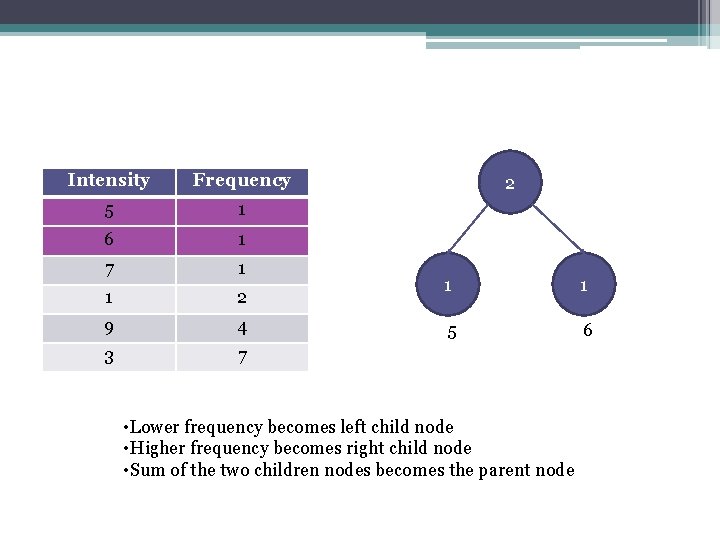

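Start with the two lowest-frequency entries, 5 (1) and 6 (1), and merge them into a parent node of frequency 2:
• Lower frequency becomes the left child node
• Higher frequency becomes the right child node
• The sum of the two children's frequencies becomes the parent node
• Repeat with the two lowest-frequency nodes that remain until only the root is left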

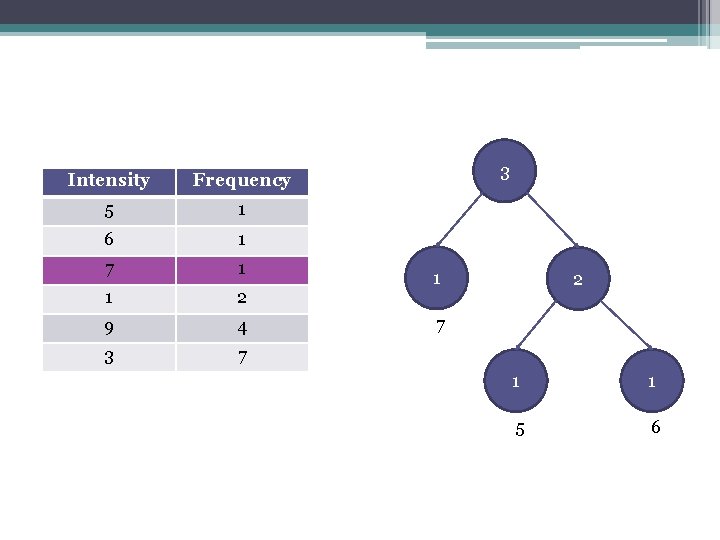

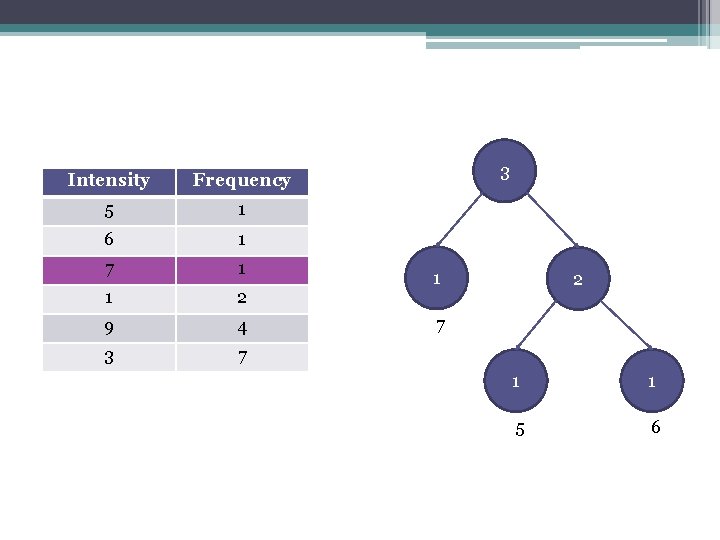

Next, merge 7 (frequency 1) with the 5 + 6 node (frequency 2) into a parent node of frequency 3: 7 is the left child and the 5 + 6 node is the right child.

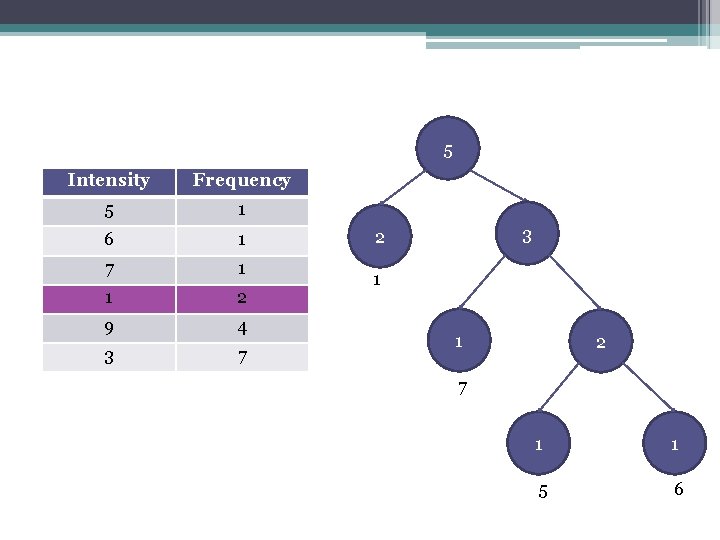

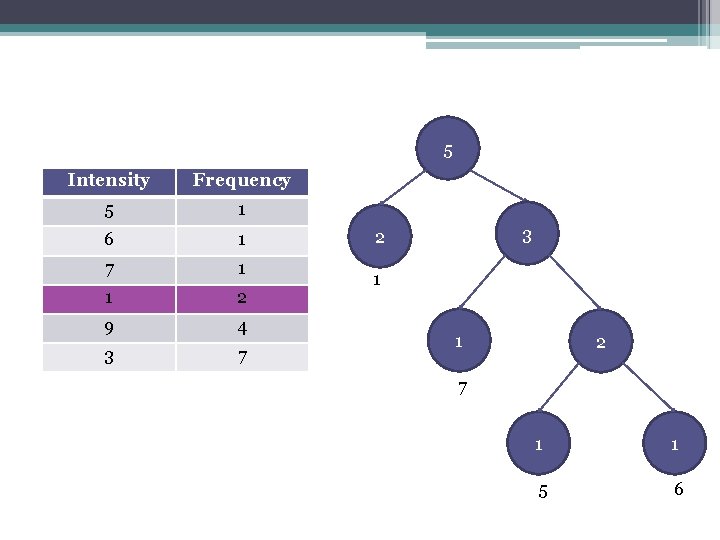

Next, merge 1 (frequency 2) with the node of frequency 3 into a parent node of frequency 5: 1 is the left child and the frequency-3 node is the right child.

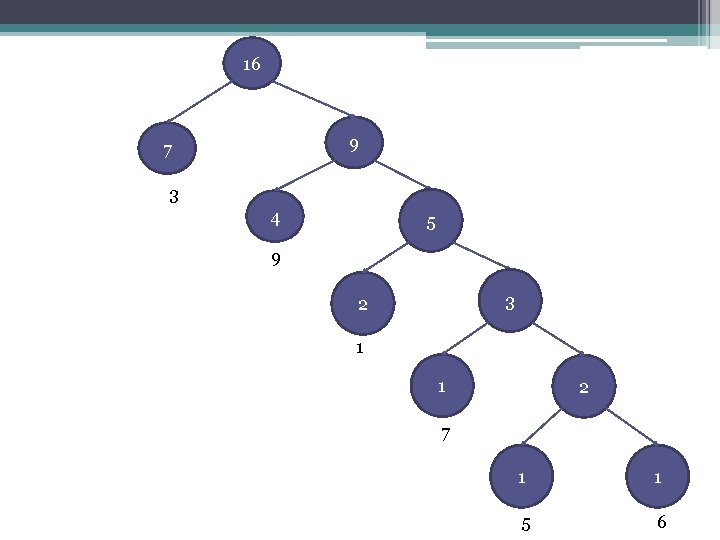

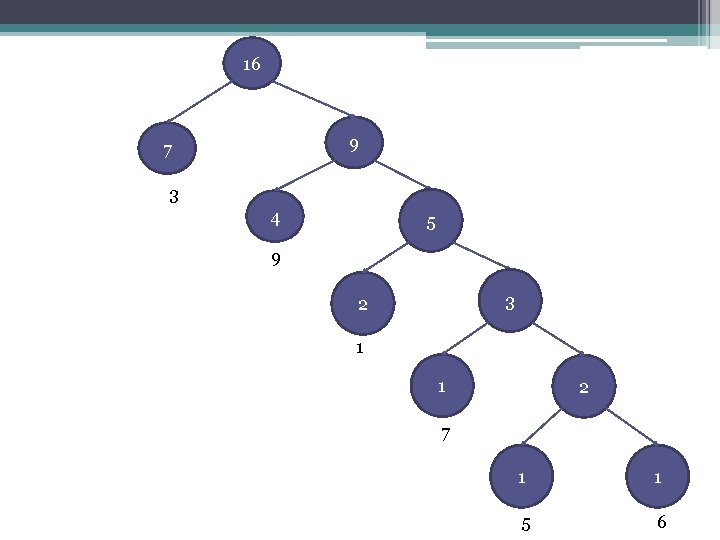

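Continue merging: 9 (frequency 4) joins the node of frequency 5 to form a node of frequency 9, and finally 3 (frequency 7) joins that node to form the root. The root's frequency, 16, equals the total number of pixels.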
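The merge procedure walked through above can be sketched with Python's `heapq` priority queue. `build_huffman_tree` is a hypothetical helper; leaves are the intensities themselves and internal nodes are `(left, right)` tuples. The slides do not say how frequency ties are broken, so the tie-break here is an assumption chosen to reproduce the drawn tree: leaves are ordered by (frequency, intensity), and a newly merged node wins a tie against a leaf. Other tie-breaks can give a different tree shape, but every Huffman tree for this histogram has the same total code length.

```python
import heapq
from itertools import count

def build_huffman_tree(freqs):
    """Build a Huffman tree from {symbol: frequency}.

    Returns (tree, parent_frequencies_in_merge_order), where leaves are
    symbols and internal nodes are (left, right) tuples.
    """
    # Leaves get tie-break keys 0, 1, 2, ... in (frequency, symbol) order.
    leaves = sorted(freqs.items(), key=lambda kv: (kv[1], kv[0]))
    heap = [(f, i, sym) for i, (sym, f) in enumerate(leaves)]
    heapq.heapify(heap)

    # Assumption: internal nodes get keys -1, -2, ..., so on a frequency
    # tie the newest merged node is popped first (matches the slides).
    internal_key = count(-1, -1)
    merges = []
    while len(heap) > 1:
        f_lo, _, lo = heapq.heappop(heap)  # lower frequency -> left child
        f_hi, _, hi = heapq.heappop(heap)  # higher frequency -> right child
        parent = f_lo + f_hi               # parent = sum of the two children
        merges.append(parent)
        heapq.heappush(heap, (parent, next(internal_key), (lo, hi)))
    return heap[0][2], merges

tree, merges = build_huffman_tree({1: 2, 3: 7, 5: 1, 6: 1, 7: 1, 9: 4})
print(tree)    # (3, (9, (1, (7, (5, 6)))))
print(merges)  # [2, 3, 5, 9, 16]
```

The unique integer tie-break key in each heap entry also keeps `heapq` from ever comparing a leaf with a tuple node.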

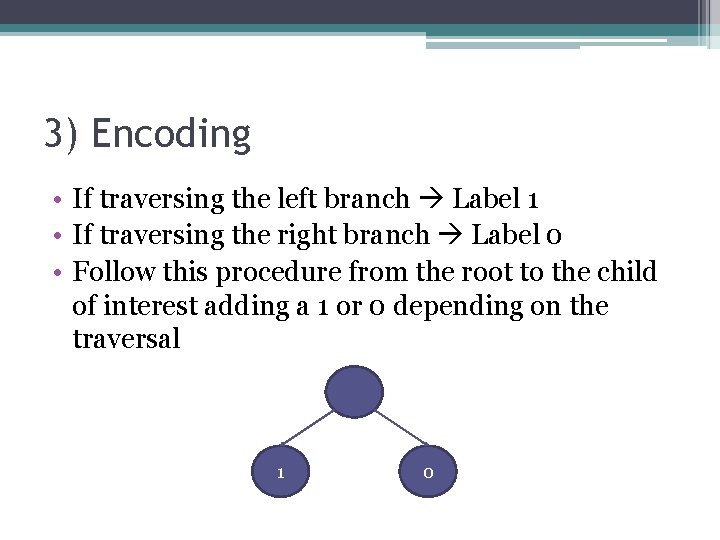

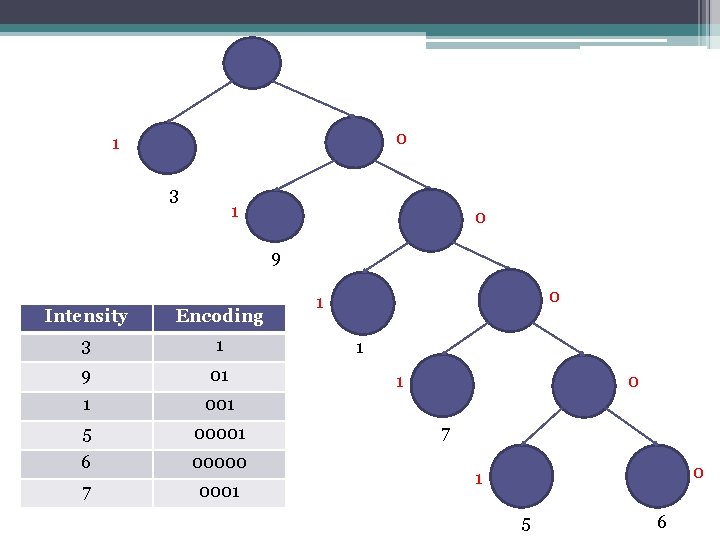

3) Encoding
• Traversing a left branch adds a 1 to the code
• Traversing a right branch adds a 0 to the code
• Follow the path from the root down to the leaf of interest, appending a 1 or 0 at each branch; the finished bit string is that intensity's code
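The root-to-leaf labelling rule can be sketched as a short recursive walk. `assign_codes` is an illustrative name, and the tree is the slides' final tree written by hand as nested `(left, right)` tuples with intensities as leaves.

```python
def assign_codes(tree, prefix=""):
    """Return {symbol: code} by walking from the root to every leaf.

    Slide convention: a left branch appends '1', a right branch appends '0'.
    Leaves are symbols; internal nodes are (left, right) tuples.
    """
    if not isinstance(tree, tuple):  # reached a leaf (an intensity value)
        return {tree: prefix}
    left, right = tree
    codes = assign_codes(left, prefix + "1")
    codes.update(assign_codes(right, prefix + "0"))
    return codes

# The slides' final tree as nested (left, right) tuples.
tree = (3, (9, (1, (7, (5, 6)))))
codes = assign_codes(tree)
print(codes)  # {3: '1', 9: '01', 1: '001', 7: '0001', 5: '00001', 6: '00000'}
```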

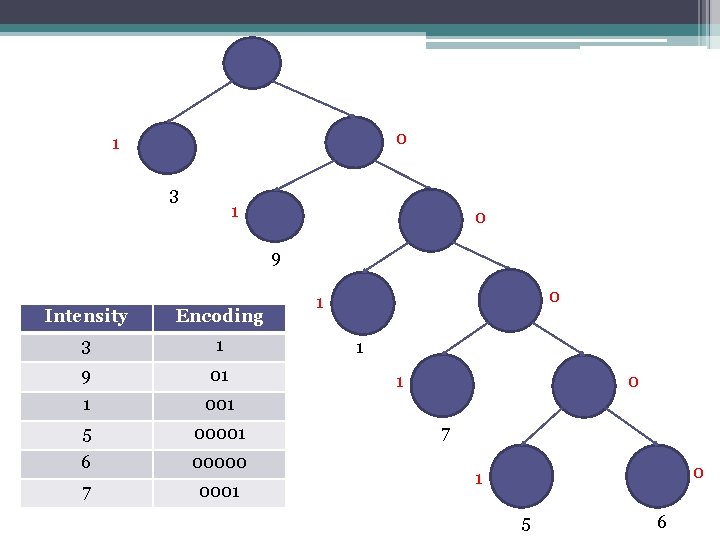

Resulting codes:

| Intensity | Encoding |
|---|---|
| 3 | 1 |
| 9 | 01 |
| 1 | 001 |
| 7 | 0001 |
| 5 | 00001 |
| 6 | 00000 |
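Encoding the image then just replaces each pixel with its code and concatenates the results. A minimal sketch, assuming a hypothetical pixel order whose counts match the histogram (the real layout is only in the slide figure); `encode` and `CODES` are illustrative names. Because the output is a concatenation of codes, its length depends only on the histogram, not on pixel order.

```python
# Code table from the slide (left branch = 1, right branch = 0).
CODES = {3: "1", 9: "01", 1: "001", 7: "0001", 5: "00001", 6: "00000"}

def encode(pixels, codes=CODES):
    """Replace every pixel with its code and concatenate into one bit string."""
    return "".join(codes[p] for p in pixels)

# Hypothetical pixel order: only the counts match the slide's histogram.
pixels = [3, 3, 3, 3, 3, 3, 3, 9, 9, 9, 9, 1, 1, 5, 6, 7]
bits = encode(pixels)
print(len(bits), "bits")  # 35 bits
```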

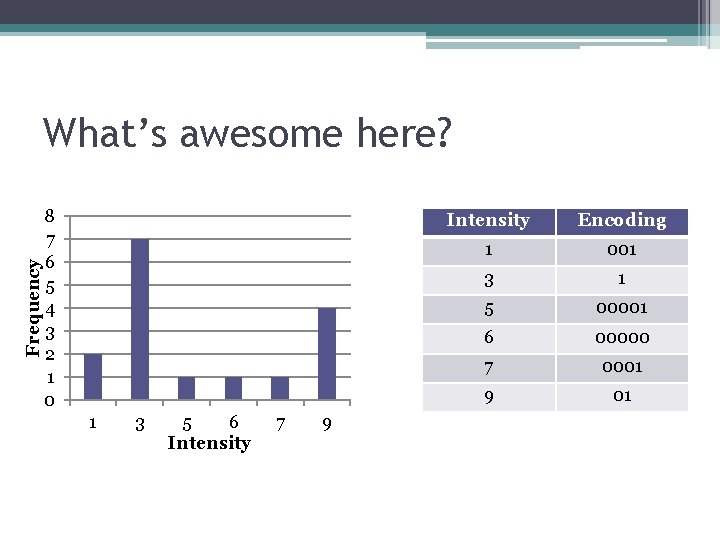

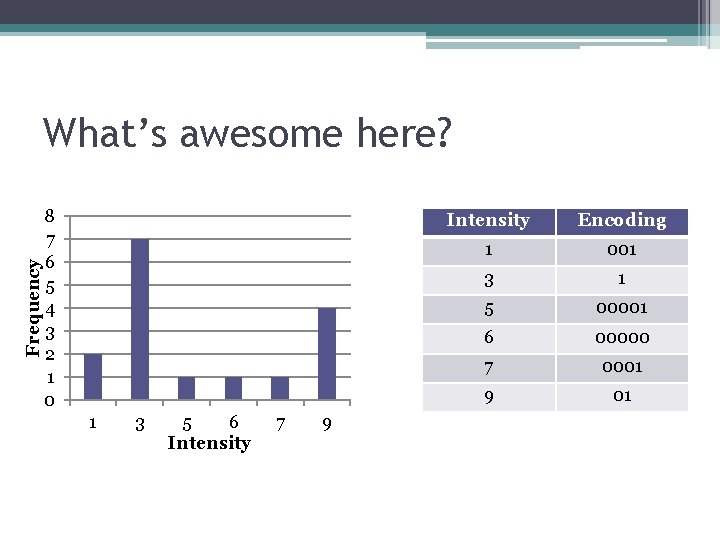

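What’s awesome here? Compare the histogram with the code table: the most frequent intensity, 3 (7 pixels), gets the shortest code (1 bit), while the rarest intensities, 5, 6 and 7 (one pixel each), get the longest codes (4 or 5 bits).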
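A quick worked check of the savings, using the histogram counts and the code lengths from the table (the fixed-length comparison assumes every pixel gets the same code length, which for 6 distinct intensities is at least ⌈log₂ 6⌉ = 3 bits):

$$
\text{Huffman: } 7(1) + 4(2) + 2(3) + 1(4) + 1(5) + 1(5) = 35 \text{ bits}, \qquad \frac{35}{16} \approx 2.19 \text{ bits/pixel}
$$

$$
\text{Fixed length: } 16 \times \lceil \log_2 6 \rceil = 16 \times 3 = 48 \text{ bits}
$$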

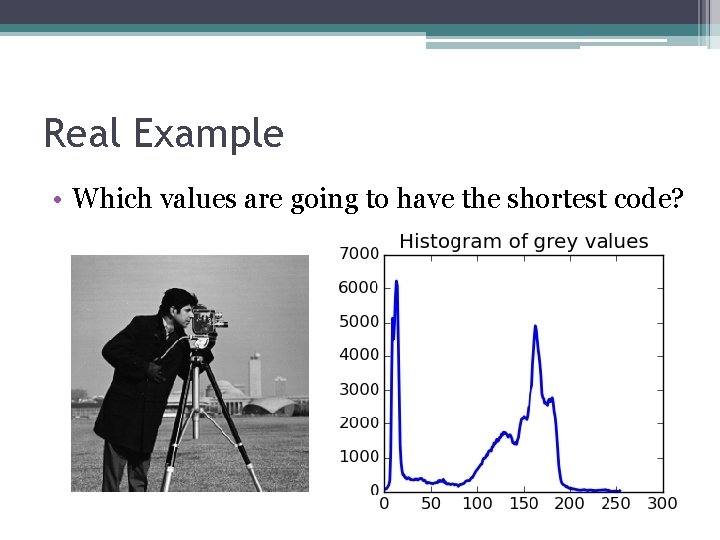

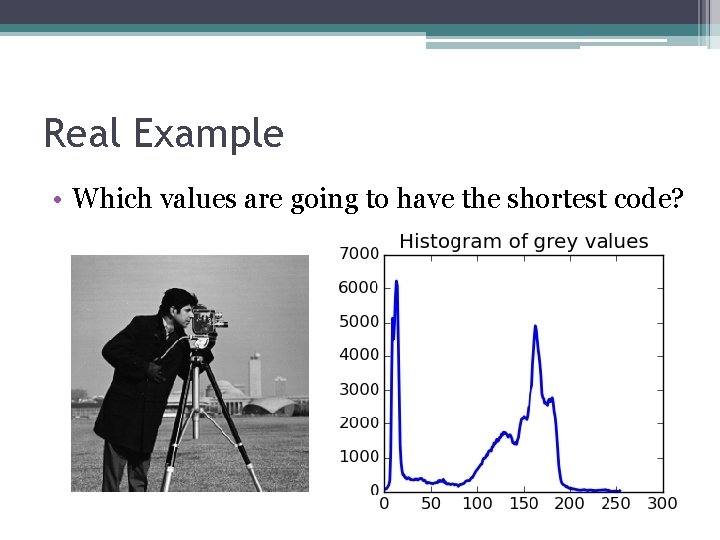

Real Example
• Which values are going to have the shortest code?

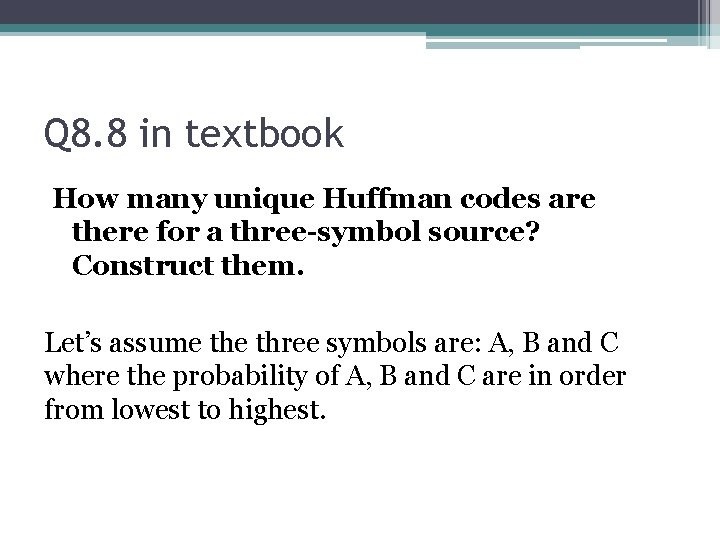

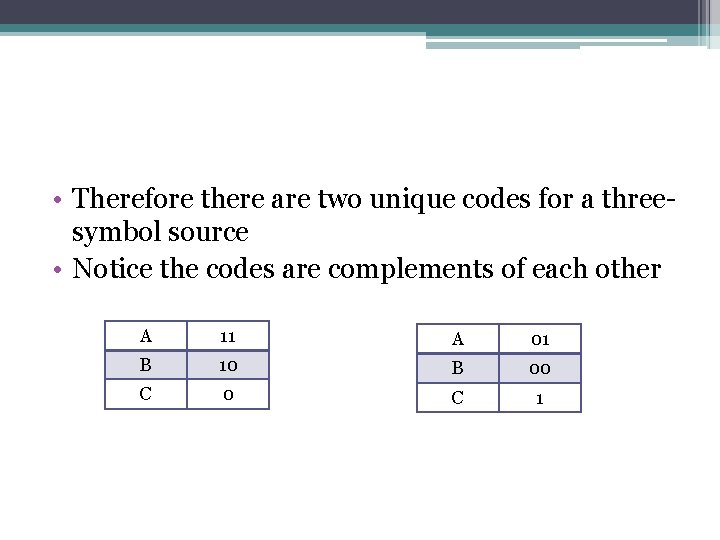

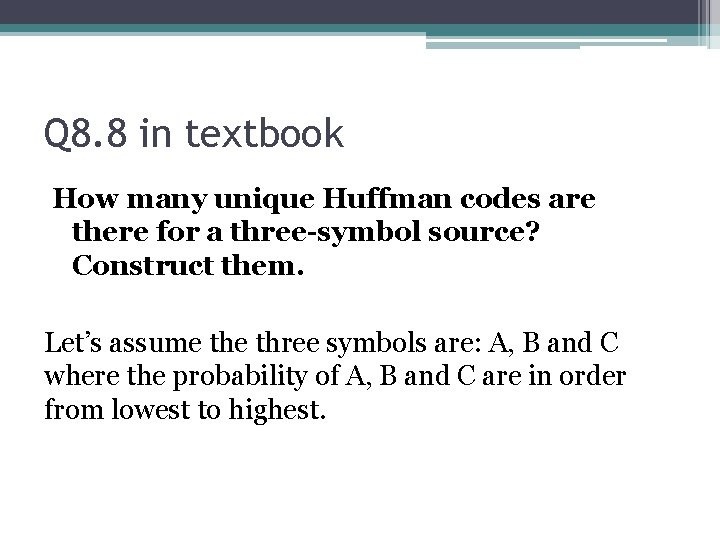

Q8.8 in textbook: How many unique Huffman codes are there for a three-symbol source? Construct them.
Assume the three symbols are A, B and C, with probabilities in increasing order (A lowest, C highest).

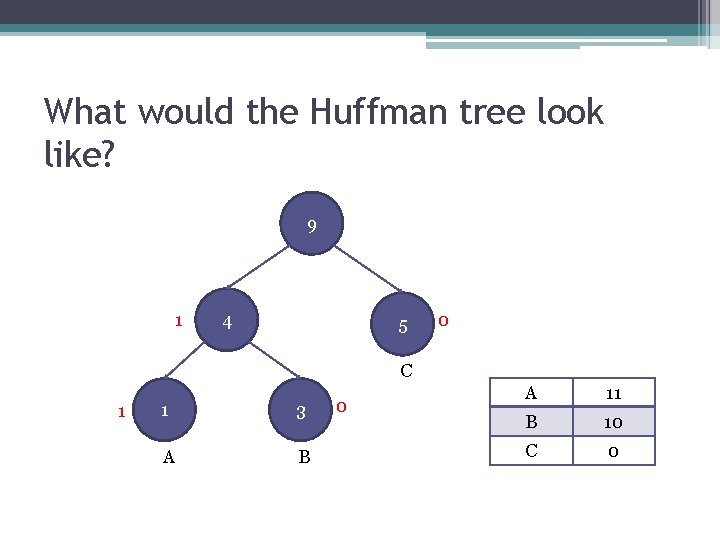

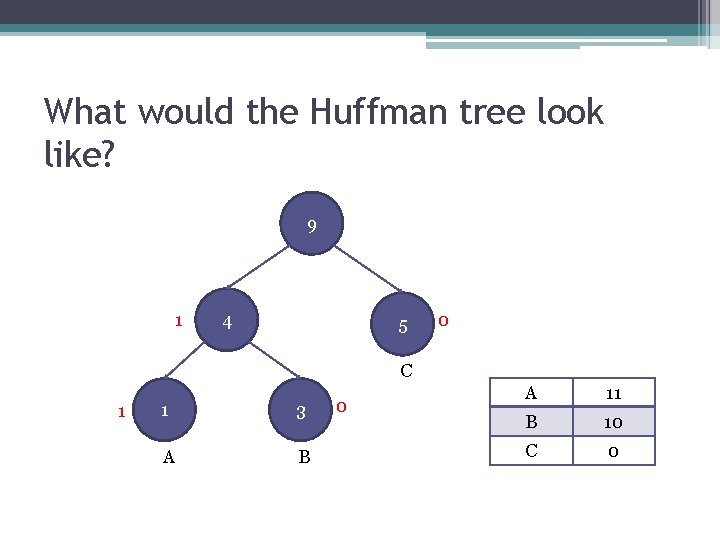

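What would the Huffman tree look like? Example frequencies: A = 1, B = 3, C = 5 (total 9).
• A and B merge first into a node of frequency 4 (A on the left, labelled 1; B on the right, labelled 0)
• That node (4) is lighter than C (5), so it becomes the left child (1) and C the right child (0)
• Codes: A 11, B 10, C 0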

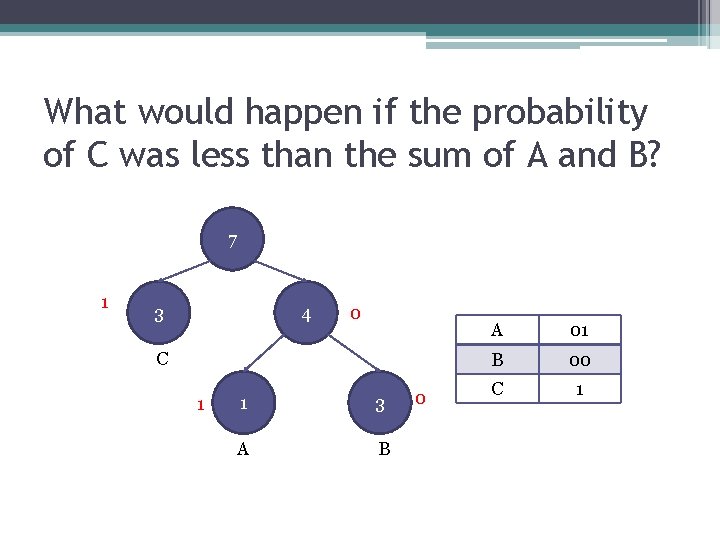

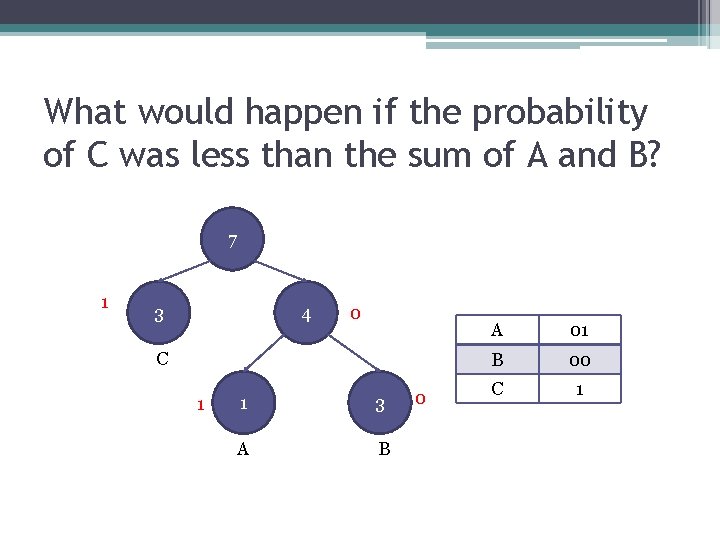

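What would happen if the probability of C were less than the sum of the probabilities of A and B? Example frequencies: A = 1, B = 3, C = 3 (total 7).
• A and B still merge first into a node of frequency 4
• C (3) is now lighter than that node, so C becomes the left child (1) and the A + B node the right child (0)
• Codes: A 01, B 00, C 1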

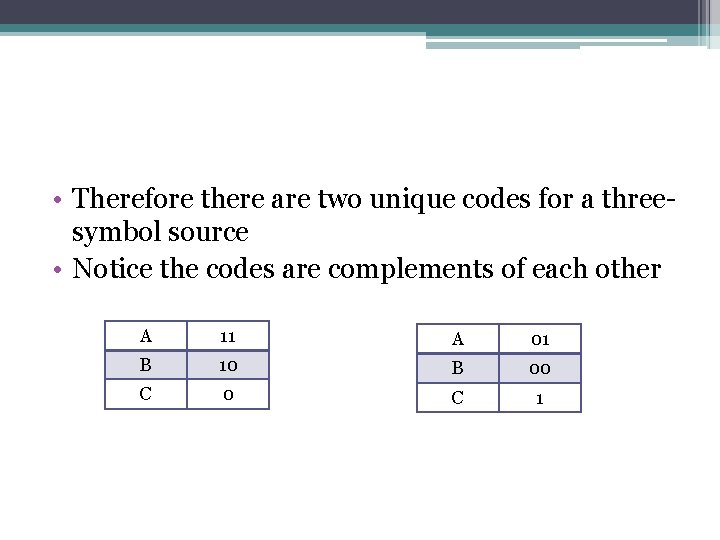

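• Therefore there are two unique codes for a three-symbol source
• Notice the codes are complements of each other: flipping every bit of the first codeword set {11, 10, 0} gives the second set {00, 01, 1}
  ▫ C heavier than A + B: A 11, B 10, C 0
  ▫ C lighter than A + B: A 01, B 00, C 1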
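Both cases can be checked with a small sketch. `three_symbol_code` is a hypothetical helper that hard-codes the slides' conventions (lower frequency on the left, left branch = 1, right branch = 0). The slides do not cover the tie p_C = p_A + p_B; this sketch assumes C goes on the left in that case.

```python
def three_symbol_code(p_a, p_b, p_c):
    """Huffman code for symbols A, B, C with p_a <= p_b <= p_c."""
    if not (p_a <= p_b <= p_c):
        raise ValueError("probabilities must be in increasing order A, B, C")
    # A and B are the two least probable, so they merge first:
    # A (lower) is the left child -> '1', B is the right child -> '0'.
    ab = {"A": "1", "B": "0"}
    if p_c > p_a + p_b:
        # The A+B node is lighter: it goes left ('1'), C goes right ('0').
        return {"A": "1" + ab["A"], "B": "1" + ab["B"], "C": "0"}
    # C is lighter (or tied, by assumption): C goes left ('1'),
    # the A+B node goes right ('0').
    return {"A": "0" + ab["A"], "B": "0" + ab["B"], "C": "1"}

print(three_symbol_code(1, 3, 5))  # {'A': '11', 'B': '10', 'C': '0'}
print(three_symbol_code(1, 3, 3))  # {'A': '01', 'B': '00', 'C': '1'}
```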

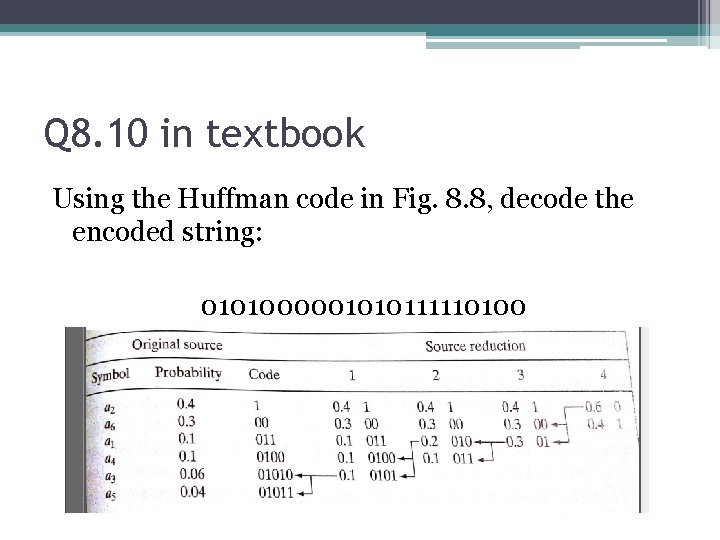

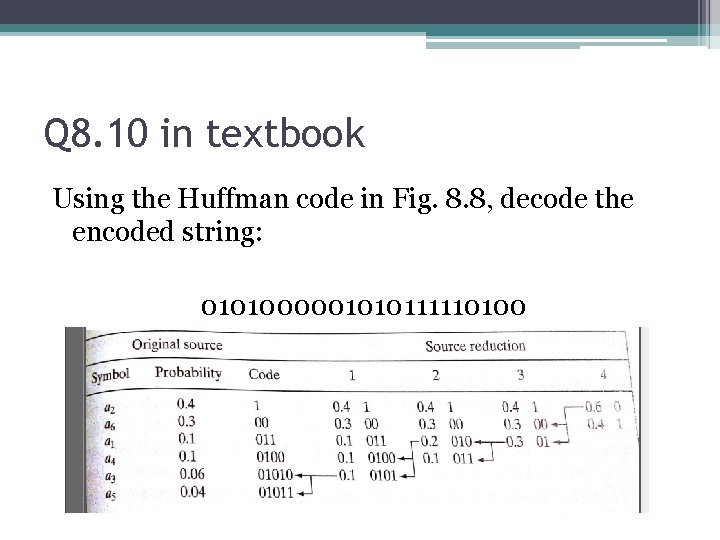

Q8.10 in textbook: Using the Huffman code in Fig. 8.8, decode the encoded string 0101000001010111110100.

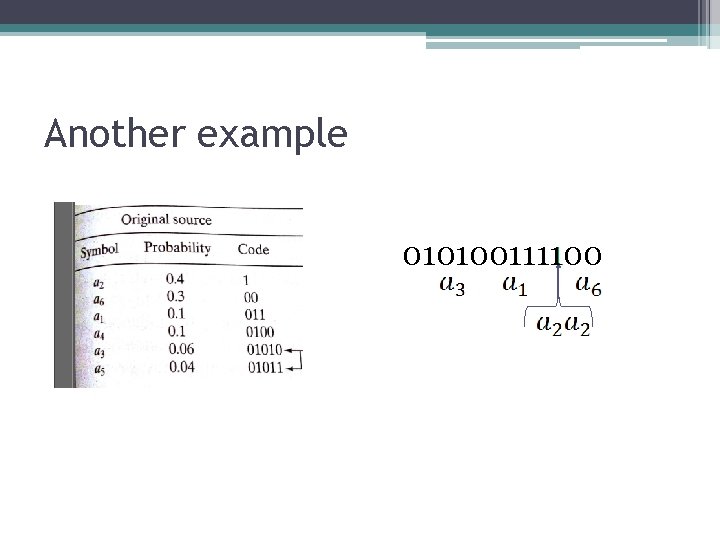

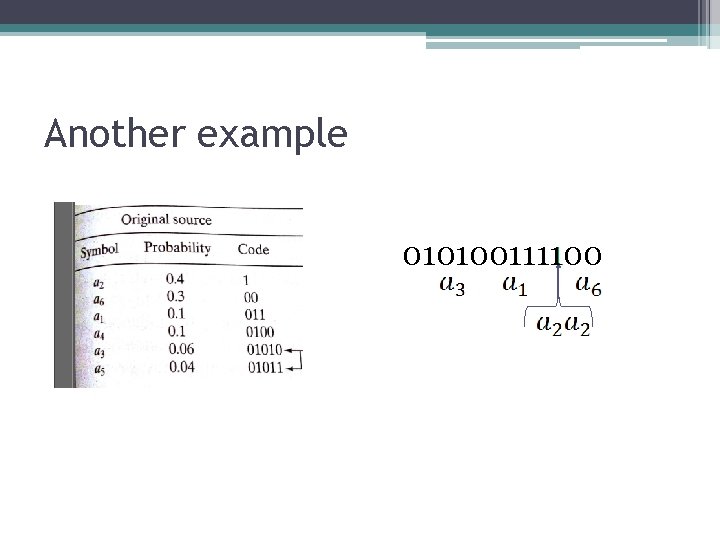

Another example: 010100111100
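Decoding works because no codeword is a prefix of another, so the bits can be read left to right and a symbol emitted as soon as the bits collected so far match a codeword. The sketch below (hypothetical `decode` helper) uses the 6-intensity code table from the image example, since the table in Fig. 8.8 is not reproduced in these slides; to work the textbook strings, substitute that table for `CODES`.

```python
# Code table from the image example (left branch = 1, right branch = 0).
CODES = {3: "1", 9: "01", 1: "001", 7: "0001", 5: "00001", 6: "00000"}

def decode(bits, codes=CODES):
    """Decode a bit string produced with a prefix-free code table."""
    lookup = {code: sym for sym, code in codes.items()}
    out, current = [], ""
    for b in bits:
        current += b
        if current in lookup:  # first match is correct: codes are prefix-free
            out.append(lookup[current])
            current = ""
    if current:
        raise ValueError(f"leftover bits {current!r} do not form a codeword")
    return out

print(decode("10100100010000100000"))  # [3, 9, 1, 7, 5, 6]
```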