

- Slides: 50

HRTF

HRTF 8

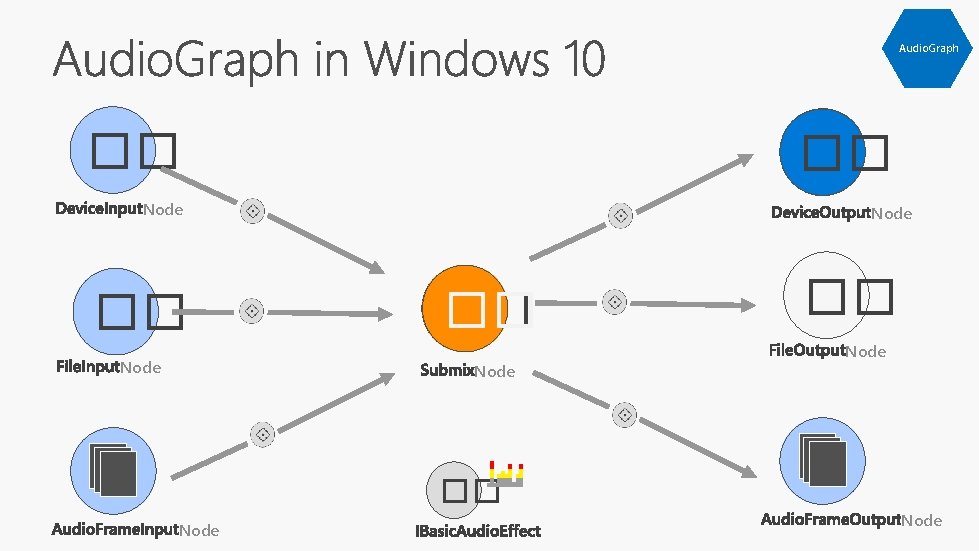

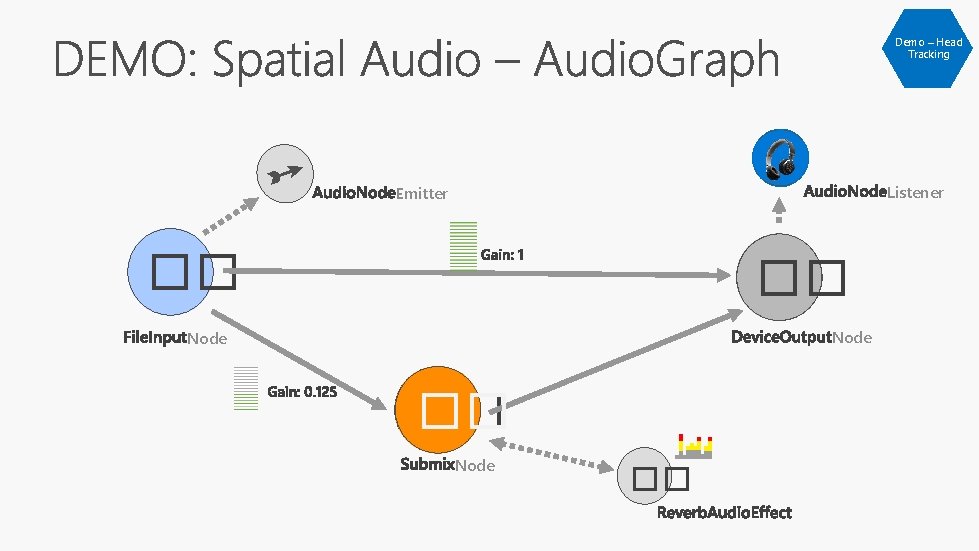

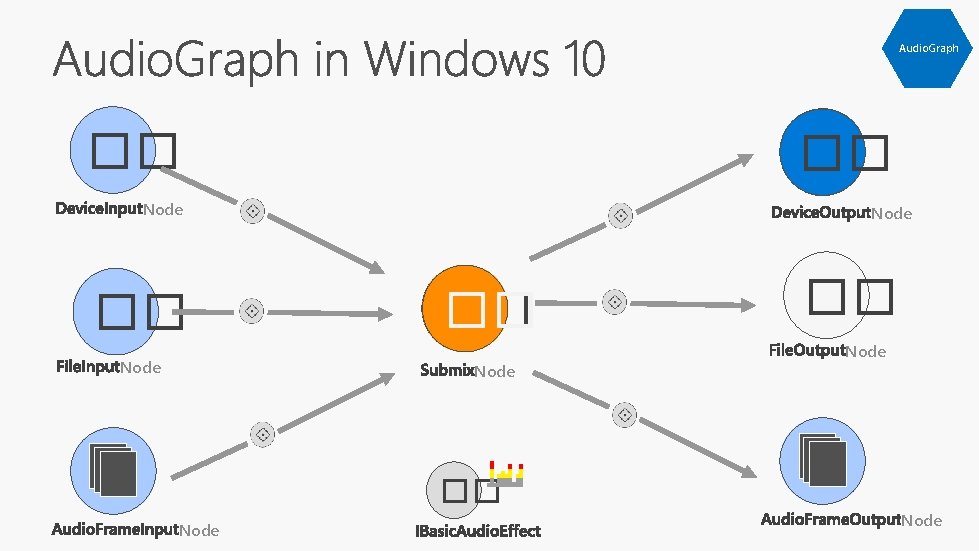

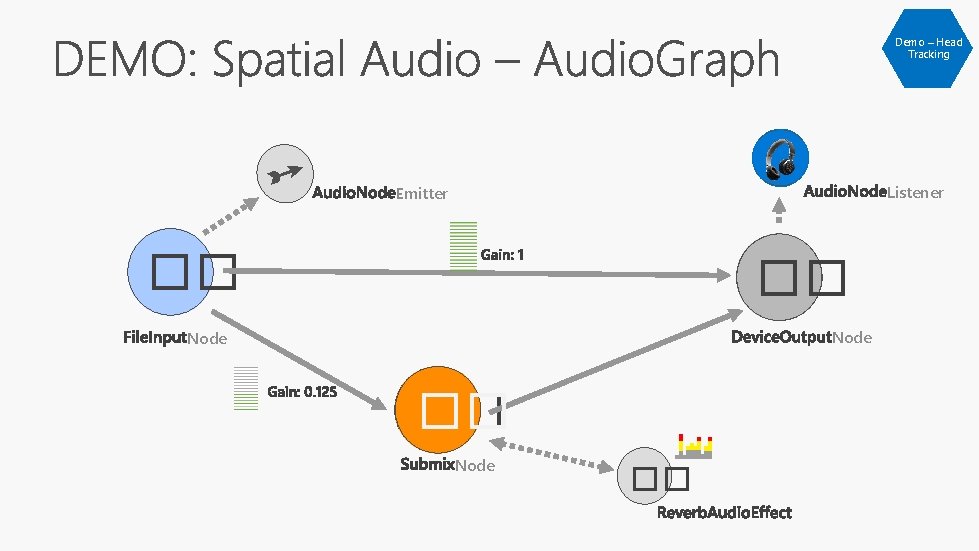

AudioGraph (diagram: nodes connected to one another)

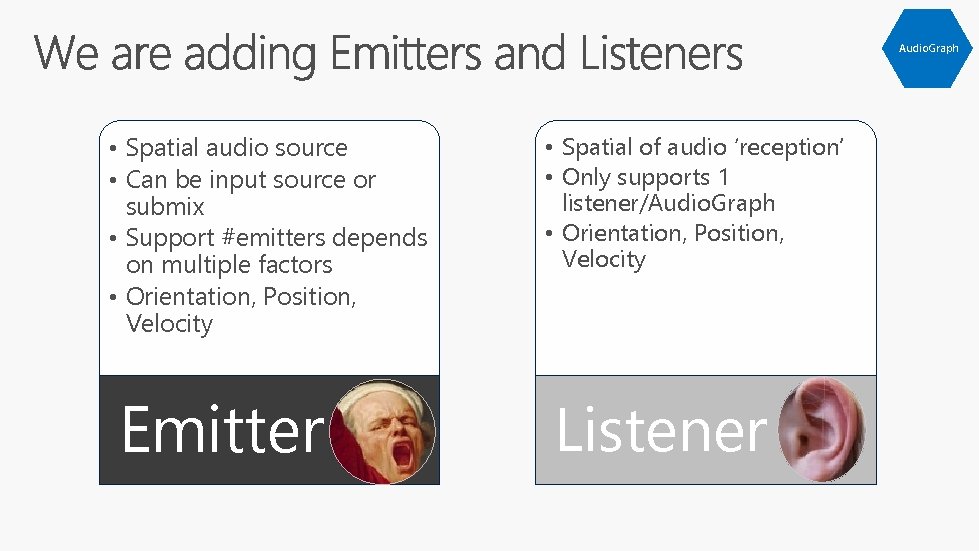

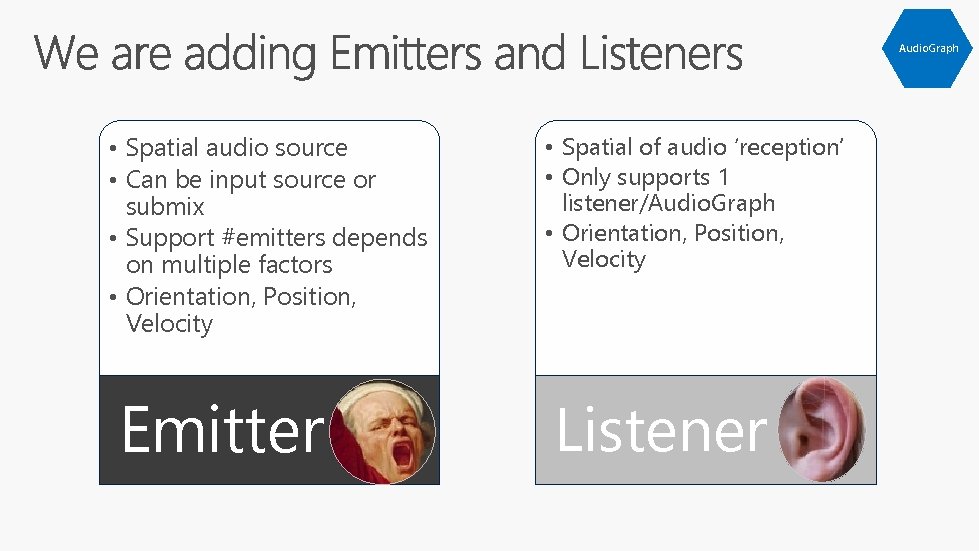

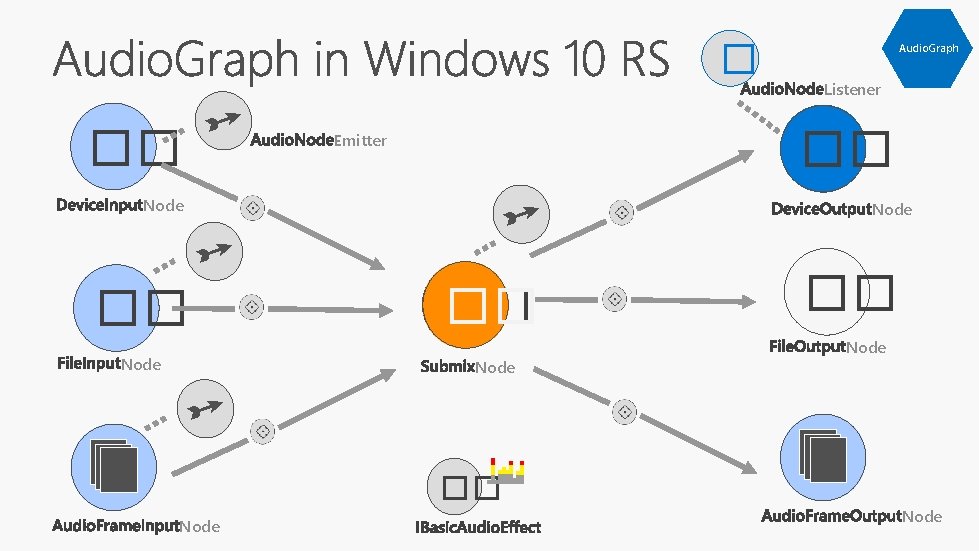

AudioGraph

Emitter
- Spatial audio source
- Can be an input source or a submix
- Number of supported emitters depends on multiple factors
- Orientation, Position, Velocity

Listener
- Spatial point of audio 'reception'
- Only 1 listener is supported per AudioGraph
- Orientation, Position, Velocity

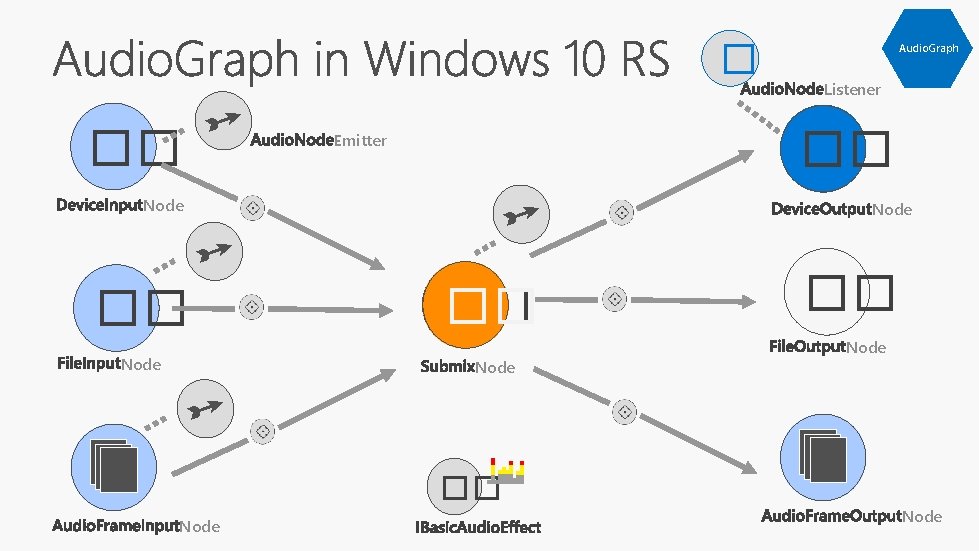

AudioGraph (diagram: Listener, Emitter, Nodes)

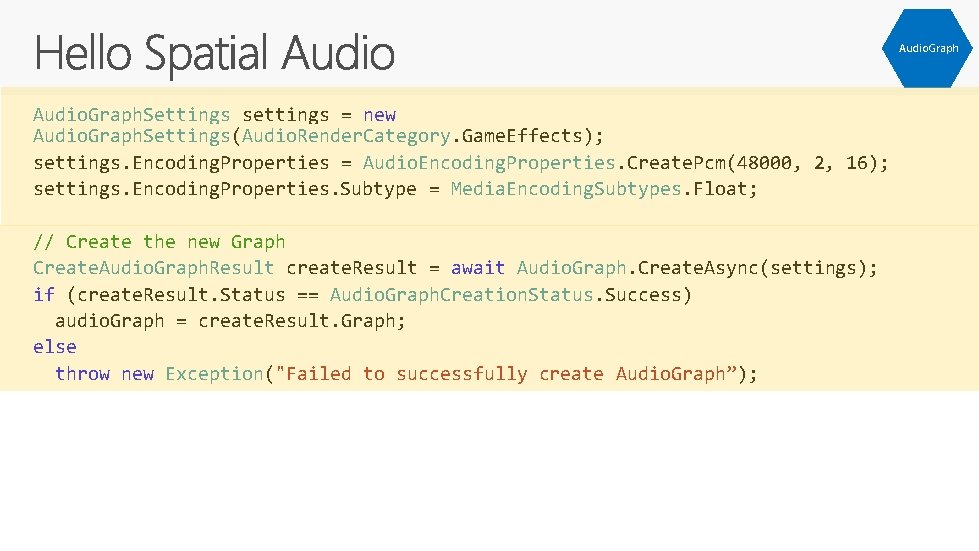

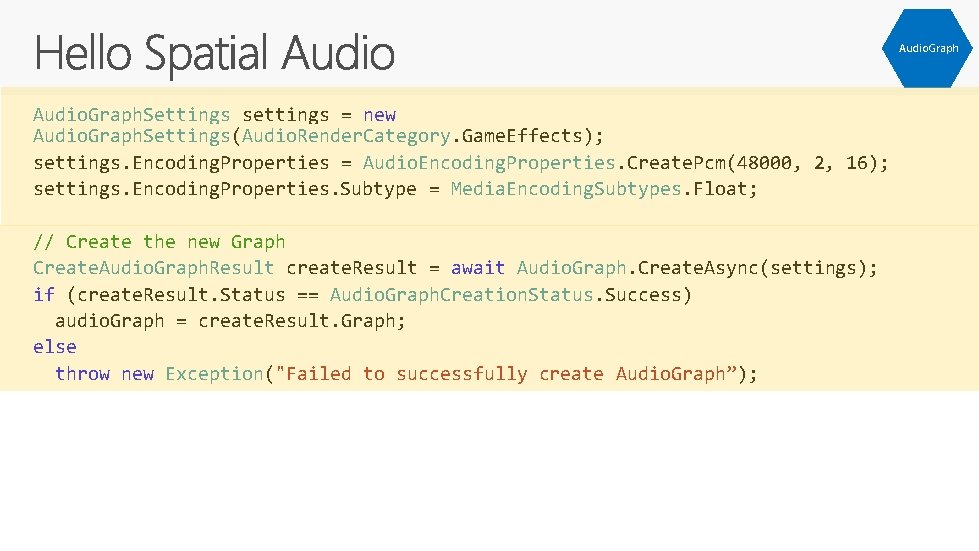

    AudioGraphSettings settings = new AudioGraphSettings(AudioRenderCategory.GameEffects);
    settings.EncodingProperties = AudioEncodingProperties.CreatePcm(48000, 2, 16);
    settings.EncodingProperties.Subtype = MediaEncodingSubtypes.Float;

    // Create the new Graph
    CreateAudioGraphResult createResult = await AudioGraph.CreateAsync(settings);
    if (createResult.Status == AudioGraphCreationStatus.Success)
    {
        audioGraph = createResult.Graph;
    }
    else
    {
        throw new Exception("Failed to successfully create AudioGraph");
    }

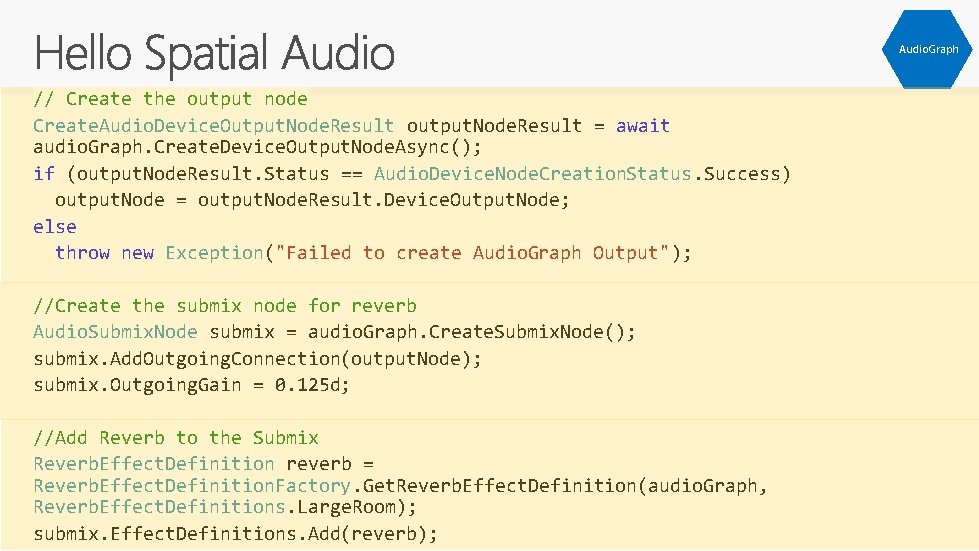

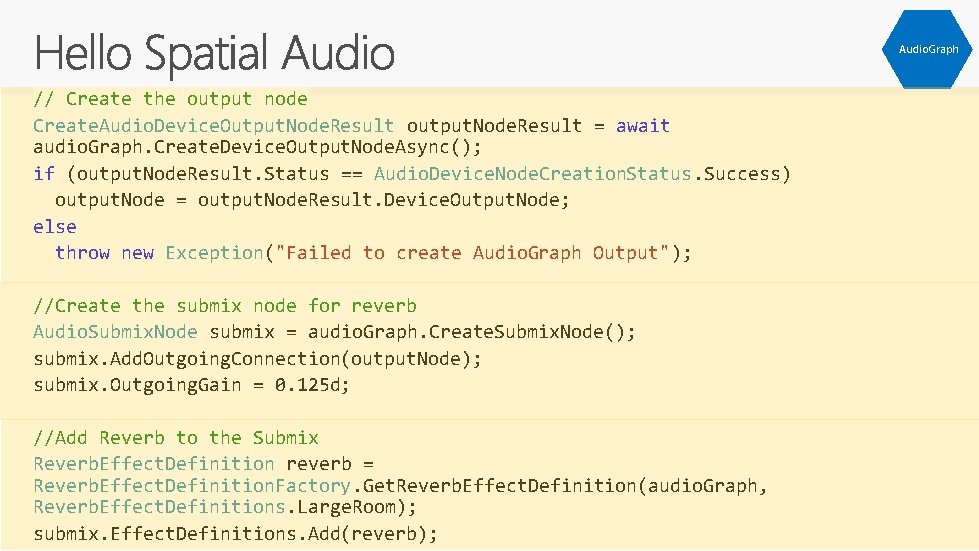

AudioGraph

    // Create the output node
    CreateAudioDeviceOutputNodeResult outputNodeResult = await audioGraph.CreateDeviceOutputNodeAsync();
    if (outputNodeResult.Status == AudioDeviceNodeCreationStatus.Success)
    {
        outputNode = outputNodeResult.DeviceOutputNode;
    }
    else
    {
        throw new Exception("Failed to create AudioGraph Output");
    }

    // Create the submix node for reverb
    AudioSubmixNode submix = audioGraph.CreateSubmixNode();
    submix.AddOutgoingConnection(outputNode);
    submix.OutgoingGain = 0.125d;

    // Add Reverb to the Submix
    ReverbEffectDefinition reverb = ReverbEffectDefinitionFactory.GetReverbEffectDefinition(audioGraph, ReverbEffectDefinitions.LargeRoom);
    submix.EffectDefinitions.Add(reverb);
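The submix gain of 0.125 is easier to reason about in decibels. The sketch below assumes that `OutgoingGain` is a linear amplitude factor (the slides do not say so explicitly); the `Gain` class and its method names are hypothetical helpers, not part of the AudioGraph API.

```csharp
using System;

// Hypothetical helper, not part of AudioGraph.
public static class Gain
{
    // Convert a linear amplitude factor to decibels (assumes amplitude, not power).
    public static double LinearToDecibels(double linear) => 20.0 * Math.Log10(linear);

    // Convert decibels back to a linear amplitude factor.
    public static double DecibelsToLinear(double decibels) => Math.Pow(10.0, decibels / 20.0);
}
```

Under that assumption, a gain of 0.125 (one eighth) corresponds to roughly -18 dB, so the reverb submix sits well below the direct signal.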

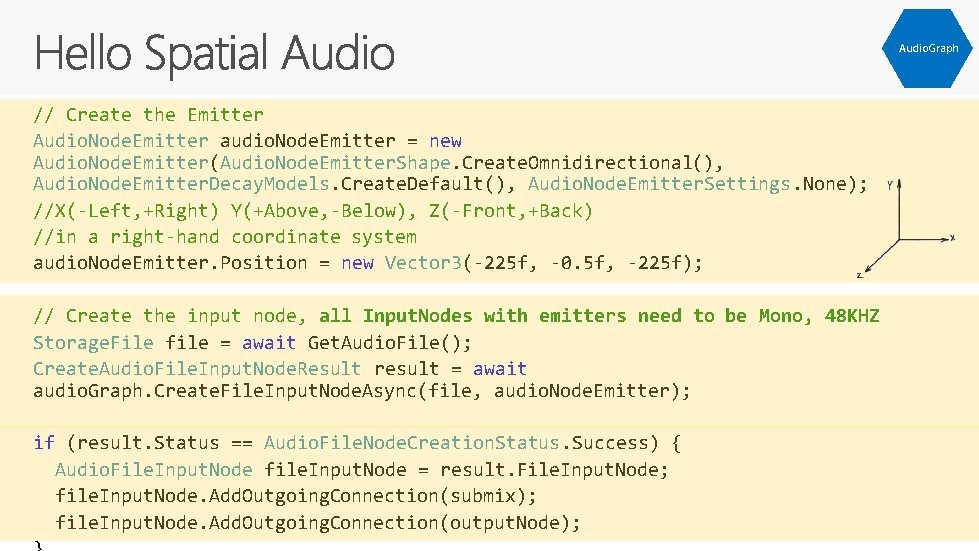

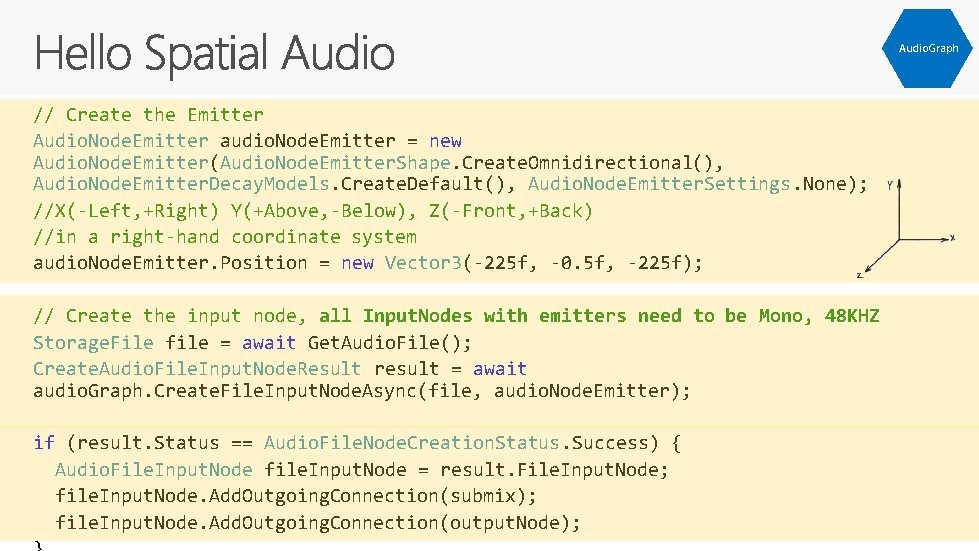

AudioGraph

    // Create the Emitter
    AudioNodeEmitter audioNodeEmitter = new AudioNodeEmitter(
        AudioNodeEmitterShape.CreateOmnidirectional(),
        AudioNodeEmitterDecayModels.CreateDefault(),
        AudioNodeEmitterSettings.None);

    // X(-Left, +Right), Y(+Above, -Below), Z(-Front, +Back)
    // in a right-hand coordinate system
    audioNodeEmitter.Position = new Vector3(-225f, -0.5f, -225f);

    // Create the input node; all InputNodes with emitters need to be mono, 48 kHz
    StorageFile file = await GetAudioFile();
    CreateAudioFileInputNodeResult result = await audioGraph.CreateFileInputNodeAsync(file, audioNodeEmitter);
    if (result.Status == AudioFileNodeCreationStatus.Success)
    {
        AudioFileInputNode fileInputNode = result.FileInputNode;
        fileInputNode.AddOutgoingConnection(submix);
        fileInputNode.AddOutgoingConnection(outputNode);
    }
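The coordinate convention in the comments above, X(-Left, +Right), Y(+Above, -Below), Z(-Front, +Back), can be made concrete with a small helper that turns "angle and distance from the listener" into a position vector. This is only a sketch: `EmitterPlacement` and `FromAzimuth` are hypothetical names, and measuring azimuth clockwise from straight ahead is an assumption chosen for illustration.

```csharp
using System;
using System.Numerics;

// Hypothetical helper, not part of AudioGraph.
public static class EmitterPlacement
{
    // Returns a position at the given azimuth (degrees, clockwise from straight
    // ahead when viewed from above), distance, and height, using the slide's
    // convention: +X right, +Y above, -Z in front.
    public static Vector3 FromAzimuth(float azimuthDegrees, float distance, float height = 0f)
    {
        double radians = Math.PI * azimuthDegrees / 180.0;
        float x = (float)(distance * Math.Sin(radians));   // +X is to the right
        float z = (float)(-distance * Math.Cos(radians));  // -Z is in front
        return new Vector3(x, height, z);
    }
}
```

A result such as `EmitterPlacement.FromAzimuth(90f, 2f)` (two units directly to the right) could then be assigned to an emitter's `Position`.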

Powered by: Jabra Intelligent Headset. Advanced sensor pack and dynamic 3D audio, and an exciting new apps platform!

http://aka.ms/spatialspheredemocode
https://intelligentheadset.com/

Demo – Head Tracking (diagram: Listener, Emitter, Node)

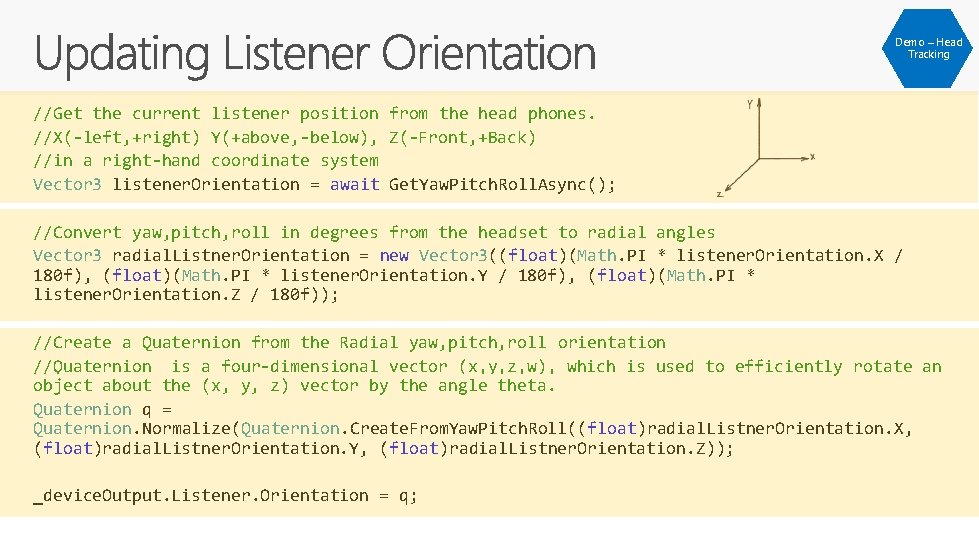

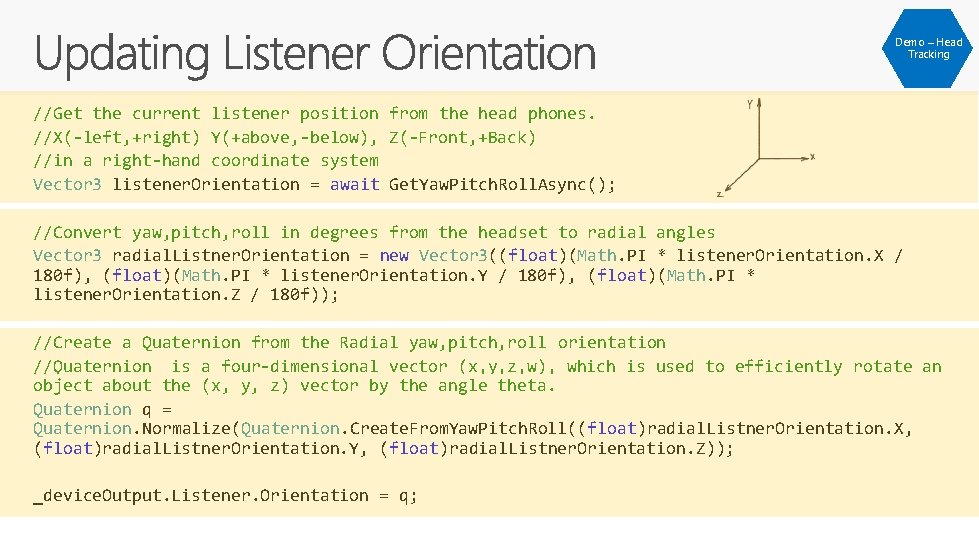

Demo – Head Tracking

    // Get the current listener orientation from the headphones.
    // X(-Left, +Right), Y(+Above, -Below), Z(-Front, +Back)
    // in a right-hand coordinate system
    Vector3 listenerOrientation = await GetYawPitchRollAsync();

    // Convert yaw, pitch, roll in degrees from the headset to radians
    Vector3 radialListenerOrientation = new Vector3(
        (float)(Math.PI * listenerOrientation.X / 180f),
        (float)(Math.PI * listenerOrientation.Y / 180f),
        (float)(Math.PI * listenerOrientation.Z / 180f));

    // Create a Quaternion from the yaw, pitch, roll orientation in radians.
    // A Quaternion is a four-dimensional vector (x, y, z, w), which is used to
    // efficiently rotate an object about the (x, y, z) vector by the angle theta.
    Quaternion q = Quaternion.Normalize(Quaternion.CreateFromYawPitchRoll(
        radialListenerOrientation.X,
        radialListenerOrientation.Y,
        radialListenerOrientation.Z));

    _deviceOutput.Listener.Orientation = q;
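To check what the degrees-to-quaternion conversion does, the same math can be run without a headset using only `System.Numerics`. The sketch below is self-contained; the `HeadTracking` class and its method names are hypothetical, and it assumes that the listener's "front" is the -Z axis, as in the coordinate comments above.

```csharp
using System;
using System.Numerics;

// Hypothetical helper, not part of AudioGraph.
public static class HeadTracking
{
    // Convert an angle in degrees to radians.
    public static float ToRadians(float degrees) => (float)(Math.PI * degrees / 180.0);

    // Build a normalized orientation quaternion from yaw, pitch, roll in degrees.
    public static Quaternion FromYawPitchRollDegrees(float yaw, float pitch, float roll)
    {
        return Quaternion.Normalize(Quaternion.CreateFromYawPitchRoll(
            ToRadians(yaw), ToRadians(pitch), ToRadians(roll)));
    }

    // Direction the listener faces after applying the orientation,
    // assuming the unrotated listener looks along -Z.
    public static Vector3 Forward(Quaternion orientation)
    {
        return Vector3.Transform(new Vector3(0f, 0f, -1f), orientation);
    }
}
```

With zero yaw, pitch, and roll the forward vector stays on -Z; a yaw of 90 degrees turns it onto the -X axis, that is, toward the listener's left in this convention.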

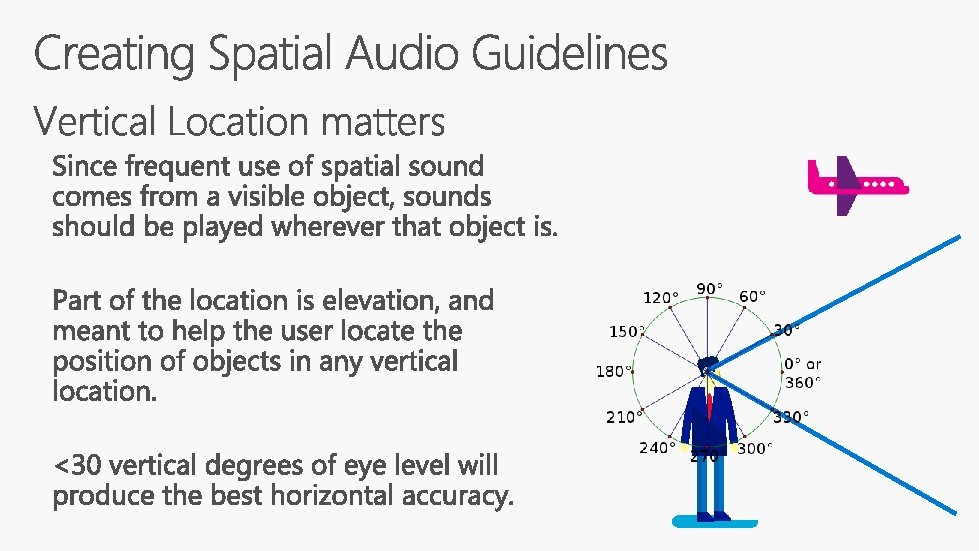

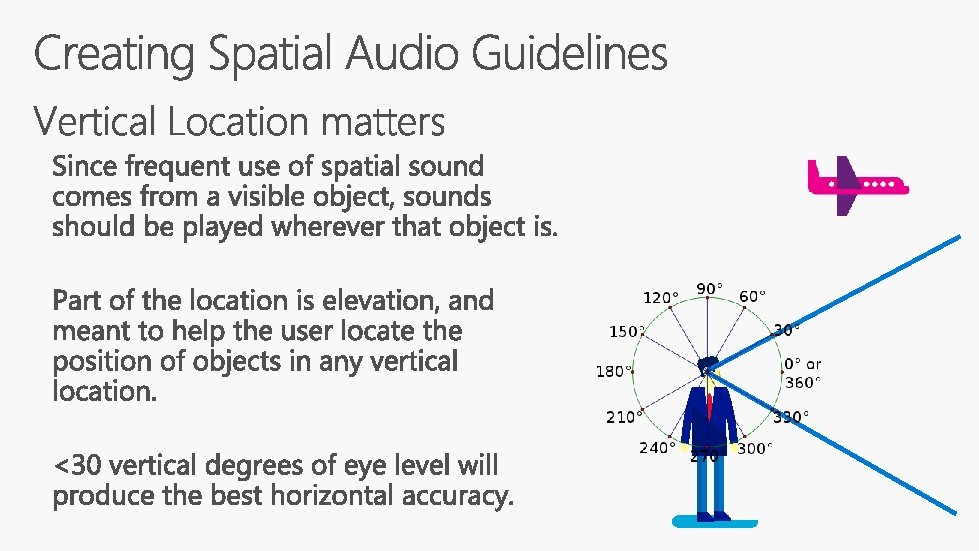

Applications of Spatial Audio

![Applications of Spatial Audio Video Applications of Spatial Audio [Video]](https://slidetodoc.com/presentation_image_h/02c6e4e748fe763fbd52b32859ad7314/image-23.jpg)

Applications of Spatial Audio [Video]

Applications of Spatial Audio

Cities Unlocked: Lighting up the World through Sound
The Guide Dogs Project

Mission
Can technology help us be more present, more human?

Strategy
Use spatial audio to present location information to users who have visual impairments, making it easy for the user to know where they are, what's around them, and how to get where they want to go.
- Increase confidence
- Increase enjoyment

Key Technologies
- Spatial audio: convey distance and direction, minimize cognitive load
- Background audio
- Headset and remote: connected to phone via BT and BLE
- Mapping services

Copyright 2008, Blender Foundation / www.bigbuckbunny.org

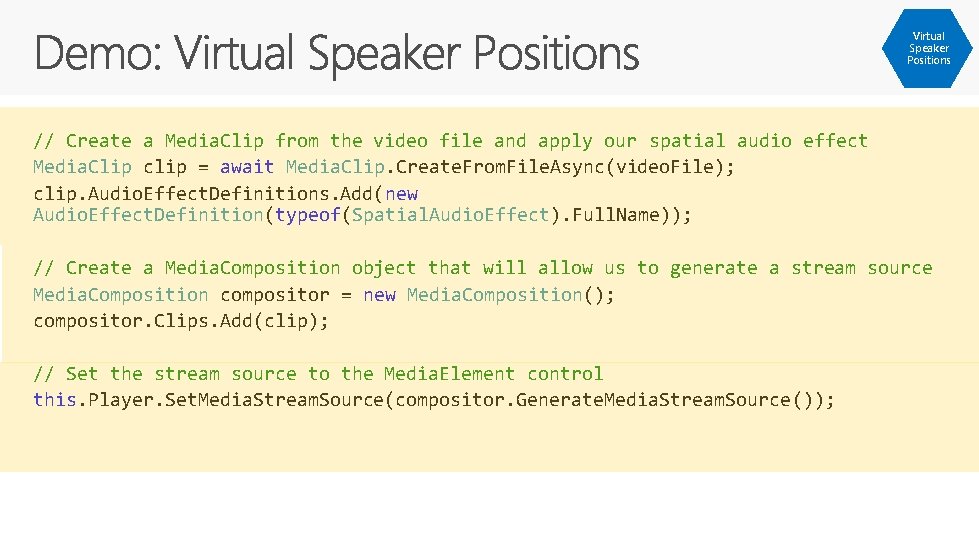

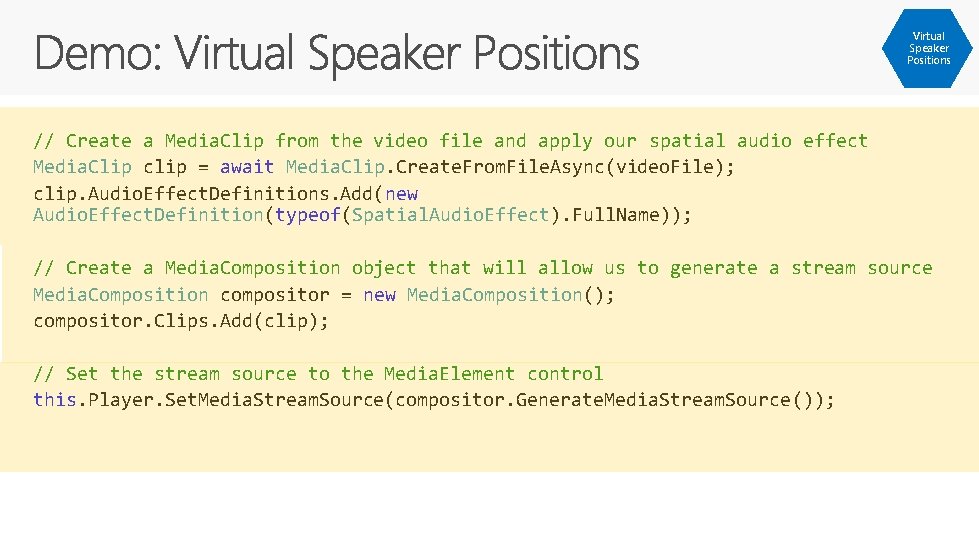

Virtual Speaker Positions (diagram: Emitter, Nodes)

Virtual Speaker Positions

    // Create a MediaClip from the video file and apply our spatial audio effect
    MediaClip clip = await MediaClip.CreateFromFileAsync(videoFile);
    clip.AudioEffectDefinitions.Add(new AudioEffectDefinition(typeof(SpatialAudioEffect).FullName));

    // Create a MediaComposition object that will allow us to generate a stream source
    MediaComposition compositor = new MediaComposition();
    compositor.Clips.Add(clip);

    // Set the stream source to the MediaElement control
    this.Player.SetMediaStreamSource(compositor.GenerateMediaStreamSource());

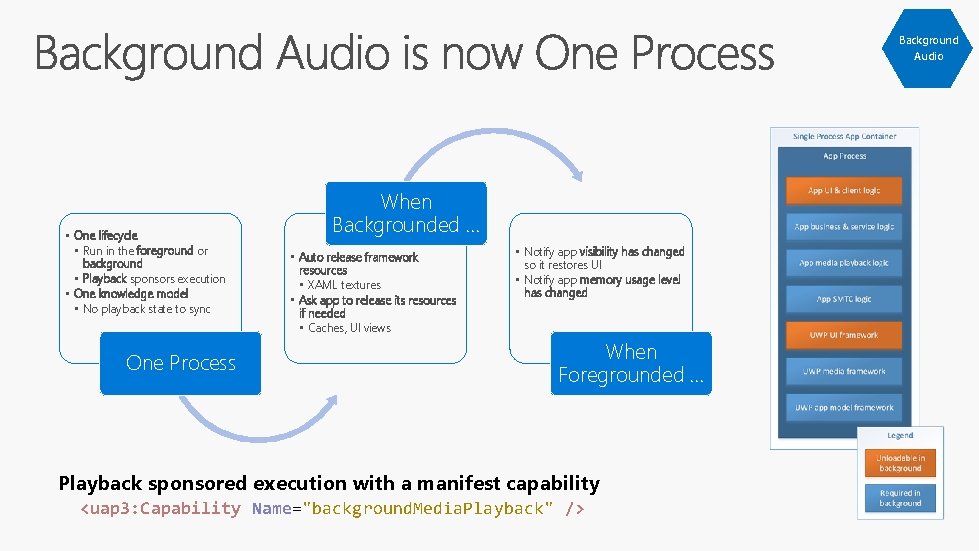

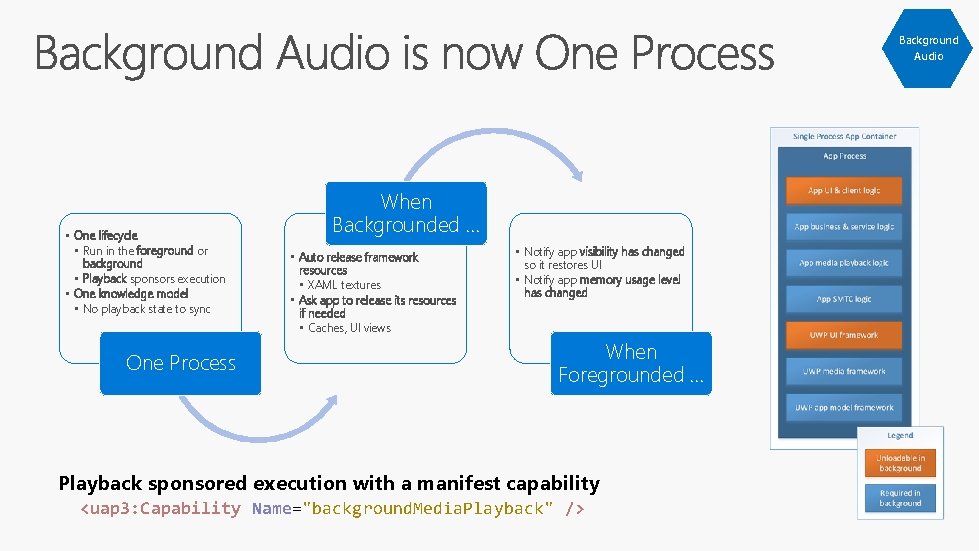

Background Audio

One Process
- One lifecycle
- Run in the foreground or background
- Playback sponsors execution
- One knowledge model
- No playback state to sync

When Backgrounded …
- Auto release framework resources
- XAML textures
- Ask app to release its resources if needed
- Caches, UI views

When Foregrounded …
- Notify app visibility has changed so it restores UI
- Notify app memory usage level has changed

Playback-sponsored execution is enabled with a manifest capability:

    <uap3:Capability Name="backgroundMediaPlayback" />

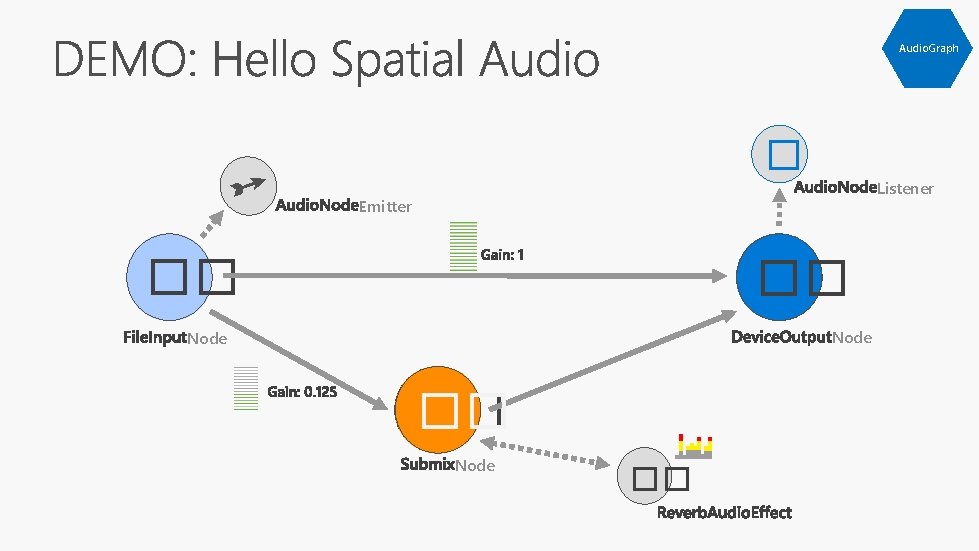

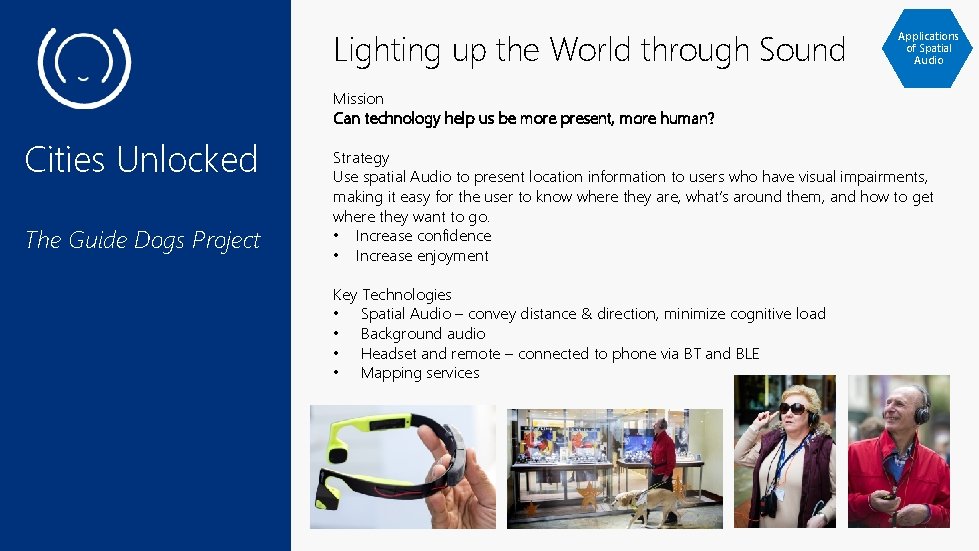

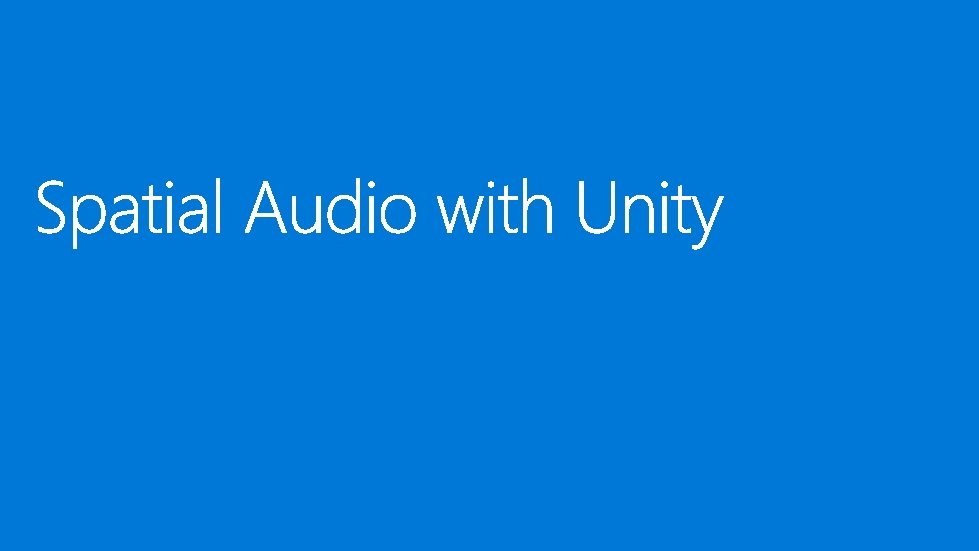

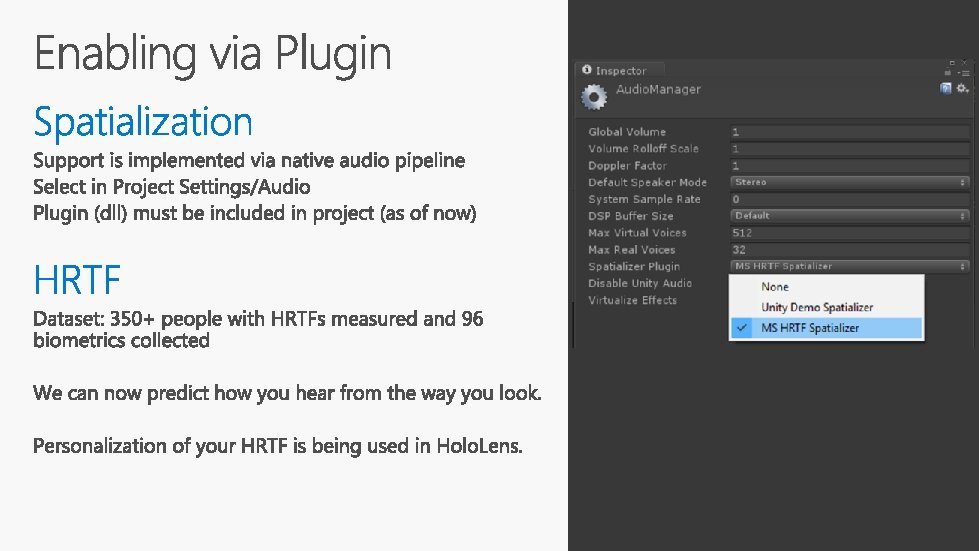

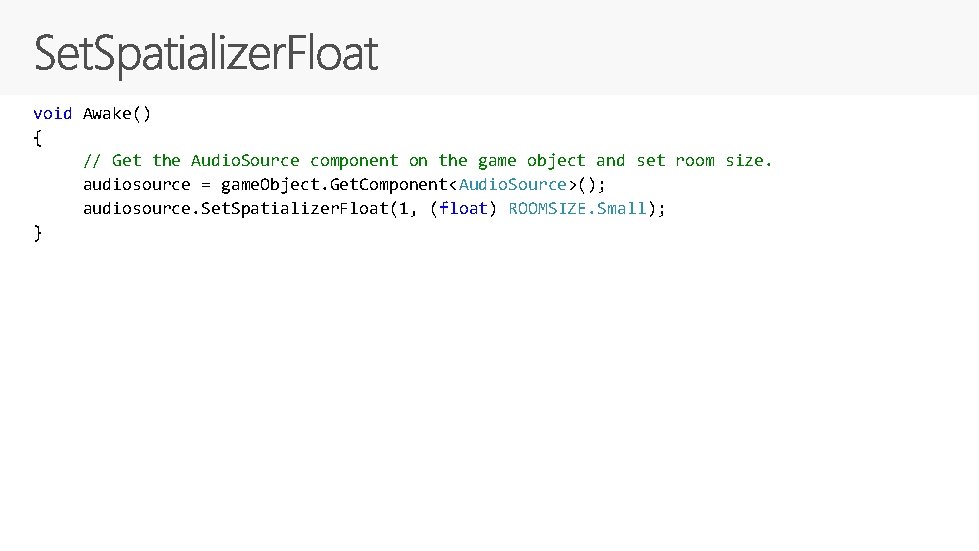

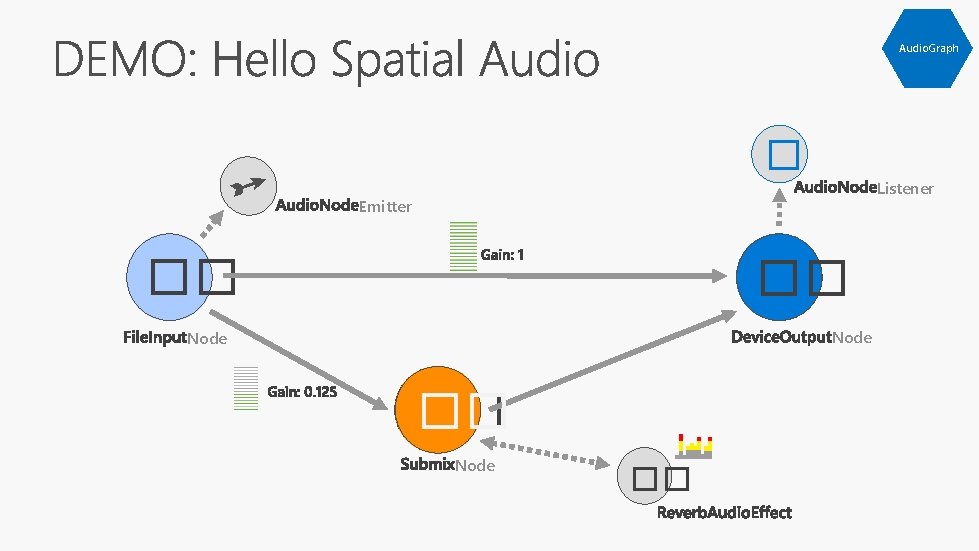

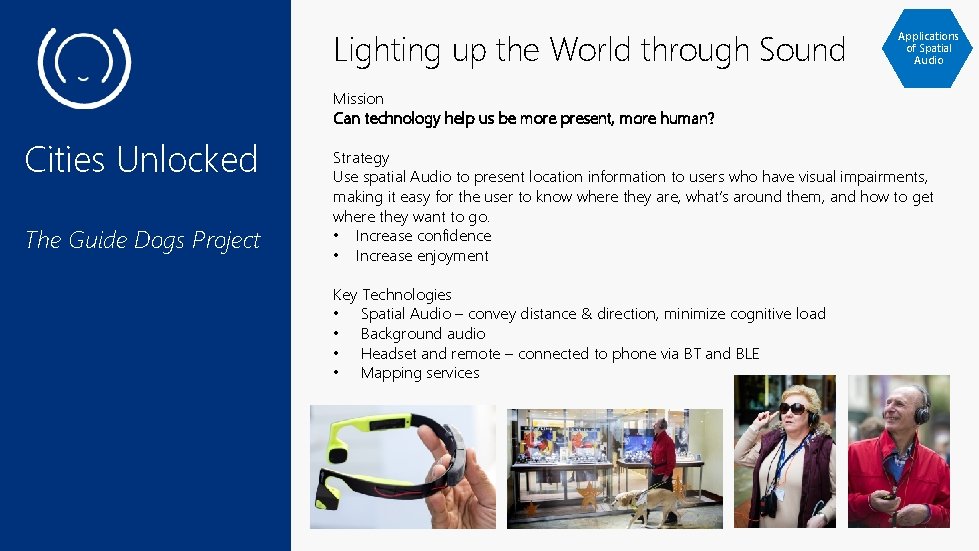

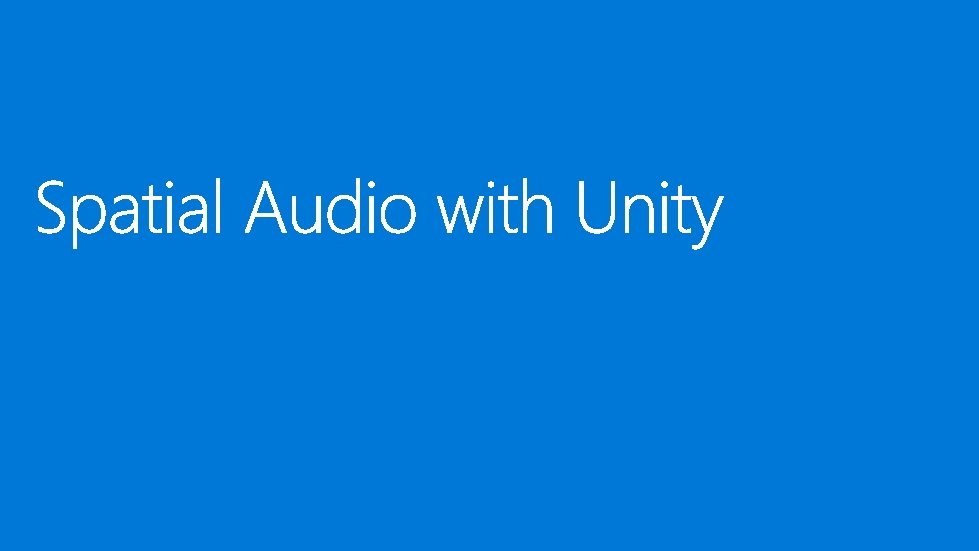

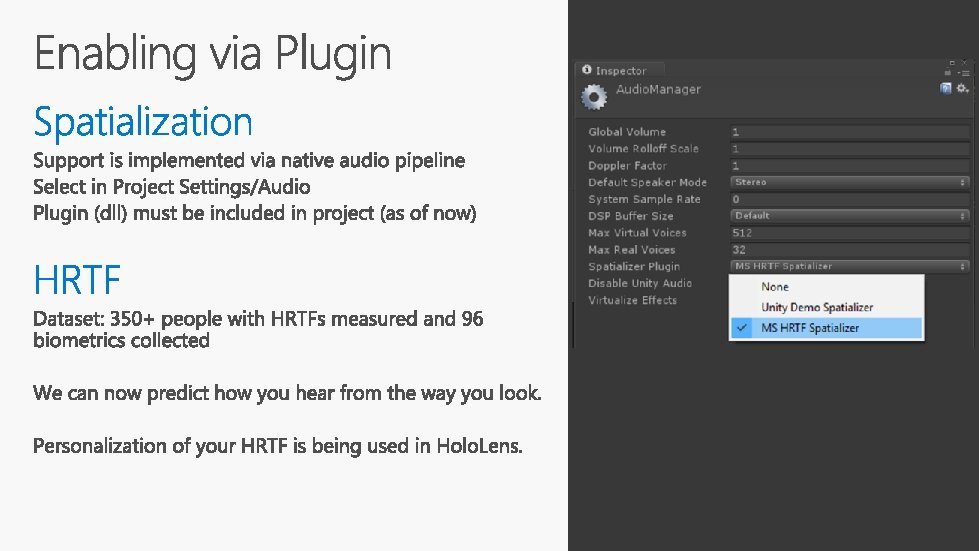

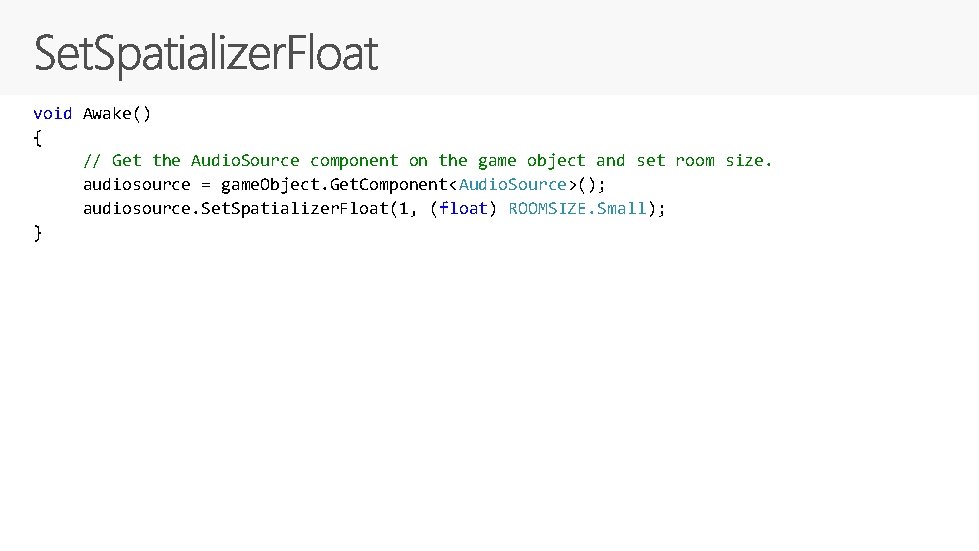

    void Awake()
    {
        // Get the AudioSource component on the game object and set room size.
        audiosource = gameObject.GetComponent<AudioSource>();
        audiosource.SetSpatializerFloat(1, (float)ROOMSIZE.Small);
    }

![public Game Object Audio Sources void Awake Find all objects tagged Spatial public Game. Object[] Audio. Sources; void Awake() { // Find all objects tagged Spatial.](https://slidetodoc.com/presentation_image_h/02c6e4e748fe763fbd52b32859ad7314/image-35.jpg)

    public GameObject[] AudioSources;

    void Awake()
    {
        // Find all objects tagged SpatialEmitters
        AudioSources = GameObject.FindGameObjectsWithTag("SpatialEmittersSmall");
        foreach (GameObject source in AudioSources)
        {
            var audiosource = source.GetComponent<AudioSource>();
            audiosource.spread = 0;
            audiosource.spatialBlend = 1;
            audiosource.rolloffMode = AudioRolloffMode.Custom;

            // Plugin: SetSpatializerFloats here to avoid a possible pop
            audiosource.SetSpatializerFloat(1, ROOMSIZE.SMALL); // 1 is the roomSize param
            audiosource.SetSpatializerFloat(2, _minGain);       // 2 is the minGain param
            audiosource.SetSpatializerFloat(3, _maxGain);       // 3 is the maxGain param
            audiosource.SetSpatializerFloat(4, 1);              // 4 is the unityGain param (distance to 0 attn.)
        }
    }

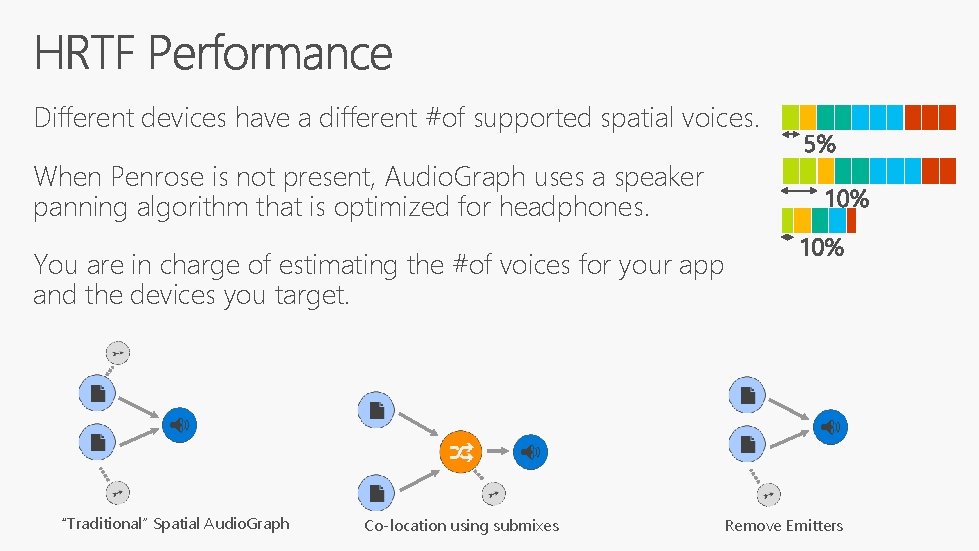

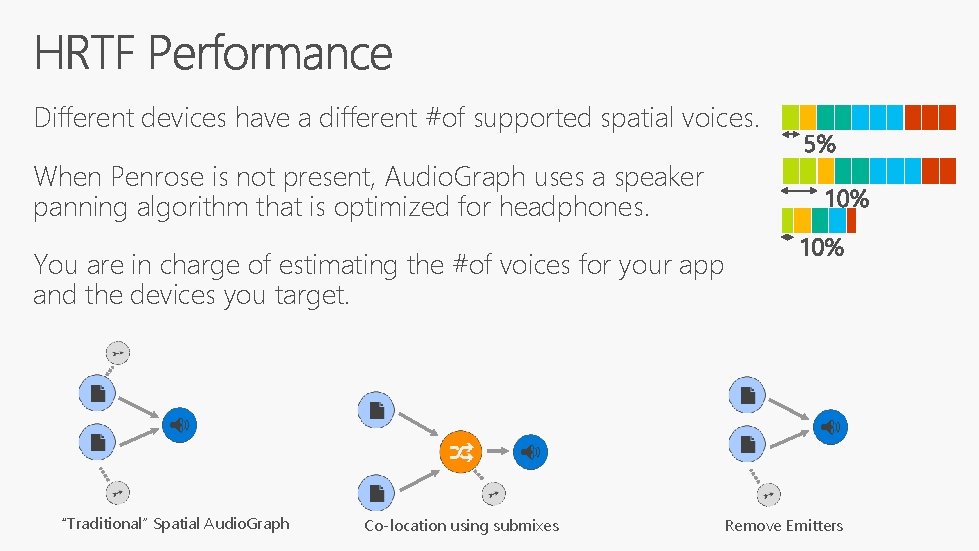

Different devices support a different number of spatial voices. When Penrose is not present, AudioGraph uses a speaker panning algorithm that is optimized for headphones. You are in charge of estimating the number of voices for your app and the devices you target.

- "Traditional" spatial AudioGraph
- Co-location using submixes
- Remove emitters
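One way to act on the "remove emitters" option is to keep only the emitters closest to the listener once the app's voice budget is exceeded. The sketch below illustrates that idea and is not a method described in the slides: `VoiceBudget`, `SelectNearest`, and the `maxVoices` parameter are hypothetical, and the budget itself is something you would have to estimate per device.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Numerics;

// Hypothetical helper, not part of AudioGraph.
public static class VoiceBudget
{
    // Returns the emitter positions that should stay spatialized:
    // the maxVoices positions closest to the listener, nearest first.
    public static List<Vector3> SelectNearest(IEnumerable<Vector3> emitters, Vector3 listener, int maxVoices)
    {
        return emitters
            .OrderBy(e => Vector3.DistanceSquared(e, listener))
            .Take(maxVoices)
            .ToList();
    }
}
```

Emitters that fall outside the budget could be dropped or, following the co-location option, routed together into a shared submix instead of each carrying its own emitter.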

MEDIA DEVELOPMENT INTERACTIVE // VISUAL FX // 3 D PROJECTION MAPPING // EVENTS VR / AR DEVELOPMENT VIRTUAL & AUGMENTED REALITY PRODUCTION // 360 VIDEO PRODUCTION

- Channel 9
- Microsoft Virtual Academy
- HoloLens

Spatial Audio

- HRTF Magnitude Synthesis via Sparse Representation of Anthropometric Features
- HRTF Phase Synthesis via Sparse Representation of Anthropometric Features
- Anthropometric Parameterisation of a Spherical Scatterer ITD Model with Arbitrary Ear Angles
- Estimation of Multipath Propagation Delays and Interaural Time Differences from 3-D Head Scans
- A Method for Converting Between Cylindrical and Spherical Harmonic Representations of Sound Fields
- HRTF Magnitude Modeling Using a Non-Regularized Least-Squares Fit of Spherical Harmonics Coefficients on Incomplete Data
- Efficient Implementation of the Spectral Division Method for Arbitrary Virtual Sound Fields
- Gentle Acoustic Crosstalk Cancelation Using the Spectral Division Method and Ambiophonics