

HP-UX File System Internals: Introduction to HFS, VxFS, MemFS and “In memory” structures. HP Servers / Operating System Technologies Lab, March 5th, 2016. K Ravindra S Kini / Sachin Metimath

Agenda

- Introduction
- The Physical Layout of HFS data allocation and management
- Inode Cache
- Virtual File System (VFS) / Vnode Layer
- Directory Name Lookup Cache (DNLC)
- Buffer Cache
- File & File system operations
- Introduction to MemFS
- Q&A

Introduction to Unix File system

- File: A file is logically a container for data.
- File system: A file system allows users to organize, manipulate and access different files.
- Sample file system tree (figure): nodes tmp, dev, bin, usr, tty01, who, date.

The file system is organized as a tree with a single root node (written “/”). Every non-leaf node of the file system structure is a directory of files. Leaf nodes of the tree are either directories, regular files or special device files.

Creating a new file system on HP-UX

- Step 1: Identify the disk or logical volume on which you want to create the file system.
- Step 2: Create the required file system on the disk or logical volume.
- Step 3: Attach the new disk containing the file system to the UNIX tree so that users can access it.

LAB work:

    # bdf

Step 1: Creating the logical volume

    # lvcreate -L 50M -n <vol name> /dev/vg00

Step 2: Creating the file system

    # mkfs -F hfs /dev/vg00/<vol name>
    # mkdir <dirname>

Step 3: Creating a new branch in the Unix tree

    # mount -F hfs /dev/vg00/<vol name> <dir name>
    # bdf

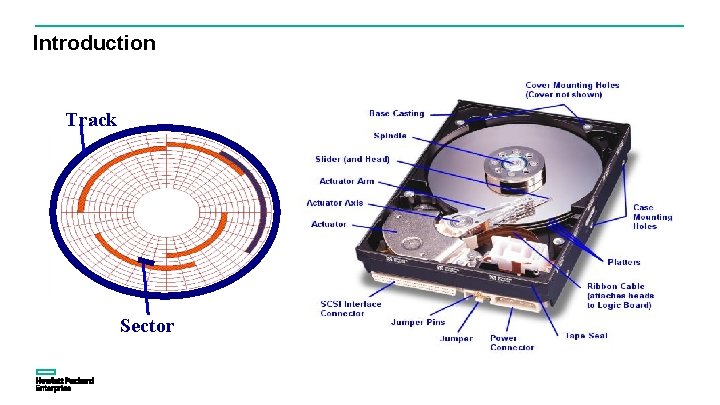

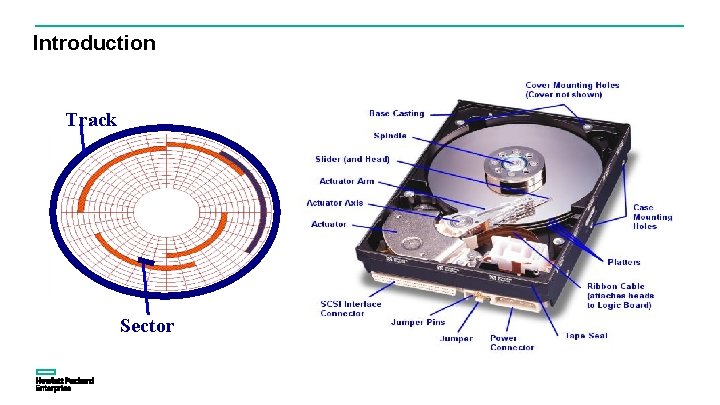

Introduction Track Sector

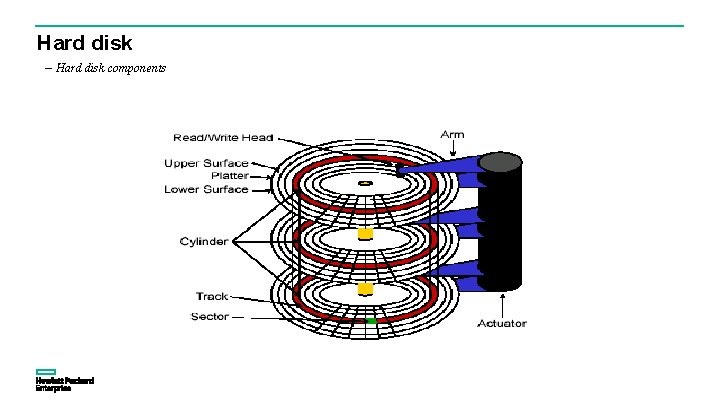

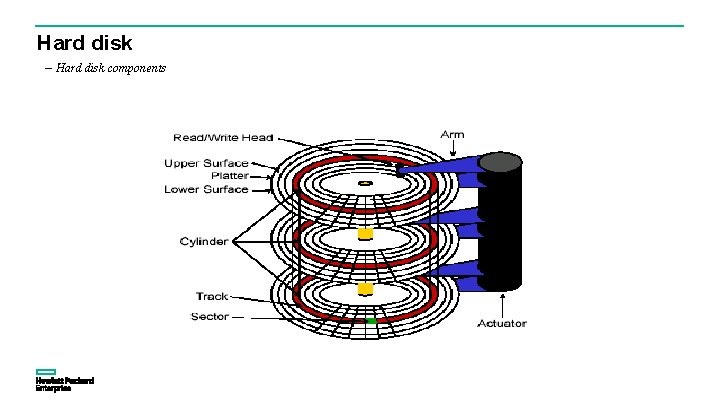

Hard disk – Hard disk components

Unix (UFS) or High Performance / Hierarchical File system (HFS)

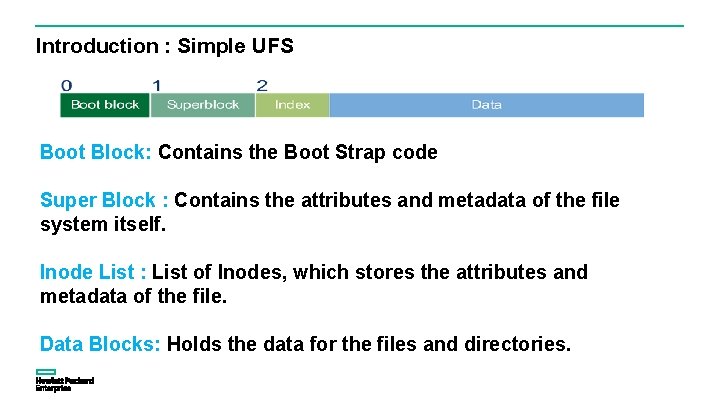

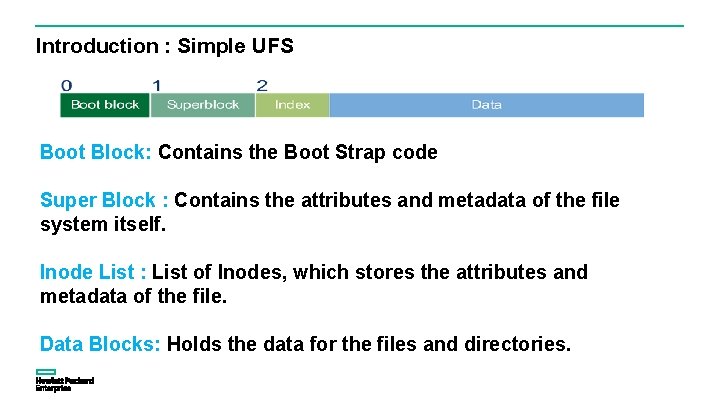

Introduction: Simple UFS

- Boot Block: Contains the bootstrap code.
- Super Block: Contains the attributes and metadata of the file system itself.
- Inode List: List of inodes, which store the attributes and metadata of the files.
- Data Blocks: Hold the data for the files and directories.

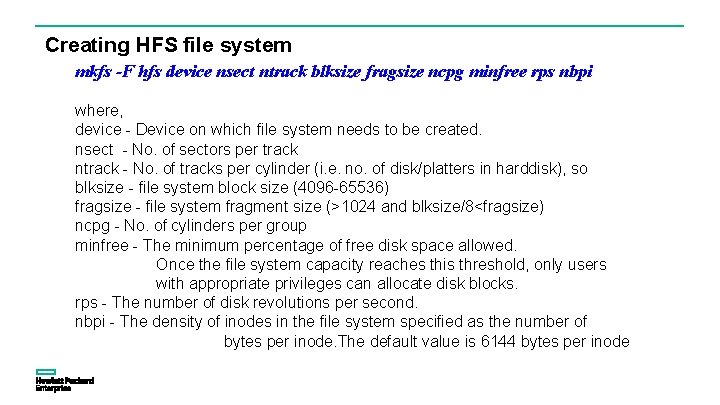

Creating HFS file system

    mkfs -F hfs device nsect ntrack blksize fragsize ncpg minfree rps nbpi

where:

- device: Device on which the file system needs to be created.
- nsect: Number of sectors per track.
- ntrack: Number of tracks per cylinder (i.e. number of disks/platters in the hard disk).
- blksize: File system block size (4096 to 65536).
- fragsize: File system fragment size (at least 1024 and at least blksize/8).
- ncpg: Number of cylinders per group.
- minfree: The minimum percentage of free disk space allowed. Once the file system capacity reaches this threshold, only users with appropriate privileges can allocate disk blocks.
- rps: The number of disk revolutions per second.
- nbpi: The density of inodes in the file system, specified as the number of bytes per inode. The default value is 6144 bytes per inode.
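
The size rules and the inode density above can be checked with a little arithmetic. The following is a minimal sketch, not the logic of mkfs itself: the function names are hypothetical, both limits are assumed to be inclusive, both sizes are assumed to be powers of two, and the inode estimate ignores the per-cylinder-group rounding a real mkfs would apply.

```c
#include <stdint.h>

/* Returns 1 if the pair satisfies the size rules listed above, else 0.
 * Assumption: limits are inclusive and both sizes are powers of two. */
int hfs_sizes_ok(uint32_t blksize, uint32_t fragsize)
{
    if (blksize < 4096 || blksize > 65536 || (blksize & (blksize - 1)))
        return 0;
    if (fragsize < 1024 || fragsize > blksize || (fragsize & (fragsize - 1)))
        return 0;
    return fragsize >= blksize / 8;   /* at most 8 fragments per block */
}

/* Rough estimate: one inode per nbpi bytes of file system space.
 * Returns 0 when nbpi is 0 to avoid dividing by zero. */
uint64_t estimate_inodes(uint64_t fs_bytes, uint32_t nbpi)
{
    return nbpi ? fs_bytes / nbpi : 0;
}
```

Under these assumptions, a 50 MB file system created with the default nbpi of 6144 would get roughly 8533 inodes.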

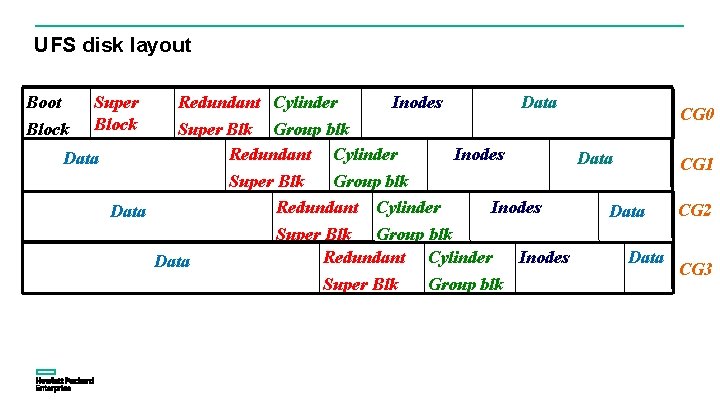

UFS disk layout (figure): a boot block and the primary super block, followed by cylinder groups CG 0 to CG 3; each cylinder group holds a redundant super block, a cylinder group block, inodes and data.

UFS disk layout (figure): boot block, super block and cylinder group block (0), followed by CG 1 and CG 2, each with a redundant super block, a cylinder group block, inode blocks and data blocks.

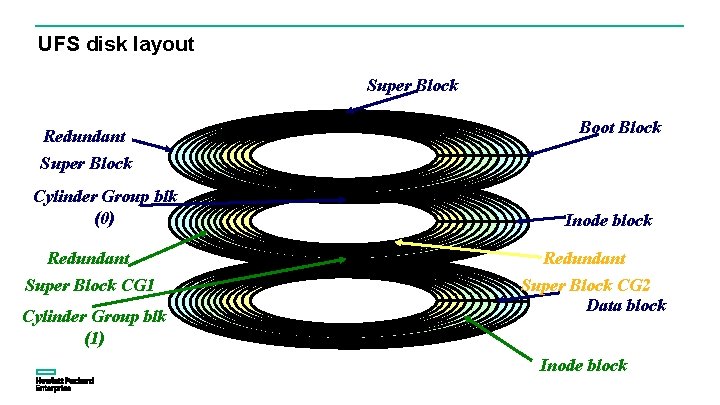

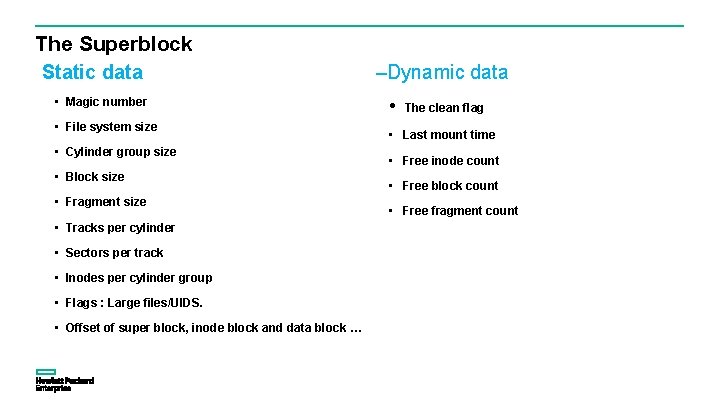

The Superblock

Static data:

- Magic number
- File system size
- Cylinder group size
- Block size
- Fragment size
- Tracks per cylinder
- Sectors per track
- Inodes per cylinder group
- Flags: Large files/UIDs
- Offset of super block, inode block and data block
- …

Dynamic data:

- The clean flag
- Last mount time
- Free inode count
- Free block count
- Free fragment count

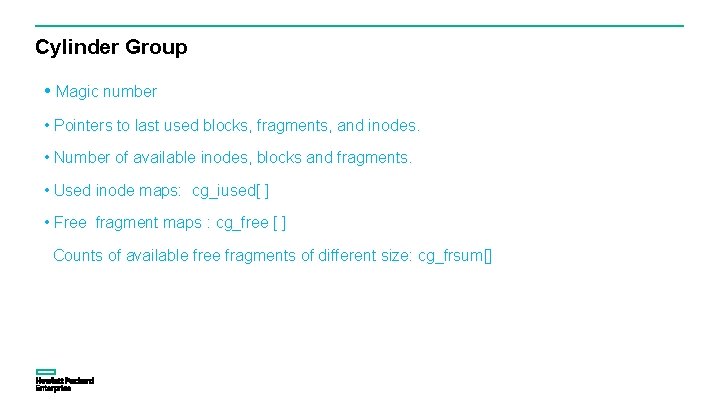

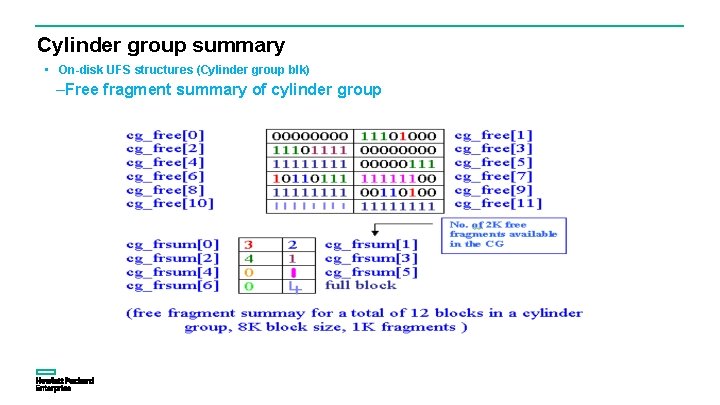

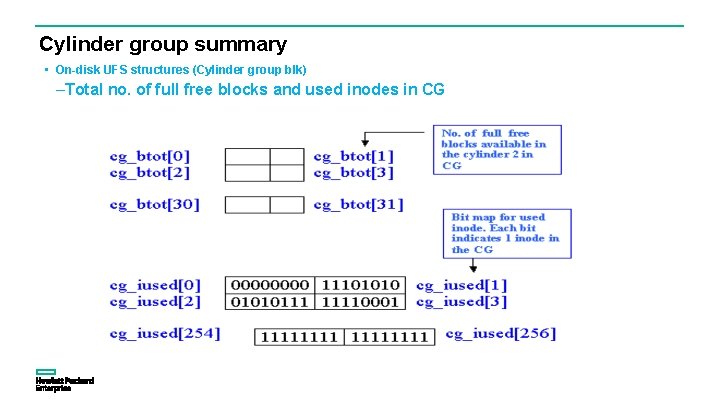

Cylinder Group

- Magic number
- Pointers to last used blocks, fragments and inodes
- Number of available inodes, blocks and fragments
- Used inode map: cg_iused[]
- Free fragment map: cg_free[]
- Counts of available free fragments of different sizes: cg_frsum[]

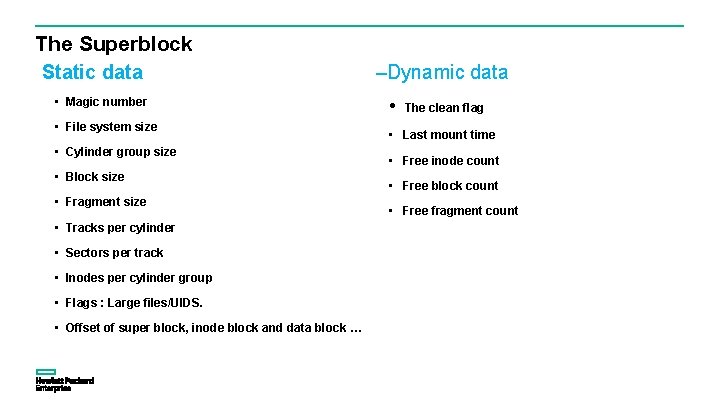

Cylinder group summary • On-disk UFS structures (Cylinder group blk) –Free fragment bit map of cylinder group

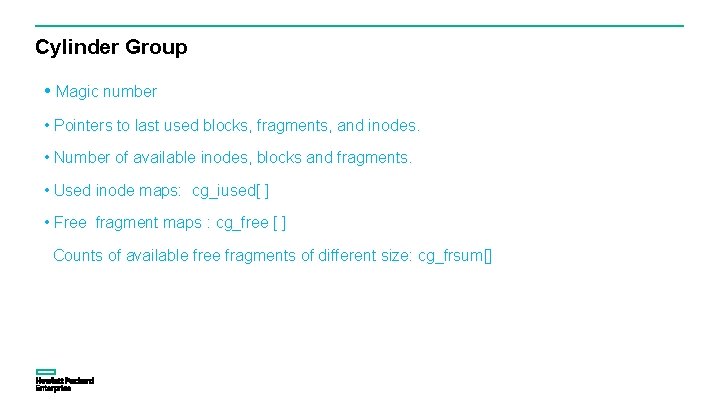

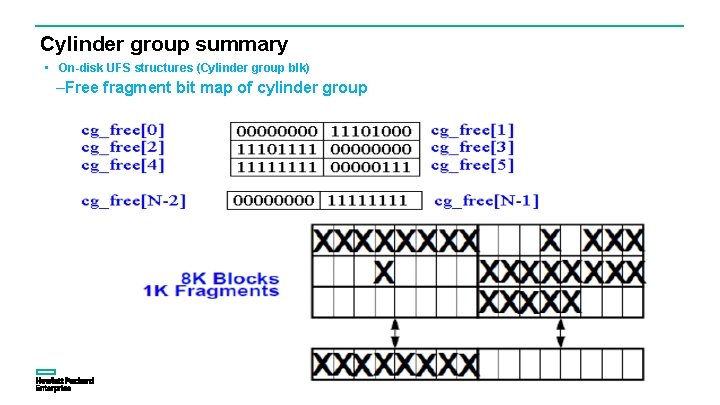

Cylinder group summary • On-disk UFS structures (Cylinder group blk) –Free fragment summary of cylinder group

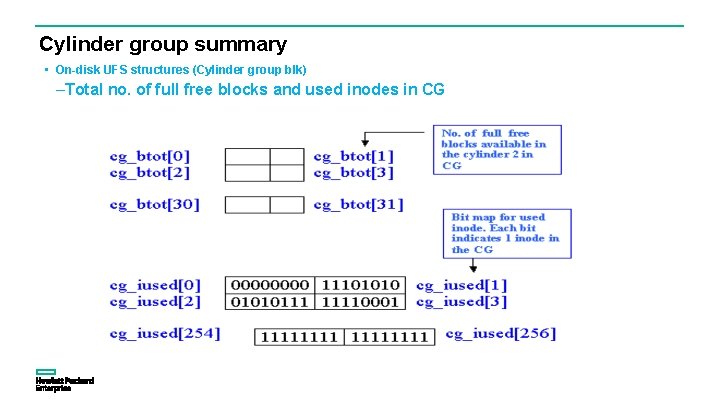

Cylinder group summary • On-disk UFS structures (Cylinder group blk) –Total no. of full free blocks and used inodes in CG

UFS Disk Inodes

All files include:

- Type
- Permissions
- Number of links
- Ownership
- Size in terms of length
- Size in terms of fragments held
- Usage times
- Generation count
- Flags

Dependent upon file type:

- Link target name
- Pipe information
- Major and minor number
- Address of regular file blocks

The file name and the inode number are not stored in the inode.

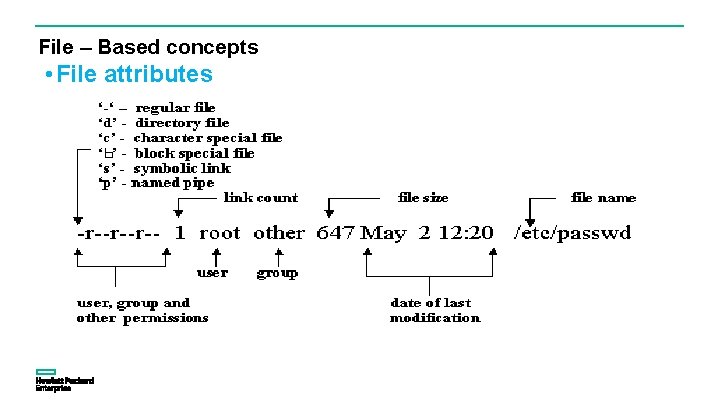

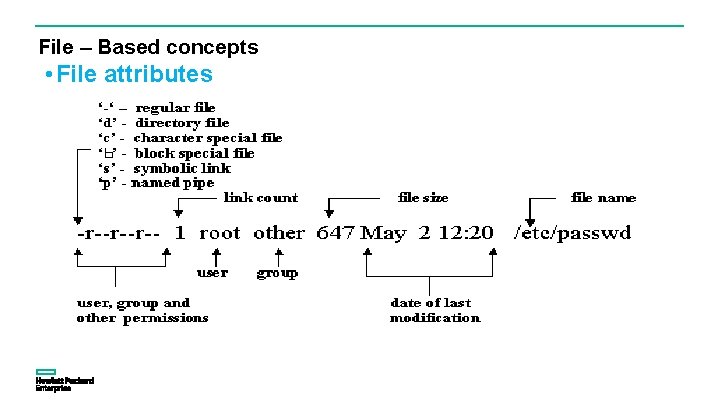

File – Based concepts • File attributes

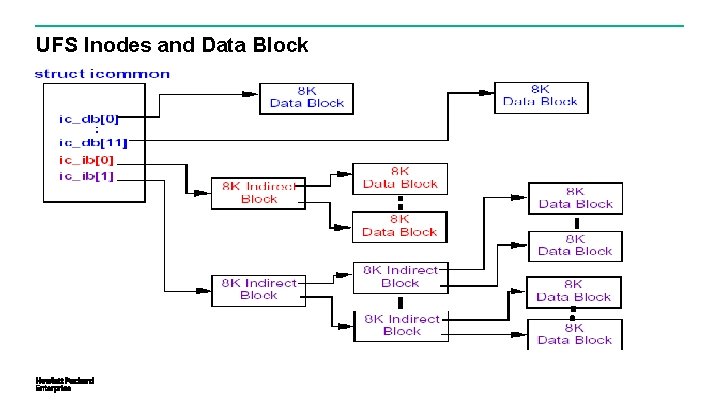

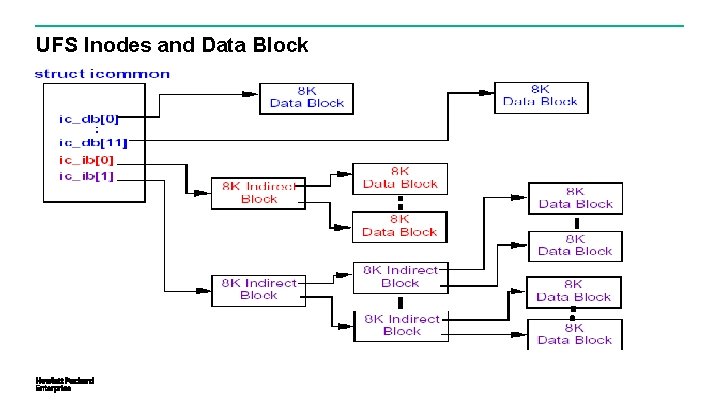

UFS Inodes and Data Block

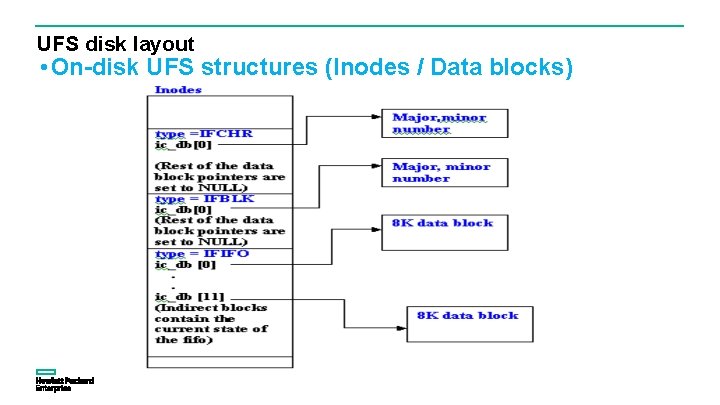

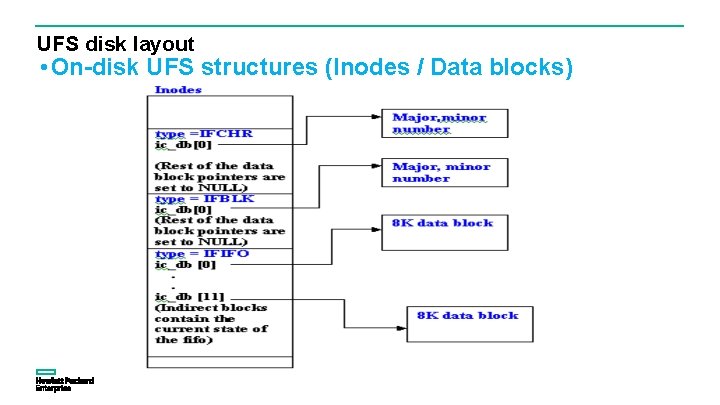

UFS disk layout • On-disk UFS structures (Inodes / Data blocks)

![Blocks and Fragments File system records the space in terms of fragments using cgfree Blocks and Fragments File system records the space in terms of fragments using cg_free[]](https://slidetodoc.com/presentation_image_h/36d8c9c73ea03beaf437af9a7152f415/image-22.jpg)

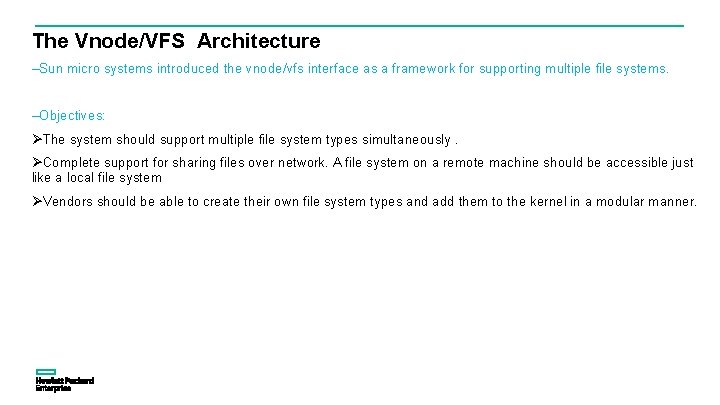

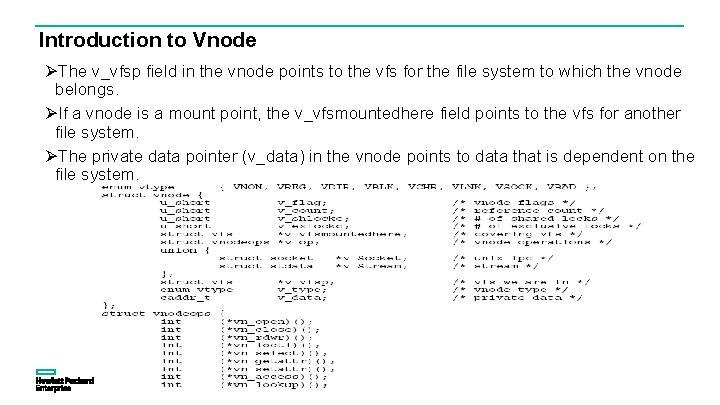

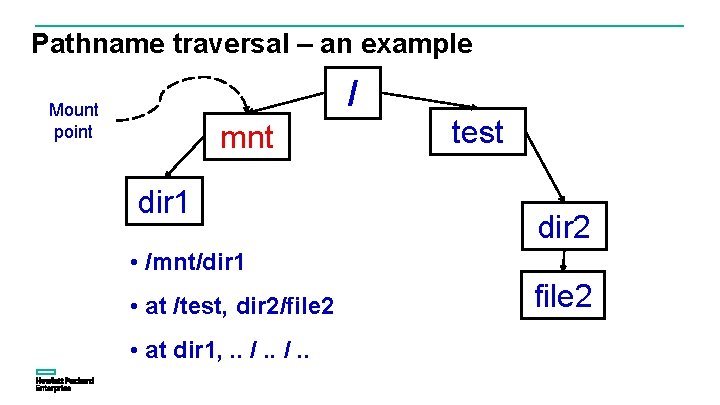

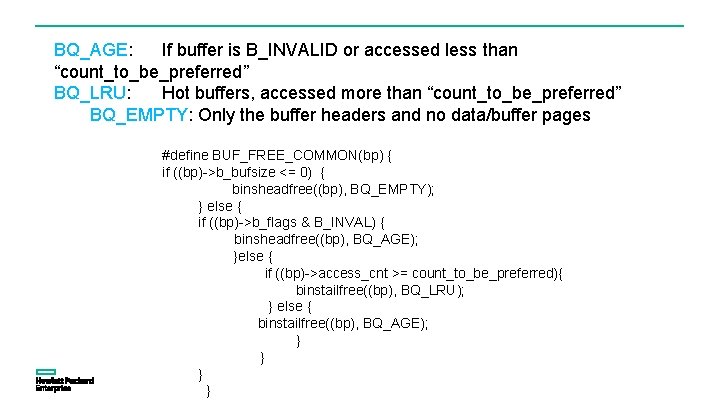

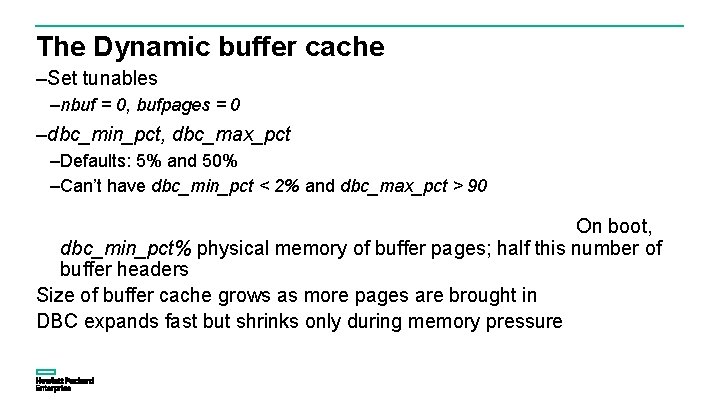

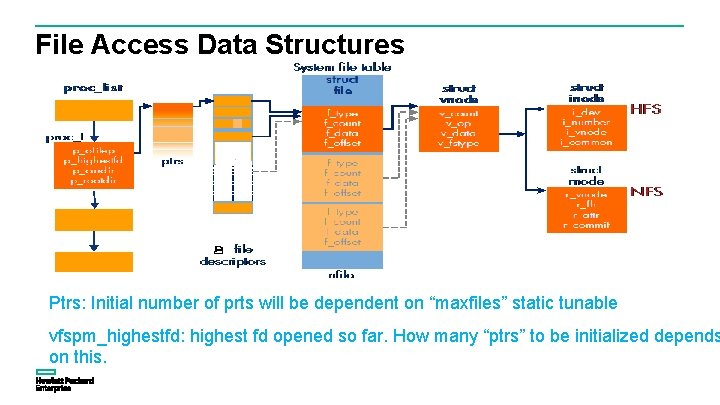

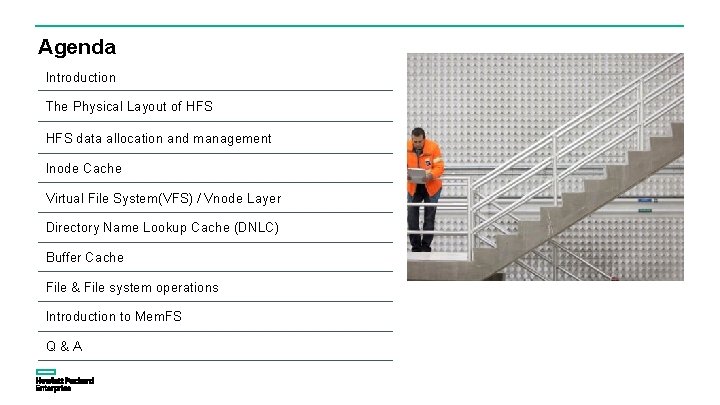

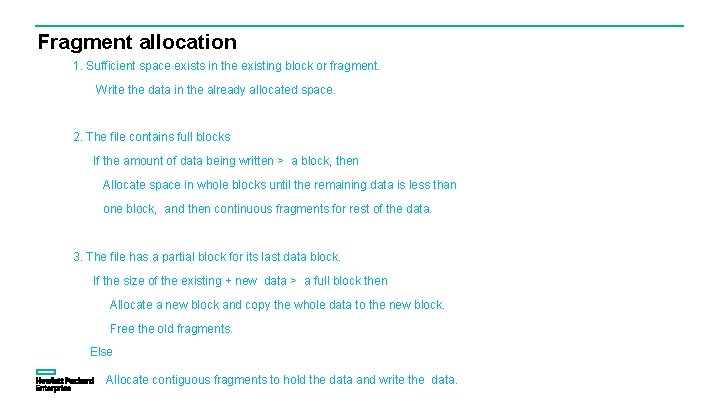

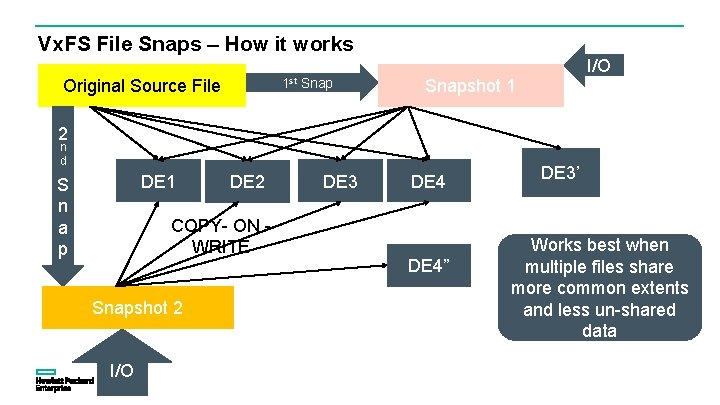

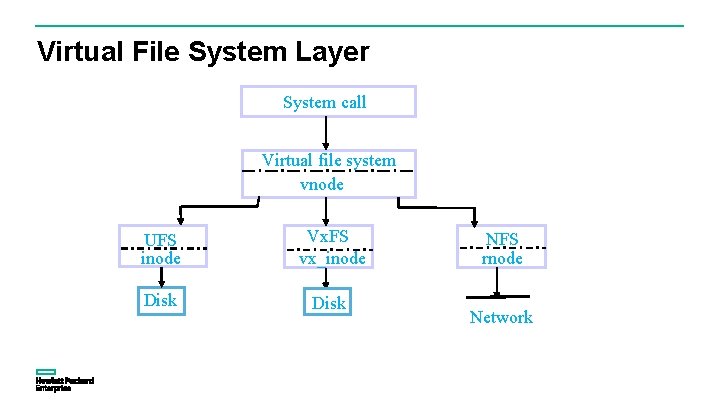

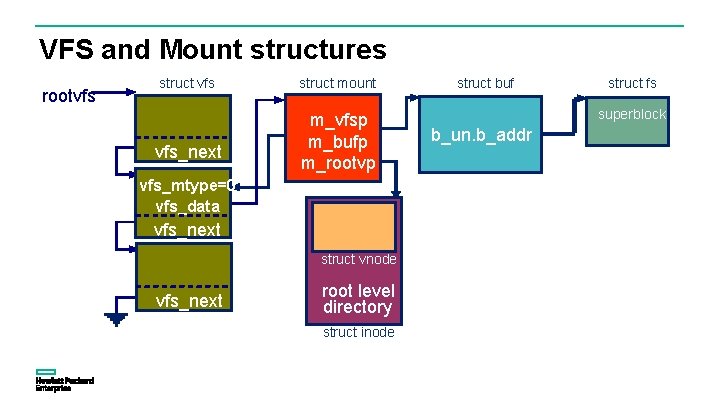

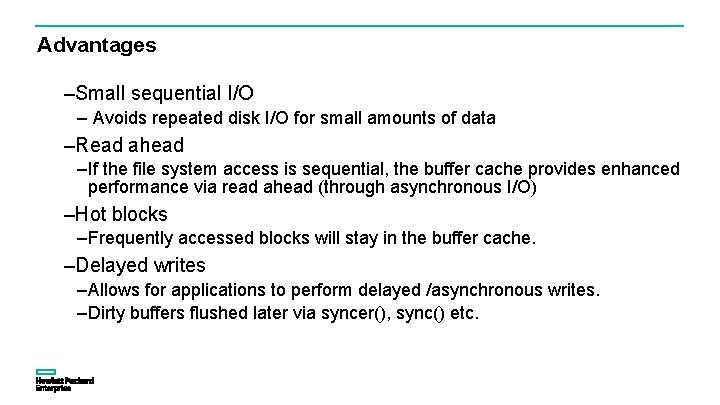

Blocks and Fragments File system records the space in terms of fragments using cg_free[] bit maps
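
To make the bitmap idea concrete, here is a minimal sketch of how a per-fragment free map can answer "is this whole block free?" and "is there a run of N free fragments inside this block?". It is not the HFS source: the function names are hypothetical, and it assumes that a set bit means the fragment is free and that bits are numbered from the least significant bit of each byte.

```c
#include <stddef.h>
#include <stdint.h>

/* Assumption: bit i set means fragment i is free. */
static int frag_is_free(const uint8_t *map, size_t frag)
{
    return (map[frag / 8] >> (frag % 8)) & 1;
}

/* A block is free only if every one of its fragments is free. */
int block_is_free(const uint8_t *map, size_t block, size_t frags_per_block)
{
    size_t first = block * frags_per_block;

    for (size_t i = 0; i < frags_per_block; i++)
        if (!frag_is_free(map, first + i))
            return 0;
    return 1;
}

/* Find `want` contiguous free fragments inside one block.
 * Returns the index of the first fragment of the run, or -1. */
long find_frag_run(const uint8_t *map, size_t block,
                   size_t frags_per_block, size_t want)
{
    size_t first = block * frags_per_block;
    size_t run = 0;

    if (want == 0 || want > frags_per_block)
        return -1;
    for (size_t i = 0; i < frags_per_block; i++) {
        run = frag_is_free(map, first + i) ? run + 1 : 0;
        if (run == want)
            return (long)(first + i + 1 - want);
    }
    return -1;
}
```

The run search stays inside a single block because a fragment allocation never spans two blocks.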

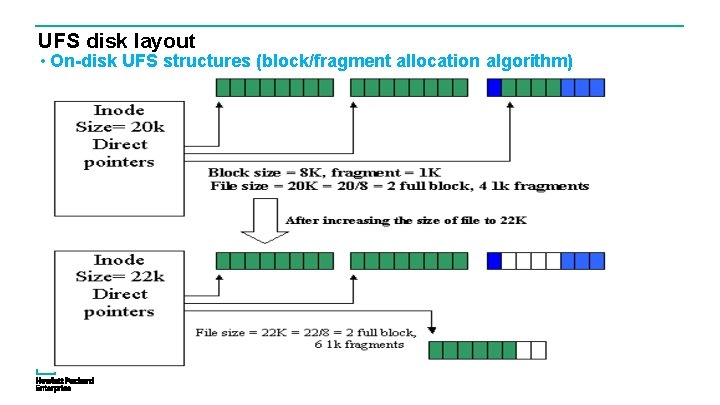

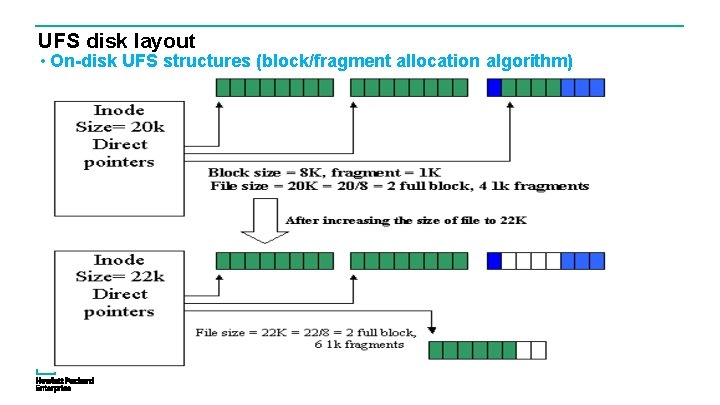

UFS disk layout • On-disk UFS structures (block/fragment allocation algorithm)

Fragment allocation

1. Sufficient space exists in the existing block or fragment: write the data in the already allocated space.
2. The file contains full blocks: if the amount of data being written is more than a block, allocate space in whole blocks until the remaining data is less than one block, then allocate contiguous fragments for the rest of the data.
3. The file has a partial block for its last data block: if the size of the existing plus new data is more than a full block, allocate a new block, copy the whole data to the new block and free the old fragments. Otherwise, allocate contiguous fragments to hold the data and write the data.
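
The end result of these rules is that a file occupies some number of full blocks plus, at most, one partial block made of contiguous fragments. The sketch below computes that layout for a given file size. It is an illustration only: the struct and function names are hypothetical, fsize is assumed to be non-zero and to divide bsize evenly, and indirect blocks are ignored.

```c
#include <stdint.h>

struct layout {
    uint64_t blocks;   /* full blocks */
    uint32_t frags;    /* fragments in the trailing partial block */
};

struct layout layout_for_size(uint64_t size, uint32_t bsize, uint32_t fsize)
{
    struct layout l;
    uint64_t tail = size % bsize;              /* bytes past the last full block */

    l.blocks = size / bsize;
    l.frags = (uint32_t)((tail + fsize - 1) / fsize);   /* round up */
    if (l.frags == bsize / fsize) {            /* a tail that needs every  */
        l.blocks++;                            /* fragment is a full block */
        l.frags = 0;
    }
    return l;
}
```

For example, with 8192-byte blocks and 1024-byte fragments, a 10000-byte file needs one full block plus two fragments.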

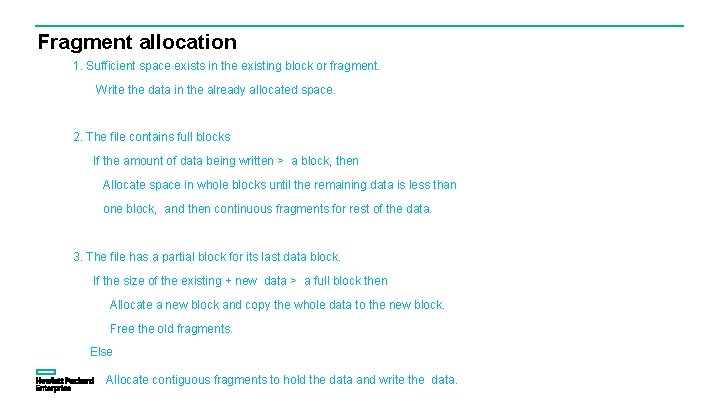

Disk Inode/Block Allocation policies

Inode allocation:

- Allocate inodes for files in the same cylinder group as the parent directory.
- Allocate inodes for new directories in a cylinder group with a higher than average number of free inodes and the smallest number of directory entries.

Block allocation:

- Allocate the direct blocks in the same cylinder group as the inode that describes the file.
- For an indirect block, choose a cylinder group with a greater than average number of free blocks.

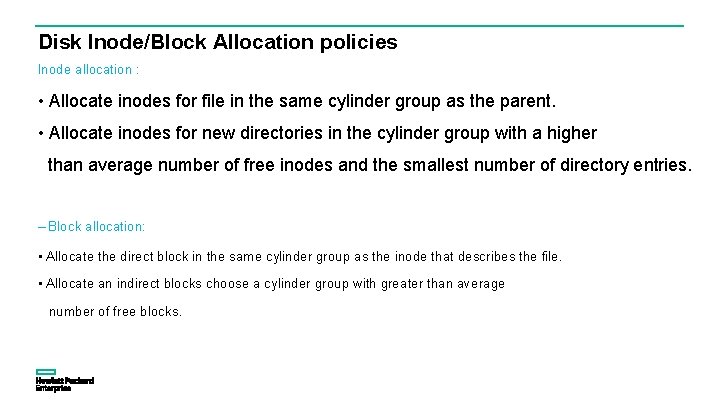

UFS Directory Structure

struct direct: reclen is the offset from the current entry to the next valid entry. The DIRSIZ(dp) macro calculates the amount of space needed for an entry:

    #define DIRSIZ(dp) \
        ((sizeof (struct direct) - (MAXNAMLEN+1)) + (((dp)->d_namlen+1 + 3) &~ 3))

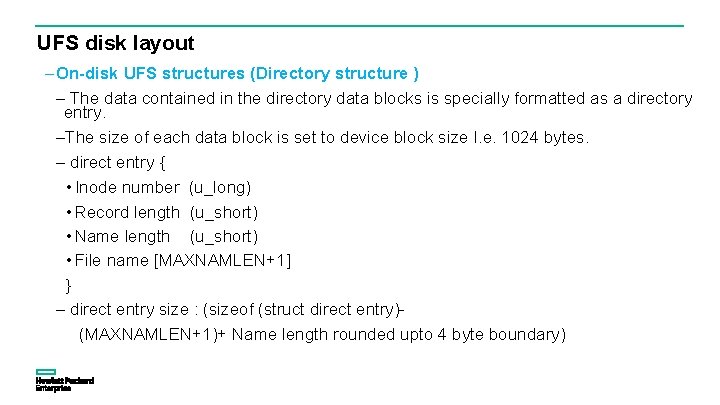

UFS disk layout: On-disk UFS structures (Directory structure)

- The data contained in the directory data blocks is specially formatted as directory entries.
- The size of each directory data block is set to the device block size, i.e. 1024 bytes.
- A direct entry contains:
  - Inode number (u_long)
  - Record length (u_short)
  - Name length (u_short)
  - File name [MAXNAMLEN+1]
- direct entry size: sizeof (struct direct) - (MAXNAMLEN+1) + (name length + 1) rounded up to a 4-byte boundary.
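
A worked example helps with the entry-size formula. The sketch below assumes a 32-bit inode number and 16-bit length fields, which gives an 8-byte fixed header; the fixed-width types, the MAXNAMLEN value of 255 and the helper `dirsiz_for_name_len` are assumptions made for illustration.

```c
#include <stddef.h>
#include <stdint.h>

#define MAXNAMLEN 255

/* Assumed layout: 32-bit inode number, 16-bit record and name lengths. */
struct direct {
    uint32_t d_ino;
    uint16_t d_reclen;
    uint16_t d_namlen;
    char     d_name[MAXNAMLEN + 1];
};

/* Fixed header plus the name and its NUL, rounded up to 4 bytes. */
#define DIRSIZ(dp) \
    ((sizeof(struct direct) - (MAXNAMLEN + 1)) + (((dp)->d_namlen + 1 + 3) & ~3))

/* Hypothetical helper: space needed for a name of the given length. */
size_t dirsiz_for_name_len(uint16_t namlen)
{
    struct direct d;

    d.d_namlen = namlen;
    return DIRSIZ(&d);
}
```

Under these assumptions "." and ".." need 12 bytes each, a five-character name such as "file1" needs 16 bytes, and "abcdefghi" needs 20 bytes.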

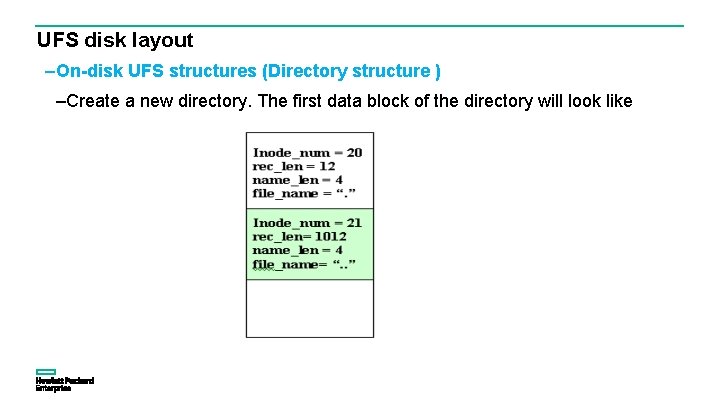

UFS disk layout –On-disk UFS structures (Directory structure ) –Create a new directory. The first data block of the directory will look like

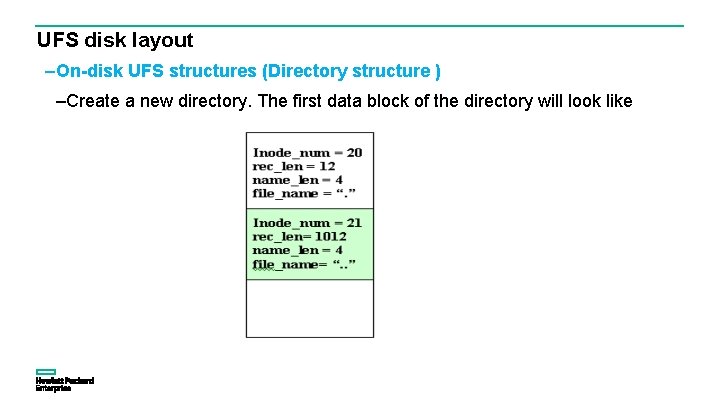

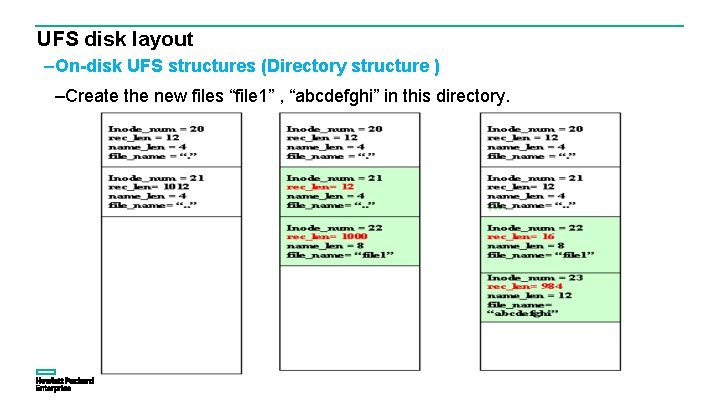

UFS disk layout: On-disk UFS structures (Directory structure). Create the new files “file1” and “abcdefghi” in this directory.

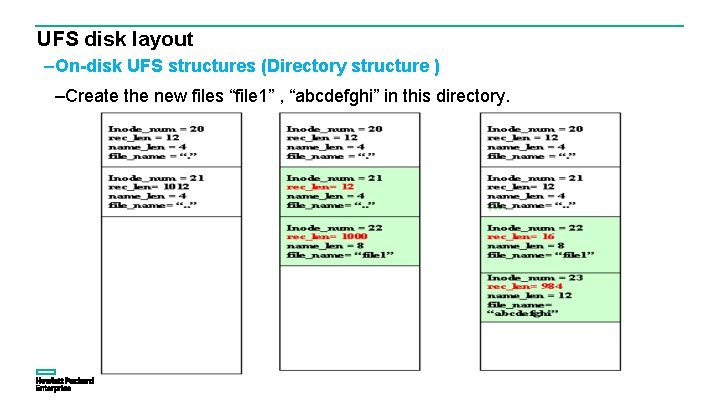

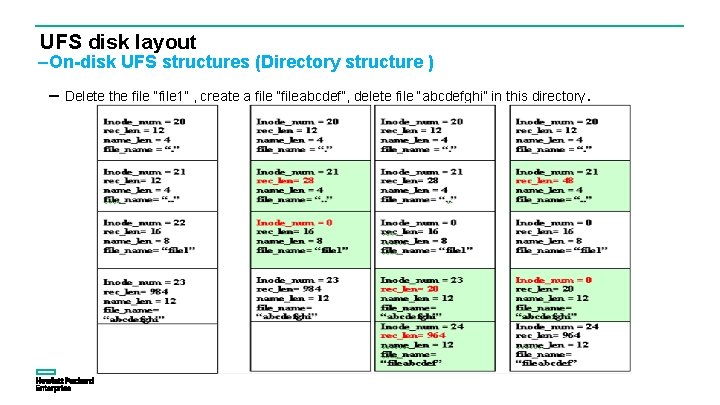

UFS disk layout: On-disk UFS structures (Directory structure). Delete the file “file1”, create a file “fileabcdef”, then delete the file “abcdefghi” in this directory.

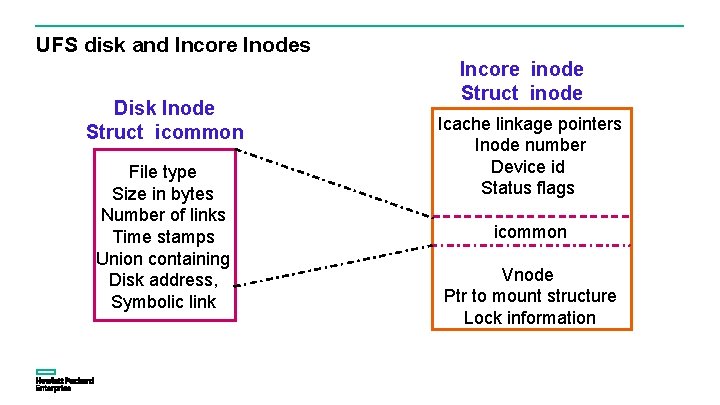

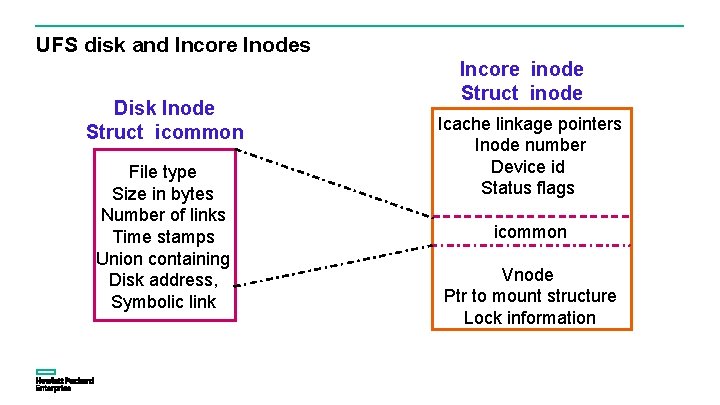

UFS disk and Incore Inodes

Disk inode (struct icommon):

- File type
- Size in bytes
- Number of links
- Time stamps
- Union containing disk address, symbolic link

Incore inode (struct inode):

- Icache linkage pointers
- Inode number
- Device id
- Status flags
- icommon
- Vnode
- Ptr to mount structure
- Lock information

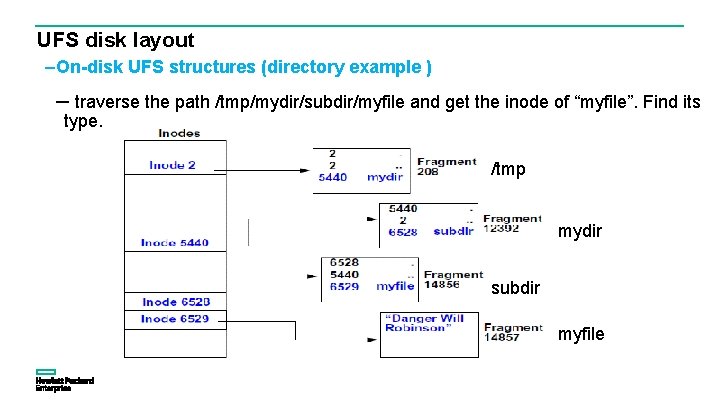

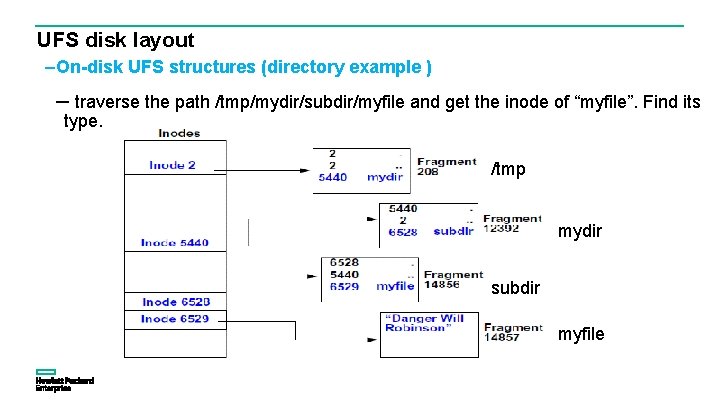

UFS disk layout –On-disk UFS structures (directory example ) – traverse the path /tmp/mydir/subdir/myfile and get the inode of “myfile”. Find its type. /tmp mydir subdir myfile

VxFS on HP-UX

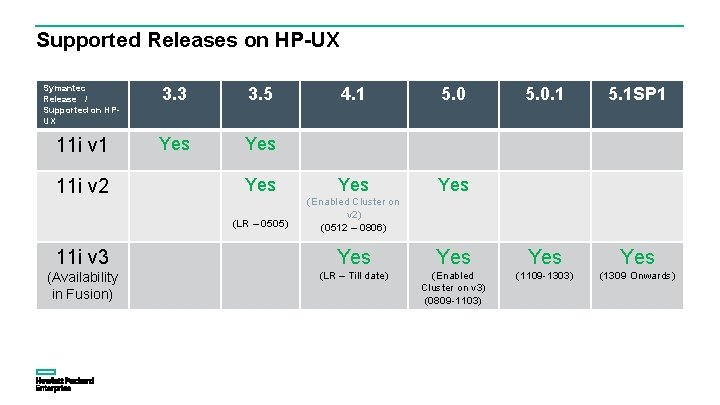

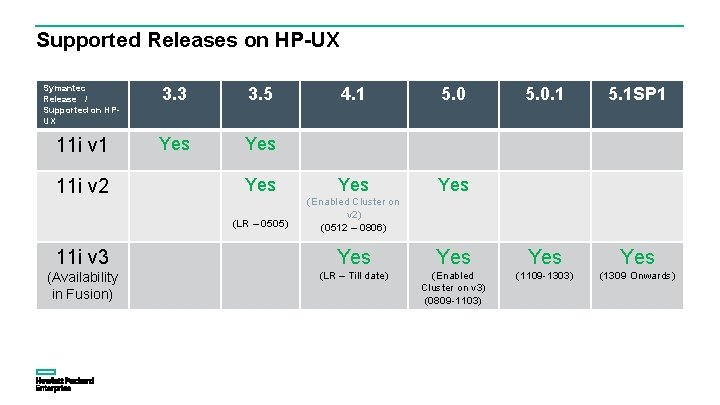

Supported Releases on HP-UX Symantec Release / Supported on HPUX 3.3 3.5 11i v1 Yes 11i v2 4.1 5.0 Yes Yes (LR – 0505) (Enabled Cluster on v2) (0512 – 0806) 5.0.1 5.1 SP1 11i v3 Yes Yes (Availability in Fusion) (LR – Till date) (Enabled Cluster on v3) (0809 - 1103) (1109 - 1303) (1309 Onwards)

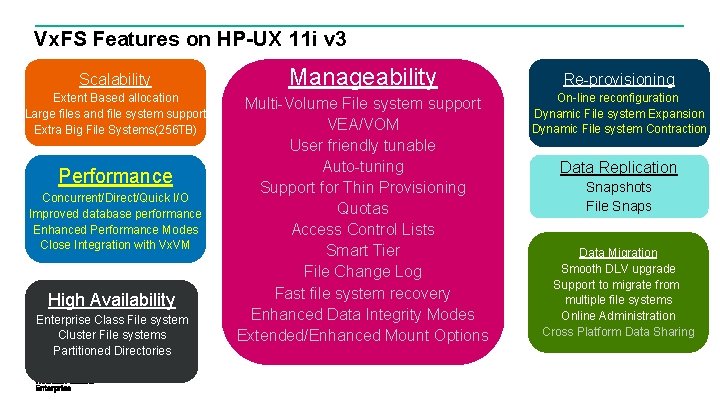

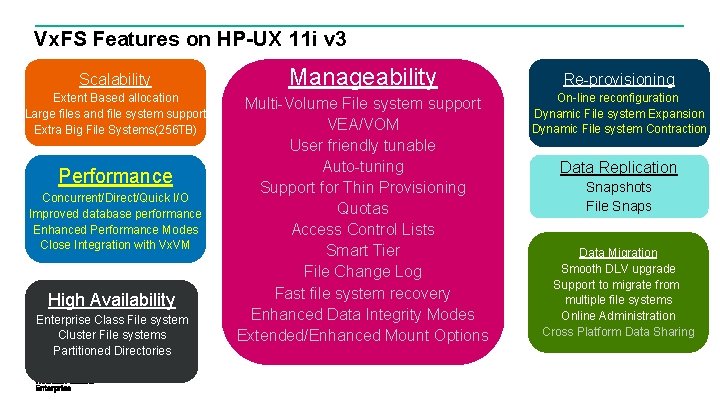

VxFS Features on HP-UX 11i v3 Scalability Extent Based allocation Large files and file system support Extra Big File Systems (256 TB) Performance Concurrent/Direct/Quick I/O Improved database performance Enhanced Performance Modes Close Integration with VxVM High Availability Enterprise Class File system Cluster File systems Partitioned Directories Manageability Multi-Volume File system support VEA/VOM User friendly tunable Auto-tuning Support for Thin Provisioning Quotas Access Control Lists Smart Tier File Change Log Fast file system recovery Enhanced Data Integrity Modes Extended/Enhanced Mount Options Re-provisioning On-line reconfiguration Dynamic File system Expansion Dynamic File system Contraction Data Replication Snapshots File Snaps Data Migration Smooth DLV upgrade Support to migrate from multiple file systems Online Administration Cross Platform Data Sharing

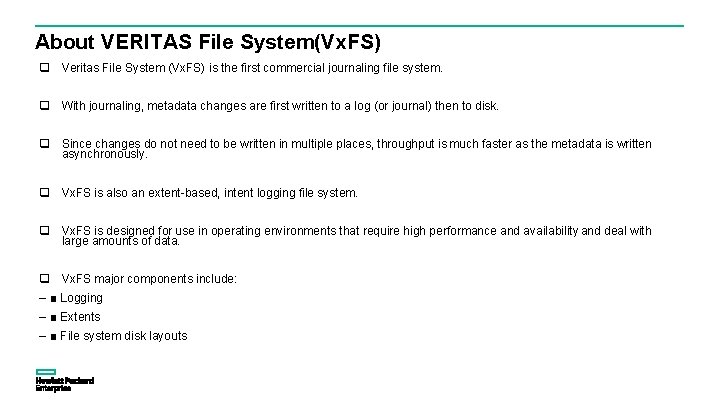

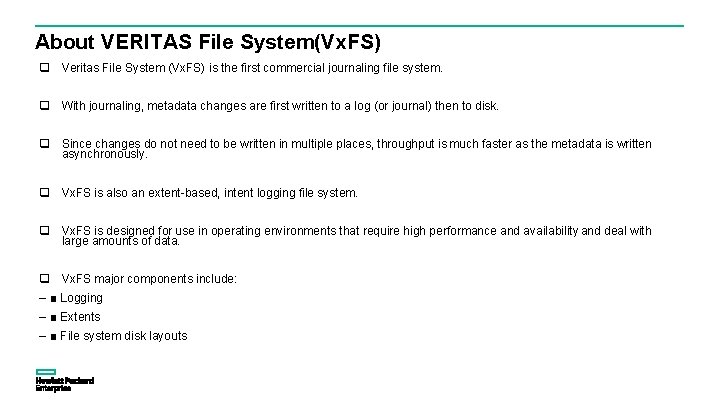

About VERITAS File System (VxFS)

- Veritas File System (VxFS) is the first commercial journaling file system.
- With journaling, metadata changes are first written to a log (or journal), then to disk.
- Since changes do not need to be written in multiple places, throughput is much faster as the metadata is written asynchronously.
- VxFS is also an extent-based, intent logging file system.
- VxFS is designed for use in operating environments that require high performance and availability and deal with large amounts of data.
- VxFS major components include:
  - Logging
  - Extents
  - File system disk layouts

VxFS Terminologies: Disk Layout (4)

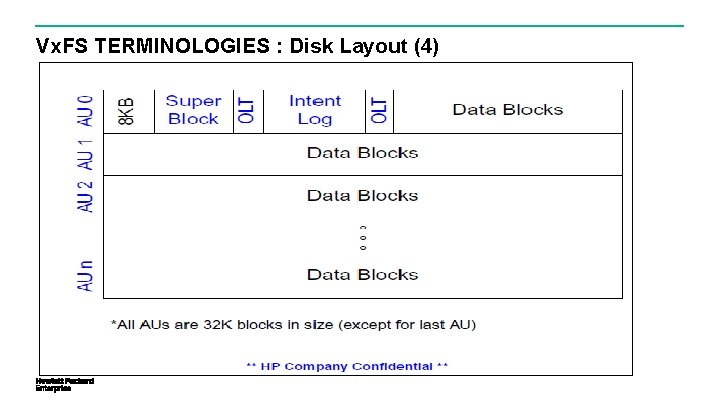

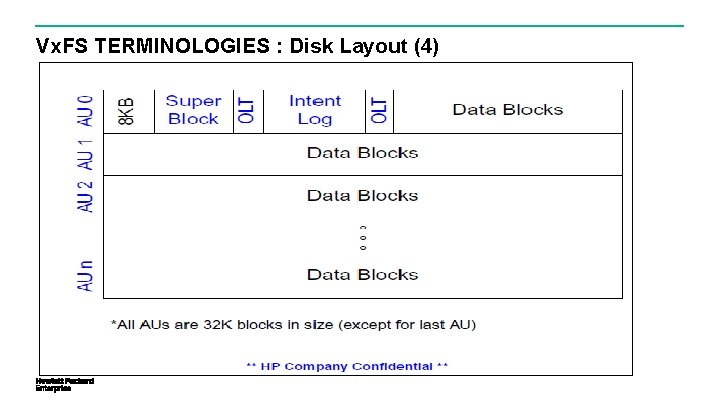

VxFS Terminologies: Superblock

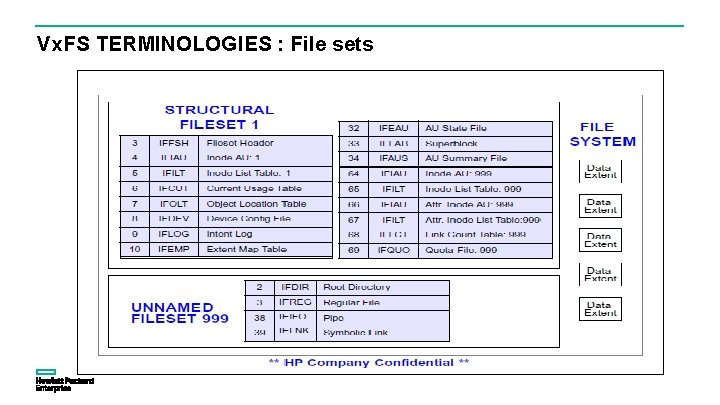

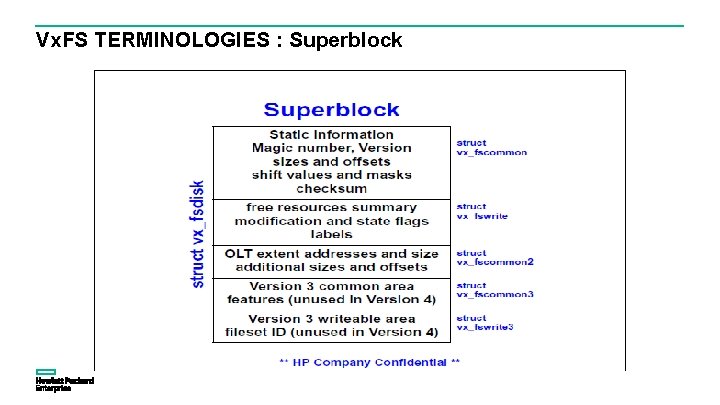

VxFS Terminologies: File sets

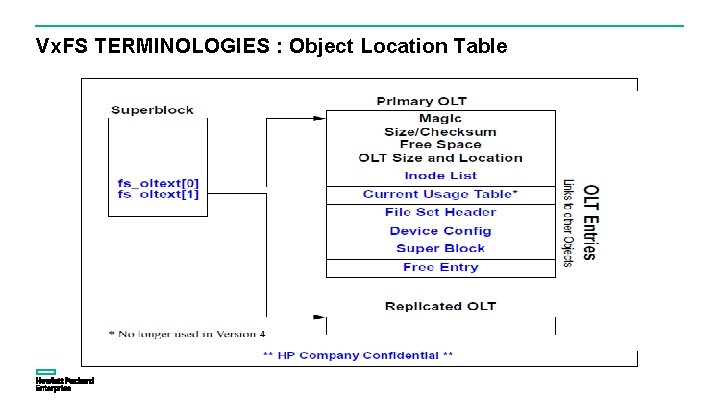

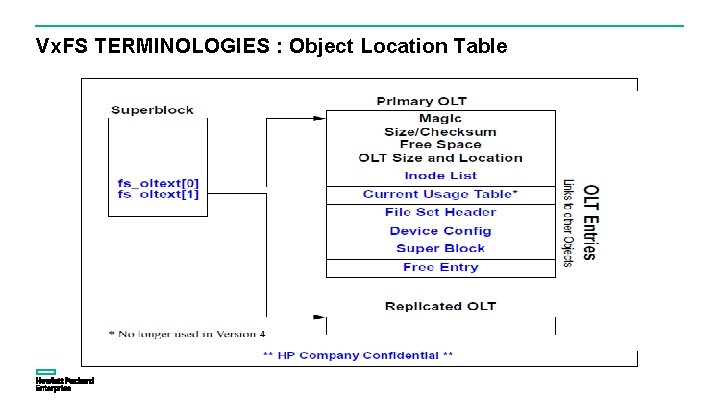

VxFS Terminologies: Object Location Table

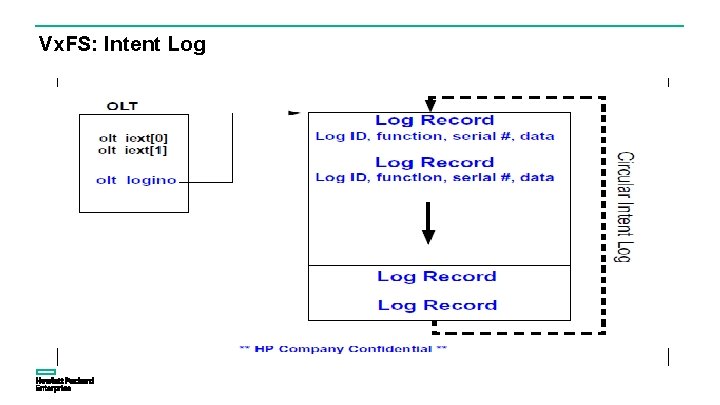

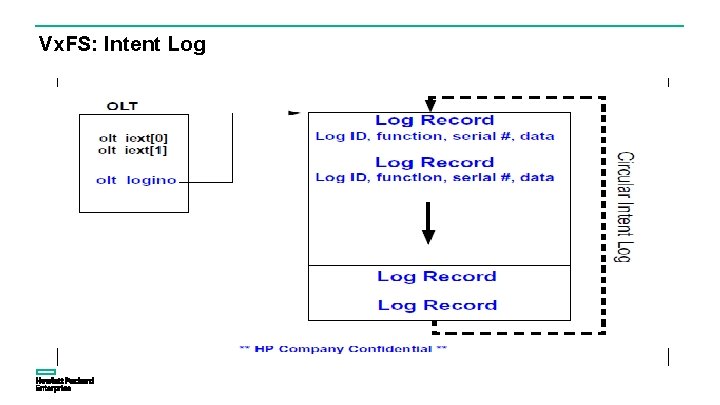

VxFS: Intent Log

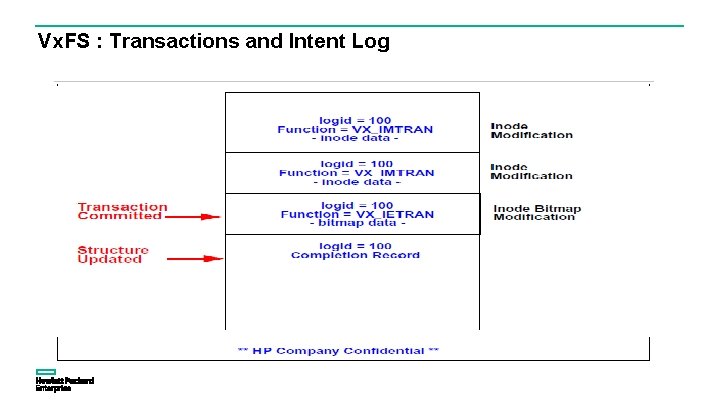

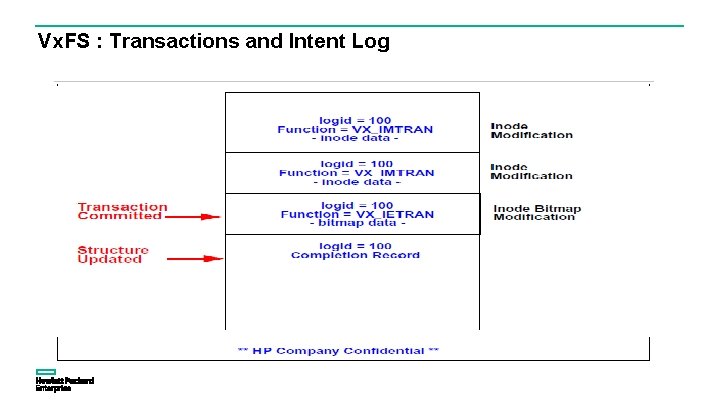

VxFS: Transactions and Intent Log

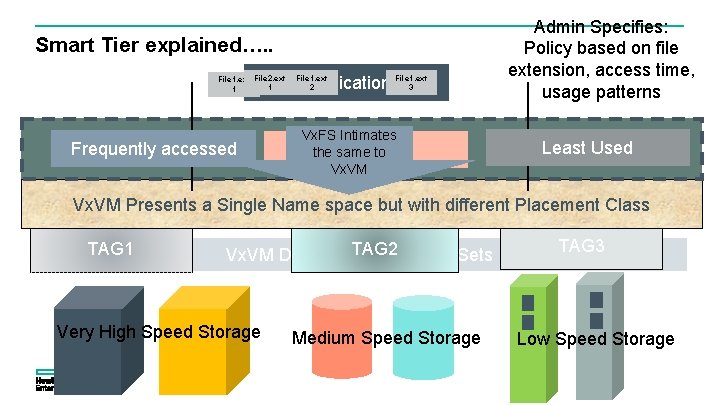

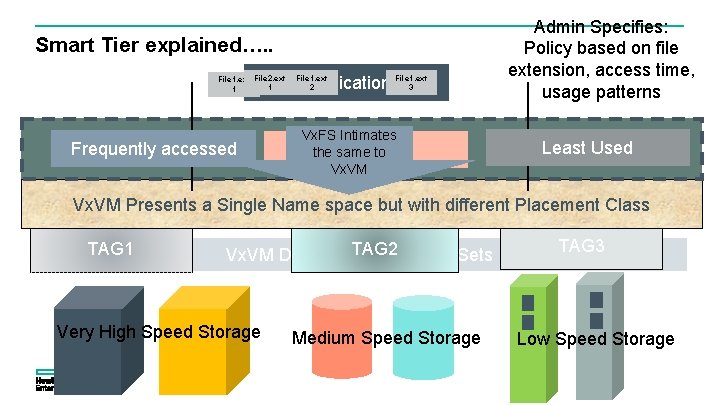

Smart Tier explained (figure): VxFS presents a single name space but places files in different placement classes backed by VxVM volume sets (very high speed, medium speed and low speed storage). The admin specifies a policy based on file extension, access time and usage patterns, so that frequently accessed files and least used files end up on different tiers.

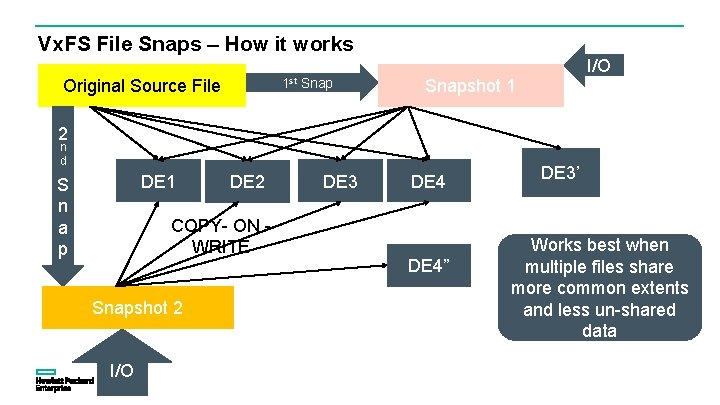

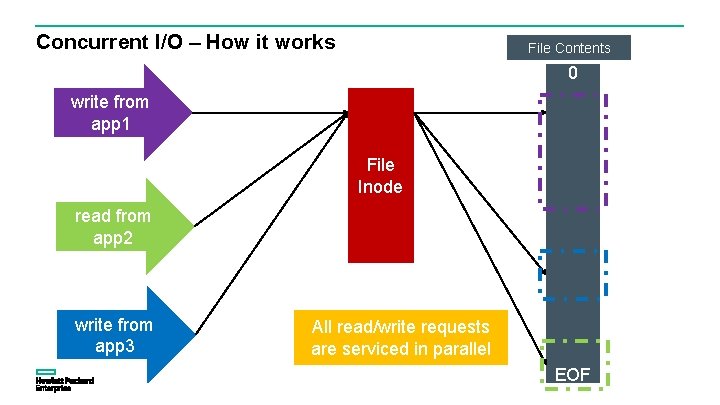

VxFS File Snaps: How it works (figure): the original source file and its snapshots (Snapshot 1, Snapshot 2) share data extents (DE1 to DE4); I/O that modifies a shared extent triggers copy-on-write. Works best when multiple files share many common extents and have little unshared data.

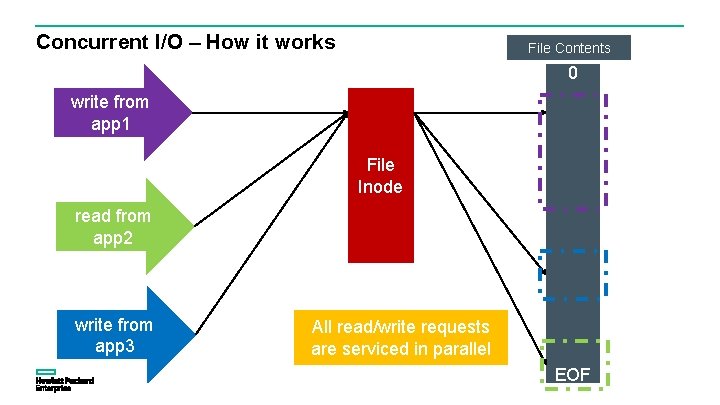

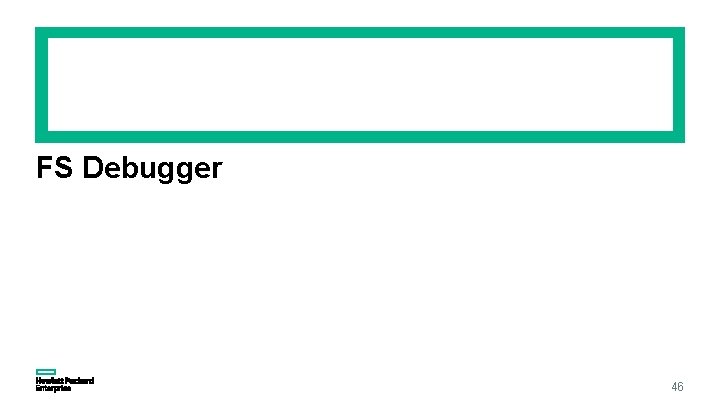

Concurrent I/O: How it works (figure): a write from app 1, a read from app 2 and a write from app 3 target the contents of the same file (one file inode, offsets 0 to EOF). All read/write requests are serviced in parallel.

FS Debugger

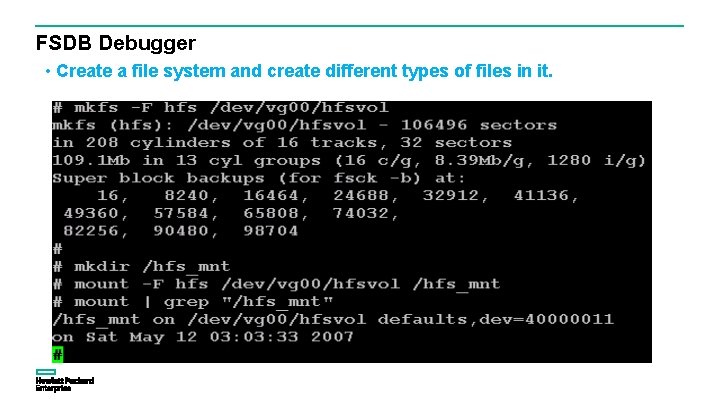

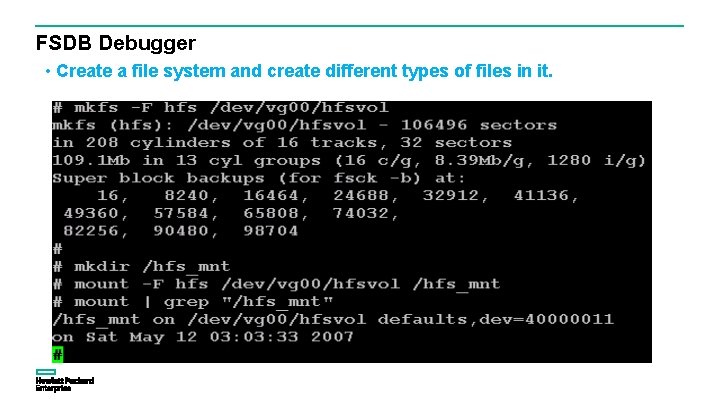

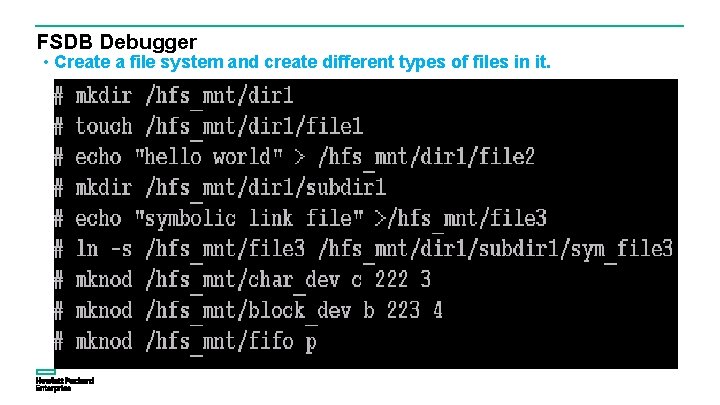

FSDB Debugger • Create a file system and create different types of files in it.

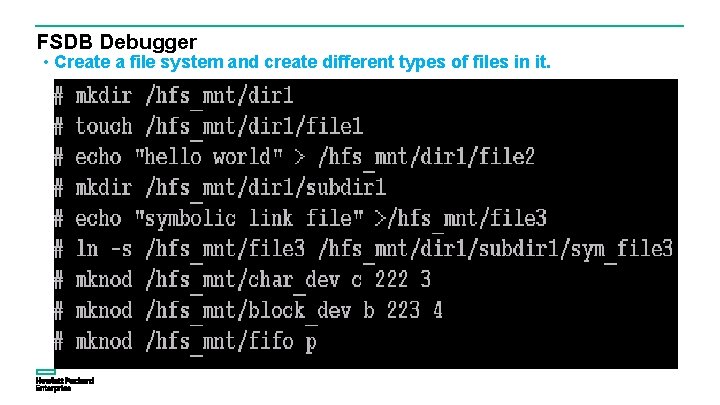

FSDB Debugger • Create a file system and create different types of files in it.

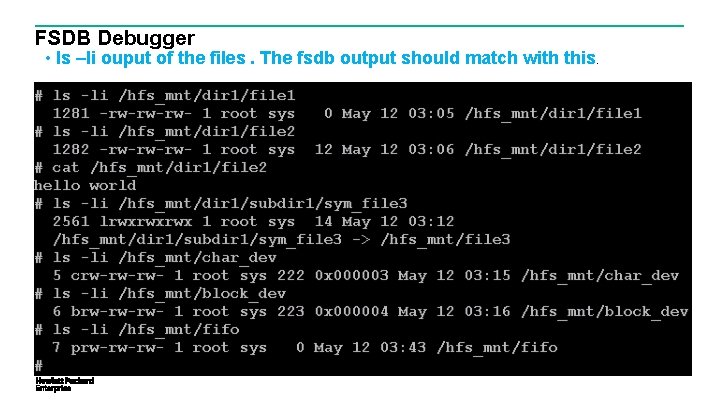

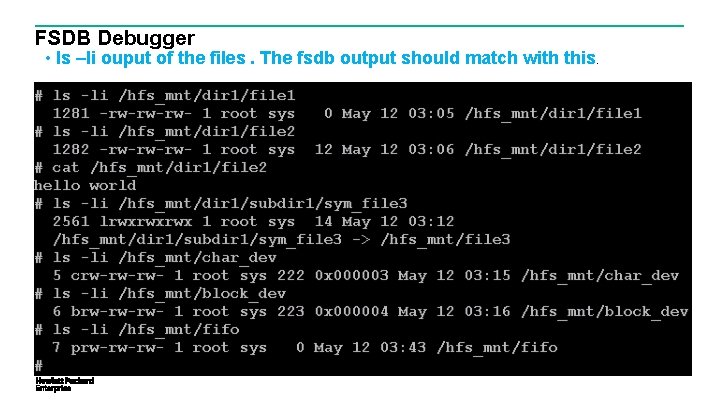

FSDB Debugger • ls -li output of the files. The fsdb output should match this.

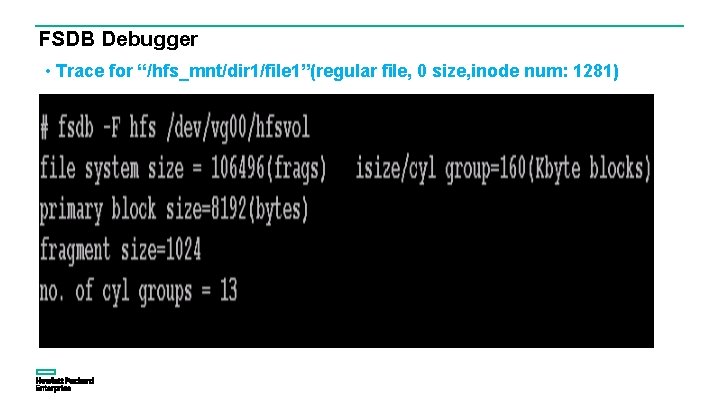

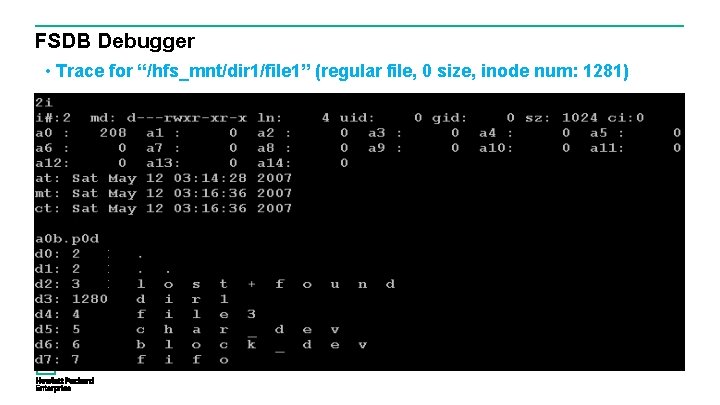

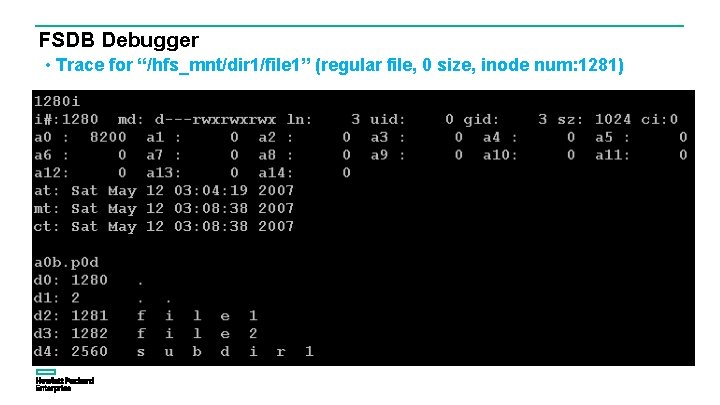

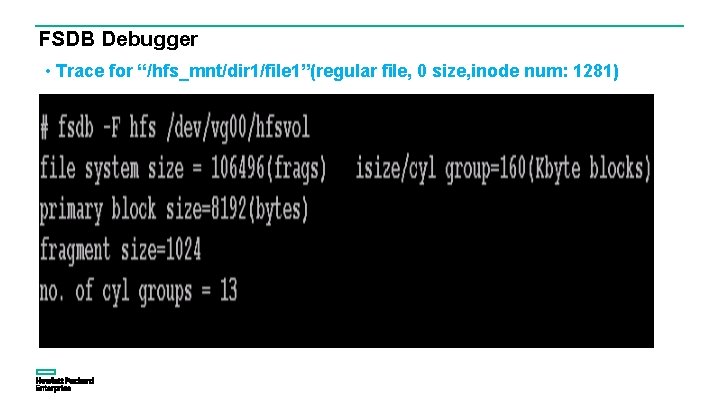

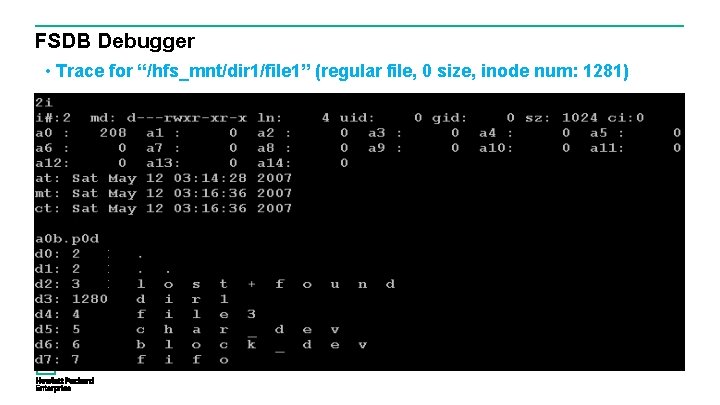

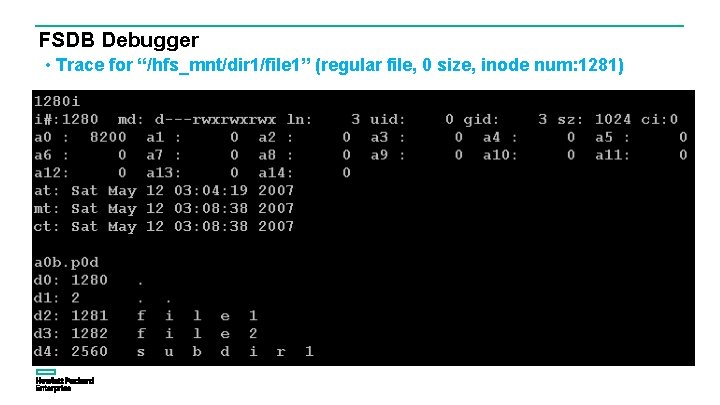

FSDB Debugger • Trace for “/hfs_mnt/dir1/file1” (regular file, 0 size, inode num: 1281)

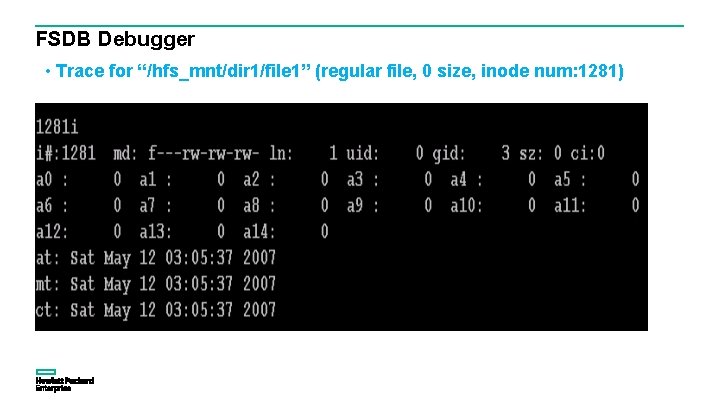

FSDB Debugger • Trace for “/hfs_mnt/dir1/file1” (regular file, 0 size, inode num: 1281)

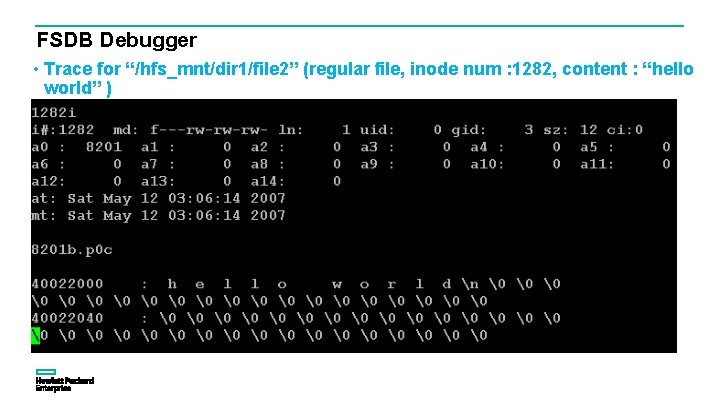

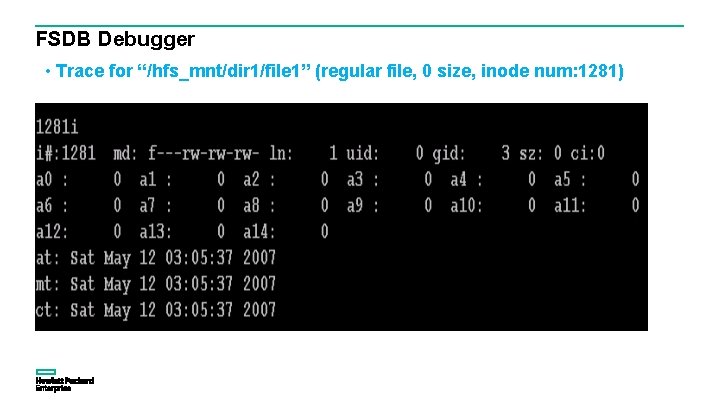

FSDB Debugger • Trace for “/hfs_mnt/dir1/file2” (regular file, inode num: 1282, content: “hello world”)

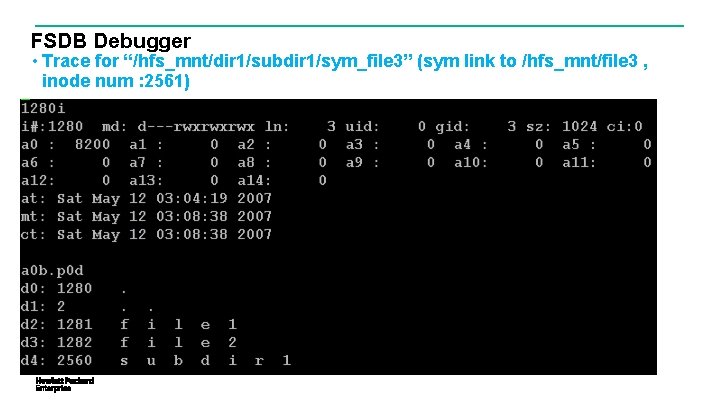

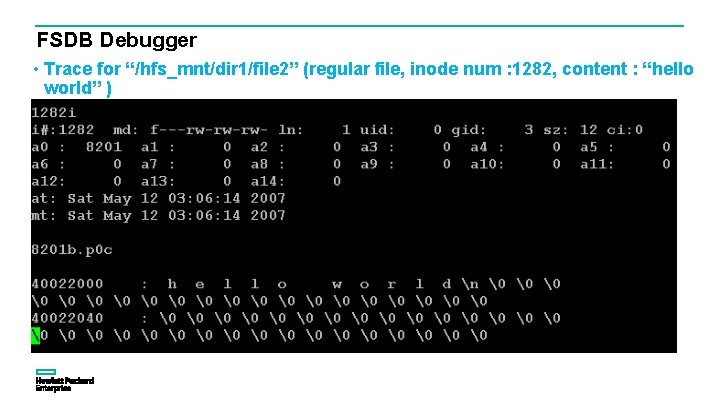

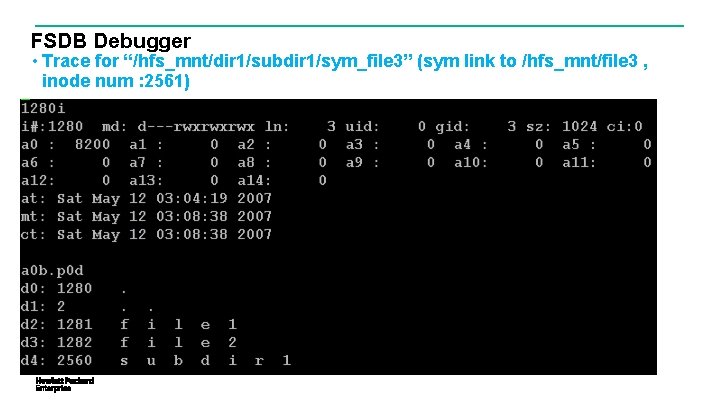

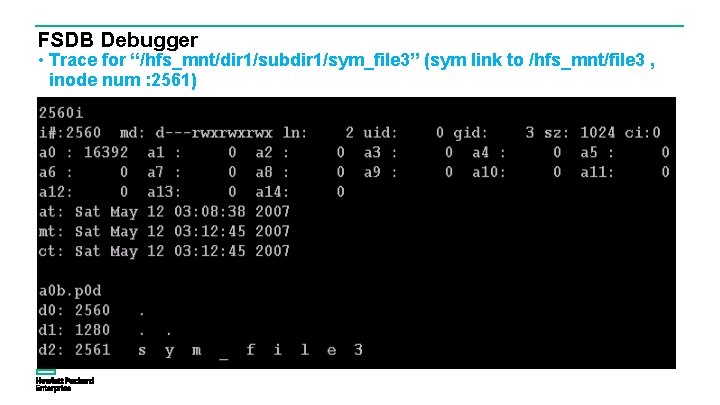

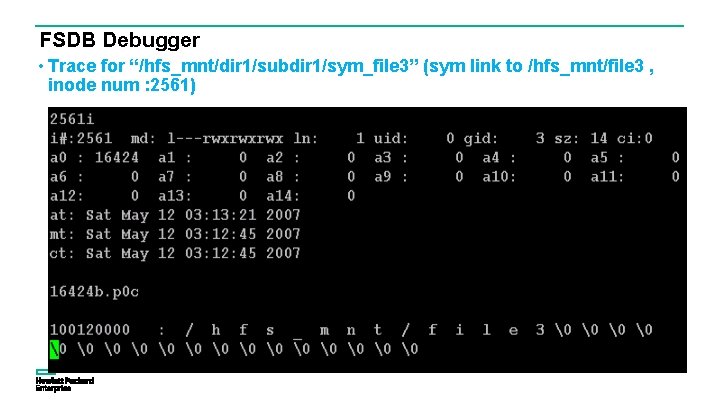

FSDB Debugger • Trace for “/hfs_mnt/dir1/subdir1/sym_file3” (sym link to /hfs_mnt/file3, inode num: 2561)

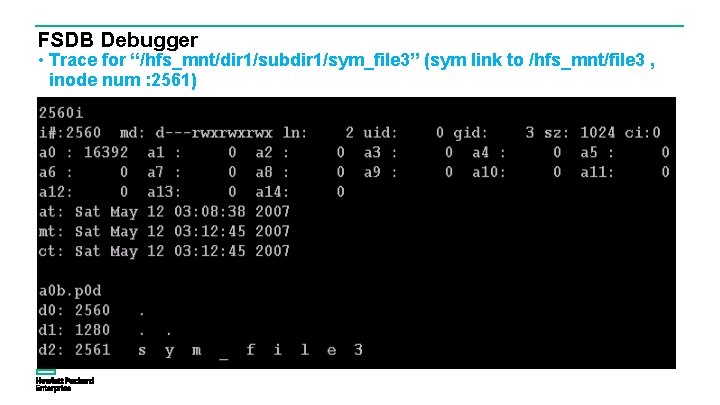

FSDB Debugger • Trace for “/hfs_mnt/dir1/subdir1/sym_file3” (sym link to /hfs_mnt/file3, inode num: 2561)

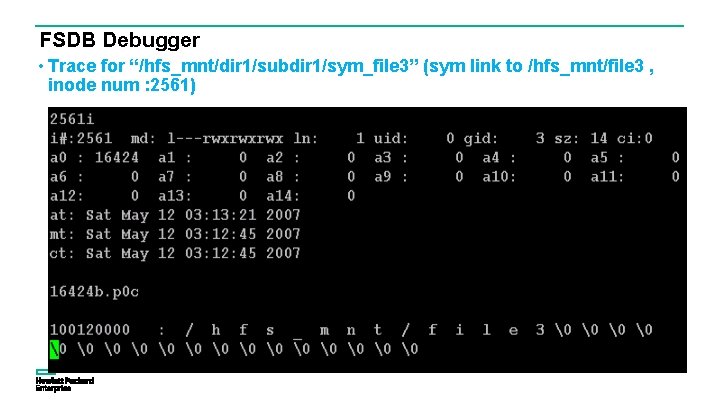

FSDB Debugger • Trace for “/hfs_mnt/dir1/subdir1/sym_file3” (sym link to /hfs_mnt/file3, inode num: 2561)

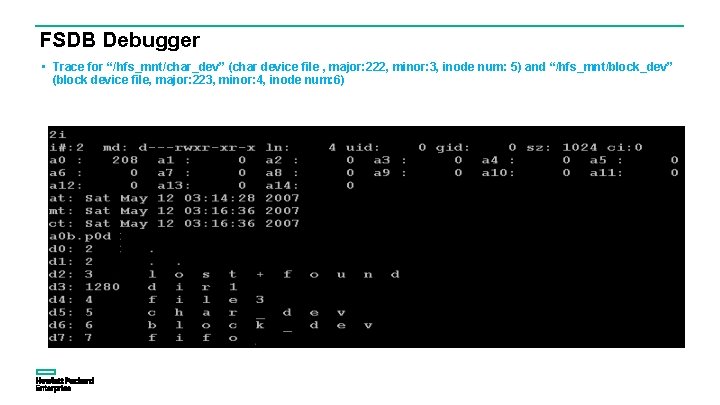

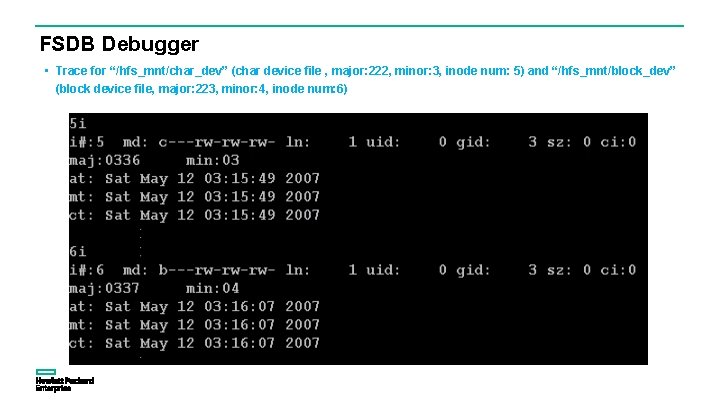

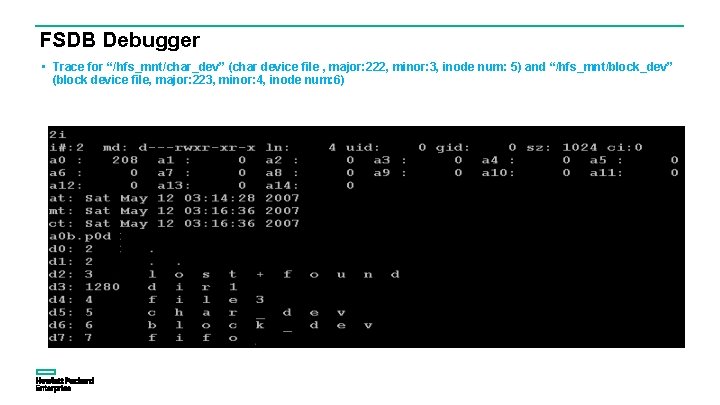

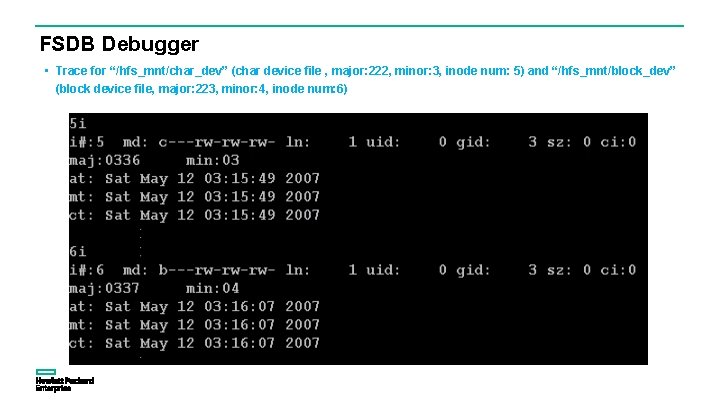

FSDB Debugger • Trace for “/hfs_mnt/char_dev” (char device file, major: 222, minor: 3, inode num: 5) and “/hfs_mnt/block_dev” (block device file, major: 223, minor: 4, inode num: 6)

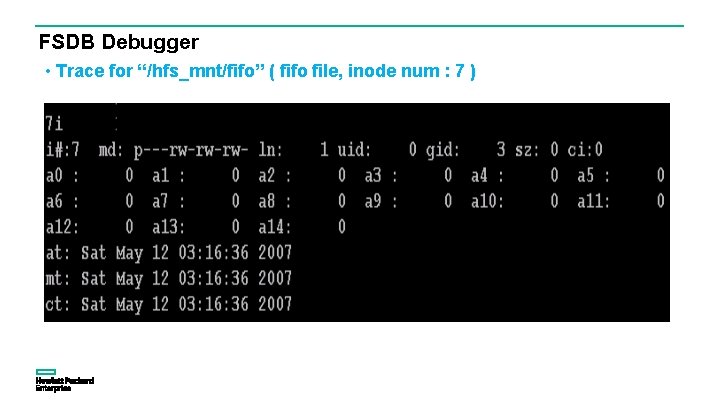

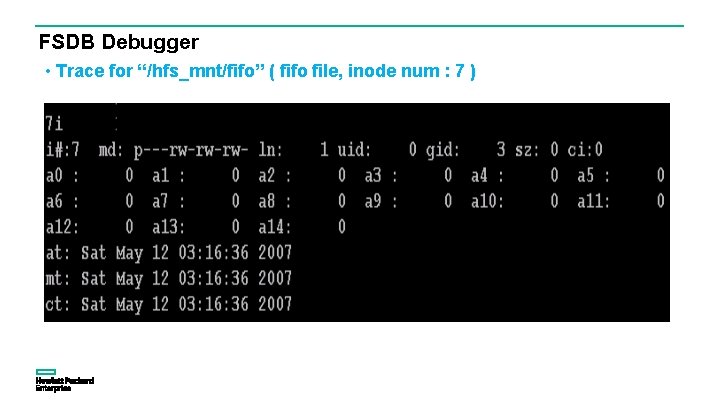

FSDB Debugger • Trace for “/hfs_mnt/fifo” ( fifo file, inode num : 7 )

Inode Cache

![Inode Cache LRU Hash idev inumber ihead ifreeh ihhead0 ihhead1 ifreet ninode Inode Cache : LRU Hash (i_dev, i_number) ihead[] ifreeh ih_head[0] ih_head[1] ifreet ninode :](https://slidetodoc.com/presentation_image_h/36d8c9c73ea03beaf437af9a7152f415/image-62.jpg)

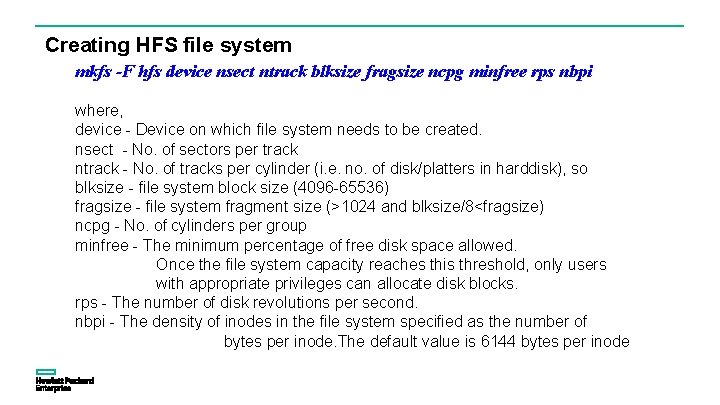

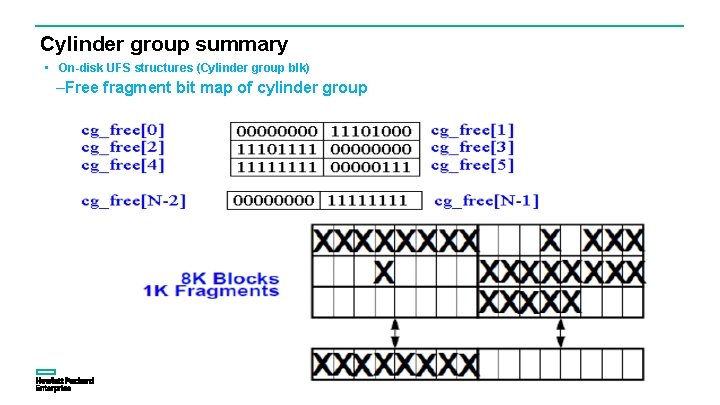

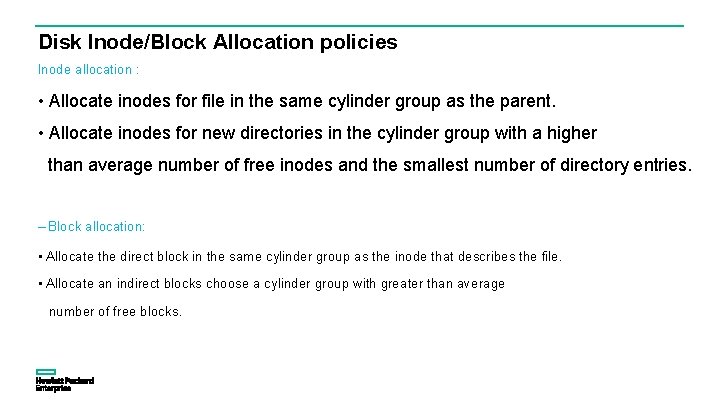

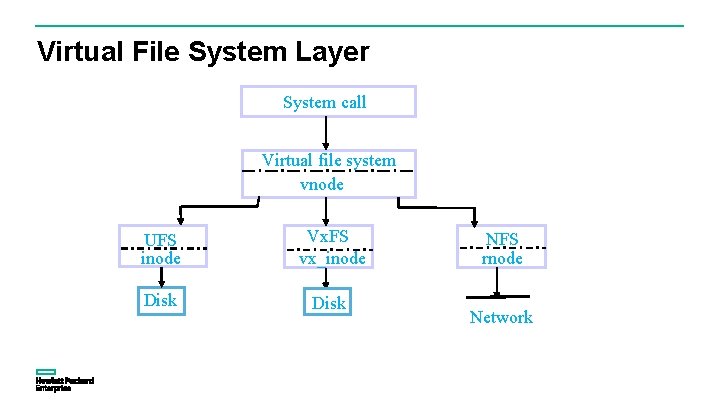

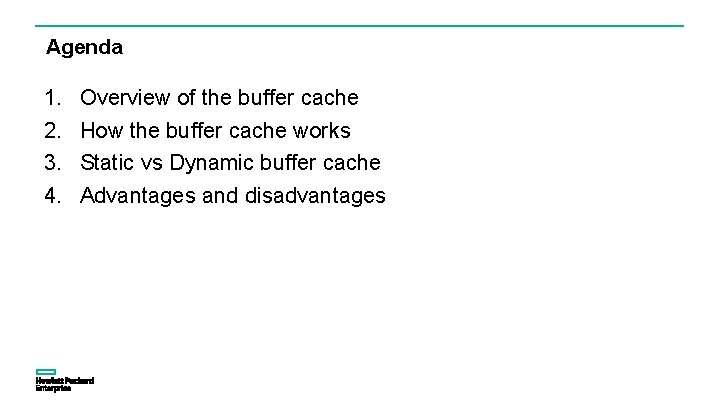

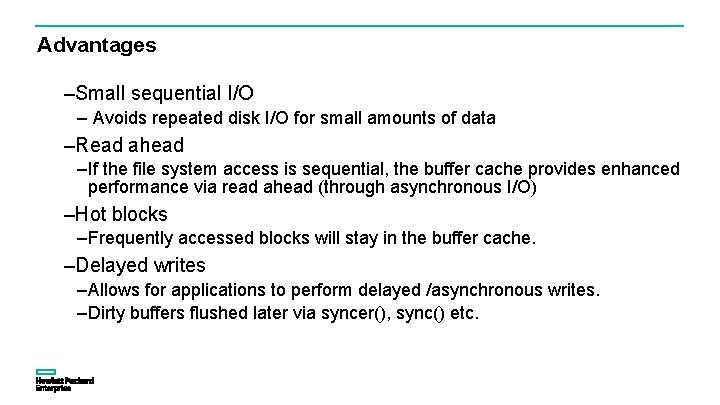

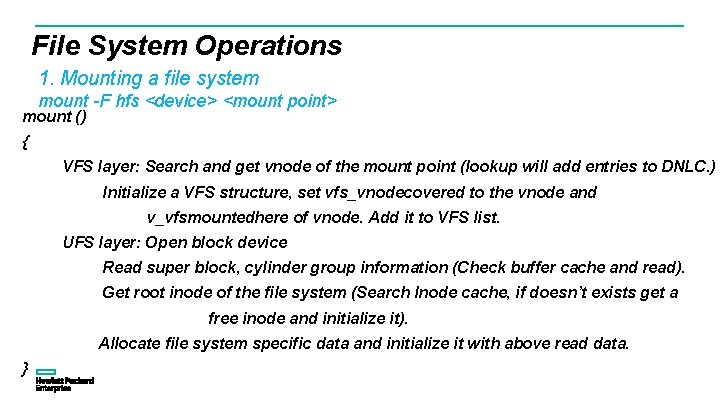

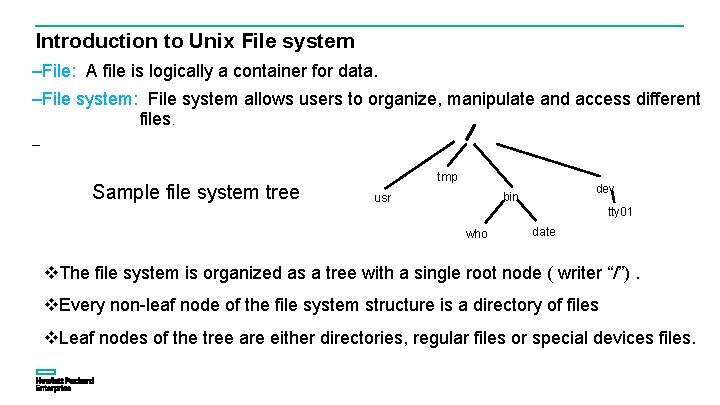

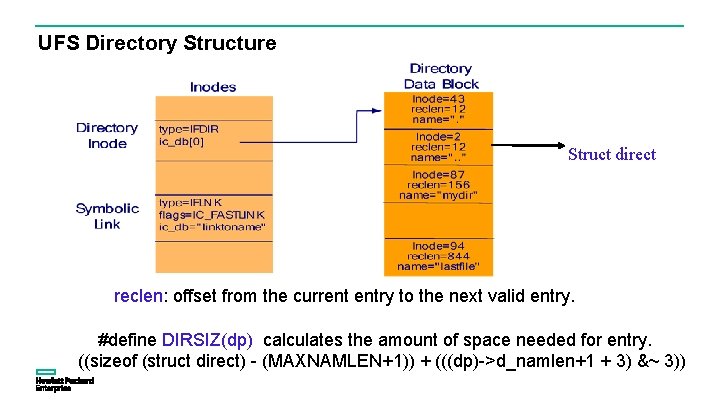

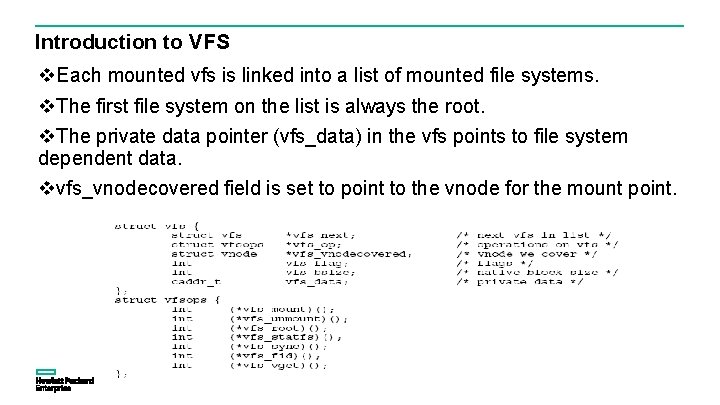

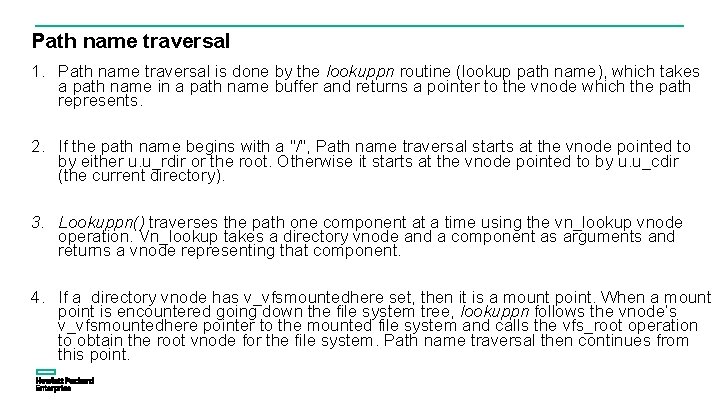

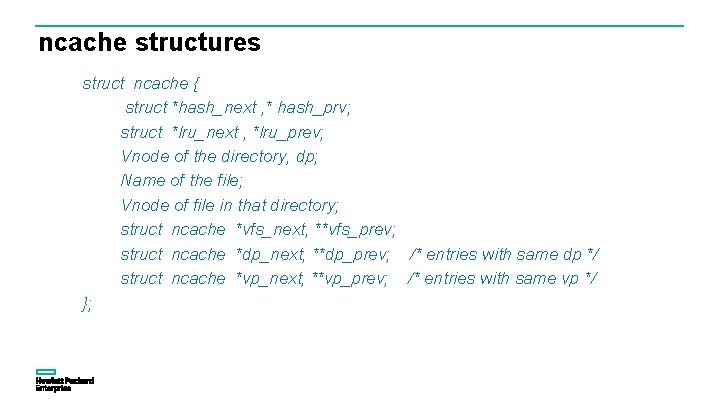

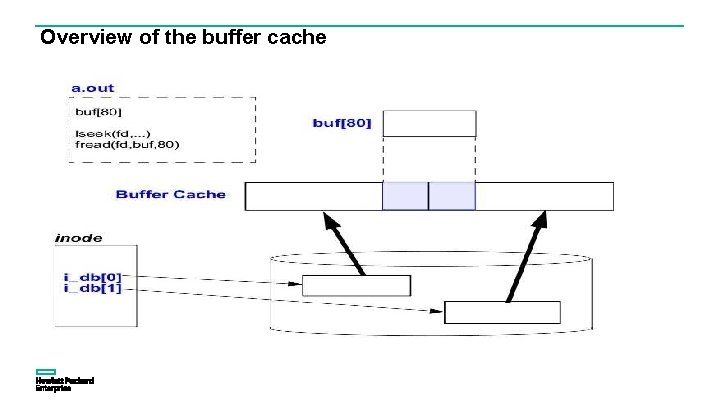

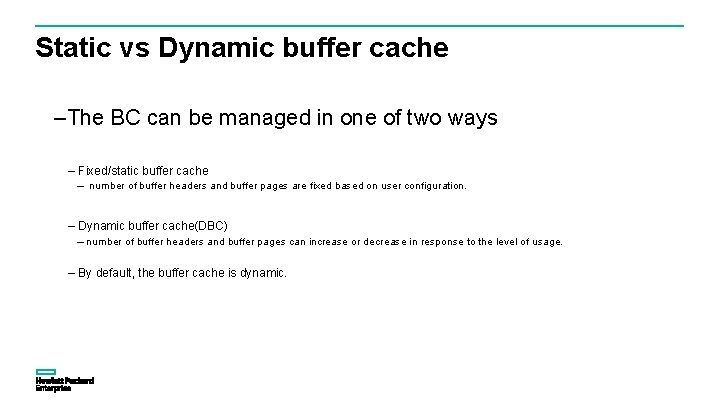

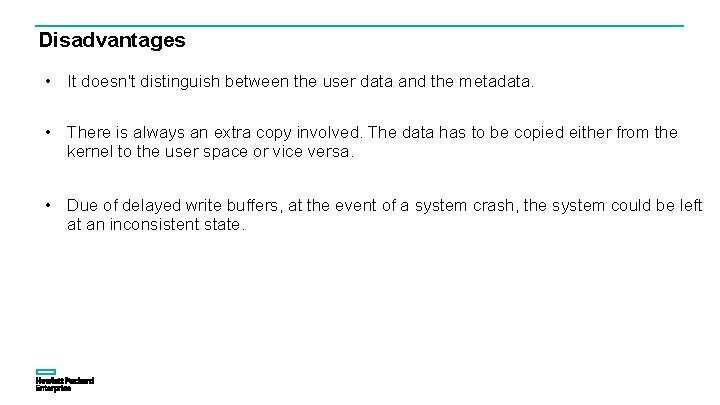

Inode Cache: LRU free list and hash on (i_dev, i_number)

- ninode: Number of inodes in the cache.
- ihead[]: Pointers to inodes which represent the hash chains (ih_head[0], ih_head[1], ...).
- ifreeh / ifreet: Pointer to the first / last inode in the free list.

![Inode Cache ihinit Initializes the Inode cache Allocates the incore inodes ninode Inode Cache ihinit( ): Initializes the Inode cache. • Allocates the in-core inodes [ninode]](https://slidetodoc.com/presentation_image_h/36d8c9c73ea03beaf437af9a7152f415/image-63.jpg)

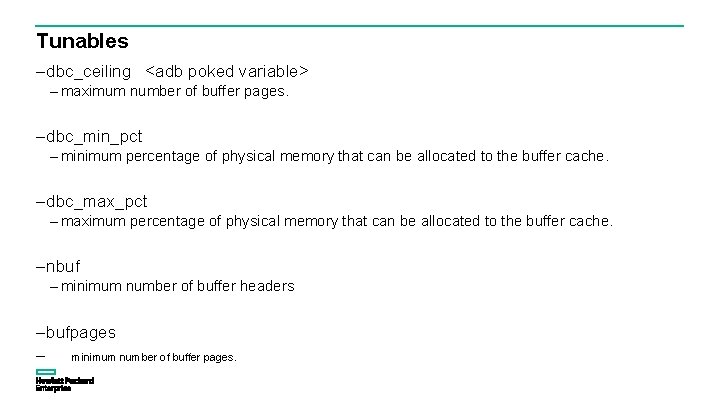

Inode Cache

ihinit(): Initializes the inode cache.

- Allocates the in-core inodes [ninode] and inserts them into the inode free list.
- Initializes the inode hash lists to have no entries.

iget(): Returns the locked in-core inode.

- Searches the hash list with the given device and inode number.
- If the inode does not exist in the cache, gets a free inode, initializes it [copies the on-disk inode, device id, inode number] and inserts it into the appropriate hash list.
- If the free list is empty, a table full message is displayed.

iput(): Returns the inode to the inode cache.
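
The three routines can be sketched as a toy in user space. This is not the HP-UX implementation: locking and reading the on-disk inode are omitted, the table sizes and hash function are arbitrary assumptions, the free list is singly linked, and `iput()` here unhashes an inode as soon as its count drops to zero, which is a simplification.

```c
#include <stddef.h>

#define NINODE 4   /* toy cache size; the real value is a kernel tunable */
#define INOHSZ 2   /* toy number of hash chains */

struct inode {
    struct inode *i_hnext;   /* next inode on the same hash chain */
    struct inode *i_fnext;   /* next inode on the free list */
    int           i_dev;
    unsigned      i_number;
    int           i_count;   /* reference count */
};

static struct inode  itable[NINODE];
static struct inode *ihead[INOHSZ];
static struct inode *ifreeh;

static unsigned ihash(int dev, unsigned ino)
{
    return ((unsigned)dev + ino) % INOHSZ;
}

void ihinit(void)
{
    for (int i = 0; i < INOHSZ; i++)
        ihead[i] = NULL;                 /* hash chains start empty */
    ifreeh = NULL;
    for (int i = NINODE - 1; i >= 0; i--) {
        itable[i].i_hnext = NULL;
        itable[i].i_count = 0;
        itable[i].i_fnext = ifreeh;      /* every inode starts free */
        ifreeh = &itable[i];
    }
}

struct inode *iget(int dev, unsigned ino)
{
    unsigned h = ihash(dev, ino);
    struct inode *ip;

    for (ip = ihead[h]; ip != NULL; ip = ip->i_hnext)
        if (ip->i_dev == dev && ip->i_number == ino) {
            ip->i_count++;               /* cache hit */
            return ip;
        }
    if ((ip = ifreeh) == NULL)
        return NULL;                     /* the "table full" case */
    ifreeh = ip->i_fnext;
    ip->i_dev = dev;
    ip->i_number = ino;
    ip->i_count = 1;
    ip->i_hnext = ihead[h];              /* insert on its hash chain */
    ihead[h] = ip;
    return ip;
}

void iput(struct inode *ip)
{
    struct inode **pp;

    if (--ip->i_count > 0)
        return;                          /* still referenced elsewhere */
    pp = &ihead[ihash(ip->i_dev, ip->i_number)];
    while (*pp != NULL && *pp != ip)
        pp = &(*pp)->i_hnext;
    if (*pp != NULL)
        *pp = ip->i_hnext;               /* unhash (simplification) */
    ip->i_hnext = NULL;
    ip->i_fnext = ifreeh;                /* back on the free list */
    ifreeh = ip;
}
```

A second `iget()` for the same (device, inode number) pair returns the same in-core inode with its count raised, which is the point of the hash lookup.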

VFS – Vnode Architecture

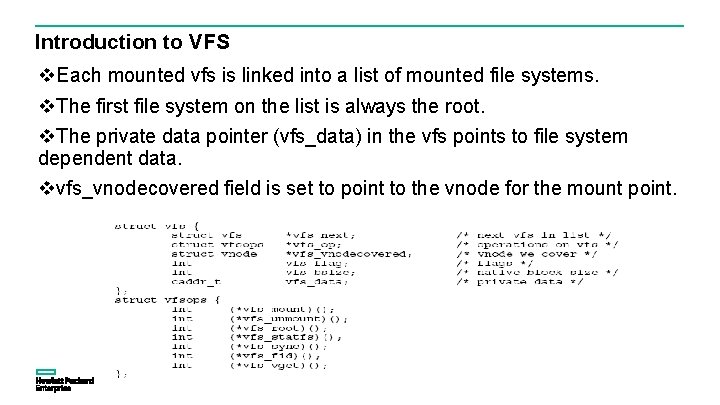

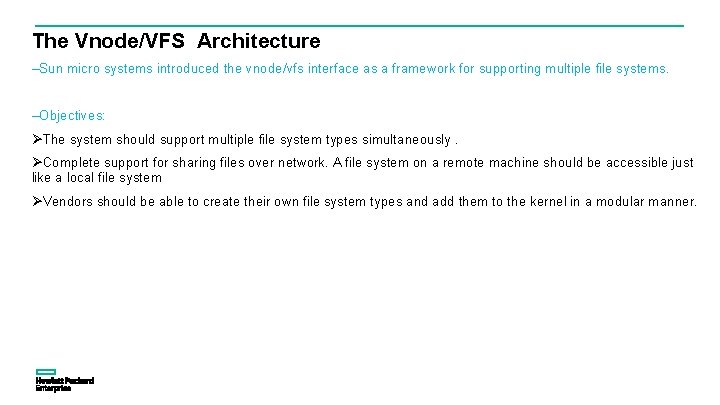

The Vnode/VFS Architecture

Sun Microsystems introduced the vnode/vfs interface as a framework for supporting multiple file systems.

Objectives:

- The system should support multiple file system types simultaneously.
- Complete support for sharing files over the network. A file system on a remote machine should be accessible just like a local file system.
- Vendors should be able to create their own file system types and add them to the kernel in a modular manner.

Virtual File System Layer (figure): a system call enters the virtual file system (vnode), which dispatches to UFS (inode), VxFS (vx_inode) or NFS (rnode); UFS and VxFS go to disk, NFS goes to the network.

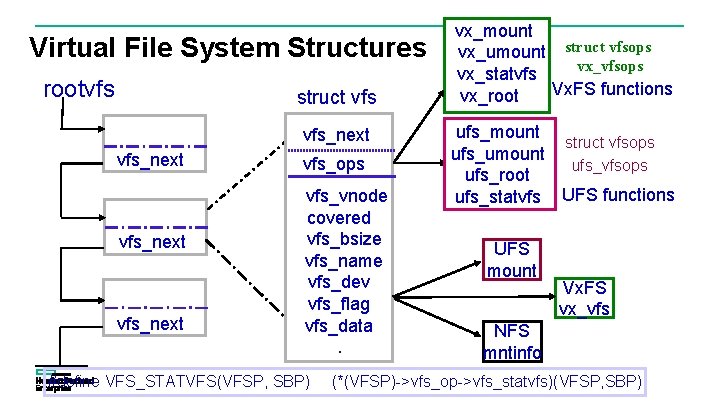

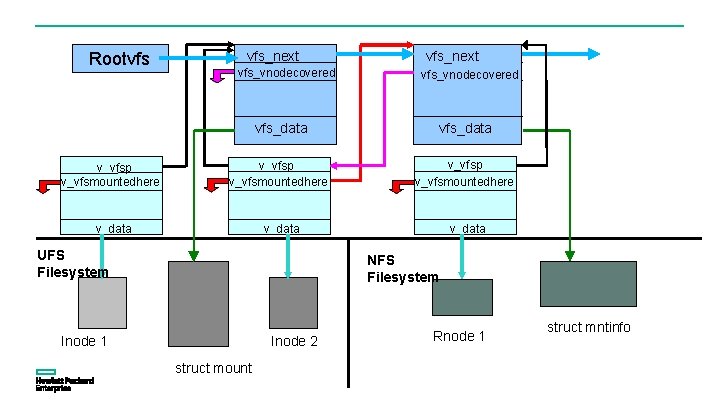

Introduction to VFS Each mounted vfs is linked into a list of mounted file systems. The first file system on the list is always the root. The private data pointer (vfs_data) in the vfs points to file system dependent data. vfs_vnodecovered field is set to point to the vnode for the mount point.

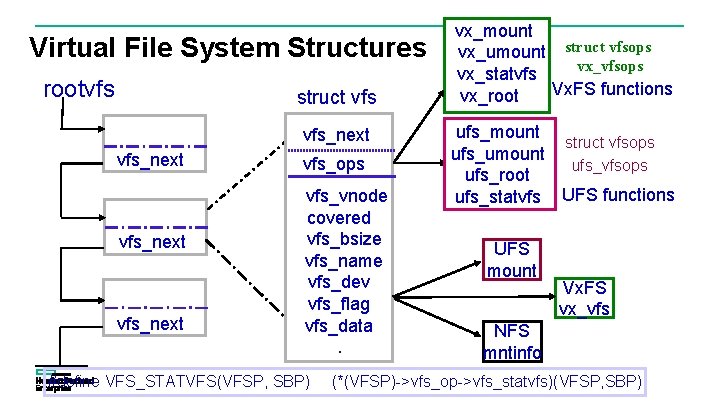

Virtual File System Structures (figure): rootvfs heads the list of struct vfs (vfs_next, vfs_ops, vfs_vnodecovered, vfs_bsize, vfs_name, vfs_dev, vfs_flag, vfs_data). The ops field points to a struct vfsops: for VxFS the functions vx_mount, vx_umount, vx_root and vx_statvfs; for UFS (ufs_vfsops) the functions ufs_mount, ufs_umount, ufs_root and ufs_statvfs. vfs_data points to the private data: struct mount for UFS, vx_vfs for VxFS, mntinfo for NFS.

    #define VFS_STATVFS(VFSP, SBP) (*(VFSP)->vfs_op->vfs_statvfs)(VFSP, SBP)

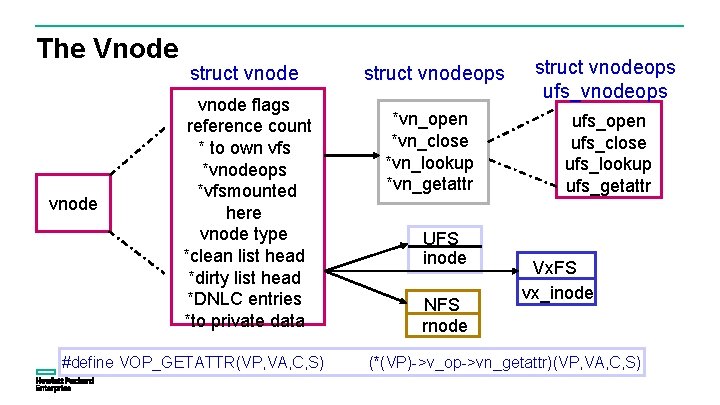

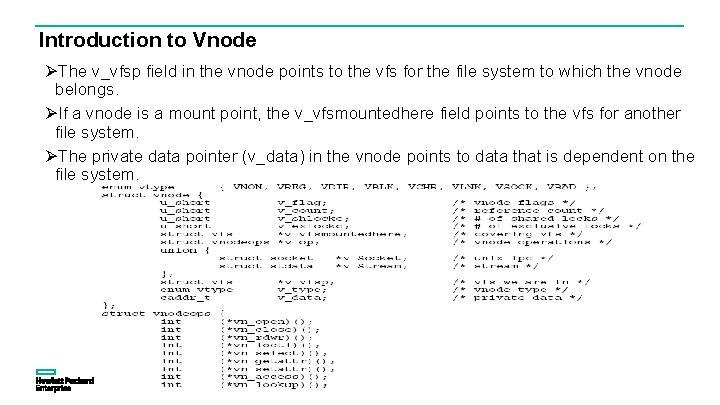

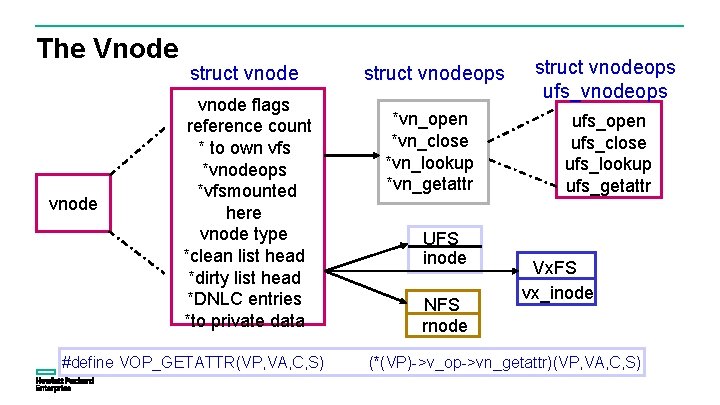

Introduction to Vnode The v_vfsp field in the vnode points to the vfs for the file system to which the vnode belongs. If a vnode is a mount point, the v_vfsmountedhere field points to the vfs for another file system. The private data pointer (v_data) in the vnode points to data that is dependent on the file system.

The Vnode (figure): struct vnode holds flags, a reference count, a pointer to its own vfs, a pointer to the vnodeops, the vfs mounted here, the vnode type, the clean list head, the dirty list head, its DNLC entries and a pointer to private data. The struct vnodeops vector (vn_open, vn_close, vn_lookup, vn_getattr, ...) is filled per file system; for UFS (ufs_vnodeops) with ufs_open, ufs_close, ufs_lookup and ufs_getattr. The private data is the UFS inode, NFS rnode or VxFS vx_inode.

    #define VOP_GETATTR(VP, VA, C, S) (*(VP)->v_op->vn_getattr)(VP, VA, C, S)
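
The dispatch through the operations vector is ordinary C function-pointer indirection. The sketch below shows the pattern with a hypothetical "toyfs" and a `VOP_GETATTR` cut down to two arguments; the `vattr` layout, `toy_inode` and every `toyfs_` name are assumptions for illustration, not kernel definitions.

```c
#include <stddef.h>

struct vnode;
struct vattr { long va_size; };

struct vnodeops {
    int (*vn_getattr)(struct vnode *vp, struct vattr *va);
};

struct vnode {
    const struct vnodeops *v_op;    /* file-system specific operations */
    void                  *v_data;  /* file-system private data */
};

/* Simplified two-argument form of the macro shown above. */
#define VOP_GETATTR(VP, VA) ((*(VP)->v_op->vn_getattr)((VP), (VA)))

/* Hypothetical file system: its private per-file structure. */
struct toy_inode { long size; };

static int toyfs_getattr(struct vnode *vp, struct vattr *va)
{
    const struct toy_inode *ip = vp->v_data;

    va->va_size = ip->size;
    return 0;
}

const struct vnodeops toyfs_vnodeops = { toyfs_getattr };
```

The caller only sees `VOP_GETATTR(vp, &va)`; which file system answers is decided by the `v_op` pointer stored in the vnode.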

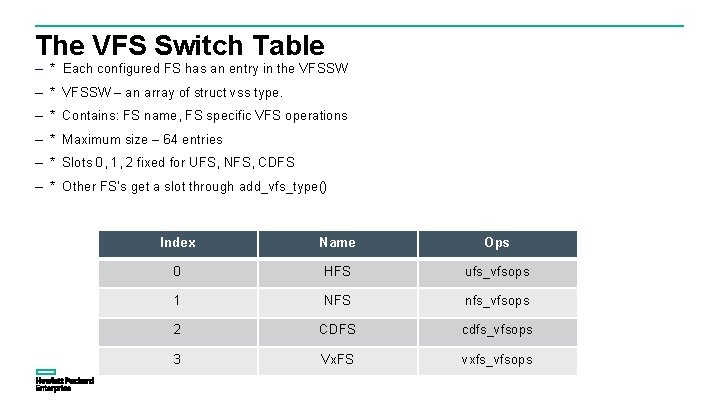

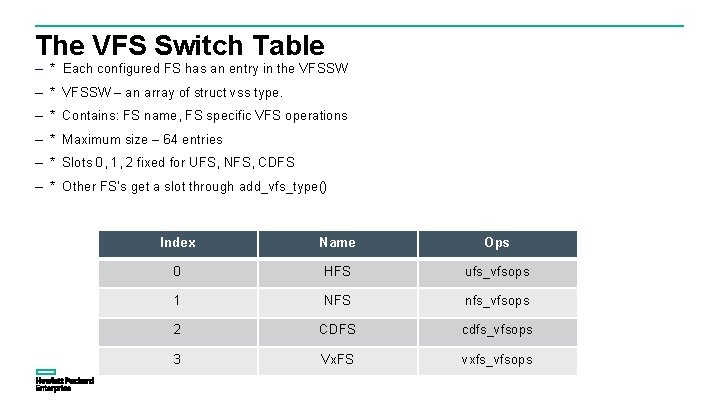

The VFS Switch Table

- Each configured FS has an entry in the VFSSW.
- VFSSW: an array of struct vss type.
- Contains: FS name, FS specific VFS operations.
- Maximum size: 64 entries.
- Slots 0, 1, 2 are fixed for UFS, NFS, CDFS.
- Other FS's get a slot through add_vfs_type().

| Index | Name | Ops |
|-------|------|-----|
| 0 | HFS | ufs_vfsops |
| 1 | NFS | nfs_vfsops |
| 2 | CDFS | cdfs_vfsops |
| 3 | VxFS | vxfs_vfsops |
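
A switch table of this kind is a fixed-size array searched by name. The sketch below is a user-space illustration under stated assumptions: the entry layout, the field names and the functions `vfssw_find` and `vfssw_add` are hypothetical (the real registration routine named on the slide is add_vfs_type(), whose signature is not shown in the source), and `struct vfsops` is reduced to a one-field stand-in.

```c
#include <stddef.h>
#include <string.h>

#define VFSSW_MAX 64

struct vfsops { const char *vfs_tag; };   /* stand-in for the ops vector */

struct vfssw_entry {
    const char          *vsw_name;   /* hypothetical field names */
    const struct vfsops *vsw_ops;
};

const struct vfsops ufs_vfsops  = { "ufs" };
const struct vfsops nfs_vfsops  = { "nfs" };
const struct vfsops cdfs_vfsops = { "cdfs" };

/* Slots 0..2 are fixed; the remaining slots start out empty. */
static struct vfssw_entry vfssw[VFSSW_MAX] = {
    { "hfs",  &ufs_vfsops  },
    { "nfs",  &nfs_vfsops  },
    { "cdfs", &cdfs_vfsops },
};

/* Return the ops vector registered under `name`, or NULL. */
const struct vfsops *vfssw_find(const char *name)
{
    for (int i = 0; i < VFSSW_MAX; i++)
        if (vfssw[i].vsw_name != NULL && strcmp(vfssw[i].vsw_name, name) == 0)
            return vfssw[i].vsw_ops;
    return NULL;
}

/* Register a new type in the first free slot after the fixed ones.
 * Returns the slot index, or -1 if the table is full. */
int vfssw_add(const char *name, const struct vfsops *ops)
{
    for (int i = 3; i < VFSSW_MAX; i++)
        if (vfssw[i].vsw_name == NULL) {
            vfssw[i].vsw_name = name;
            vfssw[i].vsw_ops = ops;
            return i;
        }
    return -1;
}
```

Mount processing would then look up the requested file system type in this table to obtain the operations vector to store in the new vfs.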

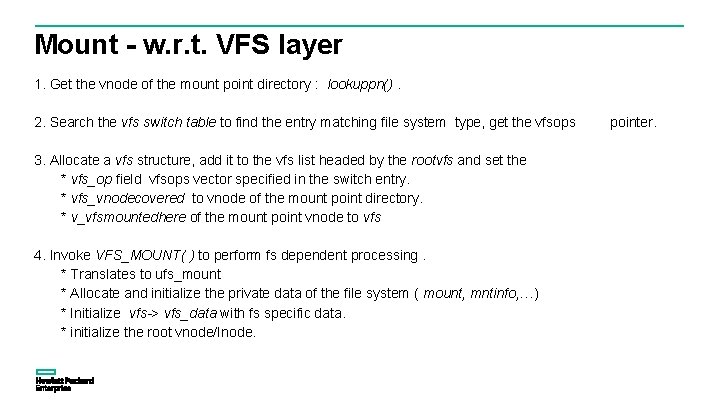

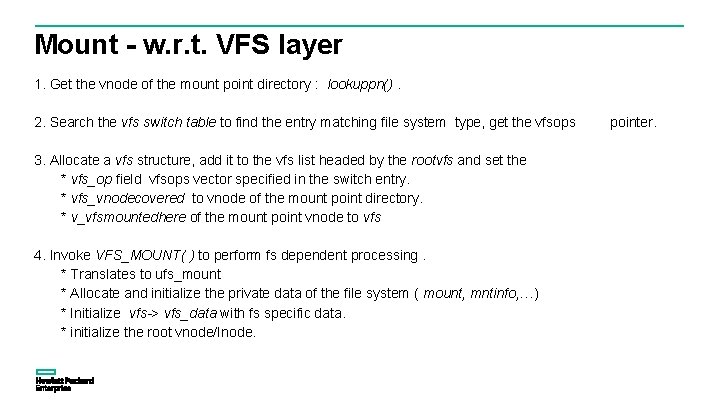

Mount with respect to the VFS layer

1. Get the vnode of the mount point directory: lookuppn().
2. Search the vfs switch table to find the entry matching the file system type and get the vfsops.
3. Allocate a vfs structure, add it to the vfs list headed by rootvfs and set:
   - vfs_op to the vfsops vector specified in the switch entry.
   - vfs_vnodecovered to the vnode of the mount point directory.
   - v_vfsmountedhere of the mount point vnode to the vfs.
4. Invoke VFS_MOUNT() to perform fs dependent processing:
   - Translates to ufs_mount.
   - Allocate and initialize the private data of the file system (mount, mntinfo, …).
   - Initialize vfs->vfs_data with fs specific data.
   - Initialize the root vnode/inode pointer.

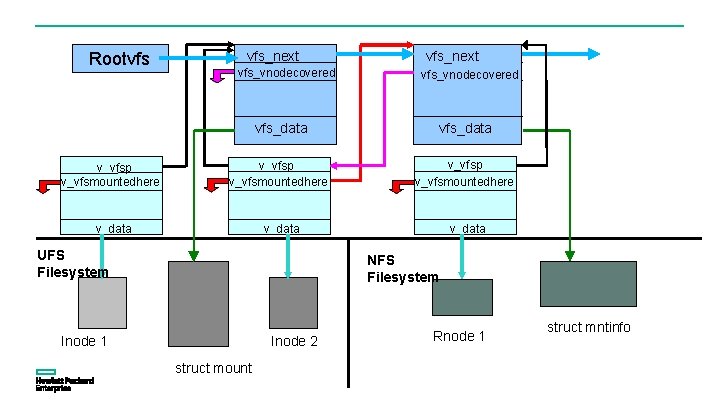

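Figure: rootvfs heads the vfs list. Each vfs carries vfs_next, vfs_vnodecovered and vfs_data; each vnode carries v_vfsp, v_vfsmountedhere and v_data. For the UFS file system the private data are struct mount and inodes (Inode 1, Inode 2); for the NFS file system they are struct mntinfo and rnodes (Rnode 1).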

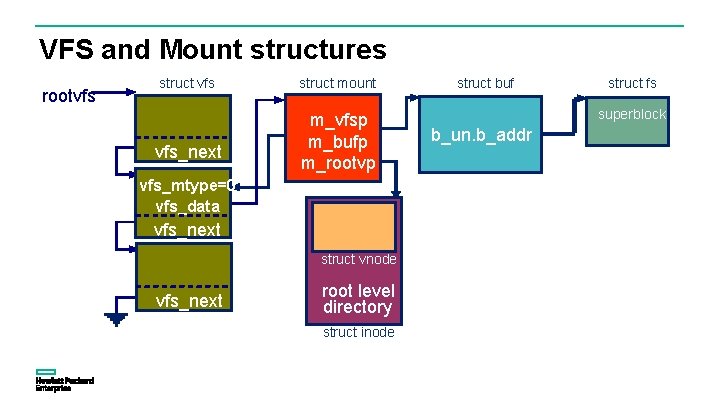

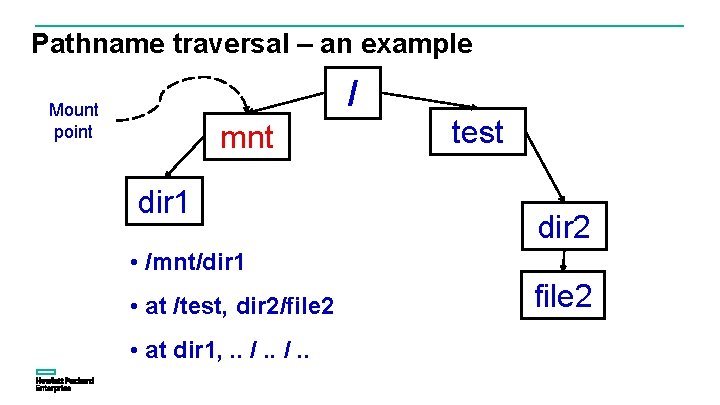

VFS and Mount structures (figure): rootvfs heads the list of struct vfs (vfs_next, vfs_mtype=0, vfs_data). The figure also shows struct mount (m_vfsp, m_bufp, m_rootvp), the struct vnode and struct inode of the root level directory, and a struct buf whose b_un.b_addr holds the struct fs superblock.

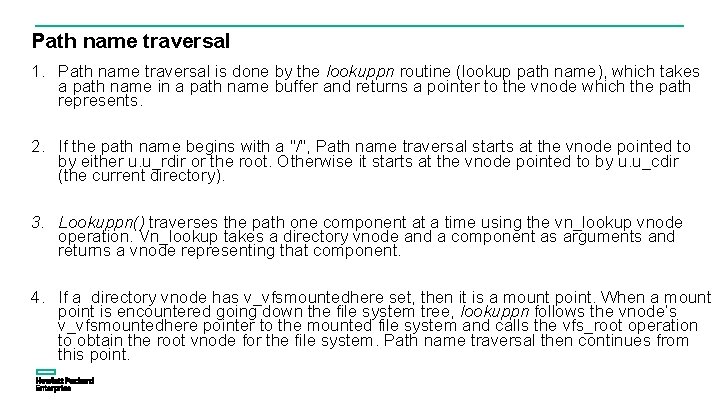

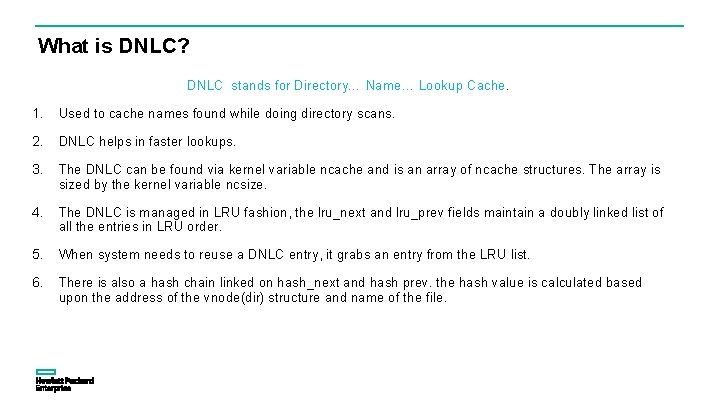

Path name traversal

1. Path name traversal is done by the lookuppn routine (lookup path name), which takes a path name in a path name buffer and returns a pointer to the vnode which the path represents.
2. If the path name begins with a "/", path name traversal starts at the vnode pointed to by either u.u_rdir or the root. Otherwise it starts at the vnode pointed to by u.u_cdir (the current directory).
3. lookuppn() traverses the path one component at a time using the vn_lookup vnode operation. vn_lookup takes a directory vnode and a component as arguments and returns a vnode representing that component.
4. If a directory vnode has v_vfsmountedhere set, then it is a mount point. When a mount point is encountered going down the file system tree, lookuppn follows the vnode's v_vfsmountedhere pointer to the mounted file system and calls the vfs_root operation to obtain the root vnode for the file system. Path name traversal then continues from this point.

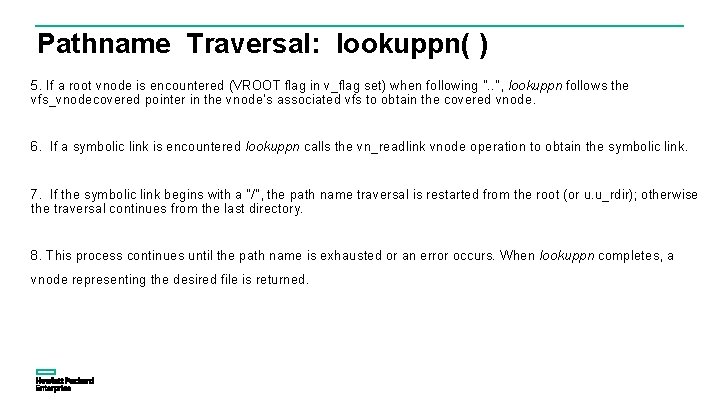

Pathname Traversal: lookuppn()

5. If a root vnode is encountered (VROOT flag in v_flag set) when following "..", lookuppn follows the vfs_vnodecovered pointer in the vnode's associated vfs to obtain the covered vnode.
6. If a symbolic link is encountered, lookuppn calls the vn_readlink vnode operation to obtain the symbolic link.
7. If the symbolic link begins with a "/", the path name traversal is restarted from the root (or u.u_rdir); otherwise the traversal continues from the last directory.
8. This process continues until the path name is exhausted or an error occurs. When lookuppn completes, a vnode representing the desired file is returned.
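
The component-at-a-time walk can be sketched on a tiny in-memory tree. This is a deliberately reduced illustration: `struct node`, `lookup_one` and `lookuppn_toy` are hypothetical names, each directory holds at most four children, and mount points and symbolic links (steps 4 to 7) are left out.

```c
#include <stddef.h>
#include <string.h>

struct node {
    const char  *name;
    struct node *parent;      /* NULL for the root */
    struct node *child[4];    /* unused slots are NULL */
};

/* Resolve one component inside `dir`; plays the role of vn_lookup. */
static struct node *lookup_one(struct node *dir, const char *name, size_t len)
{
    if (len == 1 && name[0] == '.')
        return dir;
    if (len == 2 && name[0] == '.' && name[1] == '.')
        return dir->parent != NULL ? dir->parent : dir;
    for (int i = 0; i < 4; i++) {
        struct node *c = dir->child[i];

        if (c != NULL && strlen(c->name) == len && memcmp(c->name, name, len) == 0)
            return c;
    }
    return NULL;
}

/* Walk `path` from the root (absolute) or from `cwd` (relative). */
struct node *lookuppn_toy(struct node *root, struct node *cwd, const char *path)
{
    struct node *cur = (*path == '/') ? root : cwd;

    while (*path != '\0') {
        while (*path == '/')
            path++;                        /* skip separators */
        size_t len = strcspn(path, "/");   /* length of next component */
        if (len == 0)
            break;
        cur = lookup_one(cur, path, len);
        if (cur == NULL)
            return NULL;                   /* component not found */
        path += len;
    }
    return cur;
}
```

With a tree in which / contains mnt and test, an absolute path such as /mnt/dir1 starts at the root, while a relative path such as dir2/file2 starts at the current directory passed in as `cwd`.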

Pathname traversal – an example (figure: a tree rooted at / with the nodes mnt, dir1, test and dir2, including a mount point)

- /mnt/dir1
- at /test, dir2/file2
- at dir1, ../.. file2

Directory Name Lookup Cache (DNLC)

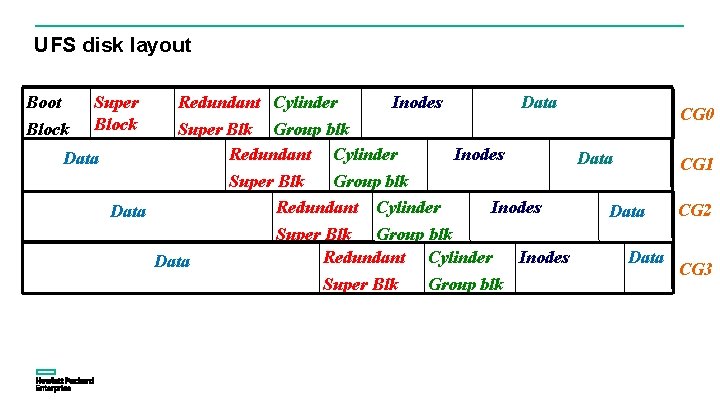

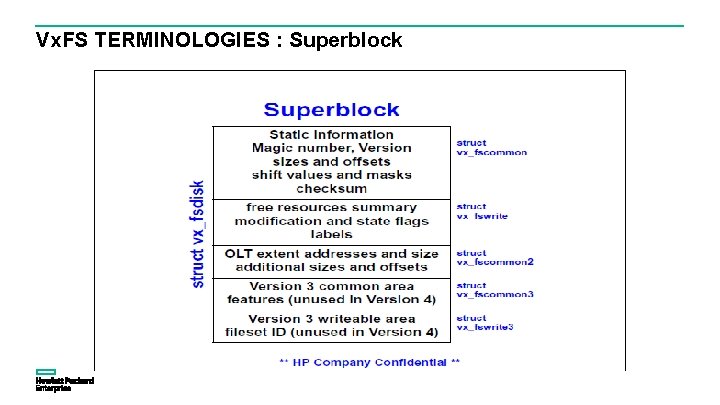

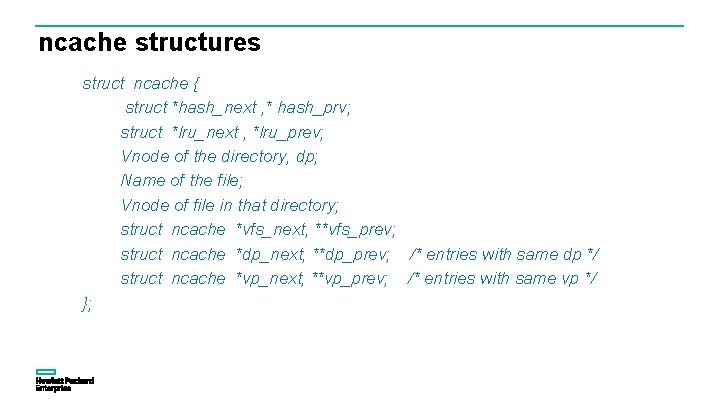

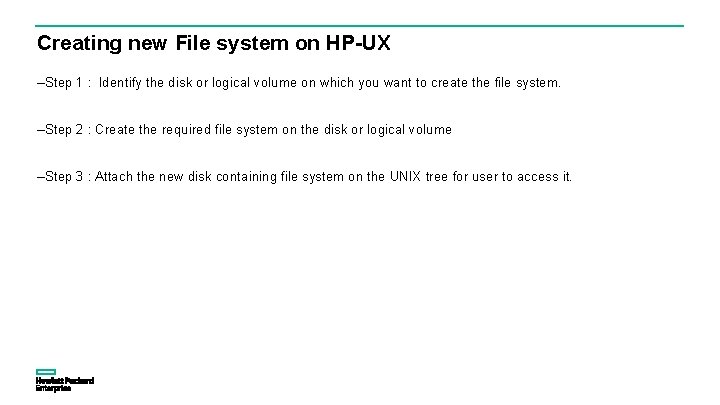

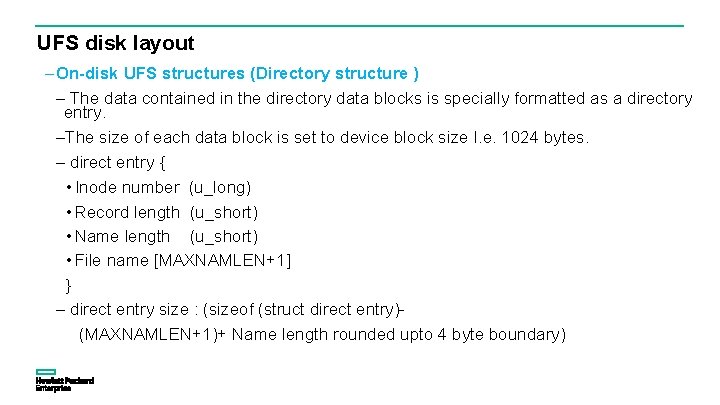

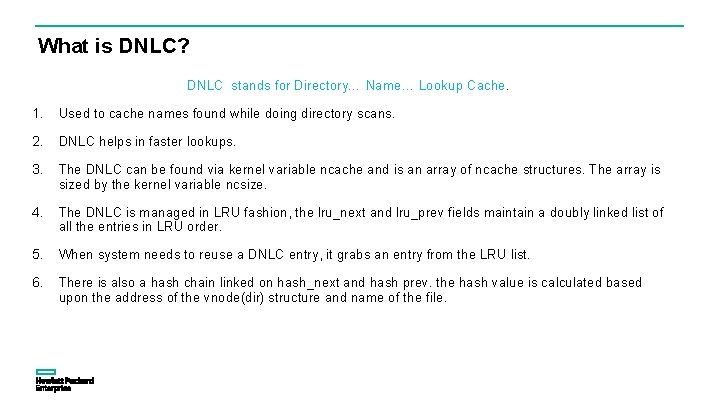

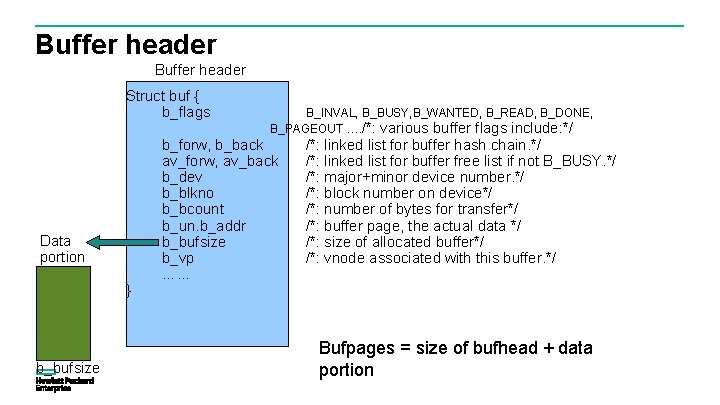

ncache structures

    struct ncache {
        struct ncache *hash_next, *hash_prev;   /* hash chain */
        struct ncache *lru_next, *lru_prev;     /* LRU list */
        /* dp: vnode of the directory */
        /* name of the file */
        /* vp: vnode of the file in that directory */
        struct ncache *vfs_next, **vfs_prev;
        struct ncache *dp_next, **dp_prev;      /* entries with same dp */
        struct ncache *vp_next, **vp_prev;      /* entries with same vp */
    };

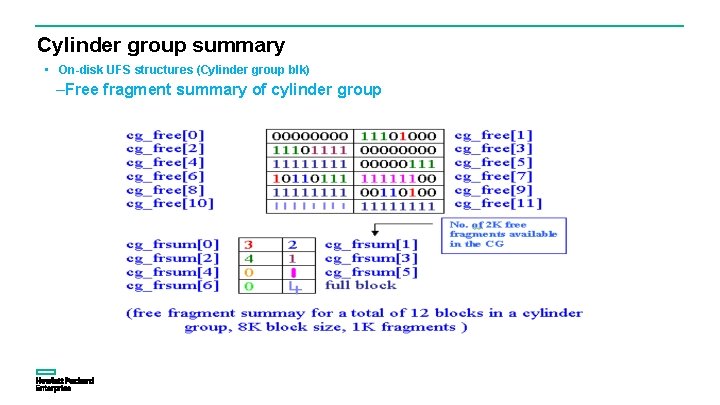

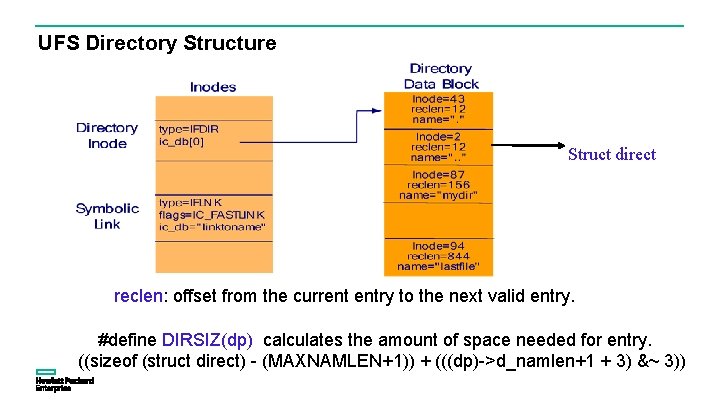

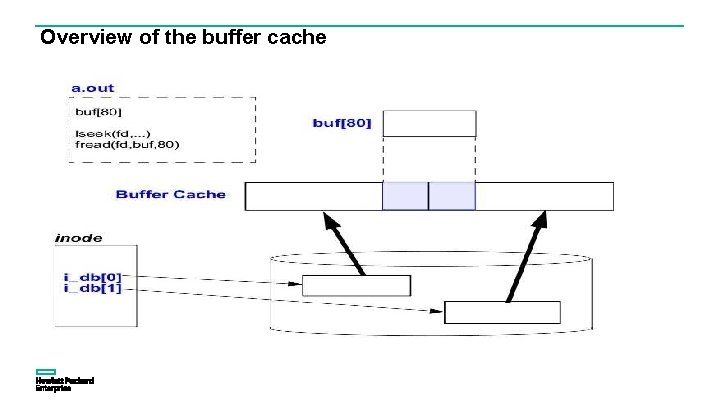

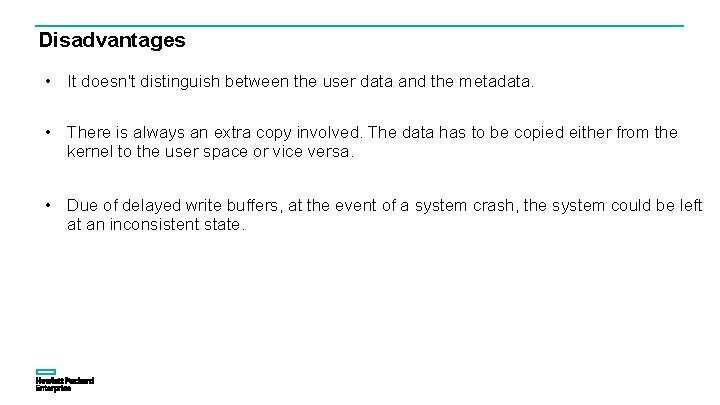

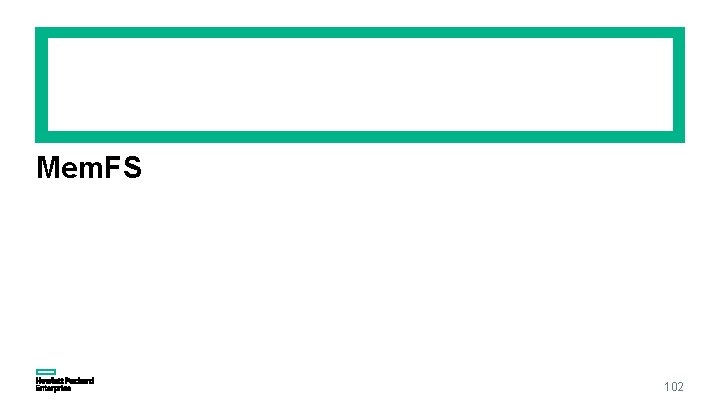

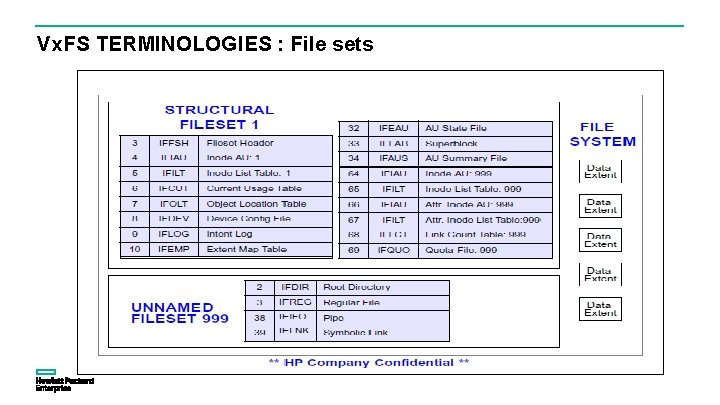

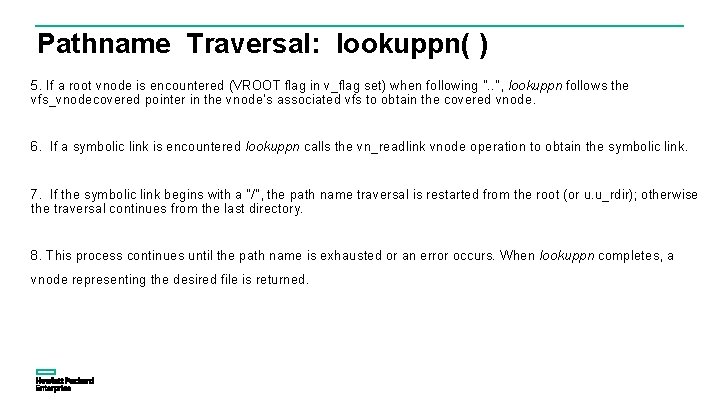

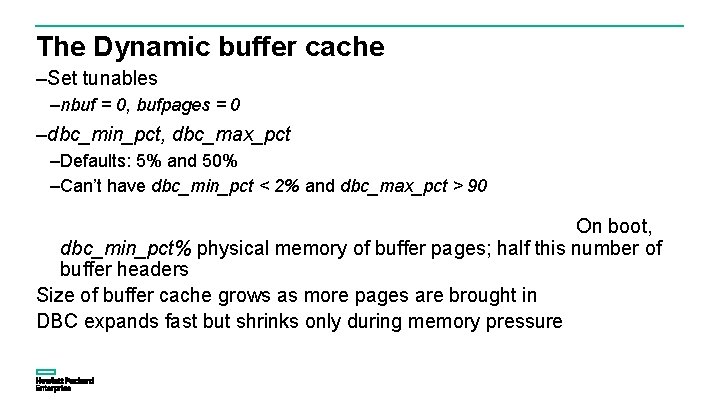

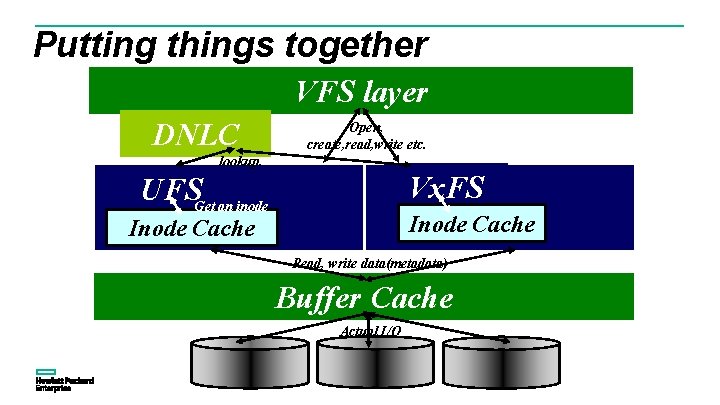

What is DNLC?

DNLC stands for Directory Name Lookup Cache.

1. It is used to cache names found while doing directory scans.
2. The DNLC helps in faster lookups.
3. The DNLC can be found via the kernel variable ncache and is an array of ncache structures. The array is sized by the kernel variable ncsize.
4. The DNLC is managed in LRU fashion; the lru_next and lru_prev fields maintain a doubly linked list of all the entries in LRU order.
5. When the system needs to reuse a DNLC entry, it grabs an entry from the LRU list.
6. There is also a hash chain linked on hash_next and hash_prev. The hash value is calculated from the address of the directory vnode structure and the name of the file.
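
The combination of a hash keyed on (directory vnode, name) and LRU reuse can be sketched in a few dozen lines. This toy is not the kernel's DNLC: the table sizes, the hash function and the function names are assumptions, and a "last used" stamp replaces the doubly linked LRU list to keep the example short.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define NCSIZE    4     /* toy number of cache entries */
#define NC_HASHSZ 8     /* toy number of hash buckets */
#define NC_NAMLEN 15    /* longer names are not cached in this toy */

struct ncache {
    struct ncache *hash_next;
    const void    *dp;                    /* directory vnode */
    const void    *vp;                    /* vnode of `name` inside dp */
    char           name[NC_NAMLEN + 1];
    unsigned long  stamp;                 /* 0 = unused, larger = newer */
};

static struct ncache  nc_table[NCSIZE];
static struct ncache *nc_hash[NC_HASHSZ];
static unsigned long  nc_clock;

/* Hash on the directory vnode address and the component name. */
static unsigned nc_index(const void *dp, const char *name)
{
    unsigned h = (unsigned)((uintptr_t)dp >> 4);

    while (*name != '\0')
        h = h * 31 + (unsigned char)*name++;
    return h % NC_HASHSZ;
}

const void *dnlc_lookup_toy(const void *dp, const char *name)
{
    struct ncache *nc;

    for (nc = nc_hash[nc_index(dp, name)]; nc != NULL; nc = nc->hash_next)
        if (nc->dp == dp && strcmp(nc->name, name) == 0) {
            nc->stamp = ++nc_clock;       /* mark as most recently used */
            return nc->vp;
        }
    return NULL;
}

void dnlc_enter_toy(const void *dp, const char *name, const void *vp)
{
    struct ncache *victim = &nc_table[0], **pp;
    unsigned h;

    if (strlen(name) > NC_NAMLEN || dnlc_lookup_toy(dp, name) != NULL)
        return;
    for (int i = 1; i < NCSIZE; i++)      /* pick unused or least recent */
        if (nc_table[i].stamp < victim->stamp)
            victim = &nc_table[i];
    if (victim->stamp != 0) {             /* unhash the recycled entry */
        pp = &nc_hash[nc_index(victim->dp, victim->name)];
        while (*pp != victim)
            pp = &(*pp)->hash_next;
        *pp = victim->hash_next;
    }
    victim->dp = dp;
    victim->vp = vp;
    strcpy(victim->name, name);
    victim->stamp = ++nc_clock;
    h = nc_index(dp, name);
    victim->hash_next = nc_hash[h];
    nc_hash[h] = victim;
}
```

Because a successful lookup refreshes the stamp, names that keep being looked up survive while names that are not used get recycled first.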

![nchash ncachencsize nclru dnlchashlocks DNLC Architecture hnext hprev lrunext lruprev name vp dp nc_hash ncache[ncsize] nc_lru[ dnlc_hash_locks] DNLC Architecture h_next, h_prev lru_next, lru_prev name vp dp](https://slidetodoc.com/presentation_image_h/36d8c9c73ea03beaf437af9a7152f415/image-81.jpg)

DNLC Architecture (figure): nc_hash and nc_lru[dnlc_hash_locks] link the ncache[ncsize] entries through h_next / h_prev and lru_next / lru_prev; each entry holds name, vp and dp.

HP-UX Buffer Cache

Agenda

1. Overview of the buffer cache
2. How the buffer cache works
3. Static vs Dynamic buffer cache
4. Advantages and disadvantages

Overview of the buffer cache

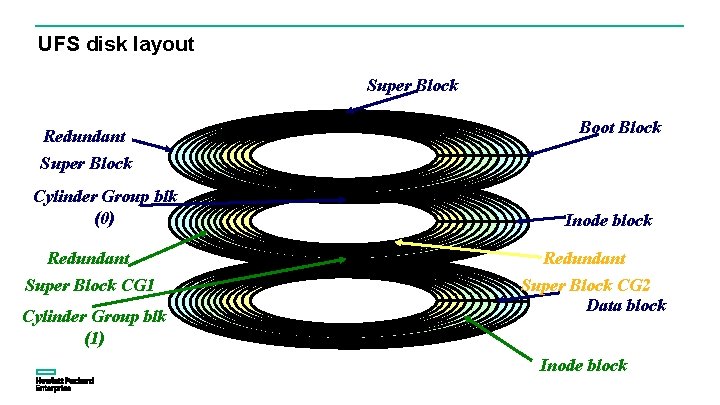

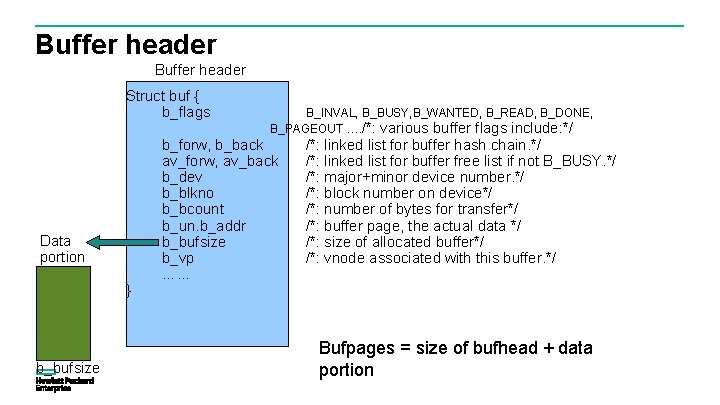

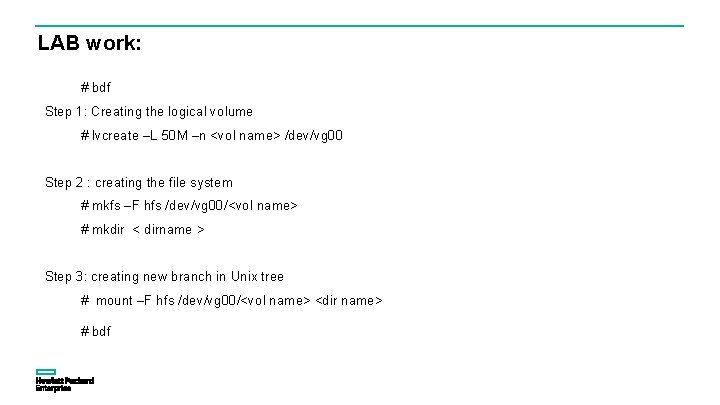

Buffer header

struct buf (a header carrying b_flags and b_bufsize, plus a data portion):

- b_flags: various buffer flags, including B_INVAL, B_BUSY, B_WANTED, B_READ, B_DONE, B_PAGEOUT, …
- b_forw, b_back: linked list for the buffer hash chain.
- av_forw, av_back: linked list for the buffer free list if not B_BUSY.
- b_dev: major + minor device number.
- b_blkno: block number on device.
- b_bcount: number of bytes for transfer.
- b_un.b_addr: buffer page, the actual data.
- b_bufsize: size of allocated buffer.
- b_vp: vnode associated with this buffer.
- …

Bufpages = size of bufhead + data portion

![Buffer cache structures struct bufhd bufhash tunable bufhashtablesize bfreelistCPUBQ struct bufhd int 32t Buffer cache structures struct bufhd *bufhash, tunable “bufhash_table_size” bfreelist[CPU][BQ_*] struct bufhd { int 32_t](https://slidetodoc.com/presentation_image_h/36d8c9c73ea03beaf437af9a7152f415/image-86.jpg)

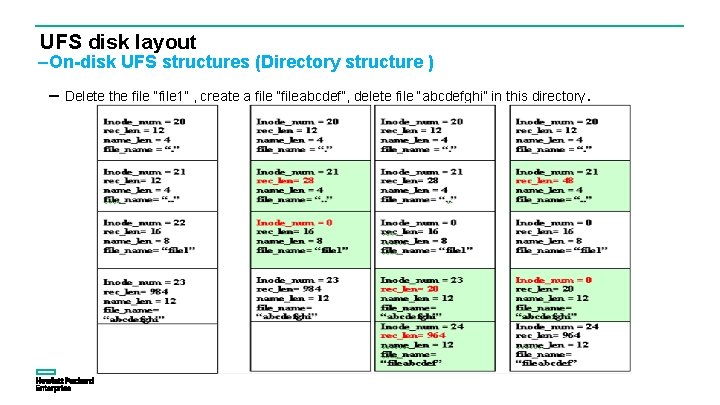

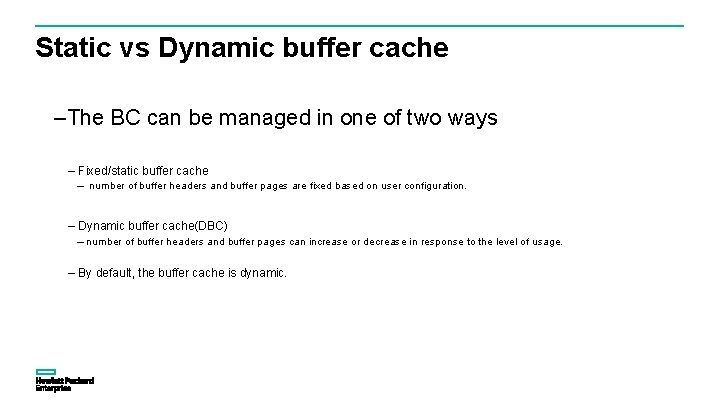

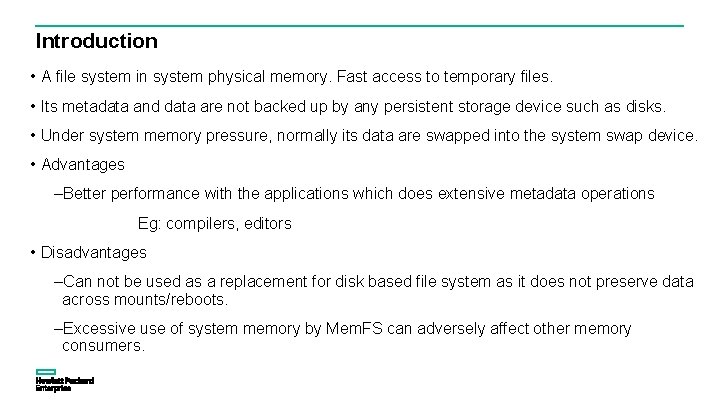

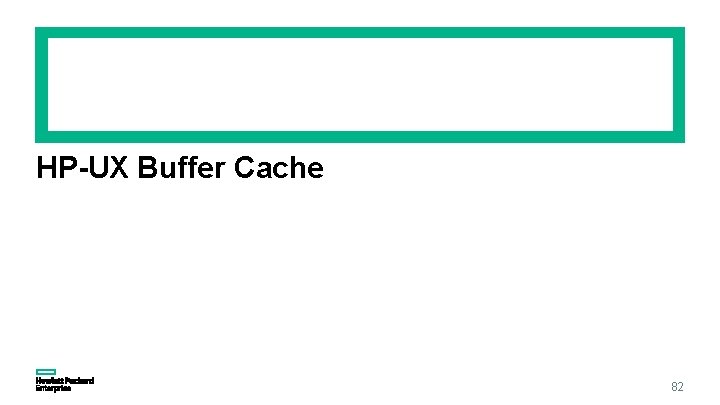

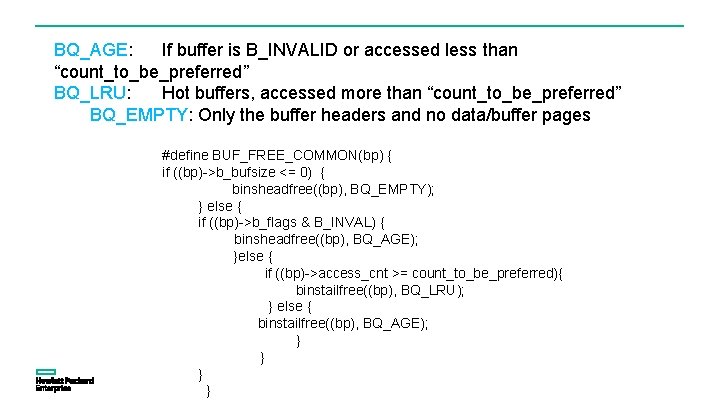

Buffer cache structures

- struct bufhd *bufhash: sized by the tunable “bufhash_table_size”.
- bfreelist[CPU][BQ_*]

    struct bufhd {
        int32_t b_flags;
        struct buf *b_forw;
        struct buf *b_back;
        lock_t *bh_lock;
    };

- BQ_AGE: the buffer is B_INVAL or has been accessed fewer than “count_to_be_preferred” times.
- BQ_LRU: hot buffers, accessed at least “count_to_be_preferred” times.
- BQ_EMPTY: only the buffer headers, with no data/buffer pages.

    #define BUF_FREE_COMMON(bp) {                                  \
        if ((bp)->b_bufsize <= 0) {                                \
            binsheadfree((bp), BQ_EMPTY);                          \
        } else {                                                   \
            if ((bp)->b_flags & B_INVAL) {                         \
                binsheadfree((bp), BQ_AGE);                        \
            } else {                                               \
                if ((bp)->access_cnt >= count_to_be_preferred) {   \
                    binstailfree((bp), BQ_LRU);                    \
                } else {                                           \
                    binstailfree((bp), BQ_AGE);                    \
                }                                                  \
            }                                                      \
        }                                                          \
    }

Static Vs Dynamic Buffer Cache

Static vs Dynamic buffer cache

The buffer cache can be managed in one of two ways:

- Fixed/static buffer cache: the number of buffer headers and buffer pages is fixed based on user configuration.
- Dynamic buffer cache (DBC): the number of buffer headers and buffer pages can increase or decrease in response to the level of usage.

By default, the buffer cache is dynamic.

Tunables

- dbc_ceiling (adb poked variable): maximum number of buffer pages.
- dbc_min_pct: minimum percentage of physical memory that can be allocated to the buffer cache.
- dbc_max_pct: maximum percentage of physical memory that can be allocated to the buffer cache.
- nbuf: minimum number of buffer headers.
- bufpages: minimum number of buffer pages.

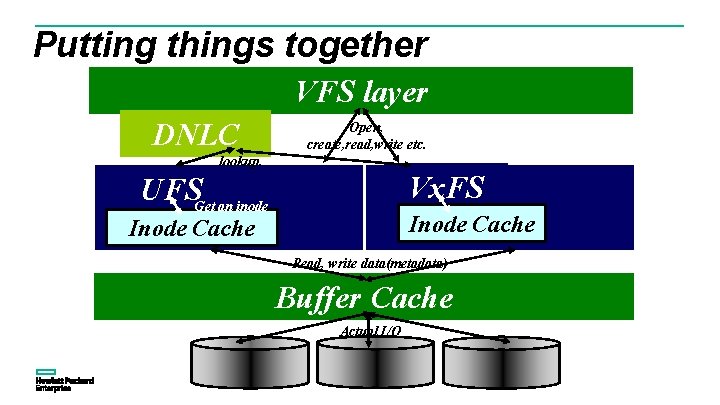

The Dynamic buffer cache

- Set the tunables nbuf = 0 and bufpages = 0, then dbc_min_pct and dbc_max_pct.
- Defaults: 5% and 50%.
- dbc_min_pct cannot be below 2% and dbc_max_pct cannot be above 90%.
- On boot, dbc_min_pct% of physical memory is allocated as buffer pages, with half that number of buffer headers.
- The size of the buffer cache grows as more pages are brought in.
- The DBC expands fast but shrinks only during memory pressure.
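
The percentages translate into page counts by simple arithmetic. The following sketch works that out for a given amount of physical memory; the struct and function names are hypothetical, the check that the minimum does not exceed the maximum is an added assumption, and integer division rounds down where the kernel may round differently.

```c
#include <stdint.h>

struct dbc_bounds {
    uint64_t min_pages;      /* buffer pages allocated at boot */
    uint64_t max_pages;      /* ceiling the cache may grow to */
    uint64_t boot_headers;   /* half the boot-time page count */
};

/* Returns 0 and fills *out, or -1 if the percentages are out of range. */
int dbc_compute(uint64_t phys_pages, unsigned min_pct, unsigned max_pct,
                struct dbc_bounds *out)
{
    if (min_pct < 2 || max_pct > 90 || min_pct > max_pct)
        return -1;
    out->min_pages = phys_pages * min_pct / 100;
    out->max_pages = phys_pages * max_pct / 100;
    out->boot_headers = out->min_pages / 2;
    return 0;
}
```

For a machine with 1048576 physical pages and the default 5% and 50%, this gives 52428 buffer pages and 26214 buffer headers at boot, with room to grow to 524288 pages.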

Advantages

- Small sequential I/O: avoids repeated disk I/O for small amounts of data.
- Read ahead: if the file system access is sequential, the buffer cache provides enhanced performance via read ahead (through asynchronous I/O).
- Hot blocks: frequently accessed blocks will stay in the buffer cache.
- Delayed writes: allows applications to perform delayed/asynchronous writes. Dirty buffers are flushed later via syncer(), sync() etc.

Disadvantages

- It doesn't distinguish between user data and metadata.
- There is always an extra copy involved. The data has to be copied either from kernel to user space or vice versa.
- Because of delayed write buffers, a system crash could leave the file system in an inconsistent state.

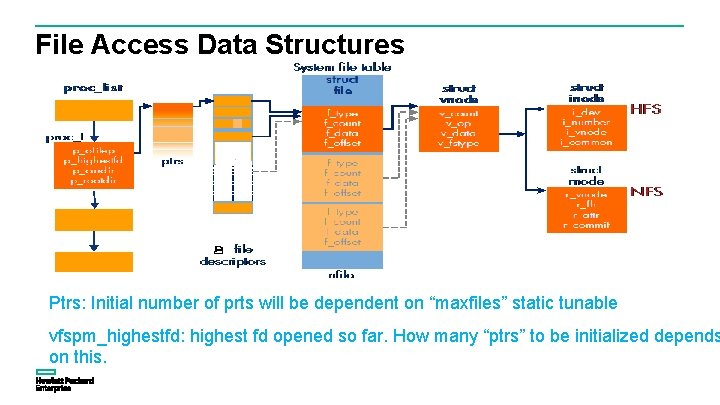

Putting things together (figure): the VFS layer handles open, create, read, write etc. and uses the DNLC for lookups; VxFS and UFS get an inode from the inode cache and read or write data (and metadata) through the buffer cache, which performs the actual I/O.

File Access Data Structures

- ptrs: the initial number of ptrs depends on the “maxfiles” static tunable.
- vfspm_highestfd: highest fd opened so far. How many “ptrs” are initialized depends on this.

File System Operations

1. Mounting a file system

    mount -F hfs <device> <mount point>

mount():

- VFS layer:
  - Search for and get the vnode of the mount point (the lookup will add entries to the DNLC).
  - Initialize a VFS structure, set vfs_vnodecovered to the vnode and v_vfsmountedhere of the vnode. Add it to the VFS list.
- UFS layer:
  - Open the block device.
  - Read the super block and cylinder group information (check the buffer cache and read).
  - Get the root inode of the file system (search the inode cache; if it doesn't exist, get a free inode and initialize it).
  - Allocate file system specific data and initialize it with the data read above.

![1 Creat File operations include fcntl h main int fd char buf10 1. Creat: File operations # include <fcntl. h> main() { int fd; char buf[10]](https://slidetodoc.com/presentation_image_h/36d8c9c73ea03beaf437af9a7152f415/image-97.jpg)

File operations

    #include <fcntl.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <unistd.h>

    int main(void)
    {
        int fd;
        char buf[10] = "abcdefghij";   /* exactly 10 bytes, no terminating NUL */

        if ((fd = creat("/var/test", 0666)) == -1) {
            perror("creat");
            exit(1);
        }
        if (write(fd, buf, 10) != 10) {   /* error or short write */
            perror("write");
            exit(1);
        }
        close(fd);
        return 0;
    }

1. Creat:
   a. Allocate a file descriptor and file table entry. Set f_count to 1.
   b. Search the DNLC for the name “test”. If it doesn't exist, read the directory entries (lookupname will read the directory entries).
   c. If the file entry is not found, find a suitable slot in the directory.
   d. Get a suitable inode (number) to use on the disk, mark the inode as used and decrement the number of free inodes.
   e. Search the inode cache for this inode; if it doesn't exist, get a free inode from the cache, initialize it and allocate a vnode. Put it on the hash list and remove it from the free list.
   f. Add the entry in the directory. Write the directory entry (bwrite).
   g. Add a DNLC entry.
   h. f_data is initialized to the vnode and f_offset is set to 0.

2. Write:
   a. Get the file table entry from the file descriptor and in turn get the vnode.
   b. Check the number of blocks available on the file system. If it is less than the required blocks, free the disk blocks used by purged inodes.
   c. Get a fragment and mark the fragment as used. Update the disk inode copy. The first data block of the inode is set to this fragment.
   d. Change the inode size and mark the inode as dirty.
   e. Convert the fragment number to the on-disk block number. Read the fragment (bread).
   f. Copy the data to the buffer.
   g. Asynchronously write the data (bdwrite).
   h. After the write completes, the buffer is put on the free list (buffer cache).

3. Close:
   a. Get the file table entry from the file descriptor and in turn get the vnode.
   b. Decrement the f_count; if it is not the last reference, return.
   c. Check the link count; if it is 0, free the blocks used by this file and put the inode in the purge list.
   d. Else, put the inode at the end of the free list.
   e. Update the disk inode copy of the inode.

![File operations: open, read](https://slidetodoc.com/presentation_image_h/36d8c9c73ea03beaf437af9a7152f415/image-98.jpg)

File operations: open, read

    #include <fcntl.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <unistd.h>

    int main(void)
    {
        int fd;
        char buf[10];

        if ((fd = open("/var/test", O_RDWR)) == -1) {
            perror("open");
            exit(1);
        }
        if (read(fd, buf, 10) == -1) {   /* read() returns -1 on error */
            perror("read");
            exit(1);
        }
        close(fd);
        return 0;
    }

1. Open:
   a. Allocate a file descriptor and a file table entry. Set f_count to 1.
   b. Search the DNLC for the name "test". As it was added during create, an entry will be found, so we get the inode directly.
   c. f_data is initialized to the vnode and f_offset is set to 0.

2. Read:
   a. Get the file table entry from the file descriptor and, in turn, get the vnode.
   b. Get the file system block/fragment (bmap) for the offset. Get the corresponding disk block number.
   c. Read the block (bread) synchronously, through the buffer cache.
   d. As the block was added to the buffer cache during the write, the buffer will be available, so disk I/O is avoided.
   e. Copy the data from the buffer.
   f. After the read completes, the buffer is put on the free list (buffer cache).
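Read step d above says the read avoids disk I/O because the earlier delayed write left the block in the buffer cache. The sketch below models that interaction with a tiny direct-mapped cache keyed by (dev, blkno). It is an illustration under loud assumptions: `toy_cache`, `toy_buf`, `toy_bdwrite` and `toy_bread` are hypothetical names that only borrow the spirit of bdwrite and bread; the real buffer cache uses hash chains and free lists, and flushes dirty buffers before reuse.

```c
#include <assert.h>
#include <string.h>

#define TOY_NBUF  8    /* number of cache slots (assumed) */
#define TOY_BSIZE 16   /* bytes per buffer (assumed) */

struct toy_buf {
    int  valid;              /* slot holds data for (dev, blkno) */
    int  dirty;              /* delayed write still pending */
    int  dev;
    long blkno;
    char data[TOY_BSIZE];
};

struct toy_cache {
    struct toy_buf bufs[TOY_NBUF];
    int disk_reads;          /* counts simulated disk reads (cache misses) */
};

/* Map (dev, blkno) to a slot; a stand-in for the real hash function. */
static unsigned toy_hash(int dev, long blkno)
{
    return (unsigned)(dev + blkno) % TOY_NBUF;
}

/* Delayed write: copy data into the cached buffer and mark it dirty.
 * A real cache would flush a dirty victim first; this toy overwrites it. */
void toy_bdwrite(struct toy_cache *c, int dev, long blkno,
                 const char *src, size_t len)
{
    assert(len <= TOY_BSIZE);
    struct toy_buf *b = &c->bufs[toy_hash(dev, blkno)];
    b->valid = 1;
    b->dirty = 1;
    b->dev = dev;
    b->blkno = blkno;
    memcpy(b->data, src, len);
}

/* Read through the cache: a hit returns the buffer with no disk access. */
struct toy_buf *toy_bread(struct toy_cache *c, int dev, long blkno)
{
    struct toy_buf *b = &c->bufs[toy_hash(dev, blkno)];
    if (b->valid && b->dev == dev && b->blkno == blkno)
        return b;                        /* cache hit */

    c->disk_reads++;                     /* miss: simulate reading the disk */
    memset(b->data, 0, sizeof b->data);  /* pretend the block is all zeros */
    b->valid = 1;
    b->dirty = 0;
    b->dev = dev;
    b->blkno = blkno;
    return b;
}
```

With a zero-initialized `toy_cache`, calling `toy_bdwrite` for a block and then `toy_bread` for the same (dev, blkno) returns the written bytes while `disk_reads` stays at 0; reading a block that was never written counts as a miss.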

MemFS

Introduction

• A file system that resides in system physical memory, giving fast access to temporary files.
• Its metadata and data are not backed by any persistent storage device such as a disk.
• Under system memory pressure, its data are normally swapped out to the system swap device.
• Advantages
  – Better performance for applications that perform extensive metadata operations, e.g. compilers and editors.
• Disadvantages
  – Cannot be used as a replacement for a disk-based file system, as it does not preserve data across mounts/reboots.
  – Excessive use of system memory by MemFS can adversely affect other memory consumers.

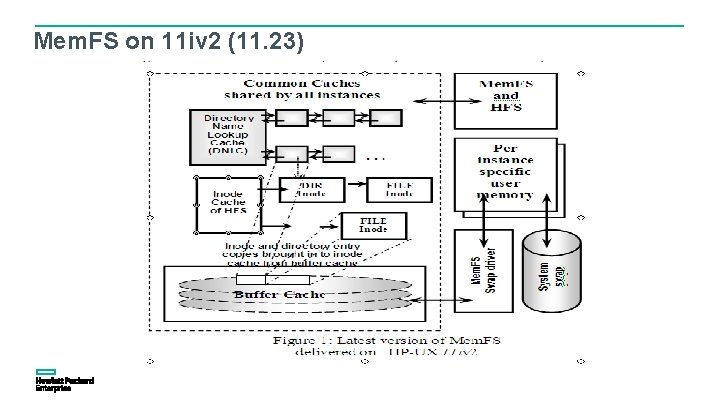

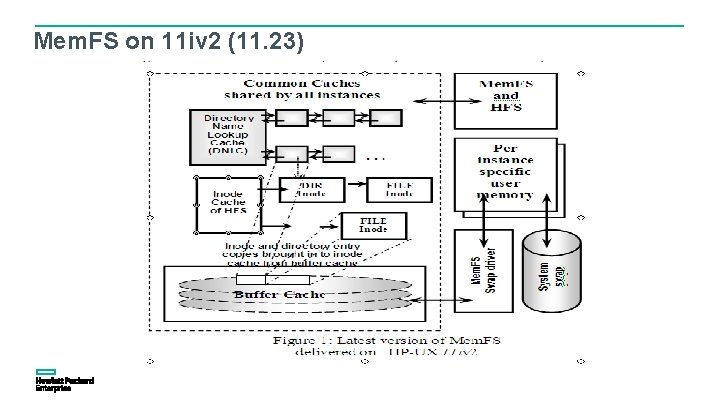

MemFS on 11i v2 (11.23)

Limitations of HP-UX 11i v2 MemFS

• File size and file system size are restricted by the UFS file system limits (256 GB).
• The number of files that can be created on a MemFS instance is restricted by the file system size.
• All inodes are pre-allocated, wasting kernel memory.
• Scalability issues with large directories.
• Overheads during allocation of blocks for files and directories.
• Pre-allocates virtual memory equal to the file system size (similar to a RAM disk) when the file system is created, which can cause the system to run out of virtual memory.
• Swapping MemFS data in and out is very slow.

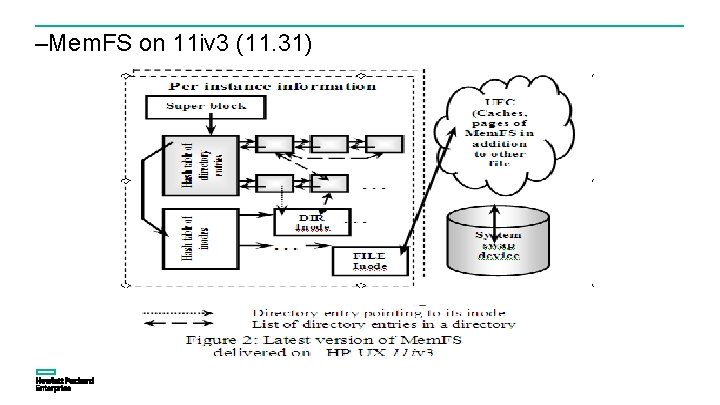

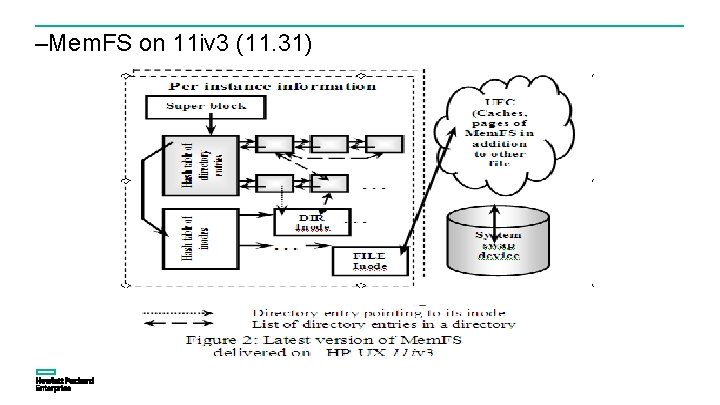

MemFS on 11i v3 (11.31)

Design highlights

• New lightweight layout.
• Free from HFS design limitations:
  – No pre-allocation of inodes.
  – Large directories are handled efficiently.
  – Algorithms for organizing metadata and data are not tied to a disk-based architecture.
  – File and file system sizes can be greater than 256 GB.
• Uses a modified Unified File Cache (UFC) for caching data.
• Does not use the mount process address space. UFC swaps MemFS data directly to the swap device.
• Efficient usage of kernel memory and other resources.

MemFS 11i v3 features

• Performs better than MemFS on HP-UX 11i v2 (by a factor of 2).
• Support for large file and file system sizes (currently tested up to 300 GB).
• No limitation on the number of MemFS instances.
• The number of files on a file system can be restricted, if needed.
• File system size can be restricted, or can be allowed to grow depending on the swap space available.
• Swaps data out to the system swap device efficiently.
• The maximum amount of memory and swap that MemFS can use is tunable.

Questions / Answers

Thank you

sachin.metimath@hpe.com
ravindra.kini@hpe.com