HPSG parser development at U-Tokyo

Takuya Matsuzaki, University of Tokyo


Topics

- Overview of the U-Tokyo HPSG parsing system
- Supertagging with the Enju HPSG grammar

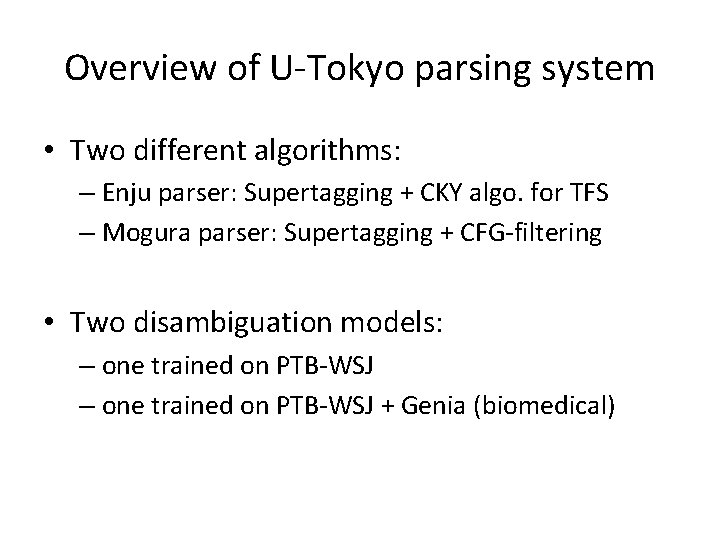

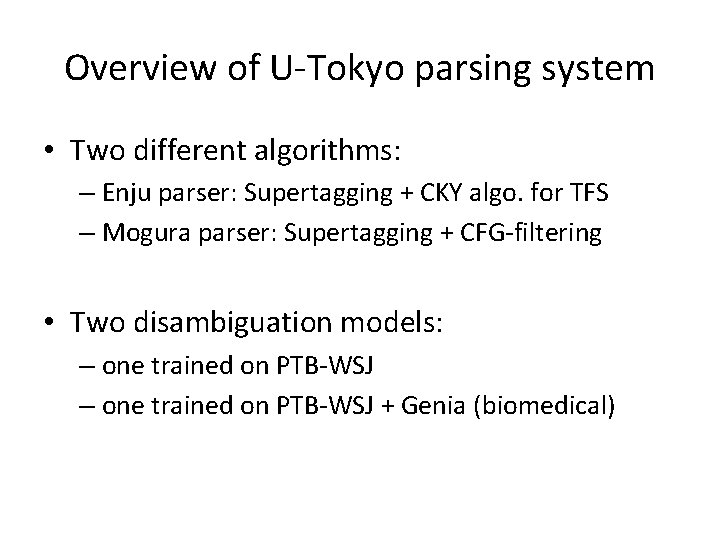

Overview of the U-Tokyo parsing system

- Two different algorithms:
  - Enju parser: supertagging + CKY algorithm for typed feature structures (TFS)
  - Mogura parser: supertagging + CFG-filtering
- Two disambiguation models:
  - one trained on PTB-WSJ + Genia (biomedical)

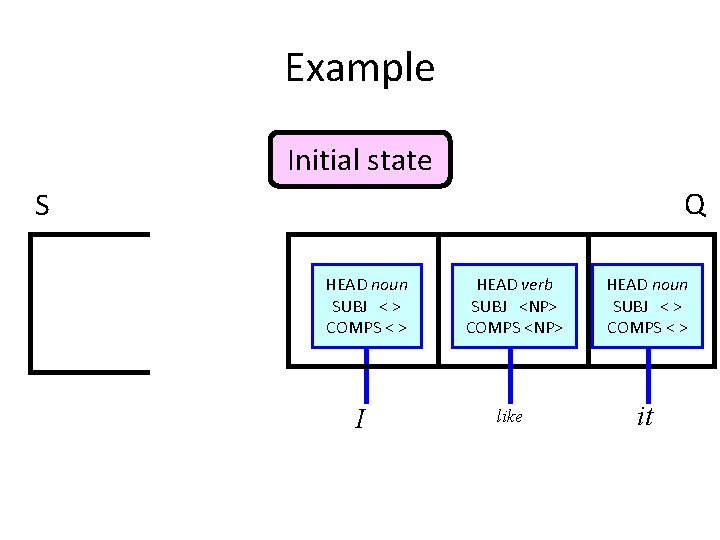

Supertagger-based parsing [Clark and Curran, 2004; Ninomiya et al., 2006]

- Supertagging [Bangalore and Joshi, 1999]: selecting a few lexical entries (LEs) for each word using a probabilistic model of P(LE | sentence)

(Figure: candidate LEs for each word of "I like it", ranked from large to small P. Candidates for "I" and "it" include HEAD noun, SUBJ < >, COMPS < >; candidates for "like" include HEAD verb, SUBJ <NP>, COMPS <NP>.)
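To make P(LE | sentence) concrete, here is a minimal Python sketch of a MaxEnt (log-linear) scorer for the candidate LEs of a single word. It assumes binary feature strings and one weight per (feature, LE) pair; the feature names, the LE labels `v_trans`, `v_intrans`, `prep`, and all weights are hypothetical and hand-set, not taken from Enju's trained model.

```python
import math
from typing import Dict, List, Tuple


def le_probabilities(
    features: List[str],
    candidates: List[str],
    weights: Dict[Tuple[str, str], float],
) -> Dict[str, float]:
    """Log-linear distribution over the candidate LEs of one word.

    score(LE) = sum of weights[(feature, LE)] over the active features;
    P(LE) = exp(score(LE)) / sum over candidates of exp(score).
    """
    scores = {
        le: sum(weights.get((f, le), 0.0) for f in features) for le in candidates
    }
    m = max(scores.values())  # subtract the max score for numerical stability
    exps = {le: math.exp(s - m) for le, s in scores.items()}
    z = sum(exps.values())
    return {le: e / z for le, e in exps.items()}


# Hypothetical LE labels for "like" and hand-set weights (illustration only).
candidates = ["v_trans", "v_intrans", "prep"]
weights = {
    ("w0=like", "v_trans"): 1.5,
    ("w0=like", "prep"): 1.0,
    ("p0=VBP", "v_trans"): 1.0,
    ("p0=VBP", "v_intrans"): 0.5,
    ("w+1=it", "v_trans"): 1.0,
}
probs = le_probabilities(["w0=like", "p0=VBP", "w+1=it"], candidates, weights)
for le, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{le}: {p:.3f}")
```

In a trained supertagger the weights would come from MaxEnt estimation on a treebank; only the normalization step shown here is generic.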

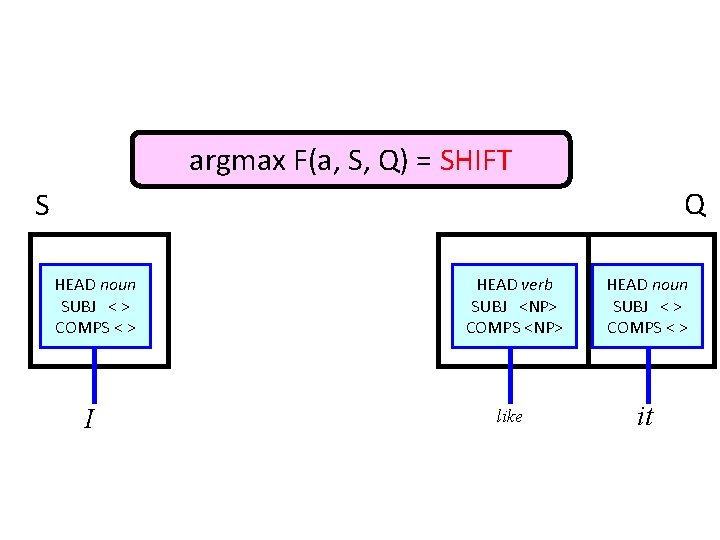

Supertagger-based parsing [Clark and Curran, 2004; Ninomiya et al., 2006]

- Ignore the LEs with small probabilities: only the LEs with P > threshold are passed to the parser as input

(Figure: the ranked candidate LEs for "I", "like", and "it"; those above the threshold form the input to the parser.)
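The thresholding step can be sketched as follows, assuming each word's LE distribution is already available as a dictionary. The LE labels, probabilities, and threshold values are hypothetical. Note that a high threshold can leave a word with no LE at all.

```python
from typing import Dict, List


def prune_les(sentence_probs: List[Dict[str, float]], threshold: float) -> List[List[str]]:
    """For each word, keep only the LEs with P > threshold, most probable first."""
    kept = []
    for probs in sentence_probs:
        above = [le for le, p in probs.items() if p > threshold]
        kept.append(sorted(above, key=lambda le: -probs[le]))
    return kept


# Hypothetical supertagger output for "I like it".
sentence_probs = [
    {"n_pron": 0.9, "n_other": 0.1},                    # I
    {"v_trans": 0.6, "v_intrans": 0.25, "prep": 0.15},  # like
    {"n_pron": 0.95, "n_expl": 0.05},                   # it
]
print(prune_les(sentence_probs, 0.2))
print(prune_les(sentence_probs, 0.7))  # "like" keeps no LE at all
```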

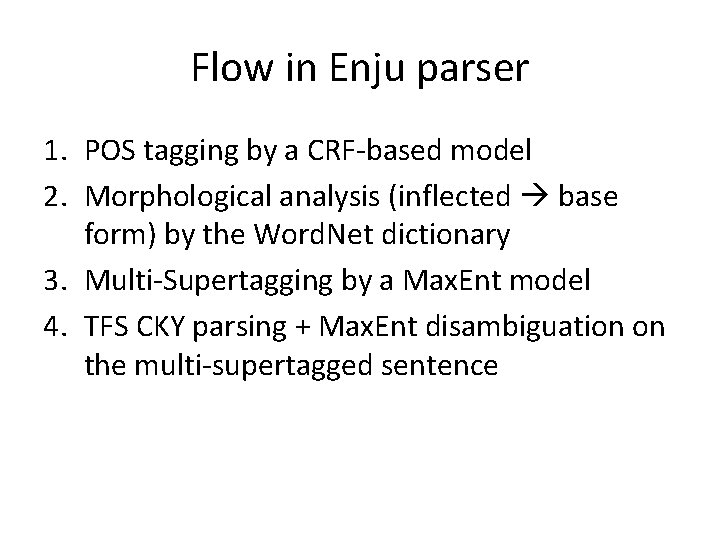

Flow in the Enju parser

1. POS tagging by a CRF-based model
2. Morphological analysis (inflected form → base form) using the WordNet dictionary
3. Multi-supertagging by a MaxEnt model
4. TFS CKY parsing + MaxEnt disambiguation on the multi-supertagged sentence
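Step 4 can be illustrated with a toy Viterbi CKY parser in Python. This is a simplification under explicit assumptions: signs are reduced to (HEAD, SUBJ, COMPS) triples compared by string equality rather than unified typed feature structures, only two schemas (Head-Comp and Subj-Head) are modeled, and a tree's score is just the product of its LE probabilities, a stand-in for Enju's MaxEnt disambiguation model. All names are hypothetical.

```python
from itertools import product
from typing import Dict, List, Optional, Tuple

# Simplified sign: (HEAD, SUBJ, COMPS). Real Enju signs are typed feature
# structures combined by unification; this sketch only compares strings.
Sign = Tuple[str, Tuple[str, ...], Tuple[str, ...]]


def category(sign: Sign) -> Optional[str]:
    """Coarse category used to match a daughter against a SUBJ/COMPS slot."""
    head, subj, comps = sign
    if comps:
        return None  # still expects complements
    if head == "noun" and not subj:
        return "NP"
    if head == "verb":
        return "VP" if subj else "S"
    return None


def head_comp(left: Sign, right: Sign) -> Optional[Sign]:
    """Head-Comp schema: the head on the left consumes its first complement."""
    head, subj, comps = left
    if comps and comps[0] == category(right):
        return (head, subj, comps[1:])
    return None


def subj_head(left: Sign, right: Sign) -> Optional[Sign]:
    """Subj-Head schema: a head with no complements left takes its subject."""
    head, subj, comps = right
    if not comps and subj and subj[0] == category(left):
        return (head, subj[1:], comps)
    return None


def cky(words: List[List[Tuple[Sign, float]]]) -> Dict[Sign, float]:
    """Viterbi CKY over a multi-supertagged sentence.

    words[i] holds the (sign, probability) pairs kept for word i. Returns the
    best score of every sign that spans the whole sentence.
    """
    n = len(words)
    chart: Dict[Tuple[int, int], Dict[Sign, float]] = {}
    for i, les in enumerate(words):
        cell: Dict[Sign, float] = {}
        for sign, p in les:
            cell[sign] = max(cell.get(sign, 0.0), p)
        chart[(i, i + 1)] = cell
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            cell = {}
            for k in range(i + 1, j):
                for (left, pl), (right, pr) in product(
                    chart[(i, k)].items(), chart[(k, j)].items()
                ):
                    for schema in (head_comp, subj_head):
                        mother = schema(left, right)
                        if mother is not None and pl * pr > cell.get(mother, 0.0):
                            cell[mother] = pl * pr
            chart[(i, j)] = cell
    return chart[(0, n)]


NP: Sign = ("noun", (), ())
sentence = [
    [(NP, 0.9)],                          # I
    [(("verb", ("NP",), ("NP",)), 0.6),   # like, NP complement
     (("verb", ("NP",), ("VP",)), 0.3)],  # like, VP complement
    [(NP, 0.95)],                         # it
]
print(cky(sentence))  # only the saturated sign ("verb", (), ()) spans the sentence
```

Keeping only the `COMPS <VP>` entry for "like" makes `cky` return an empty dictionary, which is the no-parse risk that a tight supertag threshold introduces.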

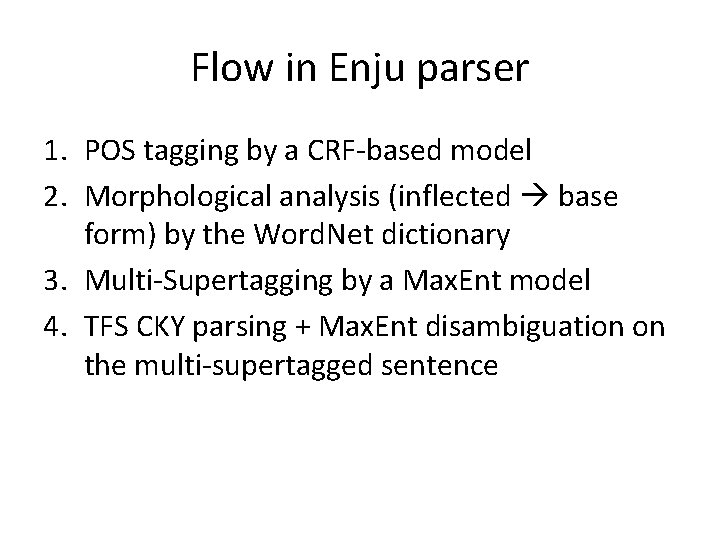

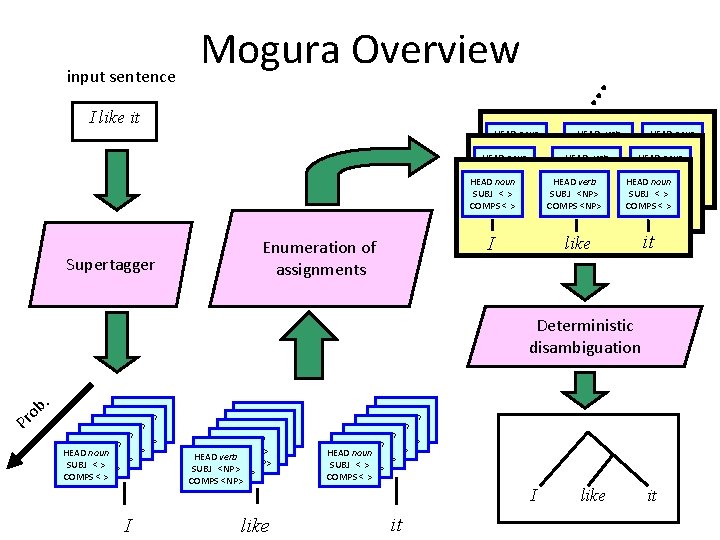

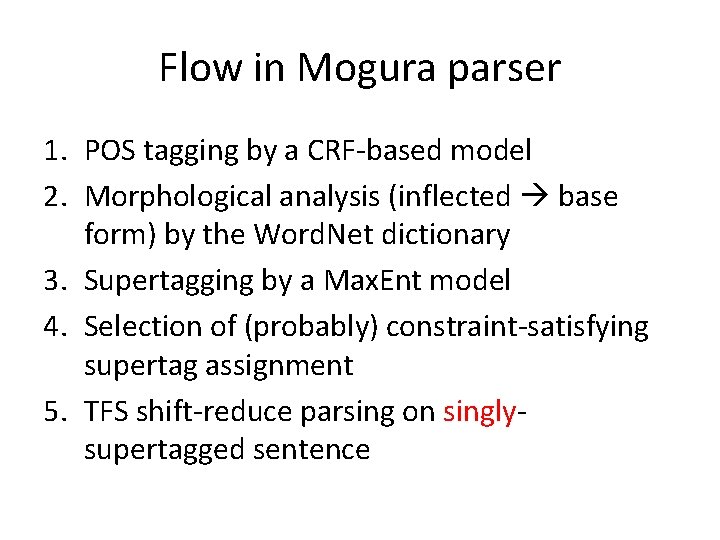

Flow in the Mogura parser

1. POS tagging by a CRF-based model
2. Morphological analysis (inflected form → base form) using the WordNet dictionary
3. Supertagging by a MaxEnt model
4. Selection of a (probably) constraint-satisfying supertag assignment
5. TFS shift-reduce parsing on the singly-supertagged sentence

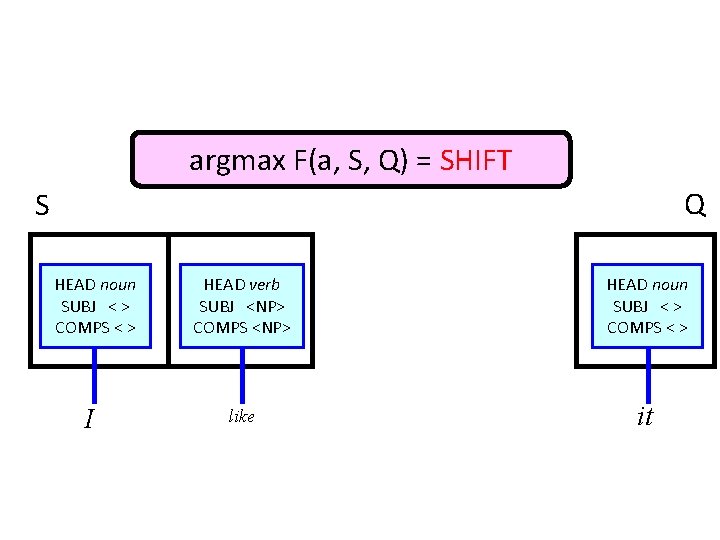

Previous supertagger-based parsing [Clark and Curran, 2004; Ninomiya et al., 2006]

- Ignore the LEs with small probabilities: only the LEs with P > threshold are passed to the parser as input

(Figure: the ranked candidate LEs for "I", "like", and "it"; those above the threshold form the input to the parser.)

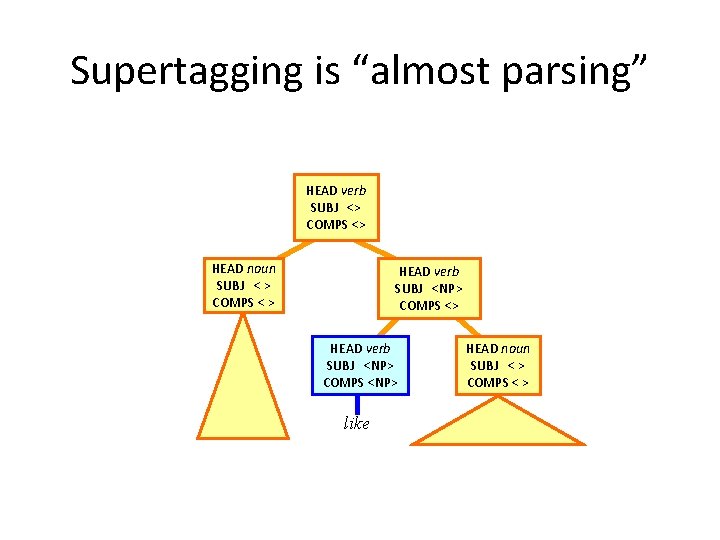

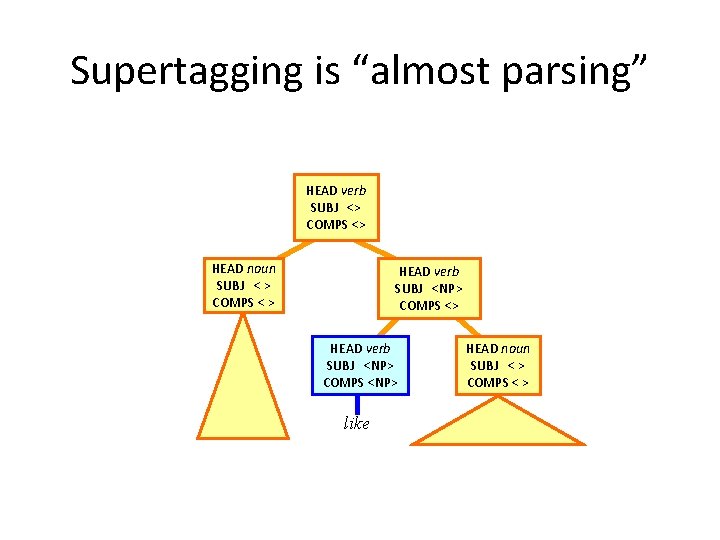

Supertagging is "almost parsing"

(Figure: once "I" and "it" are assigned HEAD noun, SUBJ < >, COMPS < > and "like" is assigned HEAD verb, SUBJ <NP>, COMPS <NP>, the parse tree with root HEAD verb, SUBJ < >, COMPS < > is essentially determined.)

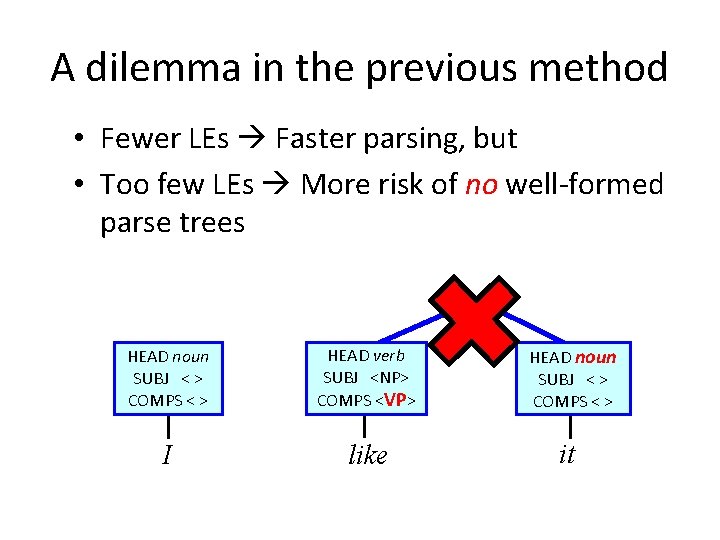

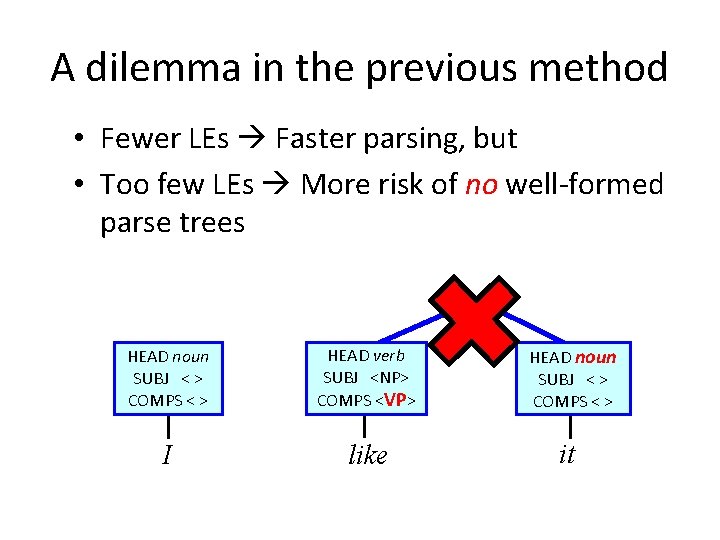

A dilemma in the previous method

- Fewer LEs → faster parsing, but
- Too few LEs → more risk of no well-formed parse tree

(Figure: if the only LE kept for "like" is HEAD verb, SUBJ <NP>, COMPS <VP>, then "I like it" has no well-formed parse, since "it" is a noun phrase rather than a VP.)

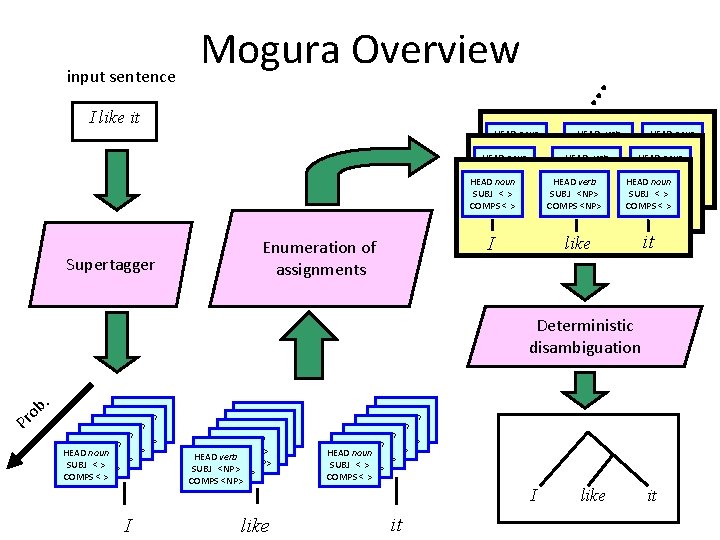

Mogura overview

(Figure: the input sentence "I like it" is supertagged; LE assignments are enumerated in order of probability; deterministic disambiguation then builds the parse tree.)

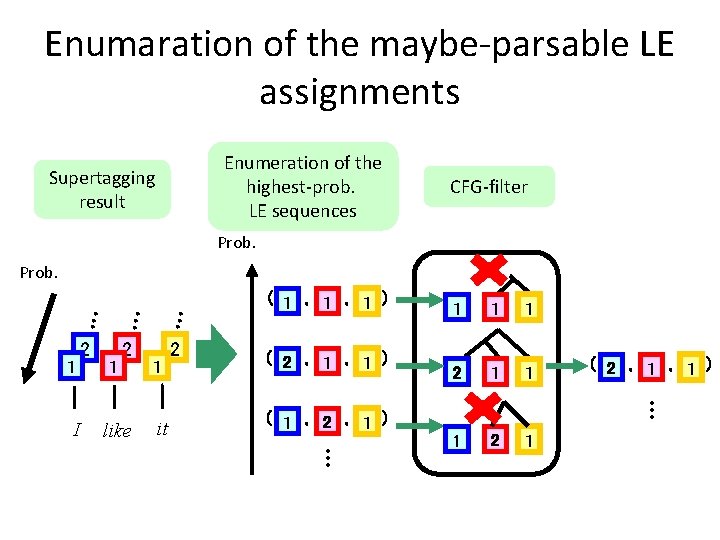

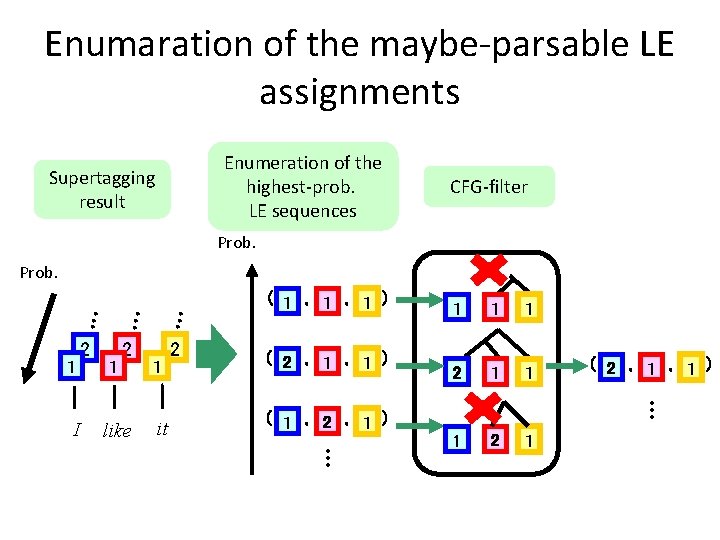

Enumeration of the maybe-parsable LE assignments

(Figure: from the supertagging result for "I like it", the highest-probability LE sequences are enumerated in order, written as tuples of per-word LE ranks such as (1, 2, 1), and each sequence is passed through the CFG-filter.)
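The enumeration step can be sketched as a best-first search over per-word rank tuples. Because the supertagger is point-wise, this sketch assumes a sequence's probability is the product of its per-word probabilities, so a heap yields sequences in non-increasing probability without building the whole cross product. The `is_parsable` predicate stands in for the CFG-filter; the toy predicate and the LE labels below are hypothetical.

```python
import heapq
import math
from typing import Callable, Iterator, List, Optional, Sequence, Tuple

Ranked = List[List[Tuple[str, float]]]  # per word: (LE, prob), best first


def enumerate_assignments(ranked: Ranked) -> Iterator[Tuple[Tuple[str, ...], float]]:
    """Yield LE sequences in non-increasing order of product probability."""

    def logp(idx: Tuple[int, ...]) -> float:
        return sum(math.log(ranked[i][r][1]) for i, r in enumerate(idx))

    start = tuple(0 for _ in ranked)  # every word takes its 1-best LE
    heap = [(-logp(start), start)]
    seen = {start}
    while heap:
        neg, idx = heapq.heappop(heap)
        yield tuple(ranked[i][r][0] for i, r in enumerate(idx)), math.exp(-neg)
        # Successors: demote exactly one word to its next-best LE.
        for i in range(len(idx)):
            if idx[i] + 1 < len(ranked[i]):
                nxt = idx[:i] + (idx[i] + 1,) + idx[i + 1:]
                if nxt not in seen:
                    seen.add(nxt)
                    heapq.heappush(heap, (-logp(nxt), nxt))


def first_parsable(
    ranked: Ranked, is_parsable: Callable[[Sequence[str]], bool]
) -> Optional[Tuple[Tuple[str, ...], float]]:
    """Return the most probable assignment accepted by the filter, or None."""
    for seq, p in enumerate_assignments(ranked):
        if is_parsable(seq):
            return seq, p
    return None


# Hypothetical supertagging result for "I like it".
ranked = [
    [("noun", 0.9), ("det", 0.1)],                                   # I
    [("verb_vp_comp", 0.5), ("verb_np_comp", 0.4), ("prep", 0.1)],   # like
    [("noun", 1.0)],                                                 # it
]


def toy_filter(seq: Sequence[str]) -> bool:
    """Hypothetical stand-in for the CFG-filter: "it" needs an NP-taking verb."""
    return seq[1] == "verb_np_comp"


print(first_parsable(ranked, toy_filter))
```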

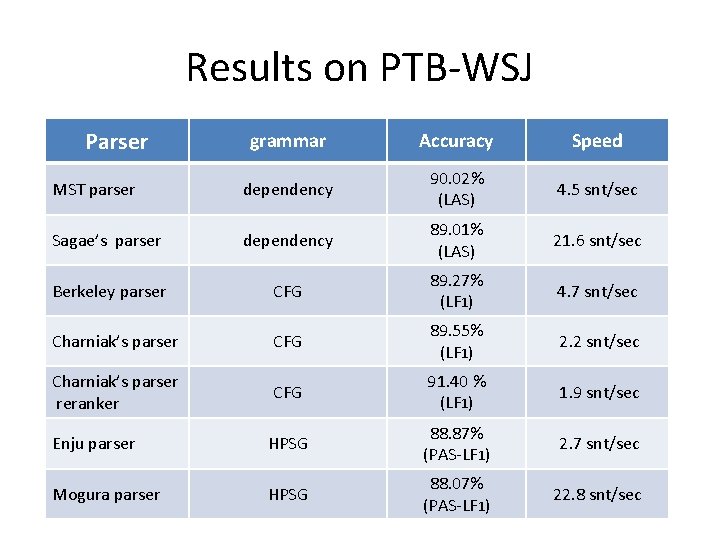

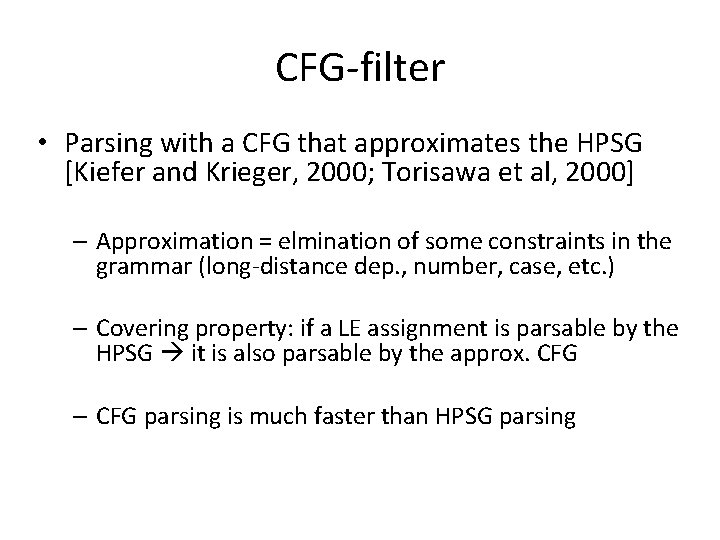

CFG-filter

- Parsing with a CFG that approximates the HPSG [Kiefer and Krieger, 2000; Torisawa et al., 2000]
  - Approximation = elimination of some constraints in the grammar (long-distance dependencies, number, case, etc.)
  - Covering property: if an LE assignment is parsable by the HPSG, it is also parsable by the approximating CFG
  - CFG parsing is much faster than HPSG parsing
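A CFG-filter can be sketched as a CKY recognizer over approximated symbols. In this hypothetical toy, `approximate` keeps HEAD, SUBJ, and COMPS and drops an agreement feature `AGR`; the rule table and the symbol spelling are illustrative assumptions, not the approximation method of Kiefer and Krieger or Torisawa et al.

```python
from itertools import product
from typing import Dict, Sequence, Set, Tuple

Rules = Dict[Tuple[str, str], Set[str]]

# Hypothetical binary rules: (left symbol, right symbol) -> possible mothers.
RULES: Rules = {
    ("verb/NP/NP", "noun//"): {"verb/NP/"},  # approximated Head-Comp
    ("noun//", "verb/NP/"): {"verb//"},      # approximated Subj-Head
}


def approximate(le: Dict[str, str]) -> str:
    """Map an LE to a CFG symbol; AGR (number/person) is dropped on purpose."""
    return f"{le['HEAD']}/{le['SUBJ']}/{le['COMPS']}"


def cfg_recognize(symbols: Sequence[str], rules: Rules, root: str) -> bool:
    """CKY recognizer: can the symbol sequence be derived from `root`?"""
    n = len(symbols)
    chart: Dict[Tuple[int, int], Set[str]] = {
        (i, i + 1): {s} for i, s in enumerate(symbols)
    }
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            cell: Set[str] = set()
            for k in range(i + 1, j):
                for left, right in product(chart[(i, k)], chart[(k, j)]):
                    cell |= rules.get((left, right), set())
            chart[(i, j)] = cell
    return root in chart[(0, n)]


W_I = {"HEAD": "noun", "SUBJ": "", "COMPS": "", "AGR": "1sg"}
W_HE = {"HEAD": "noun", "SUBJ": "", "COMPS": "", "AGR": "3sg"}
W_LIKE = {"HEAD": "verb", "SUBJ": "NP", "COMPS": "NP", "AGR": "non3sg"}
W_IT = {"HEAD": "noun", "SUBJ": "", "COMPS": "", "AGR": "3sg"}

for subject in (W_I, W_HE):
    symbols = [approximate(le) for le in (subject, W_LIKE, W_IT)]
    print(symbols, cfg_recognize(symbols, RULES, "verb//"))
```

Because `AGR` is dropped, "he like it" is accepted as well as "I like it": the CFG over-generates, which the covering property permits. A rejection by the CFG therefore safely rules out an LE assignment, while an acceptance still has to be confirmed by the HPSG parser.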

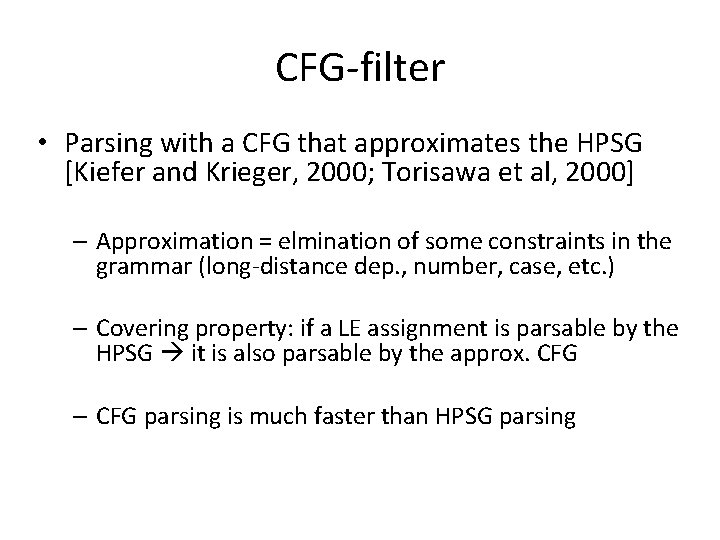

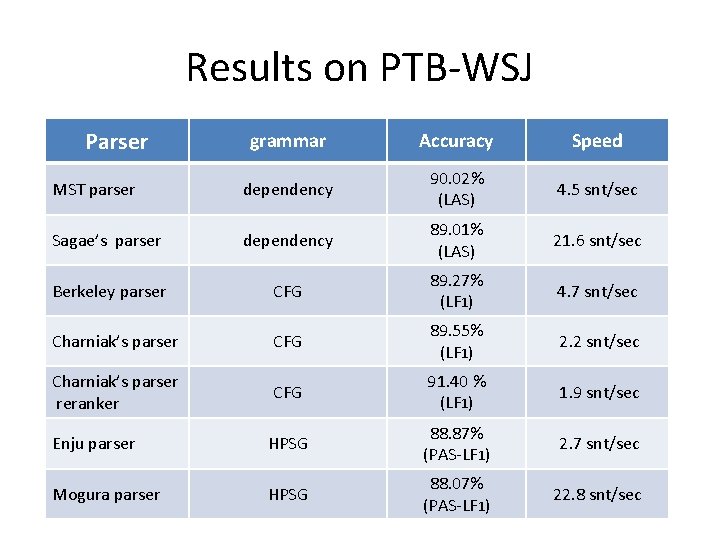

Results on PTB-WSJ

| Parser | Grammar | Accuracy | Speed |
|---|---|---|---|
| MST parser | dependency | 90.02% (LAS) | 4.5 snt/sec |
| Sagae's parser | dependency | 89.01% (LAS) | 21.6 snt/sec |
| Berkeley parser | CFG | 89.27% (LF1) | 4.7 snt/sec |
| Charniak's parser | CFG | 89.55% (LF1) | 2.2 snt/sec |
| Charniak's parser + reranker | CFG | 91.40% (LF1) | 1.9 snt/sec |
| Enju parser | HPSG | 88.87% (PAS-LF1) | 2.7 snt/sec |
| Mogura parser | HPSG | 88.07% (PAS-LF1) | 22.8 snt/sec |

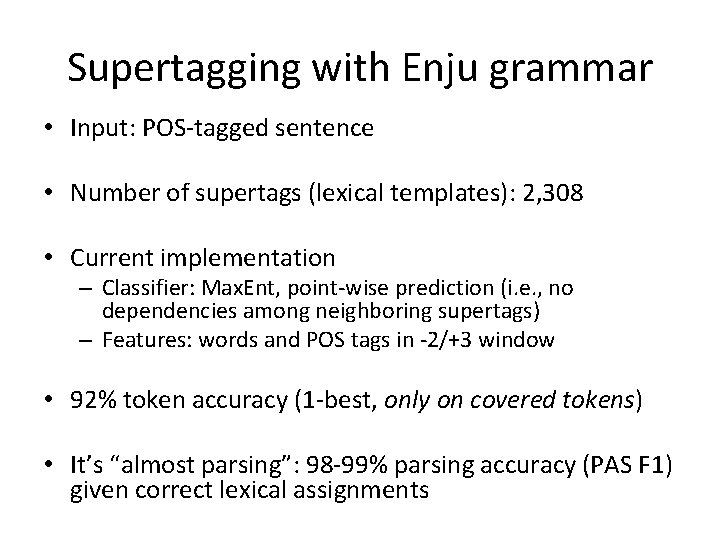

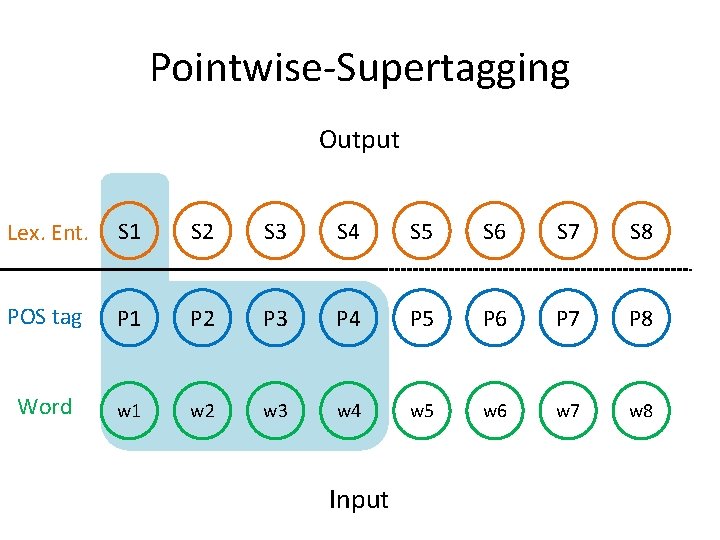

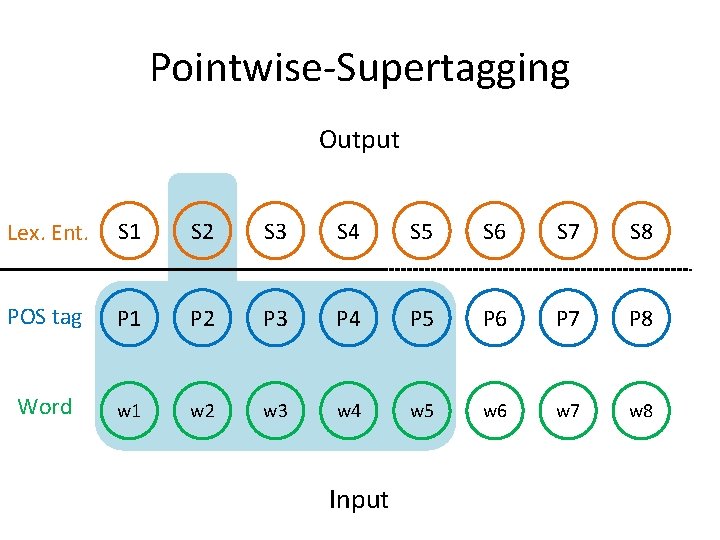

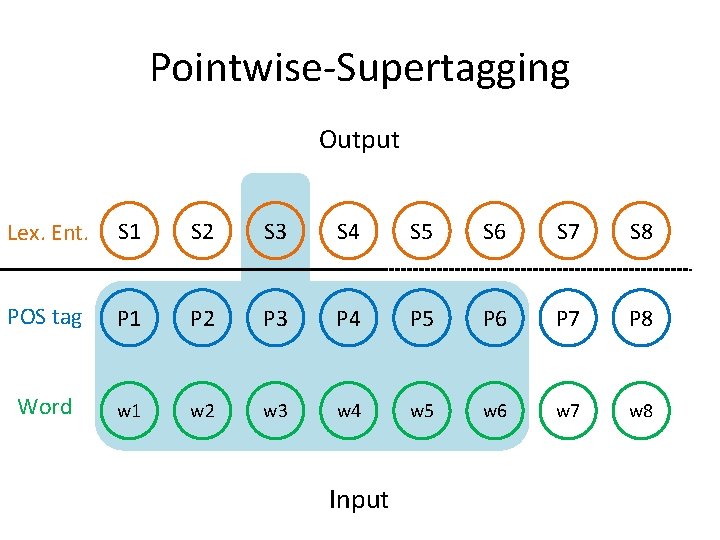

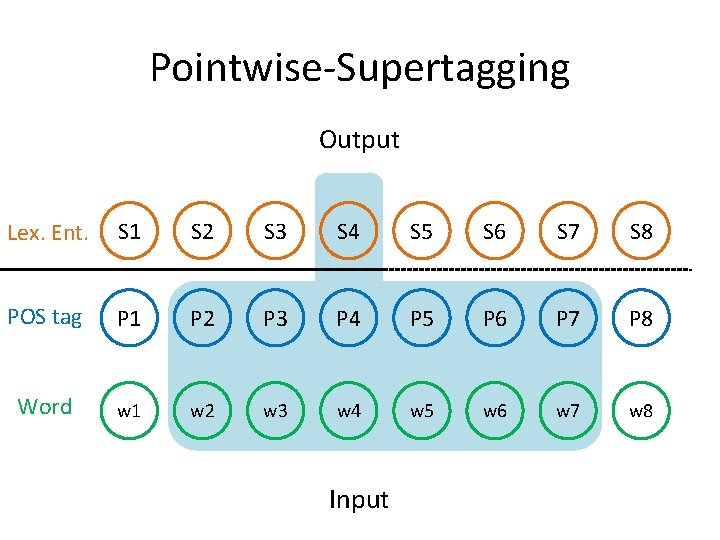

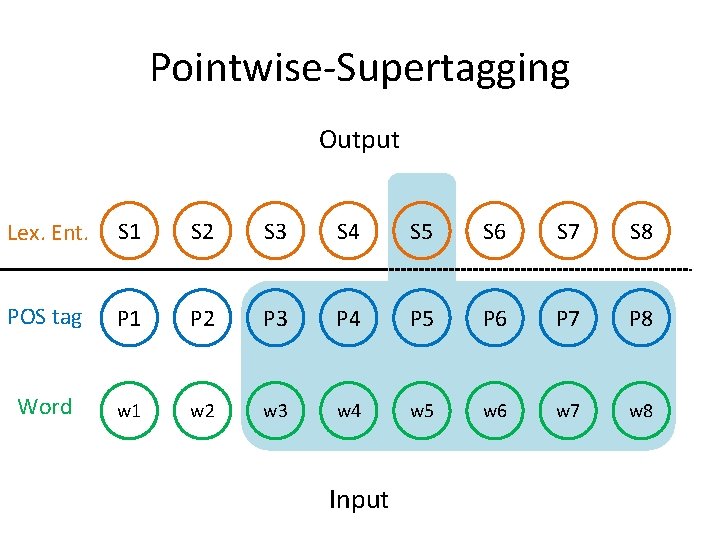

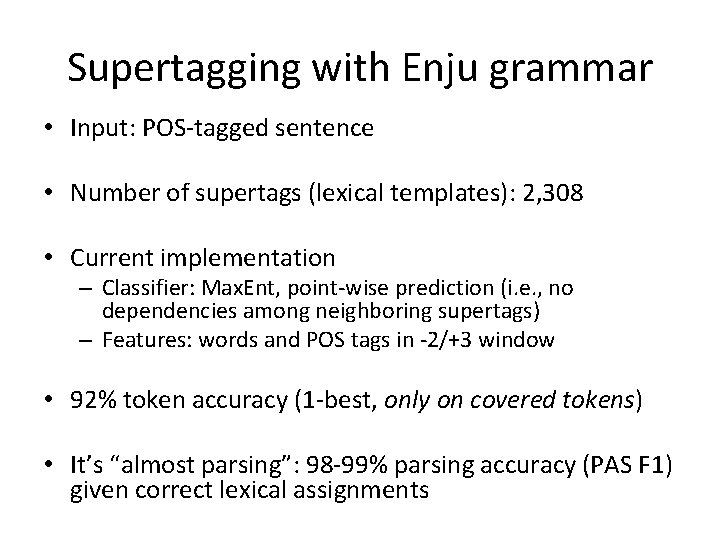

Supertagging with the Enju grammar

- Input: POS-tagged sentence
- Number of supertags (lexical templates): 2,308
- Current implementation
  - Classifier: MaxEnt, point-wise prediction (i.e., no dependencies among neighboring supertags)
  - Features: words and POS tags in a -2/+3 window
- 92% token accuracy (1-best, only on covered tokens)
- It's "almost parsing": 98-99% parsing accuracy (PAS F1) given correct lexical assignments
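Feature extraction for the point-wise classifier can be sketched as below. It produces only the two atomic templates named above (the word and the POS tag at each offset from -2 to +3); the feature-string format and the `<PAD>` boundary symbol are assumptions, and the actual Enju feature set may include combinations not shown here.

```python
from typing import List


def window_features(words: List[str], pos: List[str], i: int) -> List[str]:
    """Point-wise features for token i: words and POS tags at offsets -2..+3.

    Offsets past the sentence edge use a padding symbol. Neighboring
    supertags are not features, so each token is classified independently.
    """
    feats = []
    for off in range(-2, 4):
        j = i + off
        w = words[j] if 0 <= j < len(words) else "<PAD>"
        p = pos[j] if 0 <= j < len(pos) else "<PAD>"
        feats.append(f"w[{off:+d}]={w}")
        feats.append(f"p[{off:+d}]={p}")
    return feats


words = ["I", "like", "it", "."]
tags = ["PRP", "VBP", "PRP", "."]
print(window_features(words, tags, 1))  # features for "like"
```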

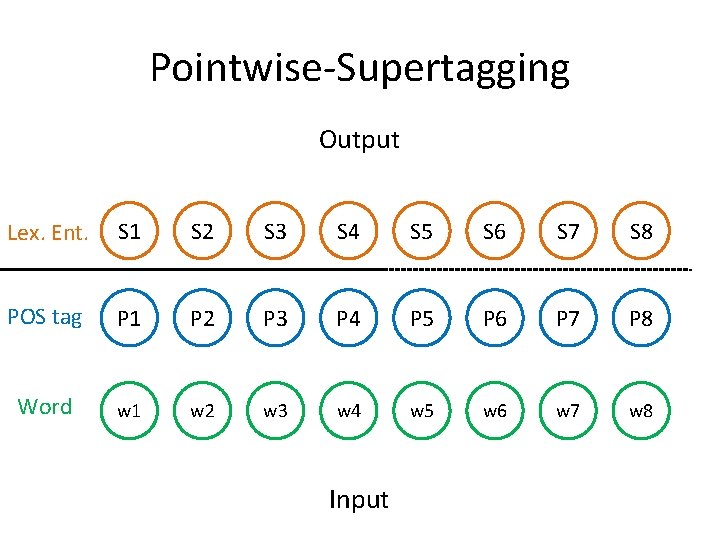

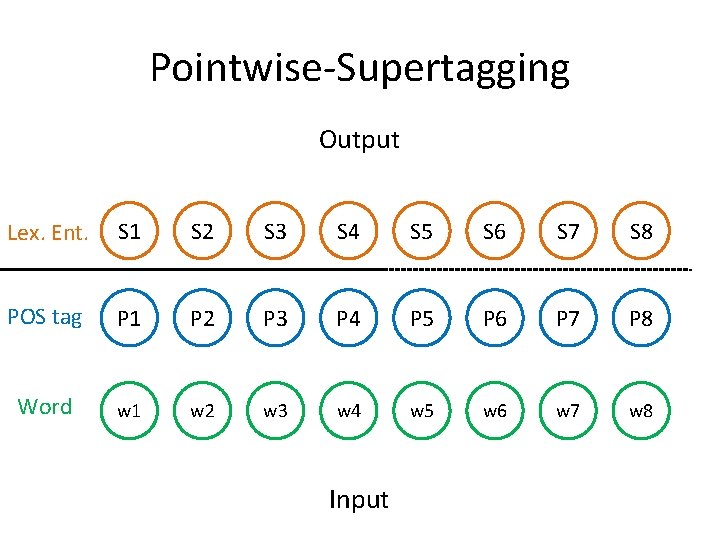

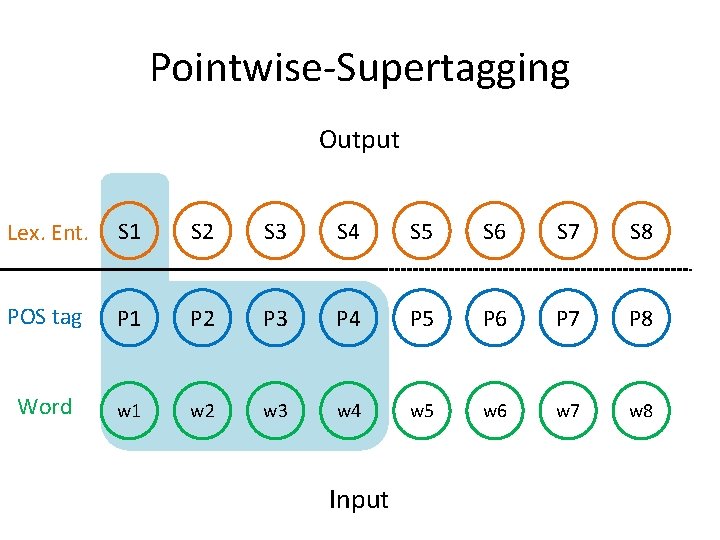

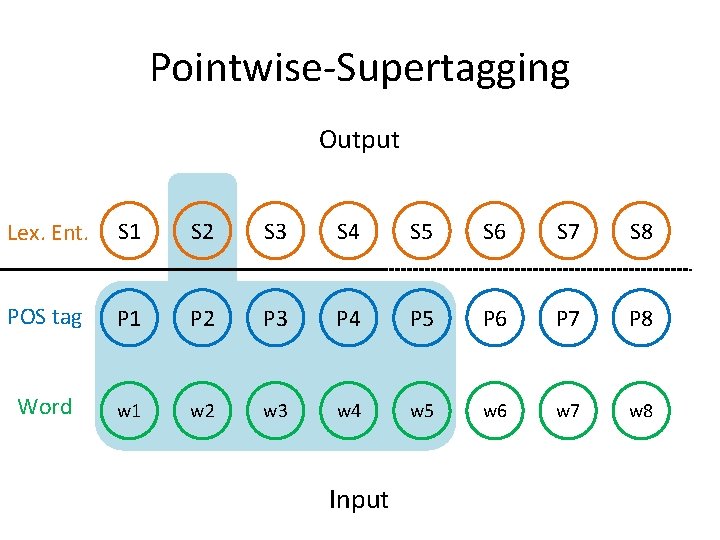

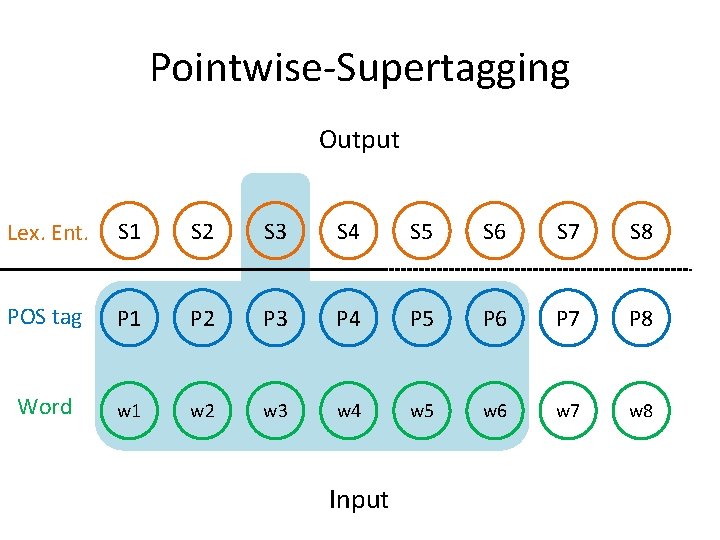

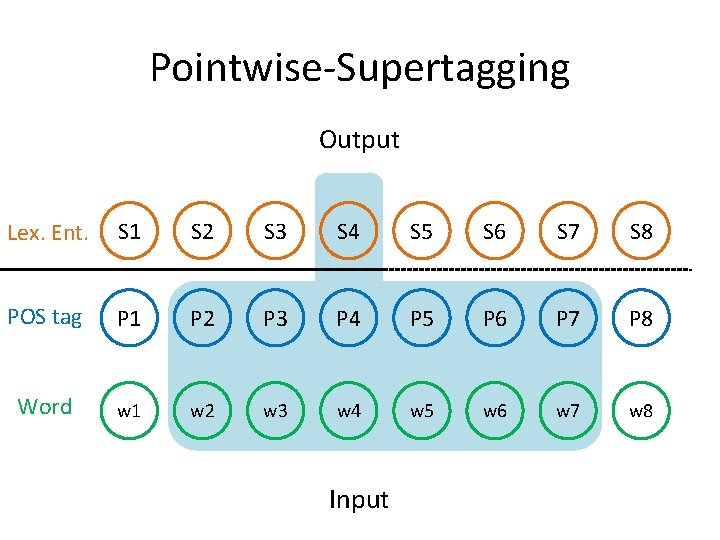

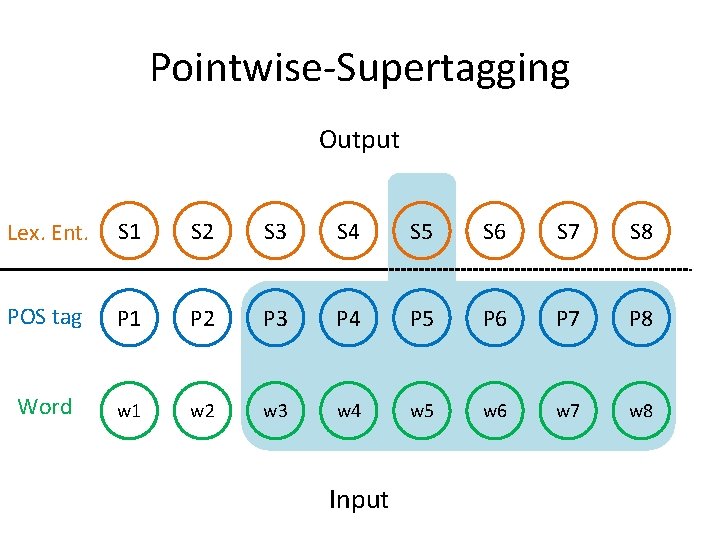

Pointwise supertagging

(Figure: input words w1 ... w8 with POS tags P1 ... P8; each output lexical entry S1 ... S8 is predicted separately from the words and POS tags around its own position.)


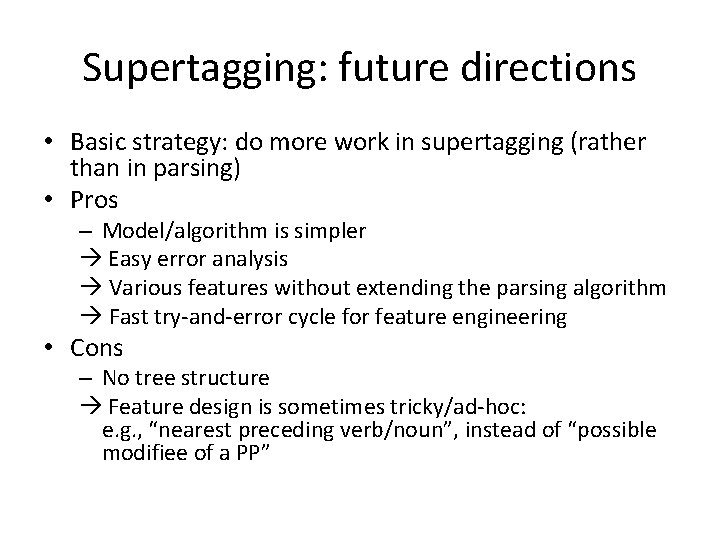

Supertagging: future directions

- Basic strategy: do more work in supertagging (rather than in parsing)
- Pros
  - Model/algorithm is simpler → easy error analysis
  - Various features can be used without extending the parsing algorithm
  - Fast trial-and-error cycle for feature engineering
- Cons
  - No tree structure → feature design is sometimes tricky/ad hoc: e.g., "nearest preceding verb/noun" instead of "possible modifiee of a PP"

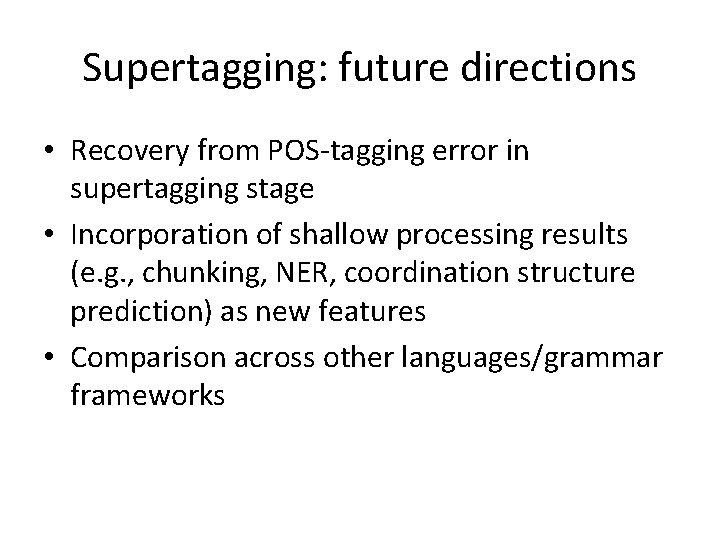

Supertagging: future directions

- Recovery from POS-tagging errors in the supertagging stage
- Incorporation of shallow processing results (e.g., chunking, NER, coordination structure prediction) as new features
- Comparison across other languages/grammar frameworks

Thank you!

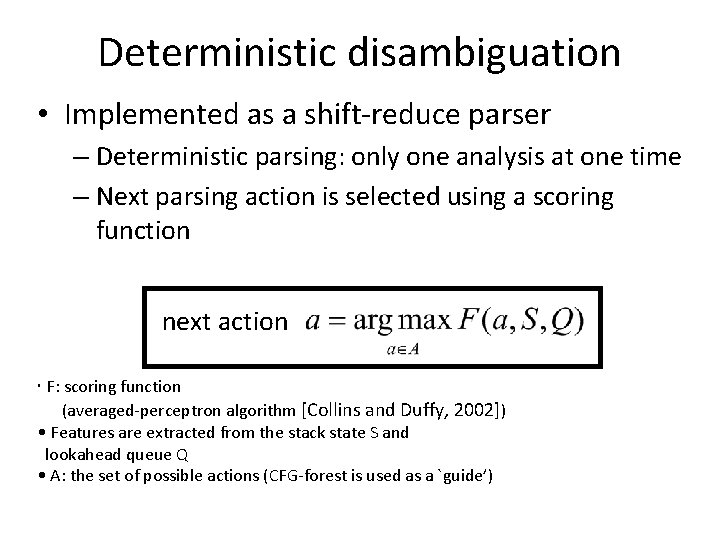

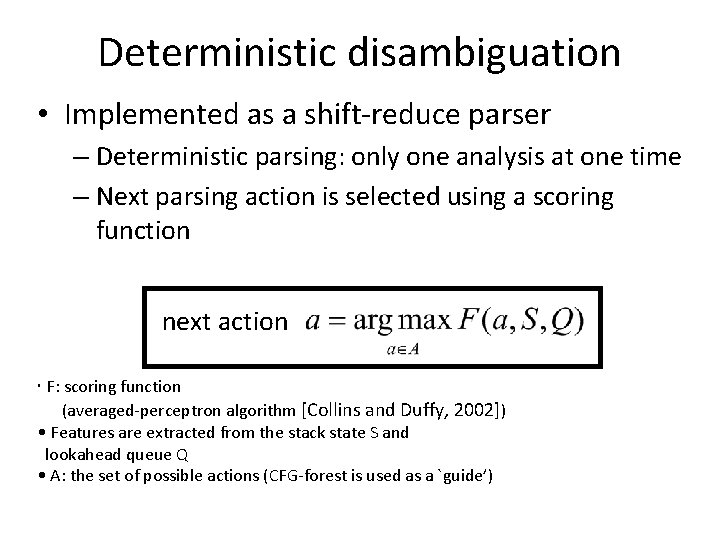

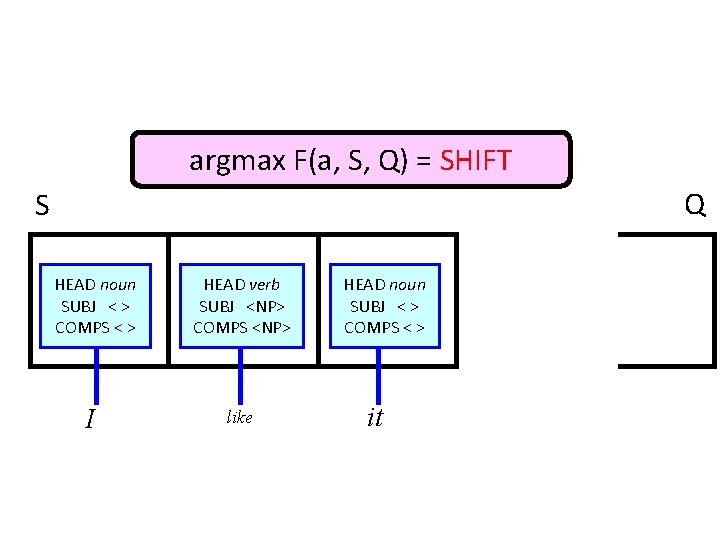

Deterministic disambiguation

- Implemented as a shift-reduce parser
  - Deterministic parsing: only one analysis at a time
  - The next parsing action is selected using a scoring function:

$$\text{next action} = \arg\max_{a \in A} F(a, S, Q)$$

- F: scoring function (averaged-perceptron algorithm [Collins and Duffy, 2002])
- Features are extracted from the stack state S and the lookahead queue Q
- A: the set of possible actions (the CFG-forest is used as a "guide")
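The decision rule above can be sketched as a greedy shift-reduce loop in Python. Assumptions to note: signs are (HEAD, SUBJ, COMPS) triples, the legal action set A comes from checking the two schemas directly instead of consulting a CFG-forest, and the feature templates and weights are hypothetical placeholders for a trained averaged-perceptron model.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Simplified sign (HEAD, SUBJ, COMPS); a stand-in for Enju's typed feature structures.
Sign = Tuple[str, Tuple[str, ...], Tuple[str, ...]]


def cat(s: Sign) -> Optional[str]:
    """Category a sign can fill as an argument (only saturated nouns here)."""
    return "NP" if s[0] == "noun" and not s[1] and not s[2] else None


def head_comp(left: Sign, right: Sign) -> Optional[Sign]:
    """Head-Comp: the left head consumes its first complement."""
    head, subj, comps = left
    return (head, subj, comps[1:]) if comps and comps[0] == cat(right) else None


def subj_head(left: Sign, right: Sign) -> Optional[Sign]:
    """Subj-Head: a right head with no complements left takes its subject."""
    head, subj, comps = right
    if not comps and subj and subj[0] == cat(left):
        return (head, subj[1:], comps)
    return None


SCHEMAS: Dict[str, Callable[[Sign, Sign], Optional[Sign]]] = {
    "REDUCE(Head_Comp)": head_comp,
    "REDUCE(Subj_Head)": subj_head,
}


def features(action: str, stack: List[Sign], queue: List[Sign]) -> List[str]:
    """Hypothetical templates: the action conjoined with the HEADs of s0 and q0."""
    s0 = stack[-1][0] if stack else "NONE"
    q0 = queue[0][0] if queue else "NONE"
    return [f"{action}&s0={s0}", f"{action}&q0={q0}"]


def parse(words: List[Sign], w: Dict[str, float]) -> Tuple[List[str], List[Sign]]:
    """Greedy parsing: apply argmax_{a in A} F(a, S, Q) until no action remains."""
    stack: List[Sign] = []
    queue = list(words)
    history: List[str] = []
    while queue or len(stack) > 1:
        legal: Dict[str, Optional[Sign]] = {}  # action -> resulting mother, if any
        if queue:
            legal["SHIFT"] = None
        if len(stack) >= 2:
            for name, schema in SCHEMAS.items():
                mother = schema(stack[-2], stack[-1])
                if mother is not None:
                    legal[name] = mother
        if not legal:
            break  # dead end: a deterministic parser does not backtrack
        # F(a, S, Q) = sum of the weights of the active features (linear model).
        scores = {a: sum(w.get(f, 0.0) for f in features(a, stack, queue)) for a in legal}
        best = max(scores, key=scores.__getitem__)  # ties: first inserted action
        history.append(best)
        if best == "SHIFT":
            stack.append(queue.pop(0))
        else:
            stack[-2:] = [legal[best]]
    return history, stack


NP: Sign = ("noun", (), ())
LIKE: Sign = ("verb", ("NP",), ("NP",))
GAVE: Sign = ("verb", ("NP",), ("NP", "NP"))

print(parse([NP, LIKE, NP], {}))
print(parse([NP, GAVE, NP, NP], {}))
print(parse([NP, GAVE, NP, NP], {"REDUCE(Head_Comp)&s0=noun": 1.0}))
```

On "I like it" only one action is legal at each step, so the loop produces SHIFT, SHIFT, SHIFT, REDUCE(Head_Comp), REDUCE(Subj_Head) regardless of the weights. With a double-object verb, as in the hypothetical "I gave him it", both SHIFT and REDUCE(Head_Comp) are legal once "him" is on the stack: with all weights zero the tie goes to SHIFT and the parser dead-ends, while a positive weight on `REDUCE(Head_Comp)&s0=noun` leads to a complete analysis. This is why the quality of F matters for a parser that never backtracks.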

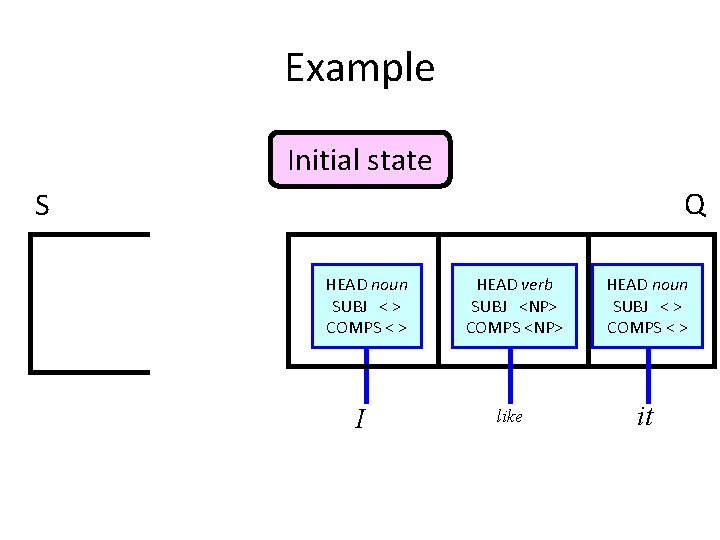

Example: initial state

(Figure: the stack S is empty; the queue Q holds "I" [HEAD noun, SUBJ < >, COMPS < >], "like" [HEAD verb, SUBJ <NP>, COMPS <NP>], and "it" [HEAD noun, SUBJ < >, COMPS < >].)

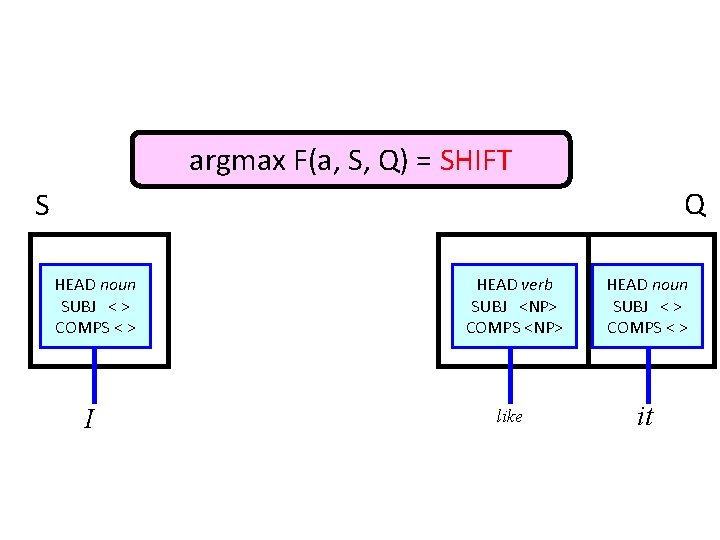

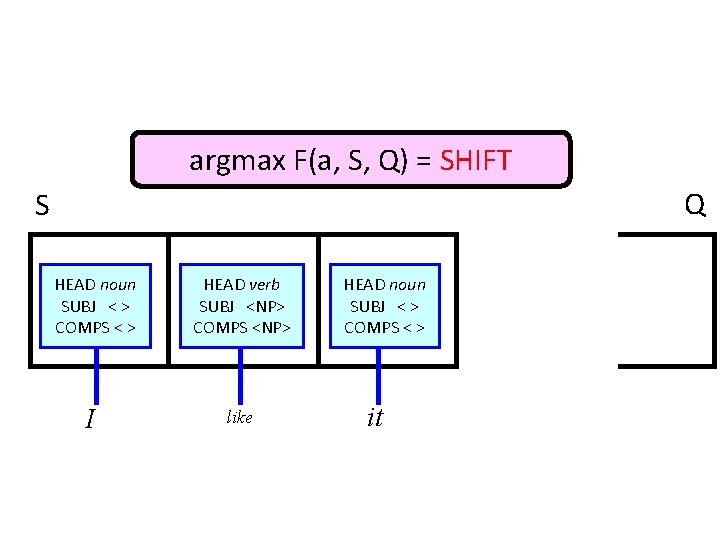

argmax F(a, S, Q) = SHIFT

(Figure: "I", the first word in Q, is moved onto the stack S.)


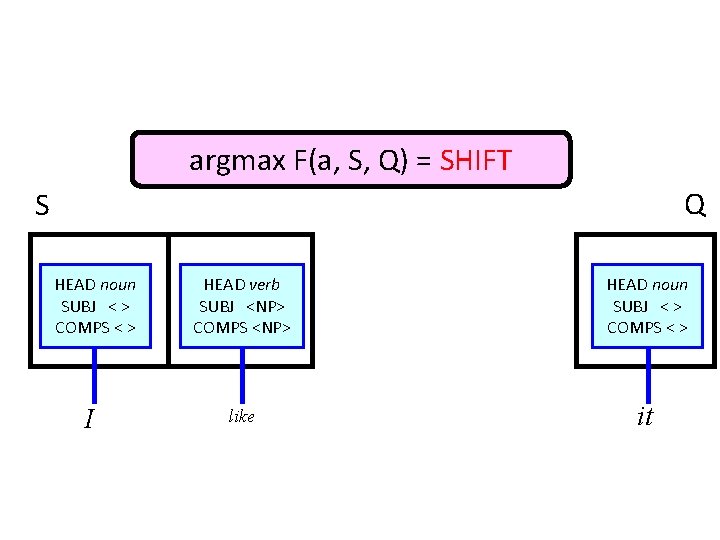

argmax F(a, S, Q) = SHIFT

(Figure: the next word in Q is moved onto the stack S.)

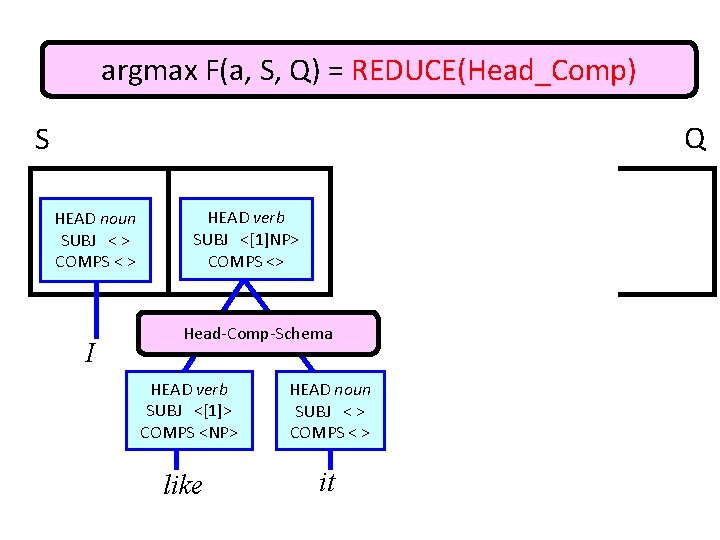

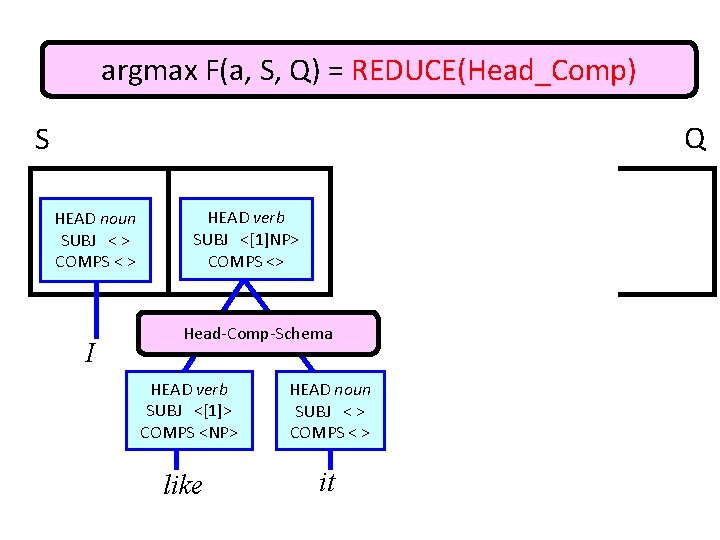

argmax F(a, S, Q) = REDUCE(Head_Comp)

(Figure: the Head-Comp-Schema combines "like" [HEAD verb, SUBJ <[1]>, COMPS <NP>] with its complement "it" into [HEAD verb, SUBJ <[1]NP>, COMPS < >]; "I" remains on the stack.)

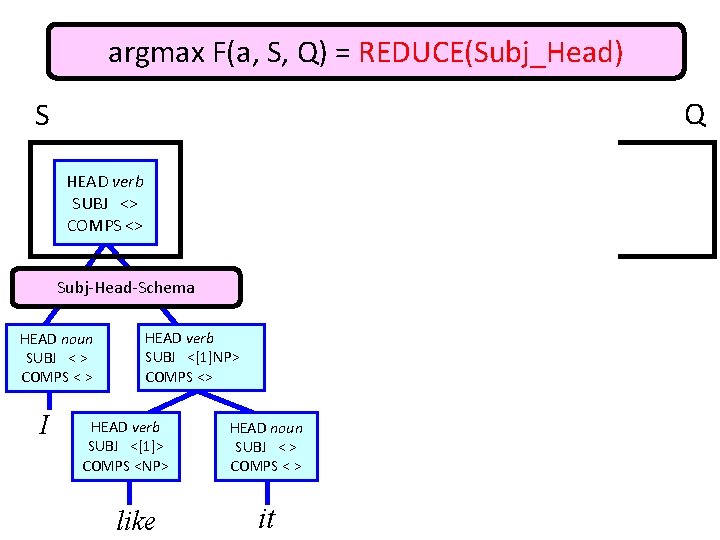

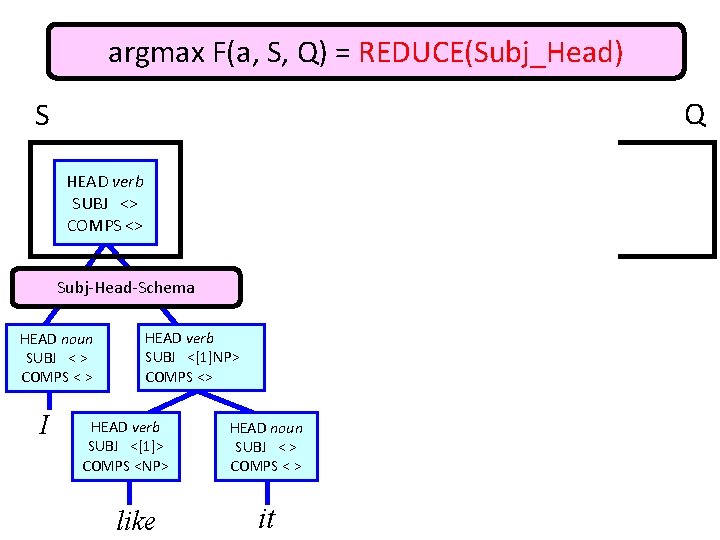

argmax F(a, S, Q) = REDUCE(Subj_Head)

(Figure: the Subj-Head-Schema combines "I" with [HEAD verb, SUBJ <[1]NP>, COMPS < >] into the sentence [HEAD verb, SUBJ < >, COMPS < >].)