HP-SEE Introduction to parallel programming with MPI

- Slides: 20

HP-SEE
Introduction to parallel programming with MPI
www.hp-see.eu

Emanouil Atanassov
Institute of Information and Communication Technologies
Bulgarian Academy of Sciences
emanouil@parallel.bas.bg

The HP-SEE initiative is co-funded by the European Commission under the FP7 Research Infrastructures contract no. 261499

OUTLINE
- Parallel programming techniques
- What is MPI
- Types of MPI communication routines
- General structure of an MPI program
- Execution of MPI programs
- Additional considerations for developing MPI codes

HP-SEE Training – Sofia, Bulgaria, 29-30 Nov. 2010

Parallel computing techniques
- "Parallel computing is a form of computation in which many calculations are carried out simultaneously."
- Fine-grained, coarse-grained, and embarrassing parallelism
- Flynn's taxonomy:
  - SISD: Single Instruction, Single Data
  - SIMD: Single Instruction, Multiple Data
  - MISD: Multiple Instructions, Single Data
  - MIMD: Multiple Instructions, Multiple Data

Parallel Programming with MPI Training – Sofia, Bulgaria, 1 Dec. 2011

MIMD scheme
- MIMD: Multiple Instructions, Multiple Data

[Diagram of the scheme; surviving labels: SI, SISD, SD]

Memory models and parallel computing techniques
- Shared memory vs distributed memory
- What is NUMA
- OpenMP
- Parallel programming using threads
- MPI
- Hybrid approaches

What is MPI
- MPI stands for Message Passing Interface. It is a standardized and portable message-passing system designed by a group of researchers from academia and industry to function on a wide variety of parallel computers.
- The standard defines the syntax and semantics of a core of library routines useful to a wide range of users writing portable message-passing programs in Fortran 77 or C. Bindings for other languages are also supported, including C++. The current version of the standard (as of this 2011 training) is MPI-2.
- MPI is usually deployed as a set of libraries, header files and wrappers for compiling, linking and executing applications. MPI applications are widely portable and can use shared or distributed memory systems.
- The exchange of messages happens over various interconnects:
  - Memory bus
  - InfiniBand
  - Myrinet, Quadrics, …
  - TCP/IP, other network protocols
  - Proprietary interconnects (supercomputers)

General structure of an MPI program

    #include "mpi.h"
    #include <stdio.h>   /* other includes as needed */

    int main(int argc, char **argv) {
        int rank, nprocs, namelen;
        char name[MPI_MAX_PROCESSOR_NAME];

        MPI_Init(&argc, &argv);                  /* start MPI */
        MPI_Comm_size(MPI_COMM_WORLD, &nprocs);  /* number of processes */
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);    /* rank of this process */
        MPI_Get_processor_name(name, &namelen);  /* host name for the %s below */

        /* ... program body, for example: */
        printf("Hello, World! I'm process %d of %d on %s\n", rank, nprocs, name);

        MPI_Finalize();                          /* shut down MPI */
        return 0;
    }

Usually the program is compiled with mpicc or mpif77 (mpif90, mpicxx) and executed with mpirun or mpiexec.

Communicators
- All MPI-specific communications take place with respect to a communicator.
- Communicator: a collection of processes and a context.
- MPI_COMM_WORLD is the predefined communicator of all processes.
- Processes within a communicator are assigned a unique rank value, from 0 to size-1:
  - MPI_Comm_size( MPI_COMM_WORLD, &size ); returns the number of processes in the communicator
  - MPI_Comm_rank( MPI_COMM_WORLD, &rank ); returns the rank of the calling process

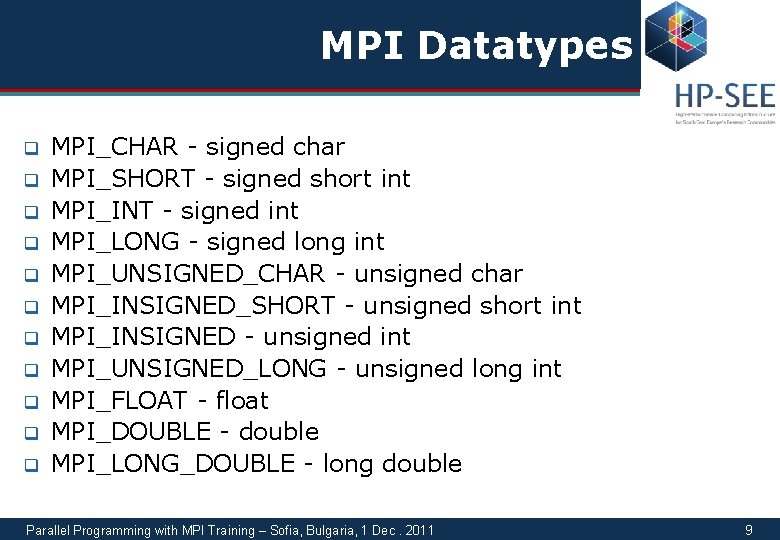

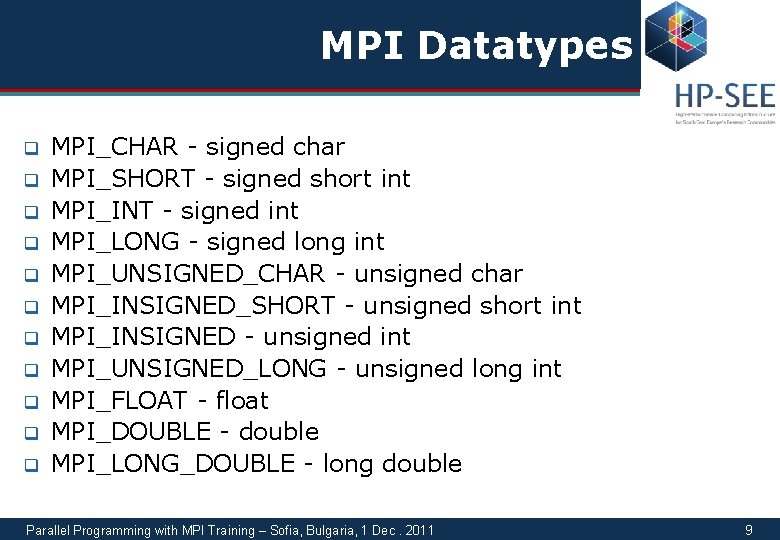

MPI Datatypes
- MPI_CHAR: signed char
- MPI_SHORT: signed short int
- MPI_INT: signed int
- MPI_LONG: signed long int
- MPI_UNSIGNED_CHAR: unsigned char
- MPI_UNSIGNED_SHORT: unsigned short int
- MPI_UNSIGNED: unsigned int
- MPI_UNSIGNED_LONG: unsigned long int
- MPI_FLOAT: float
- MPI_DOUBLE: double
- MPI_LONG_DOUBLE: long double

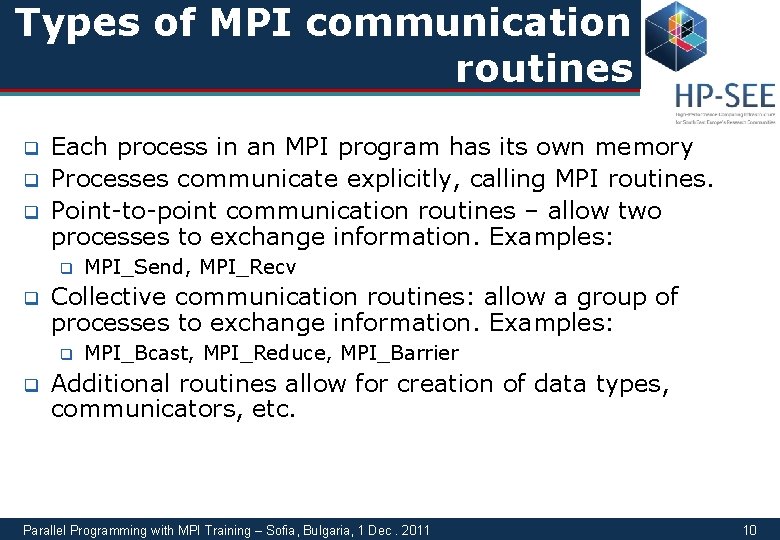

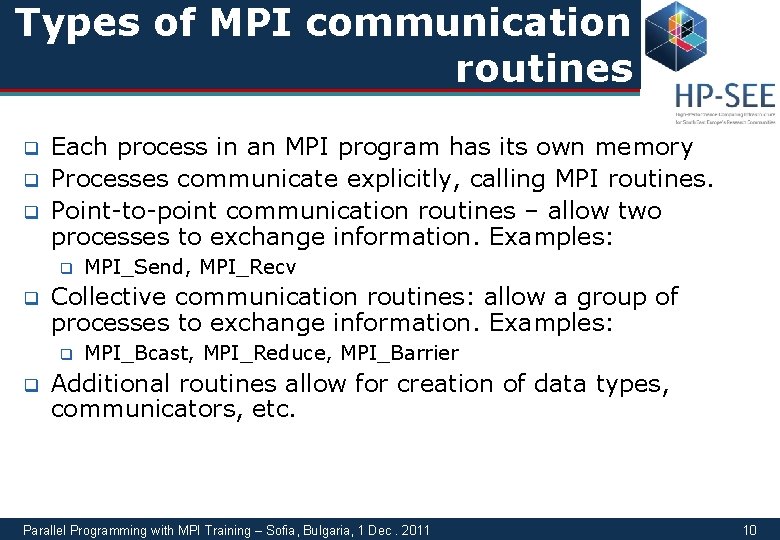

Types of MPI communication routines
- Each process in an MPI program has its own memory.
- Processes communicate explicitly, by calling MPI routines.
- Point-to-point communication routines allow two processes to exchange information. Examples:
  - MPI_Send, MPI_Recv
- Collective communication routines allow a group of processes to exchange information. Examples:
  - MPI_Bcast, MPI_Reduce, MPI_Barrier
- Additional routines allow for creation of data types, communicators, etc.

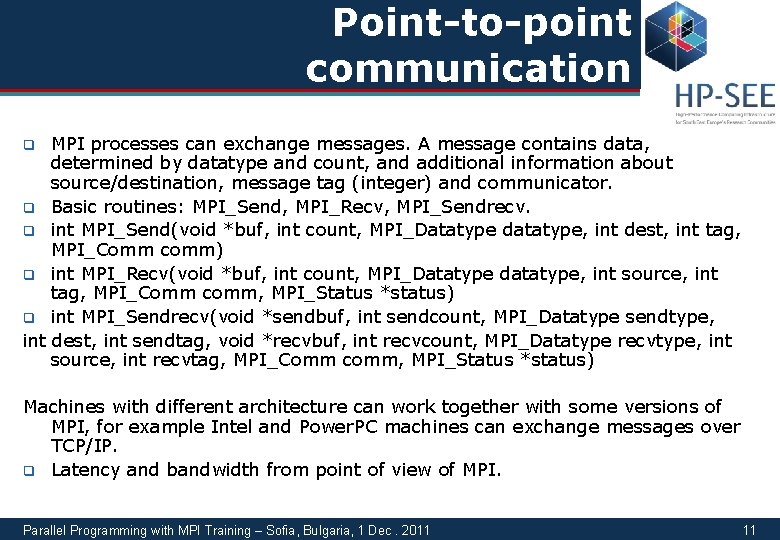

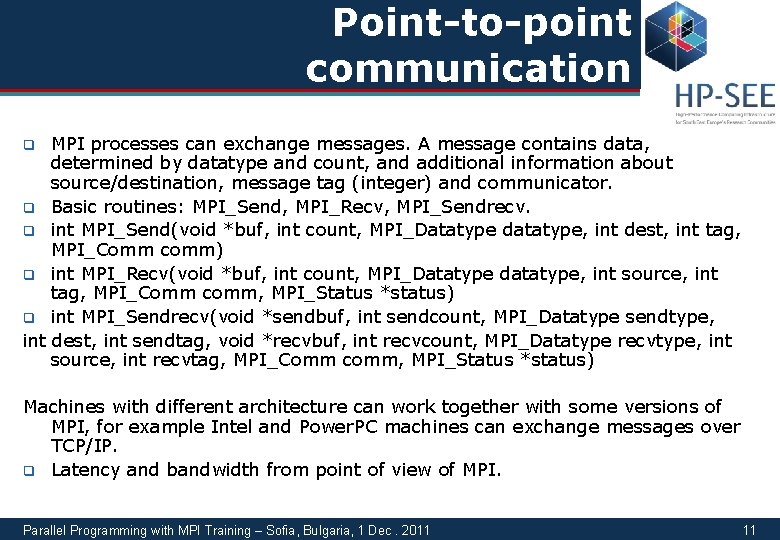

Point-to-point communication
- MPI processes can exchange messages. A message contains data, determined by datatype and count, and additional information about source/destination, message tag (integer) and communicator.
- Basic routines: MPI_Send, MPI_Recv, MPI_Sendrecv.
  - int MPI_Send(void *buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)
  - int MPI_Recv(void *buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status *status)
  - int MPI_Sendrecv(void *sendbuf, int sendcount, MPI_Datatype sendtype, int dest, int sendtag, void *recvbuf, int recvcount, MPI_Datatype recvtype, int source, int recvtag, MPI_Comm comm, MPI_Status *status)
- Machines with different architectures can work together with some versions of MPI; for example, Intel and PowerPC machines can exchange messages over TCP/IP.
- Latency and bandwidth from the point of view of MPI.
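The latency and bandwidth bullet is often made concrete with a first-order cost model. The formula below is a common textbook approximation, not something stated on the slide. The symbols are assumptions introduced here: $\alpha$ is the latency in seconds, $\beta$ the bandwidth in bytes per second, and $n$ the message size in bytes.

$$T(n) \approx \alpha + \frac{n}{\beta}$$

Under this model small messages are dominated by latency and large ones by bandwidth; the two terms are equal at $n = \alpha\beta$. For illustration only, with assumed values $\alpha = 2\,\mu s$ and $\beta = 10^9$ bytes/s, an 8-byte message costs about $2.008\,\mu s$, while a message of $8 \times 10^6$ bytes costs about $8\,ms$.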

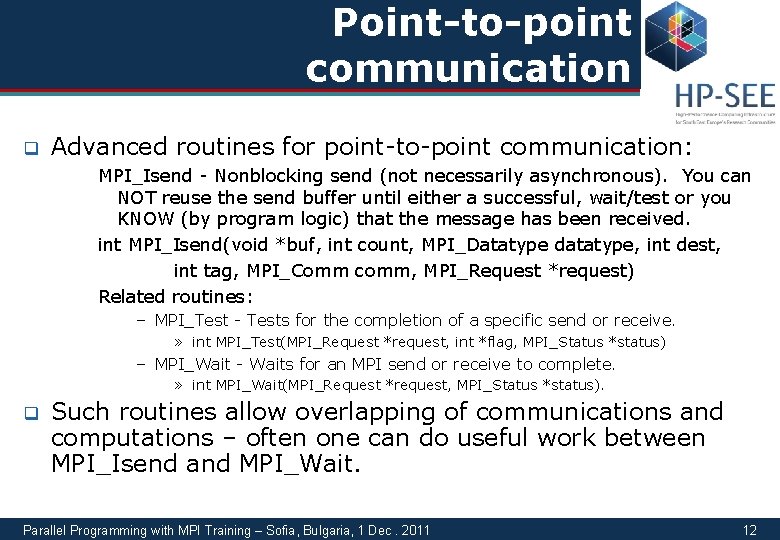

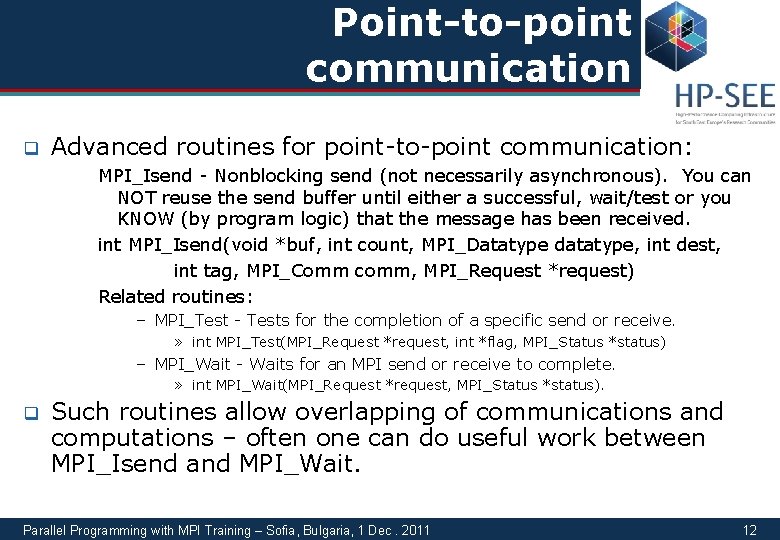

Point-to-point communication
- Advanced routines for point-to-point communication: MPI_Isend, a nonblocking send (not necessarily asynchronous). You must not reuse the send buffer until either a wait/test has succeeded or you know (by program logic) that the message has been received.
  - int MPI_Isend(void *buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm, MPI_Request *request)
- Related routines:
  - MPI_Test: tests for the completion of a specific send or receive.
    - int MPI_Test(MPI_Request *request, int *flag, MPI_Status *status)
  - MPI_Wait: waits for an MPI send or receive to complete.
    - int MPI_Wait(MPI_Request *request, MPI_Status *status)
- Such routines allow overlapping of communications and computations: often one can do useful work between MPI_Isend and MPI_Wait.
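The benefit of overlapping can be estimated with an idealized model. This is a rough sketch, not a statement from the slide, and the symbols $T_{comp}$ (time for the useful work) and $T_{comm}$ (time for the message transfer) are assumptions introduced here.

$$T_{\text{blocking}} \approx T_{comp} + T_{comm}, \qquad T_{\text{overlapped}} \geq \max(T_{comp},\, T_{comm})$$

The lower bound is reached only if the MPI library and the interconnect actually progress the transfer while the process computes, which is consistent with the slide's remark that a nonblocking send is not necessarily asynchronous.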

Collective communications
- Collective routines typically involve all processes from a communicator.
- They should be preferred because they allow the MPI stack to optimize information flow.
- Basic routines:
  - MPI_Barrier: blocks until all processes have reached this routine.
    - int MPI_Barrier(MPI_Comm comm)
  - MPI_Bcast: broadcasts a message from the process with rank root to all other processes of the group.
    - int MPI_Bcast(void *buffer, int count, MPI_Datatype datatype, int root, MPI_Comm comm)
  - MPI_Reduce: reduces values on all processes within a group.
    - int MPI_Reduce(void *sendbuf, void *recvbuf, int count, MPI_Datatype datatype, MPI_Op op, int root, MPI_Comm comm)
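What "reduces values" means can be written out for the common case op = MPI_SUM. The notation is introduced here for illustration: $P$ is the number of processes in comm and $\text{sendbuf}_r$ is the send buffer of the process with rank $r$. The reduction is applied element by element, and only the root receives the result:

$$\text{recvbuf}[j] = \sum_{r=0}^{P-1} \text{sendbuf}_r[j], \qquad j = 0, \dots, \text{count}-1$$

Other predefined operations such as MPI_MAX or MPI_PROD follow the same element-wise pattern with the sum replaced by the corresponding operation.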

Collective communications
More advanced routines for collective communication:
- MPI_Scatter: sends data from one task to all tasks in a group.
  - int MPI_Scatter(void *sendbuf, int sendcount, MPI_Datatype sendtype, void *recvbuf, int recvcount, MPI_Datatype recvtype, int root, MPI_Comm comm)
- MPI_Gather: gathers values from a group of processes.
  - int MPI_Gather(void *sendbuf, int sendcount, MPI_Datatype sendtype, void *recvbuf, int recvcount, MPI_Datatype recvtype, int root, MPI_Comm comm)
- MPI_Scatterv: scatters a buffer in parts to all tasks in a group.
  - int MPI_Scatterv(void *sendbuf, int *sendcounts, int *displs, MPI_Datatype sendtype, void *recvbuf, int recvcount, MPI_Datatype recvtype, int root, MPI_Comm comm)
- MPI_Allreduce: combines values from all processes and distributes the result back to all processes.
  - int MPI_Allreduce(void *sendbuf, void *recvbuf, int count, MPI_Datatype datatype, MPI_Op op, MPI_Comm comm)
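MPI_Scatterv needs two arrays on the root, sendcounts and displs, which describe how the buffer is cut into parts. A minimal sketch of one way to fill them follows, assuming the goal is an even block split in which the first ranks absorb the remainder. The helper name block_partition is hypothetical, and the code is plain C with no MPI calls so that it can be tried without an MPI installation.

```c
#include <assert.h>

/*
 * Fill counts[] and displs[] for distributing n elements over nprocs ranks
 * as evenly as possible: the first (n % nprocs) ranks get one extra element.
 * Both arrays must have room for nprocs entries. Their layout matches what
 * MPI_Scatterv expects for its sendcounts and displs arguments.
 * (block_partition is an illustrative name, not part of MPI.)
 */
void block_partition(int n, int nprocs, int *counts, int *displs)
{
    assert(n >= 0 && nprocs > 0);

    int base = n / nprocs;   /* elements every rank receives */
    int extra = n % nprocs;  /* leftover elements, one each for the first ranks */
    int offset = 0;          /* running start index into the send buffer */

    for (int r = 0; r < nprocs; r++) {
        counts[r] = base + (r < extra ? 1 : 0);
        displs[r] = offset;
        offset += counts[r];
    }
}
```

On the root, the two arrays can then be passed as sendcounts and displs, and each rank would use counts[rank] as its recvcount.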

Compilation of MPI programs
- Compilation is done with mpicc or mpif77 instead of plain gcc or gfortran.
- For software developed by other people, in many cases one should replace CC=gcc with CC=mpicc in the Makefile, or run: MPICC=mpicc MPIF77=mpif77 ./configure
- For the Intel compiler it is preferable to use mpiicc and mpiifort (mpiicpc for C++ compilation).
- These commands are wrappers that invoke the regular compiler with the appropriate compile (-I…) and link flags, adding the necessary MPI libraries.
- It is usually impossible to combine object files or libraries compiled with different compilers.

Hybrid OpenMP-MPI programming
- OpenMP relies on compiler directives for shared memory parallel programming.
- It is generally advantageous to use OpenMP within a node and MPI across nodes, in order to reduce the number of MPI processes.
- However, one should take care either to use a thread-safe version of the MPI library or (easier for beginners) to call MPI routines from only one of the OpenMP threads.
- A performance advantage from such hybrid programming is not guaranteed in practice.

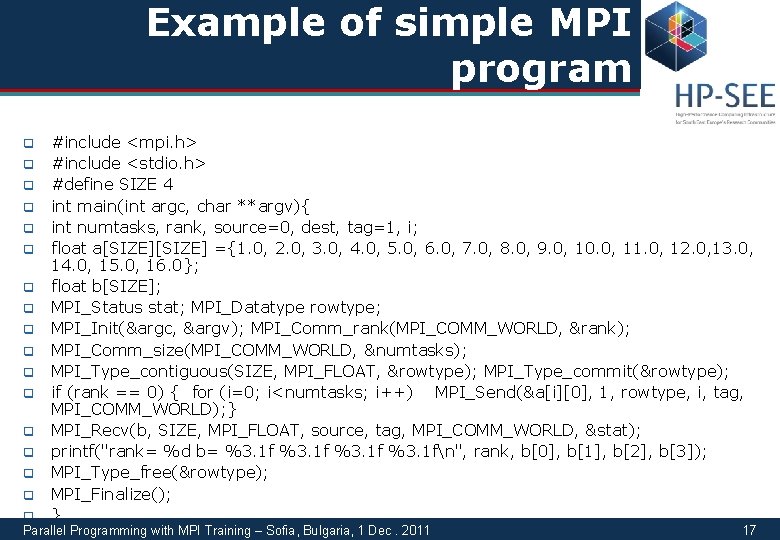

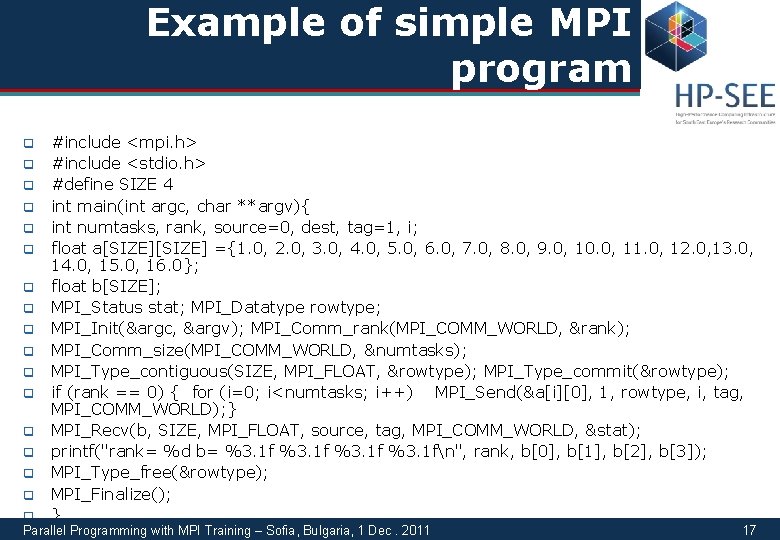

Example of a simple MPI program

    #include <mpi.h>
    #include <stdio.h>
    #define SIZE 4

    int main(int argc, char **argv) {
        int numtasks, rank, source = 0, tag = 1, i;
        float a[SIZE][SIZE] = {{ 1.0,  2.0,  3.0,  4.0},
                               { 5.0,  6.0,  7.0,  8.0},
                               { 9.0, 10.0, 11.0, 12.0},
                               {13.0, 14.0, 15.0, 16.0}};
        float b[SIZE];
        MPI_Status stat;
        MPI_Datatype rowtype;

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Comm_size(MPI_COMM_WORLD, &numtasks);

        /* rowtype describes one row of a: SIZE contiguous floats */
        MPI_Type_contiguous(SIZE, MPI_FLOAT, &rowtype);
        MPI_Type_commit(&rowtype);

        if (numtasks <= SIZE) {
            /* Rank 0 sends row i to rank i, including itself; the small
               blocking self-send relies on the library buffering it. */
            if (rank == 0) {
                for (i = 0; i < numtasks; i++)
                    MPI_Send(&a[i][0], 1, rowtype, i, tag, MPI_COMM_WORLD);
            }
            MPI_Recv(b, SIZE, MPI_FLOAT, source, tag, MPI_COMM_WORLD, &stat);
            printf("rank= %d b= %3.1f %3.1f %3.1f %3.1f\n",
                   rank, b[0], b[1], b[2], b[3]);
        } else if (rank == 0) {
            /* a has only SIZE rows, so more processes cannot be served */
            printf("Run with at most %d processes.\n", SIZE);
        }

        MPI_Type_free(&rowtype);
        MPI_Finalize();
        return 0;
    }

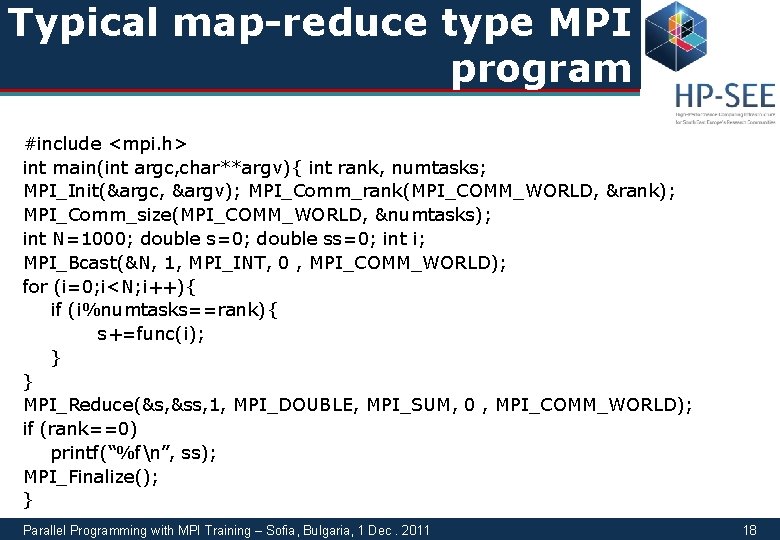

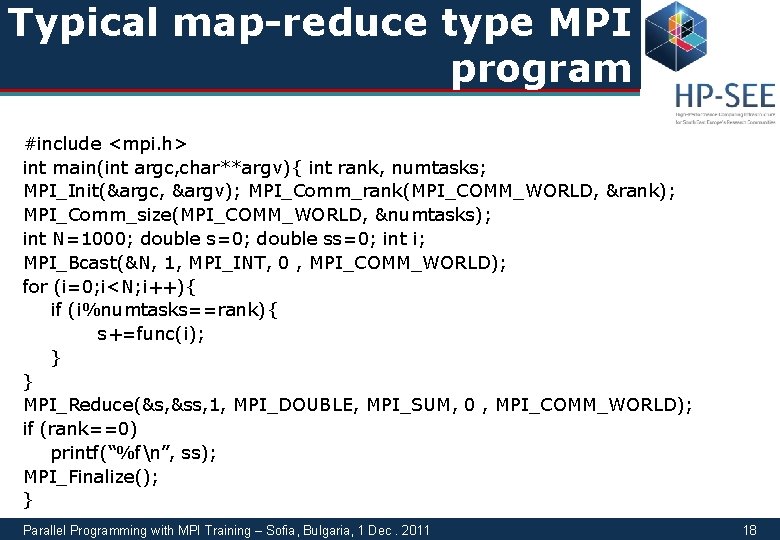

Typical map-reduce type MPI program

    #include <mpi.h>
    #include <stdio.h>

    /* Placeholder work function: func is not defined on the slide. */
    static double func(int i) { return (double)i; }

    int main(int argc, char **argv) {
        int rank, numtasks;
        int N = 1000, i;
        double s = 0, ss = 0;

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Comm_size(MPI_COMM_WORLD, &numtasks);

        /* Rank 0 shares the problem size with all processes. */
        MPI_Bcast(&N, 1, MPI_INT, 0, MPI_COMM_WORLD);

        /* Map: each rank handles the indices i with i % numtasks == rank. */
        for (i = 0; i < N; i++) {
            if (i % numtasks == rank) {
                s += func(i);
            }
        }

        /* Reduce: sum the partial results s into ss on rank 0. */
        MPI_Reduce(&s, &ss, 1, MPI_DOUBLE, MPI_SUM, 0, MPI_COMM_WORLD);
        if (rank == 0) printf("%f\n", ss);

        MPI_Finalize();
        return 0;
    }
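The cyclic work split used in this program (rank r takes every index i with i % numtasks == r) can be checked without an MPI installation. The sketch below is a serial emulation, not MPI code. The names partial_sum and emulate_reduce_sum are hypothetical helpers, and func is an assumed stand-in because the slide does not define it.

```c
#include <assert.h>

/* Assumed stand-in for the per-item work; the slide leaves func undefined. */
double func(int i) { return (double)i; }

/*
 * Partial sum one rank would compute under the cyclic distribution.
 * Starting at i = rank and stepping by numtasks visits exactly the indices
 * with i % numtasks == rank, without testing every i in [0, n).
 */
double partial_sum(int rank, int numtasks, int n)
{
    assert(numtasks > 0 && rank >= 0 && rank < numtasks);

    double s = 0.0;
    for (int i = rank; i < n; i += numtasks)
        s += func(i);
    return s;
}

/*
 * Serial emulation of what MPI_Reduce with MPI_SUM delivers to the root:
 * the sum of the partial results of all ranks.
 */
double emulate_reduce_sum(int numtasks, int n)
{
    double total = 0.0;
    for (int rank = 0; rank < numtasks; rank++)
        total += partial_sum(rank, numtasks, n);
    return total;
}
```

Because every index belongs to exactly one rank, the emulated total equals the serial sum for any number of tasks. The strided loop is also a cheaper way to write the rank's share of the work than filtering all N indices with the modulo test.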

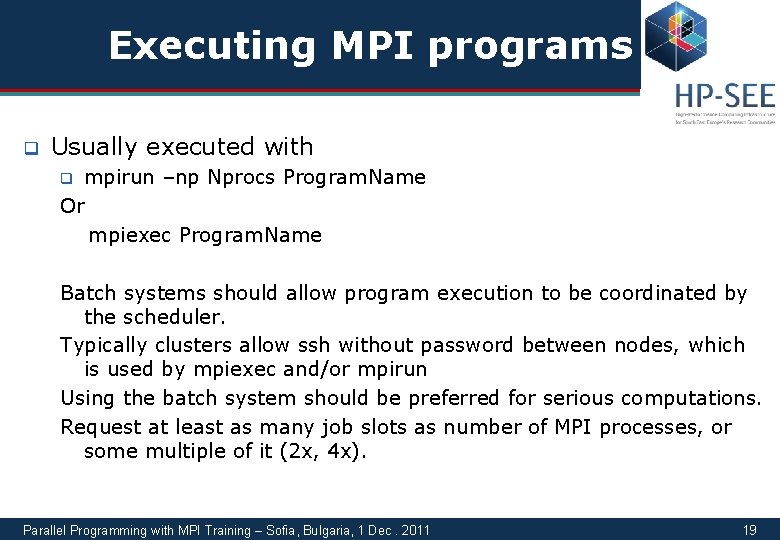

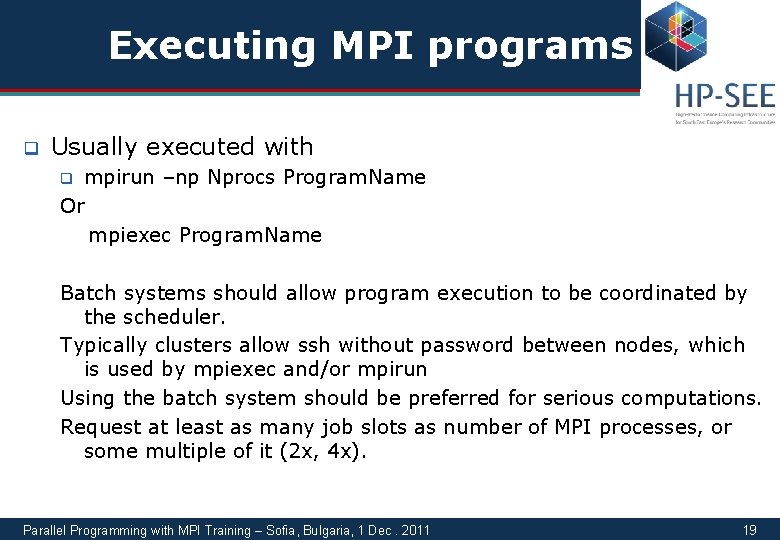

Executing MPI programs
- Usually executed with mpirun -np Nprocs ProgramName or mpiexec ProgramName.
- Batch systems should allow program execution to be coordinated by the scheduler.
- Typically clusters allow ssh without a password between nodes, which is used by mpiexec and/or mpirun.
- Using the batch system should be preferred for serious computations.
- Request at least as many job slots as the number of MPI processes, or some multiple of it (2x, 4x).

Conclusions
- MPI is a portable, widely used and well understood standard for parallel programming.
- Starting to program using MPI is easy and can be done on a single computer.
- MPI scales to hundreds of thousands of CPU cores.
- Some problems are easier to parallelize than others.
- Have fun with MPI!