HPC XC cluster Slurm and HPCLSF YuSheng Guo

- Slides: 38

HPC XC cluster Slurm and HPCLSF

Yu-Sheng Guo, Account Support Consultant, HP Taiwan

© 2006 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice.

SLURM

2020/12/8 HP XC Cluster = A simple, scalable, and flexible Linux cluster solution

What is SLURM?

- SLURM: Simple Linux Utility for Resource Management
  - Arbitrates requests by managing a queue of pending work
  - Allocates access to computer nodes within a cluster
  - Launches parallel jobs and manages them (I/O, signals, limits, etc.)
- NOT a comprehensive cluster administration or monitoring package
- NOT a sophisticated scheduling system
  - An external entity can manage the SLURM queues via plugin

SLURM Design Criteria

- Simple
  - Scheduling complexity external to SLURM (LSF, PBS)
- Open source: GPL
- Fault-tolerant
  - For SLURM daemons and its jobs
- Secure
  - Restricted user access to compute nodes
- System administrator friendly
  - Simple configuration file
- Scalable to thousands of nodes

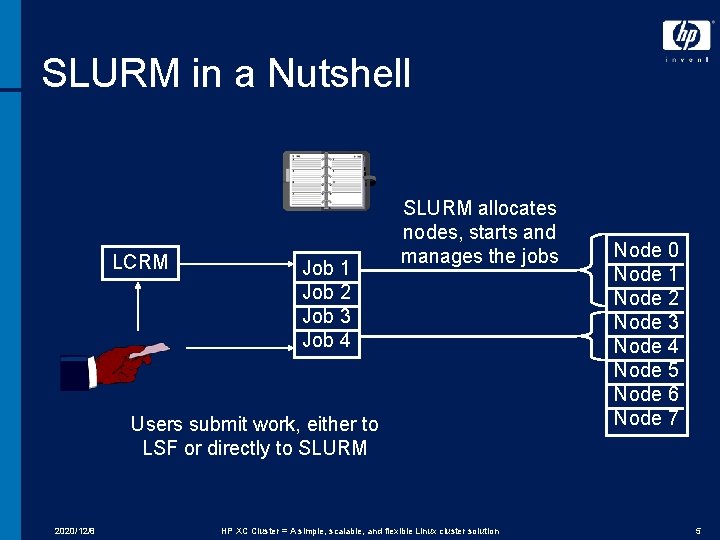

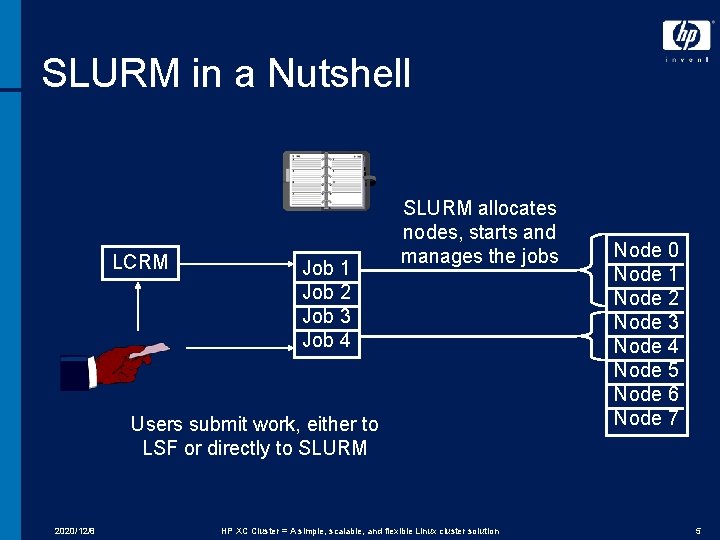

SLURM in a Nutshell

- Users submit work, either to LSF or directly to SLURM
- SLURM allocates nodes, starts and manages the jobs

Diagram labels: LCRM; Job 1 to Job 4; Node 0 to Node 7.

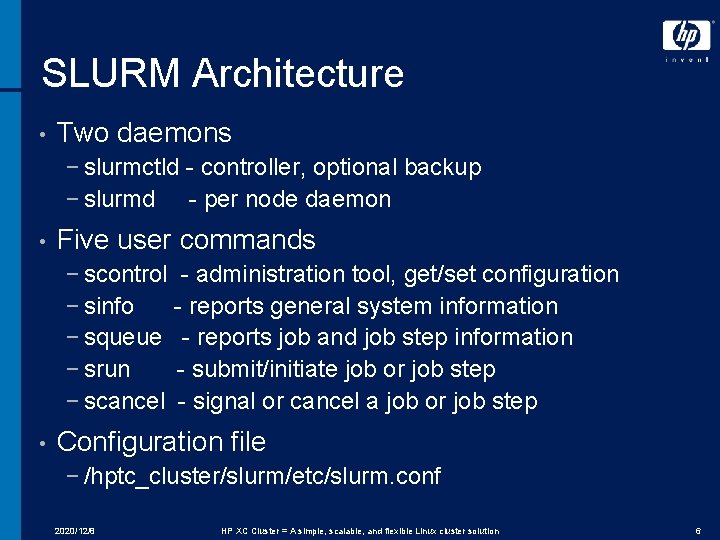

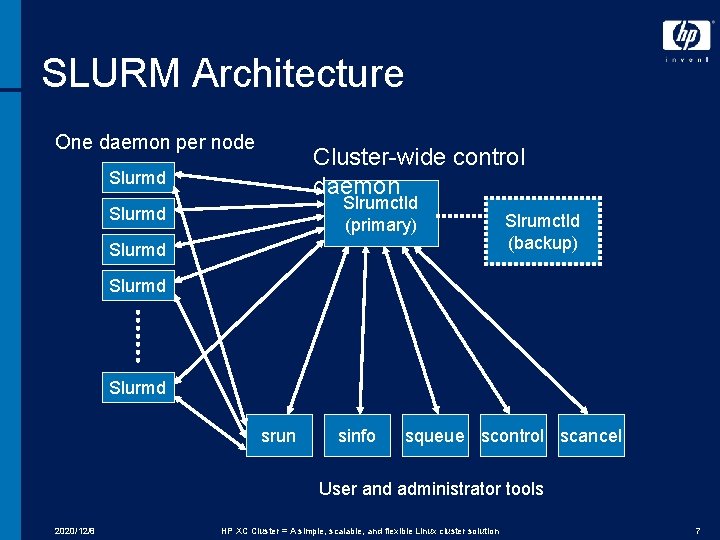

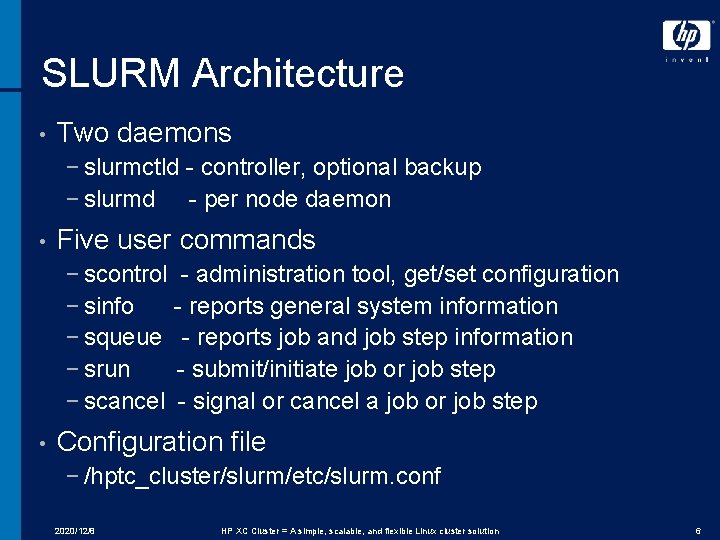

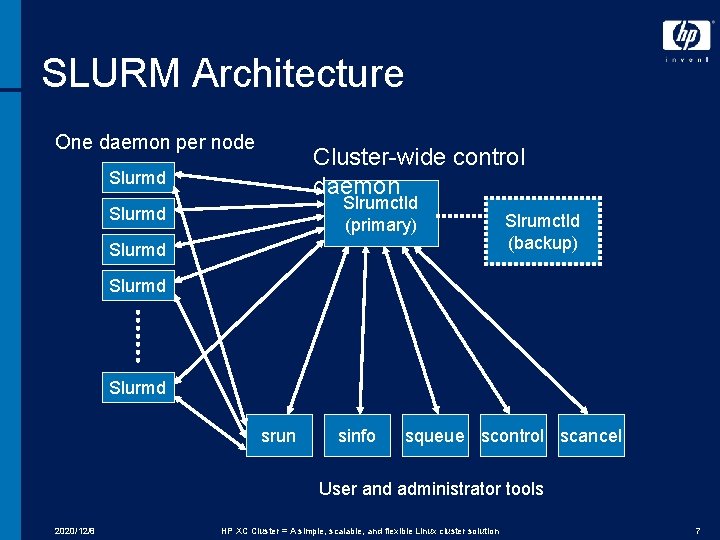

SLURM Architecture

- Two daemons
  - slurmctld: controller, optional backup
  - slurmd: per-node daemon
- Five user commands
  - scontrol: administration tool, get/set configuration
  - sinfo: reports general system information
  - squeue: reports job and job step information
  - srun: submit/initiate job or job step
  - scancel: signal or cancel a job or job step
- Configuration file
  - /hptc_cluster/slurm/etc/slurm.conf

SLURM Architecture (diagram)

- Cluster-wide control daemon: slurmctld (primary) and slurmctld (backup)
- One daemon per node: slurmd
- User and administrator tools: srun, sinfo, squeue, scontrol, scancel

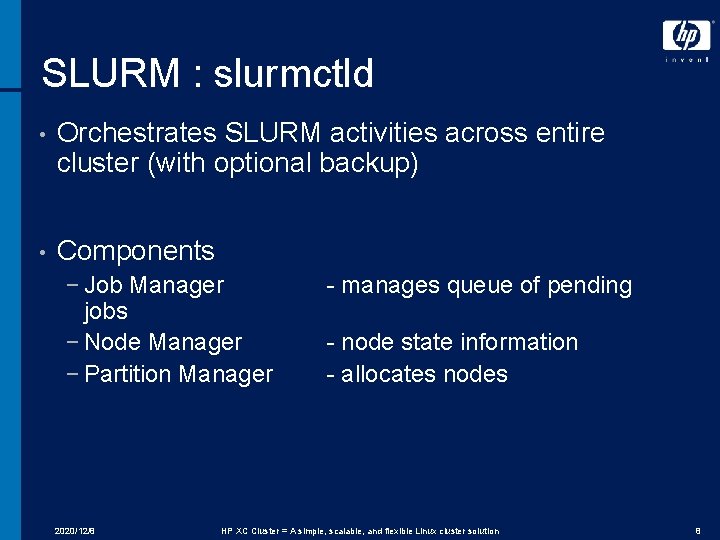

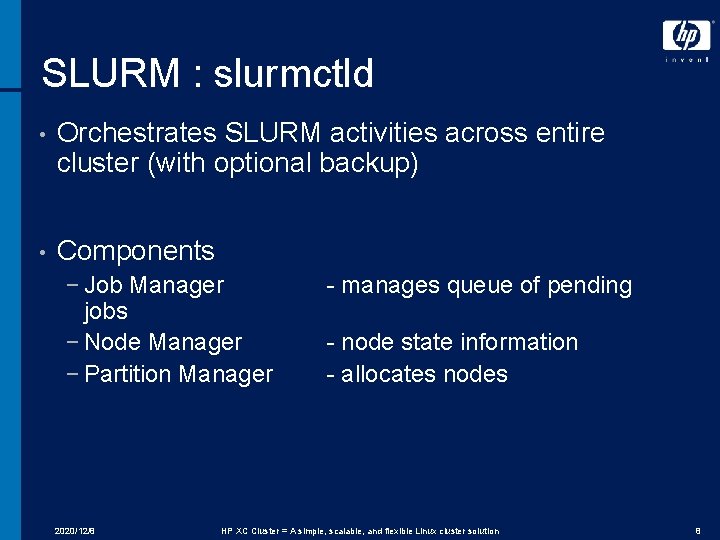

SLURM: slurmctld

- Orchestrates SLURM activities across the entire cluster (with optional backup)
- Components
  - Job Manager: manages queue of pending jobs
  - Node Manager: node state information
  - Partition Manager: allocates nodes

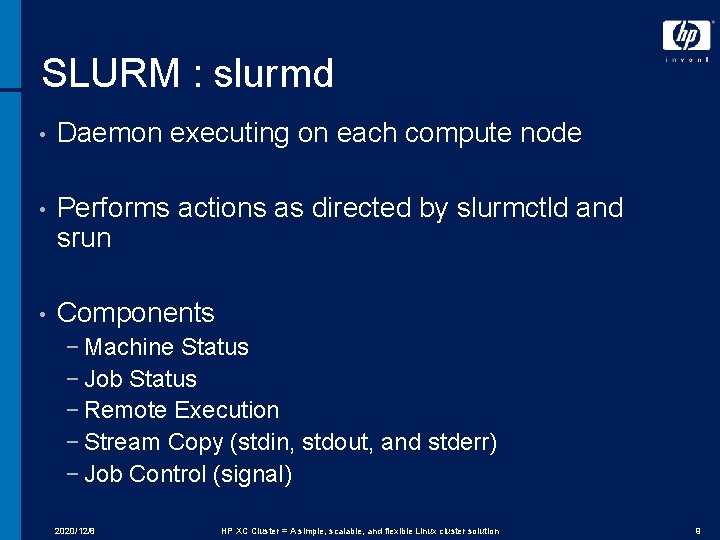

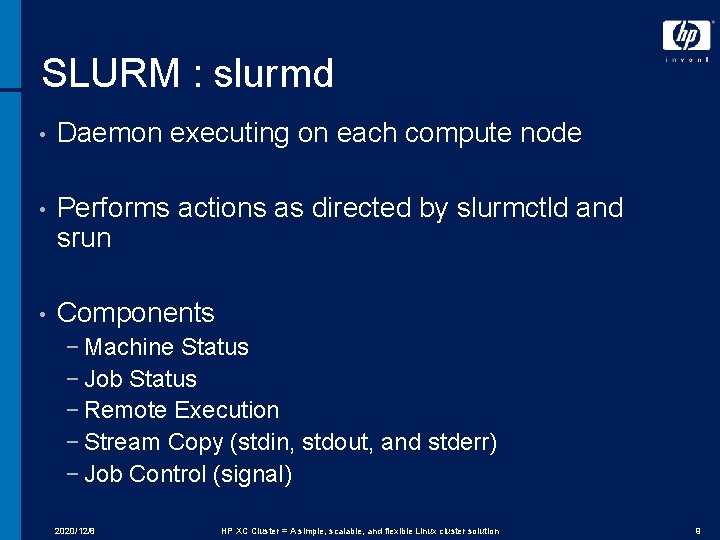

SLURM: slurmd

- Daemon executing on each compute node
- Performs actions as directed by slurmctld and srun
- Components
  - Machine Status
  - Job Status
  - Remote Execution
  - Stream Copy (stdin, stdout, and stderr)
  - Job Control (signal)

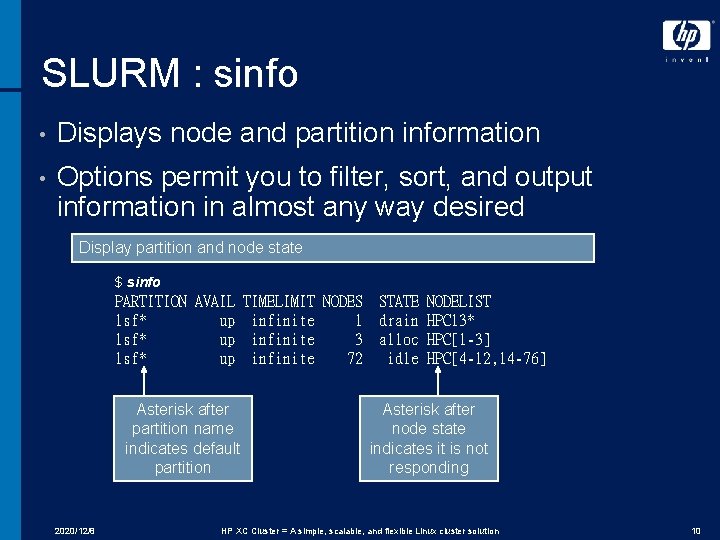

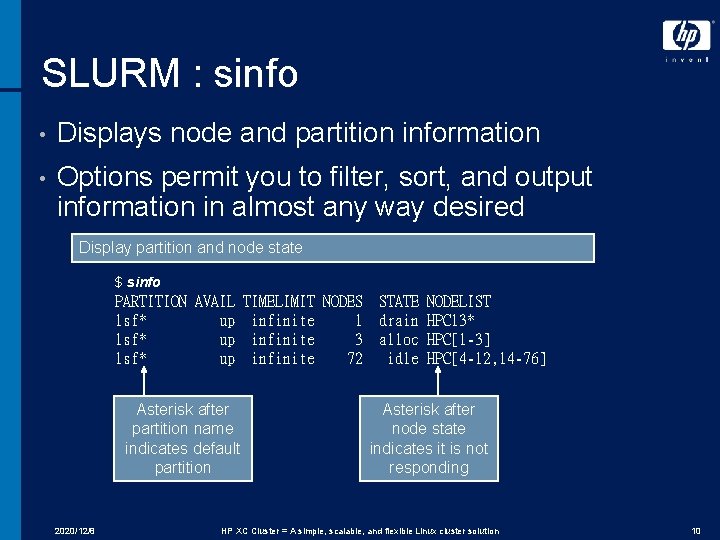

SLURM: sinfo

- Displays node and partition information
- Options permit you to filter, sort, and output information in almost any way desired

Display partition and node state:

    $ sinfo
    PARTITION AVAIL TIMELIMIT NODES STATE NODELIST
    lsf*      up    infinite      1 drain HPC13*
    lsf*      up    infinite      3 alloc HPC[1-3]
    lsf*      up    infinite     72 idle  HPC[4-12,14-76]

- Asterisk after partition name indicates default partition
- Asterisk after node state indicates it is not responding
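The default sinfo listing can also be summarised with a small filter. The following is a minimal sketch, not part of the original slides: the function name nodes_in_state is hypothetical, and it assumes the six-column layout shown on this slide (NODES in column 4, STATE in column 5).

```shell
# nodes_in_state STATE: read default `sinfo` output on stdin and print the
# total of the NODES column for rows whose STATE column starts with STATE.
# A trailing "*" on the state (node not responding) still matches.
# Hypothetical helper; assumes the column order shown on the slide.
nodes_in_state() {
    awk -v want="$1" '
        NR == 1 { next }                       # skip the header row
        index($5, want) == 1 { total += $4 }   # column 5 = STATE, 4 = NODES
        END { print total + 0 }                # print 0 when nothing matched
    '
}
```

On a cluster this would be used as `sinfo | nodes_in_state idle`; with the listing above it would report 72.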

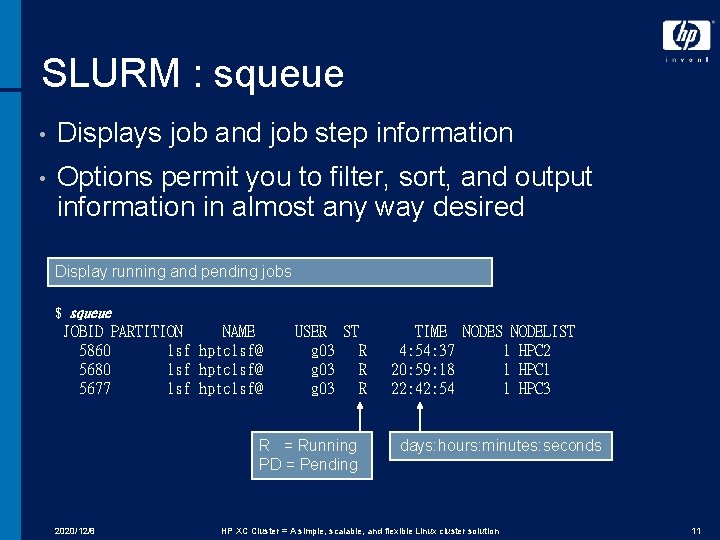

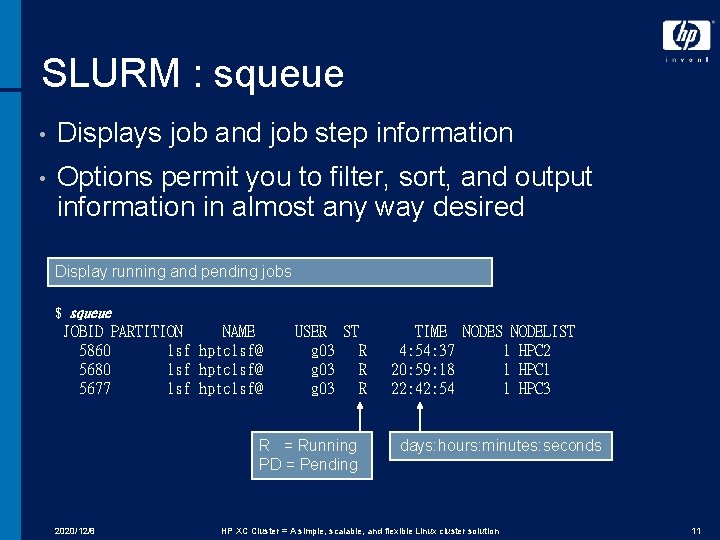

SLURM: squeue

- Displays job and job step information
- Options permit you to filter, sort, and output information in almost any way desired

Display running and pending jobs (the slide shows a single USER/ST entry, g03 and R):

    $ squeue
    JOBID PARTITION NAME     USER ST     TIME NODES NODELIST
    5860  lsf       hptclsf@          4:54:37     1 HPC2
    5680  lsf       hptclsf@         20:59:18     1 HPC1
    5677  lsf       hptclsf@         22:42:54     1 HPC3

- ST: R = Running, PD = Pending
- TIME: days:hours:minutes:seconds
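For sorting or thresholds, the TIME column can be converted to seconds. This is a sketch only: the function name elapsed_seconds is hypothetical, and the "D-HH:MM:SS" layout with a hyphen after the day count is an assumption based on current squeue releases (the slide only gives the field order).

```shell
# elapsed_seconds TIME: convert a squeue TIME value to seconds.
# Accepts MM:SS, HH:MM:SS, or D-HH:MM:SS. The "-" day separator is an
# assumption; the slide only lists days, hours, minutes, seconds.
elapsed_seconds() {
    echo "$1" | awk -F'[-:]' '{
        split("1 60 3600 86400", unit, " ")   # seconds per field, right to left
        total = 0
        for (i = NF; i >= 1; i--)
            total += $i * unit[NF - i + 1]
        print total
    }'
}
```

For example, `elapsed_seconds 4:54:37` prints 17677 (4 hours, 54 minutes, 37 seconds).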

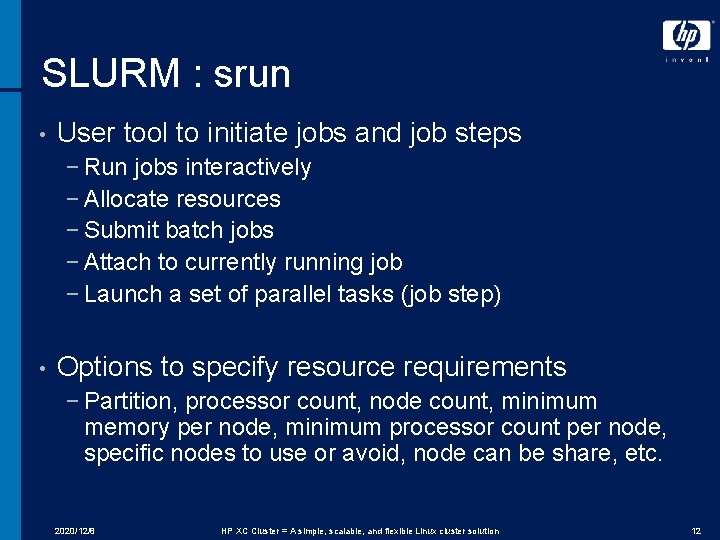

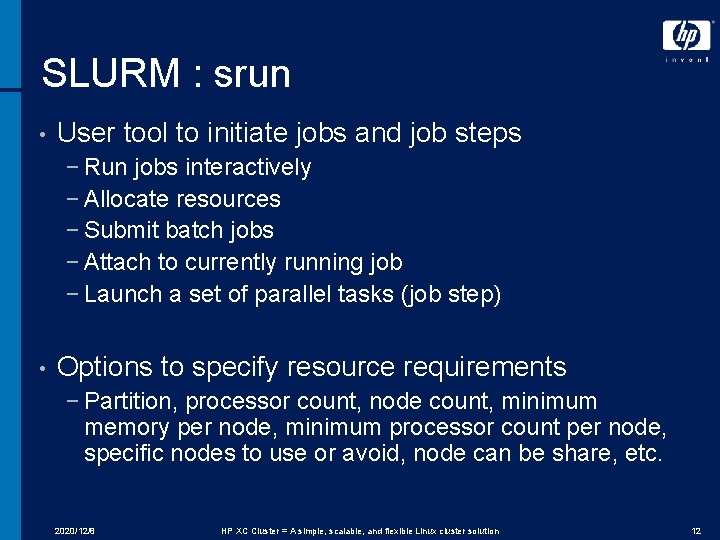

SLURM: srun

- User tool to initiate jobs and job steps
  - Run jobs interactively
  - Allocate resources
  - Submit batch jobs
  - Attach to a currently running job
  - Launch a set of parallel tasks (job step)
- Options to specify resource requirements
  - Partition, processor count, node count, minimum memory per node, minimum processor count per node, specific nodes to use or avoid, whether nodes can be shared, etc.

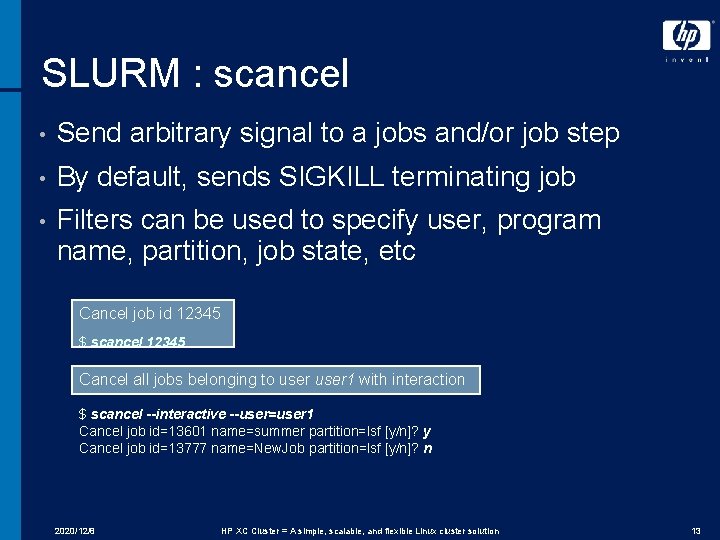

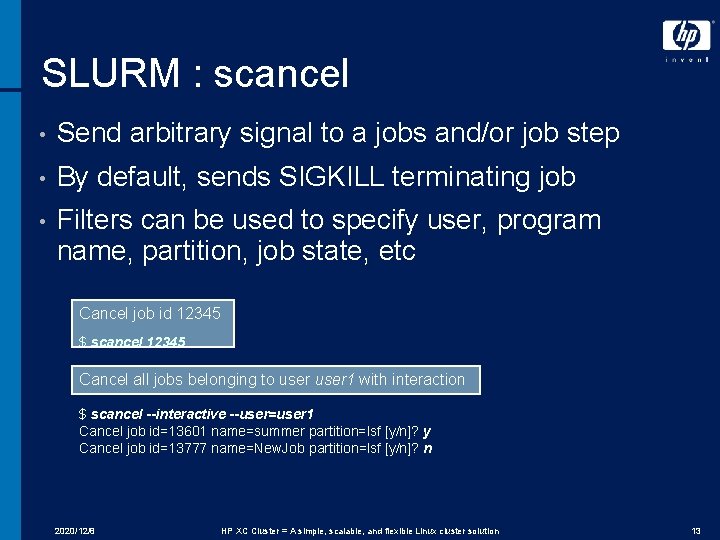

SLURM: scancel

- Sends an arbitrary signal to a job and/or job step
- By default, sends SIGKILL, terminating the job
- Filters can be used to specify user, program name, partition, job state, etc.

Cancel job id 12345:

    $ scancel 12345

Cancel all jobs belonging to user1, with interaction:

    $ scancel --interactive --user=user1
    Cancel job id=13601 name=summer partition=lsf [y/n]? y
    Cancel job id=13777 name=NewJob partition=lsf [y/n]? n

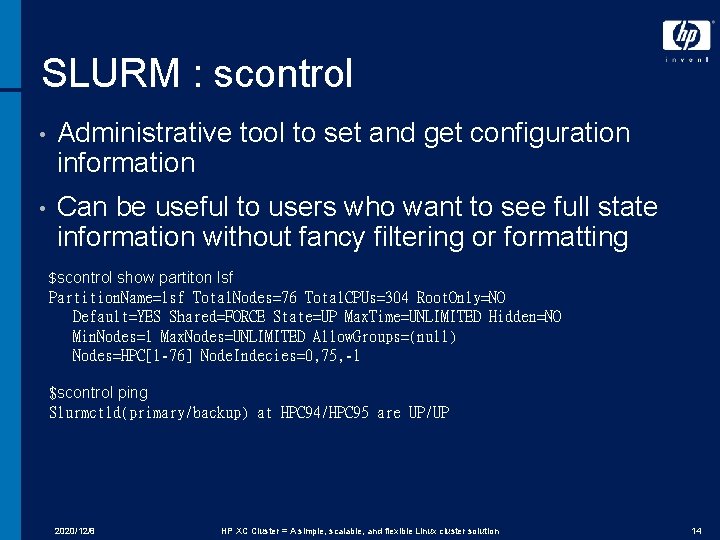

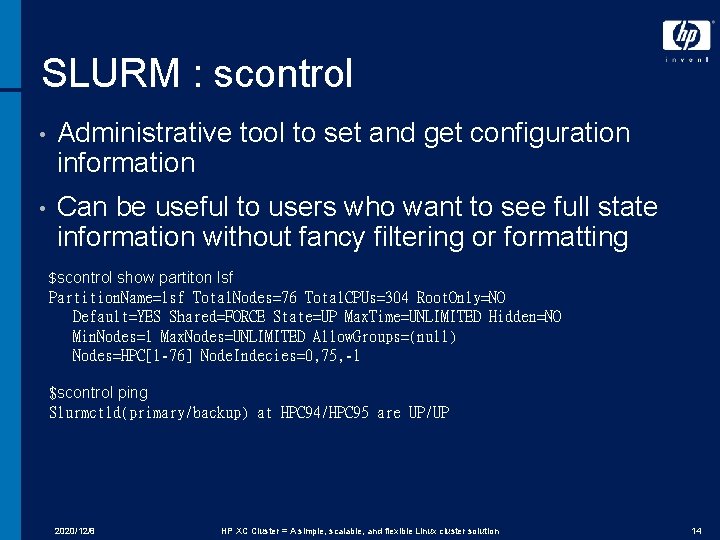

SLURM: scontrol

- Administrative tool to set and get configuration information
- Can be useful to users who want to see full state information without fancy filtering or formatting

Examples:

    $ scontrol show partition lsf
    PartitionName=lsf TotalNodes=76 TotalCPUs=304 RootOnly=NO
    Default=YES Shared=FORCE State=UP MaxTime=UNLIMITED Hidden=NO
    MinNodes=1 MaxNodes=UNLIMITED AllowGroups=(null)
    Nodes=HPC[1-76] NodeIndecies=0,75,-1

    $ scontrol ping
    Slurmctld(primary/backup) at HPC94/HPC95 are UP/UP
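Because scontrol prints Key=Value pairs, a single field can be picked out with standard text tools. A minimal sketch, assuming the pairs are separated by whitespace as in the listing on this slide; the function name scontrol_field is hypothetical.

```shell
# scontrol_field KEY: read `scontrol show ...` output on stdin and print the
# value of the first KEY=value pair whose key matches KEY exactly.
# Hypothetical helper; assumes whitespace-separated Key=Value pairs.
scontrol_field() {
    tr -s ' \t' '\n\n' |                      # one Key=Value pair per line
        awk -F= -v key="$1" '
            $1 == key { print substr($0, length(key) + 2); exit }
        '
}
```

On a cluster, `scontrol show partition lsf | scontrol_field TotalCPUs` would print 304 for the partition shown here. The exact key match keeps Nodes from matching TotalNodes.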

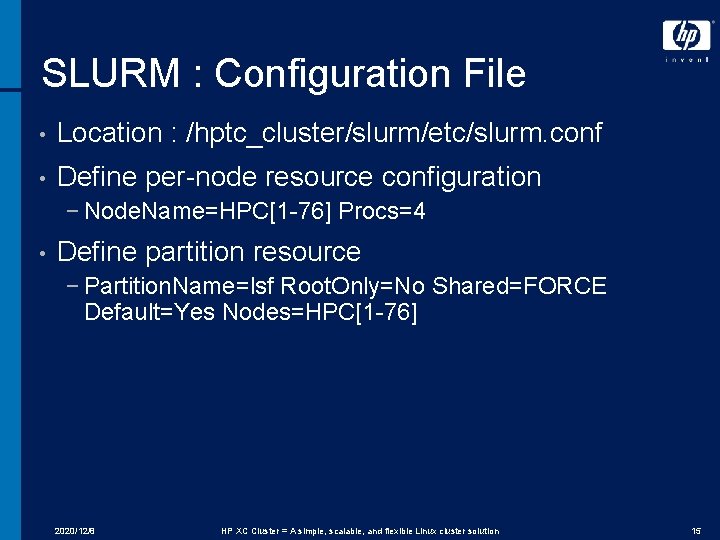

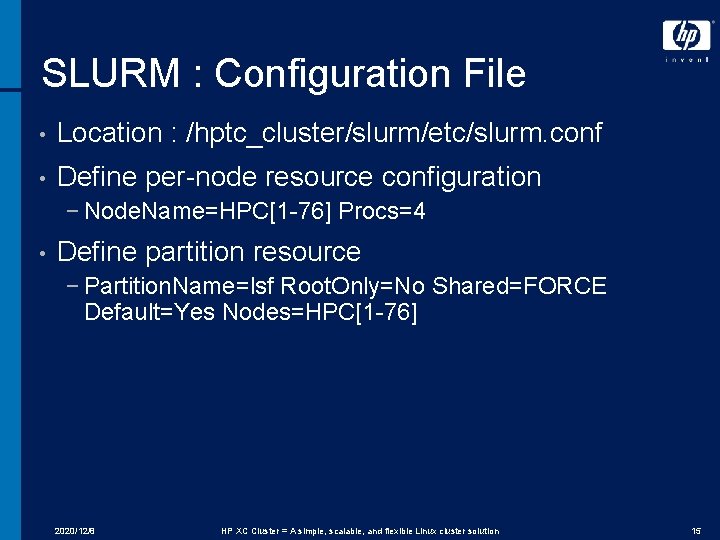

SLURM: Configuration File

- Location: /hptc_cluster/slurm/etc/slurm.conf
- Define per-node resource configuration
  - NodeName=HPC[1-76] Procs=4
- Define partition resource
  - PartitionName=lsf RootOnly=No Shared=FORCE Default=Yes Nodes=HPC[1-76]
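The node expression and the Procs value together determine the CPU total: 76 nodes with 4 processors each give 304, which agrees with the TotalCPUs=304 that scontrol reports for the lsf partition. The sketch below counts the hosts in a bracketed node expression; the function name count_nodes is hypothetical, and it only handles a single bracket group of the form used in these slides.

```shell
# count_nodes EXPR: count hosts in a node expression such as
# HPC[4-12,14-76] or HPC13. Hypothetical helper; handles one bracket
# group containing comma-separated indices or ranges.
count_nodes() {
    case "$1" in
        *\[*\]) ;;                 # bracketed list: handled below
        *) echo 1; return ;;       # a single host name
    esac
    ranges=${1#*\[}                # drop everything up to "["
    ranges=${ranges%\]}            # drop the trailing "]"
    echo "$ranges" | tr ',' '\n' | awk -F- '
        NF == 2 { total += $2 - $1 + 1 }   # a range such as 4-12
        NF == 1 { total += 1 }             # a single index such as 13
        END { print total + 0 }
    '
}
```

For example, `echo $(( $(count_nodes 'HPC[1-76]') * 4 ))` prints 304.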

LSF-HPC

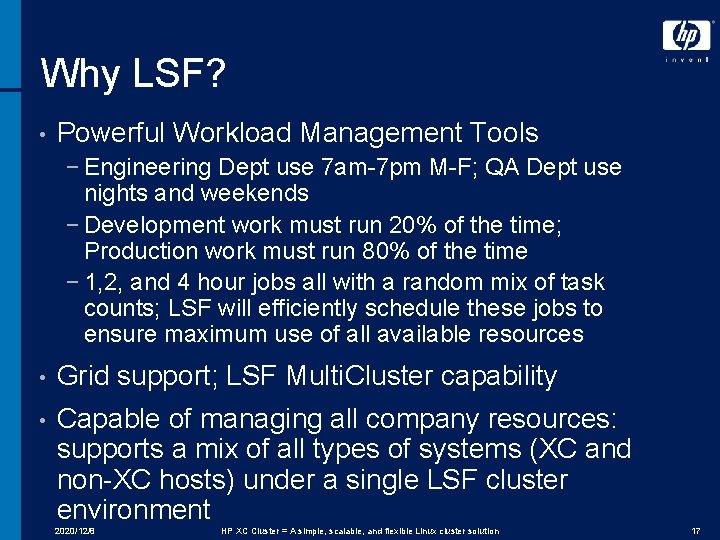

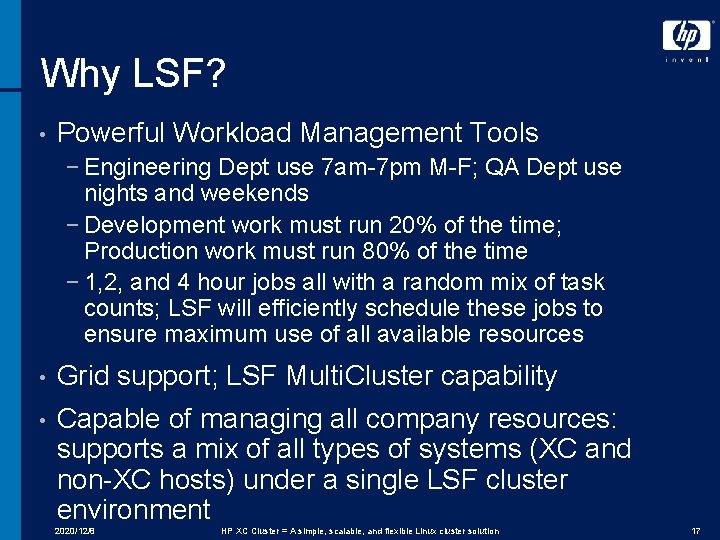

Why LSF?

- Powerful Workload Management Tools
  - Engineering Dept use 7 am-7 pm M-F; QA Dept use nights and weekends
  - Development work must run 20% of the time; Production work must run 80% of the time
  - 1, 2, and 4 hour jobs all with a random mix of task counts; LSF will efficiently schedule these jobs to ensure maximum use of all available resources
- Grid support; LSF MultiCluster capability
- Capable of managing all company resources: supports a mix of all types of systems (XC and non-XC hosts) under a single LSF cluster environment

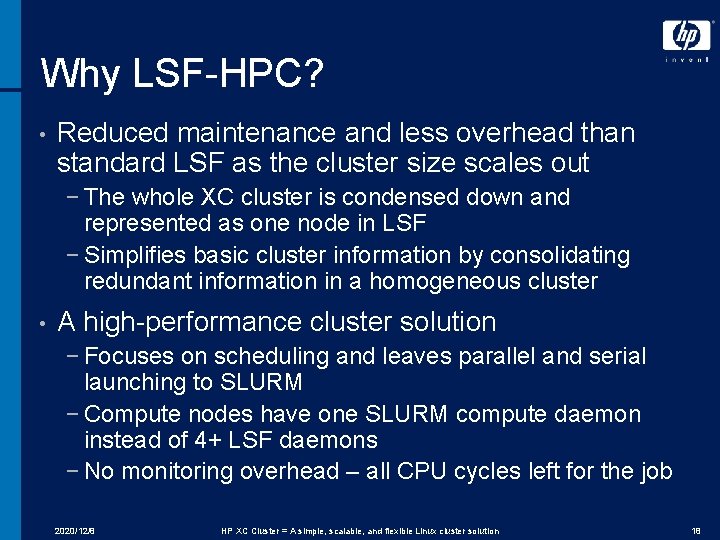

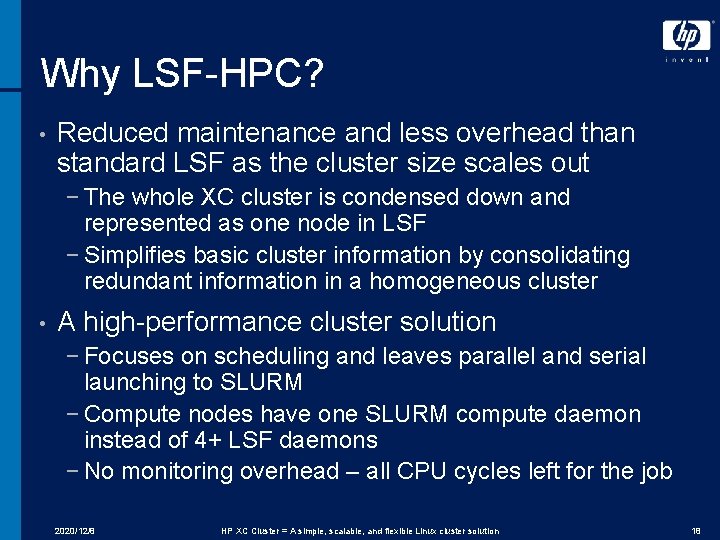

Why LSF-HPC?

- Reduced maintenance and less overhead than standard LSF as the cluster size scales out
  - The whole XC cluster is condensed down and represented as one node in LSF
  - Simplifies basic cluster information by consolidating redundant information in a homogeneous cluster
- A high-performance cluster solution
  - Focuses on scheduling and leaves parallel and serial launching to SLURM
  - Compute nodes have one SLURM compute daemon instead of 4+ LSF daemons
  - No monitoring overhead: all CPU cycles left for the job

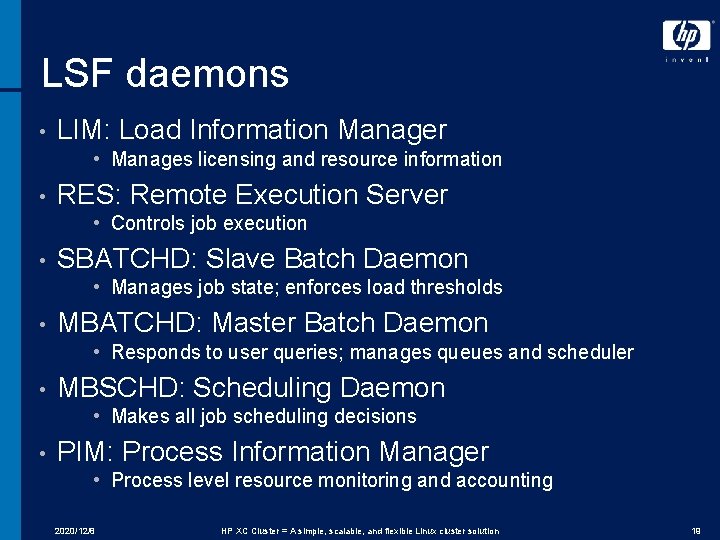

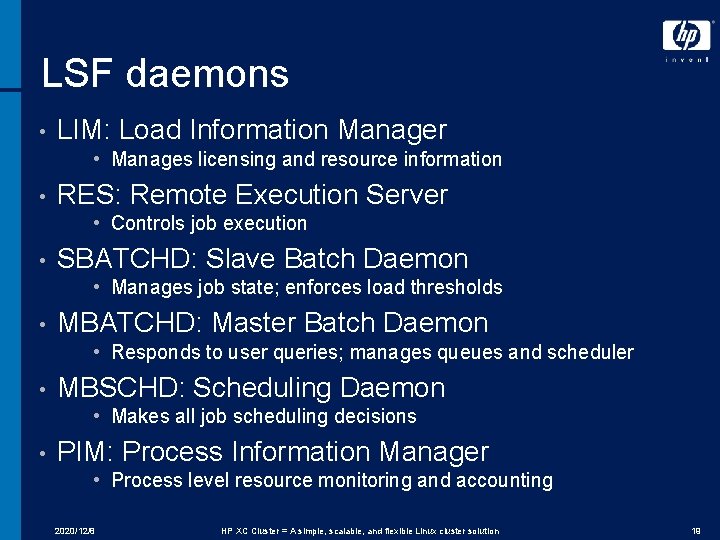

LSF daemons

- LIM: Load Information Manager
  - Manages licensing and resource information
- RES: Remote Execution Server
  - Controls job execution
- SBATCHD: Slave Batch Daemon
  - Manages job state; enforces load thresholds
- MBATCHD: Master Batch Daemon
  - Responds to user queries; manages queues and scheduler
- MBSCHD: Scheduling Daemon
  - Makes all job scheduling decisions
- PIM: Process Information Manager
  - Process level resource monitoring and accounting

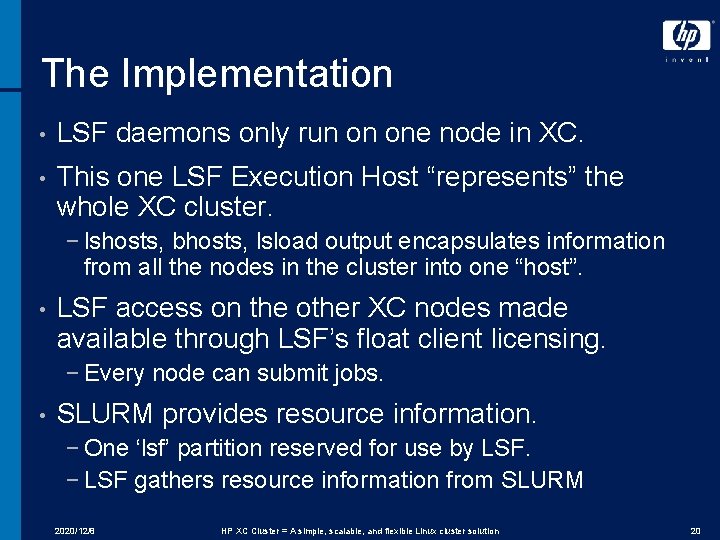

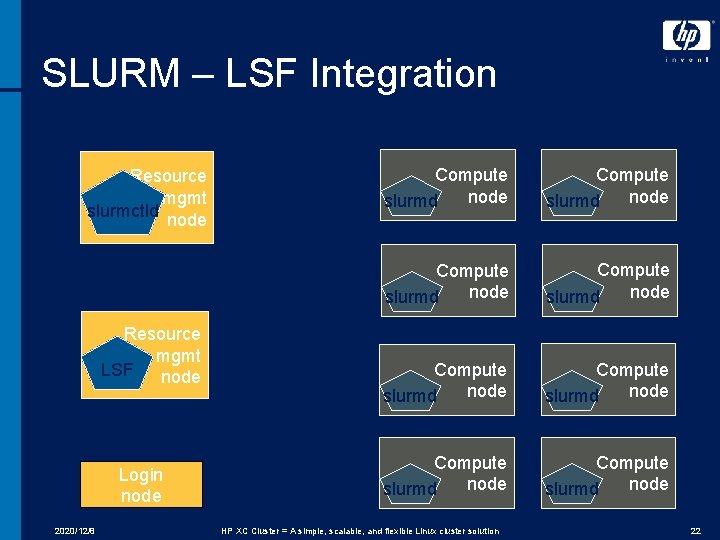

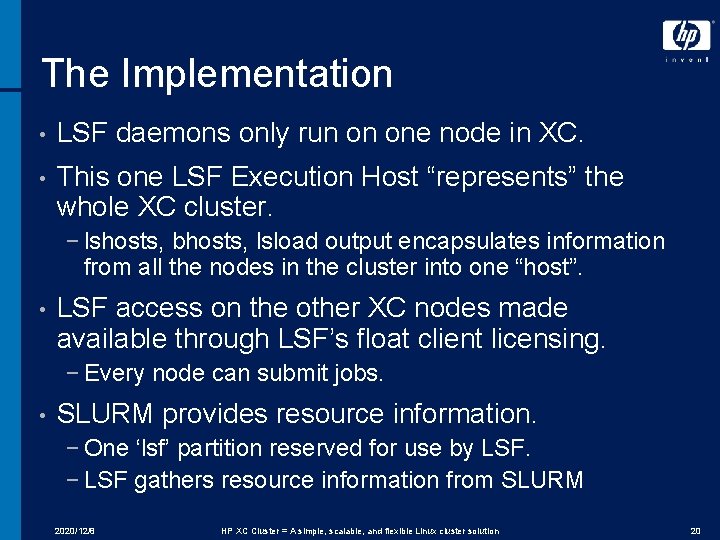

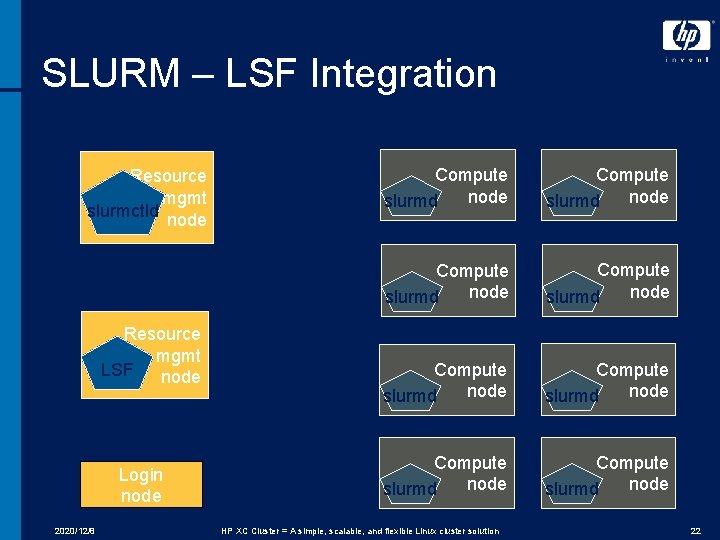

The Implementation

- LSF daemons only run on one node in XC.
- This one LSF Execution Host “represents” the whole XC cluster.
  - lshosts, bhosts, and lsload output encapsulates information from all the nodes in the cluster into one “host”.
- LSF access on the other XC nodes is made available through LSF’s float client licensing.
  - Every node can submit jobs.
- SLURM provides resource information.
  - One ‘lsf’ partition is reserved for use by LSF.
  - LSF gathers resource information from SLURM.

SLURM – LSF Integration

SLURM – LSF Integration (diagram)

- Resource mgmt node: slurmctld
- Resource mgmt node: LSF
- Login node
- Compute nodes: slurmd

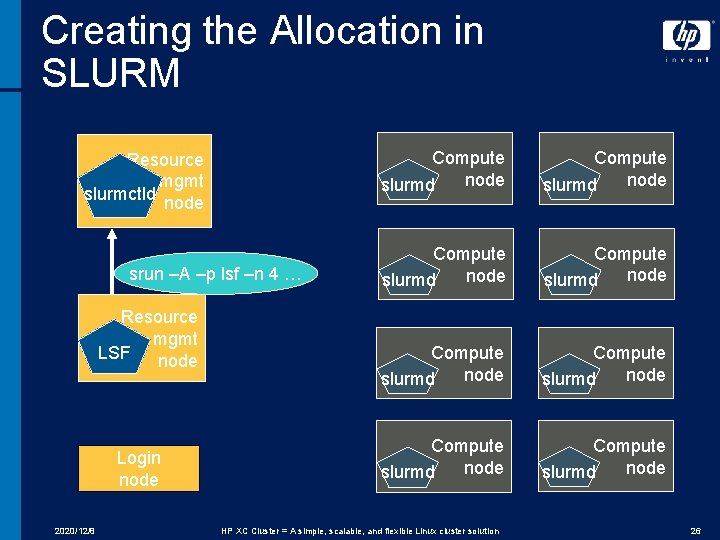

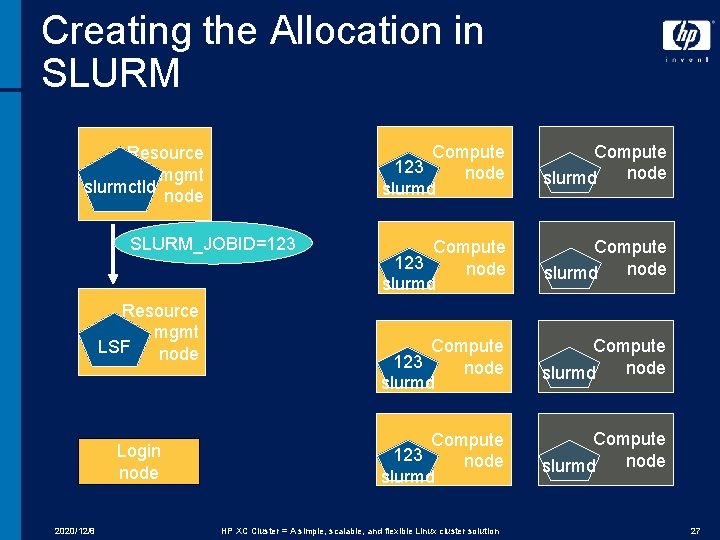

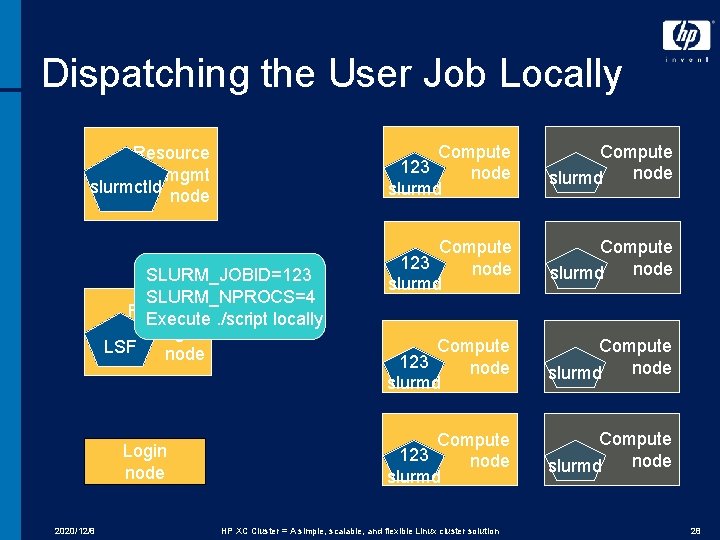

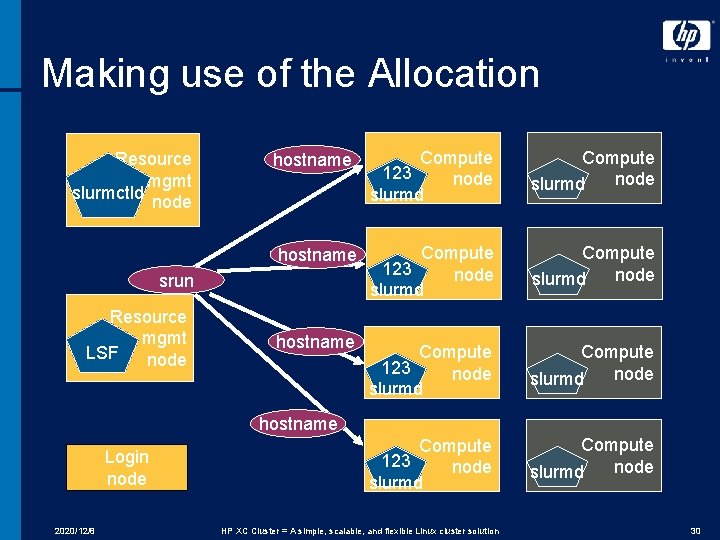

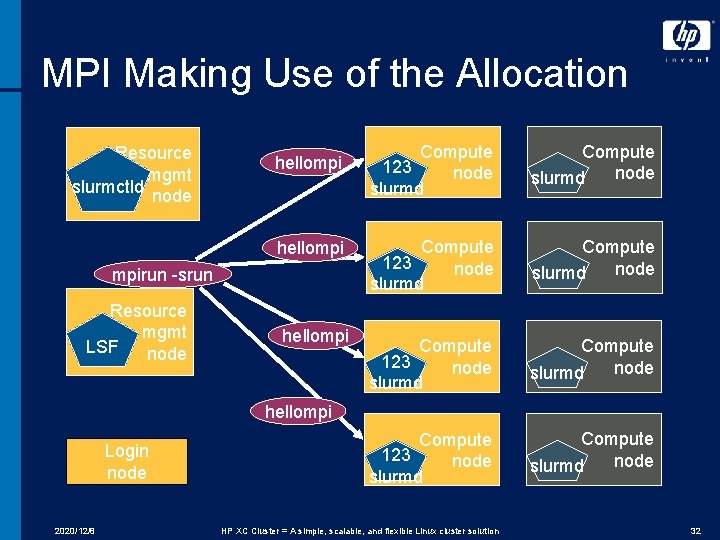

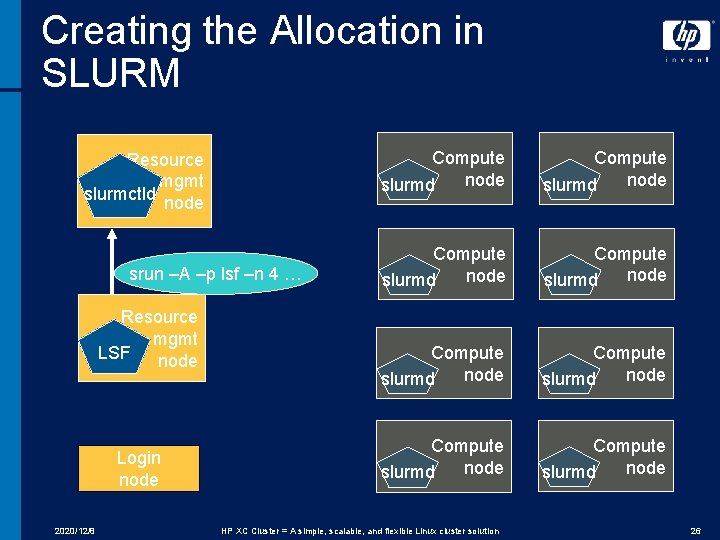

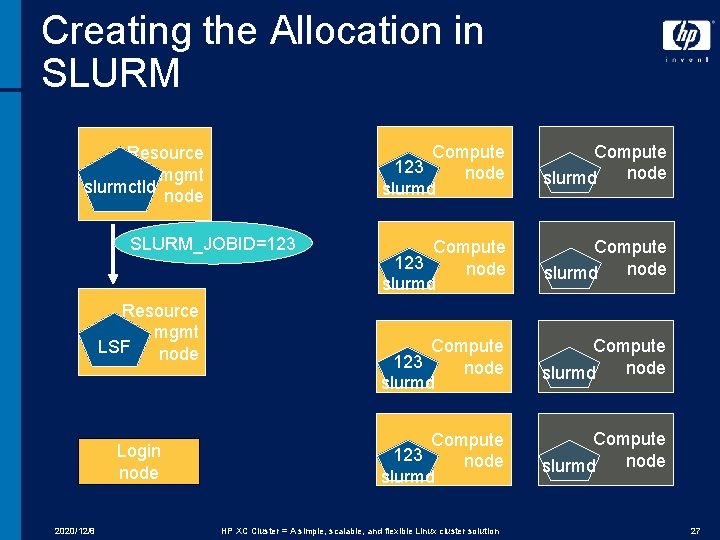

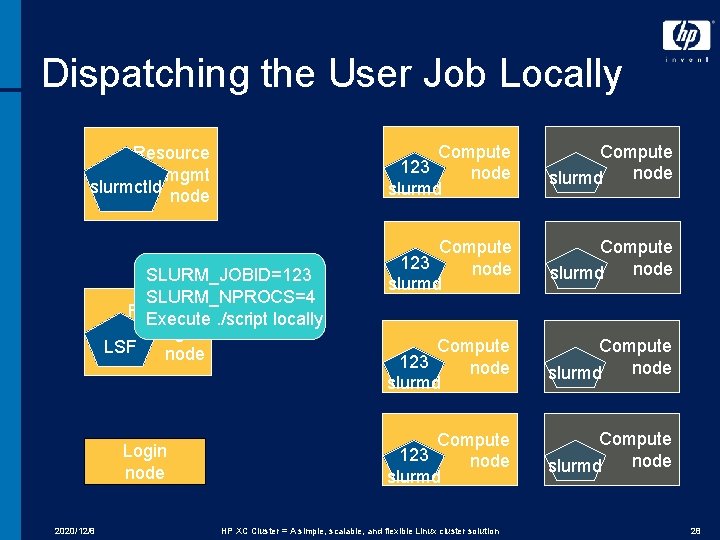

Launching a Job

- LSF creates resource allocations for each job via SLURM
  - Support for specific topology requests available through a SLURM external scheduler
- LSF then dispatches the job locally, and the job makes use of the allocation in several ways
  - ‘srun’ commands distribute the tasks
  - HP-MPI’s ‘mpirun -srun’ command for MPI jobs
  - Job can use its own launch mechanism
- LINDA applications (e.g., Gaussian) stick with ssh on XC

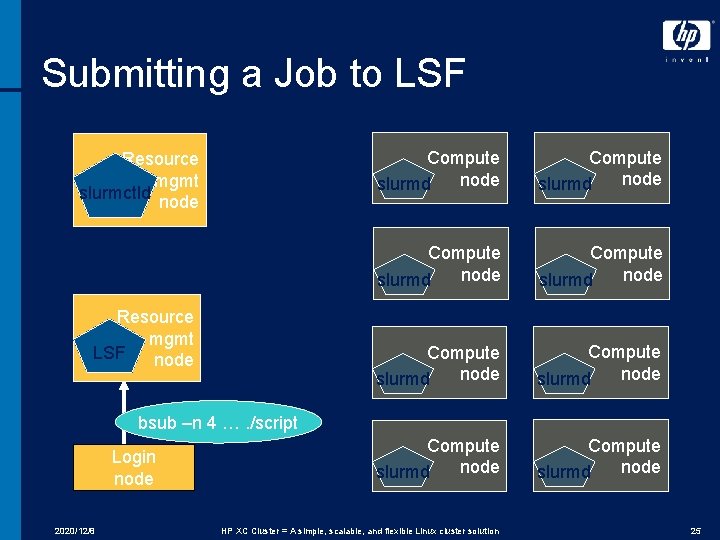

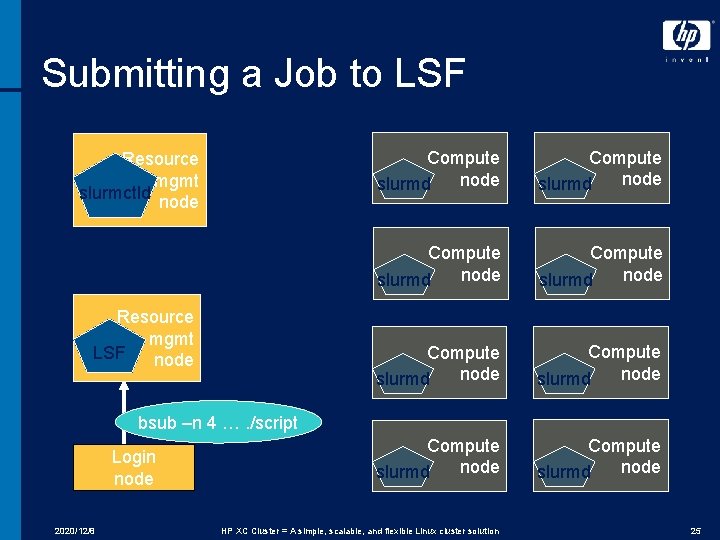

A Sample User Job

- Consider a user job ‘./script’ which looks like:

      #!/bin/sh
      hostname
      srun hostname
      mpirun -srun ./hellompi

- bsub -n 4 -ext "SLURM[nodes=4]" ./script
  - Should also include output redirection (‘-o job.out’)
- This job requests 4 processors (-n 4) across 4 nodes (nodes=4)
  - SLURM will run one task per node
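Putting the submission options together, including the output redirection the slide recommends, might look like the sketch below. The function name bsub_command is hypothetical. It only prints the command line rather than running bsub, so it can be checked on a machine without LSF, and the output file name job.out is the one the slide suggests.

```shell
# bsub_command NPROCS NODES SCRIPT: print (do not run) a bsub command line
# that requests NPROCS processors across NODES nodes and redirects the job
# output to job.out. Hypothetical helper built from the options on the slide.
bsub_command() {
    printf 'bsub -n %s -ext "SLURM[nodes=%s]" -o job.out %s\n' "$1" "$2" "$3"
}
```

Calling `bsub_command 4 4 ./script` prints the slide's submission line with ‘-o job.out’ added.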

Submitting a Job to LSF

Diagram annotation: ‘bsub -n 4 … ./script’.

Creating the Allocation in SLURM

Diagram annotation: ‘srun -A -p lsf -n 4 …’.

Creating the Allocation in SLURM

Diagram annotations: SLURM_JOBID=123; allocated compute nodes are marked 123.

Dispatching the User Job Locally

Diagram annotations: SLURM_JOBID=123 and SLURM_NPROCS=4; ‘Execute ./script locally’ at the LSF node; allocated compute nodes are marked 123.
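A job script can confirm that it is running inside an allocation by reading the two variables shown on this slide. This is a minimal sketch: only SLURM_JOBID and SLURM_NPROCS come from the slide, while the function name describe_allocation and the message wording are hypothetical.

```shell
# describe_allocation: report the SLURM allocation visible in the environment,
# or fail with a message on stderr if SLURM_JOBID is not set.
# Hypothetical helper; only the two variable names come from the slide.
describe_allocation() {
    if [ -z "${SLURM_JOBID:-}" ]; then
        echo "no SLURM allocation found" >&2
        return 1
    fi
    echo "SLURM job ${SLURM_JOBID} with ${SLURM_NPROCS:-unknown} processors"
}
```

Placed near the top of a job script, `describe_allocation || exit 1` stops the script early when it is started outside LSF.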

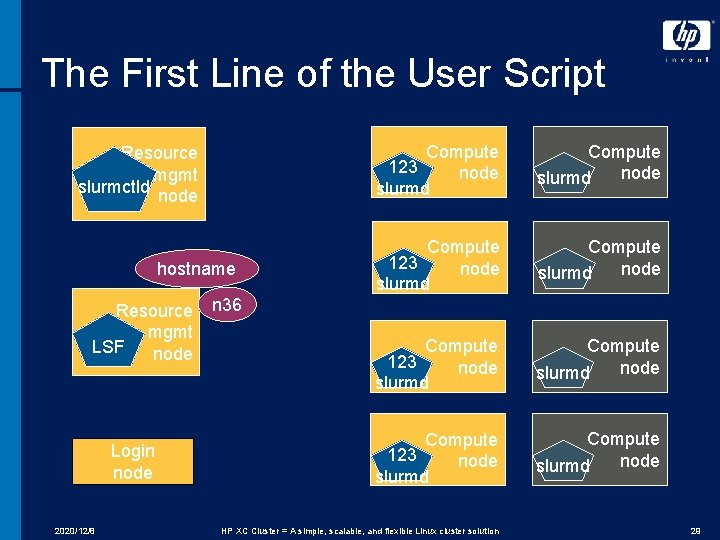

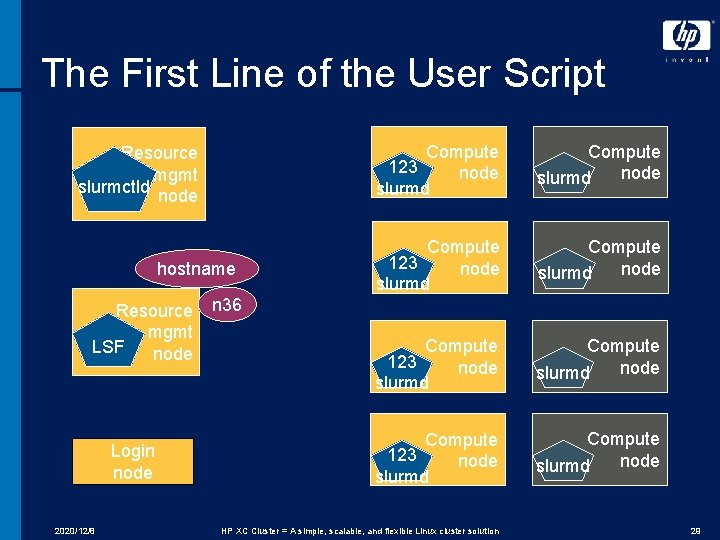

The First Line of the User Script

Diagram annotations: ‘hostname’; output n36.

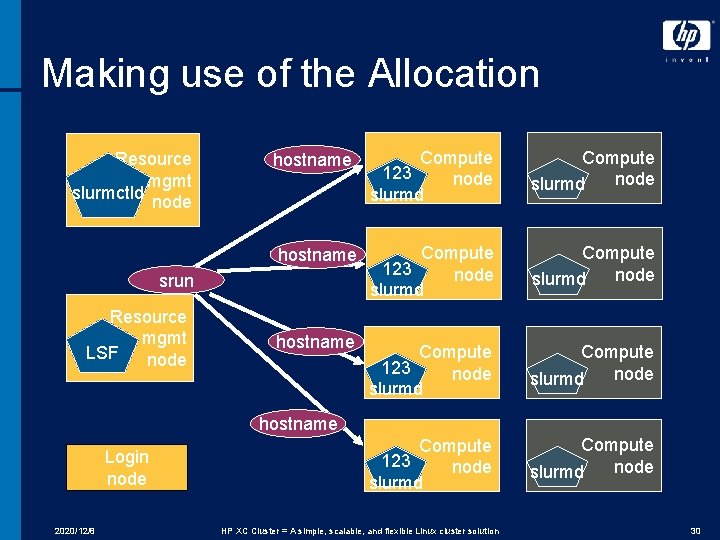

Making use of the Allocation

Diagram annotations: ‘srun’; ‘hostname’ on the allocated compute nodes (marked 123).

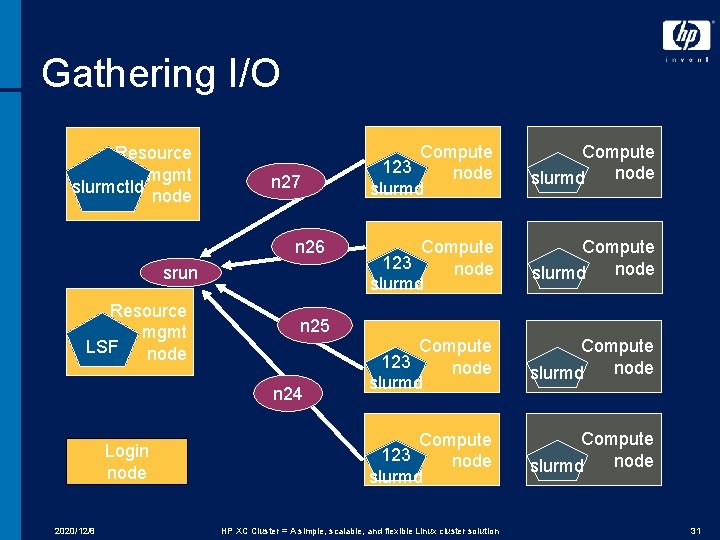

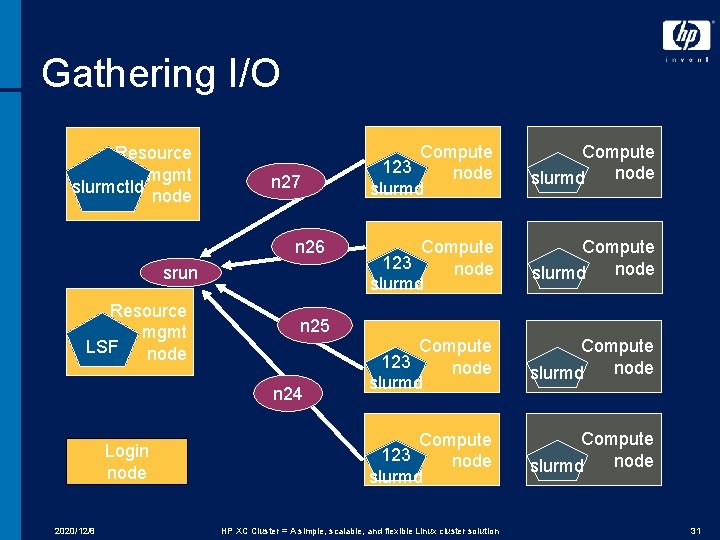

Gathering I/O

Diagram annotations: ‘srun’; output n27, n26, n25, n24 from the allocated compute nodes (marked 123).

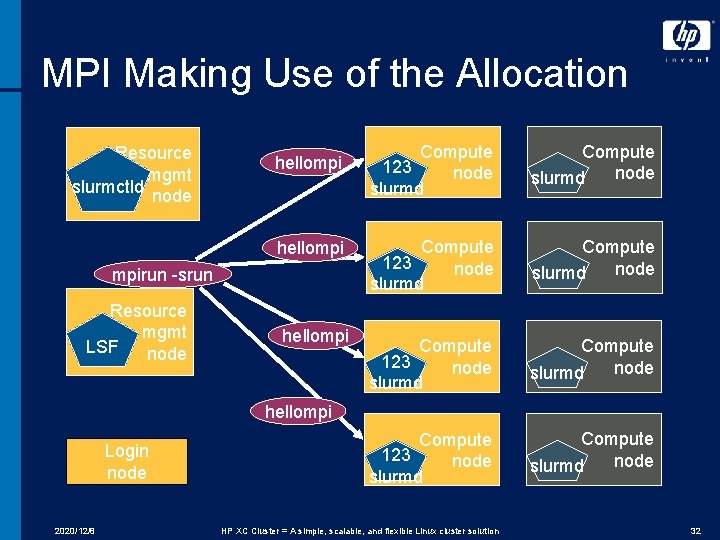

MPI Making Use of the Allocation

Diagram annotations: ‘mpirun -srun’; hellompi on the allocated compute nodes (marked 123).

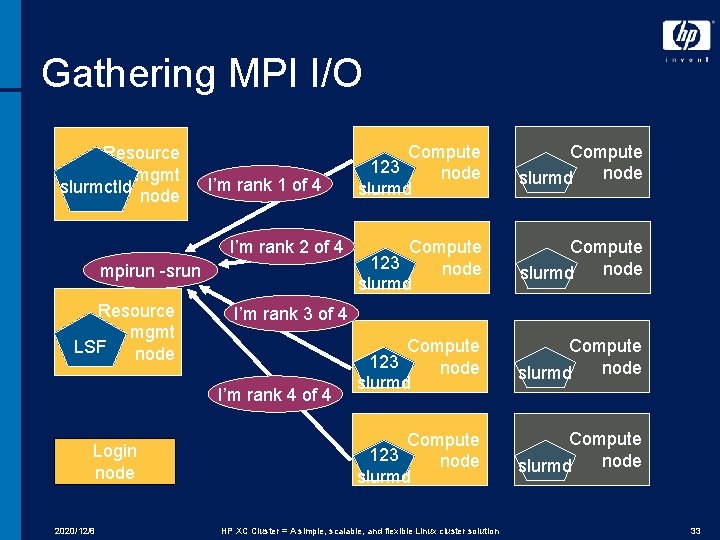

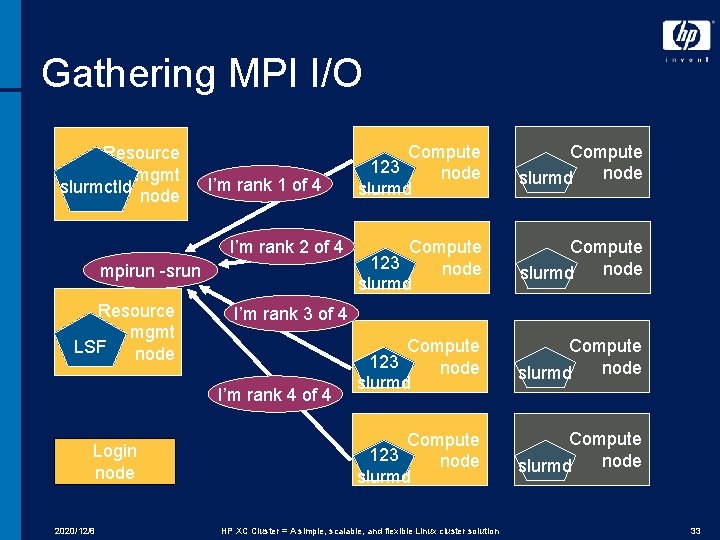

Gathering MPI I/O

Diagram annotations: ‘mpirun -srun’; output ‘I’m rank 1 of 4’, ‘I’m rank 2 of 4’, ‘I’m rank 3 of 4’, ‘I’m rank 4 of 4’ from the allocated compute nodes (marked 123).

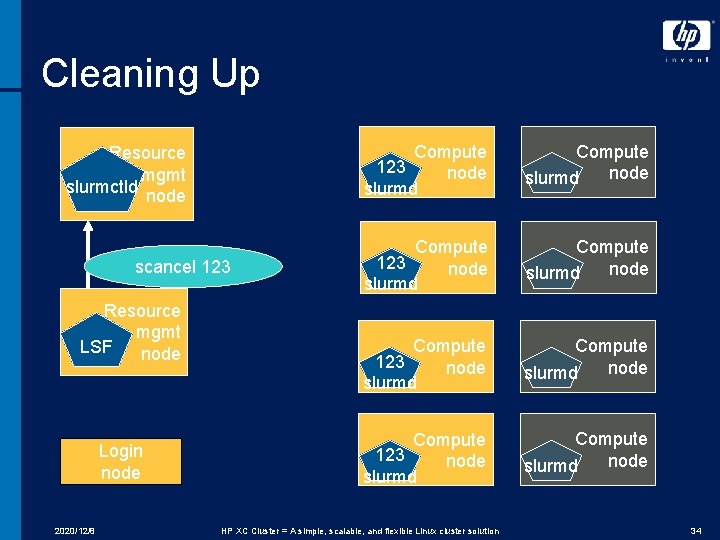

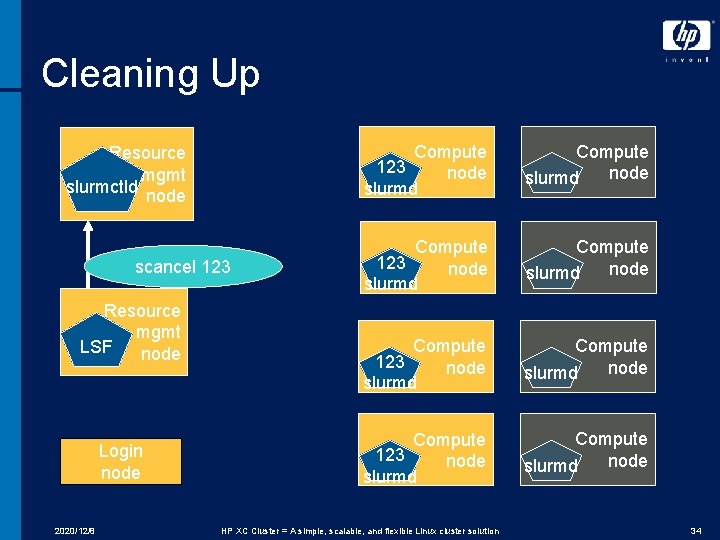

Cleaning Up

Diagram annotation: ‘scancel 123’.

Troubleshooting LSF on XC

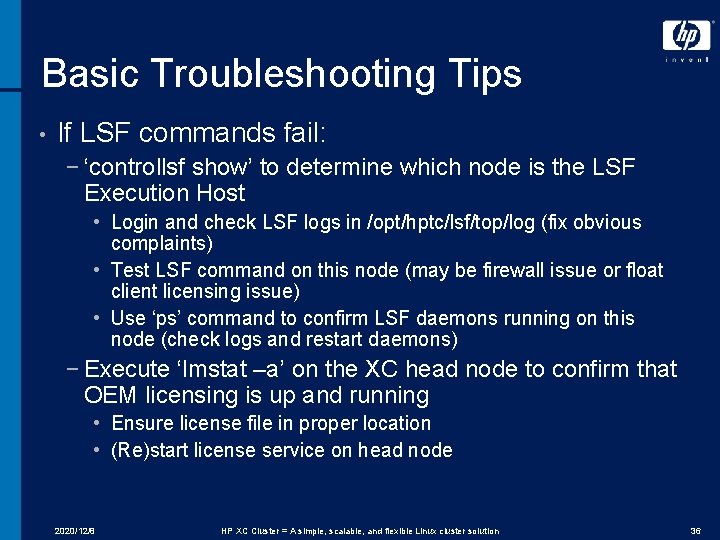

Basic Troubleshooting Tips

- If LSF commands fail:
  - ‘controllsf show’ to determine which node is the LSF Execution Host
    - Log in and check LSF logs in /opt/hptc/lsf/top/log (fix obvious complaints)
    - Test LSF command on this node (may be firewall issue or float client licensing issue)
    - Use ‘ps’ command to confirm LSF daemons running on this node (check logs and restart daemons)
  - Execute ‘lmstat -a’ on the XC head node to confirm that OEM licensing is up and running
    - Ensure license file in proper location
    - (Re)start license service on head node
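The ‘ps’ check can be scripted. The sketch below reads a list of process names and prints the LSF daemons that are missing. It rests on an assumption: that the daemons appear in the process list under the lower-case forms of the names given on the LSF daemons slide (lim, res, sbatchd, mbatchd, mbschd, pim). The function name missing_lsf_daemons is hypothetical.

```shell
# missing_lsf_daemons: read process names (one per line) on stdin and print
# each expected LSF daemon that is absent from the list.
# Assumption: daemons run under the lower-case names used below.
missing_lsf_daemons() {
    procs=$(cat)
    for d in lim res sbatchd mbatchd mbschd pim; do
        # -x matches the whole line, so "res" does not match "restorecond"
        printf '%s\n' "$procs" | grep -qx "$d" || echo "$d"
    done
}
```

On the LSF Execution Host this could be fed with `ps -e -o comm= | missing_lsf_daemons`; no output means every listed daemon was found.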

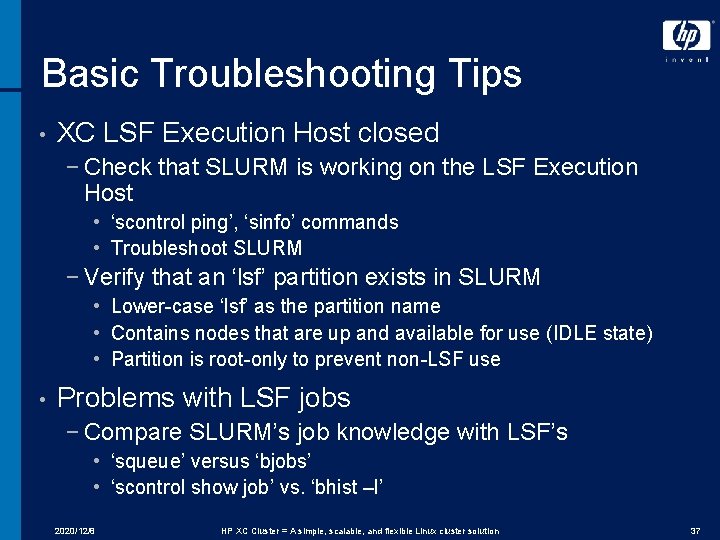

Basic Troubleshooting Tips

- XC LSF Execution Host closed
  - Check that SLURM is working on the LSF Execution Host
    - ‘scontrol ping’, ‘sinfo’ commands
    - Troubleshoot SLURM
  - Verify that an ‘lsf’ partition exists in SLURM
    - Lower-case ‘lsf’ as the partition name
    - Contains nodes that are up and available for use (IDLE state)
    - Partition is root-only to prevent non-LSF use
- Problems with LSF jobs
  - Compare SLURM’s job knowledge with LSF’s
    - ‘squeue’ versus ‘bjobs’
    - ‘scontrol show job’ vs. ‘bhist -l’
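The partition checks above can be automated against sinfo output. A minimal sketch, assuming the default six-column sinfo layout; the function name check_lsf_partition and its messages are hypothetical, and it does not test the root-only setting.

```shell
# check_lsf_partition: read default `sinfo` output on stdin. Succeeds only if
# a partition named exactly "lsf" (lower case) has at least one idle node.
# Hypothetical helper; assumes PARTITION, NODES, STATE are columns 1, 4, 5.
check_lsf_partition() {
    awk '
        NR == 1 { next }                              # skip the header row
        { name = $1; sub(/\*$/, "", name) }           # strip default marker
        name == "lsf" { found = 1 }
        name == "lsf" && $5 == "idle" { idle += $4 }
        END {
            if (!found)    { print "no lsf partition"; exit 1 }
            if (idle == 0) { print "lsf partition has no idle nodes"; exit 2 }
            print "lsf partition ok: " idle " idle nodes"
        }
    '
}
```

Run as `sinfo | check_lsf_partition`; a partition named LSF in upper case is reported as missing, matching the lower-case requirement.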
