HPC Solutions to BESIII Data Processing Ran Du

Ran Du (duran@ihep.ac.cn), on behalf of the Scheduling Group, Computing Center, IHEP
2019-Jul-16
2021/12/19 HPC Solutions to BESIII Data Processing 1

Outline
❖ Slurm Clusters
❖ GPU Jobs from BESIII
❖ Support Systems
❖ Research & Development
❖ Conclusion & Next Step

Slurm Clusters – GPU Cluster 1/2
❖ Slurm clusters are built to run GPU and parallel jobs.
❖ Slurm GPU Cluster: in production since Apr. 2019
  ▪ SL 7.5 + Slurm 18.08
  ▪ Resources
    ◦ 1 control node
    ◦ 2 SL 7 login nodes
    ◦ 10 worker nodes: 80 NVIDIA V100 GPU cards
    ◦ 2 more worker nodes to be added: 14 GPU cards
  ▪ Dedicated Lustre mount point: /hpcfs
  ▪ Groups supported
    ◦ Group gpupwa: Partial Wave Analysis
    ◦ Group mlgpu: Machine Learning

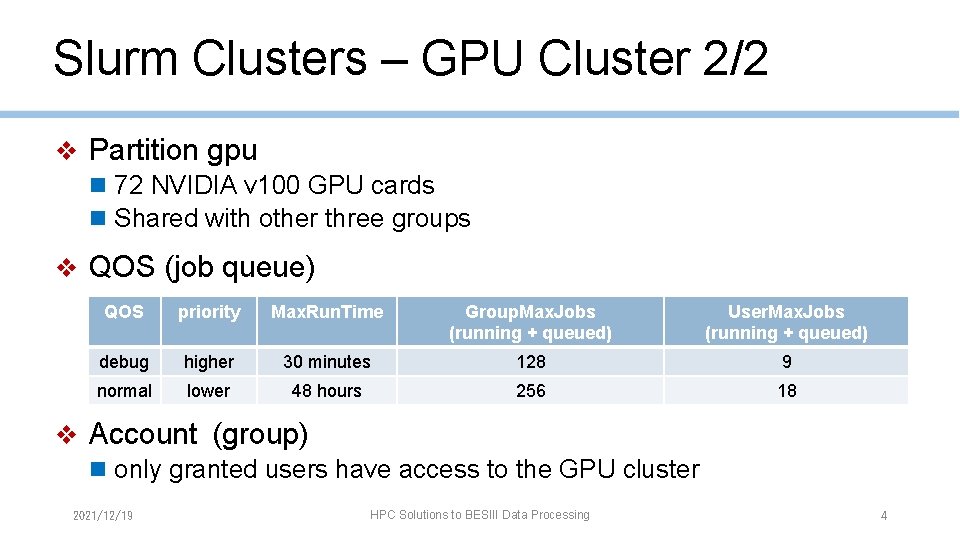

Slurm Clusters – GPU Cluster 2/2
❖ Partition gpu
  ▪ 72 NVIDIA V100 GPU cards
  ▪ Shared with three other groups
❖ QOS (job queue)

QOS      Priority   Max. run time   Group max. jobs   User max. jobs
debug    higher     30 minutes      128               9
normal   lower      48 hours        256               18
(Group and user maximums count running + queued jobs.)

❖ Account (group)
  ▪ Only granted users have access to the GPU cluster
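Each QOS combines a per-job time limit with two caps on running + queued jobs. The following minimal Python sketch shows how a new submission is checked against those limits. It assumes that a job counts against both its group's cap and its user's cap within the same QOS. The names `QosLimits` and `check_submission` are hypothetical, and the sketch does not describe how Slurm enforces QOS internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QosLimits:
    max_run_minutes: int  # longest time limit a job may request
    group_max_jobs: int   # per group, running + queued
    user_max_jobs: int    # per user, running + queued


# Values taken from the QOS table of the GPU cluster.
QOS = {
    "debug": QosLimits(max_run_minutes=30, group_max_jobs=128, user_max_jobs=9),
    "normal": QosLimits(max_run_minutes=48 * 60, group_max_jobs=256, user_max_jobs=18),
}


def check_submission(qos, time_limit_minutes, group_jobs, user_jobs):
    """Return the reasons a new job would be rejected; an empty list means it fits.

    group_jobs and user_jobs are the jobs the group and the user already
    have running or queued in this QOS (an assumption of this sketch).
    """
    limits = QOS[qos]
    problems = []
    if time_limit_minutes > limits.max_run_minutes:
        problems.append(
            f"time limit {time_limit_minutes} min > {limits.max_run_minutes} min"
        )
    if group_jobs + 1 > limits.group_max_jobs:
        problems.append(f"group would exceed {limits.group_max_jobs} jobs")
    if user_jobs + 1 > limits.user_max_jobs:
        problems.append(f"user would exceed {limits.user_max_jobs} jobs")
    return problems


print(check_submission("debug", 60, group_jobs=10, user_jobs=9))
print(check_submission("normal", 60, group_jobs=10, user_jobs=9))
```

Suppose a user already has 9 jobs in debug and requests 60 minutes. That request fails in debug on both the time limit and the per-user cap. The same request fits in normal.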

Slurm Clusters – CPU Cluster
❖ Slurm CPU Cluster: in production since Feb. 2017
  ▪ SL 6.9 + Slurm 16.05
  ▪ Resources
    ◦ 1 control node
    ◦ 14 SL 6 login nodes
    ◦ 158 worker nodes: ~4,000 CPU cores
  ▪ Update during the summer maintenance
    ◦ Upgrade to SL 7.5 + Slurm 18.08
    ◦ Integrate with the Slurm GPU Cluster

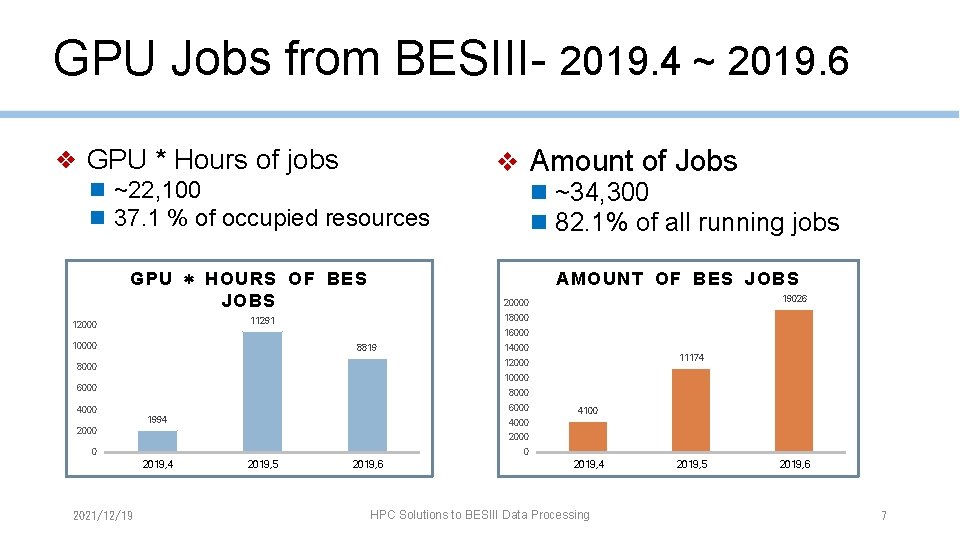

GPU Jobs from BESIII – 2019.4 ~ 2019.6
❖ GPU*hours of jobs
  ▪ ~22,100
  ▪ 37.1% of occupied resources
❖ Amount of jobs
  ▪ ~34,300
  ▪ 82.1% of all running jobs
[Figure: bar charts "Amount of BES jobs" and "GPU*hours of BES jobs" per month, 2019.4, 2019.5, 2019.6]
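The two percentages are enough to back out rough cluster-wide totals. This worked sketch in Python assumes that both shares refer to all jobs on the GPU cluster over the same three months. The inputs are rounded, so treat the results as estimates rather than measurements.

```python
# Figures quoted on the slide for April to June 2019.
bes_gpu_hours = 22_100   # GPU*hours used by BESIII jobs
bes_gpu_share = 0.371    # share of occupied GPU resources
bes_jobs = 34_300        # number of BESIII jobs
bes_job_share = 0.821    # share of all running jobs

# Implied cluster-wide totals (derived estimates, not measurements).
total_gpu_hours = bes_gpu_hours / bes_gpu_share  # about 59,570 GPU*hours
total_jobs = bes_jobs / bes_job_share            # about 41,780 jobs

# Average GPU*hours per job for BESIII and for all other groups.
bes_avg = bes_gpu_hours / bes_jobs
other_avg = (total_gpu_hours - bes_gpu_hours) / (total_jobs - bes_jobs)

print(f"BESIII: {bes_avg:.2f} GPU*hours/job, others: {other_avg:.2f} GPU*hours/job")
```

BESIII jobs average roughly 0.64 GPU*hours each. Jobs from the other groups average roughly 5. This matches the many-short-jobs pattern reported for group gpupwa.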

GPU Jobs from BESIII – Group gpupwa
❖ Problem: HTC (High Throughput Computing) workload characteristics
  ▪ Large number of submitted jobs: ~10,000 / month
  ▪ Short run time: ~1 hour
  ▪ Low degree of parallelism: single-core, single-GPU
❖ Possible solutions
  ▪ HTC workload optimization:
    ◦ 500 jobs / sec
    ◦ To be tested on the Slurm testbed
  ▪ Job array
    ◦ Tested and available
    ◦ User Manual to be updated later
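A job array lets one `sbatch` submission stand in for many near-identical single-GPU jobs, which suits the short gpupwa workload. The minimal Python sketch below writes such a script. The default partition and QOS come from the slides, and the default account assumes the group name is also the Slurm account name. The `./run_pwa.sh` command, the one-hour limit and the `job_array_script` helper are hypothetical. The `--array` option with a `%N` throttle, the `SLURM_ARRAY_TASK_ID` variable and the `%A`/`%a` output patterns are standard Slurm features.

```python
def job_array_script(n_tasks, command, max_parallel=None, time_limit="01:00:00",
                     partition="gpu", qos="normal", account="gpupwa"):
    """Build an sbatch script that runs `command` once per array task.

    The defaults for partition, QOS and account are assumptions based on the slides.
    """
    array_spec = f"0-{n_tasks - 1}"
    if max_parallel is not None:
        # "%N" suffix: at most N array tasks run at the same time.
        array_spec += f"%{max_parallel}"
    lines = [
        "#!/bin/bash",
        f"#SBATCH --partition={partition}",
        f"#SBATCH --qos={qos}",
        f"#SBATCH --account={account}",
        "#SBATCH --ntasks=1",
        "#SBATCH --cpus-per-task=1",
        "#SBATCH --gres=gpu:1",
        f"#SBATCH --time={time_limit}",
        f"#SBATCH --array={array_spec}",
        # %A is the array's master job ID, %a the task index.
        "#SBATCH --output=job_%A_%a.out",
        "",
        # Each task receives its own index, e.g. to pick an input file.
        f"{command} ${{SLURM_ARRAY_TASK_ID}}",
    ]
    return "\n".join(lines) + "\n"


print(job_array_script(100, "./run_pwa.sh", max_parallel=10))
```

Submitting the resulting file with `sbatch` queues 100 tasks, with at most 10 running at once. The slides do not say whether each array task counts against the running + queued caps in the QOS table. Check this before submitting large arrays.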

GPU Jobs from BESIII – Group mlgpu
❖ Problem: irregular processes on worker nodes
  ▪ User computing processes appear as children of systemd (PID 1)
  ▪ Processes cannot be killed after jobs finish, making the system unstable
❖ Solutions
  ▪ OS: cgroups enabled on SL 7.5
    ◦ Kernel-provided functions to manage and regulate resource usage
  ▪ Slurm: two cgroup plugins enabled
    ◦ task/cgroup
    ◦ proctrack/cgroup
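The two plugins correspond to the `ProctrackType` and `TaskPlugin` parameters in slurm.conf. What the cgroups actually constrain is then set in cgroup.conf, for example with `ConstrainCores`, `ConstrainRAMSpace` and `ConstrainDevices`. Below is a small Python sketch that audits slurm.conf text for both settings. The parser is deliberately simplified: it only handles one `Key=Value` pair per line and ignores `Include` directives. The function names are hypothetical.

```python
def parse_slurm_conf(text):
    """Collect simple Key=Value lines from slurm.conf text.

    Comments and blank lines are skipped; keys are lower-cased because
    slurm.conf parameter names are case-insensitive.
    """
    settings = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip().lower()] = value.strip()
    return settings


def check_cgroup_plugins(settings):
    """Return the missing cgroup settings; an empty list means both plugins are on."""
    problems = []
    if settings.get("proctracktype") != "proctrack/cgroup":
        problems.append("ProctrackType should be proctrack/cgroup")
    task_plugins = [p.strip() for p in settings.get("taskplugin", "").split(",")]
    if "task/cgroup" not in task_plugins:
        problems.append("TaskPlugin should include task/cgroup")
    return problems


EXAMPLE_CONF = """
# Process tracking and task containment through Linux cgroups
ProctrackType=proctrack/cgroup
TaskPlugin=task/affinity,task/cgroup
"""

print(check_cgroup_plugins(parse_slurm_conf(EXAMPLE_CONF)))
```

The sketch accepts `TaskPlugin` as a comma-separated list because it is common to stack `task/affinity` with `task/cgroup`.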

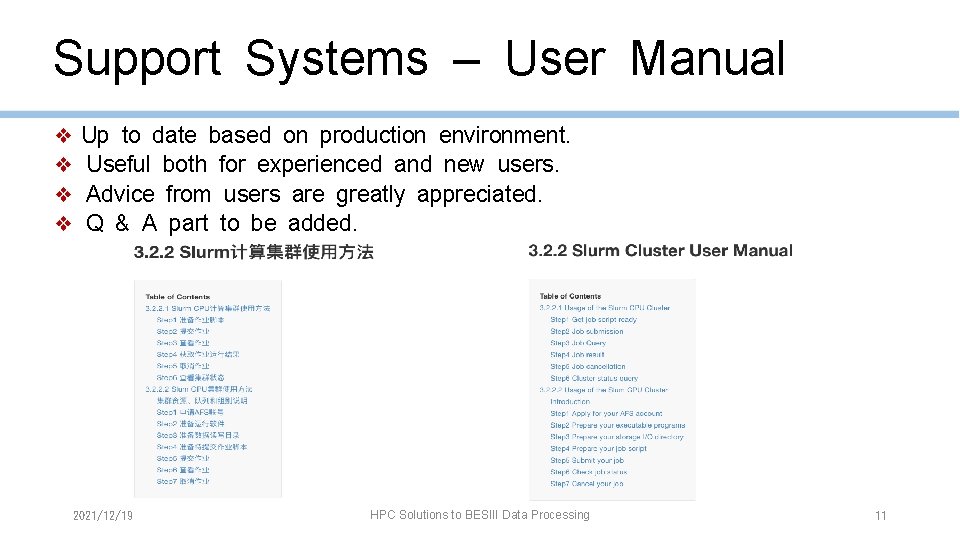

Support Systems – User Manual
❖ Kept up to date with the production environment.
❖ Useful for both experienced and new users.
❖ Advice from users is greatly appreciated.
❖ A Q & A section is to be added.

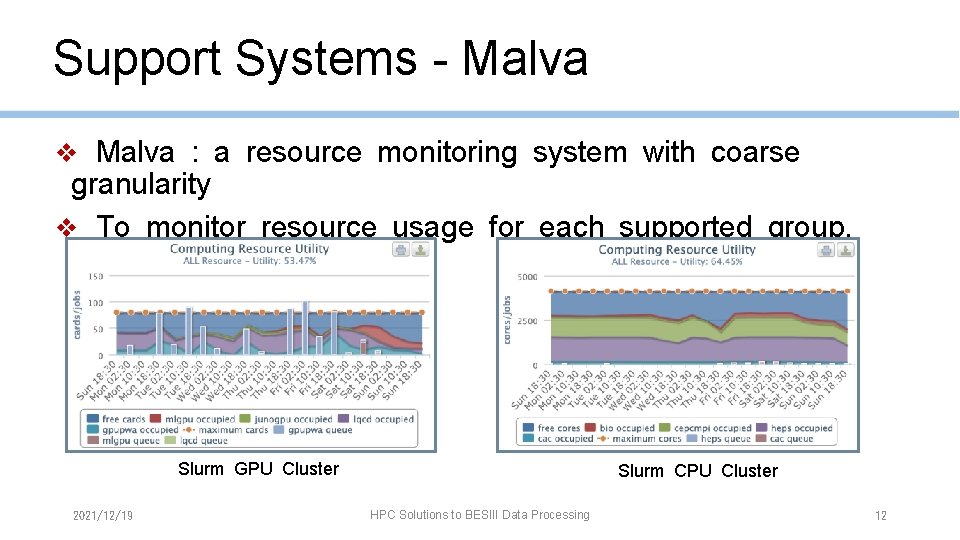

Support Systems – Malva
❖ Malva: a coarse-grained resource monitoring system
❖ Monitors resource usage for each supported group.
[Screenshots: Slurm GPU Cluster, Slurm CPU Cluster]

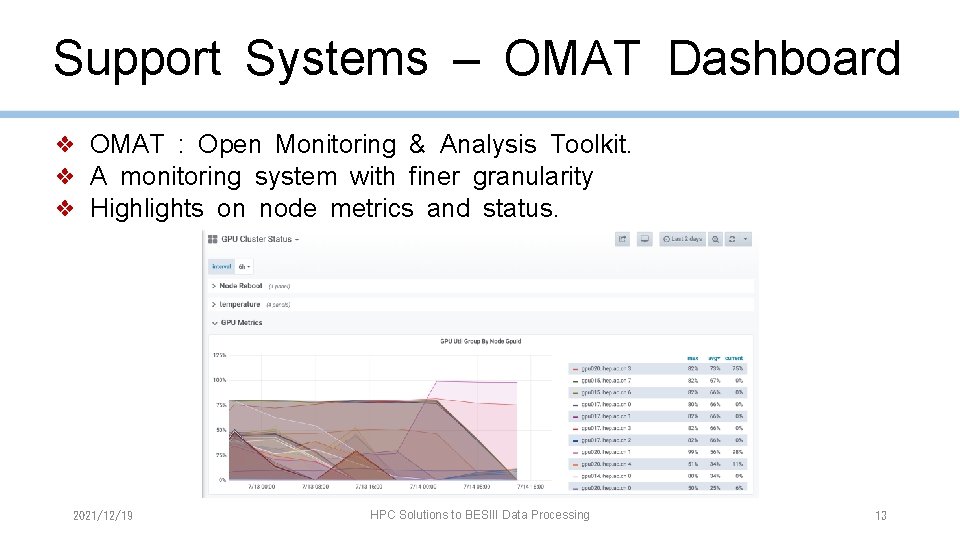

Support Systems – OMAT Dashboard
❖ OMAT: Open Monitoring & Analysis Toolkit
❖ A finer-grained monitoring system
❖ Highlights node metrics and status.

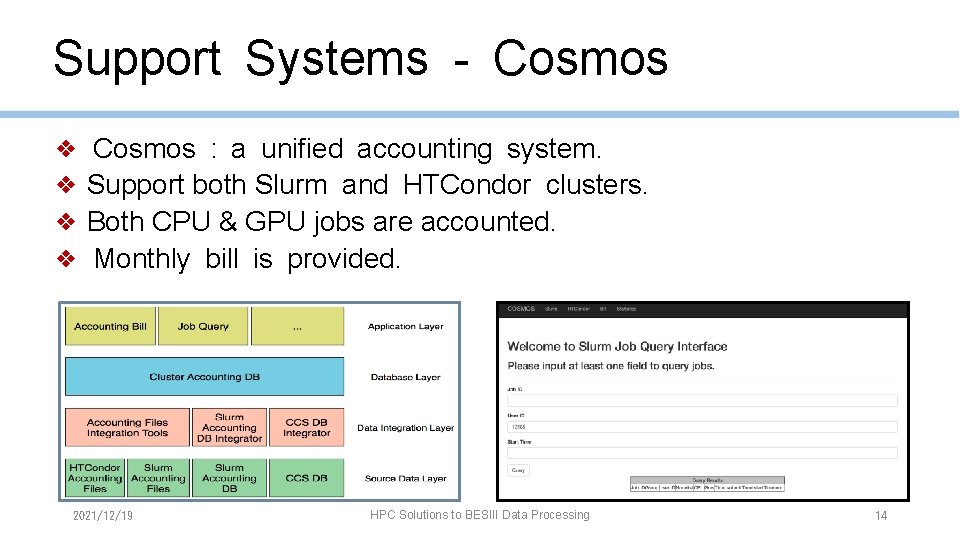

Support Systems – Cosmos
❖ Cosmos: a unified accounting system
❖ Supports both Slurm and HTCondor clusters.
❖ Both CPU and GPU jobs are accounted for.
❖ A monthly bill is provided.
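The slides do not describe Cosmos's record format or billing rules. Here is a hypothetical Python sketch of unified accounting. It assumes that each finished job, whether from Slurm or HTCondor, is reduced to a common record with group, end time, elapsed hours and CPU/GPU counts. The `JobRecord` fields, the `monthly_bill` function and the sample jobs are all illustrative inventions, not Cosmos data.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass
class JobRecord:
    group: str
    scheduler: str        # "slurm" or "htcondor"; both feed the same bill
    end_time: datetime
    elapsed_hours: float
    cpus: int
    gpus: int


def monthly_bill(records):
    """Sum CPU*hours and GPU*hours per (group, "YYYY-MM").

    Simplification: a job is charged to the month in which it ended.
    """
    bill = defaultdict(lambda: {"cpu_hours": 0.0, "gpu_hours": 0.0})
    for r in records:
        key = (r.group, r.end_time.strftime("%Y-%m"))
        bill[key]["cpu_hours"] += r.cpus * r.elapsed_hours
        bill[key]["gpu_hours"] += r.gpus * r.elapsed_hours
    return dict(bill)


# Hypothetical sample records from both schedulers.
jobs = [
    JobRecord("gpupwa", "slurm", datetime(2019, 5, 3), 1.0, 1, 1),
    JobRecord("gpupwa", "slurm", datetime(2019, 5, 20), 2.0, 1, 1),
    JobRecord("mlgpu", "slurm", datetime(2019, 5, 10), 3.0, 4, 2),
    JobRecord("gpupwa", "htcondor", datetime(2019, 6, 1), 0.5, 2, 0),
]
for (group, month), usage in sorted(monthly_bill(jobs).items()):
    print(group, month, usage)
```

A real bill would also have to split jobs that span a month boundary. This sketch leaves that out.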

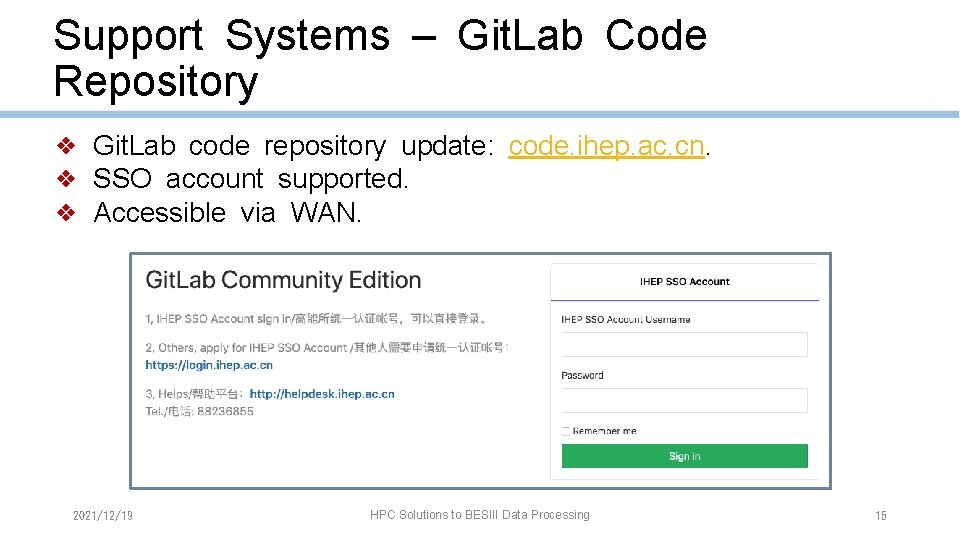

Support Systems – GitLab Code Repository
❖ GitLab code repository updated: code.ihep.ac.cn
❖ SSO accounts supported.
❖ Accessible via WAN.

Research & Development – Slurm Testbed
❖ Motivation
  ▪ To test configurations for the production system.
  ▪ To provide a test environment for research projects.
❖ Hardware resources
  ▪ 1 control node
  ▪ 5 worker nodes
    ◦ 2 MIC nodes
    ◦ 1 GPU node
    ◦ 2 CPU nodes
❖ Workload code templates
  ▪ CVMFS: MPI, CUDA, TensorFlow, PyCUDA…

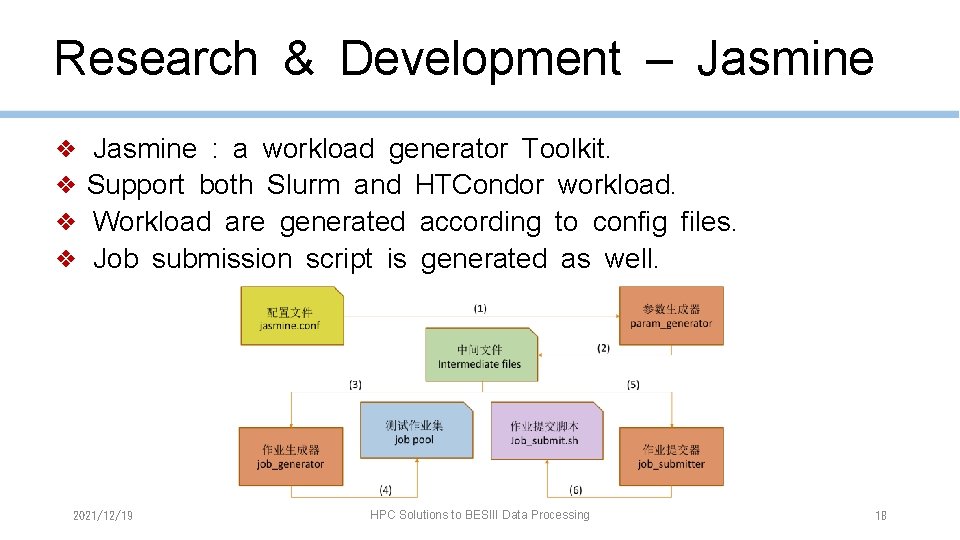

Research & Development – Jasmine
❖ Jasmine: a workload generator toolkit
❖ Supports both Slurm and HTCondor workloads.
❖ Workloads are generated according to config files.
❖ Job submission scripts are generated as well.
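The slide does not show Jasmine's config format. To illustrate the idea, here is a Python sketch in which one hypothetical workload description is rendered both as a Slurm batch script and as an HTCondor submit description. The description is a dict standing in for a parsed config file. Its keys and the function names are assumptions, not Jasmine's interface. The `#SBATCH` options, `request_cpus`, `request_gpus`, `$(Process)` and `queue` are standard Slurm and HTCondor syntax.

```python
def to_slurm(cfg):
    """Render an sbatch script from a workload description."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={cfg['name']}",
        "#SBATCH --ntasks=1",
        f"#SBATCH --cpus-per-task={cfg['cpus']}",
        f"#SBATCH --time={cfg['minutes']}",  # a bare integer means minutes
    ]
    if cfg.get("gpus"):
        lines.append(f"#SBATCH --gres=gpu:{cfg['gpus']}")
    if cfg["count"] > 1:
        lines.append(f"#SBATCH --array=0-{cfg['count'] - 1}")
    # Task index: the array index, or 0 for a single job.
    lines.append(f"{cfg['executable']} {cfg['arguments']} ${{SLURM_ARRAY_TASK_ID:-0}}")
    return "\n".join(lines) + "\n"


def to_htcondor(cfg):
    """Render an HTCondor submit description from the same workload description."""
    lines = [
        "universe     = vanilla",
        f"executable   = {cfg['executable']}",
        # $(Process) runs from 0 to count-1, like the Slurm array index.
        f"arguments    = {cfg['arguments']} $(Process)",
        f"request_cpus = {cfg['cpus']}",
    ]
    if cfg.get("gpus"):
        lines.append(f"request_gpus = {cfg['gpus']}")
    lines += [
        f"output       = {cfg['name']}_$(Process).out",
        f"error        = {cfg['name']}_$(Process).err",
        f"log          = {cfg['name']}.log",
        f"queue {cfg['count']}",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical workload description, e.g. loaded from a config file.
workload = {
    "name": "pwa_fit",
    "executable": "./fit.sh",
    "arguments": "--config fit.yaml",
    "cpus": 1,
    "gpus": 1,
    "minutes": 60,
    "count": 10,
}
print(to_slurm(workload))
print(to_htcondor(workload))
```

Here the time limit is emitted only for Slurm. The sketch does not map it to an HTCondor equivalent.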

Research & Development – Tomato 1/2
❖ Tomato: migrating HTCondor jobs to the Slurm cluster
❖ Motivation
  ▪ HTCondor cluster resource utilization: > 90%
  ▪ Slurm cluster resource utilization: ~50%
❖ Aims
  ▪ To improve resource utilization of the Slurm CPU cluster.
  ▪ To provide more opportunistic resources for HTCondor jobs.

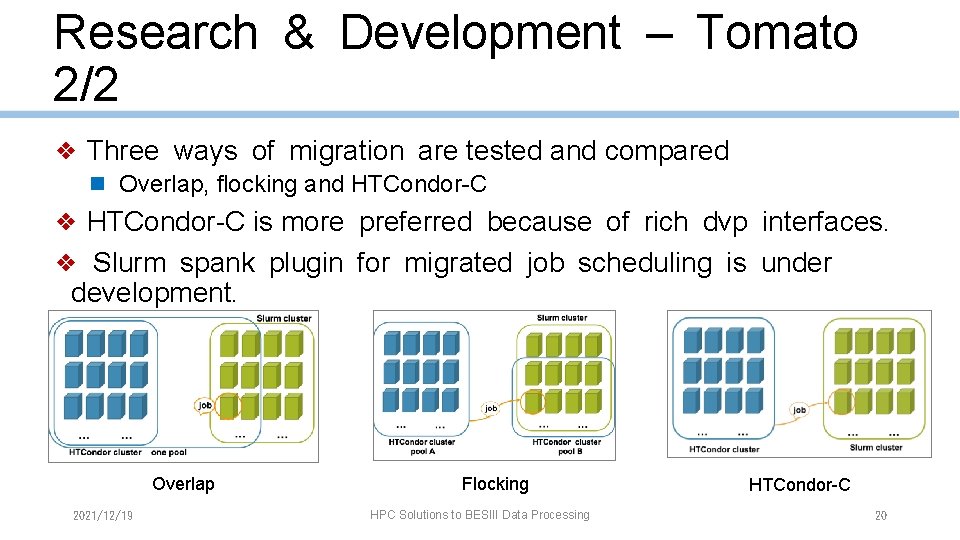

Research & Development – Tomato 2/2
❖ Three migration methods are tested and compared
  ▪ Overlap, flocking and HTCondor-C
❖ HTCondor-C is preferred because of its rich development interfaces.
❖ A Slurm SPANK plugin for scheduling migrated jobs is under development.
[Diagrams: Overlap, Flocking, HTCondor-C]

Conclusion & Next Step
❖ Slurm clusters are built to run parallel and GPU jobs.
❖ BESIII submitted 82% of the jobs and consumed 37% of the GPU resources.
❖ Groups gpupwa and mlgpu encountered different problems; solutions have been proposed or are in progress.
❖ Support systems are developed to provide admin tools and user services.
❖ R&D projects have been started to test production configurations and to provide better support in the future.

Thanks & Questions?