HPC Profile BOF
Marvin Theimer, Microsoft Corporation
Marty Humphrey, University of Virginia
GGF 17, Tokyo, May 10 2006

Agenda
• 11:00 – 11:15 Review of Charter (Humphrey)
• 11:15 – 11:30 HPC Use Cases – Base Case and Common Cases (Theimer)
• 11:30 – 11:45 Extensible Job Submission Design (Theimer)
• 11:45 – 12:00 Comparative Analysis
  – Extensible Job Submission Design and JSDL/BES (Wasson)
  – ESI – Snelling/Foster (Theimer)
• 12:00 – 12:30 Discussion

Review of Charter (11:00 – 11:15)

History
• GGF 14: Chicago, Jul 14 2005
  – “Minimal Web Services BOF” (aka WS-Management)
  – Newhouse, Theimer, Humphrey, Tollefsrud
• GGF 15: Boston, Oct 6 2005
  – UVa update on WS-Management use for OGSA (Wasson)
  – Specific technical thoughts on the support of dual stacks
  – Suspended given rumored “reconciliation”
• GGF 16: Athens, Feb 14 2006
  – “An evolutionary approach to realizing the Grid vision”
  – Theimer, Parastatidis, Hey, Humphrey, Fox
• OGSA F2F, Feb 17 2006
  – Theimer gives detailed presentation of the “evolutionary” paper

More History
• Mar 15 2006
  – “Toward Converging Web Service Standards for Resources, Events, and Management”
  – HP, IBM, Intel, Microsoft
• OGSA F2F: Sunnyvale, CA, April 5 2006
  – Theimer presented the use-case document
• Since March 2006, active engagement of OGSA-WG mailing list to build consensus

OGSA HPC Profile WG (Computing Area)
• Objective: define the profile and protocol specifications needed to realize the vertical use case of batch job scheduling of scientific/technical applications
  – “use case” = HPC use case
  – Output: HPCP (normative)
• Scope
  – Identify any changes/extensions deemed necessary to existing protocol specifications, and work with the relevant working groups to try to effect those changes/extensions
  – Identify additional protocol specifications that need to be defined, and either work on their definition or spin them out to additional working groups

OGSA HPC Profile WG (Computing Area), continued
• “Sub-profiles”
  – Interface for specifying, submitting, and scheduling jobs
  – Interface for bulk data staging
• Evolutionary approach
  – A simple base case will be defined that we expect to be universally implemented by all batch job scheduling clients and schedulers
  – All additional functionality will be defined in terms of optional extensions (which are anticipated to be widely applicable)

Pre-existing Documents
• JSDL
• BES
• “An evolutionary approach to realizing the Grid vision”

Status
• Use-case in final revisions
• Resource reservation
• Provisioning
• Execution
• Next: what the framework should be for defining extension profiles
• Aggressive milestones to meet vendor deadlines

Deliverables
• OGSA HPC Use Cases – Base Case and Common Cases (GFD-I)
• OGSA HPC profile specification (GFD-R.P)
• OGSA HPC initial common cases extension profile specification (GFD-R.P)

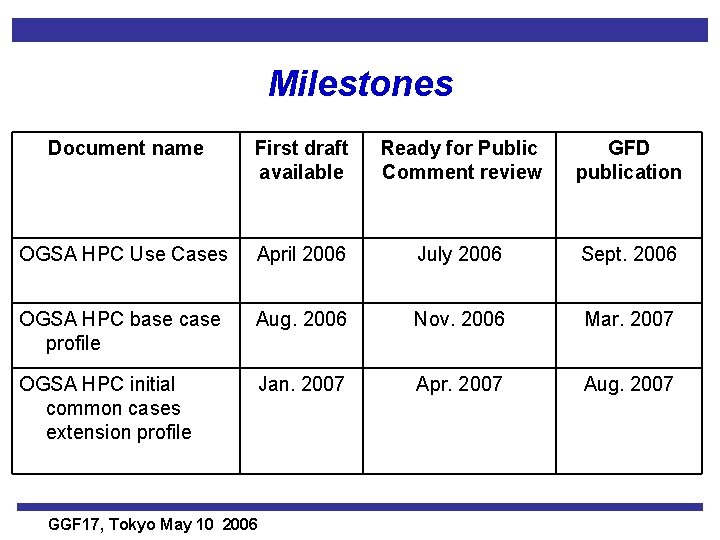

Milestones

| Document name | First draft available | Ready for Public Comment review | GFD publication |
| --- | --- | --- | --- |
| OGSA HPC Use Cases | April 2006 | July 2006 | Sept. 2006 |
| OGSA HPC base case profile | Aug. 2006 | Nov. 2006 | Mar. 2007 |
| OGSA HPC initial common cases extension profile | Jan. 2007 | Apr. 2007 | Aug. 2007 |

HPC Use Cases – Base Case and Common Cases [GFD-I] (11:15 – 11:30)

Goals
• BASE case:
  – ALL scheduling clients and services are expected to understand it
  – HPC, not Grid (i.e., do NOT span administrative domains)
• Common Cases:
  – Represent some significant fraction of implementors, not all implementors
  – NOT all cases – only common cases
• Capture client-visible functionality requirements rather than being technology/system-design-driven

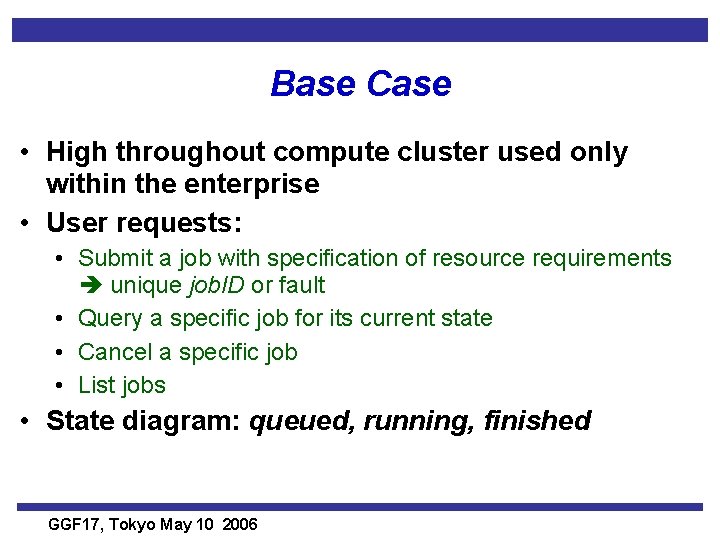

Base Case
• High-throughput compute cluster used only within the enterprise
• User requests:
  – Submit a job with a specification of resource requirements; returns a unique jobID or a fault
  – Query a specific job for its current state
  – Cancel a specific job
  – List jobs
• State diagram: queued, running, finished
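The four user requests and the three-state diagram can be sketched as a small in-memory scheduler. This is only an illustration: the class name `BaseCaseScheduler`, the `SubmissionFault` exception, the job ID format, and the choice to drop cancelled jobs from the table are all assumptions, not part of the use-case document.

```python
import itertools
from enum import Enum


class JobState(Enum):
    QUEUED = "queued"
    RUNNING = "running"
    FINISHED = "finished"


class SubmissionFault(Exception):
    """Raised instead of returning a job ID (the 'fault' outcome)."""


class BaseCaseScheduler:
    """Hypothetical in-memory stand-in for a base-case job scheduler."""

    def __init__(self, total_cpus):
        self.total_cpus = total_cpus
        self._ids = itertools.count(1)
        self._jobs = {}  # job ID -> JobState, kept in submission (FIFO) order

    def submit(self, cpus):
        # Reject requests the cluster can never satisfy.
        if cpus < 1 or cpus > self.total_cpus:
            raise SubmissionFault(f"cannot satisfy request for {cpus} CPUs")
        job_id = f"job-{next(self._ids)}"
        self._jobs[job_id] = JobState.QUEUED
        return job_id

    def query(self, job_id):
        return self._jobs[job_id]

    def cancel(self, job_id):
        # The slide names only three states, so a cancelled job is simply
        # removed from the table here (an assumption of this sketch).
        del self._jobs[job_id]

    def list_jobs(self):
        return list(self._jobs)
```

A client would call `submit(cpus=2)`, keep the returned ID, and poll `query` until the state reaches `JobState.FINISHED`.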

Base Case (cont.)
• Only a small set of “standard” resources
  – Number of CPUs/compute nodes needed, memory requirements, disk requirements, etc.
• Only equality of string values and numeric relationships among pairs of numeric values are provided in the base use case
• Once a job has been submitted it can be cancelled, but its resource requests can't be modified
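The restricted matching rule (string equality plus pairwise numeric comparison) is simple enough to sketch directly. The function name `satisfies`, the `(operator, value)` tuple encoding, and the resource names in the usage note are assumptions made for illustration; the base use case does not prescribe a representation.

```python
import operator

# Numeric relationships allowed between an offered value and a requested value.
NUMERIC_OPS = {
    "==": operator.eq,
    "<=": operator.le,
    ">=": operator.ge,
    "<": operator.lt,
    ">": operator.gt,
}


def satisfies(requirements, offered):
    """Return True if every requirement holds for the offered resources.

    requirements maps a resource name to an (op, value) pair, read as
    "offered[name] op value"; offered maps resource names to values.
    """
    for name, (op, wanted) in requirements.items():
        if name not in offered:
            return False
        have = offered[name]
        if isinstance(wanted, str):
            # String values support equality only.
            if op != "==" or have != wanted:
                return False
        elif not NUMERIC_OPS[op](have, wanted):
            return False
    return True
```

For example, `satisfies({"cpus": (">=", 4), "os": ("==", "linux")}, {"cpus": 8, "os": "linux"})` holds, while a request for an unknown resource name does not match.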

Base case: Out of Scope
• Data access issues
• Programs are assumed to be pre-installed
• Creation and management of user security credentials
• No need for directory services beyond something like DNS
• Management of the system resources

Base case: Fault tolerance model
• Job fails because of “system problems”
  – Job must be resubmitted by client
  – The job scheduler will not automatically rerun the job
• Failure of the scheduler may or may not cause currently running jobs to fail

Base case: Job Exits
• Whether it exited successfully, with an error code, or terminated due to some other fault situation
• How long it ran in terms of wall-clock time

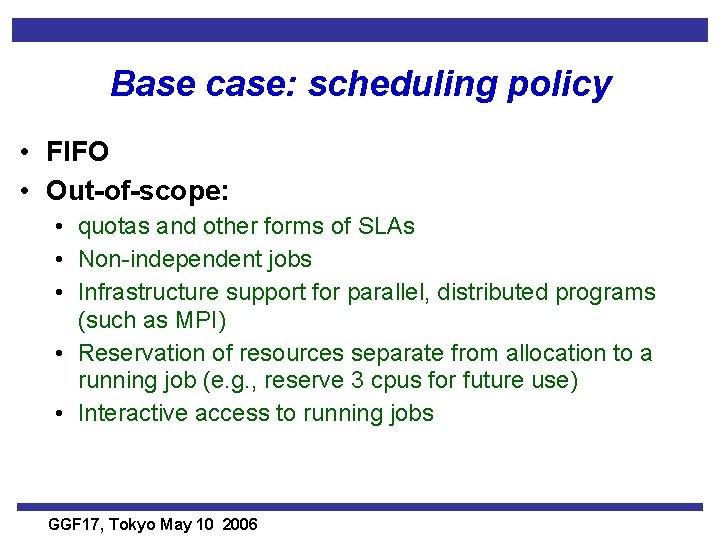

Base case: scheduling policy
• FIFO
• Out-of-scope:
  – Quotas and other forms of SLAs
  – Non-independent jobs
  – Infrastructure support for parallel, distributed programs (such as MPI)
  – Reservation of resources separate from allocation to a running job (e.g., reserve 3 CPUs for future use)
  – Interactive access to running jobs

Common Cases
• Purpose of enumerating common cases: use as the basis for creating appropriate extension mechanisms
• 13 cases

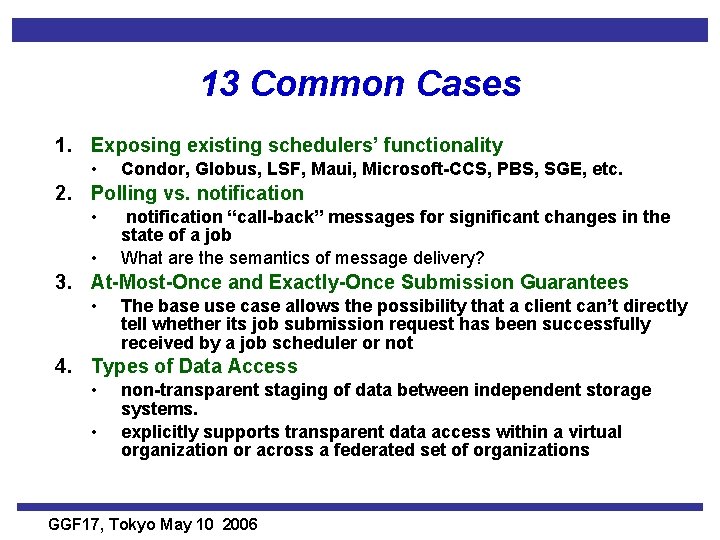

13 Common Cases
1. Exposing existing schedulers’ functionality
  • Condor, Globus, LSF, Maui, Microsoft-CCS, PBS, SGE, etc.
2. Polling vs. notification
  • Notification “call-back” messages for significant changes in the state of a job
  • What are the semantics of message delivery?
3. At-Most-Once and Exactly-Once Submission Guarantees
  • The base use case allows the possibility that a client can’t directly tell whether its job submission request has been successfully received by a job scheduler or not
4. Types of Data Access
  • Non-transparent staging of data between independent storage systems
  • Explicitly supports transparent data access within a virtual organization or across a federated set of organizations
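One common way to approach case 3 is to have the client attach a submission identifier that the scheduler deduplicates on, so that a retry after a lost reply does not create a second job. The sketch below assumes that technique; it is not taken from the use-case document, and `DedupingScheduler`, `submit_with_retry`, and the use of `ConnectionError` to stand in for a lost message are all hypothetical.

```python
import uuid


class DedupingScheduler:
    """Hypothetical scheduler that creates at most one job per submission ID."""

    def __init__(self):
        self._by_submission_id = {}  # submission ID -> job ID
        self._next = 1

    def submit(self, submission_id, job_spec):
        # A retry with a known submission ID returns the original job ID
        # instead of creating a second job.
        if submission_id in self._by_submission_id:
            return self._by_submission_id[submission_id]
        job_id = f"job-{self._next}"
        self._next += 1
        self._by_submission_id[submission_id] = job_id
        return job_id


def submit_with_retry(scheduler, job_spec, attempts=3):
    """Retry a submission under one ID so duplicates collapse to one job."""
    submission_id = str(uuid.uuid4())
    last_error = None
    for _ in range(attempts):
        try:
            return scheduler.submit(submission_id, job_spec)
        except ConnectionError as err:  # stands in for a lost request or reply
            last_error = err
    raise last_error
```

Without the shared identifier, the client in the base use case cannot distinguish "request never arrived" from "reply was lost", which is exactly the ambiguity this case describes.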

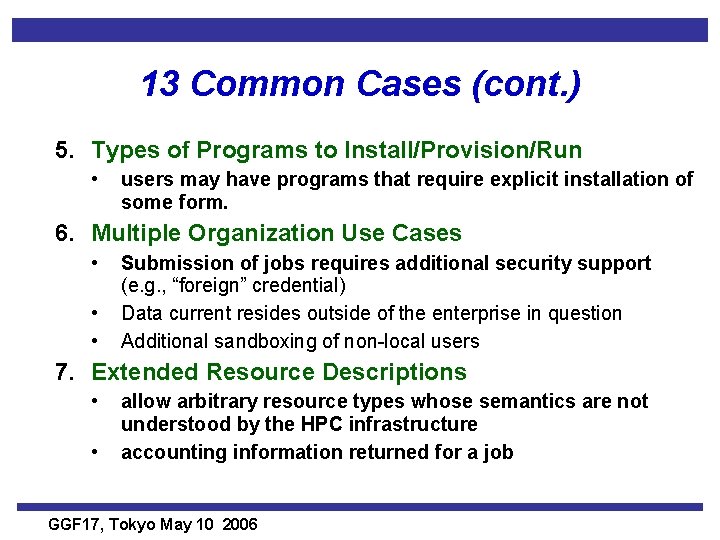

13 Common Cases (cont.)
5. Types of Programs to Install/Provision/Run
  • Users may have programs that require explicit installation of some form
6. Multiple Organization Use Cases
  • Submission of jobs requires additional security support (e.g., “foreign” credential)
  • Data currently resides outside of the enterprise in question
  • Additional sandboxing of non-local users
7. Extended Resource Descriptions
  • Allow arbitrary resource types whose semantics are not understood by the HPC infrastructure
  • Accounting information returned for a job

13 Common Cases (cont.)
8. Extended Client/System Administrator Operations
  • Users may wish to modify the requirements for a job after it has already been submitted
  • Arrays of jobs
  • System administrators: suspension/resumption of jobs and migration of jobs among compute nodes
9. Extended Scheduling Policies
  • Shortest/smallest-job-first, weighted-fair-share scheduling, etc.
  • Multiple submission queues, job submission quotas, and various forms of SLAs, such as guarantees on how quickly a job will be scheduled to run

13 Common Cases (cont.)
10. Parallel Programs and Workflows of Programs
  • Instantiate such programs (e.g., MPI) across multiple compute nodes in a suitable manner, including provision of information that will allow the various program instances to find each other within the cluster
  • Programs may have execution dependencies on each other
11. Advanced Reservations and Interactive Use Cases
  • Reserve resources for use at a specific future time
  • Communicate in real time with external client users
12. Cycle Scavenging
  • Batch job scheduler dispatches jobs to machines that have dynamically indicated to it that they are currently available for running guest jobs

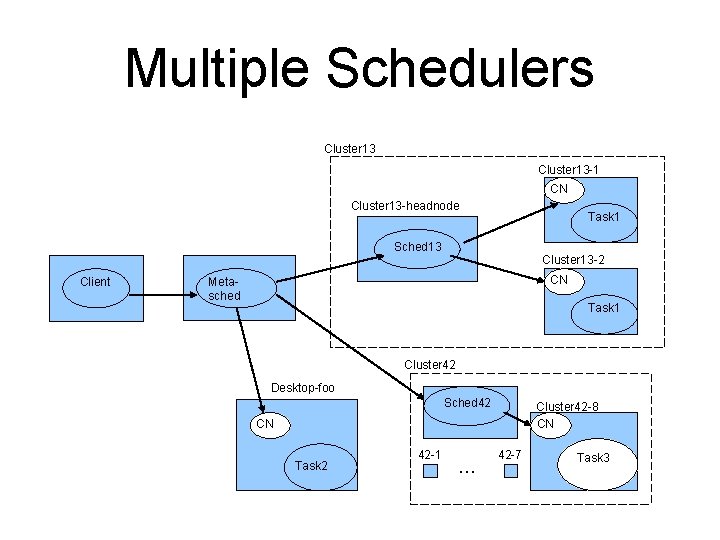

13 Common Cases (cont.)
13. Multiple Schedulers
  • Submit work to the whole of the computing infrastructure without having to manually select which facility to submit to

Status
• Need feedback
  – Is the base case sufficient?
  – Missing any “common” cases?
  – Any of the 13 “too uncommon”?

Extensible Job Submission Design (11:30 – 11:45)

Extensible Job Submission Design (EJS)
• Main focus: extensibility
• Philosophy:
  – Cover all the bases (resource reservation, provisioning, execution, data staging, etc.)
  – Keep it simple
• Approach:
  – Minimalist base cases (overall and for each subcomponent)
  – Optional extensions to enable both richer semantics and evolution

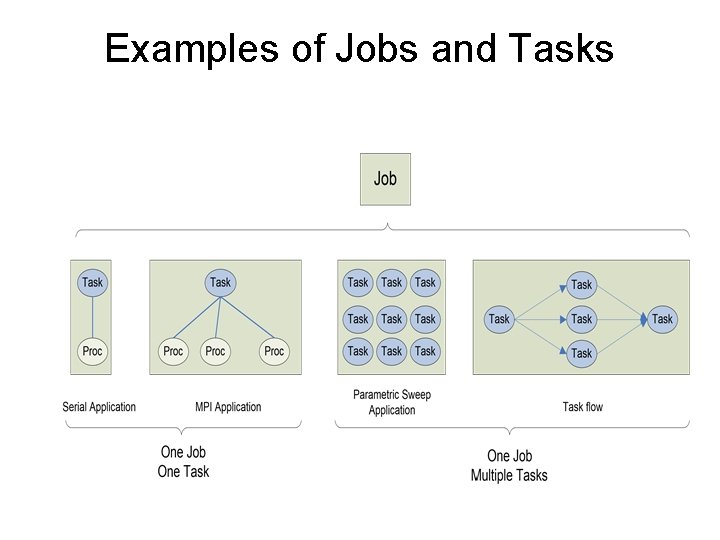

What is a Job?
• OGSA glossary:
  – Job: User-defined task that is scheduled to be carried out by an execution subsystem
  – Task: ???
    • Single program instance?
    • Distributed MPI program?
    • What about data staging? BES defines simple workflows
  – Execution subsystem: ???
    • Job queue?
    • Process? Compute node? Multiple compute nodes?
  – Workflow:
    • Focus is on business processes & services
    • No mention of executing multiple user-defined tasks or data staging steps
• Batch job scheduling literature:
  – Job ~ accounting entity under which multiple user-defined steps are run

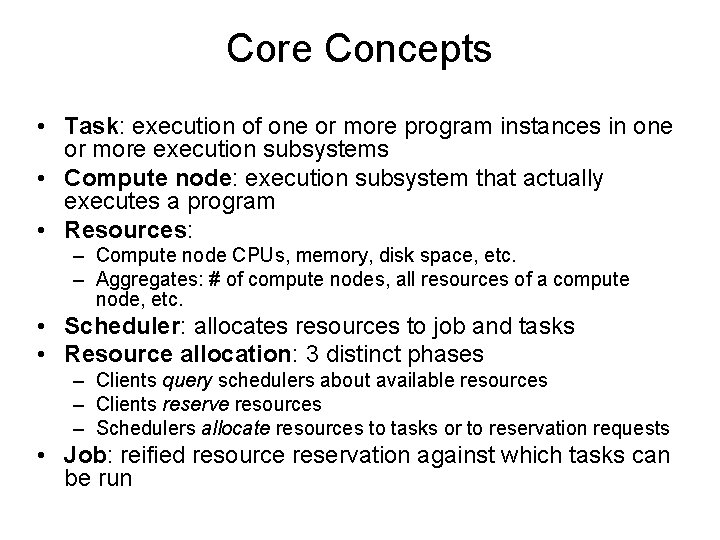

Core Concepts
• Task: execution of one or more program instances in one or more execution subsystems
• Compute node: execution subsystem that actually executes a program
• Resources:
  – Compute node CPUs, memory, disk space, etc.
  – Aggregates: # of compute nodes, all resources of a compute node, etc.
• Scheduler: allocates resources to jobs and tasks
• Resource allocation: 3 distinct phases
  – Clients query schedulers about available resources
  – Clients reserve resources
  – Schedulers allocate resources to tasks or to reservation requests
• Job: reified resource reservation against which tasks can be run

Examples of Jobs and Tasks

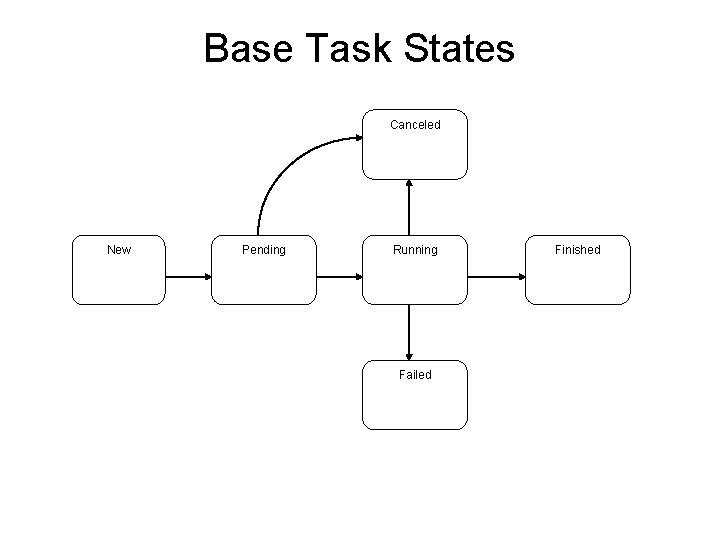

Base Task States (state diagram): New, Pending, Running, Finished, Failed, Canceled

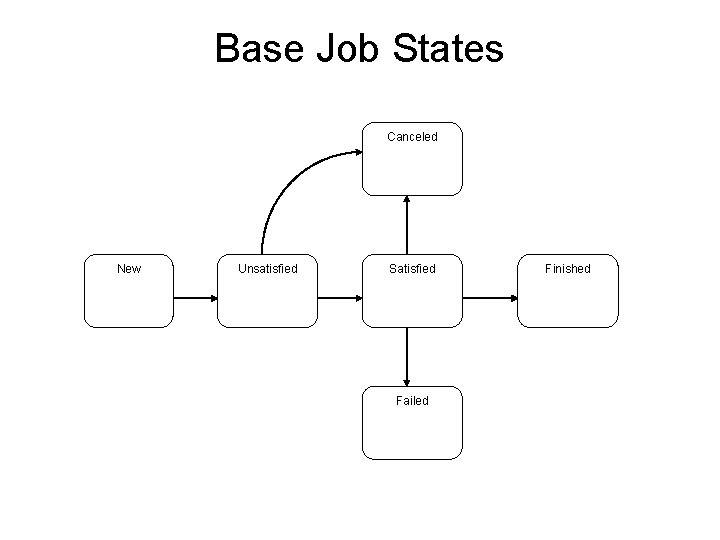

Base Job States (state diagram): New, Unsatisfied, Satisfied, Finished, Failed, Canceled

Multiple Schedulers (diagram; labels: Client, Desktop-foo, Metasched, Sched 13, Sched 42, Cluster 13 with headnode and nodes 13-1 and 13-2, Cluster 42 with nodes 42-1 … 42-8, compute nodes (CN), Task 1, Task 2, Task 3)

Other Topics Covered
• Advertising resource information
• Failure and recovery model
• Security and credential delegation

Types of Extensions
• Purely additive extensions allowed (i.e., no changes to base semantics)
• Additional WSDL operations (incl. for parameter overloading)
• Array operations
• Extended state diagrams
• Extended resource descriptions
• Extended information representations
• Multiple, composable, extensible “micro” protocols

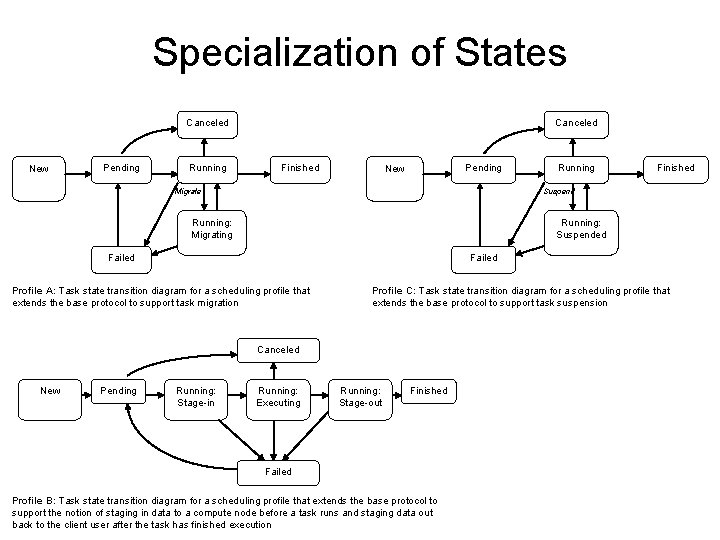

Specialization of States (three state transition diagrams, each refining the base task states New, Pending, Running, Finished, Failed, Canceled)
• Profile A: Task state transition diagram for a scheduling profile that extends the base protocol to support task migration (diagram labels include Migrate and Running: Migrating)
• Profile B: Task state transition diagram for a scheduling profile that extends the base protocol to support the notion of staging in data to a compute node before a task runs and staging data out back to the client user after the task has finished execution (diagram labels include Running: Stage-in, Running: Executing, Running: Stage-out)
• Profile C: Task state transition diagram for a scheduling profile that extends the base protocol to support task suspension (diagram labels include Suspend and Running: Suspended)
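The specialized states are written as a base state plus a qualifier (for example "Running: Stage-in"), which suggests that a client knowing only the base protocol could still interpret them by looking at the prefix. The helper below is a sketch of that reading; the function name `base_state` and the prefix-based rule are assumptions, not something the slides specify.

```python
# Base task states as named on the "Base Task States" slide.
BASE_TASK_STATES = {"New", "Pending", "Running", "Finished", "Failed", "Canceled"}


def base_state(state):
    """Map a possibly specialized state such as 'Running: Stage-in' to its base state."""
    base = state.split(":", 1)[0].strip()
    if base not in BASE_TASK_STATES:
        raise ValueError(f"unknown state: {state!r}")
    return base
```

Under this reading, a base-only client sees a task in "Running: Suspended" or "Running: Migrating" simply as "Running", which keeps the extensions purely additive.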

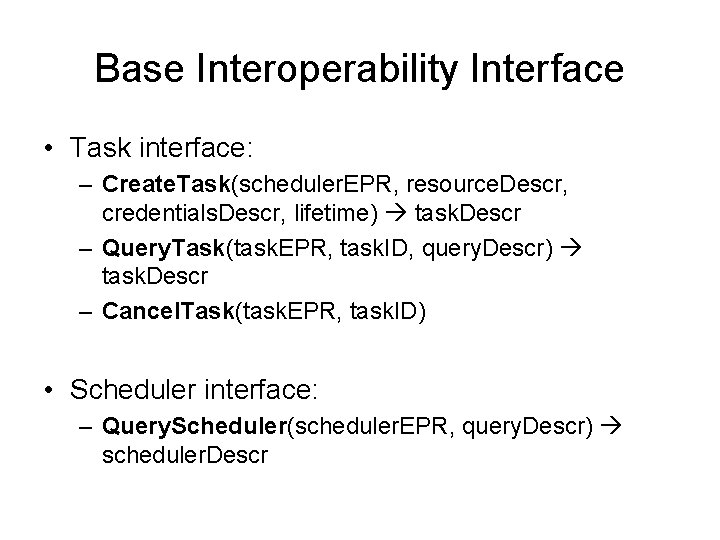

Base Interoperability Interface
• Task interface:
  – CreateTask(schedulerEPR, resourceDescr, credentialsDescr, lifetime) → taskDescr
  – QueryTask(taskEPR, taskID, queryDescr) → taskDescr
  – CancelTask(taskEPR, taskID)
• Scheduler interface:
  – QueryScheduler(schedulerEPR, queryDescr) → schedulerDescr
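As a rough illustration, the task and scheduler operations could be rendered as abstract Python interfaces with a toy in-memory implementation. The operation names and parameters follow the slide; everything else (the fields of `TaskDescr`, the EPR string format, the `InMemoryTasks` class) is an assumption made for the sketch, since the slides do not define the descriptor contents.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class TaskDescr:
    # Field names are illustrative; the slides do not define taskDescr.
    task_epr: str
    task_id: str
    state: str


class TaskInterface(ABC):
    @abstractmethod
    def create_task(self, scheduler_epr, resource_descr, credentials_descr, lifetime):
        """CreateTask: returns a TaskDescr."""

    @abstractmethod
    def query_task(self, task_epr, task_id, query_descr):
        """QueryTask: returns a TaskDescr."""

    @abstractmethod
    def cancel_task(self, task_epr, task_id):
        """CancelTask: returns nothing."""


class SchedulerInterface(ABC):
    @abstractmethod
    def query_scheduler(self, scheduler_epr, query_descr):
        """QueryScheduler: returns a scheduler description."""


class InMemoryTasks(TaskInterface):
    """Toy implementation that only tracks task state."""

    def __init__(self):
        self._tasks = {}  # task ID -> TaskDescr

    def create_task(self, scheduler_epr, resource_descr, credentials_descr, lifetime):
        task_id = str(len(self._tasks) + 1)
        descr = TaskDescr(f"{scheduler_epr}/tasks/{task_id}", task_id, "New")
        self._tasks[task_id] = descr
        return descr

    def query_task(self, task_epr, task_id, query_descr):
        return self._tasks[task_id]

    def cancel_task(self, task_epr, task_id):
        self._tasks[task_id].state = "Canceled"
```

Keeping the base interface this small is what lets the later extension slides add operations without changing base semantics.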

Generic Extensions
• Array operations
• Notifications
• Query operation modifiers
• Idempotent message delivery semantics
• EPR resolution

Task Interface Extensions
• Re-execution of failed tasks
• Additional & extended resource definitions
• Additional operations
  – ModifyTask
  – …
• Additional scheduling policies
• Support for parallel/distributed programs
• Data staging
• Provisioning
• Static workflow

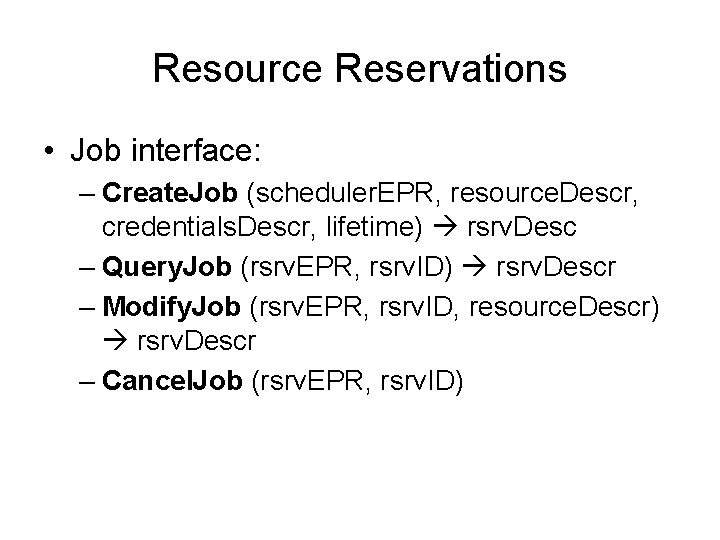

Resource Reservations
• Job interface:
  – CreateJob(schedulerEPR, resourceDescr, credentialsDescr, lifetime) → rsrvDescr
  – QueryJob(rsrvEPR, rsrvID) → rsrvDescr
  – ModifyJob(rsrvEPR, rsrvID, resourceDescr) → rsrvDescr
  – CancelJob(rsrvEPR, rsrvID)
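Since the deck defines a job as a reified resource reservation against which tasks can be run, the effect of the job interface can be sketched as a CPU budget that tasks draw from. The `Reservation` class, its method names, and the rule that a reservation cannot shrink below what running tasks already hold are assumptions for illustration only.

```python
class Reservation:
    """Hypothetical reified reservation: a fixed CPU budget that tasks draw from."""

    def __init__(self, rsrv_id, cpus):
        self.rsrv_id = rsrv_id
        self.cpus = cpus
        self.in_use = {}  # task ID -> CPUs allocated to that task

    def free_cpus(self):
        return self.cpus - sum(self.in_use.values())

    def start_task(self, task_id, cpus):
        # A task may only run if it fits in what is left of the reservation.
        if cpus > self.free_cpus():
            raise RuntimeError("task does not fit in the reservation")
        self.in_use[task_id] = cpus

    def finish_task(self, task_id):
        del self.in_use[task_id]

    def modify(self, cpus):
        # Loosely mirrors ModifyJob: resize the reservation, but never
        # below the CPUs that running tasks currently hold (an assumption).
        if cpus < sum(self.in_use.values()):
            raise RuntimeError("cannot shrink below CPUs currently in use")
        self.cpus = cpus
```

Separating the reservation from the tasks is what allows resources to be held before any task exists, which the base use case explicitly left out of scope.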

Multiple Schedulers
• Hierarchical information option
• Client scheduler list
• AnnounceScheduler(schedulerEPR, announcerDesc)

Comparison of ESI to Extensible Job Submission Design
• Focus of ESI: reconciliation/synthesis of Globus and Unicore
• Focus of EJS: extensibility
