How to solve the problem Text Information Retrieval

How to solve the problem?
• Text information retrieval systems such as Yahoo, Lycos, and Google have achieved great success.
• Basic idea: use keywords to index and retrieve documents.
• Motivation: can we generalize the relatively mature theories and techniques of text-based information retrieval to the image domain?
• Image retrieval is a special case of information retrieval (IR).
• Annotate images with metadata such as text keywords.
• We observe that text-based techniques can also be used effectively to represent image features and conduct image retrieval.

University at Buffalo, July 2001

Generalize text retrieval techniques to image retrieval
• In principle, text retrieval and image retrieval are the same:
  – both are concerned with information retrieval;
  – they deal with different data formats.
• An image is very different from a text document:
  – Syntactically: 2-dimensional vs. 1-dimensional.
  – Semantically: the units of an image, whether pixels or the segments obtained after segmentation, give no clue about the semantics of the image; text documents have keywords.
• Central questions:
  – What are the “keywords” of an image?
  – How do we generate “keywords” from images?

Generalize text retrieval techniques to image retrieval
• What are the “keywords” of an image?
  – Pixels do not reflect semantics very well.
  – Objects are good candidates, but object recognition remains an open problem.
• One practical approach:
  – Images are partitioned into smaller blocks, and a set of representative blocks is selected as “keywords”. We call them “keyblocks”.
  – An image can then be encoded as the indices of the keyblocks in a codebook. Based on this representation, information retrieval techniques from the text domain can be generalized to image retrieval.

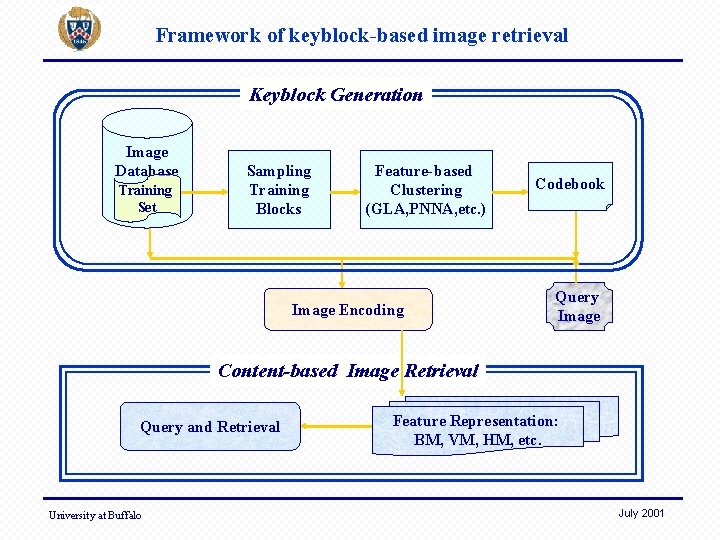

Framework of keyblock-based image retrieval
[Diagram. Labels: Keyblock Generation (Image Database, Training Set Sampling, Training Blocks, Feature-based Clustering with GLA, PNNA, etc., Codebook); Image Encoding; Feature Representation (BM, VM, HM, etc.); Query and Retrieval (Query Image, Content-based Image Retrieval).]

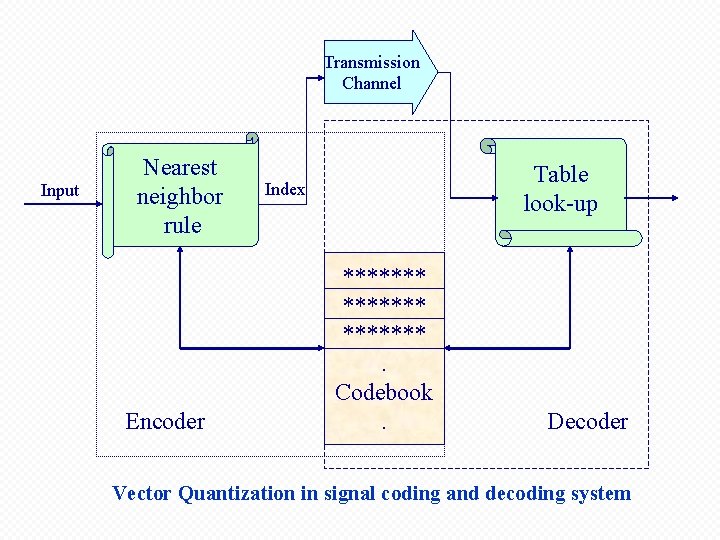

Vector quantization in a signal coding and decoding system
[Diagram. Labels: Input; Encoder (nearest neighbor rule); Index; Transmission Channel; Decoder (table look-up); Codebook.]

Why Use Vector Quantization?
• VQ reduces the number of distinct pixels/blocks while preserving image quality.
• The code vectors (keyblocks) in the codebook are representative vectors generated by clustering algorithms. They carry some semantic meaning because they represent the training vectors.
• An image encoded with the codebook can be considered a list of keyblocks. This representation is similar to a text document, which is a list of keywords.

Keyblock generation and image encoding
Various clustering algorithms can be used.
• In the original space: partition/segment the images into smaller blocks, then select a subset of representative blocks to serve as the keyblocks that represent the images.
• In the feature space: extract low-level feature vectors, such as color, texture, and shape, from image segments/blocks, then select a subset of representative feature vectors to serve as the keyblocks that represent the images.

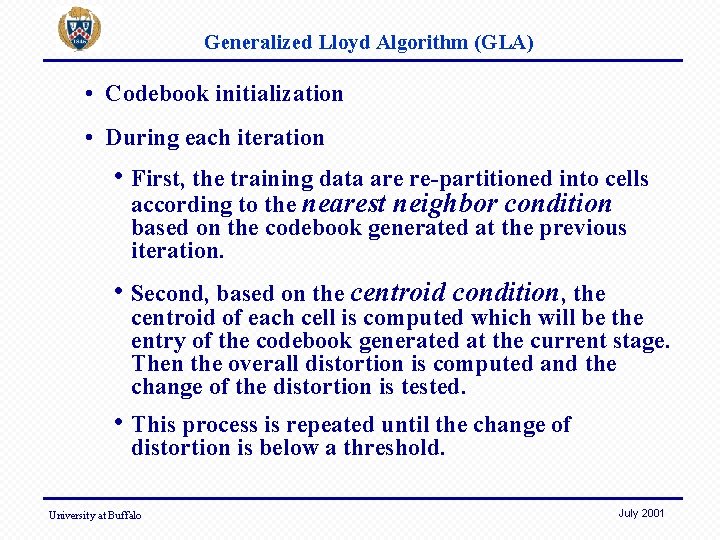

Generalized Lloyd Algorithm (GLA)
• Codebook initialization.
• During each iteration:
  – First, the training data are re-partitioned into cells according to the nearest neighbor condition, using the codebook generated at the previous iteration.
  – Second, following the centroid condition, the centroid of each cell is computed and becomes the corresponding entry of the codebook for the current iteration. The overall distortion is then computed and its change is tested.
• This process is repeated until the change in distortion falls below a threshold.
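The GLA iteration can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function names, the squared Euclidean distance, the mean-squared-error distortion, and the default `threshold` and `max_iter` values are all assumptions, and the initial codebook is supplied by the caller.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def gla(training, codebook, threshold=1e-6, max_iter=100):
    """Refine an initial codebook (hypothetical helper, not from the slides)."""
    codebook = [list(map(float, c)) for c in codebook]
    prev = float("inf")
    for _ in range(max_iter):
        # Nearest neighbor condition: assign each vector to its closest entry.
        cells = [[] for _ in codebook]
        for v in training:
            i = min(range(len(codebook)), key=lambda k: sq_dist(codebook[k], v))
            cells[i].append(v)
        # Centroid condition: the mean of each non-empty cell becomes the entry.
        for i, cell in enumerate(cells):
            if cell:
                codebook[i] = [sum(col) / len(cell) for col in zip(*cell)]
        # Overall distortion: mean squared distance to the assigned entry.
        distortion = sum(
            sq_dist(codebook[i], v) for i, cell in enumerate(cells) for v in cell
        ) / len(training)
        # Stop when the change in distortion falls below the threshold.
        if prev - distortion < threshold:
            break
        prev = distortion
    return codebook


blocks = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
print(gla(blocks, [(0, 0), (0, 1)]))
```

Empty cells keep their previous entry in this sketch; practical implementations often re-seed them instead.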

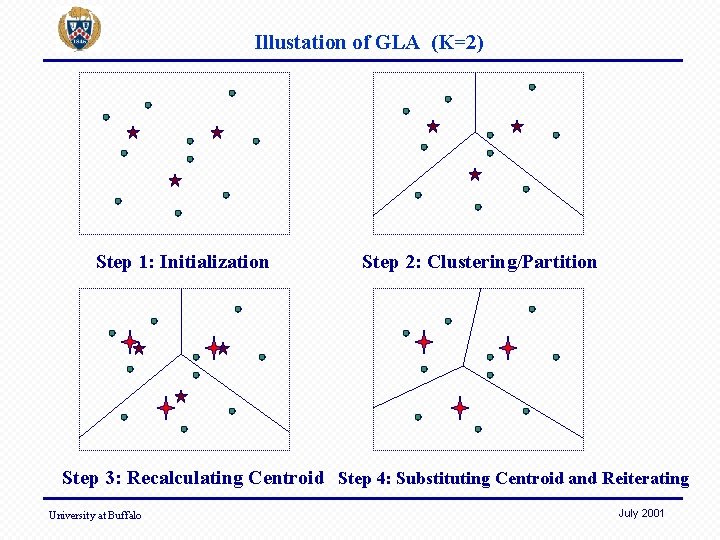

Illustration of GLA (K=2)
Step 1: Initialization
Step 2: Clustering/Partition
Step 3: Recalculating Centroid
Step 4: Substituting Centroid and Reiterating

Pairwise Nearest Neighbor Algorithm (PNNA)
• It starts with the whole training sequence (each training vector is initially its own code vector).
• At each iteration, the two nearest code vectors are merged and replaced by their centroid, so the codebook size decreases by 1.
• This process is repeated until the desired final codebook size is reached.
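A brute-force sketch of the merging loop follows. It assumes squared Euclidean distance between code vectors and weights each merged centroid by the number of training vectors it represents; the name `pnn` and both of these choices are illustrative assumptions, not details given on the slide.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def pnn(training, target_size):
    """Merge the two nearest code vectors until target_size entries remain."""
    if target_size < 1:
        raise ValueError("target_size must be at least 1")
    # Each cluster is (centroid, number of training vectors it represents).
    clusters = [(list(map(float, v)), 1) for v in training]
    while len(clusters) > target_size:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = sq_dist(clusters[i][0], clusters[j][0])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        (ci, ni), (cj, nj) = clusters[i], clusters[j]
        # Count-weighted centroid of the merged pair (an assumption).
        merged = [(ni * a + nj * b) / (ni + nj) for a, b in zip(ci, cj)]
        clusters[i] = (merged, ni + nj)
        del clusters[j]
    return [c for c, _ in clusters]


print(pnn([(0, 0), (0, 2), (10, 10), (10, 12)], 2))
```

The exhaustive pair search is cubic overall, so this form is only practical for small training sets.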

Keyblock Generation for an Image Database
• Combining GLA and PNNA:
  – Sample the image database to get the training set $I$ ($|I| = m$).
  – For each training image $I_k$ ($1 \le k \le m$), decompose it into blocks of a fixed size to get a sequence of vectors, then apply GLA to extract a codebook $C_k$.
  – Put all vectors in $C_k$ ($1 \le k \le m$) into the training set $T$.
  – Apply PNNA to $T$ to get a codebook $C'$.
  – Apply GLA to $T$ again, with $C'$ as the initial codebook, to get the final codebook $C$.

Image Encoding
• For each image in the database, decompose it into blocks.
• Then, for each block, find the closest entry in the codebook and store its index.
• Each image is now a matrix of indices, which can be read as a 1-dimensional sequence in scan order. This is very similar to a text document, which is treated as a linear list of keywords in text-based IR.
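The encoding step can be illustrated as follows. The sketch assumes a grayscale image stored as a list of rows, square blocks of side `b`, and squared Euclidean distance for the closest-entry search; all function names are hypothetical.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def split_blocks(image, b):
    """Cut an image into b x b blocks, each flattened to a vector.

    Rows and columns that do not fill a whole block are dropped.
    """
    h, w = len(image), len(image[0])
    return [
        [[image[r + dr][c + dc] for dr in range(b) for dc in range(b)]
         for c in range(0, w - w % b, b)]
        for r in range(0, h - h % b, b)
    ]


def encode(image, codebook, b):
    """Replace every block by the index of its closest codebook entry."""
    return [
        [min(range(len(codebook)), key=lambda k: sq_dist(codebook[k], blk))
         for blk in row]
        for row in split_blocks(image, b)
    ]


def scan_order(index_matrix):
    """Read the index matrix row by row as a 1-dimensional sequence."""
    return [i for row in index_matrix for i in row]


image = [[0, 0, 9, 9], [0, 0, 9, 9], [9, 9, 0, 0], [9, 9, 0, 1]]
codebook = [[0, 0, 0, 0], [9, 9, 9, 9]]
print(scan_order(encode(image, codebook, 2)))  # prints [0, 1, 1, 0]
```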

[Diagram: encoding and decoding with a codebook. Labels: Original Image; Segmentation; Segmented Image; Block Encoding; Codebook (a list of keyblocks, indexed 0, 1, 2, ..., 21, ...); Encoded Image (example indices: 14 14 14 16 14 15 16 19 16 18 18 16); Table Lookup; Decoding; Reconstructed Image.]

A raw image and the reconstructed images with different codebooks
[Figure panels: original image carrier.jpg; reconstructed image and codebook for block 4 x 4, codebook size 256; for block 4 x 4, codebook size 1024; and for block 8 x 8, codebook size 1024.]

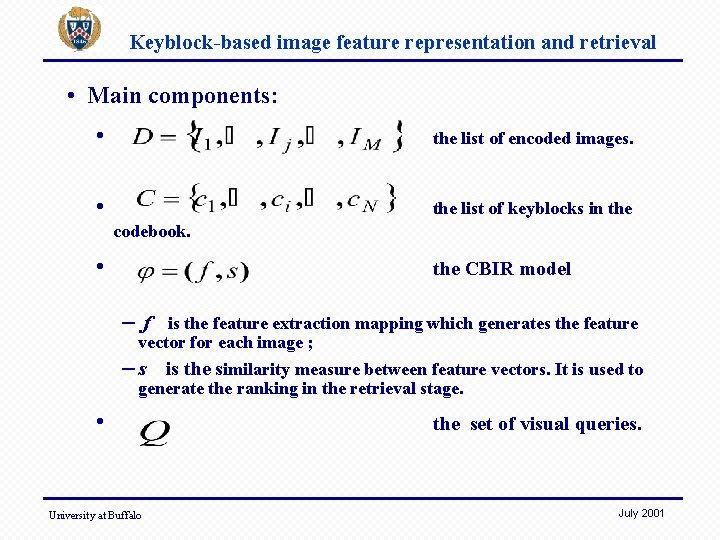

Keyblock-based image feature representation and retrieval
• Main components:
  – the list of encoded images;
  – the list of keyblocks in the codebook;
  – the CBIR model, in which f is the feature extraction mapping that generates the feature vector for each image, and s is the similarity measure between feature vectors, used to rank results in the retrieval stage;
  – the set of visual queries.

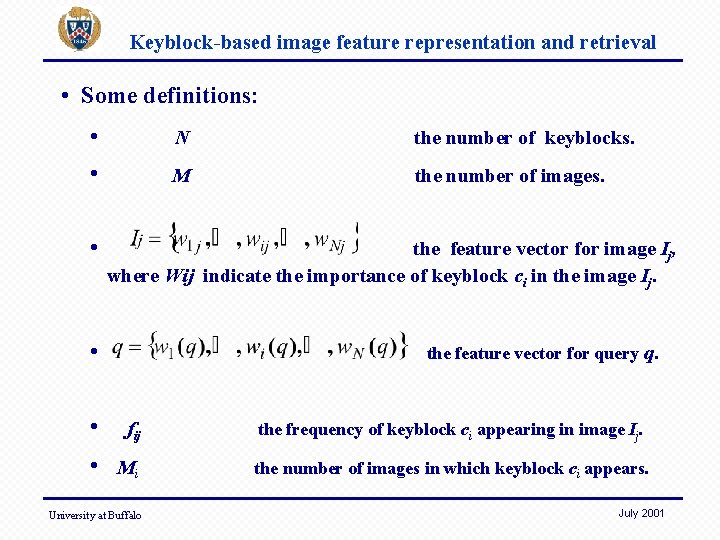

Keyblock-based image feature representation and retrieval
• Some definitions:
  – $N$: the number of keyblocks.
  – $M$: the number of images.
  – $I_j = (w_{1j}, \dots, w_{Nj})$: the feature vector for image $I_j$, where $w_{ij}$ indicates the importance of keyblock $c_i$ in image $I_j$.
  – $q = (w_{1q}, \dots, w_{Nq})$: the feature vector for query $q$.
  – $f_{ij}$: the frequency of keyblock $c_i$ appearing in image $I_j$.
  – $M_i$: the number of images in which keyblock $c_i$ appears.

Single-block Models
• The Boolean Model and the Vector Model are widely used in IR. They
  – adopt keywords to index and retrieve documents;
  – assume that both the documents in the database and the queries can be described by a set of mutually independent keywords.
• Similar image feature representation models can be designed:
  – use keyblocks instead of keywords for images;
  – the appearance of an individual keyblock in an image is important information.

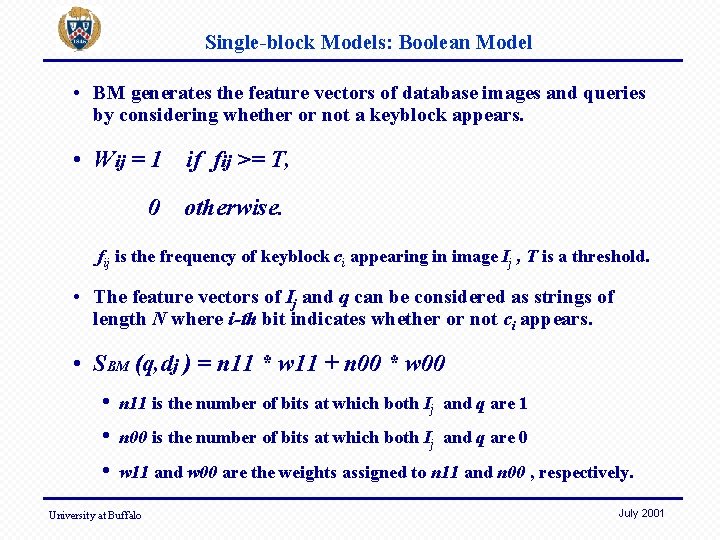

Single-block Models: Boolean Model
• BM generates the feature vectors of database images and queries by considering whether or not a keyblock appears.
• $w_{ij} = 1$ if $f_{ij} \ge T$, and $0$ otherwise, where $f_{ij}$ is the frequency of keyblock $c_i$ in image $I_j$ and $T$ is a threshold.
• The feature vectors of $I_j$ and $q$ can be considered bit strings of length $N$, where the $i$-th bit indicates whether or not $c_i$ appears.
• $S_{BM}(q, I_j) = n_{11} w_{11} + n_{00} w_{00}$
  – $n_{11}$ is the number of bits at which both $I_j$ and $q$ are 1;
  – $n_{00}$ is the number of bits at which both $I_j$ and $q$ are 0;
  – $w_{11}$ and $w_{00}$ are the weights assigned to $n_{11}$ and $n_{00}$, respectively.
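As a small worked example of the Boolean model, the sketch below thresholds keyblock frequencies into bit vectors and evaluates the similarity. The function names and the sample values for the threshold and the two weights are illustrative assumptions.

```python
def boolean_vector(frequencies, threshold=1):
    """Bit i is 1 when keyblock i occurs at least `threshold` times."""
    return [1 if f >= threshold else 0 for f in frequencies]


def boolean_similarity(q_bits, image_bits, w11=1.0, w00=0.0):
    """Weighted count of positions where both bits are 1, or both are 0."""
    n11 = sum(1 for a, b in zip(q_bits, image_bits) if a == 1 and b == 1)
    n00 = sum(1 for a, b in zip(q_bits, image_bits) if a == 0 and b == 0)
    return n11 * w11 + n00 * w00


q = boolean_vector([3, 0, 1, 0])      # [1, 0, 1, 0]
img = boolean_vector([1, 0, 0, 0])    # [1, 0, 0, 0]
print(boolean_similarity(q, img, w11=1.0, w00=0.5))  # prints 2.0
```

Here one position has both bits set and two positions have both bits clear, so the score is 1 * 1.0 + 2 * 0.5 = 2.0.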

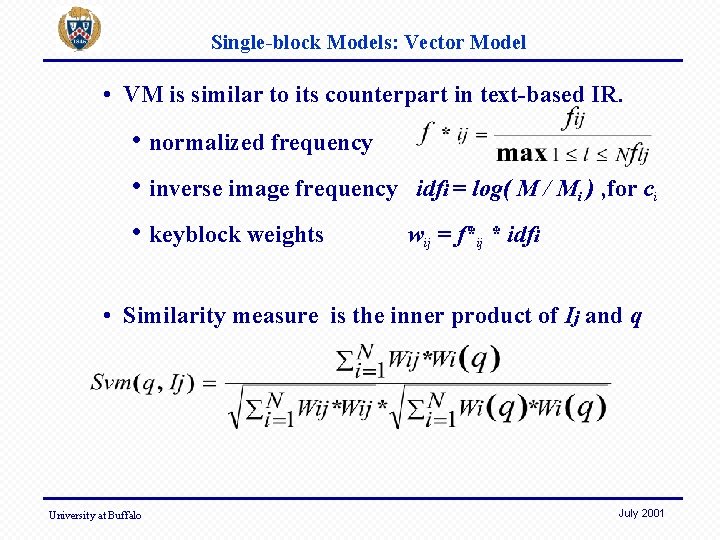

Single-block Models: Vector Model
• VM is similar to its counterpart in text-based IR.
• Normalized frequency: $f^*_{ij}$.
• Inverse image frequency for $c_i$: $idf_i = \log(M / M_i)$.
• Keyblock weights: $w_{ij} = f^*_{ij} \cdot idf_i$.
• The similarity measure is the inner product of $I_j$ and $q$.
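The weighting scheme can be sketched as below. The slide text does not preserve the definition of the normalized frequency, so this example assumes the common text-IR choice of dividing each frequency by the largest frequency in the same image; that normalization and all function names are assumptions.

```python
import math


def inverse_image_frequencies(histograms):
    """idf_i = log(M / M_i), where M_i counts the images containing keyblock i."""
    m = len(histograms)
    idf = []
    for i in range(len(histograms[0])):
        m_i = sum(1 for h in histograms if h[i] > 0)
        idf.append(math.log(m / m_i) if m_i else 0.0)
    return idf


def vm_weights(histogram, idf):
    """w_i = f*_i * idf_i, with f*_i = f_i / max(f) (assumed normalization)."""
    peak = max(histogram)
    if peak == 0:
        return [0.0] * len(histogram)
    return [(f / peak) * w for f, w in zip(histogram, idf)]


def inner_product(a, b):
    """Similarity between two weight vectors."""
    return sum(x * y for x, y in zip(a, b))


database = [[2, 0], [1, 1]]   # keyblock frequencies for two images
idf = inverse_image_frequencies(database)
vectors = [vm_weights(h, idf) for h in database]
query = vm_weights([0, 3], idf)
print([inner_product(query, v) for v in vectors])
```

Keyblock 0 appears in every image, so its inverse image frequency is zero and it contributes nothing to the similarity, mirroring how very common keywords are discounted in text retrieval.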

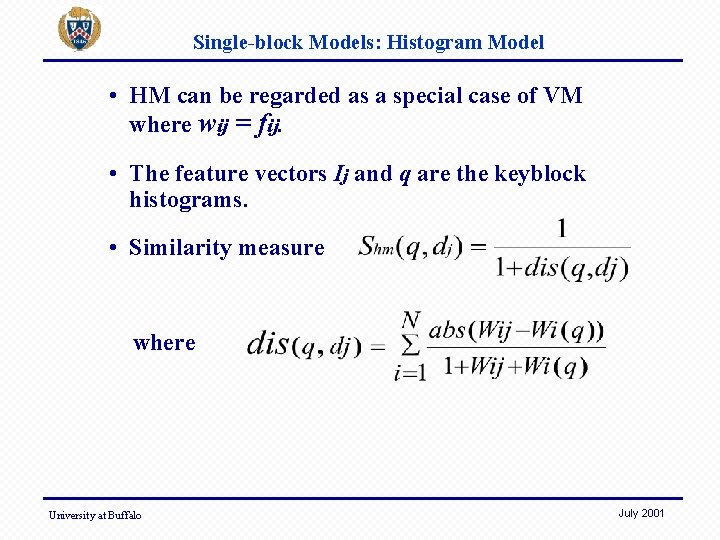

Single-block Models: Histogram Model
• HM can be regarded as a special case of VM where $w_{ij} = f_{ij}$.
• The feature vectors $I_j$ and $q$ are the keyblock histograms.
• Similarity measure: [formula not preserved in the slide text]

N-block Models
• The single-block models focus only on the appearance of individual keyblocks; the correlation among keyblocks is not taken into account.
• We propose N-block models:
  – the correlation of image blocks is the focus;
  – the probabilities of a subset of keyblocks being distributed according to certain spatial configurations are used as feature vectors.

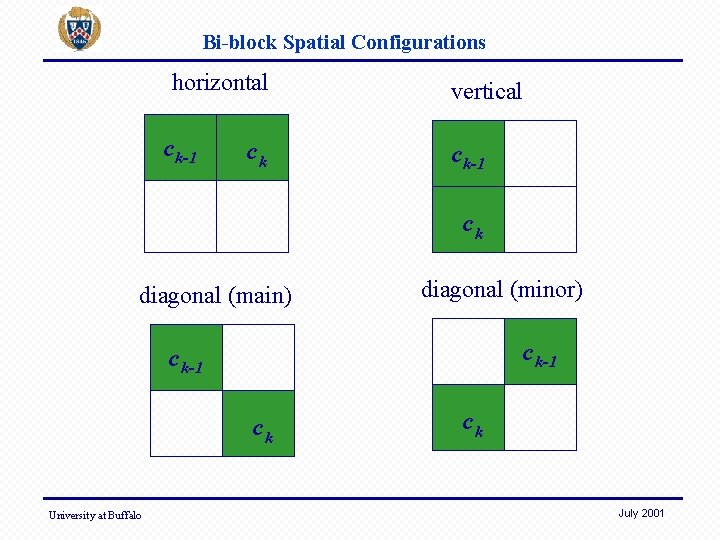

Bi-block Spatial Configurations
[Figure: arrangements of the block pair $c_{k-1}$, $c_k$: horizontal, vertical, diagonal (main), diagonal (minor).]

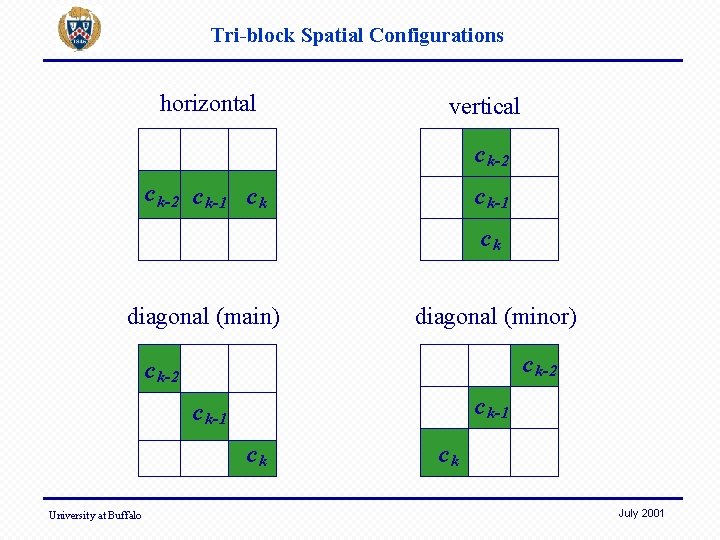

Tri-block Spatial Configurations
[Figure: arrangements of the block triple $c_{k-2}$, $c_{k-1}$, $c_k$: horizontal, vertical, diagonal (main), diagonal (minor).]

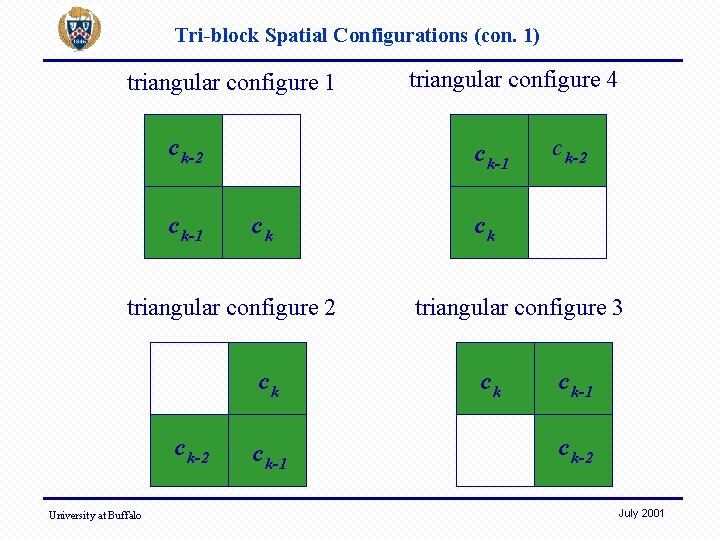

Tri-block Spatial Configurations (cont.)
[Figure: four triangular arrangements of the block triple $c_{k-2}$, $c_{k-1}$, $c_k$: triangular configurations 1, 2, 3, and 4.]

N-block Calculation
• Given an image encoded with a codebook $C$, for a code string $s \in C^*$, where $C^*$ is the set of all possible code strings over $C$, we count the occurrences of $s$ in the image and denote the number by $N(s)$.
• Then a bi-block formula is defined for $c_k, c_{k-1} \in C$, and a tri-block formula for $c_k, c_{k-1}, c_{k-2} \in C$ [formulas not preserved in the slide text].
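Counting bi-block occurrences in an encoded image might look like the sketch below. It treats the first block of each pair as $c_{k-1}$ and its right, lower, or diagonal neighbor as $c_k$, and it normalizes counts by the total number of pairs; the slide formulas are not preserved in this text, so the offsets, the normalization, and the names are assumptions.

```python
from collections import Counter

# (row offset, column offset) from c_{k-1} to c_k for each configuration.
OFFSETS = {
    "horizontal": (0, 1),
    "vertical": (1, 0),
    "diagonal_main": (1, 1),
    "diagonal_minor": (1, -1),
}


def biblock_counts(indices, config="horizontal"):
    """Count N(s) for every code pair s occurring in the given configuration."""
    dr, dc = OFFSETS[config]
    rows, cols = len(indices), len(indices[0])
    counts = Counter()
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[(indices[r][c], indices[r2][c2])] += 1
    return counts


def biblock_feature(indices, config="horizontal"):
    """Relative frequency of each code pair (assumed normalization)."""
    counts = biblock_counts(indices, config)
    total = sum(counts.values())
    return {pair: n / total for pair, n in counts.items()} if total else {}


encoded = [[1, 1, 2], [1, 2, 2]]
print(biblock_counts(encoded, "horizontal"))
print(biblock_feature(encoded, "vertical"))
```

Storing only the pairs that occur keeps the representation sparse, which matters because a dense vector over all pairs has $|C| \cdot |C|$ entries.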

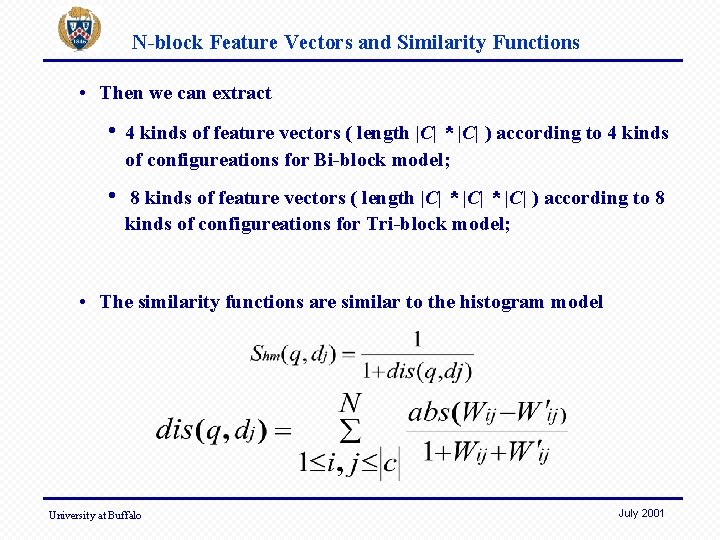

N-block Feature Vectors and Similarity Functions
• Then we can extract
  – 4 kinds of feature vectors (length $|C| \cdot |C|$), one for each of the 4 bi-block configurations;
  – 8 kinds of feature vectors (length $|C| \cdot |C| \cdot |C|$), one for each of the 8 tri-block configurations.
• The similarity functions are similar to that of the histogram model.

Identical N-block Models
• The dimensions of the feature vectors in the bi-block and tri-block models are very large:
  – high-dimensional feature vectors require large storage;
  – efficiency deteriorates because it is hard to apply indexing techniques;
  – retrieval performance suffers because the vectors are too sparse to represent image content well.
• Identical Bi-block Model and Tri-block Model:
  – only capture the spatial correlation between identical keyblocks: the bi-block calculation requires $c_k = c_{k-1}$, and the tri-block calculation requires $c_k = c_{k-1} = c_{k-2}$;
  – reduce the dimension of the feature vectors to $|C|$.

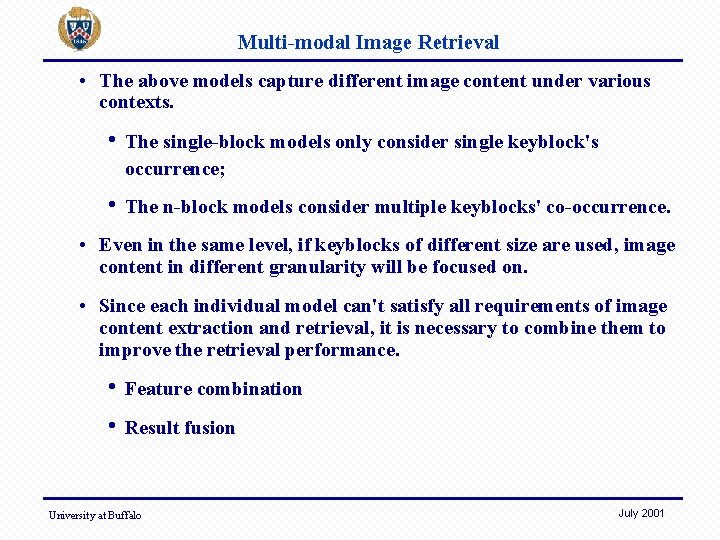

Multi-modal Image Retrieval
• The above models capture different image content under various contexts:
  – the single-block models consider only the occurrence of single keyblocks;
  – the n-block models consider the co-occurrence of multiple keyblocks;
  – even at the same level, keyblocks of different sizes focus on image content at different granularities.
• Since no individual model can satisfy all requirements of image content extraction and retrieval, it is necessary to combine them to improve retrieval performance:
  – feature combination;
  – result fusion.

Feature Combination Model
• In the feature extraction phase, for each image, combine the feature vectors generated by different models into one comprehensive feature vector.
• Feature vectors, model, and combination model: [formulas not preserved in the slide text]

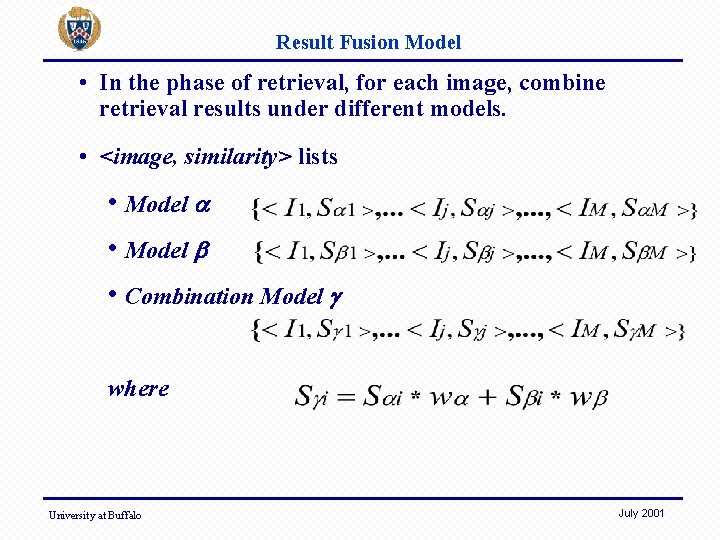

Result Fusion Model
• In the retrieval phase, for each image, combine the retrieval results obtained under different models.
• <image, similarity> lists, model, and combination model: [formulas not preserved in the slide text]

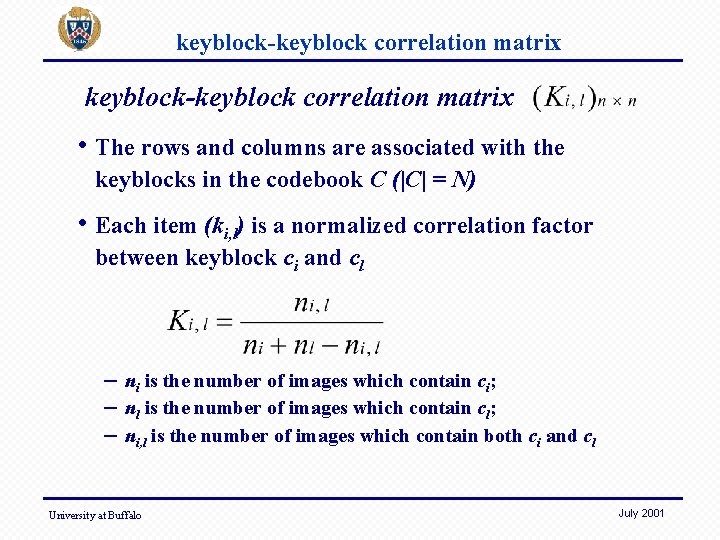

Keyblock-keyblock correlation matrix
• The rows and columns are associated with the keyblocks in the codebook $C$ ($|C| = N$).
• Each item $k_{i,l}$ is a normalized correlation factor between keyblocks $c_i$ and $c_l$ [formula not preserved in the slide text], where
  – $n_i$ is the number of images that contain $c_i$;
  – $n_l$ is the number of images that contain $c_l$;
  – $n_{i,l}$ is the number of images that contain both $c_i$ and $c_l$.
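A correlation matrix built from these three counts could be computed as sketched below. Because the slide's own formula is not preserved in this text, the example assumes the normalized association factor commonly used for keyword correlation in text IR, $k_{i,l} = n_{i,l} / (n_i + n_l - n_{i,l})$; that formula and the function name are assumptions.

```python
from itertools import combinations


def correlation_matrix(images, n):
    """images: iterable of sets of keyblock indices; n: codebook size."""
    count = [0] * n                      # n_i
    both = [[0] * n for _ in range(n)]   # n_{i,l}
    for blocks in images:
        present = sorted(set(blocks))
        for i in present:
            count[i] += 1
            both[i][i] += 1
        for i, l in combinations(present, 2):
            both[i][l] += 1
            both[l][i] += 1
    k = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for l in range(n):
            denom = count[i] + count[l] - both[i][l]
            if denom:
                # Assumed normalization: n_il / (n_i + n_l - n_il).
                k[i][l] = both[i][l] / denom
    return k


images = [{0, 1}, {0}, {1, 2}]
for row in correlation_matrix(images, 3):
    print(row)
```

Under this normalization every keyblock that occurs at all has correlation 1 with itself, and two keyblocks that never share an image have correlation 0.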

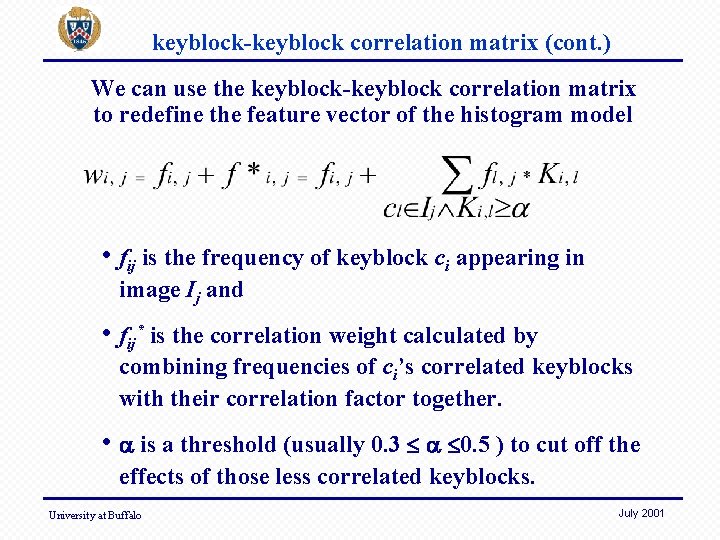

Keyblock-keyblock correlation matrix (cont.)
We can use the keyblock-keyblock correlation matrix to redefine the feature vector of the histogram model:
• $f_{ij}$ is the frequency of keyblock $c_i$ appearing in image $I_j$;
• $f^*_{ij}$ is the correlation weight, calculated by combining the frequencies of the keyblocks correlated with $c_i$ with their correlation factors;
• a threshold (usually 0.3 to 0.5) cuts off the effect of less correlated keyblocks.

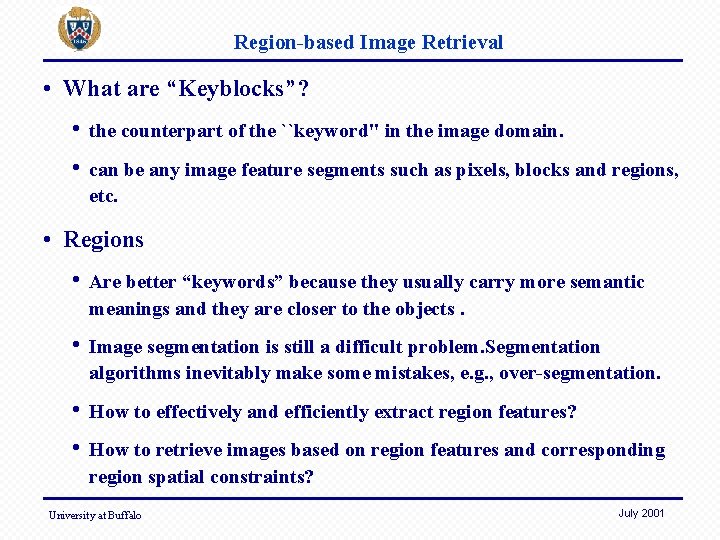

Region-based Image Retrieval
• What are “keyblocks”?
  – The counterpart of the “keyword” in the image domain.
  – They can be any image feature segments, such as pixels, blocks, and regions.
• Regions
  – are better “keywords” because they usually carry more semantic meaning and are closer to objects;
  – but image segmentation is still a difficult problem, and segmentation algorithms inevitably make mistakes such as over-segmentation.
• How can region features be extracted effectively and efficiently?
• How can images be retrieved based on region features and the corresponding spatial constraints among regions?

Region-based Image Retrieval
Basic steps:
• Images are segmented into several regions.
• Visual features are extracted for each region.
• The image content is represented by the set of region features.
• At query time, the query image is segmented into several regions, and the features of one or more regions are matched against the region features that represent the images in the database.

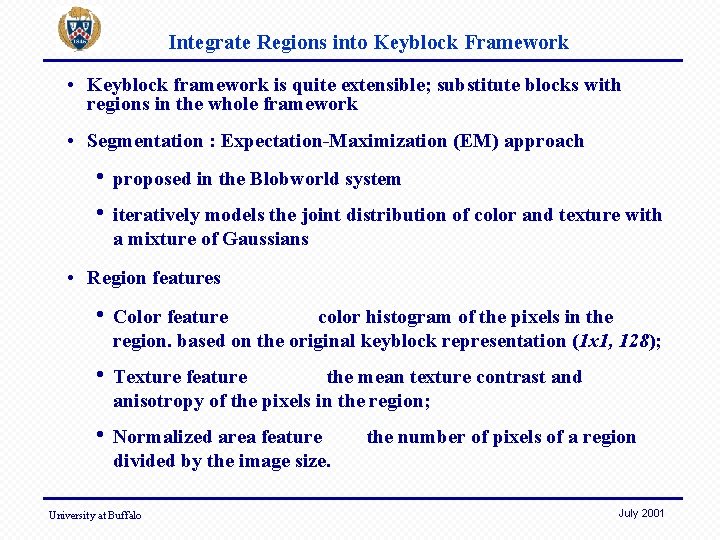

Integrate Regions into Keyblock Framework
• The keyblock framework is quite extensible: substitute regions for blocks throughout the framework.
• Segmentation: Expectation-Maximization (EM) approach
  – proposed in the Blobworld system;
  – iteratively models the joint distribution of color and texture with a mixture of Gaussians.
• Region features
  – Color feature: color histogram of the pixels in the region, based on the original keyblock representation (1 x 1, 128).
  – Texture feature: the mean texture contrast and anisotropy of the pixels in the region.
  – Normalized area feature: the number of pixels of a region divided by the image size.

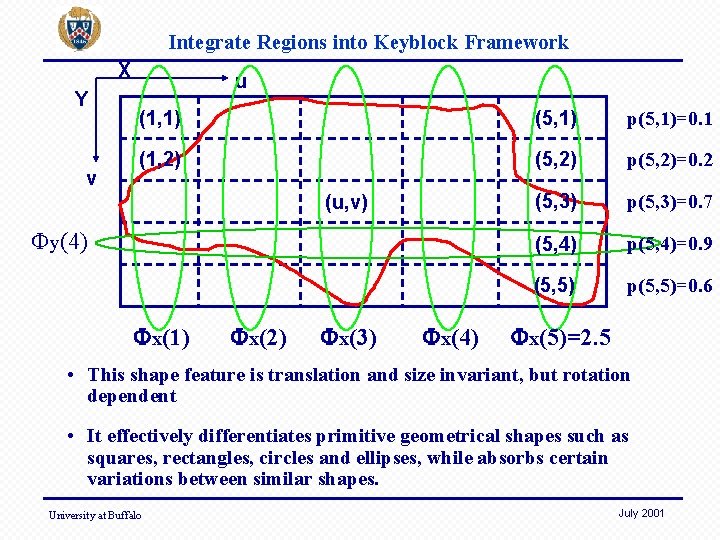

Integrate Regions into Keyblock Framework
• Shape features: X-axis and Y-axis profiles (10-dimensional feature vector)
  – (1) Find the minimum bounding box $B$ of the region.
  – (2) Subdivide $B$ equally along both the X and Y axes into 5 intervals.
  – (3) For each cell $(u, v)$ obtained from this subdivision, calculate the fraction $p(u, v)$ of cell $(u, v)$ that is covered by the region.
  – (4) Define the profile of the region along the X-axis as a 5-element array $x$ with $i$-th element $x(i) = \sum_{v=1}^{5} p(i, v)$.
  – (5) Similarly, define the profile of the region along the Y-axis as a 5-element array $y$ with $j$-th element $y(j) = \sum_{u=1}^{5} p(u, j)$.
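The five steps can be sketched for a region given as a binary mask. The sketch assumes that $p(u, v)$ is the fraction of a cell's pixels that belong to the region, that pixels are assigned to cells by integer division of their offset within the bounding box, and that the bounding box is at least 5 pixels wide and high; the function name and the 0-based indexing are also assumptions.

```python
def shape_profiles(mask, n=5):
    """Return the X-axis and Y-axis profiles of the region marked by 1s."""
    pts = [(c, r) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not pts:
        raise ValueError("mask contains no region pixels")
    # Step 1: minimum bounding box of the region.
    x0, x1 = min(c for c, _ in pts), max(c for c, _ in pts)
    y0, y1 = min(r for _, r in pts), max(r for _, r in pts)
    w, h = x1 - x0 + 1, y1 - y0 + 1
    covered = [[0] * n for _ in range(n)]   # region pixels per cell (u, v)
    total = [[0] * n for _ in range(n)]     # all pixels per cell (u, v)
    # Steps 2 and 3: subdivide the box into n x n cells and measure coverage.
    for r in range(y0, y1 + 1):
        for c in range(x0, x1 + 1):
            u, v = (c - x0) * n // w, (r - y0) * n // h
            total[u][v] += 1
            covered[u][v] += 1 if mask[r][c] else 0
    p = [[covered[u][v] / total[u][v] if total[u][v] else 0.0
          for v in range(n)] for u in range(n)]
    # Steps 4 and 5: sum coverage along each axis.
    x = [sum(p[u][v] for v in range(n)) for u in range(n)]
    y = [sum(p[u][v] for u in range(n)) for v in range(n)]
    return x, y


# An L-shaped region: a full left strip plus a full bottom strip.
mask = [[1 if (c < 2 or r >= 8) else 0 for c in range(10)] for r in range(10)]
print(shape_profiles(mask))
```

Because everything is measured relative to the bounding box, shifting or uniformly scaling the region leaves the two profiles unchanged, while rotating it does not.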

Integrate Regions into Keyblock Framework
[Figure: a region inside its bounding box, subdivided into 5 x 5 cells $(u, v)$ along the X and Y axes. Example for column $u = 5$: $p(5,1) = 0.1$, $p(5,2) = 0.2$, $p(5,3) = 0.7$, $p(5,4) = 0.9$, $p(5,5) = 0.6$, so $x(5) = 2.5$. The profile entries $x(1), \dots, x(5)$ and $y(4)$ are marked.]
• This shape feature is translation and size invariant, but rotation dependent.
• It effectively differentiates primitive geometric shapes such as squares, rectangles, circles, and ellipses, while absorbing certain variations between similar shapes.

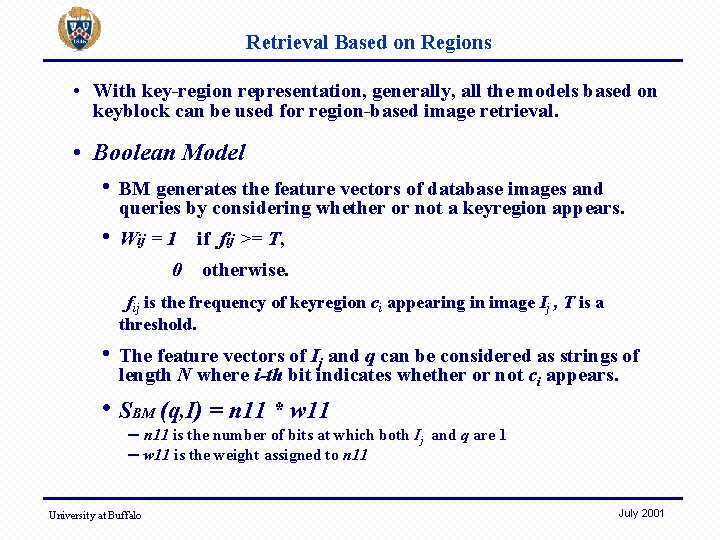

Retrieval Based on Regions
• With the key-region representation, all the keyblock-based models can generally be used for region-based image retrieval.
• Boolean Model
  – BM generates the feature vectors of database images and queries by considering whether or not a keyregion appears.
  – $w_{ij} = 1$ if $f_{ij} \ge T$, and $0$ otherwise, where $f_{ij}$ is the frequency of keyregion $c_i$ in image $I_j$ and $T$ is a threshold.
  – The feature vectors of $I_j$ and $q$ can be considered bit strings of length $N$, where the $i$-th bit indicates whether or not $c_i$ appears.
  – $S_{BM}(q, I_j) = n_{11} w_{11}$, where $n_{11}$ is the number of bits at which both $I_j$ and $q$ are 1, and $w_{11}$ is the weight assigned to $n_{11}$.

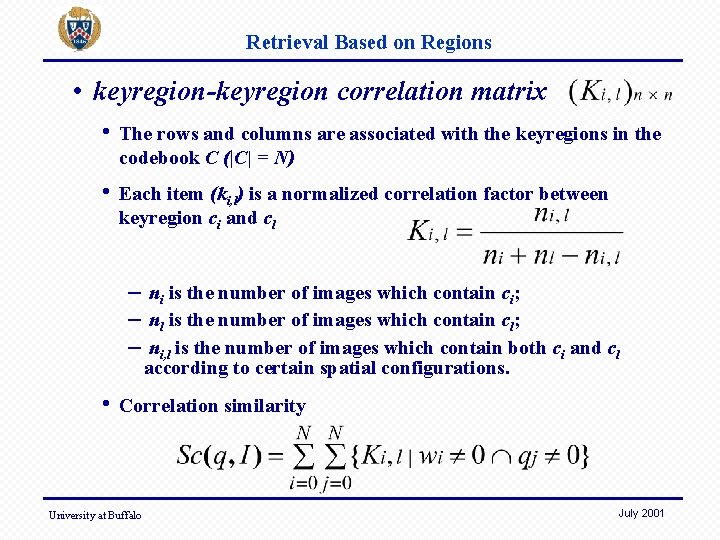

Retrieval Based on Regions
• Keyregion-keyregion correlation matrix
  – The rows and columns are associated with the keyregions in the codebook $C$ ($|C| = N$).
  – Each item $k_{i,l}$ is a normalized correlation factor between keyregions $c_i$ and $c_l$, where $n_i$ is the number of images that contain $c_i$, $n_l$ is the number of images that contain $c_l$, and $n_{i,l}$ is the number of images that contain both $c_i$ and $c_l$ according to certain spatial configurations.
• Correlation similarity: [formula not preserved in the slide text]

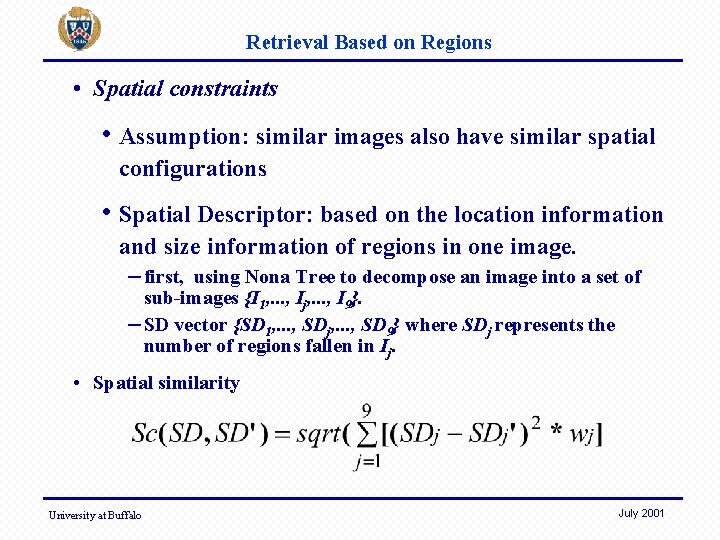

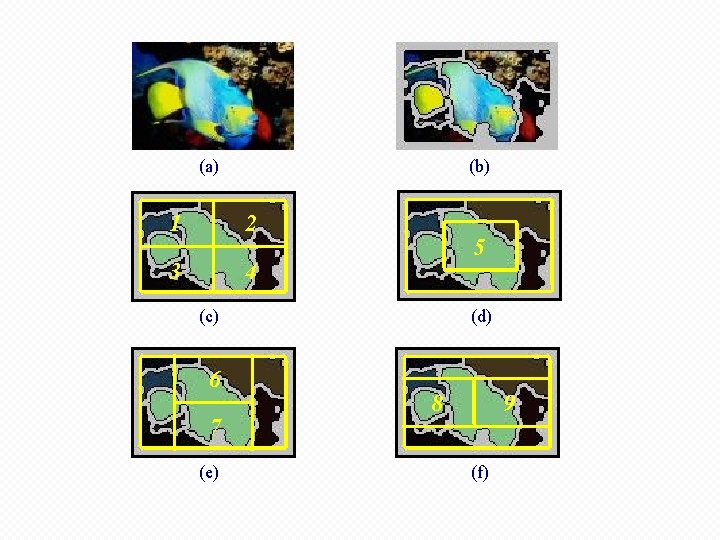

Retrieval Based on Regions
• Spatial constraints
  – Assumption: similar images also have similar spatial configurations.
  – Spatial descriptor (SD): based on the location and size information of the regions in an image.
  – First, a Nona Tree is used to decompose an image into a set of sub-images $\{I_1, \dots, I_j, \dots, I_9\}$.
  – The SD vector is $\{SD_1, \dots, SD_j, \dots, SD_9\}$, where $SD_j$ is the number of regions that fall in $I_j$.
• Spatial similarity: [formula not preserved in the slide text]

[Figure: panels (a) to (f), with sub-images numbered 1 to 9.]

Experiments
• Purpose: evaluate the effectiveness of the keyblock approach.
• General procedure:
  – Set up a test image database.
  – Set up a query set with ground truth.
  – After the retrieval tasks are performed, calculate precision and recall for each query based on the ground truth, then average precision and recall over all queries.
  – Draw precision-recall curves to compare the effectiveness of different approaches.
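The evaluation step can be sketched as follows, assuming each query returns a ranked list of image identifiers and the ground truth is a set of relevant identifiers per query. The function names and the dictionary layout are illustrative assumptions.

```python
def precision_recall_at_k(ranked, relevant, k):
    """Precision and recall over the first k returned images."""
    hits = sum(1 for image_id in ranked[:k] if image_id in relevant)
    return hits / k, hits / len(relevant)


def average_precision_recall(results, ground_truth, k):
    """Average precision and recall at k over all queries.

    results: {query: ranked list of image ids}
    ground_truth: {query: set of relevant image ids}
    """
    pairs = [precision_recall_at_k(results[q], ground_truth[q], k) for q in results]
    n = len(pairs)
    return sum(p for p, _ in pairs) / n, sum(r for _, r in pairs) / n


results = {"q1": ["a", "b", "c", "d"], "q2": ["x", "y", "z", "w"]}
truth = {"q1": {"a", "c", "e"}, "q2": {"x", "y"}}
for k in (1, 2, 4):
    print(k, average_precision_recall(results, truth, k))
```

Evaluating at each k from 1 up to the number of returned images gives the points of a precision-recall curve.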

Experiments on Test Databases
• CDB (web color images)
  – snapshot of images on the internet
  – 500 images, 41 groups, each group with 10 or 20 images
  – 41 training images are randomly selected
  – query set: the whole database
  – color feature techniques: histogram and color coherence vector (CCV)
  – average precision and recall are calculated for 1 to 40 returned images
• TDB (Brodatz texture images)
  – texture images
  – 2240 images, 112 groups, each group with 20 images
  – 112 training images are randomly selected
  – query set: the whole database
  – texture feature techniques: Haar and Daubechies wavelets
  – average precision and recall are calculated for 1 to 40 returned images

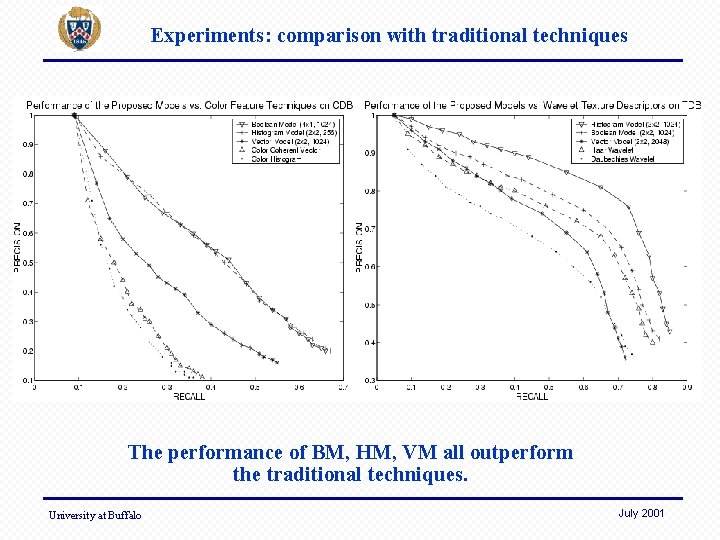

Experiments: comparison with traditional techniques
BM, HM, and VM all outperform the traditional techniques.

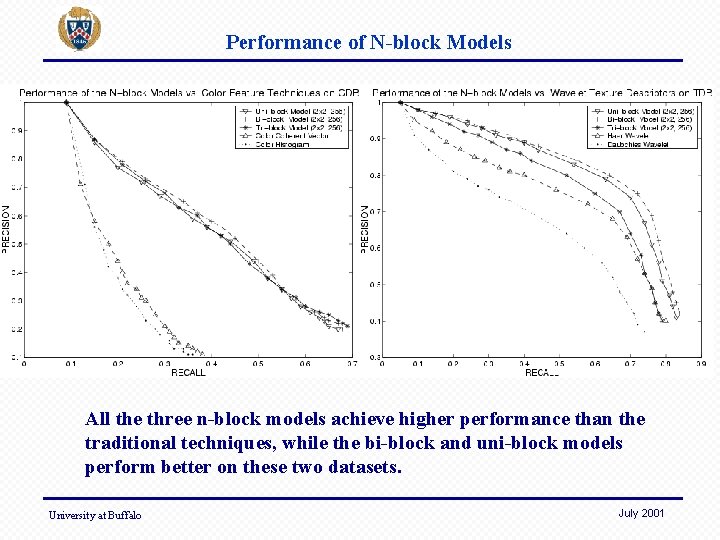

Performance of N-block Models
All three n-block models achieve higher performance than the traditional techniques, while the bi-block and uni-block models perform better on these two datasets.

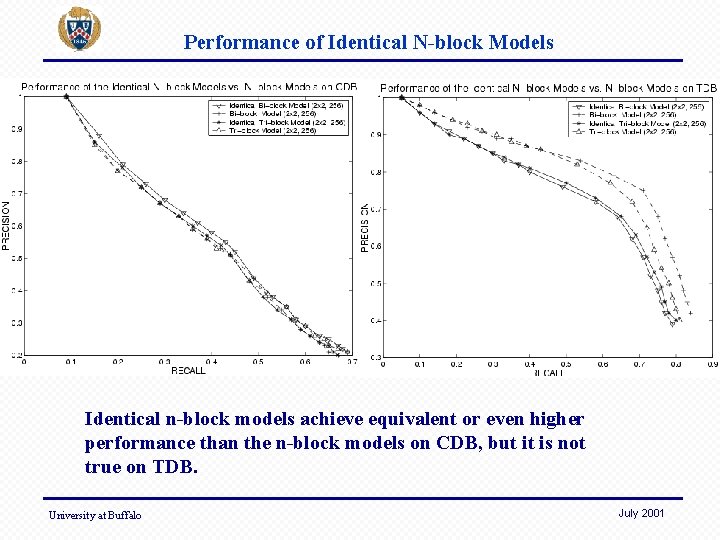

Performance of Identical N-block Models
Identical n-block models achieve equivalent or even higher performance than the n-block models on CDB, but this does not hold on TDB.

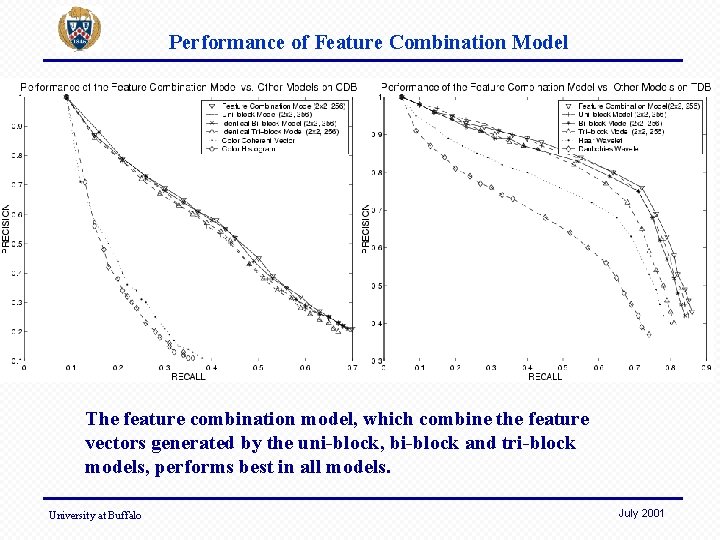

Performance of Feature Combination Model
The feature combination model, which combines the feature vectors generated by the uni-block, bi-block, and tri-block models, performs best among all models.

Experiments on COREL
• 31646 color images
  – size 120 x 80 or 80 x 120
  – 939 training images are randomly selected to generate the keyblocks
• Query set
  – 6895 query images, categorized into 82 groups
• Average precision and recall are calculated for 1 to 100 returned images.

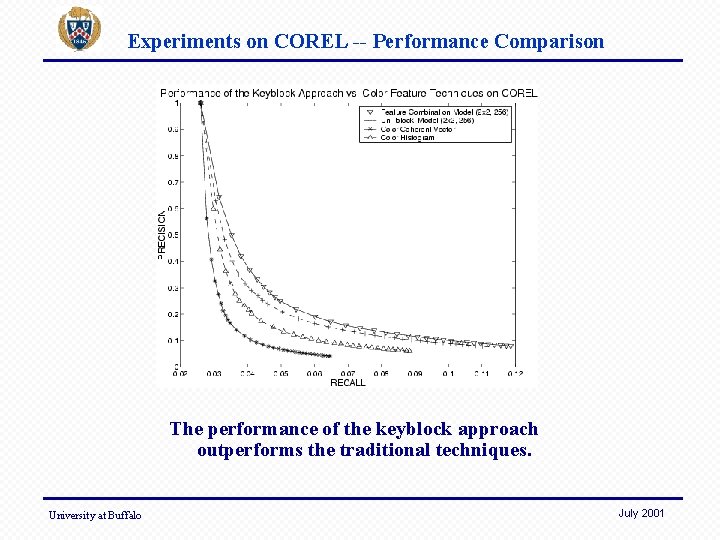

Experiments on COREL: Performance Comparison
The keyblock approach outperforms the traditional techniques.

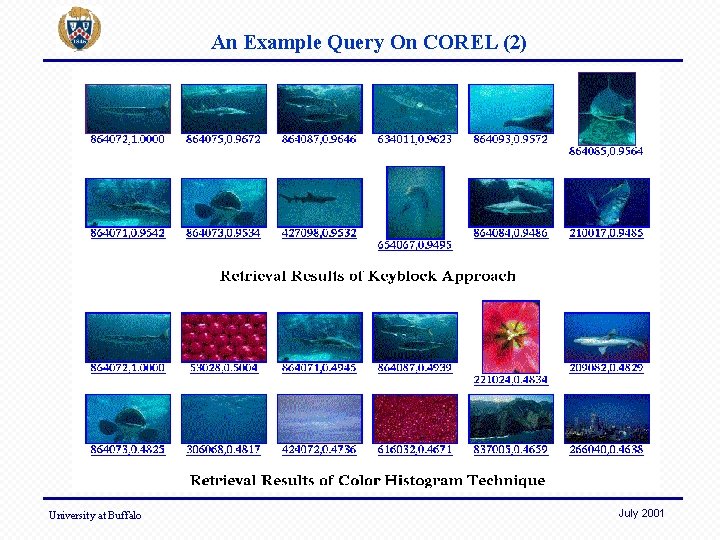

An Example Query on COREL (2)

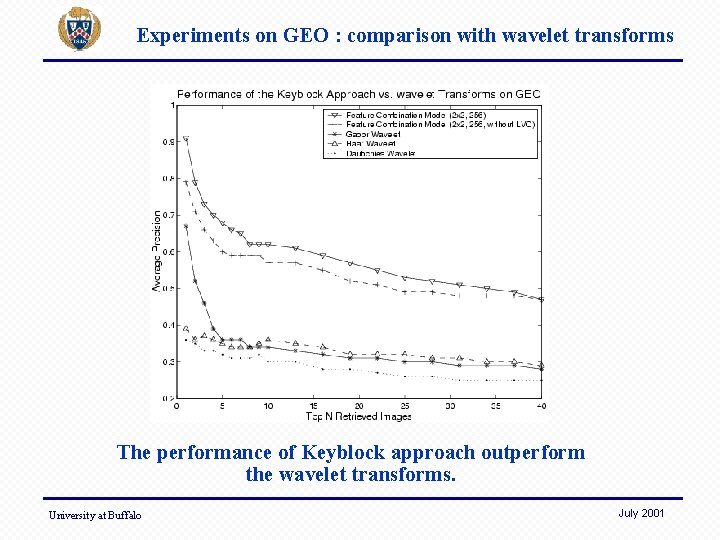

Experiments on GEO
• Database GEO
  – air photo images of the Buffalo area provided by NCGIA at Buffalo
  – 405 images
  – 46 training images are used to generate the keyblocks
• Query set
  – 33 query images, which are 32 x 32 sub-images chosen from the images in the database by GIS experts from NCGIA at Buffalo
  – the query images are divided into 5 categories: agriculture, grass, forest, residential area, and water

Experiments on GEO: comparison with wavelet transforms
The keyblock approach outperforms the wavelet transforms.

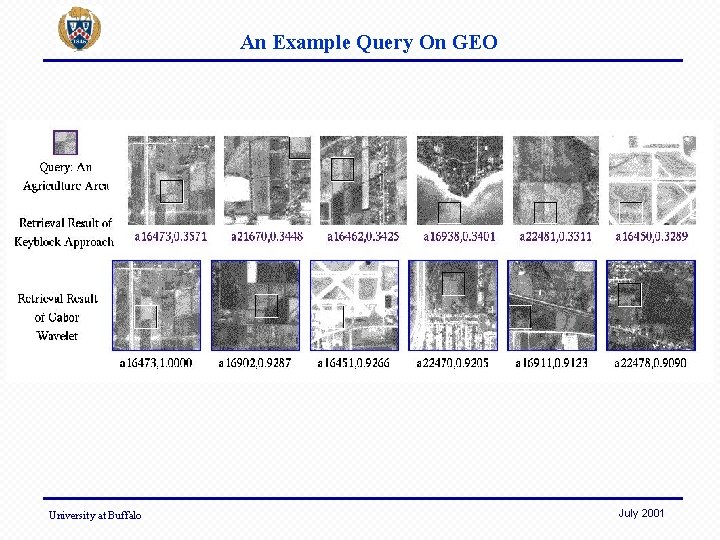

An Example Query on GEO

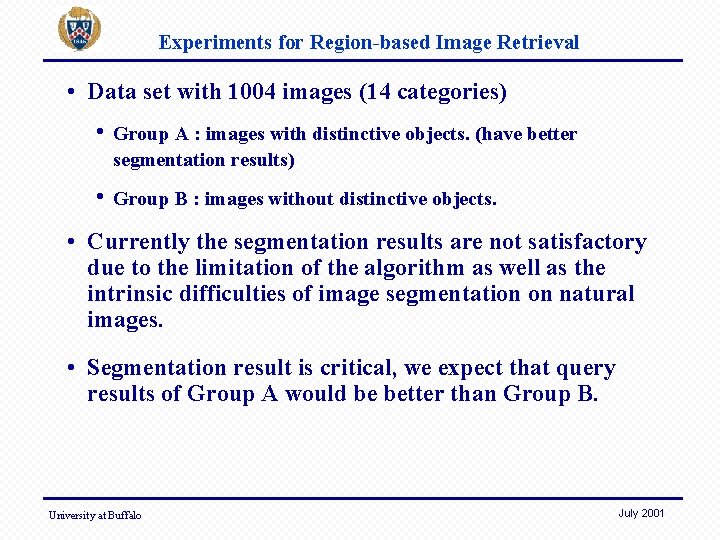

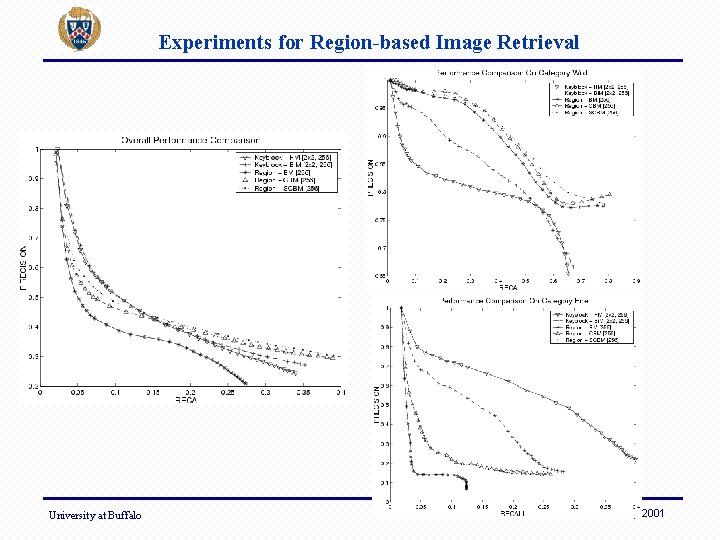

Experiments for Region-based Image Retrieval
• Data set with 1004 images (14 categories)
  – Group A: images with distinctive objects (these have better segmentation results).
  – Group B: images without distinctive objects.
• Currently the segmentation results are not satisfactory, owing to the limitations of the algorithm as well as the intrinsic difficulty of segmenting natural images.
• Because the segmentation result is critical, we expect the query results for Group A to be better than those for Group B.

Experiments for Region-based Image Retrieval

Conclusion
• Keyblock: an approach for content-based image retrieval
  – exploits analogous text-based IR techniques in the image domain;
  – provides methods for extracting comprehensive image features that are more semantics-related than existing lower-level features.
• Experimental results have demonstrated that the keyblock method is superior
  – to the color histogram and color coherence vector approaches, which favor color features;
  – and to the Gabor, Haar, and Daubechies wavelet texture approaches, which favor texture features.
