
How to review DMPs (using review rubrics)

Myriam Mertens, Open Access Week Belgium, 20 October 2020

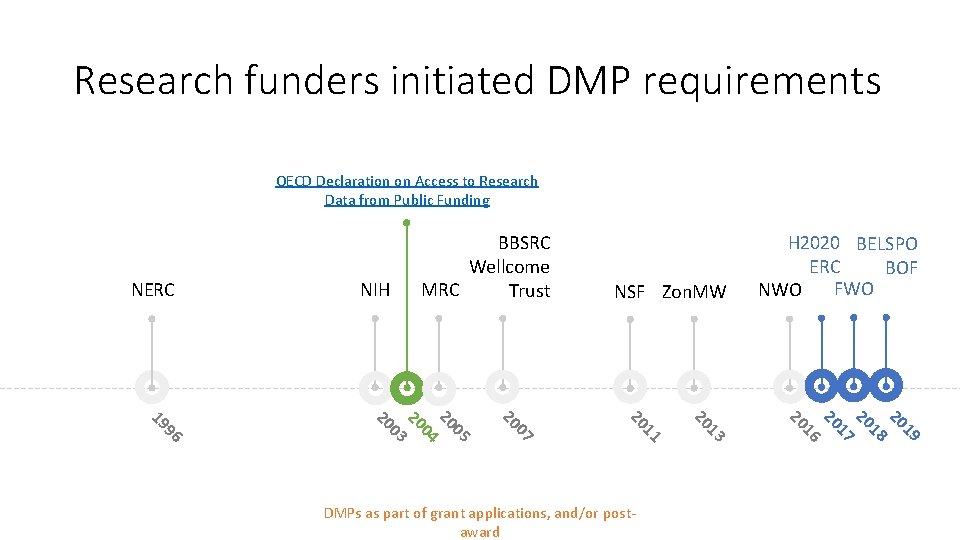

Research funders initiated DMP requirements

[Timeline figure, with markers at 1996, 2003, 2004, 2005, 2007, 2011, 2013, 2016, 2017, 2018 and 2019. Labels: OECD Declaration on Access to Research Data from Public Funding, NERC, NIH, BBSRC, Wellcome Trust, MRC, NSF, ZonMw, H2020, BELSPO, ERC, BOF, FWO, NWO]

DMPs as part of grant applications, and/or post-award

How about DMP quality?

- Often broad, open-ended questions
- Perception that poor DMP quality has few consequences can undermine policies
- Some funders assess DMPs, others ‘outsource’ DMP evaluation

Image: "BUREAUCRACY" by Lina Kusaite, licensed under CC BY-NC-ND 4.0

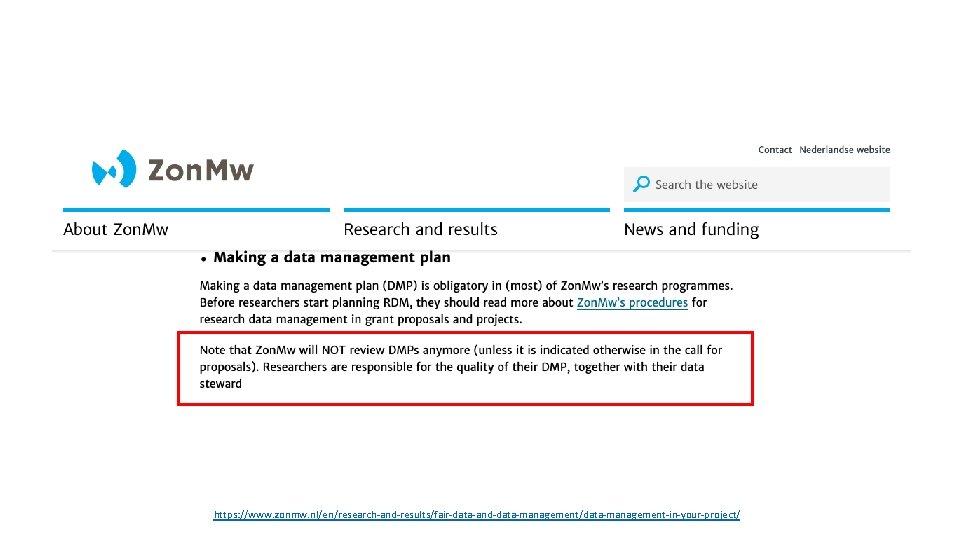

https://www.zonmw.nl/en/research-and-results/fair-data-and-data-management/data-management-in-your-project/

Institutions stepping up to offer support

- Recruiting RDM support staff
- RDM advocacy & training
- Developing DMP feedback services

(P)reviewing DMPs

- Requires significant effort
- Requires the right knowledge & skills
  - specialist input sometimes required
- Also an opportunity to improve your own RDM competences!

What does a good DMP look like?

- No absolute right answers
- Look for evidence that the researcher has given proper thought to the issues
- Some things to pay attention to:
  - Plan is appropriate? (e.g. relevant standards, alignment with disciplinary norms, use of (local) infrastructure/support services)
  - Feasible to implement?
  - Sufficiently detailed information?
  - Choices made are properly justified?
  - Advice has been sought where needed?

From Sarah Jones (2016), Reviewing Data Management Plans. CC-BY

Tip: use a DMP review rubric

- Concept initially developed in the DART project (US)
- “Analytic rubric to standardise the review of DMPs”
- Matrix with performance criteria + performance levels

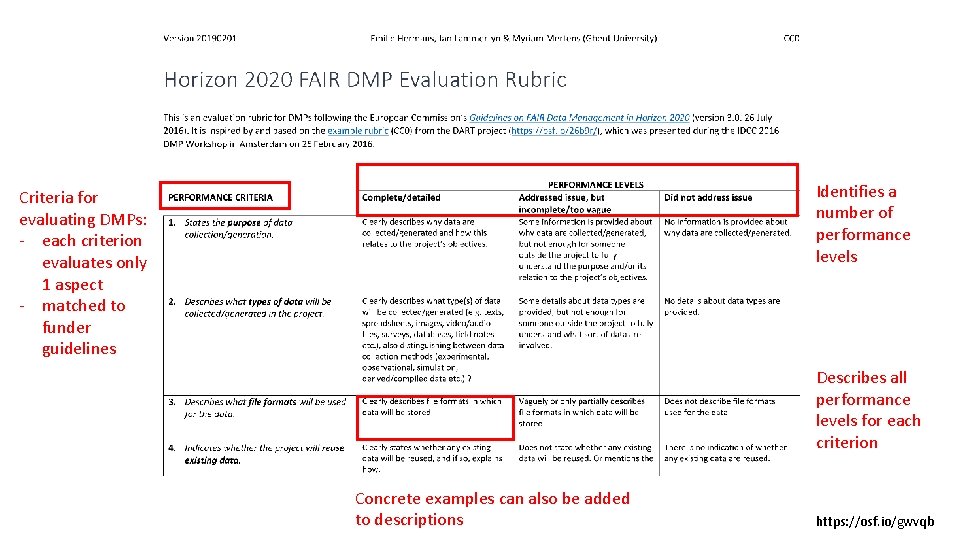

- Identifies a number of performance levels
- Criteria for evaluating DMPs:
  - each criterion evaluates only 1 aspect
  - matched to funder guidelines
- Describes all performance levels for each criterion
- Concrete examples can also be added to descriptions

https://osf.io/gwvqb

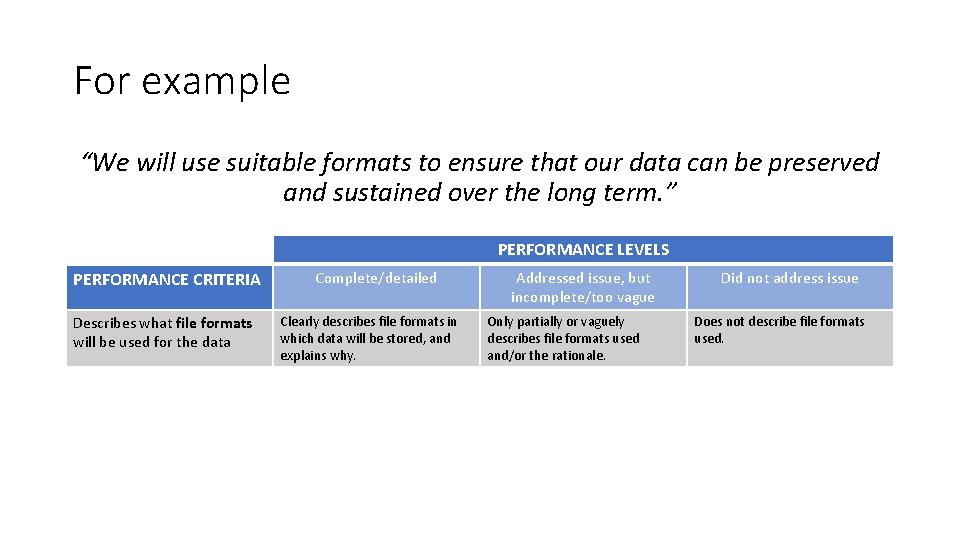

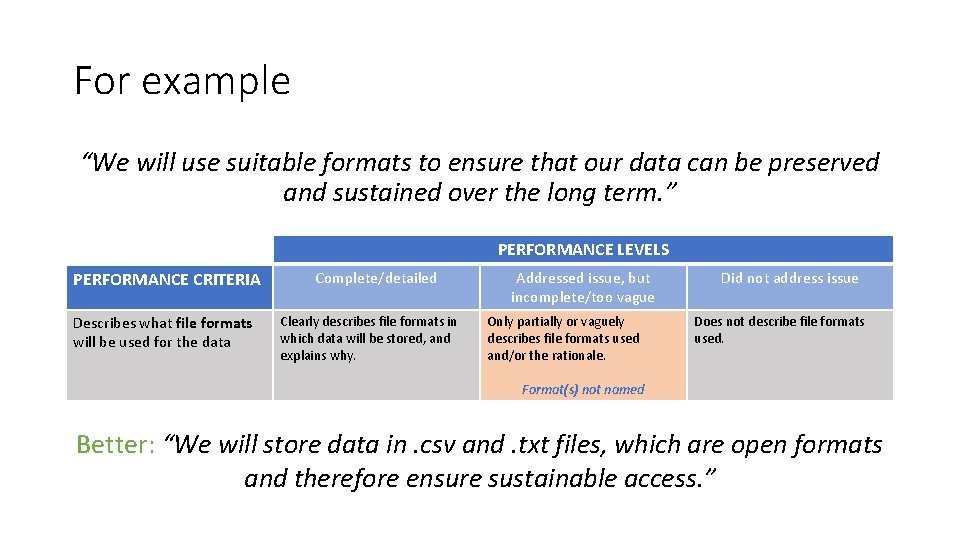


For example

“We will use suitable formats to ensure that our data can be preserved and sustained over the long term.”

| Performance criteria | Complete/detailed | Addressed issue, but incomplete/too vague | Did not address issue |
| --- | --- | --- | --- |
| Describes what file formats will be used for the data | Clearly describes file formats in which data will be stored, and explains why. | Only partially or vaguely describes file formats used and/or the rationale. | Does not describe file formats used. |

Format(s) not named

Better: “We will store data in .csv and .txt files, which are open formats and therefore ensure sustainable access.”
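The rubric row above can also be written down as a small data structure, which may help when drafting or sharing a rubric. The following is only a minimal sketch: the names `LEVELS`, `Criterion`, `FILE_FORMATS` and `feedback` are made up for illustration and are not part of the DART or UGent rubrics. Only the level names and descriptors are taken from the slide.

```python
from dataclasses import dataclass

# Performance levels as named on the slide, ordered from best to worst.
LEVELS = (
    "Complete/detailed",
    "Addressed issue, but incomplete/too vague",
    "Did not address issue",
)


@dataclass(frozen=True)
class Criterion:
    """One rubric row (hypothetical structure): one aspect, one descriptor per level."""

    name: str
    descriptors: dict  # maps each level in LEVELS to its description

    def __post_init__(self):
        # A rubric describes all performance levels for each criterion.
        missing = [level for level in LEVELS if level not in self.descriptors]
        if missing:
            raise ValueError(f"missing descriptors for: {missing}")


# The file-format criterion, with the descriptors shown on the slide.
FILE_FORMATS = Criterion(
    name="Describes what file formats will be used for the data",
    descriptors={
        LEVELS[0]: "Clearly describes file formats in which data will be "
                   "stored, and explains why.",
        LEVELS[1]: "Only partially or vaguely describes file formats used "
                   "and/or the rationale.",
        LEVELS[2]: "Does not describe file formats used.",
    },
)


def feedback(criterion, level):
    """Return a feedback line for the level a reviewer assigned."""
    if level not in LEVELS:
        raise ValueError(f"unknown performance level: {level}")
    return f"{criterion.name}: {level}. {criterion.descriptors[level]}"
```

The reviewer still decides which level applies. The structure only keeps the descriptors consistent across reviews and refuses a criterion that leaves a level undescribed.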

Getting started

- Best to use 1 rubric per DMP template
- Guide on how to develop a rubric: https://osf.io/gwvqb
- Publicly available rubrics from UGent (CC0):
  - FWO DMP rubric
  - BELSPO DMP rubric
  - Horizon 2020 FAIR DMP rubric
  - ERC DMP rubric

Some final remarks

- Review rubrics may also be used for self-assessment
- Collective DMP review practice is a good training exercise
- Review rubrics may also be used to analyse DMPs and inform development of (institutional) RDM services
  - for which topics are performance levels high/low?
  - what services could be offered to target areas of lower performance?
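As a rough illustration of the last point, rubric outcomes from several reviewed DMPs could be tallied per criterion to see where performance is low. This is a sketch under assumptions: the function names, the criterion labels and the three example reviews are invented for illustration and do not come from real DMPs or from any published rubric.

```python
from collections import Counter, defaultdict


def tally_levels(reviews):
    """Count, per criterion, how often each performance level was assigned.

    `reviews` is an iterable of dicts mapping criterion name -> assigned level.
    """
    tally = defaultdict(Counter)
    for review in reviews:
        for criterion, level in review.items():
            tally[criterion][level] += 1
    return tally


def share_at_level(tally, level):
    """Return, per criterion, the fraction of reviews that received `level`."""
    return {
        criterion: counts[level] / sum(counts.values())
        for criterion, counts in tally.items()
    }


# Made-up example data: three reviewed DMPs, two hypothetical criteria.
EXAMPLE_REVIEWS = [
    {"File formats": "Complete/detailed",
     "Data sharing": "Did not address issue"},
    {"File formats": "Addressed issue, but incomplete/too vague",
     "Data sharing": "Did not address issue"},
    {"File formats": "Complete/detailed",
     "Data sharing": "Complete/detailed"},
]

if __name__ == "__main__":
    shares = share_at_level(tally_levels(EXAMPLE_REVIEWS), "Did not address issue")
    # Criteria with the highest share of unaddressed issues come first.
    for criterion, share in sorted(shares.items(), key=lambda kv: -kv[1]):
        print(f"{criterion}: {share:.0%} did not address the issue")
```

Criteria that often end up at the lowest level would be candidates for extra training or guidance.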

Thanks! Any questions?
