
How to Protect Big Data in a Containerized Environment § Thomas Phelan, Chief Architect, BlueData § @tapbluedata

Outline § Securing a Big Data Environment § Data Protection § Transparent Data Encryption in a Containerized Environment § Takeaways

In the Beginning … § Hadoop was used to process public web data - No compelling need for security • No user or service authentication • No data security

Then Hadoop Became Popular § Security is important

Layers of Security in Hadoop § Access § Authentication § Authorization § Data Protection § Auditing § Policy (protect from human error)

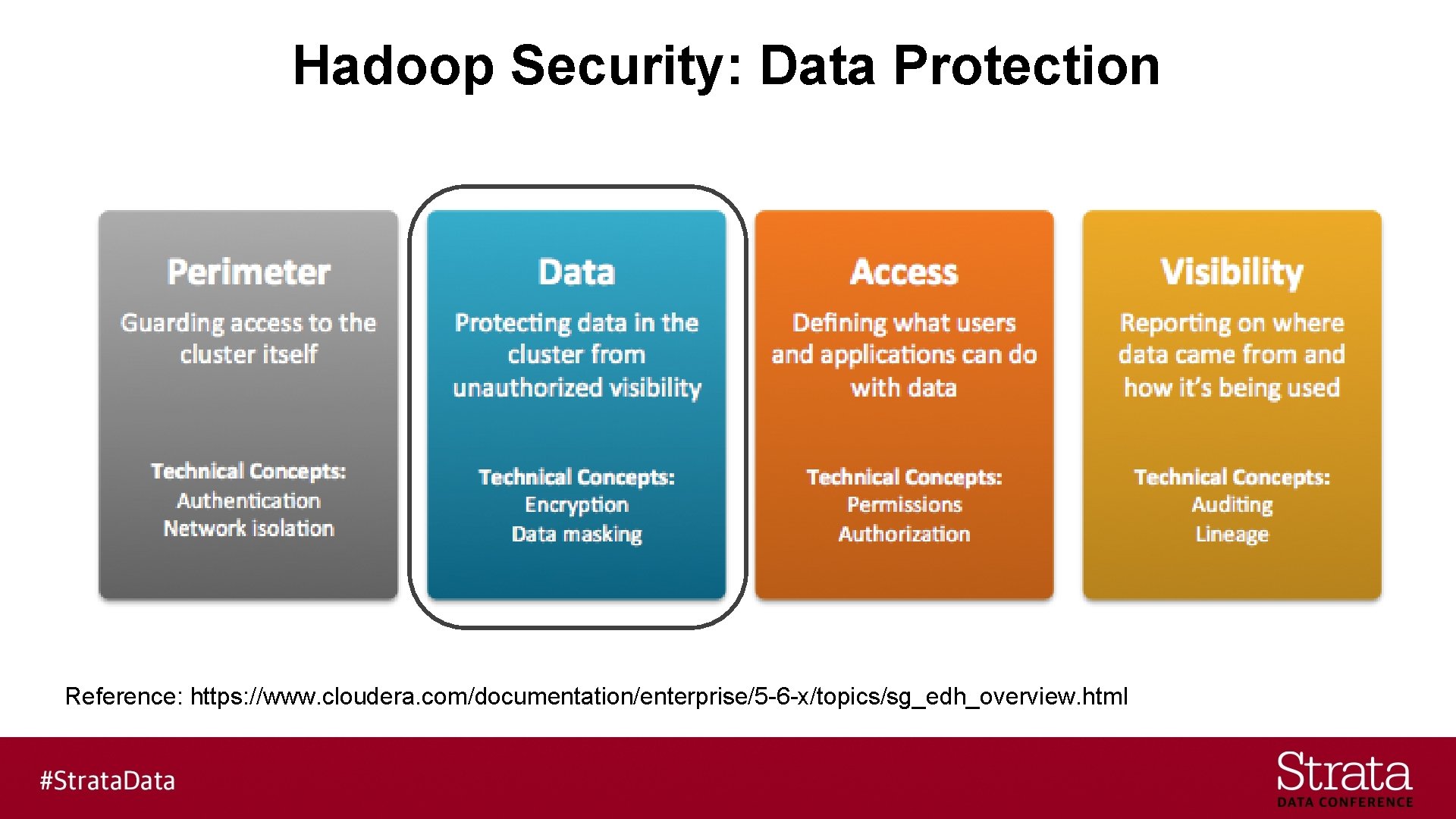

Hadoop Security: Data Protection § Reference: https://www.cloudera.com/documentation/enterprise/5-6-x/topics/sg_edh_overview.html

Focus on Data Security § Confidentiality - Confidentiality is lost when data is accessed by someone not authorized to do so § Integrity - Integrity is lost when data is modified in unexpected ways § Availability - Availability is lost when data is erased or becomes inaccessible § Reference: https://www.us-cert.gov/sites/default/files/publications/infosecuritybasics.pdf

Hadoop Distributed File System (HDFS) § Data Security Features - Access Control - Data Encryption - Data Replication

Access Control § Simple - Identity determined by host operating system § Kerberos - Identity determined by Kerberos credentials - One realm for both compute and storage - Required for HDFS Transparent Data Encryption

Data Encryption § Transforming data

Data Replication § 3-way replication - Can survive any 2 failures § Erasure Coding - Can survive more than 2 failures, depending on how many parity blocks are configured
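The trade-off between the two schemes on this slide can be made concrete with a little arithmetic. The sketch below is illustrative only: `RedundancyScheme` is a hypothetical helper, and the RS(6,3) and RS(10,4) layouts are assumptions chosen as commonly cited Reed-Solomon configurations, not figures taken from the talk.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RedundancyScheme:
    """Hypothetical model: data units plus redundant units per stripe."""
    name: str
    data_units: int
    redundant_units: int

    @property
    def tolerated_failures(self) -> int:
        # Any `redundant_units` units can be lost without losing data.
        return self.redundant_units

    @property
    def storage_overhead(self) -> float:
        # Raw bytes stored per byte of user data.
        return (self.data_units + self.redundant_units) / self.data_units


# 3-way replication: one original plus two extra copies.
three_way = RedundancyScheme("3-way replication", data_units=1, redundant_units=2)
# Assumed erasure-coding layouts (data blocks, parity blocks).
rs_6_3 = RedundancyScheme("Reed-Solomon RS(6,3)", data_units=6, redundant_units=3)
rs_10_4 = RedundancyScheme("Reed-Solomon RS(10,4)", data_units=10, redundant_units=4)

for scheme in (three_way, rs_6_3, rs_10_4):
    print(f"{scheme.name}: survives {scheme.tolerated_failures} failures, "
          f"stores {scheme.storage_overhead:.1f}x the data")
```

Under these assumptions, erasure coding tolerates more failures than 3-way replication while storing fewer raw bytes, at the cost of extra computation on reconstruction.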

HDFS with End-to-End Encryption § Confidentiality - Data Access § Integrity - Data Access + Data Encryption § Availability - Data Access + Data Replication

Data Encryption § How to transform the data? - Cleartext: 10101110001001011 10001010001110101110 - Ciphertext: XXXXXXXXXXXXXXXXXXXX

Data Encryption – At Rest § Data is encrypted while on persistent media (disk)

Data Encryption – In Transit § Data is encrypted while traveling over the network

The Whole Process Ciphertext

HDFS Transparent Data Encryption (TDE) § End-to-end encryption - Data is encrypted/decrypted at the client • Data is protected at rest and in transit § Transparent - No application level code changes required

HDFS TDE – Design § Goals: - Only an authorized client/user can access cleartext - HDFS never stores cleartext or unencrypted data encryption keys

HDFS TDE – Terminology § Encryption Zone - A directory whose file contents will be encrypted upon write and decrypted upon read - An EZKEY is generated for each zone

HDFS TDE – Terminology § EZKEY – encryption zone key § DEK – data encryption key § EDEK – encrypted data encryption key

HDFS TDE – Data Encryption § The same key is used to encrypt and decrypt data § The size of the ciphertext is exactly the same as the size of the original cleartext § The DEK is itself encrypted and decrypted with the EZKEY - EZKEY + DEK => EDEK - EDEK + EZKEY => DEK
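The three properties on this slide (one key for both directions, size-preserving ciphertext, and the DEK wrapped by the EZKEY) can be illustrated with a toy sketch. It assumes a SHA-256-derived keystream as a stand-in for a real counter-mode cipher such as AES-CTR; `toy_ctr_xor` and all key material are hypothetical, and this code must not be used to protect real data.

```python
import hashlib
import secrets


def toy_ctr_xor(key: bytes, iv: bytes, data: bytes) -> bytes:
    """XOR data with a SHA-256-derived keystream (toy stand-in for AES-CTR).

    Applying it twice with the same key and iv returns the original bytes.
    """
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        pad = hashlib.sha256(key + iv + counter).digest()
        chunk = data[offset:offset + 32]
        out.extend(b ^ p for b, p in zip(chunk, pad))
    return bytes(out)


# Hypothetical key material; in HDFS TDE these come from the KMS.
ezkey = secrets.token_bytes(32)  # encryption zone key
dek = secrets.token_bytes(32)    # data encryption key
iv = secrets.token_bytes(16)

cleartext = b"customer records that must stay confidential"
ciphertext = toy_ctr_xor(dek, iv, cleartext)

# The same key decrypts, and the ciphertext has the cleartext's length.
assert toy_ctr_xor(dek, iv, ciphertext) == cleartext
assert len(ciphertext) == len(cleartext)

# EZKEY + DEK => EDEK, and EDEK + EZKEY => DEK.
edek = toy_ctr_xor(ezkey, iv, dek)
assert toy_ctr_xor(ezkey, iv, edek) == dek
```

A stream-style cipher is what makes the ciphertext exactly as long as the cleartext; a padded block mode would round the length up to a block boundary.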

HDFS TDE – Services § HDFS NameNode (NN) § Kerberos Key Distribution Center (KDC) § Hadoop Key Management Server (KMS) - Key Trustee Server

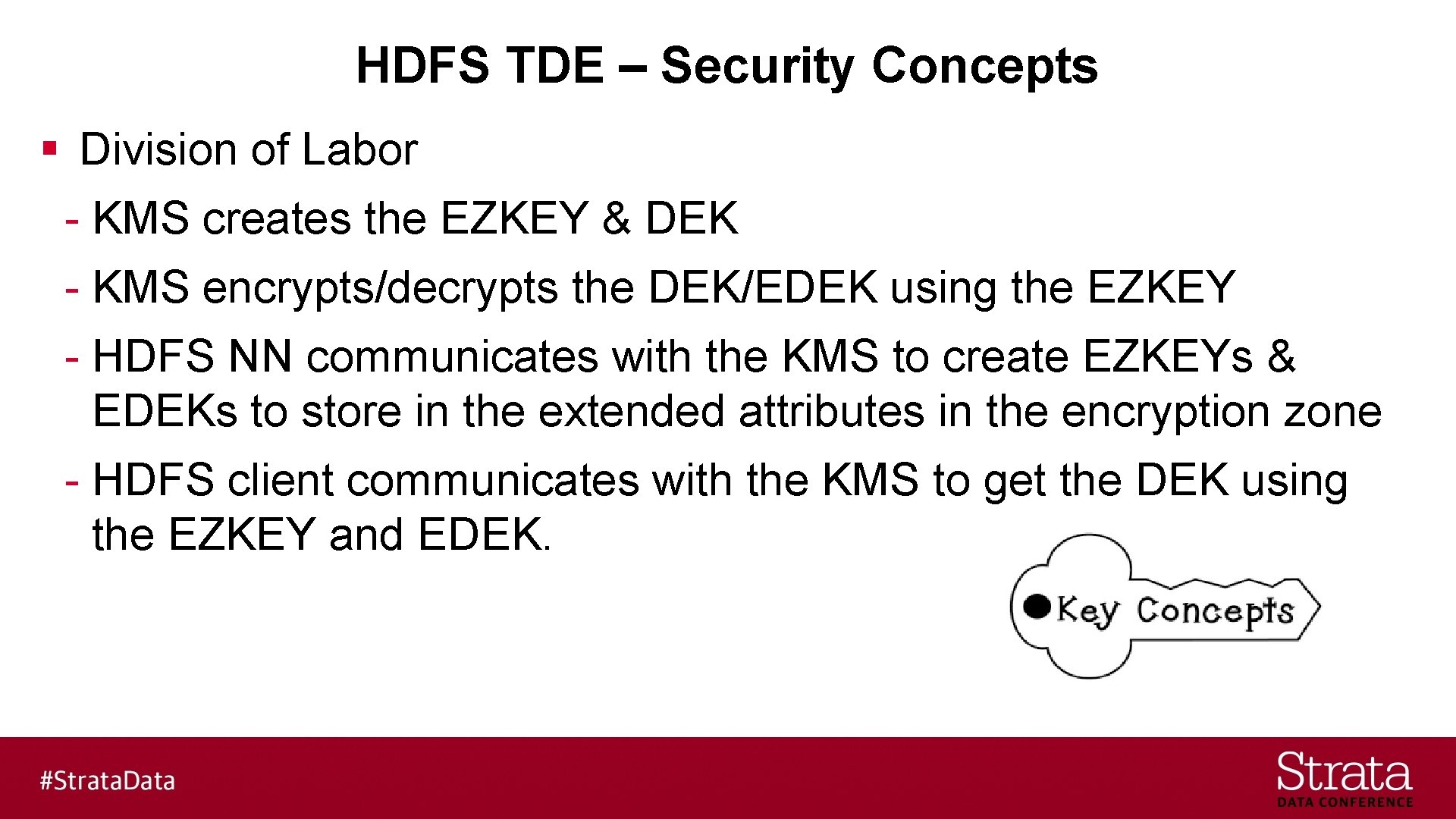

HDFS TDE – Security Concepts § Division of Labor - KMS creates the EZKEY & DEK - KMS encrypts/decrypts the DEK/EDEK using the EZKEY - HDFS NN communicates with the KMS to create EZKEYs & EDEKs to store in the extended attributes in the encryption zone - HDFS client communicates with the KMS to get the DEK using the EZKEY and EDEK.

HDFS TDE – Security Concepts § The name of the EZKEY is stored in the HDFS extended attributes of the directory associated with the encryption zone § The EDEK is stored in the HDFS extended attributes of the file in the encryption zone § Commands - $ hadoop key … - $ hdfs crypto …

HDFS Examples § Simplified for the sake of clarity: - Kerberos actions not shown - NameNode EDEK cache not shown

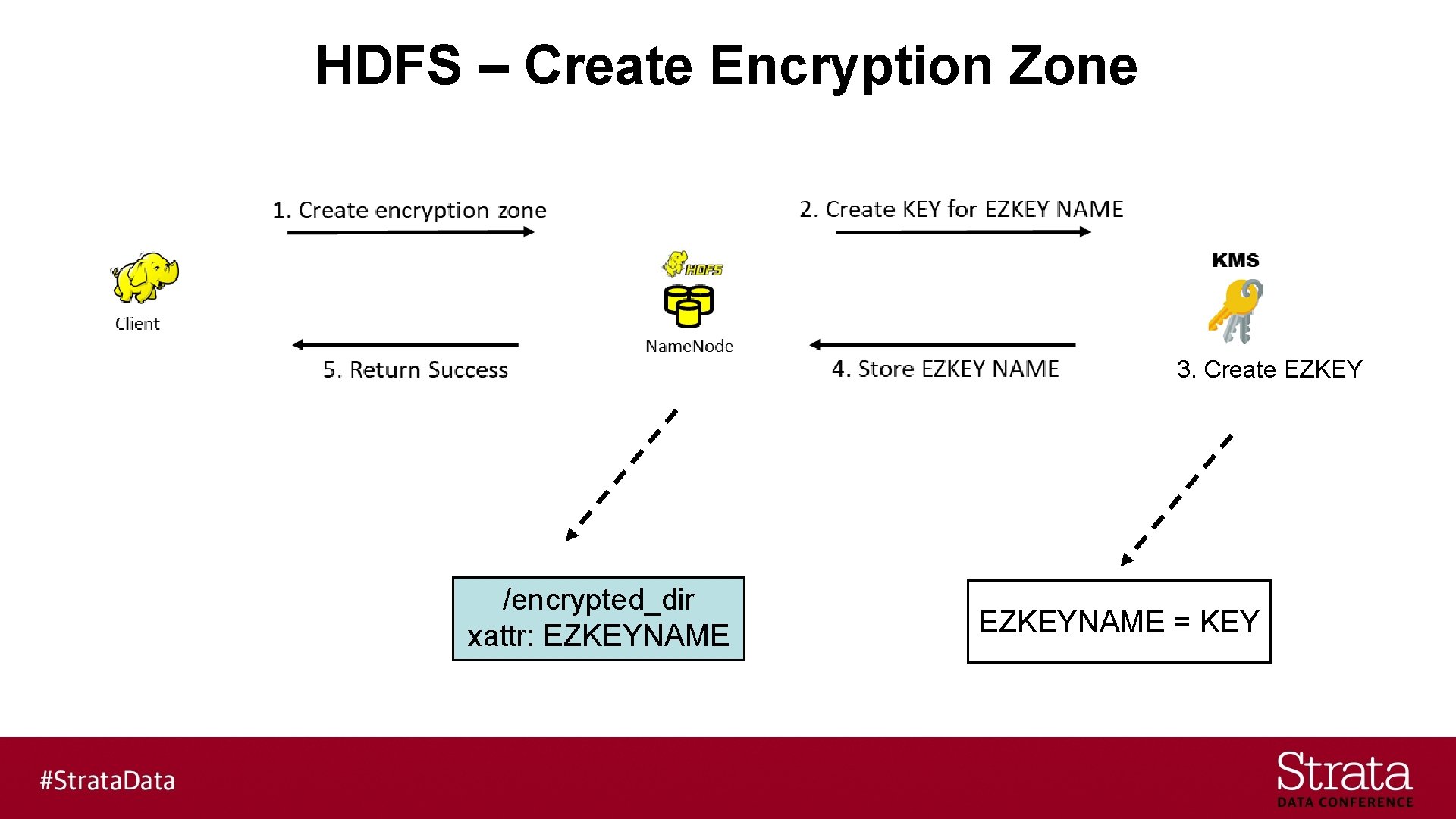

HDFS – Create Encryption Zone § 3. Create EZKEY § /encrypted_dir xattr: EZKEYNAME = KEY

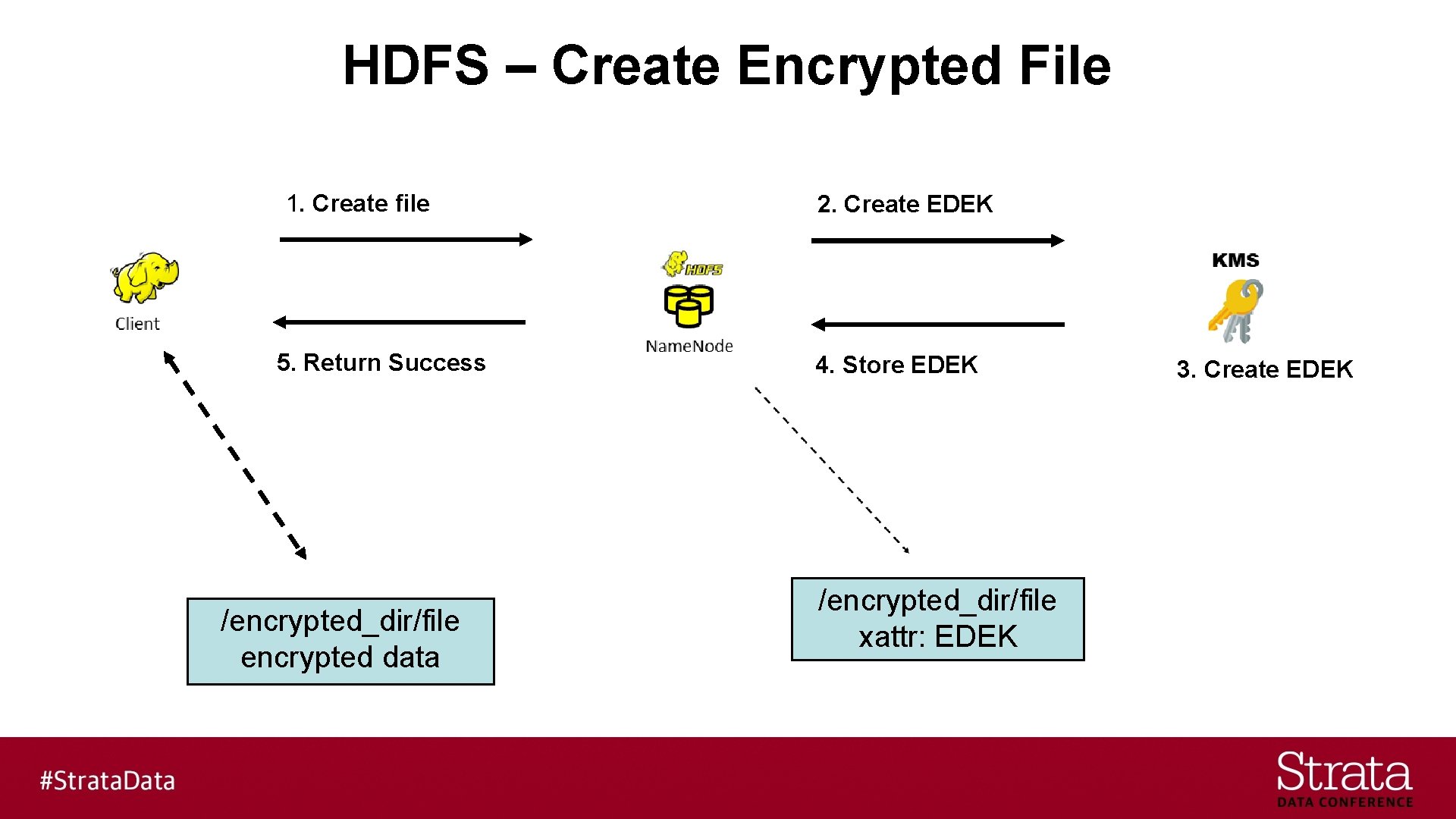

HDFS – Create Encrypted File § Steps - 1. Create file - 2. Create EDEK - 3. Create EDEK - 4. Store EDEK - 5. Return Success § /encrypted_dir/file xattr: EDEK § /encrypted_dir/file encrypted data

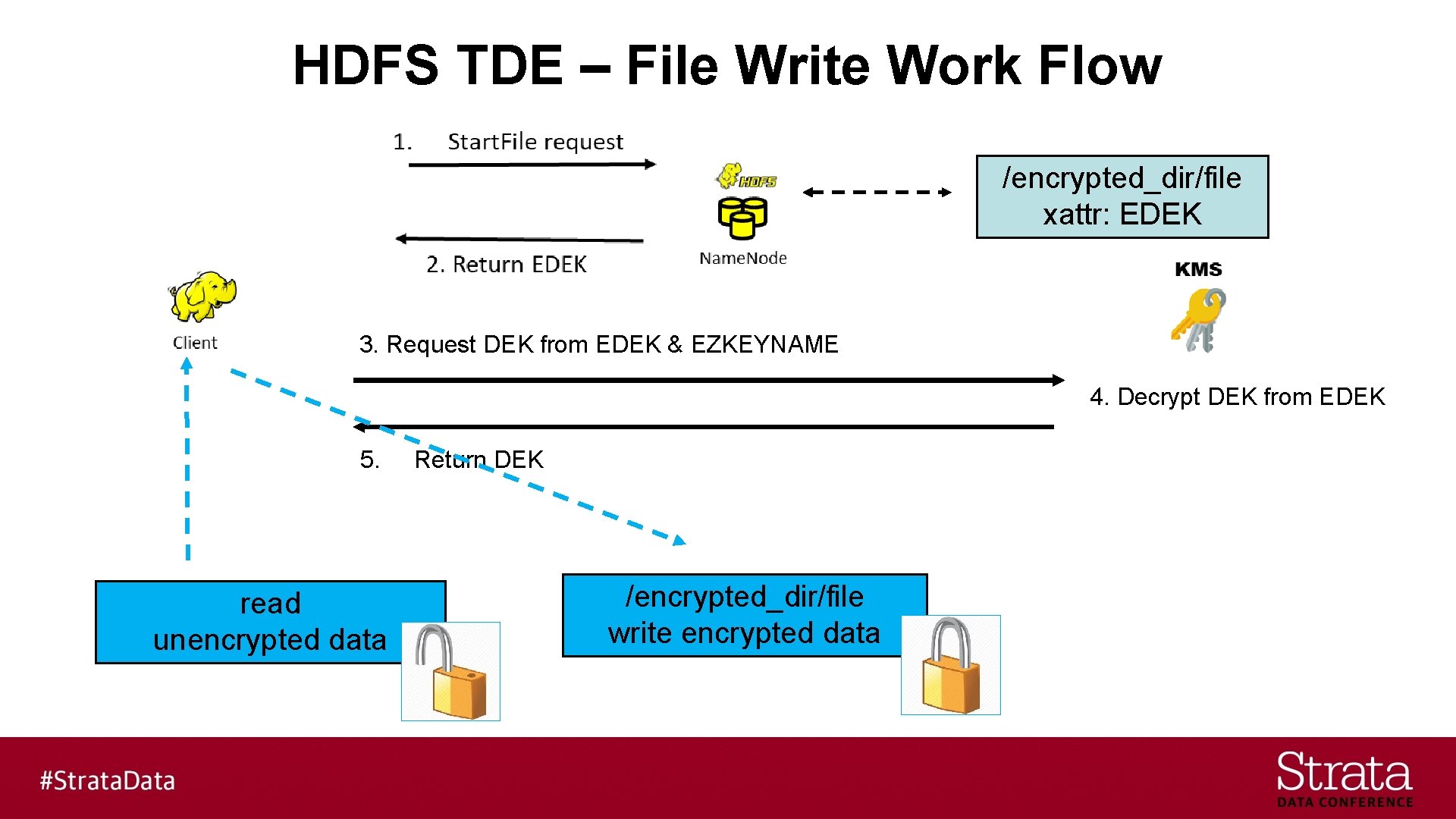

HDFS TDE – File Write Work Flow § /encrypted_dir/file xattr: EDEK § Steps - 3. Request DEK from EDEK & EZKEYNAME - 4. Decrypt DEK from EDEK - Return DEK - 5. Read unencrypted data § /encrypted_dir/file write encrypted data

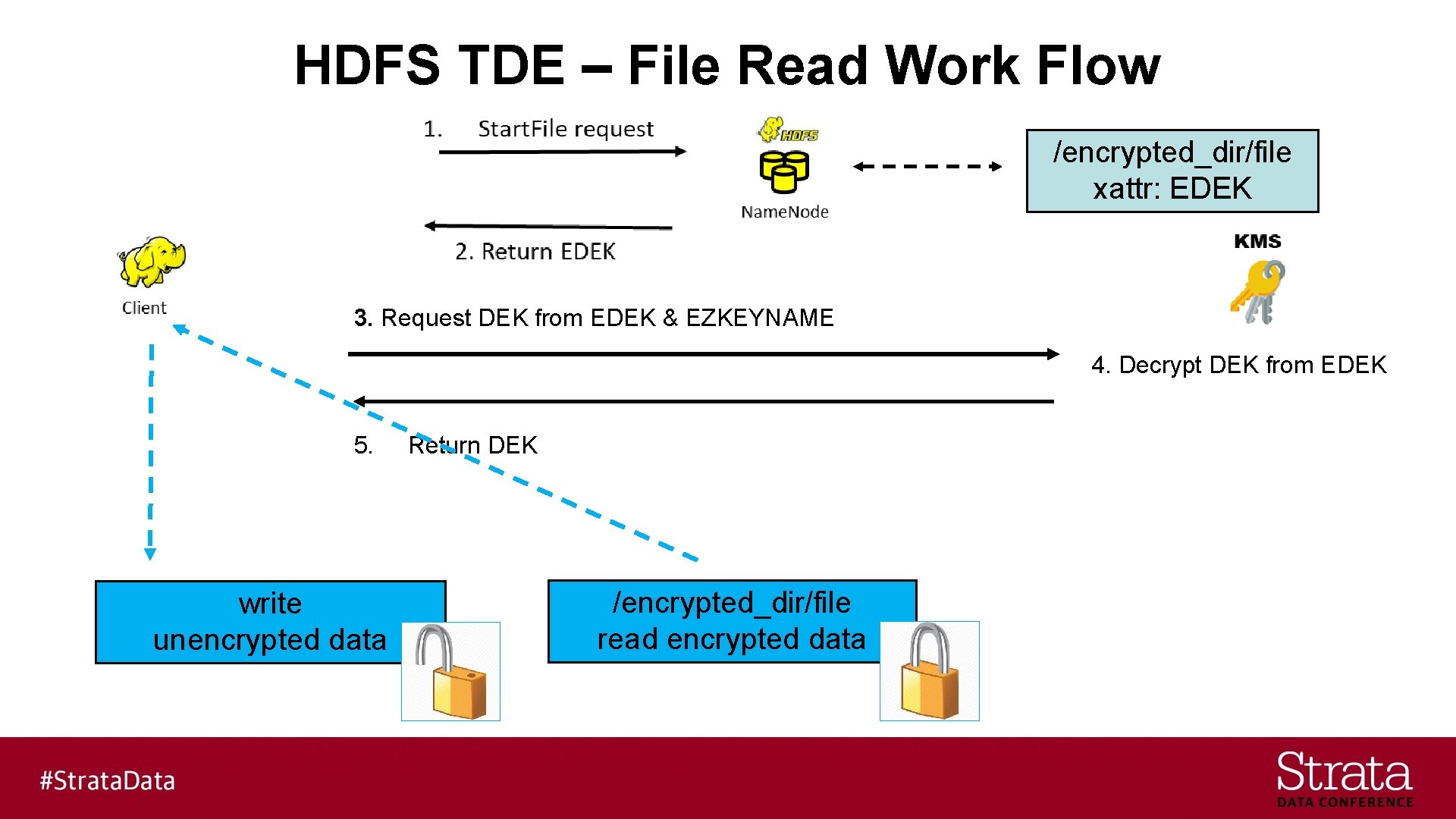

HDFS TDE – File Read Work Flow § /encrypted_dir/file xattr: EDEK § Steps - 3. Request DEK from EDEK & EZKEYNAME - 4. Decrypt DEK from EDEK - Return DEK - 5. Write unencrypted data § /encrypted_dir/file read encrypted data
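The zone-creation, file-creation, write, and read flows can be tied together in a small in-memory simulation of the division of labor between KMS, NameNode, and client. Everything here is a hypothetical sketch: `ToyKMS`, `ToyNameNode`, `ToyClient`, and `xor_stream` are invented names, the cipher is a toy keystream rather than the real one, Kerberos and the NameNode EDEK cache are omitted as in the slides, and a dictionary stands in for DataNode storage.

```python
import hashlib
import secrets


def xor_stream(key: bytes, iv: bytes, data: bytes) -> bytes:
    """Toy symmetric cipher: XOR with a SHA-256-derived keystream."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        pad = hashlib.sha256(key + iv + (offset // 32).to_bytes(8, "big")).digest()
        out.extend(b ^ p for b, p in zip(data[offset:offset + 32], pad))
    return bytes(out)


class ToyKMS:
    """Holds EZKEYs; only DEKs and EDEKs ever leave it."""

    def __init__(self):
        self._ezkeys = {}

    def create_ezkey(self, name: str) -> None:
        self._ezkeys[name] = secrets.token_bytes(32)

    def generate_edek(self, ezkey_name: str):
        dek, iv = secrets.token_bytes(32), secrets.token_bytes(16)
        return iv, xor_stream(self._ezkeys[ezkey_name], iv, dek)

    def decrypt_edek(self, ezkey_name: str, iv: bytes, edek: bytes) -> bytes:
        return xor_stream(self._ezkeys[ezkey_name], iv, edek)


class ToyNameNode:
    """Stores key names and EDEKs in xattrs; never sees a DEK or cleartext."""

    def __init__(self, kms: ToyKMS):
        self.kms = kms
        self.dir_xattrs = {}   # zone directory -> EZKEY name
        self.file_xattrs = {}  # file path -> (iv, EDEK)
        self.blocks = {}       # file path -> ciphertext (stand-in for DataNodes)

    def create_zone(self, directory: str, ezkey_name: str) -> None:
        self.dir_xattrs[directory] = ezkey_name

    def create_file(self, path: str):
        ezkey_name = self.dir_xattrs[path.rsplit("/", 1)[0]]
        self.file_xattrs[path] = self.kms.generate_edek(ezkey_name)
        return ezkey_name, self.file_xattrs[path]

    def open_file(self, path: str):
        return self.dir_xattrs[path.rsplit("/", 1)[0]], self.file_xattrs[path]


class ToyClient:
    """Encrypts and decrypts locally, so data is protected end to end."""

    def __init__(self, namenode: ToyNameNode, kms: ToyKMS):
        self.nn, self.kms = namenode, kms

    def write(self, path: str, cleartext: bytes) -> None:
        ezkey_name, (iv, edek) = self.nn.create_file(path)
        dek = self.kms.decrypt_edek(ezkey_name, iv, edek)
        self.nn.blocks[path] = xor_stream(dek, iv, cleartext)

    def read(self, path: str) -> bytes:
        ezkey_name, (iv, edek) = self.nn.open_file(path)
        dek = self.kms.decrypt_edek(ezkey_name, iv, edek)
        return xor_stream(dek, iv, self.nn.blocks[path])


kms = ToyKMS()
kms.create_ezkey("KEY")
namenode = ToyNameNode(kms)
namenode.create_zone("/encrypted_dir", "KEY")
client = ToyClient(namenode, kms)
client.write("/encrypted_dir/file", b"sensitive payload")
print(client.read("/encrypted_dir/file"))
```

The point of the structure is that the storage side holds only the EZKEY name, the EDEK, and ciphertext, while the EZKEY stays inside the KMS and the DEK exists only transiently at the client.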

Bring in the Containers (i.e. Docker) § Issues with containers are the same for any virtualization platform - Multiple compute clusters - Multiple HDFS file systems - Multiple Kerberos realms - Cross-realm trust configuration

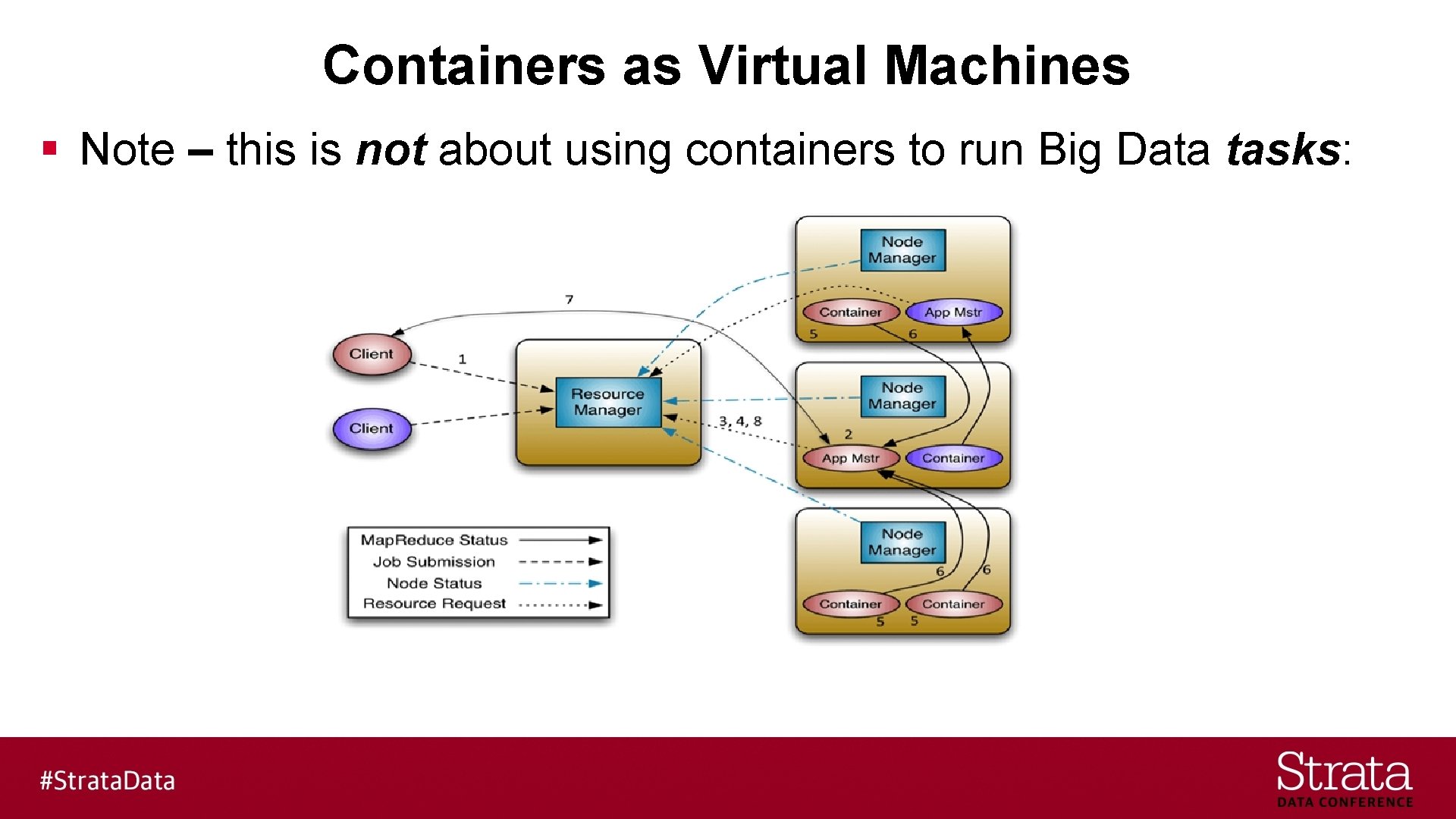

Containers as Virtual Machines § Note – this is not about using containers to run Big Data tasks:

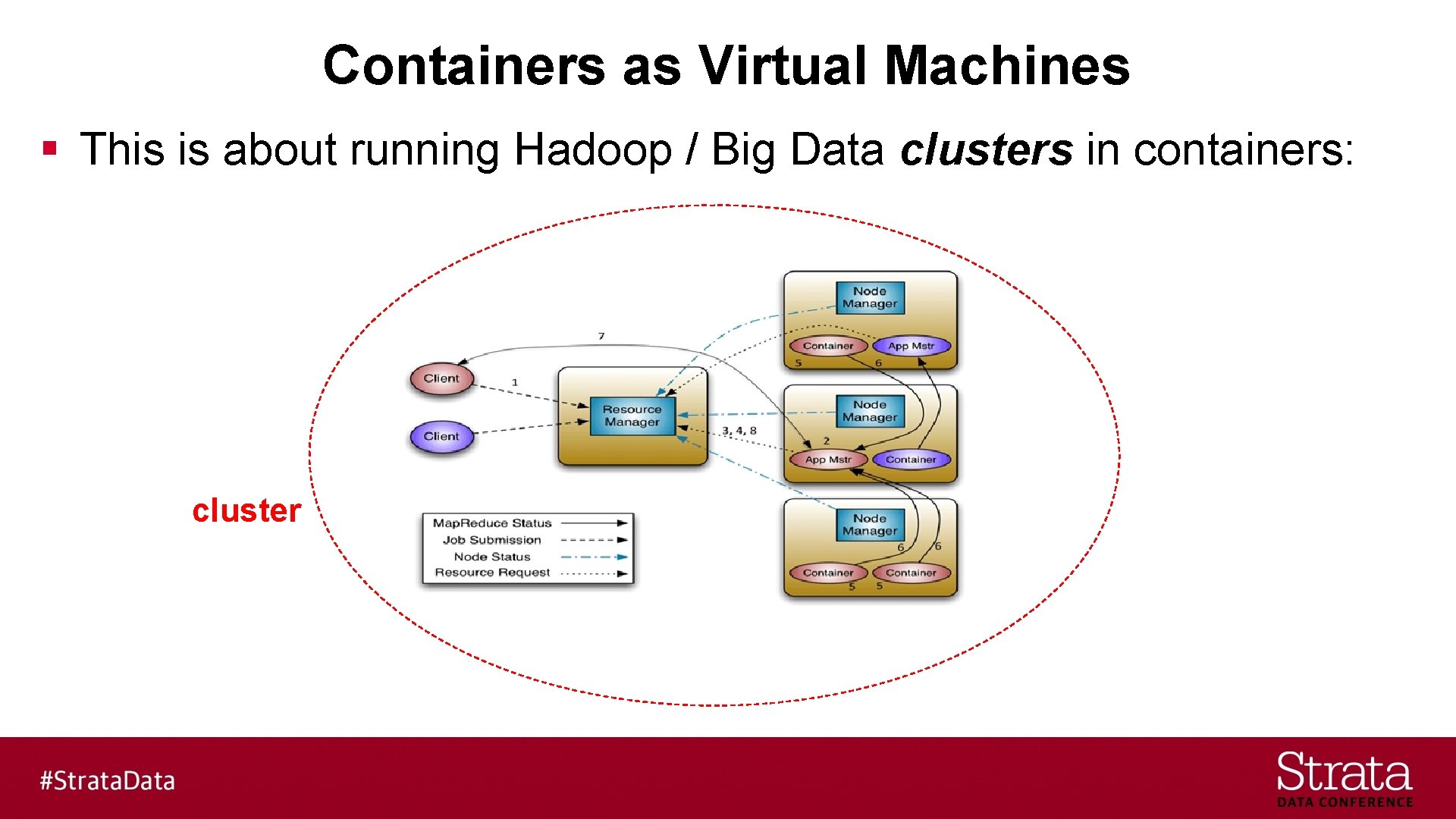

Containers as Virtual Machines § This is about running Hadoop / Big Data clusters in containers:

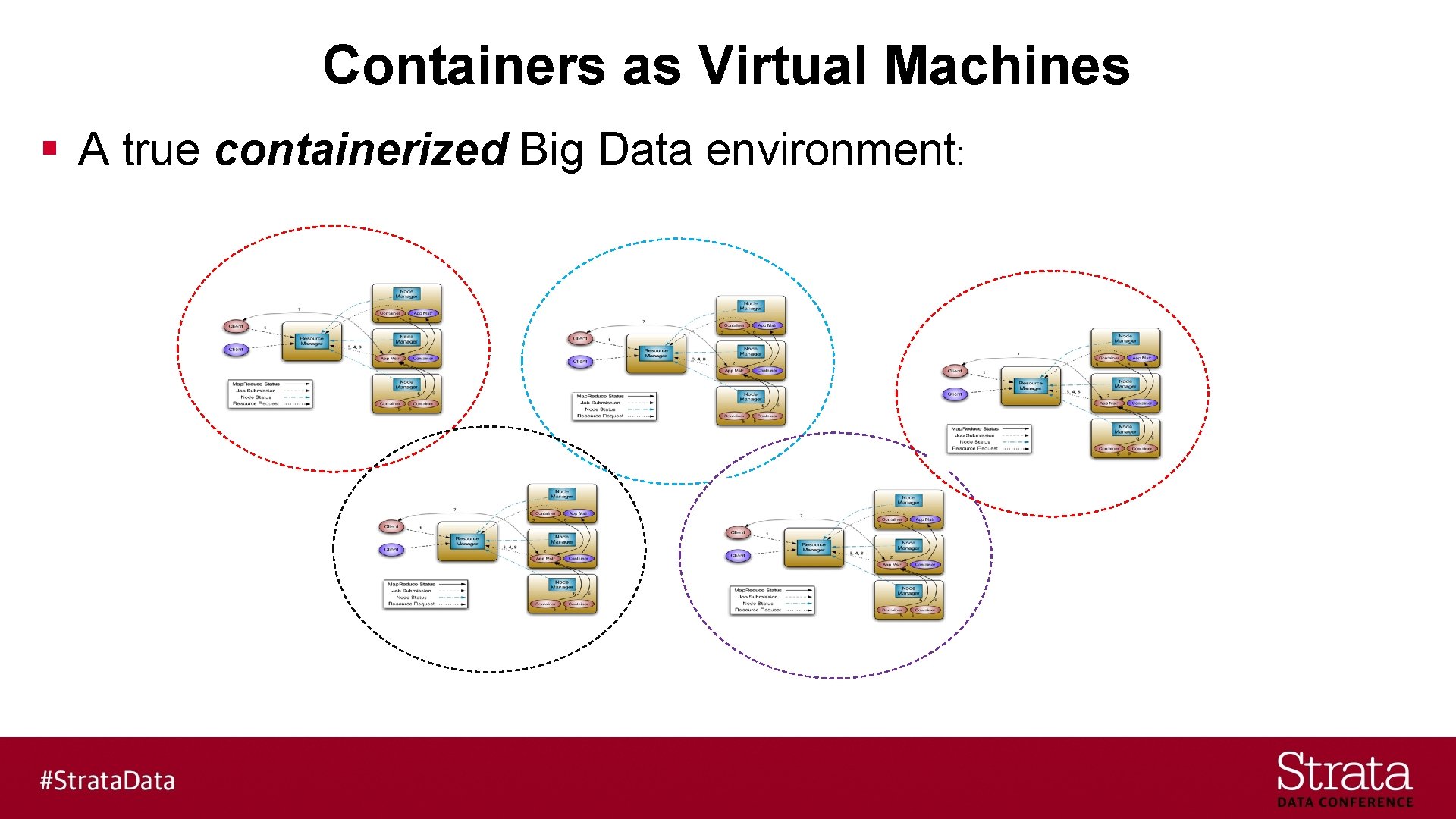

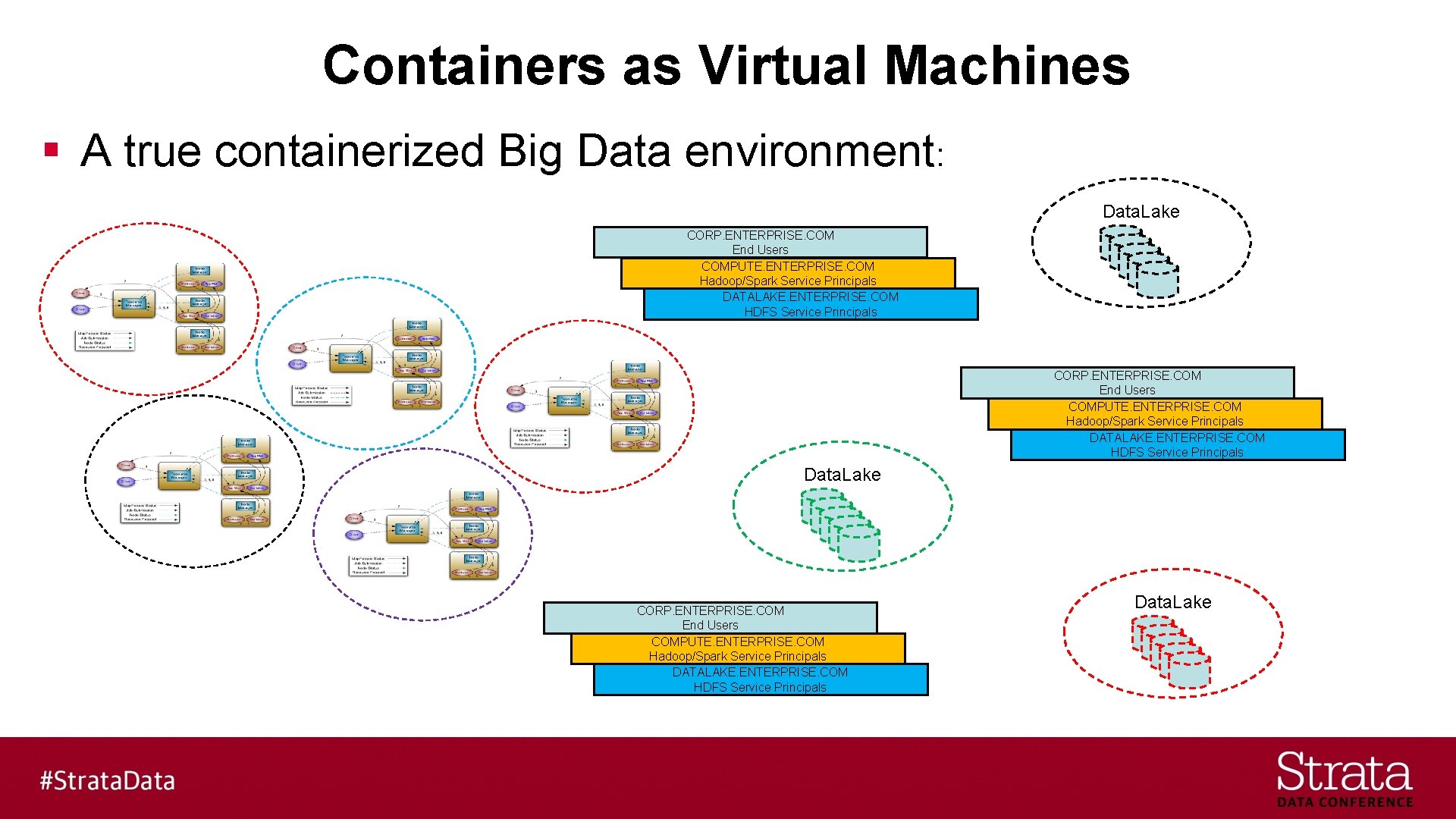

Containers as Virtual Machines § A true containerized Big Data environment:

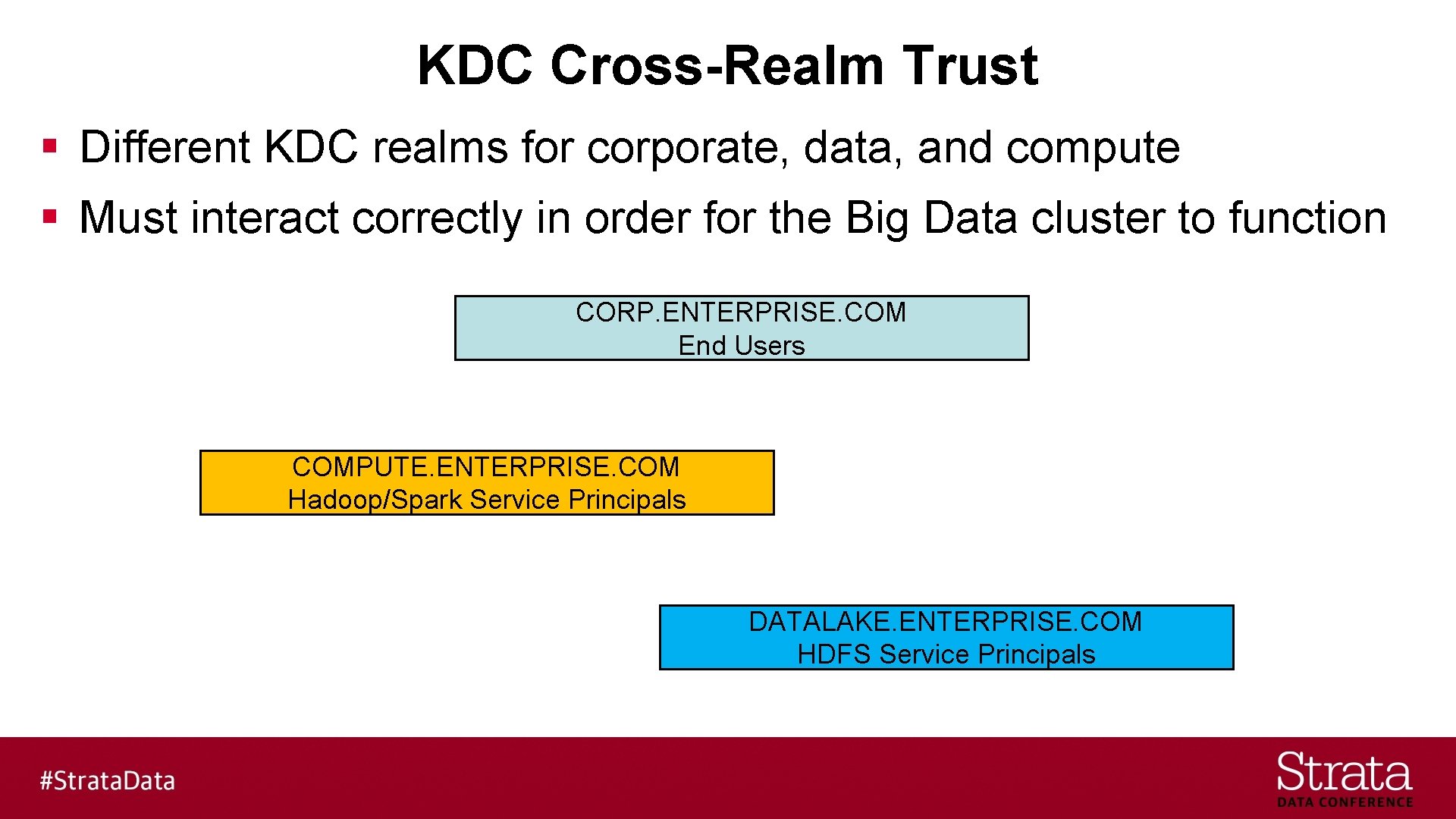

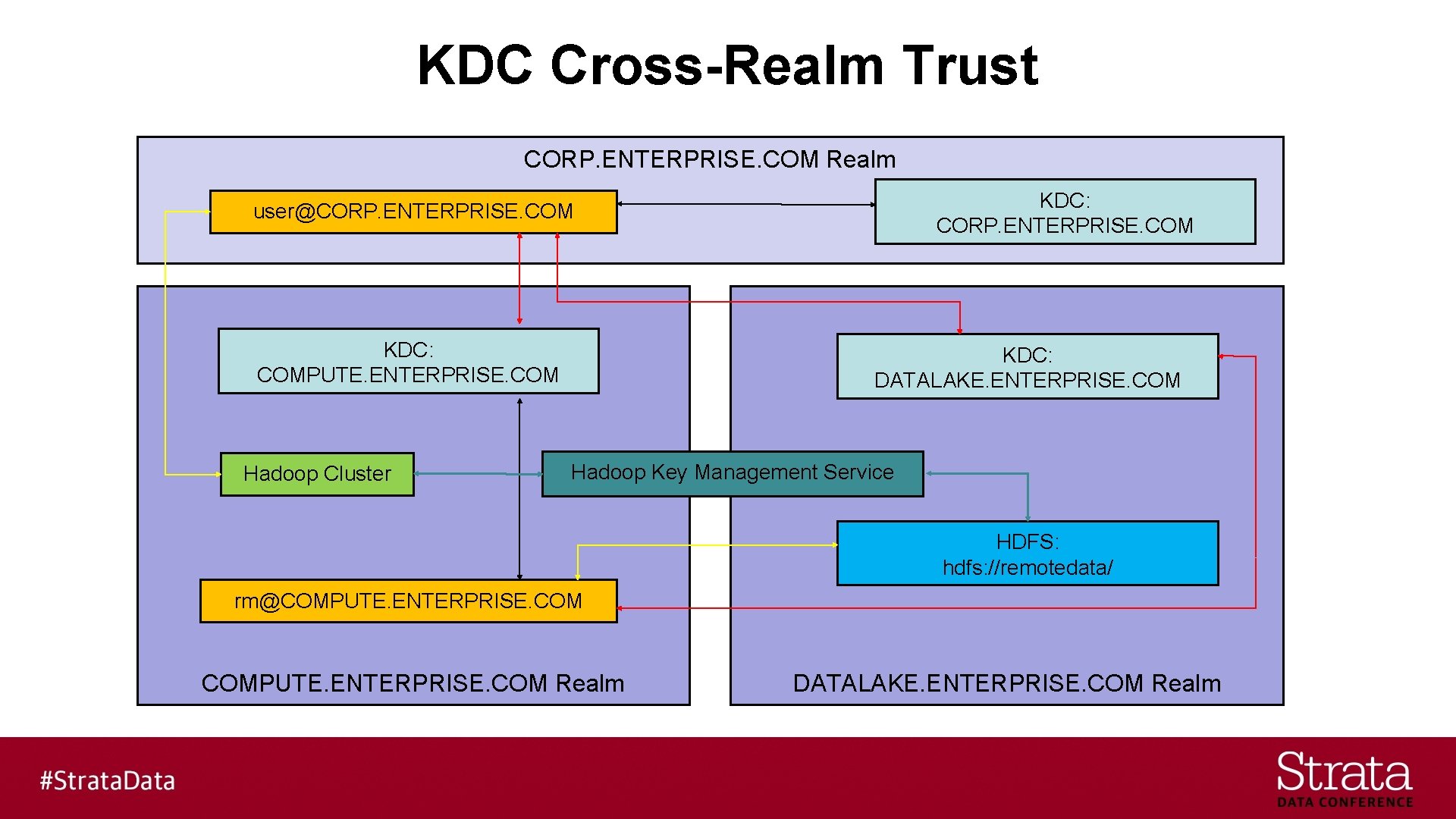

KDC Cross-Realm Trust § Different KDC realms for corporate, data, and compute § Must interact correctly in order for the Big Data cluster to function § CORP.ENTERPRISE.COM - End Users § COMPUTE.ENTERPRISE.COM - Hadoop/Spark Service Principals § DATALAKE.ENTERPRISE.COM - HDFS Service Principals

KDC Cross-Realm Trust § Different KDC realms for corporate, data, and compute - One-way trust • Compute realm trusts the corporate realm • Data realm trusts the compute realm
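The one-way trust relationships on this slide can be modeled as a small directed graph. The sketch below is a simplified illustration, not a Kerberos implementation: `TRUSTS`, `realm_of`, `directly_trusted`, and `trust_path` are hypothetical names, the principal `hdfs@DATALAKE.ENTERPRISE.COM` is invented for the example, and whether a multi-hop path is actually honored depends on how each realm is configured.

```python
from collections import deque

# Each entry reads: "the key realm trusts the listed realms".
TRUSTS = {
    "CORP.ENTERPRISE.COM": set(),
    "COMPUTE.ENTERPRISE.COM": {"CORP.ENTERPRISE.COM"},
    "DATALAKE.ENTERPRISE.COM": {"COMPUTE.ENTERPRISE.COM"},
}


def realm_of(principal: str) -> str:
    """Return the realm part of a principal such as user@REALM."""
    return principal.rsplit("@", 1)[1]


def directly_trusted(principal: str, service_realm: str) -> bool:
    """True if the service realm is, or directly trusts, the principal's realm."""
    realm = realm_of(principal)
    return realm == service_realm or realm in TRUSTS.get(service_realm, set())


def trust_path(principal: str, service_realm: str):
    """Breadth-first search for a chain of trusts from client realm to service realm.

    Returns the list of realms along the chain, or None if no chain exists.
    """
    client_realm = realm_of(principal)
    queue = deque([[service_realm]])
    seen = {service_realm}
    while queue:
        path = queue.popleft()
        if path[-1] == client_realm:
            return list(reversed(path))
        for trusted in sorted(TRUSTS.get(path[-1], set())):
            if trusted not in seen:
                seen.add(trusted)
                queue.append(path + [trusted])
    return None


print(directly_trusted("user@CORP.ENTERPRISE.COM", "COMPUTE.ENTERPRISE.COM"))
print(directly_trusted("rm@COMPUTE.ENTERPRISE.COM", "DATALAKE.ENTERPRISE.COM"))
print(trust_path("user@CORP.ENTERPRISE.COM", "DATALAKE.ENTERPRISE.COM"))
print(trust_path("hdfs@DATALAKE.ENTERPRISE.COM", "CORP.ENTERPRISE.COM"))
```

Because the trusts are one-way, the graph is not symmetric: corporate users can be accepted by the compute realm, but a data-lake principal has no chain back into the corporate realm.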

KDC Cross-Realm Trust § CORP.ENTERPRISE.COM Realm - KDC: CORP.ENTERPRISE.COM - user@CORP.ENTERPRISE.COM § COMPUTE.ENTERPRISE.COM Realm - KDC: COMPUTE.ENTERPRISE.COM - Hadoop Cluster - rm@COMPUTE.ENTERPRISE.COM § DATALAKE.ENTERPRISE.COM Realm - KDC: DATALAKE.ENTERPRISE.COM - Hadoop Key Management Service - HDFS: hdfs://remotedata/

Key Management Service § Must be enterprise quality - Key Trustee Server • Java KeyStore KMS • Cloudera Navigator Key Trustee Server

Containers as Virtual Machines § A true containerized Big Data environment: § CORP.ENTERPRISE.COM - End Users § COMPUTE.ENTERPRISE.COM - Hadoop/Spark Service Principals § DATALAKE.ENTERPRISE.COM - HDFS Service Principals - DataLake

Key Takeaways § Hadoop has many security layers - HDFS Transparent Data Encryption (TDE) is best of breed - Security is hard (complex) - Virtualization / containerization only makes it potentially harder - Compute and storage separation with virtualization / containerization can make it even harder still

Key Takeaways § Be careful with a build vs. buy decision for containerized Big Data - Recommendation: buy one already built - There are turnkey solutions (e.g. BlueData EPIC) § Reference: www.bluedata.com/blog/2017/08/hadoop-spark-docker-ten-things-to-know

@tapbluedata § www.bluedata.com § BlueData Booth #1508 in Strata Expo Hall
