How to Measure the Impact of Specific Development Practices on Fielded Defect Density

Purpose of Study: Mathematically correlate software development practices to defect density

Why is this important? If we can determine which practices lead to lower defect densities, we can use that knowledge to direct future development efforts toward eliminating the most defects for the least cost.

Dual Purpose: The model also helps predict an organization's ability to deliver products on schedule, or at least more accurately in relation to the schedule. That is, the study suggests that there is potentially a misconception within the industry: that practices that lead to lower defect densities slow a project down and can lead to delays. The study shows that the organizations with the lowest defect density also have the highest probability of delivering the product on time. Additionally, these same organizations are less late when a project does go beyond the targeted delivery date.

History: The USAF Rome Laboratories produced one of the first documents that aimed to correlate development practices with defect density. That document served as a springboard for this study. The author sought to improve upon the initial work by:

- Developing individual weights for each parameter. In the Rome study each parameter was given equal weight, whereas this study aimed to determine the individual weight for each parameter.
- Seeking out parameters that were objective and reliable, that is, parameters that could be measured repeatedly and consistently across organizations. For example, the author avoided measurements related to developer experience.
- Making the study broader, that is, applicable to commercial applications.
- Making the study independent of compiler.
- Incorporating into the study newer technologies such as OO and incremental models.

History Continued: Forty-five organizations have been evaluated, but documents from only seventeen of them were used for the study, based on the perceived accuracy and completeness of the data. The original list of 27 parameters was expanded to 102, both because of newer technologies and tools for data collection and through interviews with the organizations with the highest and lowest defect densities to determine the major differences between them.

Positive Attributes of the Study:

- Only one person evaluated the practices for each organization, making the evaluation process consistent, i.e. the same criteria were used across the board.
- The author had intimate knowledge of each organization and therefore could distinguish between "actual" and "wish list" practices.
- The author required physical proof of all positively answered questions.
- The author required a wide cross section of responses from each organization to help ensure the accuracy of reported data: managers, lead engineers, quality engineers, test engineers, seasoned members, new hires, etc.

The Outcome

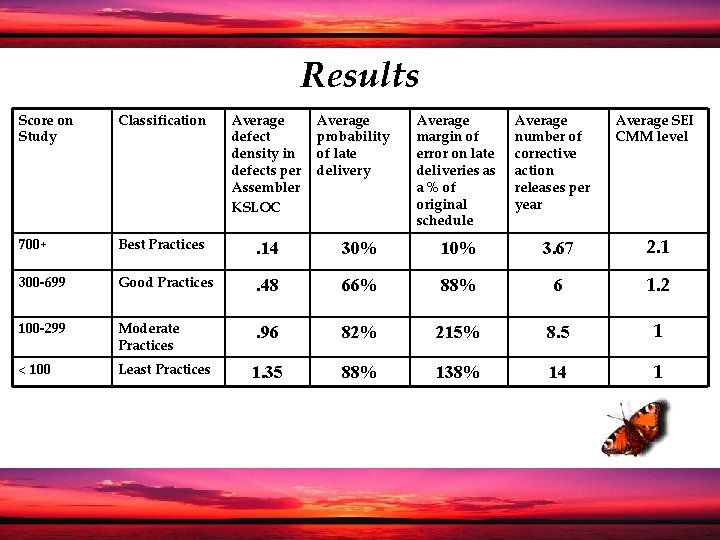

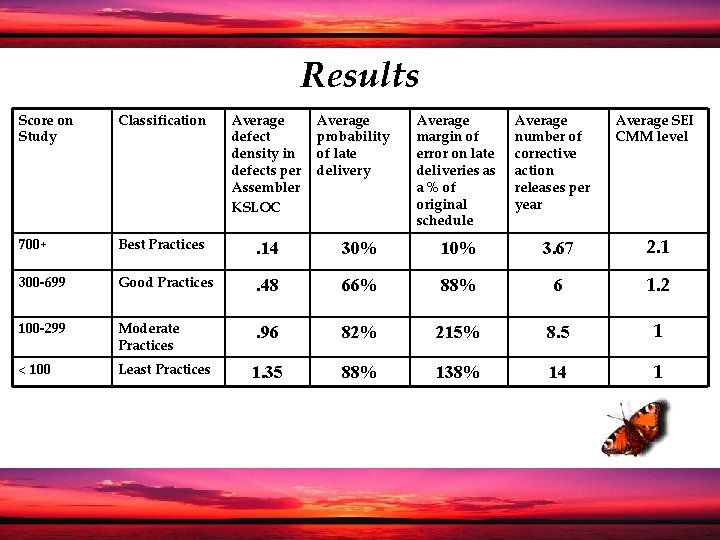

Results

| Score on Study | Classification | Average defect density (defects per Assembler KSLOC) | Average probability of late delivery | Average margin of error on late deliveries (% of original schedule) | Average number of corrective action releases per year | Average SEI CMM level |
|---|---|---|---|---|---|---|
| 700+ | Best Practices | 0.14 | 30% | 10% | 3.67 | 2.1 |
| 300-699 | Good Practices | 0.48 | 66% | 88% | 6 | 1.2 |
| 100-299 | Moderate Practices | 0.96 | 82% | 215% | 8.5 | 1 |
| < 100 | Least Practices | 1.35 | 88% | 138% | 14 | 1 |

Common Practices Amongst organizations with the highest scores and lowest defect densities:

- Software engineering is part of the engineering process and not considered to be an art form
- Well rounded practices as opposed to believing in a "single bullet" philosophy
- Formal and informal reviews of the software requirements prior to proceeding to design and code
- Testers are involved in the requirement translation process

Common Practices Amongst organizations with the lowest scores and highest defect densities:

- Lack of software management
- Misconception that programmers know what the customer wants better than the customer does
- An inability to focus on the requirements and use cases with the highest occurrence probability
- Complete void of a requirements definition process
- Insufficient testing methods

The Score

Scoring Methodology

- Review practices that had already been mathematically correlated by USAF Rome Laboratories
- Study organizations that were at the top of their industry
- Study organizations that were at the bottom of their industry
- Poll customers on the key factors they felt impacted software reliability, and investigate those factors

Scoring Methodology Continued

- Select parameters that will correlate across many organizations as opposed to a single one
- Make sure the parameter is measurable
- Determine whether each single parameter correlates to the empirical defect densities for each of the samples
- Drop parameters that do not correlate, but keep the data in case the parameter correlates at a later time
- If a parameter correlates, determine its relative weight, which does not necessarily relate directly or linearly to the correlation
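The screening steps above can be sketched in a few lines of Python. This is only an illustration: the slides do not say which correlation statistic or cutoff the author used, so the Pearson coefficient, the `threshold` of 0.5, the parameter names `PARAM_A` and `PARAM_B`, and all sample values below are assumptions made up for the example.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no correlation information
    return cov / sqrt(var_x * var_y)


def screen_parameters(points_by_parameter, defect_densities, threshold=0.5):
    """Split parameters into kept and dropped by |correlation| >= threshold.

    The threshold is a hypothetical cutoff, not a value from the study.
    Dropped parameters are returned too, so their data is not discarded.
    """
    kept, dropped = {}, {}
    for name, points in points_by_parameter.items():
        r = pearson(points, defect_densities)
        (kept if abs(r) >= threshold else dropped)[name] = r
    return kept, dropped


# Hypothetical sample: one defect density per organization, plus the points
# each organization earned on two made-up parameters.
defect_densities = [0.2, 0.5, 0.9, 1.3, 1.4]
points_by_parameter = {
    "PARAM_A": [10, 8, 5, 2, 0],  # points fall as defect density rises
    "PARAM_B": [3, 9, 1, 8, 4],   # no clear relationship
}

kept, dropped = screen_parameters(points_by_parameter, defect_densities)
print("kept:", kept)
print("dropped:", dropped)
```

A strongly negative coefficient means that organizations scoring more points on a parameter tend to have lower defect density. The sketch stops at screening; it does not attempt the weighting step, since the slides say the weight need not follow directly from the correlation.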

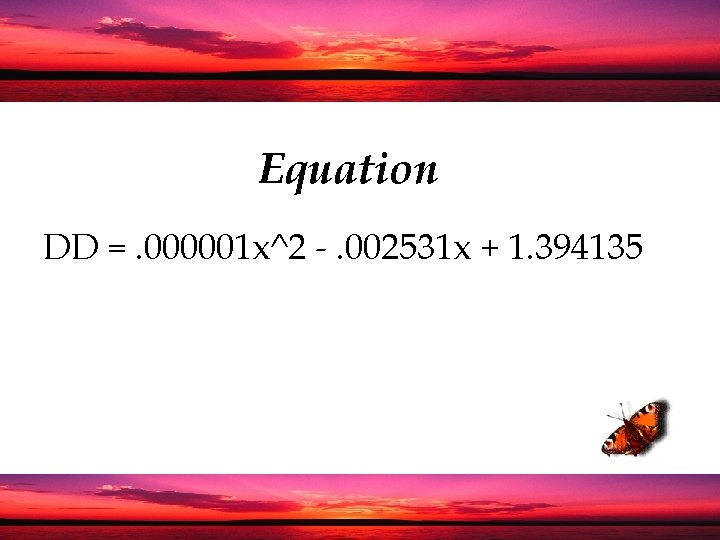

Equation: $DD = 0.000001\,x^2 - 0.002531\,x + 1.394135$
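To get a feel for the fitted curve, the sketch below evaluates the equation at a few scores. It assumes that x is an organization's score on the study and that DD is in defects per Assembler KSLOC, as in the results table; the slide does not state this explicitly. The function name `predicted_defect_density` and the sample scores are illustrative only.

```python
def predicted_defect_density(score: float) -> float:
    """Evaluate the fitted quadratic DD = 0.000001 x^2 - 0.002531 x + 1.394135.

    Assumption: x is the study score and DD is defects per Assembler KSLOC.
    """
    return 0.000001 * score**2 - 0.002531 * score + 1.394135


if __name__ == "__main__":
    # Sample scores chosen for illustration; they are not study data points.
    for score in (0, 200, 500, 700):
        print(f"score {score:>3}: DD = {predicted_defect_density(score):.3f}")
```

Under that reading, the curve falls steadily across the score bands in the results table: the minimum of the quadratic lies at x = 0.002531 / (2 × 0.000001) = 1265.5, well above the 700+ band.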

Stronger correlation was expected for:

- Configuration Management and source control
- Use of automated tools
- Code Reviews
- Implementation of OO technologies

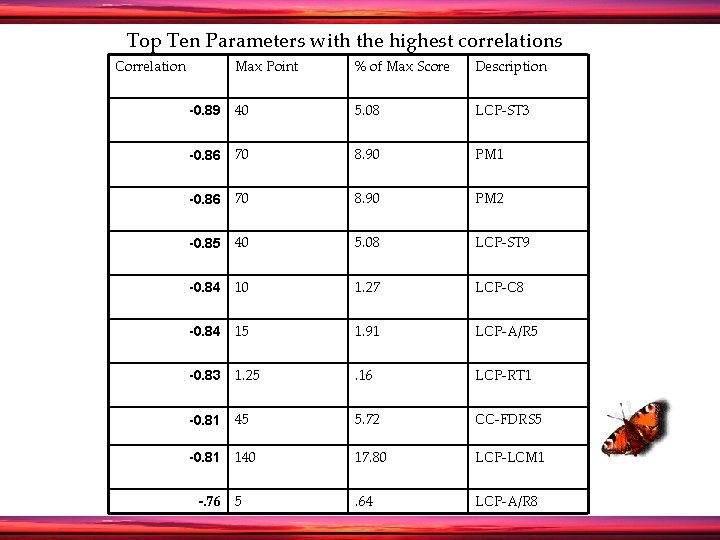

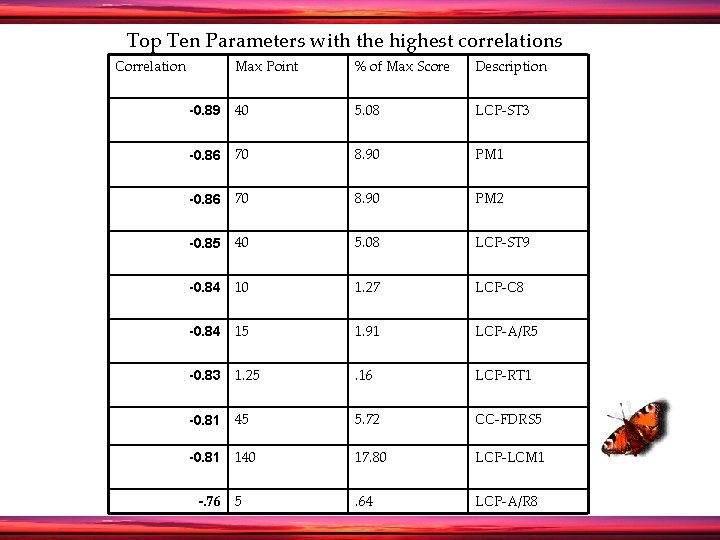

Top Ten Parameters with the highest correlations

| Correlation | Max Point | % of Max Score | Description |
|---|---|---|---|
| -0.89 | 40 | 5.08 | LCP-ST 3 |
| -0.86 | 70 | 8.90 | PM 1 |
| -0.86 | 70 | 8.90 | PM 2 |
| -0.85 | 40 | 5.08 | LCP-ST 9 |
| -0.84 | 10 | 1.27 | LCP-C 8 |
| -0.84 | 15 | 1.91 | LCP-A/R 5 |
| -0.83 | 1.25 | 0.16 | LCP-RT 1 |
| -0.81 | 45 | 5.72 | CC-FDRS 5 |
| -0.81 | 140 | 17.80 | LCP-LCM 1 |
| -0.76 | 5 | 0.64 | LCP-A/R 8 |
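The "% of Max Score" column appears to be each parameter's maximum points divided by the total points available across all parameters. The slides do not state that total, so the sketch below back-computes it from a few rows of the table above. The helper name `implied_total` is hypothetical, and the resulting figure is an inference from rounded percentages, not a number reported by the study.

```python
# (max points, % of max score) pairs copied from the top-ten table.
rows = {
    "LCP-ST 3": (40, 5.08),
    "PM 1": (70, 8.90),
    "LCP-C 8": (10, 1.27),
    "CC-FDRS 5": (45, 5.72),
    "LCP-LCM 1": (140, 17.80),
}


def implied_total(max_points: float, percent: float) -> float:
    """Total score implied by one row: max_points / (percent / 100)."""
    return max_points / (percent / 100.0)


estimates = {name: implied_total(*row) for name, row in rows.items()}

for name, total in estimates.items():
    print(f"{name:<10} implies a total of about {total:.1f} points")
```

The rows agree with one another to within rounding, which suggests a maximum possible score of roughly 787 points. That is consistent with the top band in the results table starting at 700.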

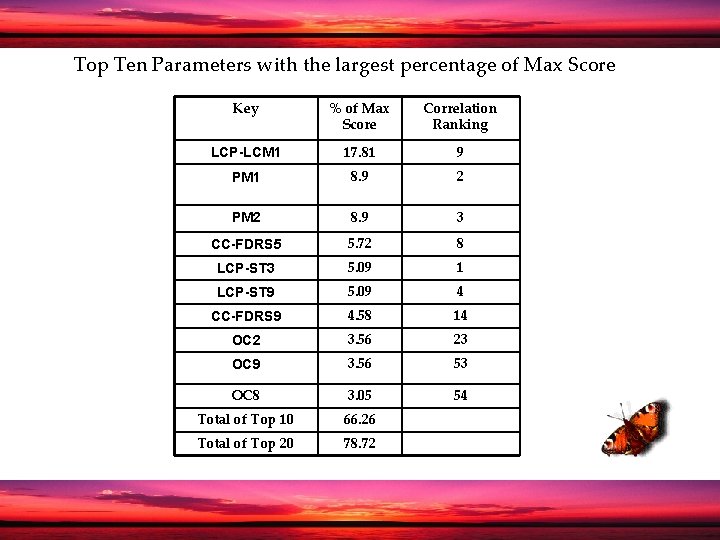

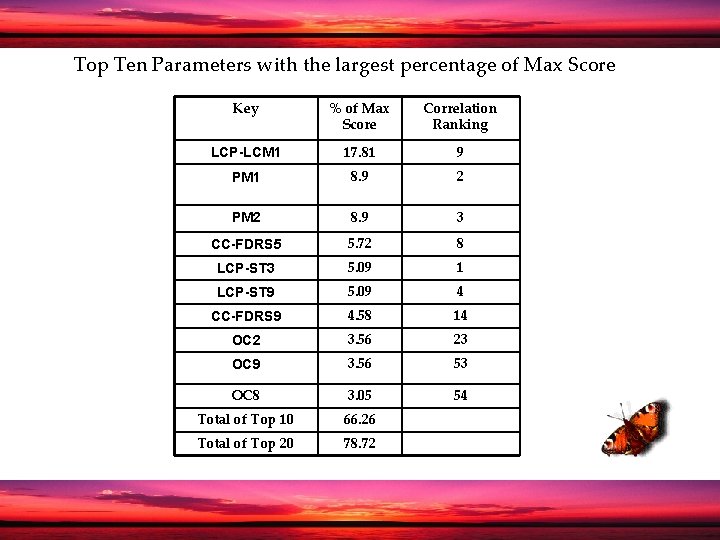

Top Ten Parameters with the largest percentage of Max Score

| Key | % of Max Score | Correlation Ranking |
|---|---|---|
| LCP-LCM 1 | 17.81 | 9 |
| PM 1 | 8.9 | 2 |
| PM 2 | 8.9 | 3 |
| CC-FDRS 5 | 5.72 | 8 |
| LCP-ST 3 | 5.09 | 1 |
| LCP-ST 9 | 5.09 | 4 |
| CC-FDRS 9 | 4.58 | 14 |
| OC 2 | 3.56 | 23 |
| OC 9 | 3.56 | 53 |
| OC 8 | 3.05 | 54 |
| Total of Top 10 | 66.26 | |
| Total of Top 20 | 78.72 | |

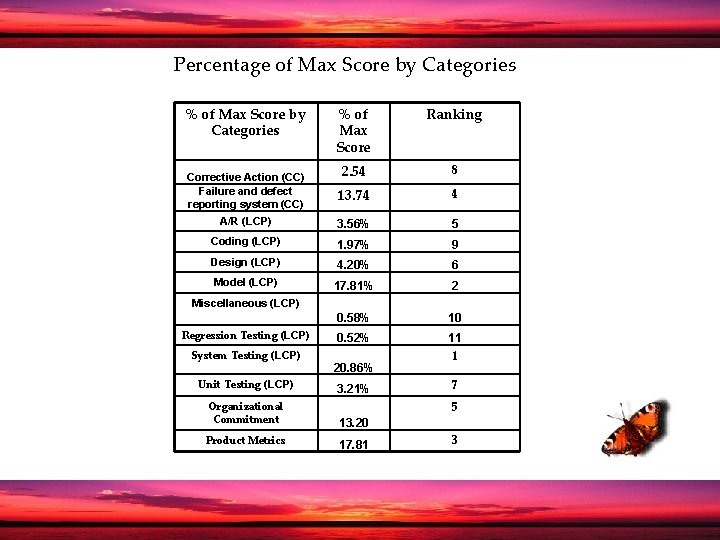

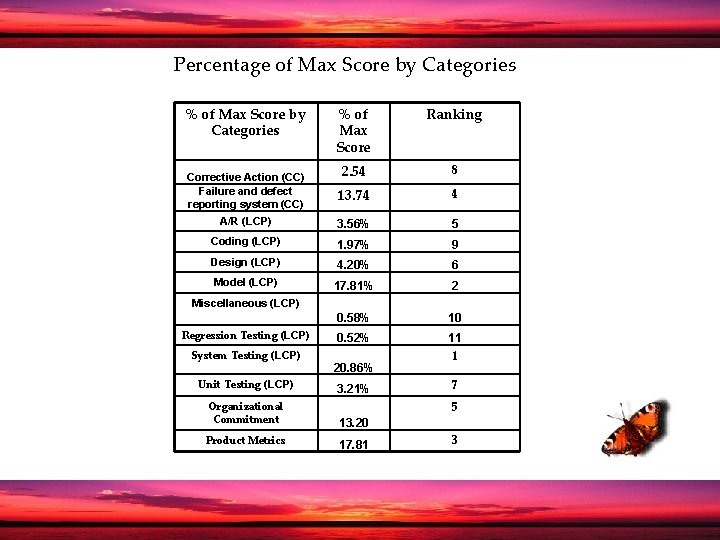

Percentage of Max Score by Categories

| Category | % of Max Score | Ranking |
|---|---|---|
| Corrective Action (CC) | 2.54 | 8 |
| Failure and defect reporting system (CC) | 13.74 | 4 |
| A/R (LCP) | 3.56 | 5 |
| Coding (LCP) | 1.97 | 9 |
| Design (LCP) | 4.20 | 6 |
| Model (LCP) | 17.81 | 2 |
| Miscellaneous (LCP) | 0.58 | 10 |
| Regression Testing (LCP) | 0.52 | 11 |
| System Testing (LCP) | 20.86 | 1 |
| Unit Testing (LCP) | 3.21 | 7 |
| Organizational Commitment | 13.20 | 5 |
| Product Metrics | 17.81 | 3 |