How to handle 1 000 events per second

- Slides: 46

How to handle 1 000 events per second in Google Cloud

About me

Oleksandr Fedirko
BigData Architect at GlobalLogic
- I do BigData enabling on projects
- Training and mentoring on BigData skills
- alexander.fedirko@gmail.com
- https://www.linkedin.com/in/fedirko/

Use Google DataFlow service

Q&A session

Developer vs Data engineer

Developer: OOP, SOLID, GoF, Java, C++, C#, JavaScript, Unit tests, TDD

Data engineer: DWH, Business Intelligence, Data Science, DBA, ETL, Pipeline, Reports, R, Data analysis

Agenda

- Starting point and basic assumptions at the project
- Evolution of the Cloud solution
- Challenges that push decisions
- Research on a BigData project, value of micro PoCs
- NFRs on a BigData project
- Good things that helped a lot on a project
- A place of ML/AI in the system
- Conclusions

Starting point and basic assumptions at the project

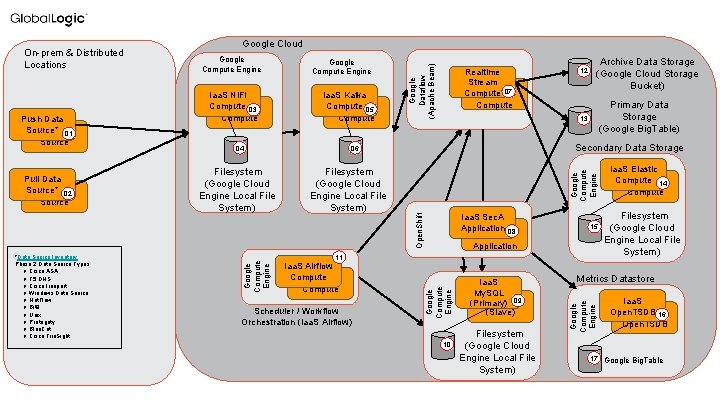

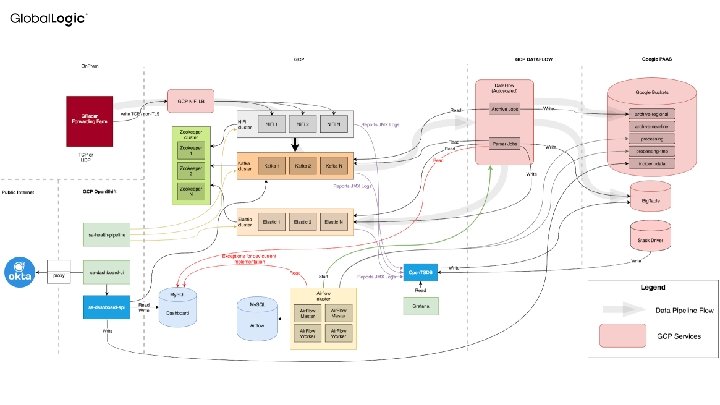

- Cloud agnostic
- User-defined CEP (complex event processing) rules
- 100 Data Source Types (Cisco ASA, Gigamon Netflow, Windows, Unix etc.)
- 10 000 Data Sources (routers, PCs, servers etc.)
- Need of ML/AI
- Analytics
- Quick search
- SSO integration

Example of the Rules (High Traffic)

When
  The event(s) were detected by one or more of these data source types "NetFlow"
  And Bytes is greater than 1048576 bytes (1 MB)
Then
  Create Indicator “High Traffic”
End

Example of the Rules (Port Scanning)

When
  The event(s) were detected by one or more of these data source types "Cisco ASA"
  With the same source IP and destination IP more than 5 times, across more than 5 destination ports within 4 min
Then
  Create Incident “Port Scanning” of threat type "External Hacking"
End
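
The Port Scanning rule is a sliding-window condition per pair of source and destination IP. Below is a minimal in-memory sketch of that logic in Python. It is not the project's rules engine (the slides mention Drools and later Beam), the class and field names are hypothetical, and it assumes events arrive in timestamp order with timestamps in seconds.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 4 * 60  # "within 4 min"
MIN_EVENTS = 5           # "more than 5 times"
MIN_PORTS = 5            # "more than 5 destination ports"


class PortScanRule:
    """Sliding-window check per (source IP, destination IP) pair."""

    def __init__(self):
        # (src_ip, dst_ip) -> deque of (timestamp, dst_port), oldest first
        self._events = defaultdict(deque)

    def on_event(self, source_type, ts, src_ip, dst_ip, dst_port):
        """Return an incident dict if the rule fires, otherwise None."""
        if source_type != "Cisco ASA":
            return None
        window = self._events[(src_ip, dst_ip)]
        window.append((ts, dst_port))
        # Drop events that fell out of the window relative to the newest event.
        while window and ts - window[0][0] > WINDOW_SECONDS:
            window.popleft()
        ports = {port for _, port in window}
        if len(window) > MIN_EVENTS and len(ports) > MIN_PORTS:
            return {
                "incident": "Port Scanning",
                "threat_type": "External Hacking",
                "src_ip": src_ip,
                "dst_ip": dst_ip,
            }
        return None
```

Even this small rule keeps per-key state for the length of the window, which is why stateful processing shows up later as one of the main challenges.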

Requirement example:
- Data Sources would be part of the Identity Database.
- Product must integrate with the CMDB for the list of devices to be monitored.
- Product must be capable of indexing terabytes of normalized log data and provide performance in both indexed and table scans that exceeds search results of 1 million records a second.

Problems?
- 1000 eps through Drools
- No Autoscale on DataProc
- Manage custom adapters via OpenShift cluster
- Stateful backend

Evolution of the Cloud solution

Limitation via SoW (Statement of Work)
- Bound to GCP
- Exclude real time event view
- Exclude metrics UI
- Postpone AI/ML implementation
- Postpone Analytical storage implementation
- No sensitive data in the system
- Exclude audit logging

Technology transform
- From Azkaban to AirFlow
- Requirements to SRS (Software requirements specification)
- From mutable rows to immutable
- From Spark to Beam+DataFlow
- Agree on NiFi as primary Ingest tech, get rid of custom Java adapters

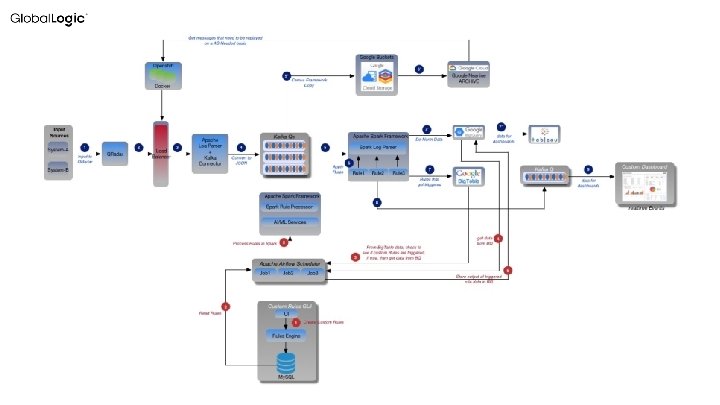

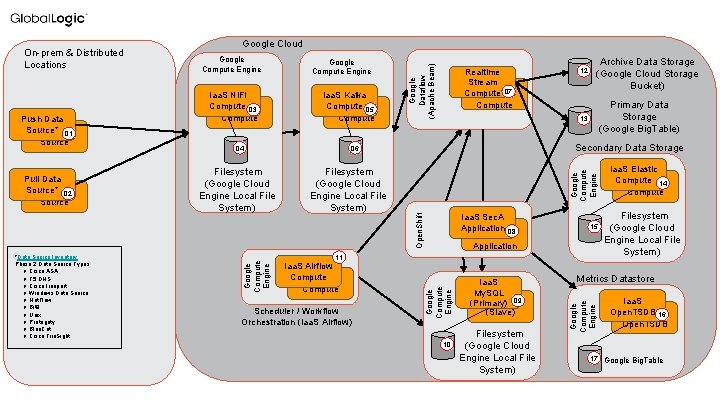

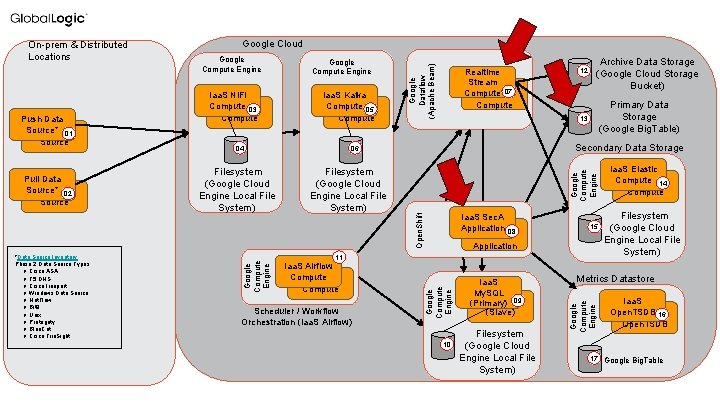

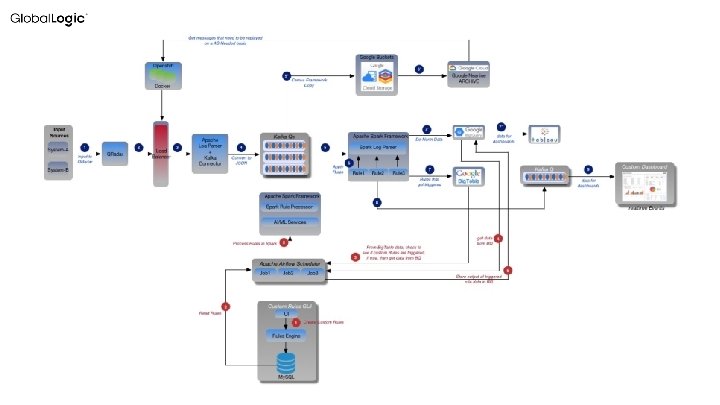

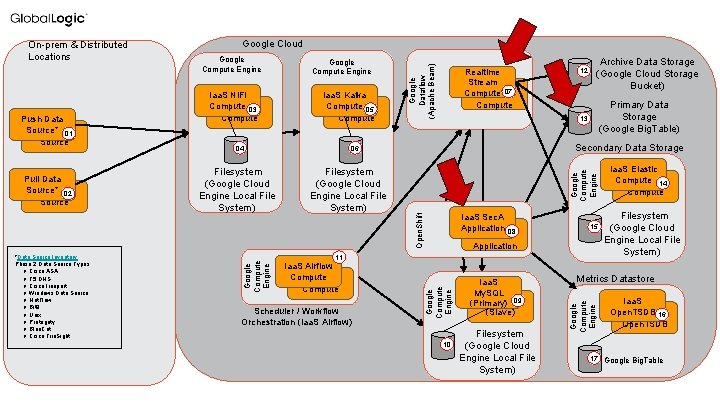

[Architecture diagram. Components labeled on the slide: Push Data Source and Pull Data Source (On-prem & Distributed Locations), OpenShift, IaaS NiFi, IaaS Kafka, Realtime Stream Compute on Google Dataflow (Apache Beam), Primary Data Storage (Google BigTable), Archive Data Storage (Google Cloud Storage Bucket), Secondary Data Storage, IaaS Elastic, IaaS MySQL (Primary and Slave), IaaS SecA Web Application, Scheduler / Workflow Orchestration (IaaS Airflow), Metrics Datastore, IaaS OpenTSDB, Google BigTable, Google Compute Engine, Filesystem (Google Cloud Engine Local File System).]

Data Source Inventory, Phase 2 Data Source Types:
- Cisco ASA
- F5 DNS
- Cisco Ironport
- Windows Data Source
- NetFlow
- Bit9
- Unix
- Protegrity
- BlueCat
- Cisco FireSight

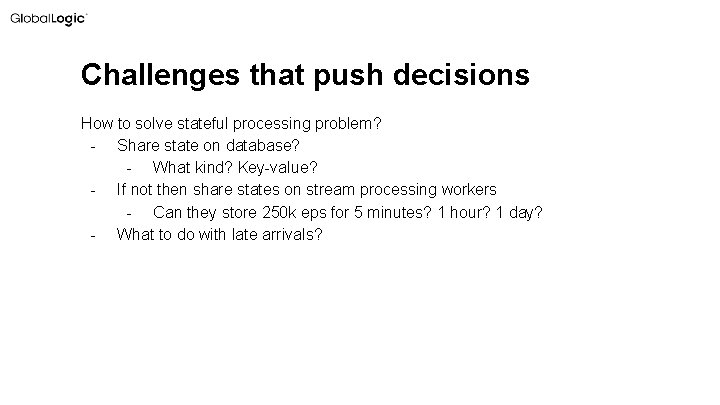

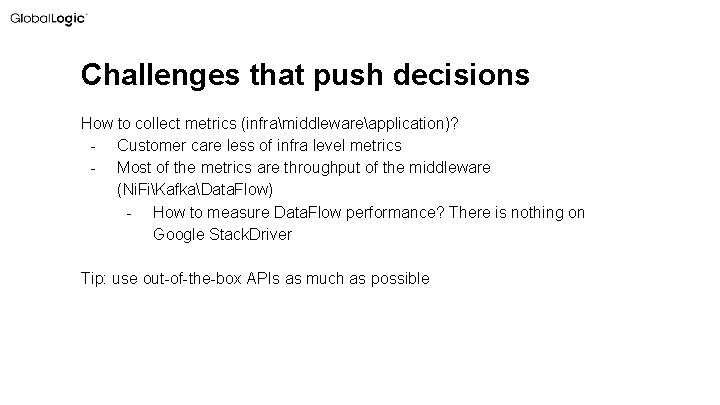

Challenges that push decisions

How to solve stateful processing problem?
- Share state on database?
- What kind? Key-value?
- If not then share states on stream processing workers
- Can they store 250k eps for 5 minutes? 1 hour? 1 day?
- What to do with late arrivals?
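
To put numbers on the "5 minutes? 1 hour? 1 day?" question, a quick back-of-the-envelope calculation helps. The sketch below counts how many events a worker pool would have to hold at 250k eps. The average event size is not given in the slides, so `ASSUMED_EVENT_BYTES` is a purely hypothetical value used only to show how the size estimate would be derived.

```python
EPS = 250_000  # sustained rate quoted in the slides

WINDOWS_SECONDS = {
    "5 minutes": 5 * 60,
    "1 hour": 60 * 60,
    "1 day": 24 * 60 * 60,
}

# Hypothetical average size of one normalized event; not from the slides.
ASSUMED_EVENT_BYTES = 500


def events_in_window(eps, seconds):
    """Number of events that accumulate over the window at a constant rate."""
    return eps * seconds


def state_gib(eps, seconds, bytes_per_event):
    """Raw payload size of the window in GiB, ignoring any storage overhead."""
    return events_in_window(eps, seconds) * bytes_per_event / 2**30


for label, seconds in WINDOWS_SECONDS.items():
    count = events_in_window(EPS, seconds)
    size = state_gib(EPS, seconds, ASSUMED_EVENT_BYTES)
    print(f"{label}: {count:,} events, about {size:,.1f} GiB at {ASSUMED_EVENT_BYTES} B/event")
```

The event counts alone are 75 million for 5 minutes, 900 million for 1 hour and 21.6 billion for 1 day, which explains why the window length drives the choice between worker-local state and an external key-value store.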

How to collect metrics (infra/middleware/application)?
- The customer cares less about infra-level metrics
- Most of the metrics are throughput of the middleware (NiFi/Kafka/DataFlow)
- How to measure DataFlow performance? There is nothing on Google StackDriver

Tip: use out-of-the-box APIs as much as possible

How to measure delay on component?
- Call Kafka API for offsets?
- What to do with NiFi?
- How to measure delay on DataFlow?
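
For the Kafka part of this question, delay is usually derived from consumer lag: the log end offset minus the committed offset, per partition. The sketch below shows only that arithmetic. The offsets are passed in as plain dictionaries (in a real setup they would come from the Kafka client or admin API), the function names are hypothetical, and dividing lag by the consumption rate gives only a rough time estimate.

```python
def consumer_lag(end_offsets, committed_offsets):
    """Per-partition lag = log end offset - committed offset.

    Both arguments map partition id -> offset. A partition without a
    committed offset is treated as fully unread, which is a simplification.
    """
    lag = {}
    for partition, end in end_offsets.items():
        committed = committed_offsets.get(partition, 0)
        lag[partition] = max(end - committed, 0)
    return lag


def estimated_delay_seconds(total_lag, consume_rate_eps):
    """Rough time needed to drain the backlog at the current consume rate."""
    if total_lag == 0:
        return 0.0
    if consume_rate_eps <= 0:
        return float("inf")
    return total_lag / consume_rate_eps


if __name__ == "__main__":
    lag = consumer_lag({0: 1500, 1: 900}, {0: 1000, 1: 900})
    print(lag, estimated_delay_seconds(sum(lag.values()), 250))
```

This covers only the Kafka consumer side; NiFi and DataFlow need their own measurement approach.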

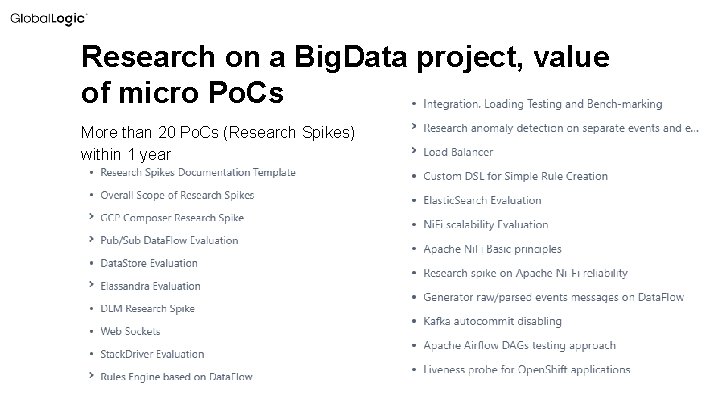

Research on a BigData project, value of micro PoCs

More than 20 PoCs (research spikes) within 1 year

For DataFlow
- Can it handle 250k eps?
- Does Beam fit well?
- Would DataFlow autoscaling work fine?

For GCP Datastore
- Would it handle 250k eps?
- Is it easily accessible?
- Could it be integrated with DataFlow?

For GCP PubSub
- Would it handle 250k eps?
- Can it deliver every message?
- Can it scale up and down?

For AirFlow
- Can we start static stream jobs from AirFlow?
- Can we manage batch jobs via AirFlow by schedule?
- Can we replace Azkaban with AirFlow?
- What kind of resources do we need for AirFlow?

For NiFi overflow (to comply with zero message loss)
- What should NiFi do when the downstream (Kafka) is down?
- What should NiFi do when the downstream (Kafka) just starts throttling?
- Store files to infinite storage
- Process them later
- Do not create extra pressure on Kafka
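
The overflow idea (store when the downstream is unavailable, process later, replay gently) can be illustrated with a small generic sketch. This is not NiFi configuration or NiFi's API. `OverflowForwarder`, the `send` callable and the local spill file are all hypothetical stand-ins for the ingest step, the Kafka producer and the "infinite storage".

```python
import json
import os


class OverflowForwarder:
    """Forward messages downstream; spill them to a file when that fails."""

    def __init__(self, send, spill_path):
        # send(message) -> bool; False means downstream is down or throttling.
        self._send = send
        self._spill_path = spill_path

    def forward(self, message):
        if self._send(message):
            return "sent"
        with open(self._spill_path, "a", encoding="utf-8") as f:
            f.write(json.dumps(message) + "\n")
        return "spilled"

    def replay(self, max_messages):
        """Resend at most max_messages spilled messages, keep the rest on disk.

        The cap keeps the replay from adding extra pressure on a downstream
        that has just recovered.
        """
        if not os.path.exists(self._spill_path):
            return 0
        with open(self._spill_path, encoding="utf-8") as f:
            pending = [json.loads(line) for line in f if line.strip()]
        sent = 0
        while sent < len(pending) and sent < max_messages:
            if not self._send(pending[sent]):
                break
            sent += 1
        with open(self._spill_path, "w", encoding="utf-8") as f:
            for message in pending[sent:]:
                f.write(json.dumps(message) + "\n")
        return sent
```

Two trade-offs are visible even in this sketch: spilled messages are delivered later than newer ones, so ordering is not preserved, and a crash between sending and rewriting the spill file would resend messages, so the downstream has to tolerate duplicates.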

For Replay service
- How to recreate throughput on another environment?
- Execute in parallel or sequentially?
- What kind of UI to provide for the user?

For Kafka Manual Commit: to guarantee zero message loss we have to switch from Auto Commit (the default) to an alternative
- Can we switch to non-autocommit on DataFlow?
- Can we switch to non-autocommit on custom Kafka consuming jobs written in Java (Spring Cloud)?

We found commitOffsetsInFinalize. The problem is in its definition: “It helps with minimizing gaps or duplicate processing of records while restarting a pipeline from scratch. But it does not provide hard processing guarantees.”
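
The reason manual commit matters for zero message loss is the order of operations: the offset is committed only after the records have been processed. The sketch below simulates that order with an in-memory stand-in for one partition. It does not use a Kafka client, and `InMemoryPartition` and `consume` are hypothetical names.

```python
class InMemoryPartition:
    """Stand-in for one Kafka partition plus its committed offset."""

    def __init__(self, records):
        self.records = list(records)
        self.committed = 0  # offset a restarted consumer resumes from


def consume(partition, process, batch_size=2):
    """Process-then-commit loop: commit only after the whole batch succeeded."""
    position = partition.committed
    while position < len(partition.records):
        batch = partition.records[position:position + batch_size]
        for record in batch:
            process(record)  # may raise; the batch then stays uncommitted
        position += len(batch)
        partition.committed = position  # manual commit


if __name__ == "__main__":
    seen = []
    crashed = {"done": False}

    def process(record):
        # Simulate a single crash while handling record "d".
        if record == "d" and not crashed["done"]:
            crashed["done"] = True
            raise RuntimeError("simulated crash")
        seen.append(record)

    partition = InMemoryPartition(["a", "b", "c", "d"])
    try:
        consume(partition, process)
    except RuntimeError:
        pass
    consume(partition, process)  # restart resumes from the committed offset
    print(seen)
```

After the simulated crash nothing is lost, but record "c" is processed twice. Process-then-commit gives at-least-once delivery, so the downstream write has to be idempotent or deduplicated.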

NFRs on a BigData project

- No message loss
- 250k eps, with 1M eps spikes
- All secrets in Hashicorp Appliance with OWASP best practices
- Static Code Analysis
- End-to-End TLS for all connectivity
- No-downtime application update

DevOps NFRs
- Service Discovery (via Consul)
- Circuit Breaker (via Hystrix/Resilience4j)
- Health Check (Spring Cloud)
- Start pod on OpenShift without any dependency (lazy start), give 200 response and fail later
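
For readers unfamiliar with the Circuit Breaker item, here is a minimal sketch of the pattern in Python. It is not the Hystrix or Resilience4j API. The class, its parameters and the injected `clock` are hypothetical and exist only to show the closed, open and half-open behavior.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self._failure_threshold = failure_threshold
        self._reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_timeout:
                # Open: fail fast instead of hitting the broken dependency.
                raise CircuitOpenError("circuit is open")
            # Timeout elapsed: half-open, let one trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self._failure_threshold:
                self._opened_at = self._clock()  # open, or re-open after a failed trial
            raise
        # Success closes the circuit and resets the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

A breaker like this pairs naturally with the lazy-start requirement: the pod can come up and answer health checks while calls to a missing dependency fail fast until it appears.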

Good things that helped a lot on a project

Extra team for CEP
- 3-5 people
- Isolate from other members
- Core functionality first, integration later

- Custom data generator
  - Custom scenarios
  - Throughput generation
- Custom stream manager
  - Start/stop/restart

- Keep your software design and architecture up-to-date
- Only live schemas in your Wiki, no static images
- Do code review for everything

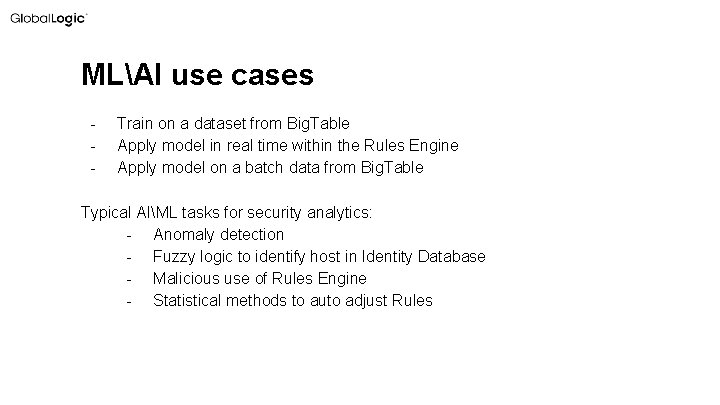

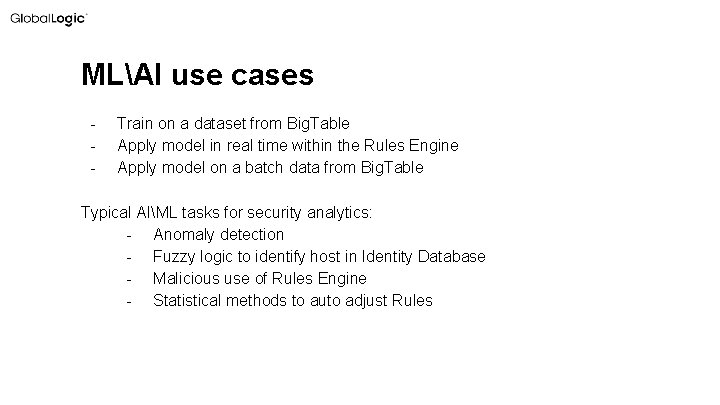

A place of ML/AI in the system

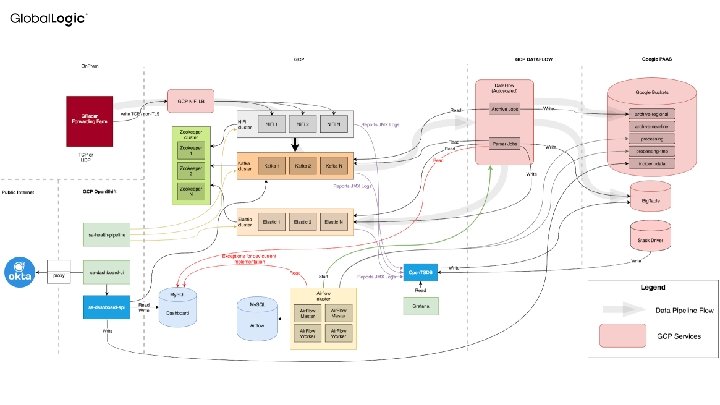


ML/AI use cases
- Train on a dataset from BigTable
- Apply model in real time within the Rules Engine
- Apply model on batch data from BigTable

Typical AI/ML tasks for security analytics:
- Anomaly detection
- Fuzzy logic to identify host in Identity Database
- Malicious use of Rules Engine
- Statistical methods to auto adjust Rules

Conclusions

- See something unknown? Do a micro PoC
- Avoid mutable objects in Big Data
- Limit the scope to the real deliverable product
- Requirements too fuzzy? Make your own!
- DevOps are your best friends (QA too)
- Do not use Gerrit
- Sketch everything before you start developing

Q&A session

Thank you!