How Emerging Memory Technologies Will Have You Rethinking Algorithm Design


- Slides: 45

How Emerging Memory Technologies Will Have You Rethinking Algorithm Design
Phillip B. Gibbons, Carnegie Mellon University
PODC ’16 Keynote Talk, July 28, 2016

50 Years of Algorithms Research

…has focused on settings in which reads and writes to memory have equal cost.

But what if they have very DIFFERENT costs? How would that impact algorithm design?

How Emerging Memory Technologies Will Have You Rethinking Algorithm Design © Phillip B. Gibbons 3

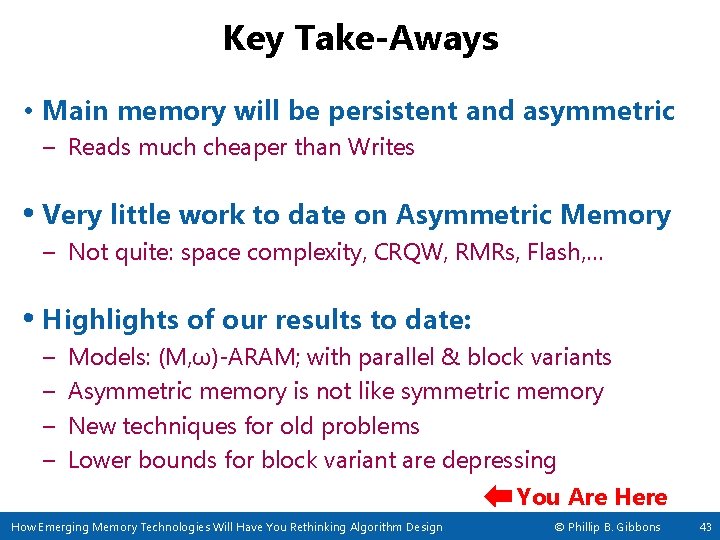

Key Take-Aways

- Main memory will be persistent and asymmetric
  - Reads much cheaper than writes
- Very little work to date on asymmetric memory
  - Not quite: space complexity, CRQW, RMRs, Flash, …
- Highlights of our results to date:
  - Models: (M, ω)-ARAM, with parallel and block variants
  - Asymmetric memory is not like symmetric memory
  - New techniques for old problems
  - Lower bounds for block variant are depressing

Emerging Memory Technologies

Emerging Memory Technologies

Motivation:

- DRAM (today’s main memory) is volatile
- DRAM energy cost is significant (~35% of DC energy)
- DRAM density (bits/area) is limited

Promising candidates:

- Phase-Change Memory (PCM)
- Spin-Torque Transfer Magnetic RAM (STT-RAM)
- Memristor-based Resistive RAM (ReRAM)
- Conductive-bridging RAM (CBRAM)
- 3D XPoint

Key properties:

- Persistent, significantly lower energy, can be higher density
- Read latencies approaching DRAM, byte-addressable

Another Key Property: Writes More Costly than Reads

In these emerging memory technologies, bits are stored as “states” of the given material:

- No energy to retain state
- Small energy to read state
  - Low current for short duration
- Large energy to change state
  - High current for long duration

Writes incur higher energy costs, higher latency, lower per-DIMM bandwidth (power envelope constraints), and endurance problems. (Figure label: PCM)

Cost Examples from Literature (Speculative)

- PCM: writes 15x slower, 15x less bandwidth, 10x more energy
- PCM L3 cache: writes up to 40x slower, 17x more energy
- STT-RAM cell: writes 71x slower, 1000x more energy at the material level
- ReRAM DIMM: writes 117x slower, 125x more energy
- CBRAM: writes 50x more energy

Sources: [KPMWEVNBPA 14] [DJX 09] [XDJX 11] [GZDCH 13]

Costs are a well-kept secret by vendors.

Are We There Yet?

- 3D XPoint will first come out in SSD form factor
  - No date announced: expectation is 2017
- Later will come out in DIMM form factor
  - Main memory: loads/stores on memory bus
  - No date announced: perhaps 2018
- Energy/density/persistence advantages: projected to become dominant main memory

In the near future, main memory will be persistent and asymmetric.

Write-Efficient Algorithm Design

Goal: design write-efficient algorithms (write-limited, write-avoiding)

- Fewer writes → lower energy, faster

How we model the asymmetry: in asymmetric memory, writes are ω times more costly than reads.
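To make the cost model concrete, here is a minimal Python sketch that charges 1 per read and ω per write. The function name `asymmetric_cost` and the operation counts in the example (an idealized sort that reads and writes n log n times versus one that reads n log n times but writes only n times) are illustrative assumptions, not figures from the talk.

```python
import math


def asymmetric_cost(reads, writes, omega):
    """Total cost when each read costs 1 and each write costs omega."""
    return reads + omega * writes


# Hypothetical operation counts for n keys (assumed, for illustration only).
n = 1024
omega = 10
n_log_n = n * int(math.log2(n))

# A sort that writes as often as it reads.
write_heavy = asymmetric_cost(reads=n_log_n, writes=n_log_n, omega=omega)

# A sort that keeps the same reads but performs only n writes.
write_efficient = asymmetric_cost(reads=n_log_n, writes=n, omega=omega)

print(write_heavy, write_efficient)
```

Under these assumed counts the write term dominates the first total, which is why reducing writes matters more as ω grows.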

Warm up: Write-efficient Sorting

- Solution:
  - Insert each key in random order into a binary search tree
  - An in-order tree traversal yields the sorted array
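The warm-up can be sketched in Python as follows. This is an illustration under stated assumptions rather than the talk's implementation: the names `Node` and `bst_sort` are hypothetical, each key comparison is counted as one read, each new tree node and each output element is counted as one write, and the shuffle and the traversal stack are assumed to live in cheap symmetric memory and are not charged.

```python
import random


class Node:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None


def bst_sort(keys, seed=0):
    """Sort by random-order BST insertion plus an in-order traversal.

    Returns (sorted_keys, reads, writes) under the counting assumptions
    described in the lead-in.
    """
    order = list(keys)
    random.Random(seed).shuffle(order)  # random insertion order (uncharged)

    reads = writes = 0
    root = None
    for key in order:
        node = Node(key)
        writes += 1  # one write for the new node and the link to it
        if root is None:
            root = node
            continue
        cur = root
        while True:
            reads += 1  # one key comparison per visited node
            if key < cur.key:
                if cur.left is None:
                    cur.left = node
                    break
                cur = cur.left
            else:
                if cur.right is None:
                    cur.right = node
                    break
                cur = cur.right

    # Iterative in-order traversal; one write per output element.
    out, stack, cur = [], [], root
    while stack or cur is not None:
        while cur is not None:
            stack.append(cur)
            cur = cur.left
        cur = stack.pop()
        out.append(cur.key)
        writes += 1
        cur = cur.right
    return out, reads, writes


sorted_keys, reads, writes = bst_sort([7, 3, 9, 1, 4, 8, 2])
```

With this accounting the write count is exactly 2n regardless of input order, while the number of comparisons depends on the tree shape (expected O(n log n) for a random insertion order).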

Asymmetric Read-Write Costs: Prior Work (1)

- Space complexity classes such as L
  - Can repeatedly read input
  - Only limited amount of working space
  - What’s missing: doesn’t charge for number of writes (OK to write every step)
- Similarly, streaming algorithms have limited space
  - But OK to write every step

Asymmetric Read-Write Costs: Prior Work (2)

- Reducing writes to contended shared memory variables
  - Multiprocessor cache coherence serializes writes, but reads can occur in parallel
  - Concurrent-read-queue-write (CRQW) model [GMR 98]
  - Contention in asynchronous shared memory algorithms [DHW 97]
  - Etc.
  - What’s missing: cost of writes to even un-contended variables (OK to write every step to disjoint variables, i.e., disjoint cache lines)
- Similarly, reducing writes to minimize locking/synchronization
  - But OK for sequential code to write like a maniac!

![Asymmetric Read-Write Costs: Prior Work (3)](https://slidetodoc.com/presentation_image_h/e2a6166d6e8cfc62e987fa6e695d0a25/image-13.jpg)

Asymmetric Read-Write Costs: Prior Work (3)

- Remote Memory References (RMR) [YA 95]
  - Only charge for remote memory references, i.e., references that require an interconnect traversal
  - In cache-coherent multiprocessors, only charge for:
    - A read(x) that gets its value from a write(x) by another process
    - A write(x) that invalidates a copy of x at another process
  - Thus, writes make it costly
  - What’s missing: doesn’t charge for number of writes

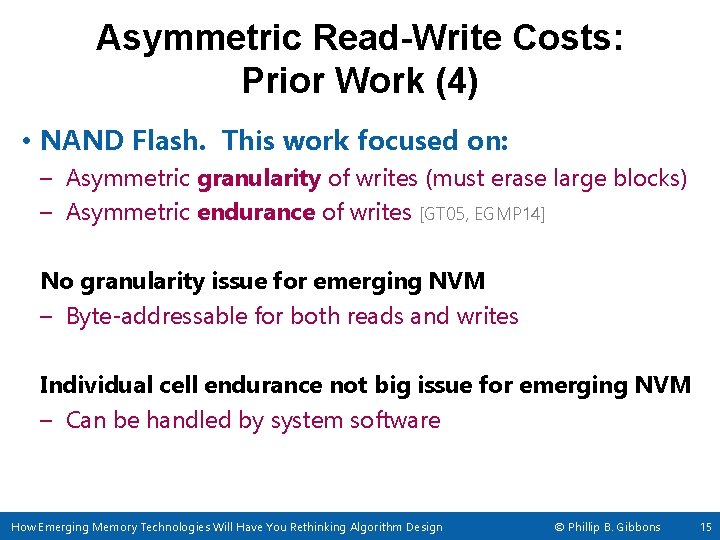

Asymmetric Read-Write Costs: Prior Work (4)

- NAND Flash. This work focused on:
  - Asymmetric granularity of writes (must erase large blocks)
  - Asymmetric endurance of writes [GT 05, EGMP 14]
- No granularity issue for emerging NVM
  - Byte-addressable for both reads and writes
- Individual cell endurance not a big issue for emerging NVM
  - Can be handled by system software

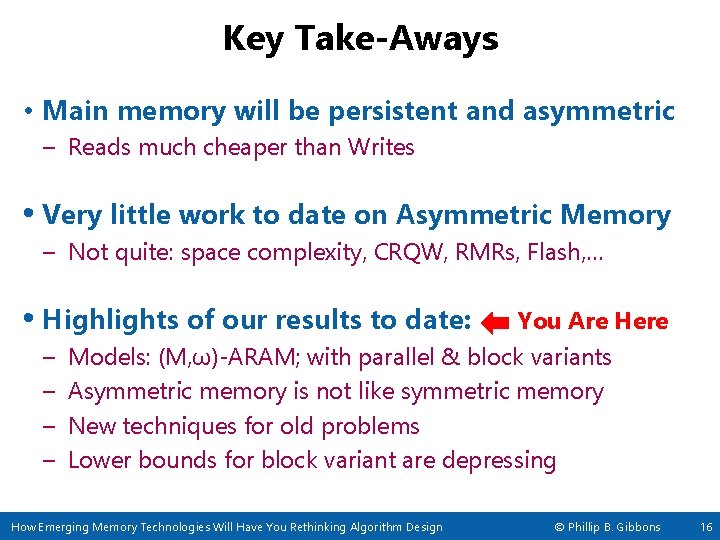

Key Take-Aways

- Main memory will be persistent and asymmetric
  - Reads much cheaper than writes
- Very little work to date on asymmetric memory
  - Not quite: space complexity, CRQW, RMRs, Flash, …
- Highlights of our results to date (You Are Here):
  - Models: (M, ω)-ARAM, with parallel and block variants
  - Asymmetric memory is not like symmetric memory
  - New techniques for old problems
  - Lower bounds for block variant are depressing

![[BFGGS 16] Symmetric Memory, CPU, Asymmetric Memory](https://slidetodoc.com/presentation_image_h/e2a6166d6e8cfc62e987fa6e695d0a25/image-16.jpg)

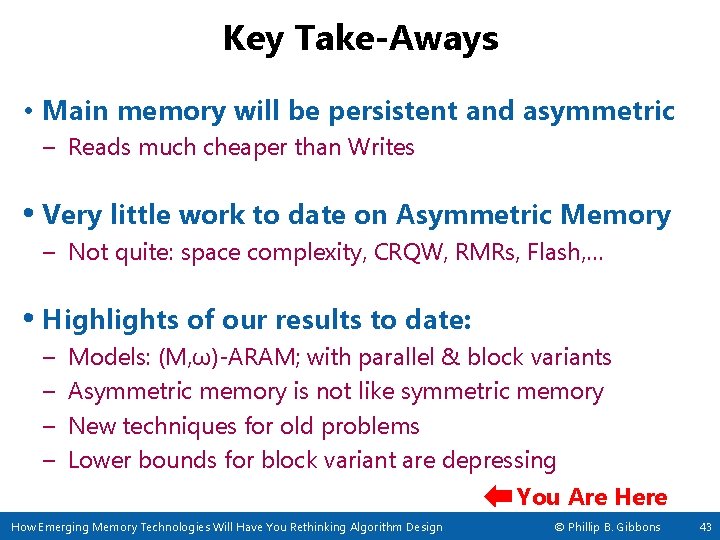

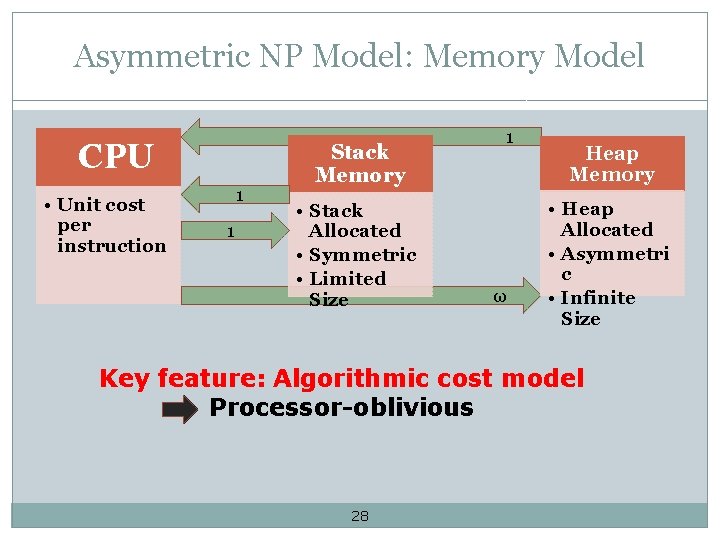

[BFGGS 16]

Figure labels: CPU; Symmetric Memory; Asymmetric Memory; numeric labels 1, 1

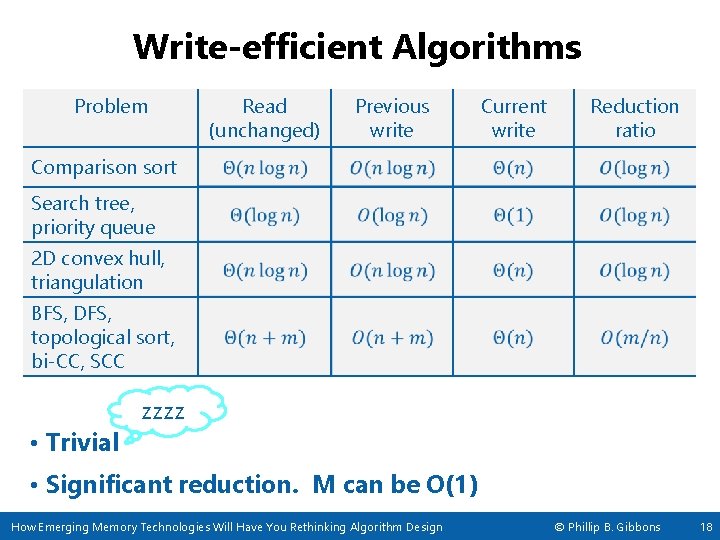

Write-efficient Algorithms

Table columns: Problem; Read (unchanged); Previous write; Current write; Reduction ratio

Table rows (problems):

- Comparison sort
- Search tree, priority queue
- 2D convex hull, triangulation
- BFS, DFS, topological sort, bi-CC, SCC

Notes:

- Trivial zzzz
- Significant reduction. M can be O(1)

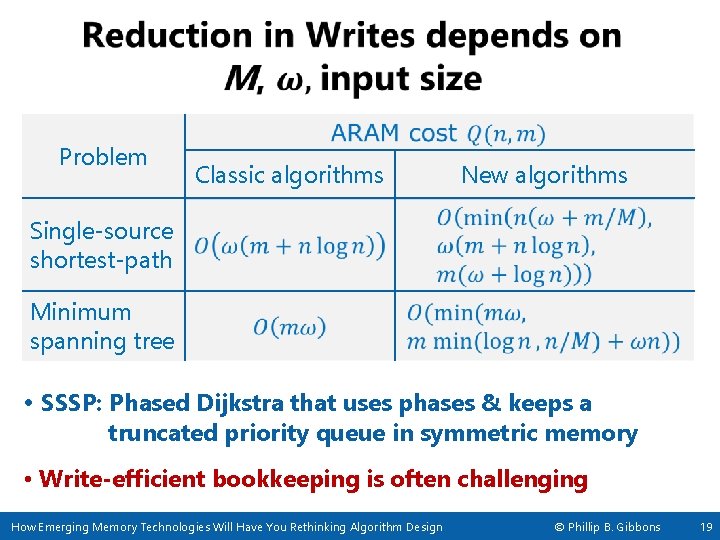

Table columns: Problem; Classic algorithms; New algorithms

Table rows (problems):

- Single-source shortest-path
- Minimum spanning tree

Notes:

- SSSP: phased Dijkstra that uses phases and keeps a truncated priority queue in symmetric memory
- Write-efficient bookkeeping is often challenging

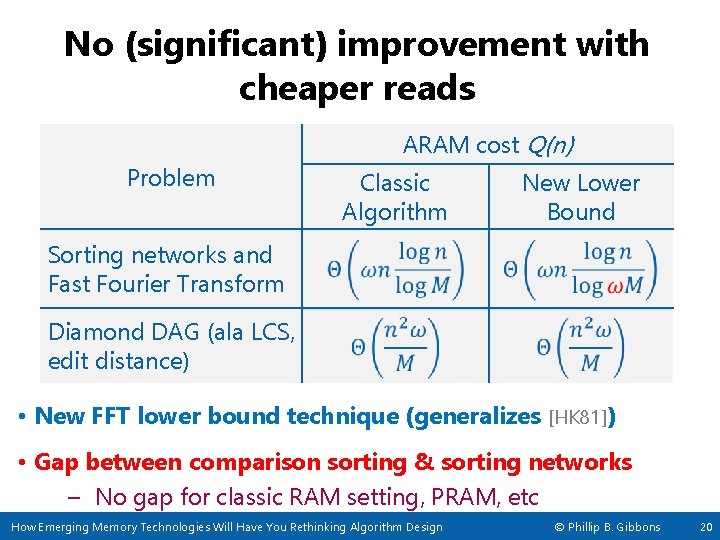

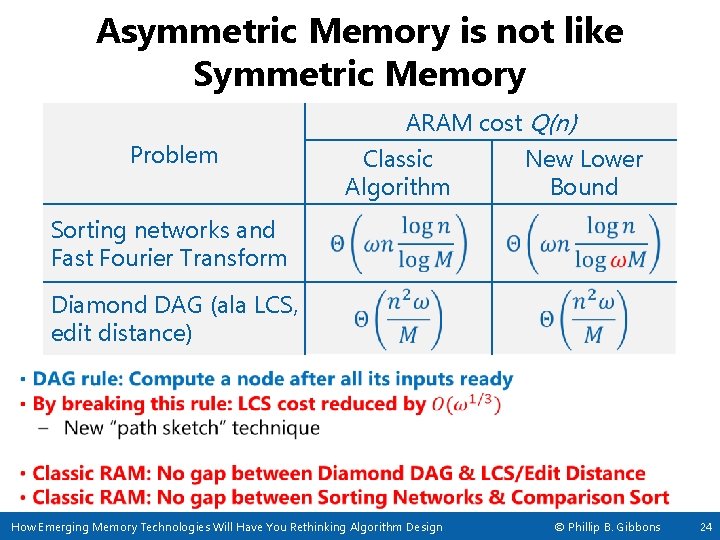

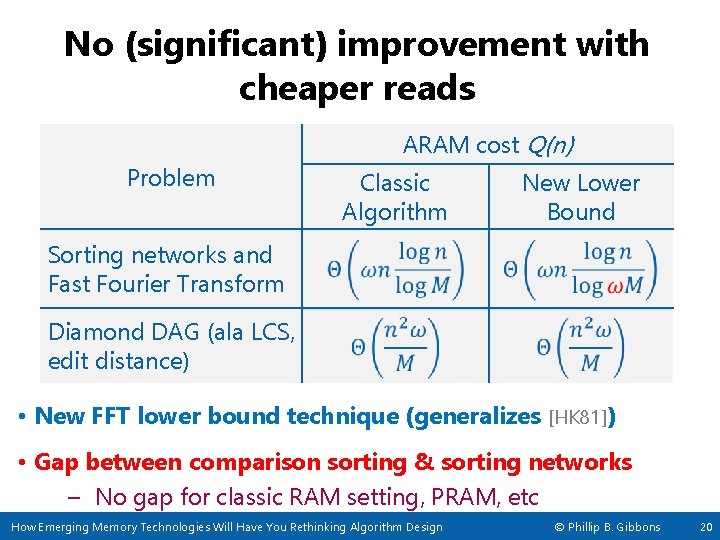

No (significant) improvement with cheaper reads

Table (ARAM cost Q(n)), columns: Problem; Classic Algorithm; New Lower Bound

Table rows (problems):

- Sorting networks and Fast Fourier Transform
- Diamond DAG (a la LCS, edit distance)

Notes:

- New FFT lower bound technique (generalizes [HK 81])
- Gap between comparison sorting and sorting networks
  - No gap for classic RAM setting, PRAM, etc.

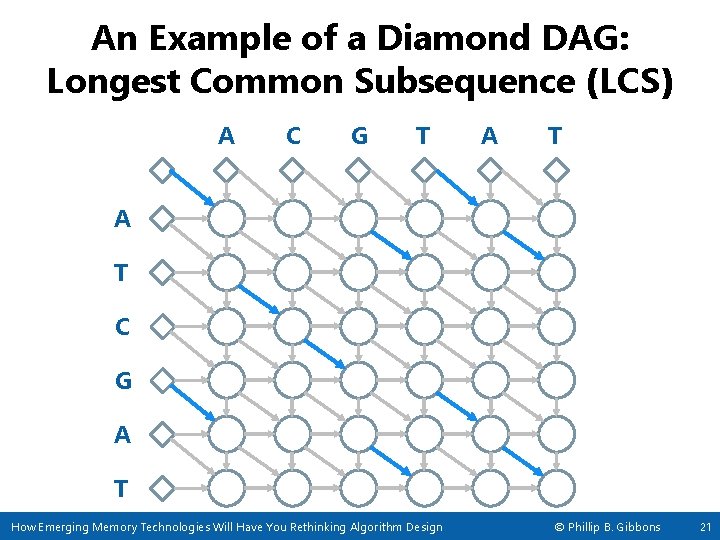

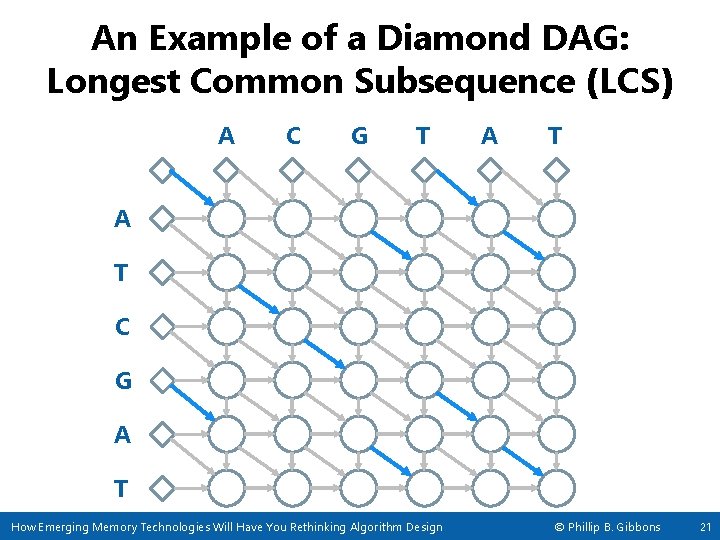

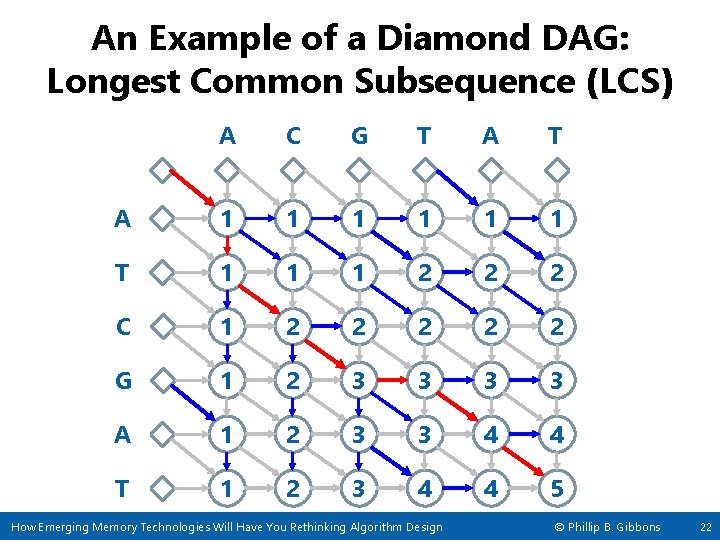

An Example of a Diamond DAG: Longest Common Subsequence (LCS)

|   | A | C | G | T | A | T |
|---|---|---|---|---|---|---|
| A | 1 | 1 | 1 | 1 | 1 | 1 |
| T | 1 | 1 | 1 | 2 | 2 | 2 |
| C | 1 | 2 | 2 | 2 | 2 | 2 |
| G | 1 | 2 | 3 | 3 | 3 | 3 |
| A | 1 | 2 | 3 | 3 | 4 | 4 |
| T | 1 | 2 | 3 | 4 | 4 | 5 |
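The LCS table can be reproduced with the textbook dynamic program, in which each cell depends on its left, upper, and upper-left neighbours (the dependence pattern that gives the diamond DAG its shape). The sketch below assumes the two strings on the slide are ATCGAT (rows) and ACGTAT (columns); that reading is inferred from the surviving row and column labels, and `lcs_table` is a hypothetical helper name.

```python
def lcs_table(x, y):
    """Classic LCS dynamic program.

    table[i][j] holds the LCS length of x[:i] and y[:j]; row 0 and
    column 0 are the empty-prefix base cases. Every cell is written once,
    so this classic version performs len(x) * len(y) table writes.
    """
    table = [[0] * (len(y) + 1) for _ in range(len(x) + 1)]
    for i in range(1, len(x) + 1):
        for j in range(1, len(y) + 1):
            if x[i - 1] == y[j - 1]:
                # Matching characters extend the diagonal neighbour.
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                # Otherwise take the better of the upper and left neighbours.
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table


table = lcs_table("ATCGAT", "ACGTAT")
for row_label, row in zip("ATCGAT", table[1:]):
    print(row_label, row[1:])
```

The bottom-right cell is the LCS length of the two full strings.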

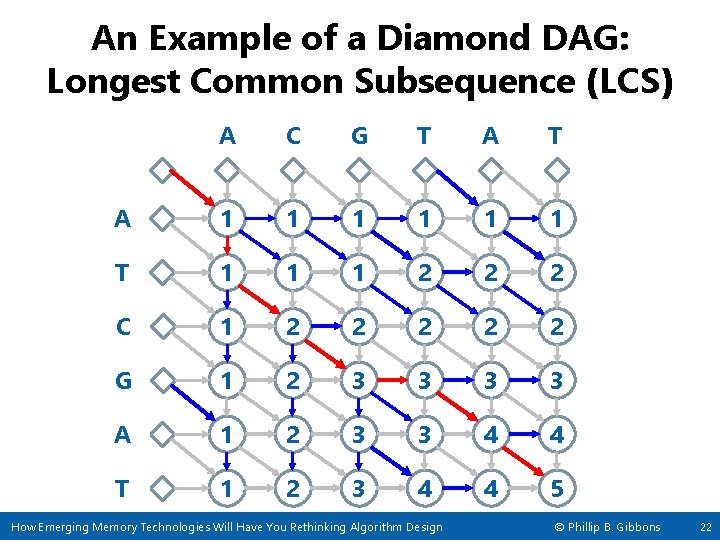

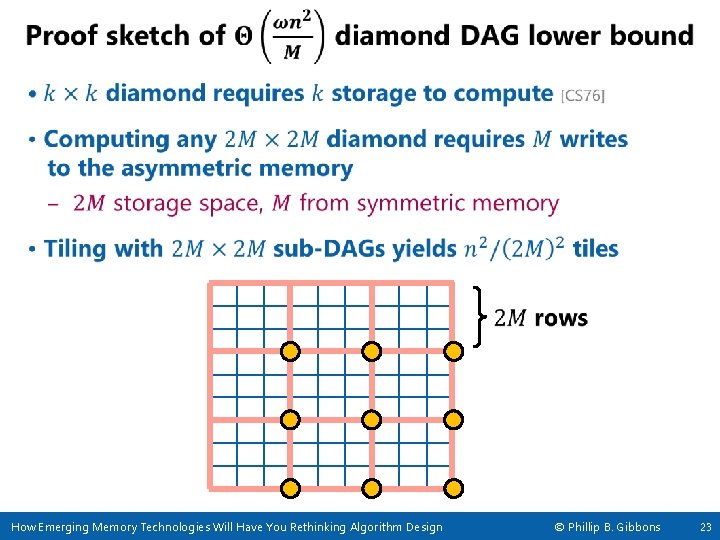


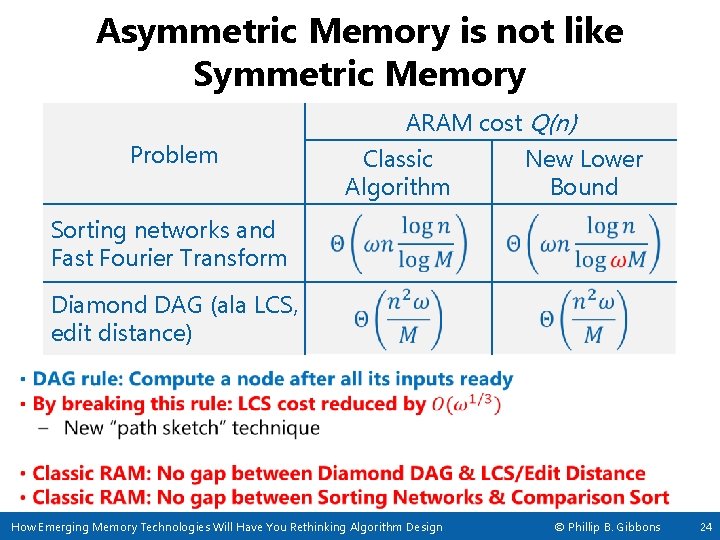

Asymmetric Memory is not like Symmetric Memory

Table (ARAM cost Q(n)), columns: Problem; Classic Algorithm; New Lower Bound

Table rows (problems):

- Sorting networks and Fast Fourier Transform
- Diamond DAG (a la LCS, edit distance)

![Asymmetric Shared Memory [BFGGS 15] [BBFGGMS 16]](https://slidetodoc.com/presentation_image_h/e2a6166d6e8cfc62e987fa6e695d0a25/image-24.jpg)

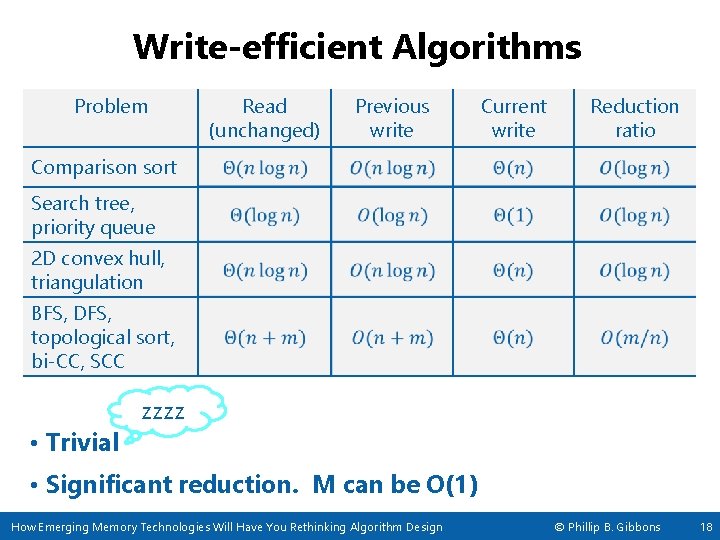

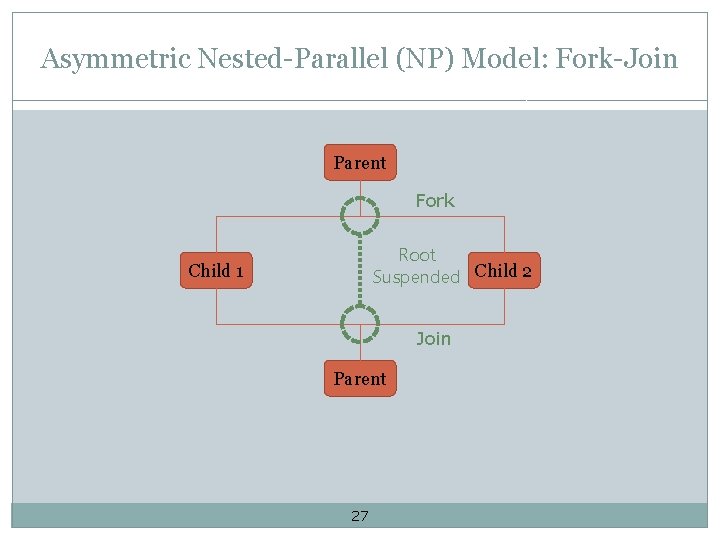

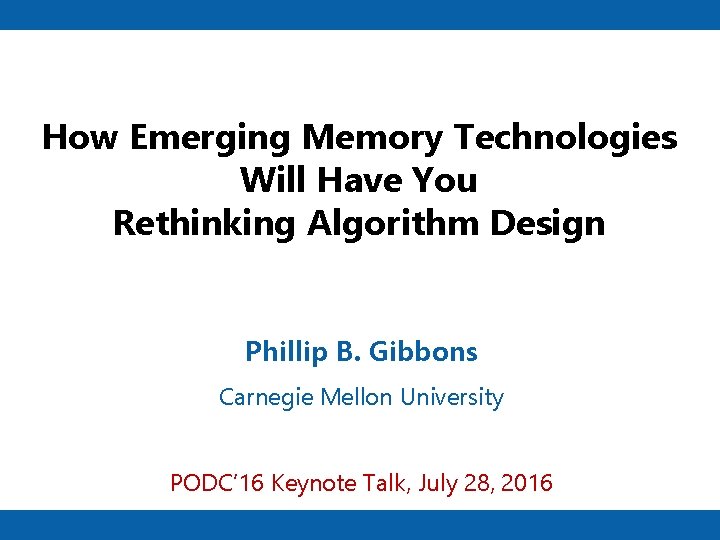

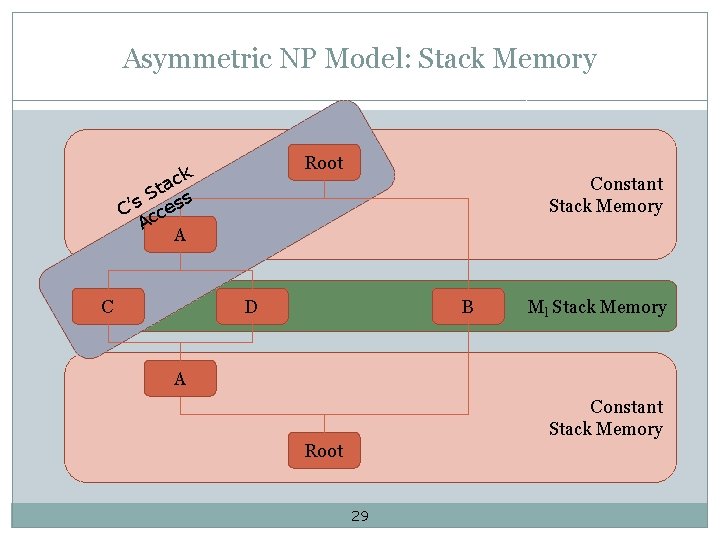

Asymmetric Shared Memory [BFGGS 15] [BBFGGMS 16]

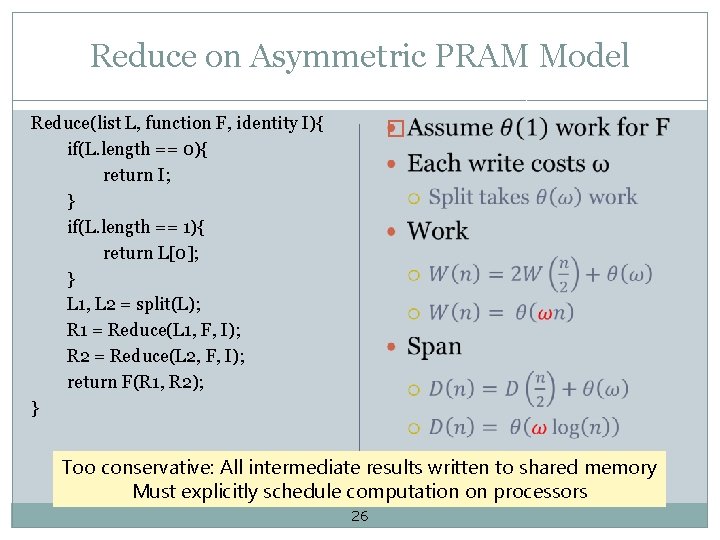

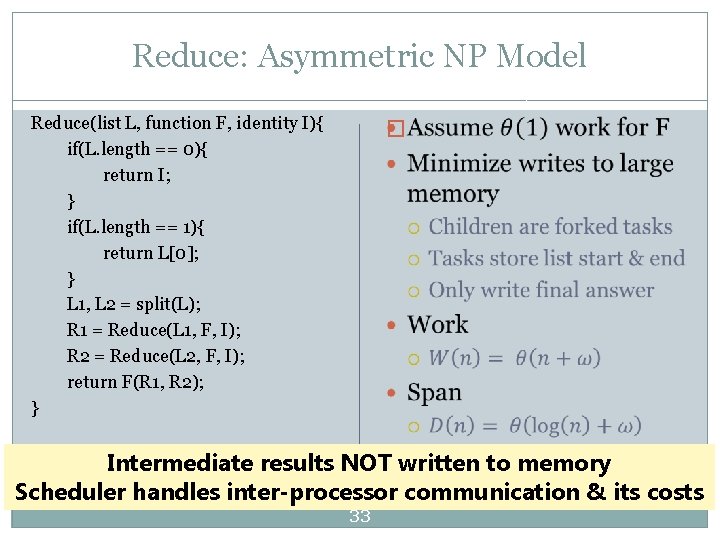

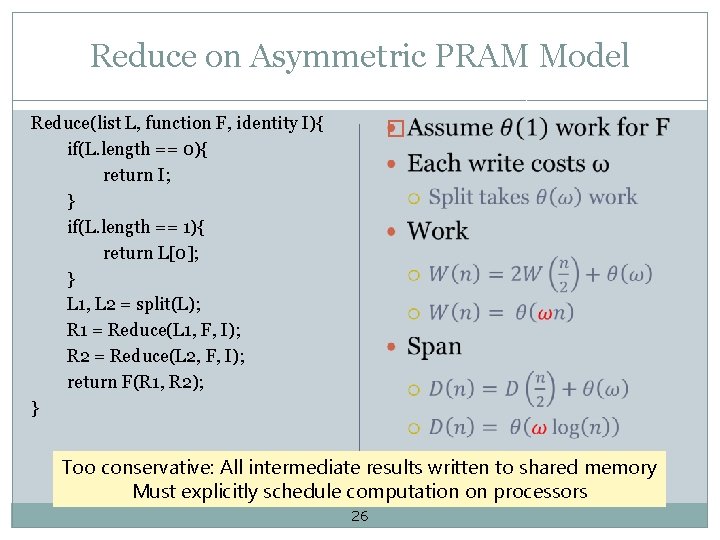

Reduce on Asymmetric PRAM Model

    Reduce(list L, function F, identity I){
      if(L.length == 0){ return I; }
      if(L.length == 1){ return L[0]; }
      L1, L2 = split(L);
      R1 = Reduce(L1, F, I);
      R2 = Reduce(L2, F, I);
      return F(R1, R2);
    }

- Too conservative: all intermediate results written to shared memory
- Must explicitly schedule computation on processors

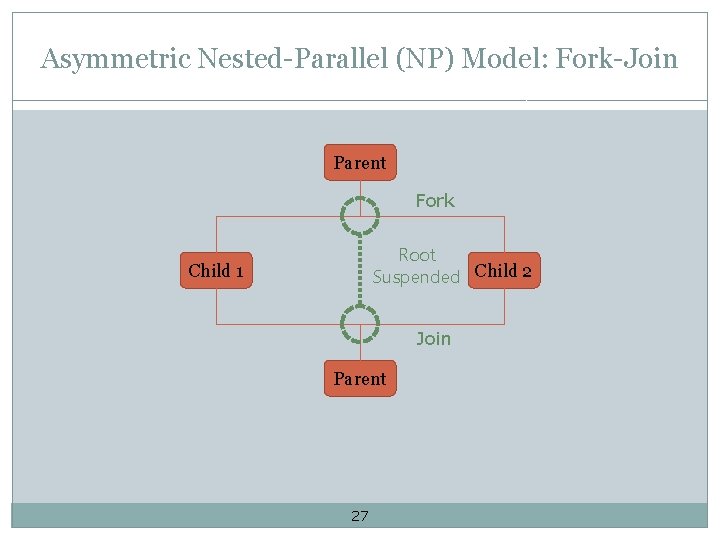

Asymmetric Nested-Parallel (NP) Model: Fork-Join

Figure labels: Root; Parent; Fork; Child 1; Child 2; Suspended; Join

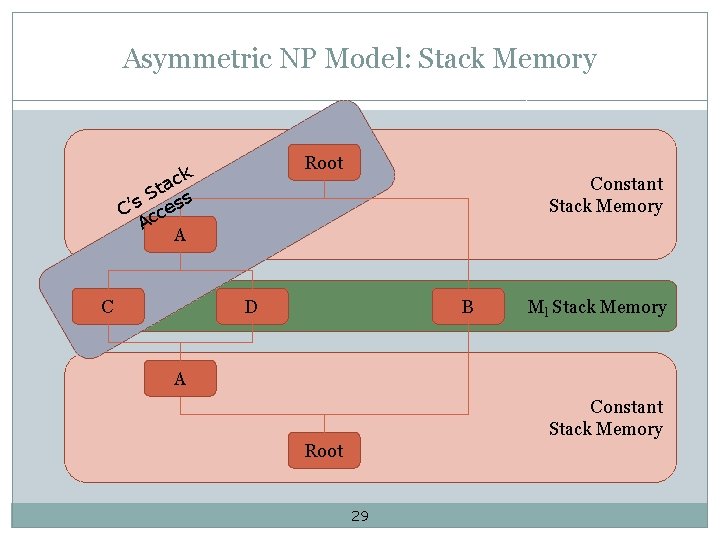

Asymmetric NP Model: Memory Model

- CPU
  - Unit cost per instruction
- Stack Memory (cost labels: 1, 1)
  - Stack allocated
  - Symmetric
  - Limited size
- Heap Memory (cost labels: 1, ω)
  - Heap allocated
  - Asymmetric
  - Infinite size

Key feature: algorithmic cost model; processor-oblivious

Asymmetric NP Model: Stack Memory

Figure labels: Root; nodes A, B, C, D; C’s Stack Access; Constant Stack Memory; Ml Stack Memory

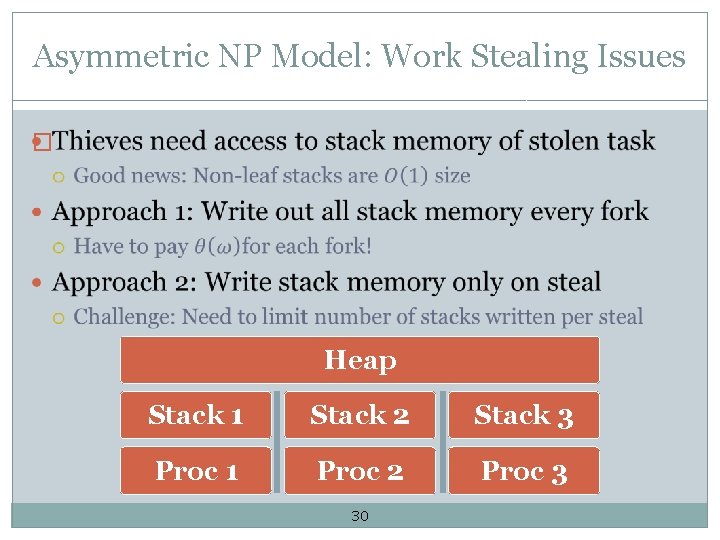

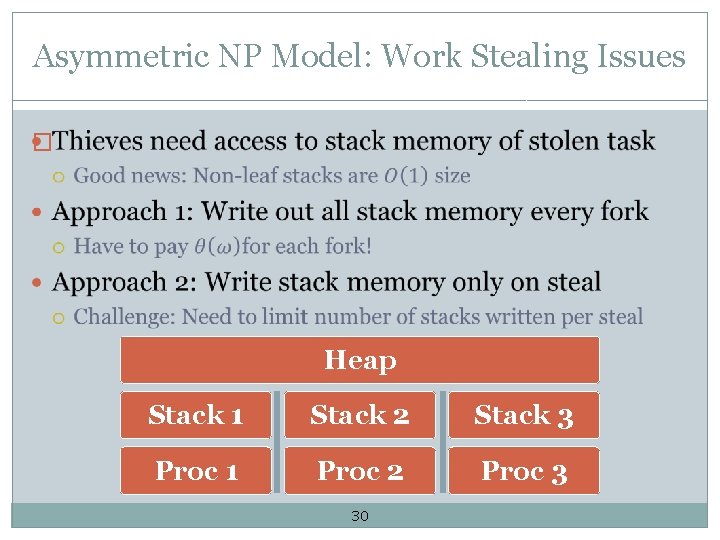

Asymmetric NP Model: Work Stealing Issues

Figure labels: Heap; Stack 1, Stack 2, Stack 3; Proc 1, Proc 2, Proc 3

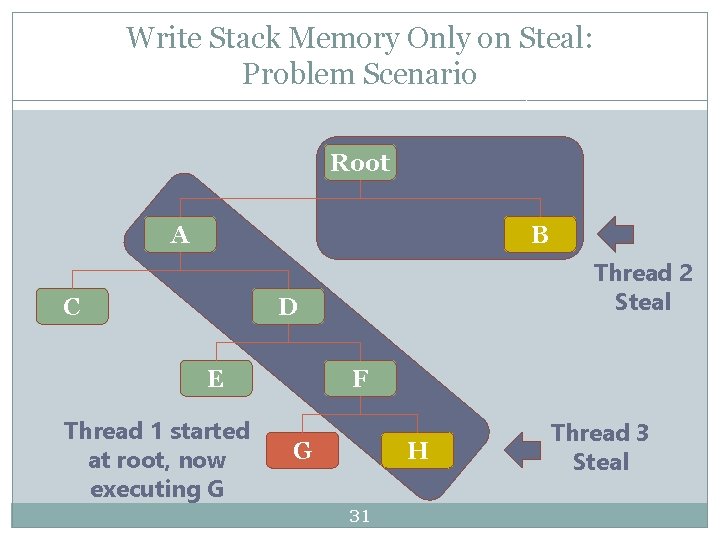

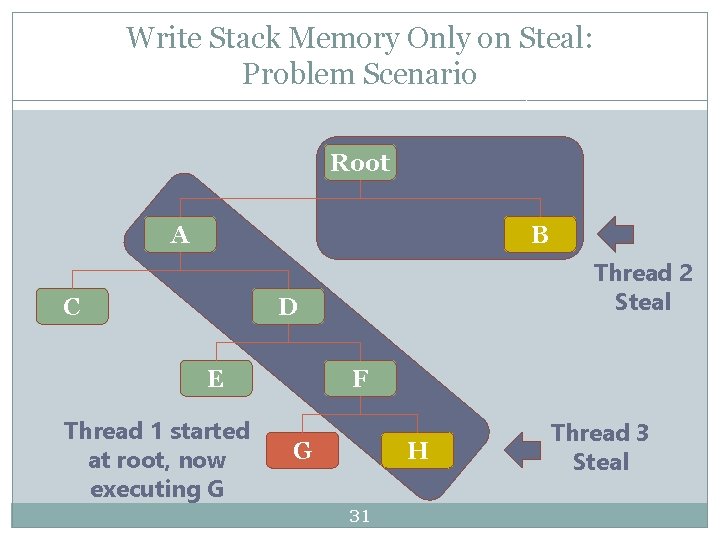

Write Stack Memory Only on Steal: Problem Scenario

Figure labels: Root; nodes A, B, C, D, E, F, G, H; Thread 2 Steal; Thread 3 Steal; Thread 1 started at root, now executing G

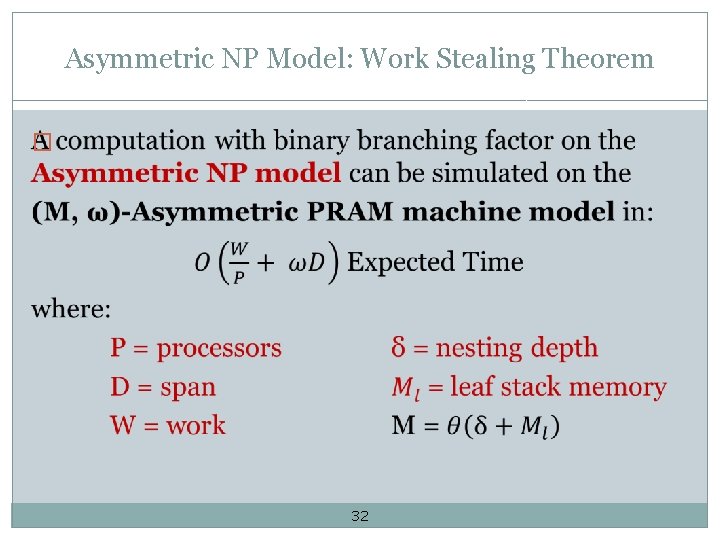

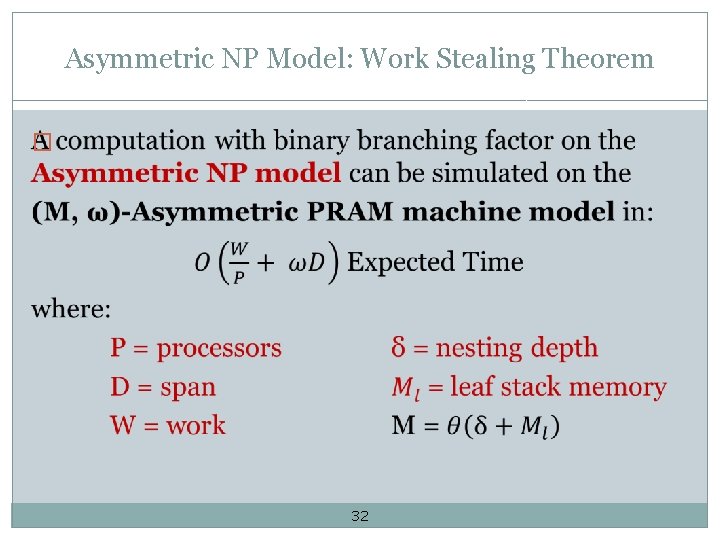

Asymmetric NP Model: Work Stealing Theorem

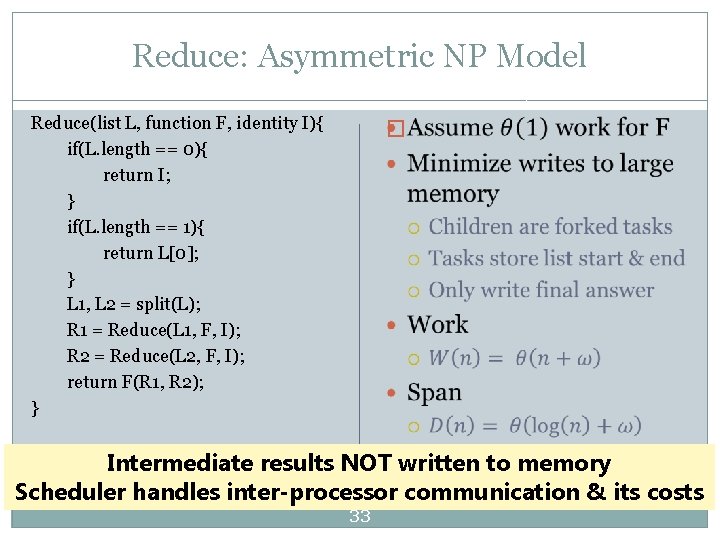

Reduce: Asymmetric NP Model

    Reduce(list L, function F, identity I){
      if(L.length == 0){ return I; }
      if(L.length == 1){ return L[0]; }
      L1, L2 = split(L);
      R1 = Reduce(L1, F, I);
      R2 = Reduce(L2, F, I);
      return F(R1, R2);
    }

- Intermediate results NOT written to memory
- Scheduler handles inter-processor communication and its costs
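A runnable rendering of the Reduce pseudocode might look like the Python sketch below. It is sequential and only illustrates the recursion structure: the two recursive calls are independent, so a fork-join runtime could run them in parallel, and the intermediate results `r1` and `r2` are local variables rather than entries in a shared array. Passing index bounds instead of calling `split` is a choice made here to avoid copying the list; the name `reduce_dc` is hypothetical.

```python
from operator import add


def reduce_dc(items, f, identity):
    """Divide-and-conquer reduce of items with an associative function f."""

    def go(lo, hi):
        if hi - lo == 0:
            return identity
        if hi - lo == 1:
            return items[lo]
        mid = (lo + hi) // 2
        # These two calls are independent and could be forked in parallel.
        r1 = go(lo, mid)
        r2 = go(mid, hi)
        # r1 and r2 live only in this frame's local (stack) variables.
        return f(r1, r2)

    return go(0, len(items))


total = reduce_dc([1, 2, 3, 4, 5], add, 0)
largest = reduce_dc([3, 9, 2], max, float("-inf"))
```

Because each frame holds a constant number of local values, the only result that has to be written out is the final one.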

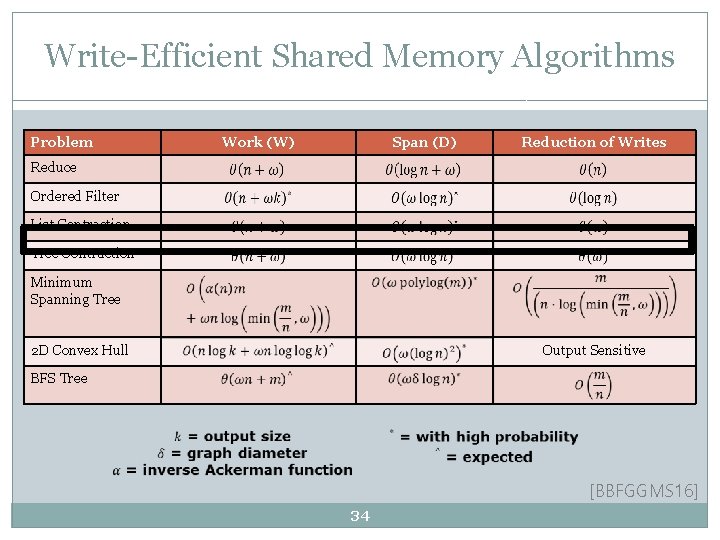

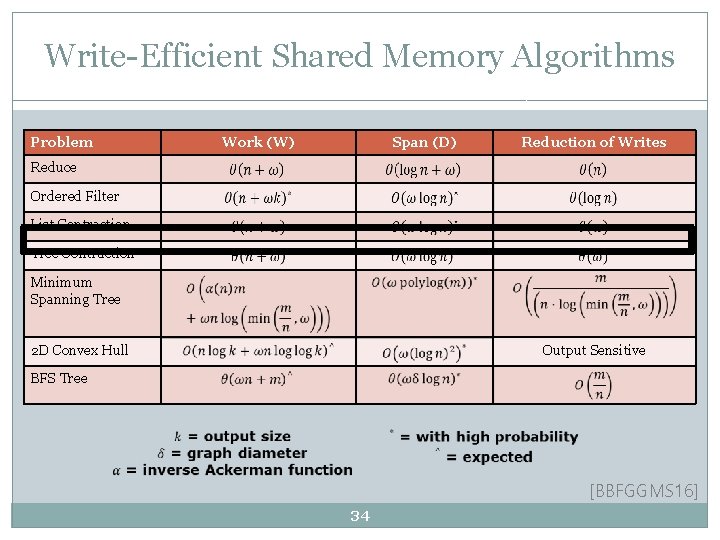

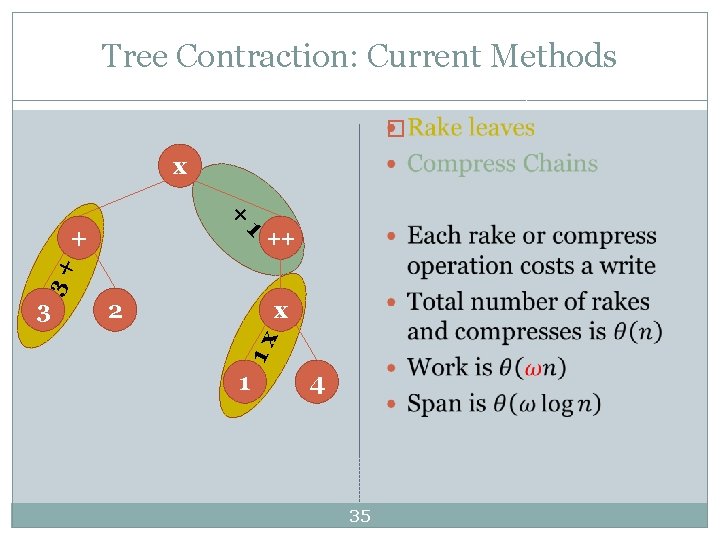

Write-Efficient Shared Memory Algorithms [BBFGGMS 16]

Table columns: Problem; Work (W); Span (D); Reduction of Writes

Table rows (problems):

- Reduce
- Ordered Filter
- List Contraction
- Tree Contraction
- Minimum Spanning Tree
- 2D Convex Hull (label: Output Sensitive)
- BFS Tree

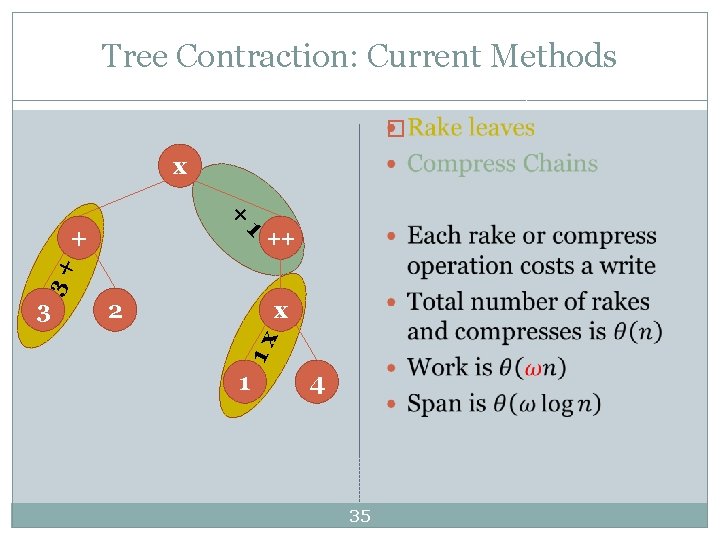

Tree Contraction: Current Methods

Figure: expression-tree example (node labels: x, +, 1, 2, 3, 4)

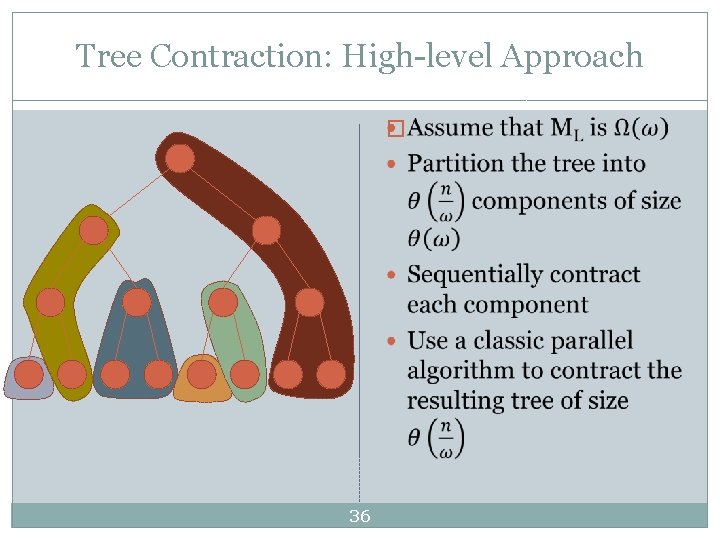

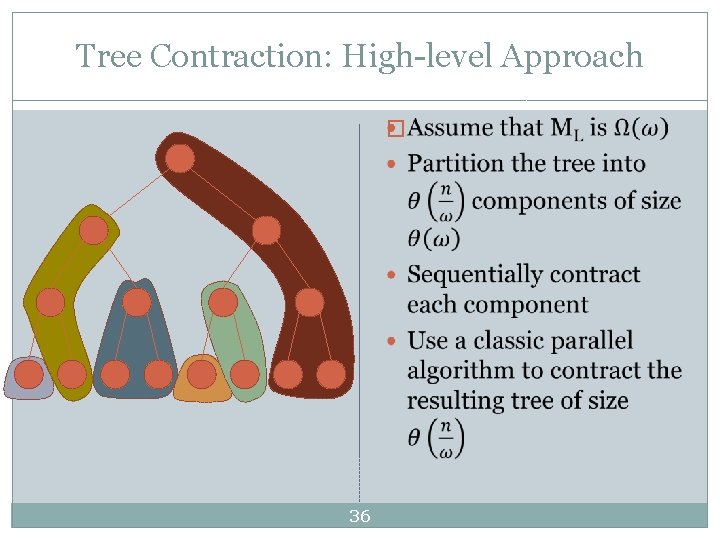

Tree Contraction: High-level Approach

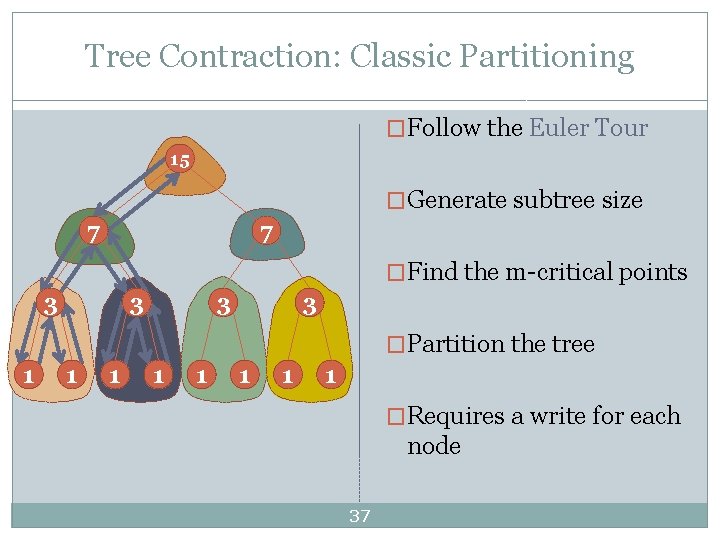

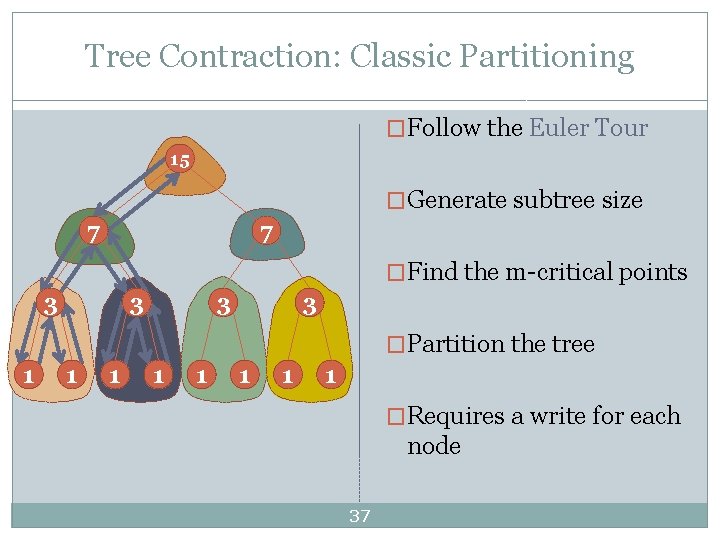

Tree Contraction: Classic Partitioning

- Follow the Euler Tour
- Generate subtree size
- Find the m-critical points
- Partition the tree
- Requires a write for each node

Figure: tree annotated with subtree sizes (15; 7, 7; 3, 3; 1, 1, 1, 1)
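The classic partitioning steps can be illustrated with a small sequential Python sketch. Several things here are assumptions rather than content from the slide: the tree is given as child lists, a post-order pass stands in for the Euler tour, and a node is taken to be m-critical when it is not a leaf and ⌈size(v)/m⌉ exceeds ⌈size(c)/m⌉ for every child c, which is the definition commonly used in the tree-contraction literature. The helper names are hypothetical.

```python
def subtree_sizes(children, root=0):
    """Compute subtree sizes bottom-up; returns (sizes, number_of_writes)."""
    order, stack = [], [root]
    while stack:  # parents are appended to order before their descendants
        v = stack.pop()
        order.append(v)
        stack.extend(children[v])

    size = [0] * len(children)
    writes = 0
    for v in reversed(order):  # so reversed order visits children first
        size[v] = 1 + sum(size[c] for c in children[v])
        writes += 1  # the classic method stores one size per node
    return size, writes


def ceil_div(a, b):
    return (a + b - 1) // b


def m_critical(children, size, m):
    """Nodes meeting the assumed m-critical definition from the lead-in."""
    return [
        v
        for v in range(len(children))
        if children[v]
        and all(ceil_div(size[v], m) > ceil_div(size[c], m) for c in children[v])
    ]


# Complete binary tree on 15 nodes in heap order, matching the sizes on the slide.
children = [[2 * v + 1, 2 * v + 2] if v < 7 else [] for v in range(15)]
size, writes = subtree_sizes(children)
critical = m_critical(children, size, m=4)
```

The write counter equals the number of nodes, which is the per-node write cost the slide points out.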

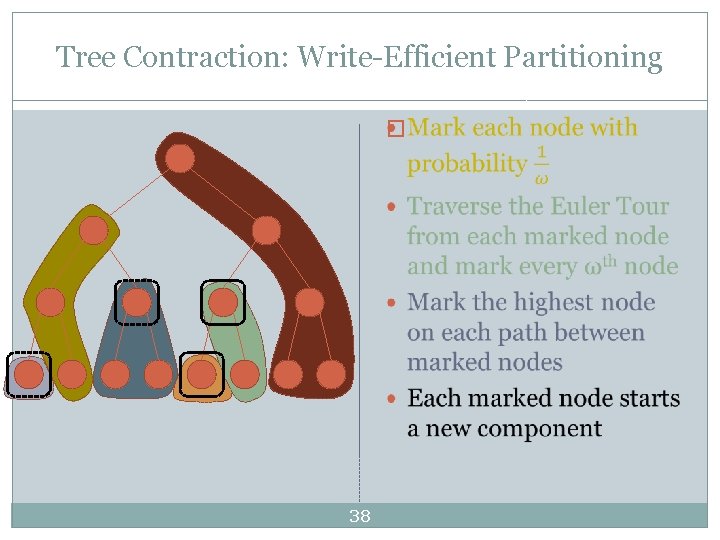

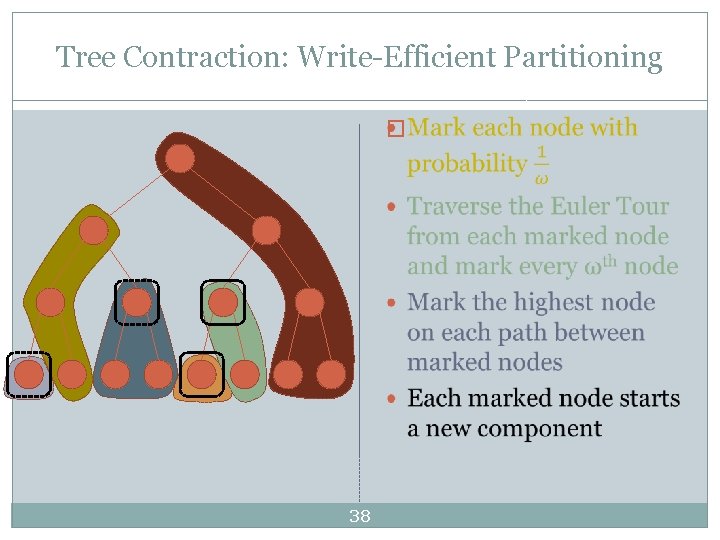

Tree Contraction: Write-Efficient Partitioning

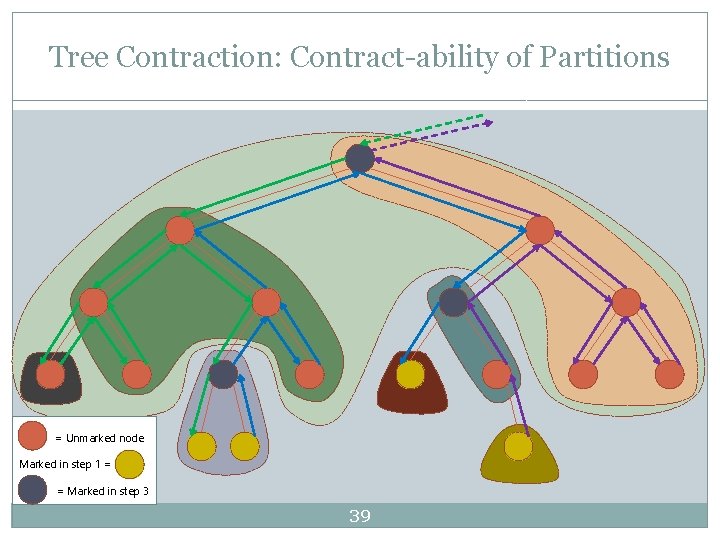

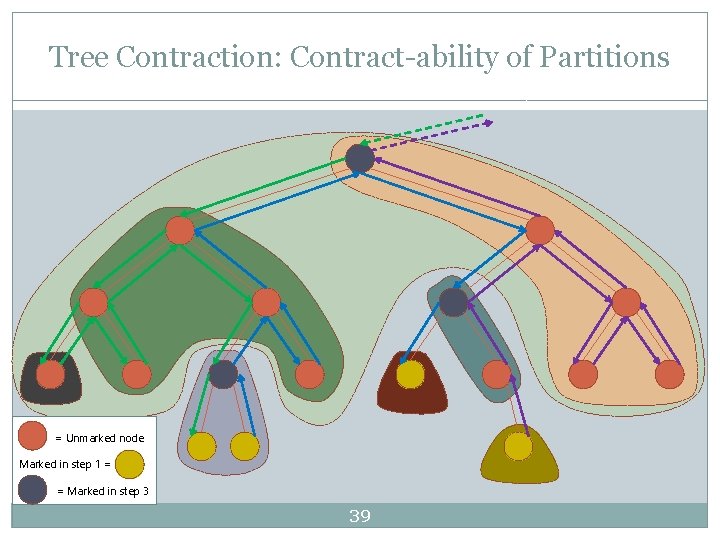

Tree Contraction: Contract-ability of Partitions

Figure legend: Unmarked node; Marked in step 1; Marked in step 3

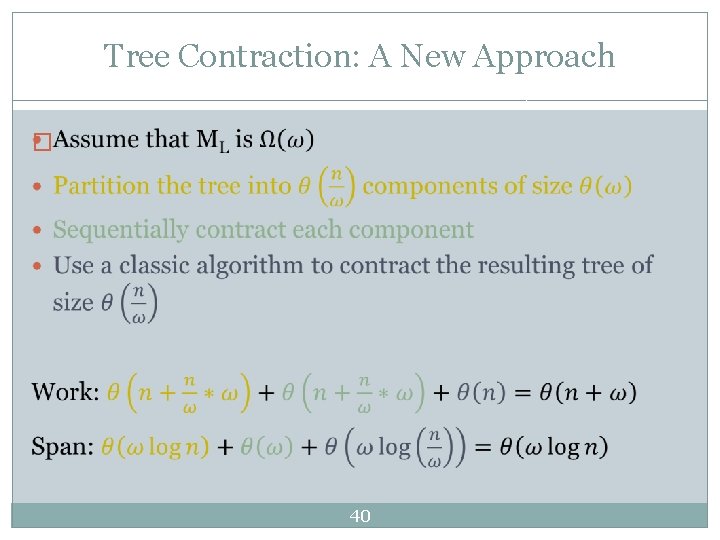

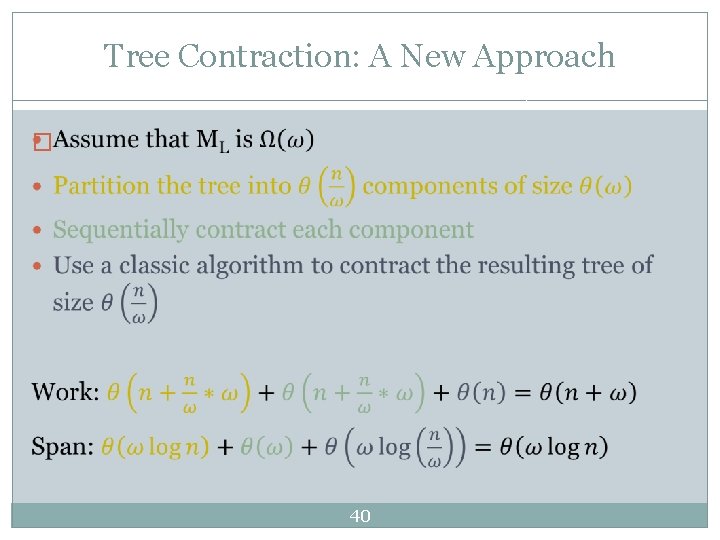

Tree Contraction: A New Approach

![The Asymmetric External Memory model [BFGGS 15]](https://slidetodoc.com/presentation_image_h/e2a6166d6e8cfc62e987fa6e695d0a25/image-40.jpg)

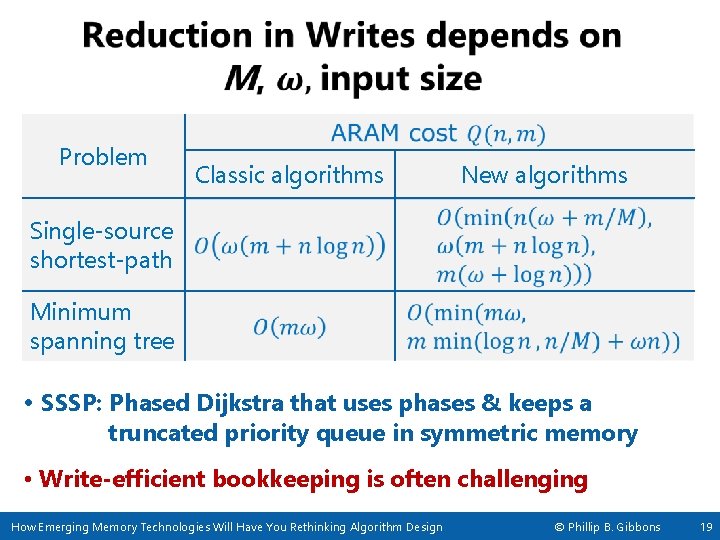

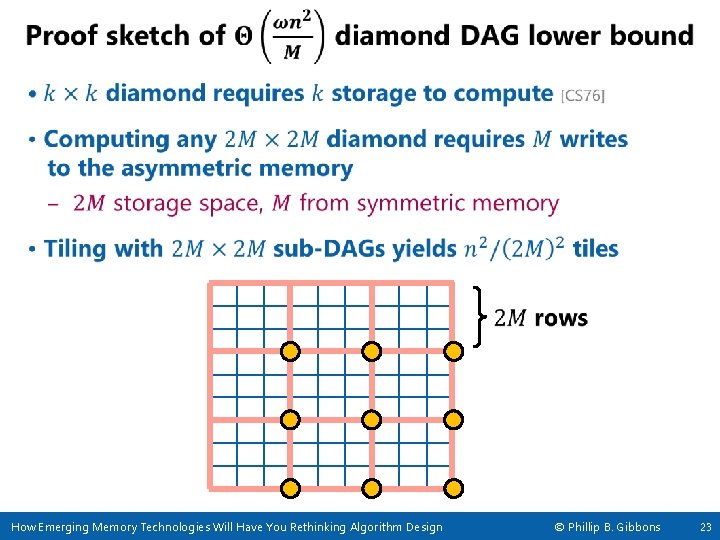

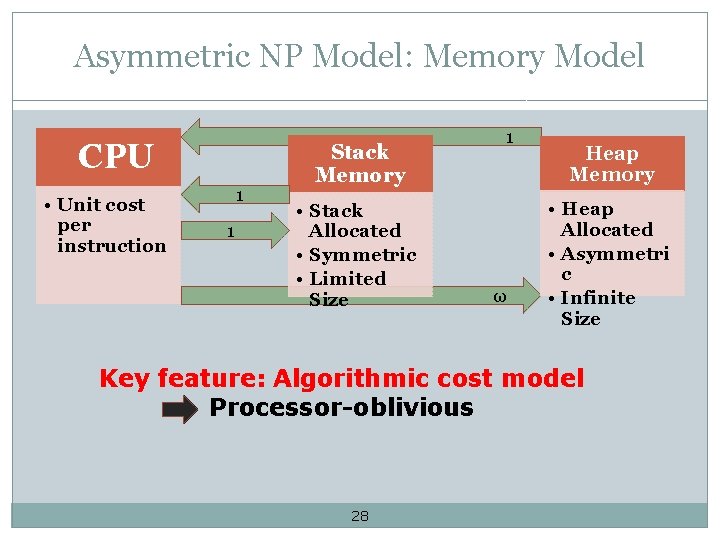

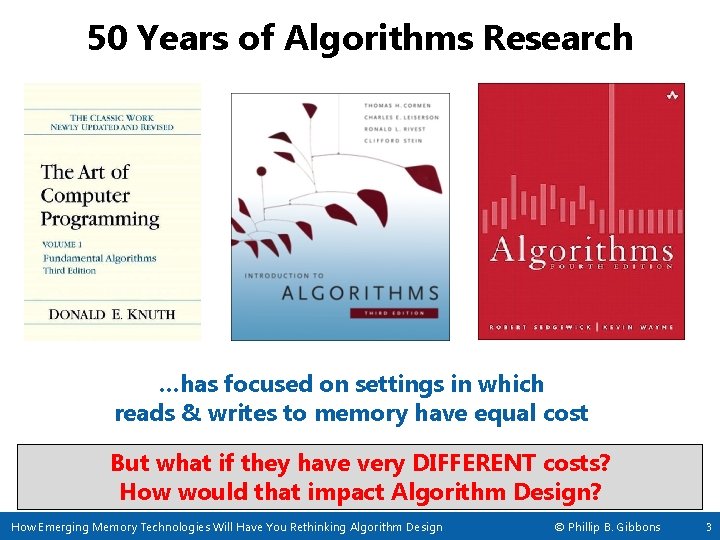

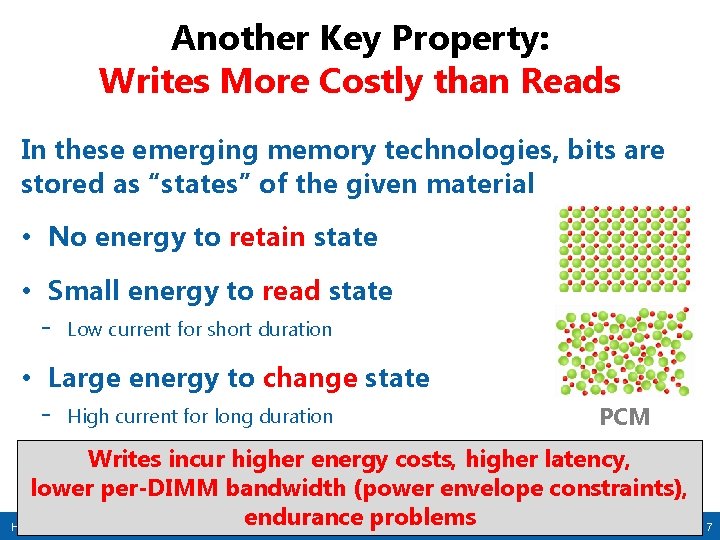

The Asymmetric External Memory model [BFGGS 15]

Figure labels: CPU; Small-memory; Asymmetric Large-memory; numeric labels 1, 0, 1

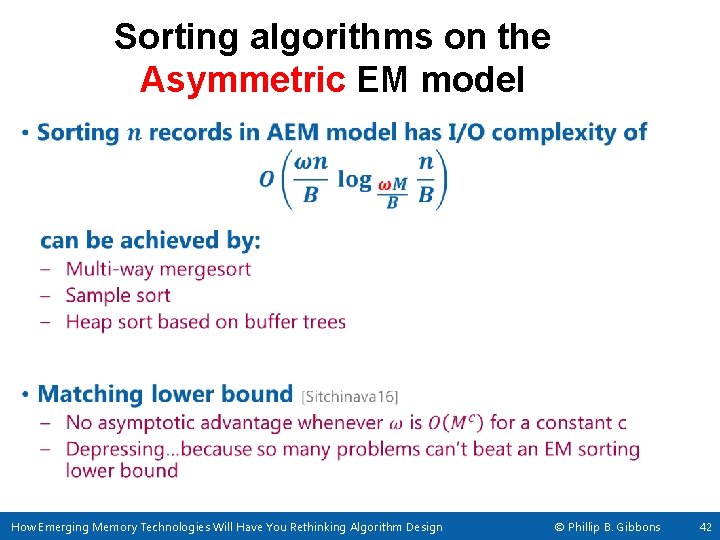

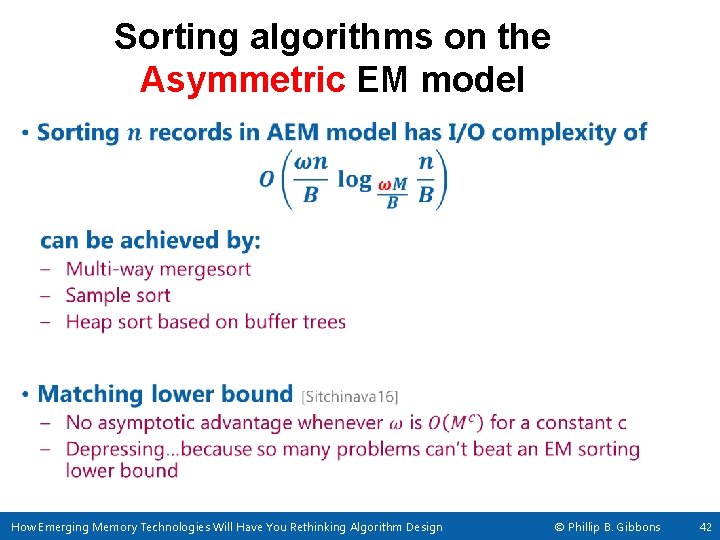

Sorting algorithms on the Asymmetric EM model

Key Take-Aways

- Main memory will be persistent and asymmetric
  - Reads much cheaper than writes
- Very little work to date on asymmetric memory
  - Not quite: space complexity, CRQW, RMRs, Flash, …
- Highlights of our results to date:
  - Models: (M, ω)-ARAM, with parallel and block variants
  - Asymmetric memory is not like symmetric memory
  - New techniques for old problems
  - Lower bounds for block variant are depressing (You Are Here)

Thanks to Collaborators & Sponsors

Collaborators (credit to Yan and Charlie for some of these slides):

- Naama Ben-David
- Guy Blelloch
- Jeremy Fineman
- Yan Gu
- Charles McGuffey
- Julian Shun

Sponsors:

- National Science Foundation
- Natural Sciences and Engineering Research Council of Canada
- Miller Institute for Basic Research in Sciences at UC Berkeley
- Intel (via ISTC for Cloud Computing & new ISTC for Visual Cloud)

![References (in order of first appearance)](https://slidetodoc.com/presentation_image_h/e2a6166d6e8cfc62e987fa6e695d0a25/image-44.jpg)

References (in order of first appearance)

- [KPMWEVNBPA 14] Ioannis Koltsidas, Roman Pletka, Peter Mueller, Thomas Weigold, Evangelos Eleftheriou, Maria Varsamou, Athina Ntalla, Elina Bougioukou, Aspasia Palli, and Theodore Antonakopoulos. PSS: A Prototype Storage Subsystem based on PCM. NVMW, 2014.
- [DJX 09] Xiangyu Dong, Norman P. Jouppi, and Yuan Xie. PCRAMsim: System-level performance, energy, and area modeling for phase-change RAM. ACM ICCAD, 2009.
- [XDJX 11] Cong Xu, Xiangyu Dong, Norman P. Jouppi, and Yuan Xie. Design implications of memristor-based RRAM cross-point structures. IEEE DATE, 2011.
- [GZDCH 13] Nad Gilbert, Yanqing Zhang, John Dinh, Benton Calhoun, and Shane Hollmer. A 0.6V 8 pJ/write non-volatile CBRAM macro embedded in a body sensor node for ultra low energy applications. IEEE VLSIC, 2013.
- [GMR 98] Phillip B. Gibbons, Yossi Matias, and Vijaya Ramachandran. The Queue-Read Queue-Write PRAM Model: Accounting for Contention in Parallel Algorithms. SIAM J. on Computing 28(2), 1998.
- [DHW 97] Cynthia Dwork, Maurice Herlihy, and Orli Waarts. Contention in Shared Memory Algorithms. ACM STOC, 1993.
- [YA 95] Jae-Heon Yang and James H. Anderson. A Fast, Scalable Mutual Exclusion Algorithm. Distributed Computing 9(1), 1995.
- [GT 05] Eran Gal and Sivan Toledo. Algorithms and data structures for flash memories. ACM Computing Surveys 37(2), 2005.
- [EGMP 14] David Eppstein, Michael T. Goodrich, Michael Mitzenmacher, and Pawel Pszona. Wear minimization for cuckoo hashing: How not to throw a lot of eggs into one basket. ACM SEA, 2014.
- [BFGGS 16] Guy E. Blelloch, Jeremy T. Fineman, Phillip B. Gibbons, Yan Gu, and Julian Shun. Efficient Algorithms with Asymmetric Read and Write Costs. ESA, 2016.
- [HK 81] Jia-Wei Hong and H. T. Kung. I/O complexity: The red-blue pebble game. ACM STOC, 1981.
- [CS 76] Stephen Cook and Ravi Sethi. Storage requirements for deterministic polynomial time recognizable languages. JCSS 13(1), 1976.

![References (cont.)](https://slidetodoc.com/presentation_image_h/e2a6166d6e8cfc62e987fa6e695d0a25/image-45.jpg)

References (cont.)

- [BFGGS 15] Guy E. Blelloch, Jeremy T. Fineman, Phillip B. Gibbons, Yan Gu, and Julian Shun. Sorting with Asymmetric Read and Write Costs. ACM SPAA, 2015.
- [BBFGGMS 16] Naama Ben-David, Guy E. Blelloch, Jeremy T. Fineman, Phillip B. Gibbons, Yan Gu, Charles McGuffey, and Julian Shun. Parallel Algorithms for Asymmetric Read-Write Costs. ACM SPAA, 2016.
- [Sitchinava 16] Nodari Sitchinava, personal communication, June 2016.

Some additional related work:

- [CDGKKSS 16] Erin Carson, James Demmel, Laura Grigori, Nicholas Knight, Penporn Koanantakool, Oded Schwartz, and Harsha Vardhan Simhadri. Write-Avoiding Algorithms. IEEE IPDPS, 2016.
- [CGN 11] Shimin Chen, Phillip B. Gibbons, and Suman Nath. Rethinking Database Algorithms for Phase Change Memory. CIDR, 2011.
- [Viglas 14] Stratis D. Viglas. Write-limited sorts and joins for persistent memory. VLDB 7(5), 2014.