Holland Computing Center, David R. Swanson, Ph.D.

Holland Computing Center
David R. Swanson, Ph.D., Director

Computational and Data Sharing Core
• Store and share documents
• Store and share data and databases
• Computing resources
• Expertise

Who is HCC?
• HPC provider for the University of Nebraska
• System-wide entity, evolved over the last 11 years
• Support from President, Chancellor, CIO, VCRED
• 10 FTE, 6 students

HCC Resources
• Lincoln:
  • Tier-2 machine Red (1500 cores, 400 TB)
  • Campus clusters PrairieFire, Sandhills (1500 cores, 25 TB)
• Omaha:
  • Large IB cluster Firefly (4000 cores, 150 TB)
• 10 Gb/s connection to Internet2 (DCN)

Staff
• Dr. Adam Caprez, Dr. Ashu Guru, Dr. Jun Wang
• Tom Harvill, Josh Samuelson, John Thiltges
• Dr. Brian Bockelman (OSG development, grid computing)
• Dr. Carl Lundstedt, Garhan Attebury (CMS)
• Derek Weitzel, Chen He, Kartik Vedelaveni (GRAs)
• Carson Cartwright, Kirk Miller, Shashank Reddy (undergraduates)

HCC -- Schorr Center
• 2200 sq. ft. machine room
• 10 full-time staff
• PrairieFire, Sandhills, Red and Merritt
• 2100 TB storage
• 10 Gbps network

Three Types of Machines
• ff.unl.edu: large capacity cluster ... more coming soon
• prairiefire.unl.edu // sandhills.unl.edu: special purpose cluster
• merritt.unl.edu: shared memory machine
• red.unl.edu: grid enabled cluster for US CMS (OSG)

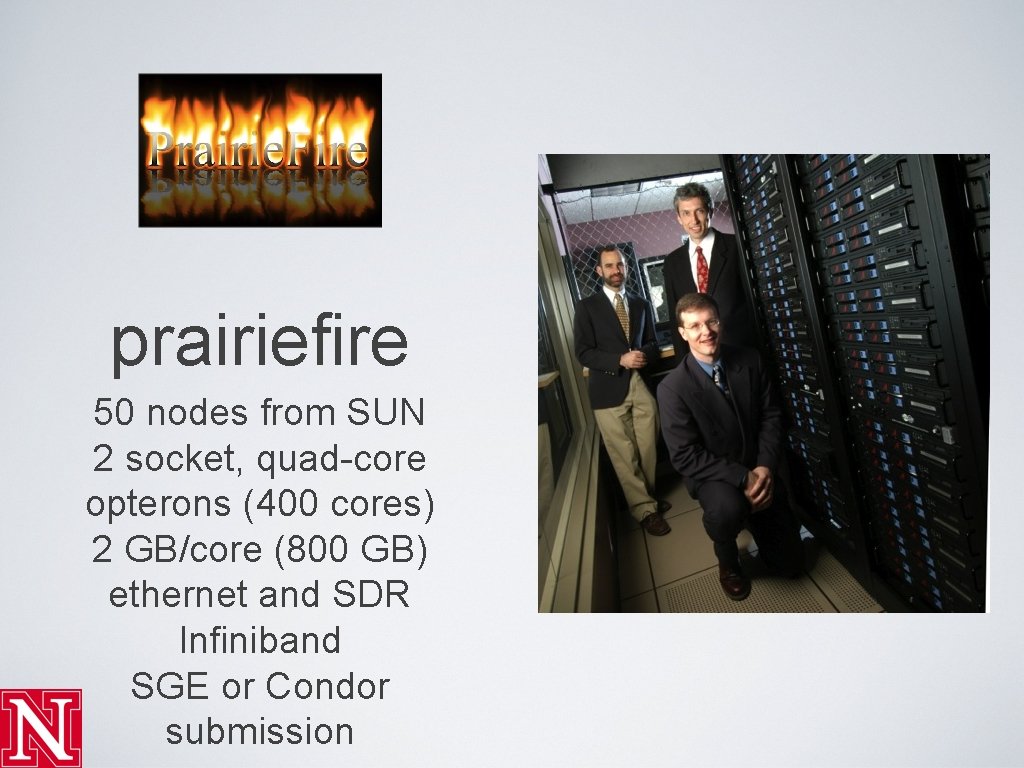

PrairieFire
• 50 nodes from Sun
• 2-socket, quad-core Opterons (400 cores)
• 2 GB/core (800 GB)
• Ethernet and SDR InfiniBand
• SGE or Condor submission

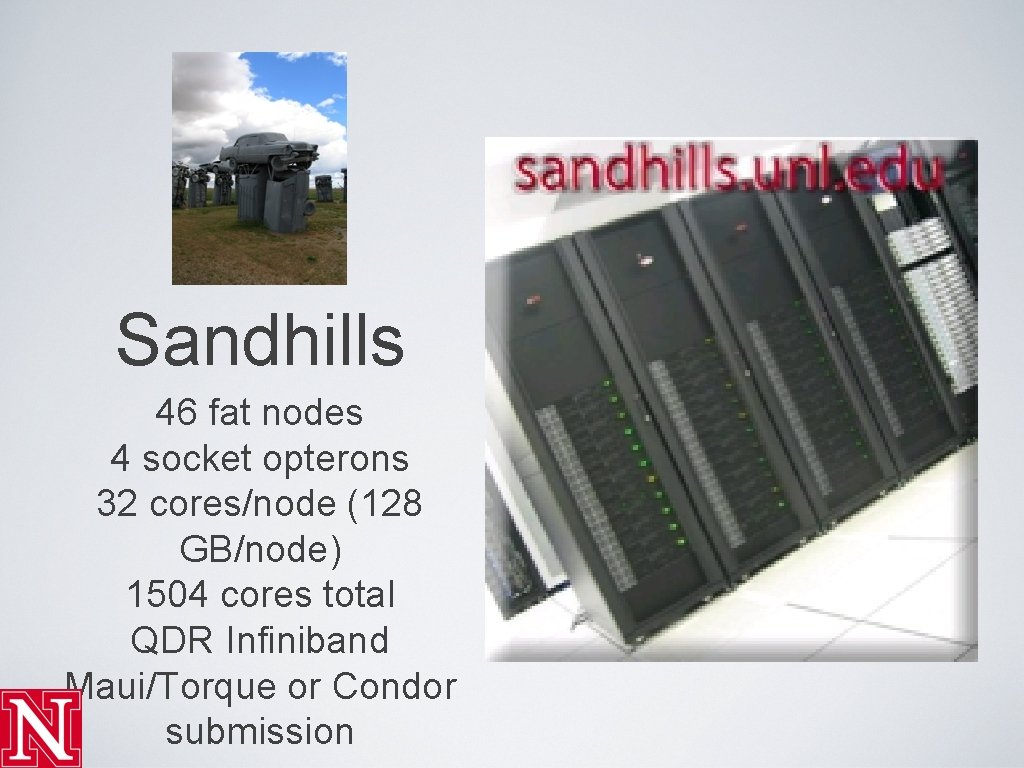

Sandhills
• 46 fat nodes
• 4-socket Opterons
• 32 cores/node (128 GB/node)
• 1504 cores total
• QDR InfiniBand
• Maui/Torque or Condor submission

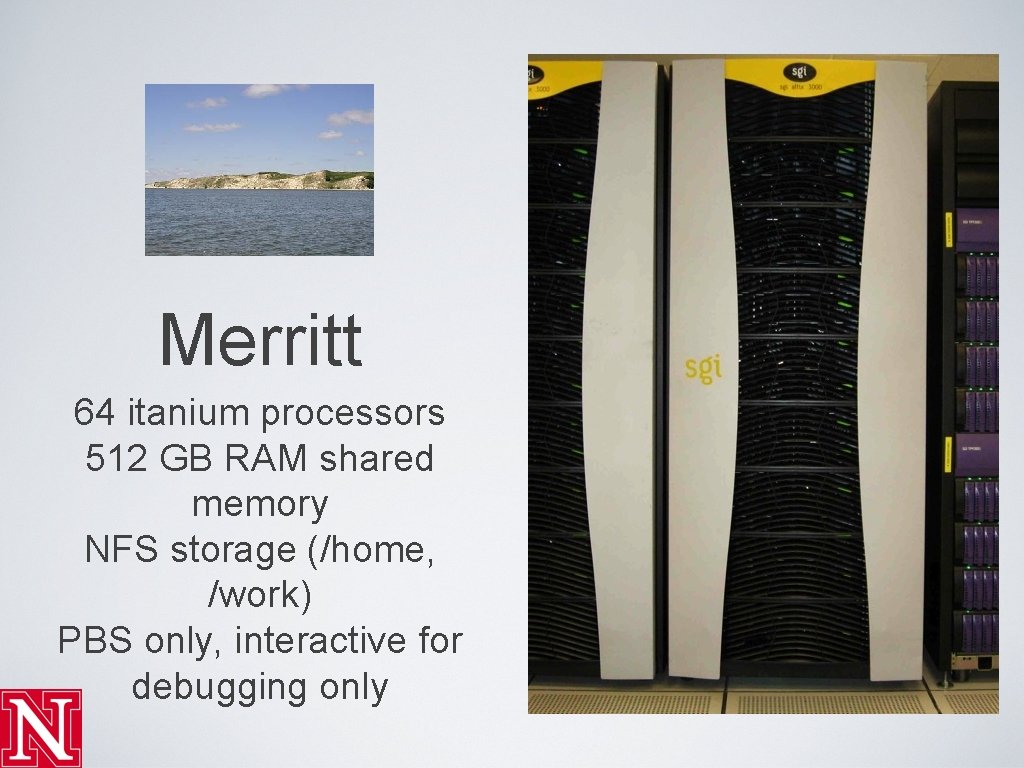

Merritt
• 64 Itanium processors
• 512 GB RAM shared memory
• NFS storage (/home, /work)
• PBS only, interactive for debugging only

Red
• Open Science Grid machine
• Part of US CMS project
• 240 TB storage (dCache)
• Over 1100 compute cores
• Certificates required, no login accounts

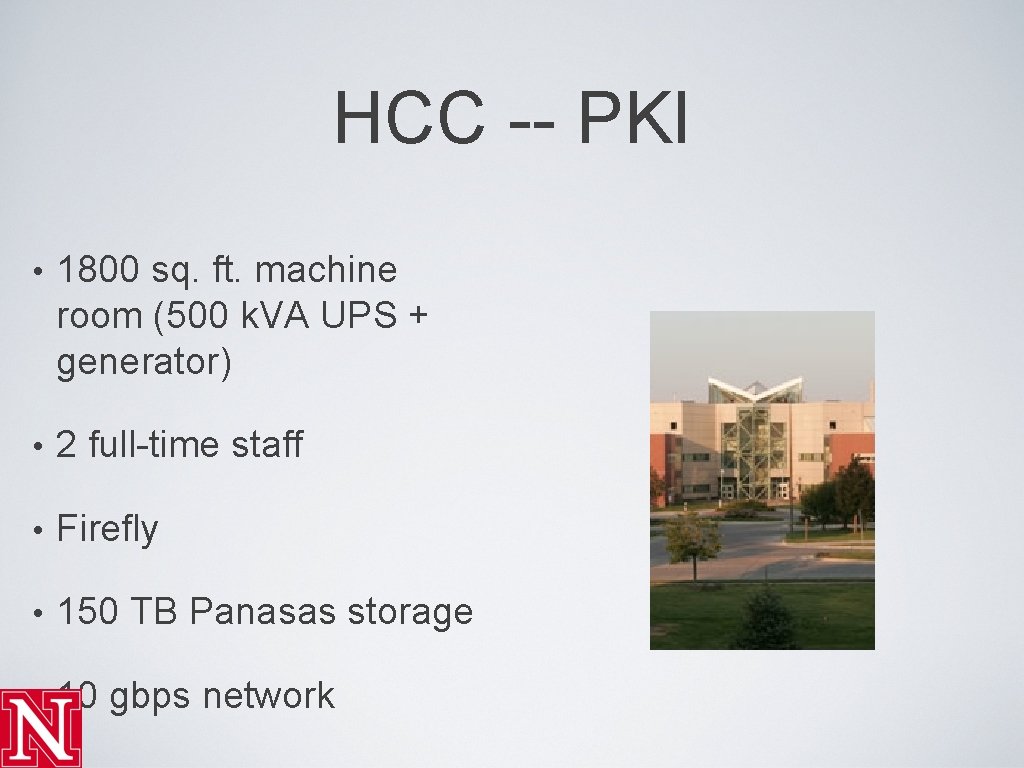

HCC -- PKI
• 1800 sq. ft. machine room (500 kVA UPS + generator)
• 2 full-time staff
• Firefly
• 150 TB Panasas storage
• 10 Gbps network

Firefly
• 4000+ Opteron cores
• 150 TB Panasas storage
• Login or grid submissions
• Maui (PBS)
• InfiniBand, Force10 GigE

TBD
• 5800+ Opteron cores
• 400 TB Lustre storage
• Login or grid submissions
• Maui (PBS)
• QDR InfiniBand, GigE

First Delivery...

Last Year’s Usage
• Approaching 1 million CPU hours/week
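For a sense of scale, the weekly figure can be compared with the total core-hours a cluster offers in a week. The sketch below is only a rough estimate: it assumes the ~10,000 cores quoted under Resources & Expertise were all available for the full week, which the slides do not state, and the function name is illustrative.

```python
HOURS_PER_WEEK = 7 * 24  # 168 hours


def weekly_utilization(cpu_hours_used, cores):
    """Fraction of the available core-hours consumed in one week."""
    capacity = cores * HOURS_PER_WEEK
    return cpu_hours_used / capacity


# Assumed inputs: 1,000,000 CPU hours/week on ~10,000 cores.
print(f"{weekly_utilization(1_000_000, 10_000):.1%}")
```

Under those assumptions the usage corresponds to roughly 60% of the theoretical weekly capacity.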

Resources & Expertise
• Storage of large data sets (2100 TB)
• High performance storage (Panasas)
• High bandwidth transfers (9 Gbps, ~50 TB/day)
• 20 Gbps between sites, 10 Gbps to Internet2
• High performance computing: ~10,000 cores
• Grid computing and high throughput computing
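The transfer figures can be related by simple arithmetic. The sketch below converts a link rate to a daily volume in decimal terabytes; the `utilization` parameter is an assumption introduced here to show how a 9 Gbps peak could line up with about 50 TB/day sustained, and is not something the slides specify.

```python
def tb_per_day(gbps, utilization=1.0):
    """Decimal terabytes moved in 24 hours at the given link rate."""
    bytes_per_second = gbps * 1e9 / 8
    return bytes_per_second * 86_400 * utilization / 1e12


print(round(tb_per_day(9), 1))       # full line rate for 24 hours
print(round(tb_per_day(9, 0.5), 1))  # assumed 50% average utilization
```

At full line rate 9 Gbps works out to about 97 TB/day, so the quoted ~50 TB/day is consistent with the link being busy about half the time on average.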

Usage Options
• Shared Access
  • Free
  • Opportunistic
  • Storage limited
  • Shell or Grid deployment
• Priority Access

Usage Options
• Priority Access
  • Fee assessed
  • Reserved queue
  • Expandable storage
  • Shell or Grid deployment

Computational and Data Sharing Core
• Will meet computational demands with a combination of Priority Access, Shared, and Grid resources
• Storage will include a similar mixture, but likely consist of more dedicated resources
• Often a trade-off between hardware, personnel and software
• Commercial software saves personnel time
• Dedicated hardware requires less development (grid protocols)

Computational and Data Sharing Core
• Resource organization at HCC
• Per research group -- free to all NU faculty and staff
• Associate quotas, fairshare or reserved portions of machines with these groups
• /home/swanson/acaprez/...
• Accounting is straightforward
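One way to see why per-group organization keeps accounting simple: if every path follows a /home/<group>/<user>/ layout, usage can be rolled up by group from the path alone. The sketch below is illustrative only. The record format, the second user name, the file names and the hour values are all hypothetical, and it does not describe HCC's actual accounting tools.

```python
from collections import defaultdict
from pathlib import PurePosixPath


def group_and_user(path):
    """Split a /home/<group>/<user>/... path into (group, user)."""
    parts = PurePosixPath(path).parts  # ('/', 'home', group, user, ...)
    if len(parts) < 4 or parts[1] != "home":
        raise ValueError(f"not a /home/<group>/<user> path: {path}")
    return parts[2], parts[3]


def usage_by_group(records):
    """Sum CPU hours per research group from (path, cpu_hours) records."""
    totals = defaultdict(float)
    for path, hours in records:
        group, _user = group_and_user(path)
        totals[group] += hours
    return dict(totals)


# Hypothetical records, made up for illustration.
records = [
    ("/home/swanson/acaprez/job1", 120.0),
    ("/home/swanson/otheruser/job2", 30.0),
]
print(usage_by_group(records))
```

The same grouping key could also carry quotas or fairshare weights, since both users above roll up to the single group `swanson`.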

Computational and Data Sharing Core
• Start now - facilities and staff already in place
• It’s free - albeit shared
• Complaints currently encouraged (!)
• Iterations required

More information
• http://hcc.unl.edu
• dswanson@cse.unl.edu
• hcc-support@unl.edu
• David Swanson: (402) 472-5006
• 118 K Schorr Center /// 158 H PKI /// Your Office
• Tours /// Short Courses

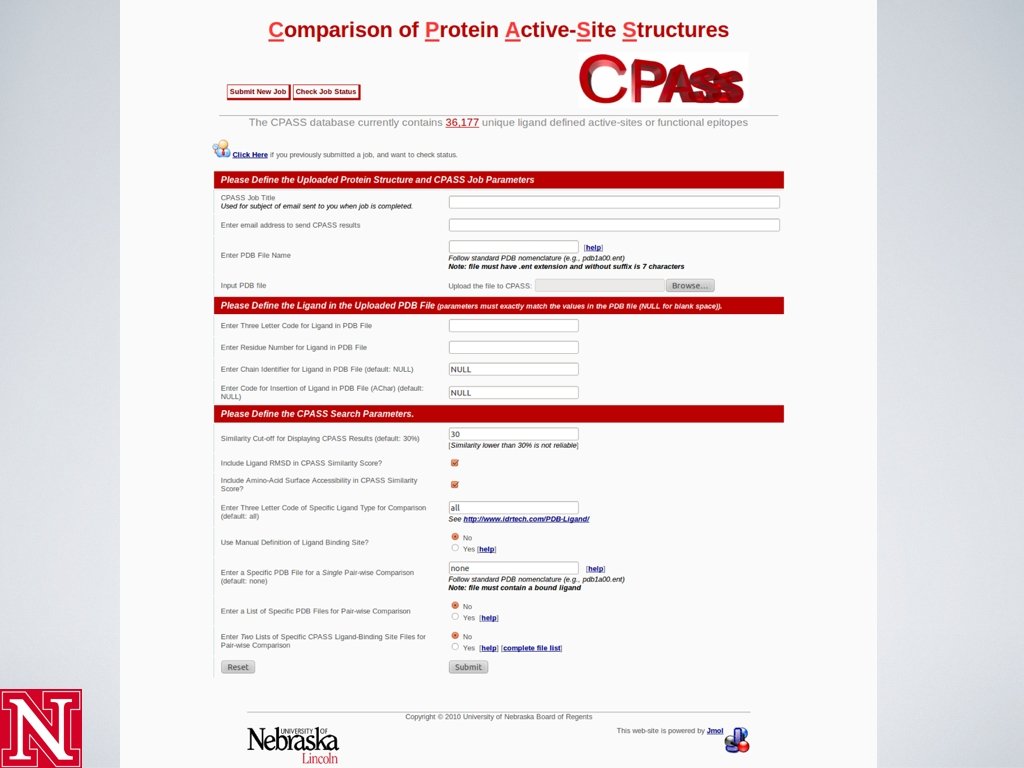

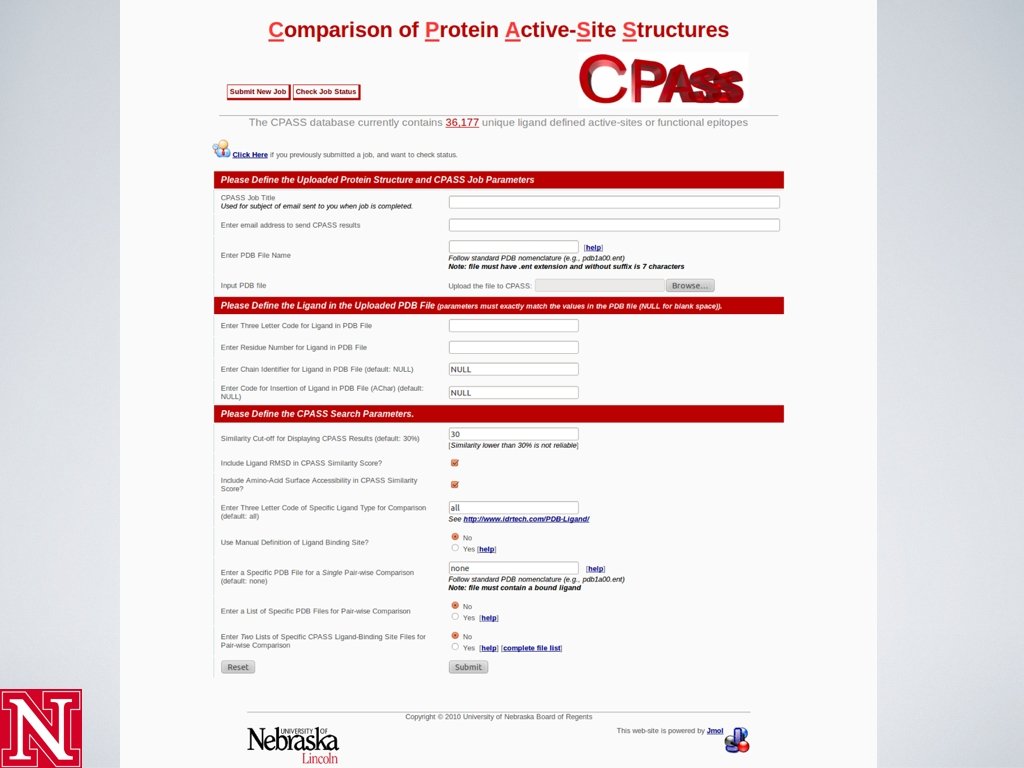

Sample Deployments
• CPASS site (http://cpass.unl.edu)
• DaliLite, Rosetta, OMSSA
• LogicalDoc (https://hcc-ngndoc.unl.edu/logicaldoc/)