History of Artificial Intelligence Dana Nejedlov Department of

- Slides: 32

History of Artificial Intelligence Dana Nejedlová Department of Informatics Faculty of Economics Technical University of Liberec 1

What is Intelligence? • Common definition of artificial intelligence: – AI is a field which attempts to build intelligent machines and tries to understand intelligent entities. • But what is intelligence? – Learning, manipulating with facts, but also creativity, consciousness, emotion and intuition. • Can machines be intelligent? – Up to the present day it is not sure whether it is possible to build a machine that has all aspects of intelligence. – This kind of research is central in the field of AI. 2

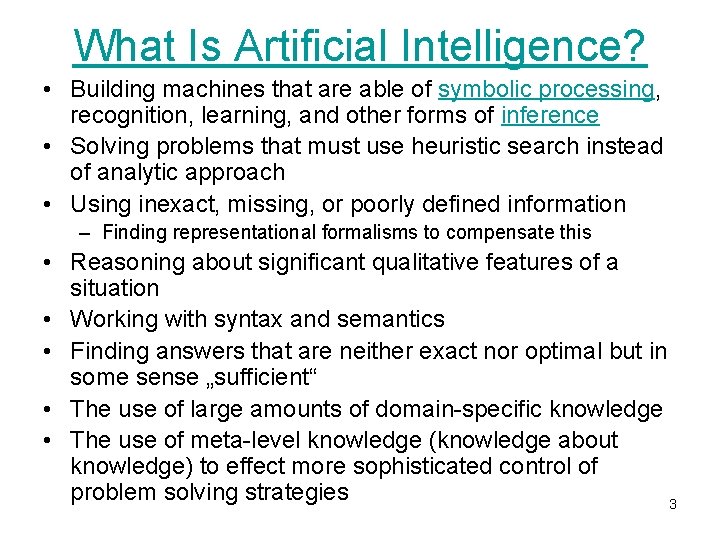

What Is Artificial Intelligence? • Building machines that are able of symbolic processing, recognition, learning, and other forms of inference • Solving problems that must use heuristic search instead of analytic approach • Using inexact, missing, or poorly defined information – Finding representational formalisms to compensate this • Reasoning about significant qualitative features of a situation • Working with syntax and semantics • Finding answers that are neither exact nor optimal but in some sense „sufficient“ • The use of large amounts of domain-specific knowledge • The use of meta-level knowledge (knowledge about knowledge) to effect more sophisticated control of problem solving strategies 3

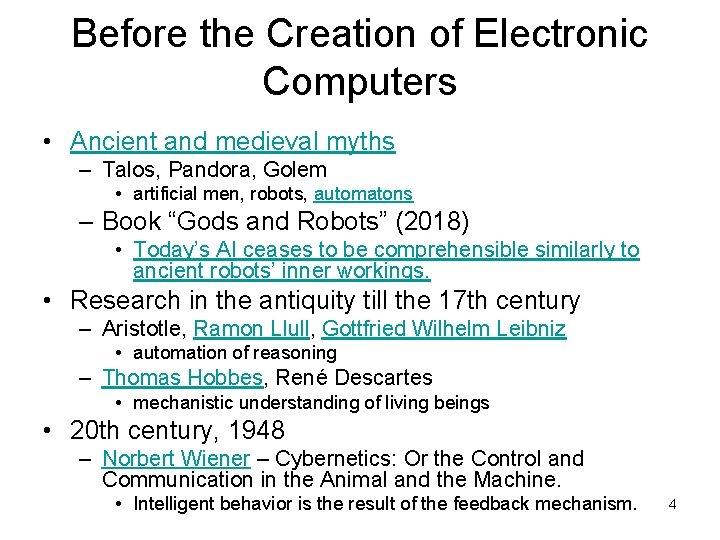

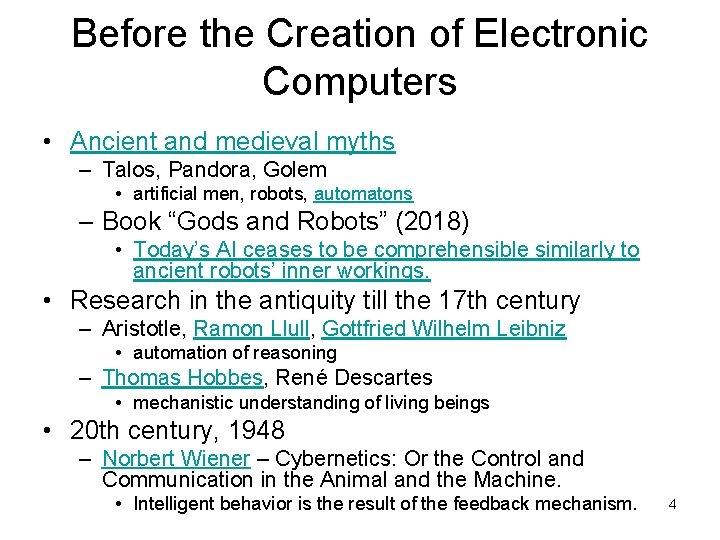

Before the Creation of Electronic Computers • Ancient and medieval myths – Talos, Pandora, Golem • artificial men, robots, automatons – Book “Gods and Robots” (2018) • Today’s AI ceases to be comprehensible similarly to ancient robots’ inner workings. • Research in the antiquity till the 17 th century – Aristotle, Ramon Llull, Gottfried Wilhelm Leibniz • automation of reasoning – Thomas Hobbes, René Descartes • mechanistic understanding of living beings • 20 th century, 1948 – Norbert Wiener – Cybernetics: Or the Control and Communication in the Animal and the Machine. • Intelligent behavior is the result of the feedback mechanism. 4

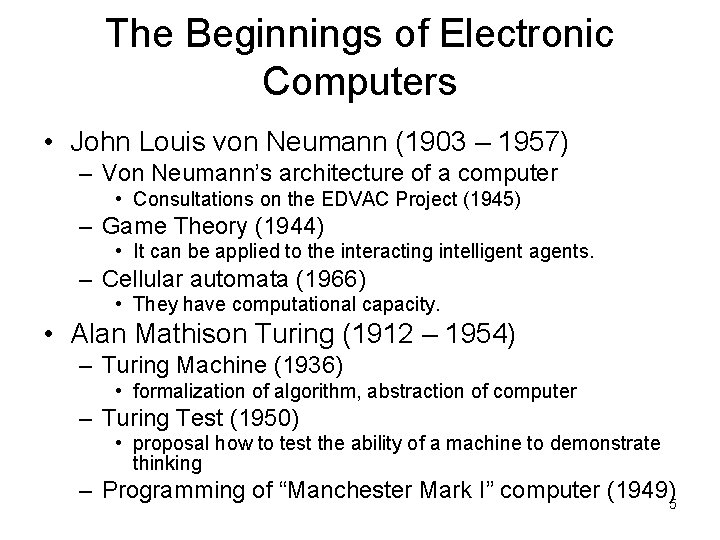

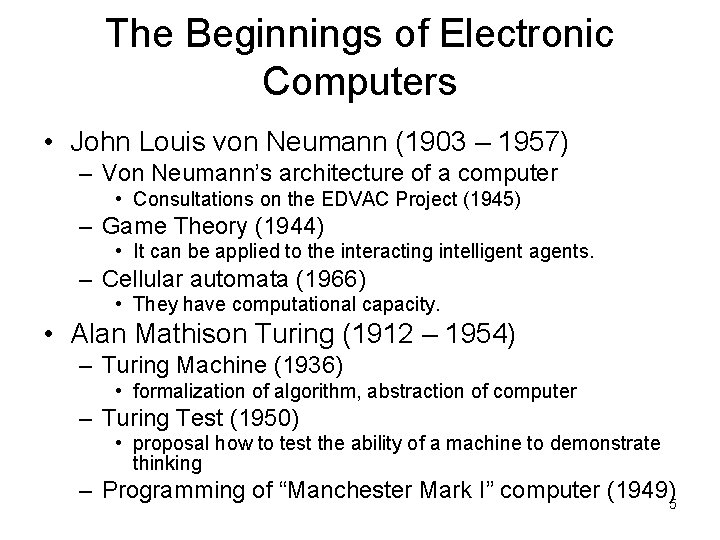

The Beginnings of Electronic Computers • John Louis von Neumann (1903 – 1957) – Von Neumann’s architecture of a computer • Consultations on the EDVAC Project (1945) – Game Theory (1944) • It can be applied to the interacting intelligent agents. – Cellular automata (1966) • They have computational capacity. • Alan Mathison Turing (1912 – 1954) – Turing Machine (1936) • formalization of algorithm, abstraction of computer – Turing Test (1950) • proposal how to test the ability of a machine to demonstrate thinking – Programming of “Manchester Mark I” computer (1949)5

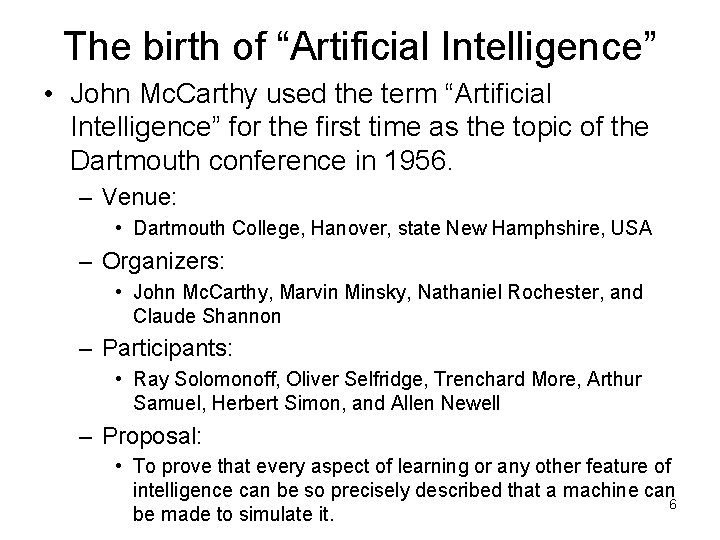

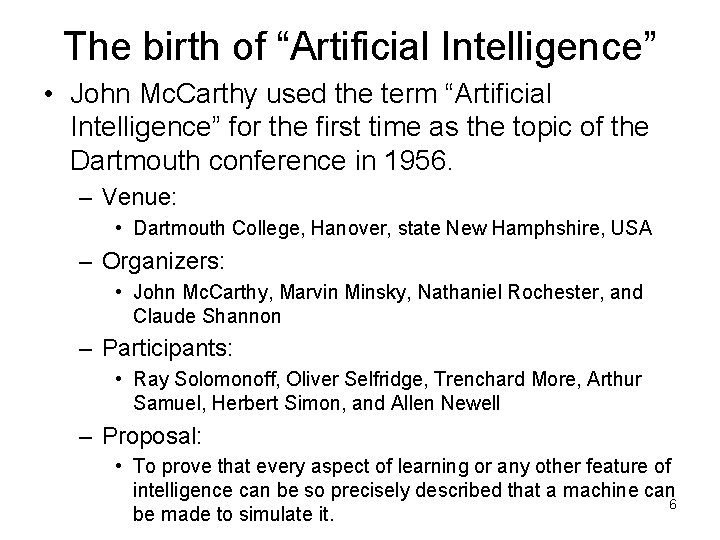

The birth of “Artificial Intelligence” • John Mc. Carthy used the term “Artificial Intelligence” for the first time as the topic of the Dartmouth conference in 1956. – Venue: • Dartmouth College, Hanover, state New Hamphshire, USA – Organizers: • John Mc. Carthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon – Participants: • Ray Solomonoff, Oliver Selfridge, Trenchard More, Arthur Samuel, Herbert Simon, and Allen Newell – Proposal: • To prove that every aspect of learning or any other feature of intelligence can be so precisely described that a machine can 6 be made to simulate it.

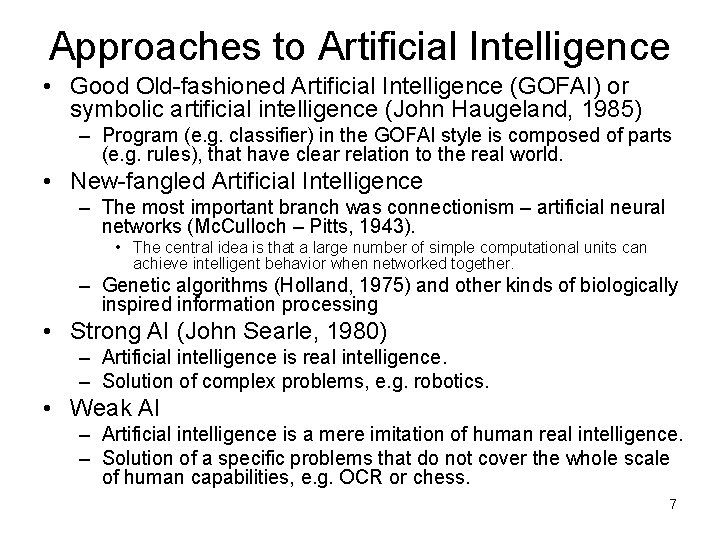

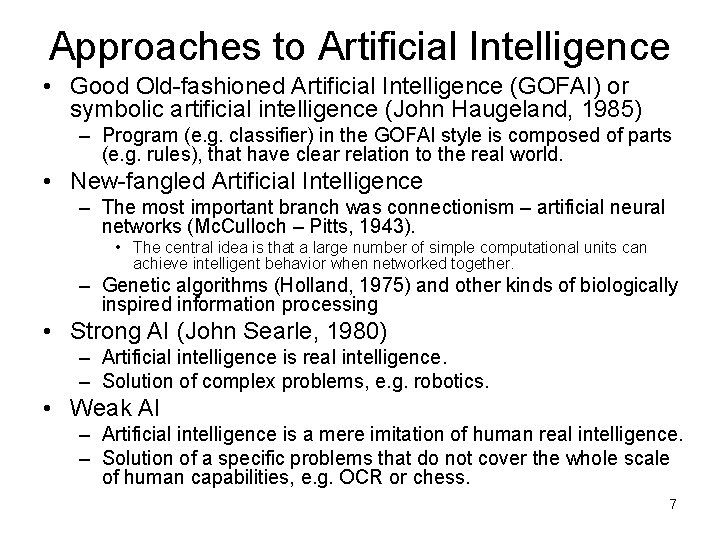

Approaches to Artificial Intelligence • Good Old-fashioned Artificial Intelligence (GOFAI) or symbolic artificial intelligence (John Haugeland, 1985) – Program (e. g. classifier) in the GOFAI style is composed of parts (e. g. rules), that have clear relation to the real world. • New-fangled Artificial Intelligence – The most important branch was connectionism – artificial neural networks (Mc. Culloch – Pitts, 1943). • The central idea is that a large number of simple computational units can achieve intelligent behavior when networked together. – Genetic algorithms (Holland, 1975) and other kinds of biologically inspired information processing • Strong AI (John Searle, 1980) – Artificial intelligence is real intelligence. – Solution of complex problems, e. g. robotics. • Weak AI – Artificial intelligence is a mere imitation of human real intelligence. – Solution of a specific problems that do not cover the whole scale of human capabilities, e. g. OCR or chess. 7

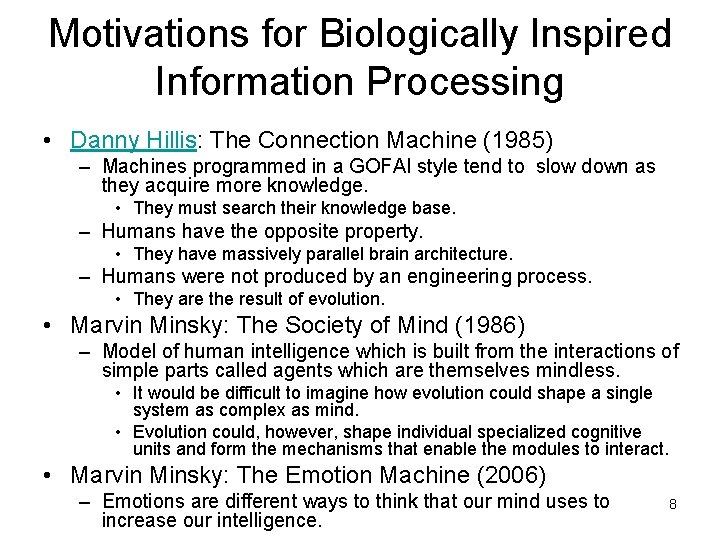

Motivations for Biologically Inspired Information Processing • Danny Hillis: The Connection Machine (1985) – Machines programmed in a GOFAI style tend to slow down as they acquire more knowledge. • They must search their knowledge base. – Humans have the opposite property. • They have massively parallel brain architecture. – Humans were not produced by an engineering process. • They are the result of evolution. • Marvin Minsky: The Society of Mind (1986) – Model of human intelligence which is built from the interactions of simple parts called agents which are themselves mindless. • It would be difficult to imagine how evolution could shape a single system as complex as mind. • Evolution could, however, shape individual specialized cognitive units and form the mechanisms that enable the modules to interact. • Marvin Minsky: The Emotion Machine (2006) – Emotions are different ways to think that our mind uses to increase our intelligence. 8

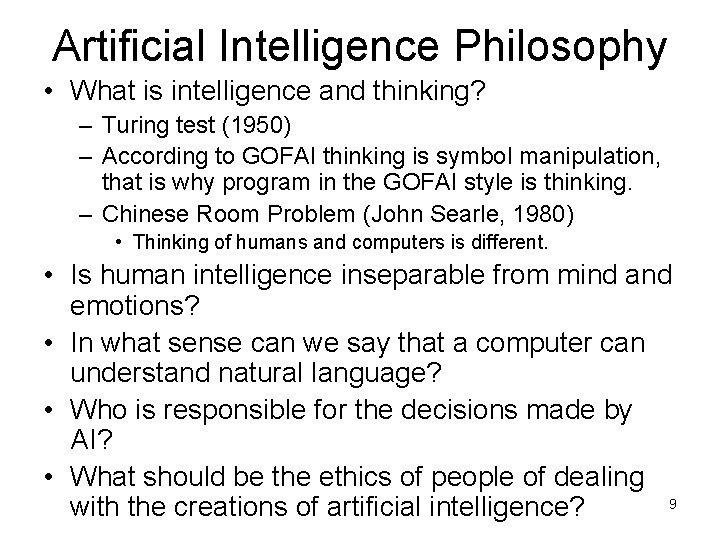

Artificial Intelligence Philosophy • What is intelligence and thinking? – Turing test (1950) – According to GOFAI thinking is symbol manipulation, that is why program in the GOFAI style is thinking. – Chinese Room Problem (John Searle, 1980) • Thinking of humans and computers is different. • Is human intelligence inseparable from mind and emotions? • In what sense can we say that a computer can understand natural language? • Who is responsible for the decisions made by AI? • What should be the ethics of people of dealing 9 with the creations of artificial intelligence?

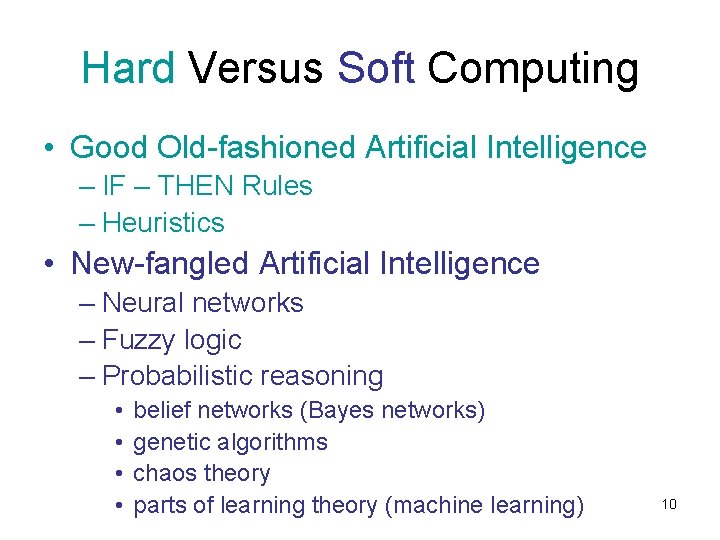

Hard Versus Soft Computing • Good Old-fashioned Artificial Intelligence – IF – THEN Rules – Heuristics • New-fangled Artificial Intelligence – Neural networks – Fuzzy logic – Probabilistic reasoning • • belief networks (Bayes networks) genetic algorithms chaos theory parts of learning theory (machine learning) 10

Heuristics • Problem-solving method that is usually successful, but can fail i some situations • Unclearly defined problems with missing or ambiguous data – Medical diagnosis – Vision, speech recognition • Helps to decide among infinite number of possible interpretations. • A problem may have an exact solution, but the computational cost of finding it may be prohibitive. – Chess, tic-tac-toe, 15 or 8 -puzzle, scheduling, path-finding… – Heuristic evaluation function • Evaluates each stage of solution. – Number of conflicts in a number of possible schedules • Helps to decide about the next step leading to the goal. – Selecting the schedule with minimum number of conflicts for the next 11 small changes attempting to find some correct schedule

Expectations from Artificial Intelligence • Predictions of Herbert Simon and Allen Newell (Heuristic Problem Solving, 1958), that within ten years – a digital computer will be the world's chess champion, – a digital computer will discover and prove an important new mathematical theorem, – a digital computer will compose critically acclaimed music, – most theories in psychology will take the form of computer programs. • Andrew Ng (Chinese-American computer scientist focusing on improving people’s lives using AI) – If a typical person can do a mental task with less than one second of thought, we can probably automate it using AI either now or in the near future. • https: //hbr. org/2016/11/what-artificial-intelligence-can-and-cantdo-right-now 12

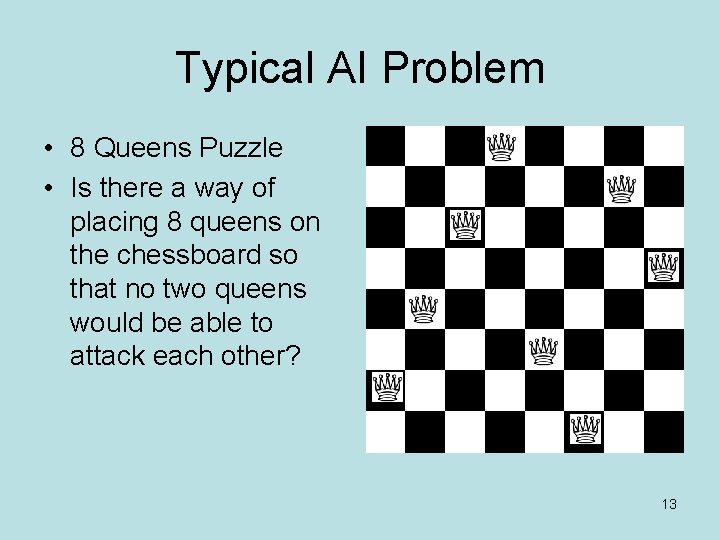

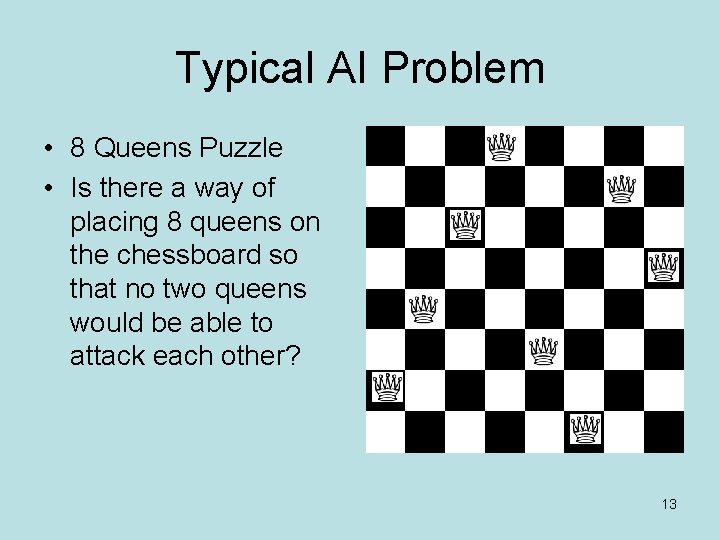

Typical AI Problem • 8 Queens Puzzle • Is there a way of placing 8 queens on the chessboard so that no two queens would be able to attack each other? 13

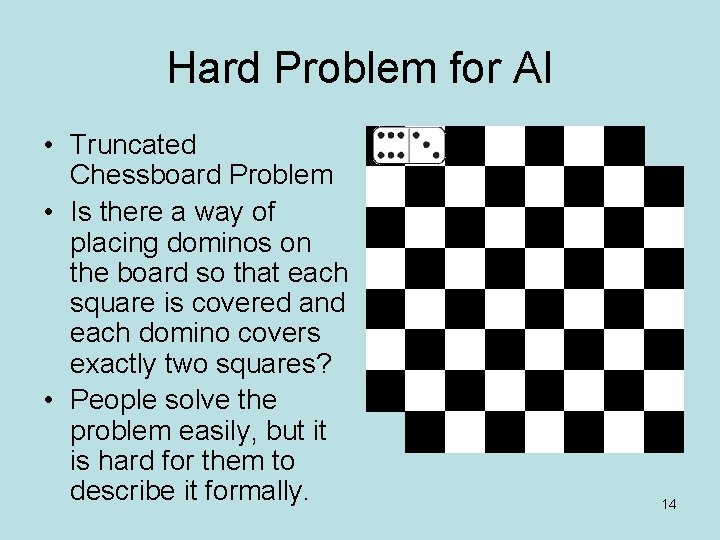

Hard Problem for AI • Truncated Chessboard Problem • Is there a way of placing dominos on the board so that each square is covered and each domino covers exactly two squares? • People solve the problem easily, but it is hard for them to describe it formally. 14

Limitations of Artificial Intelligence • David Hilbert (1862 – 1943) and Kurt Gödel (1906 – 1978) – Gödel‘s Incompleteness Theorem (1931) • Consistency of a formal system cannot be proved within the system, because it can contain statements with self-reference – logical paradoxes of the liar paradox type: – This statement is false. – Some tasks have no algorithms. • The halting problem – It is not decidable whether the algorithm will halt or not. – The algorithms in question contain again self-reference. • Complexity Theory (NP-completeness, 1971) – Some tasks have algorithms, but the computation cannot be completed in practice (on a real computer), because it would take too much time. • Roger Penrose (books The Emperor‘s New Mind, Shadows of the Mind) – It may not be possible to completely simulate biological intelligence by computational approaches as it may be based on (apparently quantum) phenomena that we do not know and are 15 not able to imitate.

Threats of Artificial Intelligence • Technological Singularity – Hypothesis that the invention of artificial superintelligence would accelerate technological progress which would have unpredictable effect on human society • Existential risk from artificial general intelligence – Book „Superintelligence“ by Nick Bostrom – Goals of AI should be carefully defined so that AI fulfilling these goals does not destroy humans. – Superintelligence is defined by Bostrom as an artificial intellect that is superior to human intellect in every aspect including creativity, general wisdom, and social skills. • https: //alldus. com/blog/articles/ai-quotes-from-some-of-the-worldstop-minds/ • http: //www. chinadaily. com. cn/bizchina/tech/201707/28/content_30278816. htm – People viewing AI as threat • Elon Musk, Stephen Hawking – People viewing AI as benefit to mankind • Mark Zuckenberg, Andrew Ng • Neural networks can be fooled into erroneous recognition by presenting specifically modified input (pictures, text, audio) to them 16 (Adversarial attacks). People can be fooled by deepfakes.

Gödel‘s Incompleteness Theorem • There are unprovable statements in every axiomatic mathematical system expressive enough to define the set of natural numbers. • Example theorem 1 = 2 • Proof of theorem: • If a = b, a ≠ 0, b ≠ 0, • then the two following equalities are also true: a 2 – b 2 = (a – b) ∙ (a + b), a 2 – b 2 = a 2 – ab. • And the following statements can be derived from them: a 2 – ab = (a – b) ∙ (a + b) a ∙ (a – b) = (a – b) ∙ (a + b) a=a+b a=a+a a = 2 a 1=2 • Truth can be verified only when knowledge beyond the natural finite numbers arithmetic is used. 17

The Logic Theorist – The First Artificial Intelligence Program • Allen Newell, J. C. Shaw and Herbert Simon at Carnegie Institute of Technology, now Carnegie Mellon University, in 1955 • It did logic proofs from the book “Principia Mathematica” (Bertrand Russell and Alfred North Whitehead, 1910). • It used mental processes of human experts. – cognitive science • To implement Logic Theorist on a computer, the three researchers developed a programming 18 language, IPL, a predecessor of Lisp.

Programming Languages • Tasks like natural language processing, knowledge representation, or theorem proving needed a special language allowing processing of symbolic data. • Lisp (John Mc. Carthy, USA, 1958) – functional paradigm / list processing • Program consists of functions of nested functions. • Data and programs are represented the same way: a list. – (+ 1 2 3) is a both a list of 4 atoms and a function returning value 6. • Program can serve as data for another program! – Powerful feature allowing flexible and productive coding. • Prolog (Alain Colmerauer, Europe, 1972) – declarative paradigm / logic programming • Program consists of facts and rules. – Programmer describes (i. e. declares) a problem. • Compiler deduces new facts from them. – Programmer does not write the algorithm for the solution. • Differentiable programming – new paradigm enabling machine learning programs to change their structure according to data thanks to new compilers 19 – references: sciencewal, skymind, medium, towardsdatascience

Programs with Symbolic Artificial Intelligence • The General Problem Solver (1957) – It was solving formalized symbolic problems, e. g. mathematical proofs and chess. • The Geometry Theorem Prover (1958) – It was proving theorems with the help of explicitly represented axioms. • SAINT (Symbolic Automatic INTegrator) – Integral calculus (1961) • ANALOGY (1963) – The picture A is to picture B like picture C to picture D. • IQ tests are used for measuring the intelligence of people. • Computers can be programmed to excel in IQ tests. • But those programs would be stupid in real-world situations. 20

Natural Language Processing • STUDENT (1964, 1967) – It was solving word problems in algebra. • SIR (Semantic Information Retrieval, 1968) – It was reading simple sentences and answered questions. • ELIZA (1965) – It was simulating psychologist. • TLC (Teachable Language Comprehender) (1969) – It was reading text and making semantic network. • SUR (Speech Understanding Research) (1971) – 5 -year plan of the ARPA (today DARPA) agency of a 21 research in continuous speech recognition

Expert Systems • They belong to the symbolic AI. • They use a set of rules and heuristics. • MACSYMA (MIT, 1968 -1982) – It was doing symbolic math calculations. • DENDRAL (SRI, 1965) – It is identifying chemicals. • MYCIN (SRI, Edward Shortliffe, 1974) – It diagnosed infectious blood diseases. – The following systems: EMYCIN, PUFF, INTERNIST - CADUCEUS 22

Commercial Expert Systems • PROSPECTOR (SRI, 1974 – 1983) – It is analyzing geological data and searching for deposits of minerals. • XCON – e. Xpert CONfigurer (CMU, 1978) – It was configuring DEC’s VAX computers. • TEIRESIAS (SRI, Randall Davis, 1976) – Knowledge Acquisition System (KAS) – It is acquiring knowledge from human experts. – It is building knowledge bases for expert 23 systems.

Robotics • • • Marvin Lee Minsky (* 1927) Freddy (University of Edinburgh, 1973) SHAKEY (SRI, 1969) SHRDLU (MIT, Terry Winograd, 1970) blocks worlds (MIT, 1970) – Robot has to manipulate building blocks according to instructions. • computer vision • natural language understanding • planning 24

The First Artificial Neural Networks • Warren Mc. Culloch and Walter Pitts – Model of artificial neuron (1943) – Neuron represents functions. • Donald Olding Hebb – Rule for neural network training (1949) • Marvin Minsky and Dean Edmonds have built the first computer with neural network. – SNARC (1951) 25

Other Artificial Neural Networks • Frank Rosenblatt – Perceptron (1957) • a single-layer network and its learning rule capable of learning linearly separable functions • Bernard Widrow and Marcian Ted Hoff – Minimization of network’s root square error – Delta rule (learning rule of a neural network) – ADAptive LINEar Systems or neurons or ADALINEs (1960) – MADALINEs (1962) • multi-layer versions of ADALINEs 26

Neural Networks Critique • Book „Perceptrons“ (Marvin Minsky and Seymour Papert, 1969) – When single-layer neural networks of a Perceptron type cannot learn XOR function (it is linearly inseparable), also multi-layer networks cannot learn it. – Hence funding of neural network research was stopped until the beginning of the 20 th century 80’s. • But multi-layer neural networks can learn the XOR function. • All that is needed for this is to find the right 27 algorithm for their training.

Neural Networks Resurrection • Hopfield net (John Hopfield, 1982) – It can learn a couple of pictures (patterns). • Self-Organizing Map (SOM) (Teuvo Kohonen, 1982) – It can do unsupervised learning. • Backpropagation (Arthur Bryson and Yu-Chi Ho, 1969) – algorithm for training of a multilayer neural network – It needs network’s neurons not to have a sharp threshold. – Because it was noticed, it was then rediscovered several times in the 70’s and the 80’s of the 20 th century and popularized in 1986. • NETtalk (Terry Sejnowski and Charles Rosenberg, 1986) – Multi-layer neural network, that learned English pronunciation and could generalize. – It used the backpropagation algorithm. 28

Neural Networks at Present • Neural networks with the quantity of layers and neurons used since 1980 s had problems in learning. • Since 2006 ways of training large networks are accomplished to be found. These networks have human-level performance. • Deep learning – neural networks with larger number of layers – Deep Belief Network • Its layers can be trained separately by unsupervised learning. – multilayer neural network trained using backpropagation • Its variant is convolutional neural network for recognition of images. • The main goal is to achieve the ability of extraction of the features of the object to be recognized on different levels of abstraction. See e. g. a lecture of Yoshua Bengio. – Pixels are the input into the first layer of the network, – edges, contours, and object parts are recognized on subsequent layers, – the class of the object is returned by the output layer. • Different levels of abstraction are solved on different layers of the network. • The network assigns the levels of abstraction to its layers by its own decision. • Prerequisites – the existence of large quantities of data and training datasets, e. g. : • labeled images • texts and their translations into multiple languages – distributed computing (e. g. cloud), more powerful CPUs, utilizing of GPUs • Critique – Geoffrey Hinton says "My view is throw it all away and start again. " – People do not need as much data as supervised learning does. 29

Present Applications of Neural Networks • Natural language processing (e. g. IBM Project Debater) • Generation of realistic images – Generative Adversarial Network – e. g. algorithms of Google a Facebook • Music composition with the help of machine learning – e. g. neural network Coconet trained on the music of J. S. Bach • Reinforcement learning – Solves tasks, where player interacts with environment and tries to maximize reward. – Neural network (Deep reinforcement learning) • Has state of the game and possible action of the player as its input. • Returns the reward of the player after the chosen action. • Learns from the observed combinations of states, actions and rewards. – Makes possible learning without human intervention. • Programs are playing against each other. – Resulting system can play videogames with human-level skill. • People can learn from it. (e. g. Dota 2: Open. AI. com, The Verge) – Limitations • Rules of the game must be entirely formalizable. – Facebook’s Chatbots started speaking in their own language. (Wired. com, Independent) – e. g. hundreds of GPUs and thousands of CPU cores for Dota 2 (Open. AI. com) • Needs extreme computing power. 30

The Most Important AI Laboratories • MIT (Massachusetts Institute of Technology) – 1959 - John Mc. Carthy and Marvin Minsky founded Artificial Intelligence Laboratory. • SRI (Stanford Research Institute) – 1963 - John Mc. Carthy founded AI Laboratory. • CMU (Carnegie Mellon University) – 1980 - Raj Reddy founded The Robotics Institute. • IBM – Watson (analysis of texts by means of commercial cloud applications) • Amazon – Amazon Machine Learning (business data analysis) • Google – Quantum AI Lab – application of quantum computing on artificial intelligence and machine learning – Deep. Mind – British acquisition that has developed algorithms for playing Go and Atari video games • Facebook AI Research • Open. AI – nonprofit research company founded by Elon Musk 31

Present • • • Robotic toys, space probes Robotics in machinery Home appliances (washers, vacuum cleaners) Data Mining, fraud detection, spam filtering Searching on the Internet (web agents) Modeling of interactive processes (agents) E-business – e-shops personalization Intelligent tutoring systems and SW interfaces Role-playing games, chess programs Speech and video recognition Machine translation 32

Contoh analisis sumber dan penggunaan dana

Contoh analisis sumber dan penggunaan dana Kuzyn gacka i mopka

Kuzyn gacka i mopka Xoon expert system

Xoon expert system State space search in artificial intelligence

State space search in artificial intelligence Searching for solutions in artificial intelligence

Searching for solutions in artificial intelligence 15-780 graduate artificial intelligence

15-780 graduate artificial intelligence Knowledge manipulation in artificial intelligence

Knowledge manipulation in artificial intelligence Types of knowledge

Types of knowledge Colbert, stephen. home page. 1 nov. 2006.

Colbert, stephen. home page. 1 nov. 2006. Kecerdasan kepemimpinan

Kecerdasan kepemimpinan Artificial intelligence assessment

Artificial intelligence assessment What math is used in artificial intelligence

What math is used in artificial intelligence Artificial intelligence peas

Artificial intelligence peas 15-780 graduate artificial intelligence

15-780 graduate artificial intelligence Xkcd

Xkcd Fuzzy propositions in artificial intelligence

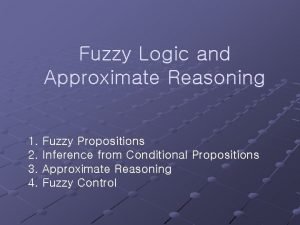

Fuzzy propositions in artificial intelligence Cse 571 artificial intelligence

Cse 571 artificial intelligence 15 780

15 780 Informed search and uninformed search

Informed search and uninformed search Artificial intelligence for class 6

Artificial intelligence for class 6 Augmented grammar in artificial intelligence

Augmented grammar in artificial intelligence Omniscience in artificial intelligence

Omniscience in artificial intelligence Importance of turing test in artificial intelligence

Importance of turing test in artificial intelligence Solving problems by searching artificial intelligence

Solving problems by searching artificial intelligence Partitioned semantic nets in artificial intelligence

Partitioned semantic nets in artificial intelligence Difference between a* and ao* algorithm in ai

Difference between a* and ao* algorithm in ai Artificial intelligence thesis proposals

Artificial intelligence thesis proposals Rule based deduction system

Rule based deduction system Inference by enumeration in artificial intelligence

Inference by enumeration in artificial intelligence Rote learning in artificial intelligence

Rote learning in artificial intelligence Chronological backtracking in depth first search involves

Chronological backtracking in depth first search involves Pxdes expert system

Pxdes expert system Optimal decisions in games in artificial intelligence

Optimal decisions in games in artificial intelligence