High-Performance Grid Computing and Research Networking: Message Passing

High-Performance Grid Computing and Research Networking
Message Passing with MPI

Presented by Khalid Saleem
Instructor: S. Masoud Sadjadi
http://www.cs.fiu.edu/~sadjadi/Teaching/
sadjadi At cs Dot fiu Dot edu

Acknowledgements

- The content of many of the slides in these lecture notes has been adopted from online resources prepared previously by the people listed below. Many thanks!
- Henri Casanova
  - Principles of High Performance Computing
  - http://navet.ics.hawaii.edu/~casanova
  - henric@hawaii.edu

Outline

- Message Passing
- MPI
- Point-to-Point Communication
- Collective Communication

Message Passing

[Figure: processors (P), each with its own memory (M), connected by a network]

- Each processor runs a process
- Processes communicate by exchanging messages
- They cannot share memory in the sense that they cannot address the same memory cells
- The above is a programming model and things may look different in the actual implementation (e.g., MPI over Shared Memory)
- Message Passing is popular because it is general:
  - Pretty much any distributed system works by exchanging messages, at some level
  - Distributed- or shared-memory multiprocessors, networks of workstations, uniprocessors
- It is not popular because it is easy (it's not)

Code Parallelization

- Shared-memory programming
  - parallelizing existing code can be very easy
    - OpenMP: just add a few pragmas
    - APIs available for C/C++ & Fortran
    - Pthreads: pthread_create(…)
  - Understanding parallel code is easy
- Distributed-memory programming
  - parallelizing existing code can be very difficult
    - No shared memory makes it impossible to "just" reference variables
    - Explicit message exchanges can get really tricky
  - Understanding parallel code is difficult
    - Data structures are split all over different memories

OpenMP snippet shown on the slide:

    #pragma omp parallel
    for(i=0; i<5; i++)
    .....
    # ifdef _OPENMP
    printf("Hello");
    # endif

Programming Message Passing

- Shared-memory programming is simple conceptually (sort of)
- Shared-memory machines are expensive when one wants a lot of processors
- It's cheaper (and more scalable) to build distributed memory machines
  - Distributed memory supercomputers (IBM SP series)
  - Commodity clusters
- But then how do we program them?
- At a basic level, let the user deal with explicit messages
  - difficult
  - but provides the most flexibility

Message Passing

- Isn't exchanging messages completely known and understood?
  - That's the basis of the IP idea
  - Networked computers running programs that communicate are very old and common
    - DNS, e-mail, Web, ...
- The answer is that, yes it is, we have "Sockets"
  - Software abstraction of a communication between two Internet hosts
  - Provides an API for programmers so that they do not need to know anything (or almost anything) about TCP/IP and can still write programs that communicate over the Internet

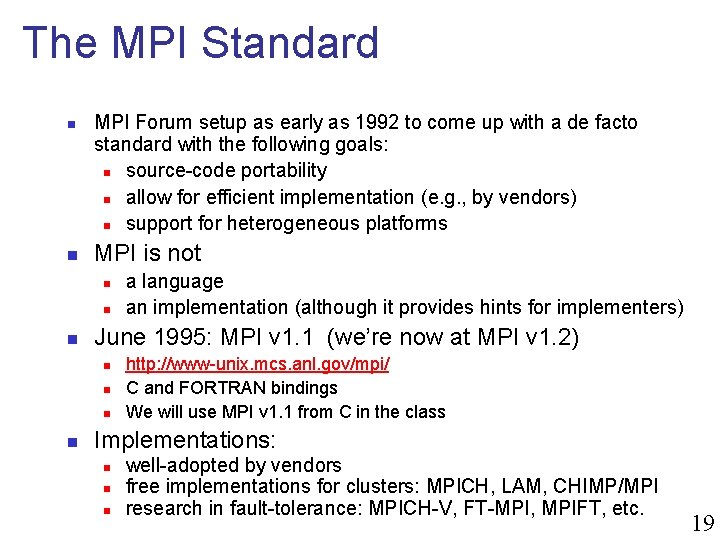

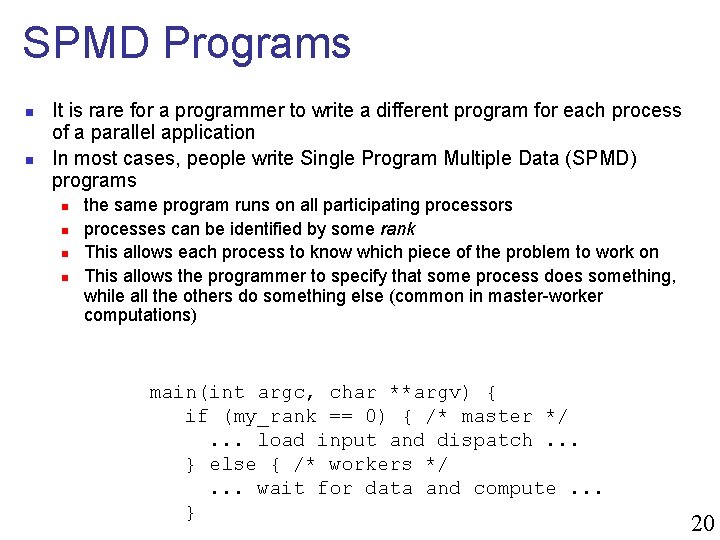

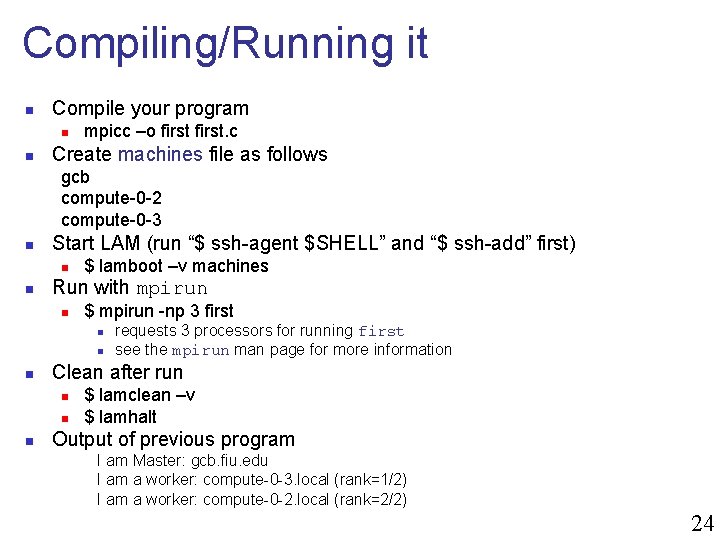

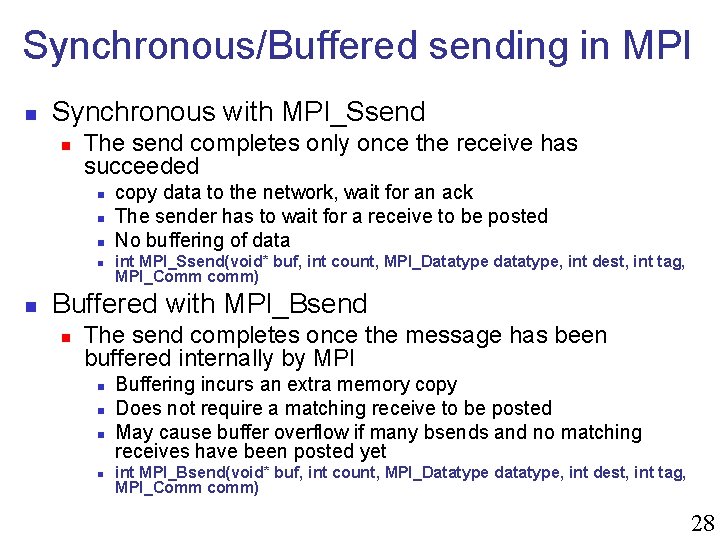

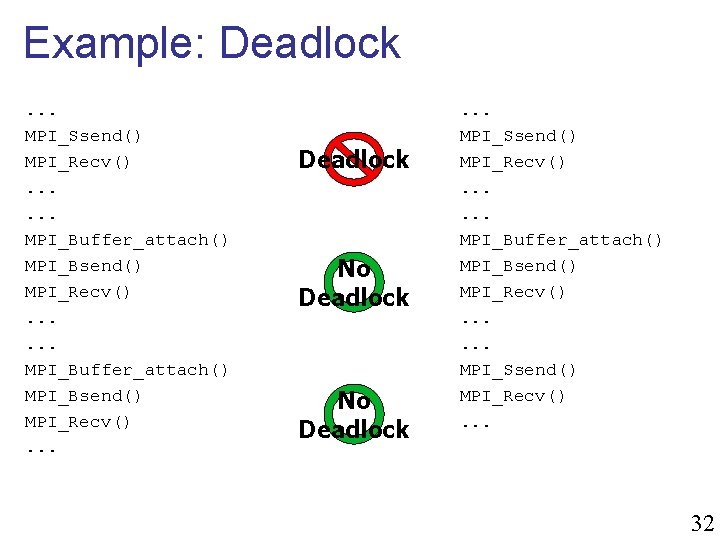

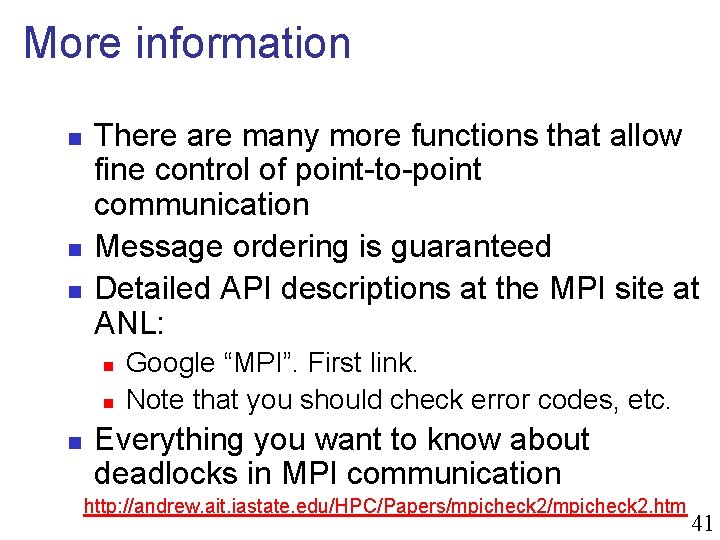

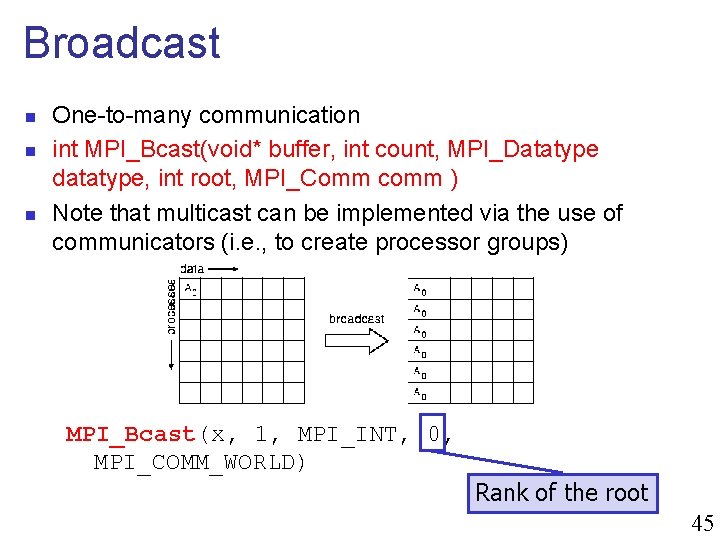

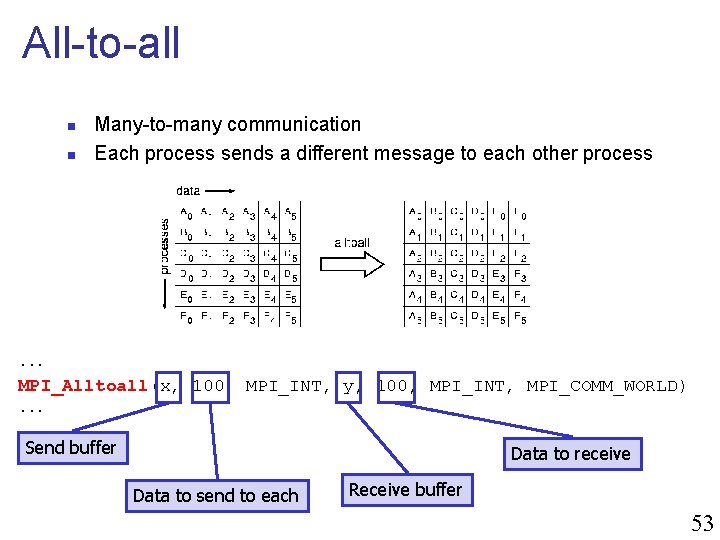

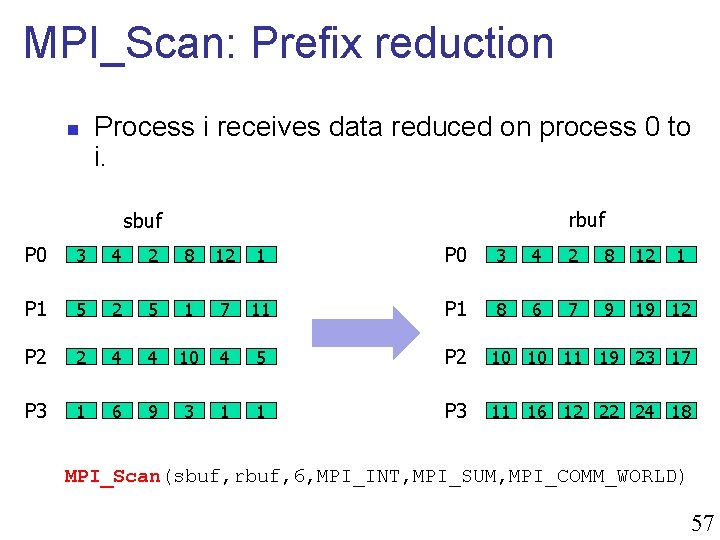

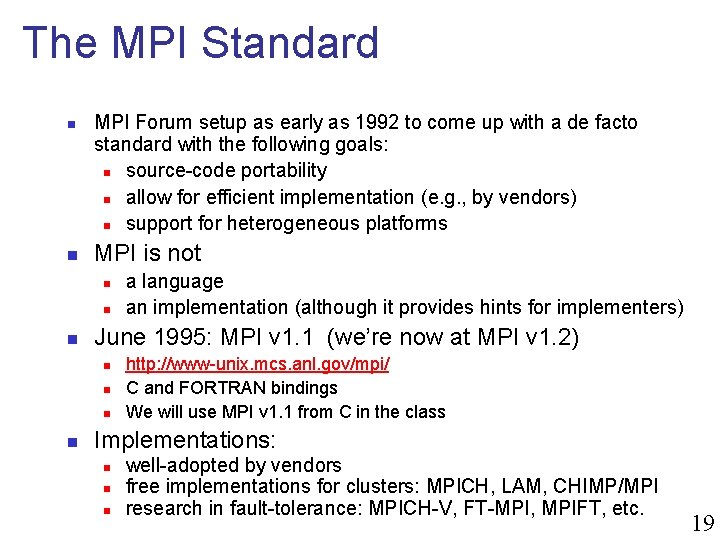

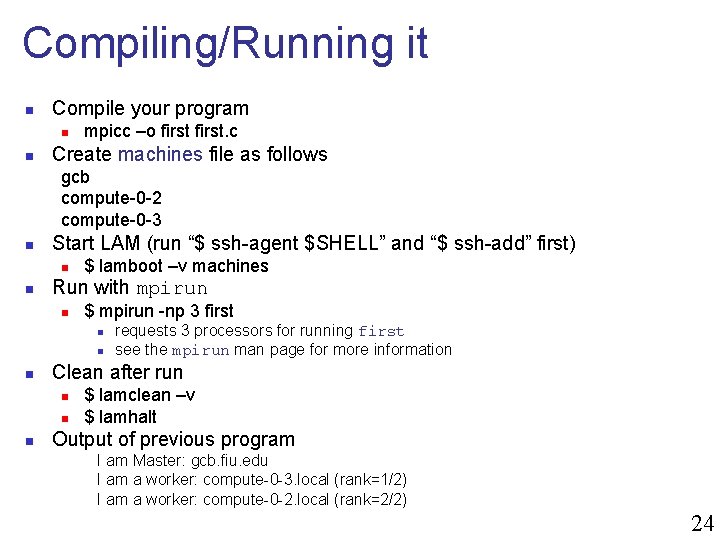

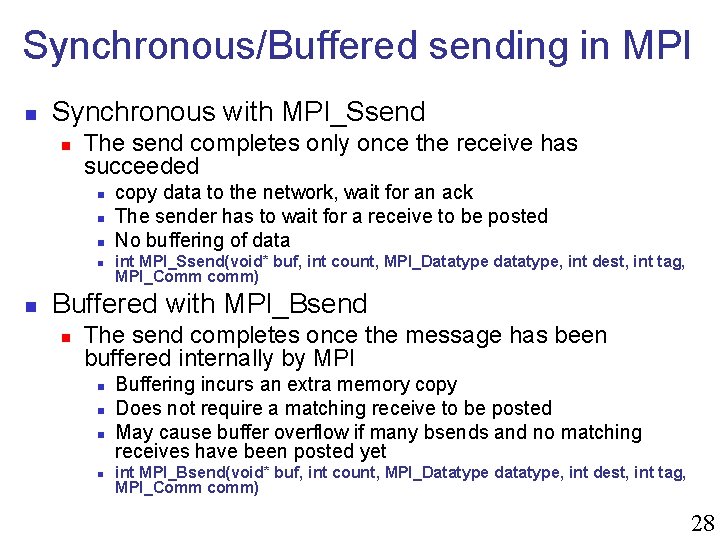

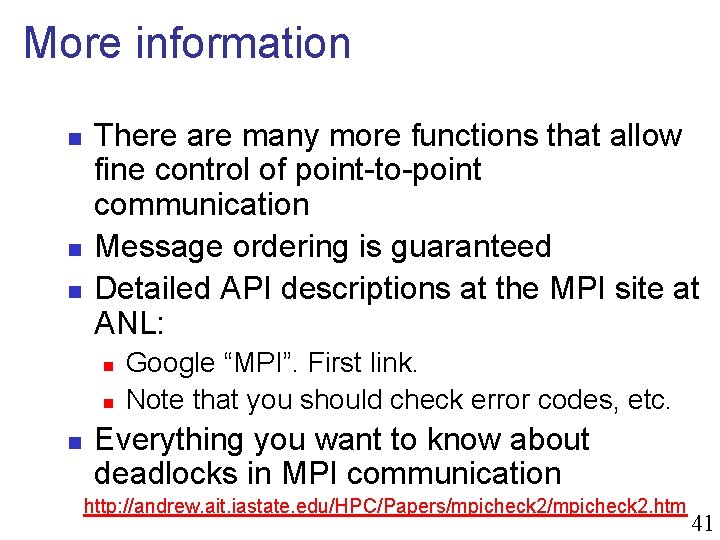

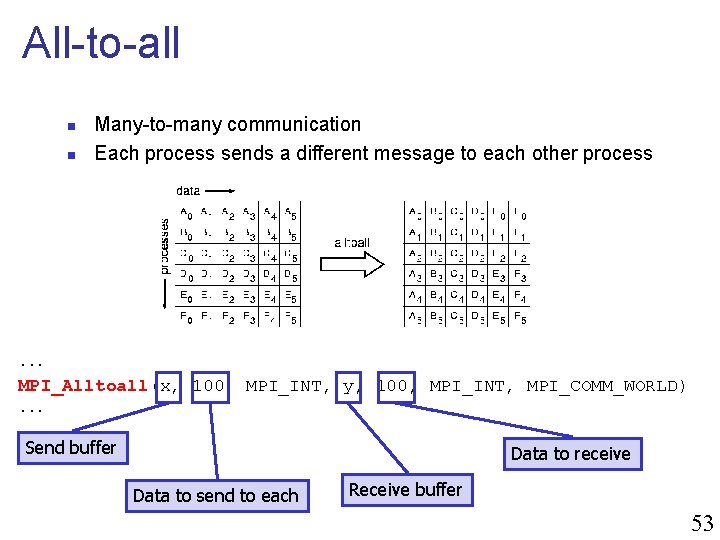

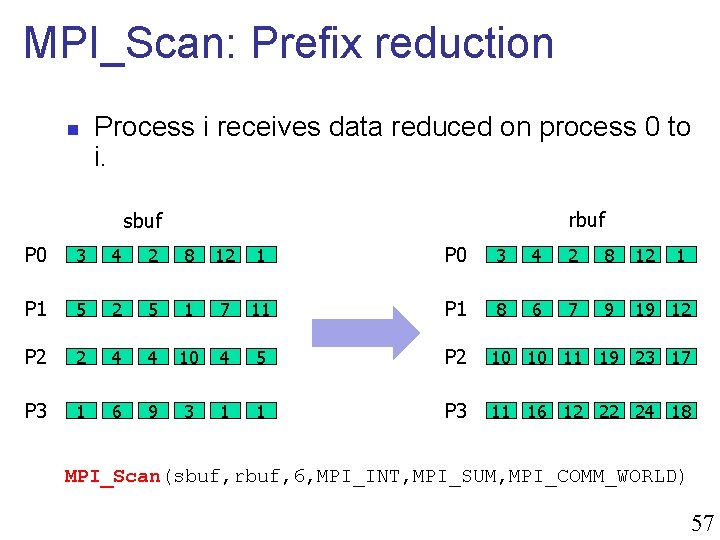

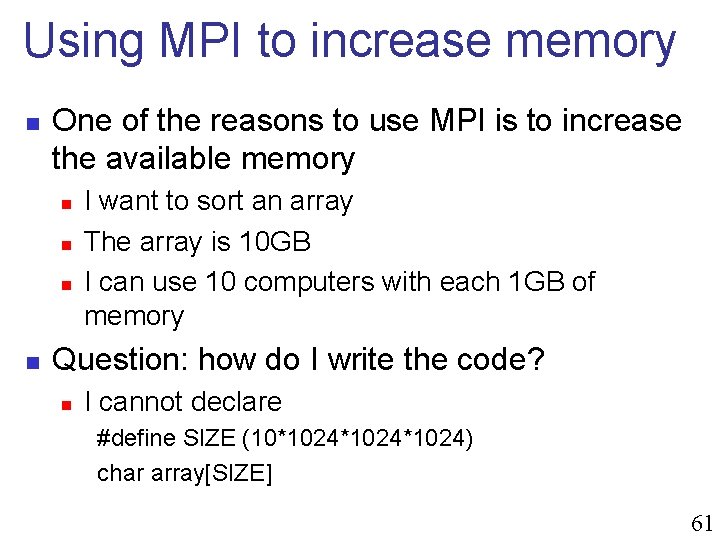

Socket Library in UNIX

- Introduced by BSD in 1983
  - The "Berkeley Socket API"
  - For TCP and UDP on top of IP
- The API is known to not be very intuitive for first-time programmers
- What one typically does is write a set of "wrappers" that hide the complexity of the API behind simple functions
- Fundamental concepts
  - Server side
    - Create a socket
    - Bind it to a port number
    - Listen on it
    - Accept a connection
    - Read/Write data
  - Client side
    - Create a socket
    - Connect it to a (remote) host/port
    - Write/Read data

![Socket server c int mainint argc char argv int sockfd newsockfd portno clilen Socket: server. c int main(int argc, char *argv[]) { int sockfd, newsockfd, portno, clilen;](https://slidetodoc.com/presentation_image_h2/1d344a07cded59787c5ddc5fdd31dc46/image-9.jpg)

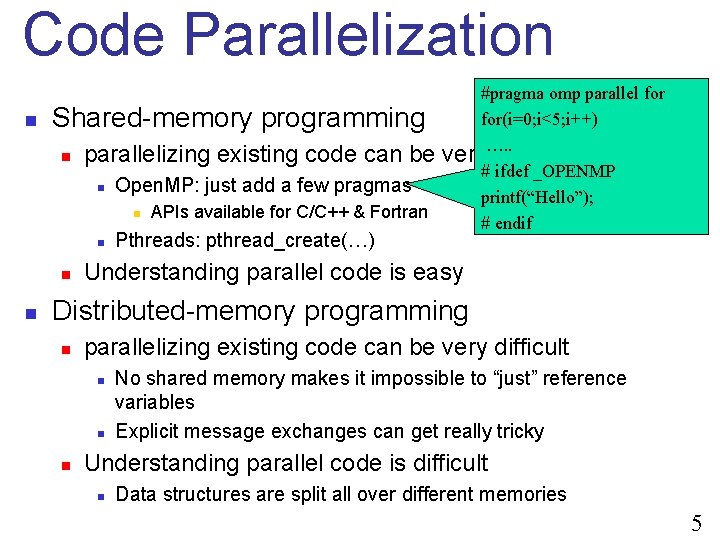

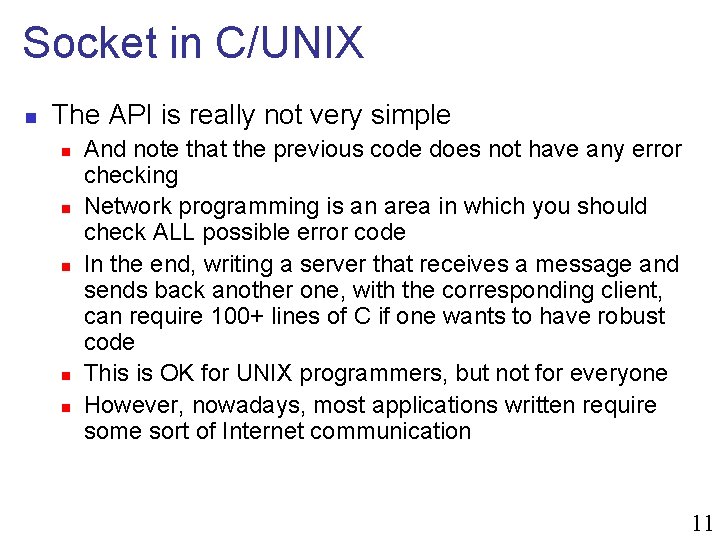

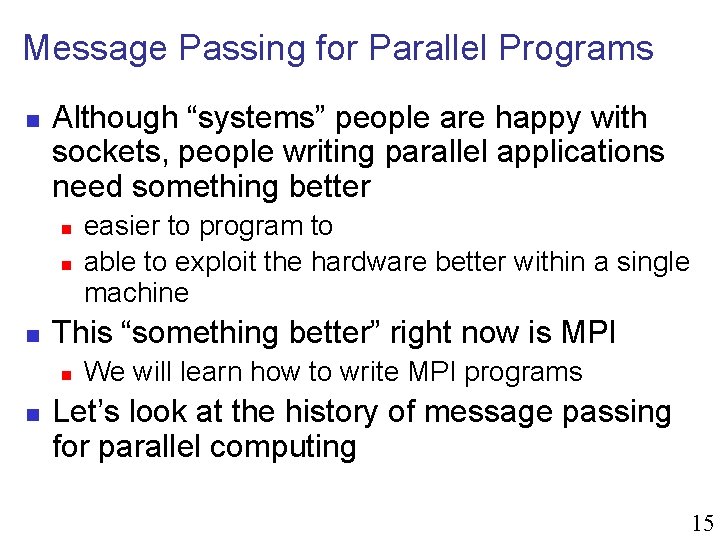

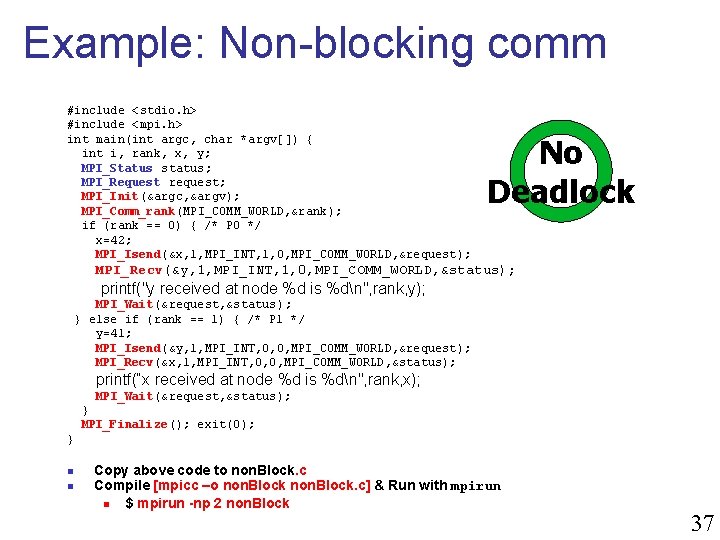

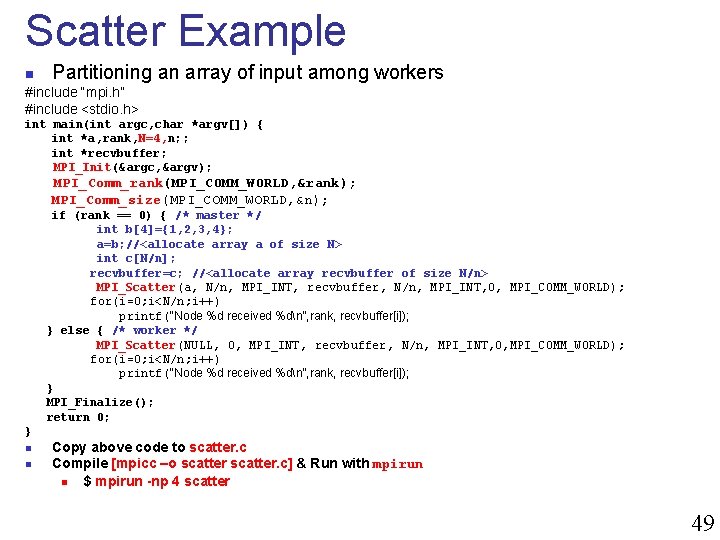

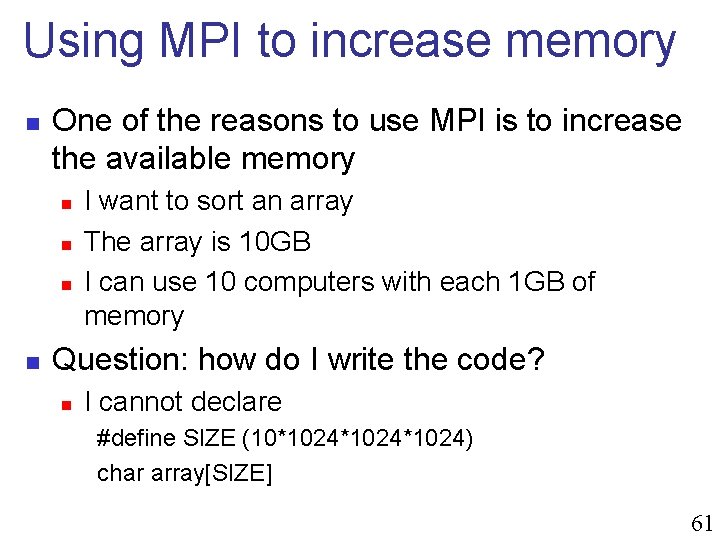

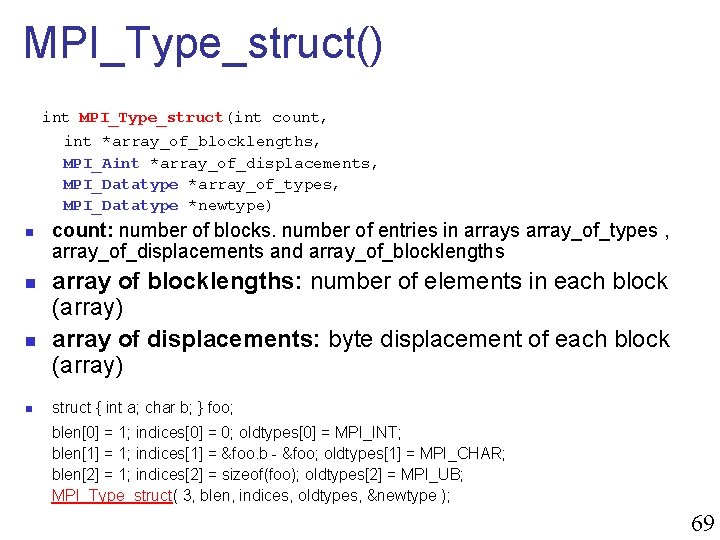

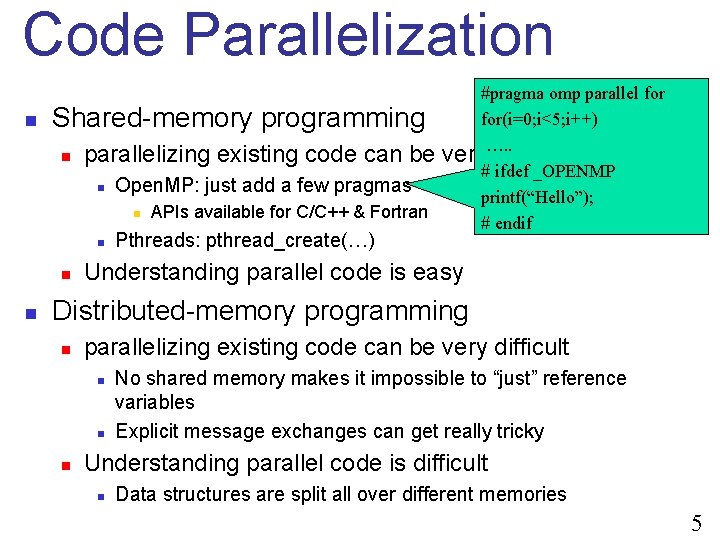

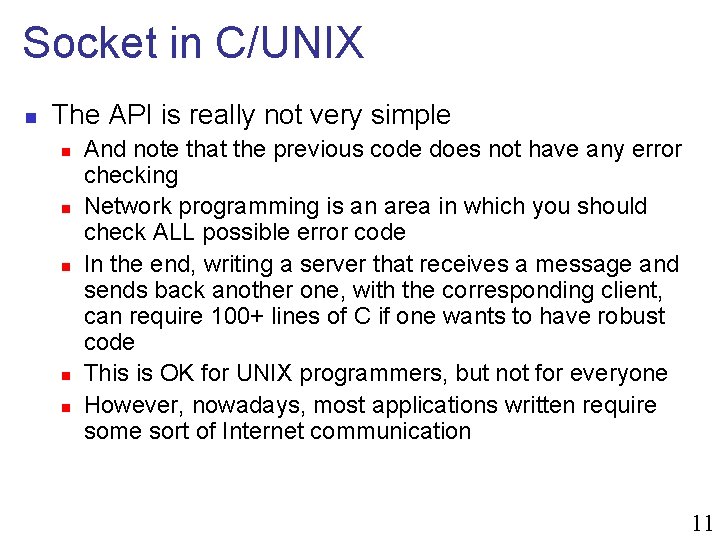

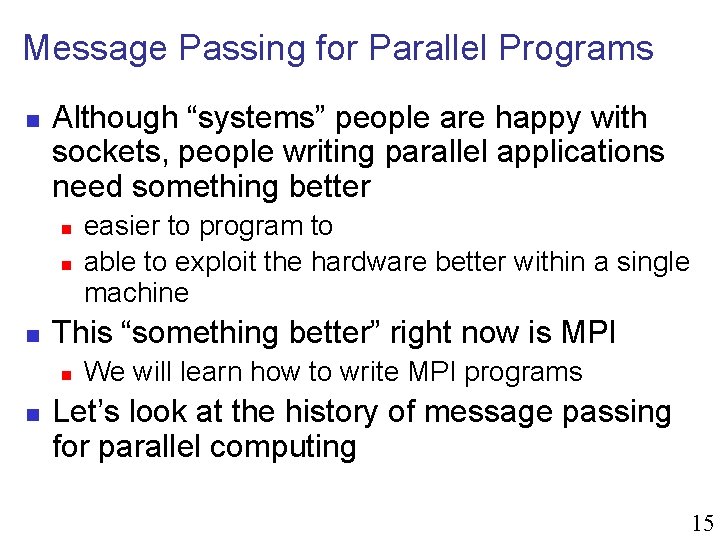

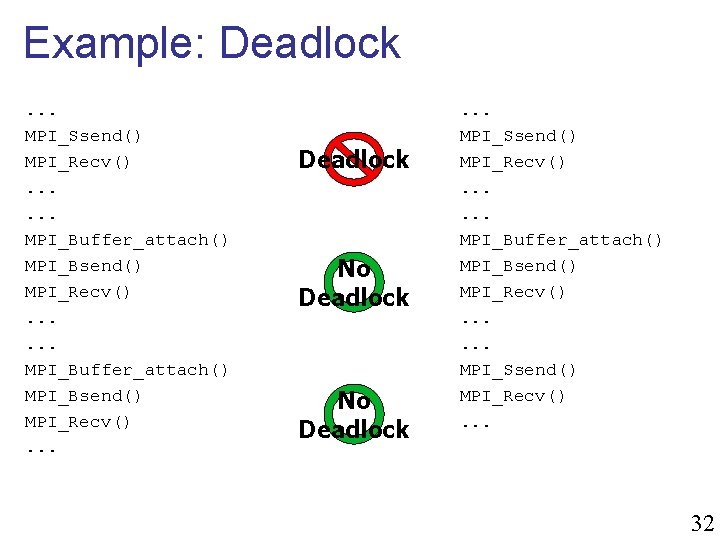

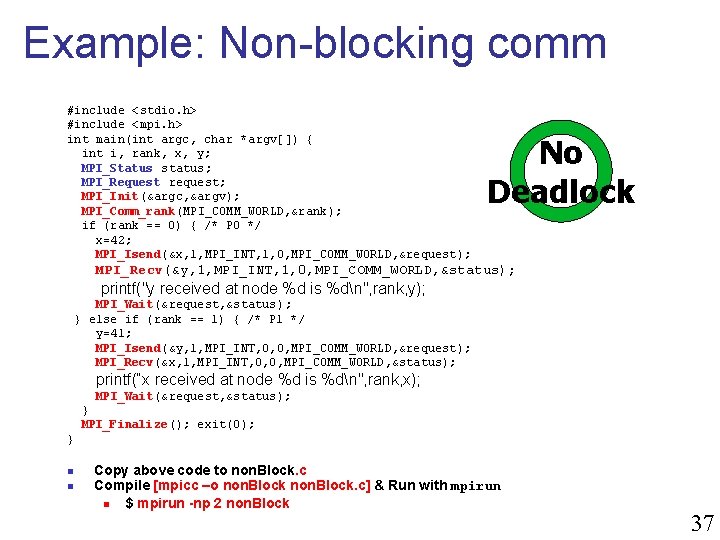

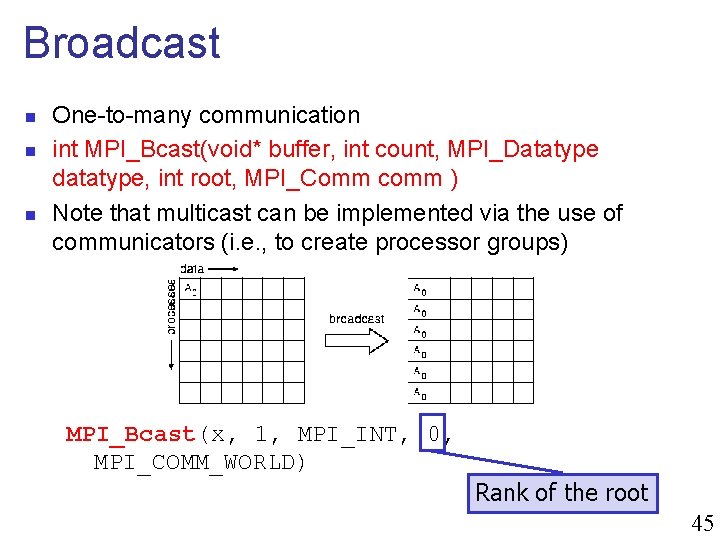

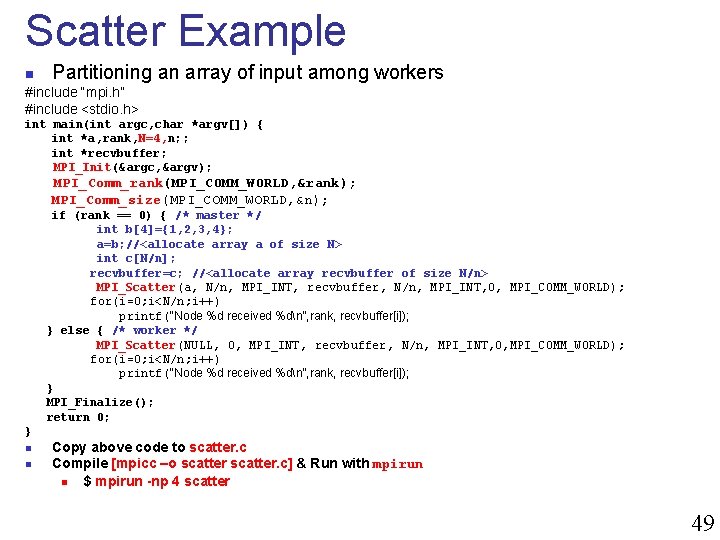

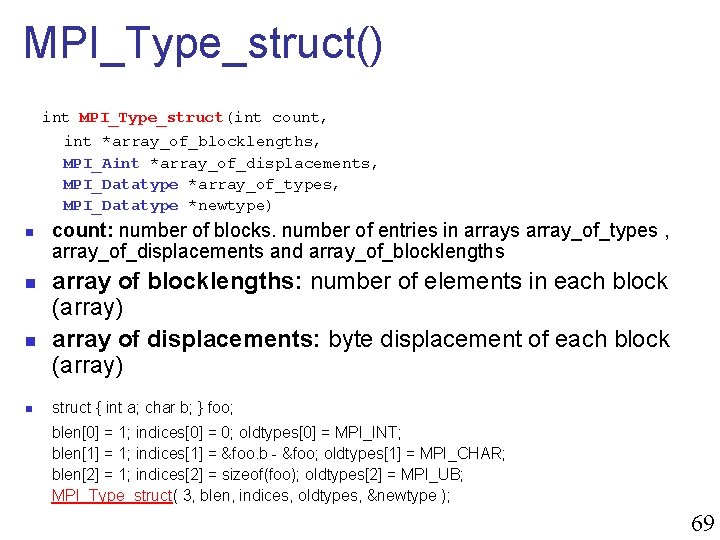

Socket: server.c

    #include <stdio.h>
    #include <strings.h>
    #include <unistd.h>
    #include <sys/socket.h>
    #include <netinet/in.h>

    int main(int argc, char *argv[]) {
      int sockfd, newsockfd, portno;
      socklen_t clilen;
      char buffer[256];
      struct sockaddr_in serv_addr, cli_addr;
      int n;

      sockfd = socket(AF_INET, SOCK_STREAM, 0);           /* create a socket */
      bzero((char *) &serv_addr, sizeof(serv_addr));
      portno = 666;
      serv_addr.sin_family = AF_INET;
      serv_addr.sin_addr.s_addr = INADDR_ANY;
      serv_addr.sin_port = htons(portno);
      bind(sockfd, (struct sockaddr *) &serv_addr, sizeof(serv_addr));  /* bind it to a port */
      listen(sockfd, 5);                                  /* listen on it */
      clilen = sizeof(cli_addr);
      newsockfd = accept(sockfd, (struct sockaddr *) &cli_addr, &clilen);  /* accept a connection */
      bzero(buffer, 256);
      n = read(newsockfd, buffer, 255);
      printf("Here is the message: %s\n", buffer);
      n = write(newsockfd, "I got your message", 18);
      return 0;
    }

![Socket client c int mainint argc char argv int sockfd portno n struct Socket: client. c int main(int argc, char *argv[]) { int sockfd, portno, n; struct](https://slidetodoc.com/presentation_image_h2/1d344a07cded59787c5ddc5fdd31dc46/image-10.jpg)

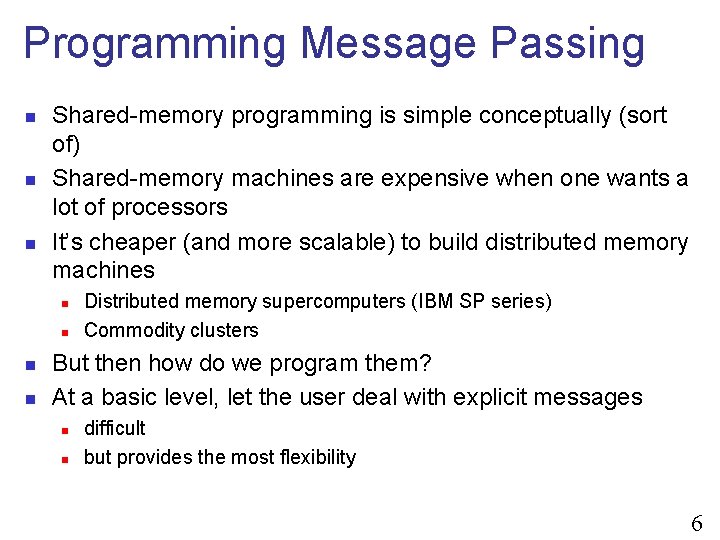

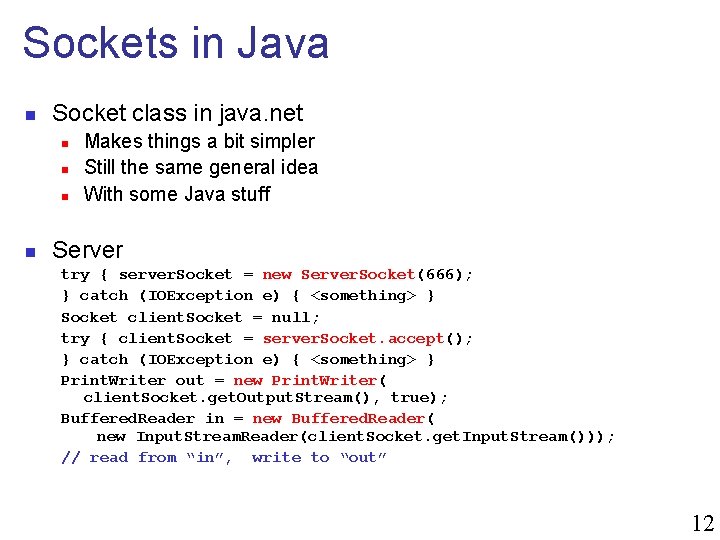

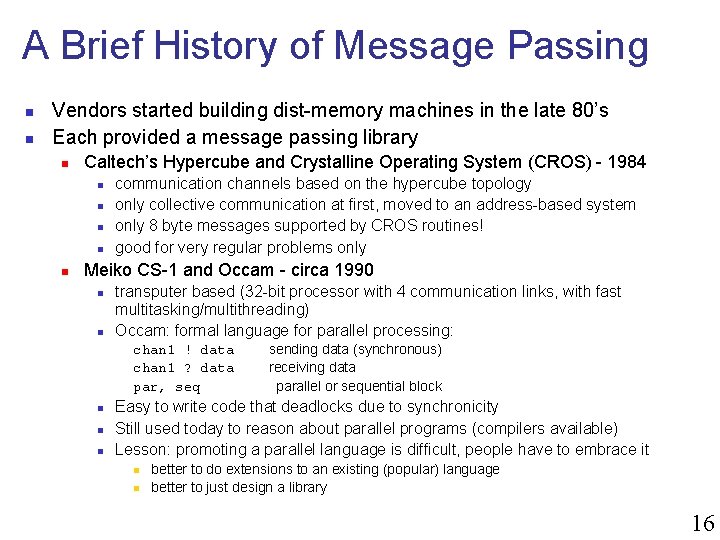

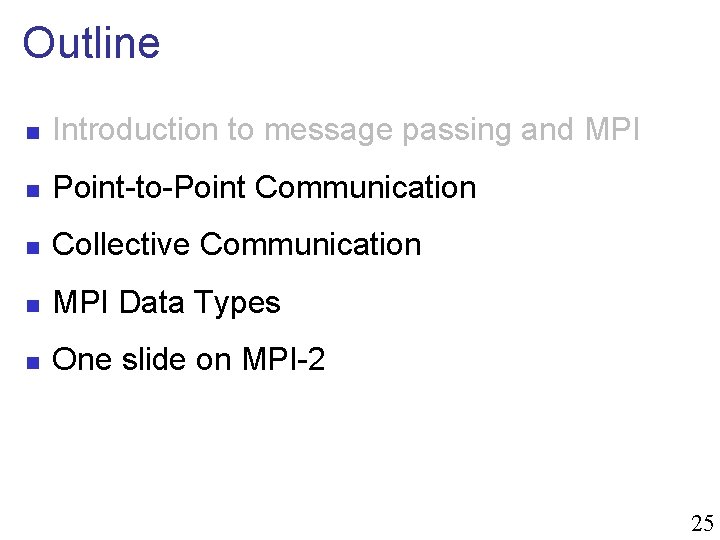

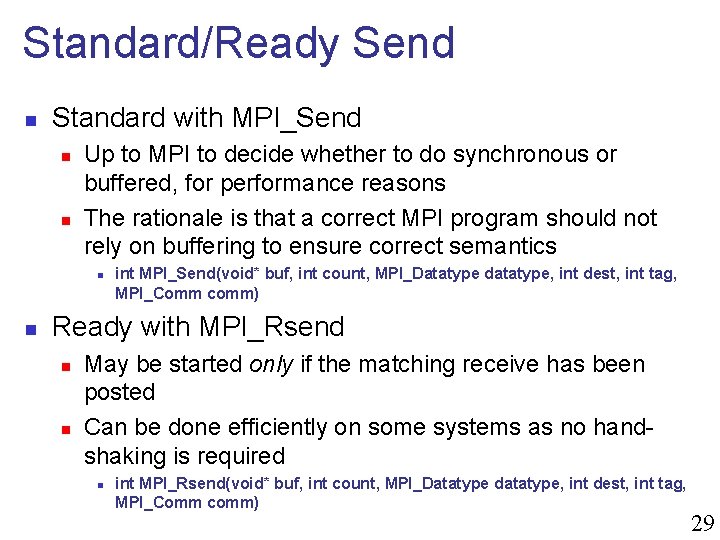

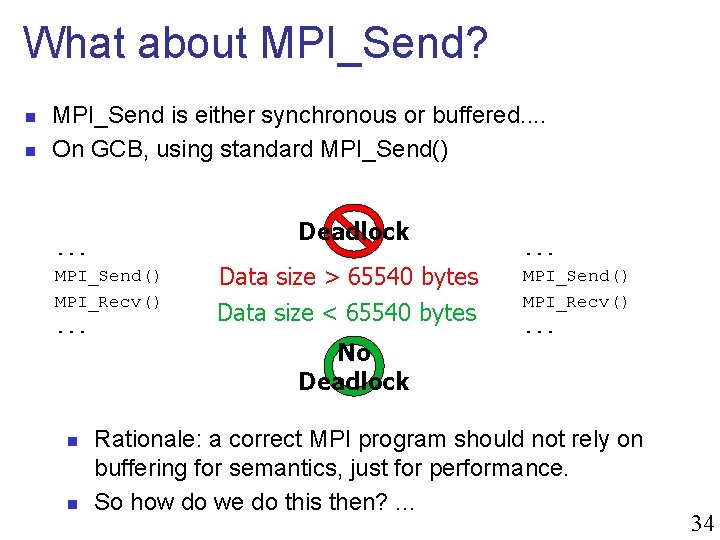

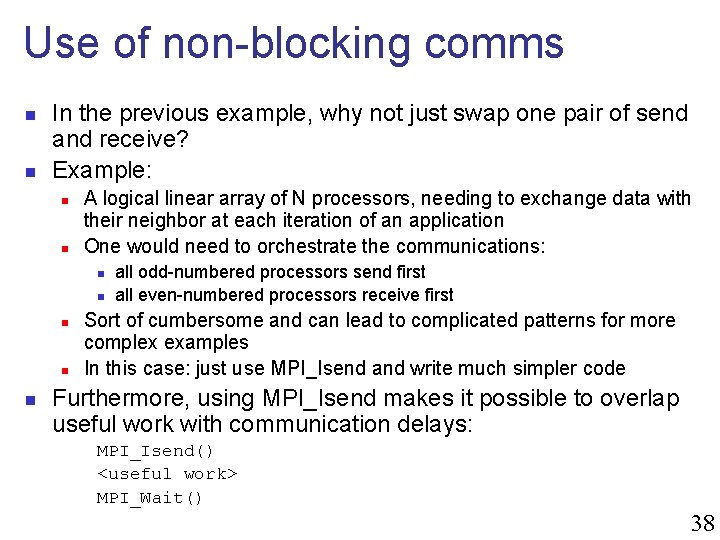

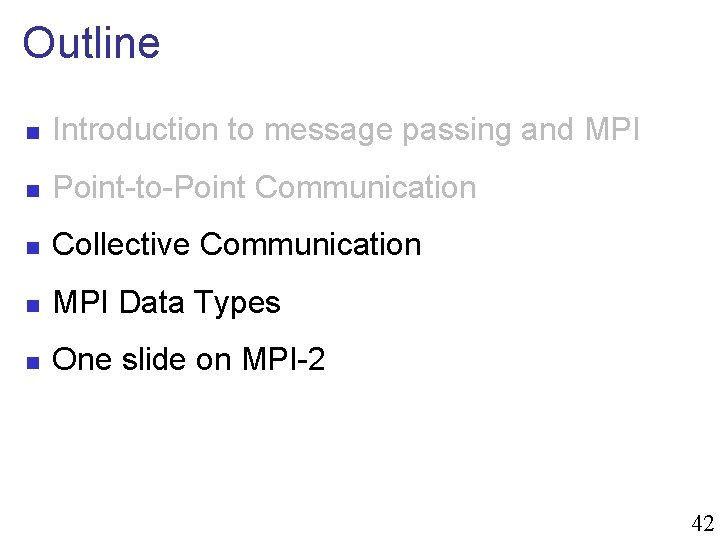

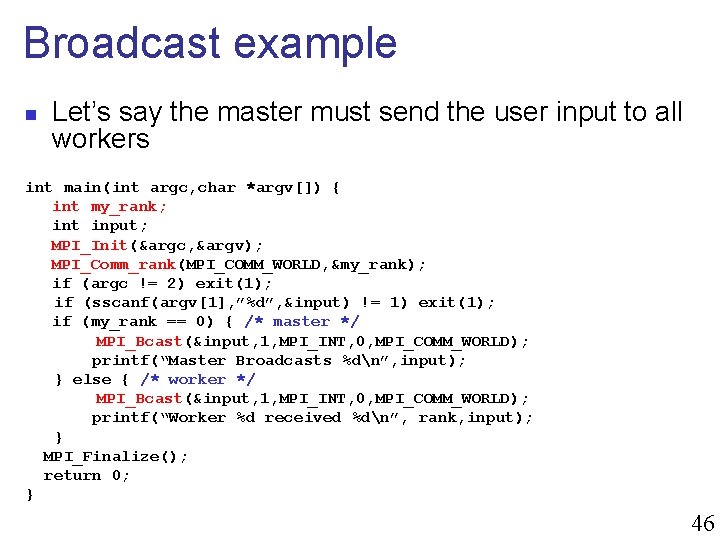

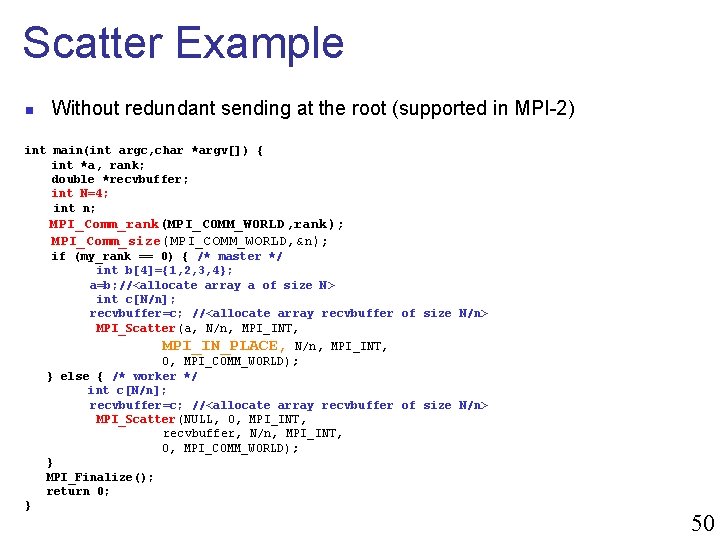

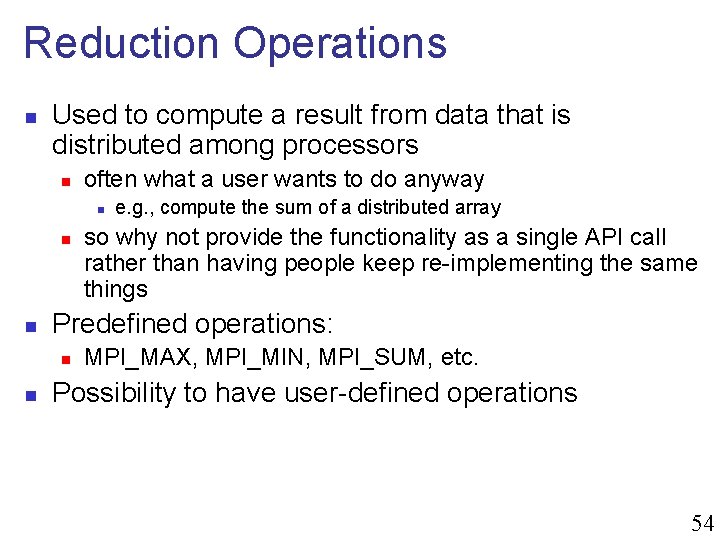

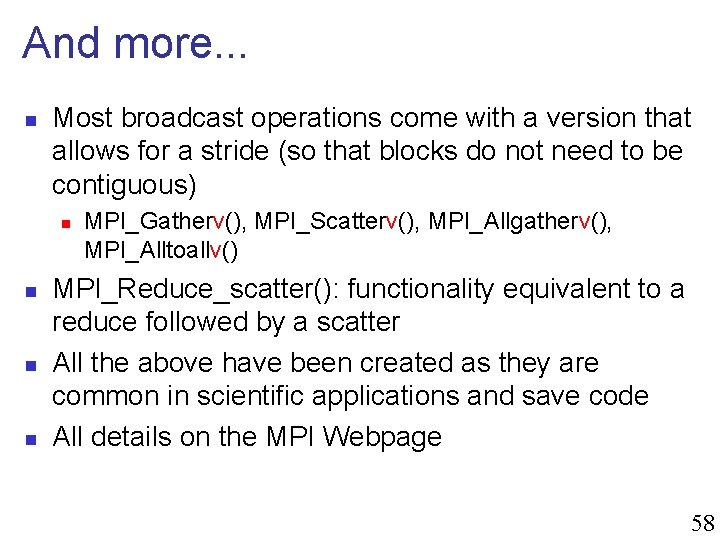

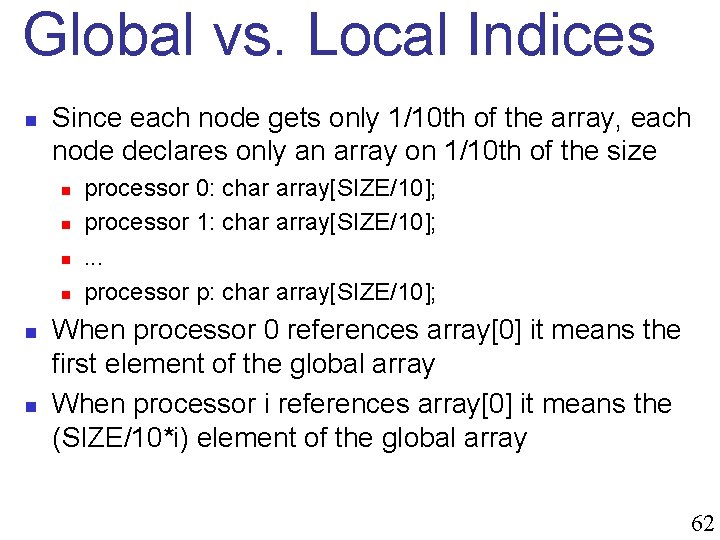

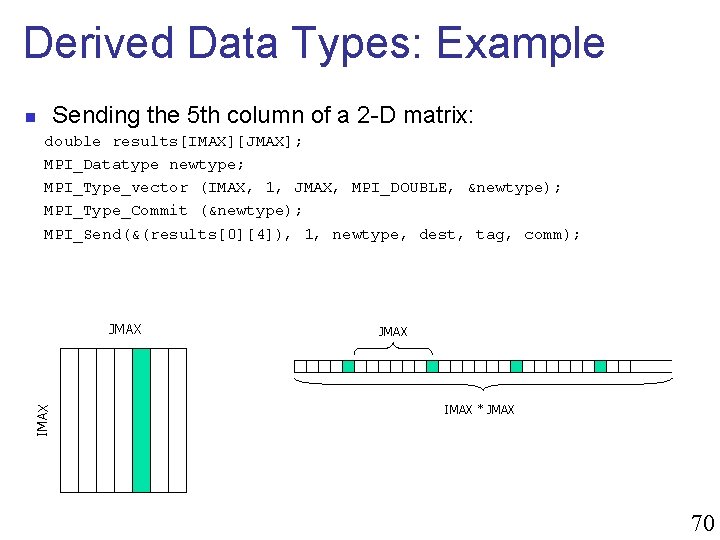

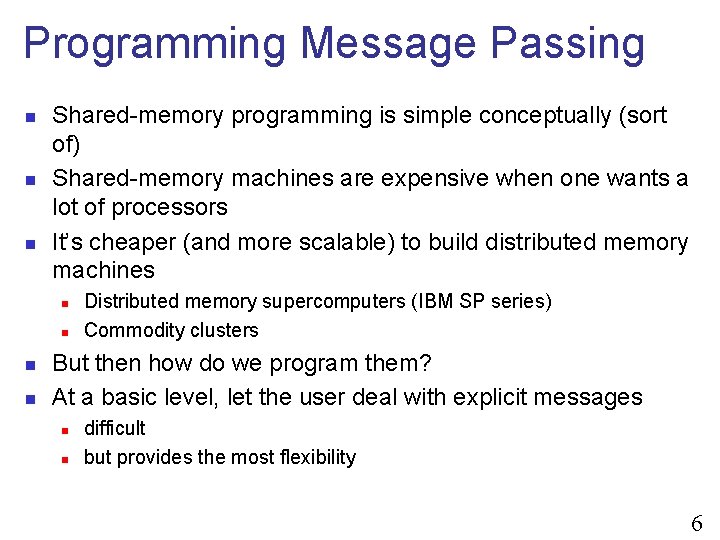

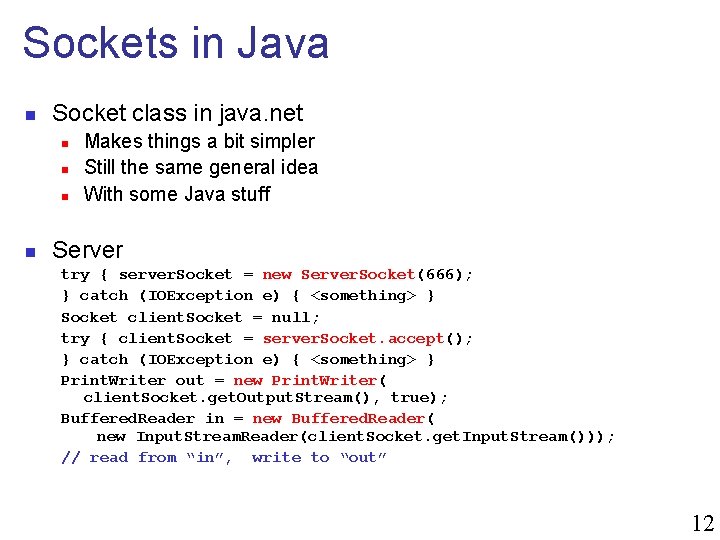

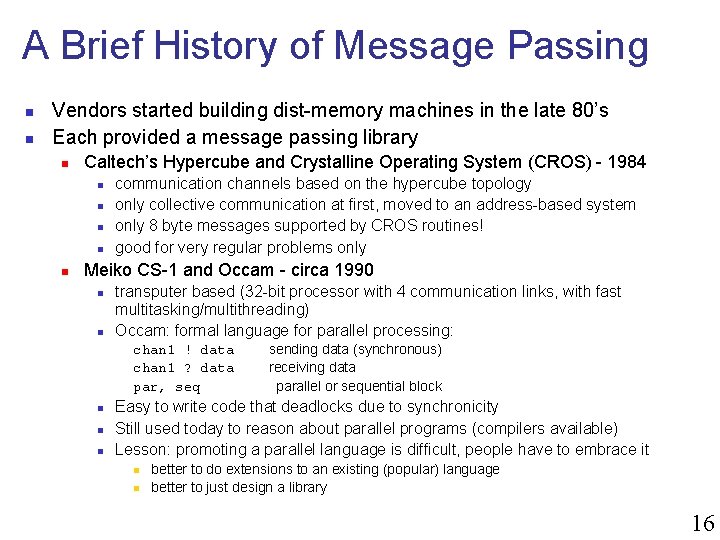

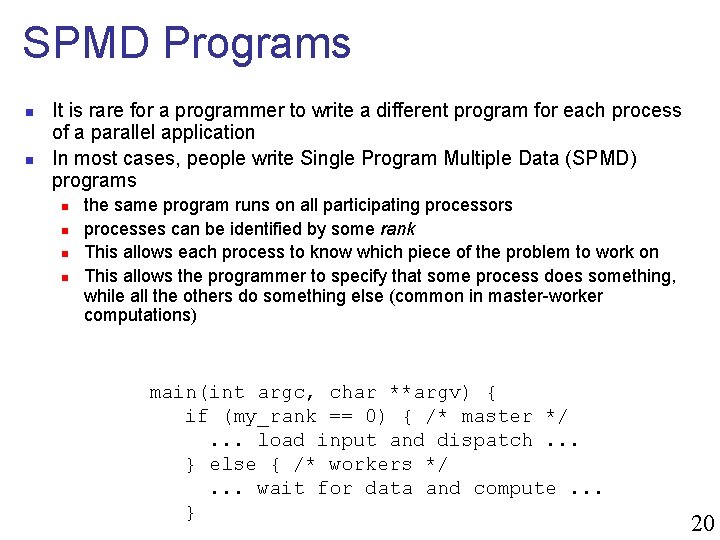

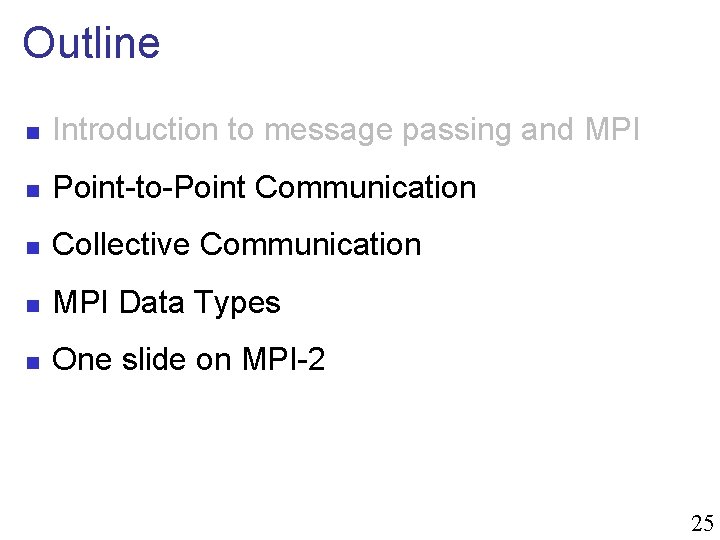

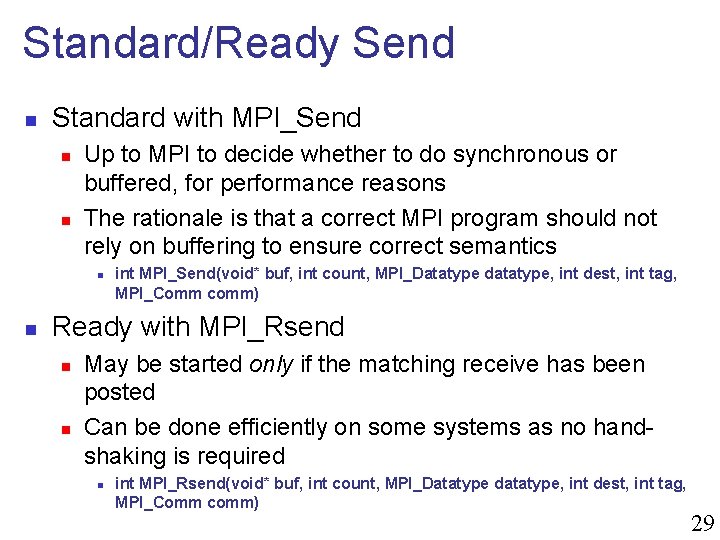

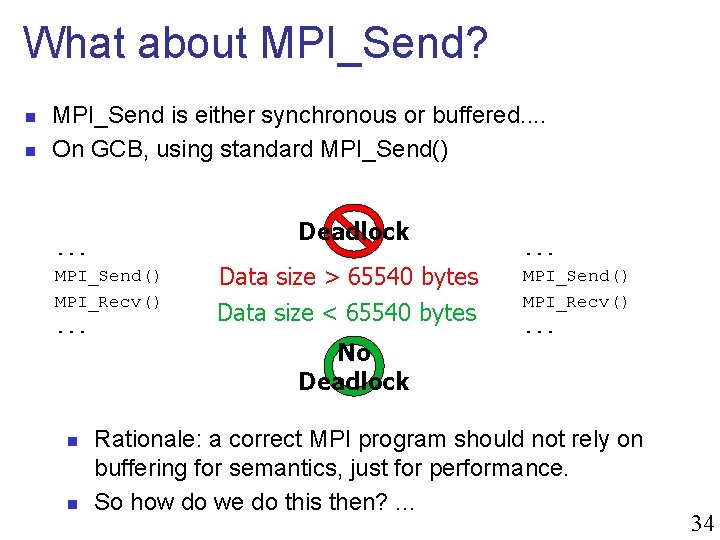

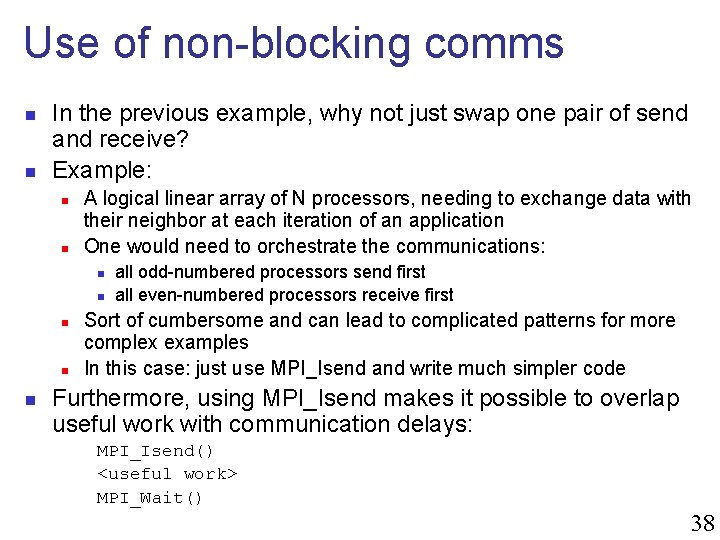

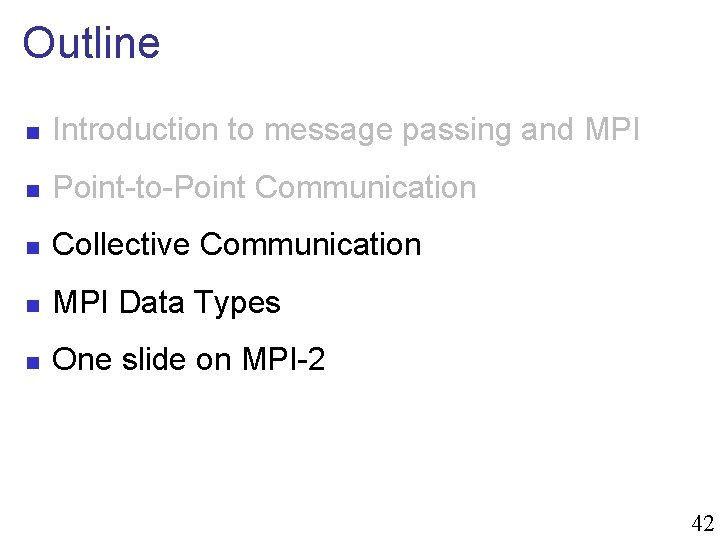

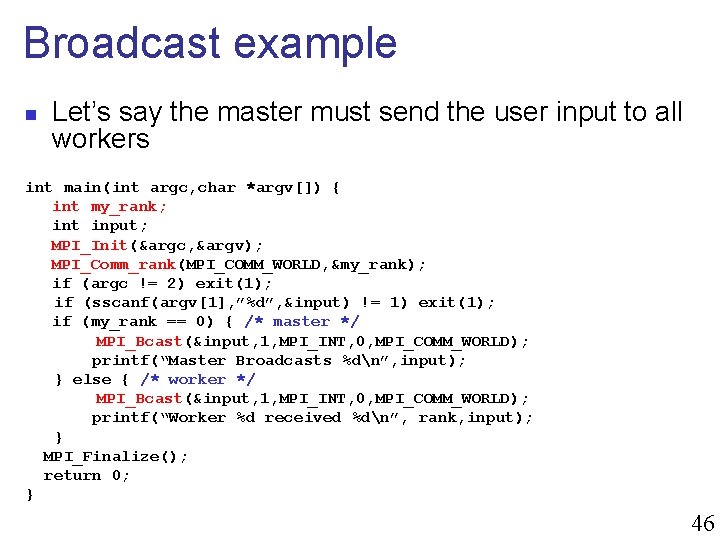

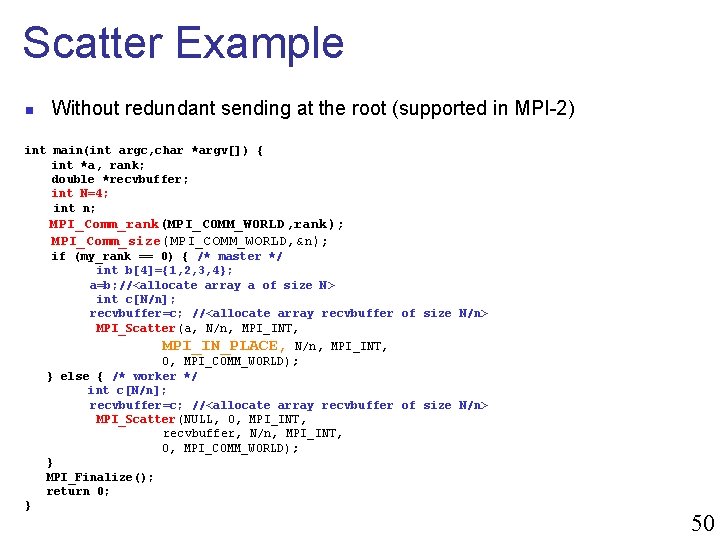

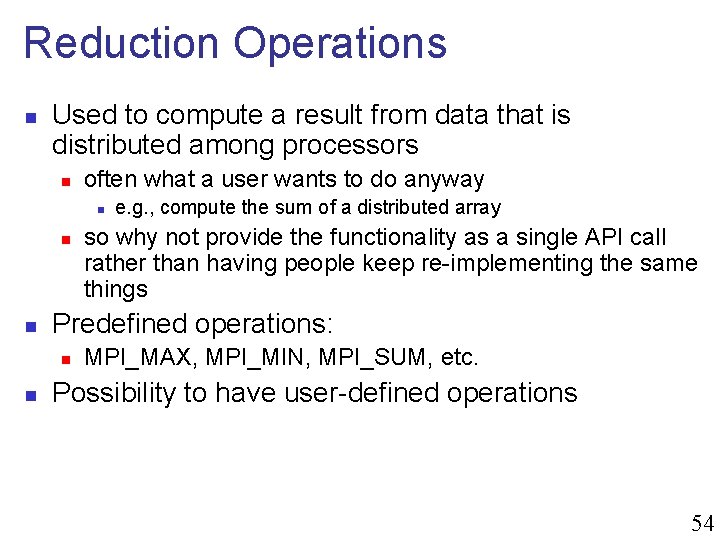

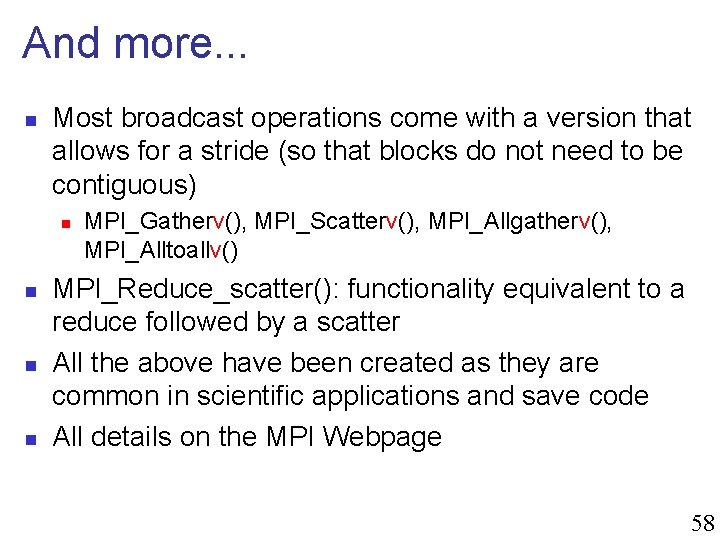

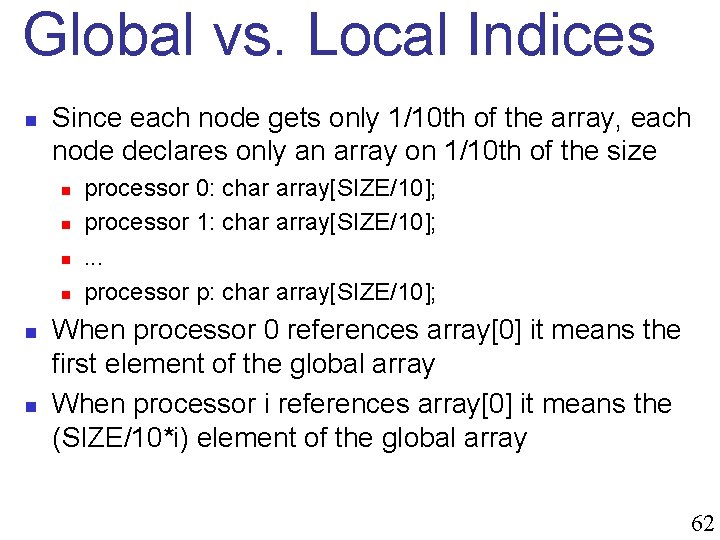

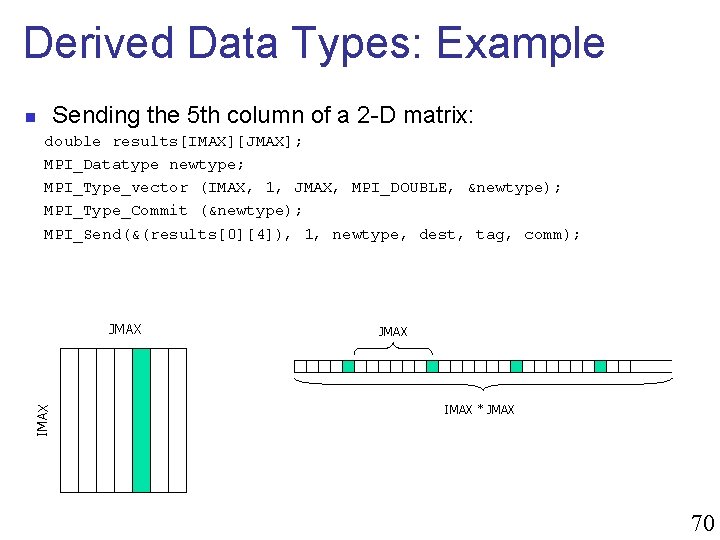

Socket: client.c

    #include <stdio.h>
    #include <string.h>
    #include <strings.h>
    #include <unistd.h>
    #include <sys/socket.h>
    #include <netinet/in.h>
    #include <netdb.h>

    int main(int argc, char *argv[]) {
      int sockfd, portno, n;
      struct sockaddr_in serv_addr;
      struct hostent *server;
      char buffer[256];

      portno = 666;
      sockfd = socket(AF_INET, SOCK_STREAM, 0);           /* create a socket */
      server = gethostbyname("server_host.univ.edu");
      bzero((char *) &serv_addr, sizeof(serv_addr));
      serv_addr.sin_family = AF_INET;
      bcopy((char *) server->h_addr, (char *) &serv_addr.sin_addr.s_addr, server->h_length);
      serv_addr.sin_port = htons(portno);
      connect(sockfd, (struct sockaddr *) &serv_addr, sizeof(serv_addr));  /* connect to host/port */
      printf("Please enter the message: ");
      bzero(buffer, 256);
      fgets(buffer, 255, stdin);
      write(sockfd, buffer, strlen(buffer));
      bzero(buffer, 256);
      read(sockfd, buffer, 255);
      printf("%s\n", buffer);
      return 0;
    }

Socket in C/UNIX

- The API is really not very simple
  - And note that the previous code does not have any error checking
  - Network programming is an area in which you should check ALL possible error codes
  - In the end, writing a server that receives a message and sends back another one, with the corresponding client, can require 100+ lines of C if one wants to have robust code
- This is OK for UNIX programmers, but not for everyone
- However, nowadays, most applications written require some sort of Internet communication
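
The advice about checking every error code can be made concrete with a small wrapper. The following is a minimal sketch that is not part of the original slides: `write_all` and `read_all` are hypothetical helper names, and they only handle partial transfers and interrupted system calls on a descriptor that is already connected.

```c
#include <errno.h>
#include <stddef.h>
#include <unistd.h>

/* Hypothetical helper: write exactly len bytes to fd.
 * Returns 0 on success, -1 on error (errno is left as set by write). */
int write_all(int fd, const void *buf, size_t len) {
  const char *p = buf;
  while (len > 0) {
    ssize_t n = write(fd, p, len);
    if (n < 0) {
      if (errno == EINTR) continue;   /* interrupted by a signal: retry */
      return -1;
    }
    p += n;                           /* a write may be partial: keep going */
    len -= (size_t) n;
  }
  return 0;
}

/* Hypothetical helper: read exactly len bytes from fd.
 * Returns 0 on success, -1 on error or if the stream ends early. */
int read_all(int fd, void *buf, size_t len) {
  char *p = buf;
  while (len > 0) {
    ssize_t n = read(fd, p, len);
    if (n < 0) {
      if (errno == EINTR) continue;   /* interrupted by a signal: retry */
      return -1;
    }
    if (n == 0) return -1;            /* peer closed before len bytes arrived */
    p += n;
    len -= (size_t) n;
  }
  return 0;
}
```

With such wrappers, a call like `write(newsockfd, "I got your message", 18)` could become `if (write_all(newsockfd, "I got your message", 18) != 0) { perror("write"); }`. The calls to `socket`, `bind`, `listen`, `accept` and `connect` would need similar checks of their return values.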

Sockets in Java

- Socket class in java.net
  - Makes things a bit simpler
  - Still the same general idea
  - With some Java stuff
- Server

    try {
      serverSocket = new ServerSocket(666);
    } catch (IOException e) {
      <something>
    }
    Socket clientSocket = null;
    try {
      clientSocket = serverSocket.accept();
    } catch (IOException e) {
      <something>
    }
    PrintWriter out = new PrintWriter(clientSocket.getOutputStream(), true);
    BufferedReader in = new BufferedReader(
        new InputStreamReader(clientSocket.getInputStream()));
    // read from "in", write to "out"

Sockets in Java

- Client

    try {
      socket = new Socket("server.univ.edu", 666);
    } catch (IOException e) {
      <something>
    }
    out = new PrintWriter(socket.getOutputStream(), true);
    in = new BufferedReader(new InputStreamReader(socket.getInputStream()));
    // write to out, read from in

- Much simpler than the C
- Note that if one writes a client-server program one typically creates a Thread after an accept, so that requests can be handled concurrently

Using Sockets for parallel programming?

- One could think of writing all parallel code on a cluster using sockets
  - n nodes in the cluster
  - Each node creates n-1 sockets on n-1 ports
  - All nodes can communicate
- Problems with this approach
  - Complex code
  - Only point-to-point communication
  - But
    - All this complexity could be "wrapped" under a higher-level API
    - And in fact, we'll see that's the basic idea
  - Does not take advantage of fast networking within a cluster/MPP
    - Sockets have "Internet stuff" in them that's not necessary
    - TCP/IP may not even be the right protocol!

Message Passing for Parallel Programs

- Although "systems" people are happy with sockets, people writing parallel applications need something better
  - easier to program to
  - able to exploit the hardware better within a single machine
- This "something better" right now is MPI
  - We will learn how to write MPI programs
- Let's look at the history of message passing for parallel computing

A Brief History of Message Passing

- Vendors started building dist-memory machines in the late 80's
- Each provided a message passing library
  - Caltech's Hypercube and Crystalline Operating System (CROS) - 1984
    - communication channels based on the hypercube topology
    - only collective communication at first, moved to an address-based system
    - only 8 byte messages supported by CROS routines!
    - good for very regular problems only
  - Meiko CS-1 and Occam - circa 1990
    - transputer based (32-bit processor with 4 communication links, with fast multitasking/multithreading)
    - Occam: formal language for parallel processing:

          chan1 ! data    sending data (synchronous)
          chan1 ? data    receiving data
          par, seq        parallel or sequential block

    - Easy to write code that deadlocks due to synchronicity
    - Still used today to reason about parallel programs (compilers available)
    - Lesson: promoting a parallel language is difficult, people have to embrace it
      - better to do extensions to an existing (popular) language
      - better to just design a library

A Brief History of Message Passing...

- The Intel iPSC1, Paragon and NX
  - Originally close to the Caltech Hypercube and CROS
  - iPSC1 had commensurate message passing and computation performance
  - hiding of underlying communication topology (process rank), multiple processes per node, any-to-any message passing, non-synchronous messages, message tags, variable message lengths
  - On the Paragon, NX2 added interrupt-driven communications, some notion of filtering of messages with wildcards, global synchronization, arithmetic reduction operations
  - ALL of the above are part of modern message passing
- IBM SPs and EUI
- Meiko CS-2 and CSTools
- Thinking Machine CM5 and the CMMD Active Message Layer (AML)

A Brief History of Message Passing

- We went from a highly restrictive system like the Caltech hypercube to great flexibility that is in fact very close to today's state-of-the-art of message passing
- The main problem was: impossible to write portable code!
  - programmers became expert of one system
  - the systems would die eventually and one had to relearn a new system
- People started writing "portable" message passing libraries
  - Tricks with macros, PICL, P4, PVM, PARMACS, CHIMPS, Express, etc.
  - The main problem was performance
    - if I invest millions in an IBM-SP, do I really want to use some library that uses (slow) sockets??
- There was no clear winner for a long time
  - although PVM (Parallel Virtual Machine) had won in the end
- After a few years of intense activity and competition, it was agreed that a message passing standard should be developed
  - Designed by committee

The MPI Standard

- MPI Forum set up as early as 1992 to come up with a de facto standard with the following goals:
  - source-code portability
  - allow for efficient implementation (e.g., by vendors)
  - support for heterogeneous platforms
- MPI is not
  - a language
  - an implementation (although it provides hints for implementers)
- June 1995: MPI v1.1 (we're now at MPI v1.2)
  - http://www-unix.mcs.anl.gov/mpi/
  - C and FORTRAN bindings
  - We will use MPI v1.1 from C in the class
- Implementations:
  - well-adopted by vendors
  - free implementations for clusters: MPICH, LAM, CHIMP/MPI
  - research in fault-tolerance: MPICH-V, FT-MPI, MPIFT, etc.

SPMD Programs

- It is rare for a programmer to write a different program for each process of a parallel application
- In most cases, people write Single Program Multiple Data (SPMD) programs
  - the same program runs on all participating processors
  - processes can be identified by some rank
  - This allows each process to know which piece of the problem to work on
  - This allows the programmer to specify that some process does something, while all the others do something else (common in master-worker computations)

    main(int argc, char **argv) {
      if (my_rank == 0) { /* master */
        ... load input and dispatch ...
      } else { /* workers */
        ... wait for data and compute ...
      }
    }

MPI Concepts

- Fixed number of processors
  - When launching the application one must specify the number of processors to use, which remains unchanged throughout execution
- Communicator
  - Abstraction for a group of processes that can communicate
  - A process can belong to multiple communicators
  - Makes it easy to partition/organize the application in multiple layers of communicating processes
  - Default and global communicator: MPI_COMM_WORLD
- Process Rank
  - The index of a process within a communicator
  - Typically the user maps his/her own virtual topology on top of just linear ranks
    - ring, grid, etc.
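
Mapping a virtual topology onto linear ranks is plain index arithmetic. The sketch below is an illustration that is not taken from the slides: the helper names `ring_left`, `ring_right`, `grid_coords` and `grid_rank` are hypothetical, and the grid is assumed to be laid out row by row.

```c
/* Hypothetical helpers: neighbours of a rank on a ring of nprocs processes. */
int ring_left(int rank, int nprocs)  { return (rank - 1 + nprocs) % nprocs; }
int ring_right(int rank, int nprocs) { return (rank + 1) % nprocs; }

/* Hypothetical helper: coordinates of a rank on a grid with ncols columns,
 * assuming ranks are assigned row by row (row-major order). */
void grid_coords(int rank, int ncols, int *row, int *col) {
  *row = rank / ncols;
  *col = rank % ncols;
}

/* Inverse mapping: linear rank of the process at (row, col). */
int grid_rank(int row, int col, int ncols) {
  return row * ncols + col;
}
```

For example, with 4 processes on a ring, rank 0 has left neighbour 3 and right neighbour 1. On a grid with 3 columns, rank 7 sits at row 2, column 1. In an MPI program the `rank` and `nprocs` arguments would come from `MPI_Comm_rank` and `MPI_Comm_size`.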

MPI Communicators

[Figure: the processes of MPI_COMM_WORLD, numbered by rank, with two user-created communicators that each group a subset of them under their own local ranks]
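
One way to obtain user-created communicators is `MPI_Comm_split`. The following is a minimal sketch under an assumed grouping (even world ranks in one communicator, odd ranks in another); the slides do not say how their example communicators were built.

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char *argv[]) {
  int world_rank, world_size, sub_rank, sub_size, color;
  MPI_Comm sub_comm;

  MPI_Init(&argc, &argv);
  MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);
  MPI_Comm_size(MPI_COMM_WORLD, &world_size);

  /* Assumed grouping: processes with the same "color" end up in the same
   * new communicator. The "key" (here the world rank) orders the ranks
   * inside each new communicator. */
  color = world_rank % 2;
  MPI_Comm_split(MPI_COMM_WORLD, color, world_rank, &sub_comm);

  /* Each process now has a second rank, local to its new communicator. */
  MPI_Comm_rank(sub_comm, &sub_rank);
  MPI_Comm_size(sub_comm, &sub_size);
  printf("world rank %d of %d -> group %d, local rank %d of %d\n",
         world_rank, world_size, color, sub_rank, sub_size);

  MPI_Comm_free(&sub_comm);
  MPI_Finalize();
  return 0;
}
```

Each process keeps its rank in MPI_COMM_WORLD and gains a second rank, starting again at 0, inside its own group.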

![A First MPI Program include mpi h includestdio h int mainint argc char argv A First MPI Program #include "mpi. h" #include<stdio. h> int main(int argc, char *argv[])](https://slidetodoc.com/presentation_image_h2/1d344a07cded59787c5ddc5fdd31dc46/image-23.jpg)

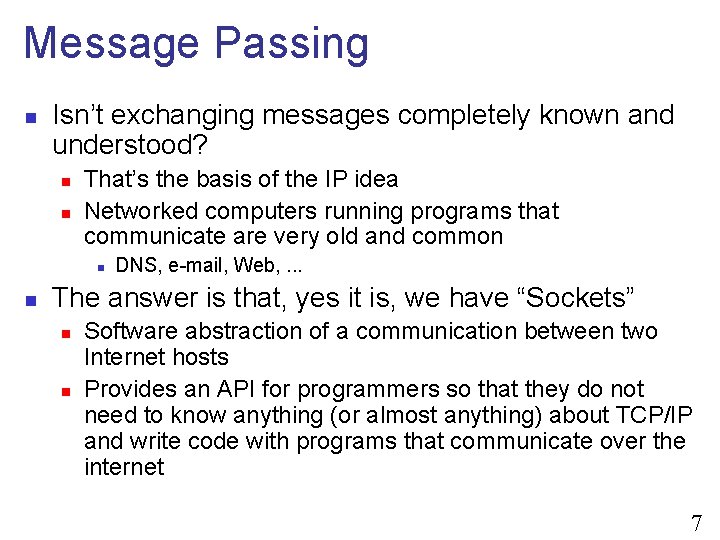

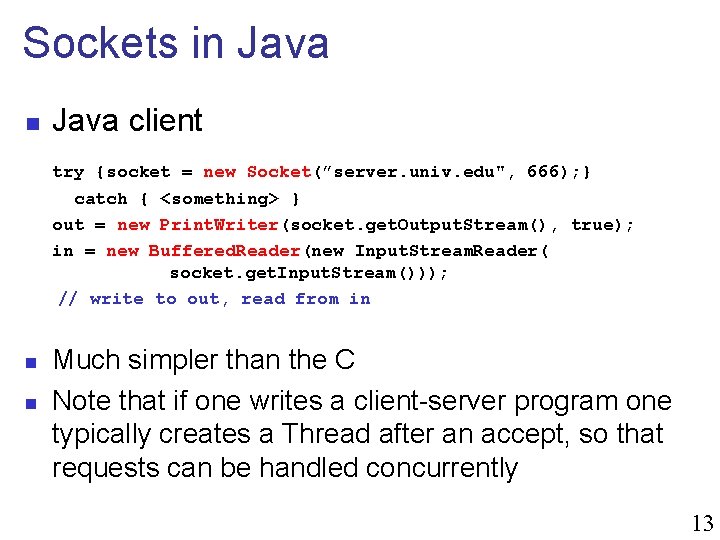

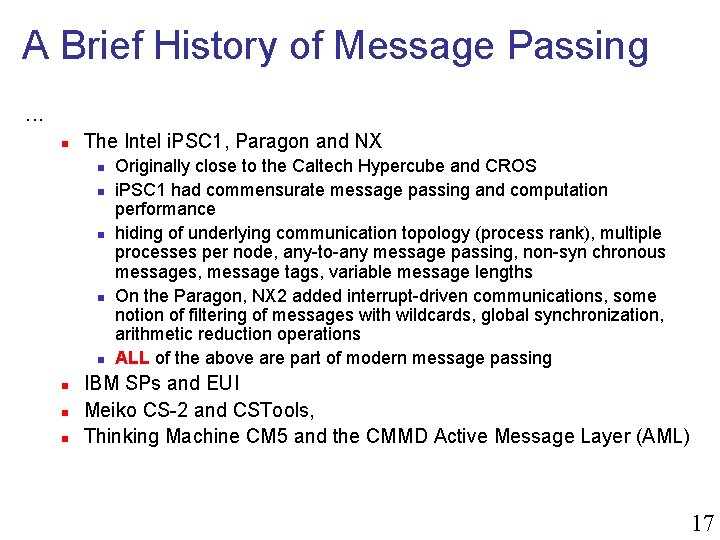

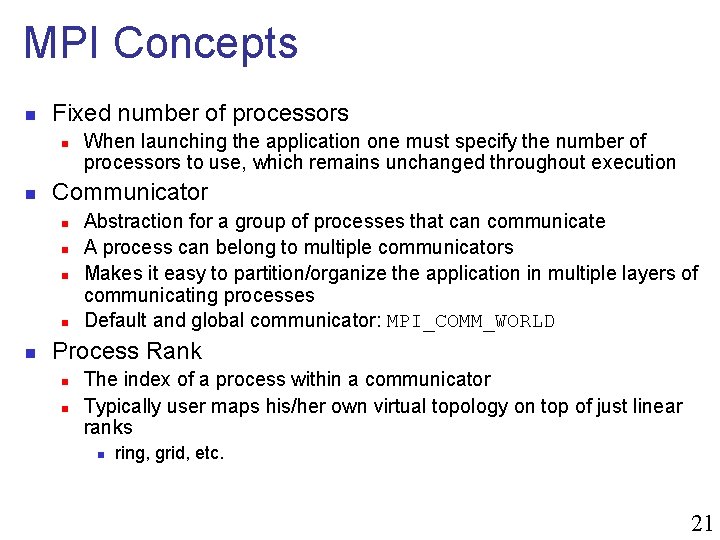

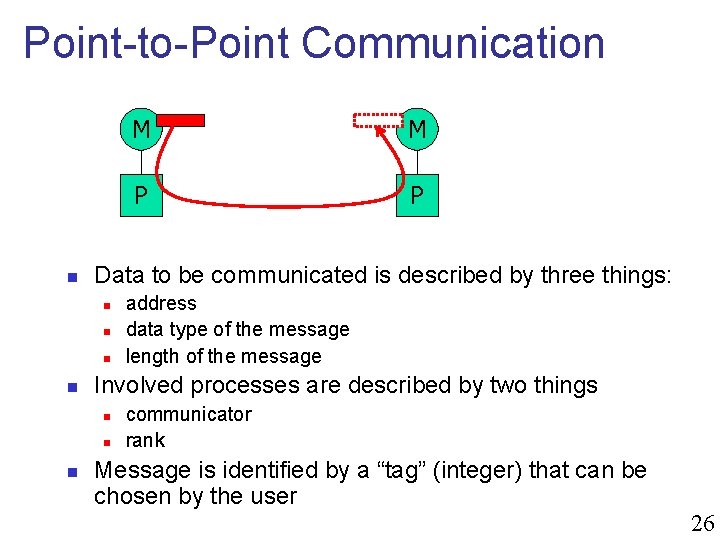

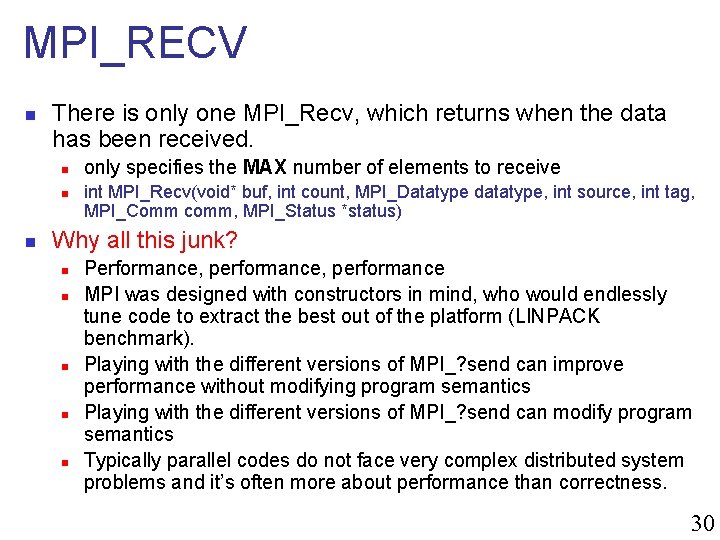

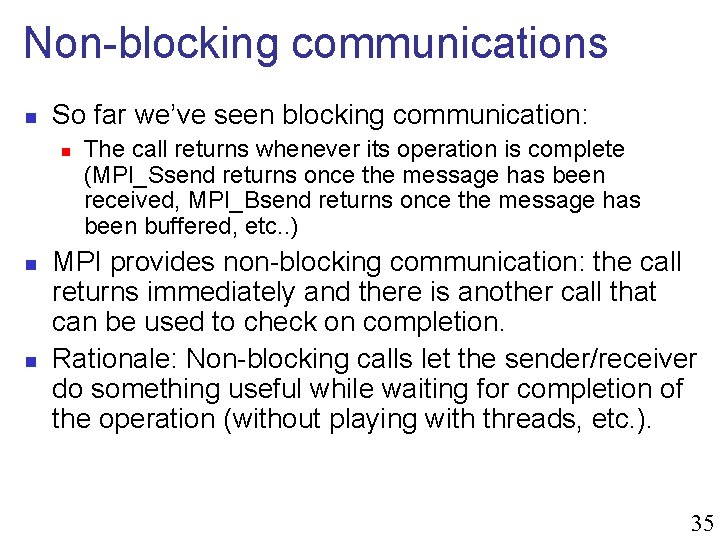

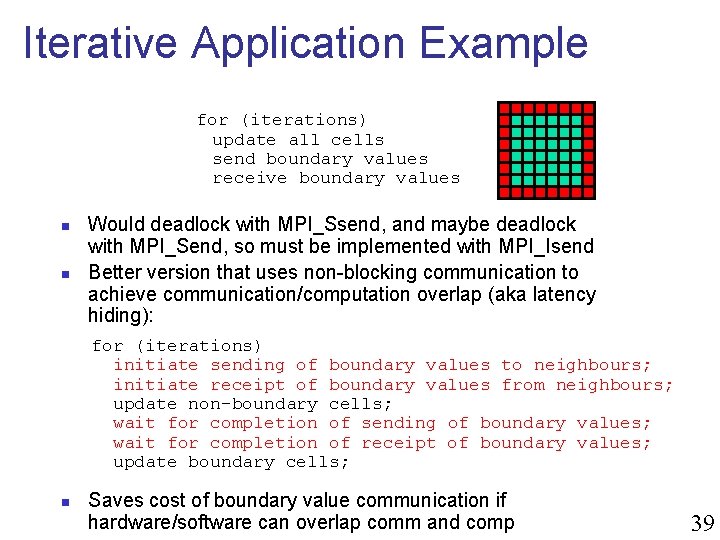

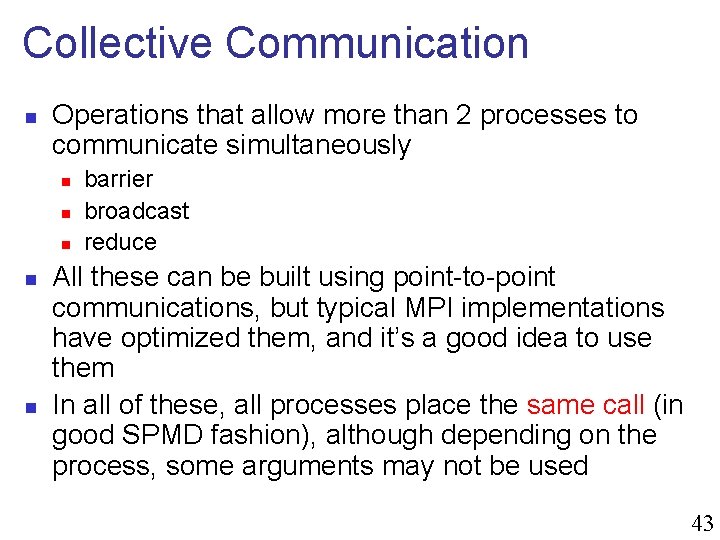

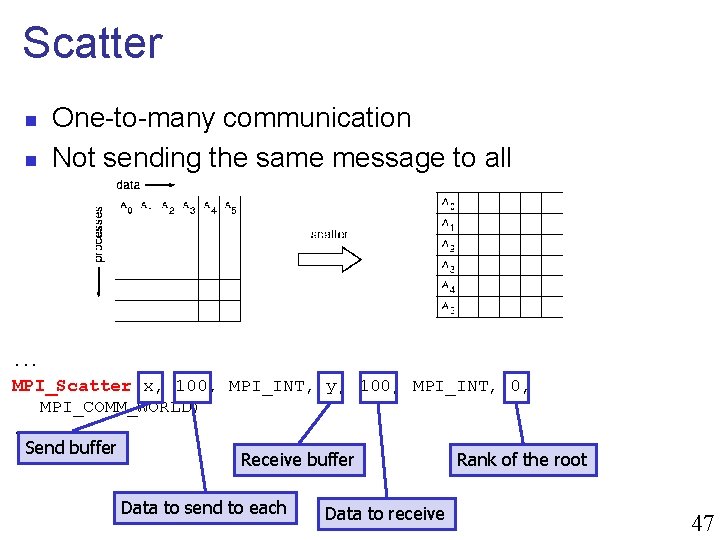

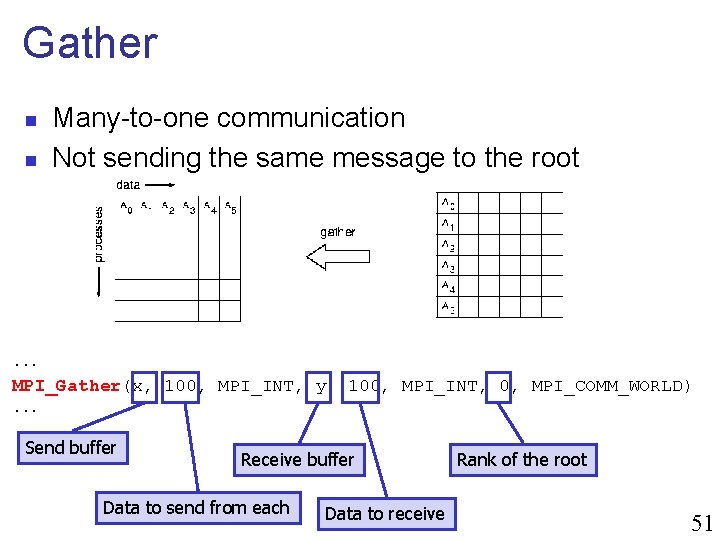

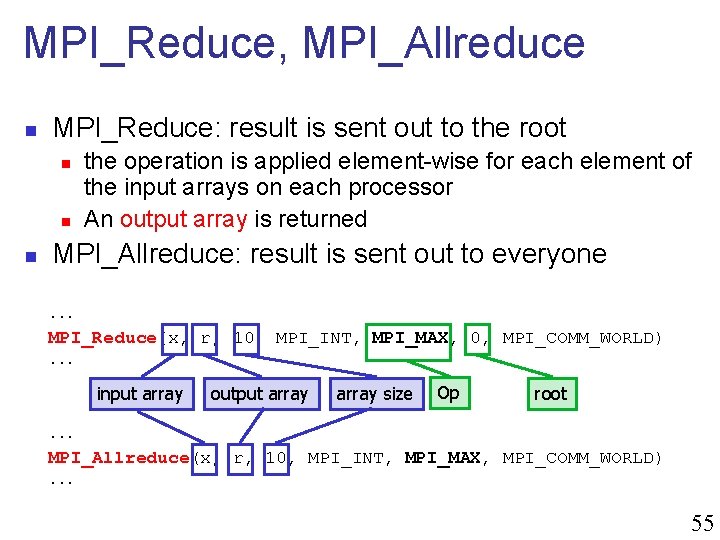

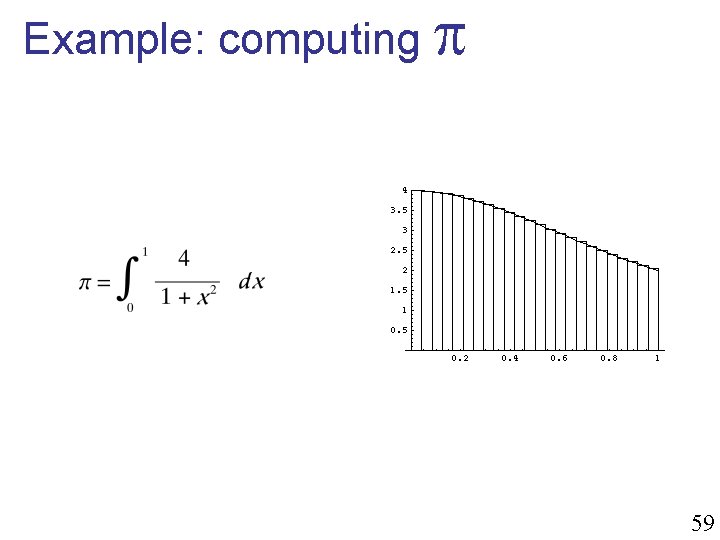

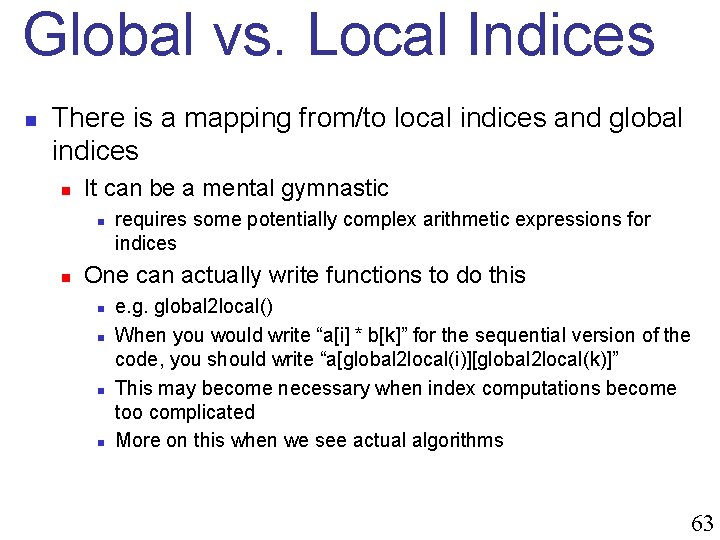

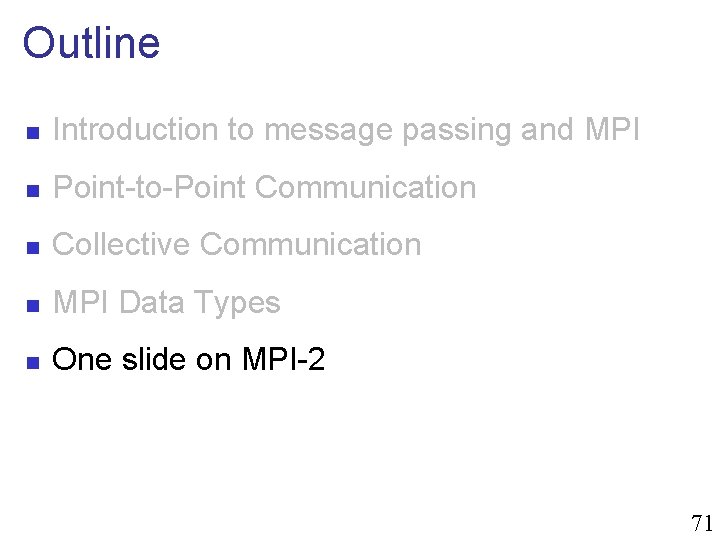

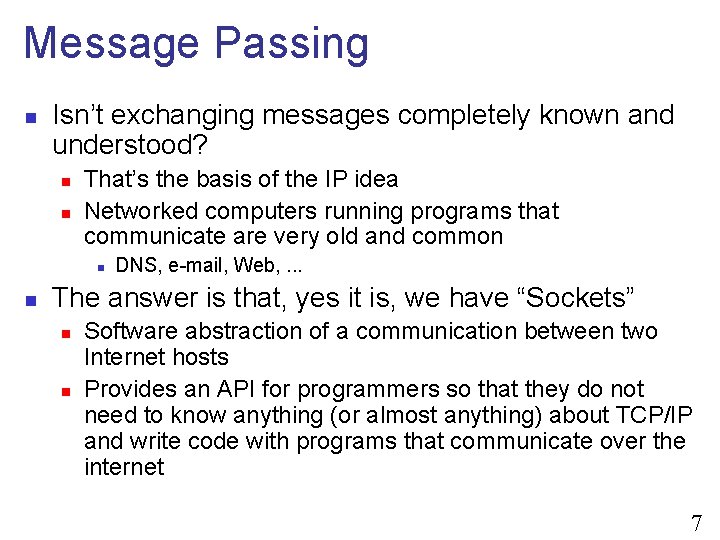

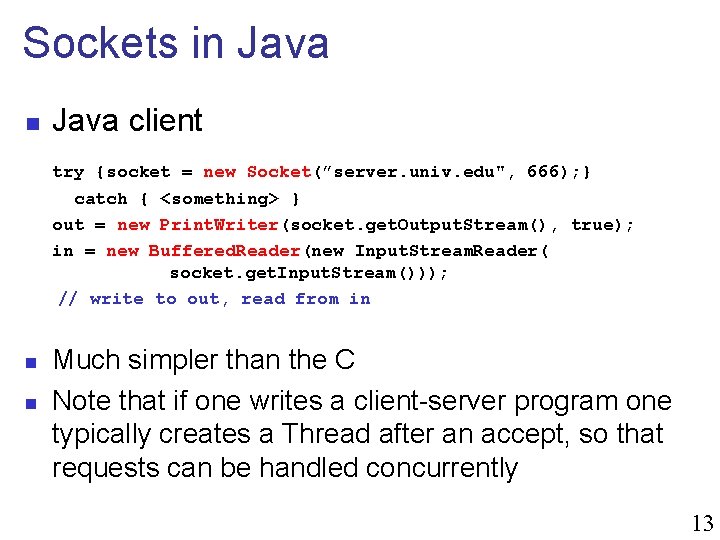

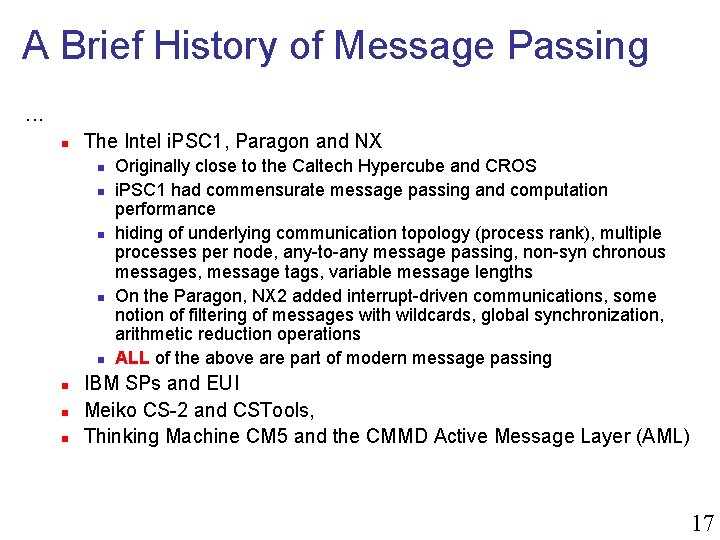

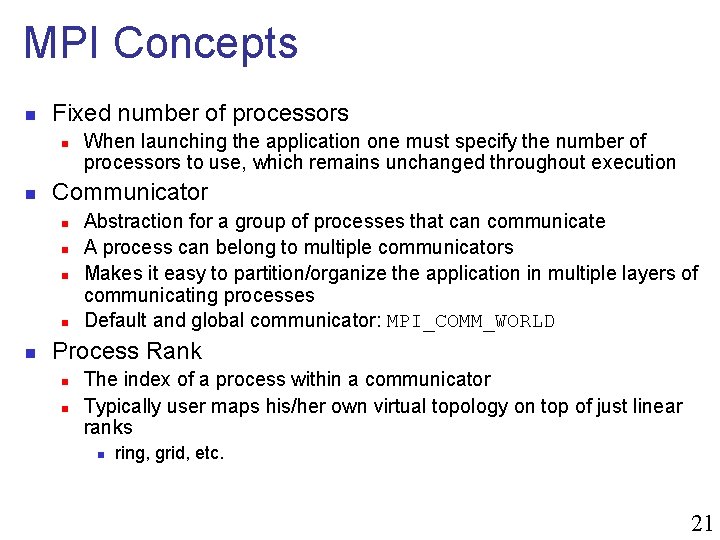

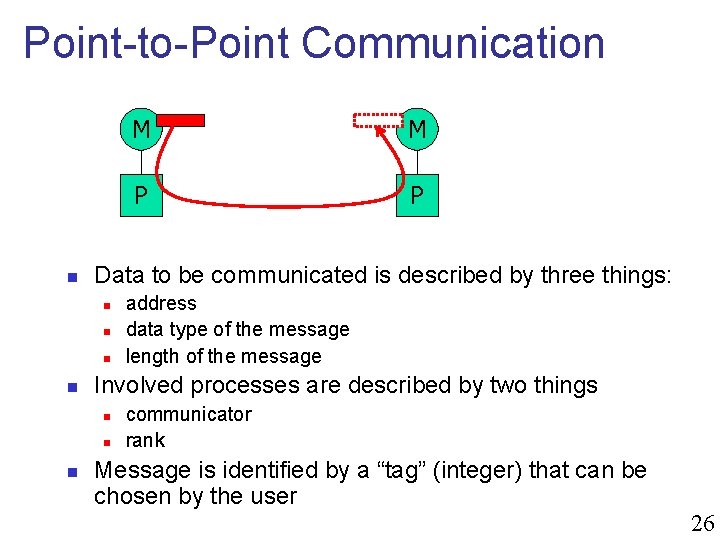

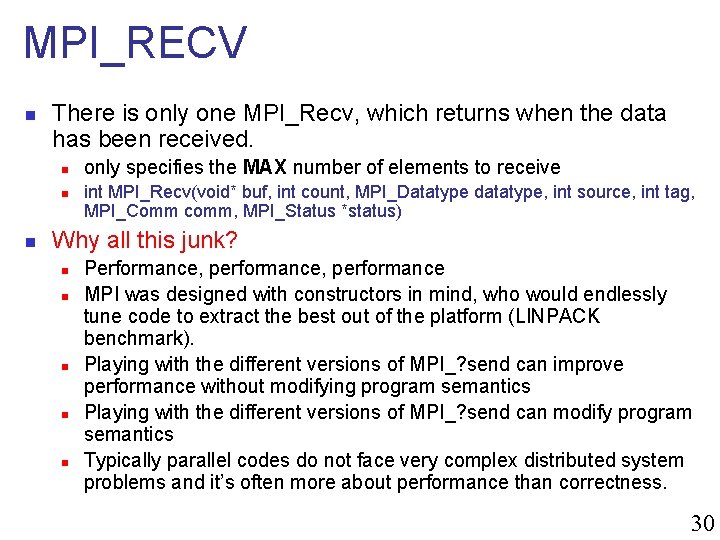

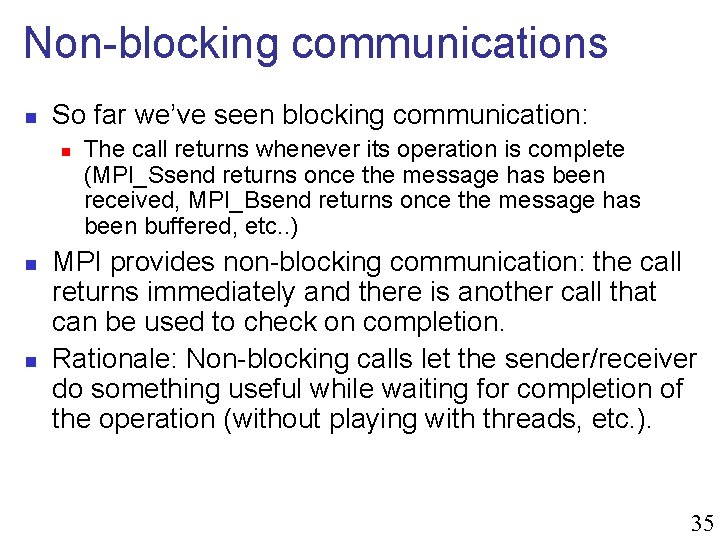

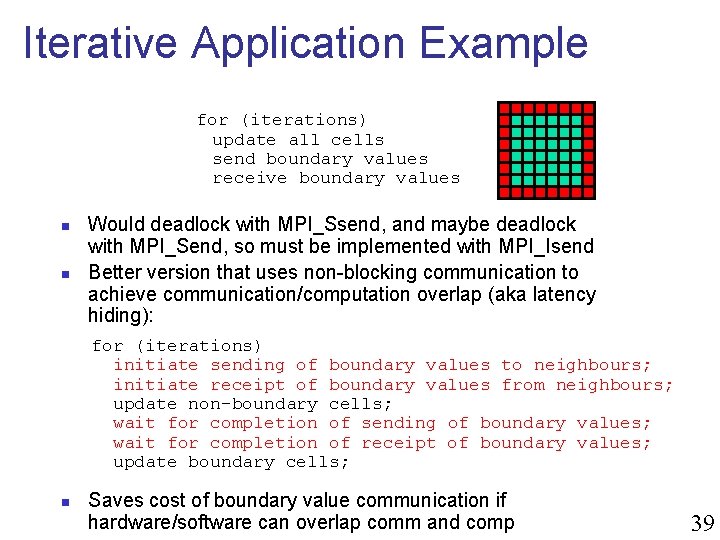

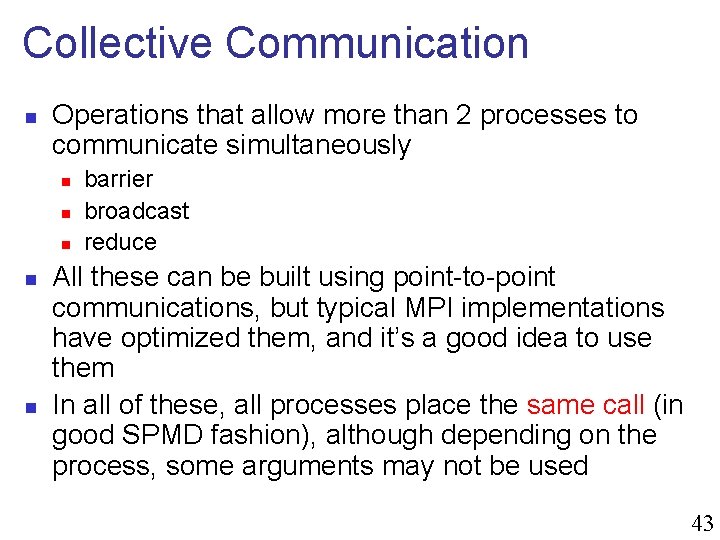

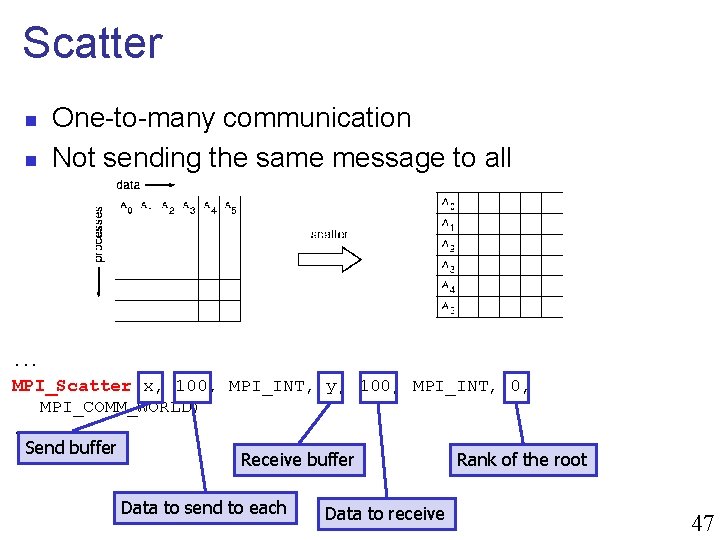

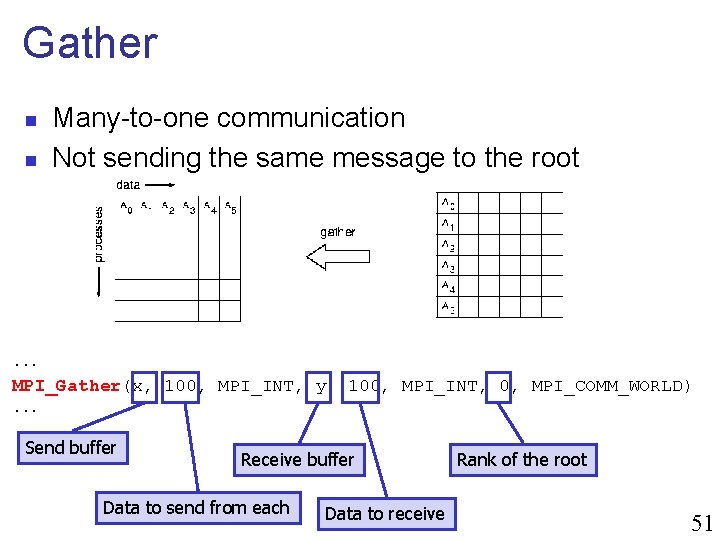

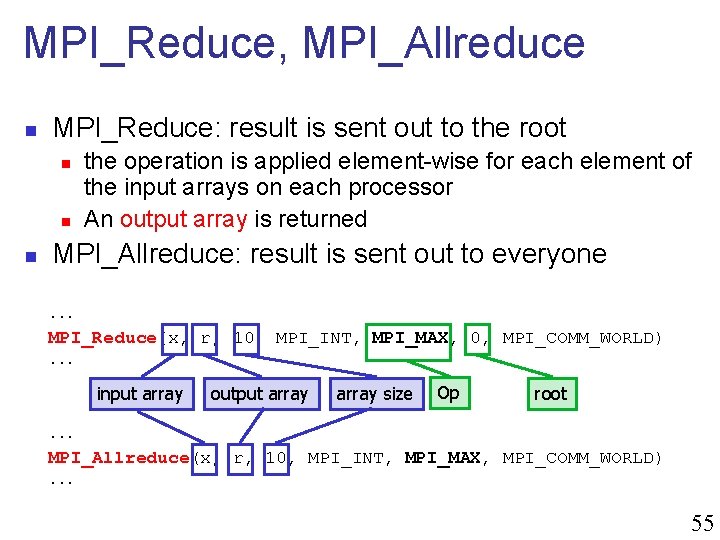

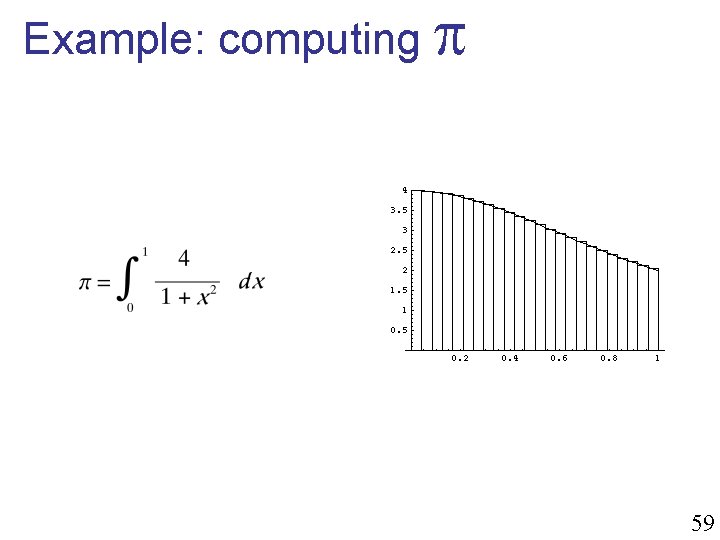

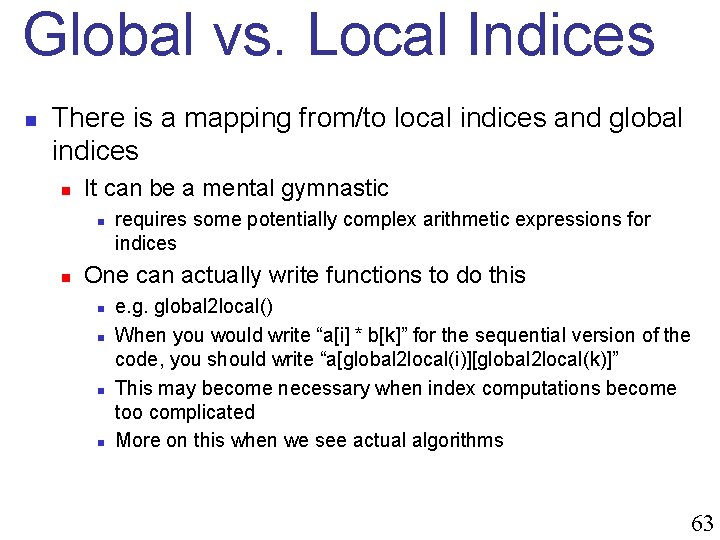

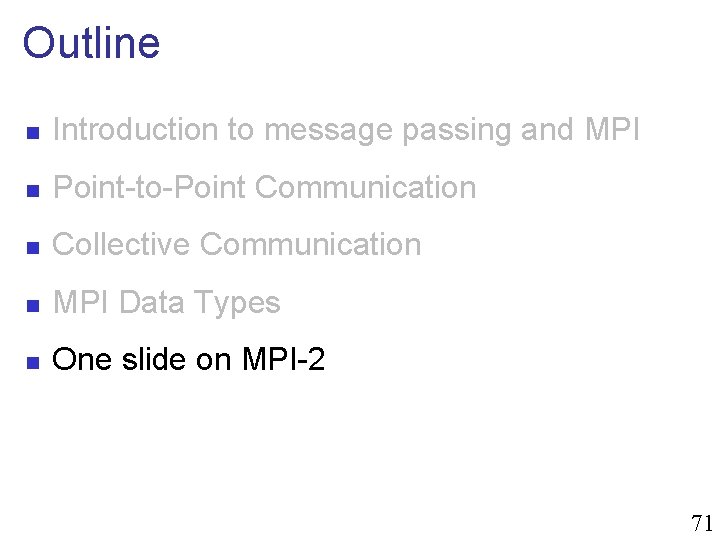

A First MPI Program

    #include "mpi.h"
    #include <stdio.h>
    #include <unistd.h>

    int main(int argc, char *argv[]) {
      int rank, n;
      char hostname[128];

      MPI_Init(&argc, &argv);                 /* Has to be called first, and once */
      MPI_Comm_rank(MPI_COMM_WORLD, &rank);   /* Assigns a rank to a process */
      MPI_Comm_size(MPI_COMM_WORLD, &n);      /* For size of MPI_COMM_WORLD */
      gethostname(hostname, 128);
      if (rank == 0) {
        printf("I am Master: %s\n", hostname);
      } else {
        printf("I am a worker: %s (rank=%d/%d)\n", hostname, rank, n-1);
      }
      MPI_Finalize();                         /* Has to be called last, and once */
      return 0;
    }

Compiling/Running it

- Compile your program
  - mpicc -o first first.c
- Create machines file as follows

    gcb
    compute-0-2
    compute-0-3

- Start LAM (run "$ ssh-agent $SHELL" and "$ ssh-add" first)
  - $ lamboot -v machines
- Run with mpirun
  - $ mpirun -np 3 first
    - requests 3 processors for running first
    - see the mpirun man page for more information
- Clean after run
  - $ lamclean -v
  - $ lamhalt
- Output of previous program

    I am Master: gcb.fiu.edu
    I am a worker: compute-0-3.local (rank=1/2)
    I am a worker: compute-0-2.local (rank=2/2)

Outline

- Introduction to message passing and MPI
- Point-to-Point Communication
- Collective Communication
- MPI Data Types
- One slide on MPI-2

Point-to-Point Communication

[Figure: two processors (P), each with its own memory (M), exchanging a message]

- Data to be communicated is described by three things:
  - address
  - data type of the message
  - length of the message
- Involved processes are described by two things
  - communicator
  - rank
- Message is identified by a "tag" (integer) that can be chosen by the user

Point-to-Point Communication

- Two modes of communication:
  - Synchronous: Communication does not complete until the message has been received
  - Asynchronous: Completes as soon as the message is "on its way", and hopefully it gets to destination
- MPI provides four versions
  - synchronous, buffered, standard, ready

Synchronous/Buffered sending in MPI

- Synchronous with MPI_Ssend
  - The send completes only once the receive has succeeded
    - copy data to the network, wait for an ack
    - The sender has to wait for a receive to be posted
    - No buffering of data
  - int MPI_Ssend(void* buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)
- Buffered with MPI_Bsend
  - The send completes once the message has been buffered internally by MPI
    - Buffering incurs an extra memory copy
    - Does not require a matching receive to be posted
    - May cause buffer overflow if many bsends and no matching receives have been posted yet
  - int MPI_Bsend(void* buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)
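
A buffered send needs buffer space that the program attaches beforehand. The sketch below is not from the slides; it assumes two processes and one message of 4 integers, and it sizes the buffer with `MPI_Pack_size` plus `MPI_BSEND_OVERHEAD` so that the overflow mentioned above cannot occur for this single message.

```c
#include <mpi.h>
#include <stdio.h>
#include <stdlib.h>

int main(int argc, char *argv[]) {
  int rank;
  int x[4] = {42, 43, 44, 45};

  MPI_Init(&argc, &argv);
  MPI_Comm_rank(MPI_COMM_WORLD, &rank);

  if (rank == 0) {
    int packed, bufsize;
    void *buf;

    /* Space for one message of 4 ints plus MPI's per-message bookkeeping. */
    MPI_Pack_size(4, MPI_INT, MPI_COMM_WORLD, &packed);
    bufsize = packed + MPI_BSEND_OVERHEAD;
    buf = malloc(bufsize);
    if (buf == NULL) MPI_Abort(MPI_COMM_WORLD, 1);

    MPI_Buffer_attach(buf, bufsize);
    /* Completes as soon as the data has been copied into the attached buffer. */
    MPI_Bsend(x, 4, MPI_INT, 1, 0, MPI_COMM_WORLD);
    /* Detaching blocks until the buffered message has been handed off. */
    MPI_Buffer_detach(&buf, &bufsize);
    free(buf);
  } else if (rank == 1) {
    int y[4];
    MPI_Status status;
    MPI_Recv(y, 4, MPI_INT, 0, 0, MPI_COMM_WORLD, &status);
    printf("rank 1 received %d %d %d %d\n", y[0], y[1], y[2], y[3]);
  }

  MPI_Finalize();
  return 0;
}
```

The program is meant to be run with at least two processes, for example `mpirun -np 2`. Additional processes simply do nothing.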

Standard/Ready Send

- Standard with MPI_Send
  - Up to MPI to decide whether to do synchronous or buffered, for performance reasons
  - The rationale is that a correct MPI program should not rely on buffering to ensure correct semantics
  - int MPI_Send(void* buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)
- Ready with MPI_Rsend
  - May be started only if the matching receive has been posted
  - Can be done efficiently on some systems as no handshaking is required
  - int MPI_Rsend(void* buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)

MPI_RECV

- There is only one MPI_Recv, which returns when the data has been received.
  - count only specifies the MAX number of elements to receive
  - int MPI_Recv(void* buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status *status)
- Why all this junk?
  - Performance, performance
  - MPI was designed with hardware vendors in mind, who would endlessly tune code to extract the best out of the platform (LINPACK benchmark).
  - Playing with the different versions of MPI_?send can improve performance without modifying program semantics
  - Playing with the different versions of MPI_?send can also modify program semantics
  - Typically parallel codes do not face very complex distributed system problems and it's often more about performance than correctness.

Example: Sending and Receiving

    #include <stdio.h>
    #include <stdlib.h>
    #include <mpi.h>

    int main(int argc, char *argv[]) {
      int i, my_rank, nprocs, x[4];

      MPI_Init(&argc, &argv);
      MPI_Comm_rank(MPI_COMM_WORLD, &my_rank);
      if (my_rank == 0) { /* master */
        x[0]=42; x[1]=43; x[2]=44; x[3]=45;
        MPI_Comm_size(MPI_COMM_WORLD, &nprocs);
        for (i=1; i<nprocs; i++)
          /* i: destination; 0: user-defined tag */
          MPI_Send(x, 4, MPI_INT, i, 0, MPI_COMM_WORLD);
      } else { /* worker */
        MPI_Status status;  /* Can be examined via calls like MPI_Get_count(), etc. */
        /* 4: max number of elements to receive; first 0: source; second 0: user-defined tag */
        MPI_Recv(x, 4, MPI_INT, 0, 0, MPI_COMM_WORLD, &status);
      }
      MPI_Finalize();
      exit(0);
    }
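
Because the count passed to MPI_Recv is only a maximum, the receiver has to ask the status object how much data actually arrived. The sketch below is an illustration that is not part of the slides; it assumes two processes, with the sender deliberately sending fewer elements than the receiver allows.

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char *argv[]) {
  int rank;

  MPI_Init(&argc, &argv);
  MPI_Comm_rank(MPI_COMM_WORLD, &rank);

  if (rank == 0) {
    int x[2] = {42, 43};
    /* Only 2 elements are sent, with tag 7 (an arbitrary choice). */
    MPI_Send(x, 2, MPI_INT, 1, 7, MPI_COMM_WORLD);
  } else if (rank == 1) {
    int y[4], count;
    MPI_Status status;

    /* Up to 4 elements are accepted, from any sender and with any tag. */
    MPI_Recv(y, 4, MPI_INT, MPI_ANY_SOURCE, MPI_ANY_TAG, MPI_COMM_WORLD, &status);

    /* The status tells how many elements arrived, from whom, and with which tag. */
    MPI_Get_count(&status, MPI_INT, &count);
    printf("received %d element(s) from rank %d with tag %d\n",
           count, status.MPI_SOURCE, status.MPI_TAG);
  }

  MPI_Finalize();
  return 0;
}
```

Sending more elements than the receiver's count allows is an error, so the receive buffer should be sized for the largest message expected.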

Example: Deadlock

    Process A               Process B
    ...                     ...
    MPI_Ssend()             MPI_Ssend()             Deadlock
    MPI_Recv()              MPI_Recv()
    ...                     ...

    ...                     ...
    MPI_Buffer_attach()     MPI_Buffer_attach()
    MPI_Bsend()             MPI_Bsend()             No Deadlock
    MPI_Recv()              MPI_Recv()
    ...                     ...

    ...                     ...
    MPI_Buffer_attach()     MPI_Ssend()
    MPI_Bsend()             MPI_Recv()              No Deadlock
    MPI_Recv()              ...
    ...

![include mpi h includestdio h int mainint argc char argv int i #include "mpi. h" #include<stdio. h> int main(int argc, char *argv[ ]) { int i,](https://slidetodoc.com/presentation_image_h2/1d344a07cded59787c5ddc5fdd31dc46/image-33.jpg)

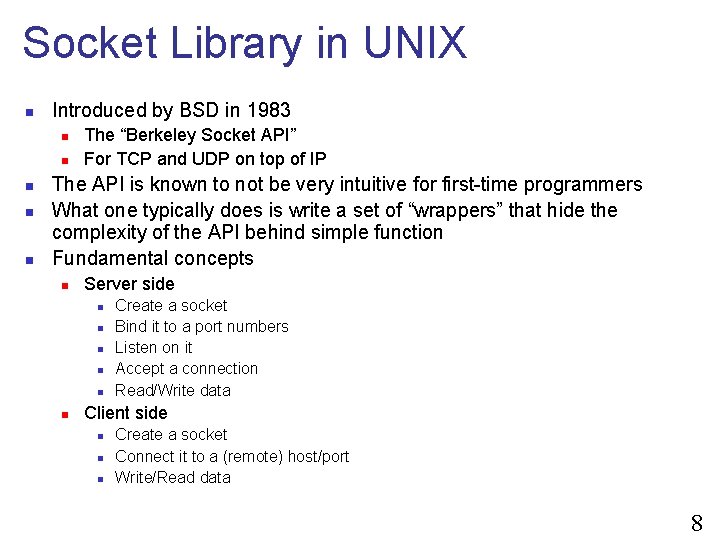

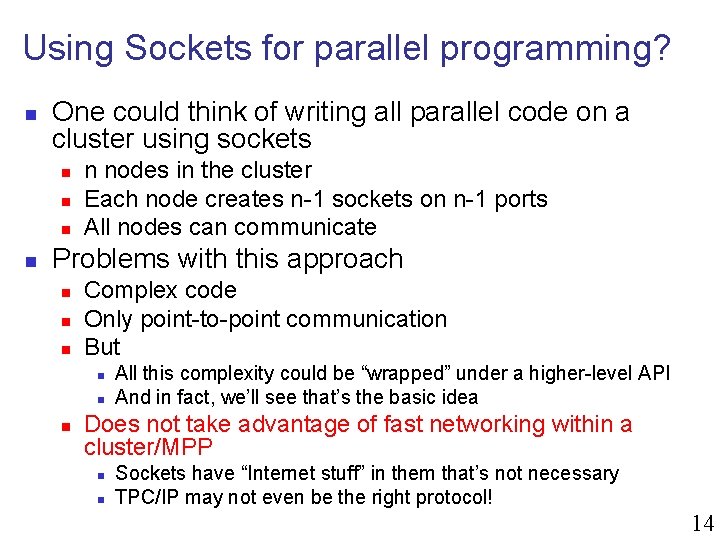

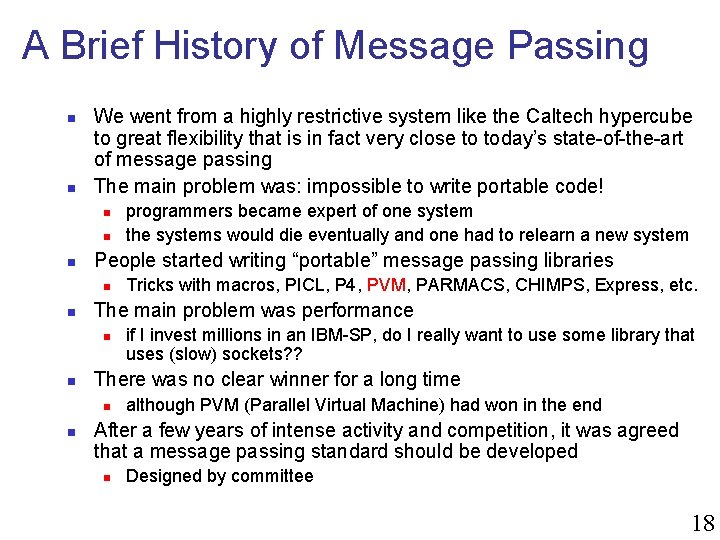

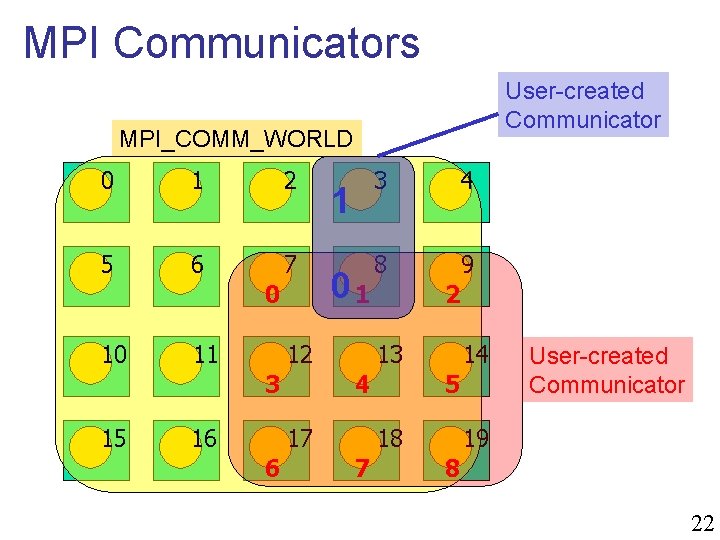

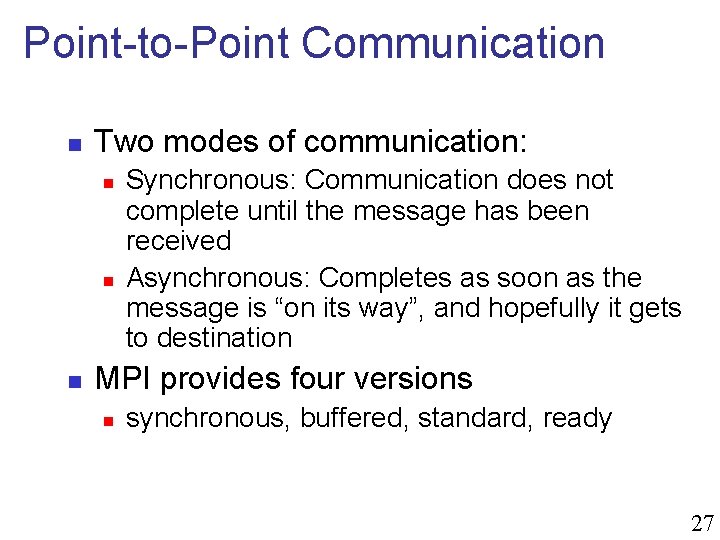

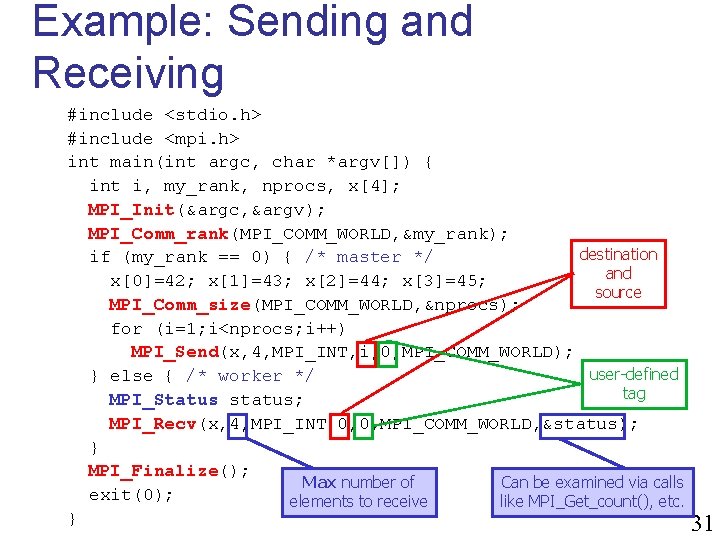

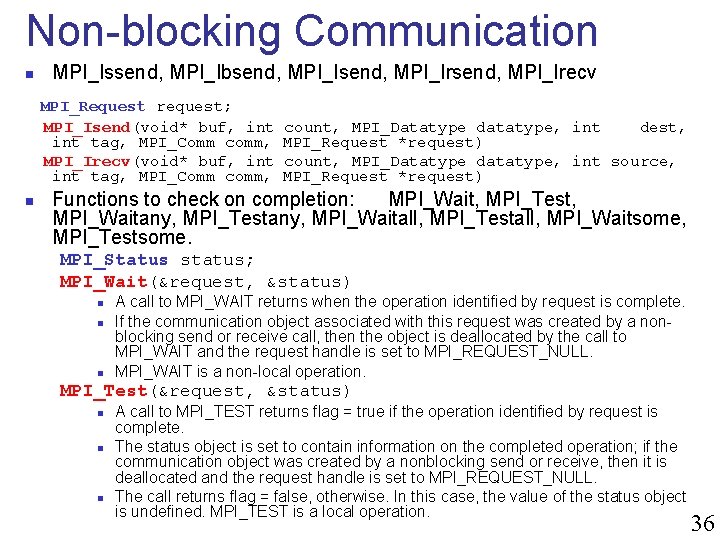

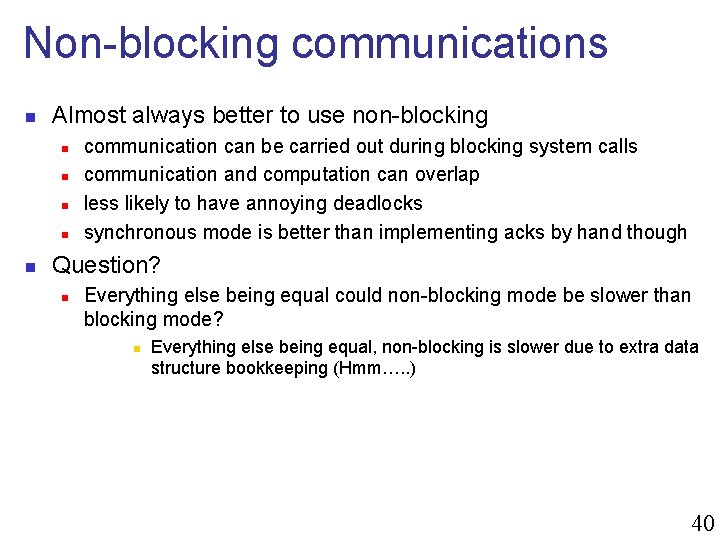

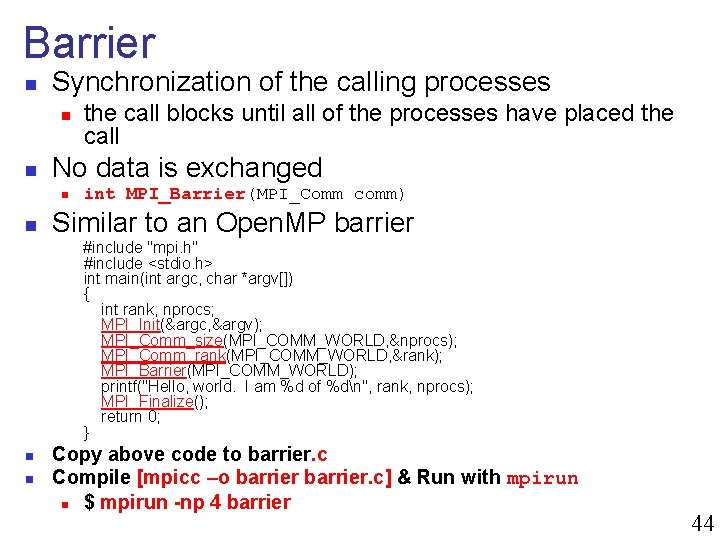

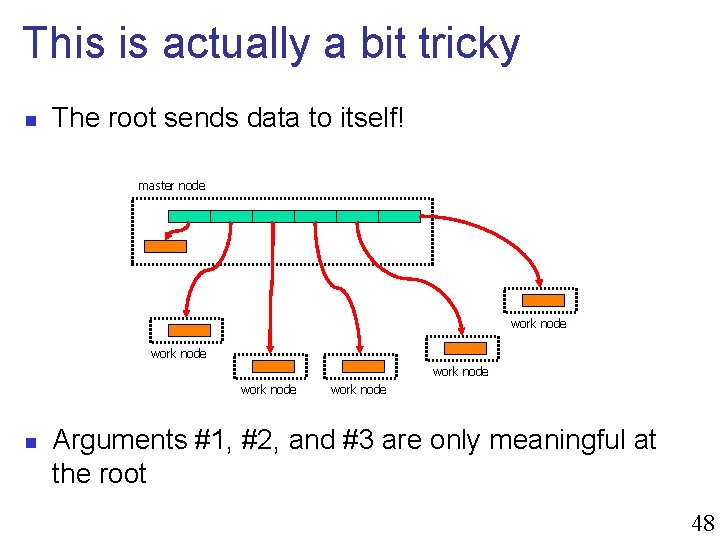

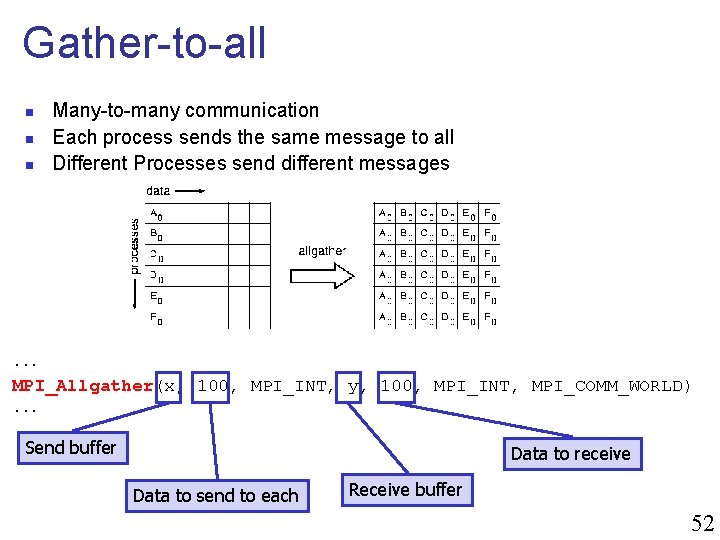

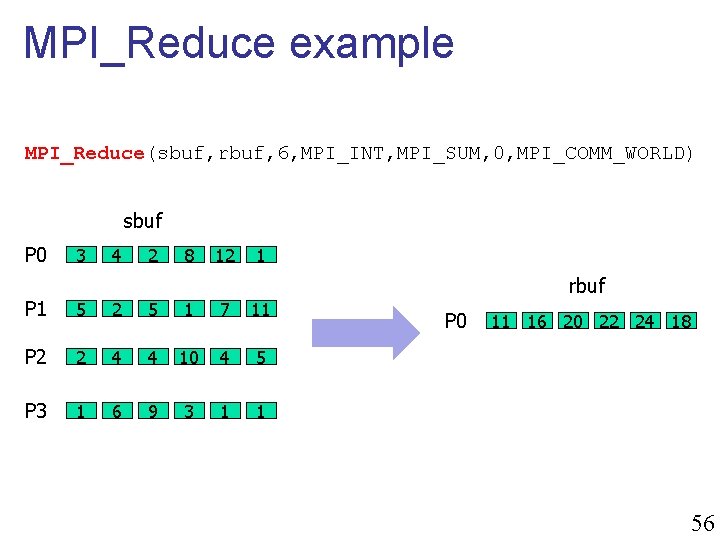

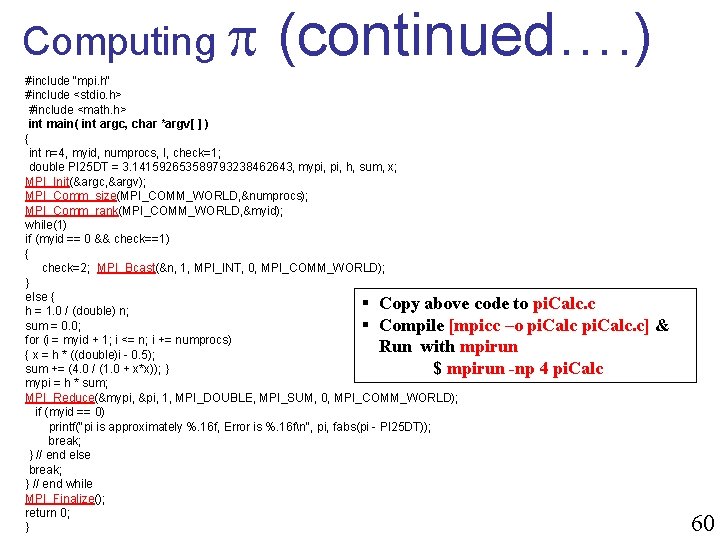

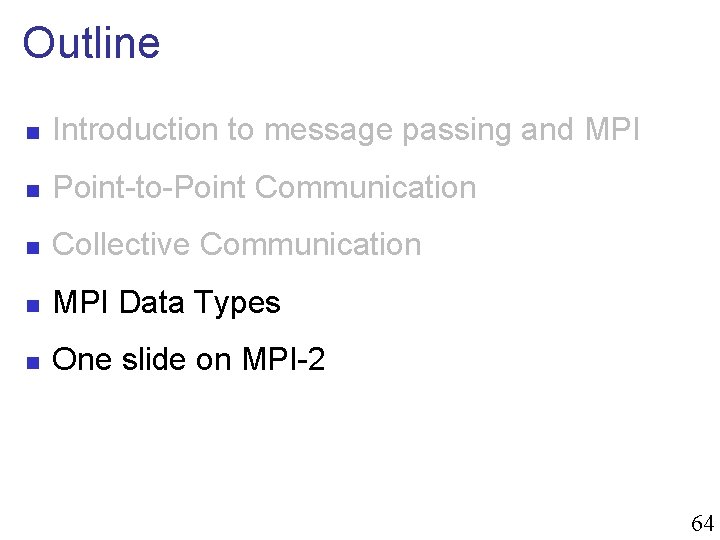

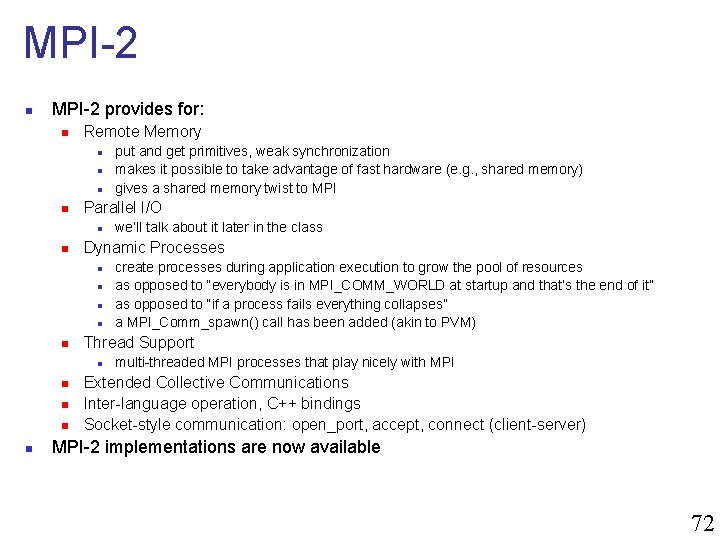

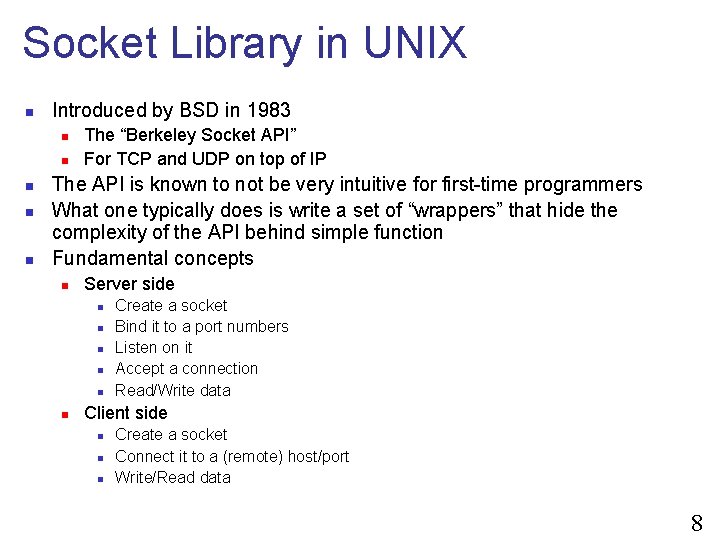

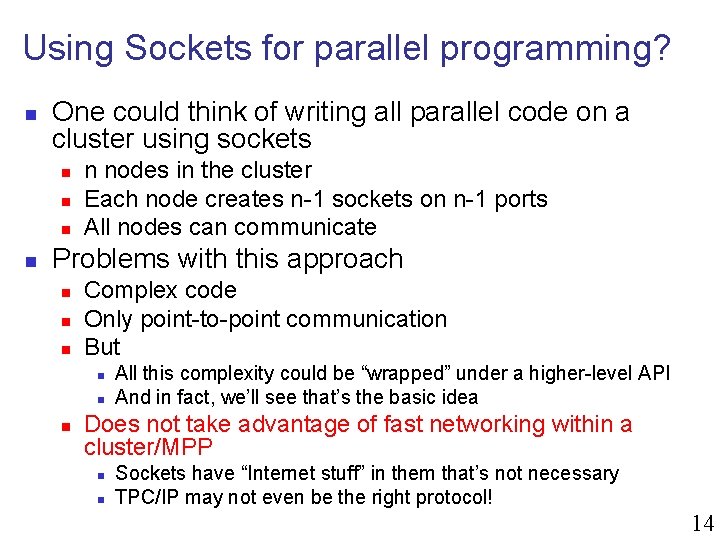

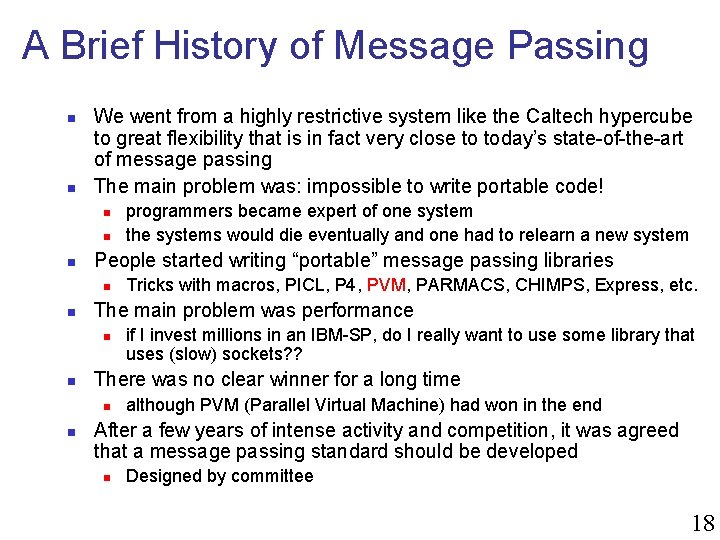

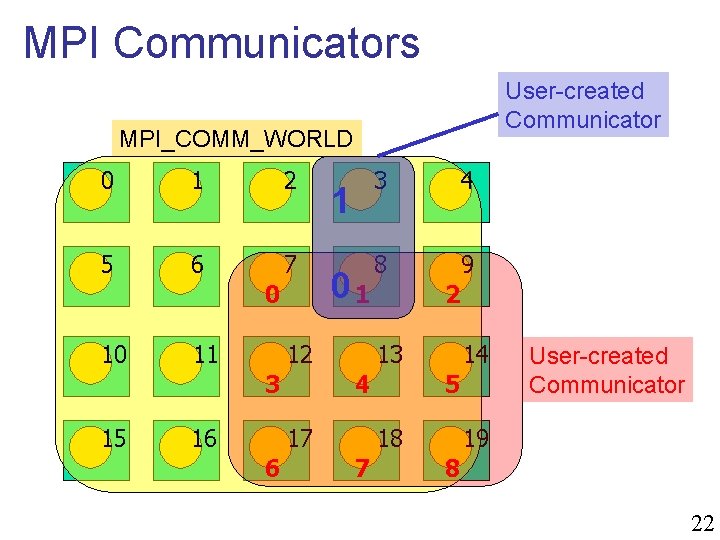

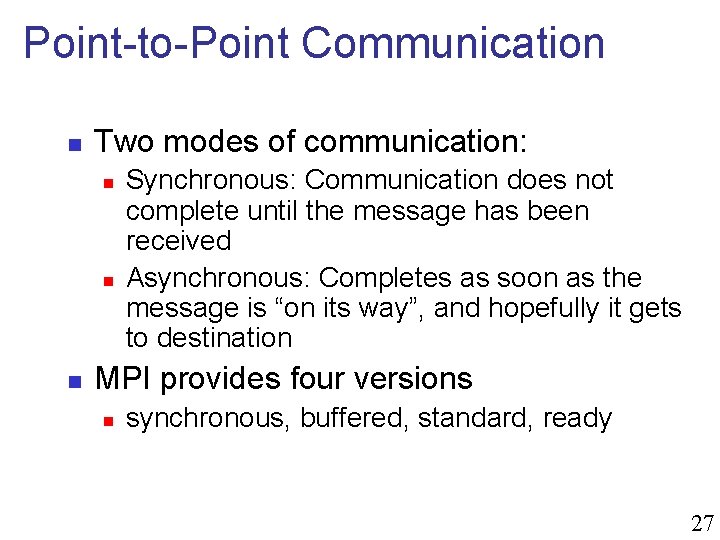

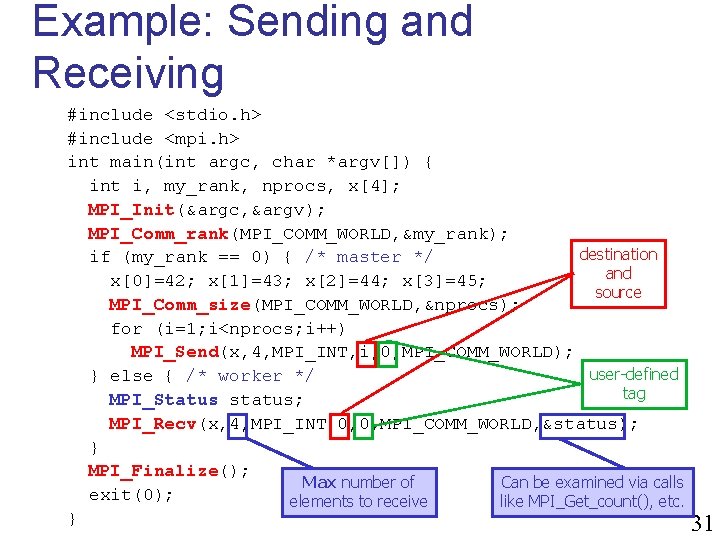

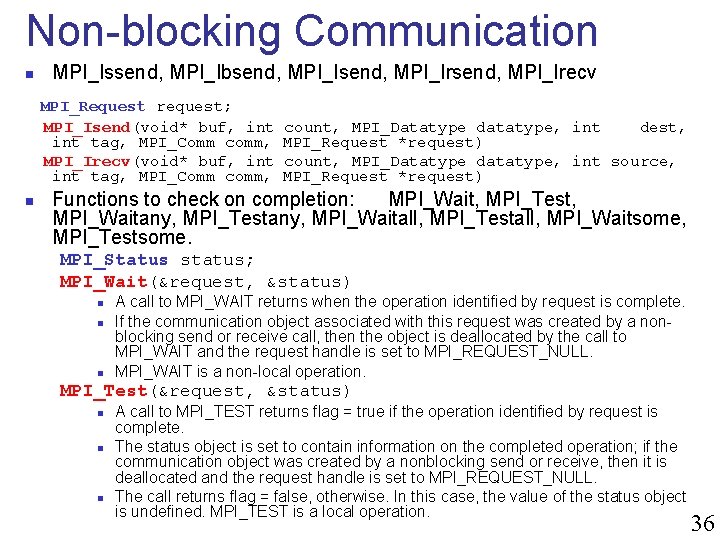

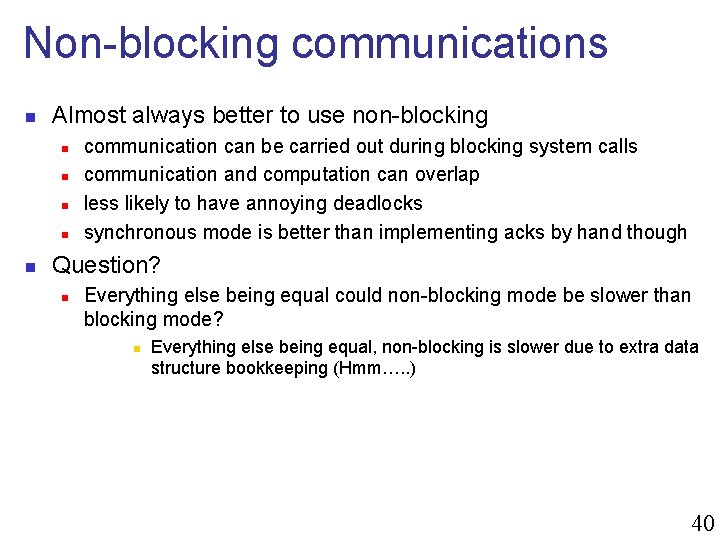

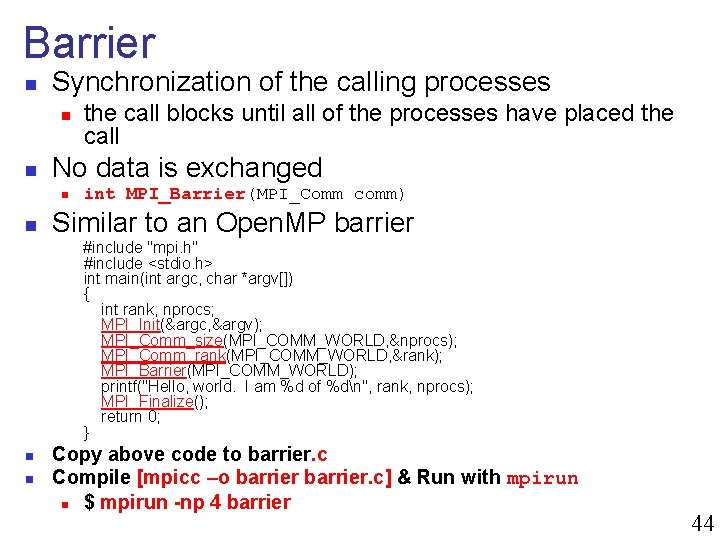

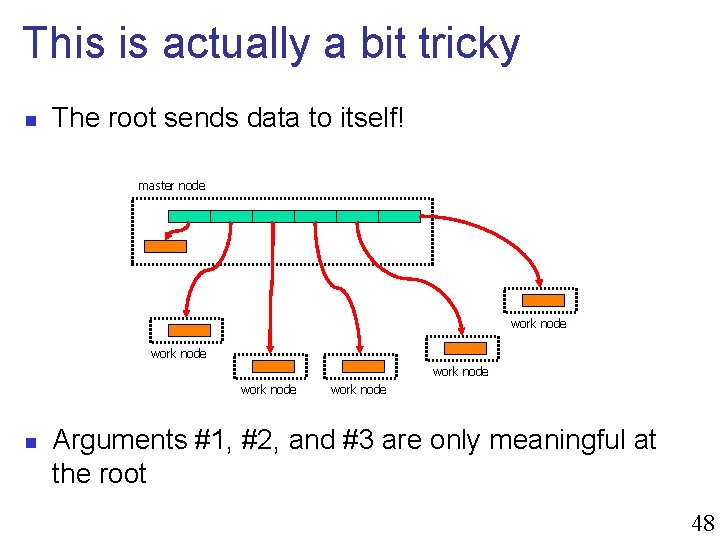

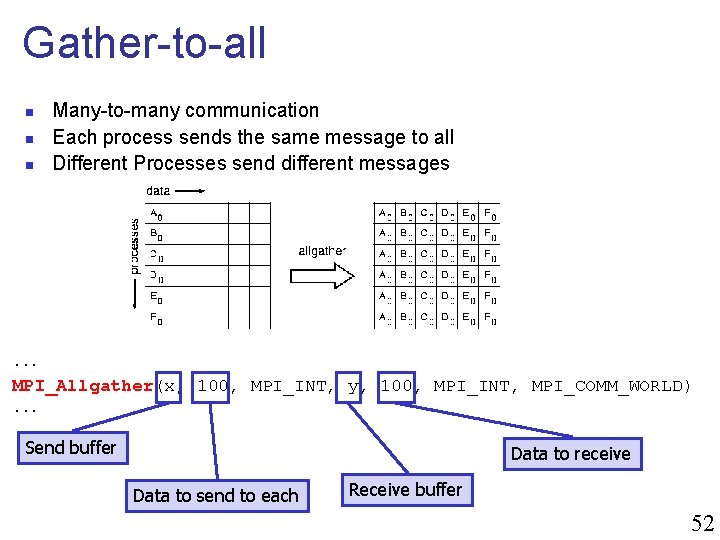

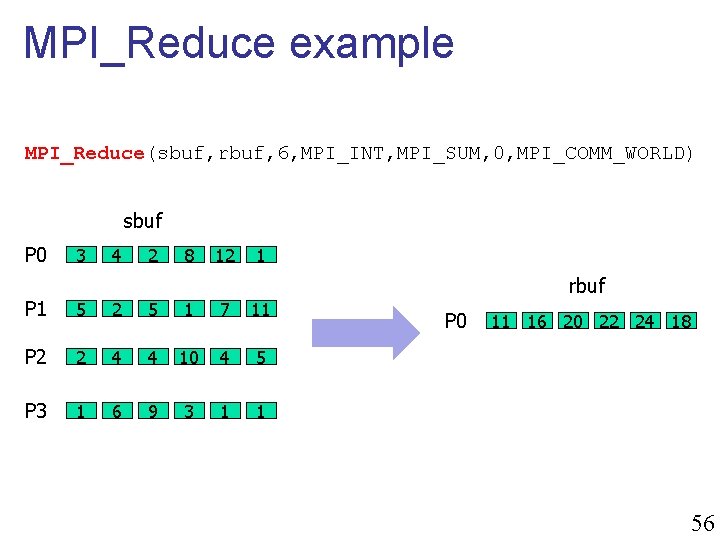

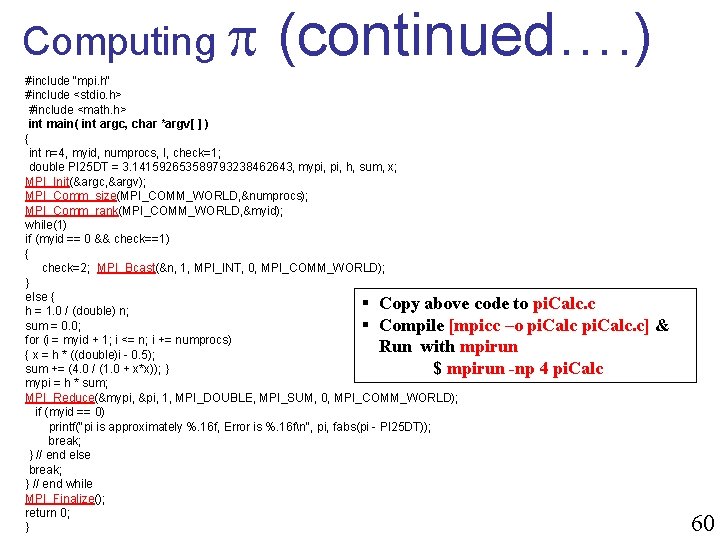

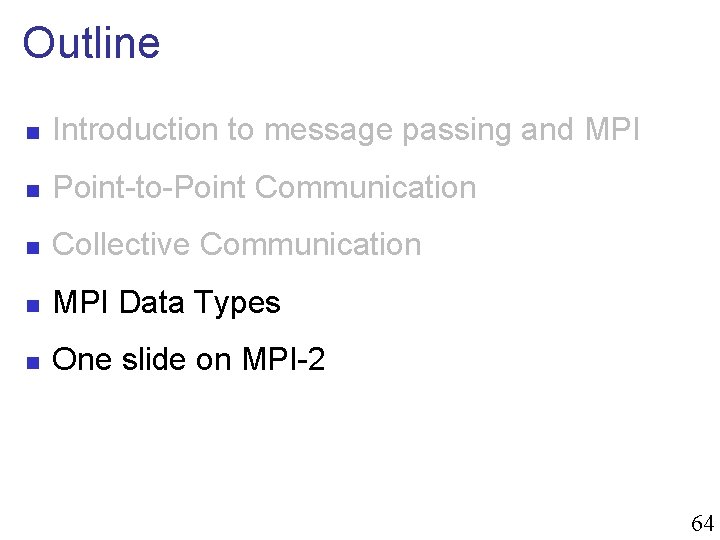

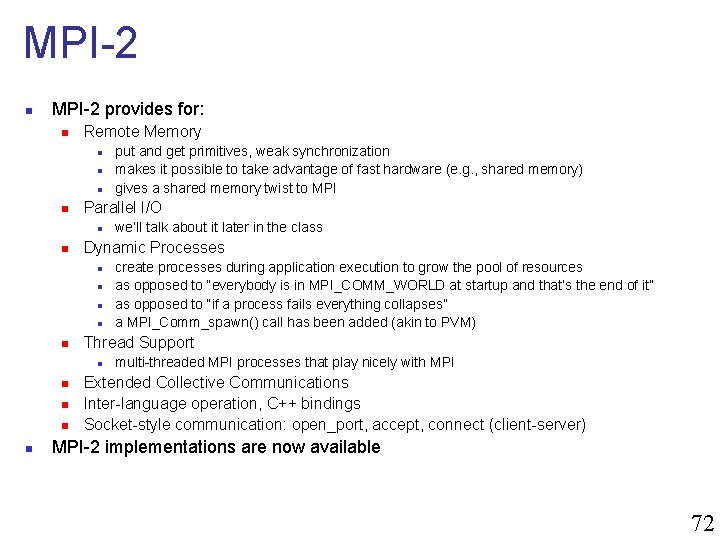

    #include "mpi.h"
    #include <stdio.h>

    int main(int argc, char *argv[]) {
      int i, rank, n, x[4];

      MPI_Init(&argc, &argv);
      MPI_Comm_rank(MPI_COMM_WORLD, &rank);
      if (rank == 0) {
        x[0]=42; x[1]=43; x[2]=44; x[3]=45;
        MPI_Comm_size(MPI_COMM_WORLD, &n);
        MPI_Status status;
        for (i=1; i<n; i++) {
          /* Deadlock situation: change this to MPI_Send for no deadlock */
          MPI_Ssend(x, 4, MPI_INT, i, 0, MPI_COMM_WORLD);
          printf("Master sent to %d\n", i);
        }
        MPI_Recv(x, 4, MPI_INT, 2, 0, MPI_COMM_WORLD, &status);
        printf("Master received from 2\n");
      } else {
        MPI_Status status;
        /* Deadlock situation: change this to MPI_Send for no deadlock */
        MPI_Ssend(x, 4, MPI_INT, 0, 0, MPI_COMM_WORLD);
        MPI_Recv(x, 4, MPI_INT, 0, 0, MPI_COMM_WORLD, &status);
        printf("Worker %d received from Master\n", rank);
      }
      MPI_Finalize();
      return 0;
    }

- Copy above code to sendRecv.c
- Compile [mpicc -o sendRecv sendRecv.c] & Run with mpirun
  - $ mpirun -np 3 sendRecv

What about MPI_Send?

- MPI_Send is either synchronous or buffered...
- On GCB, using standard MPI_Send()

    ...             ...
    MPI_Send()      MPI_Send()
    MPI_Recv()      MPI_Recv()
    ...             ...

  - Data size > 65540 bytes: Deadlock
  - Data size < 65540 bytes: No Deadlock
- Rationale: a correct MPI program should not rely on buffering for semantics, just for performance.
- So how do we do this then? ...

Non-blocking communications

- So far we've seen blocking communication:
  - The call returns whenever its operation is complete (MPI_Ssend returns once the message has been received, MPI_Bsend returns once the message has been buffered, etc.)
- MPI provides non-blocking communication: the call returns immediately and there is another call that can be used to check on completion.
- Rationale: Non-blocking calls let the sender/receiver do something useful while waiting for completion of the operation (without playing with threads, etc.).

Non-blocking Communication

- MPI_Isend, MPI_Issend, MPI_Ibsend, MPI_Irsend, MPI_Irecv

    MPI_Request request;
    MPI_Isend(void* buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm, MPI_Request *request)
    MPI_Irecv(void* buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Request *request)

- Functions to check on completion: MPI_Wait, MPI_Test, MPI_Waitany, MPI_Testany, MPI_Waitall, MPI_Testall, MPI_Waitsome, MPI_Testsome.

    MPI_Status status;
    MPI_Wait(&request, &status)

  - A call to MPI_WAIT returns when the operation identified by request is complete.
  - If the communication object associated with this request was created by a nonblocking send or receive call, then the object is deallocated by the call to MPI_WAIT and the request handle is set to MPI_REQUEST_NULL.
  - MPI_WAIT is a non-local operation.

    int flag;
    MPI_Test(&request, &flag, &status)

  - A call to MPI_TEST returns flag = true if the operation identified by request is complete.
  - The status object is set to contain information on the completed operation; if the communication object was created by a nonblocking send or receive, then it is deallocated and the request handle is set to MPI_REQUEST_NULL.
  - The call returns flag = false, otherwise. In this case, the value of the status object is undefined.
  - MPI_TEST is a local operation.
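
The difference between waiting and testing is easiest to see in a polling loop. The sketch below is an illustration that is not taken from the slides; it assumes two processes, and the counter `polls` is only a stand-in for useful work done between tests.

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char *argv[]) {
  int rank, value = 0;

  MPI_Init(&argc, &argv);
  MPI_Comm_rank(MPI_COMM_WORLD, &rank);

  if (rank == 0) {
    value = 42;
    MPI_Send(&value, 1, MPI_INT, 1, 0, MPI_COMM_WORLD);
  } else if (rank == 1) {
    int flag = 0;
    long polls = 0;
    MPI_Request request;
    MPI_Status status;

    /* Returns immediately: the receive is only initiated here. */
    MPI_Irecv(&value, 1, MPI_INT, 0, 0, MPI_COMM_WORLD, &request);

    /* MPI_Test never blocks: it sets flag to true once the receive is done. */
    while (!flag) {
      MPI_Test(&request, &flag, &status);
      if (!flag) polls++;   /* stand-in for useful work between tests */
    }
    printf("rank 1 received %d after %ld unsuccessful polls\n", value, polls);
  }

  MPI_Finalize();
  return 0;
}
```

`value` must not be read on rank 1 before the test succeeds, since the receive buffer belongs to MPI until the operation completes.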

Example: Non-blocking comm

    #include <stdio.h>
    #include <stdlib.h>
    #include <mpi.h>

    int main(int argc, char *argv[]) {
      int rank, x, y;
      MPI_Status status;
      MPI_Request request;

      MPI_Init(&argc, &argv);
      MPI_Comm_rank(MPI_COMM_WORLD, &rank);
      if (rank == 0) { /* P0 */
        x=42;
        MPI_Isend(&x, 1, MPI_INT, 1, 0, MPI_COMM_WORLD, &request);  /* No Deadlock */
        MPI_Recv(&y, 1, MPI_INT, 1, 0, MPI_COMM_WORLD, &status);
        printf("y received at node %d is %d\n", rank, y);
        MPI_Wait(&request, &status);
      } else if (rank == 1) { /* P1 */
        y=41;
        MPI_Isend(&y, 1, MPI_INT, 0, 0, MPI_COMM_WORLD, &request);  /* No Deadlock */
        MPI_Recv(&x, 1, MPI_INT, 0, 0, MPI_COMM_WORLD, &status);
        printf("x received at node %d is %d\n", rank, x);
        MPI_Wait(&request, &status);
      }
      MPI_Finalize();
      exit(0);
    }

- Copy above code to nonBlock.c
- Compile [mpicc -o nonBlock nonBlock.c] & Run with mpirun
  - $ mpirun -np 2 nonBlock

Use of non-blocking comms

- In the previous example, why not just swap one pair of send and receive?
- Example:
  - A logical linear array of N processors, needing to exchange data with their neighbor at each iteration of an application
  - One would need to orchestrate the communications:
    - all odd-numbered processors send first
    - all even-numbered processors receive first
  - Sort of cumbersome and can lead to complicated patterns for more complex examples
  - In this case: just use MPI_Isend and write much simpler code
- Furthermore, using MPI_Isend makes it possible to overlap useful work with communication delays:

    MPI_Isend()
    <useful work>
    MPI_Wait()

Iterative Application Example

    for (iterations)
      update all cells
      send boundary values
      receive boundary values

- Would deadlock with MPI_Ssend, and maybe deadlock with MPI_Send, so must be implemented with MPI_Isend
- Better version that uses non-blocking communication to achieve communication/computation overlap (aka latency hiding):

    for (iterations)
      initiate sending of boundary values to neighbours;
      initiate receipt of boundary values from neighbours;
      update non-boundary cells;
      wait for completion of sending of boundary values;
      wait for completion of receipt of boundary values;
      update boundary cells;

- Saves cost of boundary value communication if hardware/software can overlap comm and comp
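
The overlapped version of the loop can be sketched in C as follows. This is an illustration under stated assumptions, not the course's reference code: the processes form a linear array, each owns `LOCAL_N` cells of a 1-D array, and the update rule (a three-point average) is made up for the example.

```c
#include <mpi.h>
#include <stdio.h>

#define LOCAL_N 8       /* assumed number of cells owned by each process */
#define ITERATIONS 10   /* assumed number of iterations */

int main(int argc, char *argv[]) {
  int rank, nprocs, it, i, left, right;
  /* cur[0] and cur[LOCAL_N + 1] are ghost cells for the neighbours' values. */
  double cur[LOCAL_N + 2], next[LOCAL_N + 2], send_left, send_right;
  MPI_Request reqs[4];
  MPI_Status stats[4];

  MPI_Init(&argc, &argv);
  MPI_Comm_rank(MPI_COMM_WORLD, &rank);
  MPI_Comm_size(MPI_COMM_WORLD, &nprocs);

  /* The two ends of the array have no neighbour: with MPI_PROC_NULL the
   * corresponding sends and receives complete at once and move no data. */
  left  = (rank > 0) ? rank - 1 : MPI_PROC_NULL;
  right = (rank < nprocs - 1) ? rank + 1 : MPI_PROC_NULL;

  for (i = 0; i < LOCAL_N + 2; i++) cur[i] = next[i] = (double) rank;

  for (it = 0; it < ITERATIONS; it++) {
    /* Copies, so that the buffers being sent are not touched during the overlap. */
    send_left = cur[1];
    send_right = cur[LOCAL_N];

    /* Initiate sending and receipt of boundary values. */
    MPI_Isend(&send_left, 1, MPI_DOUBLE, left, 0, MPI_COMM_WORLD, &reqs[0]);
    MPI_Isend(&send_right, 1, MPI_DOUBLE, right, 0, MPI_COMM_WORLD, &reqs[1]);
    MPI_Irecv(&cur[0], 1, MPI_DOUBLE, left, 0, MPI_COMM_WORLD, &reqs[2]);
    MPI_Irecv(&cur[LOCAL_N + 1], 1, MPI_DOUBLE, right, 0, MPI_COMM_WORLD, &reqs[3]);

    /* Update non-boundary cells: none of them reads a ghost cell. */
    for (i = 2; i <= LOCAL_N - 1; i++)
      next[i] = (cur[i - 1] + cur[i] + cur[i + 1]) / 3.0;

    /* Wait for completion of all four operations. */
    MPI_Waitall(4, reqs, stats);

    /* Update boundary cells, now that the ghost cells are filled. */
    next[1] = (cur[0] + cur[1] + cur[2]) / 3.0;
    next[LOCAL_N] = (cur[LOCAL_N - 1] + cur[LOCAL_N] + cur[LOCAL_N + 1]) / 3.0;

    for (i = 1; i <= LOCAL_N; i++) cur[i] = next[i];
  }

  printf("rank %d: first cell %f, last cell %f\n", rank, cur[1], cur[LOCAL_N]);
  MPI_Finalize();
  return 0;
}
```

At the two ends of the array the ghost cells keep their initial value, which acts as a fixed boundary condition in this sketch. How much time the overlap actually saves depends on whether the MPI implementation and the network progress the transfers while the interior loop runs.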

Non-blocking communications

- Almost always better to use non-blocking
  - communication can be carried out during blocking system calls
  - communication and computation can overlap
  - less likely to have annoying deadlocks
  - synchronous mode is better than implementing acks by hand though
- Question?
  - Everything else being equal could non-blocking mode be slower than blocking mode?
    - Everything else being equal, non-blocking is slower due to extra data structure bookkeeping (Hmm.....)

More information

- There are many more functions that allow fine control of point-to-point communication
- Message ordering is guaranteed
- Detailed API descriptions at the MPI site at ANL:
  - Google "MPI". First link.
  - Note that you should check error codes, etc.
- Everything you want to know about deadlocks in MPI communication
  - http://andrew.ait.iastate.edu/HPC/Papers/mpicheck2.htm

Collective Communication

- Operations that allow more than 2 processes to communicate simultaneously
  - barrier
  - broadcast
  - reduce
- All these can be built using point-to-point communications, but typical MPI implementations have optimized them, and it's a good idea to use them
- In all of these, all processes place the same call (in good SPMD fashion), although depending on the process, some arguments may not be used

Barrier

- Synchronization of the calling processes
  - the call blocks until all of the processes have placed the call
- No data is exchanged
- int MPI_Barrier(MPI_Comm comm)
- Similar to an OpenMP barrier

    #include "mpi.h"
    #include <stdio.h>

    int main(int argc, char *argv[]) {
      int rank, nprocs;

      MPI_Init(&argc, &argv);
      MPI_Comm_size(MPI_COMM_WORLD, &nprocs);
      MPI_Comm_rank(MPI_COMM_WORLD, &rank);
      MPI_Barrier(MPI_COMM_WORLD);
      printf("Hello, world. I am %d of %d\n", rank, nprocs);
      MPI_Finalize();
      return 0;
    }

- Copy above code to barrier.c
- Compile [mpicc -o barrier barrier.c] & Run with mpirun
  - $ mpirun -np 4 barrier

Broadcast

- One-to-many communication
- int MPI_Bcast(void* buffer, int count, MPI_Datatype datatype, int root, MPI_Comm comm)
- Note that multicast can be implemented via the use of communicators (i.e., to create processor groups)

    MPI_Bcast(x, 1, MPI_INT, 0, MPI_COMM_WORLD)    /* 4th argument (0): rank of the root */

Broadcast example

- Let's say the master must send the user input to all workers

    #include <stdio.h>
    #include <stdlib.h>
    #include "mpi.h"

    int main(int argc, char *argv[]) {
      int my_rank;
      int input;

      MPI_Init(&argc, &argv);
      MPI_Comm_rank(MPI_COMM_WORLD, &my_rank);
      if (argc != 2) exit(1);
      if (sscanf(argv[1], "%d", &input) != 1) exit(1);
      if (my_rank == 0) { /* master */
        MPI_Bcast(&input, 1, MPI_INT, 0, MPI_COMM_WORLD);
        printf("Master Broadcasts %d\n", input);
      } else { /* worker */
        MPI_Bcast(&input, 1, MPI_INT, 0, MPI_COMM_WORLD);
        printf("Worker %d received %d\n", my_rank, input);
      }
      MPI_Finalize();
      return 0;
    }

Scatter

- One-to-many communication
- Not sending the same message to all

    ... MPI_Scatter(x, 100, MPI_INT, y, 100, MPI_INT, 0, MPI_COMM_WORLD) ...

- x: Send buffer
- 100, MPI_INT (first pair): Data to send to each
- y: Receive buffer
- 100, MPI_INT (second pair): Data to receive
- 0: Rank of the root

This is actually a bit tricky

- The root sends data to itself!

[Figure: the master node scattering data to the work nodes and to itself]

- Arguments #1, #2, and #3 are only meaningful at the root

Scatter Example

- Partitioning an array of input among workers

    #include "mpi.h"
    #include <stdio.h>

    int main(int argc, char *argv[]) {
      int *a = NULL, rank, N=4, n, i;
      int b[4]={1, 2, 3, 4};
      int c[4];                 /* large enough for the N/n elements received */
      int *recvbuffer = c;      /* receive buffer, needed on every process */

      MPI_Init(&argc, &argv);
      MPI_Comm_rank(MPI_COMM_WORLD, &rank);
      MPI_Comm_size(MPI_COMM_WORLD, &n);
      if (rank == 0) { /* master */
        a=b;                    /* send buffer of size N, only needed at the root */
        MPI_Scatter(a, N/n, MPI_INT, recvbuffer, N/n, MPI_INT, 0, MPI_COMM_WORLD);
        for (i=0; i<N/n; i++)
          printf("Node %d received %d\n", rank, recvbuffer[i]);
      } else { /* worker */
        MPI_Scatter(NULL, 0, MPI_INT, recvbuffer, N/n, MPI_INT, 0, MPI_COMM_WORLD);
        for (i=0; i<N/n; i++)
          printf("Node %d received %d\n", rank, recvbuffer[i]);
      }
      MPI_Finalize();
      return 0;
    }

- Copy above code to scatter.c
- Compile [mpicc -o scatter scatter.c] & Run with mpirun
  - $ mpirun -np 4 scatter

Scatter Example

- Without redundant sending at the root (supported in MPI-2)

    #include "mpi.h"
    #include <stdio.h>

    int main(int argc, char *argv[]) {
      int *a = NULL, rank;
      int N=4;
      int n;
      int b[4]={1, 2, 3, 4};
      int c[4];                 /* large enough for the N/n elements received */
      int *recvbuffer = c;      /* receive buffer, used by the workers only */

      MPI_Init(&argc, &argv);
      MPI_Comm_rank(MPI_COMM_WORLD, &rank);
      MPI_Comm_size(MPI_COMM_WORLD, &n);
      if (rank == 0) { /* master */
        a=b;                    /* send buffer of size N; the root's own part stays in place */
        MPI_Scatter(a, N/n, MPI_INT, MPI_IN_PLACE, N/n, MPI_INT, 0, MPI_COMM_WORLD);
      } else { /* worker */
        MPI_Scatter(NULL, 0, MPI_INT, recvbuffer, N/n, MPI_INT, 0, MPI_COMM_WORLD);
      }
      MPI_Finalize();
      return 0;
    }

Gather

- Many-to-one communication
- Processes do not all send the same message to the root

      ... MPI_Gather(x, 100, MPI_INT, y, 100, MPI_INT, 0, MPI_COMM_WORLD) ...

- x: send buffer
- 100, MPI_INT (first pair): data to send from each
- y: receive buffer
- 100, MPI_INT (second pair): data to receive (from each process)
- 0: rank of the root

Gather-to-all

- Many-to-many communication
- Each process sends the same message to all
- Different processes send different messages

      ... MPI_Allgather(x, 100, MPI_INT, y, 100, MPI_INT, MPI_COMM_WORLD) ...

- x: send buffer
- 100, MPI_INT (first pair): data to send to each
- y: receive buffer
- 100, MPI_INT (second pair): data to receive (from each process)

All-to-all

- Many-to-many communication
- Each process sends a different message to each other process

      ... MPI_Alltoall(x, 100, MPI_INT, y, 100, MPI_INT, MPI_COMM_WORLD) ...

- x: send buffer
- 100, MPI_INT (first pair): data to send to each
- y: receive buffer
- 100, MPI_INT (second pair): data to receive (from each process)
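
The data movement of an all-to-all can be pictured as a block transpose. The sketch below is plain C with no MPI calls: it models the p processes as rows of one array, and `alltoall_model` is a hypothetical helper name chosen for illustration, not part of MPI.

```c
#include <assert.h>

/* Hypothetical model of MPI_Alltoall's data movement (not an MPI call).
 * send and recv each hold p rows, one per process, of p*count ints.
 * Block j of process i's send row lands in block i of process j's recv row. */
void alltoall_model(const int *send, int *recv, int p, int count)
{
    assert(p > 0 && count > 0);
    for (int i = 0; i < p; i++)              /* sending process */
        for (int j = 0; j < p; j++)          /* receiving process */
            for (int k = 0; k < count; k++)  /* element within the block */
                recv[(j * p + i) * count + k] = send[(i * p + j) * count + k];
}
```

With p = 3 and count = 1, the send rows {0, 1, 2}, {10, 11, 12}, {20, 21, 22} become the receive rows {0, 10, 20}, {1, 11, 21}, {2, 12, 22}.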

Reduction Operations

- Used to compute a result from data that is distributed among processors
  - often what a user wants to do anyway
    - e.g., compute the sum of a distributed array
  - so why not provide the functionality as a single API call rather than having people keep re-implementing the same things
- Predefined operations:
  - MPI_MAX, MPI_MIN, MPI_SUM, etc.
- Possibility to have user-defined operations

MPI_Reduce, MPI_Allreduce

- MPI_Reduce: result is sent out to the root
  - the operation is applied element-wise for each element of the input arrays on each processor
  - an output array is returned
- MPI_Allreduce: result is sent out to everyone

      ... MPI_Reduce(x, r, 10, MPI_INT, MPI_MAX, 0, MPI_COMM_WORLD) ...

- x: input array
- r: output array
- 10: array size
- MPI_MAX: op
- 0: root

      ... MPI_Allreduce(x, r, 10, MPI_INT, MPI_MAX, MPI_COMM_WORLD) ...

MPI_Reduce example

      MPI_Reduce(sbuf, rbuf, 6, MPI_INT, MPI_SUM, 0, MPI_COMM_WORLD)

      sbuf
      P0:   3   4   2   8  12   1
      P1:   5   2   5   1   7  11
      P2:   2   4   4  10   4   5
      P3:   1   6   9   3   1   1

      rbuf (at the root)
      P0:  11  16  20  22  24  18

MPI_Scan: Prefix reduction

- Process i receives the data reduced over processes 0 to i.

      MPI_Scan(sbuf, rbuf, 6, MPI_INT, MPI_SUM, MPI_COMM_WORLD)

      sbuf
      P0:   3   4   2   8  12   1
      P1:   5   2   5   1   7  11
      P2:   2   4   4  10   4   5
      P3:   1   6   9   3   1   1

      rbuf
      P0:   3   4   2   8  12   1
      P1:   8   6   7   9  19  12
      P2:  10  10  11  19  23  17
      P3:  11  16  20  22  24  18
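
The prefix reduction can be checked by hand with a few lines of plain C. This is only a model of the arithmetic, with no MPI calls: the p processes are rows of one array, and `scan_sum_model` is a hypothetical name used for illustration.

```c
#include <assert.h>

/* Hypothetical model of MPI_Scan with MPI_SUM (not an MPI call).
 * sbuf and rbuf hold p rows, one per process, of count ints each.
 * Row i of rbuf is the element-wise sum of rows 0..i of sbuf. */
void scan_sum_model(const int *sbuf, int *rbuf, int p, int count)
{
    assert(p > 0 && count > 0);
    for (int i = 0; i < p; i++)
        for (int k = 0; k < count; k++) {
            int previous = (i > 0) ? rbuf[(i - 1) * count + k] : 0;
            rbuf[i * count + k] = previous + sbuf[i * count + k];
        }
}
```

The last row is the full sum over all processes, which is what MPI_Reduce with MPI_SUM delivers to the root for the same send buffers.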

And more...

- Most of these collective operations come with a "v" version that takes per-process counts and displacements (so that blocks do not need to be contiguous)
  - MPI_Gatherv(), MPI_Scatterv(), MPI_Allgatherv(), MPI_Alltoallv()
- MPI_Reduce_scatter(): functionality equivalent to a reduce followed by a scatter
- All of the above were created because they are common in scientific applications and save code
- All details on the MPI Webpage

Example: computing π

Computing π (continued)

      #include <mpi.h>
      #include <stdio.h>
      #include <math.h>

      int main(int argc, char *argv[]) {
          int n = 4, myid, numprocs, i;
          double PI25DT = 3.141592653589793238462643;
          double mypi, pi, h, sum, x;

          MPI_Init(&argc, &argv);
          MPI_Comm_size(MPI_COMM_WORLD, &numprocs);
          MPI_Comm_rank(MPI_COMM_WORLD, &myid);
          /* every process calls MPI_Bcast; the root (rank 0) supplies n */
          MPI_Bcast(&n, 1, MPI_INT, 0, MPI_COMM_WORLD);
          h = 1.0 / (double) n;
          sum = 0.0;
          for (i = myid + 1; i <= n; i += numprocs) {
              x = h * ((double)i - 0.5);
              sum += (4.0 / (1.0 + x*x));
          }
          mypi = h * sum;
          MPI_Reduce(&mypi, &pi, 1, MPI_DOUBLE, MPI_SUM, 0, MPI_COMM_WORLD);
          if (myid == 0)
              printf("pi is approximately %.16f, Error is %.16f\n", pi, fabs(pi - PI25DT));
          MPI_Finalize();
          return 0;
      }

- Copy the above code to piCalc.c
- Compile [mpicc -o piCalc piCalc.c -lm]
- Run with mpirun
  - $ mpirun -np 4 piCalc
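
The loop above approximates the integral of 4/(1+x^2) over [0,1] with the midpoint rule, with each rank taking every numprocs-th interval. The plain C sketch below replays that partitioning without MPI so the arithmetic can be checked on one machine; `partial_pi` and `simulated_pi` are hypothetical names, and the sum over ranks stands in for MPI_Reduce with MPI_SUM.

```c
#include <assert.h>

/* Partial result of one rank: intervals myid+1, myid+1+numprocs, ...
 * out of n, using the same midpoint-rule loop as the MPI program. */
double partial_pi(int n, int myid, int numprocs)
{
    assert(n > 0 && numprocs > 0 && myid >= 0 && myid < numprocs);
    double h = 1.0 / (double)n;
    double sum = 0.0;
    for (int i = myid + 1; i <= n; i += numprocs) {
        double x = h * ((double)i - 0.5);
        sum += 4.0 / (1.0 + x * x);
    }
    return h * sum;
}

/* Stand-in for the reduction: add up the partial results of all ranks. */
double simulated_pi(int n, int numprocs)
{
    double pi = 0.0;
    for (int rank = 0; rank < numprocs; rank++)
        pi += partial_pi(n, rank, numprocs);
    return pi;
}
```

The estimate depends on n, not on the number of processes: splitting the same n intervals over 1 or 4 ranks gives the same value up to floating-point rounding.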

Using MPI to increase memory

- One of the reasons to use MPI is to increase the available memory
  - I want to sort an array
  - The array is 10 GB
  - I can use 10 computers with 1 GB of memory each
- Question: how do I write the code?
  - I cannot declare

        #define SIZE (10ULL*1024*1024*1024)
        char array[SIZE]

Global vs. Local Indices

- Since each node gets only 1/10th of the array, each node declares an array of only 1/10th of the size
  - processor 0: char array[SIZE/10];
  - processor 1: char array[SIZE/10];
  - ...
  - processor p: char array[SIZE/10];
- When processor 0 references array[0] it means the first element of the global array
- When processor i references array[0] it means element (SIZE/10)*i of the global array

Global vs. Local Indices

- There is a mapping from/to local indices and global indices
- It can take some mental gymnastics
  - requires some potentially complex arithmetic expressions for indices
- One can actually write functions to do this
  - e.g. global2local()
  - When you would write "a[i] * b[k]" for the sequential version of the code, you should write "a[global2local(i)] * b[global2local(k)]"
  - This may become necessary when index computations become too complicated
- More on this when we see actual algorithms
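
A minimal sketch of such mapping functions, assuming a block distribution in which the global array is split into equal contiguous chunks and its size is divisible by the number of processes. Only the name global2local() comes from the slide; `owner`, `local2global`, and all parameter names are hypothetical.

```c
#include <assert.h>

/* Rank that holds global index g when `size` elements are split into
 * equal contiguous chunks over `nprocs` processes. */
int owner(long g, long size, int nprocs)
{
    assert(nprocs > 0 && size % nprocs == 0 && g >= 0 && g < size);
    return (int)(g / (size / nprocs));
}

/* Position of global index g inside its owner's local array. */
long global2local(long g, long size, int nprocs)
{
    assert(nprocs > 0 && size % nprocs == 0 && g >= 0 && g < size);
    return g % (size / nprocs);
}

/* Global index of local element l on process `rank`. */
long local2global(long l, int rank, long size, int nprocs)
{
    assert(nprocs > 0 && size % nprocs == 0);
    long chunk = size / nprocs;
    assert(rank >= 0 && rank < nprocs && l >= 0 && l < chunk);
    return (long)rank * chunk + l;
}
```

For 100 elements on 10 processes, global index 37 lives on process 3 at local index 7, and local index 0 on process i maps back to global index 10*i, matching the (SIZE/10)*i rule.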

Outline

- Introduction to message passing and MPI
- Point-to-Point Communication
- Collective Communication
- MPI Data Types
- One slide on MPI-2

More Advanced Messages

- Regularly strided data
  - Blocks/Elements of a matrix
- Data structure
  - struct { int a; double b; }
- A set of variables
  - int a; double b; int x[12];

Problems with current messages

- Packing strided data into temporary arrays wastes memory
- Placing individual MPI_Send calls for individual variables of possibly different types wastes time
- Both of the above would make the code bloated

=> Motivation for MPI's "derived data types"

Derived Data Types

- A data type is defined by a "type map"
  - set of <type, displacement> pairs
- Created at runtime in two phases
  - Construct the data type from existing types
  - Commit the data type before it can be used
- Simplest constructor: contiguous type
  - Returns a new data type that represents the concatenation of count instances of old type

        int MPI_Type_contiguous(int count, MPI_Datatype oldtype, MPI_Datatype *newtype)

MPI_Type_vector()

        int MPI_Type_vector(int count, int blocklength, int stride, MPI_Datatype oldtype, MPI_Datatype *newtype)

- count => no. of blocks
- blocklength => no. of elements in each block
- stride => no. of elements between the starts of consecutive blocks
- e.g., assume oldtype has type map { (double, 0), (char, 8) }, with extent 16
  - A call to MPI_TYPE_VECTOR(2, 3, 4, oldtype, newtype) will create the datatype with type map
    { (double, 0), (char, 8), (double, 16), (char, 24), (double, 32), (char, 40), (double, 64), (char, 72), (double, 80), (char, 88), (double, 96), (char, 104) }
- A call to MPI_TYPE_CONTIGUOUS(count, oldtype, newtype) is equivalent to a call to MPI_TYPE_VECTOR(count, 1, 1, oldtype, newtype)
- Lower bound, upper bound, and extent of a type map:
  - lb(Typemap) = min(disp0, disp1, ..., dispN)
  - ub(Typemap) = max(disp0 + sizeof(type0), disp1 + sizeof(type1), ..., dispN + sizeof(typeN))
  - extent(Typemap) = ub(Typemap) - lb(Typemap) + pad

[Figure: block length and stride]
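
The displacements in the type map above follow a simple rule: element e of block b starts at (b * stride + e) * extent bytes. The plain C sketch below computes these offsets without calling MPI; `vector_displacements` is a hypothetical helper, not an MPI function.

```c
#include <assert.h>

/* Hypothetical helper (not part of MPI): fills disp[] with the byte offset
 * of every oldtype instance selected by
 * MPI_Type_vector(count, blocklength, stride, oldtype, ...),
 * given the extent of oldtype in bytes. Returns count * blocklength. */
int vector_displacements(int count, int blocklength, int stride,
                         long extent, long *disp)
{
    assert(count >= 0 && blocklength >= 0 && extent > 0);
    int n = 0;
    for (int b = 0; b < count; b++)            /* block index */
        for (int e = 0; e < blocklength; e++)  /* element within the block */
            disp[n++] = ((long)b * stride + e) * extent;
    return n;
}
```

For count 2, blocklength 3, stride 4, and extent 16, the offsets are 0, 16, 32, 64, 80, 96, which are the displacements of the double entries in the example type map (each char follows 8 bytes later).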

MPI_Type_struct()

        int MPI_Type_struct(int count, int *array_of_blocklengths, MPI_Aint *array_of_displacements, MPI_Datatype *array_of_types, MPI_Datatype *newtype)

- count: number of blocks, which is also the number of entries in the arrays array_of_types, array_of_displacements and array_of_blocklengths
- array_of_blocklengths: number of elements in each block (array)
- array_of_displacements: byte displacement of each block (array)
- MPI_Type_struct and MPI_UB were deprecated in MPI-2 and removed in MPI-3; newer code uses MPI_Type_create_struct and MPI_Type_create_resized

      struct { int a; char b; } foo;
      blen[0] = 1; indices[0] = 0; oldtypes[0] = MPI_INT;
      blen[1] = 1; indices[1] = (char *)&foo.b - (char *)&foo; oldtypes[1] = MPI_CHAR;
      blen[2] = 1; indices[2] = sizeof(foo); oldtypes[2] = MPI_UB;
      MPI_Type_struct(3, blen, indices, oldtypes, &newtype);

Derived Data Types: Example

- Sending the 5th column of a 2-D matrix:

      double results[IMAX][JMAX];
      MPI_Datatype newtype;
      MPI_Type_vector(IMAX, 1, JMAX, MPI_DOUBLE, &newtype);
      MPI_Type_commit(&newtype);
      MPI_Send(&(results[0][4]), 1, newtype, dest, tag, comm);

[Figure: matrix layout, labeled IMAX, JMAX, and IMAX * JMAX]

MPI-2

- MPI-2 provides for:
  - Remote Memory
    - put and get primitives, weak synchronization
    - makes it possible to take advantage of fast hardware (e.g., shared memory)
    - gives a shared memory twist to MPI
  - Parallel I/O
    - we'll talk about it later in the class
  - Dynamic Processes
    - create processes during application execution to grow the pool of resources
    - as opposed to "everybody is in MPI_COMM_WORLD at startup and that's the end of it"
    - as opposed to "if a process fails everything collapses"
    - an MPI_Comm_spawn() call has been added (akin to PVM)
  - Thread Support
    - multi-threaded MPI processes that play nicely with MPI
  - Extended Collective Communications
  - Inter-language operation, C++ bindings
  - Socket-style communication: open_port, accept, connect (client-server)
- MPI-2 implementations are now available