High-Performance Computing at the Martinos Center, Iman Aganj

- Slides: 20

High-Performance Computing at the Martinos Center: Why & How
Iman Aganj
January 23, 2020

High-Performance Computing (HPC)
• What?
  – The use of advanced (usually shared) computing resources to solve large computational problems quickly and efficiently.
• Why?
  – Processing of large datasets in parallel.
  – Access to remote resources that aren’t locally available, e.g.:
    • Large amount of memory
    • Graphics Processing Units (GPUs)
• How?
  – Remote job submission.

What HPC resources are available to us?
• Martinos Center Compute Cluster:
  – Launchpad
  – Icepuffs
• Partners Research Computing:
  – ERISOne Linux Cluster
  – Windows Analysis Servers
• Harvard Medical School Research Computing:
  – O2
• External:
  – Open Science Grid
  – Mass Open Cloud

Martinos Center Compute Cluster: Launchpad
• Resources:
  – 96 nodes, each with:
    • 8 cores (total of 768 cores)
    • 56 GB of memory
  – Job scheduler:
    • PBS
www.nmr.mgh.harvard.edu/martinos/userInfo/computer/launchpad.php

Martinos Center Compute Cluster: Icepuffs
• Resources:
  – 3 Icepuff nodes, each with:
    • 64 cores
    • 256 GB of memory
• Pros (Launchpad & Icepuffs):
  – NMR network folders are already mounted.
  – Exclusive to Martinos members.
  – Latest version of FreeSurfer is ready to use.
www.nmr.mgh.harvard.edu/martinos/userInfo/computer/icepuffs.php

Partners Research Computing: ERISOne Linux Cluster
• Resources:
  – 380 nodes, each with:
    • up to 36 cores (total of ~7000 cores)
    • up to 512 GB of memory
  – A 3 TB-memory server with 64 cores
  – GPUs:
    • 8 × Tesla V100-32GB (soon 48 ×)
    • 4 × Tesla P100
    • 24 × Tesla M2070
  – Job scheduler:
    • LSF
• Pros:
  – Some directories are mounted on the NMR network.
  – High-memory jobs (up to 498 GB in the big queue).
  – GPU access.
https://rc.partners.org/kb/article/2814

Partners Research Computing: Windows Analysis Servers
• Resources:
  – 2 Windows machines:
    • HPCWin2 (32 cores, 256 GB of memory)
    • HPCWin3 (32 cores, 320 GB of memory)
  – Connection using the Remote Desktop Protocol:
    • rdesktop hpcwin3.research.partners.org
    • Use PARTNERS\PartnersID to log in.
• Pros:
  – Run Windows applications.
  – Quick access to MS Office.
https://rc.partners.org/kb/computational-resources/windows-analysis-servers?article=2652

Harvard Medical School Research Computing: O2
• Resources:
  – 365 nodes, each with:
    • up to 32 cores (total of 11168 cores)
    • 256 GB of memory
  – 7 high-memory nodes:
    • 750 GB of memory
  – GPUs:
    • 8 × Tesla V100
    • 8 × Tesla M40 (4 × 24 GB, 4 × 12 GB)
    • 16 × Tesla K80 (12 GB each)
  – Job scheduler:
    • Slurm
• Pros:
  – Available to both quad & non-quad HMS affiliates (and their RAs).
  – Many Matlab licenses with most toolboxes.
  – GPU access.
https://wiki.rc.hms.harvard.edu/display/O2

Launchpad
www.nmr.mgh.harvard.edu/martinos/userInfo/computer/launchpad.php

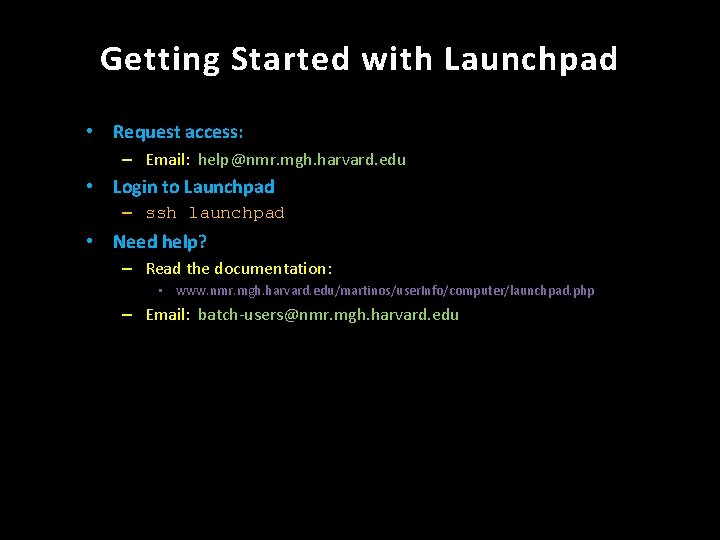

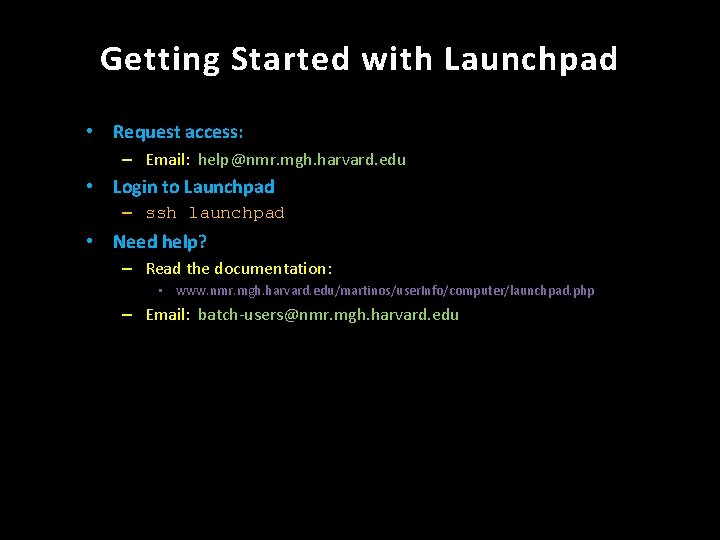

Getting Started with Launchpad
• Request access:
  – Email: help@nmr.mgh.harvard.edu
• Log in to Launchpad:
  – ssh launchpad
• Need help?
  – Read the documentation:
    • www.nmr.mgh.harvard.edu/martinos/userInfo/computer/launchpad.php
  – Email: batch-users@nmr.mgh.harvard.edu

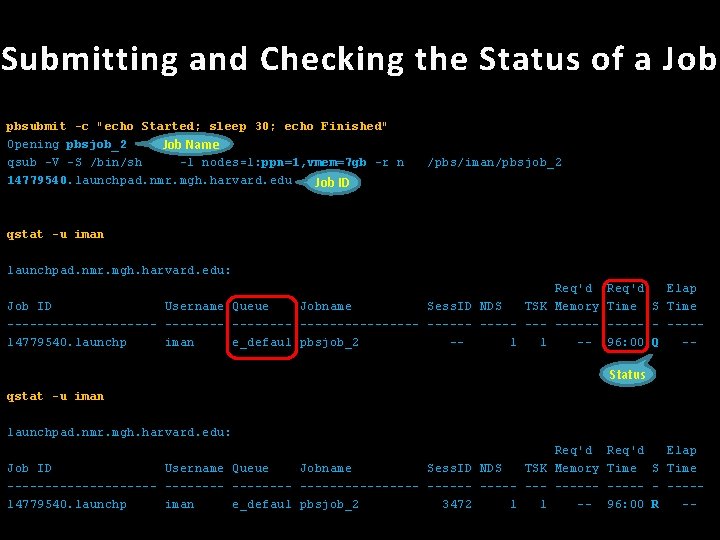

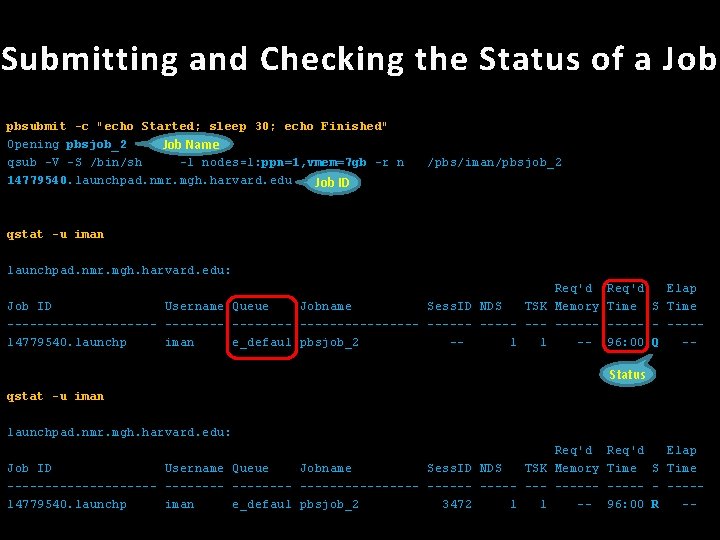

Submitting and Checking the Status of a Job

    pbsubmit -c "echo Started; sleep 30; echo Finished"
    Opening pbsjob_2
    qsub -V -S /bin/sh -l nodes=1:ppn=1,vmem=7gb -r n /pbs/iman/pbsjob_2
    14779540.launchpad.nmr.mgh.harvard.edu

Here pbsjob_2 is the job name and 14779540.launchpad.nmr.mgh.harvard.edu is the job ID. The S column of qstat shows the job status (Q while queued, R while running).

While the job is queued:

    qstat -u iman
    launchpad.nmr.mgh.harvard.edu:
                                                                 Req'd  Req'd   Elap
    Job ID           Username Queue    Jobname    SessID NDS TSK Memory Time  S Time
    ---------------- -------- -------- ---------- ------ --- --- ------ ----- - -----
    14779540.launchp iman     e_defaul pbsjob_2   --     1   1   --     96:00 Q --

Once the job is running:

    qstat -u iman
    launchpad.nmr.mgh.harvard.edu:
                                                                 Req'd  Req'd   Elap
    Job ID           Username Queue    Jobname    SessID NDS TSK Memory Time  S Time
    ---------------- -------- -------- ---------- ------ --- --- ------ ----- - -----
    14779540.launchp iman     e_defaul pbsjob_2   3472   1   1   --     96:00 R --
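For scripting around the session above, it can help to reduce the full job ID printed at submission to the bare number that the later commands (jobinfo, qdel) take. The following is a minimal sketch; the helper name job_number is made up for illustration, and the commented usage assumes that the job ID is the last line pbsubmit prints, which should be checked on Launchpad.

```shell
# Strip the server suffix from a job ID as printed at submission time,
# e.g. "14779540.launchpad.nmr.mgh.harvard.edu" -> "14779540".
# job_number is a hypothetical helper, not a Launchpad command.
job_number() {
    printf '%s\n' "${1%%.*}"
}

# Hypothetical usage on Launchpad (not run here; assumes the job ID is
# the last line of pbsubmit's output):
#   full_id=$(pbsubmit -c "echo Started; sleep 30; echo Finished" | tail -n 1)
#   qstat -u "$USER"
#   echo "Submitted job $(job_number "$full_id")"

job_number "14779540.launchpad.nmr.mgh.harvard.edu"   # prints 14779540
```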

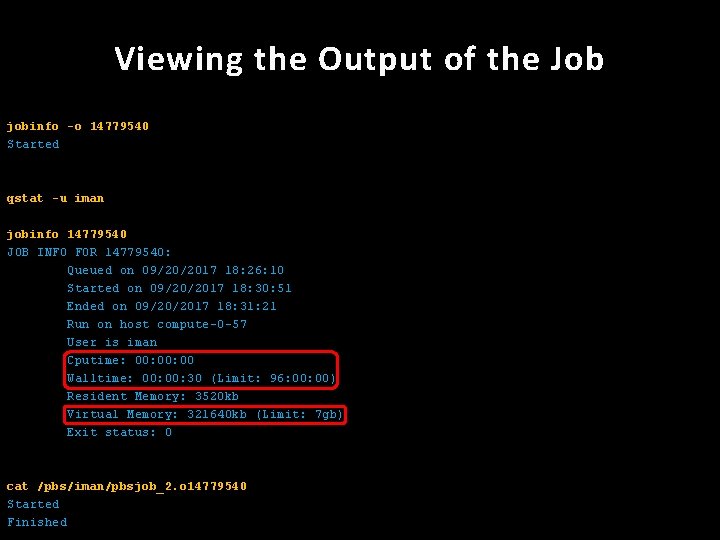

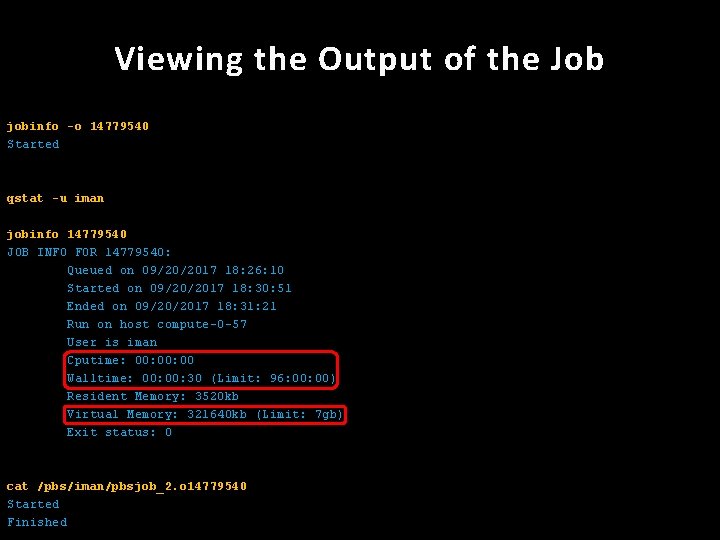

Viewing the Output of the Job

    jobinfo -o 14779540
    Started

    qstat -u iman

    jobinfo 14779540
    JOB INFO FOR 14779540:
      Queued on 09/20/2017 18:26:10
      Started on 09/20/2017 18:30:51
      Ended on 09/20/2017 18:31:21
      Run on host compute-0-57
      User is iman
      Cputime: 00:00
      Walltime: 00:30 (Limit: 96:00)
      Resident Memory: 3520 kb
      Virtual Memory: 321640 kb (Limit: 7 gb)
      Exit status: 0

    cat /pbs/iman/pbsjob_2.o14779540
    Started
    Finished
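The output file in the example above appears to follow the pattern /pbs/<user>/<job name>.o<job number>. As a small sketch under that assumption (inferred from this one example, not from Launchpad documentation), a helper can assemble the path so that a script can cat or tail it. The function name job_output_file is hypothetical.

```shell
# Build the path of a job's standard-output file from its parts, following
# the pattern seen in the example: /pbs/<user>/<jobname>.o<job number>.
# The pattern is an assumption based on that single example.
job_output_file() (
    user=$1
    jobname=$2
    jobnum=$3
    printf '/pbs/%s/%s.o%s\n' "$user" "$jobname" "$jobnum"
)

job_output_file iman pbsjob_2 14779540   # prints /pbs/iman/pbsjob_2.o14779540
```

On the cluster, this would be used as, for example, cat "$(job_output_file "$USER" pbsjob_2 14779540)".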

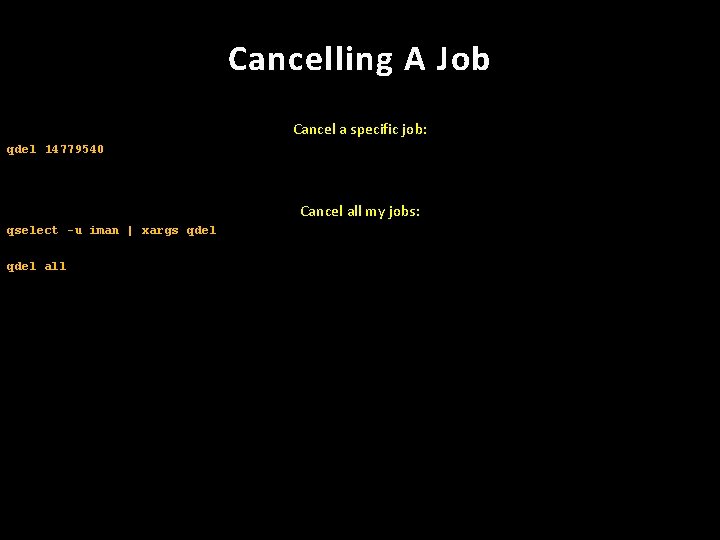

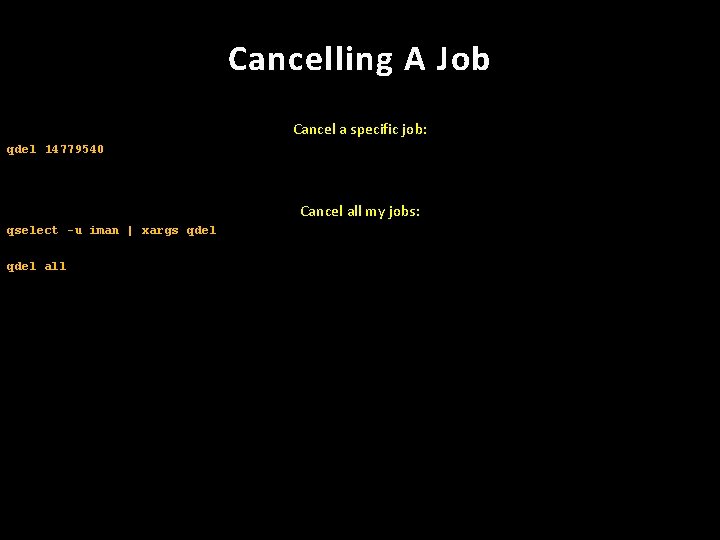

Cancelling a Job
Cancel a specific job:

    qdel 14779540

Cancel all my jobs:

    qselect -u iman | xargs qdel all
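A more selective cancellation (for instance, only jobs that are still queued) can be sketched by filtering qstat output before handing the IDs to qdel. The helper jobs_in_state below is hypothetical and assumes the 11-column `qstat -u` layout from the earlier status example, with the state letter in column 10. The second row of the sample listing is invented for the demonstration.

```shell
# Print the numeric IDs of jobs whose state column (S) equals the given
# letter, reading `qstat -u <user>` output from standard input.
# Assumes the state letter is the 10th whitespace-separated field.
jobs_in_state() {
    awk -v state="$1" '$1 ~ /^[0-9]+\./ && $10 == state { split($1, id, "."); print id[1] }'
}

# Sample listing; the second job row is made up for illustration.
sample='launchpad.nmr.mgh.harvard.edu:
Job ID           Username Queue    Jobname    SessID NDS TSK Memory Time  S Time
14779540.launchp iman     e_defaul pbsjob_2   --     1   1   --     96:00 Q --
14779541.launchp iman     e_defaul pbsjob_3   3472   1   1   --     96:00 R --'

printf '%s\n' "$sample" | jobs_in_state Q   # prints 14779540

# Hypothetical usage on Launchpad (not run here):
#   qstat -u "$USER" | jobs_in_state Q | xargs qdel
```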

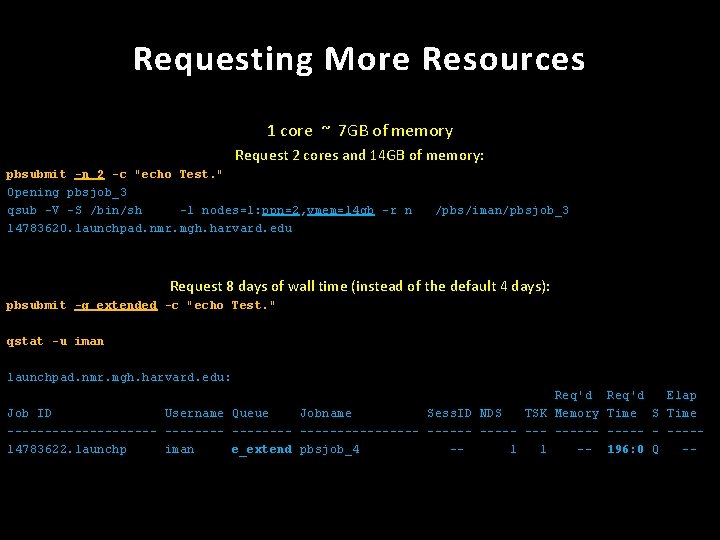

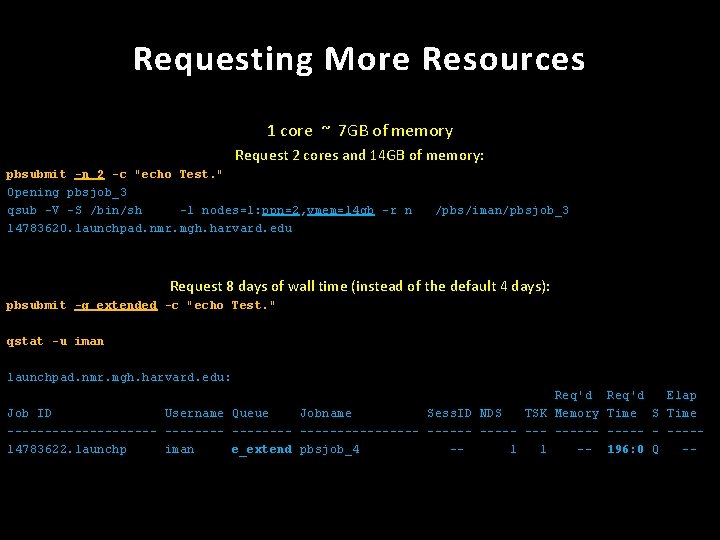

Requesting More Resources
1 core ~ 7 GB of memory

Request 2 cores and 14 GB of memory:

    pbsubmit -n 2 -c "echo Test."
    Opening pbsjob_3
    qsub -V -S /bin/sh -l nodes=1:ppn=2,vmem=14gb -r n /pbs/iman/pbsjob_3
    14783620.launchpad.nmr.mgh.harvard.edu

Request 8 days of wall time (instead of the default 4 days):

    pbsubmit -q extended -c "echo Test."
    qstat -u iman
    launchpad.nmr.mgh.harvard.edu:
                                                                 Req'd  Req'd   Elap
    Job ID           Username Queue    Jobname    SessID NDS TSK Memory Time  S Time
    ---------------- -------- -------- ---------- ------ --- --- ------ ----- - -----
    14783622.launchp iman     e_extend pbsjob_4   --     1   1   --     196:0 Q --
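Because memory is granted through cores (about 7 GB per core), the value to pass to -n for a given memory need is a ceiling division. A minimal sketch, with the helper name cores_for_mem_gb chosen for illustration:

```shell
# Smallest number of cores whose combined allowance (7 GB per core, per
# the rule of thumb above) covers the requested memory in whole GB.
# cores_for_mem_gb is a hypothetical helper, not a Launchpad command.
cores_for_mem_gb() {
    echo $(( ($1 + 6) / 7 ))
}

cores_for_mem_gb 14   # prints 2
cores_for_mem_gb 20   # prints 3

# Print (without running) the matching submission command for a 20 GB job.
echo "pbsubmit -n $(cores_for_mem_gb 20) -c \"echo Test.\""
```

Rounding up means that a 20 GB job requests 3 cores (21 GB); the extra cores count against the queue's CPU-slot limit even if the program itself is single-threaded.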

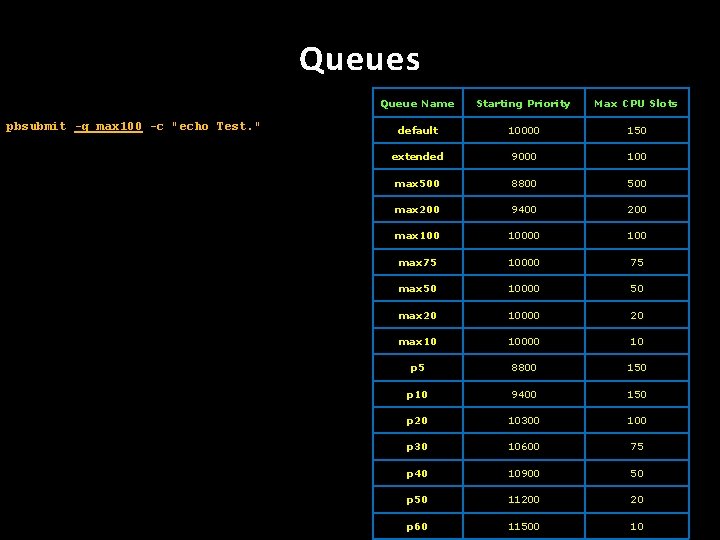

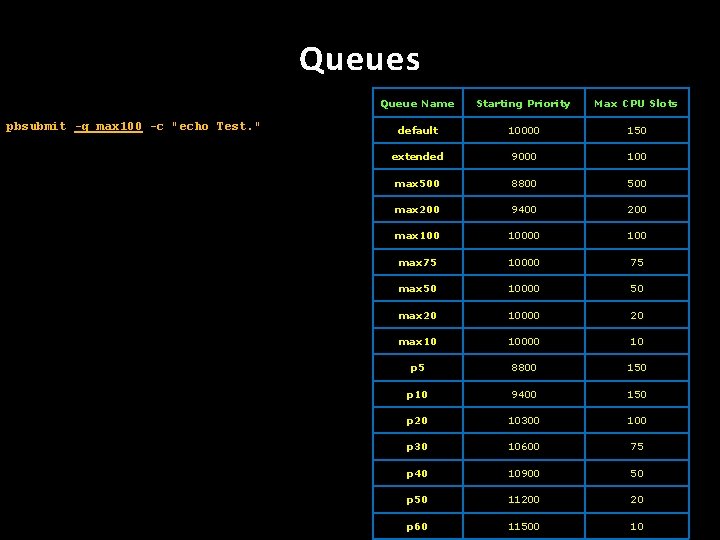

Queues

    pbsubmit -q max100 -c "echo Test."

    Queue Name   Starting Priority   Max CPU Slots
    default      10000               150
    extended     9000                100
    max500       8800                500
    max200       9400                200
    max100       10000               100
    max75        10000               75
    max50        10000               50
    max20        10000               20
    max10        10000               10
    p5           8800                150
    p10          9400                150
    p20          10300               100
    p30          10600               75
    p40          10900               50
    p50          11200               20
    p60          11500               10
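One possible way to choose among the maxN queues is to take the smallest one whose slot limit still covers the number of CPU slots you want in use at once. The sketch below encodes only the maxN limits from the table; the helper name pick_max_queue and the selection rule itself are assumptions for illustration, not a Launchpad policy.

```shell
# Pick the smallest maxN queue whose "Max CPU Slots" value is at least the
# requested number of concurrent slots (limits taken from the table above).
# pick_max_queue is a hypothetical helper.
pick_max_queue() {
    for cap in 10 20 50 75 100 200 500; do
        if [ "$1" -le "$cap" ]; then
            echo "max$cap"
            return 0
        fi
    done
    echo "no maxN queue allows $1 slots" >&2
    return 1
}

pick_max_queue 60   # prints max75

# Print (without running) a submission to the chosen queue.
echo "pbsubmit -q $(pick_max_queue 60) -c \"echo Test.\""
```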

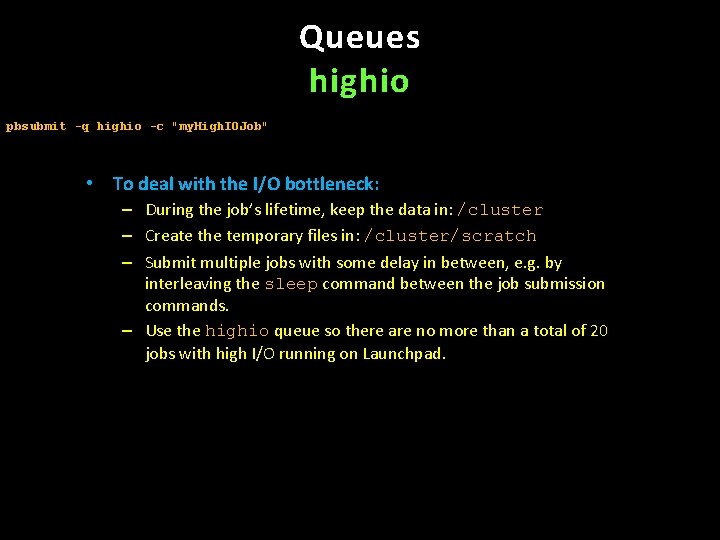

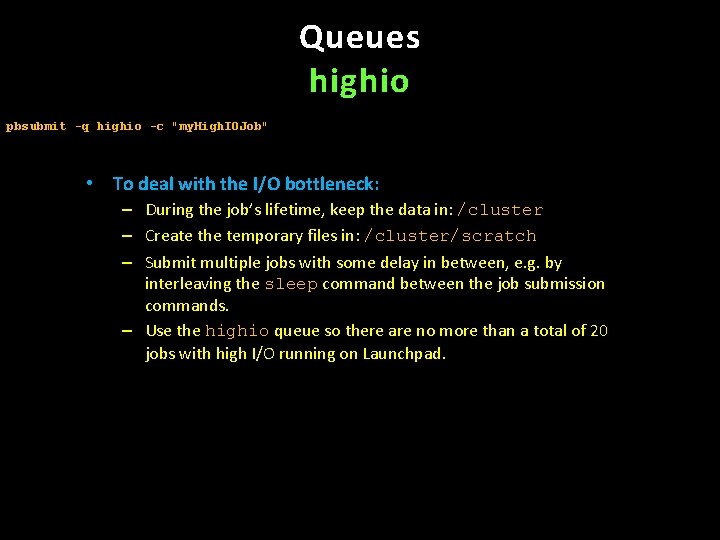

Queues: highio

    pbsubmit -q highio -c "myHighIOJob"

• To deal with the I/O bottleneck:
  – During the job’s lifetime, keep the data in: /cluster
  – Create the temporary files in: /cluster/scratch
  – Submit multiple jobs with some delay in between, e.g., by interleaving the sleep command between the job submission commands.
  – Use the highio queue so there are no more than a total of 20 jobs with high I/O running on Launchpad.
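The advice to interleave sleep between submissions can be sketched as a small loop. Everything specific here is a placeholder: the per-subject script ./process_subject.sh, the subject names, and the 60-second delay are hypothetical. With DRY_RUN=1 (the default in this sketch) the commands are only printed, so the loop can be checked before anything is submitted.

```shell
# Submit one high-I/O job per subject, pausing between submissions.
# DRY_RUN=1 only prints the commands; set DRY_RUN=0 on Launchpad to submit.
DRY_RUN=${DRY_RUN:-1}
DELAY_SECONDS=${DELAY_SECONDS:-60}   # hypothetical delay; tune as needed

submit_staggered() {
    first=1
    for subject in "$@"; do
        # Sleep between real submissions, but not before the first one.
        if [ "$first" -eq 0 ] && [ "$DRY_RUN" -eq 0 ]; then
            sleep "$DELAY_SECONDS"
        fi
        first=0
        cmd="./process_subject.sh $subject"   # hypothetical per-subject script
        if [ "$DRY_RUN" -eq 1 ]; then
            echo "pbsubmit -q highio -c \"$cmd\""
        else
            pbsubmit -q highio -c "$cmd"
        fi
    done
}

submit_staggered subj01 subj02 subj03
```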

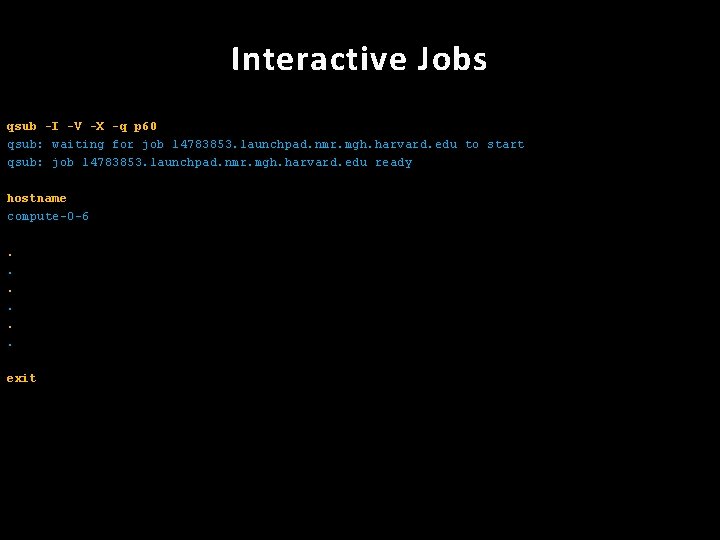

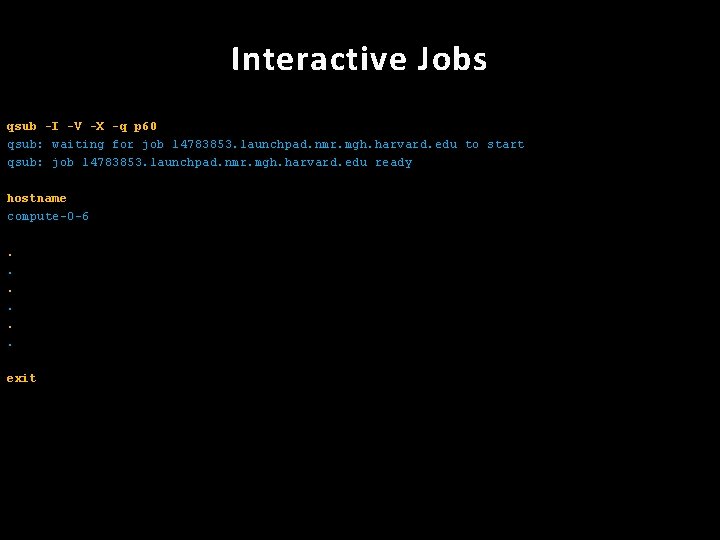

Interactive Jobs

    qsub -I -V -X -q p60
    qsub: waiting for job 14783853.launchpad.nmr.mgh.harvard.edu to start
    qsub: job 14783853.launchpad.nmr.mgh.harvard.edu ready
    hostname
    compute-0-6
    ...
    exit

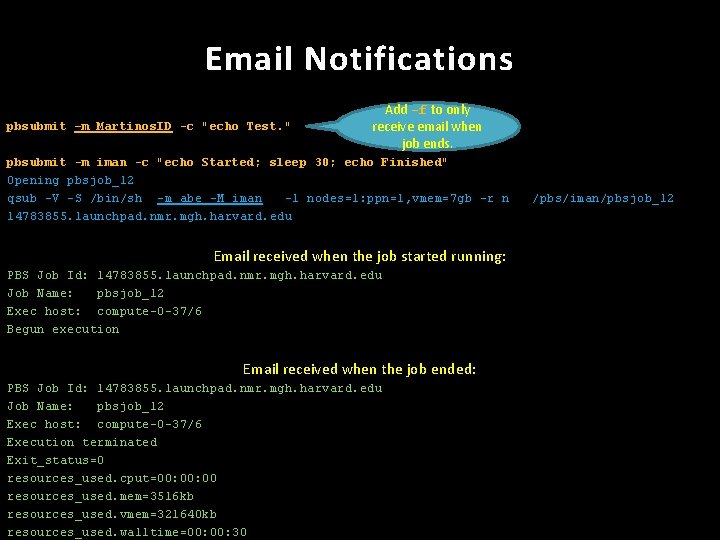

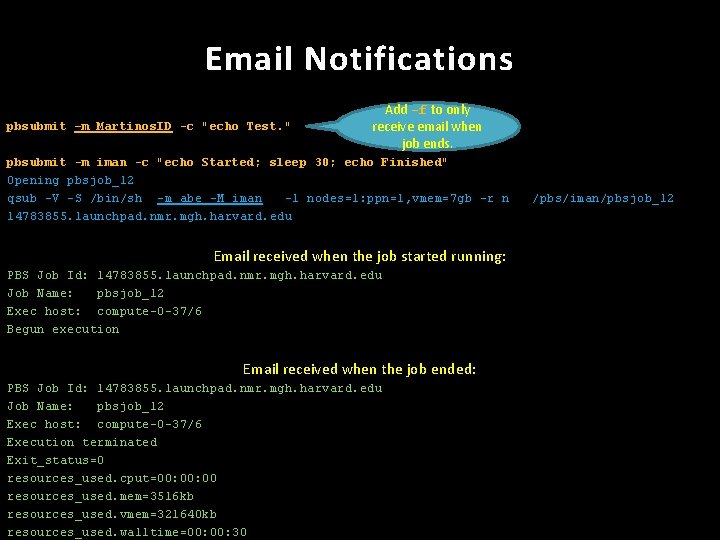

Email Notifications

    pbsubmit -m MartinosID -c "echo Test."

Add -f to receive an email only when the job ends.

    pbsubmit -m iman -c "echo Started; sleep 30; echo Finished"
    Opening pbsjob_12
    qsub -V -S /bin/sh -m abe -M iman -l nodes=1:ppn=1,vmem=7gb -r n /pbs/iman/pbsjob_12
    14783855.launchpad.nmr.mgh.harvard.edu

Email received when the job started running:

    PBS Job Id: 14783855.launchpad.nmr.mgh.harvard.edu
    Job Name: pbsjob_12
    Exec host: compute-0-37/6
    Begun execution

Email received when the job ended:

    PBS Job Id: 14783855.launchpad.nmr.mgh.harvard.edu
    Job Name: pbsjob_12
    Exec host: compute-0-37/6
    Execution terminated
    Exit_status=0
    resources_used.cput=00:00
    resources_used.mem=3516kb
    resources_used.vmem=321640kb
    resources_used.walltime=00:30

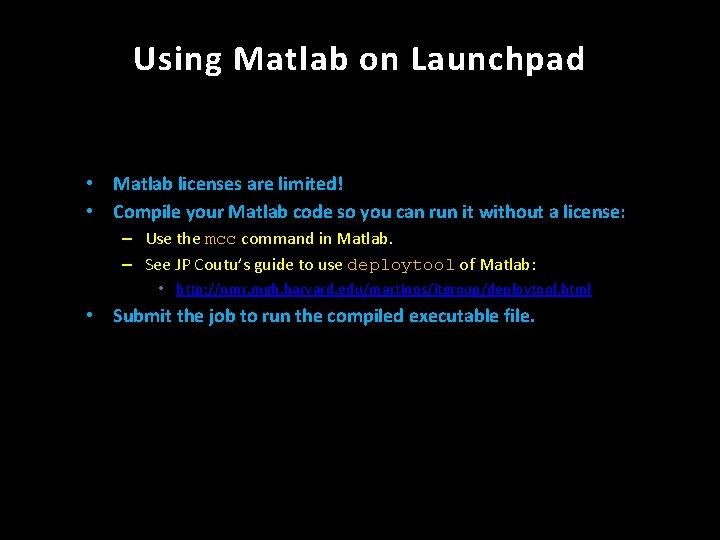

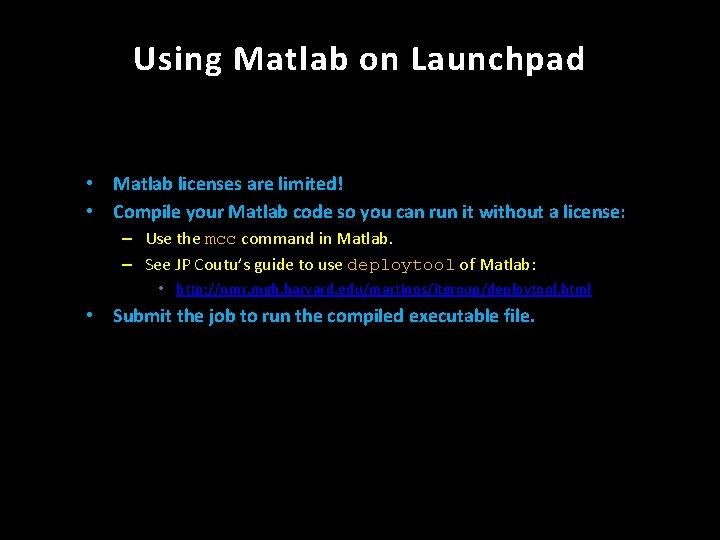

Using Matlab on Launchpad
• Matlab licenses are limited!
• Compile your Matlab code so you can run it without a license:
  – Use the mcc command in Matlab.
  – See JP Coutu’s guide to using Matlab’s deploytool:
    • http://nmr.mgh.harvard.edu/martinos/itgroup/deploytool.html
• Submit the job to run the compiled executable file.
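As a rough sketch of the last step: on Linux, compiling with mcc -m typically produces a run_<name>.sh launcher next to the executable, which takes the Matlab runtime directory as its first argument followed by the program's own arguments. The snippet below only composes and prints the submission command. Both paths, the program name myAnalysis, and its argument are hypothetical placeholders to be replaced with real locations on the cluster.

```shell
# Compose the pbsubmit command that runs a compiled Matlab program through
# the run_<name>.sh launcher generated by mcc.
# Both paths are hypothetical placeholders.
MCR_ROOT=/path/to/matlab_runtime
APP_DIR=/path/to/compiled_app

compiled_job_command() (
    app=$1
    shift
    # Remaining arguments are passed through to the compiled program.
    echo "pbsubmit -c \"$APP_DIR/run_${app}.sh $MCR_ROOT $*\""
)

# myAnalysis and subj01 are made-up names for illustration.
compiled_job_command myAnalysis subj01
```

Printing the command first makes it easy to inspect the quoting before pasting it into a Launchpad shell.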

Thank You!
If you use Launchpad in your research, please acknowledge the “NIH Instrumentation Grants 1S10RR023401, 1S10RR019307, and 1S10RR023043” in your publication.