Highly Scalable Distributed Dataflow Analysis Joseph L Greathouse

Highly Scalable Distributed Dataflow Analysis
Joseph L. Greathouse, Chelsea LeBlanc, Todd Austin, Valeria Bertacco
Advanced Computer Architecture Laboratory, University of Michigan
CGO, Chamonix, France, April 6, 2011

Software Errors Abound
- NIST: software errors cost the U.S. ~$60 billion/year (as of 2002)
- FBI CCS: security issues cost $67 billion/year (as of 2005)
  - More than ⅓ from viruses, network intrusion, etc.

Goals of this Work
- High-quality dynamic software analysis
  - Find difficult bugs that other analyses miss
- Distribute tests to large populations
  - Must have low overhead, or users get angry
- Accomplished by sampling the analyses
  - Each user tests only part of the program

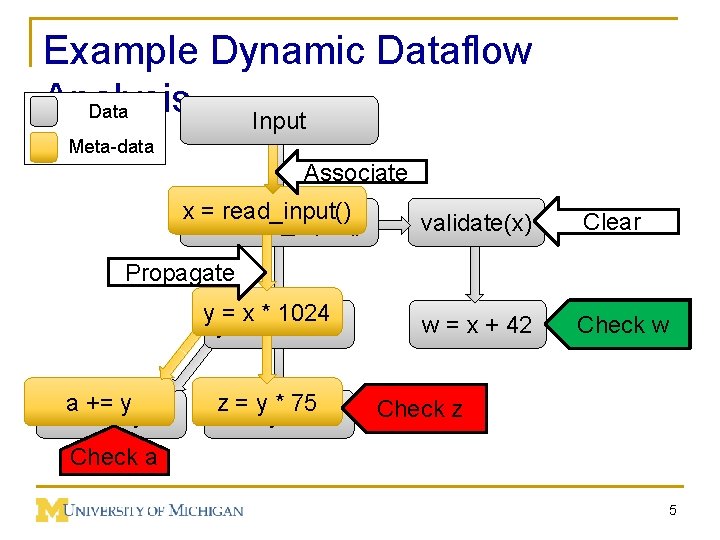

Dynamic Dataflow Analysis
- Associate meta-data with program values
- Propagate/clear meta-data while executing
- Check meta-data for safety & correctness
- Forms dataflows of meta/shadow information
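To make the four operations concrete, here is a minimal C sketch of byte-granular taint tracking over a toy address space. The names (`shadow`, `taint_associate`, `taint_propagate`, `taint_clear`, `taint_check`) and the one-shadow-byte-per-data-byte layout are illustrative assumptions, not the prototype's implementation; real tools use more detailed propagation rules than the byte-wise OR used here.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical toy address space: 1 KiB of program memory with one
 * shadow (meta-data) byte per program byte. 0 = clean, 1 = tainted. */
#define MEM_SIZE 1024
static unsigned char shadow[MEM_SIZE];

/* Associate: mark bytes that come from an untrusted input as tainted. */
static void taint_associate(size_t addr, size_t len) {
    assert(addr + len <= MEM_SIZE);
    memset(&shadow[addr], 1, len);
}

/* Clear: e.g. after the program validates a value. */
static void taint_clear(size_t addr, size_t len) {
    assert(addr + len <= MEM_SIZE);
    memset(&shadow[addr], 0, len);
}

/* Propagate: dst = f(src1, src2) is tainted if either source is.
 * Simplification: taint is combined byte by byte with OR. */
static void taint_propagate(size_t dst, size_t src1, size_t src2, size_t len) {
    assert(dst + len <= MEM_SIZE);
    assert(src1 + len <= MEM_SIZE && src2 + len <= MEM_SIZE);
    for (size_t i = 0; i < len; i++)
        shadow[dst + i] = shadow[src1 + i] | shadow[src2 + i];
}

/* Check: report whether any byte of the value carries taint. */
static bool taint_check(size_t addr, size_t len) {
    assert(addr + len <= MEM_SIZE);
    for (size_t i = 0; i < len; i++)
        if (shadow[addr + i])
            return true;
    return false;
}
```

For example, after `taint_associate(0, 4)` marks a 4-byte input and `taint_propagate(8, 0, 16, 4)` records a sum stored at address 8, `taint_check(8, 4)` stays true even if `taint_clear(0, 4)` later marks the original input as validated.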

Example Dynamic Dataflow Analysis
(Figure: dataflow of input data and its meta-data)
- Associate: x = read_input()
- Clear: validate(x); then w = x + 42 and Check w
- Propagate: y = x * 1024; then a += y and Check a; z = y * 75 and Check z
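The example's dataflow can be replayed with one taint bit per variable. This C sketch assumes a particular statement order (y, a, and z computed before validate(x), w after it) so that a straight-line program matches the graph, and assumes a starts clean; `struct example_taint` and `run_example` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Meta-data (one taint bit) for each variable in the example. */
struct example_taint {
    bool x, y, a, z, w;
};

/* Replays the example's meta-data operations under the assumed order:
 * y, a, z are computed before validate(x), and w after it. */
static void run_example(struct example_taint *t) {
    assert(t != NULL);
    *t = (struct example_taint){false, false, false, false, false};
    t->x = true;            /* x = read_input();  Associate         */
    t->y = t->x;            /* y = x * 1024;      Propagate         */
    t->a = t->a || t->y;    /* a += y;            Propagate         */
    t->z = t->y;            /* z = y * 75;        Propagate         */
    t->x = false;           /* validate(x);       Clear             */
    t->w = t->x;            /* w = x + 42;        Propagate (clean) */
}
```

Checking w, z, and a afterwards finds meta-data on z and a but not on w, which is the behavior a complete (unsampled) analysis should reproduce.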

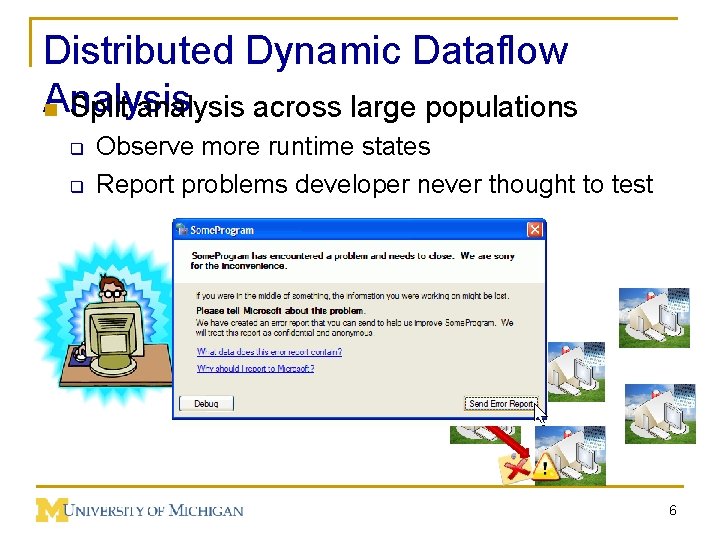

Distributed Dynamic Dataflow Analysis
- Split analysis across large populations
  - Observe more runtime states
  - Report problems the developer never thought to test
(Figure: the instrumented program is distributed to users, who report potential problems)

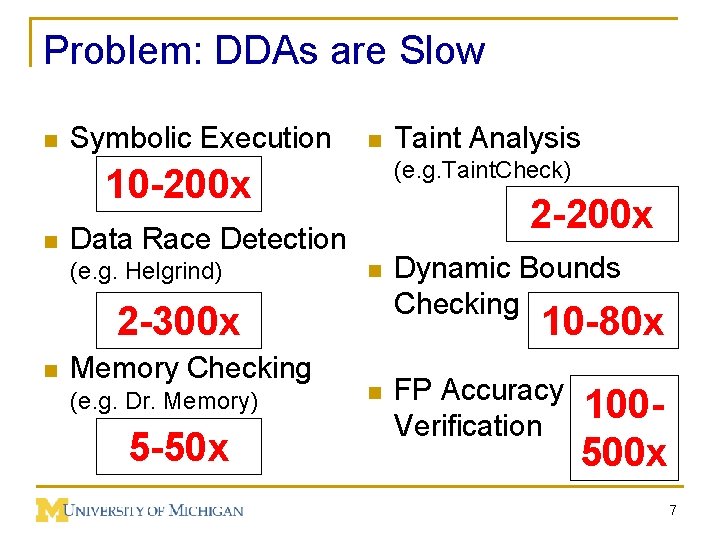

Problem: DDAs are Slow
- Symbolic Execution: 10-200x
- Data Race Detection (e.g., Helgrind): 2-300x
- Memory Checking (e.g., Dr. Memory): 5-50x
- Taint Analysis (e.g., TaintCheck): 2-200x
- Dynamic Bounds Checking: 10-80x
- FP Accuracy Verification: 100-500x

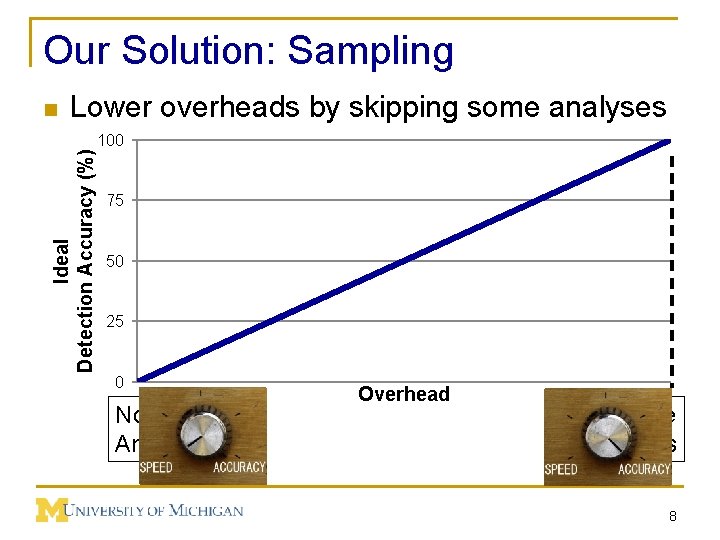

Our Solution: Sampling
- Lower overheads by skipping some analyses
(Figure: ideal detection accuracy (%) vs. overhead, ranging from no analysis to complete analysis)

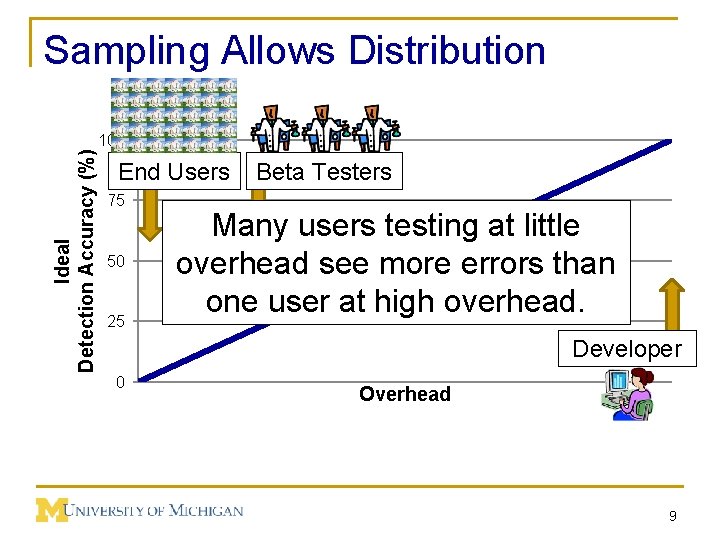

Sampling Allows Distribution
(Figure: ideal detection accuracy (%) vs. overhead for the developer, beta testers, and end users)
- Many users testing at little overhead see more errors than one user at high overhead.
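The claim that many low-overhead users beat one high-overhead user can be made quantitative under a strong simplifying assumption: if each user independently observes a given error with probability p, then n users all miss it with probability (1 - p)^n. The C function below (a hypothetical helper, not taken from the paper) computes 1 - (1 - p)^n.

```c
#include <assert.h>

/* Probability that at least one of n users observes a given error, assuming
 * each user independently observes it with probability p. Real users' runs
 * are neither independent nor identically distributed; this is a model. */
static double population_detect_prob(double p, unsigned n) {
    assert(p >= 0.0 && p <= 1.0);
    double all_miss = 1.0;
    for (unsigned i = 0; i < n; i++)
        all_miss *= 1.0 - p;  /* every one of the n users misses it */
    return 1.0 - all_miss;
}
```

Under that independence assumption, 1,000 users who each catch an error 1% of the time jointly catch it with probability above 99.99%, whereas on the ideal accuracy curve a single user would need nearly complete analysis to match that.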

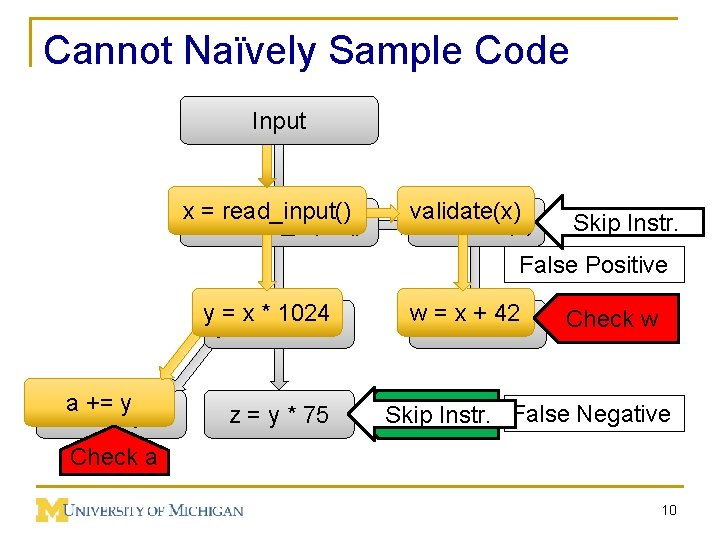

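Cannot Naïvely Sample Code
(Figure: the example dataflow with the analysis of some instructions skipped)
- Skipping the instrumentation of validate(x) leaves stale meta-data on x, so Check w reports a false positive
- Skipping the instrumentation of a += y leaves a without meta-data, so Check a misses the error (false negative)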
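Extending the same hypothetical one-bit-per-variable model (with the same assumed statement order), the sketch below mimics a naive sampler that skips the meta-data update of chosen instructions. The flags `skip_validate` and `skip_add` and the function `run_naive` are illustrative assumptions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

struct example_taint {
    bool x, y, a, z, w;
};

/* Hypothetical naive code sampler: a skipped instruction simply gets no
 * meta-data update, so whatever meta-data was there before survives. */
static void run_naive(struct example_taint *t, bool skip_validate, bool skip_add) {
    assert(t != NULL);
    *t = (struct example_taint){false, false, false, false, false};
    t->x = true;                          /* x = read_input() */
    t->y = t->x;                          /* y = x * 1024     */
    if (!skip_add) t->a = t->a || t->y;   /* a += y           */
    t->z = t->y;                          /* z = y * 75       */
    if (!skip_validate) t->x = false;     /* validate(x)      */
    t->w = t->x;                          /* w = x + 42       */
}
```

With `skip_validate` set, w inherits x's stale taint and Check w fires on validated data (a false positive); with `skip_add` set, a never becomes tainted and Check a stays silent (a false negative).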

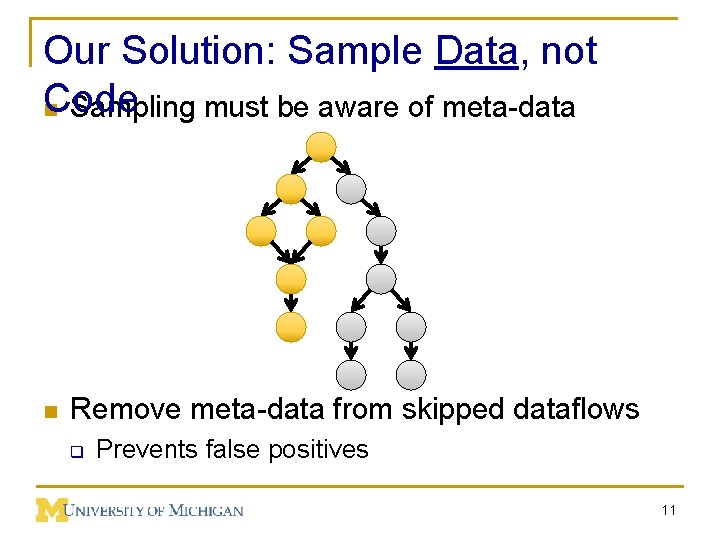

Our Solution: Sample Data, not Code
- Sampling must be aware of meta-data
- Remove meta-data from skipped dataflows
  - Prevents false positives

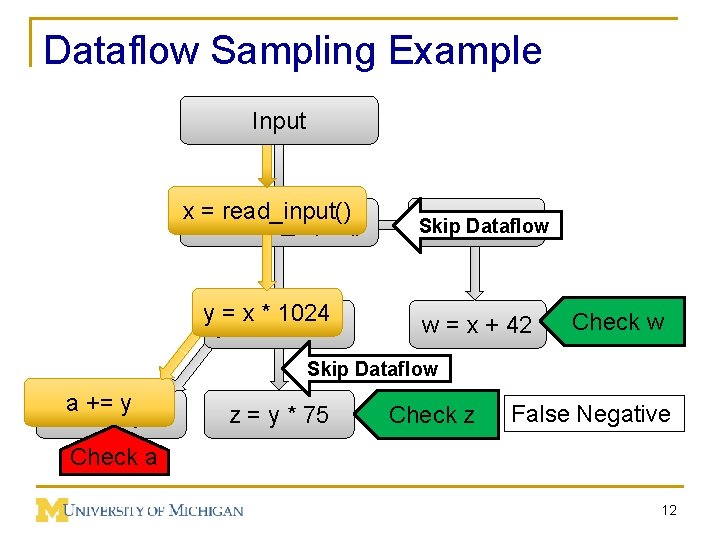

Dataflow Sampling Example
(Figure: the example dataflow with two dataflows skipped; because skipped dataflows lose their meta-data, the result is a false negative rather than a false positive)
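One way to model the "remove meta-data from skipped dataflows" rule in the same toy setting is a helper that clears the destination whenever its update is skipped. Which two dataflows are skipped is an assumption here (validate(x) to w, and a += y); `sampled_update` and `run_dataflow_sampled` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

struct example_taint {
    bool x, y, a, z, w;
};

/* Dataflow-sampling rule (sketch): a skipped update removes the
 * destination's meta-data instead of leaving stale meta-data behind. */
static void sampled_update(bool *dst, bool new_taint, bool skipped) {
    assert(dst != NULL);
    *dst = skipped ? false : new_taint;
}

/* Replays the example with two dataflows that may be skipped:
 * the validate(x) -> w flow and the a += y flow (assumed choices). */
static void run_dataflow_sampled(struct example_taint *t,
                                 bool skip_w_flow, bool skip_a_flow) {
    assert(t != NULL);
    *t = (struct example_taint){false, false, false, false, false};
    t->x = true;                                      /* x = read_input() */
    t->y = t->x;                                      /* y = x * 1024     */
    sampled_update(&t->a, t->a || t->y, skip_a_flow); /* a += y           */
    t->z = t->y;                                      /* z = y * 75       */
    sampled_update(&t->x, false, skip_w_flow);        /* validate(x)      */
    sampled_update(&t->w, t->x, skip_w_flow);         /* w = x + 42       */
}
```

In this model every combination of skips leaves w clean, so Check w never raises a false positive; the only cost of skipping is that Check a can go silent. Because skipped updates only ever remove taint here, the sampled taint set is always a subset of the complete analysis's taint set.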

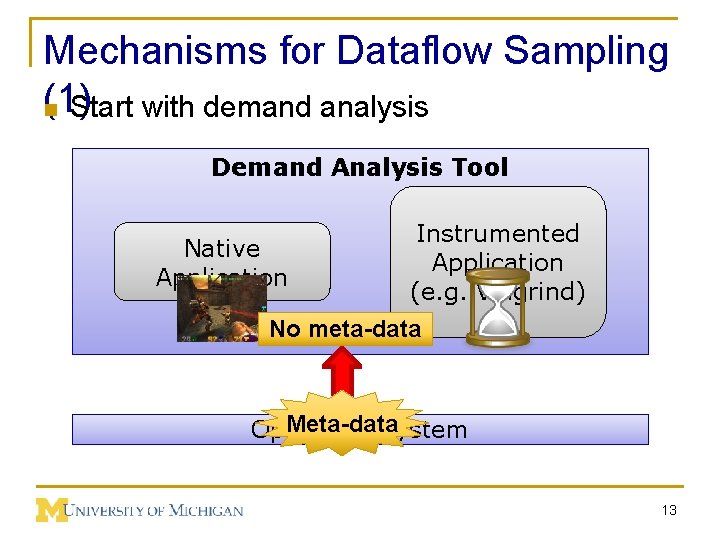

Mechanisms for Dataflow Sampling (1)
- Start with demand analysis
(Figure: a demand analysis tool runs the native application while no meta-data is present and the instrumented application (e.g., Valgrind) when meta-data is present, on top of the operating system)
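Demand analysis can be pictured as a per-page decision: accesses to pages that hold no meta-data run natively, and only accesses to tainted pages take the slow, instrumented path. The sketch below is a hypothetical C model of that decision using a per-page count of tainted bytes; the page size, table size, and function names are assumptions, and the prototype's Xen/QEMU mechanism is far more involved than this.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define PAGE_SHIFT 12   /* assumption: 4 KiB pages           */
#define NUM_PAGES  256  /* assumption: toy 1 MiB address space */

/* Hypothetical per-page count of tainted bytes. Callers must report each
 * byte once when it becomes tainted and once when it becomes clean. */
static unsigned tainted_bytes[NUM_PAGES];

enum exec_mode { RUN_NATIVE, RUN_INSTRUMENTED };

static size_t page_of(size_t addr) {
    size_t page = addr >> PAGE_SHIFT;
    assert(page < NUM_PAGES);
    return page;
}

static void note_taint(size_t addr) { tainted_bytes[page_of(addr)]++; }

static void note_untaint(size_t addr) {
    size_t page = page_of(addr);
    assert(tainted_bytes[page] > 0);
    tainted_bytes[page]--;
}

/* Demand analysis: only accesses to pages holding meta-data pay for the
 * instrumented (slow) path; everything else runs natively. */
static enum exec_mode choose_mode(size_t addr) {
    return tainted_bytes[page_of(addr)] ? RUN_INSTRUMENTED : RUN_NATIVE;
}
```

Tainting one byte at 0x1004 sends every access to that 4 KiB page (0x1000 to 0x1FFF) down the instrumented path, while the neighboring page at 0x2000 keeps running natively.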

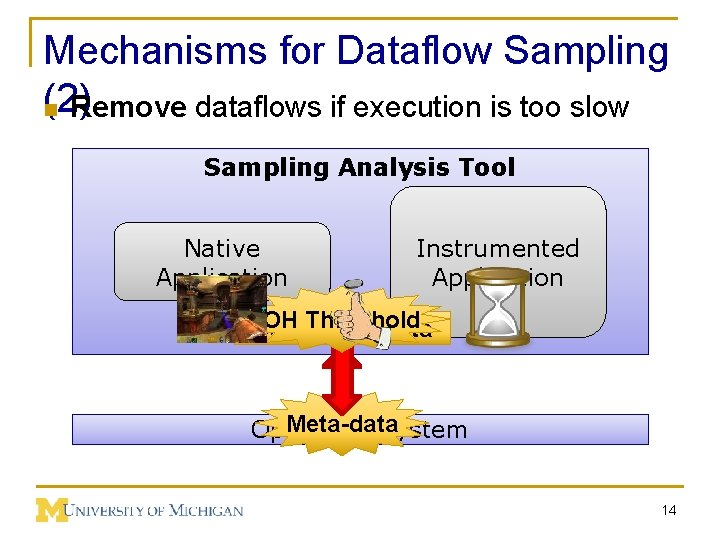

Mechanisms for Dataflow Sampling (2)
- Remove dataflows if execution is too slow
(Figure: a sampling analysis tool adds an overhead (OH) threshold; when it is exceeded, meta-data is cleared and the application returns to native execution)
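An overhead threshold can be enforced by charging analysis time against a per-window budget and dropping all meta-data once the budget is spent, which sends execution back to the native path. This C sketch of such an overhead manager is illustrative only: `struct ohm`, `ohm_init`, `ohm_charge`, and the fixed-window accounting are hypothetical, and how the real OHM measures time is not described here.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical overhead manager state: limits the fraction of each time
 * window that may be spent in analysis. */
struct ohm {
    uint64_t window_us;        /* length of an accounting window     */
    uint64_t budget_us;        /* analysis time allowed per window   */
    uint64_t window_start_us;  /* start time of the current window   */
    uint64_t used_us;          /* analysis time used in this window  */
};

static void ohm_init(struct ohm *m, uint64_t window_us, unsigned max_pct) {
    assert(max_pct <= 100);
    m->window_us = window_us;
    m->budget_us = window_us * max_pct / 100;
    m->window_start_us = 0;
    m->used_us = 0;
}

/* Records spent_us of analysis observed at time now_us. Returns true when
 * the budget is exhausted, i.e. the tool should clear all meta-data and
 * fall back to native execution. Simplification: a new window starts at
 * the first charge after the previous window has elapsed. */
static bool ohm_charge(struct ohm *m, uint64_t now_us, uint64_t spent_us) {
    if (now_us - m->window_start_us >= m->window_us) {
        m->window_start_us = now_us;
        m->used_us = 0;
    }
    m->used_us += spent_us;
    return m->used_us > m->budget_us;
}
```

With the setting from the "Accuracy at Very Low Overhead" slide (1% of every 10 seconds), `ohm_init(&m, 10000000, 1)` yields a budget of 100,000 µs, i.e. 100 ms of analysis per 10 s window.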

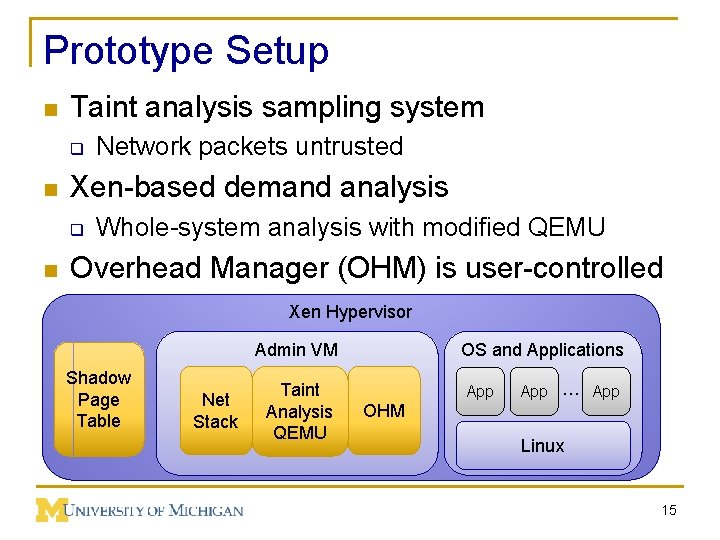

Prototype Setup
- Taint analysis sampling system
  - Network packets are untrusted
- Xen-based demand analysis
  - Whole-system analysis with modified QEMU
- Overhead Manager (OHM) is user-controlled
(Figure: system diagram with the Xen hypervisor, shadow page table, Admin VM, net stack, taint analysis in QEMU, OHM, Linux, and the guest OS and applications)

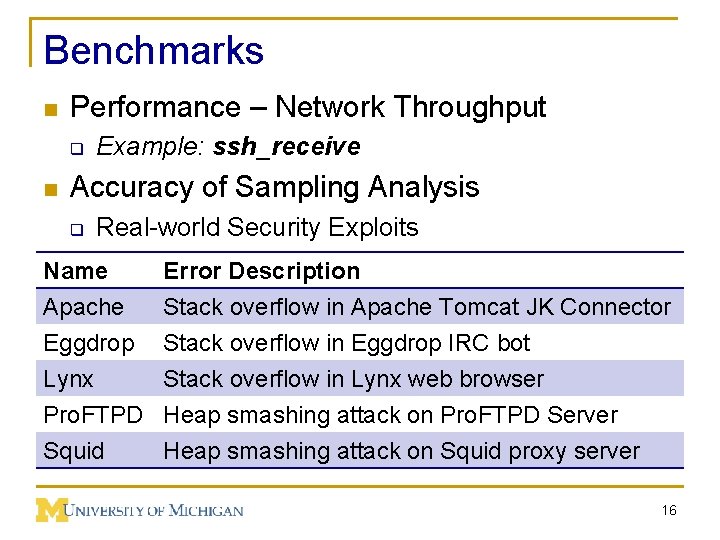

Benchmarks
- Performance: network throughput
  - Example: ssh_receive
- Accuracy of sampling analysis
  - Real-world security exploits

| Name | Error Description |
|---|---|
| Apache | Stack overflow in Apache Tomcat JK Connector |
| Eggdrop | Stack overflow in Eggdrop IRC bot |
| Lynx | Stack overflow in Lynx web browser |
| ProFTPD | Heap smashing attack on ProFTPD Server |
| Squid | Heap smashing attack on Squid proxy server |

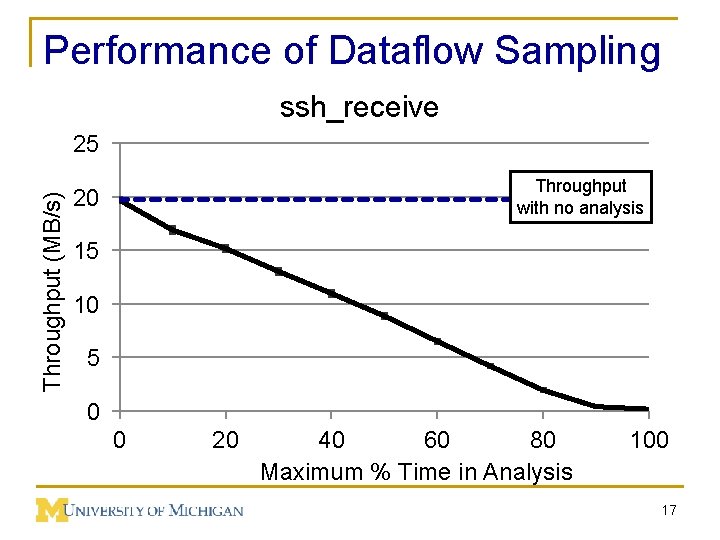

Performance of Dataflow Sampling
(Figure: ssh_receive throughput (MB/s) vs. maximum % time in analysis (0-100), compared with throughput with no analysis)

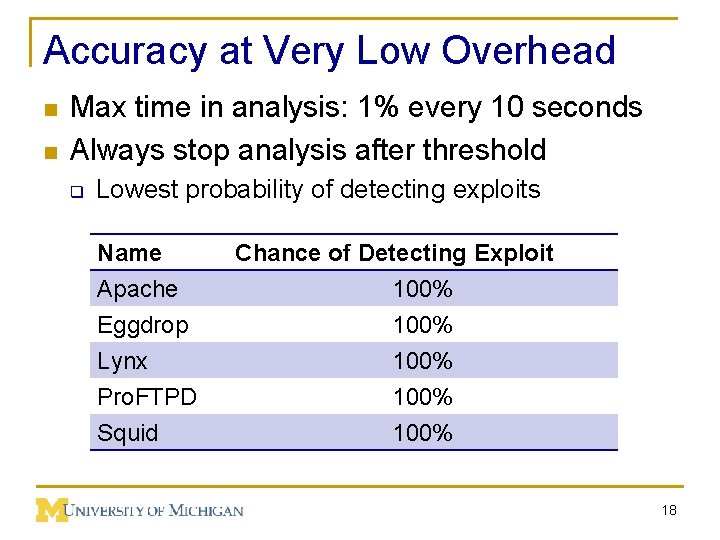

Accuracy at Very Low Overhead
- Max time in analysis: 1% every 10 seconds
- Always stop analysis after threshold
  - Lowest probability of detecting exploits
Exploits: Apache, Eggdrop, Lynx, ProFTPD, Squid. Chance of Detecting Exploit: 100% 100%

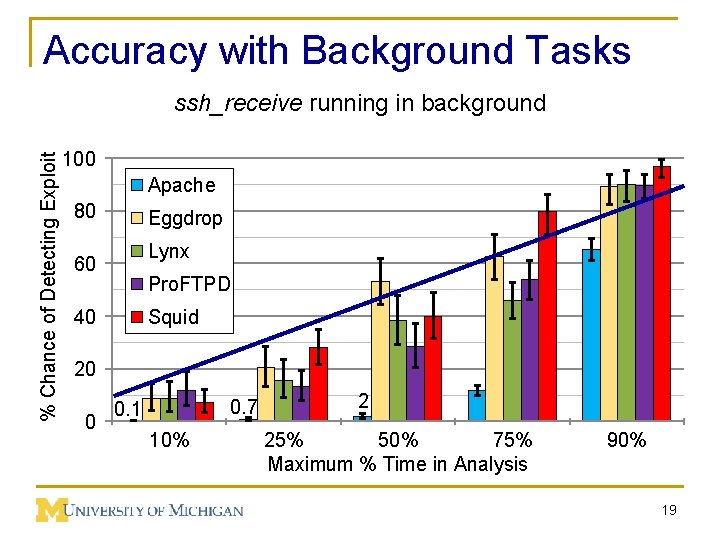

Accuracy with Background Tasks
(Figure: % chance of detecting each exploit (Apache, Eggdrop, Lynx, ProFTPD, Squid) vs. maximum % time in analysis (10%, 25%, 50%, 75%, 90%), with ssh_receive running in the background)

Conclusion & Future Work
Dynamic dataflow sampling gives users a knob to control accuracy vs. performance
- Better methods of choosing samples
- Combine with static information
- New types of sampling analysis

BACKUP SLIDES

Outline
- Software Errors and Security
- Dynamic Dataflow Analysis
- Sampling and Distributed Analysis
- Prototype System
- Performance and Accuracy

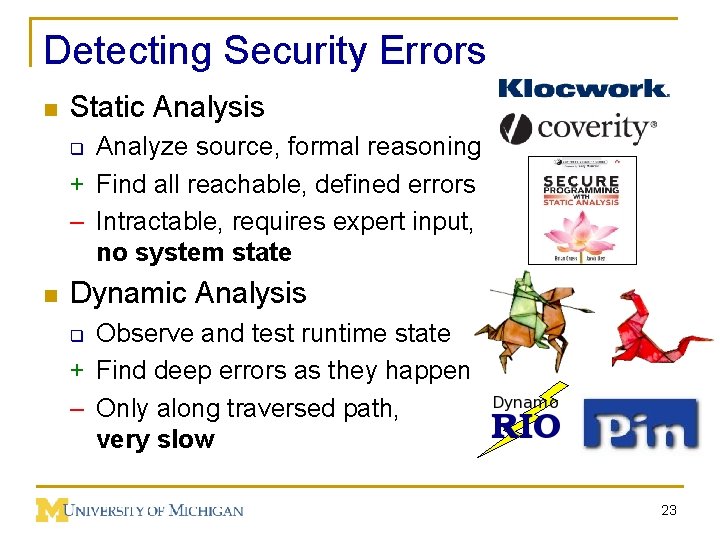

Detecting Security Errors
- Static analysis: analyze source, formal reasoning
  - Pro: find all reachable, defined errors
  - Con: intractable, requires expert input, no system state
- Dynamic analysis: observe and test runtime state
  - Pro: find deep errors as they happen
  - Con: only along traversed path, very slow

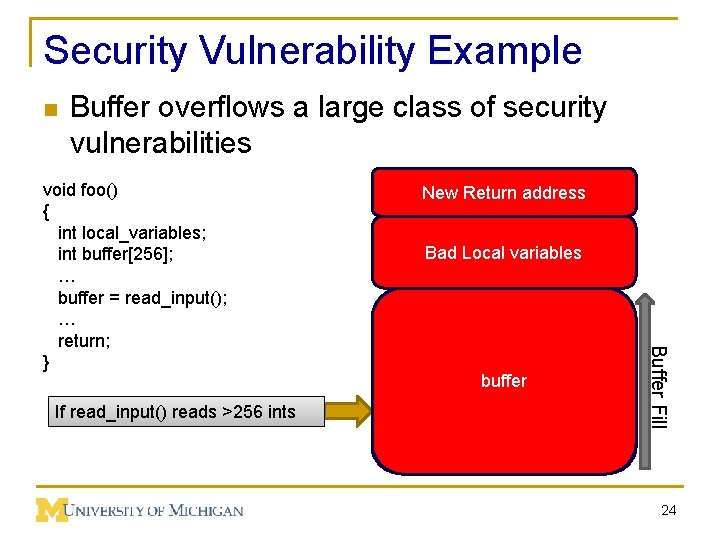

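Security Vulnerability Example
- Buffer overflows are a large class of security vulnerabilities
- If read_input() reads >256 ints, the buffer fill runs past buffer, corrupting the local variables and replacing the return address
(Figure: stack frame with the return address, local variables, and buffer; the buffer fill grows past the end of buffer)
void foo() {
    int local_variables;
    int buffer[256];
    …
    buffer = read_input();
    …
    return;
}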
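The slide's foo() cannot be compiled as written (C arrays are not assignable, and the body is elided), and running a real overflow would be undefined behavior. As a safe illustration, this C sketch simulates the frame as an array of int-sized slots with a taint bit per slot and checks the return address before "returning". The slot layout, `struct frame`, `fill_from_input`, and `return_address_tainted` are hypothetical; real frame layouts depend on the compiler and ABI.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Toy model of foo()'s frame in int-sized slots, low to high addresses:
 * buffer[256], then local_variables, then the return address (assumed). */
enum {
    BUF_LEN = 256,
    SLOT_LOCAL = BUF_LEN,
    SLOT_RET = BUF_LEN + 1,
    FRAME_SLOTS = BUF_LEN + 2
};

struct frame {
    int slot[FRAME_SLOTS];
    bool taint[FRAME_SLOTS];  /* meta-data: does this slot hold input? */
};

/* Simulated unchecked read_input(): writes n input ints starting at
 * buffer[0] and taints every slot it fills. Writes stop at the end of
 * the toy frame so the simulation itself stays in bounds. */
static void fill_from_input(struct frame *f, const int *input, size_t n) {
    assert(f != NULL && (input != NULL || n == 0));
    for (size_t i = 0; i < n && i < FRAME_SLOTS; i++) {
        f->slot[i] = input[i];
        f->taint[i] = true;  /* Associate meta-data with input values */
    }
}

/* Check at `return`: jumping to a tainted return address is an attack. */
static bool return_address_tainted(const struct frame *f) {
    return f->taint[SLOT_RET];
}
```

Filling 200 ints leaves the return-address slot clean; filling 258 taints both the local-variable slot and the return address, so the check at `return` would flag the attack.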

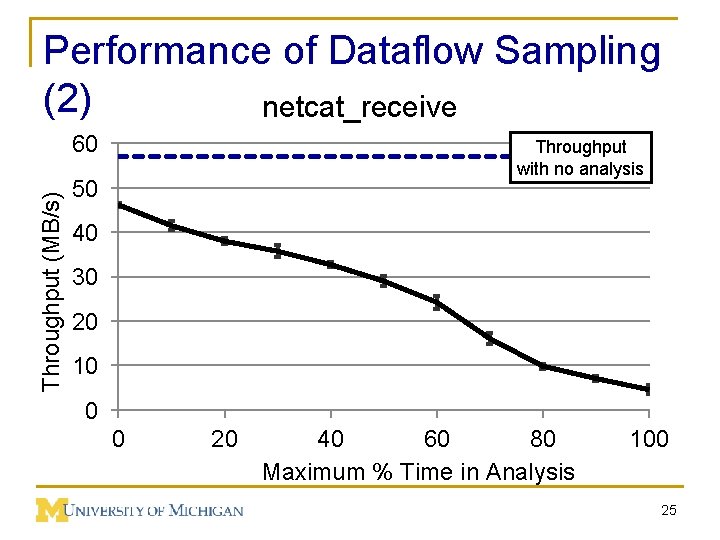

Performance of Dataflow Sampling (2)
(Figure: netcat_receive throughput (MB/s) vs. maximum % time in analysis (0-100), compared with throughput with no analysis)

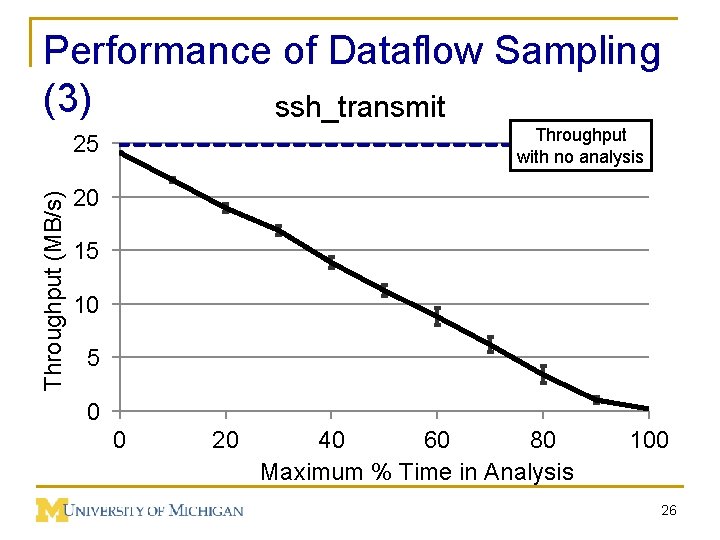

Performance of Dataflow Sampling (3)
(Figure: ssh_transmit throughput (MB/s) vs. maximum % time in analysis (0-100), compared with throughput with no analysis)

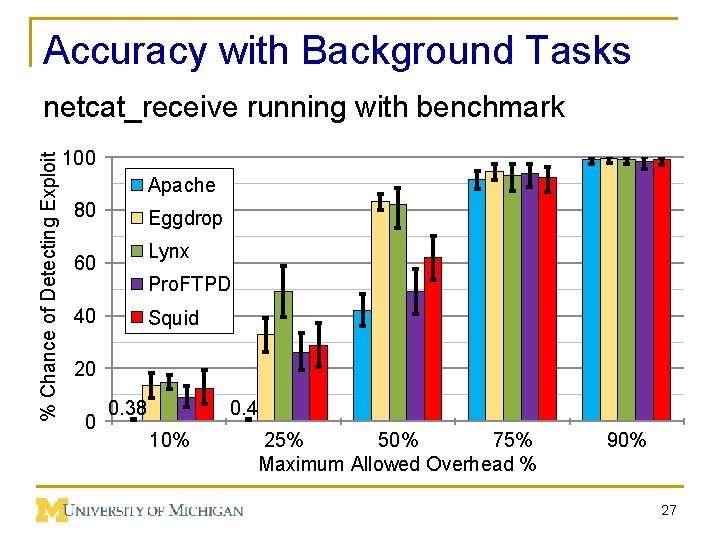

Accuracy with Background Tasks
(Figure: % chance of detecting each exploit (Apache, Eggdrop, Lynx, ProFTPD, Squid) vs. maximum allowed overhead (10%, 25%, 50%, 75%, 90%), with netcat_receive running alongside the benchmark)
