High performance Throughput Les Cottrell SLAC Lecture 5

- Slides: 25

High Performance Throughput. Les Cottrell, SLAC. Lecture #5a, presented at the 26th International Nathiagali Summer College on Physics and Contemporary Needs, 25th June – 14th July, Nathiagali, Pakistan. Partially funded by DOE/MICS Field Work Proposal on Internet End-to-end Performance Monitoring (IEPM), also supported by IUPAP.

How to measure
• Selected about a dozen major collaborator sites in California, Colorado, Illinois, FR, CH, UK over last 9 months
  – Of interest to SLAC
  – Can get logon accounts
• Use iperf
  – Choose window size and # parallel streams
  – Run for 10 seconds together with ping (loaded)
  – Stop iperf, run ping (unloaded) for 10 seconds
  – Change window or number of streams & repeat
• Record streams, window, throughput (Mbits/s), loaded & unloaded ping responses
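The procedure above can be sketched as a small planning script. This is only an illustration, not the tooling used for the measurements: the host name, the list of windows and the stream counts are made-up placeholders, and it assumes an iperf version 2 client (`-c`, `-w`, `-P`, `-t`) and a Unix-style `ping -c`. The script only builds the command lines; it does not run them.

```python
from itertools import product


def iperf_command(host, window, streams, seconds=10):
    """Build an iperf (version 2) client command for one measurement."""
    return ["iperf", "-c", host, "-w", window, "-P", str(streams), "-t", str(seconds)]


def ping_command(host, count=10):
    """Build a ping command sending `count` probes (one per second by default)."""
    return ["ping", "-c", str(count), host]


def measurement_plan(host, windows, stream_counts):
    """One entry per (window, streams) setting, in the order described on the slide."""
    plan = []
    for window, streams in product(windows, stream_counts):
        plan.append({
            "window": window,
            "streams": streams,
            "iperf": iperf_command(host, window, streams),
            "ping_loaded": ping_command(host),    # run while iperf is running
            "ping_unloaded": ping_command(host),  # run after iperf has stopped
        })
    return plan


if __name__ == "__main__":
    # "remote.example.org" and the settings below are placeholders.
    for step in measurement_plan("remote.example.org", ["8K", "64K", "1M"], [1, 2, 4, 8]):
        print(step["window"], step["streams"], " ".join(step["iperf"]))
```

Keeping the plan separate from execution makes it easy to record streams, window, throughput and both ping results against the same entry.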

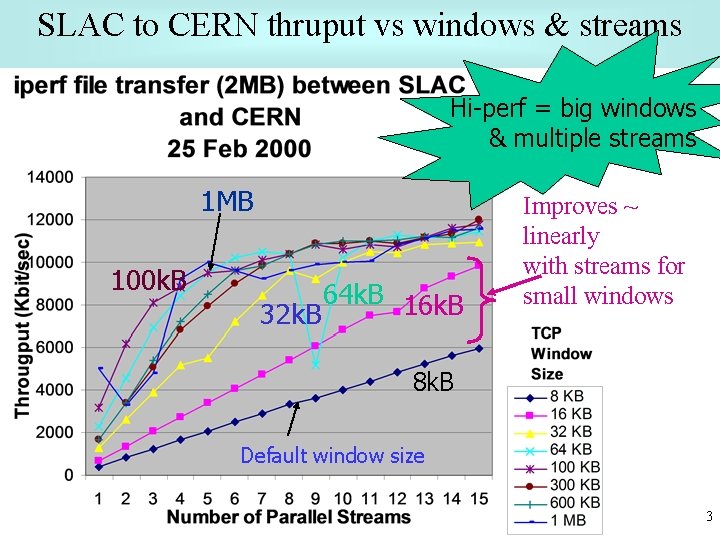

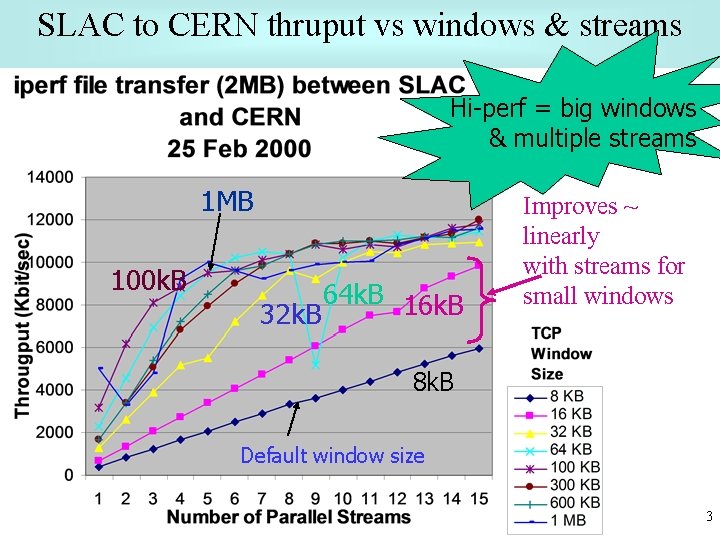

SLAC to CERN thruput vs windows & streams
• Hi-perf = big windows & multiple streams
• Improves ~ linearly with streams for small windows
• Window sizes plotted: 8 kB (default window size), 16 kB, 32 kB, 64 kB, 100 kB, 1 MB

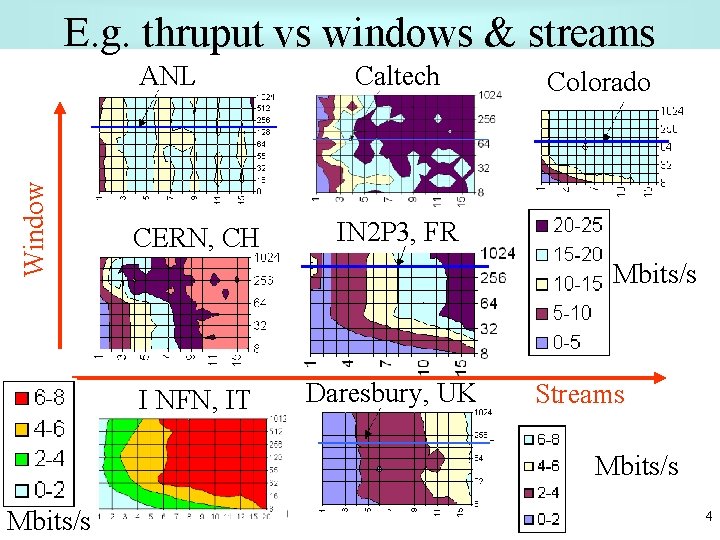

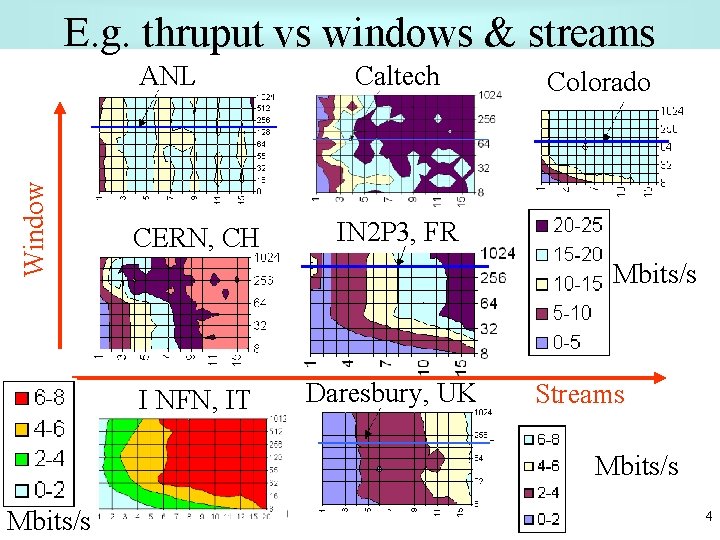

E.g. thruput vs windows & streams
• Sites shown: ANL; CERN, CH; Caltech; Colorado; IN2P3, FR; INFN, IT; Daresbury, UK
• Axes: window, streams, throughput (Mbits/s)

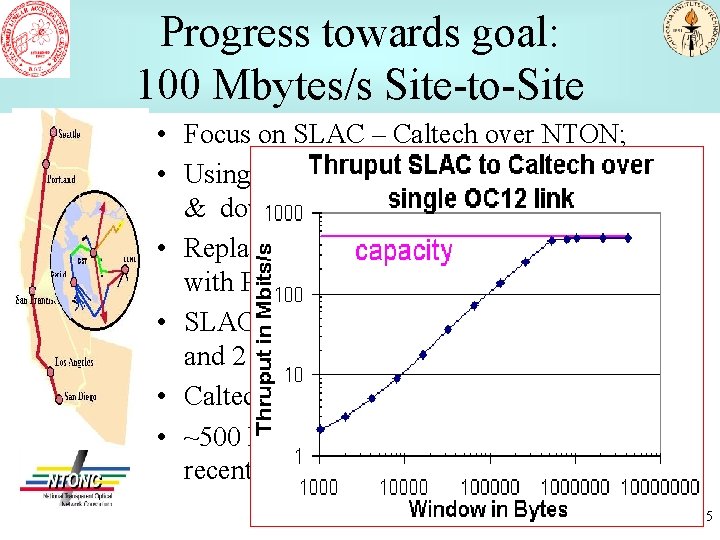

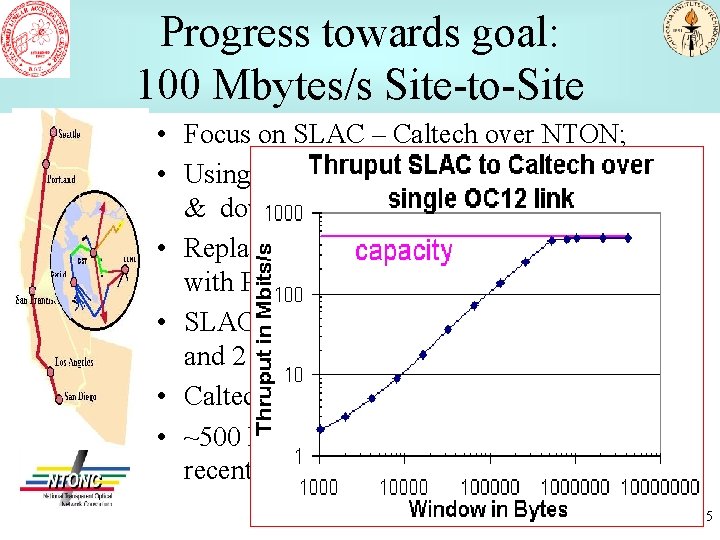

Progress towards goal: 100 Mbytes/s Site-to-Site
• Focus on SLAC – Caltech over NTON;
• Using NTON wavelength division fibers up & down W. Coast US;
• Replaced Exemplar with 8*OC3 & Suns with Pentium IIIs & OC12 (622 Mbps)
• SLAC Cisco 12000 with OC48 (2.4 Gbps) and 2 × OC12;
• Caltech Juniper M160 & OC48
• ~500 Mbits/s single stream achieved recently over OC12.

SC2000 WAN Challenge
• SC2000, Dallas to SLAC RTT ~ 48 msec
  – SLAC/FNAL booth: Dell PowerEdge PIII 2 * 550 MHz with 64 bit PCI + Dell 850 MHz both running Linux, each with GigE, connected to Cat 6009 with 2 GigE bonded to Extreme SC2000 floor switch
  – NTON: OC48 to GSR to Cat 5500 GigE to Sun E4500 4*460 MHz and Sun E4500 6*336 MHz
• Internet 2: 300 Mbits/s
• NTON 960 Mbits/s
• Details:
  – www-iepm.slac.stanford.edu/monitoring/bulk/sc2k.html
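As a rough check on what these rates imply, the sketch below multiplies each reported throughput by the ~48 msec RTT to estimate how much data must be in flight (summed over all streams) to sustain it. This is the standard bandwidth*delay product; the function name is an illustrative choice, and the result says nothing about how the windows were actually configured at SC2000.

```python
def bandwidth_delay_product_bytes(rate_bits_per_s, rtt_s):
    """Bytes that must be in flight to sustain `rate_bits_per_s` over a path with `rtt_s`."""
    return rate_bits_per_s * rtt_s / 8


if __name__ == "__main__":
    rtt_s = 0.048  # Dallas to SLAC RTT from the slide
    for path, mbits_per_s in [("Internet 2", 300), ("NTON", 960)]:
        in_flight = bandwidth_delay_product_bytes(mbits_per_s * 1e6, rtt_s)
        print(f"{path}: {in_flight / 1e6:.2f} MB in flight")
```

For the two paths this works out to about 1.8 MB and 5.8 MB respectively, far above a default window of a few kB.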

Iperf throughput conclusions 1/2
• Can saturate bottleneck links
• For a given iperf measurement, streams share throughput equally.
• For small window sizes throughput increases linearly with number of streams
• Predicted optimum window sizes can be large (> Mbyte)
• Need > 1 stream to get optimum performance
• Can get close to max thruput with small (<=32 Mbyte) windows given sufficient (5-10) streams
• Improvements by a factor of 5 to 60 in thruput by using multiple streams & larger windows
• Loss not sensitive to throughput
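The linear growth with streams for small windows follows from a simple window-limited model: each stream can send at most one window per round trip, so the aggregate is streams * window / RTT until the bottleneck is reached. The sketch below encodes only that simplification (no loss, no congestion control dynamics); the RTT and bottleneck values in the demo are invented for illustration and are not measurements from this talk.

```python
def window_limited_mbps(window_bytes, rtt_s, streams, bottleneck_mbps):
    """Aggregate throughput if every stream sends one full window per RTT,
    capped at the bottleneck link rate."""
    per_stream_mbps = window_bytes * 8 / rtt_s / 1e6
    return min(streams * per_stream_mbps, bottleneck_mbps)


if __name__ == "__main__":
    rtt_s = 0.15            # hypothetical transatlantic-scale RTT
    bottleneck_mbps = 30.0  # hypothetical bottleneck capacity
    for window_kb in (8, 64, 1024):
        row = [
            round(window_limited_mbps(window_kb * 1024, rtt_s, n, bottleneck_mbps), 2)
            for n in (1, 2, 5, 10, 20)
        ]
        print(f"{window_kb:5d} kB window:", row)
```

In this model small windows scale with the stream count over the whole range, while large windows hit the cap with one or two streams, which matches the qualitative behaviour described above.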

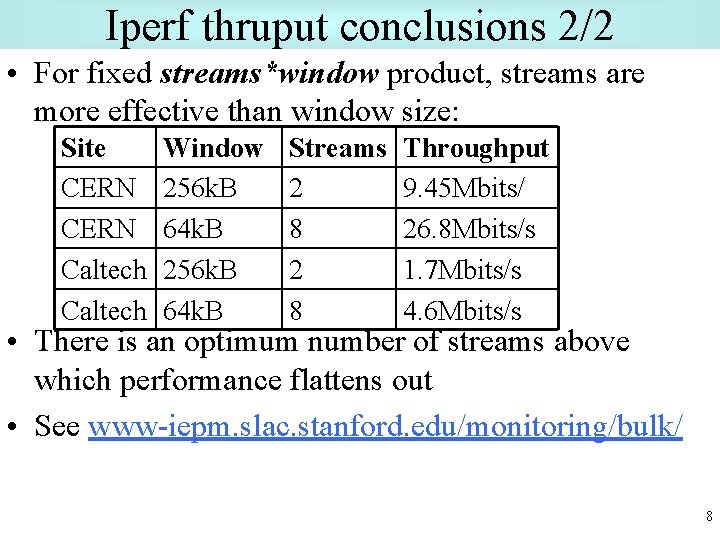

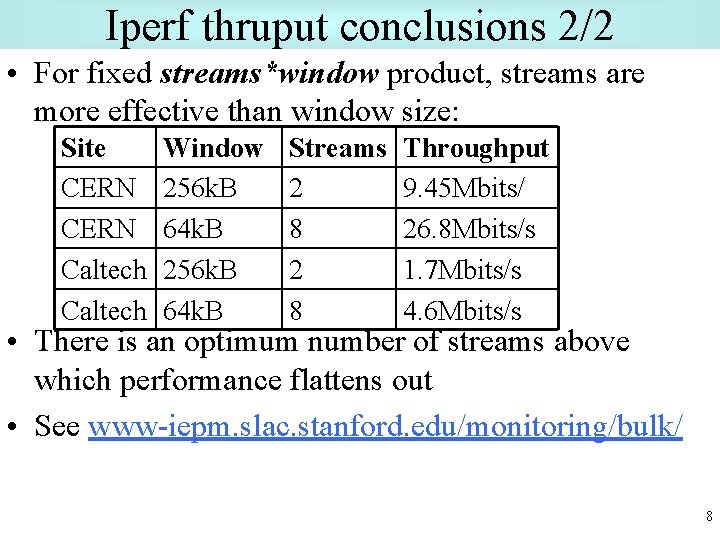

Iperf thruput conclusions 2/2
• For fixed streams*window product, streams are more effective than window size:

| Site | Window | Streams | Throughput |
|---|---|---|---|
| CERN | 256 kB | 2 | 9.45 Mbits/s |
| CERN | 64 kB | 8 | 26.8 Mbits/s |
| Caltech | 256 kB | 2 | 1.7 Mbits/s |
| Caltech | 64 kB | 8 | 4.6 Mbits/s |

• There is an optimum number of streams above which performance flattens out
• See www-iepm.slac.stanford.edu/monitoring/bulk/

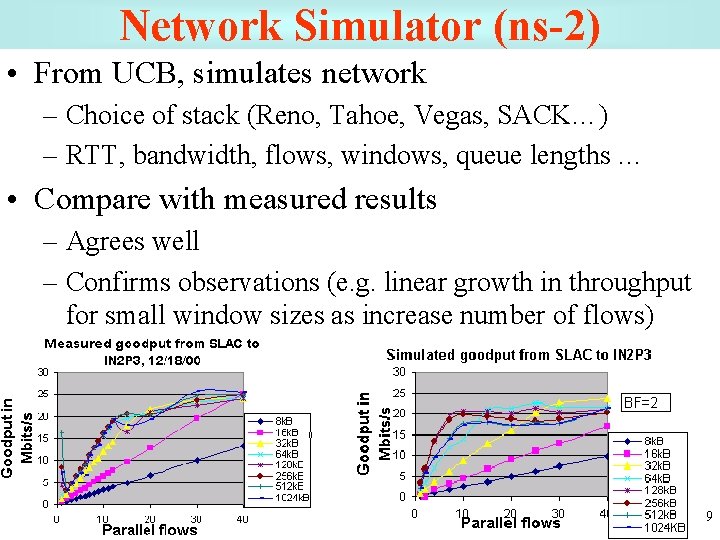

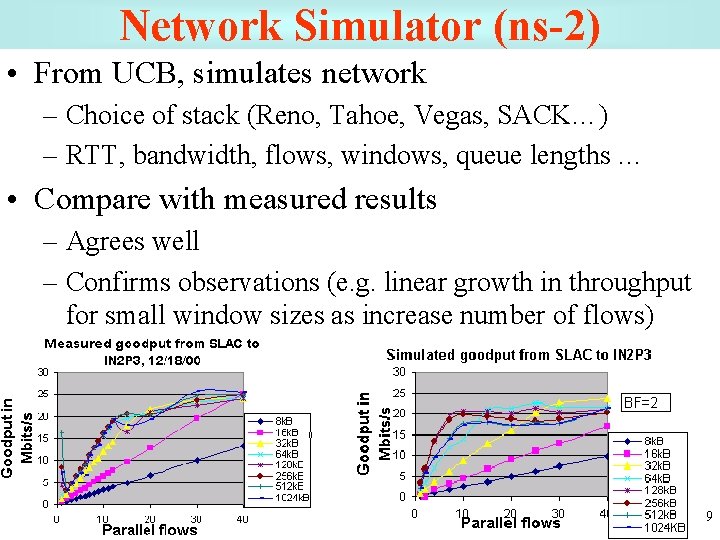

Network Simulator (ns-2)
• From UCB, simulates network
  – Choice of stack (Reno, Tahoe, Vegas, SACK…)
  – RTT, bandwidth, flows, windows, queue lengths …
• Compare with measured results
  – Agrees well
  – Confirms observations (e.g. linear growth in throughput for small window sizes as the number of flows increases)

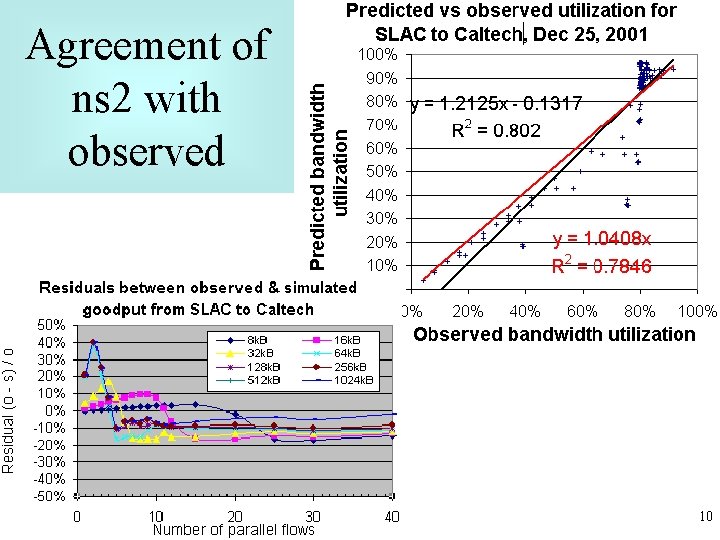

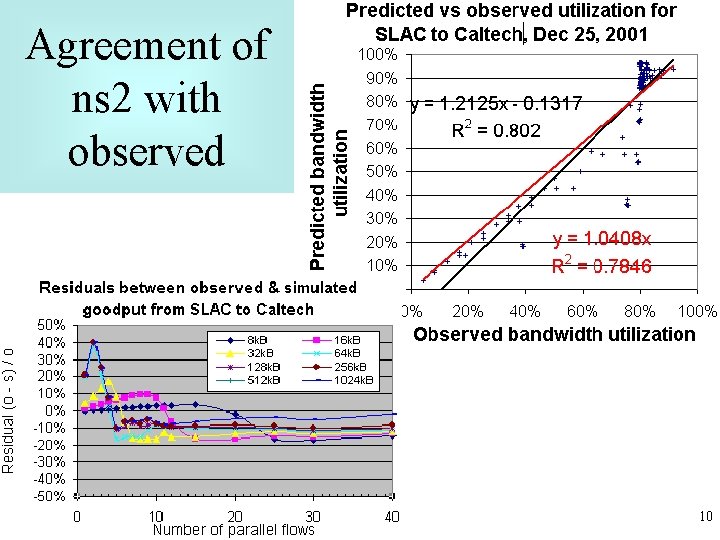

Agreement of ns-2 with observed

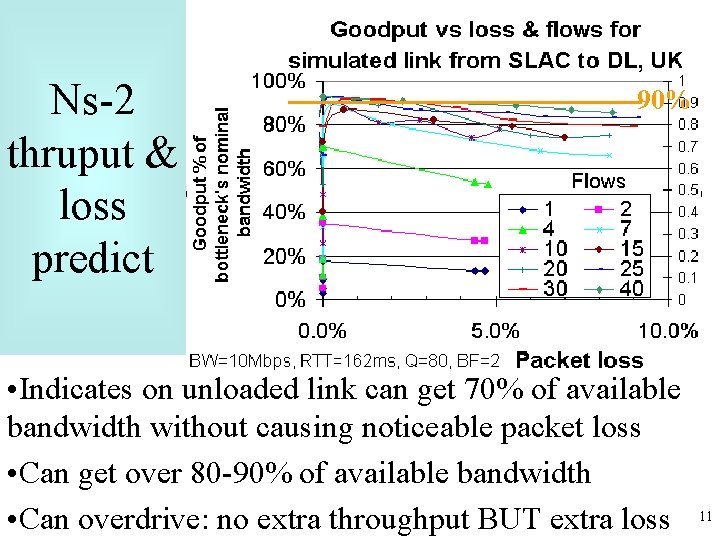

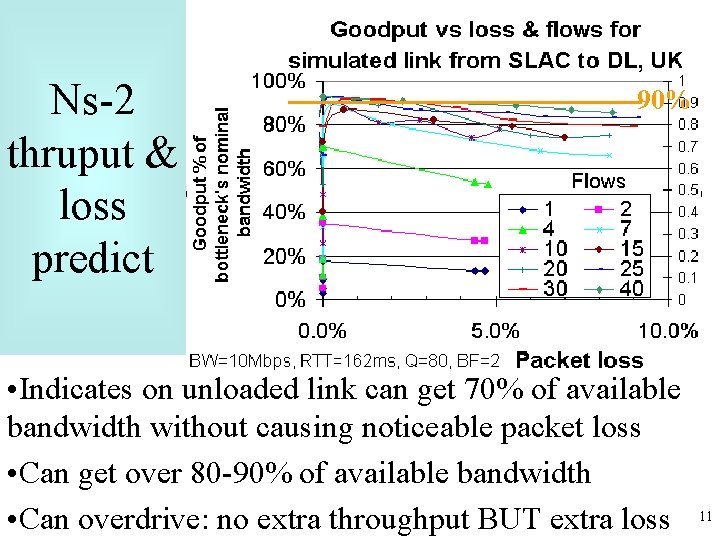

Ns-2 thruput & loss predict (figure annotation: 90%)
• Indicates that on an unloaded link one can get 70% of available bandwidth without causing noticeable packet loss
• Can get over 80-90% of available bandwidth
• Can overdrive: no extra throughput BUT extra loss

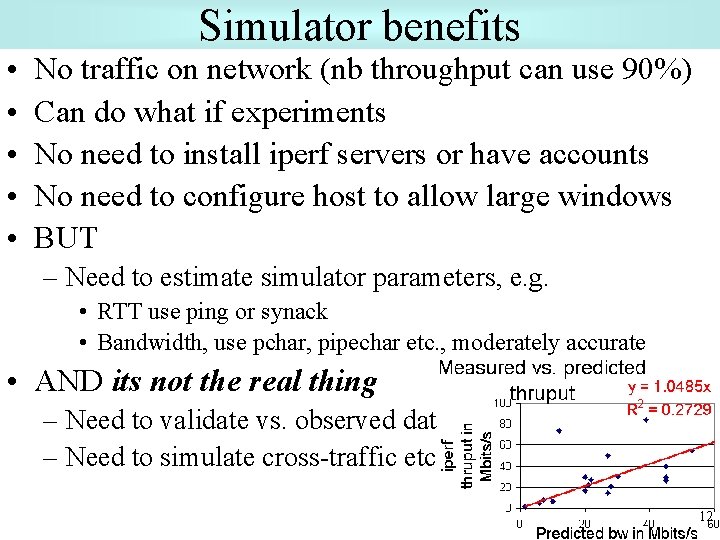

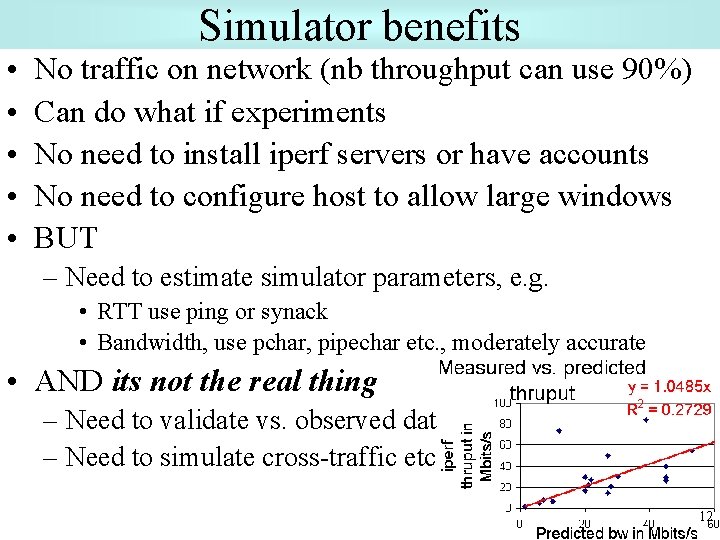

Simulator benefits
• No traffic on network (nb throughput can use 90%)
• Can do what-if experiments
• No need to install iperf servers or have accounts
• No need to configure host to allow large windows
• BUT
  – Need to estimate simulator parameters, e.g.
    • RTT: use ping or synack
    • Bandwidth: use pchar, pipechar etc., moderately accurate
• AND it's not the real thing
  – Need to validate vs. observed data
  – Need to simulate cross-traffic etc.

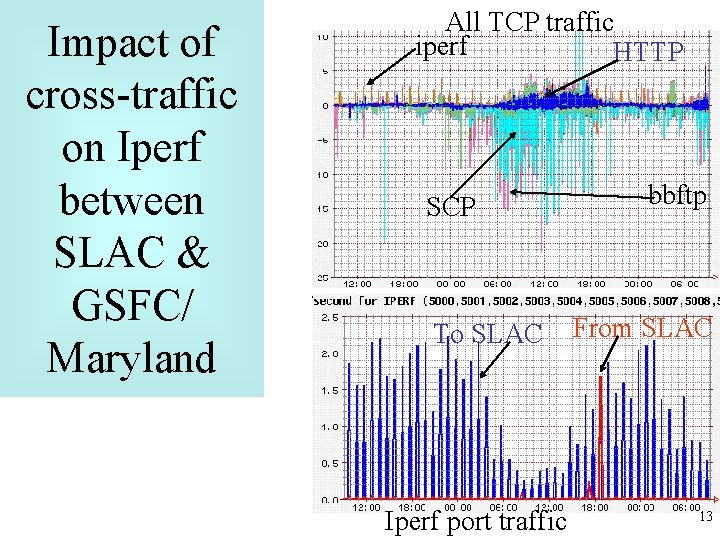

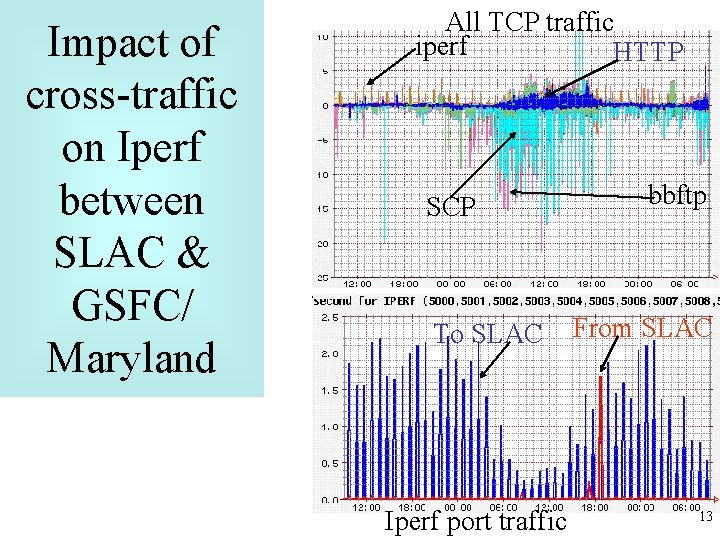

Impact of cross-traffic on Iperf between SLAC & GSFC/Maryland
• Panels: To SLAC; From SLAC
• Traffic shown: all TCP traffic, iperf (iperf port traffic), HTTP, SCP, bbftp

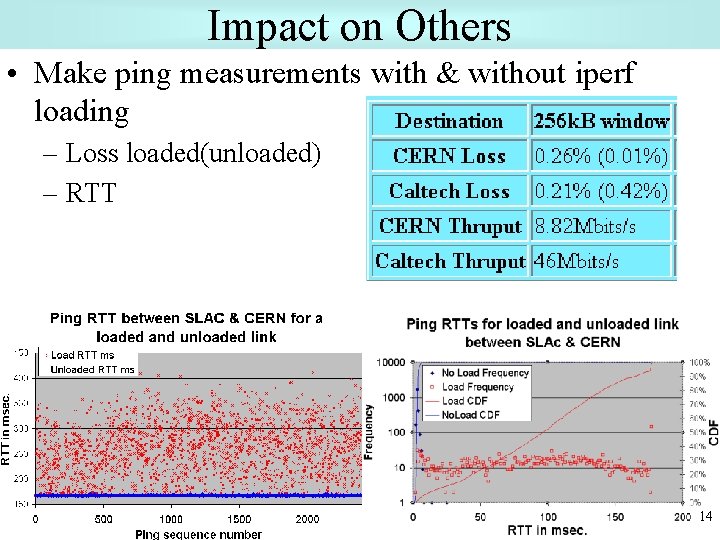

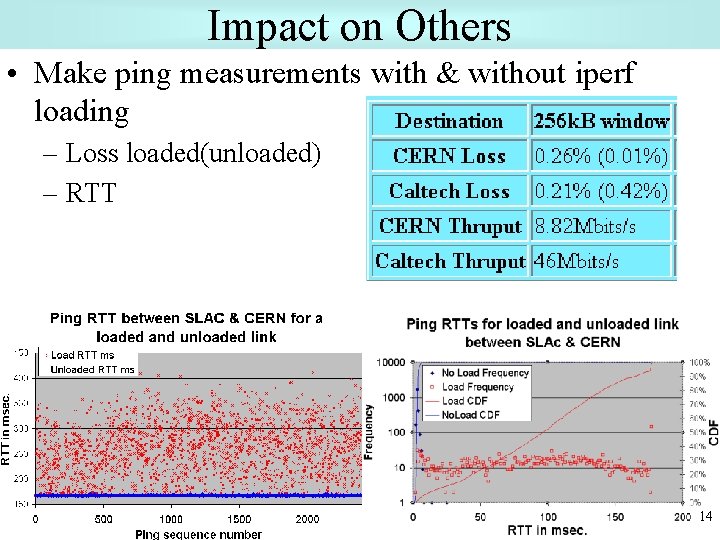

Impact on Others
• Make ping measurements with & without iperf loading
  – Loss loaded (unloaded)
  – RTT

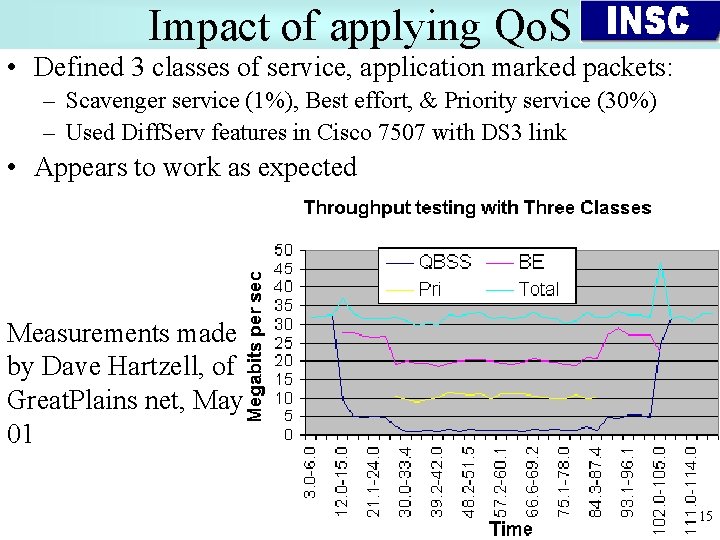

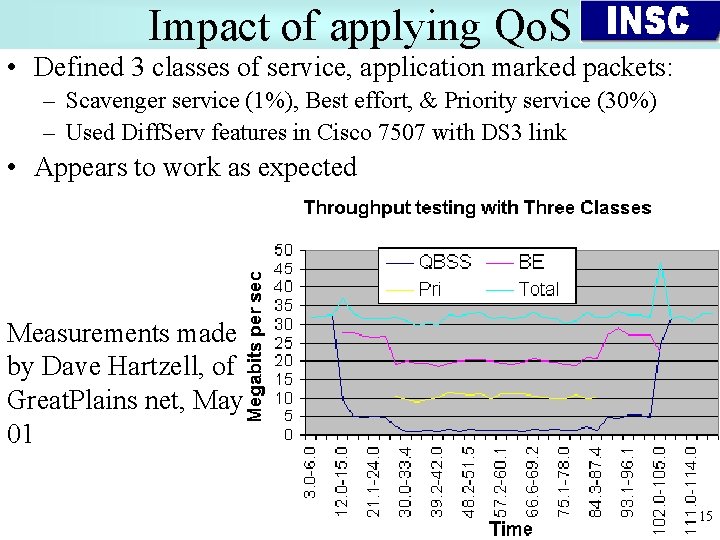

Impact of applying QoS
• Defined 3 classes of service, application marked packets:
  – Scavenger service (1%), Best effort, & Priority service (30%)
  – Used DiffServ features in Cisco 7507 with DS3 link
• Appears to work as expected
Measurements made by Dave Hartzell, of GreatPlains net, May 01
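One way an application can mark its own packets is to set the DiffServ code point on its socket. The sketch below is illustrative only: the class-to-DSCP mapping (CS1 = 8 for scavenger, 0 for best effort, EF = 46 for priority) is an assumption based on common conventions, not the values used in these measurements, and `socket.IP_TOS` is not available on every platform.

```python
import socket

# Assumed, illustrative mapping of the three service classes to DSCP values.
CLASS_DSCP = {"scavenger": 8, "best_effort": 0, "priority": 46}


def dscp_to_tos(dscp):
    """Place a 6-bit DSCP value in the upper six bits of the IP TOS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0..63")
    return dscp << 2


def mark_socket(sock, service_class):
    """Ask the OS to mark outgoing packets on `sock` with the class's DSCP."""
    tos = dscp_to_tos(CLASS_DSCP[service_class])
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, tos)
    return tos


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        tos = mark_socket(sock, "scavenger")
        print("requested TOS byte:", hex(tos))
```

Whether routers honour the marking depends entirely on how the network's DiffServ policy is configured.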

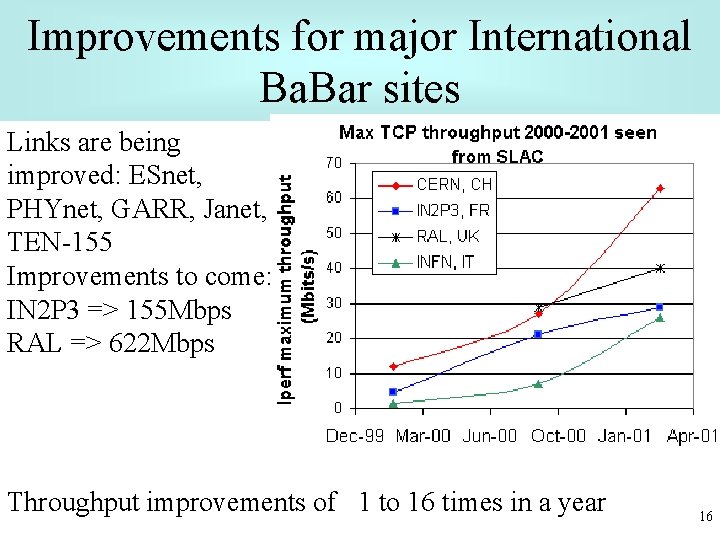

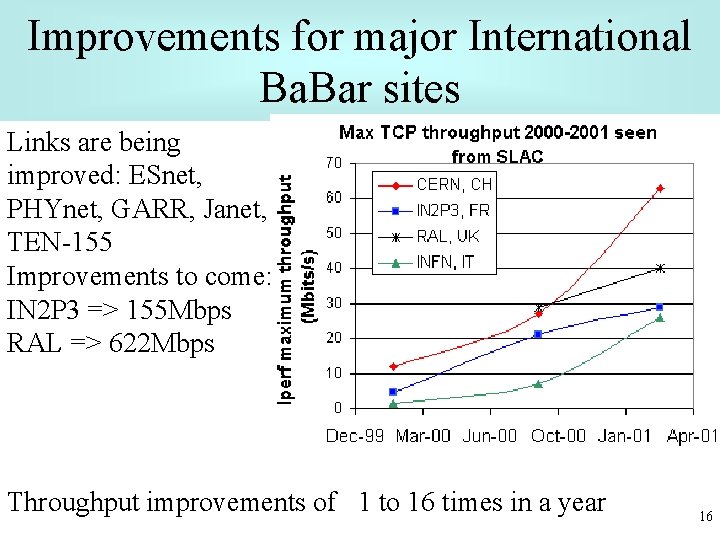

Improvements for major International BaBar sites
• Links are being improved: ESnet, PHYnet, GARR, Janet, TEN-155
• Improvements to come:
  – IN2P3 => 155 Mbps
  – RAL => 622 Mbps
• Throughput improvements of 1 to 16 times in a year

Gigabit/second networking CERN
• The start of a new era:
  – Very rapid progress towards 10 Gbps networking in both the Local (LAN) and Wide area (WAN) networking environments is being made.
  – 40 Gbps is in sight on WANs, but what after?
  – The success of the LHC computing Grid critically depends on the availability of Gbps links between CERN and LHC regional centers.
• What does it mean?
  – In theory:
    • 1 GB file transferred in 11 seconds over a 1 Gbps circuit (*)
    • 1 TB file transfer would still require 3 hours
    • and 1 PB file transfer would require 4 months
  – In practice:
    • major transmission protocol issues will need to be addressed
(*) according to the 75% empirical rule
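The "in theory" figures can be reproduced with a few lines of arithmetic, assuming decimal units (1 GB = 10^9 bytes) and reading the 75% empirical rule as 75% of the 1 Gbps line rate being usable. Both of those readings are assumptions made for this sketch.

```python
def transfer_time_s(size_bytes, link_bits_per_s, efficiency=0.75):
    """Seconds to move `size_bytes` when only `efficiency` of the link rate is usable."""
    return size_bytes * 8 / (link_bits_per_s * efficiency)


if __name__ == "__main__":
    link = 1e9  # 1 Gbps circuit
    for label, size in [("1 GB", 1e9), ("1 TB", 1e12), ("1 PB", 1e15)]:
        seconds = transfer_time_s(size, link)
        print(f"{label}: {seconds:,.0f} s = {seconds / 3600:,.1f} h = {seconds / 86400:,.1f} days")
```

This gives roughly 11 seconds, 3 hours and 123 days (about 4 months), in line with the slide.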

CERN Very high speed file transfer (1)
  – High performance switched LAN assumed:
    • requires time & money.
  – High performance WAN also assumed:
    • also requires money but is becoming possible.
    • very careful engineering mandatory.
  – Will remain very problematic especially over high bandwidth*delay paths:
    • Might force the use of Jumbo Frames because of interactions between TCP/IP and link error rates.
  – Could possibly conflict with strong security requirements

CERN Very high speed file transfer (2)
• Following formula proposed by Matt Mathis/PSC (“The Macroscopic Behavior of the TCP Congestion Avoidance Algorithm”) to approximate the maximum TCP throughput under periodic packet loss: (MSS/RTT)*(1/sqrt(p))
  • where MSS is the maximum segment size, 1460 bytes in practice, and “p” is the packet loss rate.
• Are TCP's "congestion avoidance" algorithms compatible with high speed, long distance networks?
  – The "cut transmit rate in half on single packet loss and then increase the rate additively (1 MSS per RTT)" algorithm may simply not work.
  – New TCP/IP adaptations may be needed in order to better cope with “lfn” (long fat networks), e.g. TCP Vegas
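To get a feel for the formula, the sketch below evaluates it exactly as written on the slide, (MSS/RTT)*(1/sqrt(p)), and also inverts it to find the loss rate a single stream could tolerate at a target rate. The 100 ms RTT and the loss rates are hypothetical inputs chosen for illustration, not measurements.

```python
from math import sqrt


def mathis_throughput_bps(mss_bytes, rtt_s, loss_rate):
    """Approximate maximum TCP throughput in bits/s: (MSS/RTT) * (1/sqrt(p))."""
    return (mss_bytes * 8 / rtt_s) / sqrt(loss_rate)


def loss_rate_for_target(mss_bytes, rtt_s, target_bps):
    """Invert the formula: largest loss rate that still allows `target_bps`."""
    return (mss_bytes * 8 / (rtt_s * target_bps)) ** 2


if __name__ == "__main__":
    mss, rtt = 1460, 0.100  # MSS from the slide; RTT is a hypothetical long path
    for p in (1e-2, 1e-4, 1e-6):
        print(f"p = {p:g}: {mathis_throughput_bps(mss, rtt, p) / 1e6:.2f} Mbits/s")
    p_needed = loss_rate_for_target(mss, rtt, 1e9)
    print(f"loss rate needed for 1 Gbps on one stream: {p_needed:.2e}")
```

With these inputs a loss rate of 1 in 10,000 limits a single stream to about 11.7 Mbits/s, which illustrates why long, fast paths are so sensitive to link error rates.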

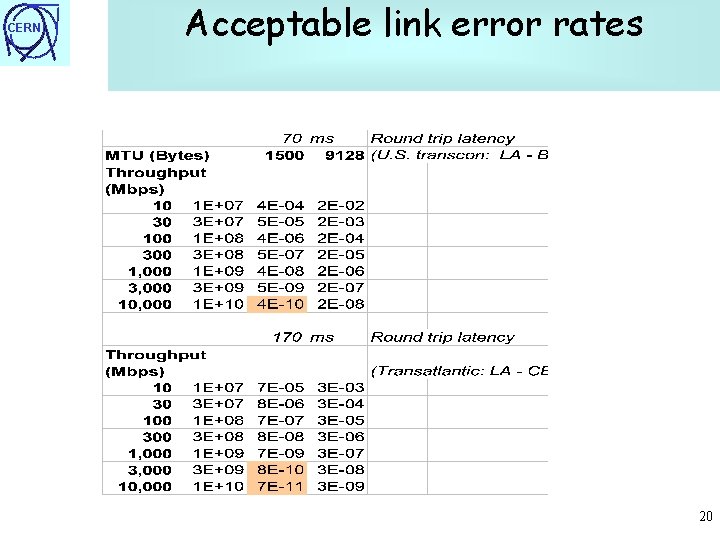

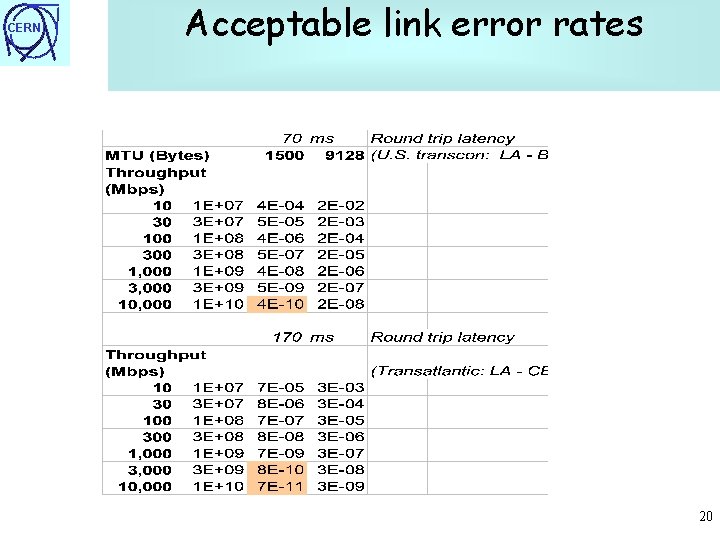

CERN Acceptable link error rates

CERN Very high speed file transfer (tentative conclusions)
• TCP/IP fairness only exists between similar flows, i.e.
  • similar duration,
  • similar RTTs.
• TCP/IP congestion avoidance algorithms need to be revisited (e.g. Vegas rather than Reno/NewReno)
  – faster recovery after loss, selective acknowledgment.
• Current ways of circumventing the problem, e.g.
  – Multi-stream & parallel socket
    • just bandages or the practical solution to the problem?
• Web100, a 3 MUSD NSF project, might help enormously!
  • better TCP/IP instrumentation (MIB), will allow read/write to internal TCP parameters
  • self-tuning
  • tools for measuring performance
  • improved FTP implementation
  • applications can tune stack
• Non-TCP/IP based transport solution, use of Forward Error Corrections (FEC), Explicit Congestion Notification (ECN) rather than active queue management techniques (RED/WRED)?

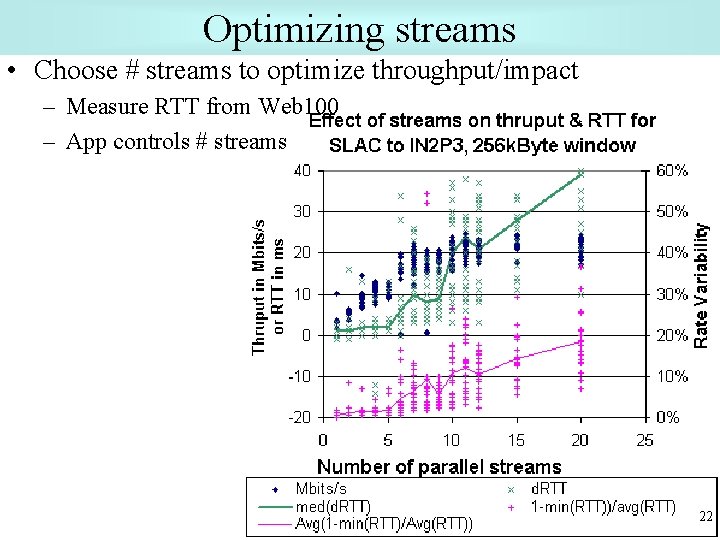

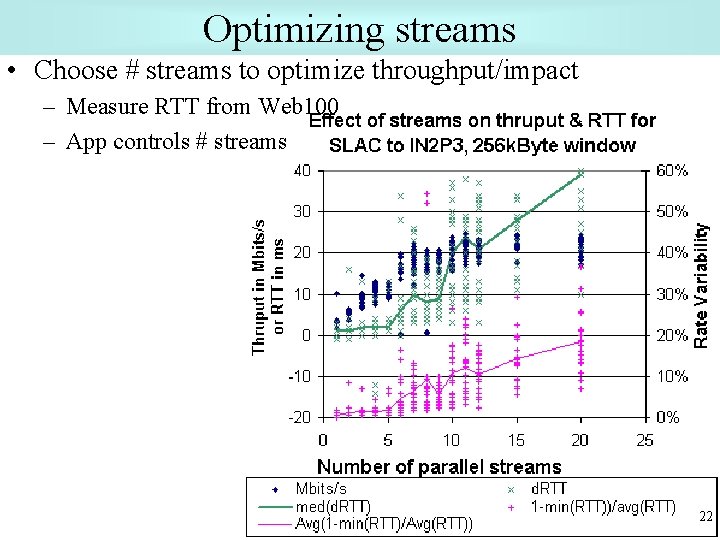

Optimizing streams
• Choose # streams to optimize throughput/impact
  – Measure RTT from Web100
  – App controls # streams

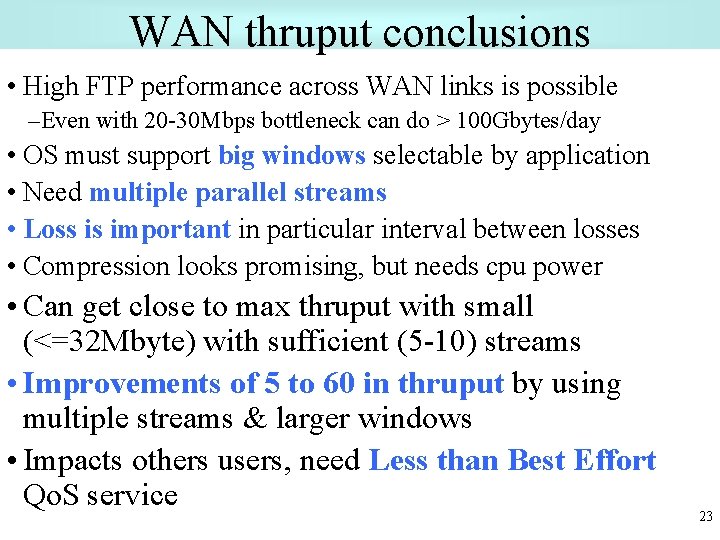

WAN thruput conclusions
• High FTP performance across WAN links is possible
  – Even with 20-30 Mbps bottleneck can do > 100 Gbytes/day
• OS must support big windows selectable by application
• Need multiple parallel streams
• Loss is important, in particular the interval between losses
• Compression looks promising, but needs cpu power
• Can get close to max thruput with small (<=32 Mbyte) windows given sufficient (5-10) streams
• Improvements by a factor of 5 to 60 in thruput by using multiple streams & larger windows
• Impacts other users, need Less than Best Effort QoS service
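"Big windows selectable by application" usually means the application requests larger socket buffers and the OS decides how much to grant. A minimal sketch using the standard socket options follows; the 1 MiB request is an arbitrary example, and the granted size depends on operating system limits (some systems cap it or report a different value from the one requested).

```python
import socket


def request_window(sock, size_bytes):
    """Request send/receive buffers of `size_bytes` and return what the OS reports."""
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, size_bytes)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, size_bytes)
    granted_send = sock.getsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF)
    granted_recv = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
    return granted_send, granted_recv


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        # Set the buffers before connecting so they can influence the TCP window.
        send_buf, recv_buf = request_window(sock, 1 << 20)
        print("send buffer:", send_buf, "receive buffer:", recv_buf)
```

Comparing the reported sizes with the request is a quick way to spot a host whose limits need raising before a transfer.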

More Information
• This talk:
  – www.slac.stanford.edu/grp/scs/net/talk/slac-wan-perf-apr01.htm
• IEPM/PingER home site
  – www-iepm.slac.stanford.edu/
• Transfer tools:
  – http://hepwww.rl.ac.uk/Adye/talks/010402-ftp/html/sld015.htm
• TCP Tuning:
  – www.ncne.nlanr.net/training/presentations/tcp-tutorial.ppt

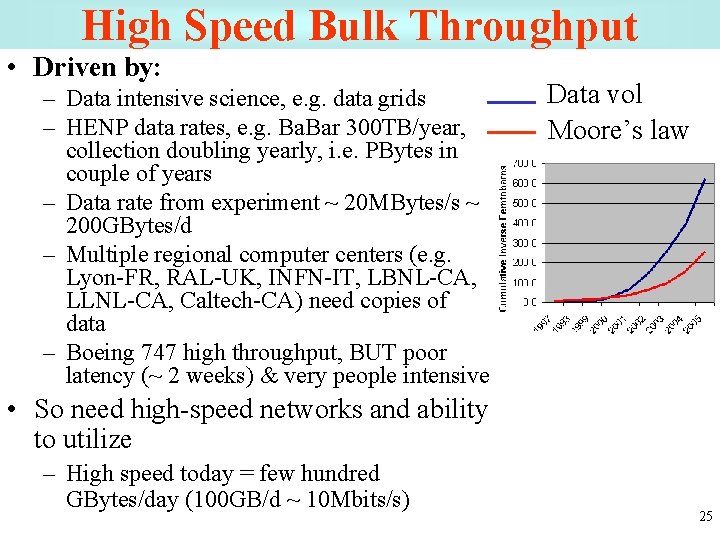

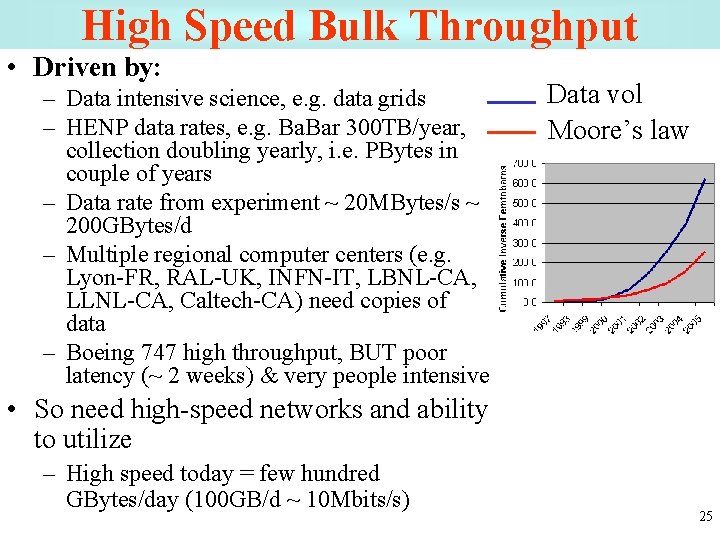

High Speed Bulk Throughput
• Driven by:
  – Data intensive science, e.g. data grids
  – HENP data rates, e.g. BaBar 300 TB/year, collection doubling yearly, i.e. PBytes in a couple of years
  – Data rate from experiment ~ 20 MBytes/s ~ 200 GBytes/d
  – Multiple regional computer centers (e.g. Lyon-FR, RAL-UK, INFN-IT, LBNL-CA, LLNL-CA, Caltech-CA) need copies of data
  – Boeing 747 high throughput, BUT poor latency (~ 2 weeks) & very people intensive
  – (Figure: data volume vs. Moore’s law)
• So need high-speed networks and ability to utilize
  – High speed today = few hundred GBytes/day (100 GB/d ~ 10 Mbits/s)
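The rule of thumb "100 GB/d ~ 10 Mbits/s" is a unit conversion over the 86,400 seconds in a day. The helper names below are illustrative, and decimal units (1 GB = 10^9 bytes) are assumed.

```python
SECONDS_PER_DAY = 86_400


def gbytes_per_day_to_mbits_per_s(gbytes_per_day):
    """Sustained rate in Mbits/s needed to move `gbytes_per_day` in 24 hours."""
    return gbytes_per_day * 1e9 * 8 / SECONDS_PER_DAY / 1e6


def mbits_per_s_to_gbytes_per_day(mbits_per_s):
    """Volume in GBytes moved in 24 hours at a sustained `mbits_per_s`."""
    return mbits_per_s * 1e6 / 8 * SECONDS_PER_DAY / 1e9


if __name__ == "__main__":
    print(f"100 GB/day needs {gbytes_per_day_to_mbits_per_s(100):.2f} Mbits/s sustained")
    print(f"20 Mbits/s sustained moves {mbits_per_s_to_gbytes_per_day(20):.0f} GB/day")
```

So 100 GB/day corresponds to about 9.3 Mbits/s sustained, and a fully used 20 Mbits/s link would carry about 216 GB/day.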