High Performance Presentation: 5 slides/minute? (65 slides / 15 minutes)
IO and DB “stuff” for LSST
A new world record?
Jim Gray, Microsoft Research

TerraServer Lessons Learned
• Hardware is 5 9’s (with clustering)
• Software is 5 9’s (with clustering)
• Admin is 4 9’s (offline maintenance)
• Network is 3 9’s (mistakes, environment)
• Simple designs are best
• 10 TB DB is the management limit: 1 PB = 100 x 10 TB DB. This is 100x better than 5 years ago. (Yahoo! and Hotmail are 300 TB, Google is 2 PB)
• Minimize use of tape
  – Backup to disk (snapshots)
  – Portable disk TBs
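To make the "nines" concrete, here is a minimal sketch that converts a number of nines into expected downtime per year. The function name and the 365-day year are assumptions for illustration, not part of the talk.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored (assumption)

def downtime_minutes_per_year(nines: int) -> float:
    """Expected unavailable minutes per year at the given number of nines."""
    unavailability = 10.0 ** -nines  # e.g. 3 nines -> 0.001
    return MINUTES_PER_YEAR * unavailability

# The four layers listed on the slide.
for layer, nines in [("hardware", 5), ("software", 5), ("admin", 4), ("network", 3)]:
    minutes = downtime_minutes_per_year(nines)
    print(f"{layer:8s} {nines} nines -> {minutes:7.1f} min/year")
```

Under these assumptions, 5 nines is about five minutes of downtime a year, while 3 nines is closer to nine hours, which is why the network dominates.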

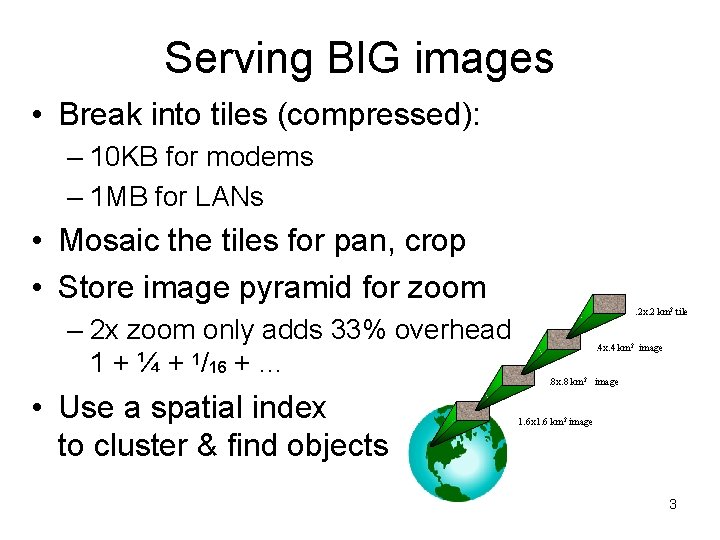

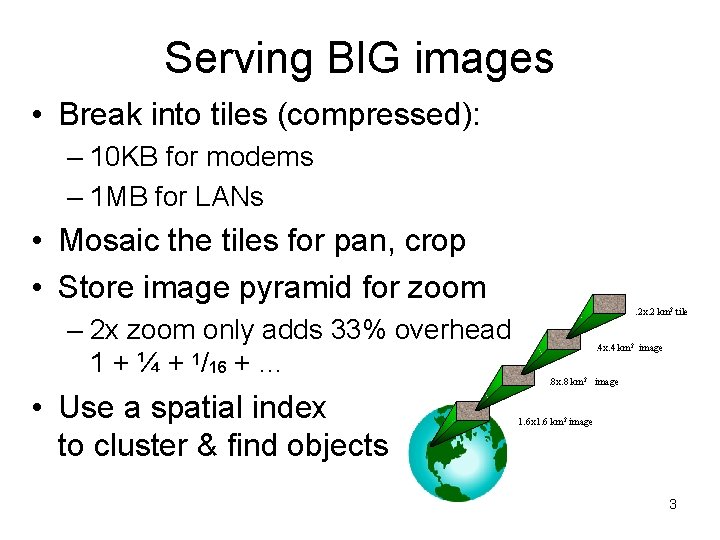

Serving BIG images
• Break into tiles (compressed):
  – 10 KB for modems
  – 1 MB for LANs
• Mosaic the tiles for pan, crop
• Store image pyramid for zoom
  – 2x zoom only adds 33% overhead: 1 + 1/4 + 1/16 + …
• Use a spatial index to cluster & find objects
[Figure labels: 0.2 x 0.2 km² tile; 0.4 x 0.4 km² image; 0.8 x 0.8 km² image; 1.6 x 1.6 km² image]
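The 33% figure is the sum of the geometric series 1/4 + 1/16 + …, since each 2x zoom-out level holds a quarter of the pixels of the level below. A minimal sketch (the function name is hypothetical):

```python
def pyramid_overhead(levels: int) -> float:
    """Extra storage, as a fraction of the base image, for `levels` coarser 2x levels."""
    # Level k has (1/4)**k of the base image's pixels.
    return sum(0.25 ** k for k in range(1, levels + 1))

for levels in (1, 2, 4, 8):
    print(f"{levels} extra levels -> {pyramid_overhead(levels):.4f} of base size")
```

The overhead converges to 1/3 no matter how many levels are stored.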

Economics
• People are more than 50% of costs
• Disks are more than 50% of capital
• Networking is the other 50%
  – People
  – Phone bill
  – Routers
• Cpus are free (they come with the disks)

SkyServer / SkyQuery Lessons
• DB is easy
• Search
  – It is BEST to index
  – You can put objects and attributes in a row (SQL puts big blobs off-page)
  – If you can’t index, you can extract attributes and quickly compare
  – SQL can scan at 5 M records/cpu/second
  – Sequential scans are embarrassingly parallel
• Web services are easy
• XML DataSets:
  – a universal way to represent answers
  – minimize round trips: 1 request/response
  – Diffgrams allow disconnected update

How Will We Find Stuff? Put everything in the DB (and index it)
• Need dbms features: Consistency, Indexing, Pivoting, Queries, Speed/scalability, Backup, replication. If you don’t use one, you’re creating one!
• Simple logical structure:
  – Blob and link is all that is inherent
  – Additional properties (facets == extra tables) and methods on those tables (encapsulation)
• More than a file system
• Unifies data and meta-data
• Simpler to manage
• Easier to subset and reorganize
• Set-oriented access
• Allows online updates
• Automatic indexing, replication

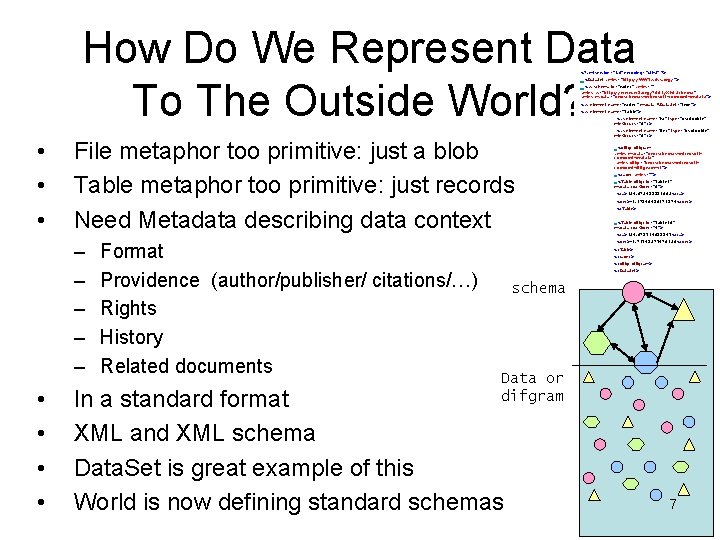

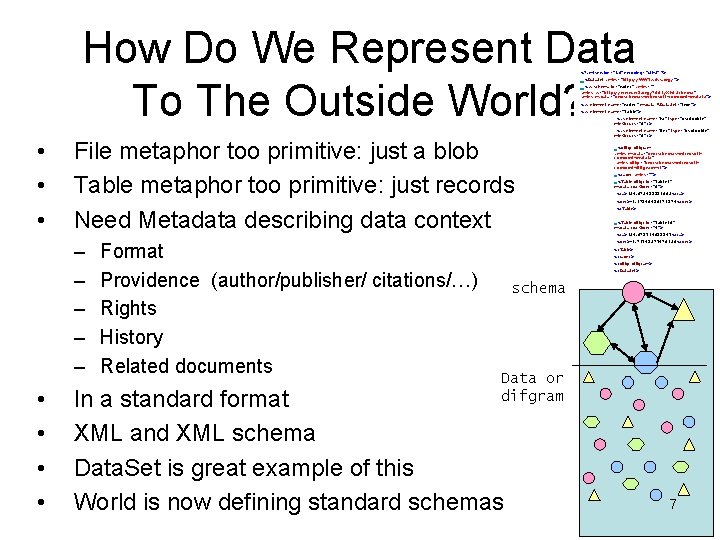

How Do We Represent Data To The Outside World?
• File metaphor too primitive: just a blob
• Table metaphor too primitive: just records
• Need Metadata describing data context
  – Format
  – Provenance (author/publisher/citations/…)
  – Rights
  – History
  – Related documents
• In a standard format
• XML and XML schema
• DataSet is great example of this
• World is now defining standard schemas

[Figure: an XML DataSet, with the first part labeled “schema” and the second part labeled “Data or diffgram”]

    <?xml version="1.0" encoding="utf-8" ?>
    <DataSet xmlns="http://WWT.sdss.org/">
      <xs:schema id="radec" xmlns="" xmlns:xs="http://www.w3.org/2001/XMLSchema" xmlns:msdata="urn:schemas-microsoft-com:xml-msdata">
        <xs:element name="radec" msdata:IsDataSet="true">
          <xs:element name="Table">
            <xs:element name="ra" type="xs:double" minOccurs="0" />
            <xs:element name="dec" type="xs:double" minOccurs="0" />
            …
      <diffgr:diffgram xmlns:msdata="urn:schemas-microsoft-com:xml-msdata" xmlns:diffgr="urn:schemas-microsoft-com:xml-diffgram-v1">
        <radec xmlns="">
          <Table diffgr:id="Table1" msdata:rowOrder="0">
            <ra>184.028935351008</ra>
            <dec>-1.12590950121524</dec>
          </Table>
          …
          <Table diffgr:id="Table10" msdata:rowOrder="9">
            <ra>184.025719033547</ra>
            <dec>-1.21795827920186</dec>
          </Table>
        </radec>
      </diffgr:diffgram>
    </DataSet>
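As a rough illustration of consuming such an answer, the sketch below pulls the (ra, dec) rows out of a diffgram using only the Python standard library. The sample document is a trimmed, well-formed stand-in for the DataSet shown on the slide (the schema part and the elided rows are left out), and the variable names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Trimmed stand-in for the slide's DataSet: only the diffgram part, two rows.
SAMPLE = """<DataSet xmlns="http://WWT.sdss.org/">
  <diffgr:diffgram xmlns:msdata="urn:schemas-microsoft-com:xml-msdata"
                   xmlns:diffgr="urn:schemas-microsoft-com:xml-diffgram-v1">
    <radec xmlns="">
      <Table diffgr:id="Table1" msdata:rowOrder="0">
        <ra>184.028935351008</ra>
        <dec>-1.12590950121524</dec>
      </Table>
      <Table diffgr:id="Table10" msdata:rowOrder="9">
        <ra>184.025719033547</ra>
        <dec>-1.21795827920186</dec>
      </Table>
    </radec>
  </diffgr:diffgram>
</DataSet>"""

root = ET.fromstring(SAMPLE)

# <radec xmlns=""> resets the default namespace, so Table/ra/dec are unqualified.
rows = [
    (float(table.findtext("ra")), float(table.findtext("dec")))
    for table in root.iter("Table")
]

for ra, dec in rows:
    print(f"ra={ra:.6f} dec={dec:.6f}")
```

A real client would normally let a SOAP or DataSet library do this, so the XML stays invisible to the user.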

Emerging Concepts
• Standardizing distributed data
  – Web Services, supported on all platforms
  – Custom configure remote data dynamically
  – XML: Extensible Markup Language
  – SOAP: Simple Object Access Protocol
  – WSDL: Web Services Description Language
  – DataSets: Standard representation of an answer
• Standardizing distributed computing
  – Grid Services
  – Custom configure remote computing dynamically
  – Build your own remote computer, and discard
  – Virtual Data: new data sets on demand

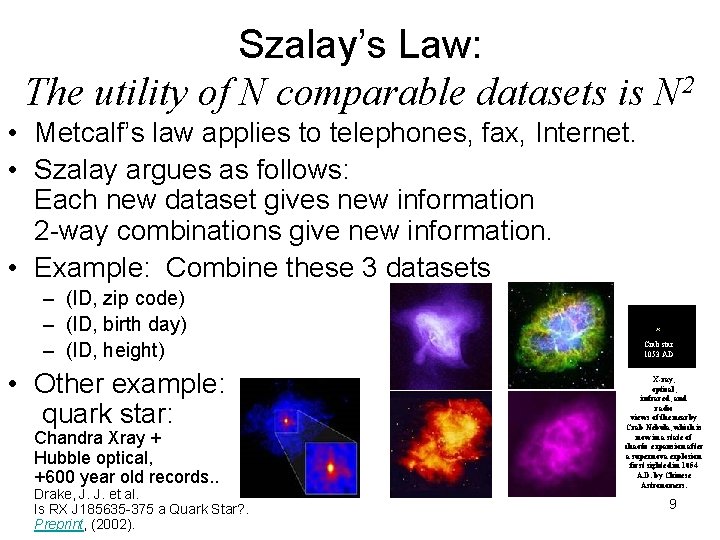

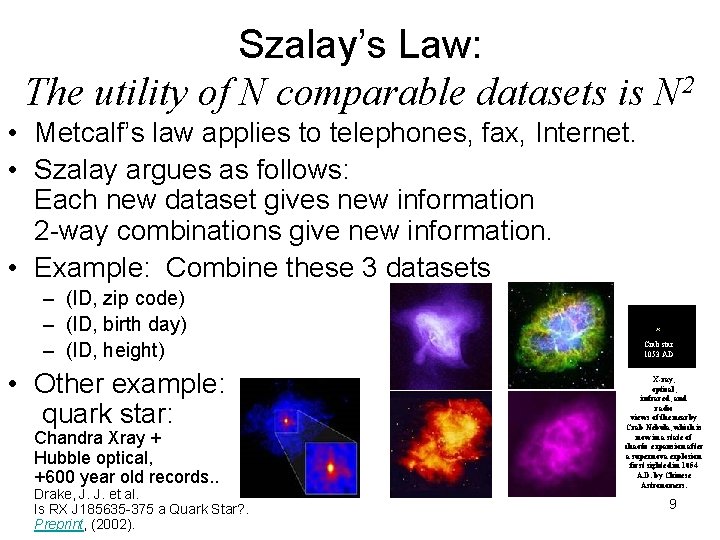

Szalay’s Law: The utility of N comparable datasets is N²
• Metcalfe’s law applies to telephones, fax, Internet.
• Szalay argues as follows: each new dataset gives new information, and 2-way combinations give new information.
• Example: Combine these 3 datasets
  – (ID, zip code)
  – (ID, birth day)
  – (ID, height)
• Other example: quark star: Chandra X-ray + Hubble optical + 600 year old records. Drake, J. J. et al. Is RX J185635-375 a Quark Star? Preprint (2002).
[Figure: Crab star 1053 AD. X-ray, optical, infrared, and radio views of the nearby Crab Nebula, which is now in a state of chaotic expansion after a supernova explosion first sighted in 1054 A.D. by Chinese astronomers.]
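One way to read the N² claim is as a count of 2-way combinations, which grows as N(N-1)/2. The sketch below enumerates the pairs for the slide's three example datasets; treating "utility" as the number of pairs is an interpretation for illustration, not a formula given in the talk.

```python
from itertools import combinations

datasets = ["(ID, zip code)", "(ID, birth day)", "(ID, height)"]

# Every 2-way combination that can be joined on ID.
pairs = list(combinations(datasets, 2))

def pairwise_count(n: int) -> int:
    """Number of distinct 2-way combinations of n datasets: n(n-1)/2, i.e. O(n^2)."""
    return n * (n - 1) // 2

for a, b in pairs:
    print(f"{a} JOIN {b}")
print(pairwise_count(10), "pairs for 10 datasets")
```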

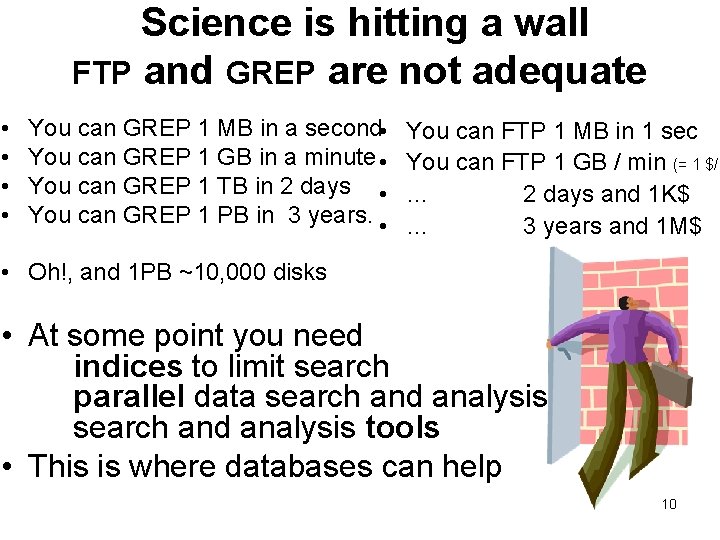

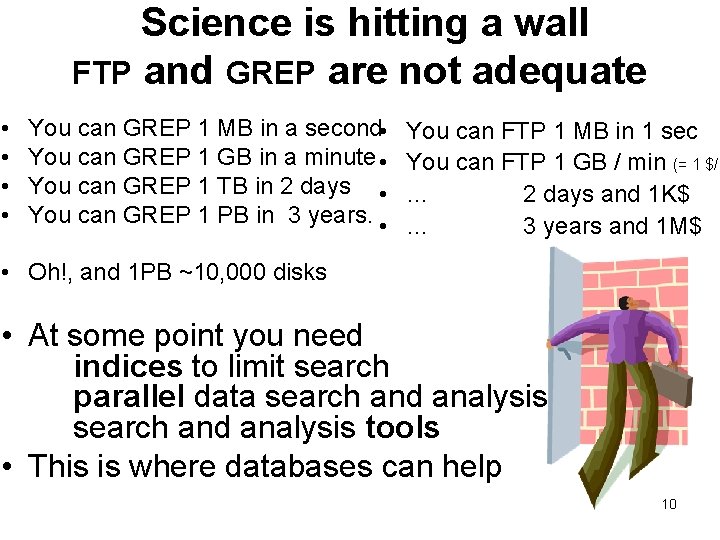

Science is hitting a wall: FTP and GREP are not adequate
• You can GREP 1 MB in a second
• You can GREP 1 GB in a minute
• You can GREP 1 TB in 2 days
• You can GREP 1 PB in 3 years
• You can FTP 1 MB in 1 sec
• You can FTP 1 GB / min (= 1 $/G
• … 2 days and 1 K$
• … 3 years and 1 M$
• Oh!, and 1 PB ~10,000 disks
• At some point you need indices to limit search, plus parallel data search and analysis tools
• This is where databases can help

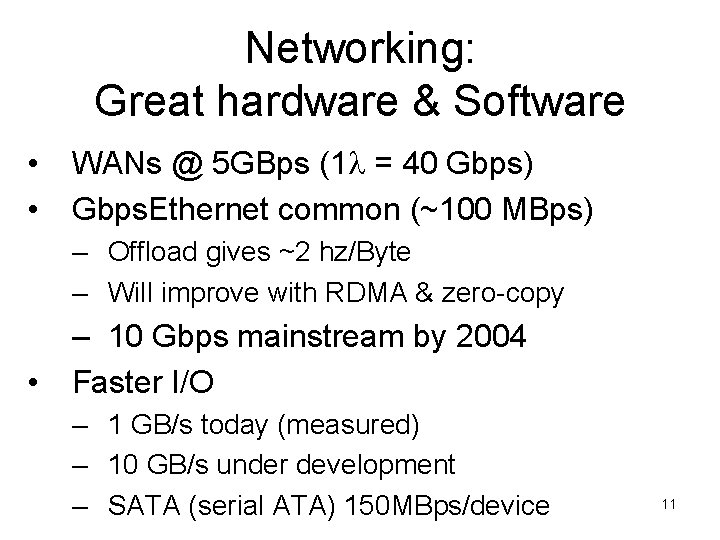

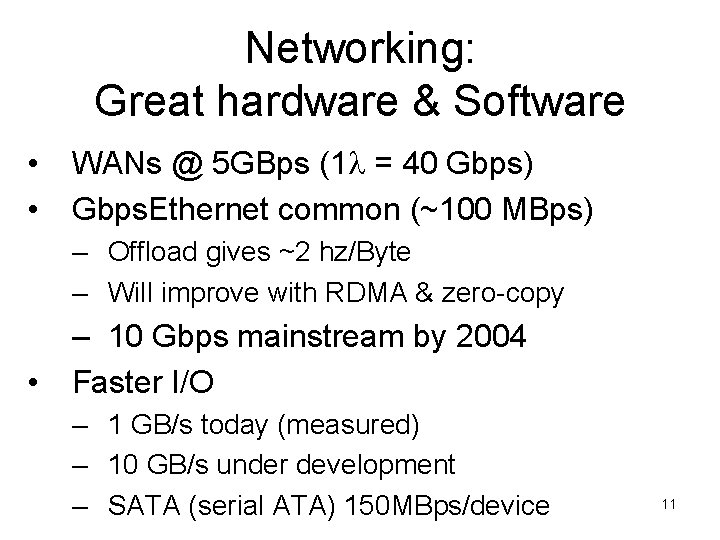

Networking: Great hardware & Software
• WANs @ 5 GBps (1 λ = 40 Gbps)
• Gbps Ethernet common (~100 MBps)
  – Offload gives ~2 Hz/Byte
  – Will improve with RDMA & zero-copy
  – 10 Gbps mainstream by 2004
• Faster I/O
  – 1 GB/s today (measured)
  – 10 GB/s under development
  – SATA (serial ATA) 150 MBps/device

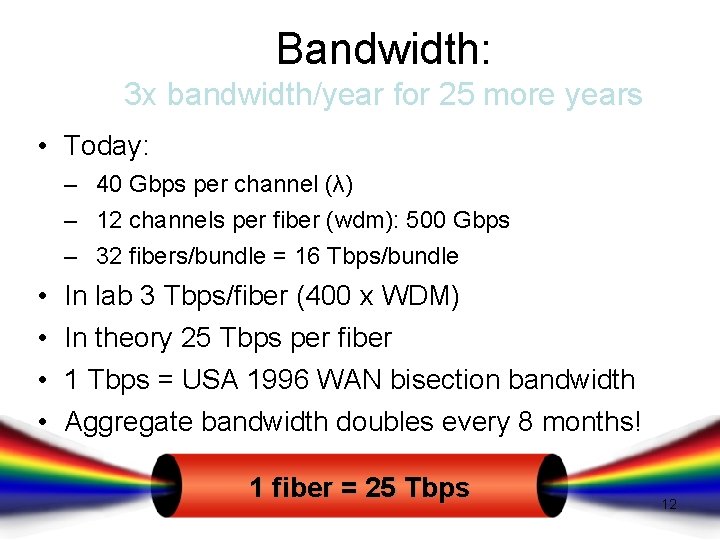

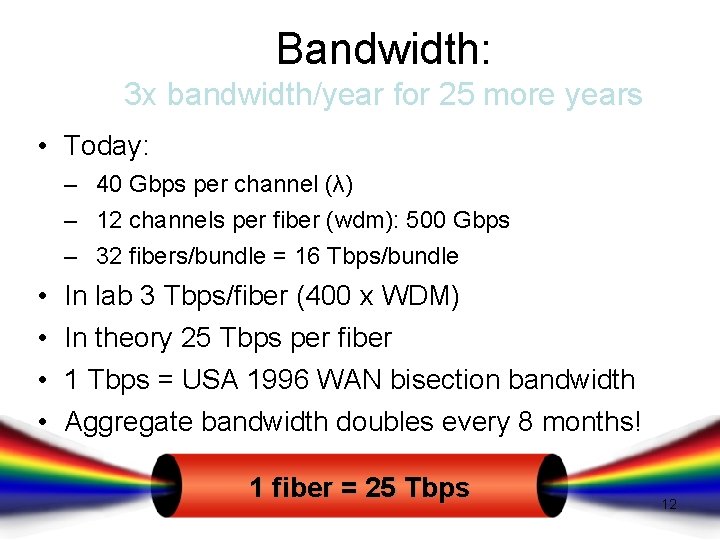

Bandwidth: 3x bandwidth/year for 25 more years
• Today:
  – 40 Gbps per channel (λ)
  – 12 channels per fiber (WDM): 500 Gbps
  – 32 fibers/bundle = 16 Tbps/bundle
• In lab: 3 Tbps/fiber (400 x WDM)
• In theory: 25 Tbps per fiber
• 1 Tbps = USA 1996 WAN bisection bandwidth
• Aggregate bandwidth doubles every 8 months!
[Figure label: 1 fiber = 25 Tbps]

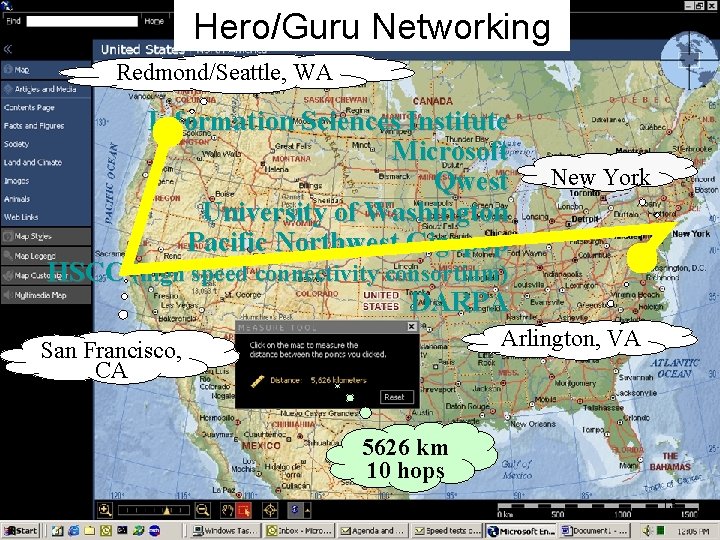

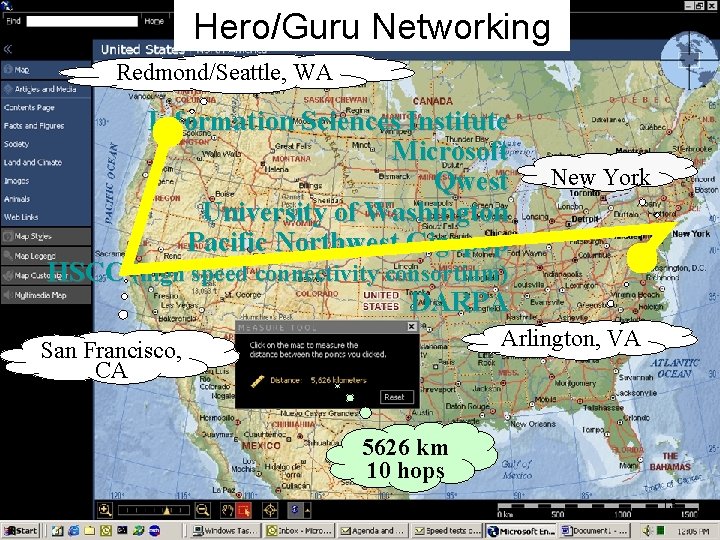

Hero/Guru Networking Redmond/Seattle, WA Information Sciences Institute Microsoft Qwest University of Washington Pacific Northwest Gigapop New York HSCC (high speed connectivity consortium) DARPA Arlington, VA San Francisco, CA 5626 km 10 hops 13

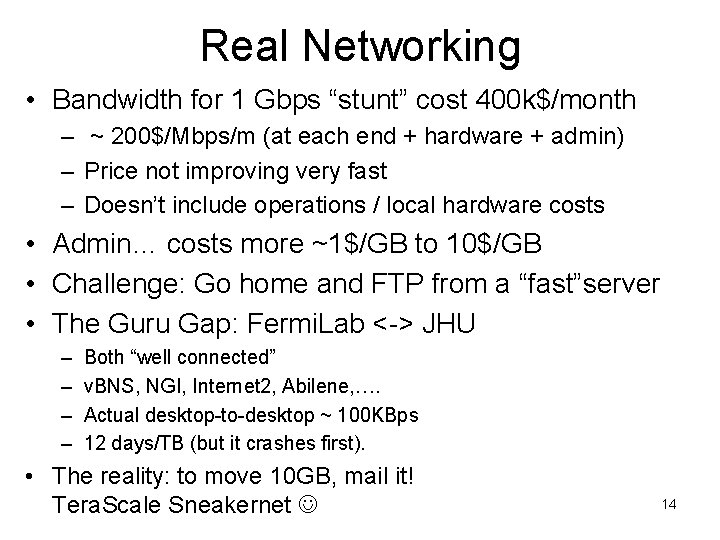

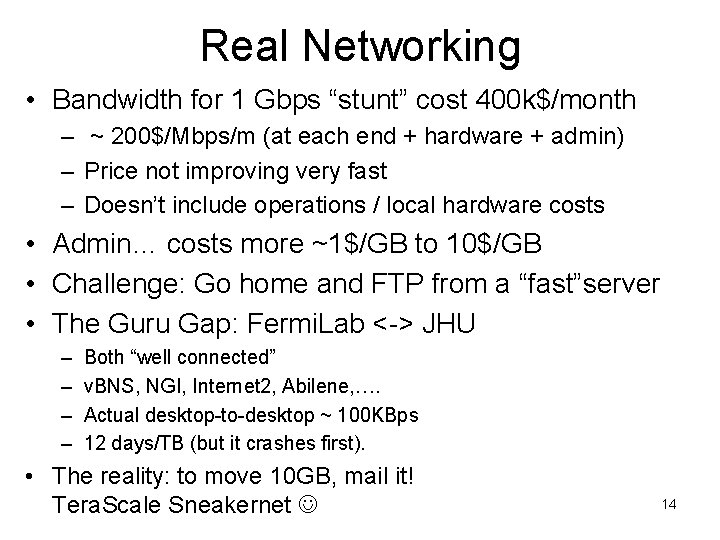

Real Networking
• Bandwidth for 1 Gbps “stunt” cost 400 k$/month
  – ~ 200$/Mbps/m (at each end + hardware + admin)
  – Price not improving very fast
  – Doesn’t include operations / local hardware costs
• Admin… costs more: ~1$/GB to 10$/GB
• Challenge: Go home and FTP from a “fast” server
• The Guru Gap: FermiLab <-> JHU
  – Both “well connected”: vBNS, NGI, Internet2, Abilene, …
  – Actual desktop-to-desktop ~ 100 KBps
  – 12 days/TB (but it crashes first)
• The reality: to move 10 GB, mail it! TeraScale Sneakernet

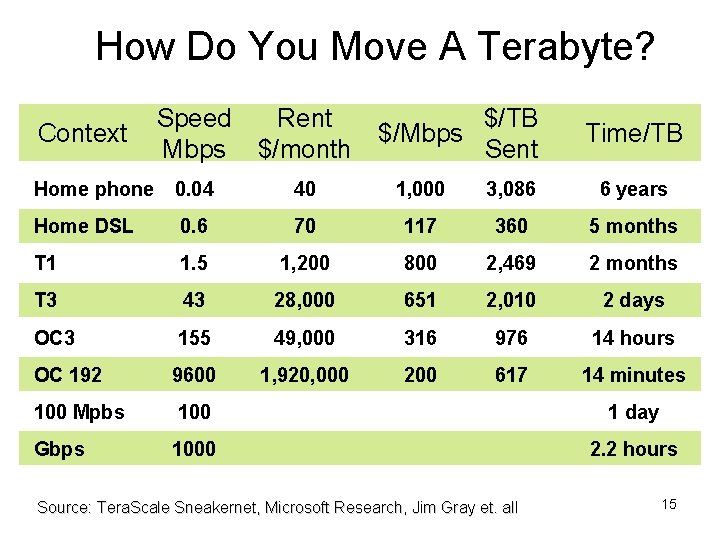

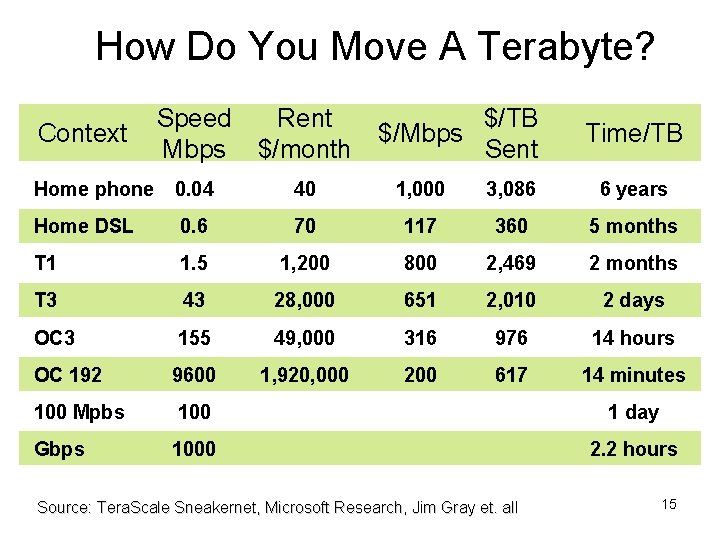

How Do You Move A Terabyte?

| Context | Speed (Mbps) | Rent ($/month) | $/Mbps | $/TB sent | Time/TB |
|---|---|---|---|---|---|
| Home phone | 0.04 | 40 | 1,000 | 3,086 | 6 years |
| Home DSL | 0.6 | 70 | 117 | 360 | 5 months |
| T1 | 1.5 | 1,200 | 800 | 2,469 | 2 months |
| T3 | 43 | 28,000 | 651 | 2,010 | 2 days |
| OC3 | 155 | 49,000 | 316 | 976 | 14 hours |
| OC 192 | 9600 | 1,920,000 | 200 | 617 | 14 minutes |
| 100 Mbps | 100 | | | | 1 day |
| Gbps | 1000 | | | | 2.2 hours |

Source: TeraScale Sneakernet, Microsoft Research, Jim Gray et al.
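The derived columns of this table can be reproduced with a few lines of arithmetic. The sketch below assumes 1 TB = 10^12 bytes, a 30-day month, and a fully utilized link; those assumptions are inferred from the numbers, not stated in the talk, and the function names are hypothetical.

```python
BITS_PER_TB = 8e12                  # assumes 1 TB = 10**12 bytes
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumes a 30-day billing month

def seconds_per_tb(mbps: float) -> float:
    """Time to send 1 TB over a link running flat out at `mbps` megabits/s."""
    return BITS_PER_TB / (mbps * 1e6)

def dollars_per_mbps(mbps: float, rent_per_month: float) -> float:
    """Monthly rent divided by link speed."""
    return rent_per_month / mbps

def dollars_per_tb(mbps: float, rent_per_month: float) -> float:
    """Rent paid while the link is busy sending 1 TB."""
    return rent_per_month * seconds_per_tb(mbps) / SECONDS_PER_MONTH

links = [("Home phone", 0.04, 40), ("Home DSL", 0.6, 70), ("T1", 1.5, 1_200),
         ("T3", 43, 28_000), ("OC3", 155, 49_000), ("OC 192", 9600, 1_920_000)]

for name, mbps, rent in links:
    days = seconds_per_tb(mbps) / 86_400
    print(f"{name:10s} {dollars_per_mbps(mbps, rent):7.0f} $/Mbps "
          f"{dollars_per_tb(mbps, rent):7.0f} $/TB {days:10.2f} days/TB")
```

With these assumptions the computed $/TB values round to the table's figures, which suggests the table was built the same way.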

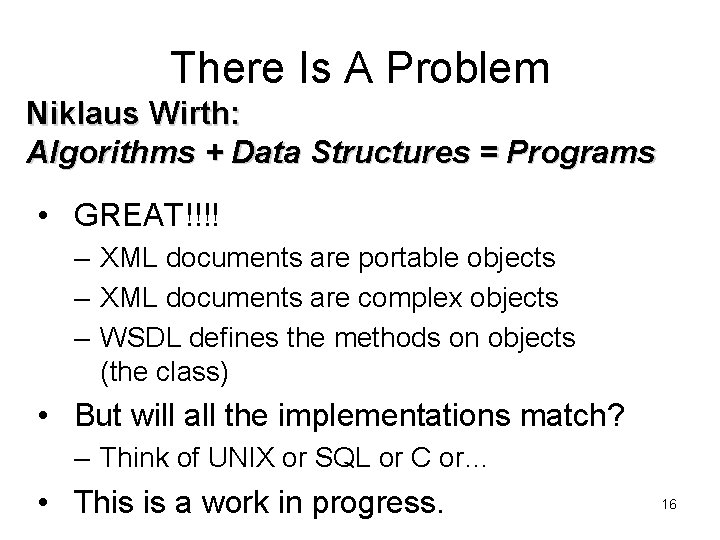

There Is A Problem Niklaus Wirth: Algorithms + Data Structures = Programs • GREAT!!!! – XML documents are portable objects – XML documents are complex objects – WSDL defines the methods on objects (the class) • But will all the implementations match? – Think of UNIX or SQL or C or… • This is a work in progress. 16

Changes To DBMS’s
• Integration of Programs and Data
  – Putting programs inside the database allows OODB
  – Gives you parallel execution
• Integration of Relational, Text, XML, Time
• Scaleout (even more)
• AutoAdmin (“no knobs”)
• Manage Petascale databases (utilities, geoplex, online, incremental)

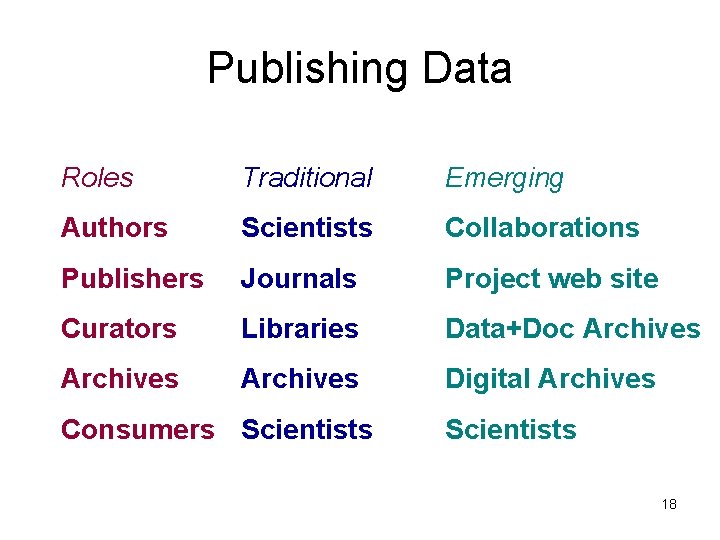

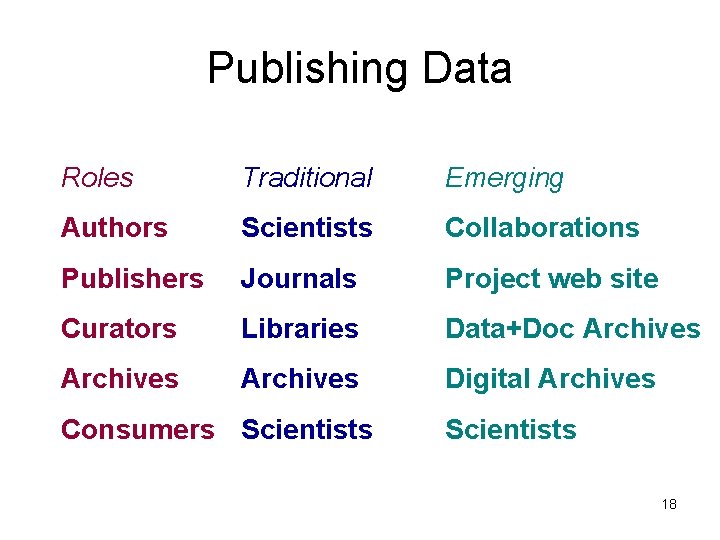

Publishing Data Roles Traditional Emerging Authors Scientists Collaborations Publishers Journals Project web site Curators Libraries Data+Doc Archives Digital Archives Consumers Scientists 18

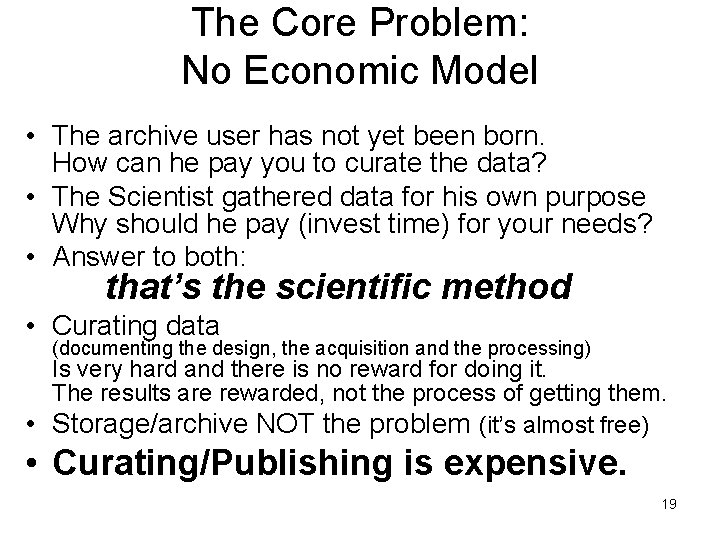

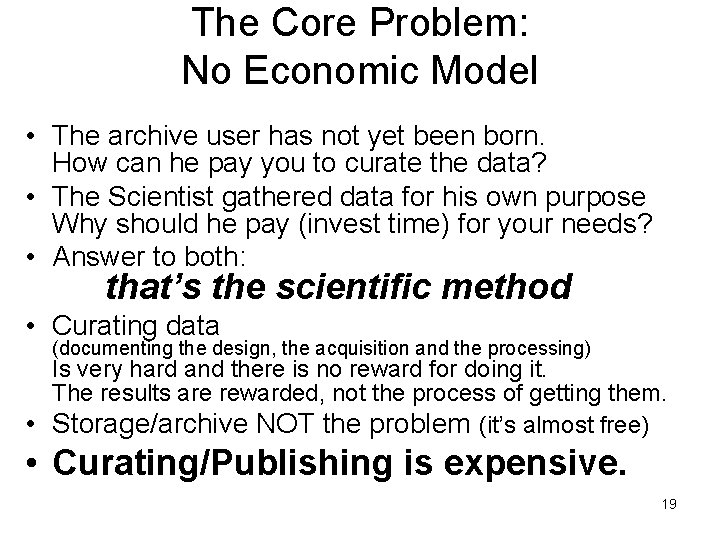

The Core Problem: No Economic Model
• The archive user has not yet been born. How can he pay you to curate the data?
• The Scientist gathered data for his own purpose. Why should he pay (invest time) for your needs?
• Answer to both: that’s the scientific method
• Curating data (documenting the design, the acquisition and the processing) is very hard and there is no reward for doing it. The results are rewarded, not the process of getting them.
• Storage/archive is NOT the problem (it’s almost free)
• Curating/Publishing is expensive.

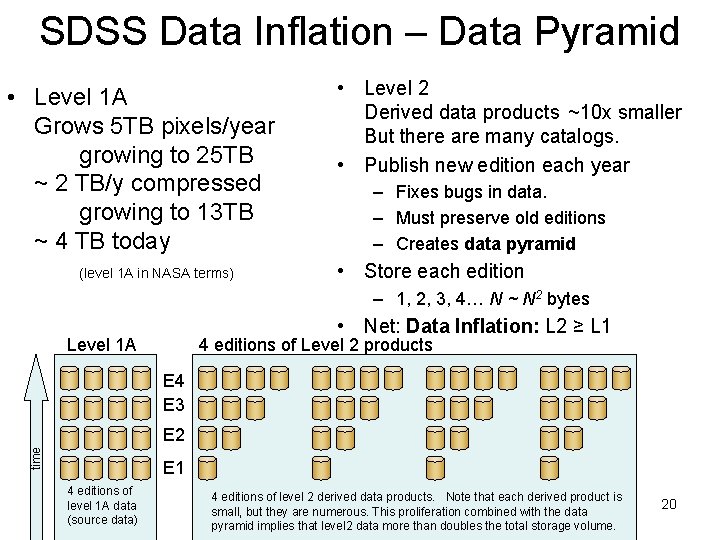

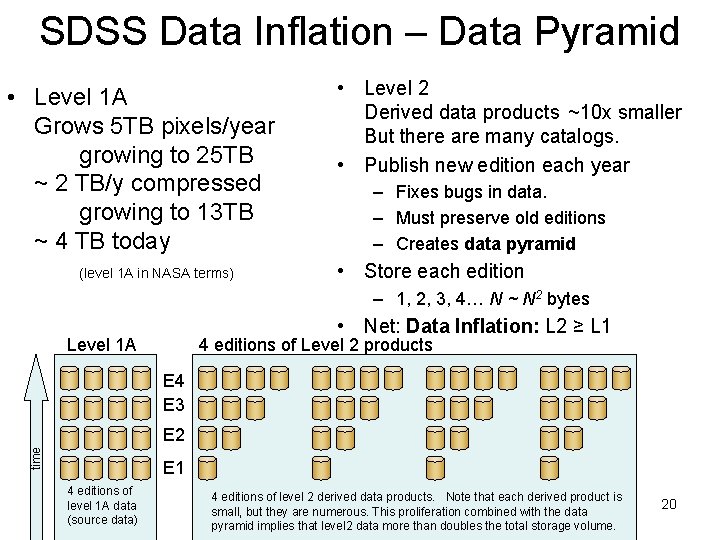

SDSS Data Inflation – Data Pyramid
• Level 1A (level 1A in NASA terms)
  – Grows 5 TB pixels/year, growing to 25 TB
  – ~ 2 TB/y compressed, growing to 13 TB
  – ~ 4 TB today
• Level 2: derived data products, ~10x smaller. But there are many catalogs.
• Publish new edition each year
  – Fixes bugs in data.
  – Must preserve old editions
  – Creates data pyramid
• Store each edition
  – 1, 2, 3, 4… N ~ N² bytes
• Net: Data Inflation: L2 ≥ L1
[Figure: editions E1 to E4 over time. 4 editions of level 1A data (source data) and 4 editions of level 2 derived data products. Note that each derived product is small, but they are numerous. This proliferation combined with the data pyramid implies that level 2 data more than doubles the total storage volume.]
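The "1, 2, 3, 4… N ~ N²" line follows from keeping every edition: if edition k holds k units of data, the archive holds 1 + 2 + … + N = N(N+1)/2 units. A minimal sketch, where the "one unit per yearly edition" model is an assumption for illustration:

```python
def cumulative_editions(n: int) -> int:
    """Total units stored when editions 1..n are all kept and edition k holds k units."""
    return sum(range(1, n + 1))

for n in (1, 2, 4, 8):
    total = cumulative_editions(n)
    print(f"{n} editions -> {total} units (latest edition alone: {n})")
```

After 4 editions the archive is already 2.5 times the size of the latest edition, and the ratio keeps growing with N.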

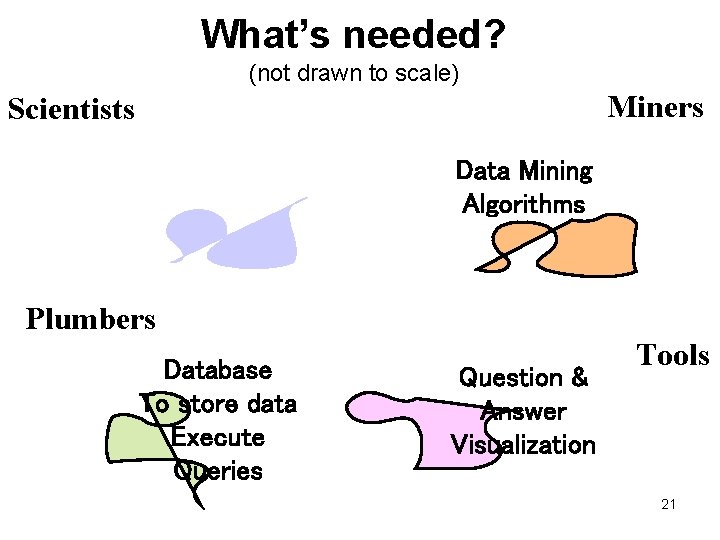

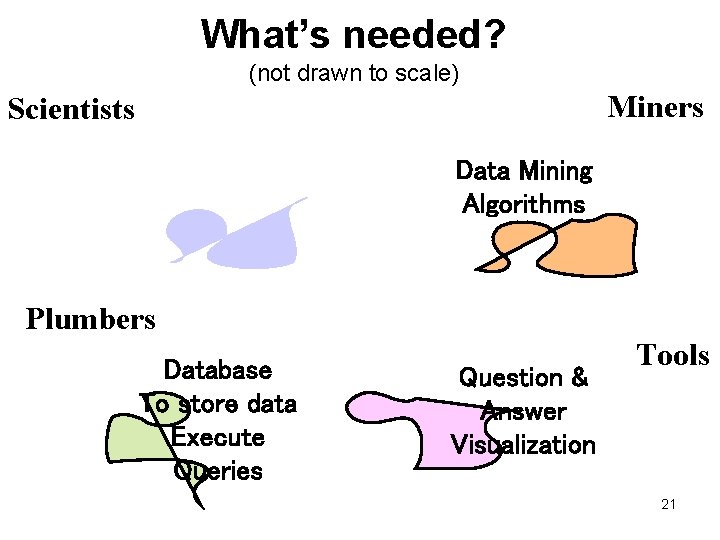

What’s needed? (not drawn to scale) Miners Scientists Data Mining Algorithms Plumbers Database To store data Execute Queries Question & Answer Visualization Tools 21

CS Challenges For Astronomers
• Objectify your field:
  – Precisely define what you are talking about.
  – Objects and Methods / Attributes
  – This is REALLY difficult.
  – UCDs are a great start but there is a long way to go
• “Software is like entropy, it always increases.” -- Norman Augustine, Augustine’s Laws
  – Beware of legacy software: cost can eat you alive
  – Share software where possible.
  – Use standard software where possible.
  – Expect it will cost you 25% to 40% of project.
• Explain what you want to do with the VO
  – 20 queries or something like that.

Challenge to Data Miners: Linear and Sub-Linear Algorithms
• Today most correlation / clustering algorithms are polynomial: N² or N³ or…
• N² is VERY big when N is big (10^18 is big)
• Need sub-linear algorithms
• Current approaches are near optimal given current assumptions.
• So, need new assumptions, probably heuristic and approximate

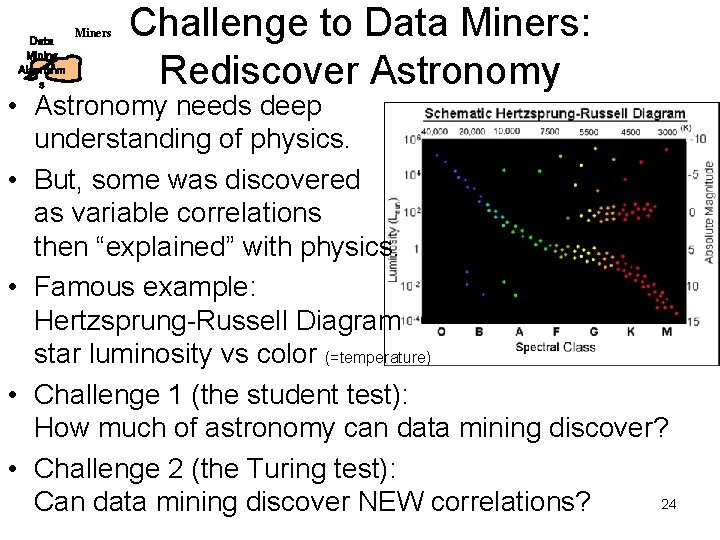

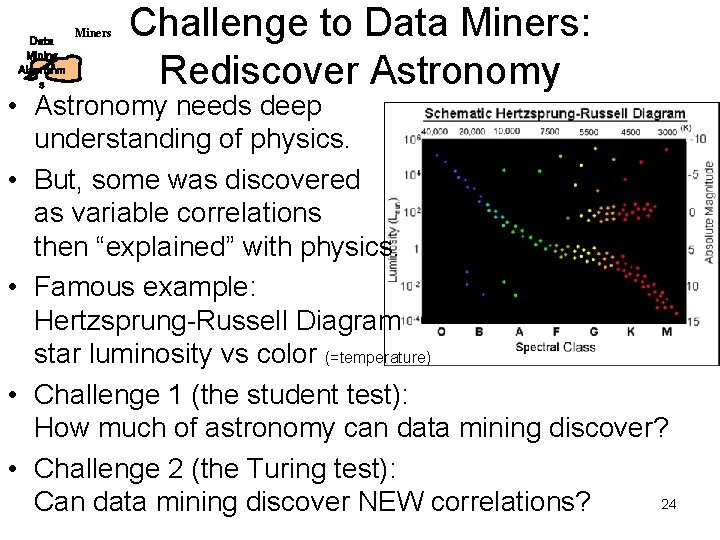

Challenge to Data Miners: Rediscover Astronomy
• Astronomy needs deep understanding of physics.
• But, some was discovered as variable correlations then “explained” with physics.
• Famous example: Hertzsprung-Russell Diagram, star luminosity vs color (= temperature)
• Challenge 1 (the student test): How much of astronomy can data mining discover?
• Challenge 2 (the Turing test): Can data mining discover NEW correlations?

Plumbers: Organize and Search Petabytes
• Automate
  – Instrument-to-archive pipelines
    It is a messy business: very labor intensive.
    Most current designs do not scale (too many manual steps).
    BaBar (1 TB/day) and ESO pipeline seem promising.
    A job-scheduling or workflow system
  – Physical Database design & access
    Data access patterns are difficult to anticipate.
    Aggressively and automatically use indexing, sub-setting.
    Search in parallel
• Goals
  – Answer easy queries in 10 seconds.
  – Answer hard queries (correlations) in 10 minutes.

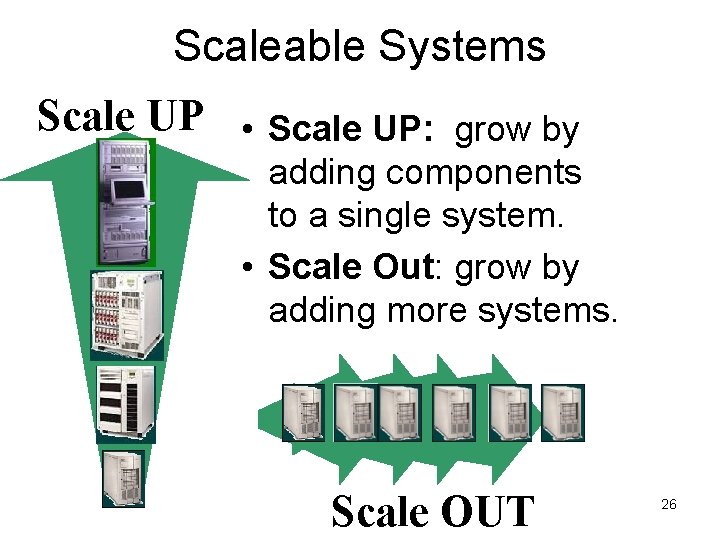

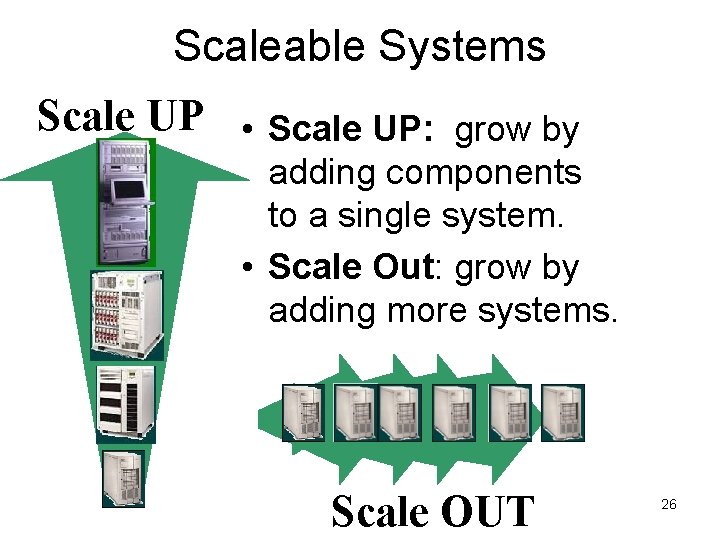

Scaleable Systems
• Scale UP: grow by adding components to a single system.
• Scale OUT: grow by adding more systems.

What’s New – Scale Up
• 64 bit & TB size main memory
• SMP on chip: everything’s smp
• 32… 256 SMP: locality/affinity matters
• TB size disks
• High-speed LANs

Who needs 64-bit addressing? You! Need 64-bit addressing!
• “640K ought to be enough for anybody.” Bill Gates, 1981
• But that was 21 years ago == 2 x 21/3 = 14 bits ago.
• 20 bits + 14 bits = 34 bits, so…
• “16 GB ought to be enough for anybody.” Jim Gray, 2002
• 34 bits > 31 bits, so…
• 34 bits == 64 bits
• YOU need 64 bit addressing!

64 bit – Why bother?
• 1966 Moore’s law: 4x more RAM every 3 years, so 1 bit of addressing every 18 months
• 36 years later: 2 x 36/3 = 24 more bits
• Not exactly right, but…
  – 32 bits not enough for servers
  – 32 bits gives no headroom for clients
  – So, time is running out (has run out)
• Good news: Itanium™ and Hammer™ are maturing, and so is the base software (OS, drivers, DB, Web, ...)
• Windows & SQL @ 256 GB today!
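The address-bit arithmetic on these two slides is just "4x every 3 years = 2 bits every 3 years". A minimal sketch that reproduces the figures (the function name is hypothetical):

```python
def address_bits_gained(years: float) -> float:
    """Extra address bits needed after `years` if RAM grows 4x (2 bits) every 3 years."""
    return 2 * years / 3

# 640 KB fits in 20 bits in 1981; 21 years later:
bits_2002 = 20 + address_bits_gained(21)
print(bits_2002, "bits ->", 2 ** int(bits_2002) // 2 ** 30, "GB")

# 36 years after 1966:
print(address_bits_gained(36), "more bits")
```

This gives 34 bits (16 GB) for 2002 and 24 extra bits over 36 years, matching the slides.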

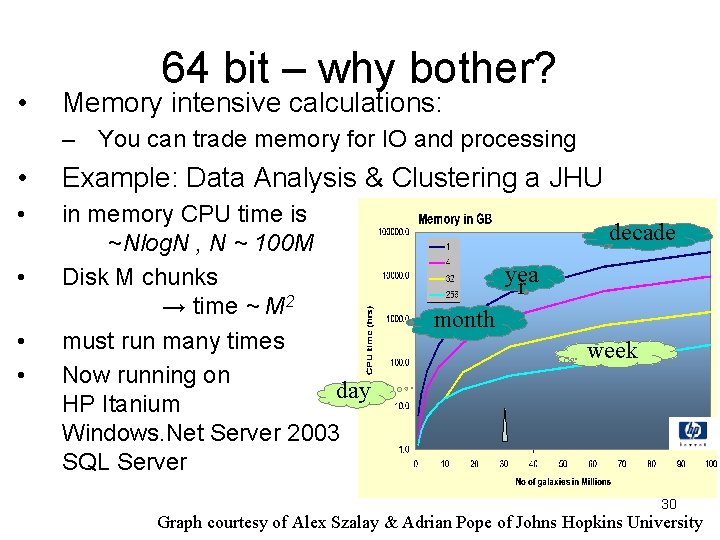

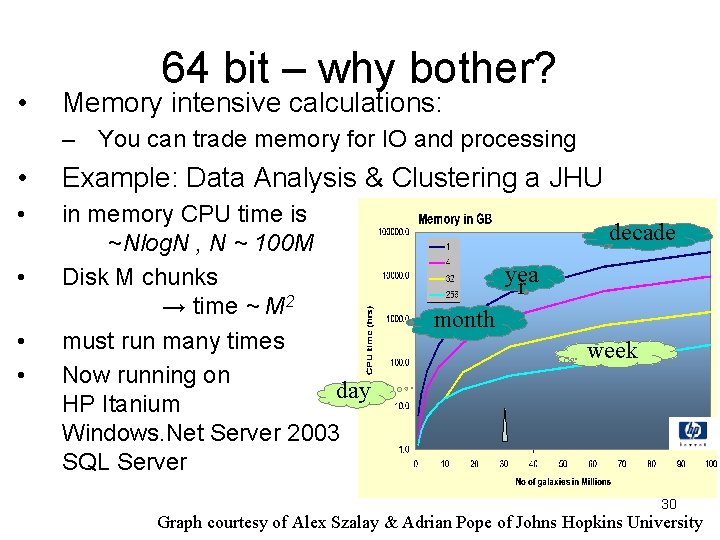

64 bit – why bother?
• Memory intensive calculations:
  – You can trade memory for IO and processing
• Example: Data Analysis & Clustering at JHU
  – In memory, CPU time is ~N log N, N ~ 100 M
  – On disk, M chunks → time ~ M²
  – Must run many times
• Now running on HP Itanium, Windows .NET Server 2003, SQL Server
[Figure: run time on a scale of day, week, month, year, decade. Graph courtesy of Alex Szalay & Adrian Pope of Johns Hopkins University]

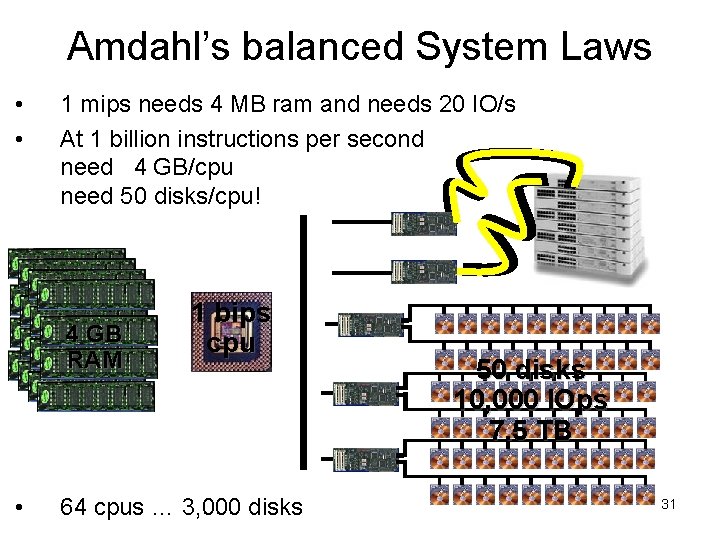

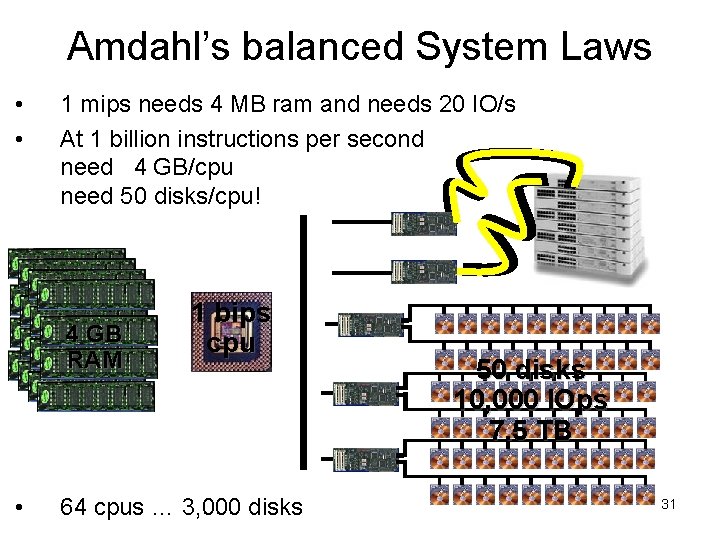

Amdahl’s balanced System Laws
• 1 mips needs 4 MB ram and needs 20 IO/s
• At 1 billion instructions per second
  – need 4 GB/cpu
  – need 50 disks/cpu!
• 64 cpus … 3,000 disks
[Figure labels: 1 bips cpu; 4 GB RAM; 50 disks, 10,000 IOps, 7.5 TB]

The 5 Minute Rule – Trade RAM for Disk Arms
• If data is re-referenced every 5 minutes, it is cheaper to cache it in ram than to get it from disk
• A disk access/second ~ 50$, or ~ 50 MB for 1 second, or ~ 50 KB for 1,000 seconds.
• Each app has a memory “knee”: up to the knee, more memory helps a lot.

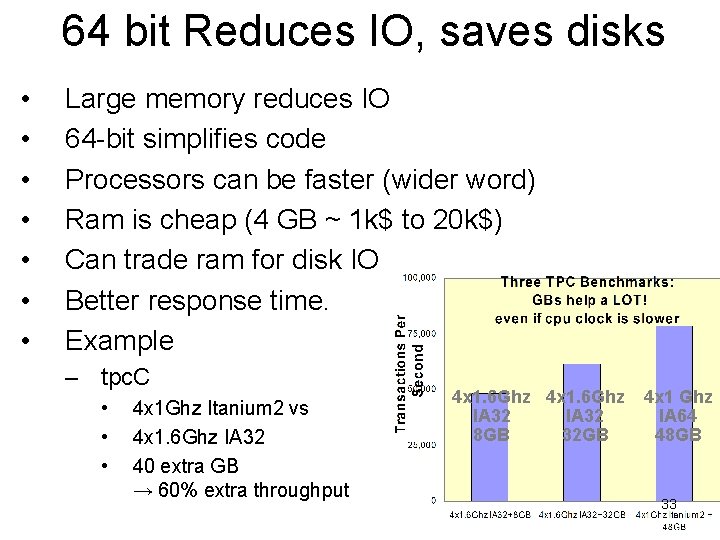

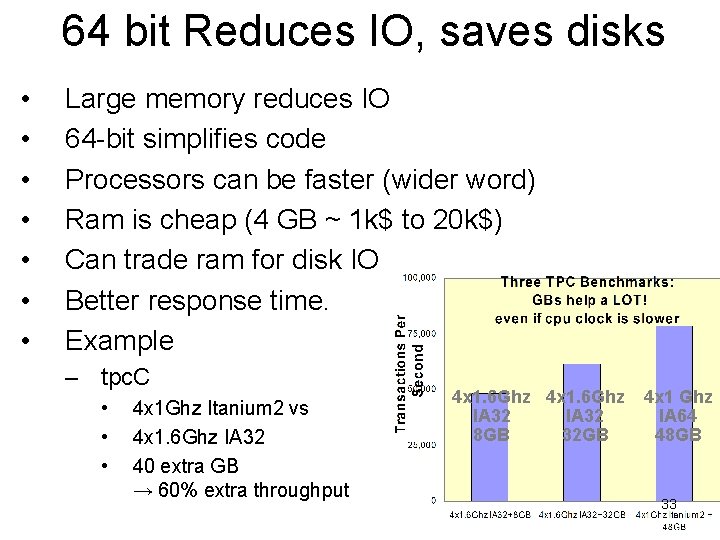

64 bit Reduces IO, saves disks
• Large memory reduces IO
• 64-bit simplifies code
• Processors can be faster (wider word)
• RAM is cheap (4 GB ~ 1 k$ to 20 k$)
• Can trade ram for disk IO
• Better response time
• Example – tpcC
  – 4 x 1 GHz Itanium 2 vs 4 x 1.6 GHz IA32
  – 40 extra GB → 60% extra throughput
[Figure labels: 4 x 1.6 GHz IA32, 8 GB; 32 GB; 4 x 1 GHz IA64, 48 GB]

AMD Hammer™ Coming Soon
• AMD Hammer™ is 64 bit capable
• 2003: millions of Hammer™ CPUs will ship
• 2004: most AMD CPUs will be 64 bit
• 4 GB ram is less than 1,000$ today, less than 500$ in 2004
• Desktops (Hammer™) and servers (Opteron™)
• You do the math… Who will demand 64 bit capable software?

A 1 TB Main Memory
• Amdahl’s law: 1 mips/MB, now 1:5, so ~20 x 10 GHz cpus need 1 TB ram
• 1 TB ram ~ 250 k$ … 2 m$ today, ~ 25 k$ … 200 k$ in 5 years
• 128 million pages
  – Takes a LONG time to fill
  – Takes a LONG time to refill
• Needs new algorithms
• Needs parallel processing
• Which leads us to…
  – The memory hierarchy
  – smp
  – numa

Hyper-Threading: SMP on chip
• If cpu is always waiting for memory: predict memory requests and prefetch
  – done
• If cpu is still always waiting for memory: multi-program it (multiple hardware threads per cpu)
  – Hyper-Threading: everything is SMP
  – 2 now, more later
  – Also multiple cpus/chip
• If your program is single threaded
  – You waste ½ the cpu and memory bandwidth
  – Eventually waste 80%
• App builders need to plan for threads.

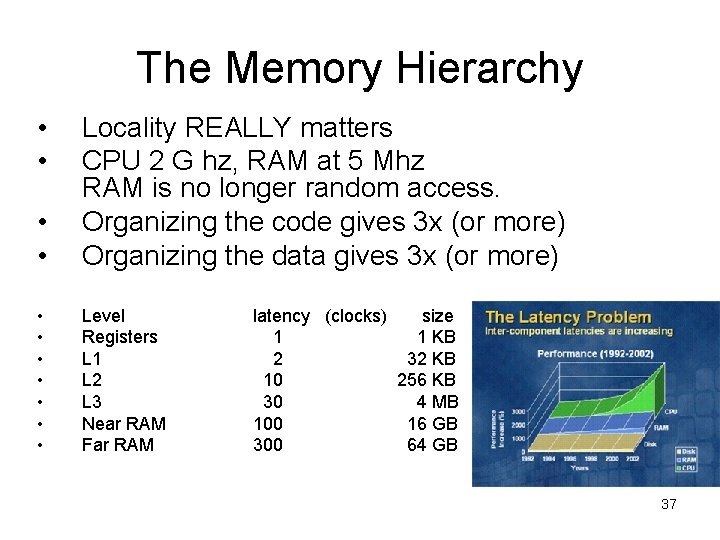

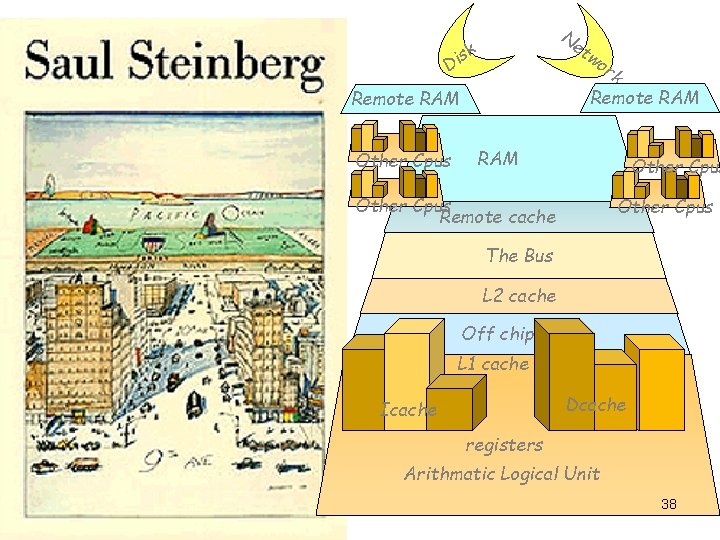

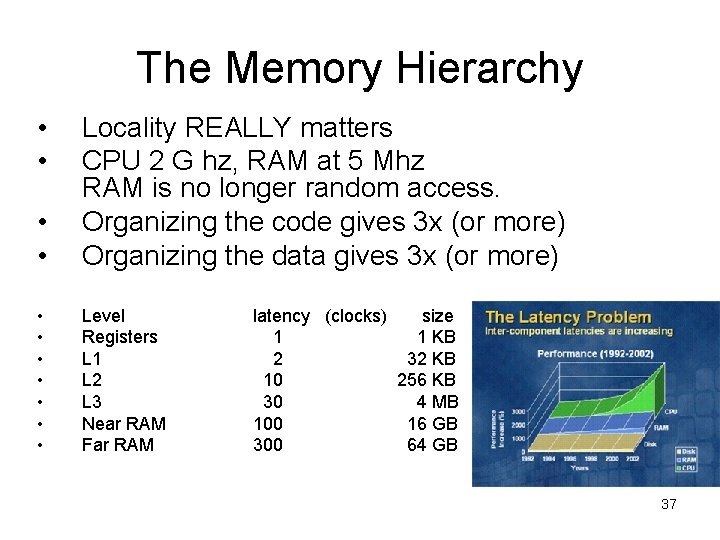

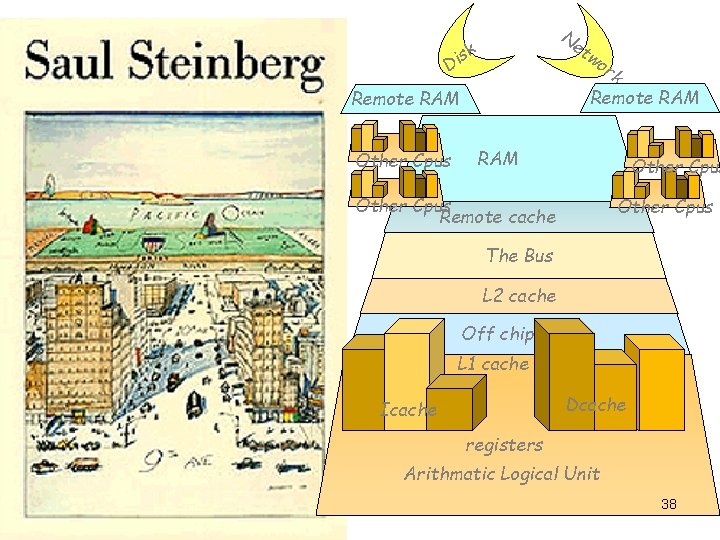

The Memory Hierarchy
• Locality REALLY matters
• CPU at 2 GHz, RAM at 5 MHz: RAM is no longer random access.
• Organizing the code gives 3x (or more)
• Organizing the data gives 3x (or more)

| Level | Latency (clocks) | Size |
|---|---|---|
| Registers | 1 | 1 KB |
| L1 | 2 | 32 KB |
| L2 | 10 | 256 KB |
| L3 | 30 | 4 MB |
| Near RAM | 100 | 16 GB |
| Far RAM | 300 | 64 GB |
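The "RAM at 5 MHz" remark can be checked against the latency table: at a 2 GHz clock, a dependent access that costs 300 clocks can be issued only a few million times per second. A rough sketch, assuming fully serialized accesses with no overlap or prefetching (names are hypothetical):

```python
CPU_HZ = 2e9  # 2 GHz clock, from the slide

# Latency in CPU clocks, from the slide's table.
LATENCY_CLOCKS = {
    "Registers": 1, "L1": 2, "L2": 10, "L3": 30, "Near RAM": 100, "Far RAM": 300,
}

def latency_ns(level: str) -> float:
    """Access latency in nanoseconds at the assumed clock rate."""
    return LATENCY_CLOCKS[level] / CPU_HZ * 1e9

def random_access_rate_mhz(level: str) -> float:
    """Dependent (serialized) accesses per second, in millions."""
    return CPU_HZ / LATENCY_CLOCKS[level] / 1e6

for level in LATENCY_CLOCKS:
    print(f"{level:10s} {latency_ns(level):7.1f} ns "
          f"{random_access_rate_mhz(level):8.1f} M accesses/s")
```

Far RAM comes out at roughly 150 ns per access, or about 7 million random accesses per second, the same order as the slide's 5 MHz.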

[Figure: the memory hierarchy as nested levels: Arithmetic Logical Unit, registers, L1 cache (Icache, Dcache), off-chip L2 cache, the bus, other CPUs, remote cache, RAM on other CPUs, remote RAM, disk, network]

Scaleup Systems: Non-Uniform Memory Architecture (NUMA)
Coherent but… remote memory is even slower
• All cells see a common memory
• Slow local main memory
• Slower remote main memory
• Scaleup by adding cells
• Planning for 64 cpu, 1 TB ram
• Interconnect, Service Processor, Partition management are vendor specific
• Several vendors doing this: Itanium and Hammer
[Figure: cells of CPUs, memory, chipset and I/O joined by a system interconnect (crossbar/switch), with service processors, a config DB and a partition manager]

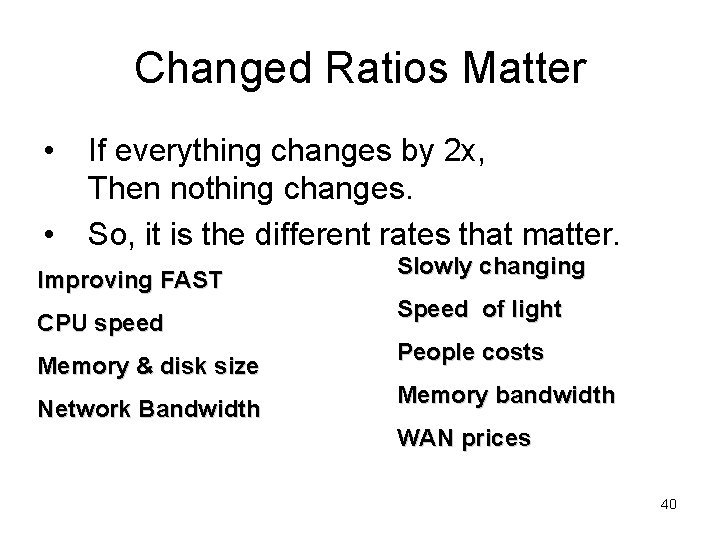

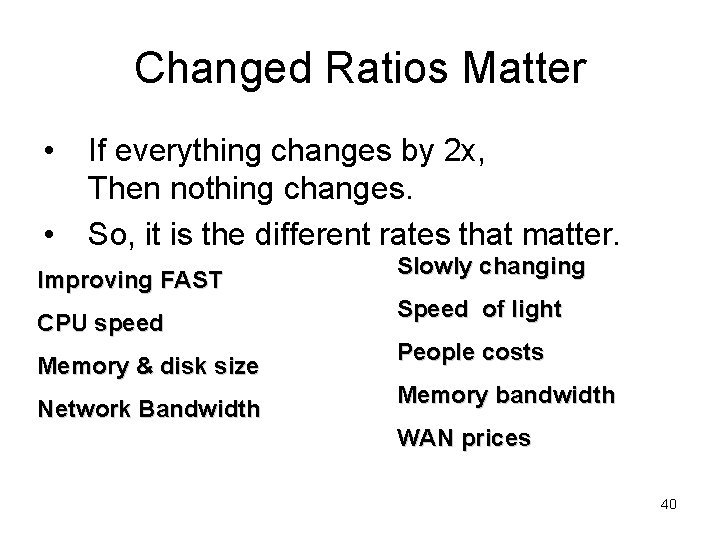

Changed Ratios Matter
• If everything changes by 2x, then nothing changes.
• So, it is the different rates that matter.
• Improving FAST
  – CPU speed
  – Memory & disk size
  – Network Bandwidth
• Slowly changing
  – Speed of light
  – People costs
  – Memory bandwidth
  – WAN prices

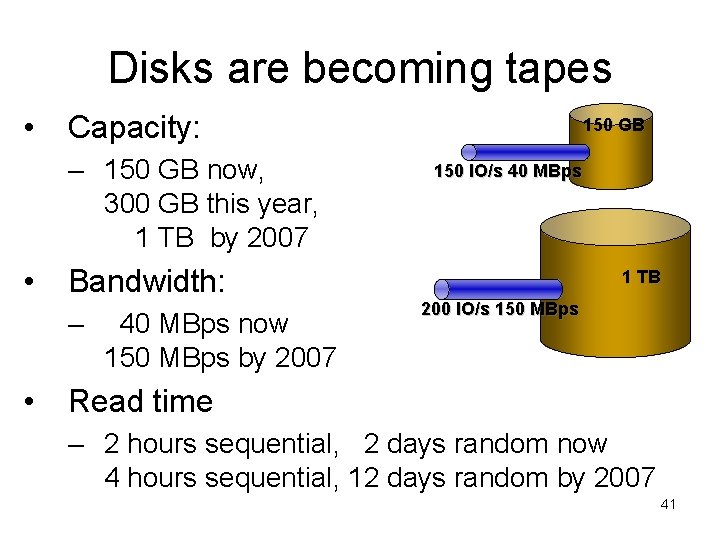

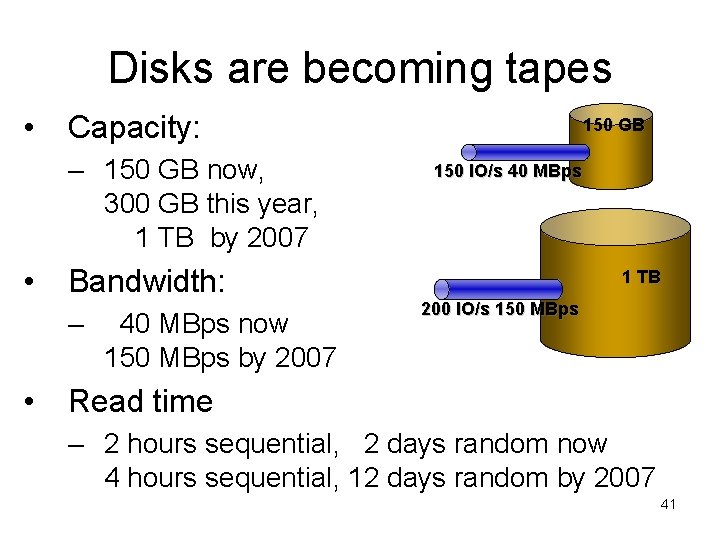

Disks are becoming tapes
• Capacity:
  – 150 GB now, 300 GB this year, 1 TB by 2007
• Bandwidth:
  – 40 MBps now, 150 MBps by 2007
• Read time
  – 2 hours sequential, 2 days random now
  – 4 hours sequential, 12 days random by 2007
[Figure labels: 150 GB disk, 150 IO/s, 40 MBps; 1 TB disk, 200 IO/s, 150 MBps]

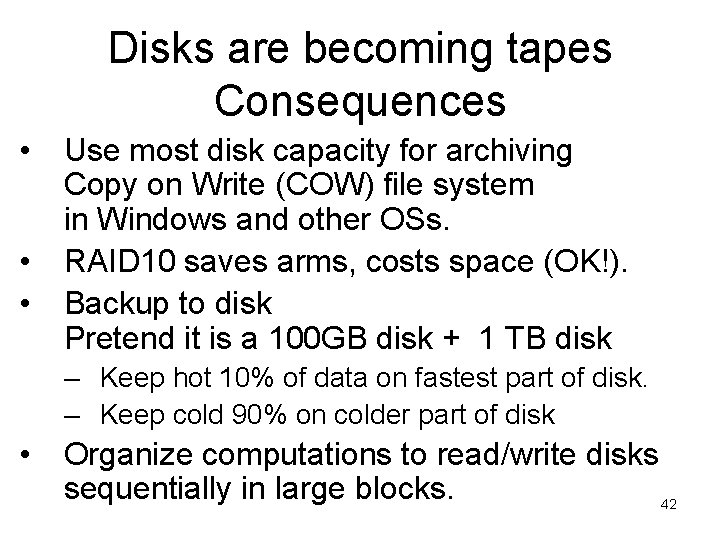

Disks are becoming tapes: Consequences
• Use most disk capacity for archiving
• Copy on Write (COW) file system in Windows and other OSs.
• RAID 10 saves arms, costs space (OK!).
• Backup to disk
• Pretend it is a 100 GB disk + 1 TB disk
  – Keep hot 10% of data on fastest part of disk.
  – Keep cold 90% on colder part of disk
• Organize computations to read/write disks sequentially in large blocks.

Wiring is going serial and getting FAST!
• Gbps Ethernet and SATA built into chips
• Raid Controllers: inexpensive and fast.
• 1U storage bricks @ 2-10 TB
• SAN or NAS (iSCSI or CIFS/DAFS)
[Figure labels: 8 x SATA, 150 MBps/link; Enet, 100 MBps/link]

NAS – SAN Horse Race
• Storage Hardware: 1 k$/TB/y
• Storage Management: 10 k$ ... 300 k$/TB/y
• So as with Server Consolidation: Storage Consolidation
• Two styles:
  – NAS (Network Attached Storage): File Server
  – SAN (System Area Network): Disk Server
• I believe NAS is more manageable.

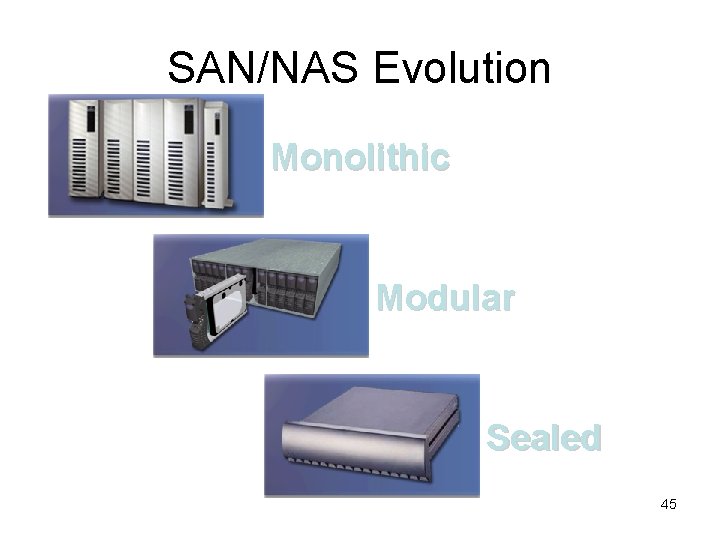

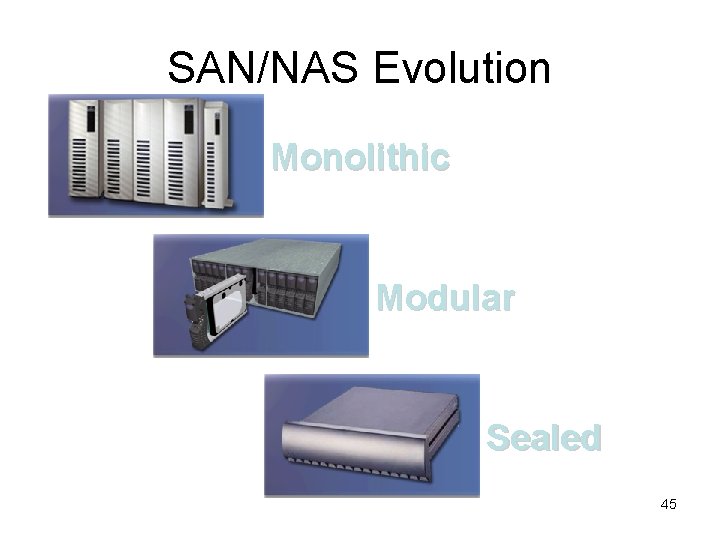

SAN/NAS Evolution Monolithic Modular Sealed 45

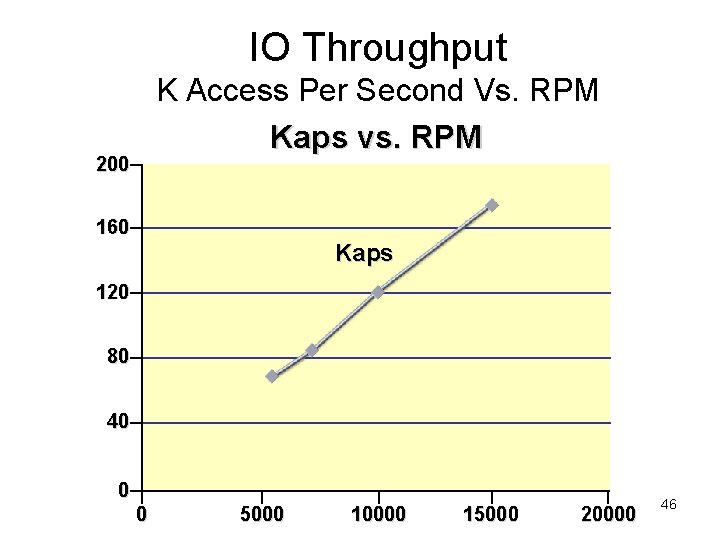

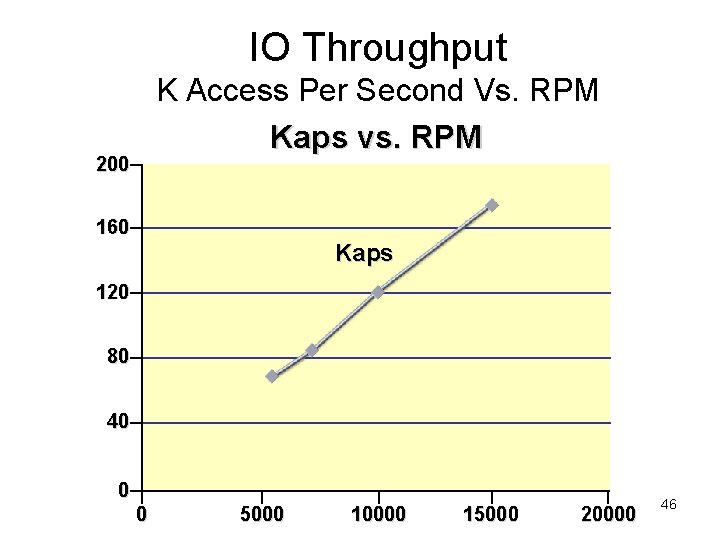

IO Throughput: K Accesses Per Second vs. RPM
[Figure: Kaps vs. RPM; y-axis Kaps, 0 to 200; x-axis RPM, 0 to 20,000]

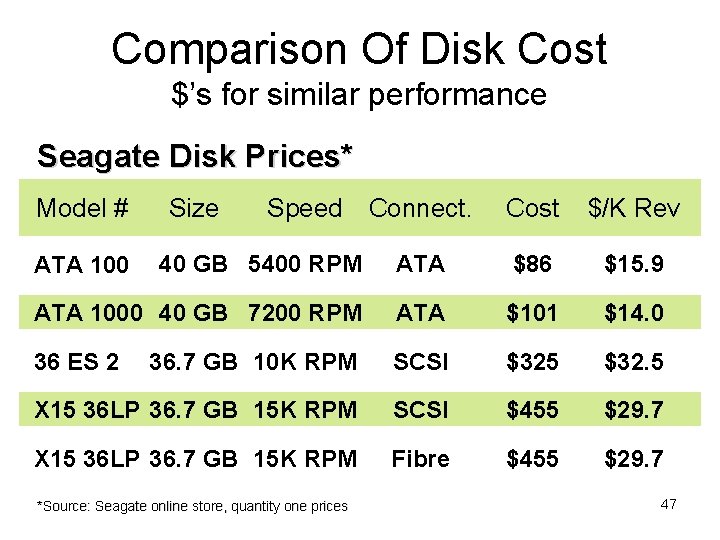

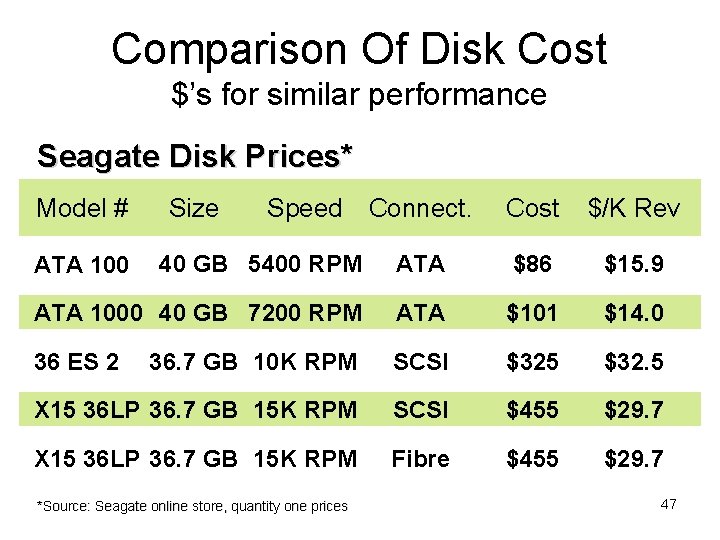

Comparison Of Disk Cost: $’s for similar performance

Seagate Disk Prices*

| Model # | Size | Speed | Connect. | Cost | $/K Rev |
|---|---|---|---|---|---|
| ATA 100 | 40 GB | 5400 RPM | ATA | $86 | $15.9 |
| ATA 1000 | 40 GB | 7200 RPM | ATA | $101 | $14.0 |
| 36ES2 | 36.7 GB | 10K RPM | SCSI | $325 | $32.5 |
| X15 36LP | 36.7 GB | 15K RPM | SCSI | $455 | $29.7 |
| X15 36LP | 36.7 GB | 15K RPM | Fibre | $455 | $29.7 |

*Source: Seagate online store, quantity one prices

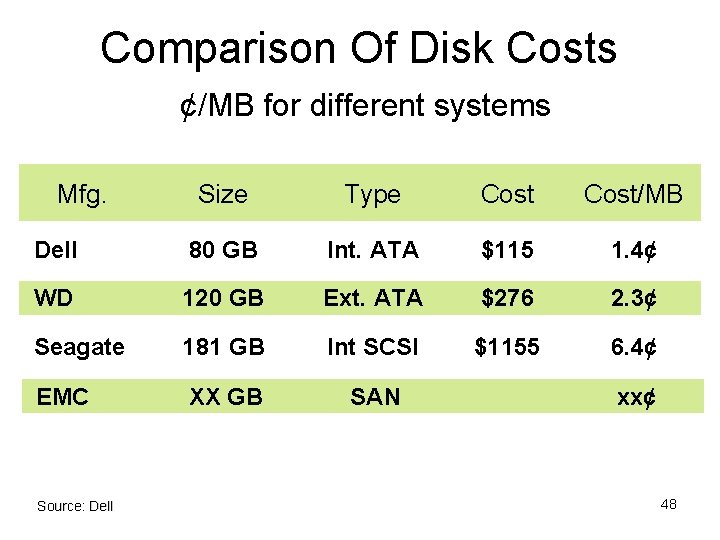

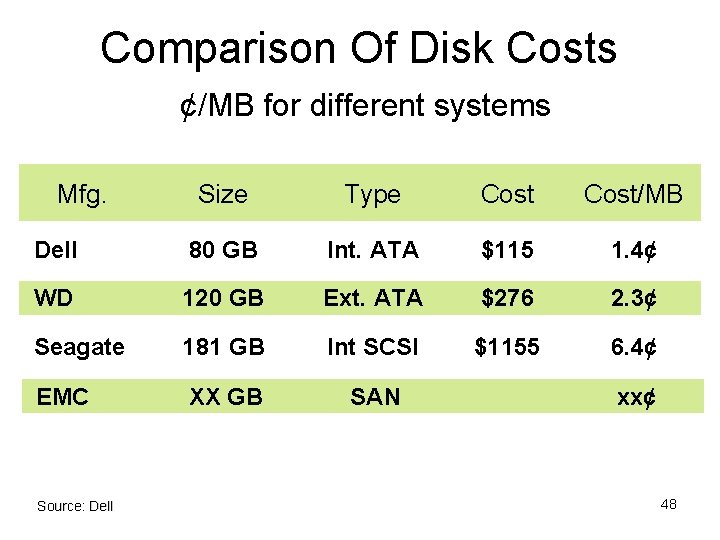

Comparison Of Disk Costs: ¢/MB for different systems

| Mfg. | Size | Type | Cost | Cost/MB |
|---|---|---|---|---|
| Dell | 80 GB | Int. ATA | $115 | 1.4¢ |
| WD | 120 GB | Ext. ATA | $276 | 2.3¢ |
| Seagate | 181 GB | Int. SCSI | $1155 | 6.4¢ |
| EMC | XX GB | SAN | | xx¢ |

Source: Dell

Why Serial ATA Matters
• Modern interconnect
• Point-to-point drive connection
  – 150 MBps → 300 MBps
• Facilitates ATA disk arrays
• Enables inexpensive “cool” storage

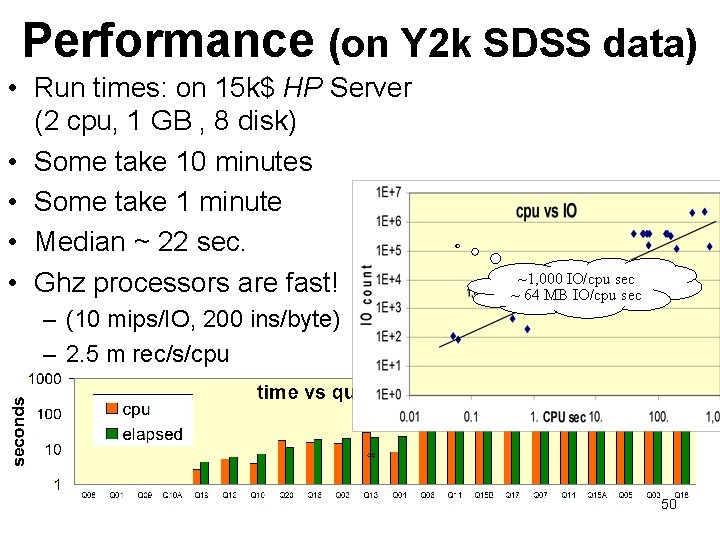

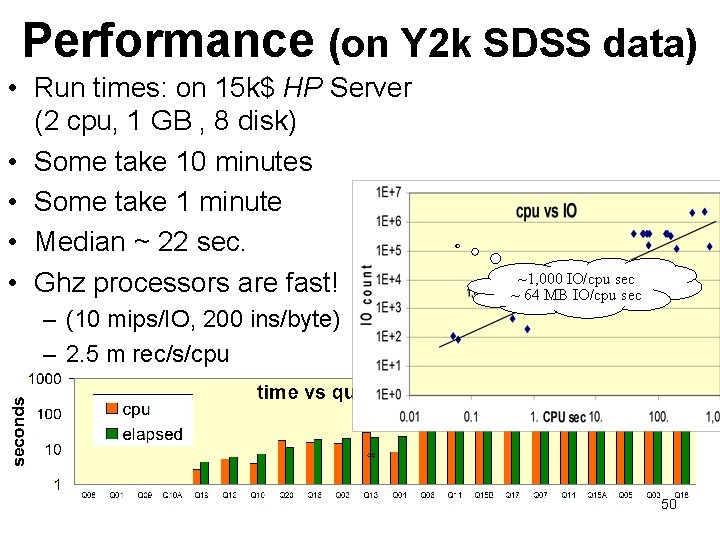

Performance (on Y2k SDSS data)
• Run times: on 15 k$ HP Server (2 cpu, 1 GB, 8 disk)
• Some take 10 minutes
• Some take 1 minute
• Median ~ 22 sec.
• GHz processors are fast!
  – (10 mips/IO, 200 ins/byte)
  – 2.5 m rec/s/cpu
[Figure labels: ~1,000 IO/cpu sec; ~64 MB IO/cpu sec]

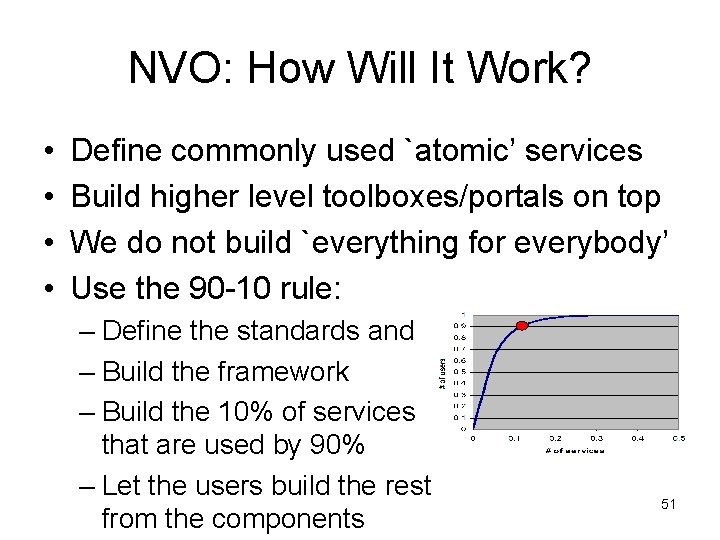

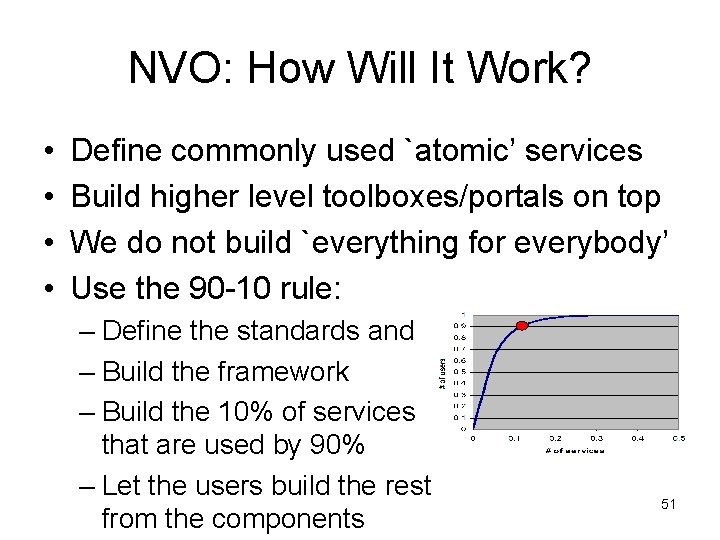

NVO: How Will It Work?
• Define commonly used “atomic” services
• Build higher level toolboxes/portals on top
• We do not build “everything for everybody”
• Use the 90-10 rule:
  – Define the standards and interfaces
  – Build the framework
  – Build the 10% of services that are used by 90%
  – Let the users build the rest from the components

Data Federations of Web Services
• Massive datasets live near their owners:
  – Near the instrument’s software pipeline
  – Near the applications
  – Near data knowledge and curation
  – Super Computer centers become Super Data Centers
• Each Archive publishes a web service
  – Schema: documents the data
  – Methods on objects (queries)
• Scientists get “personalized” extracts
• Uniform access to multiple Archives
  – A common global schema
[Figure label: Federation]

Grid and Web Services Synergy
• I believe the Grid will be many web services that share data (computrons are free)
• IETF standards provide
  – Naming
  – Authorization / Security / Privacy
  – Distributed Objects: Discovery, Definition, Invocation, Object Model
  – Higher level services: workflow, transactions, DB, ...
• Synergy: commercial Internet & Grid tools

Web Services: The Key?
• Web SERVER:
  – Given a url + parameters
  – Returns a web page (often dynamic)
• Web SERVICE:
  – Given an XML document (soap msg)
  – Returns an XML document
  – Tools make this look like an RPC: F(x, y, z) returns (u, v, w)
  – Distributed objects for the web.
  – + naming, discovery, security, ...
• Internet-scale distributed computing
[Figure: your program sends http to a Web Server and gets back a web page; your program sends soap to a Web Service and gets back an object in xml, which becomes data in your address space]

Grid?
• Harvesting spare cpu cycles is not important
  – They are “free” (1$/cpu day)
  – They need applications and data (which are not free) (1$/GB shipped)
• Accessing distributed data IS important
  – Send the programs to the data
  – Send the questions to the databases.
• Super Computer Centers become Super Data Centers and Super Application Centers

The Grid: Foster & Kesselman (Argonne National Laboratory) Internet computing and GRID technologies promise to change the way we tackle complex problems. They will enable largescale aggregation and sharing of computational, data and other resources across institutional boundaries …. Transform scientific disciplines ranging from high energy physics to the life sciences 56

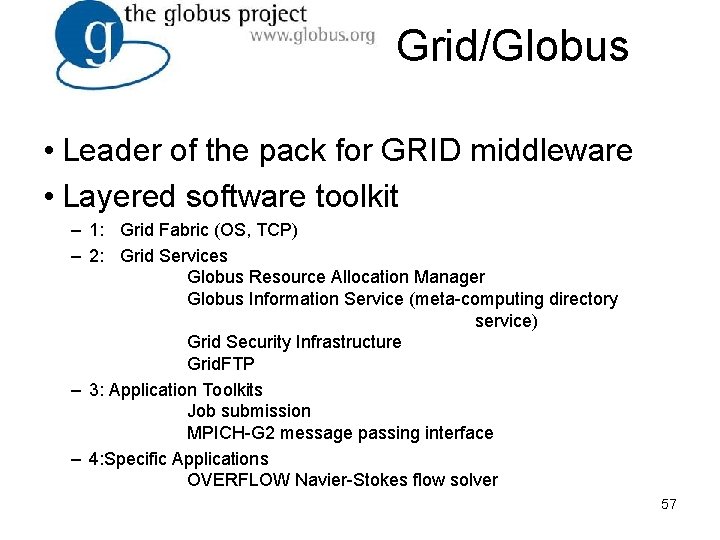

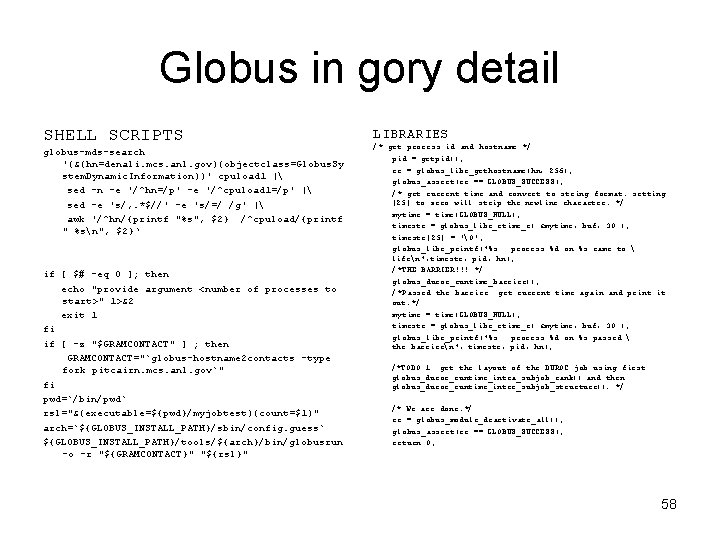

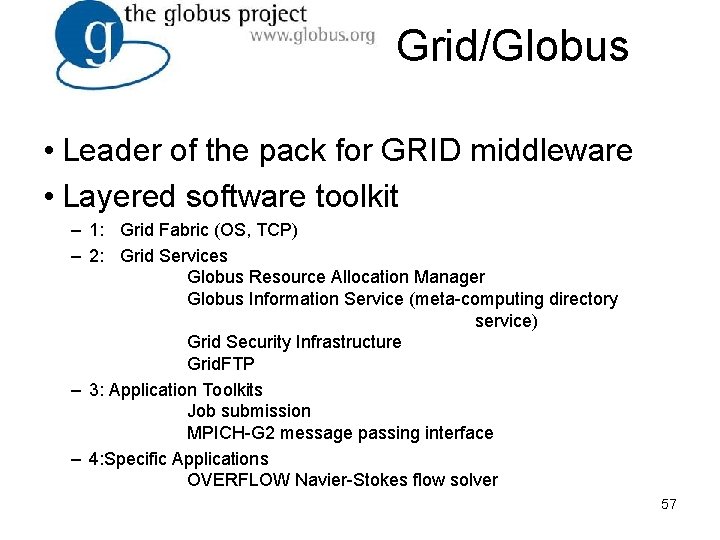

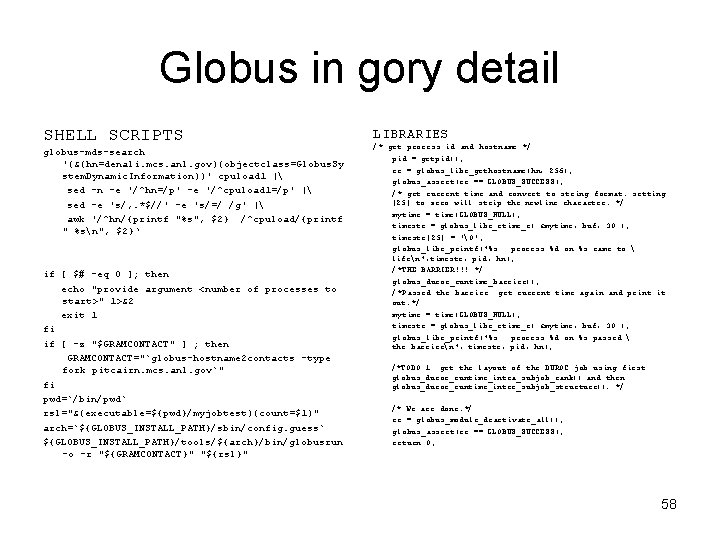

Grid/Globus
• Leader of the pack for GRID middleware
• Layered software toolkit
  – 1: Grid Fabric (OS, TCP)
  – 2: Grid Services
    Globus Resource Allocation Manager
    Globus Information Service (meta-computing directory service)
    Grid Security Infrastructure
    GridFTP
  – 3: Application Toolkits
    Job submission
    MPICH-G2 message passing interface
  – 4: Specific Applications
    OVERFLOW Navier-Stokes flow solver

Globus in gory detail

SHELL SCRIPTS

    # Query the directory service for one host's CPU load and print "host load".
    globus-mds-search '(&(hn=denali.mcs.anl.gov)(objectclass=GlobusSystemDynamicInformation))' cpuload1 |
      sed -n -e '/^hn=/p' -e '/^cpuload1=/p' |
      sed -e 's/,.*$//' -e 's/=/ /g' |
      awk '/^hn/{printf "%s", $2} /^cpuload/{printf " %s\n", $2}'

    # Start N copies of myjobtest through GRAM.
    if [ $# -eq 0 ]; then
      echo "provide argument <number of processes to start>" 1>&2
      exit 1
    fi
    if [ -z "$GRAMCONTACT" ] ; then
      GRAMCONTACT="`globus-hostname2contacts -type fork pitcairn.mcs.anl.gov`"
    fi
    pwd=`/bin/pwd`
    rsl="&(executable=${pwd}/myjobtest)(count=$1)"
    arch=`${GLOBUS_INSTALL_PATH}/sbin/config.guess`
    ${GLOBUS_INSTALL_PATH}/tools/${arch}/bin/globusrun -o -r "${GRAMCONTACT}" "${rsl}"

LIBRARIES

    /* get process id and hostname */
    pid = getpid();
    rc = globus_libc_gethostname(hn, 256);
    globus_assert(rc == GLOBUS_SUCCESS);

    /* get current time and convert to string format.
       setting [25] to zero will strip the newline character. */
    mytime = time(GLOBUS_NULL);
    timestr = globus_libc_ctime_r(&mytime, buf, 30);
    timestr[25] = '\0';
    globus_libc_printf("%s : process %d on %s came to life\n", timestr, pid, hn);

    /* THE BARRIER!!! */
    globus_duroc_runtime_barrier();

    /* Passed the barrier: get current time again and print it out. */
    mytime = time(GLOBUS_NULL);
    timestr = globus_libc_ctime_r(&mytime, buf, 30);
    globus_libc_printf("%s : process %d on %s passed the barrier\n", timestr, pid, hn);

    /* TODO 1: get the layout of the DUROC job using first
       globus_duroc_runtime_intra_subjob_rank() and then
       globus_duroc_runtime_inter_subjob_structure(). */

    /* We are done. */
    rc = globus_module_deactivate_all();
    globus_assert(rc == GLOBUS_SUCCESS);
    return 0;

Shielding Users • Users do not want to deal with XML, they want their data • Users do not want to deal with configuring grid computing, they want results • SOAP: data appears in user memory, XML is invisible • SOAP call: just a remote procedure 59

Atomic Services
• Metadata information about resources
  – Waveband
  – Sky coverage
  – Translation of names to universal dictionary (UCD)
• Simple search patterns on the resources
  – Cone Search
  – Image mosaic
  – Unit conversions
• Simple filtering, counting, histogramming

Higher Level Services • Built on Atomic Services • Perform more complex tasks • Examples – Automated resource discovery – Cross-identifications – Photometric redshifts – Outlier detections – Visualization facilities • Expectation: 61

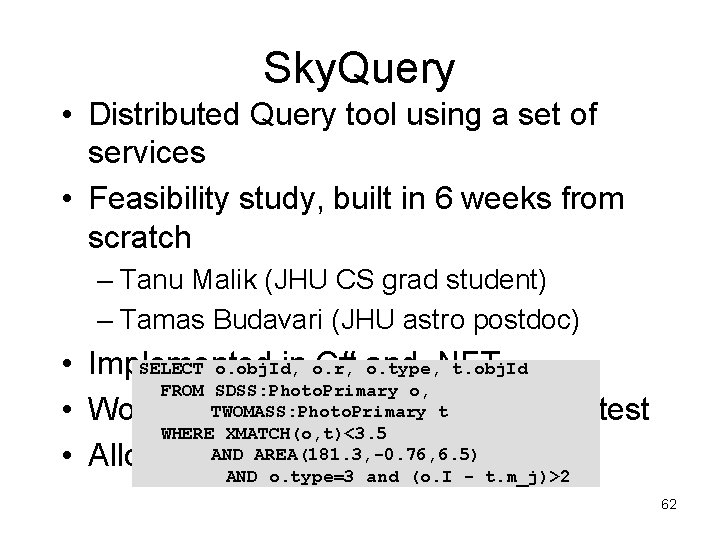

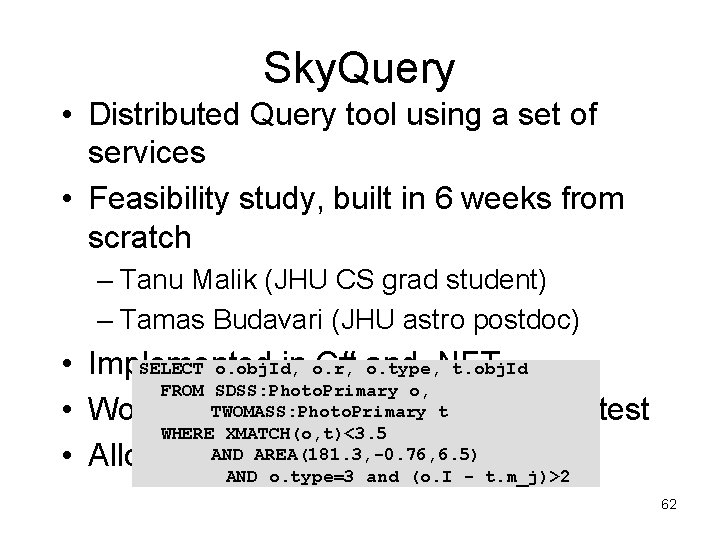

SkyQuery
• Distributed Query tool using a set of services
• Feasibility study, built in 6 weeks from scratch
  – Tanu Malik (JHU CS grad student)
  – Tamas Budavari (JHU astro postdoc)
• Implemented in C# and .NET
• Won 2nd prize of Microsoft XML Contest
• Allows queries like:

    SELECT o.objId, o.r, o.type, t.objId
    FROM SDSS:PhotoPrimary o, TWOMASS:PhotoPrimary t
    WHERE XMATCH(o,t)<3.5 AND AREA(181.3,-0.76,6.5)
      AND o.type=3 and (o.I - t.m_j)>2

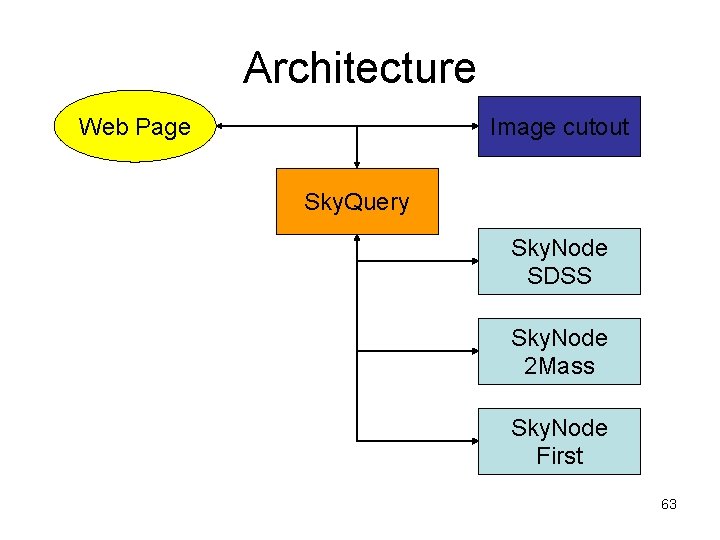

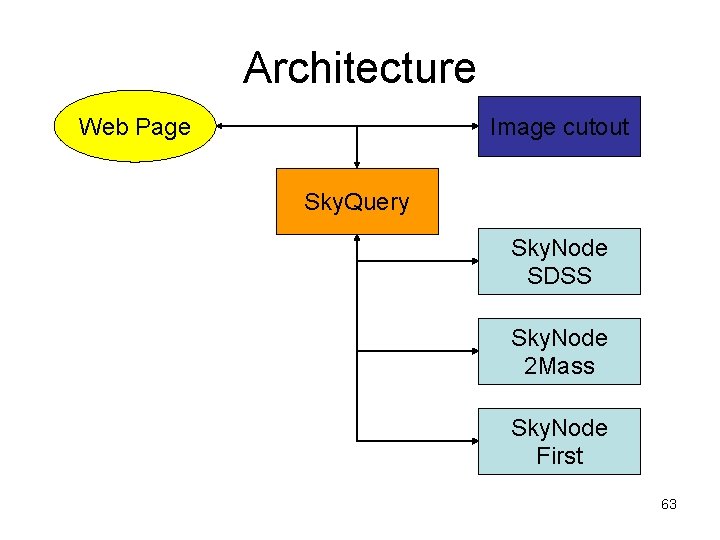

Architecture
[Figure: Web Page, Image cutout, SkyQuery, and three SkyNodes: SDSS, 2MASS, FIRST]

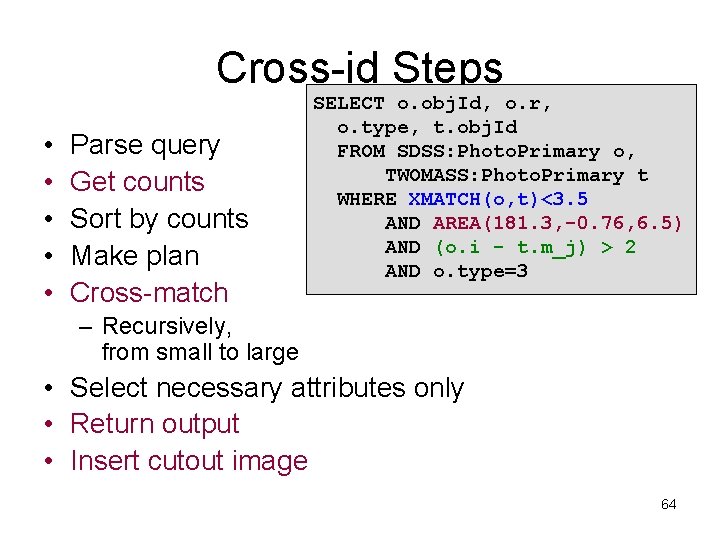

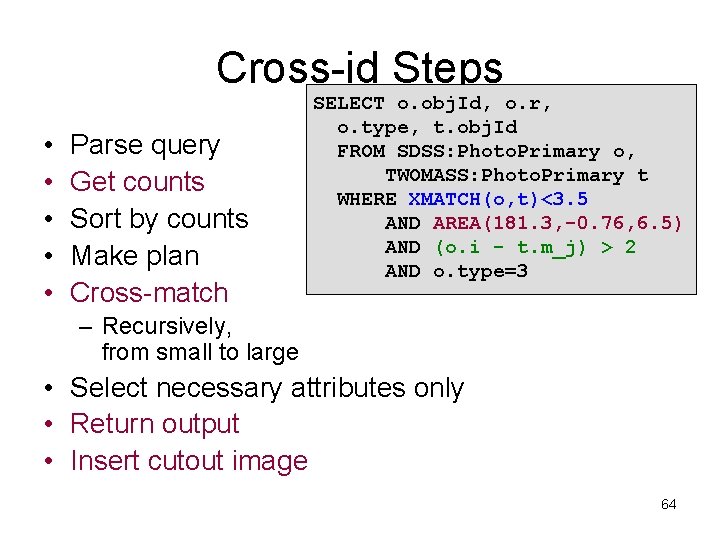

Cross-id Steps
• Parse query
• Get counts
• Sort by counts
• Make plan
• Cross-match
  – Recursively, from small to large
• Select necessary attributes only
• Return output
• Insert cutout image

    SELECT o.objId, o.r, o.type, t.objId
    FROM SDSS:PhotoPrimary o, TWOMASS:PhotoPrimary t
    WHERE XMATCH(o,t)<3.5 AND AREA(181.3,-0.76,6.5)
      AND (o.i - t.m_j) > 2 AND o.type=3
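The "get counts, sort by counts, cross-match from small to large" steps can be sketched in a few lines. This is a toy illustration, not SkyQuery's implementation: the archives are in-memory lists with made-up object ids and positions, and the match test is a plain angular-separation cutoff in arcseconds standing in for XMATCH, whose real semantics are not given on the slide.

```python
import math

def angular_sep_arcsec(a, b):
    """Small-angle separation in arcseconds between two (ra, dec) points in degrees."""
    dra = (a[0] - b[0]) * math.cos(math.radians((a[1] + b[1]) / 2))
    ddec = a[1] - b[1]
    return math.hypot(dra, ddec) * 3600.0

def cross_match(archives, tolerance_arcsec):
    """archives: dict of name -> list of (obj_id, ra, dec). Returns (plan, matches)."""
    # Get counts, sort by counts, make plan: smallest archive first.
    plan = sorted(archives, key=lambda name: len(archives[name]))
    first = plan[0]
    partial = [({first: obj_id}, (ra, dec)) for obj_id, ra, dec in archives[first]]
    # Cross-match step by step, from small to large, carrying partial tuples along.
    for name in plan[1:]:
        extended = []
        for ids, pos in partial:
            for obj_id, ra, dec in archives[name]:
                if angular_sep_arcsec(pos, (ra, dec)) < tolerance_arcsec:
                    extended.append(({**ids, name: obj_id}, pos))
        partial = extended
    return plan, [ids for ids, _ in partial]

# Hypothetical objects near the slide's AREA(181.3, -0.76, ...) center.
archives = {
    "SDSS": [("s1", 181.3005, -0.7601), ("s2", 181.3500, -0.7500), ("s3", 181.2000, -0.8000)],
    "TWOMASS": [("t1", 181.3000, -0.7600), ("t2", 181.4000, -0.7000)],
}
plan, matches = cross_match(archives, tolerance_arcsec=3.5)
print(plan)
print(matches)
```

Starting from the smallest archive keeps the set of partial matches small at every step, which is the point of sorting by counts before building the plan.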

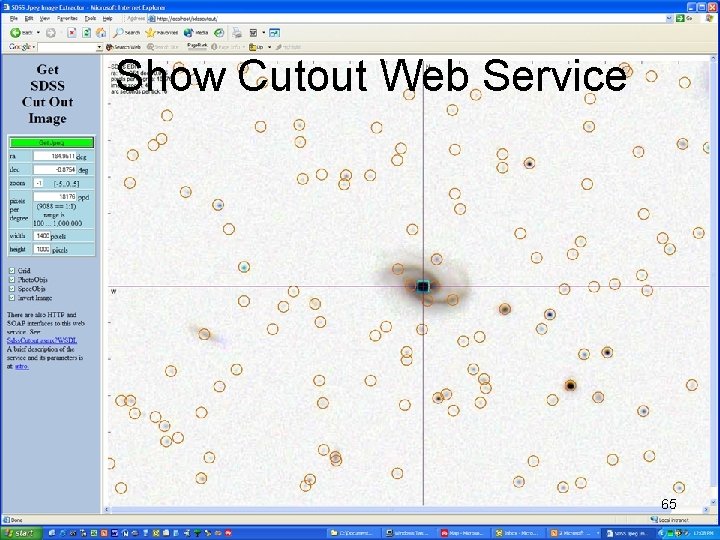

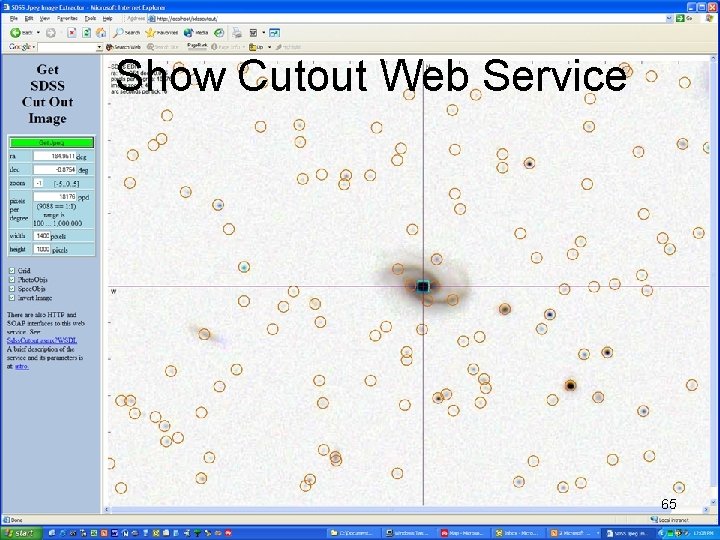

Show Cutout Web Service 65