High Performance Parallel Programming Dirk van der Knijff



Dirk van der Knijff, Advanced Research Computing, Information Division

Lecture 8: Message Passing Interface (MPI), part 2

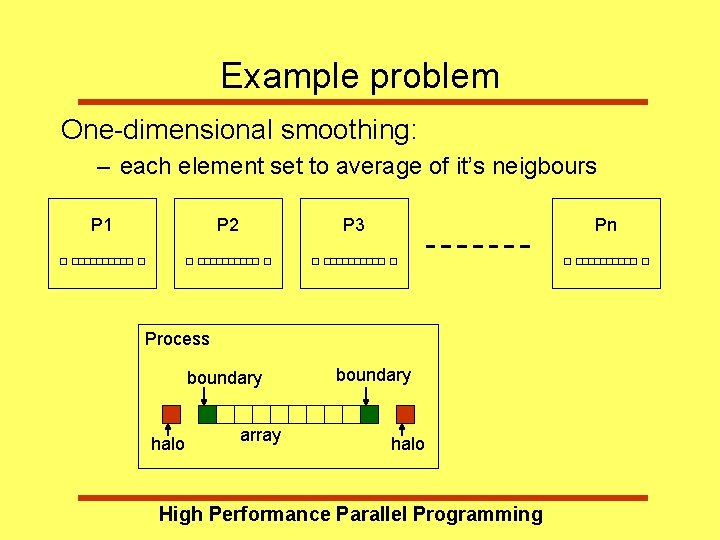

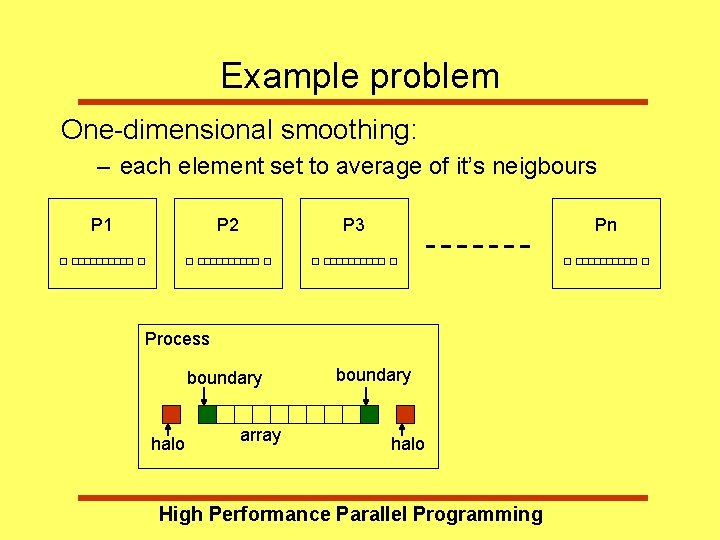

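Example problem: one-dimensional smoothing

- Each element is set to the average of its neighbours.
- The array is split among processes P1, P2, P3, ..., Pn. Each process keeps halo cells just beyond its process boundaries, holding copies of the neighbouring values it needs.

(Figure: the array divided among processes P1, P2, P3, ..., Pn, with halo cells at each process boundary and at the array boundary.)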
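The smoothing step on one process can be sketched in plain C (no MPI calls). This is a minimal sketch under two assumptions the slide does not fix: "average of its neighbours" is taken to mean the mean of the left and right neighbours, and the local strip is stored as u[0..n+1] with u[0] and u[n+1] as the halo cells. The function name smooth_sweep is hypothetical.

```c
#include <stddef.h>

/* One smoothing sweep over a process's local strip (hypothetical helper).
 * Assumed layout: u_old[1..n] are the cells this process owns;
 * u_old[0] and u_old[n+1] are halo cells holding copies of the
 * neighbouring processes' boundary values.
 * Each owned cell becomes the mean of its left and right neighbours.
 * Reading only from u_old and writing only to u_new means the result
 * does not depend on the order in which cells are visited.
 * The halo cells of u_new are not written; communication refills them. */
void smooth_sweep(const double *u_old, double *u_new, size_t n)
{
    for (size_t i = 1; i <= n; i++) {
        u_new[i] = 0.5 * (u_old[i - 1] + u_old[i + 1]);
    }
}
```

For example, with n = 4 owned cells and u_old = {10, 0, 2, 4, 6, 20} (halo values 10 and 20), the owned cells of u_new become 6, 2, 4 and 12.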

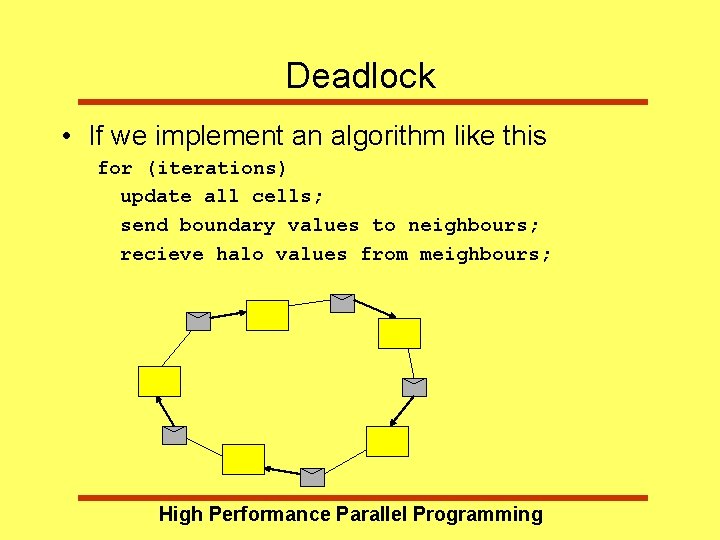

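Deadlock

If we implement the algorithm like this:

    for (iterations) {
        update all cells;
        send boundary values to neighbours;
        receive halo values from neighbours;
    }

then every process sends before it receives. If the sends are synchronous (or MPI does not buffer them), every process blocks in its send waiting for a matching receive that is never posted, and the program deadlocks.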

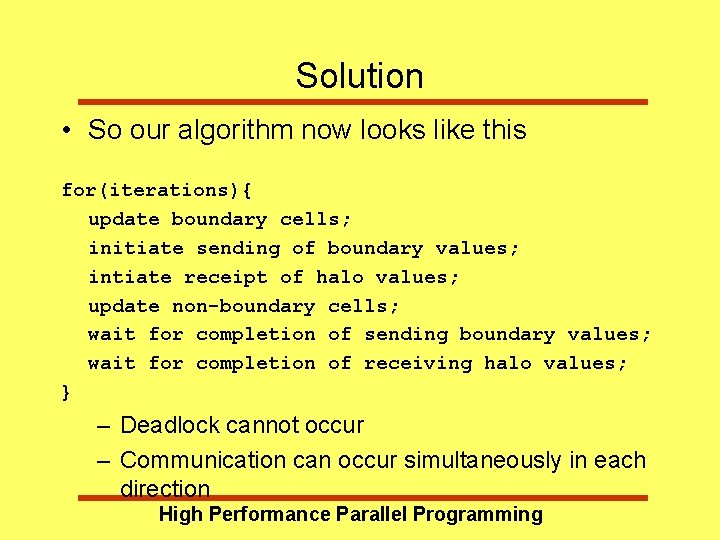

Non-blocking communications

- The routine returns before the communication completes.
- Communication is separated into phases:
  - initiate the non-blocking communication
  - do some work (perhaps involving other communications)
  - wait for the non-blocking communication to complete
- You can test for completion before waiting (or instead of waiting).

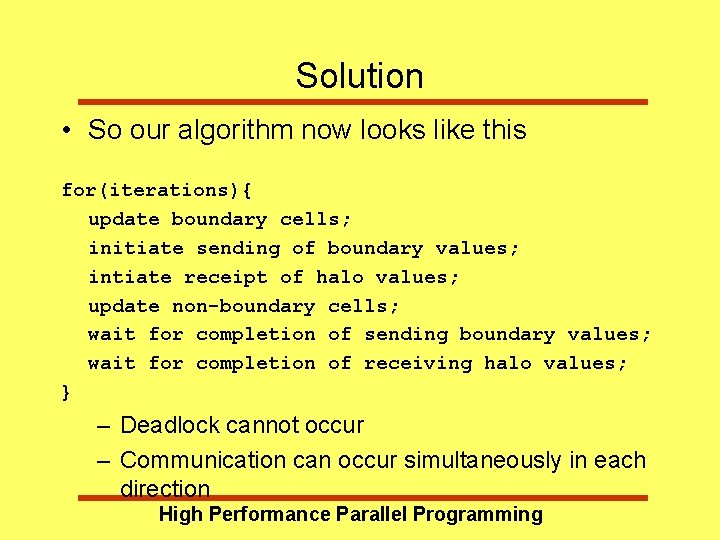

Solution

So our algorithm now looks like this:

    for (iterations) {
        update boundary cells;
        initiate sending of boundary values;
        initiate receipt of halo values;
        update non-boundary cells;
        wait for completion of sending boundary values;
        wait for completion of receiving halo values;
    }

- Deadlock cannot occur.
- Communication can occur simultaneously in each direction.
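Reordering the loop body is safe because every new value is computed from the previous iteration's values. A plain-C sketch (MPI calls omitted; the names smooth_range and smooth_overlapped are hypothetical, and the neighbour-mean update with halo cells u[0] and u[n+1] is an assumption) updates the two boundary cells first and the interior later, and produces the same values as a single sweep over all owned cells:

```c
#include <assert.h>
#include <stddef.h>

/* Update owned cells lo..hi (inclusive) from the previous iteration's values. */
static void smooth_range(const double *u_old, double *u_new, size_t lo, size_t hi)
{
    for (size_t i = lo; i <= hi; i++)
        u_new[i] = 0.5 * (u_old[i - 1] + u_old[i + 1]);
}

/* One iteration in the order used by the loop above (hypothetical helper). */
void smooth_overlapped(const double *u_old, double *u_new, size_t n)
{
    assert(n >= 2); /* sketch assumes at least two owned cells */

    /* update boundary cells: these are the values the neighbours need */
    smooth_range(u_old, u_new, 1, 1);
    smooth_range(u_old, u_new, n, n);

    /* an MPI version would initiate the non-blocking sends of u_new[1]
       and u_new[n], and the receives into the halo cells, at this point */

    /* update non-boundary cells: cells 2..n-1 never read the halo cells */
    smooth_range(u_old, u_new, 2, n - 1);

    /* ...and would wait for both sets of communications to complete here */
}
```

The interior update is the work that overlaps with communication, which is why it is placed between initiating and completing the transfers.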

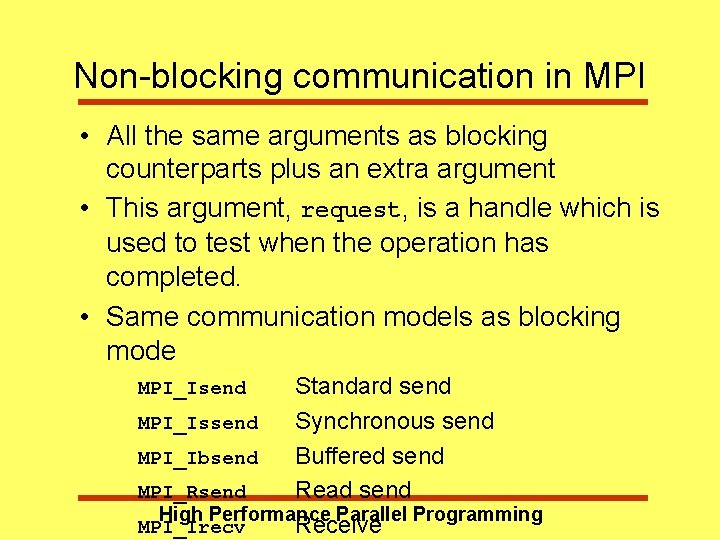

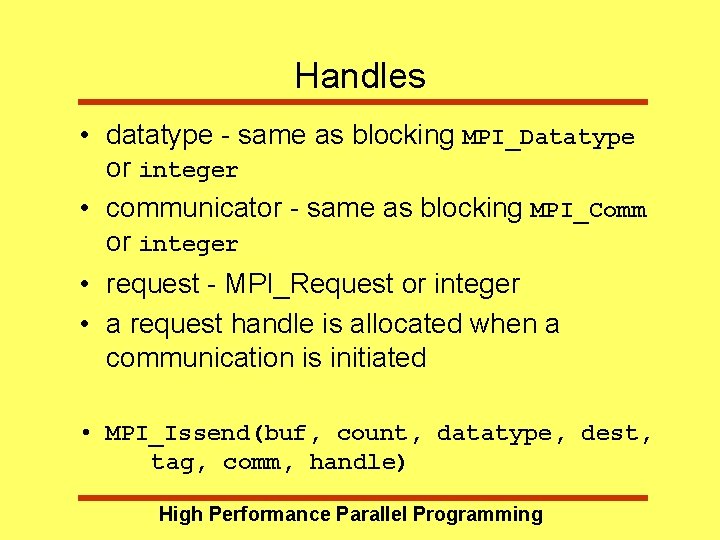

Non-blocking communication in MPI

- Each routine takes the same arguments as its blocking counterpart, plus one extra argument.
- This argument, request, is a handle used to test whether the operation has completed.
- The communication modes are the same as for blocking communication:

| Mode | Non-blocking routine |
|---|---|
| Standard send | MPI_Isend |
| Synchronous send | MPI_Issend |
| Buffered send | MPI_Ibsend |
| Ready send | MPI_Irsend |
| Receive | MPI_Irecv |

Handles

- datatype: same as for blocking calls (MPI_Datatype in C, INTEGER in Fortran)
- communicator: same as for blocking calls (MPI_Comm in C, INTEGER in Fortran)
- request: MPI_Request in C, INTEGER in Fortran
- A request handle is allocated when a communication is initiated, e.g. MPI_Issend(buf, count, datatype, dest, tag, comm, request).

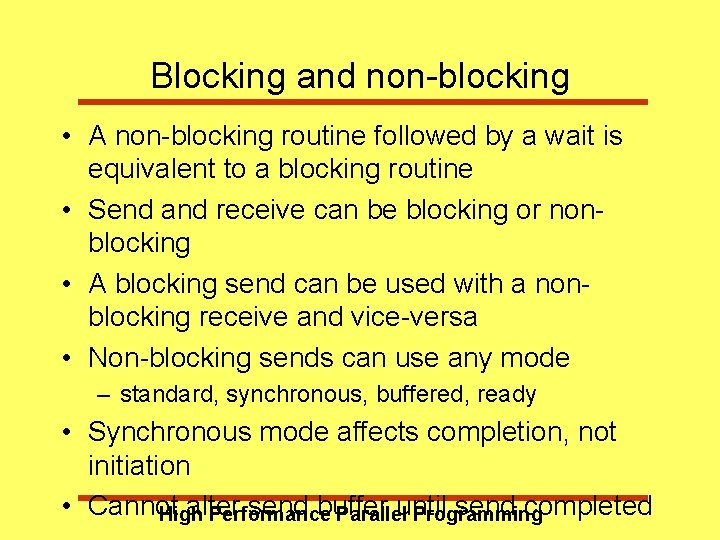

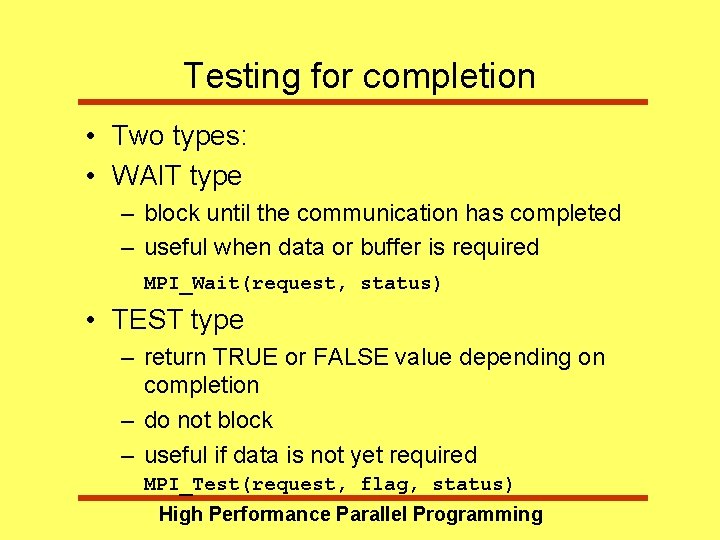

Testing for completion

There are two types of completion test:

- WAIT type
  - blocks until the communication has completed
  - useful when the data or the buffer is needed
  - MPI_Wait(request, status)
- TEST type
  - sets flag to true or false depending on whether the communication has completed
  - does not block
  - useful if the data is not yet needed
  - MPI_Test(request, flag, status)

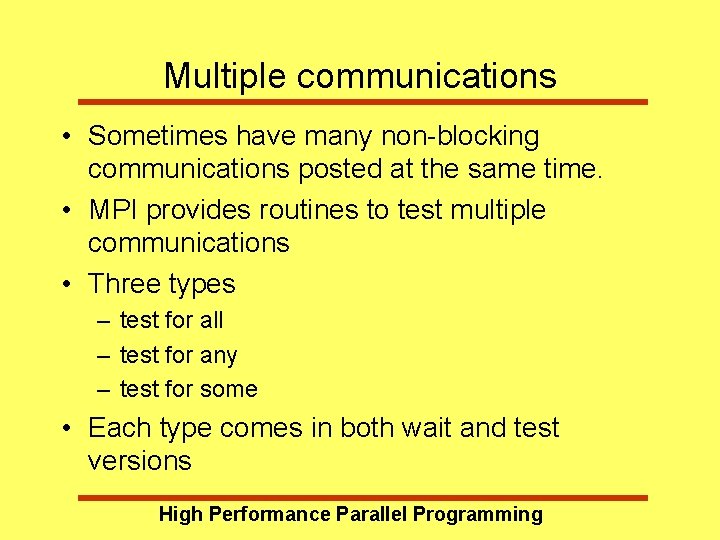

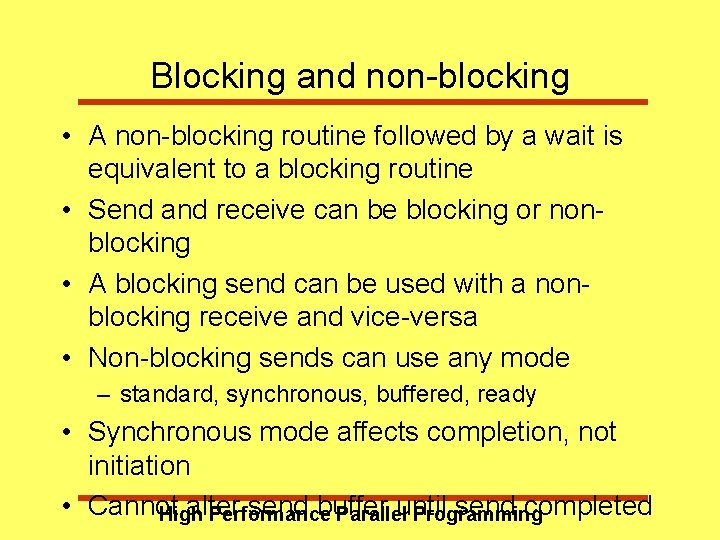

Blocking and non-blocking

- A non-blocking routine followed by a wait is equivalent to the blocking routine.
- Sends and receives can each be blocking or non-blocking.
- A blocking send can be matched by a non-blocking receive, and vice versa.
- Non-blocking sends can use any mode: standard, synchronous, buffered or ready.
- Synchronous mode affects completion, not initiation.
- The send buffer must not be modified until the send has completed.

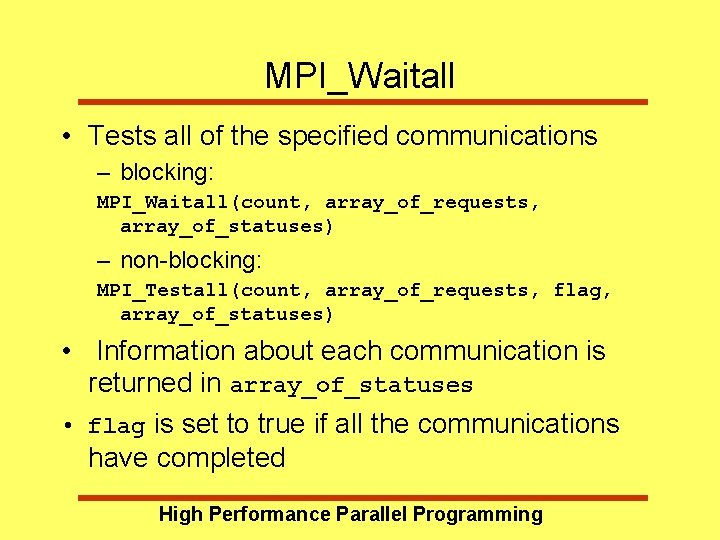

Multiple communications

- Sometimes many non-blocking communications are posted at the same time.
- MPI provides routines to test multiple communications.
- Three types:
  - test for all
  - test for any
  - test for some
- Each type comes in both wait and test versions.

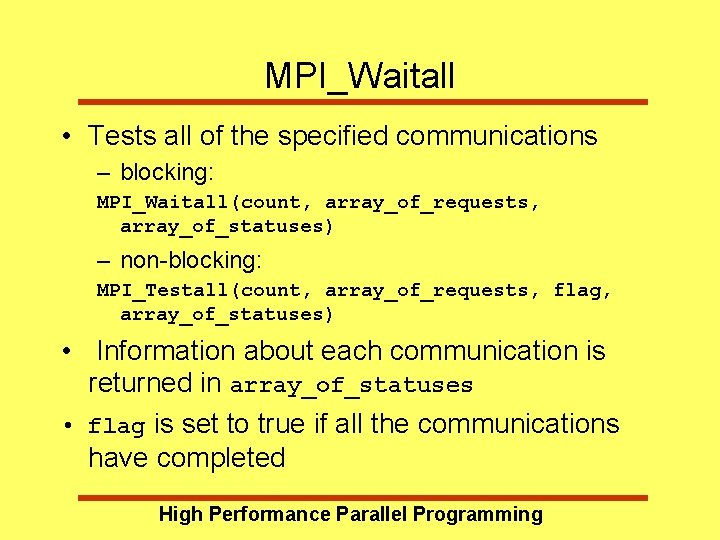

MPI_Waitall

- Tests all of the specified communications:
  - blocking: MPI_Waitall(count, array_of_requests, array_of_statuses)
  - non-blocking: MPI_Testall(count, array_of_requests, flag, array_of_statuses)
- Information about each communication is returned in array_of_statuses.
- flag is set to true if all the communications have completed.

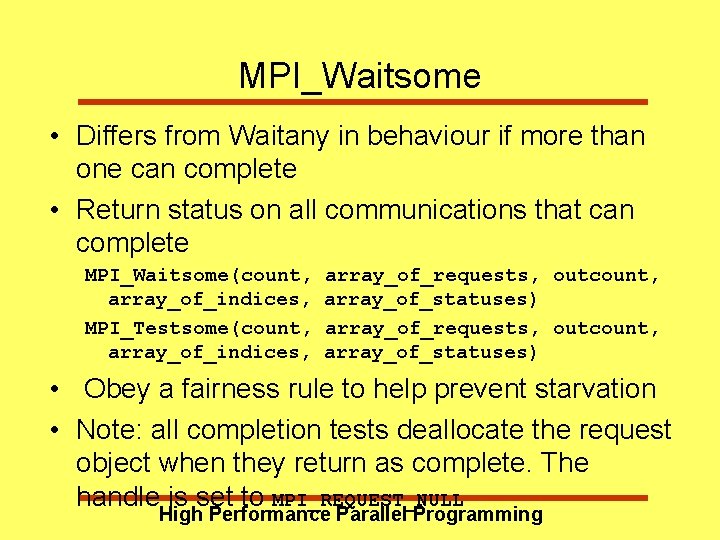

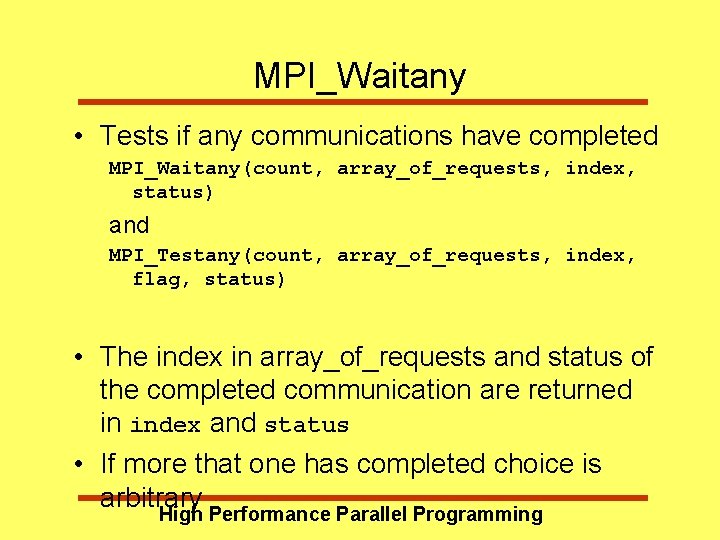

MPI_Waitany

- Tests whether any of the communications has completed:
  - MPI_Waitany(count, array_of_requests, index, status)
  - MPI_Testany(count, array_of_requests, index, flag, status)
- The index in array_of_requests and the status of the completed communication are returned in index and status.
- If more than one has completed, the choice is arbitrary.

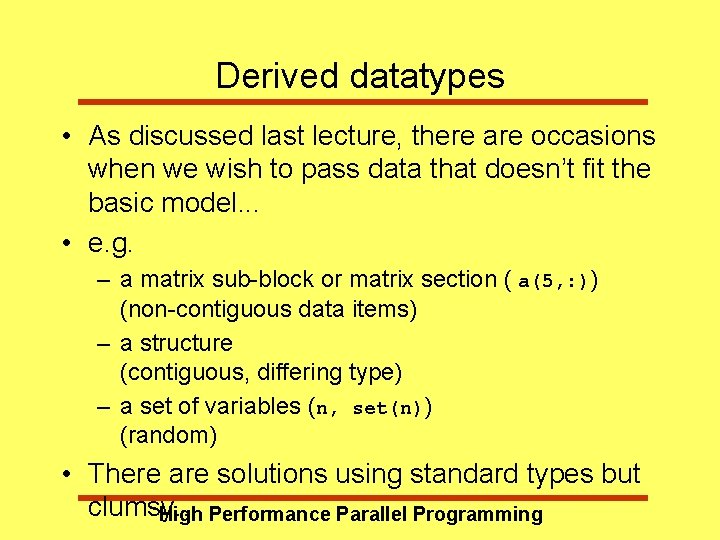

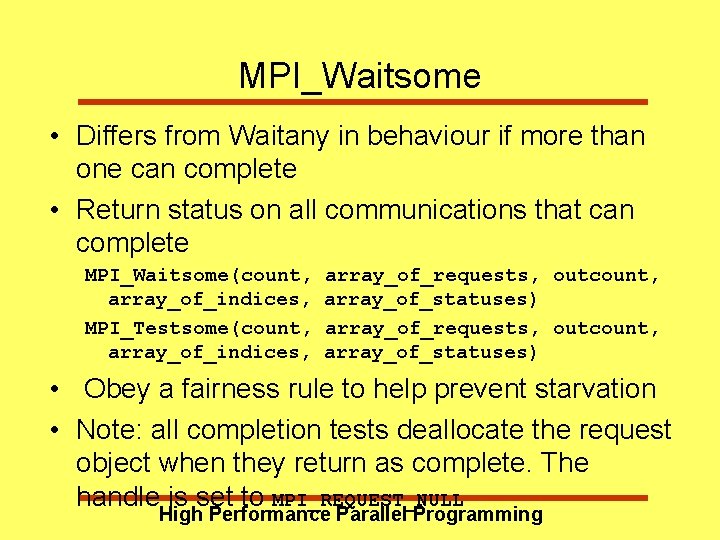

MPI_Waitsome

- Differs from MPI_Waitany in its behaviour when more than one communication can complete.
- Returns the status of every communication that has completed:
  - MPI_Waitsome(incount, array_of_requests, outcount, array_of_indices, array_of_statuses)
  - MPI_Testsome(incount, array_of_requests, outcount, array_of_indices, array_of_statuses)
- Obeys a fairness rule to help prevent starvation.
- Note: all completion tests deallocate a (non-persistent) request object when they report it complete, and set the handle to MPI_REQUEST_NULL.

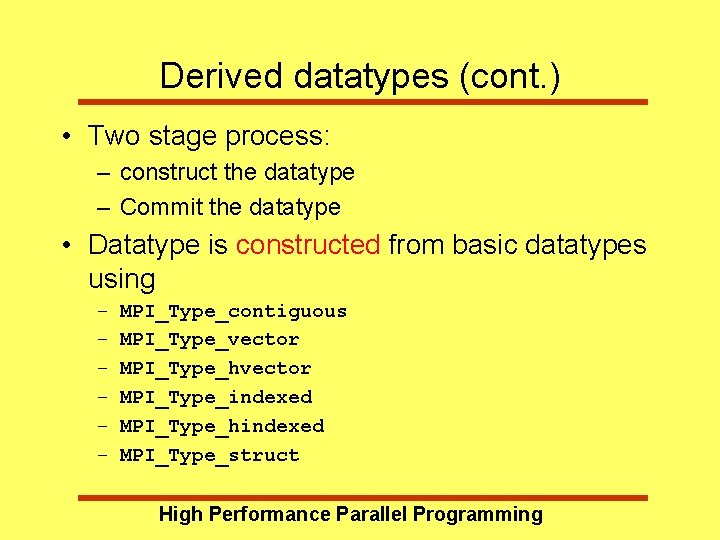

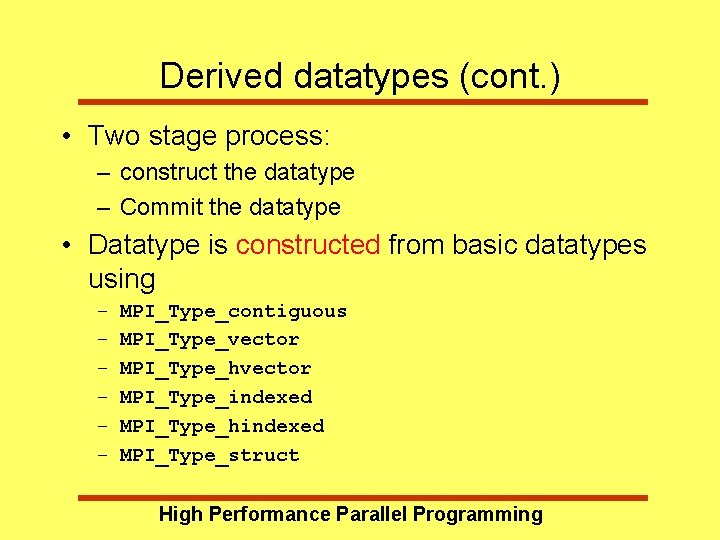

Derived datatypes

- As discussed last lecture, there are occasions when we wish to pass data that doesn't fit the basic model, e.g.
  - a matrix sub-block or matrix section such as a(5, :) (non-contiguous data items)
  - a structure (contiguous items of differing types)
  - a set of variables such as (n, set(n)) (at arbitrary locations in memory)
- There are solutions using the basic datatypes, but they are clumsy.

Derived datatypes (cont.)

- Two-stage process:
  - construct the datatype
  - commit the datatype
- A datatype is constructed from basic datatypes using:
  - MPI_Type_contiguous
  - MPI_Type_vector
  - MPI_Type_hvector
  - MPI_Type_indexed
  - MPI_Type_hindexed
  - MPI_Type_struct
- MPI-2 and later name the h-variants and the struct constructor MPI_Type_create_hvector, MPI_Type_create_hindexed and MPI_Type_create_struct.

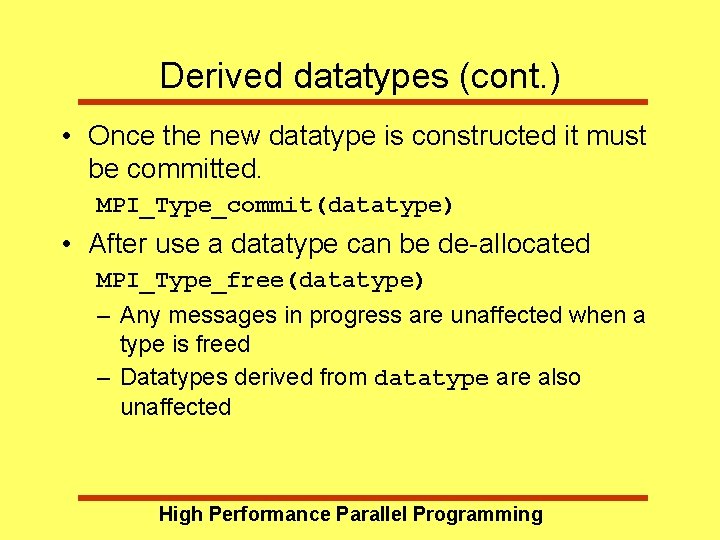

Derived datatypes (cont.)

- Once the new datatype is constructed, it must be committed: MPI_Type_commit(datatype)
- After use, a datatype can be deallocated: MPI_Type_free(datatype)
  - Any messages in progress are unaffected when a type is freed.
  - Datatypes derived from datatype are also unaffected.

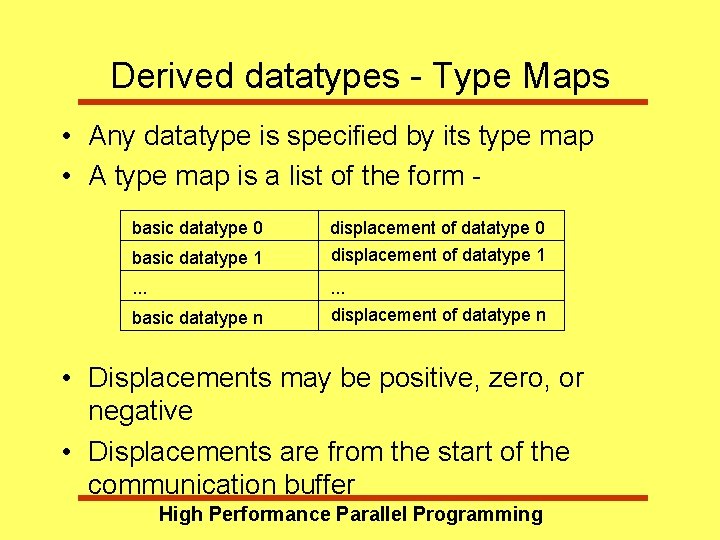

Derived datatypes - Type Maps

- Any datatype is specified by its type map.
- A type map is a list of the form:

| Basic datatype | Displacement |
|---|---|
| basic datatype 0 | displacement of datatype 0 |
| basic datatype 1 | displacement of datatype 1 |
| ... | ... |
| basic datatype n | displacement of datatype n |

- Displacements may be positive, zero, or negative.
- Displacements are measured in bytes from the start of the communication buffer.

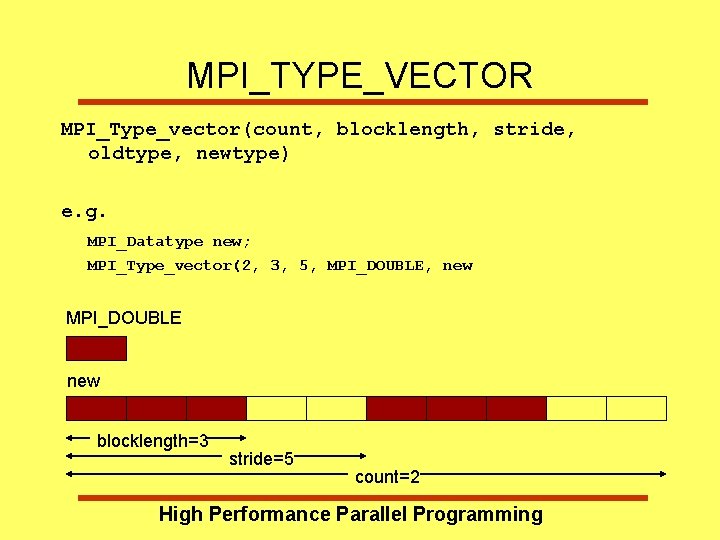

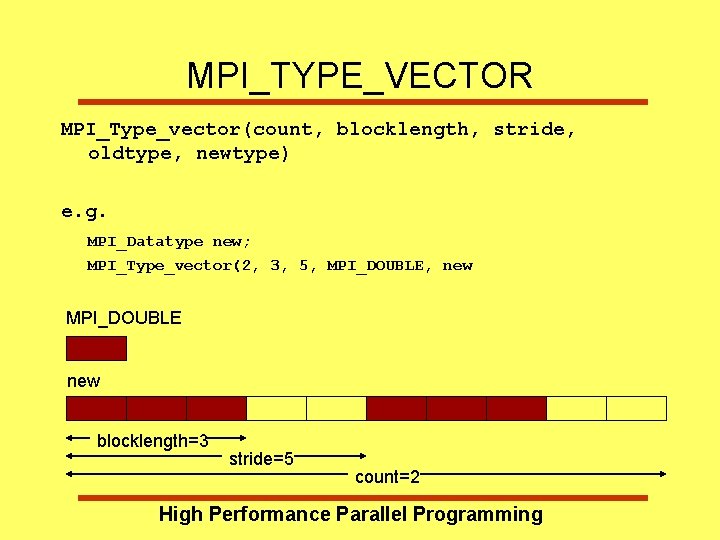

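MPI_TYPE_VECTOR

MPI_Type_vector(count, blocklength, stride, oldtype, newtype)

e.g.

    MPI_Datatype newtype;
    MPI_Type_vector(2, 3, 5, MPI_DOUBLE, &newtype);

This describes count = 2 blocks, each of blocklength = 3 MPI_DOUBLE elements, with the starts of consecutive blocks stride = 5 elements apart.

(Figure: newtype laid over a row of MPI_DOUBLE elements, showing blocklength = 3, stride = 5, count = 2.)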
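To see which elements a vector type selects, the offsets can be enumerated in plain C. This is a sketch of the layout only: the helper vector_offsets is hypothetical, makes no MPI calls, and reports offsets in units of oldtype elements rather than bytes.

```c
#include <stddef.h>

/* Offsets, in units of oldtype, of the elements selected by
 * MPI_Type_vector(count, blocklength, stride, oldtype, ...).
 * out must have room for count * blocklength entries.
 * Returns the number of offsets written. */
size_t vector_offsets(int count, int blocklength, int stride, int *out)
{
    size_t k = 0;
    for (int b = 0; b < count; b++) {          /* block b starts at b * stride */
        for (int j = 0; j < blocklength; j++)  /* consecutive elements within a block */
            out[k++] = b * stride + j;
    }
    return k;
}
```

For MPI_Type_vector(2, 3, 5, MPI_DOUBLE, ...), vector_offsets(2, 3, 5, off) yields 0, 1, 2, 5, 6, 7: elements 3 and 4 are skipped.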

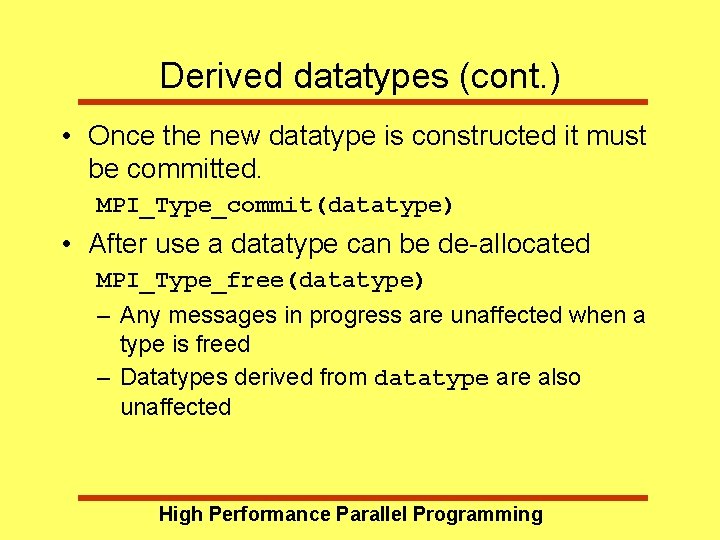

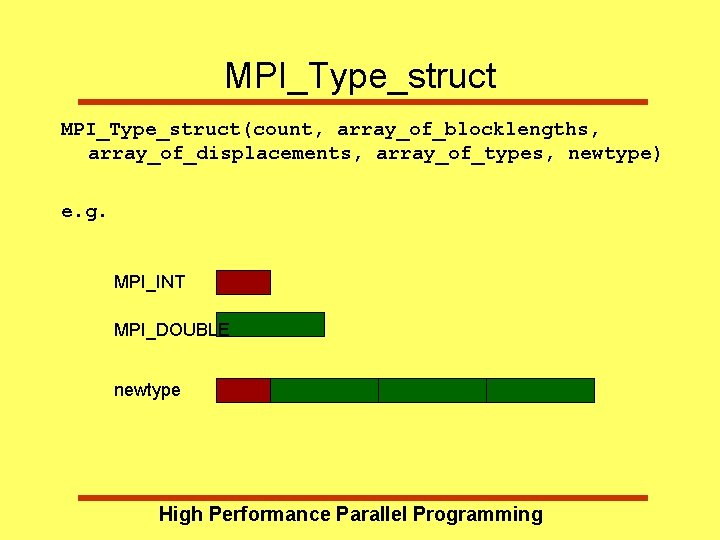

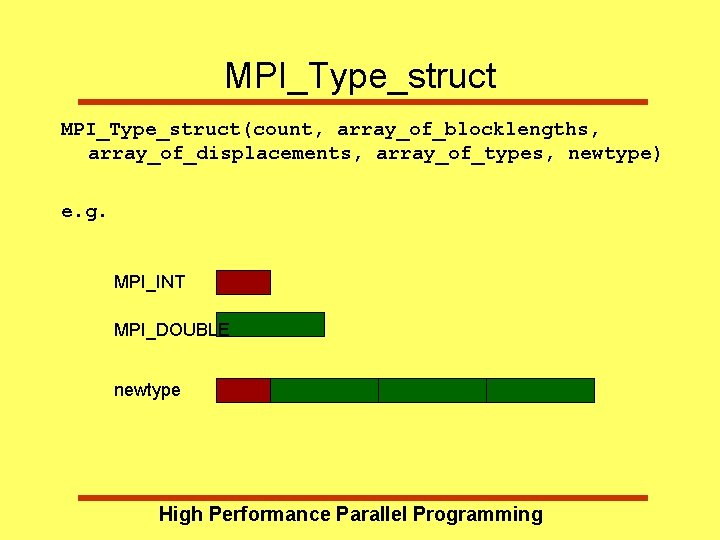

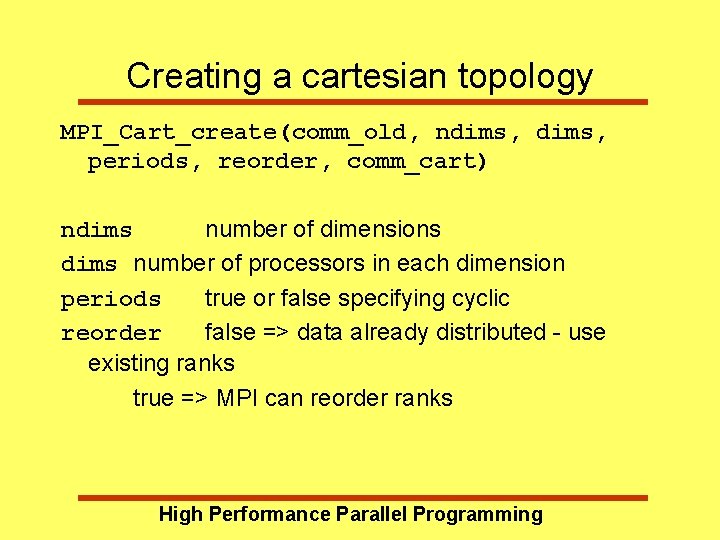

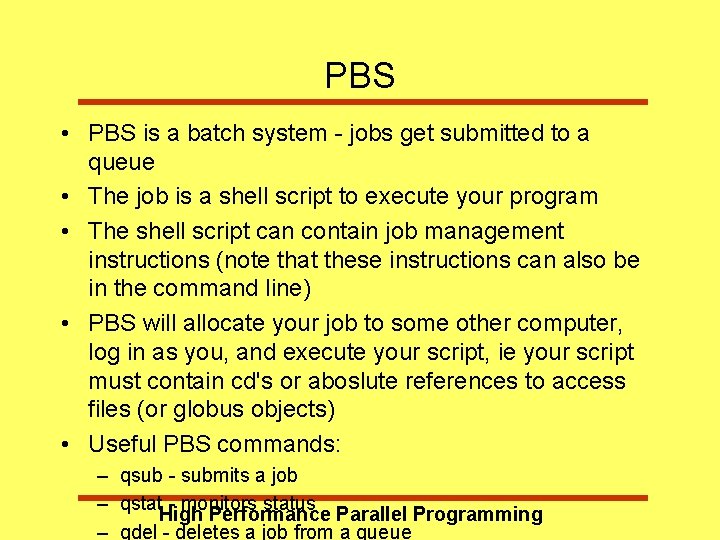

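MPI_Type_struct(count, array_of_blocklengths, array_of_displacements, array_of_types, newtype)

- Block i consists of array_of_blocklengths[i] elements of type array_of_types[i], starting array_of_displacements[i] bytes from the start of the buffer.

(Figure: e.g. a newtype built from an MPI_INT block followed by an MPI_DOUBLE block.)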


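MPI_Type_struct - example

MPI-2 renamed MPI_Type_struct to MPI_Type_create_struct, and MPI-3 removed the old name (along with MPI_Type_extent and MPI_Address), so the code uses the current routines. The displacement of the double block is taken from actual addresses because the compiler may insert padding after the int; setting disp[1] to the extent of MPI_INT is only correct when there is no such padding.

    int          blocklen[2];
    MPI_Aint     disp[2], base;
    MPI_Datatype type[2], newtype;
    struct {
        int    ival;
        double dble[3];
    } msg;

    /* displacements relative to the start of msg */
    MPI_Get_address(&msg, &base);
    MPI_Get_address(&msg.ival, &disp[0]);
    MPI_Get_address(&msg.dble[0], &disp[1]);
    disp[0] -= base;
    disp[1] -= base;

    type[0] = MPI_INT;     blocklen[0] = 1;
    type[1] = MPI_DOUBLE;  blocklen[1] = 3;

    MPI_Type_create_struct(2, blocklen, disp, type, &newtype);
    MPI_Type_commit(&newtype);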
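Whether padding follows the int can be checked without MPI. This plain-C sketch uses a structure of the same shape as msg (one int followed by three doubles; the struct tag msg_layout and the function dble_displacement are hypothetical names) and finds where the doubles start with offsetof. On many platforms the result is 8 rather than sizeof(int), which is the case in which a displacement taken from the extent of MPI_INT would be wrong.

```c
#include <stddef.h>

/* Same shape as the msg structure: one int, then three doubles. */
struct msg_layout {
    int    ival;
    double dble[3];
};

/* Byte displacement of the double block from the start of the structure.
 * The compiler may add padding after the int to align the doubles, so
 * this can be larger than sizeof(int). */
size_t dble_displacement(void)
{
    return offsetof(struct msg_layout, dble);
}
```

The first member always starts at displacement 0, so only the second displacement needs this care.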

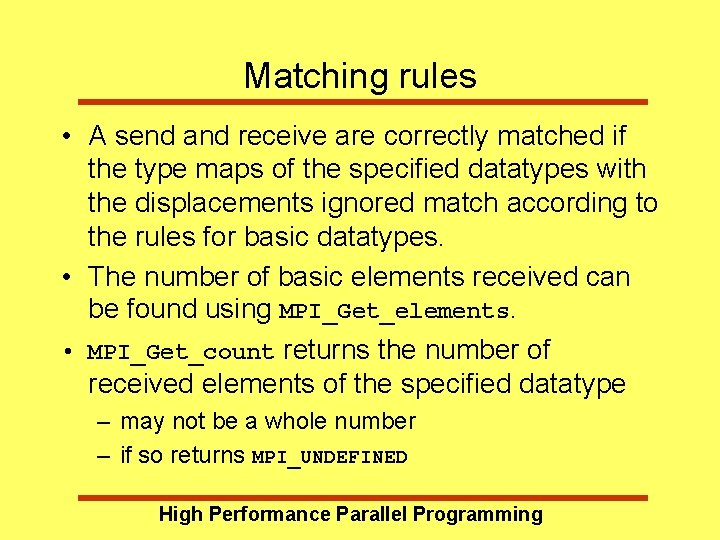

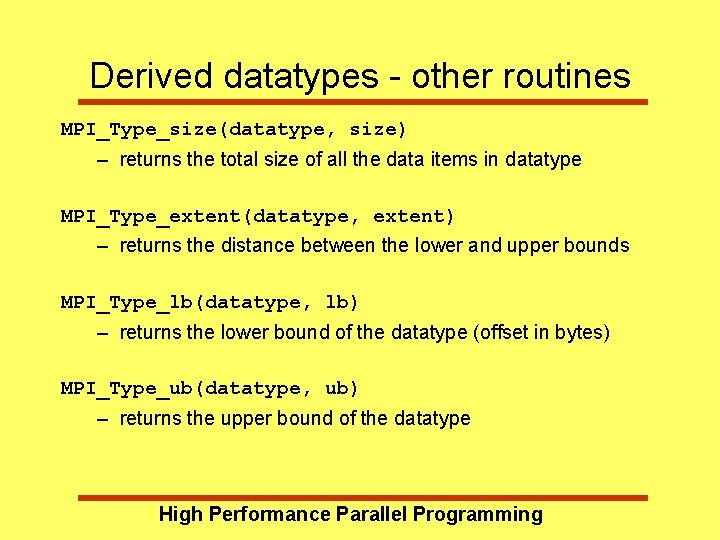

Derived datatypes - other routines

- MPI_Type_size(datatype, size) returns the total size in bytes of all the data items in datatype (gaps are not counted).
- MPI_Type_extent(datatype, extent) returns the distance between the lower and upper bounds.
- MPI_Type_lb(datatype, lb) returns the lower bound of the datatype (offset in bytes).
- MPI_Type_ub(datatype, ub) returns the upper bound of the datatype.
- MPI-2 replaced the last three with MPI_Type_get_extent(datatype, lb, extent), and MPI-3 removed them.
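The difference between size and extent is easiest to see on a concrete type map. The sketch below is plain C with hypothetical names (tm_entry, tm_size, tm_lb, tm_ub, tm_extent) and represents each basic datatype only by its size in bytes. It ignores the alignment rounding MPI may apply to the upper bound and any explicit bound markers, so it agrees with MPI only in simple cases such as this one. Assuming 8-byte doubles, MPI_Type_vector(2, 3, 5, MPI_DOUBLE, ...) has entries at byte displacements 0, 8, 16, 40, 48 and 56, giving a size of 48 bytes but an extent of 64.

```c
#include <assert.h>
#include <stddef.h>

/* One type-map entry: a basic datatype (size in bytes) and its byte displacement. */
typedef struct {
    long size;
    long disp;
} tm_entry;

/* Total bytes of data, gaps not counted (cf. MPI_Type_size). */
long tm_size(const tm_entry *tm, size_t n)
{
    long total = 0;
    for (size_t i = 0; i < n; i++)
        total += tm[i].size;
    return total;
}

/* Lower bound: the smallest displacement. */
long tm_lb(const tm_entry *tm, size_t n)
{
    assert(n >= 1);
    long lb = tm[0].disp;
    for (size_t i = 1; i < n; i++)
        if (tm[i].disp < lb) lb = tm[i].disp;
    return lb;
}

/* Upper bound: the end of the furthest item (no alignment rounding here). */
long tm_ub(const tm_entry *tm, size_t n)
{
    assert(n >= 1);
    long ub = tm[0].disp + tm[0].size;
    for (size_t i = 1; i < n; i++)
        if (tm[i].disp + tm[i].size > ub) ub = tm[i].disp + tm[i].size;
    return ub;
}

/* Extent: distance between the lower and upper bounds. */
long tm_extent(const tm_entry *tm, size_t n)
{
    return tm_ub(tm, n) - tm_lb(tm, n);
}
```

The gap of two doubles between the blocks counts towards the extent but not the size.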

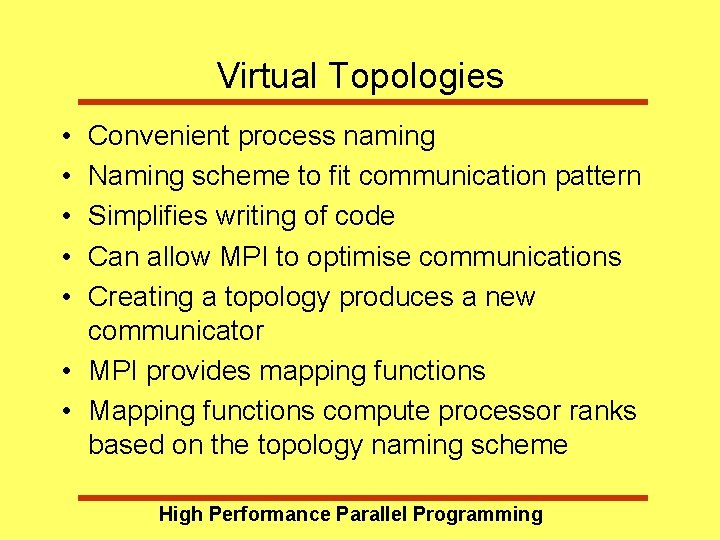

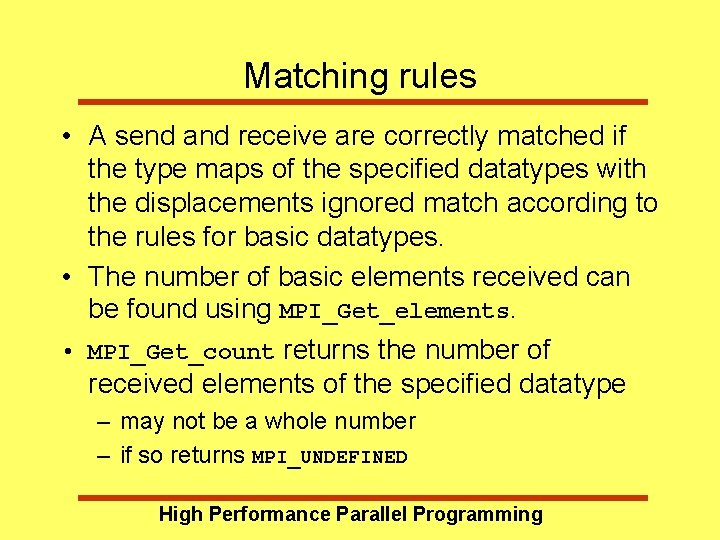

Matching rules

- A send and a receive are correctly matched if the type maps of the specified datatypes, with the displacements ignored, match according to the rules for basic datatypes.
- The number of basic elements received can be found using MPI_Get_elements.
- MPI_Get_count returns the number of received elements of the specified datatype.
  - This may not be a whole number; if it is not, MPI_Get_count returns MPI_UNDEFINED.
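The relation between the two query routines can be modelled as integer division with a remainder check. This plain-C sketch is not an MPI call: count_from_elements is a hypothetical name, and UNDEFINED_SKETCH is a made-up stand-in for MPI_UNDEFINED, whose real value is implementation-defined. With a datatype of three doubles, receiving six doubles gives a count of 2, while receiving seven gives MPI_UNDEFINED.

```c
#include <assert.h>

/* Hypothetical stand-in for MPI_UNDEFINED, used only in this sketch. */
#define UNDEFINED_SKETCH (-1)

/* 'elements' is what MPI_Get_elements would report (basic items received);
 * 'per_type' is the number of basic items in one copy of the datatype.
 * Returns what MPI_Get_count would report. */
int count_from_elements(int elements, int per_type)
{
    assert(per_type > 0);
    if (elements % per_type != 0)
        return UNDEFINED_SKETCH;   /* not a whole number of datatypes */
    return elements / per_type;
}
```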

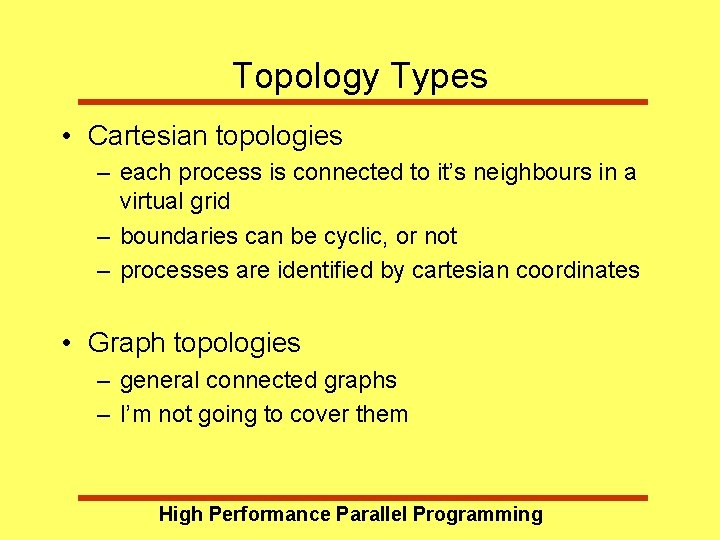

Virtual Topologies

- Convenient process naming
- Naming scheme to fit the communication pattern
- Simplifies writing of code
- Can allow MPI to optimise communications
- Creating a topology produces a new communicator
- MPI provides mapping functions
  - Mapping functions compute process ranks based on the topology naming scheme

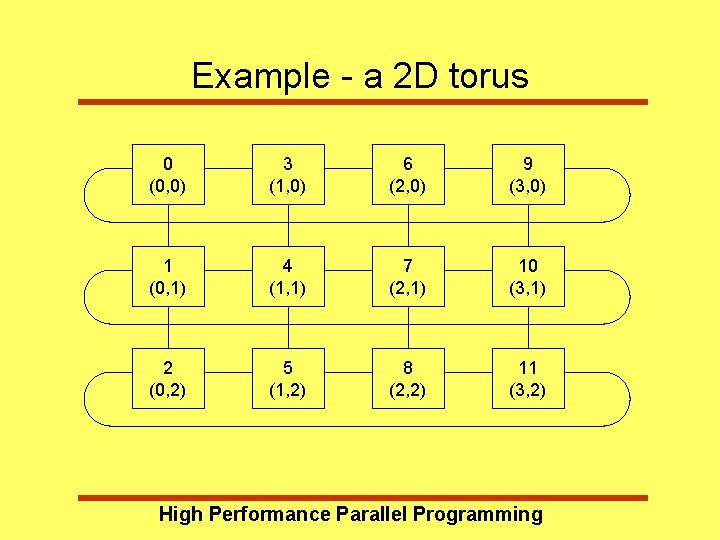

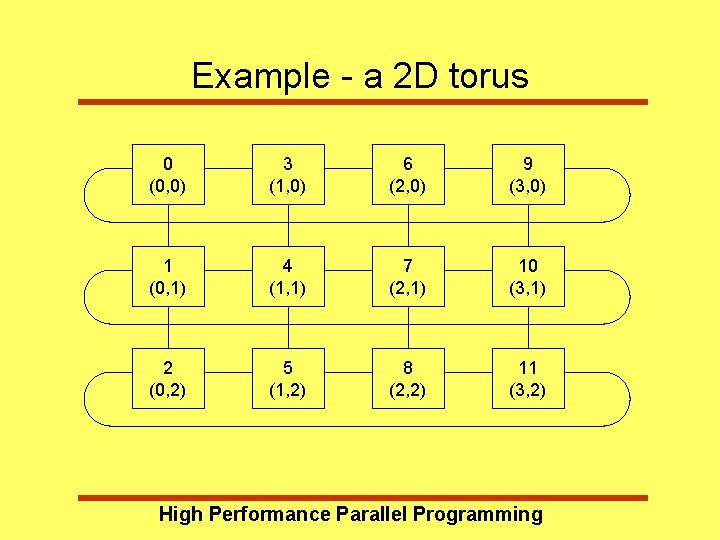

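Example - a 2D torus

Each entry is rank (coordinates) in a 4 x 3 grid; x is the first coordinate.

| x = 0 | x = 1 | x = 2 | x = 3 |
|---|---|---|---|
| 0 (0, 0) | 3 (1, 0) | 6 (2, 0) | 9 (3, 0) |
| 1 (0, 1) | 4 (1, 1) | 7 (2, 1) | 10 (3, 1) |
| 2 (0, 2) | 5 (1, 2) | 8 (2, 2) | 11 (3, 2) |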
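MPI numbers the processes of a Cartesian topology in row-major order (the last coordinate varies fastest), which is the pattern in the grid above: rank = x * 3 + y. A plain-C sketch of the conversions MPI_Cart_rank and MPI_Cart_coords perform for a 2D grid (function names hypothetical, no MPI calls; unlike MPI_Cart_rank on a periodic grid, it does not wrap out-of-range coordinates):

```c
#include <assert.h>

/* Rank of the process at (x, y) in a dim0 x dim1 grid, row-major order. */
int cart2d_rank(int x, int y, int dim0, int dim1)
{
    assert(x >= 0 && x < dim0 && y >= 0 && y < dim1);
    return x * dim1 + y;
}

/* Inverse mapping: grid coordinates of a rank. */
void cart2d_coords(int rank, int dim1, int *x, int *y)
{
    *x = rank / dim1;
    *y = rank % dim1;
}
```

For the 4 x 3 torus, cart2d_rank(2, 1, 4, 3) is 7 and cart2d_coords(11, 3, &x, &y) gives (3, 2), matching the table.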

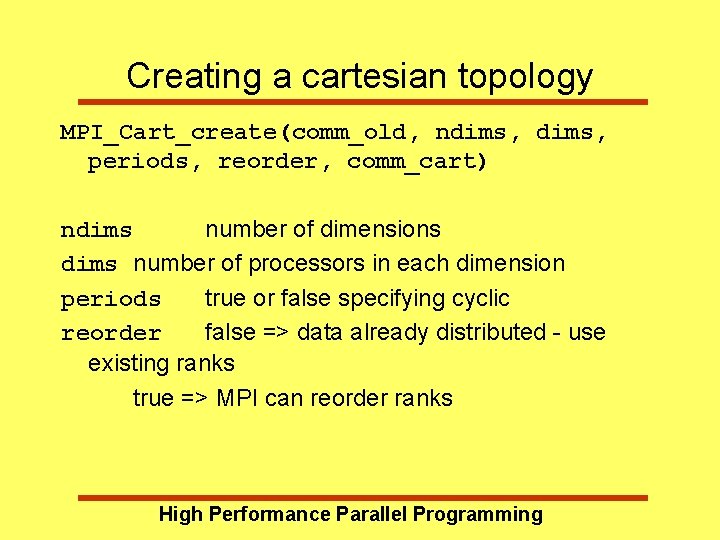

Topology Types

- Cartesian topologies
  - each process is connected to its neighbours in a virtual grid
  - boundaries can be cyclic, or not
  - processes are identified by Cartesian coordinates
- Graph topologies
  - general connected graphs
  - I'm not going to cover them

Creating a cartesian topology

MPI_Cart_create(comm_old, ndims, dims, periods, reorder, comm_cart)

- ndims: number of dimensions
- dims: number of processes in each dimension
- periods: true or false for each dimension, specifying whether it is cyclic
- reorder: false => data already distributed, use the existing ranks; true => MPI can reorder ranks

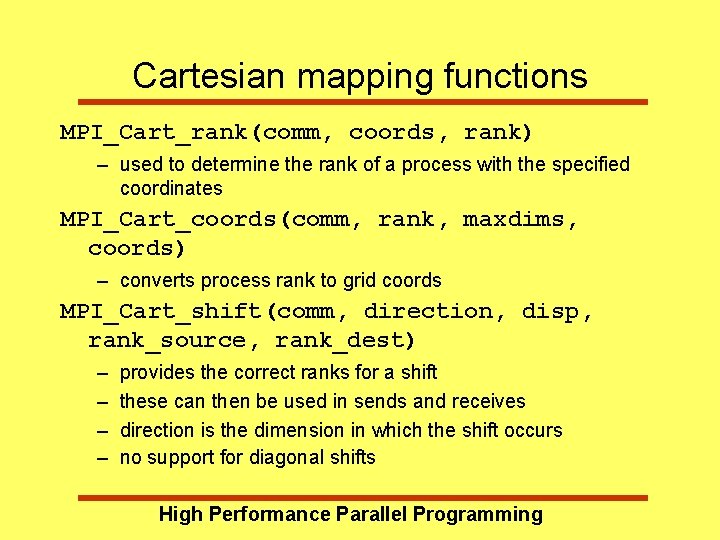

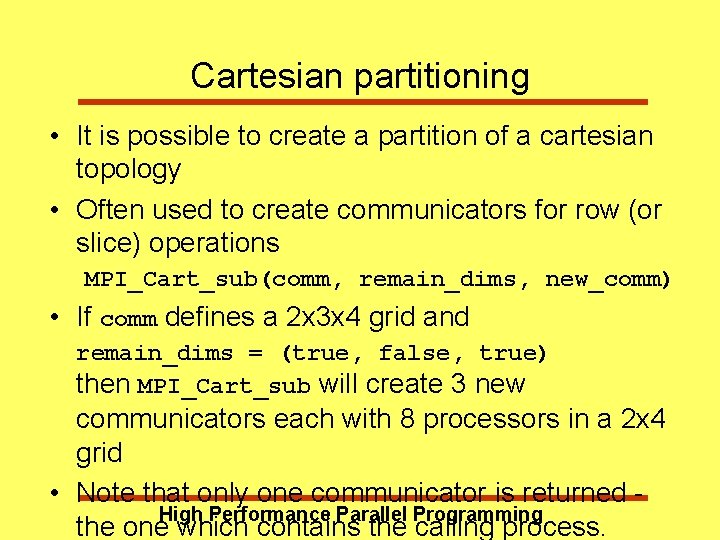

Cartesian mapping functions

- MPI_Cart_rank(comm, coords, rank)
  - determines the rank of the process with the specified coordinates
- MPI_Cart_coords(comm, rank, maxdims, coords)
  - converts a process rank to grid coordinates
- MPI_Cart_shift(comm, direction, disp, rank_source, rank_dest)
  - provides the correct ranks for a shift; these can then be used in sends and receives
  - direction is the dimension in which the shift occurs
  - no support for diagonal shifts
  - a shift past a non-periodic boundary returns MPI_PROC_NULL
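For a single dimension, the arithmetic behind MPI_Cart_shift can be sketched in plain C. In a one-dimensional Cartesian communicator a process's coordinate equals its rank, so the sketch works directly with coordinates. The names cart_shift_1d and shifted_coord are hypothetical, PROC_NULL_SKETCH is a made-up stand-in for MPI_PROC_NULL, and this is not how an MPI library implements the call.

```c
#include <assert.h>

/* Hypothetical stand-in for MPI_PROC_NULL, used only in this sketch. */
#define PROC_NULL_SKETCH (-1)

/* Coordinate reached by moving disp steps from coord in a dimension of
 * length dim_size; off the edge of a non-periodic dimension there is no process. */
static int shifted_coord(int coord, int disp, int dim_size, int periodic)
{
    assert(dim_size > 0);
    int c = coord + disp;
    if (periodic) {
        c %= dim_size;
        if (c < 0)
            c += dim_size;   /* C's % can return a negative remainder */
        return c;
    }
    return (c < 0 || c >= dim_size) ? PROC_NULL_SKETCH : c;
}

/* source: the process we receive from (coord - disp);
 * dest:   the process we send to     (coord + disp). */
void cart_shift_1d(int coord, int disp, int dim_size, int periodic,
                   int *source, int *dest)
{
    *source = shifted_coord(coord, -disp, dim_size, periodic);
    *dest   = shifted_coord(coord,  disp, dim_size, periodic);
}
```

On a periodic ring of 4 processes, process 0 shifting by +1 receives from 3 and sends to 1; on a non-periodic line its source is the null process instead, so the matching send or receive becomes a no-op in MPI.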

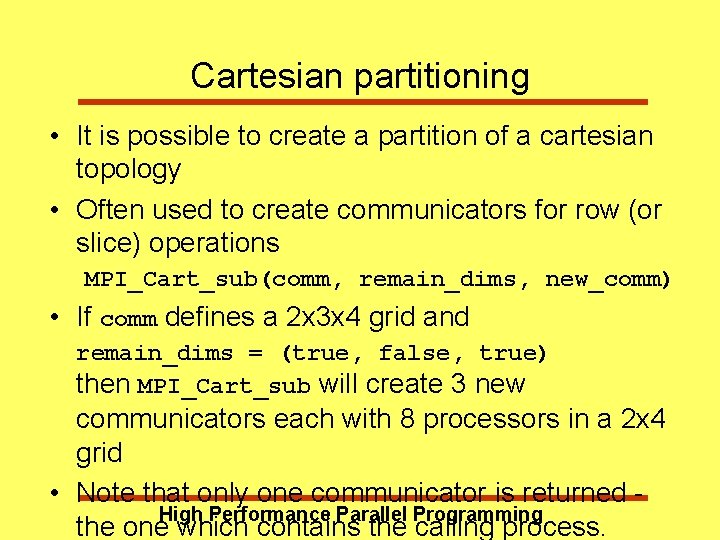

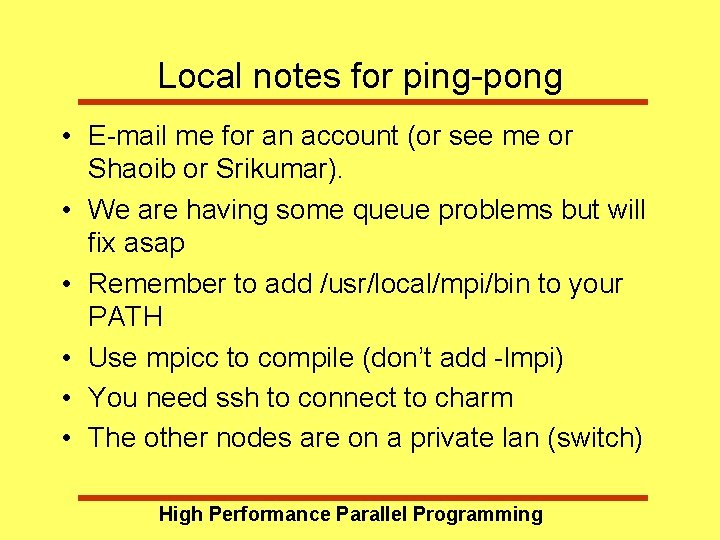

Cartesian partitioning

- It is possible to create a partition of a Cartesian topology.
- Often used to create communicators for row (or slice) operations: MPI_Cart_sub(comm, remain_dims, new_comm)
- If comm defines a 2 x 3 x 4 grid and remain_dims = (true, false, true), then MPI_Cart_sub will create 3 new communicators, each with 8 processes in a 2 x 4 grid.
- Note that only one communicator is returned: the one that contains the calling process.
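The numbers in the example follow from simple counting: kept dimensions multiply into the size of each new grid, and dropped dimensions multiply into the number of communicators. A plain-C sketch of that counting (cart_sub_shape is a hypothetical name; it makes no MPI calls):

```c
/* Shape of the partition that MPI_Cart_sub would produce. */
void cart_sub_shape(int ndims, const int *dims, const int *remain_dims,
                    int *num_comms, int *procs_per_comm)
{
    *num_comms = 1;
    *procs_per_comm = 1;
    for (int i = 0; i < ndims; i++) {
        if (remain_dims[i])
            *procs_per_comm *= dims[i];   /* kept: part of each sub-grid */
        else
            *num_comms *= dims[i];        /* dropped: one sub-grid per coordinate value */
    }
}
```

For dims = {2, 3, 4} and remain_dims = {true, false, true} this gives 3 communicators of 8 processes each, as on the slide.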

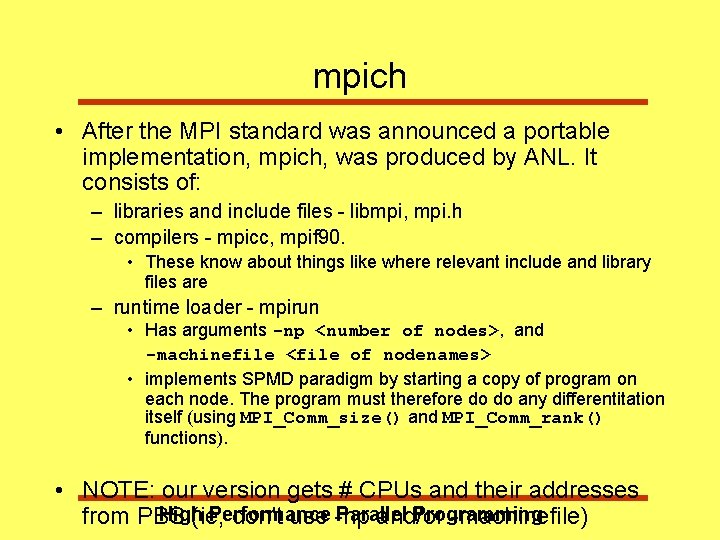

Local notes for ping-pong

- E-mail me for an account (or see me or Shaoib or Srikumar).
- We are having some queue problems but will fix them asap.
- Remember to add /usr/local/mpi/bin to your PATH.
- Use mpicc to compile (don't add -lmpi).
- You need ssh to connect to charm.
- The other nodes are on a private LAN (switch).

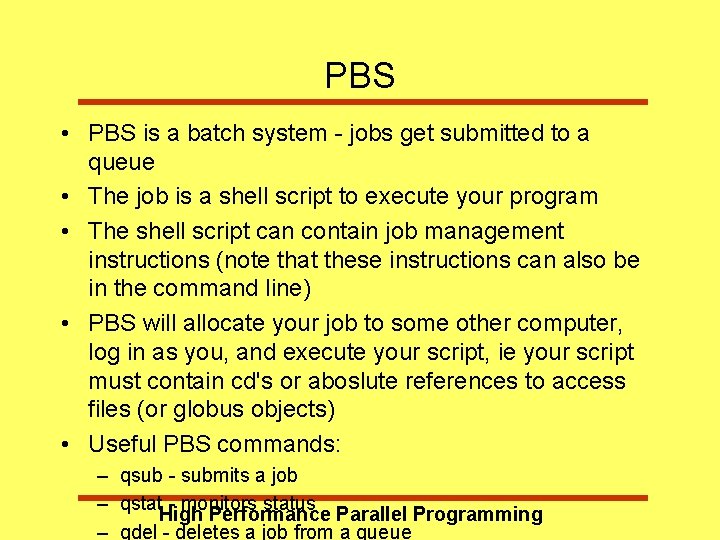

mpich

- After the MPI standard was announced, a portable implementation, mpich, was produced by ANL. It consists of:
  - libraries and include files: libmpi, mpi.h
  - compilers: mpicc, mpif90
    - these know things like where the relevant include and library files are
  - runtime loader: mpirun
    - has arguments -np <number of nodes> and -machinefile <file of nodenames>
    - implements the SPMD paradigm by starting a copy of the program on each node. The program must therefore do any differentiation itself (using the MPI_Comm_size() and MPI_Comm_rank() functions).
- NOTE: our version gets the number of CPUs and their addresses from PBS (i.e. don't use -np and/or -machinefile).

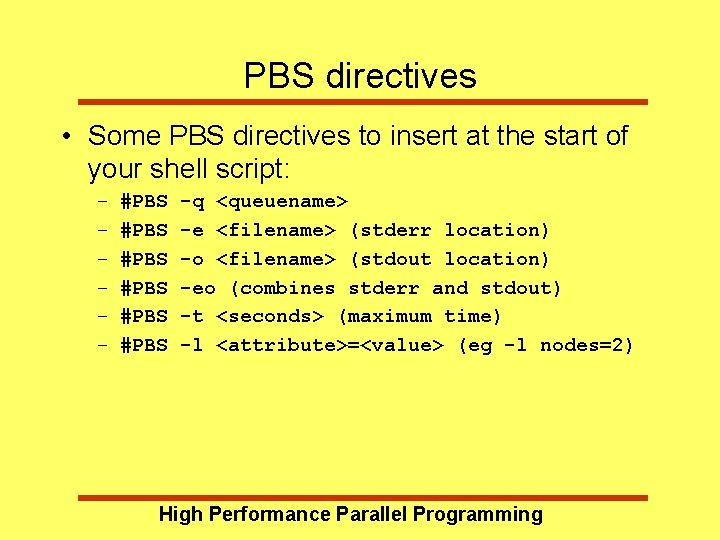

PBS

- PBS is a batch system: jobs get submitted to a queue.
- The job is a shell script that executes your program.
- The shell script can contain job management instructions (these instructions can also be given on the command line).
- PBS will allocate your job to some other computer, log in as you, and execute your script, i.e. your script must contain cd commands or absolute paths to access files (or globus objects).
- Useful PBS commands:
  - qsub: submits a job
  - qstat: monitors status
  - qdel: deletes a job from a queue

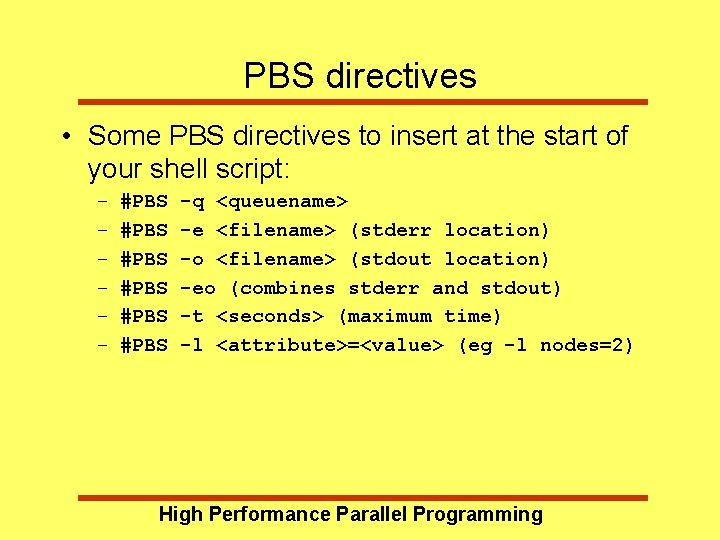

PBS directives

- Some PBS directives to insert at the start of your shell script:
  - #PBS -q <queuename>
  - #PBS -e <filename> (stderr location)
  - #PBS -o <filename> (stdout location)
  - #PBS -eo (combines stderr and stdout)
  - #PBS -t <seconds> (maximum time)
  - #PBS -l <attribute>=<value> (e.g. -l nodes=2)

charm

- charm.hpc.unimelb.edu.au is a dual Pentium (PII, 266 MHz, 128 MB RAM) and is the front end for the PC farm. It's running Red Hat Linux.
- Behind charm are sixteen PCs (all 200 MHz MMX, with 64 MB RAM). Their DNS designations are pci11.hpc.unimelb.edu.au, ..., pci18.hpc.unimelb.edu.au and pcj11.hpc.unimelb.edu.au, ..., pcj18.hpc.unimelb.edu.au.
- OpenPBS is the batch system implemented on charm. There are four batch queues implemented on charm:
  - pque: all nodes
  - exclusive: all nodes
  - pquei: pc-i* nodes only

Thursday: More MPI