High Performance Computing: Architecture Overview. Computational Methods, 12/31/2021

- Slides: 26


Outline

- Desktops, servers and computational power
- Clusters and interconnects
- Supercomputer architecture and design
- Future systems

What is available on your desktop?

- Intel Core i7-4790 (Haswell) processor
- 4 cores at 4.0 GHz
- 8 double precision floating point operations (FLOP) per cycle per floating point unit (FPU), assuming all fused multiply-add instructions (FMAs)
  ◦ 2 FPUs per core
  ◦ 16 FLOP per cycle per core
  ◦ FMA example: $0 = $0 x $2 + $1
- 64 GFLOP/s per core theoretical peak
- 256 GFLOP/s full system theoretical peak
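The peak figures on this slide follow from multiplying clock rate, FLOP per cycle per FPU, FPUs per core, and core count. The sketch below reproduces that arithmetic; the function name `peak_gflops` is a hypothetical helper, and the 8 FLOP per cycle per FPU is taken to mean 4 double precision lanes times 2 operations per FMA.

```python
def peak_gflops(clock_ghz, flop_per_cycle_per_fpu, fpus_per_core, cores):
    """Theoretical peak in GFLOP/s: clock x FLOP/cycle/FPU x FPUs/core x cores."""
    return clock_ghz * flop_per_cycle_per_fpu * fpus_per_core * cores


# Figures from the slide: 4.0 GHz, 8 FLOP/cycle/FPU, 2 FPUs per core, 4 cores.
per_core = peak_gflops(4.0, 8, 2, 1)
full_chip = peak_gflops(4.0, 8, 2, 4)

print(f"per core:  {per_core:.0f} GFLOP/s")
print(f"full chip: {full_chip:.0f} GFLOP/s")
```

These are upper bounds only: they assume every instruction is a full-width FMA and that data is always available.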

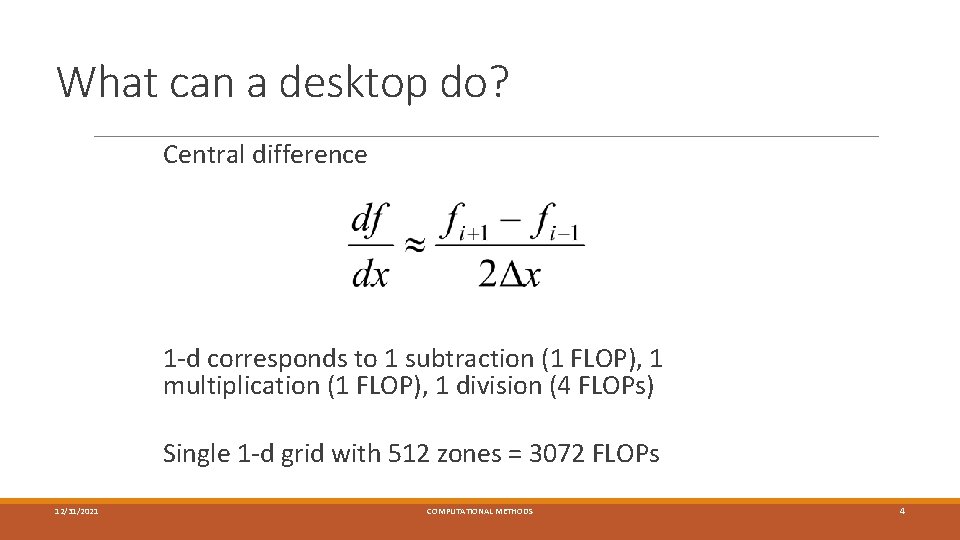

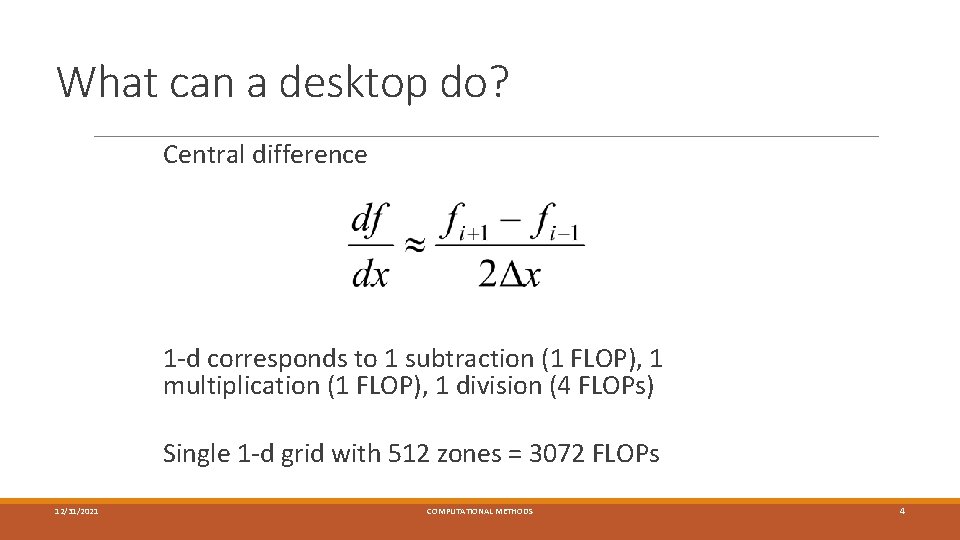

What can a desktop do?

- Central difference in 1-d corresponds to 1 subtraction (1 FLOP), 1 multiplication (1 FLOP), and 1 division (4 FLOPs), for 6 FLOPs per zone
- Single 1-d grid with 512 zones = 3072 FLOPs
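As a minimal sketch of the operation being counted, the code below evaluates a 1-d central difference, (f[i+1] - f[i-1]) / (2 dx), at interior points and tallies FLOPs with the slide's weights. Skipping the two boundary points and the helper names `central_difference` and `flop_count` are assumptions made for illustration.

```python
def central_difference(f, dx):
    """Central difference at interior points: (f[i+1] - f[i-1]) / (2*dx).

    Per zone this is 1 subtraction, 1 multiplication (2*dx) and 1 division.
    Boundary points are skipped here for simplicity (an assumption).
    """
    return [(f[i + 1] - f[i - 1]) / (2.0 * dx) for i in range(1, len(f) - 1)]


# Slide's weights: subtraction = 1, multiplication = 1, division = 4 FLOPs.
FLOP_PER_ZONE = 1 + 1 + 4


def flop_count(zones):
    """FLOPs for one sweep over `zones` zones using the slide's weights."""
    return FLOP_PER_ZONE * zones


samples = [x * x for x in (0.0, 1.0, 2.0, 3.0, 4.0)]  # f(x) = x^2, dx = 1
print(central_difference(samples, 1.0))
print(flop_count(512))
```

In practice `2.0 * dx` would be hoisted out of the loop, so the count here reflects the slide's bookkeeping rather than what a compiler would emit.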

What can a desktop do?

- Consider central difference on a 512^3 3-d mesh
  ◦ 512^3 zones x 3 surfaces x 6 FLOPs = 2.4 GFLOPs
  ◦ On a single core of a 4.0 GHz Core i7
    ◦ ~0.04 seconds of run time for 1 update with 100% efficiency (assumes 100% fused multiply-add instructions)
  ◦ With perfect on-chip parallelization on 4 cores
    ◦ ~0.009 seconds per update
    ◦ ~1.6 minutes for 10,000 updates
  ◦ Nothing, not even HPL, gets 100% efficiency!
  ◦ A more realistic efficiency is ~10%
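The run time estimates on this slide are work divided by sustained rate. A hedged sketch of that calculation follows; `update_time_seconds` is a hypothetical helper, and the 6 FLOPs per central difference and the 64 and 256 GFLOP/s peaks are carried over from the earlier slides.

```python
def update_time_seconds(total_flop, peak_flop_per_s, efficiency=1.0):
    """Time for one update: work / (peak rate x fraction of peak achieved)."""
    return total_flop / (peak_flop_per_s * efficiency)


ZONES = 512 ** 3
FLOP_PER_UPDATE = ZONES * 3 * 6  # 3 directions, 6 FLOPs per central difference

t_one_core = update_time_seconds(FLOP_PER_UPDATE, 64e9)          # 100% of one core
t_four_cores = update_time_seconds(FLOP_PER_UPDATE, 256e9)       # 100% of the chip
t_realistic = update_time_seconds(FLOP_PER_UPDATE, 256e9, 0.10)  # ~10% of the chip

print(f"work per update: {FLOP_PER_UPDATE / 1e9:.2f} GFLOP")
print(f"1 core, 100%:    {t_one_core:.4f} s")
print(f"4 cores, 100%:   {t_four_cores:.4f} s")
print(f"4 cores, 10%:    {t_realistic:.4f} s")
print(f"10,000 updates at 100%: {10_000 * t_four_cores / 60:.2f} min")
```

The `efficiency` parameter is the knob that matters: dropping from 100% to 10% multiplies every time on the slide by ten.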

Efficiency considerations

- No application ever gets 100% of theoretical peak, for the following (not comprehensive) reasons
  ◦ 100% peak assumes 100% FMA instructions running on processors with AVX SIMD instructions. Does the algorithm in question even map well to FMAs? If not, the rest of the vector instructions run at 50% of FMA peak.
  ◦ Data must be moved from main memory through the CPU memory system. This motion has latency and a fixed bandwidth that may be shared with other cores.
  ◦ The algorithm may not map well into vector instructions at all. The code is then "serial" and runs at 1/8th of peak if optimal.
  ◦ The application may require I/O, which can be very expensive and stall computation. This alone can take efficiency down by an order of magnitude in some cases.
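The instruction-mix fractions above translate directly into ceilings on achievable performance. The sketch below applies them to the 256 GFLOP/s desktop peak from the earlier slide; the dictionary keys and the helper `ceiling_gflops` are hypothetical names, and memory and I/O stalls are deliberately left out because the slide gives no numbers for them.

```python
DESKTOP_PEAK_GFLOPS = 256.0  # 4-core desktop peak from the earlier slide

# Fractions of FMA peak quoted on the slide for each instruction mix.
CEILING_FRACTION = {
    "fma_vector": 1.0,        # every instruction is a full-width FMA
    "non_fma_vector": 0.5,    # vectorized, but no fused multiply-add
    "scalar": 1.0 / 8.0,      # "serial" code, no vector instructions
}


def ceiling_gflops(kind, peak=DESKTOP_PEAK_GFLOPS):
    """Best-case GFLOP/s for a given instruction mix, before memory or I/O stalls."""
    return peak * CEILING_FRACTION[kind]


for kind in CEILING_FRACTION:
    print(f"{kind:>15}: {ceiling_gflops(kind):6.1f} GFLOP/s")
```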

More realistic problem

- Consider numerical hydrodynamics
  ◦ Updating 5 variables
  ◦ Computing wave speeds, solving eigenvalue problem, computing numerical fluxes
  ◦ Roughly 1000 FLOPs per zone per update in 3-d
  ◦ 134 GFLOPs per update for 512^3 zones
- Runs take on the order of 14 hours on 4 cores at 10% efficiency (about 10,000 updates)
- 8 Bytes * 512^3 * 5 variables = 5 GB
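The work, wall time, and memory figures for the hydrodynamics case can be checked with a few lines of arithmetic. In this sketch the 10,000-update run length is an assumption borrowed from the central difference slide, and the 256 GFLOP/s peak comes from the desktop slide; neither is restated on this slide.

```python
ZONES = 512 ** 3
FLOP_PER_ZONE_UPDATE = 1000   # rough figure from the slide
VARIABLES = 5
BYTES_PER_VALUE = 8           # double precision

flop_per_update = ZONES * FLOP_PER_ZONE_UPDATE
sustained_flop_per_s = 0.10 * 256e9   # 10% of the 4-core desktop peak
seconds_per_update = flop_per_update / sustained_flop_per_s

UPDATES = 10_000              # assumed run length (from the earlier slide)
wall_hours = UPDATES * seconds_per_update / 3600

memory_bytes = BYTES_PER_VALUE * ZONES * VARIABLES

print(f"work per update: {flop_per_update / 1e9:.0f} GFLOP")
print(f"time per update: {seconds_per_update:.2f} s")
print(f"wall time:       {wall_hours:.1f} hours for {UPDATES} updates")
print(f"state in memory: {memory_bytes / 1e9:.2f} GB")
```

The memory figure counts a single copy of the five variables; a real solver would typically hold additional work arrays on top of it.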

Consider these simulations…

- Numerical MHD, ~6K FLOPs per zone per update
- 12K time steps
- 1088 x 448 x 1088 grid = 530 million zones
- 3.2 TFLOPs per update = 38 PFLOPs for the full calculation
- ~17 days on Haswell desktop (wouldn't even fit in memory anyway)
  ◦ Actual simulation was run on MSI Itasca system using 2,032 Nehalem cores (6X less FLOP/s per core) for 6 hours
- Movie: color.avi
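The totals on this slide can be reproduced, and the Itasca run can be turned into a rough implied efficiency. This is a sketch under stated assumptions: the desktop estimate uses 10% of the 256 GFLOP/s peak, the Nehalem per-core peak is taken as the Haswell 64 GFLOP/s divided by the slide's factor of 6, and the resulting efficiency is a derived figure that does not appear in the source.

```python
ZONES = 1088 * 448 * 1088
FLOP_PER_ZONE_UPDATE = 6_000
TIME_STEPS = 12_000

total_flop = ZONES * FLOP_PER_ZONE_UPDATE * TIME_STEPS


def days_on_desktop(total, peak_flop_per_s=256e9, efficiency=0.10):
    """Wall time in days on the 4-core desktop at the assumed efficiency."""
    return total / (peak_flop_per_s * efficiency) / 86_400


def implied_efficiency(total, cores, peak_per_core, wall_seconds):
    """Sustained rate divided by aggregate theoretical peak."""
    return (total / wall_seconds) / (cores * peak_per_core)


desktop_days = days_on_desktop(total_flop)
# Assumption: Nehalem per-core peak = Haswell per-core peak / 6.
itasca_eff = implied_efficiency(total_flop, 2032, 64e9 / 6, 6 * 3600)

print(f"zones:       {ZONES / 1e6:.0f} million")
print(f"total work:  {total_flop / 1e15:.1f} PFLOP")
print(f"desktop:     {desktop_days:.1f} days")
print(f"Itasca run:  {itasca_eff:.1%} of peak (implied)")
```

Under these assumptions the implied figure lands close to the ~10% rule of thumb quoted earlier, which is a useful sanity check on both numbers.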

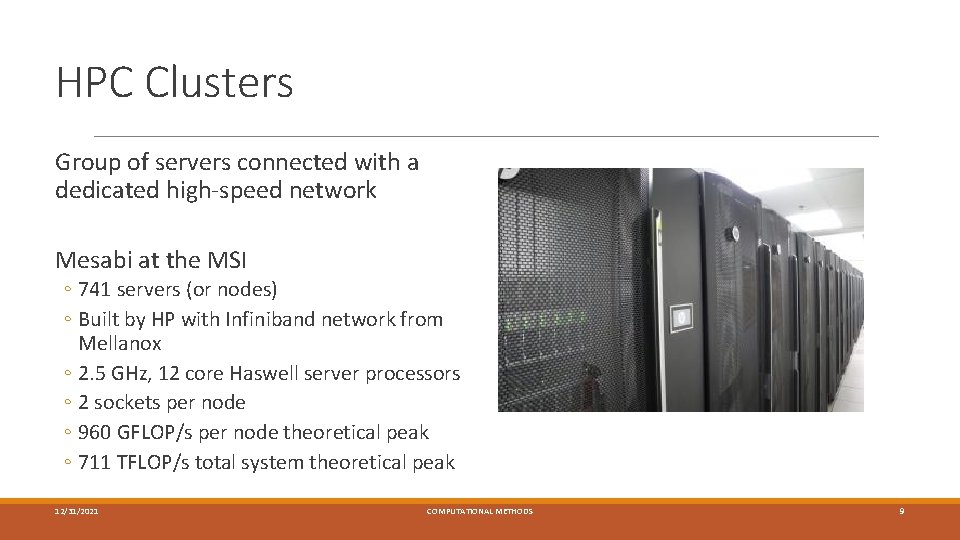

HPC Clusters

- Group of servers connected with a dedicated high-speed network
- Mesabi at the MSI
  ◦ 741 servers (or nodes)
  ◦ Built by HP with InfiniBand network from Mellanox
  ◦ 2.5 GHz, 12 core Haswell server processors
  ◦ 2 sockets per node
  ◦ 960 GFLOP/s per node theoretical peak
  ◦ 711 TFLOP/s total system theoretical peak

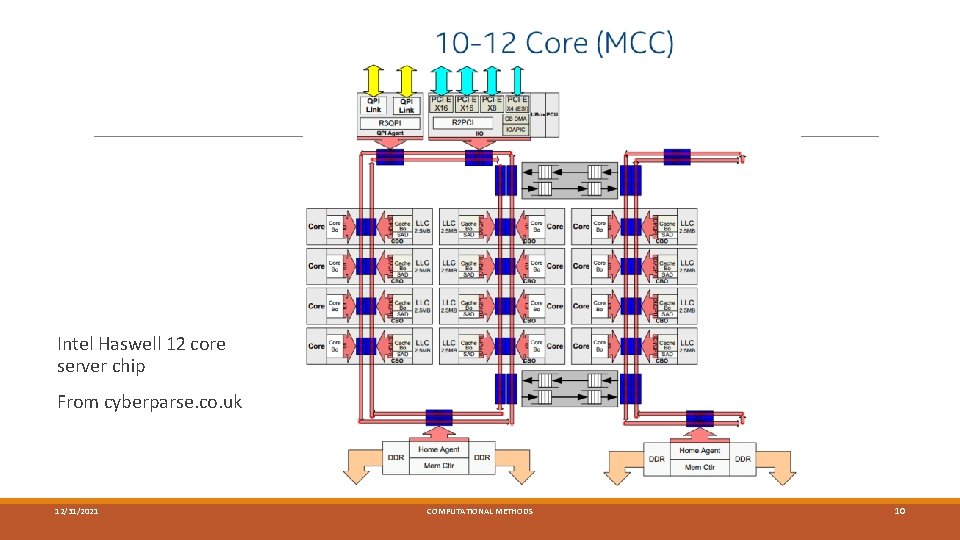

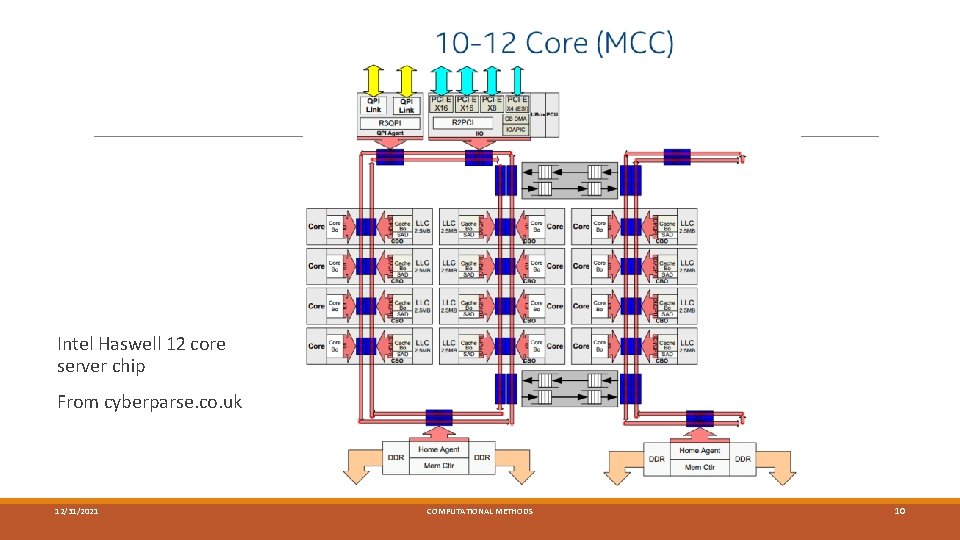

Intel Haswell 12 core server chip (from cyberparse.co.uk)

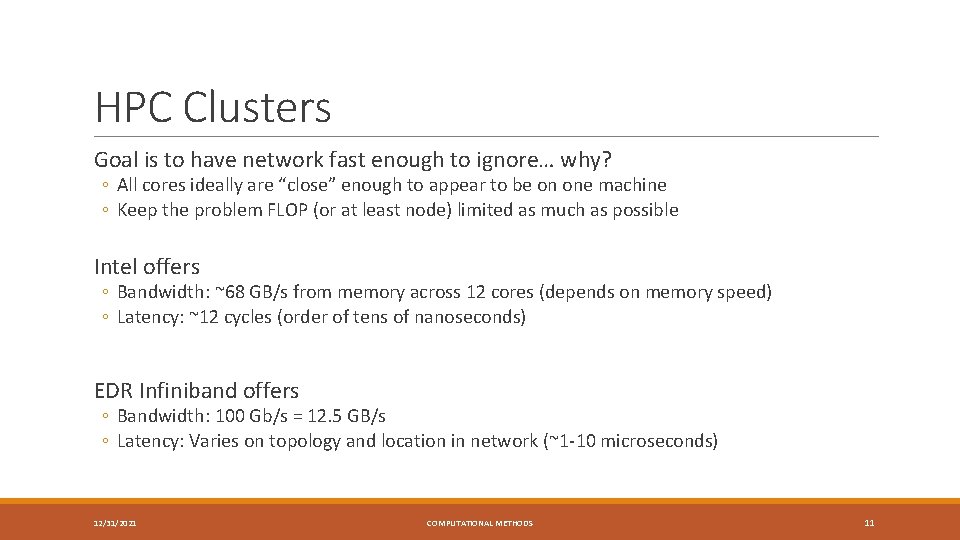

HPC Clusters

- Goal is to have network fast enough to ignore… why?
  ◦ All cores ideally are "close" enough to appear to be on one machine
  ◦ Keep the problem FLOP (or at least node) limited as much as possible
- Intel offers
  ◦ Bandwidth: ~68 GB/s from memory across 12 cores (depends on memory speed)
  ◦ Latency: ~12 cycles (order of tens of nanoseconds)
- EDR InfiniBand offers
  ◦ Bandwidth: 100 Gb/s = 12.5 GB/s
  ◦ Latency: varies with topology and location in network (~1-10 microseconds)
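One common way to reason about these numbers is a simple cost model in which moving a message takes a fixed latency plus its size divided by bandwidth. The sketch below applies that model to the EDR figures from the slide; the model itself, the choice of the 1 microsecond low end of the latency range, and the example message sizes are all assumptions for illustration.

```python
def transfer_time(n_bytes, latency_s, bandwidth_bytes_per_s):
    """Simple cost model: fixed latency plus size divided by bandwidth."""
    return latency_s + n_bytes / bandwidth_bytes_per_s


EDR_BANDWIDTH = 12.5e9   # bytes/s, i.e. 100 Gb/s from the slide
EDR_LATENCY = 1e-6       # seconds; low end of the slide's 1-10 microsecond range

# Message size at which the latency term equals the bandwidth term.
crossover_bytes = EDR_LATENCY * EDR_BANDWIDTH

# Hypothetical message sizes: one double, the crossover size, and 10 MiB.
for n_bytes in (8, 12_500, 10 * 1024 ** 2):
    t = transfer_time(n_bytes, EDR_LATENCY, EDR_BANDWIDTH)
    print(f"{n_bytes:>10} bytes: {t * 1e6:10.2f} microseconds")

print(f"crossover size: {crossover_bytes:.0f} bytes")
```

Messages well below the crossover size are dominated by latency, which is why codes on clusters tend to batch many small exchanges into fewer large ones.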

HPC Clusters

- Network types
  ◦ InfiniBand
    ◦ FDR, EDR, etc.
  ◦ Ethernet
    ◦ Up to 10 GB/s bandwidth
    ◦ Cheaper than InfiniBand but also slower
  ◦ Custom
    ◦ Cray, IBM, Fujitsu, for example, all have custom networks (not "vanilla" clusters though)

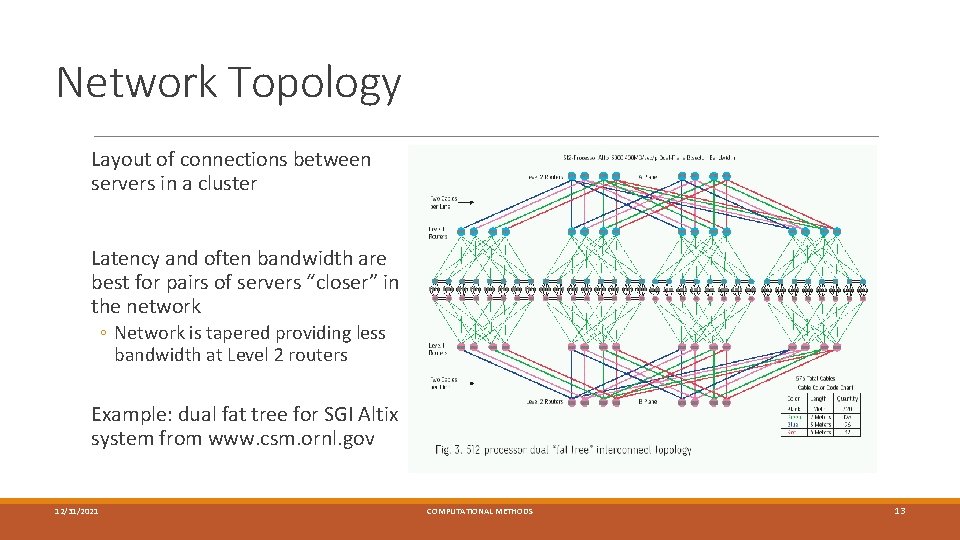

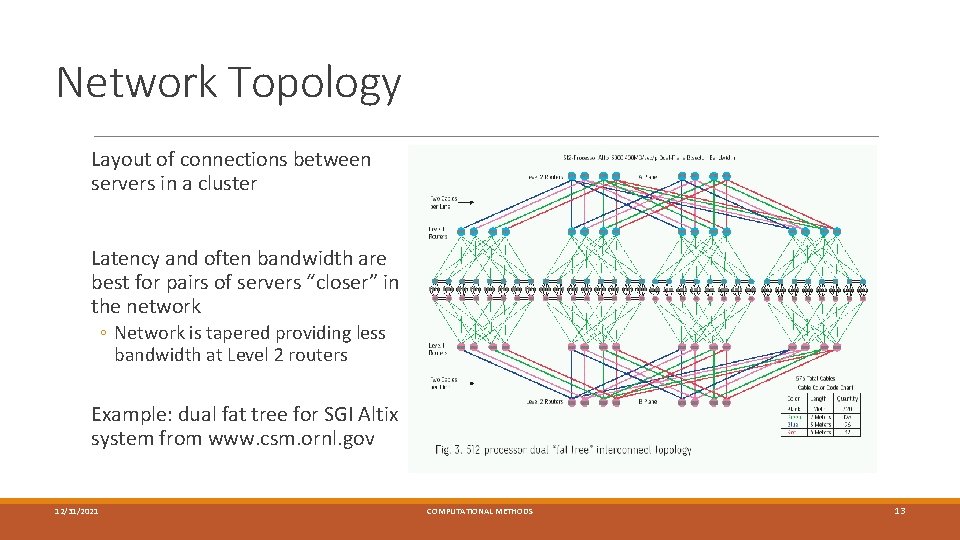

Network Topology

- Layout of connections between servers in a cluster
- Latency and often bandwidth are best for pairs of servers "closer" in the network
  ◦ Network is tapered, providing less bandwidth at Level 2 routers
- Example: dual fat tree for SGI Altix system (from www.csm.ornl.gov)

Supercomputers

- What's the difference from a cluster?
  ◦ Distinction is a bit subjective
  ◦ Presented as a single system (or mainframe) to the user
  ◦ Individual servers are stripped down to absolute basics
  ◦ Typically cannot operate as an independent computer from the rest of the system

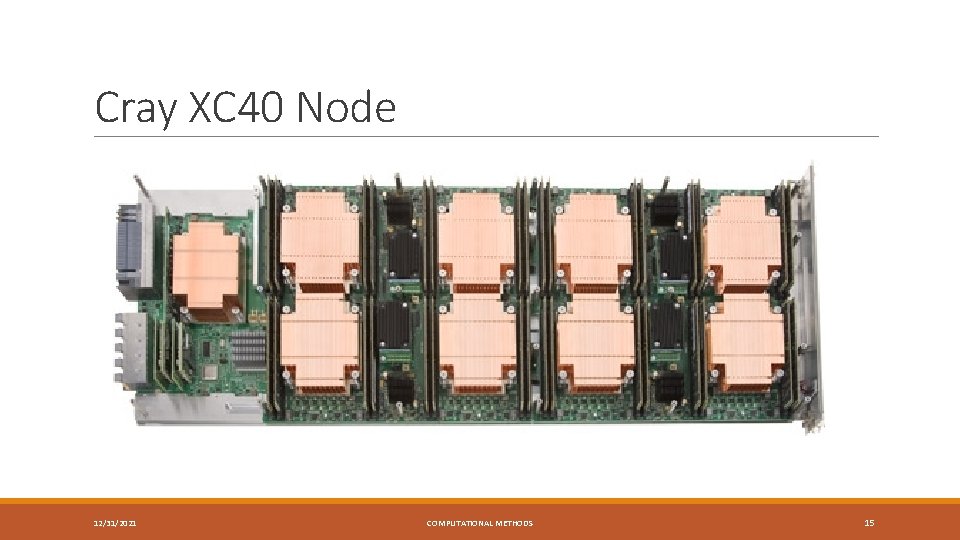

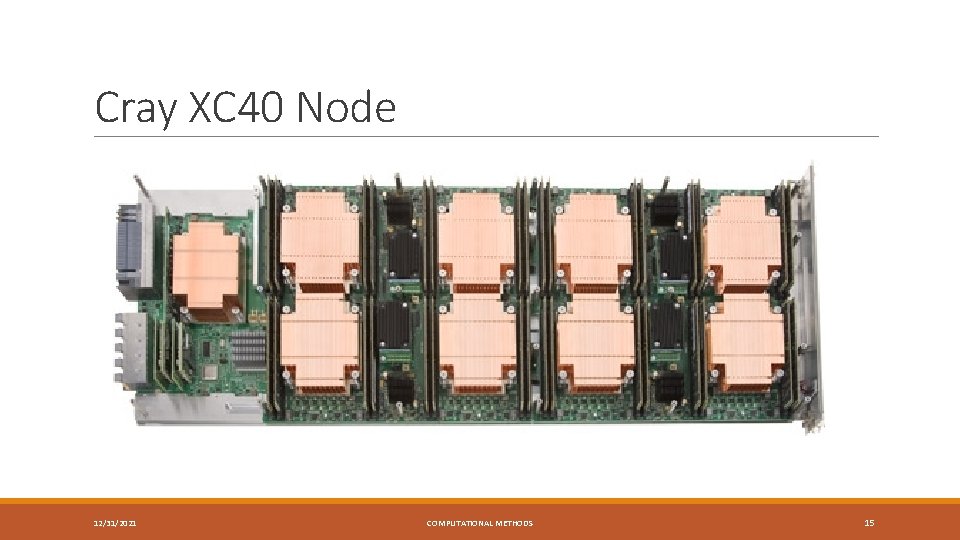

Cray XC40 Node (figure label: AMD Packages)

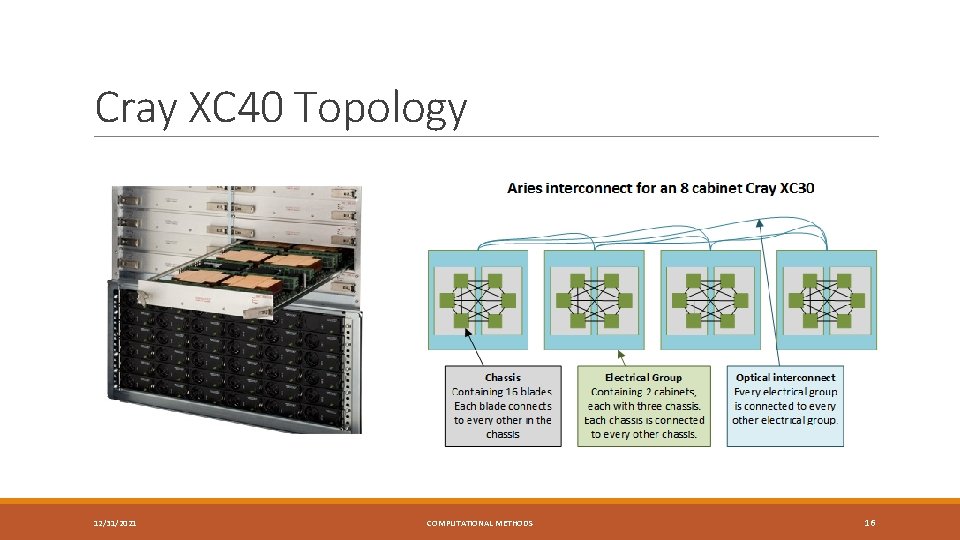

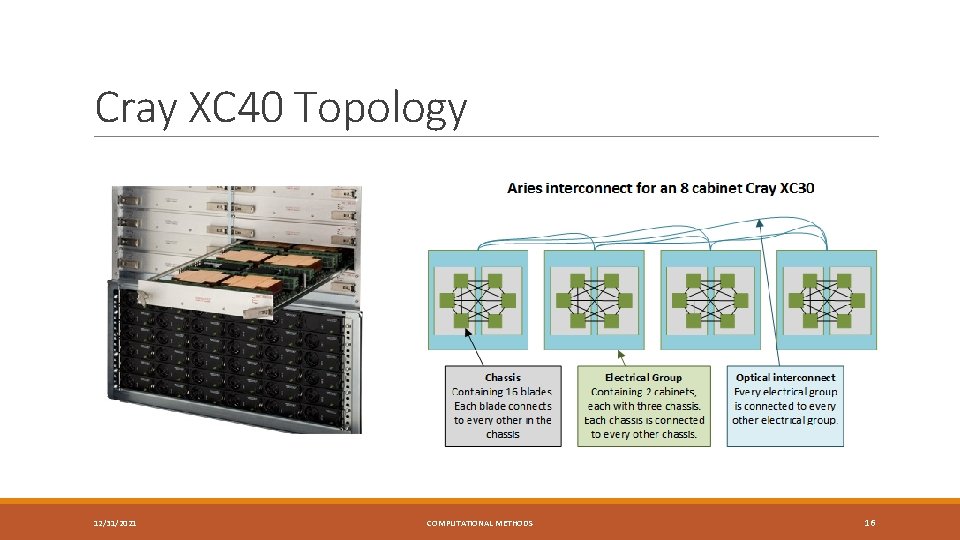

Cray XC40 Topology

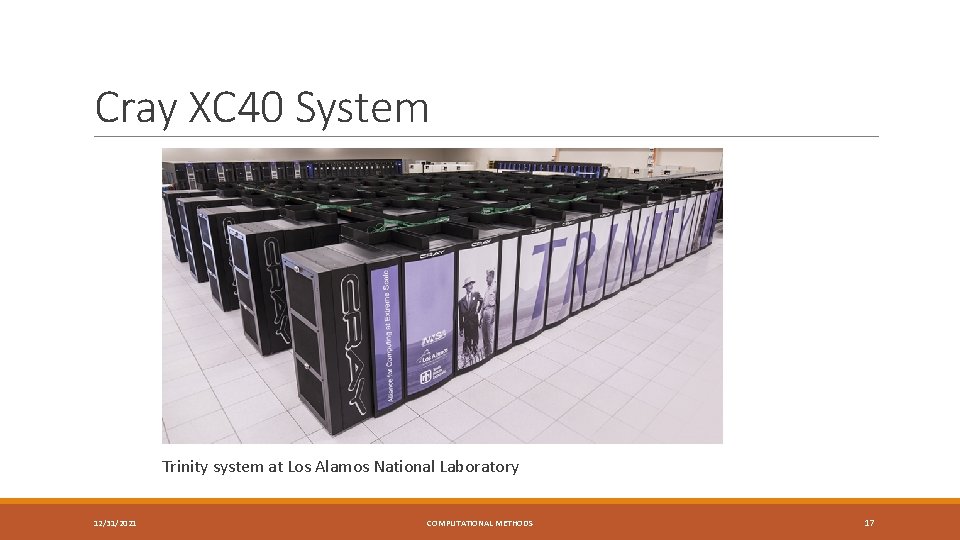

Cray XC40 System

- Trinity system at Los Alamos National Laboratory

Trinity

- Provides mission critical computing to the National Nuclear Security Administration (NNSA)
  ◦ Currently #6 on Top500.org November 2015 list
  ◦ Phase 1 is ~9600 XC40 Haswell nodes (dual socket, 16 cores per socket)
    ◦ ~307,000 cores
    ◦ Theoretical peak of ~11 PFLOP/s
  ◦ Phase 2 is ~9600 XC KNL nodes (single socket, > 60 cores per socket)
    ◦ Theoretical peak of ~18 PFLOP/s in addition to 11 PFLOP/s from Haswell nodes (just a conservative estimate; exact number cannot be released yet)
  ◦ 80 Petabytes of near-line storage
    ◦ Cray Sonexion Lustre
  ◦ Draws up to 10 MW at peak utilization!

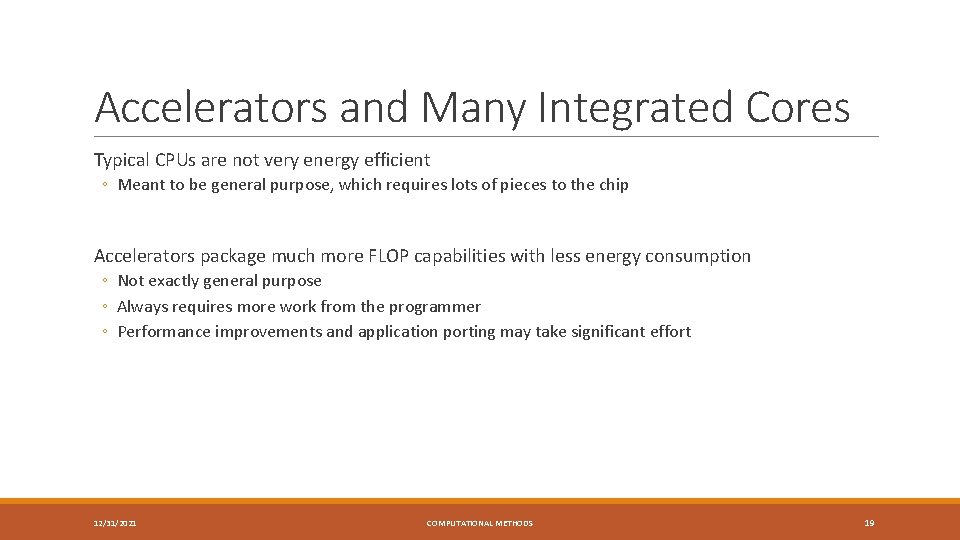

Accelerators and Many Integrated Cores

- Typical CPUs are not very energy efficient
  ◦ Meant to be general purpose, which requires lots of pieces on the chip
- Accelerators package much more FLOP capability with less energy consumption
  ◦ Not exactly general purpose
  ◦ Always require more work from the programmer
  ◦ Performance improvements and application porting may take significant effort

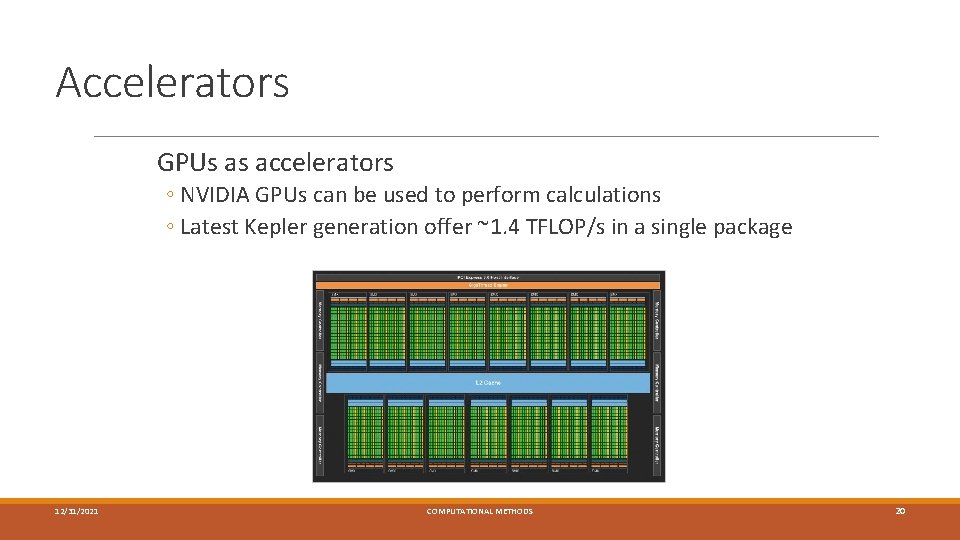

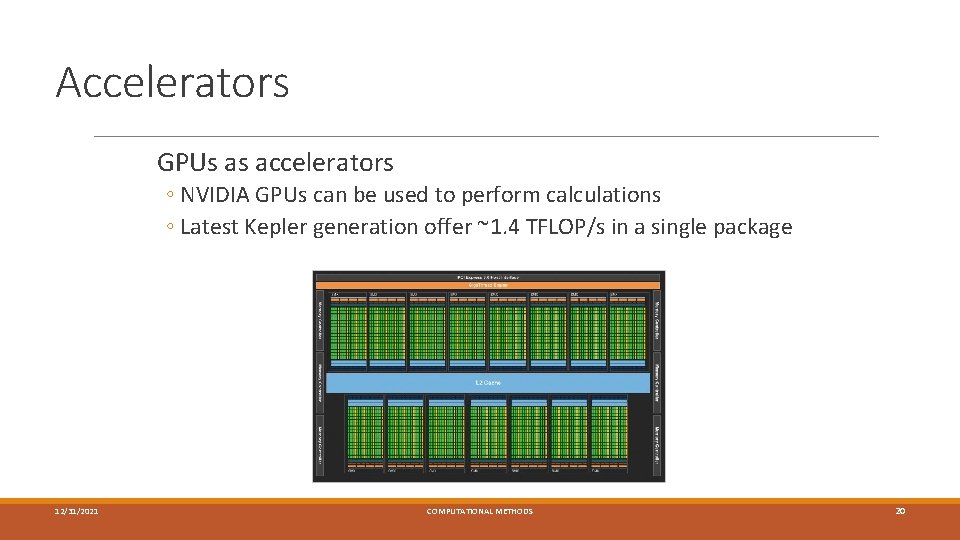

Accelerators

- GPUs as accelerators
  ◦ NVIDIA GPUs can be used to perform calculations
  ◦ Latest Kepler generation offers ~1.4 TFLOP/s in a single package

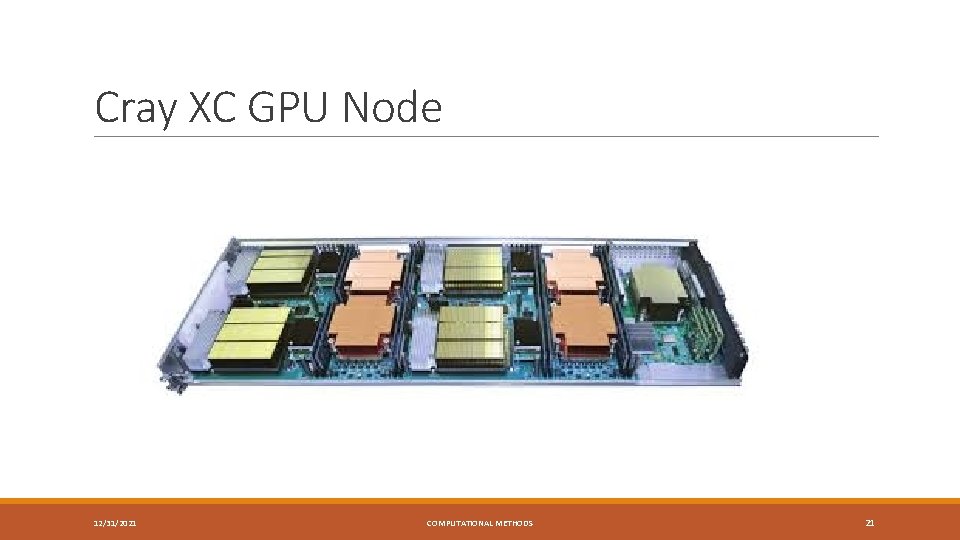

Cray XC GPU Node (NVIDIA K20X)

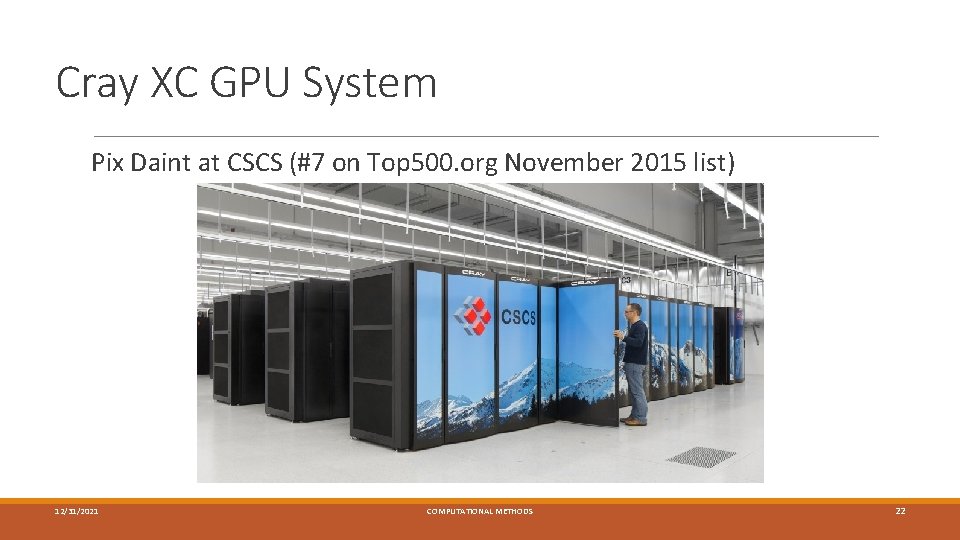

Cray XC GPU System

- Piz Daint at CSCS (#7 on Top500.org November 2015 list)

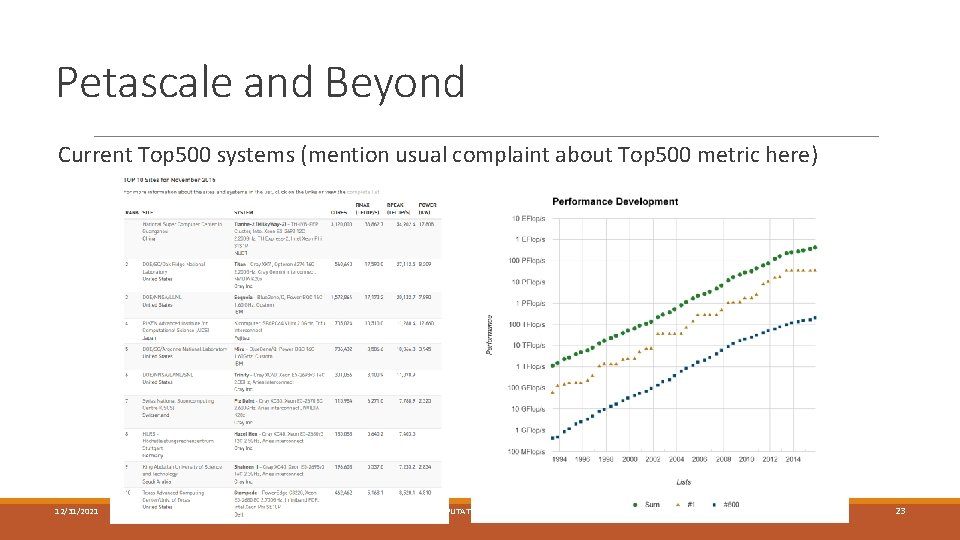

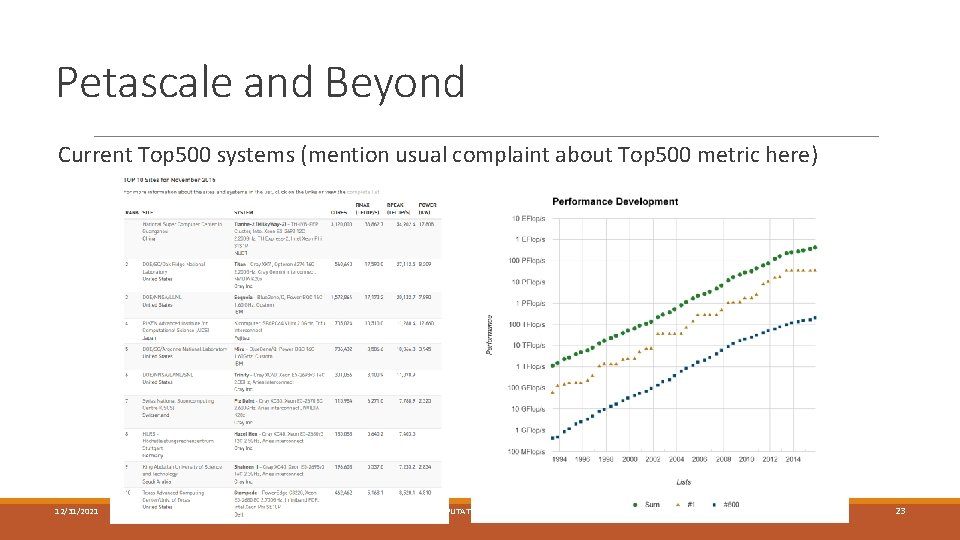

Petascale and Beyond

- Current Top500 systems (mention usual complaint about Top500 metric here)

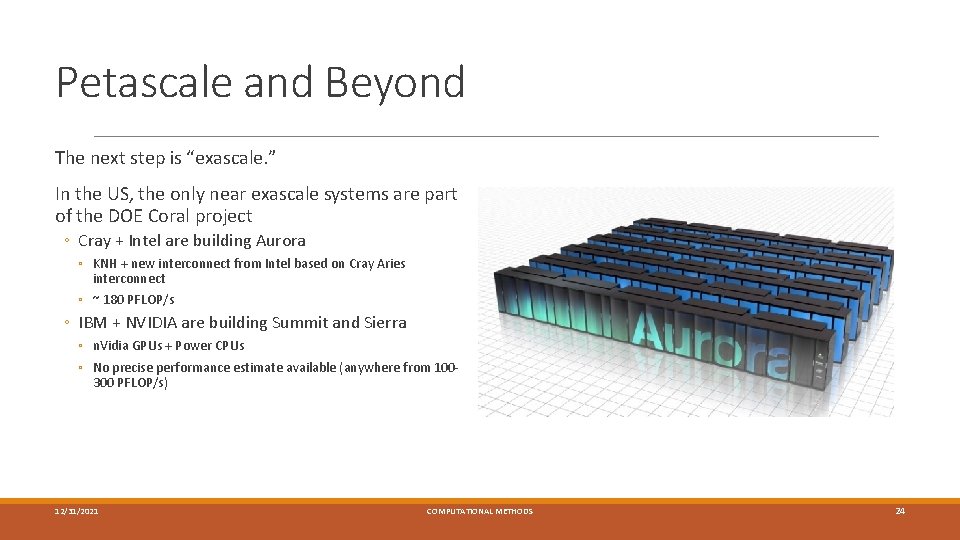

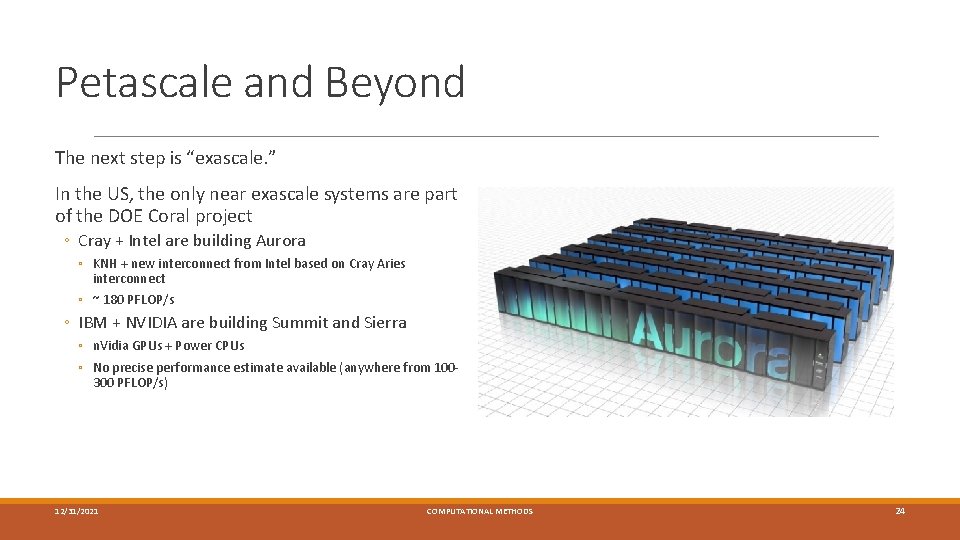

Petascale and Beyond

- The next step is "exascale."
- In the US, the only near-exascale systems are part of the DOE CORAL project
  ◦ Cray + Intel are building Aurora
    ◦ KNH + new interconnect from Intel based on Cray Aries interconnect
    ◦ ~180 PFLOP/s
  ◦ IBM + NVIDIA are building Summit and Sierra
    ◦ NVIDIA GPUs + Power CPUs
    ◦ No precise performance estimate available (anywhere from 100-300 PFLOP/s)

Petascale and Beyond

- These systems will vet MIC and GPUs as possible technologies on the path to exascale
- Both have their risks, and neither may end up getting us there
- Alternative technologies being investigated as part of DOE funded projects
  ◦ ARM (yes, cell phones have ARM, but that version of ARM will NOT be used in HPC, probably ever)
  ◦ Both NVIDIA and Intel have DOE funded projects on GPUs and MIC
- FPGAs (forget it unless something revolutionary happens with that technology)
- "Quantum" computers
  ◦ D-Wave systems are quantum-like analog computers able to solve exactly one class of problems, those that fit into minimization by annealing. These systems will NEVER be useful for forecasting the weather or simulating any physics.
  ◦ True quantum gates are being developed but are not scalable right now. This technology may be decades off yet.

Programming

- Tomorrow will be a very brief overview of how one programs a supercomputer.