HIERARCHY-AWARE LOSS FUNCTION ON A TREE STRUCTURED LABEL SPACE FOR AUDIO EVENT DETECTION
Arindam Jati, Naveen Kumar, Ruxin Chen, Panayiotis Georgiou
Presenter: Jaekwon Yoo, Sony Interactive Entertainment
© Arindam Jati, 2019

Agenda
• Audio event detection
• Hierarchy in audio events
• Goals of hierarchical audio event detection
• Hierarchy-aware metric learning
• Balanced triplet loss
• Quadruplet loss
• Multi-task training framework
• Results
• Conclusions and future work

Audio Event Detection
• Understanding and recognizing the semantic class associated with an audio recording
• The semantic class is generally human-annotated
• Numerous applications in
  • Audio content understanding and retrieval
  • Surveillance
  • Traffic monitoring
  • Wildlife monitoring
  • Health and lifestyle monitoring systems
• Audio event detection vs. classification
  • Detection: recognize multiple sound effects from a mixed audio clip at different time instants
  • Classification: identify the audio event class of an audio clip (this paper)

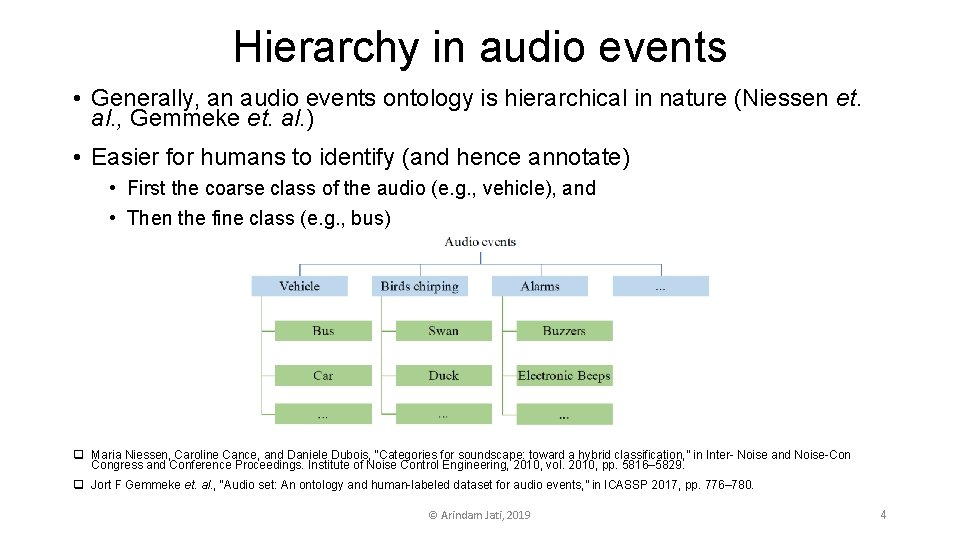

Hierarchy in audio events
• Generally, an audio event ontology is hierarchical in nature (Niessen et al., Gemmeke et al.)
• Easier for humans to identify (and hence annotate)
  • First the coarse class of the audio (e.g., vehicle), and
  • Then the fine class (e.g., bus)

• Maria Niessen, Caroline Cance, and Daniele Dubois, “Categories for soundscape: toward a hybrid classification,” in Inter-Noise and Noise-Con Congress and Conference Proceedings. Institute of Noise Control Engineering, 2010, vol. 2010, pp. 5816–5829.
• Jort F Gemmeke et al., “Audio set: An ontology and human-labeled dataset for audio events,” in ICASSP 2017, pp. 776–780.

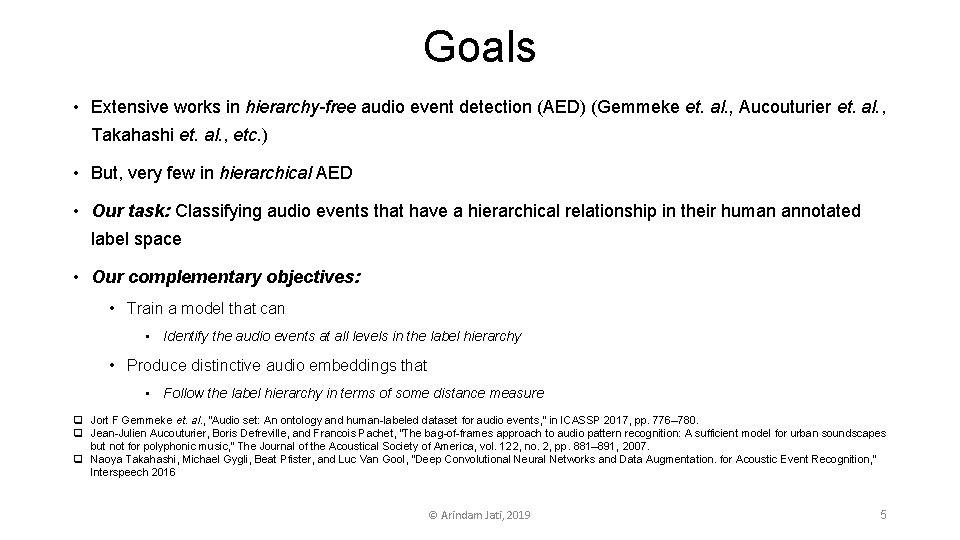

Goals
• Extensive work exists on hierarchy-free audio event detection (AED) (Gemmeke et al., Aucouturier et al., Takahashi et al., etc.)
• But very little on hierarchical AED
• Our task: classifying audio events that have a hierarchical relationship in their human-annotated label space
• Our complementary objectives: train a model that can
  • Identify the audio events at all levels in the label hierarchy
  • Produce distinctive audio embeddings that follow the label hierarchy in terms of some distance measure

• Jort F Gemmeke et al., “Audio set: An ontology and human-labeled dataset for audio events,” in ICASSP 2017, pp. 776–780.
• Jean-Julien Aucouturier, Boris Defreville, and Francois Pachet, “The bag-of-frames approach to audio pattern recognition: A sufficient model for urban soundscapes but not for polyphonic music,” The Journal of the Acoustical Society of America, vol. 122, no. 2, pp. 881–891, 2007.
• Naoya Takahashi, Michael Gygli, Beat Pfister, and Luc Van Gool, “Deep Convolutional Neural Networks and Data Augmentation for Acoustic Event Recognition,” Interspeech 2016.

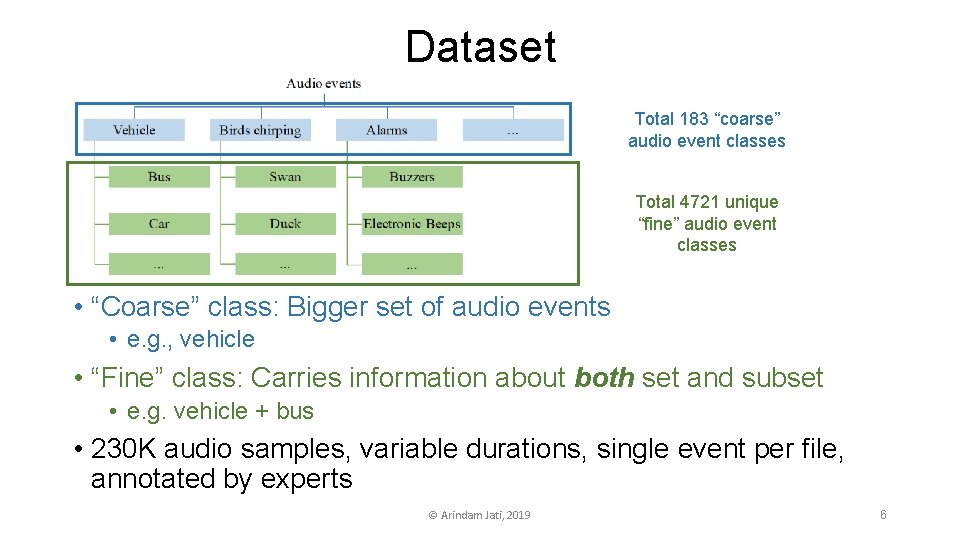

Dataset
• Total 183 “coarse” audio event classes
• Total 4721 unique “fine” audio event classes
• “Coarse” class: bigger set of audio events
  • e.g., vehicle
• “Fine” class: carries information about both set and subset
  • e.g., vehicle + bus
• 230K audio samples, variable durations, single event per file, annotated by experts
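
The two-level labels above can be represented as a small tree. The sketch below is only an illustration: it assumes fine labels are stored as strings of the form `coarse+fine` (mirroring the "vehicle + bus" example), and the helper name `split_fine_label` and the labels other than `vehicle+bus` are hypothetical.

```python
def split_fine_label(fine_label, separator="+"):
    """Split a 'coarse+fine' string into (coarse, subset). Assumed label format."""
    coarse, _, subset = fine_label.partition(separator)
    return coarse, subset

# Hypothetical fine labels; only "vehicle+bus" mirrors the slide's example.
fine_labels = ["vehicle+bus", "vehicle+car", "animal+dog"]

# Map each coarse class to the fine classes underneath it (the label tree).
children = {}
for label in fine_labels:
    coarse, _ = split_fine_label(label)
    children.setdefault(coarse, []).append(label)
```

Because every fine label carries its coarse parent, the coarse label never needs to be annotated separately under this assumed encoding.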

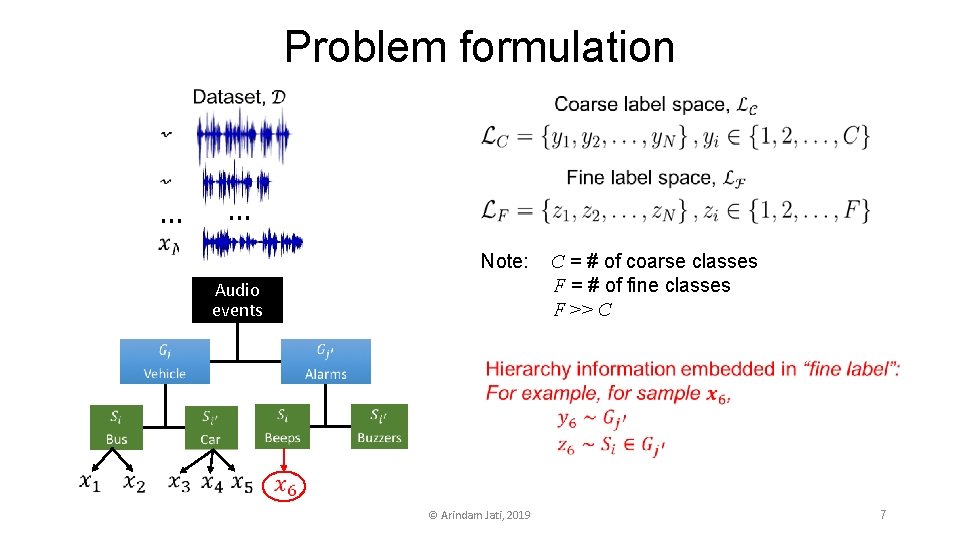

Problem formulation
Note:
• C = # of coarse classes
• F = # of fine classes
• F >> C

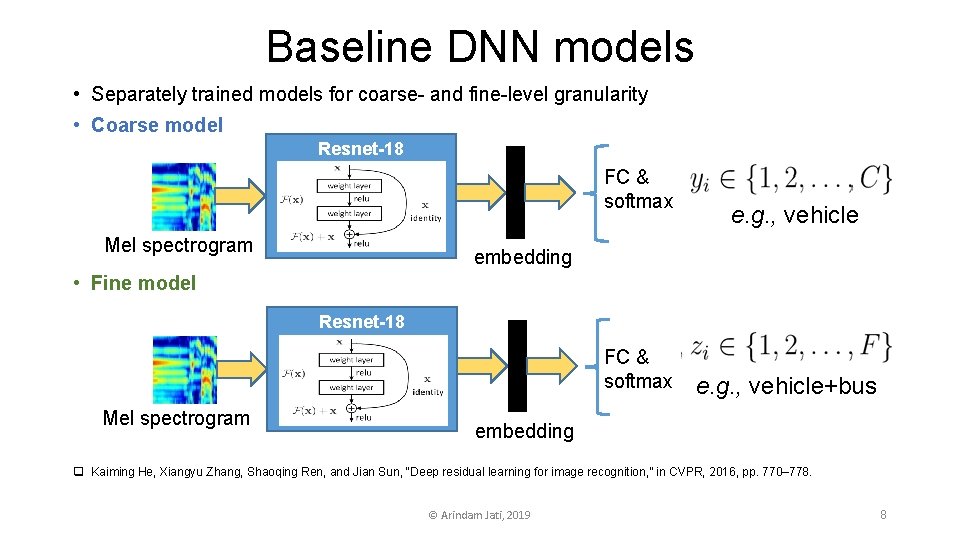

Baseline DNN models
• Separately trained models for coarse- and fine-level granularity
• Coarse model: Mel spectrogram → ResNet-18 → embedding → FC & softmax → e.g., vehicle
• Fine model: Mel spectrogram → ResNet-18 → embedding → FC & softmax → e.g., vehicle+bus

• Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun, “Deep residual learning for image recognition,” in CVPR, 2016, pp. 770–778.

Hierarchy-aware metric learning
• Motivation:
  • Fine-grained feature representation / embedding
  • Compare the audio events at different levels in their taxonomy
  • Make better use of fewer samples at finer level
• Similarity-based metric learning:
  • Pairwise contrastive loss
  • Triplet loss
• Problem with basic metric learning:
  • Does not consider hierarchical label structures

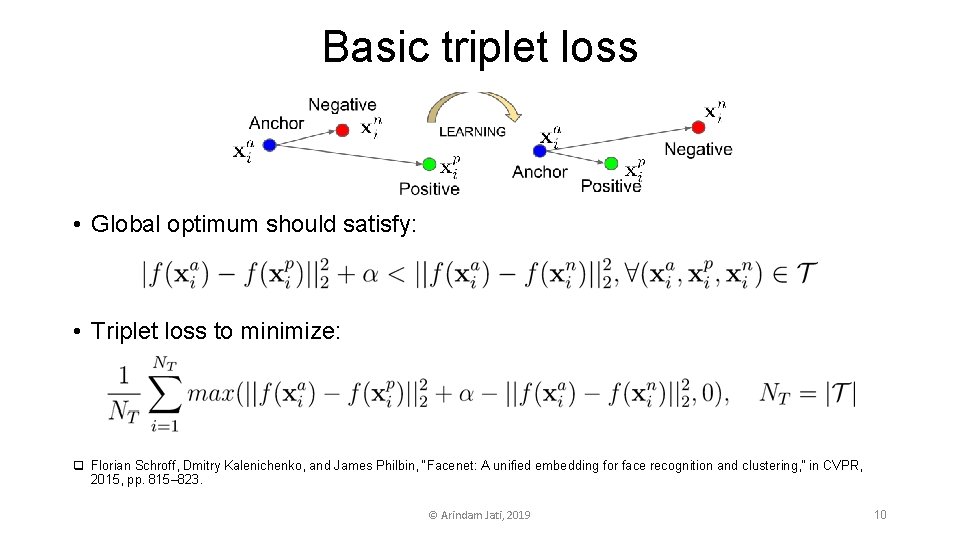

Basic triplet loss
• Global optimum should satisfy:
• Triplet loss to minimize:

• Florian Schroff, Dmitry Kalenichenko, and James Philbin, “Facenet: A unified embedding for face recognition and clustering,” in CVPR, 2015, pp. 815–823.
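
The slide's equations are not preserved in the text, so the following is only a sketch of the standard hinge-style triplet loss in the spirit of the cited FaceNet reference: the anchor should be closer to the positive than to the negative by at least a margin. The squared Euclidean distance and the margin value of 0.2 are assumptions, not values taken from the slides.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge-style triplet loss: max(0, d(a, p) - d(a, n) + margin)."""
    d_ap = squared_distance(anchor, positive)
    d_an = squared_distance(anchor, negative)
    return max(0.0, d_ap - d_an + margin)

anchor = [0.0, 0.0]
positive = [0.1, 0.0]
negative = [1.0, 0.0]

# The negative is already far from the anchor, so the constraint holds.
easy = triplet_loss(anchor, positive, negative)
# Swapping the roles violates the constraint and yields a positive loss.
hard = triplet_loss(anchor, negative, positive)
```

The loss is zero exactly when the margin constraint is satisfied, which is why only violating triplets contribute to training.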

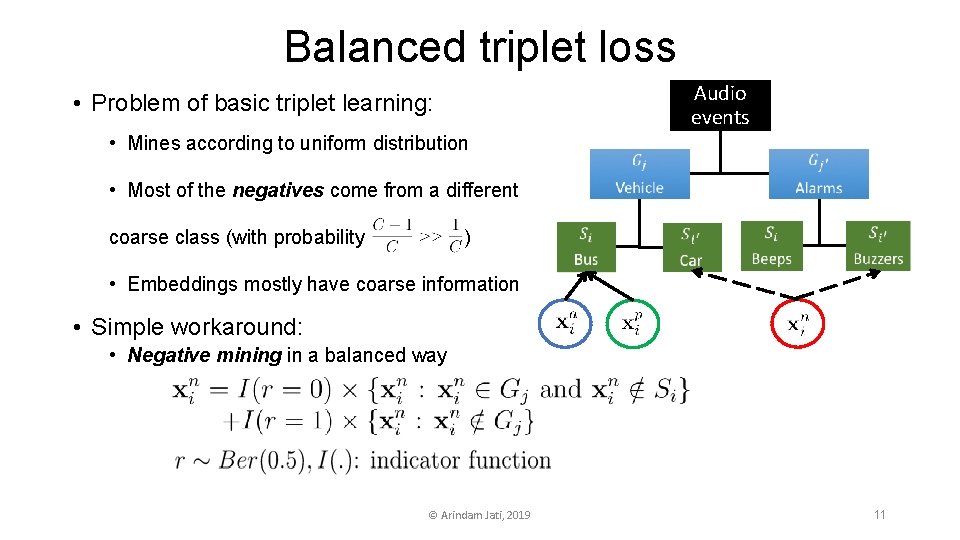

Balanced triplet loss
• Problem of basic triplet learning:
  • Mines negatives according to a uniform distribution
  • Most of the negatives come from a different coarse class (with probability )
  • Embeddings mostly carry coarse information
• Simple workaround:
  • Negative mining in a balanced way
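
One way to read "negative mining in a balanced way" is sketched below. It assumes, as an illustration only, that half of the negatives are drawn from the anchor's own coarse class (with a different fine class) and half from other coarse classes. The function name `sample_negative`, the parameter `p_same_coarse`, and the toy labels are hypothetical; the exact balancing scheme is not given in the text.

```python
import random

def sample_negative(anchor_idx, labels, rng, p_same_coarse=0.5):
    """Pick a negative index for the anchor.

    labels is a list of (coarse, fine) tuples. With probability
    p_same_coarse the negative shares the anchor's coarse class but has a
    different fine class; otherwise it comes from a different coarse class.
    Falls back to whichever pool is non-empty.
    """
    coarse, fine = labels[anchor_idx]
    same_coarse = [i for i, (c, f) in enumerate(labels) if c == coarse and f != fine]
    diff_coarse = [i for i, (c, f) in enumerate(labels) if c != coarse]
    if same_coarse and (not diff_coarse or rng.random() < p_same_coarse):
        return rng.choice(same_coarse)
    return rng.choice(diff_coarse)

# Toy label set (hypothetical): three coarse classes, two fine classes each.
labels = [("vehicle", "bus"), ("vehicle", "car"),
          ("animal", "dog"), ("animal", "cat"),
          ("weather", "rain"), ("weather", "wind")]

rng = random.Random(0)
negatives = [sample_negative(0, labels, rng) for _ in range(1000)]
# Share of negatives that sit under the anchor's own coarse class.
same_share = sum(labels[i][0] == "vehicle" for i in negatives) / len(negatives)
```

In this toy set, uniform sampling would pick a same-coarse negative only one time in five, whereas the balanced sampler picks one about half of the time, so the embedding is pushed to separate fine classes as well.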

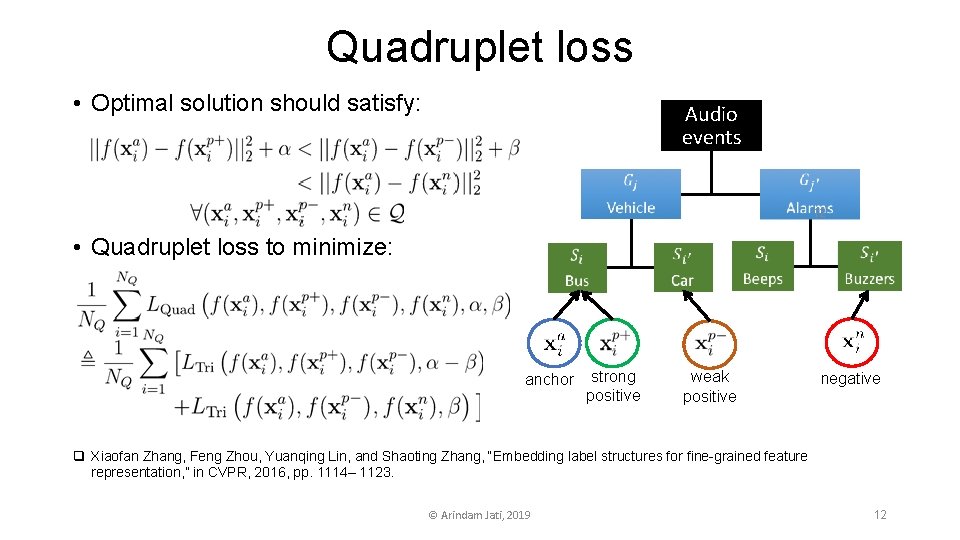

Quadruplet loss
• Optimal solution should satisfy:
• Quadruplet loss to minimize:
• Each quadruplet: anchor, strong positive, weak positive, negative

• Xiaofan Zhang, Feng Zhou, Yuanqing Lin, and Shaoting Zhang, “Embedding label structures for fine-grained feature representation,” in CVPR, 2016, pp. 1114–1123.
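
The equations for this slide are not preserved in the text. The sketch below assumes a two-margin hinge form in the spirit of the cited Zhang et al. reference, where the strong positive shares the anchor's fine class, the weak positive shares only its coarse class, and the negative comes from a different coarse class. The margin values `m1` and `m2` and the squared Euclidean distance are assumptions.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def quadruplet_loss(anchor, strong_pos, weak_pos, negative, m1=0.4, m2=0.2):
    """Two hinge terms that together encourage
    d(a, strong_pos) + m1 < d(a, weak_pos) + m2 < d(a, negative),
    with m1 > m2 > 0 (assumed margins)."""
    d_sp = squared_distance(anchor, strong_pos)
    d_wp = squared_distance(anchor, weak_pos)
    d_n = squared_distance(anchor, negative)
    fine_term = max(0.0, d_sp - d_wp + m1 - m2)   # fine-level ordering
    coarse_term = max(0.0, d_wp - d_n + m2)       # coarse-level ordering
    return fine_term + coarse_term

anchor = [0.0, 0.0]
strong_pos = [0.1, 0.0]   # same fine class as the anchor
weak_pos = [0.8, 0.0]     # same coarse class, different fine class
negative = [2.0, 0.0]     # different coarse class

satisfied = quadruplet_loss(anchor, strong_pos, weak_pos, negative)
# Swapping the two positives breaks the fine-level ordering only.
violated = quadruplet_loss(anchor, weak_pos, strong_pos, negative)
```

The two terms correspond to the two levels of the label tree, which is one way to interpret the "bi-level constraints" mentioned in the results.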

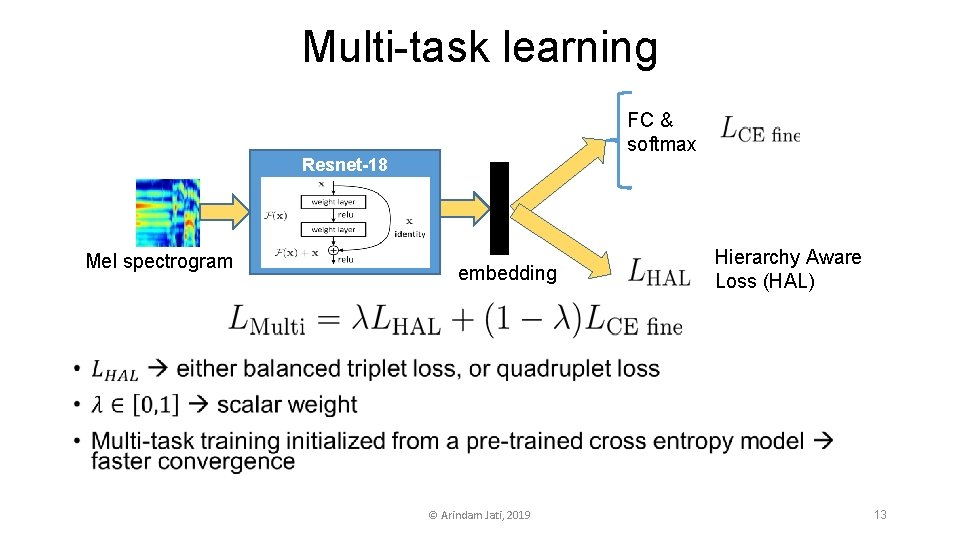

Multi-task learning
• Mel spectrogram → ResNet-18 → embedding → FC & softmax
• Hierarchy Aware Loss (HAL) on the embedding
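
The combined objective is not spelled out in the text. A minimal sketch, assuming the total loss is the softmax cross entropy plus a weighted hierarchy-aware loss computed on the embedding, is given below. The `weight` parameter and the function names are hypothetical.

```python
import math

def cross_entropy(logits, target_index):
    """Softmax cross entropy for one example, using a stable log-sum-exp."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum_exp - logits[target_index]

def multi_task_loss(logits, target_index, hierarchy_loss, weight=1.0):
    """Classification loss plus a weighted hierarchy-aware loss (assumed form)."""
    return cross_entropy(logits, target_index) + weight * hierarchy_loss

# Two equal logits give a cross entropy of ln(2); the hierarchy-aware loss
# value 0.5 stands in for a triplet or quadruplet loss on the embedding.
total = multi_task_loss([0.0, 0.0], 0, hierarchy_loss=0.5, weight=2.0)
```

Both terms share the same ResNet-18 embedding, so gradients from the classifier and from the metric loss shape the same representation.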

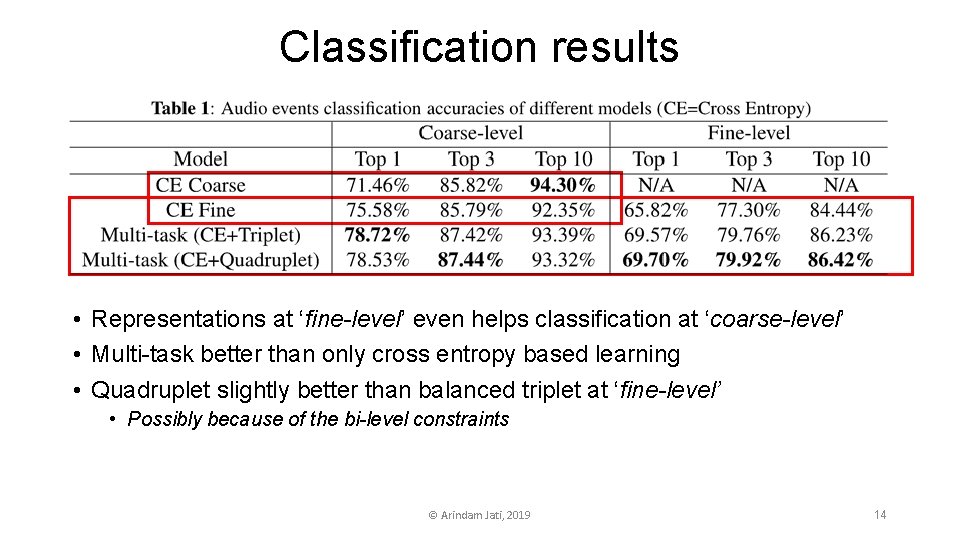

Classification results
• Representations at the ‘fine level’ even help classification at the ‘coarse level’
• Multi-task learning is better than learning based only on cross entropy
• Quadruplet is slightly better than balanced triplet at the ‘fine level’
  • Possibly because of the bi-level constraints

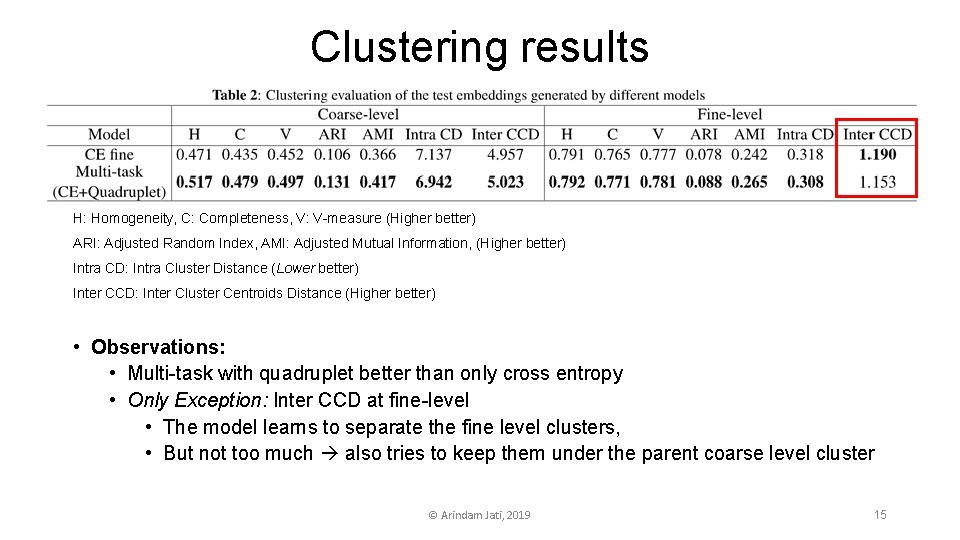

Clustering results
H: Homogeneity, C: Completeness, V: V-measure (higher is better)
ARI: Adjusted Rand Index, AMI: Adjusted Mutual Information (higher is better)
Intra CD: Intra Cluster Distance (lower is better)
Inter CCD: Inter Cluster Centroids Distance (higher is better)
• Observations:
  • Multi-task with quadruplet is better than only cross entropy
  • Only exception: Inter CCD at fine level
    • The model learns to separate the fine-level clusters,
    • but not too much; it also tries to keep them under the parent coarse-level cluster
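
The two distance-based metrics can be sketched as follows. The exact definitions used in the experiments are not given in the text, so this assumes Intra CD is the mean Euclidean distance from each point to its own cluster centroid and Inter CCD is the mean pairwise Euclidean distance between centroids. The toy clusters are hypothetical.

```python
import math
from itertools import combinations

def centroid(points):
    """Coordinate-wise mean of a list of equal-length vectors."""
    n = len(points)
    return [sum(coords) / n for coords in zip(*points)]

def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def intra_cluster_distance(clusters):
    """Mean distance from each point to the centroid of its own cluster."""
    dists = [euclidean(p, centroid(pts)) for pts in clusters.values() for p in pts]
    return sum(dists) / len(dists)

def inter_centroid_distance(clusters):
    """Mean pairwise distance between cluster centroids."""
    cents = [centroid(pts) for pts in clusters.values()]
    pairs = list(combinations(cents, 2))
    return sum(euclidean(u, v) for u, v in pairs) / len(pairs)

# Two toy fine-level clusters under one coarse class (hypothetical embeddings).
clusters = {
    "vehicle+bus": [[0.0, 0.0], [2.0, 0.0]],
    "vehicle+car": [[0.0, 4.0], [2.0, 4.0]],
}
intra = intra_cluster_distance(clusters)   # every point is 1.0 from its centroid
inter = inter_centroid_distance(clusters)  # centroids are (1, 0) and (1, 4)
```

Under these definitions, a hierarchy-aware model can keep Inter CCD moderate at the fine level because sibling fine clusters stay near their shared coarse parent.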

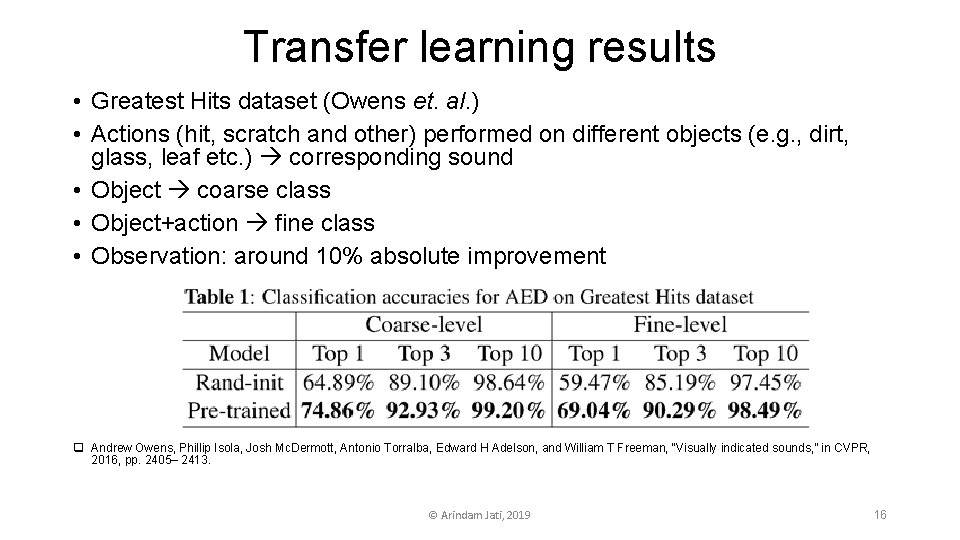

Transfer learning results
• Greatest Hits dataset (Owens et al.)
  • Actions (hit, scratch, and other) performed on different objects (e.g., dirt, glass, leaf, etc.), with the corresponding sound
  • Object: coarse class
  • Object + action: fine class
• Observation: around 10% absolute improvement

• Andrew Owens, Phillip Isola, Josh McDermott, Antonio Torralba, Edward H Adelson, and William T Freeman, “Visually indicated sounds,” in CVPR, 2016, pp. 2405–2413.

Conclusion and future work
• Bi-level hierarchical audio event detection task
• Hierarchy-aware loss functions
  • Learn from the tree-structured label ontology
  • Balanced triplet loss
  • Quadruplet loss
• Multi-task learning framework
• Classification, clustering, and transfer learning experiments

Future directions:
• Deeper and asymmetric label structures
• Unsupervised extension
