Hierarchies in Object Recognition and Classification Nat Twarog

6.870, November 24, 2008

What's a hierarchy?

Mathematics: a partial ordering. Wikipedia: a hierarchy is an arrangement of objects, people, elements, values, grades, orders, classes, etc., in a ranked or graduated series. Basically, a hierarchy is a system by which things are arranged in an order, typically with all but one item having a “parent” (or occasionally multiple “parents”) immediately above it, and any number of “children” immediately below it.

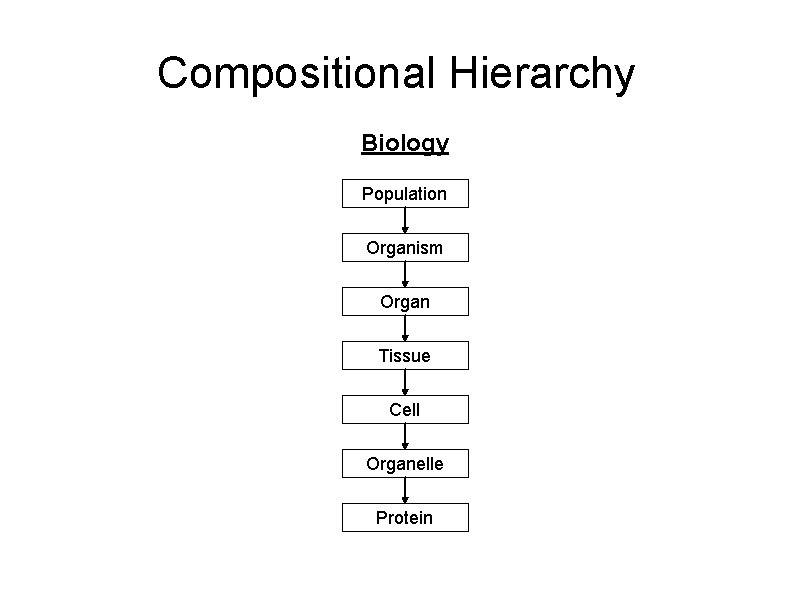

Compositional Hierarchy

Biology: Population, Organism, Organ, Tissue, Cell, Organelle, Protein.

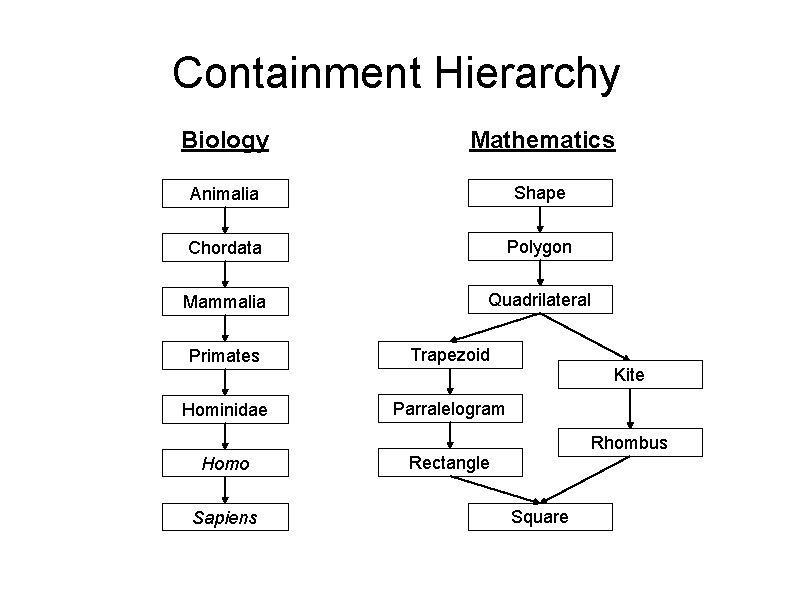

Containment Hierarchy

Biology: Animalia, Chordata, Mammalia, Primates, Hominidae, Homo sapiens.

Mathematics: Shape, Polygon, Quadrilateral, Trapezoid, Parallelogram, Kite, Rhombus, Rectangle, Square.

Feature Hierarchies for Object Classification. Boris Epshtein & Shimon Ullman, ICCV '05.

Visual Features of Intermediate Complexity and Their Use in Classification. Shimon Ullman, Michel Vidal-Naquet & Erez Sali, Nature Neuroscience, July 2002.

Motivation

Most object recognition schemes using parts or features fall into one of two categories: those using small, basic features like Gabor patches or SIFT features, and those using large, global, complex features like templates or constellations. However, small features return false positives far too often, and large global features are too picky, producing too many misses.

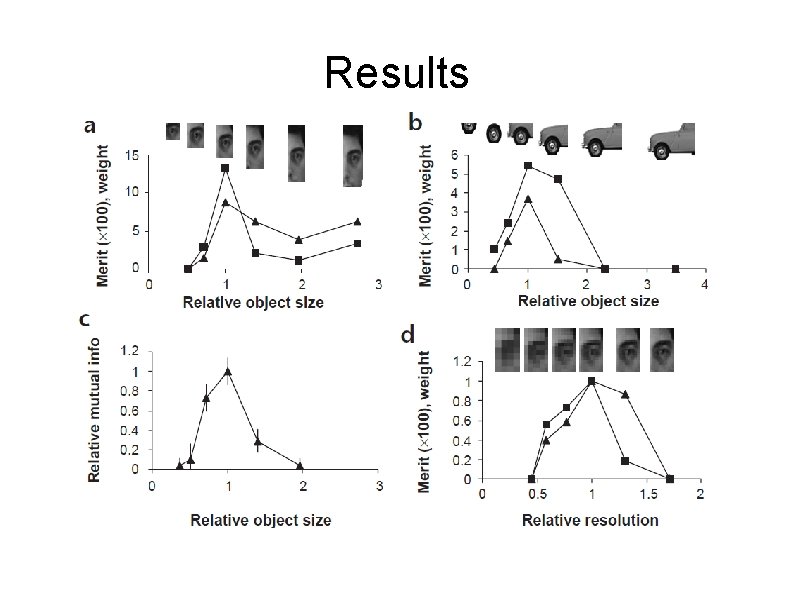

Intermediate Features - Better?

To test the hypothesis that features of intermediate complexity (IC) might be optimal for use in object classification, image fragments of varying size were extracted from images of frontal faces and side views of cars. Fragments were sorted by location, and for each fragment the mutual information with the class was calculated:

I(C, F) = H(C) - H(C|F)

where C denotes the class, F denotes the feature, and H denotes entropy.
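As a minimal sketch of the mutual information measure I(C, F) = H(C) - H(C|F), the following assumes both the class label and the fragment detection are binary (1 = present, 0 = absent) and are given as one value per image. The function names and the toy data are illustrative assumptions, not taken from the papers.

```python
import math

def entropy(probs):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def mutual_information(labels, detections):
    """I(C, F) = H(C) - H(C|F) for binary labels and binary detections."""
    n = len(labels)
    p_class = sum(labels) / n
    h_c = entropy([p_class, 1 - p_class])

    # H(C|F): entropy of the class within each detection outcome,
    # weighted by how often that outcome occurs.
    h_c_given_f = 0.0
    for outcome in (0, 1):
        subset = [c for c, d in zip(labels, detections) if d == outcome]
        if not subset:
            continue
        p_outcome = len(subset) / n
        p_class_in_subset = sum(subset) / len(subset)
        h_c_given_f += p_outcome * entropy(
            [p_class_in_subset, 1 - p_class_in_subset]
        )
    return h_c - h_c_given_f

# Toy data (assumed): four images, two of which contain the class.
labels = [1, 1, 0, 0]
perfect = mutual_information(labels, [1, 1, 0, 0])  # fires only on the class
useless = mutual_information(labels, [1, 0, 1, 0])  # fires independently of it
```

A fragment that fires exactly on the class images carries the full entropy of the class, while one that fires independently of the class carries none.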

Results

Conclusion

IC features carry more information than small, simple features and large, complex ones. The rationale is simple: IC features offer the best trade-off between frequency and specificity. But the use of IC features raises a new problem: there are far more IC features than small, simple features. Solution: hierarchical object classification.

Hierarchical Classification

Basic idea: in traditional object classification, objects are detected from a sufficient presence or arrangement of some subset of features, e.g. Cow ~ body, tail, legs, head. If features are too simple, there are too many false positives; if too complex, too many misses. Solution: represent parts as their own network of subparts, e.g. Head ~ eyes, snout, ears, neck.

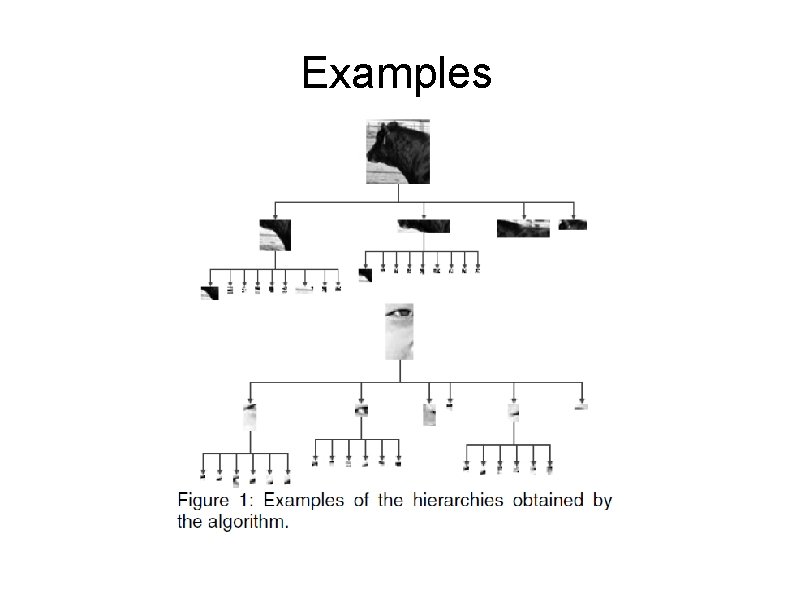

Examples

Previous Work

Several algorithms have been proposed using more informative IC features, but without any hierarchical representation. Other algorithms have used hierarchical representations of features, but the hierarchies had predefined structures and/or used features which were significantly less informative than IC features.

Structure of Training Algorithm

Find the most informative set of IC features. For each feature, find the most informative set of sub-features. Continue subdivision until sub-features no longer significantly increase informativeness. Select weights and regions of interest for all features and sub-features by optimizing mutual information.

Find informative features

For a given class, collect more than 10,000 image fragments from positive images (size 10%-50% of the image on either axis). Select the fragment with the highest mutual information. For each subsequent fragment, choose the one which provides the most additional information. Continue until the best remaining fragment adds insignificant information or a set number of features is reached.
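The greedy selection loop can be sketched as follows. The slides do not say how "additional information" is measured; this sketch assumes one common choice, the smallest gain in joint mutual information over any already-selected fragment. The function names, the `min_gain` threshold, and the toy data are all hypothetical.

```python
import math
from collections import Counter

def mutual_information(labels, values):
    """I(C; V) in bits; values can be any hashable observations."""
    n = len(labels)
    joint = Counter(zip(labels, values))
    class_counts = Counter(labels)
    value_counts = Counter(values)
    return sum(
        (count / n) * math.log2(count * n / (class_counts[c] * value_counts[v]))
        for (c, v), count in joint.items()
    )

def greedy_select(labels, detections, max_features, min_gain=1e-3):
    """detections maps a fragment name to a list of 0/1 detections per image."""
    first = max(detections, key=lambda k: mutual_information(labels, detections[k]))
    selected = [first]

    def gain(name):
        # Assumed criterion: worst-case extra information relative to
        # each fragment that has already been selected.
        return min(
            mutual_information(labels, list(zip(detections[name], detections[s])))
            - mutual_information(labels, detections[s])
            for s in selected
        )

    while len(selected) < max_features:
        remaining = [k for k in detections if k not in selected]
        if not remaining:
            break
        candidate = max(remaining, key=gain)
        if gain(candidate) < min_gain:
            break  # best remaining fragment adds insignificant information
        selected.append(candidate)
    return selected

# Toy data (assumed): "a_copy" duplicates "a", "b" covers the positive "a" misses.
labels = [1, 1, 1, 1, 0, 0, 0, 0]
detections = {
    "a": [1, 1, 1, 0, 0, 0, 0, 0],
    "a_copy": [1, 1, 1, 0, 0, 0, 0, 0],
    "b": [0, 0, 0, 1, 0, 0, 0, 0],
}
chosen = greedy_select(labels, detections, max_features=3)
```

In the toy data the duplicate fragment is never chosen, because it adds nothing once the original is in the set, even though on its own it is as informative as the first pick.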

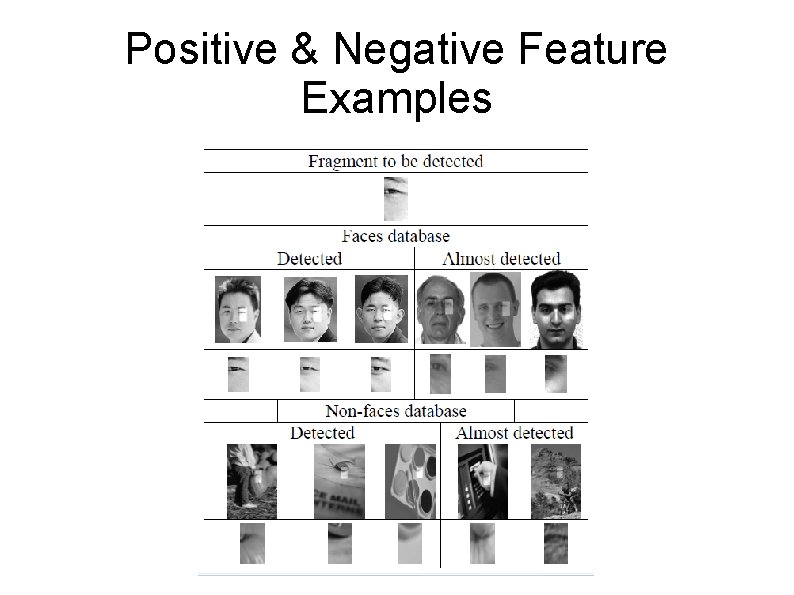

Finding Sub-features

For any feature, the aim is to repeat the above process using that feature as the target, rather than the overall object. Positive examples are thus fragments in class-positive images where the feature is detected or almost detected. Negative examples are fragments in class-negative images where the feature is detected or almost detected. Sub-features are selected as before; if the set of sub-features increases information, it is added to the hierarchy.
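The recursive structure of this step, including its stopping rule, can be sketched in a few lines. Both callables passed in (`propose_subfeatures` and `information_gain`) are hypothetical placeholders for the fragment selection and mutual information computations; the threshold value and the toy tables are likewise assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class FeatureNode:
    name: str
    children: List["FeatureNode"] = field(default_factory=list)

def build_hierarchy(
    node: FeatureNode,
    propose_subfeatures: Callable[[str], List[str]],
    information_gain: Callable[[str, List[str]], float],
    min_gain: float = 0.01,
) -> FeatureNode:
    """Attach sub-features to a node only while they add information."""
    candidates = propose_subfeatures(node.name)
    if not candidates or information_gain(node.name, candidates) < min_gain:
        return node  # this feature stays a leaf
    for name in candidates:
        child = build_hierarchy(
            FeatureNode(name), propose_subfeatures, information_gain, min_gain
        )
        node.children.append(child)
    return node

# Toy lookup tables standing in for real fragment statistics (assumed values).
subparts = {"face": ["eye", "mouth"], "eye": ["pupil"]}
gains = {"face": 0.30, "eye": 0.001}

root = build_hierarchy(
    FeatureNode("face"),
    lambda name: subparts.get(name, []),
    lambda name, subs: gains.get(name, 0.0),
)
```

Here "eye" has a candidate sub-feature, but the gain falls below the threshold, so the subdivision stops and "eye" remains a leaf.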

Positive & Negative Feature Examples

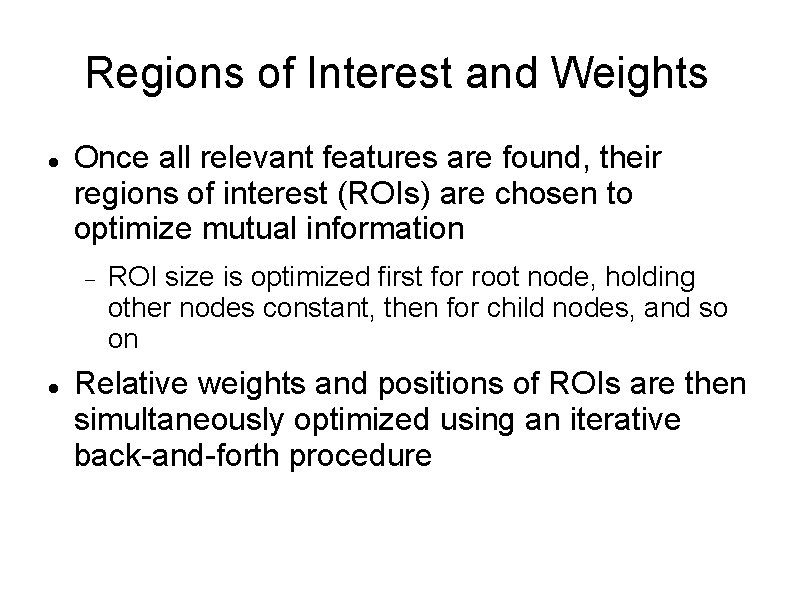

Regions of Interest and Weights

Once all relevant features are found, their regions of interest (ROIs) are chosen to optimize mutual information. ROI size is optimized first for the root node, holding other nodes constant, then for child nodes, and so on. Relative weights and positions of ROIs are then simultaneously optimized using an iterative back-and-forth procedure.

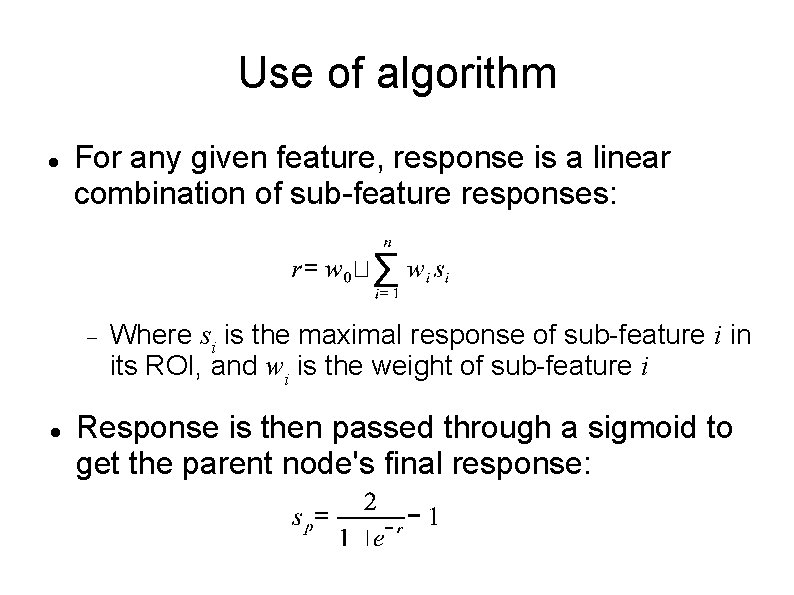

Use of algorithm

For any given feature, the response is a linear combination of sub-feature responses, of the form $r = \sum_i w_i s_i$, where $s_i$ is the maximal response of sub-feature $i$ in its ROI and $w_i$ is the weight of sub-feature $i$. The response is then passed through a sigmoid to get the parent node's final response.
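A bottom-up evaluation of such a hierarchy might look like the sketch below. Two simplifications are assumed: the sigmoid is taken to be the logistic function (the slides only say "a sigmoid"), and the maximum over an ROI is computed only at the leaves, from a precomputed list of similarity scores. The class and field names are illustrative.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Node:
    name: str
    weights: List[float] = field(default_factory=list)   # one weight per child
    children: List["Node"] = field(default_factory=list)

def sigmoid(x: float) -> float:
    """Logistic function (an assumed choice of sigmoid)."""
    return 1.0 / (1.0 + math.exp(-x))

def response(node: Node, roi_scores: Dict[str, List[float]]) -> float:
    """Response of a node given per-leaf similarity scores inside each ROI."""
    if not node.children:
        # s_i: maximal response of the fragment within its ROI.
        return max(roi_scores[node.name])
    # Weighted sum of sub-feature responses, squashed by the sigmoid.
    total = sum(
        w * response(child, roi_scores)
        for w, child in zip(node.weights, node.children)
    )
    return sigmoid(total)

# Toy hierarchy and scores (assumed values).
face = Node("face", weights=[1.0, 1.0], children=[Node("eye"), Node("mouth")])
scores = {"eye": [0.2, 0.9], "mouth": [0.9, 0.1]}
face_response = response(face, scores)
```

Each leaf contributes its best match in its ROI (0.9 for both here), so the root sees a weighted sum of 1.8 before the sigmoid is applied.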

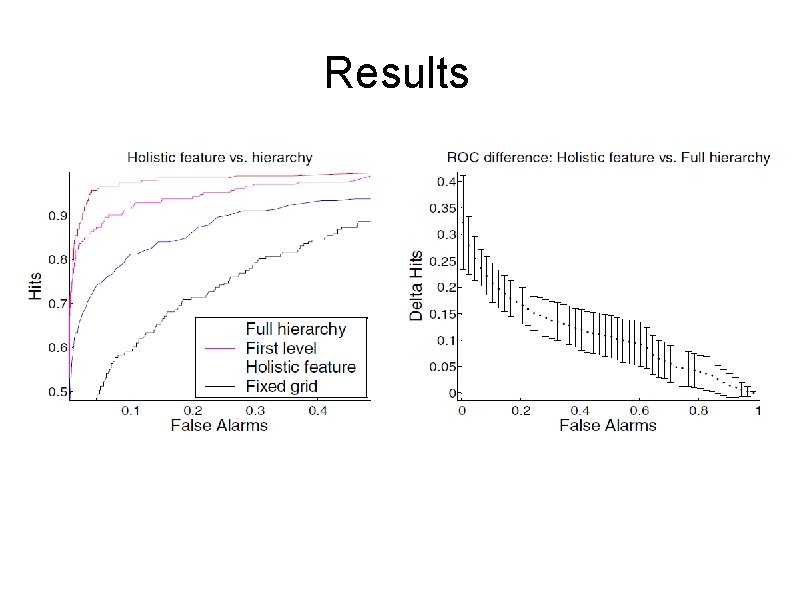

Results

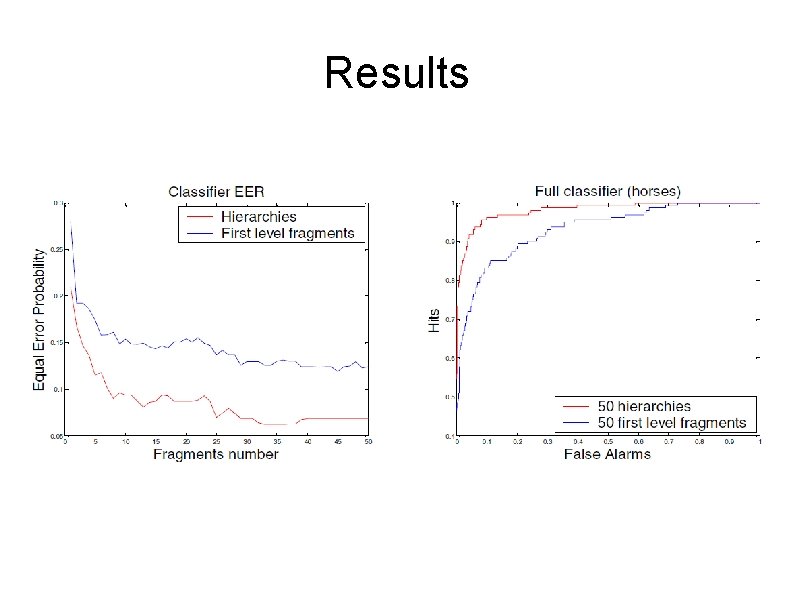

Issues and Questions

Optimization of features is not quite complete: features are chosen for maximum mutual information and only then have their ROIs optimized, so it's possible that some ultimately more optimal features are excluded. The application process is not as computationally efficient as it could be: a response map must be calculated over the entire image for every node in the hierarchy. Use of actual image fragments as features seems sub-optimal.

Coarse-to-Fine Classification and Scene Labeling. Donald Geman, 2003.

Hierarchical Object Indexing and Sequential Learning. Xiaodong Fan & Donald Geman, ICPR 2004.

Multi-class Object Classification

Suppose we wish to read symbols off of a license plate. With most algorithms we would test for the presence of every possible symbol one at a time, using a very large number of relevant object features. This is slow, inefficient, and often inaccurate.

Multi-class Object Classification

Ideally, a classifier would test for multiple classes simultaneously. In addition, the classifier should not be forced to fully check for every class at every location. How to accomplish this? Let's play Twenty Questions.

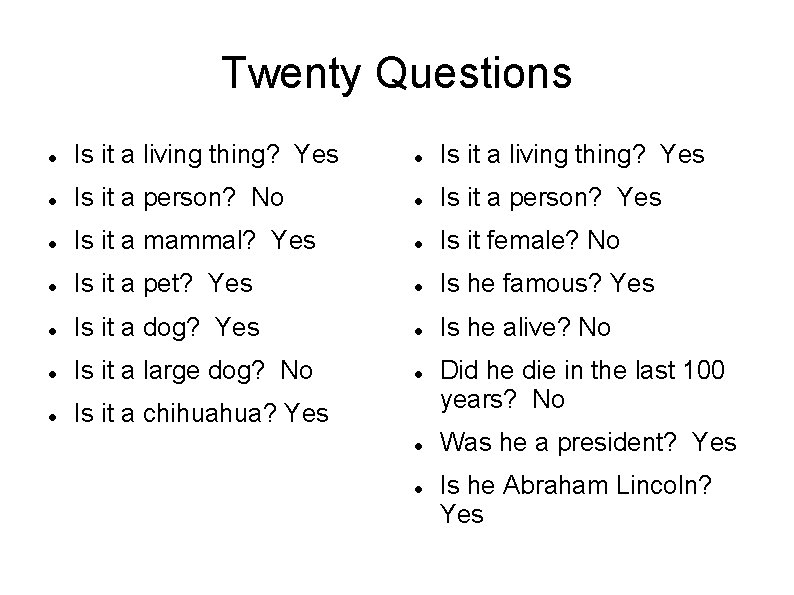

Twenty Questions

Game 1: Is it a living thing? Yes. Is it a person? No. Is it a mammal? Yes. Is it a pet? Yes. Is it a dog? Yes. Is it a large dog? No. Is it a chihuahua? Yes.

Game 2: Is it a person? Yes. Is it female? No. Is he famous? Yes. Is he alive? No. Did he die in the last 100 years? No. Was he a president? Yes. Is he Abraham Lincoln? Yes.

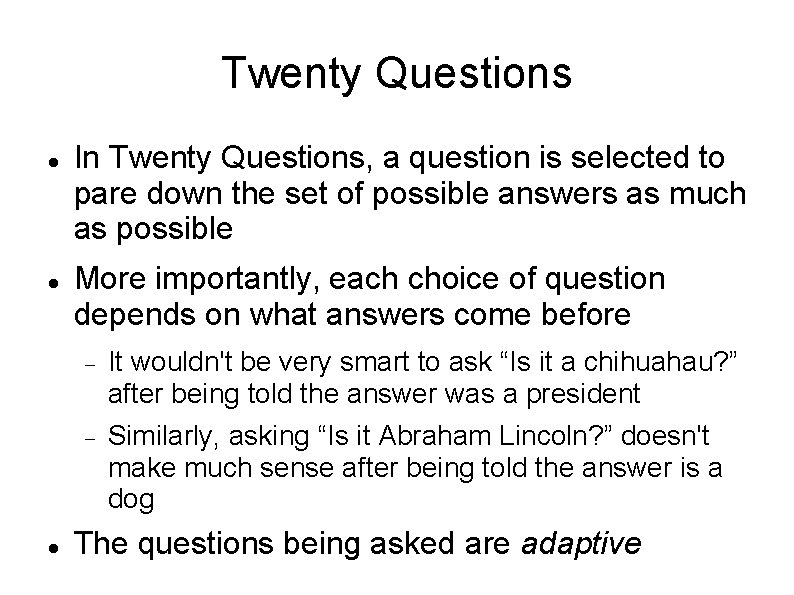

Twenty Questions

In Twenty Questions, a question is selected to pare down the set of possible answers as much as possible. More importantly, each choice of question depends on the answers that came before. It wouldn't be very smart to ask “Is it a chihuahua?” after being told the answer was a president. Similarly, asking “Is it Abraham Lincoln?” doesn't make much sense after being told the answer is a dog. The questions being asked are adaptive.
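Adaptive question selection can be illustrated with a small sketch. It assumes one simple criterion, picking the yes/no question that leaves the fewest candidates in the worst case; the candidate set, the tags, and the function name are made up for illustration.

```python
def best_question(candidates, questions):
    """Return the question whose worse answer leaves the fewest candidates.

    candidates: dict mapping a candidate name to a set of descriptive tags
    questions:  dict mapping question text to the tag it asks about
    """
    def worst_case_remaining(text):
        tag = questions[text]
        yes = sum(1 for tags in candidates.values() if tag in tags)
        return max(yes, len(candidates) - yes)

    return min(questions, key=worst_case_remaining)

# Toy candidate set (assumed).
candidates = {
    "Abraham Lincoln": {"person", "president"},
    "Marie Curie": {"person"},
    "chihuahua": {"dog"},
    "great dane": {"dog", "large"},
}
questions = {
    "Is it a person?": "person",
    "Was he a president?": "president",
    "Is it a large dog?": "large",
}

first = best_question(candidates, questions)

# Adaptivity: after a "yes" to the first question, re-select among survivors.
people = {name: tags for name, tags in candidates.items() if "person" in tags}
second = best_question(people, questions)
```

The same question bank yields a different best question once the candidate set has shrunk, which is the adaptive behaviour the slide describes.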

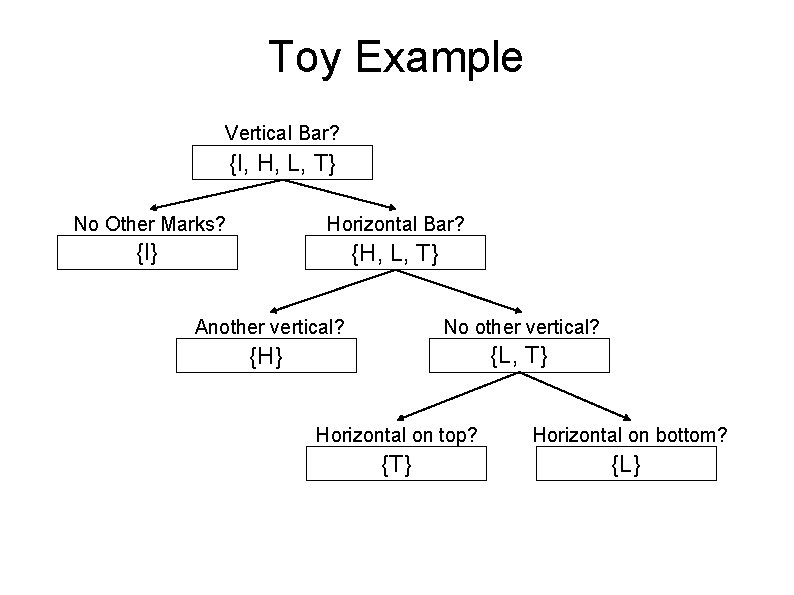

Coarse-to-Fine Classification

Apply a similar principle to object classification. Begin with broad tests to eliminate large numbers of possible hypotheses. According to the results of those tests, apply successively more specific tests. This set of tests creates a containment hierarchy of hypotheses.

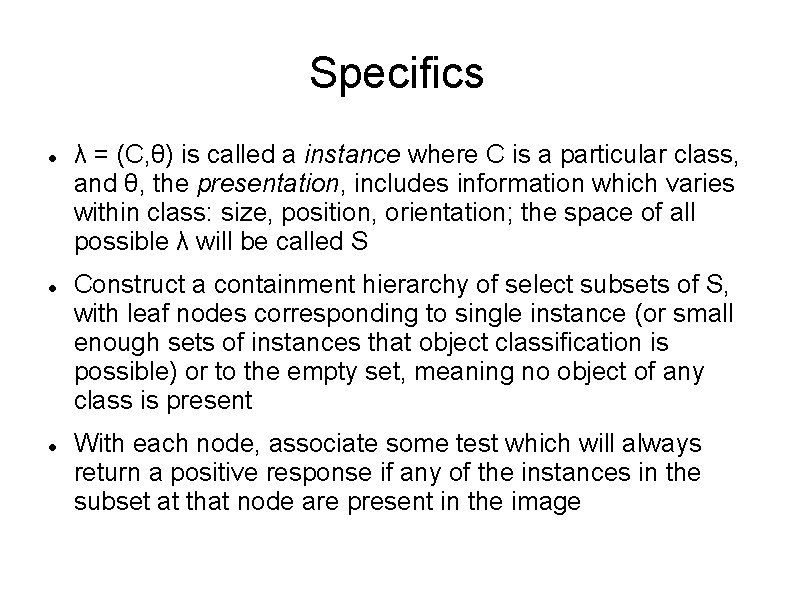

Specifics

λ = (C, θ) is called an instance, where C is a particular class and θ, the presentation, includes information which varies within a class: size, position, orientation. The space of all possible λ is called S. Construct a containment hierarchy of selected subsets of S, with leaf nodes corresponding either to a single instance (or a set of instances small enough that object classification is possible) or to the empty set, meaning no object of any class is present. With each node, associate a test which always returns a positive response if any of the instances in the subset at that node is present in the image.

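Toy Example

Root test: Vertical bar? Covers {I, H, L, T}; a negative response means none of them is present.

Second level: No other marks? Covers {I}. Horizontal bar? Covers {H, L, T}.

Third level, under {H, L, T}: Another vertical? Covers {H}. No other vertical? Covers {L, T}.

Fourth level, under {L, T}: Horizontal on top? Covers {T}. Horizontal on bottom? Covers {L}.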
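The toy hierarchy over {I, H, L, T} can be written out as a small coarse-to-fine search. The sketch assumes an image has already been reduced to a set of named strokes (a hypothetical encoding); a node's children are tested only if the node's own test passes, and the function also reports how many tests were run.

```python
from dataclasses import dataclass, field
from typing import Callable, FrozenSet, List, Set, Tuple

@dataclass
class HypothesisNode:
    hypotheses: FrozenSet[str]
    test: Callable[[Set[str]], bool]
    children: List["HypothesisNode"] = field(default_factory=list)

def coarse_to_fine(node: HypothesisNode, strokes: Set[str]) -> Tuple[Set[str], int]:
    """Return (surviving leaf hypotheses, number of tests evaluated)."""
    if not node.test(strokes):
        return set(), 1          # prune the whole subtree after one test
    if not node.children:
        return set(node.hypotheses), 1
    found, cost = set(), 1
    for child in node.children:
        sub_found, sub_cost = coarse_to_fine(child, strokes)
        found |= sub_found
        cost += sub_cost
    return found, cost

def has_horizontal(strokes: Set[str]) -> bool:
    return any(s.startswith("horizontal") for s in strokes)

# The hierarchy from the toy example; stroke names are assumed.
leaf_t = HypothesisNode(frozenset({"T"}), lambda s: "horizontal_top" in s)
leaf_l = HypothesisNode(frozenset({"L"}), lambda s: "horizontal_bottom" in s)
node_lt = HypothesisNode(frozenset({"L", "T"}),
                         lambda s: "second_vertical" not in s, [leaf_t, leaf_l])
node_h = HypothesisNode(frozenset({"H"}), lambda s: "second_vertical" in s)
node_hlt = HypothesisNode(frozenset({"H", "L", "T"}), has_horizontal,
                          [node_h, node_lt])
node_i = HypothesisNode(frozenset({"I"}), lambda s: s == {"vertical"})
root = HypothesisNode(frozenset({"I", "H", "L", "T"}),
                      lambda s: "vertical" in s, [node_i, node_hlt])

result_t = coarse_to_fine(root, {"vertical", "horizontal_top"})
result_blank = coarse_to_fine(root, set())
```

A blank region is rejected after a single test at the root, while a region that actually contains a letter triggers the deeper, more specific tests. This uneven spending of computation is where the efficiency of the approach comes from.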

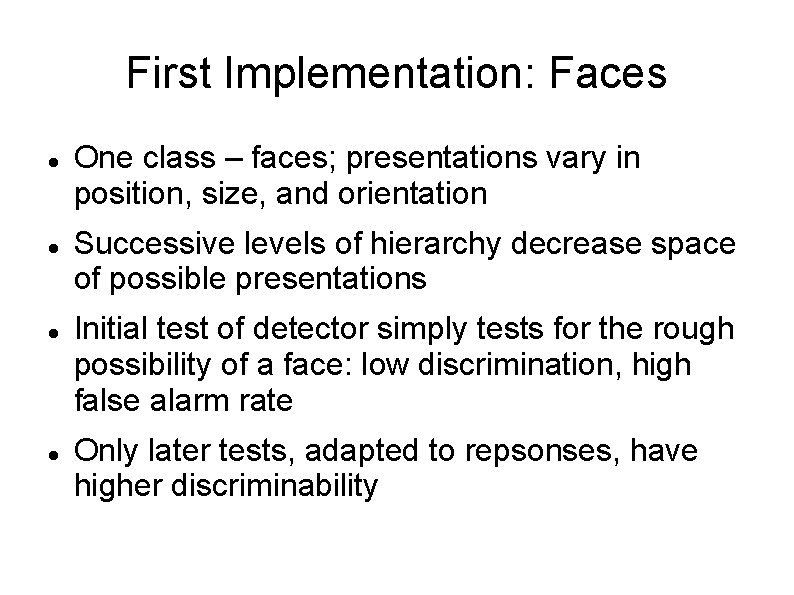

First Implementation: Faces

One class: faces. Presentations vary in position, size, and orientation. Successive levels of the hierarchy narrow the space of possible presentations. The initial test of the detector simply tests for the rough possibility of a face: low discrimination, high false alarm rate. Only later tests, adapted to earlier responses, have higher discriminability.

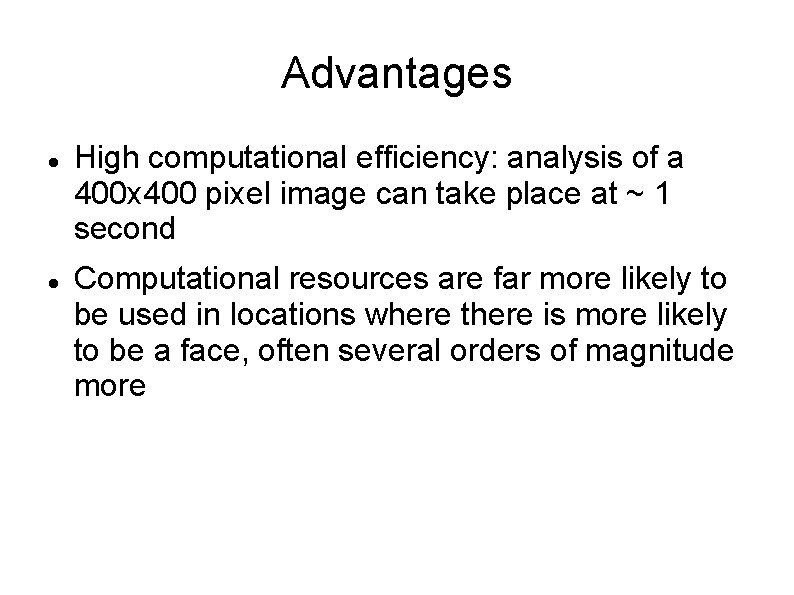

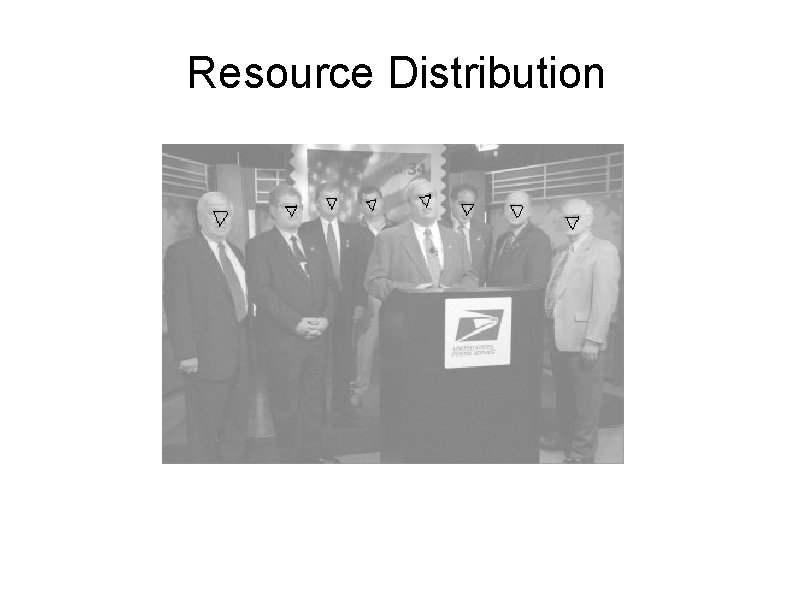

Advantages

High computational efficiency: analysis of a 400 x 400 pixel image takes about 1 second. Far more computation is spent at locations where a face is more likely to be present, often by several orders of magnitude.

Resource Distribution

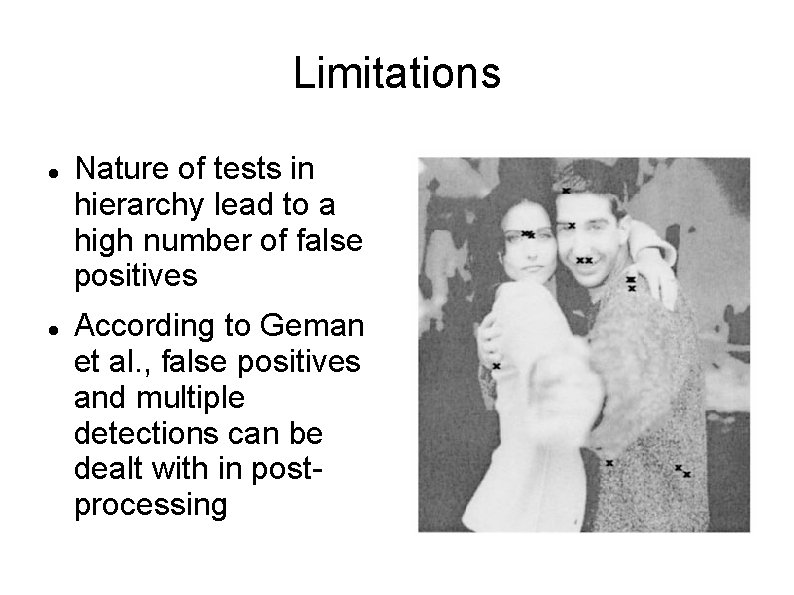

Limitations

The nature of the tests in the hierarchy leads to a high number of false positives. According to Geman et al., false positives and multiple detections can be dealt with in postprocessing.

Second Implementation: License Plates

37 object classes: 26 letters, 10 digits, and a vertical bar. Presentation includes position, size, and tilt (assumed to be fairly small, < 5°). The hierarchy of instances is constructed using “hierarchical clustering”. Tests consist of a weighted sum of responses of local orientation detectors, trained on positive and negative examples created by varying the size, position, and tilt of given templates.

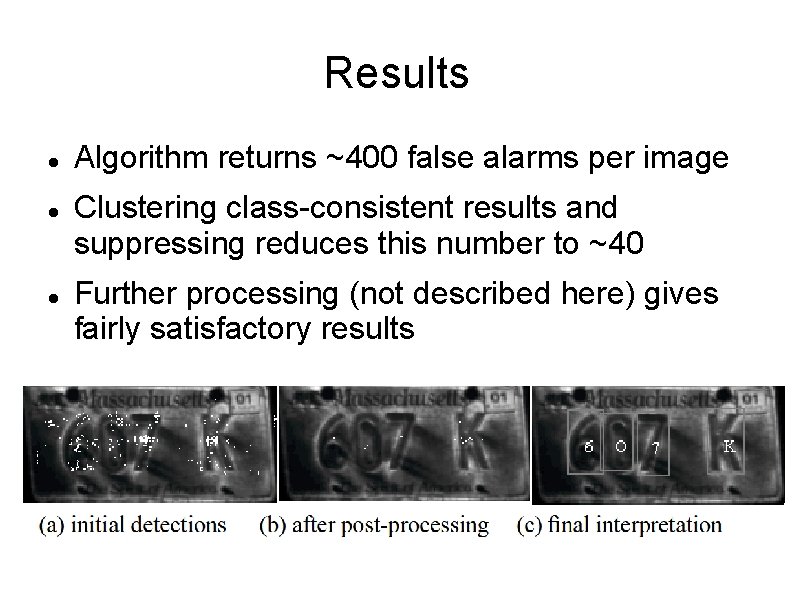

Results

The algorithm returns ~400 false alarms per image. Clustering class-consistent results and suppressing reduces this number to ~40. Further processing (not described here) gives fairly satisfactory results.

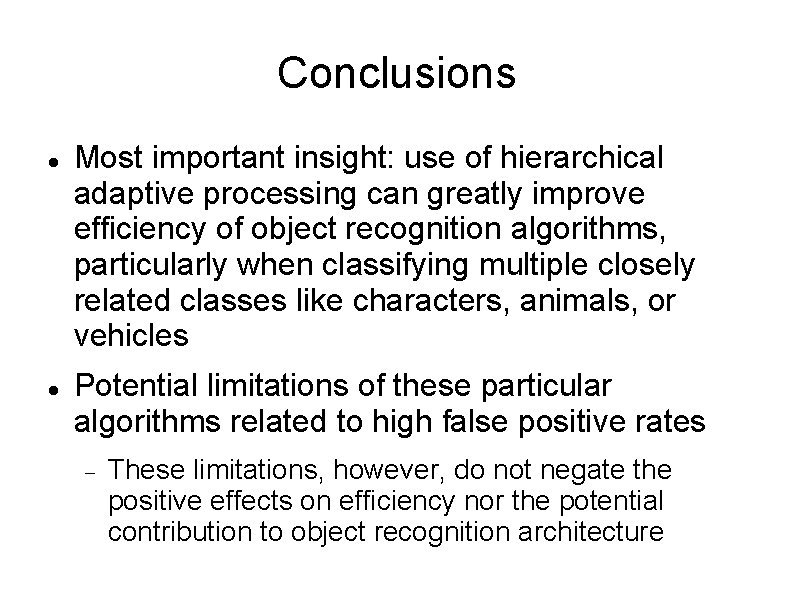

Conclusions

Most important insight: hierarchical adaptive processing can greatly improve the efficiency of object recognition algorithms, particularly when classifying multiple closely related classes like characters, animals, or vehicles. The potential limitations of these particular algorithms are related to high false positive rates. These limitations, however, negate neither the positive effects on efficiency nor the potential contribution to object recognition architecture.

References

D. Geman, "Coarse-to-fine classification and scene labeling," Nonlinear Estimation and Classification (eds. D. D. Denison et al.), Lecture Notes in Statistics. New York: Springer-Verlag, 31-48, 2003.

F. Fleuret and D. Geman, "Fast face detection with precise pose estimation," Proceedings ICPR 2002, 1, 235-238, 2002.

X. Fan and D. Geman, "Hierarchical object indexing and sequential learning," Proceedings ICPR 2004, 3, 65-68, 2004.

S. Ullman, M. Vidal-Naquet, and E. Sali, "Visual features of intermediate complexity and their use in classification," Nature Neuroscience, 5(7), 1-6, 2002.

B. Epshtein and S. Ullman, "Feature hierarchies for object classification," Proceedings ICCV 2005, 1, 220-227, 2005.
