Hierarchical Topic Models and the Nested Chinese Restaurant Process

- Slides: 16

Hierarchical Topic Models and the Nested Chinese Restaurant Process
Liutong Chen (lc6re), Siwei Liu (sl7vy), Shaojia Li (sl4ab)

Introduction
1. CRP
2. Model
3. Experiment
4. Conclusion

Introduction
1. Takes a Bayesian approach, generating an appropriate prior via a distribution on partitions
2. Builds a hierarchical topic model
3. Illustrates the approach on simulated data

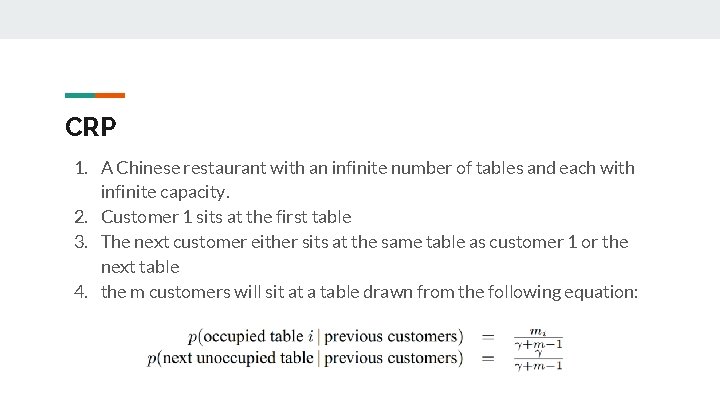

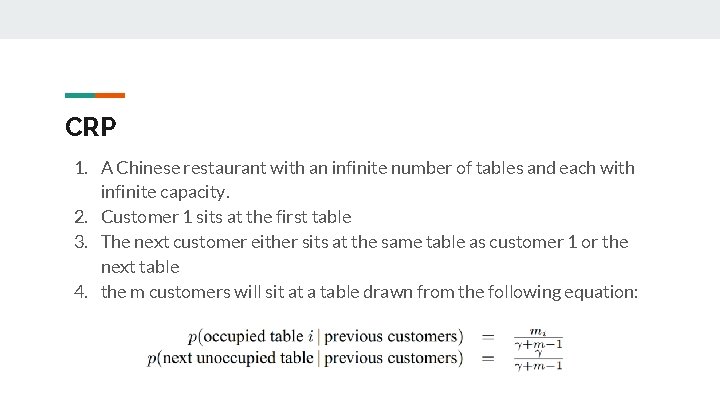

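CRP
1. Imagine a Chinese restaurant with an infinite number of tables, each with infinite capacity.
2. Customer 1 sits at the first table.
3. The next customer sits either at the same table as customer 1 or at the next table.
4. In general, the m-th customer sits at a table drawn from the following distribution:

p(occupied table i | previous customers) = m_i / (γ + m − 1)
p(next unoccupied table | previous customers) = γ / (γ + m − 1)    (1)

where m_i is the number of previous customers at table i and γ is a parameter.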
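The seating rule can be sketched in a few lines of Python. This is only an illustration under the standard CRP assumptions: customer m joins occupied table i with probability m_i / (γ + m − 1) and opens a new table with probability γ / (γ + m − 1), where m_i counts the customers already at table i. The function names `crp_seating_probs` and `sample_crp` are hypothetical and do not come from the slides.

```python
import random

def crp_seating_probs(table_counts, gamma):
    """Seating probabilities for the next customer.

    table_counts[i] is the number of customers already at table i.
    The last entry of the result is the probability of a new table.
    gamma must be positive.
    """
    seated = sum(table_counts)            # this is m - 1
    denom = gamma + seated                # gamma + m - 1
    probs = [count / denom for count in table_counts]
    probs.append(gamma / denom)           # next unoccupied table
    return probs

def sample_crp(num_customers, gamma, rng=None):
    """Seat customers one at a time; return each customer's table index."""
    rng = rng or random.Random()
    table_counts = []
    assignments = []
    for _ in range(num_customers):
        probs = crp_seating_probs(table_counts, gamma)
        table = rng.choices(range(len(probs)), weights=probs)[0]
        if table == len(table_counts):
            table_counts.append(1)        # open a new table
        else:
            table_counts[table] += 1      # join an occupied table
        assignments.append(table)
    return assignments
```

A call such as `sample_crp(20, 1.0, random.Random(0))` returns one table index per customer. Larger values of `gamma` tend to open more tables.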

Extending CRP to Hierarchies
Assumptions:
1. A city has an infinite number of Chinese restaurants, each with an infinite number of tables. One of them is the root restaurant.
2. Each table has a card that refers to another restaurant.
3. Each restaurant is referred to exactly once.

Extending CRP to Hierarchies
Scene:
1. At time 1, a tourist enters the root restaurant and chooses a table using the CRP equation above.
2. At time 2, the tourist goes to the restaurant referred to by that table and again chooses a table using the same equation.
3. At time L, the tourist is at the L-th restaurant, having established a path from the root to level L of the infinite tree.
4. After M tourists have each taken L such steps, the collection of their paths describes a particular L-level subtree of the infinite tree.
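The tourist story can be sketched as a recursive use of the CRP seating rule. The code below is a minimal illustration, not the authors' implementation. The `Restaurant` class and `sample_path` function are hypothetical names, and the path is assumed to contain L restaurants (the root plus L − 1 table draws), which matches the generative process on the "Nested CRP with LDA" slide.

```python
import random

class Restaurant:
    """One tree node: a restaurant whose tables refer to child restaurants."""
    def __init__(self):
        self.table_counts = []   # tourists seated at each table so far
        self.children = []       # children[i] is the restaurant on table i's card

def sample_path(root, depth, gamma, rng):
    """Send one tourist through `depth` restaurants, starting at `root`.

    Returns the list of restaurants visited (a root-to-leaf path).
    The tree is grown lazily: a child is created when its table is first used.
    """
    path = [root]
    node = root
    for _ in range(depth - 1):
        # Unnormalised CRP weights: occupied tables by count, new table by gamma.
        weights = node.table_counts + [gamma]
        table = rng.choices(range(len(weights)), weights=weights)[0]
        if table == len(node.table_counts):
            node.table_counts.append(0)
            node.children.append(Restaurant())
        node.table_counts[table] += 1
        node = node.children[table]
        path.append(node)
    return path
```

Calling `sample_path` M times on the same root produces M paths that share prefixes, and together they form the L-level subtree described above.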

Hierarchical LDA
Given an L-level tree in which each node is associated with a topic, a document is generated as follows:
1) Choose a path from the root to a leaf.
2) Draw a vector of topic proportions θ from an L-dimensional Dirichlet.
3) Generate the words in the document from a mixture of the topics along the path, with mixing proportions θ.

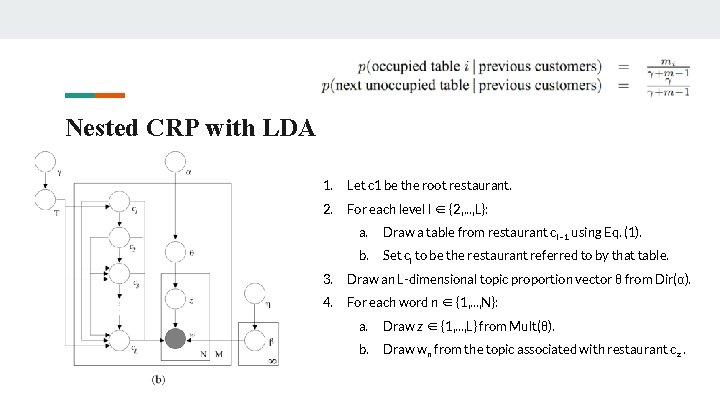

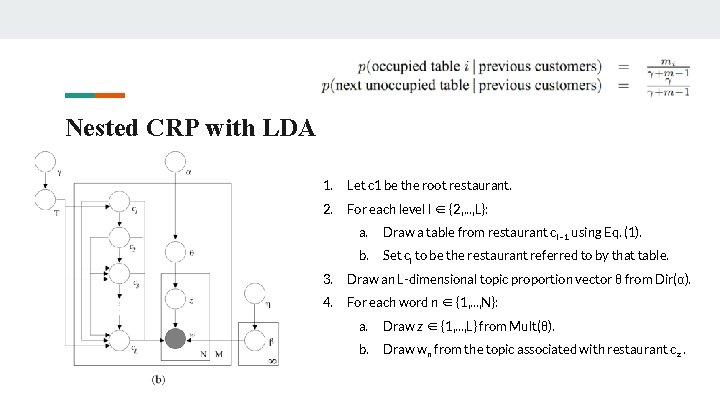

Nested CRP with LDA
1. Let c_1 be the root restaurant.
2. For each level l ∈ {2, ..., L}:
   a. Draw a table from restaurant c_{l−1} using Eq. (1).
   b. Set c_l to be the restaurant referred to by that table.
3. Draw an L-dimensional topic proportion vector θ from Dir(α).
4. For each word n ∈ {1, ..., N}:
   a. Draw z ∈ {1, ..., L} from Mult(θ).
   b. Draw w_n from the topic associated with restaurant c_z.
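Steps 3 and 4 of this process can be sketched as follows, assuming the path (steps 1 and 2) has already been drawn and that each topic on it is given as a word-to-probability dictionary. The names `sample_dirichlet` and `generate_document` are hypothetical, and levels are indexed from 0 rather than 1.

```python
import random

def sample_dirichlet(alpha, rng):
    """Draw from Dir(alpha) by normalising independent Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]

def generate_document(path_topics, alpha, num_words, rng):
    """Generate one document from the topics along its path.

    path_topics[l] maps word -> probability for the topic at level l.
    alpha is the L-dimensional Dirichlet parameter.
    Returns (levels, words), one entry per generated word.
    """
    num_levels = len(path_topics)
    theta = sample_dirichlet(alpha, rng)            # step 3
    levels, words = [], []
    for _ in range(num_words):                      # step 4
        z = rng.choices(range(num_levels), weights=theta)[0]
        topic = path_topics[z]
        w = rng.choices(list(topic), weights=list(topic.values()))[0]
        levels.append(z)
        words.append(w)
    return levels, words
```

With toy topics such as `[{"the": 0.5, "of": 0.5}, {"neural": 1.0}, {"spike": 0.5, "cortex": 0.5}]`, words drawn at level 0 come from the shared root topic and words at deeper levels come from progressively more specific topics.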

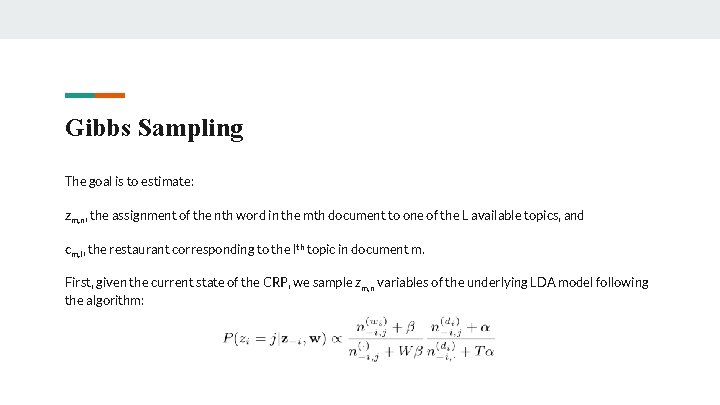

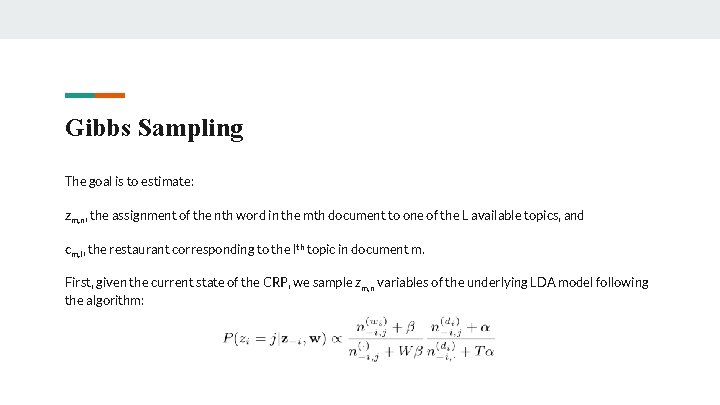

Gibbs Sampling
The goal is to estimate z_{m,n}, the assignment of the n-th word in the m-th document to one of the L available topics, and c_{m,l}, the restaurant corresponding to the l-th topic in document m.
First, given the current state of the CRP, we sample the z_{m,n} variables of the underlying LDA model following the algorithm:

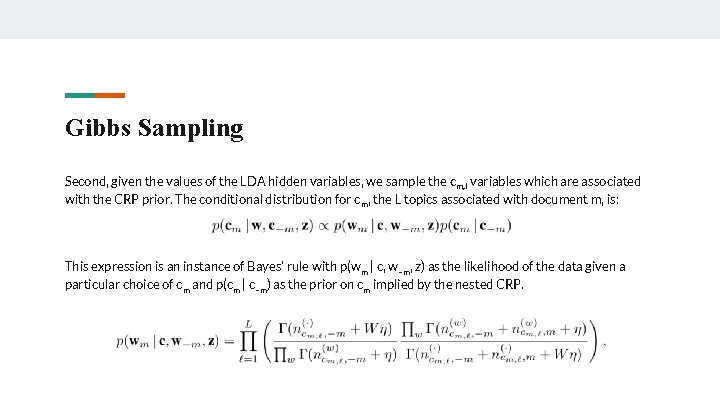

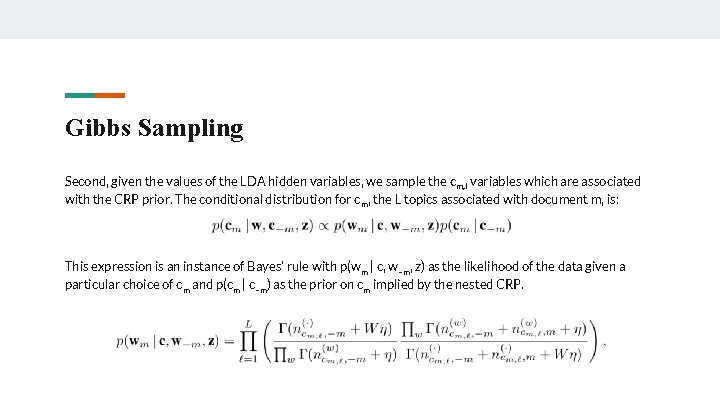
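As a rough sketch of the first step, the conditional for a single z_{m,n} can be written in the usual collapsed-Gibbs form for LDA: a document term (how often the document already uses level l) times a topic term (how often the topic at level l of the document's path already produces this word). This form is an assumption here, as is the symmetric Dirichlet parameter `eta` on the topics. The function name `level_conditional` is hypothetical.

```python
def level_conditional(doc_level_counts, topic_word_counts, topic_totals,
                      word, alpha, eta, vocab_size):
    """Normalised p(z = l | all other assignments) for one word.

    All counts must already exclude the word being resampled.
    doc_level_counts[l]:  words of this document assigned to level l
    topic_word_counts[l]: dict word -> count for the topic at level l
    topic_totals[l]:      total words assigned to that topic
    """
    weights = []
    for level in range(len(doc_level_counts)):
        doc_term = doc_level_counts[level] + alpha[level]
        word_count = topic_word_counts[level].get(word, 0)
        topic_term = (word_count + eta) / (topic_totals[level] + vocab_size * eta)
        weights.append(doc_term * topic_term)
    total = sum(weights)
    return [w / total for w in weights]
```

A sampler would decrement the counts for the current word, call this function, draw a new level from the returned probabilities, and increment the counts again.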

Gibbs Sampling
Second, given the values of the LDA hidden variables, we sample the c_{m,l} variables, which are associated with the CRP prior. The conditional distribution for c_m, the L topics associated with document m, is:

p(c_m | w, c_{−m}, z) ∝ p(w_m | c, w_{−m}, z) p(c_m | c_{−m})

This expression is an instance of Bayes' rule with p(w_m | c, w_{−m}, z) as the likelihood of the data given a particular choice of c_m and p(c_m | c_{−m}) as the prior on c_m implied by the nested CRP.
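The Bayes' rule step can be sketched by scoring each candidate path with log prior plus log likelihood and normalising. The code below is an illustration under two assumptions: topics have a symmetric Dirichlet prior with parameter `eta`, so the per-level likelihood is a Dirichlet-multinomial marginal, and the candidate paths with their nested-CRP log priors are computed elsewhere. Both function names are hypothetical.

```python
import math

def level_log_likelihood(other_counts, doc_counts, eta, vocab_size):
    """log p(document m's words at one level | other documents' words there).

    other_counts: dict word -> count from all other documents at this node
    doc_counts:   dict word -> count of document m's words at this level
    """
    n_other = sum(other_counts.values())
    n_doc = sum(doc_counts.values())
    result = (math.lgamma(n_other + vocab_size * eta)
              - math.lgamma(n_other + n_doc + vocab_size * eta))
    # Words that document m does not use contribute nothing (terms cancel).
    for word, count in doc_counts.items():
        base = other_counts.get(word, 0) + eta
        result += math.lgamma(base + count) - math.lgamma(base)
    return result

def path_posterior(log_priors, log_likelihoods):
    """Normalise prior x likelihood over candidate paths in log space."""
    scores = [p + l for p, l in zip(log_priors, log_likelihoods)]
    top = max(scores)                       # subtract the max for stability
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]
```

The log likelihood of a whole path is the sum of `level_log_likelihood` over its L levels. A new path for document m is then drawn from the probabilities returned by `path_posterior`.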

Experiment
1. Compare the CRP method with the Bayes factor method
2. Estimate five different hierarchies
3. Demonstration on real data

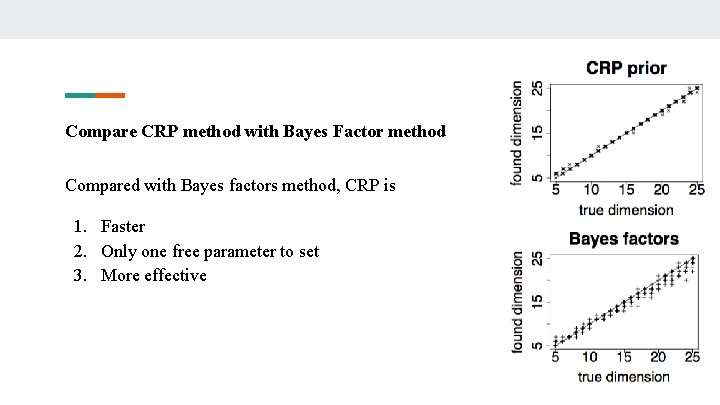

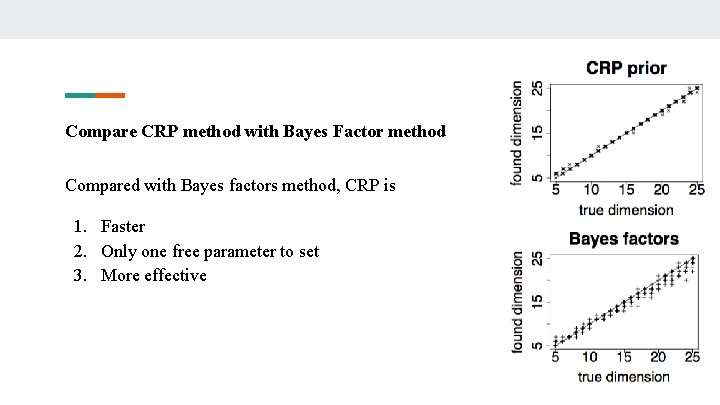

Compare CRP method with Bayes factor method
Compared with the Bayes factor method, the CRP method:
1. Is faster
2. Has only one free parameter to set
3. Is more effective

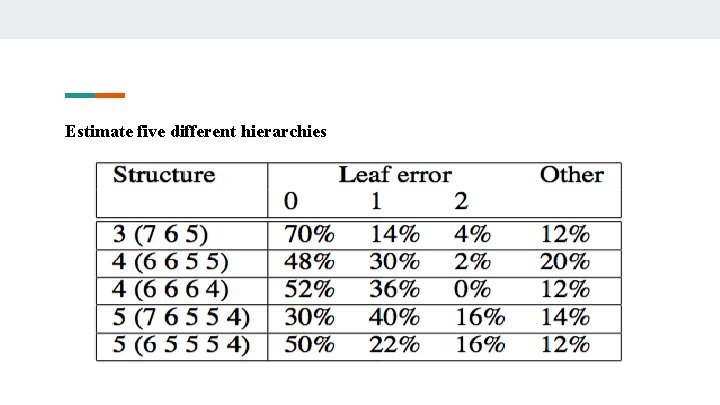

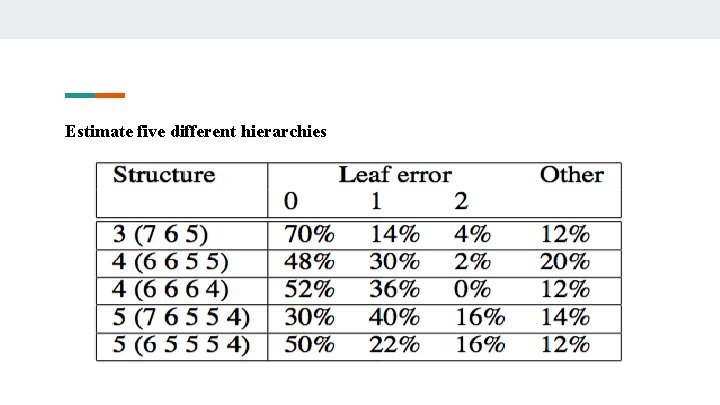

Estimate five different hierarchies

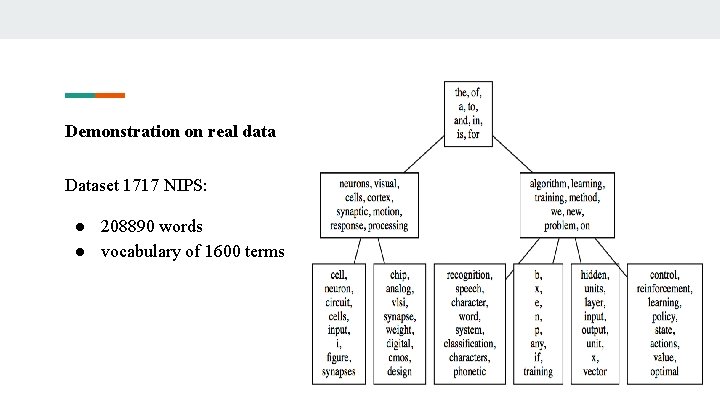

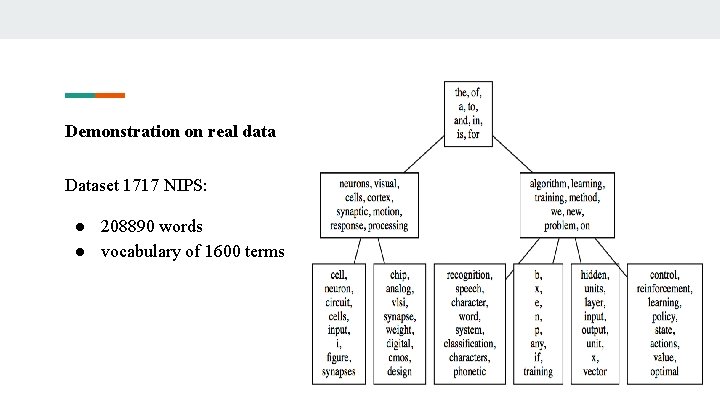

Demonstration on real data
Dataset: 1717 NIPS abstracts
- 208890 words
- vocabulary of 1600 terms

Conclusion
1. Nested Chinese Restaurant Process
2. Gibbs sampling procedure for the model

Extensions:
1. The depth of the hierarchy can vary from document to document
2. Documents can be allowed to mix over paths

Questions?