HIERARCHICAL KNOWLEDGE GRADIENT FOR SEQUENTIAL SAMPLING Martijn Mes


HIERARCHICAL KNOWLEDGE GRADIENT FOR SEQUENTIAL SAMPLING

- Martijn Mes, Department of Operational Methods for Production and Logistics, University of Twente, The Netherlands
- Warren Powell, Department of Operations Research and Financial Engineering, Princeton University, USA
- Peter Frazier, Department of Operations Research and Information Engineering, Cornell University, USA

INFORMS Annual Meeting, San Diego, Sunday, October 11, 2009

OPTIMAL LEARNING

- Problem
  - Find the best alternative from a set of alternatives.
  - Before choosing, you have the option to measure the alternatives.
  - But measurements are noisy.
  - How should you sequence your measurements to produce the best answer in the end?
- For problems with a finite number of alternatives:
  - On-line learning (learn as you earn): the multi-armed bandit problem.
  - Off-line learning: the ranking and selection problem.

Let's illustrate the problem…

WHAT WOULD BE THE BEST PLACE TO GO FISHING?

WHAT WOULD BE THE BEST PLACE TO BUILD A WIND FARM?

WHAT WOULD BE THE BEST CHEMICAL COMPOUND IN A DRUG TO FIGHT A PARTICULAR DISEASE?

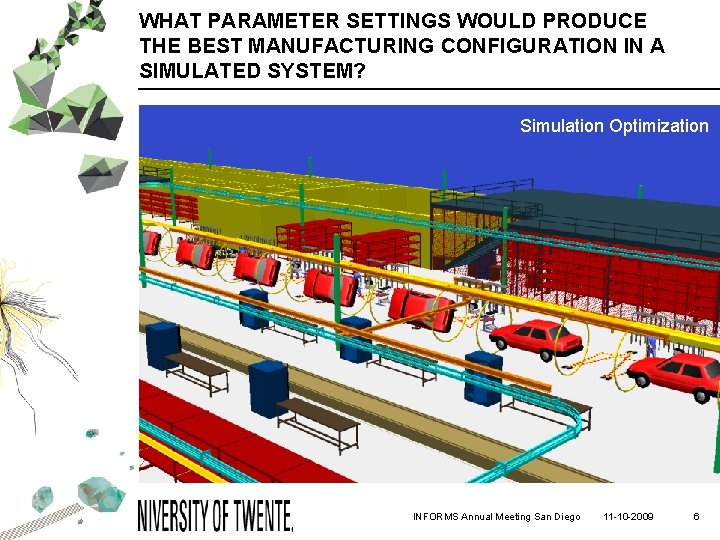

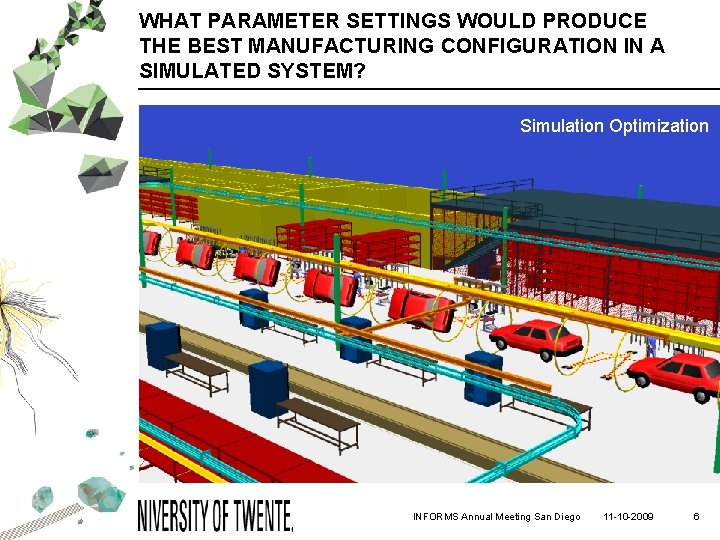

WHAT PARAMETER SETTINGS WOULD PRODUCE THE BEST MANUFACTURING CONFIGURATION IN A SIMULATED SYSTEM? Simulation Optimization

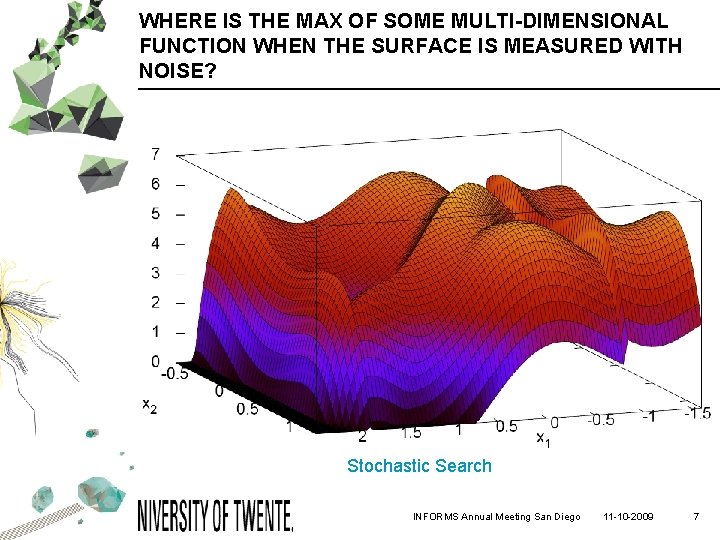

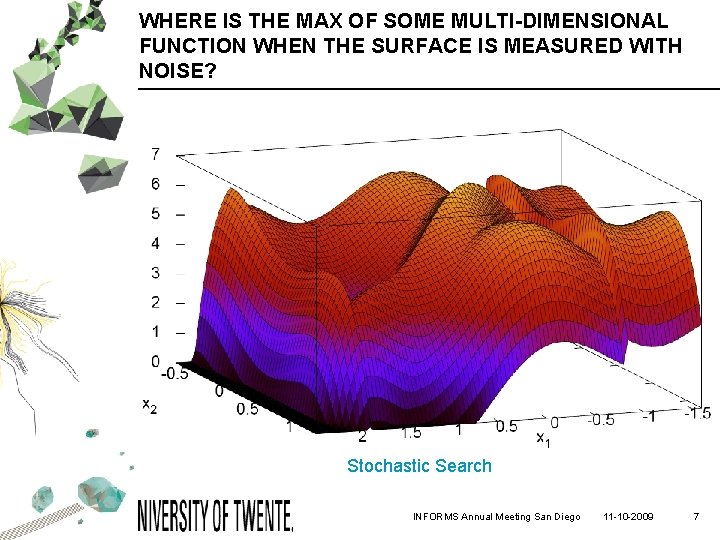

WHERE IS THE MAX OF SOME MULTI-DIMENSIONAL FUNCTION WHEN THE SURFACE IS MEASURED WITH NOISE? Stochastic Search

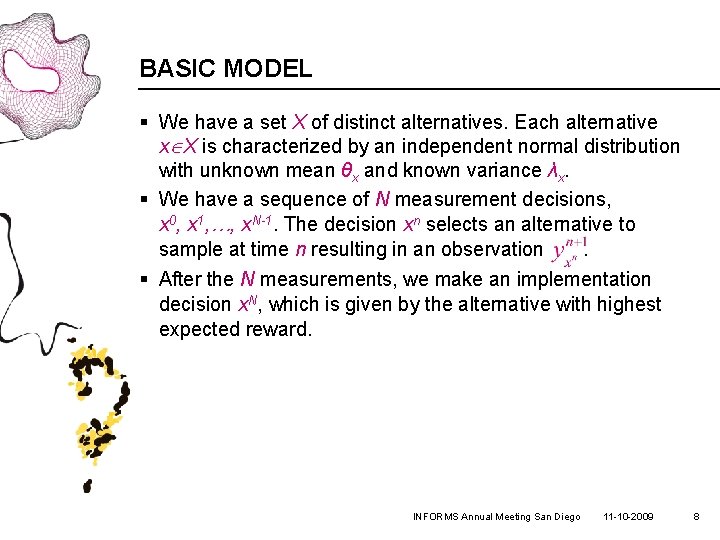

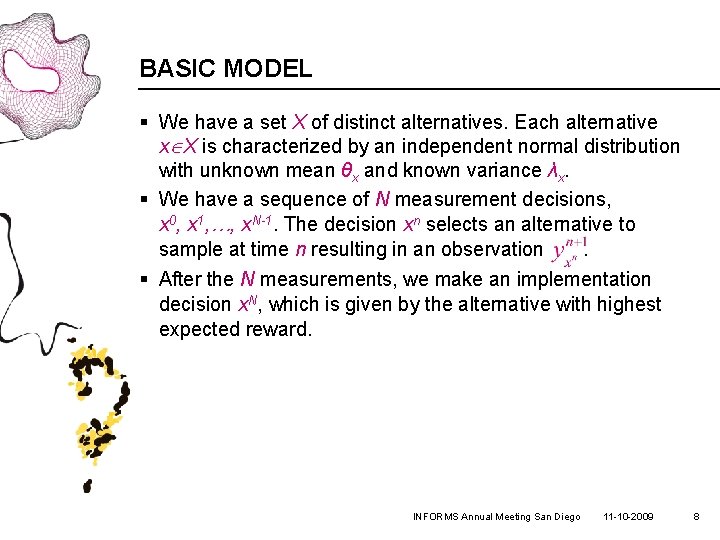

BASIC MODEL

- We have a set $\mathcal{X}$ of distinct alternatives. Each alternative $x \in \mathcal{X}$ is characterized by an independent normal distribution with unknown mean $\theta_x$ and known variance $\lambda_x$.
- We have a sequence of $N$ measurement decisions, $x^0, x^1, \ldots, x^{N-1}$. The decision $x^n$ selects an alternative to sample at time $n$, resulting in an observation $y_{x^n}^{n+1}$.
- After the $N$ measurements, we make an implementation decision $x^N$, which is given by the alternative with the highest expected reward.
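A minimal Python sketch of this measurement model, assuming the true means $\theta_x$ (unknown to the decision maker, but needed to simulate) and the known variances $\lambda_x$ are supplied as lists indexed by alternative. The class name `NormalAlternatives` is hypothetical and meant only for simulation experiments.

```python
import random


class NormalAlternatives:
    """Finite set of alternatives; alternative x has mean theta[x] and known
    measurement variance lam[x] (hypothetical helper for simulation)."""

    def __init__(self, theta, lam, seed=None):
        if len(theta) != len(lam):
            raise ValueError("theta and lam must have the same length")
        self.theta = list(theta)
        self.lam = list(lam)
        self.rng = random.Random(seed)

    def measure(self, x):
        """Noisy observation of alternative x: theta[x] + N(0, lam[x])."""
        return self.rng.gauss(self.theta[x], self.lam[x] ** 0.5)


# Example: N = 5 measurement decisions x^0, ..., x^4, chosen round-robin.
model = NormalAlternatives(theta=[1.0, 2.0, 1.5], lam=[1.0, 1.0, 4.0], seed=0)
observations = [(x, model.measure(x)) for x in (n % 3 for n in range(5))]
```

A sampling policy only needs `measure(x)`; the true `theta` stays hidden inside the simulator.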

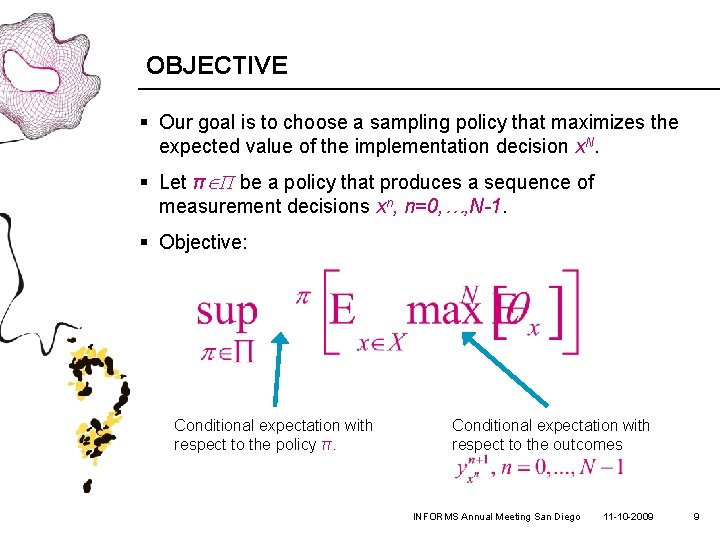

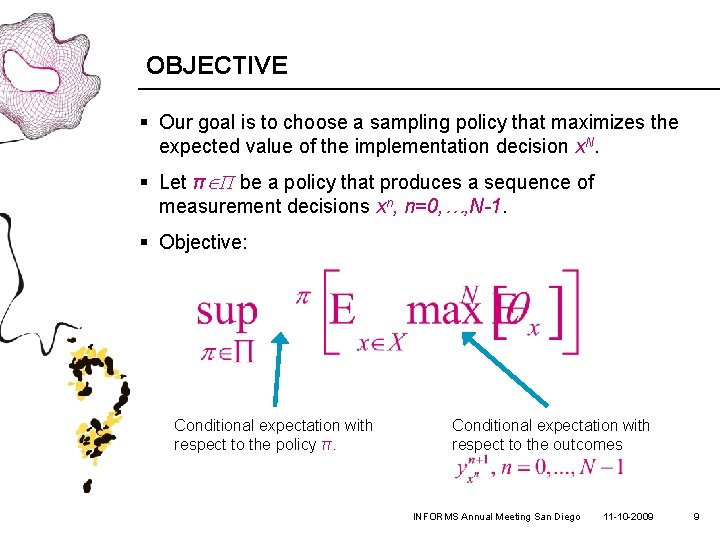

OBJECTIVE

- Our goal is to choose a sampling policy that maximizes the expected value of the implementation decision $x^N$.
- Let $\pi \in \Pi$ be a policy that produces a sequence of measurement decisions $x^n$, $n = 0, \ldots, N-1$.
- Objective: $\sup_{\pi \in \Pi} \mathbb{E}^{\pi}\left[\max_{x \in \mathcal{X}} \mathbb{E}^{N}[\theta_x]\right]$, where $\mathbb{E}^{\pi}$ is the conditional expectation with respect to the policy $\pi$ and $\mathbb{E}^{N}$ is the conditional expectation with respect to the outcomes of the $N$ measurements.
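The value of a given policy can be estimated by simulation. The sketch below assumes independent normal priors $\theta_x \sim \mathcal{N}(\mu_x^0, 1/\beta_x^0)$ and uses the fact that, under the posterior, the expected value of the best alternative is simply the largest posterior mean. The names `estimate_objective` and `round_robin` are illustrative, not from the paper.

```python
import random


def estimate_objective(policy, mu0, beta0, lam, N, reps=2000, seed=0):
    """Monte Carlo estimate of E^pi[max_x mu_x^N] with priors
    theta_x ~ N(mu0[x], 1/beta0[x]) and measurement variances lam[x].

    policy(mu, beta, n) returns the alternative to measure at time n.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(reps):
        # Draw a truth from the prior, then learn about it with N measurements.
        theta = [rng.gauss(m, b ** -0.5) for m, b in zip(mu0, beta0)]
        mu, beta = list(mu0), list(beta0)
        for n in range(N):
            x = policy(mu, beta, n)
            y = rng.gauss(theta[x], lam[x] ** 0.5)
            beta_eps = 1.0 / lam[x]
            mu[x] = (beta[x] * mu[x] + beta_eps * y) / (beta[x] + beta_eps)
            beta[x] += beta_eps
        # max_x E^N[theta_x] is the largest posterior mean.
        total += max(mu)
    return total / reps


def round_robin(mu, beta, n):
    """Pure exploration by cycling through the alternatives."""
    return n % len(mu)
```

Different policies can be compared by passing different `policy` callables; with `N=0` the estimate reduces to `max(mu0)`.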

![MEASUREMENT POLICIES [1/2]](https://slidetodoc.com/presentation_image/0eb322e5f6bee72bb6df10013301b54c/image-10.jpg)

MEASUREMENT POLICIES [1/2]

- Optimal policies:
  - Dynamic programming (computational challenge).
  - Special case: the multi-armed bandit problem, which can be solved using the Gittins index (Gittins and Jones, 1974).
- Heuristic measurement policies:
  - Pure exploitation: always make the choice that appears to be the best.
  - Pure exploration: make choices at random so that you are always learning more, but without regard to the cost of the decision.
  - Hybrid: explore with probability $\rho$ and exploit with probability $1-\rho$.
  - Epsilon-greedy exploration: explore with probability $p^n = c/n$. This goes to zero as $n \to \infty$, but not too quickly.
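A minimal sketch of epsilon-greedy exploration with the decaying probability $p^n = c/n$. The constant `c` is an assumed tuning parameter, and the probability is capped at 1 so that small `n` stays valid.

```python
import random


def epsilon_greedy(mu, n, c, rng):
    """Explore with probability p^n = min(1, c / n), otherwise exploit.

    mu: current estimates of the means; n: measurement index (n >= 1);
    c: exploration constant (assumed tuning parameter).
    """
    p = min(1.0, c / n)
    if rng.random() < p:
        return rng.randrange(len(mu))                # explore: uniform at random
    return max(range(len(mu)), key=mu.__getitem__)   # exploit: current best
```

With `c = 0` this is pure exploitation; with `c >= n` every choice is random, which is pure exploration.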

![MEASUREMENT POLICIES [2/2]](https://slidetodoc.com/presentation_image/0eb322e5f6bee72bb6df10013301b54c/image-11.jpg)

MEASUREMENT POLICIES [2/2]

- Heuristic measurement policies, continued:
  - Boltzmann exploration
  - Interval estimation
- Approximate policies for off-line learning:
  - Optimal computing budget allocation (Chen et al., 1996)
  - LL(s): batch linear loss (Chick et al., 2009)
- Maximizing the expected value of a single measurement:
  - $(R_1, \ldots, R_1)$ policy (Gupta and Miescke, 1996)
  - EVI (Chick et al., 2009)
  - "Knowledge gradient" (Frazier and Powell, 2008)
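The slide names Boltzmann exploration and interval estimation without formulas. The sketch below uses their common textbook forms, with an assumed temperature-like parameter `rho` and an assumed quantile `z`; neither value comes from the talk, and beliefs are assumed to be stored as means `mu` and precisions `beta`.

```python
import math
import random


def boltzmann(mu, rho, rng):
    """Boltzmann exploration: choose x with probability proportional to
    exp(rho * mu[x]); rho is an assumed tuning parameter."""
    m = max(mu)                                   # subtract the max for numerical stability
    weights = [math.exp(rho * (v - m)) for v in mu]
    return rng.choices(range(len(mu)), weights=weights, k=1)[0]


def interval_estimation(mu, beta, z):
    """Interval estimation: choose the largest upper bound mu[x] + z * std[x],
    where std[x] = beta[x] ** -0.5 and z is an assumed tuning parameter."""
    scores = [m + z * b ** -0.5 for m, b in zip(mu, beta)]
    return max(range(len(mu)), key=scores.__getitem__)
```

Both reduce to pure exploitation in the limit (`rho` large, or `z = 0`), which is why they need tuning.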

![THE KNOWLEDGE-GRADIENT POLICY [1/2]](https://slidetodoc.com/presentation_image/0eb322e5f6bee72bb6df10013301b54c/image-12.jpg)

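THE KNOWLEDGE-GRADIENT POLICY [1/2]

- Updating beliefs
  - We assume we start with a distribution of belief about the true mean $\theta_x$ (a Bayesian prior): $\theta_x \sim \mathcal{N}(\mu_x^n, 1/\beta_x^n)$, where $\beta_x^n$ is the precision (inverse variance) of our belief.
  - Next, we observe $y_x^{n+1} = \theta_x + \varepsilon^{n+1}$, with $\varepsilon^{n+1} \sim \mathcal{N}(0, \lambda_x)$.
  - Using Bayes' theorem, we can show that our new distribution (posterior belief) about the true mean is $\mathcal{N}(\mu_x^{n+1}, 1/\beta_x^{n+1})$, with $\mu_x^{n+1} = \dfrac{\beta_x^n \mu_x^n + \beta_x^{\varepsilon} y_x^{n+1}}{\beta_x^n + \beta_x^{\varepsilon}}$ and $\beta_x^{n+1} = \beta_x^n + \beta_x^{\varepsilon}$, where $\beta_x^{\varepsilon} = 1/\lambda_x$.
  - We perform these updates with each observation.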
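The normal-normal update for a single alternative fits in a few lines. This is a sketch of the standard conjugate update, assuming the belief is stored as a mean and a precision; the function name is illustrative.

```python
def update_belief(mu, beta, y, lam):
    """Normal-normal Bayesian update for one alternative.

    Prior theta ~ N(mu, 1/beta); observation y ~ N(theta, lam).
    Returns the posterior mean and precision.
    """
    beta_eps = 1.0 / lam                              # precision of the measurement
    beta_new = beta + beta_eps                        # precisions add
    mu_new = (beta * mu + beta_eps * y) / beta_new    # precision-weighted average
    return mu_new, beta_new


# Prior N(0, 1), measurement variance 1, observation 2.0 -> posterior N(1.0, 1/2).
print(update_belief(0.0, 1.0, 2.0, 1.0))  # (1.0, 2.0)
```

Because precisions add, the posterior after several observations does not depend on the order in which they arrive.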

![THE KNOWLEDGE-GRADIENT POLICY [2/2]](https://slidetodoc.com/presentation_image/0eb322e5f6bee72bb6df10013301b54c/image-13.jpg)

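THE KNOWLEDGE-GRADIENT POLICY [2/2]

- Measurement decisions
  - The knowledge gradient is the expected value of a single measurement: $\nu_x^{KG,n} = \mathbb{E}^n\left[\max_{x' \in \mathcal{X}} \mu_{x'}^{n+1} - \max_{x' \in \mathcal{X}} \mu_{x'}^{n} \,\middle|\, x^n = x\right]$.
  - Knowledge-gradient policy: measure $x^n = \arg\max_{x \in \mathcal{X}} \nu_x^{KG,n}$.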
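For independent normal beliefs, the expectation above has a well-known closed form: $\nu_x = \tilde\sigma_x f\left(-|\mu_x - \max_{x' \neq x} \mu_{x'}| / \tilde\sigma_x\right)$ with $f(z) = z\Phi(z) + \varphi(z)$ and $\tilde\sigma_x^2 = 1/\beta_x - 1/(\beta_x + 1/\lambda_x)$. A minimal sketch, assuming at least two alternatives and beliefs stored as means `mu` and precisions `beta`:

```python
import math


def _f(z):
    """f(z) = z * Phi(z) + phi(z) for the standard normal cdf Phi and pdf phi."""
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return z * cdf + pdf


def knowledge_gradient(mu, beta, lam):
    """Knowledge-gradient values for independent normal beliefs
    theta_x ~ N(mu[x], 1/beta[x]) with measurement variances lam[x]."""
    kg = []
    for x in range(len(mu)):
        # Std. dev. of the change in mu[x] caused by one measurement of x.
        sigma_tilde = math.sqrt(1.0 / beta[x] - 1.0 / (beta[x] + 1.0 / lam[x]))
        best_other = max(m for i, m in enumerate(mu) if i != x)
        zeta = -abs(mu[x] - best_other) / sigma_tilde
        kg.append(sigma_tilde * _f(zeta))
    return kg


def kg_policy(mu, beta, lam):
    """Measure the alternative with the largest knowledge gradient."""
    kg = knowledge_gradient(mu, beta, lam)
    return max(range(len(kg)), key=kg.__getitem__)
```

With equal means, `kg_policy([0.0, 0.0, 0.0], [1.0, 4.0, 2.0], [1.0, 1.0, 1.0])` picks alternative 0, the one with the lowest precision.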

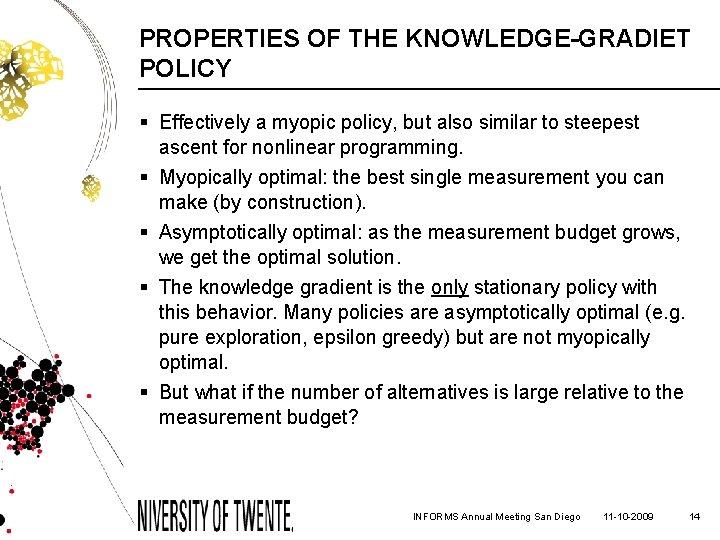

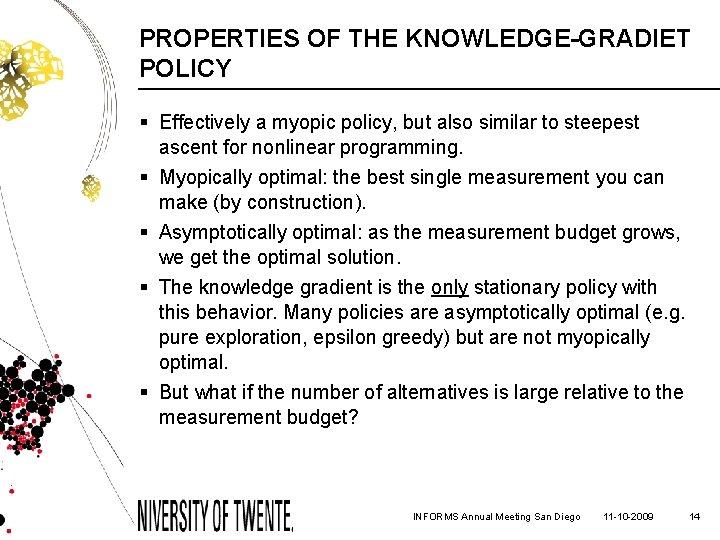

PROPERTIES OF THE KNOWLEDGE-GRADIENT POLICY

- Effectively a myopic policy, but also similar to steepest ascent for nonlinear programming.
- Myopically optimal: by construction, it makes the best single measurement.
- Asymptotically optimal: as the measurement budget grows, we find the optimal solution.
- The knowledge gradient is the only stationary policy with both properties. Many policies are asymptotically optimal (e.g., pure exploration, epsilon-greedy) but are not myopically optimal.
- But what if the number of alternatives is large relative to the measurement budget?

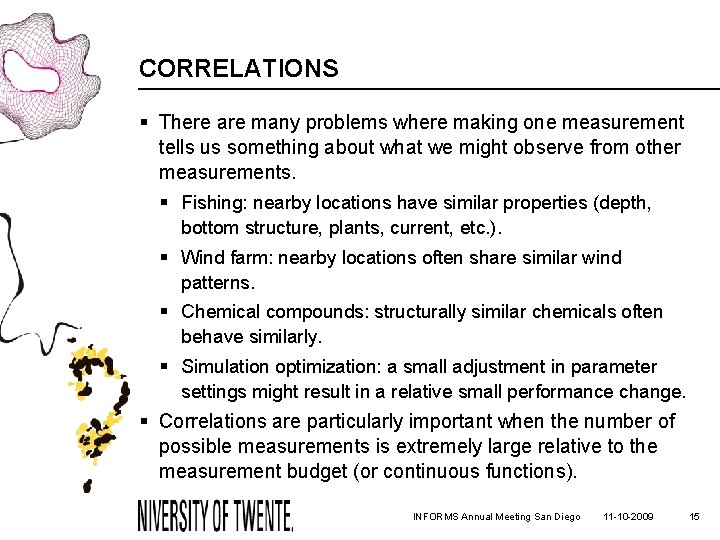

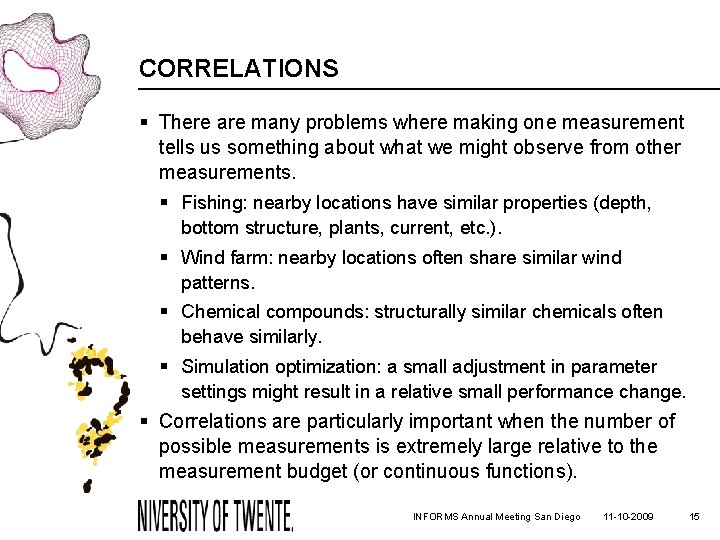

CORRELATIONS

- There are many problems where making one measurement tells us something about what we might observe from other measurements.
  - Fishing: nearby locations have similar properties (depth, bottom structure, plants, current, etc.).
  - Wind farm: nearby locations often share similar wind patterns.
  - Chemical compounds: structurally similar chemicals often behave similarly.
  - Simulation optimization: a small adjustment in parameter settings might result in a relatively small performance change.
- Correlations are particularly important when the number of possible measurements is extremely large relative to the measurement budget (or when the function is continuous).

KNOWLEDGE GRADIENT FOR CORRELATED BELIEFS

- The knowledge-gradient policy for correlated normal beliefs (Frazier, Powell, and Dayanik, 2009)
  - The belief is multivariate normal.
  - Significantly outperforms methods that ignore correlations.
  - Computing the expectation is more challenging.
  - Assumption: the covariance matrix is known (or we first have to learn it).
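With a multivariate normal belief $\mathcal{N}(\mu, \Sigma)$, one measurement of $x$ updates every mean through the covariances: $\mu' = \mu + \frac{y - \mu_x}{\lambda_x + \Sigma_{xx}} \Sigma e_x$ and $\Sigma' = \Sigma - \frac{\Sigma e_x e_x^\top \Sigma}{\lambda_x + \Sigma_{xx}}$. The sketch below implements only this standard conditioning step (not the harder knowledge-gradient expectation), using plain lists for the matrix; the function name is illustrative.

```python
def update_correlated(mu, sigma, x, y, lam_x):
    """Bayesian update of a multivariate normal belief N(mu, sigma) after
    observing y ~ N(theta[x], lam_x) for the single alternative x.

    mu: list of means; sigma: symmetric covariance matrix (list of lists).
    Returns new (mu, sigma); the inputs are not modified.
    """
    k = len(mu)
    col = [sigma[i][x] for i in range(k)]    # Sigma e_x (equals e_x^T Sigma by symmetry)
    denom = lam_x + sigma[x][x]
    mu_new = [mu[i] + (y - mu[x]) / denom * col[i] for i in range(k)]
    sigma_new = [[sigma[i][j] - col[i] * col[j] / denom for j in range(k)]
                 for i in range(k)]
    return mu_new, sigma_new
```

Measuring alternative 0 also moves the mean of alternative 1 whenever their covariance is nonzero, which is exactly how one measurement "tells us something" about others.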

![STATISTICAL AGGREGATION [1/2]](https://slidetodoc.com/presentation_image/0eb322e5f6bee72bb6df10013301b54c/image-17.jpg)

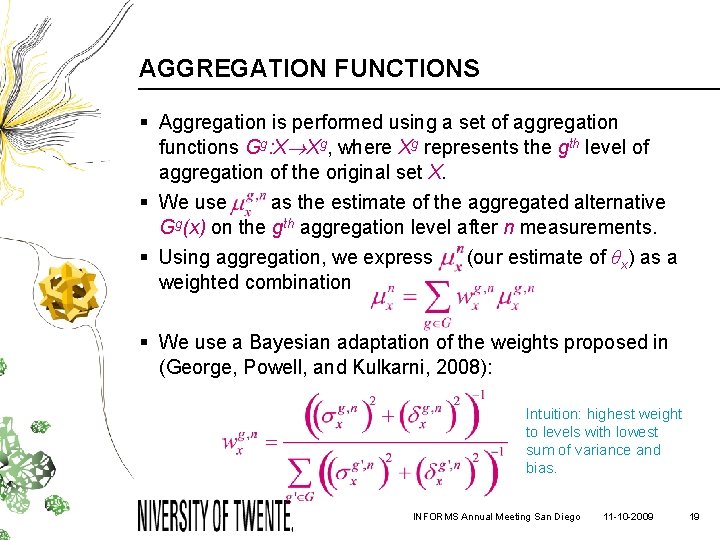

STATISTICAL AGGREGATION [1/2]

- Instead of using a given covariance matrix, we might work with statistical aggregation to allow generalization across alternatives.
- Examples:
  - Geographical aggregation
  - Binary tree aggregation for continuous functions
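Binary tree aggregation can be sketched as follows, assuming (for illustration only) that the continuous domain is first discretized into $2^L$ equal-width cells indexed $0, \ldots, 2^L - 1$. Each higher level merges pairs of neighbouring cells, so level $g$ has $2^{L-g}$ cells.

```python
def binary_tree_aggregation(x, g):
    """Map a fine-grid index x (0 <= x < 2**L) to its cell at level g.

    Level 0 is the original grid; level g merges blocks of 2**g
    neighbouring cells.
    """
    return x >> g   # same as x // 2**g for nonnegative integers


# With L = 3, fine cell 5 lies in cells 5, 2, 1, 0 at levels g = 0, 1, 2, 3.
path = [binary_tree_aggregation(5, g) for g in range(4)]
```

An observation at cell 5 is therefore informative about every cell on this path, which is what lets the estimates generalize across nearby alternatives.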

![STATISTICAL AGGREGATION [2/2]](https://slidetodoc.com/presentation_image/0eb322e5f6bee72bb6df10013301b54c/image-18.jpg)

STATISTICAL AGGREGATION [2/2]

Examples continued: aggregation of vector-valued data (multi-attribute vectors) by ignoring dimensions.

| Level $g$ | Aggregated value |
|---|---|
| 7 | $V(f(a_1))$ |
| 6 | $V(a_1)$ |
| 5 | $V(a_1, f(a_2))$ |
| 4 | $V(a_1, a_2)$ |
| 3 | $V(a_1, \ldots, a_3)$ |
| 2 | $V(a_1, \ldots, a_4)$ |
| 1 | $V(a_1, \ldots, a_5)$ |
| 0 | $V(a_1, \ldots, a_{10})$ |

$V$ is the value of a driver with certain attributes:

- $a_1$ = location
- $a_2$ = domicile
- $a_3$ = capacity type
- $a_4$ = scheduled time at home
- $a_5$ = days away from home
- $a_6$ = available time
- $a_7$ = geographical constraints
- $a_8$ = DOT road hours
- $a_9$ = DOT duty hours
- $a_{10}$ = eight-day duty hours
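The table can be read as a function that maps a 10-attribute driver vector to the key used at each level. The slide does not define $f$; the region table below, and the choice to apply the same coarsening to location and domicile, are hypothetical and for illustration only.

```python
# Hypothetical coarsening map f(.) from a city to a region (illustrative only).
REGION = {"Dallas": "South", "Houston": "South", "Chicago": "Midwest"}


def aggregate_driver(a, g, f=lambda loc: REGION.get(loc, "Other")):
    """Return the key of attribute vector a = (a1, ..., a10) at level g,
    following the table: higher g keeps fewer (or coarser) attributes."""
    keep = {0: 10, 1: 5, 2: 4, 3: 3, 4: 2, 6: 1}   # levels that just truncate
    if g in keep:
        return tuple(a[:keep[g]])
    if g == 5:
        return (a[0], f(a[1]))      # V(a1, f(a2))
    if g == 7:
        return (f(a[0]),)           # V(f(a1))
    raise ValueError(f"no aggregation level {g}")


driver = ("Dallas", "Houston", "van", 1, 2, 3, 4, 5, 6, 7)
key_5 = aggregate_driver(driver, 5)   # ("Dallas", "South")
```

Drivers that share a key at level $g$ share one estimate $V$ at that level, so coarse levels pool many observations.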

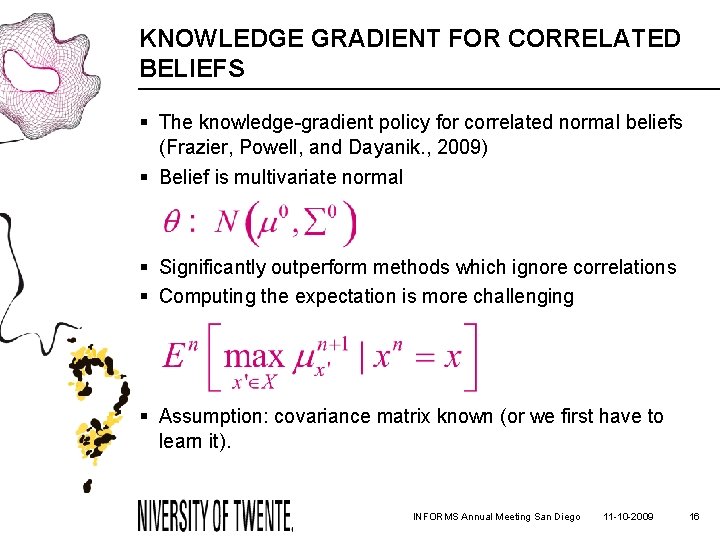

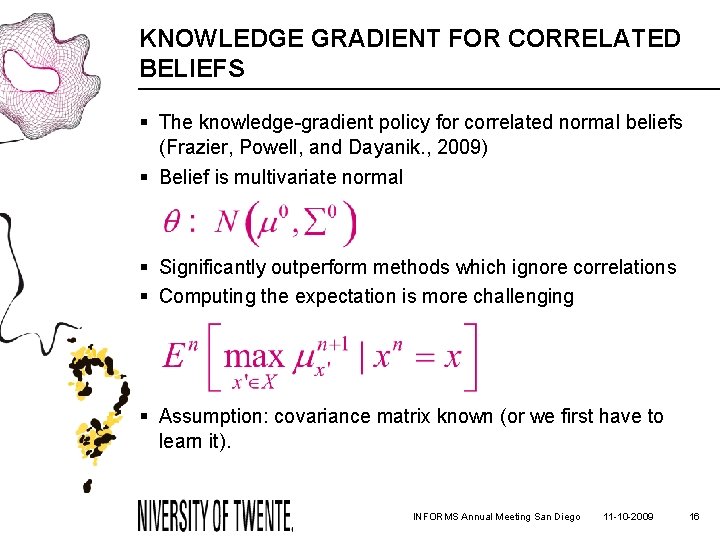

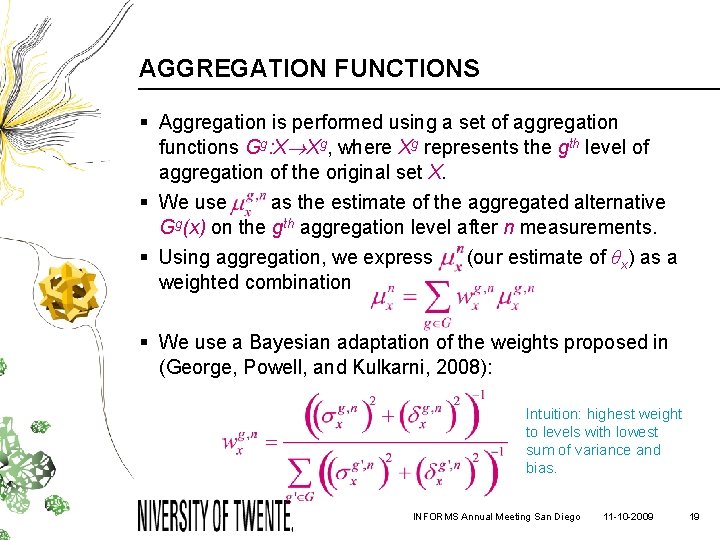

AGGREGATION FUNCTIONS

- Aggregation is performed using a set of aggregation functions $G^g: \mathcal{X} \to \mathcal{X}^g$, where $\mathcal{X}^g$ represents the $g$th level of aggregation of the original set $\mathcal{X}$.
- We use $\mu_x^{g,n}$ as the estimate of the aggregated alternative $G^g(x)$ on the $g$th aggregation level after $n$ measurements.
- Using aggregation, we express $\mu_x^n$ (our estimate of $\theta_x$) as a weighted combination $\mu_x^n = \sum_{g \in \mathcal{G}} w_x^{g,n} \mu_x^{g,n}$.
- We use a Bayesian adaptation of the weights proposed in (George, Powell, and Kulkarni, 2008).
  - Intuition: the highest weight goes to the levels with the lowest sum of variance and bias.
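The intuition behind the weights can be sketched with weights proportional to the inverse of (variance + squared bias), normalized to sum to one. This follows the stated intuition only; the exact Bayesian weights in the paper may differ, and the function name is illustrative.

```python
def combine_levels(means, variances, biases):
    """Combine per-level estimates mu^g into one estimate of theta_x.

    Weights are proportional to 1 / (variance_g + bias_g**2) and are
    normalized to sum to one, so precise, low-bias levels dominate.
    """
    inv = [1.0 / (v + d * d) for v, d in zip(variances, biases)]
    total = sum(inv)
    weights = [w / total for w in inv]
    estimate = sum(w * m for w, m in zip(weights, means))
    return estimate, weights


# Level 1 is more precise than level 0 and unbiased, so it gets weight 0.8.
est, w = combine_levels([2.0, 1.0], [1.0, 0.25], [0.0, 0.0])
```

Adding a bias of 1.5 to level 1 shifts most of the weight back to the disaggregate level 0, which is the behaviour the intuition describes.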

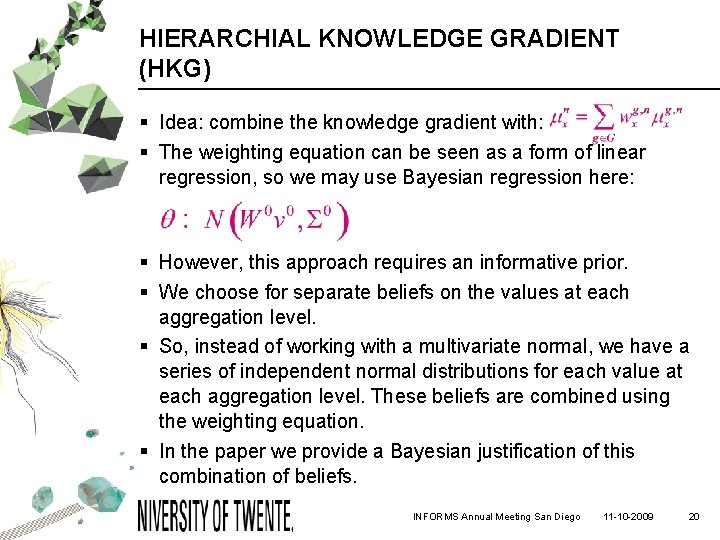

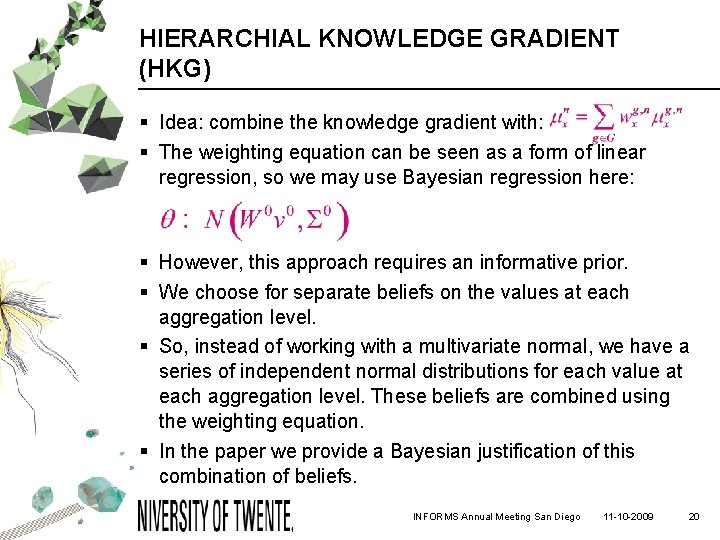

HIERARCHICAL KNOWLEDGE GRADIENT (HKG)

- Idea: combine the knowledge gradient with the weighted combination of estimates at different aggregation levels.
- The weighting equation can be seen as a form of linear regression, so we could use Bayesian regression here.
  - However, this approach requires an informative prior.
- Instead, we choose separate beliefs on the values at each aggregation level.
  - So, instead of working with a multivariate normal, we have a series of independent normal distributions, one for each value at each aggregation level. These beliefs are combined using the weighting equation.
  - In the paper, we provide a Bayesian justification of this combination of beliefs.
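A simplified sketch of keeping separate independent normal beliefs per aggregation level: one observation of $x$ is treated as a noisy observation of the aggregated value $G^g(x)$ at every level. This deliberately omits bias estimation and the weighting step, and the near-uninformative starting precision `beta0` is an assumption; the class name is hypothetical.

```python
from collections import defaultdict


class HierarchicalBeliefs:
    """Independent normal beliefs N(mu, 1/beta) for every aggregated
    alternative at every level g (simplified: no bias estimation)."""

    def __init__(self, aggregators, mu0=0.0, beta0=1e-6):
        self.agg = aggregators                        # list of functions G^g
        self.mu = [defaultdict(lambda: mu0) for _ in aggregators]
        self.beta = [defaultdict(lambda: beta0) for _ in aggregators]

    def observe(self, x, y, lam):
        """Update the belief about G^g(x) at every level with observation y."""
        beta_eps = 1.0 / lam
        for g, G in enumerate(self.agg):
            key = G(x)
            b = self.beta[g][key]
            self.mu[g][key] = (b * self.mu[g][key] + beta_eps * y) / (b + beta_eps)
            self.beta[g][key] = b + beta_eps


# Three levels: the original alternative, pairs of alternatives, and everything.
hb = HierarchicalBeliefs([lambda x: x, lambda x: x >> 1, lambda x: 0])
hb.observe(2, 4.0, 1.0)
```

Each belief is a scalar mean and precision, so no covariance matrix is stored, which is the computational point of this design.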

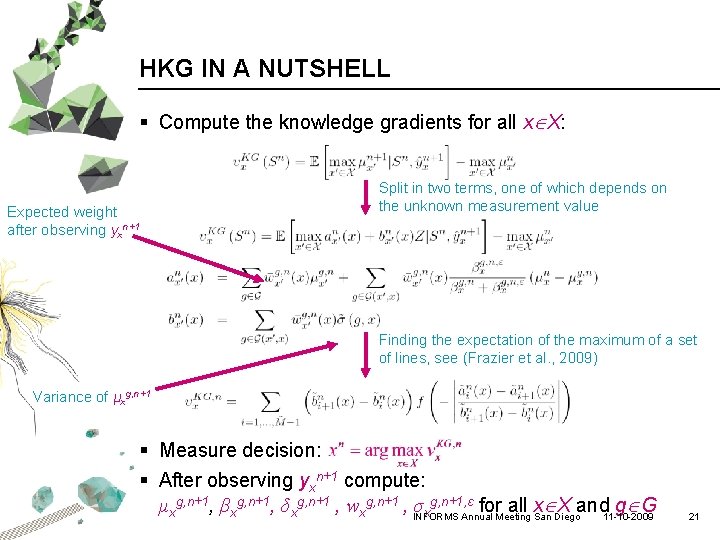

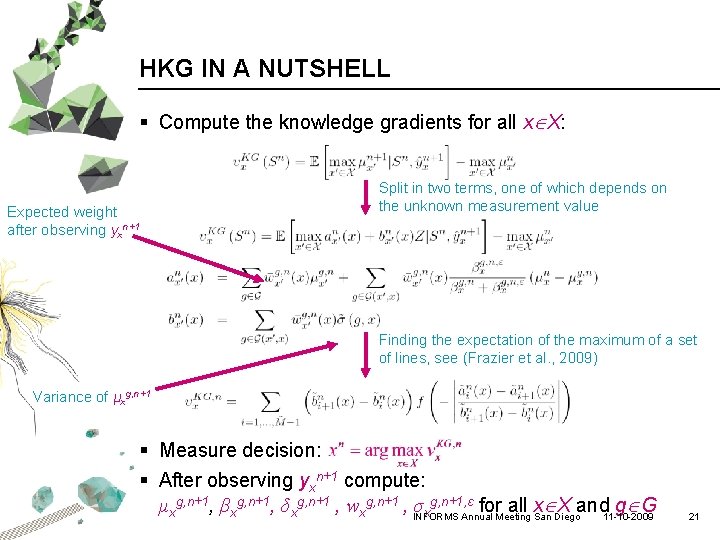

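HKG IN A NUTSHELL

- Compute the knowledge gradients for all $x \in \mathcal{X}$. The computation involves:
  - the expected weight after observing $y_x^{n+1}$;
  - a split into two terms, one of which depends on the unknown measurement value;
  - finding the expectation of the maximum of a set of lines (see Frazier et al., 2009);
  - the variance of $\mu_x^{g,n+1}$.
- Measurement decision: measure the alternative with the highest knowledge gradient, $x^n = \arg\max_{x \in \mathcal{X}} \nu_x^{KG,n}$.
- After observing $y_x^{n+1}$, compute $\mu_x^{g,n+1}$, $\beta_x^{g,n+1}$, $\delta_x^{g,n+1}$, $w_x^{g,n+1}$, and $\sigma_x^{g,n+1,\varepsilon}$ for all $x \in \mathcal{X}$ and $g \in \mathcal{G}$.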
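The "expectation of the maximum of a set of lines" step can be sketched on its own. If, for a candidate measurement, each updated estimate can be written as $a_i + b_i Z$ with $Z \sim \mathcal{N}(0,1)$, then $\mathbb{E}[\max_i (a_i + b_i Z)] - \max_i a_i = \sum_k (b_{k+1} - b_k) f(-|c_k|)$ over the lines of the upper envelope sorted by slope, where $c_k$ are the breakpoints and $f(z) = z\Phi(z) + \varphi(z)$. How HKG builds $a$ and $b$ is given in the paper; here they are simply inputs, and the function name is illustrative.

```python
import math


def f(z):
    """f(z) = z * Phi(z) + phi(z) for the standard normal cdf Phi and pdf phi."""
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return z * cdf + pdf


def expected_max_gain(a, b):
    """Return E[max_i (a[i] + b[i] * Z)] - max_i a[i] for Z ~ N(0, 1)."""
    # Sort lines by slope; for equal slopes keep only the largest intercept.
    lines = []
    for bi, ai in sorted(zip(b, a)):
        if lines and lines[-1][0] == bi:
            lines[-1] = (bi, ai)          # sorted, so ai is the larger intercept
        else:
            lines.append((bi, ai))
    # Upper envelope: drop lines that are never the maximum for any Z.
    hull, cuts = [], []                  # cuts[k]: Z where hull[k+1] overtakes hull[k]
    for bi, ai in lines:
        while hull:
            bj, aj = hull[-1]
            z = (aj - ai) / (bi - bj)
            if cuts and z <= cuts[-1]:
                hull.pop()
                cuts.pop()
            else:
                break
        if hull:
            bj, aj = hull[-1]
            cuts.append((aj - ai) / (bi - bj))
        hull.append((bi, ai))
    # Contribution of each breakpoint of the envelope.
    return sum((hull[k + 1][0] - hull[k][0]) * f(-abs(c)) for k, c in enumerate(cuts))
```

With two lines, `a = [mu_x, best_other]` and `b = [sigma_tilde, 0]`, this reduces to the independent-beliefs knowledge gradient $\tilde\sigma f(-|\mu_x - \text{best}|/\tilde\sigma)$.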

ILLUSTRATION OF HKG

- The knowledge-gradient policies prefer to measure alternatives with a high mean and/or low precision:
  - Equal means: measure the alternative with the lowest precision.
  - Equal precisions: measure the alternative with the highest mean.
- Some MS Excel demos…
  - Statistical aggregation
  - Sampling decisions

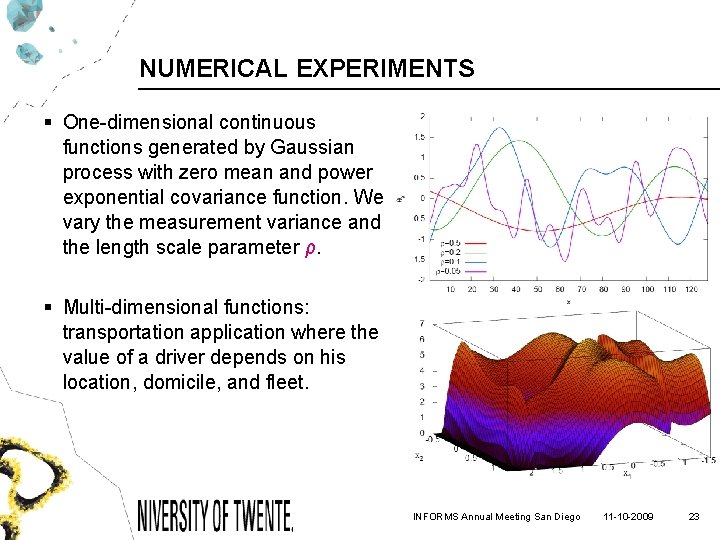

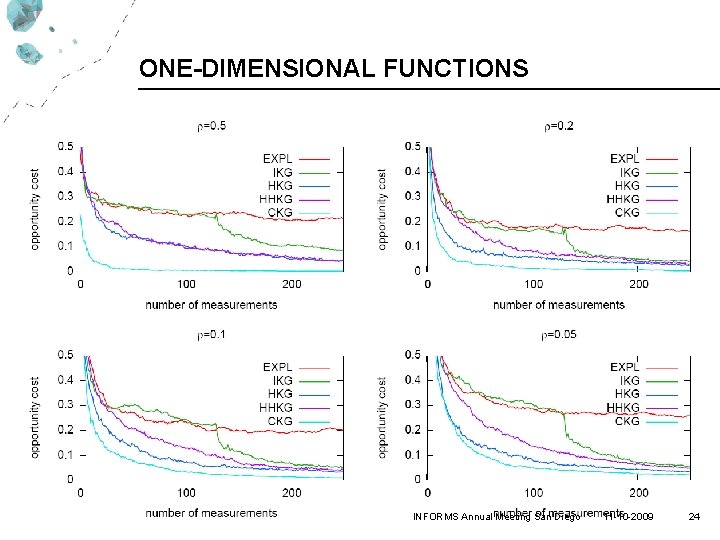

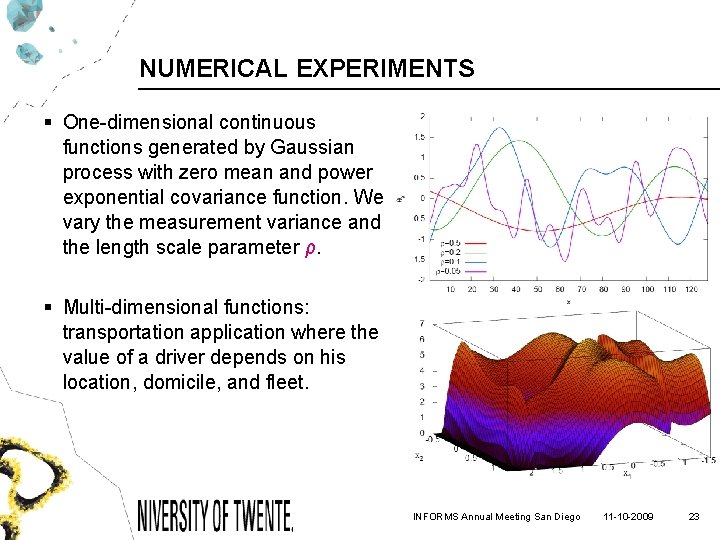

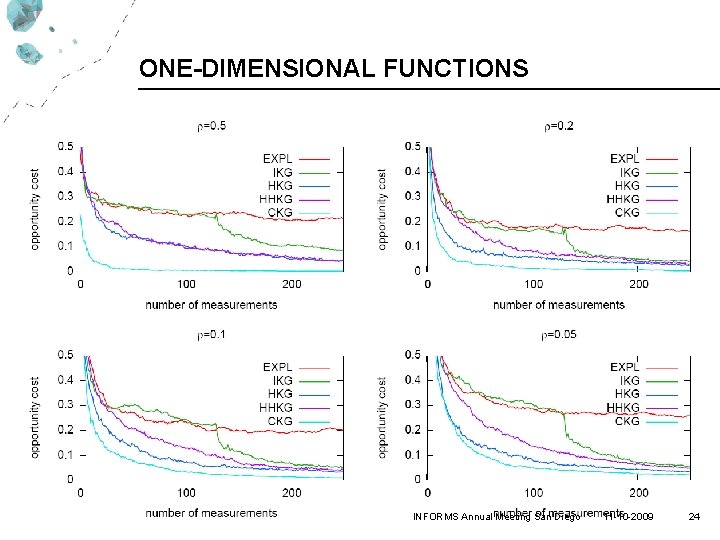

NUMERICAL EXPERIMENTS

- One-dimensional continuous functions generated by a Gaussian process with zero mean and a power exponential covariance function. We vary the measurement variance and the length scale parameter $\rho$.
- Multi-dimensional functions: a transportation application where the value of a driver depends on the driver's location, domicile, and fleet.
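Test functions of the first kind can be generated by sampling a zero-mean Gaussian process on a grid. The sketch assumes one common parameterization of the power exponential covariance, $\sigma^2 \exp(-(|x - x'|/\rho)^p)$ with $p = 2$ by default; the exact form and parameter values used in the experiments are not given on the slide. A small diagonal `jitter` keeps the Cholesky factorization numerically stable.

```python
import math
import random


def power_exp_cov(x1, x2, rho, p=2.0, var=1.0):
    """Power exponential covariance var * exp(-(|x1 - x2| / rho) ** p)."""
    return var * math.exp(-(abs(x1 - x2) / rho) ** p)


def cholesky(K):
    """Lower-triangular L with L L^T = K for a symmetric positive definite K."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = K[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def sample_gp(grid, rho, p=2.0, var=1.0, jitter=1e-6, seed=0):
    """One zero-mean Gaussian-process sample path evaluated on `grid`."""
    K = [[power_exp_cov(a, b, rho, p, var) + (jitter if i == j else 0.0)
          for j, b in enumerate(grid)] for i, a in enumerate(grid)]
    L = cholesky(K)
    rng = random.Random(seed)
    z = [rng.gauss(0.0, 1.0) for _ in grid]
    # L z has covariance L L^T = K.
    return [sum(L[i][k] * z[k] for k in range(i + 1)) for i in range(len(grid))]


path = sample_gp([i / 50 for i in range(51)], rho=0.1)
```

Smaller `rho` gives rougher functions, so fewer alternatives share information, which is the kind of variation the experiments explore.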

ONE-DIMENSIONAL FUNCTIONS

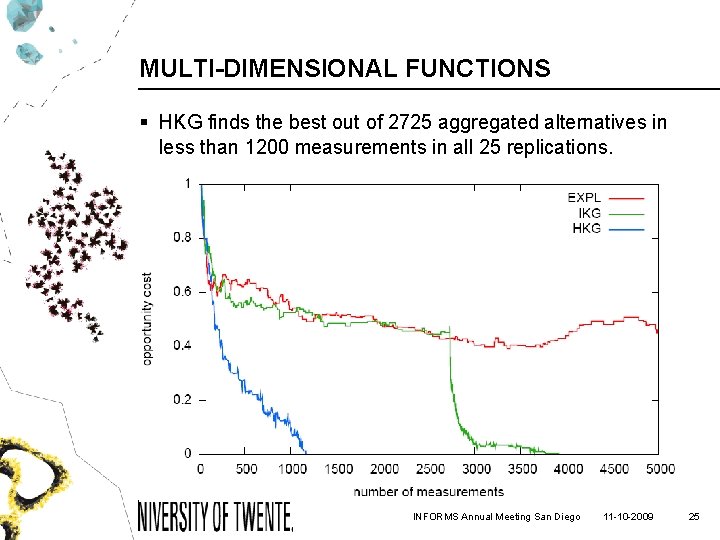

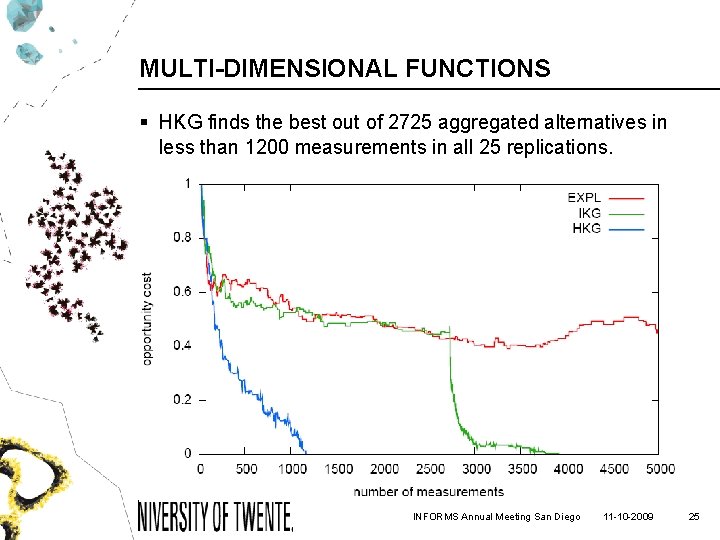

MULTI-DIMENSIONAL FUNCTIONS

- HKG finds the best out of 2725 aggregated alternatives in fewer than 1200 measurements in all 25 replications.

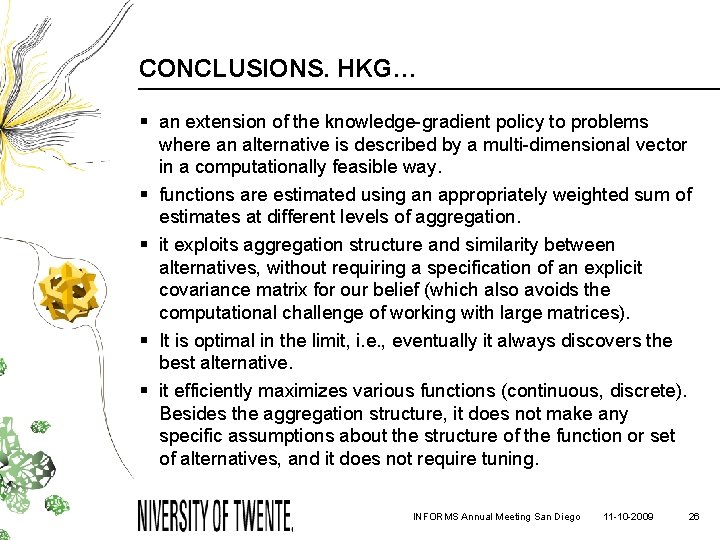

CONCLUSIONS

HKG…

- extends the knowledge-gradient policy, in a computationally feasible way, to problems where an alternative is described by a multi-dimensional vector;
- estimates functions using an appropriately weighted sum of estimates at different levels of aggregation;
- exploits aggregation structure and similarity between alternatives without requiring an explicit covariance matrix for our belief (which also avoids the computational challenge of working with large matrices);
- is optimal in the limit, i.e., it eventually always discovers the best alternative;
- efficiently maximizes various functions (continuous, discrete). Besides the aggregation structure, it makes no specific assumptions about the structure of the function or the set of alternatives, and it requires no tuning.

![FURTHER RESEARCH [1/2]](https://slidetodoc.com/presentation_image/0eb322e5f6bee72bb6df10013301b54c/image-27.jpg)

FURTHER RESEARCH [1/2]

- Hierarchical sampling
  - HKG requires us to scan all possible measurements before making a decision.
  - As an alternative, we can use HKG to choose regions to measure at successively finer levels of aggregation.
  - Because aggregated sets have fewer elements than the disaggregated set, we might gain some computational advantage.
  - Challenge: what measures should we use in an aggregated sampling decision?
- Knowledge gradient for approximate dynamic programming
  - To cope with the exploration versus exploitation problem.
  - Challenge…
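The hierarchical sampling idea can be sketched as a top-down descent of a binary aggregation tree: at each level, only the two child regions are scored, so $2L$ regions are evaluated instead of all $2^L$ alternatives. The scoring function is a hypothetical placeholder passed in by the caller, since choosing this measure is exactly the open challenge on the slide.

```python
def hierarchical_descent(levels, score):
    """Choose a fine-level alternative by descending a binary tree.

    levels: number of aggregation levels L (level L is a single root cell,
    level 0 is the original grid of 2**L cells).
    score(g, cell): hypothetical measure of how attractive it is to sample
    inside `cell` at level g.
    """
    cell = 0                                    # the root at level L
    for g in range(levels, 0, -1):
        children = (2 * cell, 2 * cell + 1)     # the two halves at level g - 1
        cell = max(children, key=lambda c: score(g - 1, c))
    return cell


# Toy score that prefers regions containing fine cell 5.
pick = hierarchical_descent(3, lambda g, c: -abs(c - (5 >> g)))
```

A poor aggregated score can steer the descent away from the best alternative early, which is why the choice of measure matters.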

![FURTHER RESEARCH [2/2]](https://slidetodoc.com/presentation_image/0eb322e5f6bee72bb6df10013301b54c/image-28.jpg)

FURTHER RESEARCH [2/2]

- The challenge is to cope with bias in downstream values.
  - A decision has an impact on the downstream path.
  - A decision has an impact on the value of states in the upstream path (off-policy Monte Carlo learning).

QUESTIONS?

- Martijn Mes, Assistant Professor
- University of Twente, School of Management and Governance, Operational Methods for Production and Logistics, The Netherlands
- Phone: +31-534894062
- Email: m.r.k.mes@utwente.nl
- Web: http://mb.utwente.nl/ompl/staff/Mes/