HIDDEN MARKOV MODELS FOR SPEECH RECOGNITION Ganesh Sivaraman

- Slides: 38

HIDDEN MARKOV MODELS FOR SPEECH RECOGNITION Ganesh Sivaraman 1/22/2022 6: 30 AM 4/3/2017 3: 32 PM 1

Overview

- Speech as a sequence of phonemes
- What are triphones and why are they needed?
- Markov chains
- Properties of Markov chains
- Definition of Hidden Markov models
- Examples
- Three main problems of HMMs
- Solutions to the three problems of HMMs
- Applying HMMs to speech recognition
- A simple isolated word recognition system

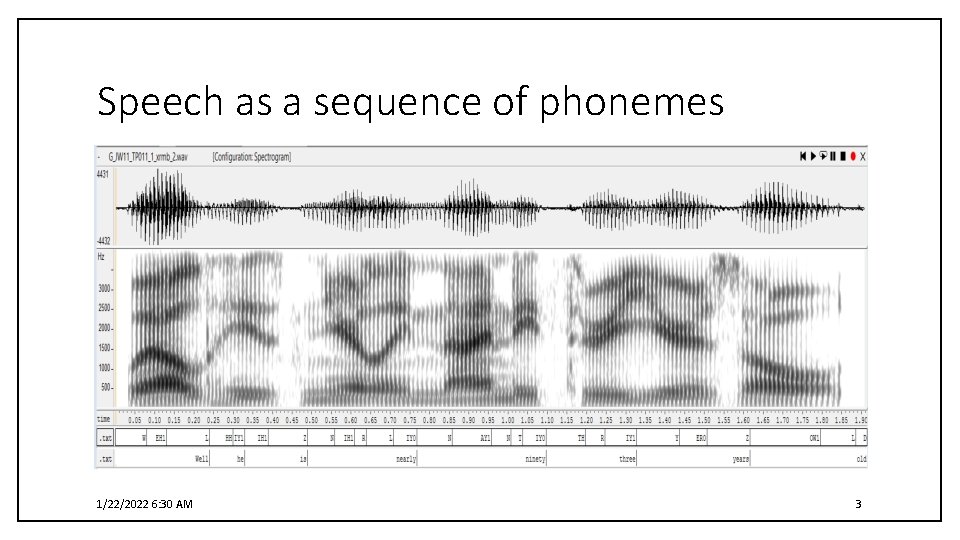

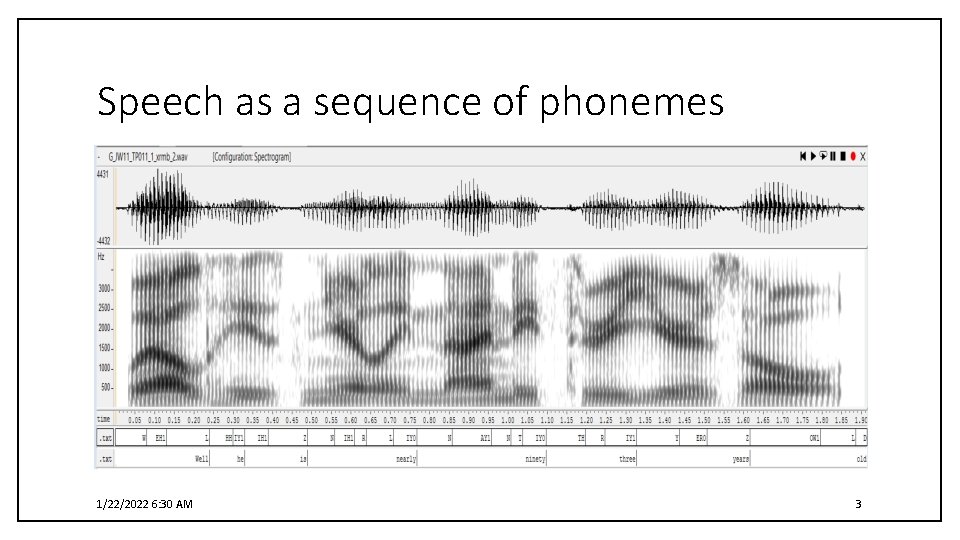

Speech as a sequence of phonemes

Speech as a phoneme sequence – Monophone modeling

- Sentence: “Hello World”
- Words: hello + world
- Monophones: sil+hh+ah+l+ow+w+er+l+d+sil
- Speech recognition links the phoneme sequence back to the words and the sentence.

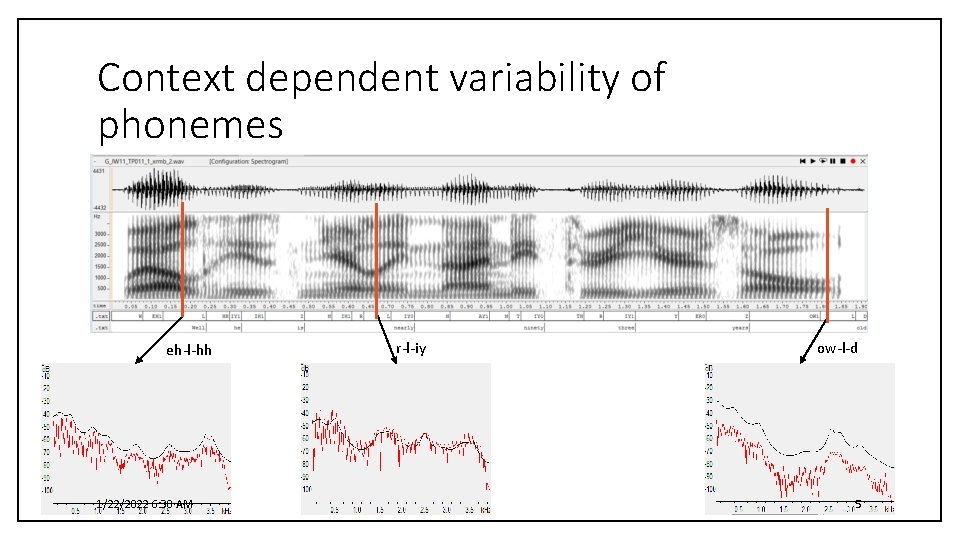

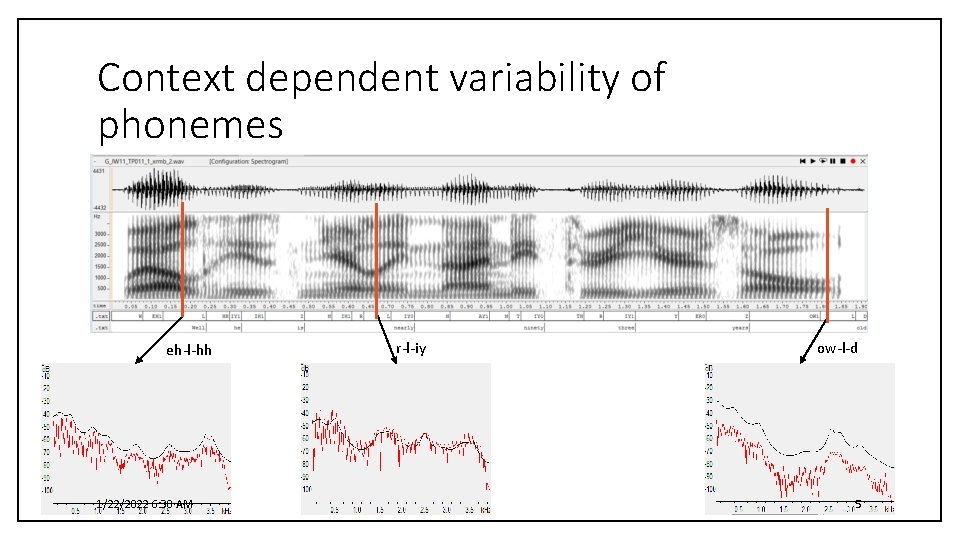

Context-dependent variability of phonemes

- The same phoneme (here l) in different contexts: eh-l-hh, r-l-iy, ow-l-d

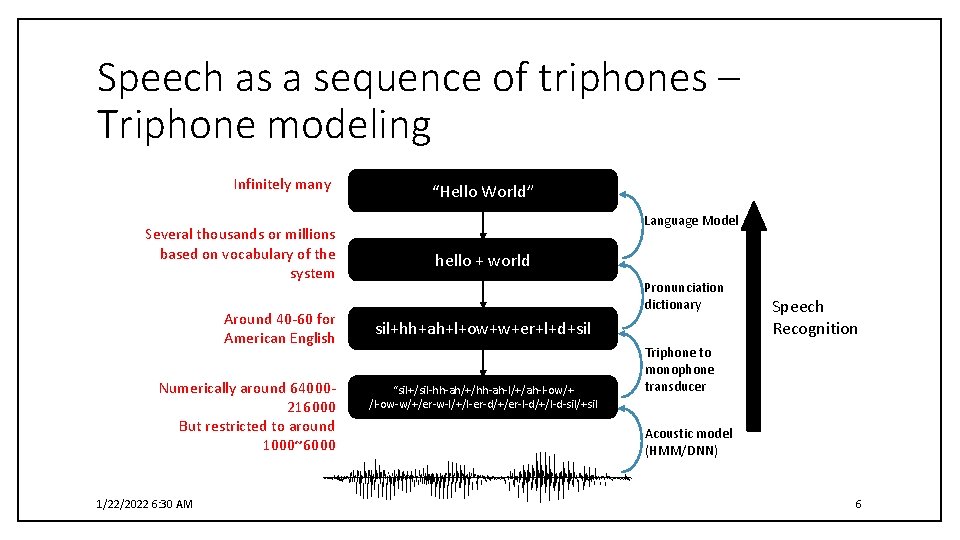

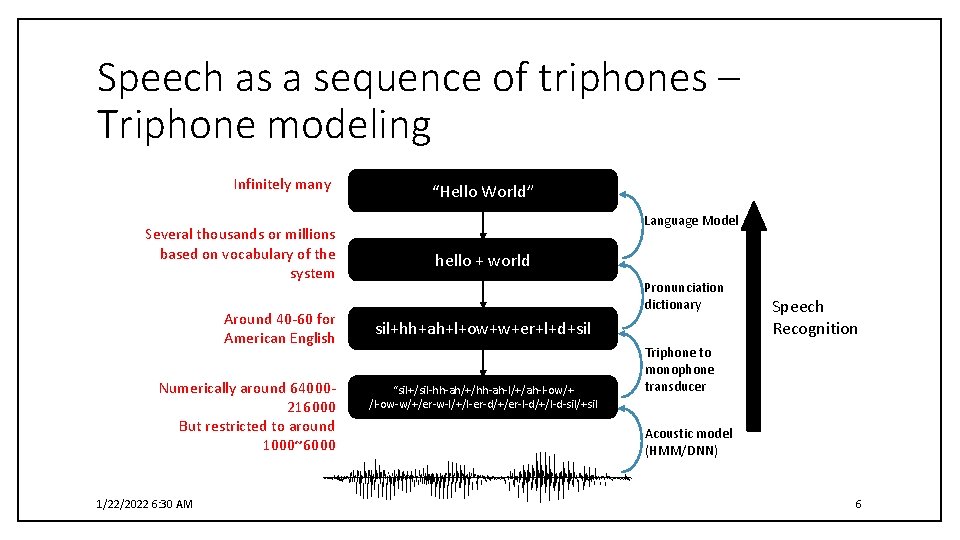

Speech as a sequence of triphones – Triphone modeling

- Sentence: “Hello World” (infinitely many)
- Language model
- Words: hello + world (several thousand to millions, depending on the vocabulary of the system)
- Pronunciation dictionary
- Monophones: sil+hh+ah+l+ow+w+er+l+d+sil (around 40-60 for American English)
- Triphone to monophone transducer
- Triphones: sil+/sil-hh-ah/+/hh-ah-l/+/ah-l-ow/+/l-ow-w/+/ow-w-er/+/w-er-l/+/er-l-d/+/l-d-sil/+sil (numerically around 64,000 to 216,000, i.e. 40^3 to 60^3, but restricted to around 1,000 to 6,000)
- Acoustic model (HMM/DNN)
- Speech recognition

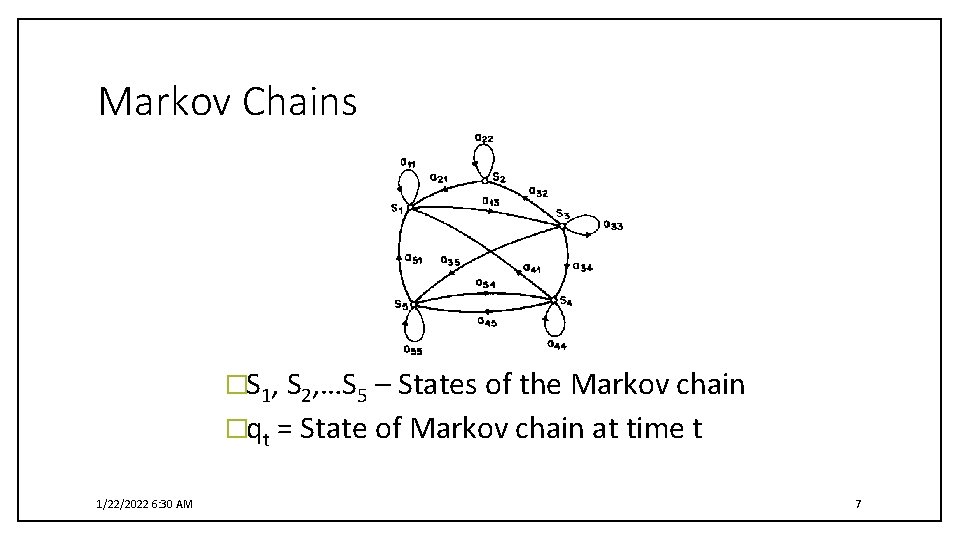

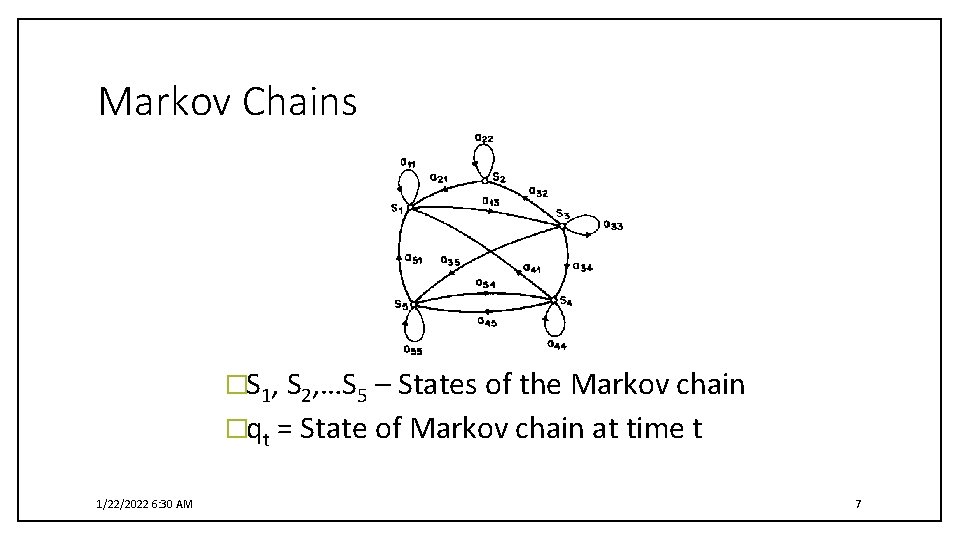
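The monophone-to-triphone expansion on this slide can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `to_triphones` is hypothetical, the left-center-right notation follows the slide, and it assumes the sequence starts and ends with `sil`, which is kept as a context-independent unit.

```python
def to_triphones(monophones):
    """Expand a monophone sequence into left-center-right triphones.

    Assumes the sequence begins and ends with "sil"; silence is kept
    as a context-independent unit, as in the slide's notation.
    """
    triphones = []
    for i, phone in enumerate(monophones):
        if phone == "sil":
            triphones.append("sil")
            continue
        left = monophones[i - 1]   # left context
        right = monophones[i + 1]  # right context
        triphones.append(f"{left}-{phone}-{right}")
    return triphones


monophones = "sil hh ah l ow w er l d sil".split()
triphones = to_triphones(monophones)
print("+".join(triphones))
```

Each of the eight non-silence phones yields exactly one triphone, so the triphone sequence has the same length as the monophone sequence.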

Markov Chains

- $S_1, S_2, \ldots, S_5$: states of the Markov chain
- $q_t$: state of the Markov chain at time t

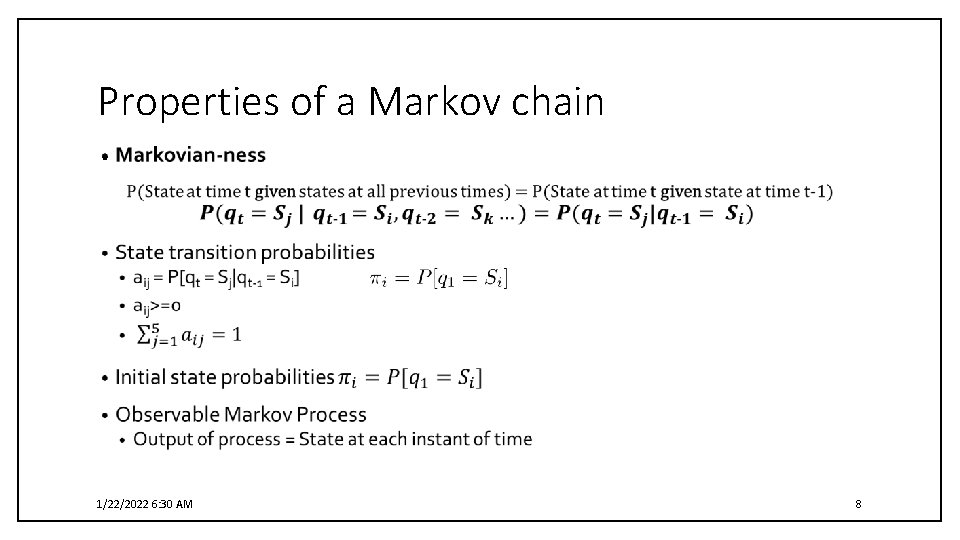

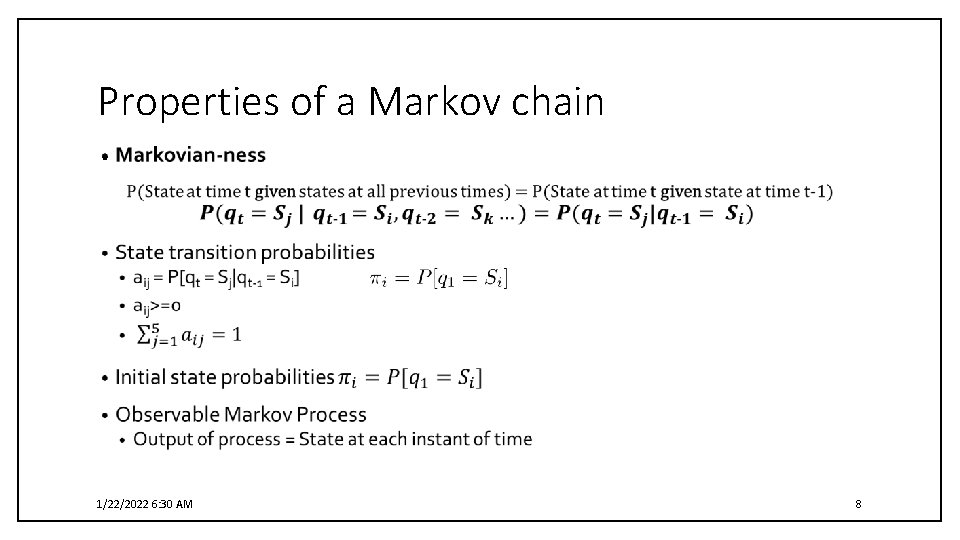

Properties of a Markov chain

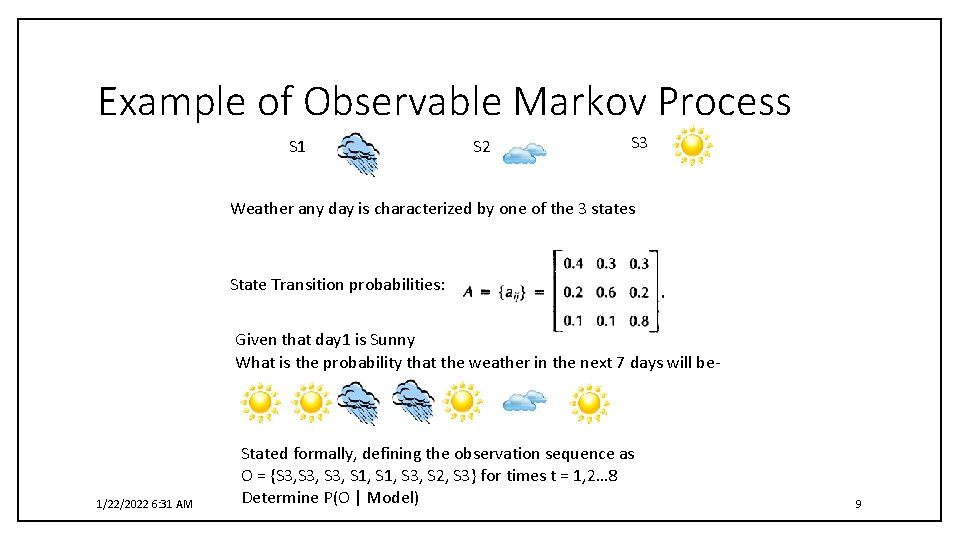

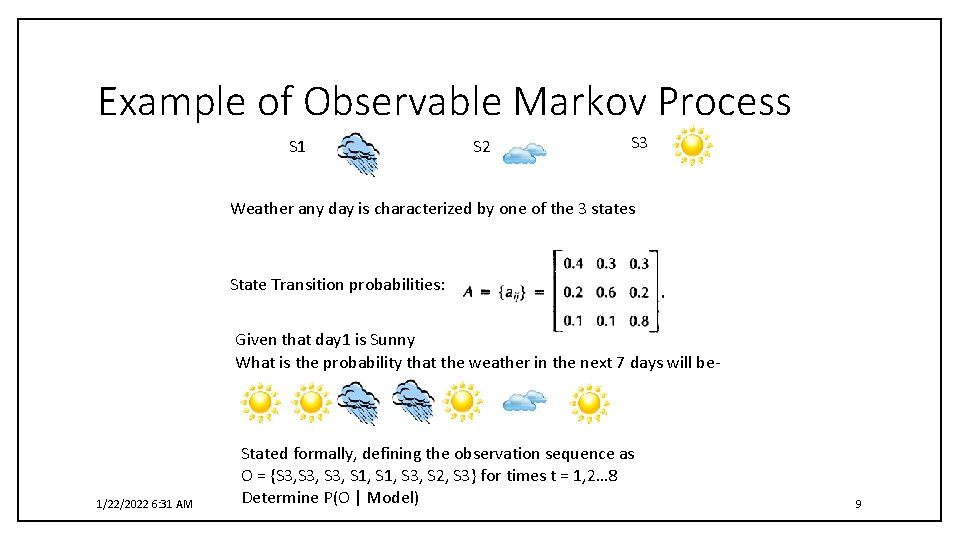
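The formulas for this slide are in the figures and did not survive as text. As a sketch of what is usually stated at this point, assuming the standard first-order, time-homogeneous formulation used in Rabiner's tutorial (the symbol $a_{ij}$ and the state count $N$ follow that convention and are assumptions here):

$$
P(q_{t+1} = S_j \mid q_t = S_i, q_{t-1} = S_k, \ldots) = P(q_{t+1} = S_j \mid q_t = S_i)
$$

$$
a_{ij} = P(q_{t+1} = S_j \mid q_t = S_i), \qquad a_{ij} \ge 0, \qquad \sum_{j=1}^{N} a_{ij} = 1
$$

The first line says the next state depends only on the current state; the second says each row of the transition matrix is a probability distribution.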

Example of Observable Markov Process

- The weather on any day is characterized by one of the 3 states $S_1$, $S_2$, $S_3$.
- State transition probabilities: given in the slide figure.
- Given that day 1 is sunny, what is the probability of a particular weather sequence over the next 7 days?
- Stated formally: define the observation sequence as $O = \{S_3, S_1, S_3, S_2, S_3\}$ for times t = 1, 2, …, 8 and determine P(O | Model).

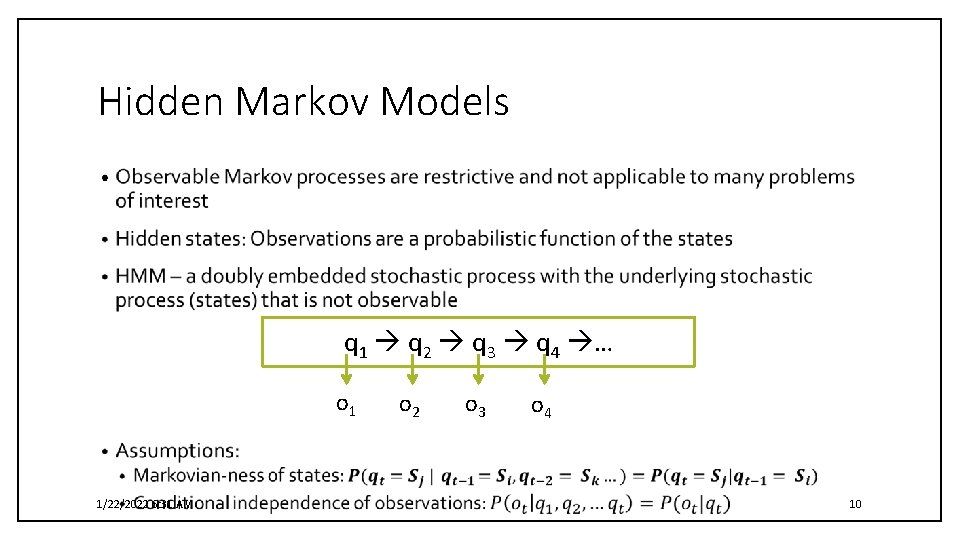

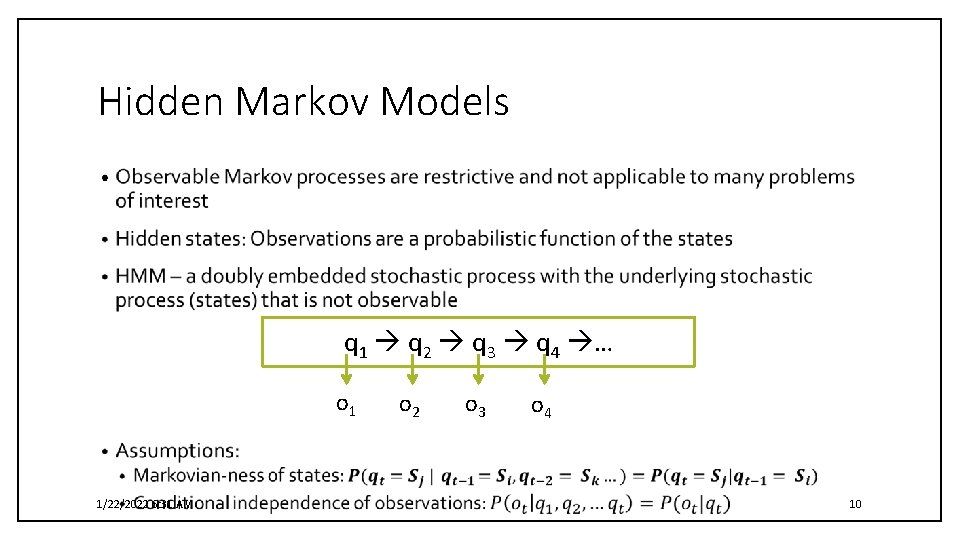
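For an observable Markov process, P(O | Model) is just the product of the transition probabilities along the sequence. The sketch below assumes the transition matrix of the weather example in Rabiner's tutorial (with $S_3$ as the sunny state); the slide's own values are only in the figure, so treat these numbers as an assumption. It uses the state sequence exactly as printed in the slide text, and `sequence_probability` is a hypothetical helper name.

```python
# Assumed transition matrix (weather example in Rabiner's tutorial).
# Row i, column j holds P(q_{t+1} = S_j | q_t = S_i).
A = [
    [0.4, 0.3, 0.3],  # from S1
    [0.2, 0.6, 0.2],  # from S2
    [0.1, 0.1, 0.8],  # from S3 (sunny)
]


def sequence_probability(states, A, initial_prob=1.0):
    """Probability of a state sequence (1-based state labels).

    initial_prob is P(q_1 = states[0]); it is 1.0 here because the
    slide conditions on day 1 being sunny.
    """
    p = initial_prob
    for prev, nxt in zip(states, states[1:]):
        p *= A[prev - 1][nxt - 1]
    return p


O = [3, 1, 3, 2, 3]  # sequence as printed on the slide
p = sequence_probability(O, A)
print(p)
```

With these assumed values the product is 0.1 × 0.3 × 0.1 × 0.2, i.e. about 6 × 10⁻⁴.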

Hidden Markov Models

- Hidden state sequence: $q_1 \to q_2 \to q_3 \to q_4 \to \ldots$
- Observation sequence: $o_1, o_2, o_3, o_4, \ldots$, where each $o_t$ is emitted by $q_t$

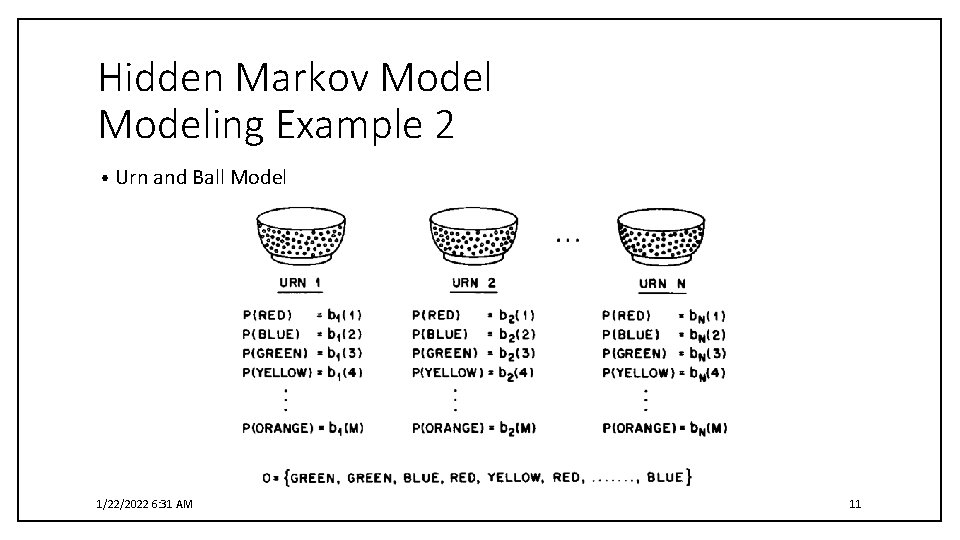

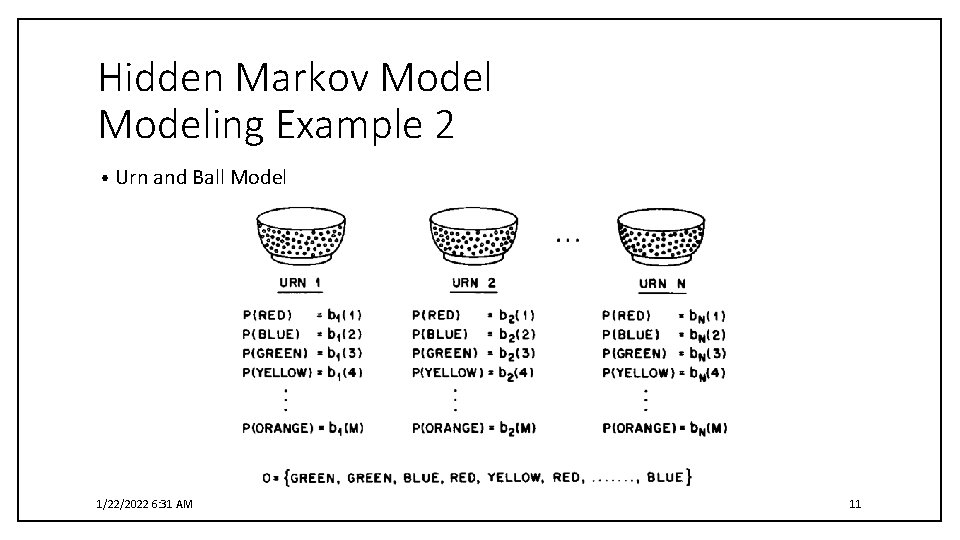

Hidden Markov Modeling Example 2

- Urn and Ball Model

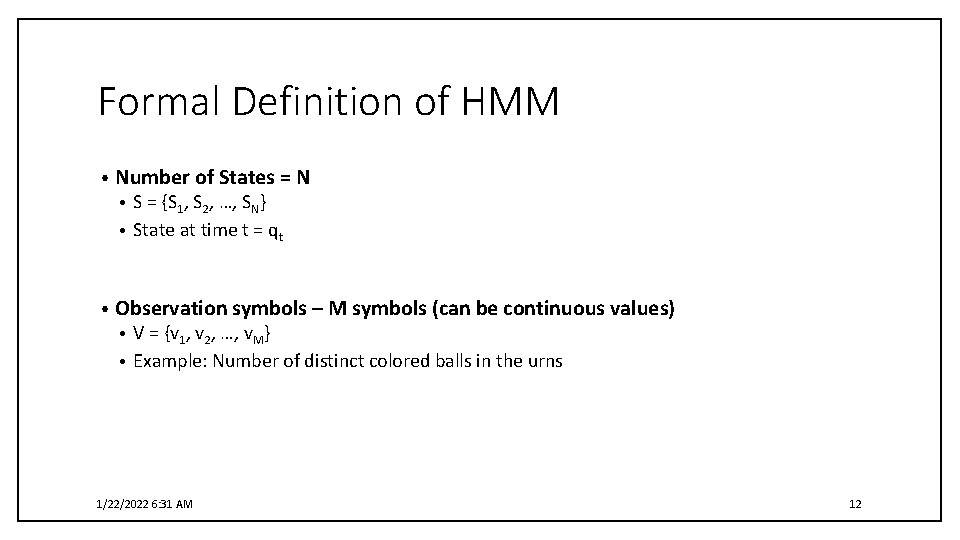

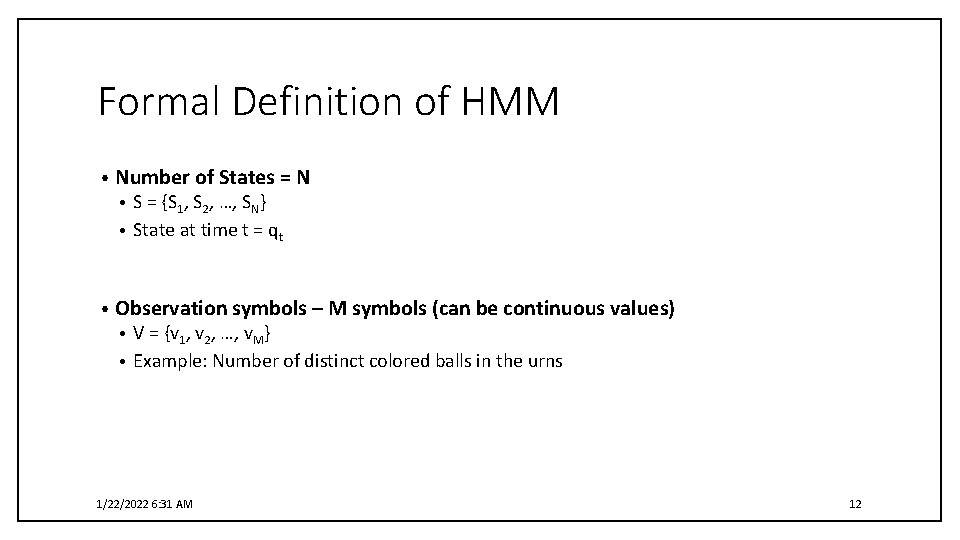

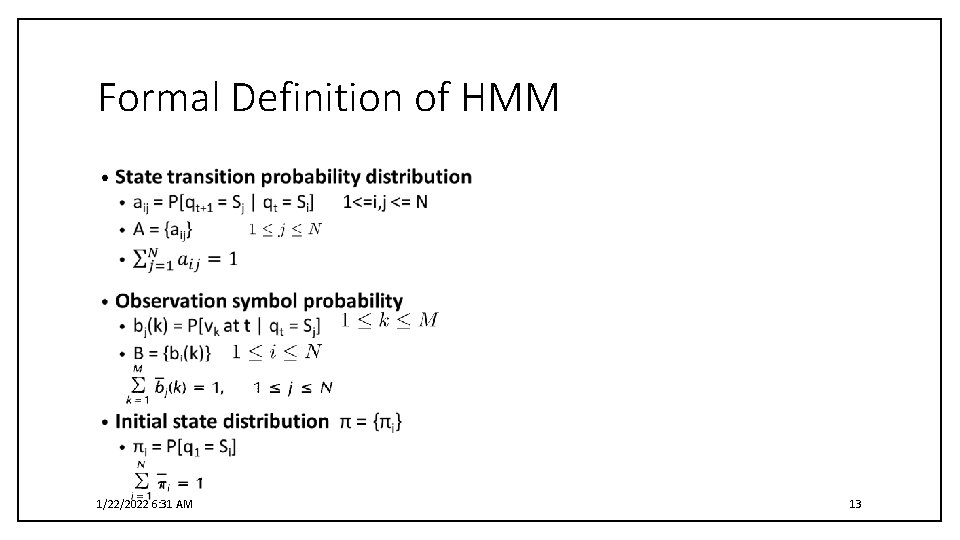

Formal Definition of HMM

- Number of states = N: $S = \{S_1, S_2, \ldots, S_N\}$
- State at time t = $q_t$
- Observation symbols: M symbols (can be continuous values), $V = \{v_1, v_2, \ldots, v_M\}$
- Example: number of distinct colored balls in the urns

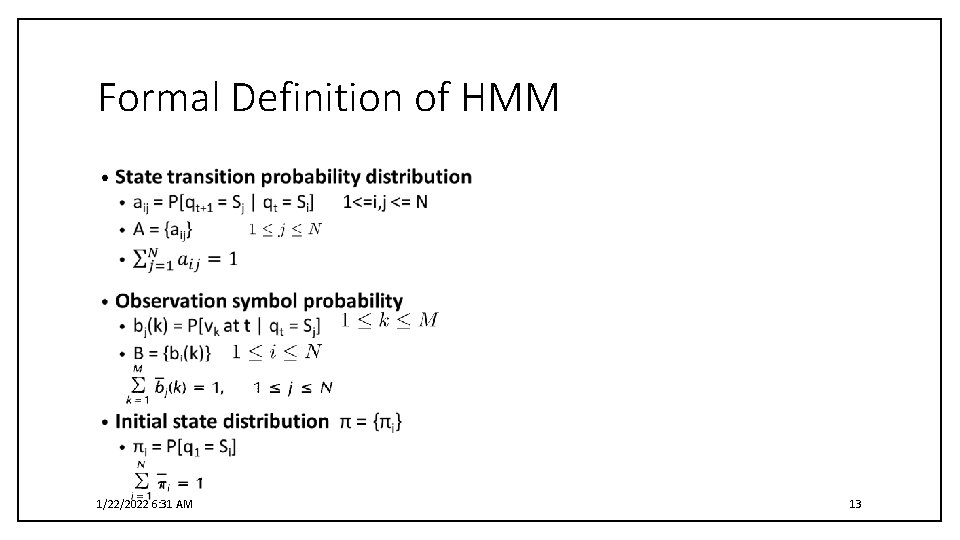

Formal Definition of HMM (continued)

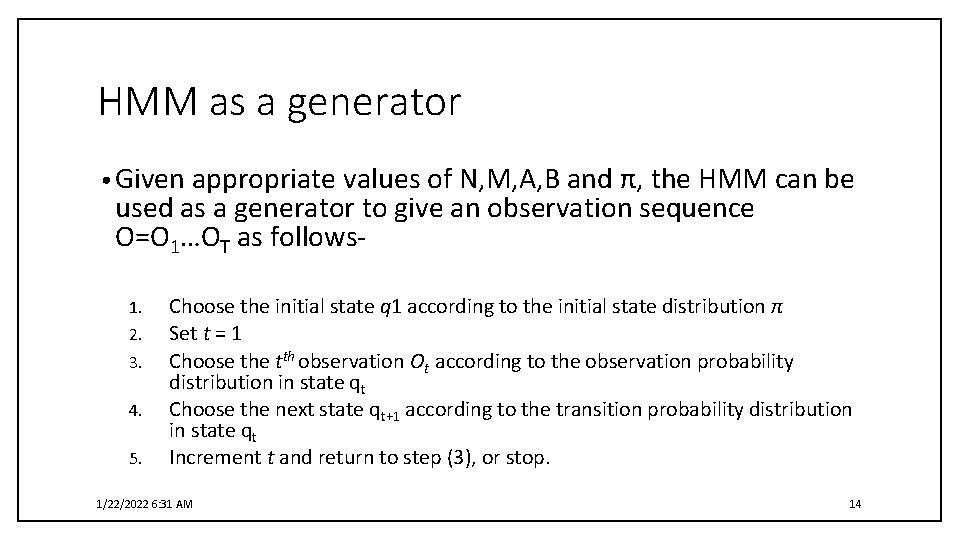

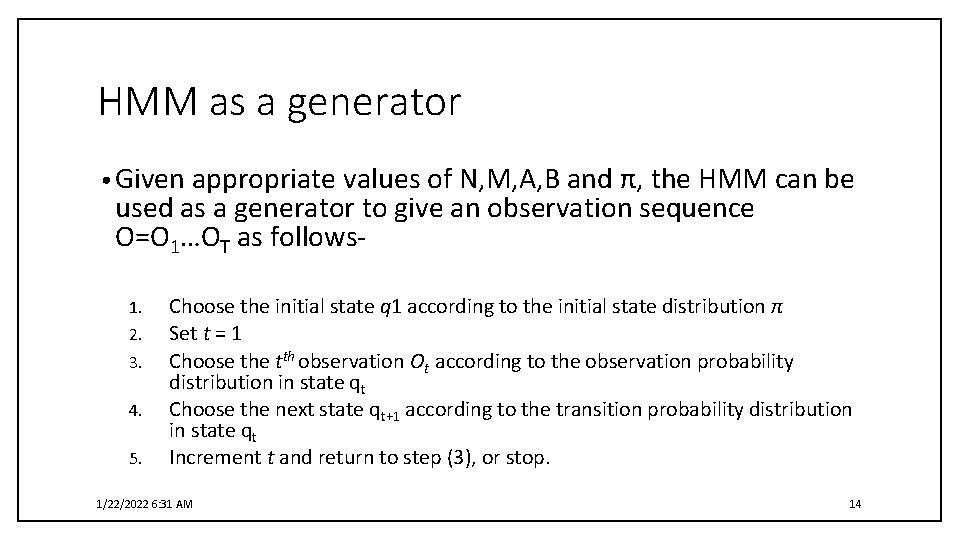
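The remaining parameters A, B and π are shown only in the figures. As a sketch, assuming the standard discrete-observation notation of Rabiner's tutorial (the index ranges and the compact symbol $\lambda$ are taken from that convention, not from the slide text):

$$
A = \{a_{ij}\}, \qquad a_{ij} = P(q_{t+1} = S_j \mid q_t = S_i), \qquad 1 \le i, j \le N
$$

$$
B = \{b_j(k)\}, \qquad b_j(k) = P(O_t = v_k \mid q_t = S_j), \qquad 1 \le j \le N,\ 1 \le k \le M
$$

$$
\pi = \{\pi_i\}, \qquad \pi_i = P(q_1 = S_i), \qquad 1 \le i \le N
$$

$$
\lambda = (A, B, \pi)
$$

For continuous observations such as MFCC vectors, $b_j(k)$ is replaced by a density $b_j(o)$.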

HMM as a generator

Given appropriate values of N, M, A, B and π, the HMM can be used as a generator to give an observation sequence $O = O_1 \ldots O_T$ as follows:

1. Choose the initial state $q_1$ according to the initial state distribution π.
2. Set t = 1.
3. Choose the t-th observation $O_t$ according to the observation probability distribution in state $q_t$.
4. Choose the next state $q_{t+1}$ according to the transition probability distribution in state $q_t$.
5. Increment t and return to step 3, or stop.

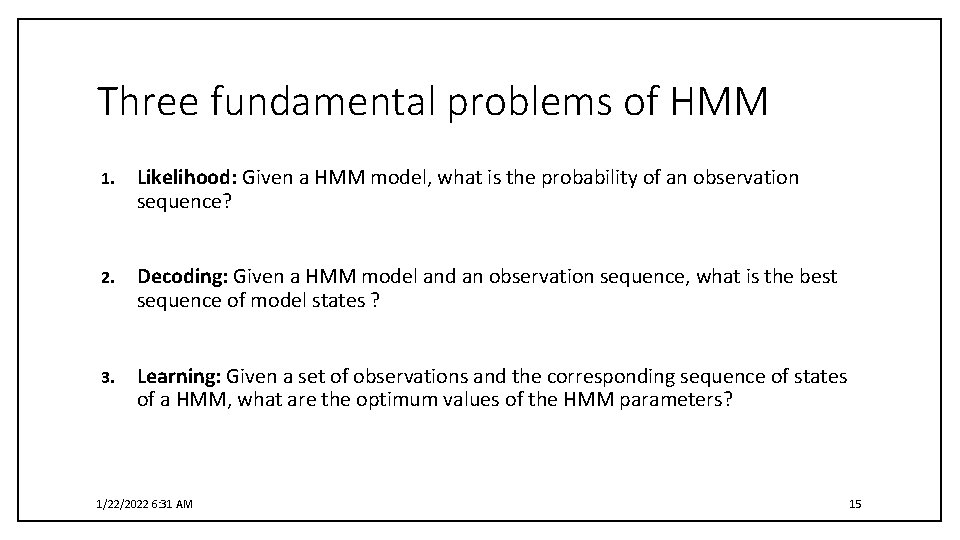

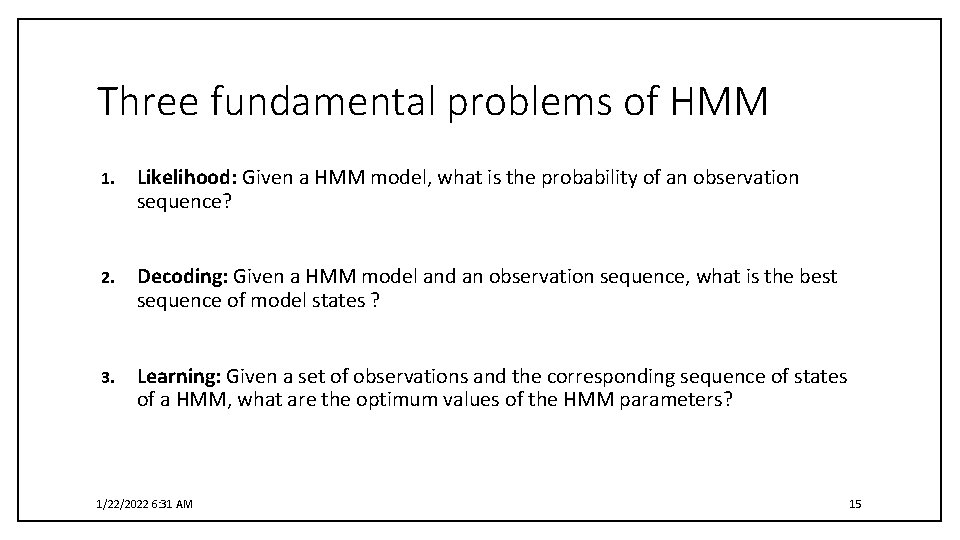
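The five generator steps can be sketched with the Python standard library. This is a minimal illustration for discrete observation symbols; the function name `generate`, the 0-based state and symbol indices, and the two-state example values are all hypothetical.

```python
import random


def generate(pi, A, B, T, seed=None):
    """Sample T (state, observation) pairs from a discrete HMM."""
    rng = random.Random(seed)
    n_states = len(pi)
    n_symbols = len(B[0])
    states, observations = [], []
    # Step 1: choose the initial state q_1 according to pi.
    q = rng.choices(range(n_states), weights=pi)[0]
    # Steps 2 and 5: t runs from 1 to T.
    for _ in range(T):
        # Step 3: choose O_t from the observation distribution of q_t.
        o = rng.choices(range(n_symbols), weights=B[q])[0]
        states.append(q)
        observations.append(o)
        # Step 4: choose q_{t+1} from the transition distribution of q_t.
        q = rng.choices(range(n_states), weights=A[q])[0]
    return states, observations


# Hypothetical two-urn model with two ball colors.
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
states, observations = generate(pi, A, B, T=10, seed=0)
print(states)
print(observations)
```

Only `observations` would be visible to an observer; `states` is the hidden part of the model.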

Three fundamental problems of HMM

1. Likelihood: Given an HMM, what is the probability of an observation sequence?
2. Decoding: Given an HMM and an observation sequence, what is the best sequence of model states?
3. Learning: Given a set of observations and the set of states of an HMM, what are the optimum values of the HMM parameters?

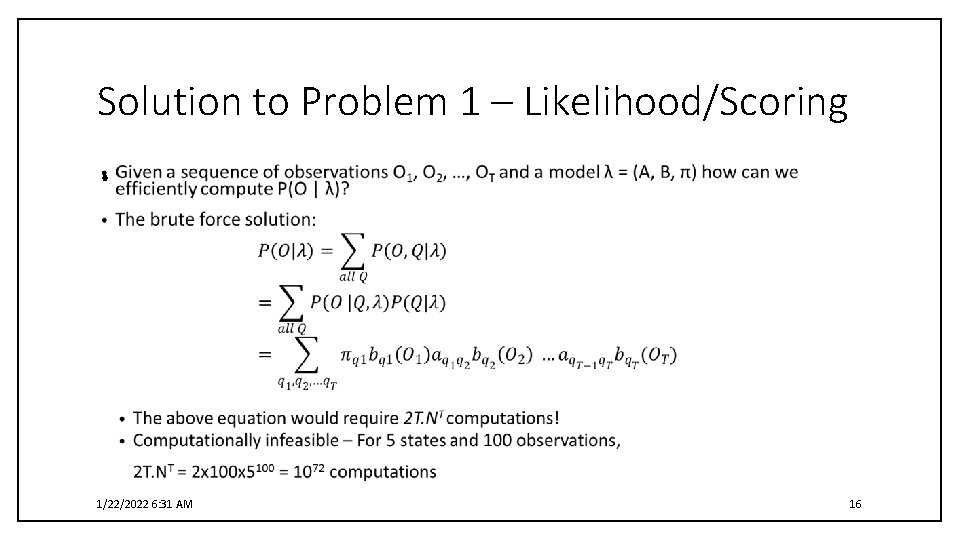

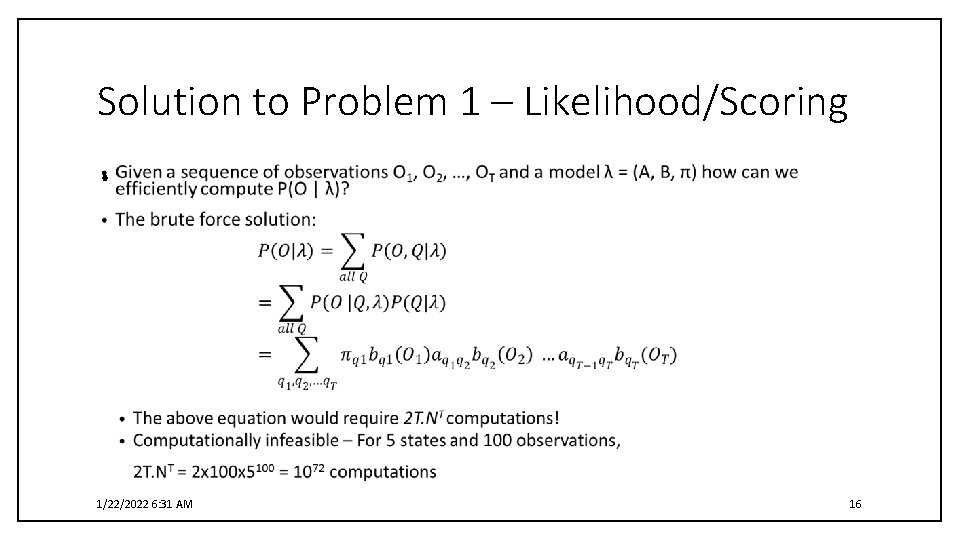

Solution to Problem 1 – Likelihood/Scoring

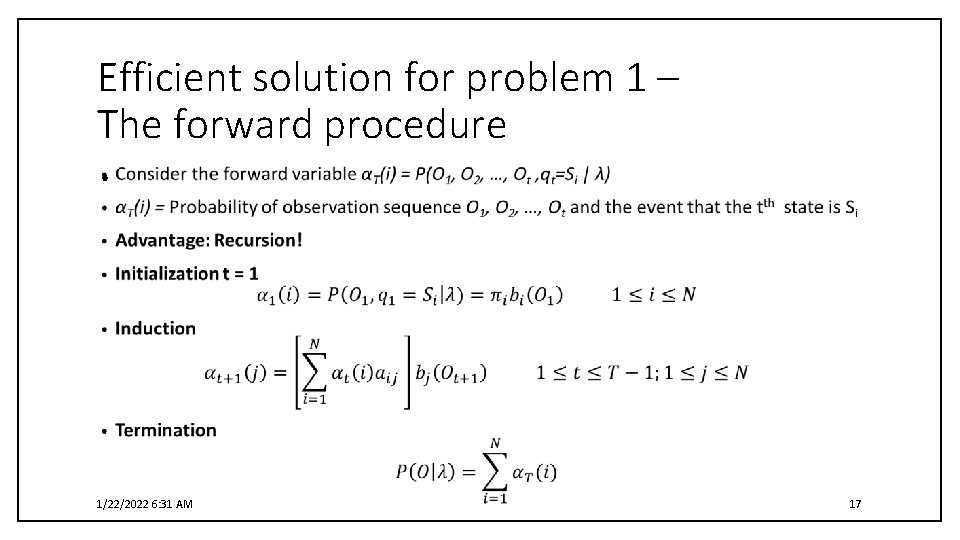

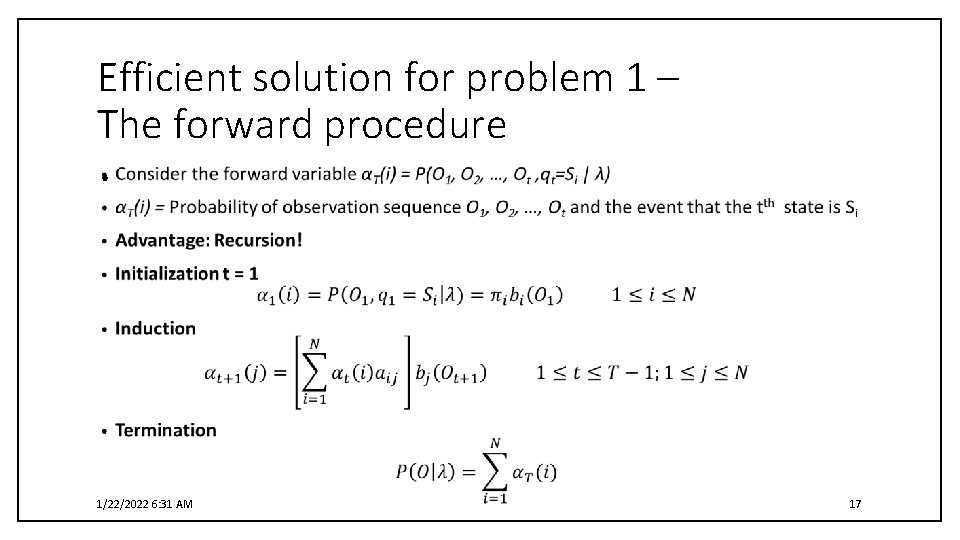
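The equations for this slide are in the figures. A sketch of the direct computation, assuming the standard notation of Rabiner's tutorial, sums over every possible state sequence $Q = q_1 \ldots q_T$:

$$
P(O \mid \lambda) = \sum_{Q} P(O \mid Q, \lambda)\, P(Q \mid \lambda)
= \sum_{q_1, \ldots, q_T} \pi_{q_1} b_{q_1}(O_1)\, a_{q_1 q_2} b_{q_2}(O_2) \cdots a_{q_{T-1} q_T} b_{q_T}(O_T)
$$

There are $N^T$ state sequences, so this costs on the order of $2T \cdot N^T$ operations, which is infeasible for realistic N and T.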

Efficient solution for problem 1 – The forward procedure

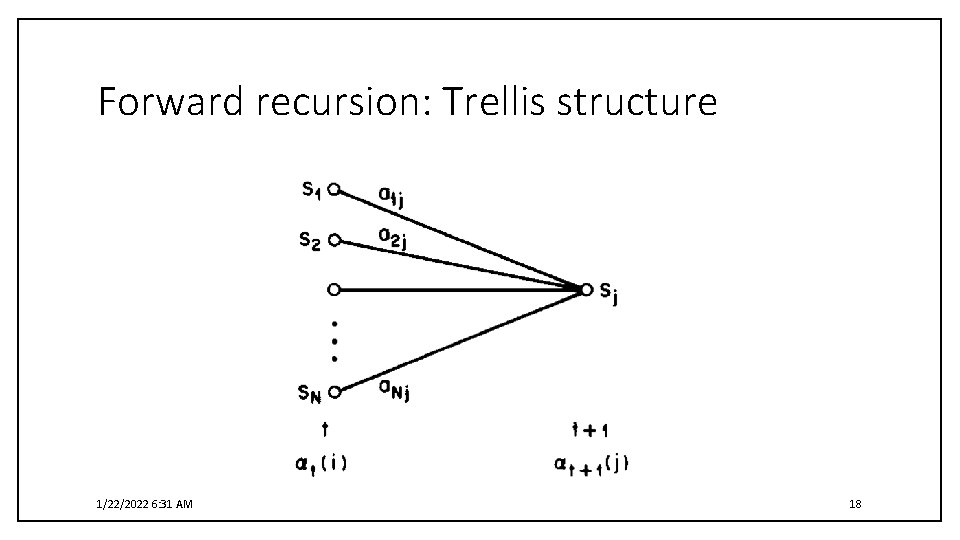

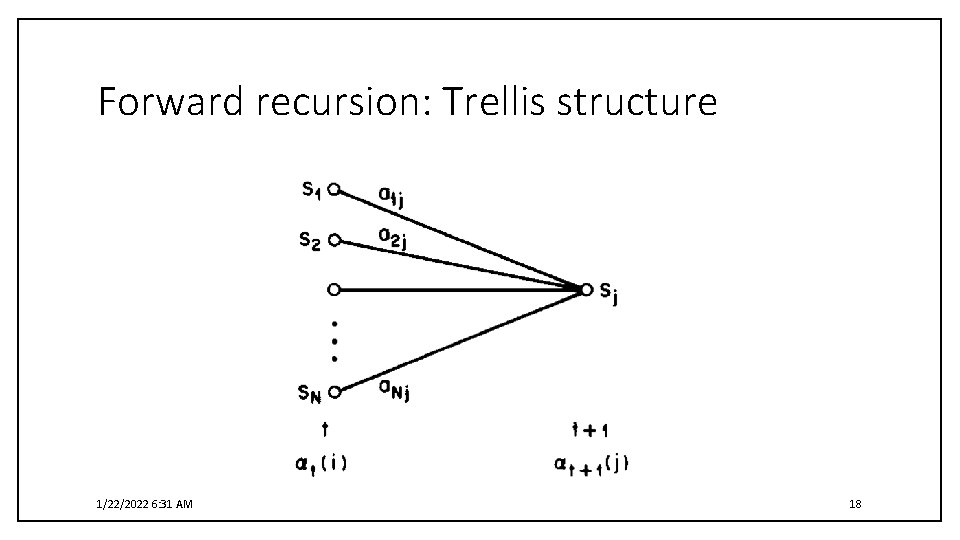
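A minimal sketch of the forward procedure for a discrete-observation HMM follows. It assumes the standard recursion from Rabiner's tutorial, 0-based state and symbol indices, and a hypothetical two-state model; a practical implementation would scale the values or work in the log domain to avoid underflow.

```python
def forward(pi, A, B, obs):
    """Return (alpha, P(O | lambda)) for a discrete-observation HMM."""
    N = len(pi)
    # Initialization: alpha_1(i) = pi_i * b_i(O_1)
    alpha = [[pi[i] * B[i][obs[0]] for i in range(N)]]
    # Induction: alpha_{t+1}(j) = [sum_i alpha_t(i) * a_ij] * b_j(O_{t+1})
    for o in obs[1:]:
        prev = alpha[-1]
        alpha.append([
            sum(prev[i] * A[i][j] for i in range(N)) * B[j][o]
            for j in range(N)
        ])
    # Termination: P(O | lambda) = sum_i alpha_T(i)
    return alpha, sum(alpha[-1])


# Hypothetical two-state, two-symbol model.
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
obs = [0, 1, 0]
alpha, likelihood = forward(pi, A, B, obs)
print(likelihood)
```

The recursion needs on the order of $N^2 T$ operations, because each trellis column is computed from the previous column only.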

Forward recursion: Trellis structure

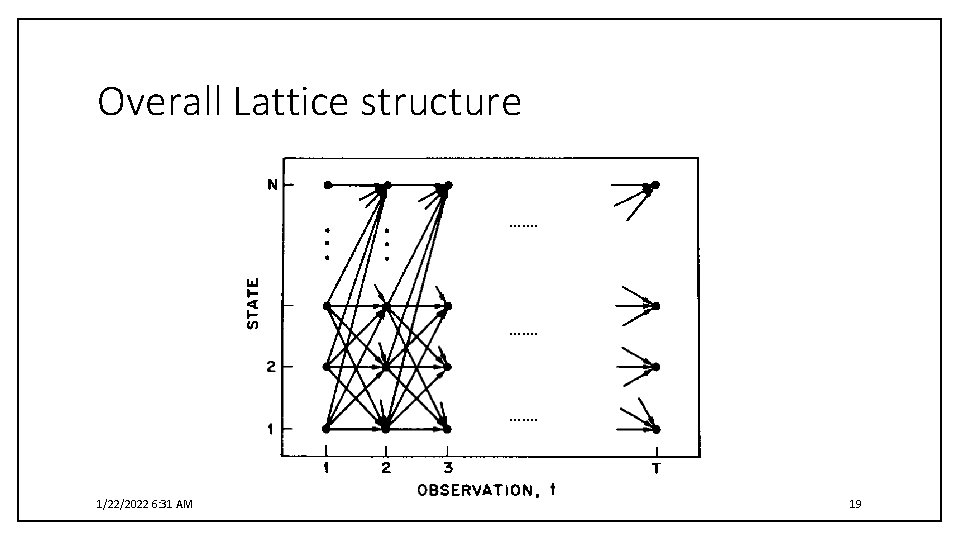

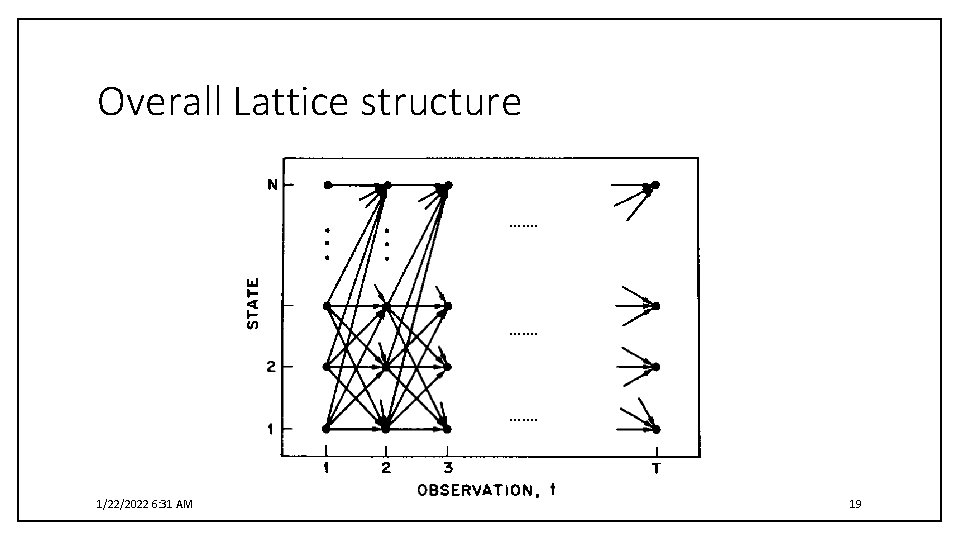

Overall Lattice structure

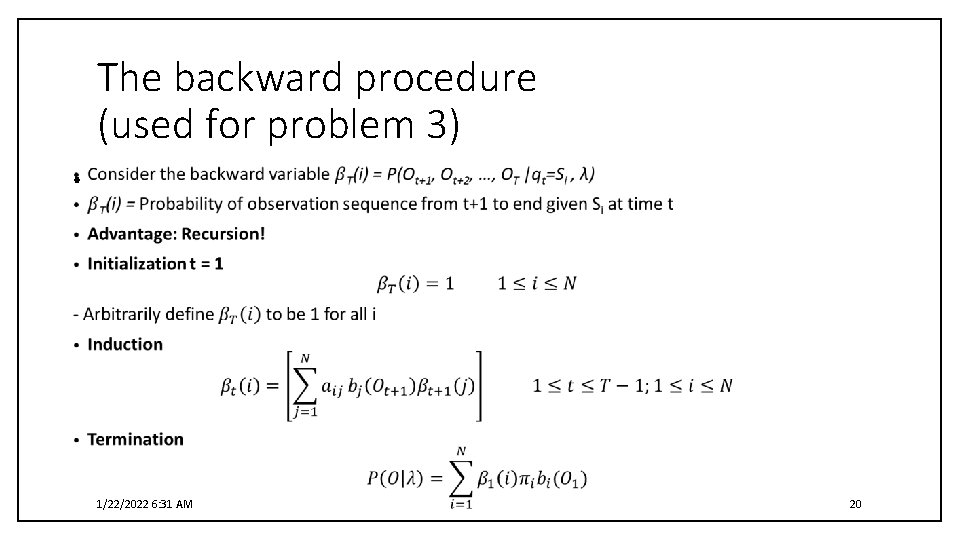

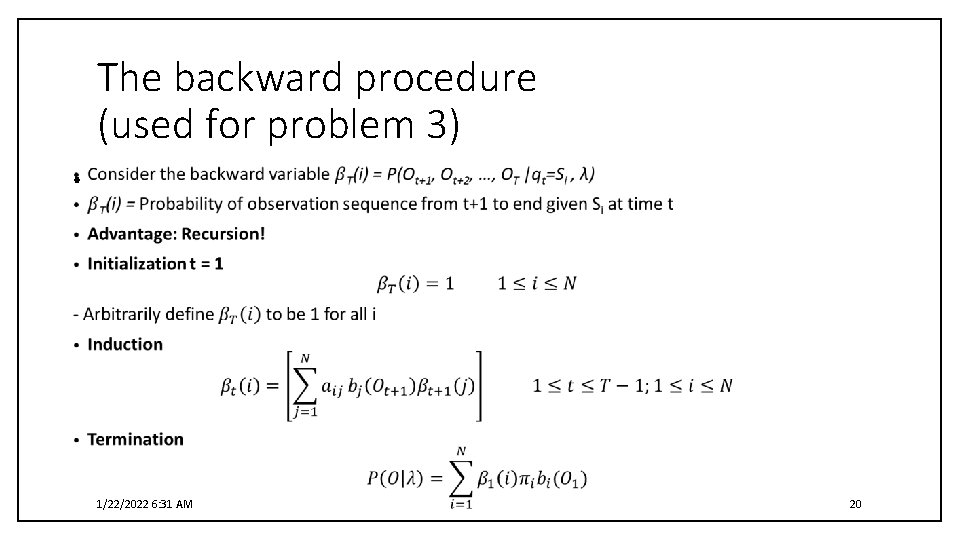

The backward procedure (used for problem 3)

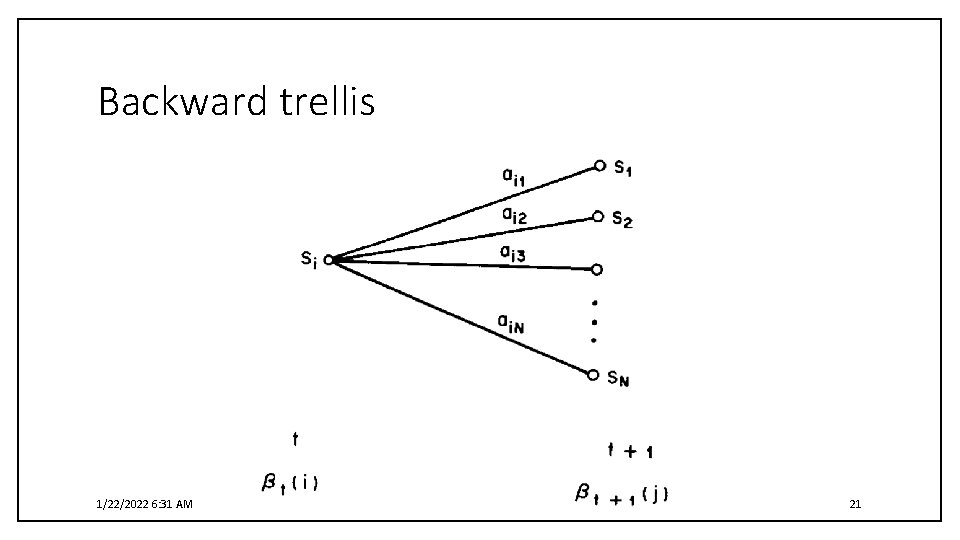

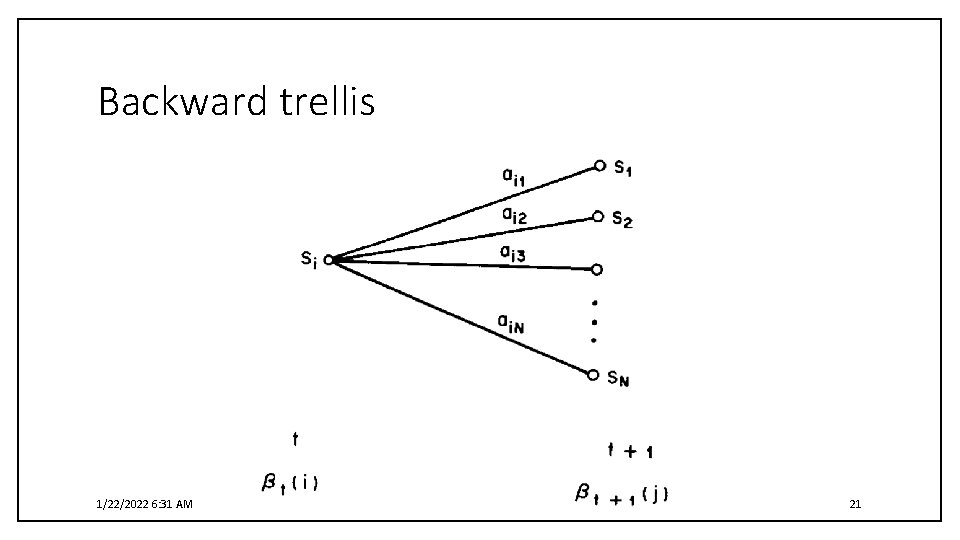
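The backward procedure mirrors the forward one but runs from the end of the sequence. The sketch below assumes the standard recursion from Rabiner's tutorial, 0-based indices, and a hypothetical two-state model; combining $\beta_1$ with π and B recovers the same P(O | λ) that the forward procedure gives.

```python
def backward(A, B, obs):
    """Return beta, where beta[t][i] = P(O_{t+1} ... O_T | q_t = S_i)."""
    N = len(A)
    T = len(obs)
    # Initialization: beta_T(i) = 1
    beta = [[1.0] * N for _ in range(T)]
    # Induction, backwards in time:
    # beta_t(i) = sum_j a_ij * b_j(O_{t+1}) * beta_{t+1}(j)
    for t in range(T - 2, -1, -1):
        for i in range(N):
            beta[t][i] = sum(
                A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] for j in range(N)
            )
    return beta


# Hypothetical two-state, two-symbol model.
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
obs = [0, 1, 0]
beta = backward(A, B, obs)
# P(O | lambda) = sum_i pi_i * b_i(O_1) * beta_1(i)
likelihood = sum(pi[i] * B[i][obs[0]] * beta[0][i] for i in range(len(pi)))
print(likelihood)
```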

Backward trellis

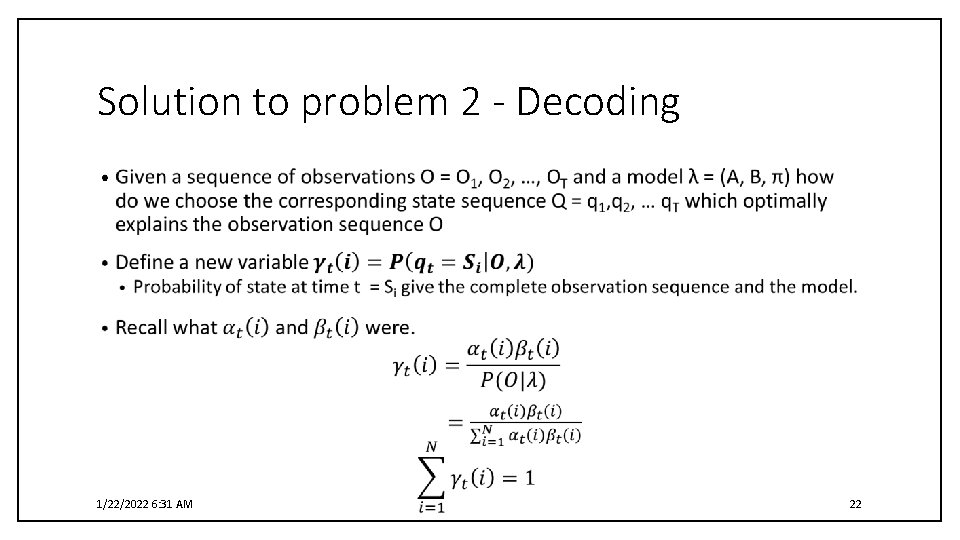

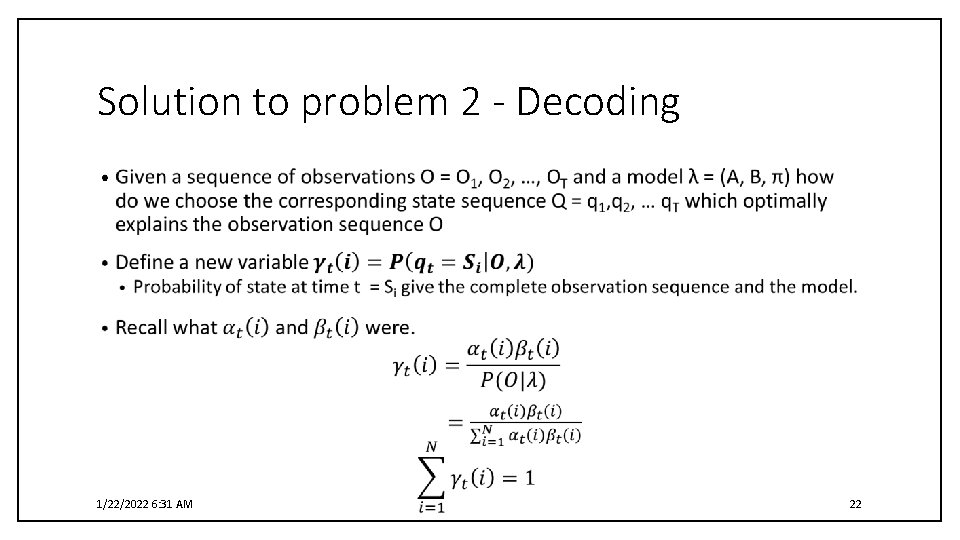

Solution to problem 2 - Decoding

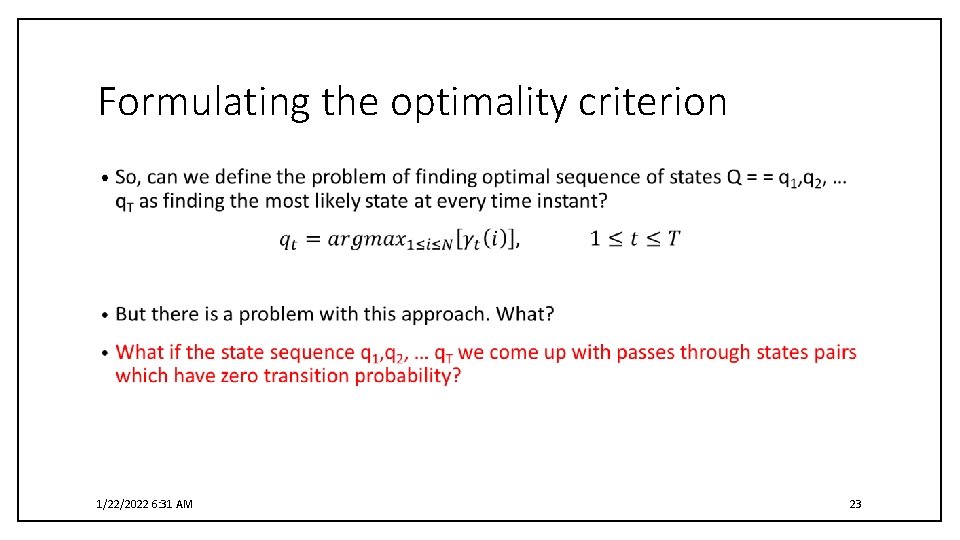

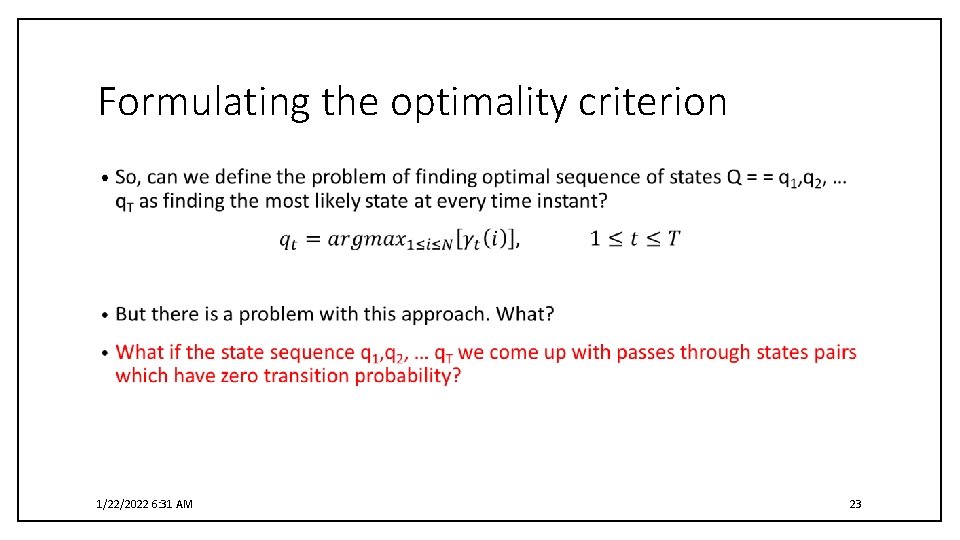

Formulating the optimality criterion

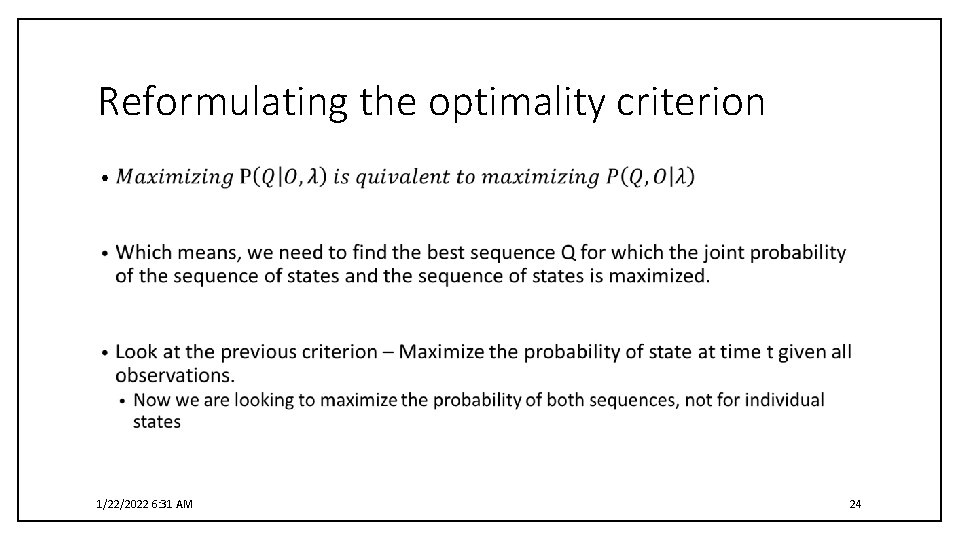

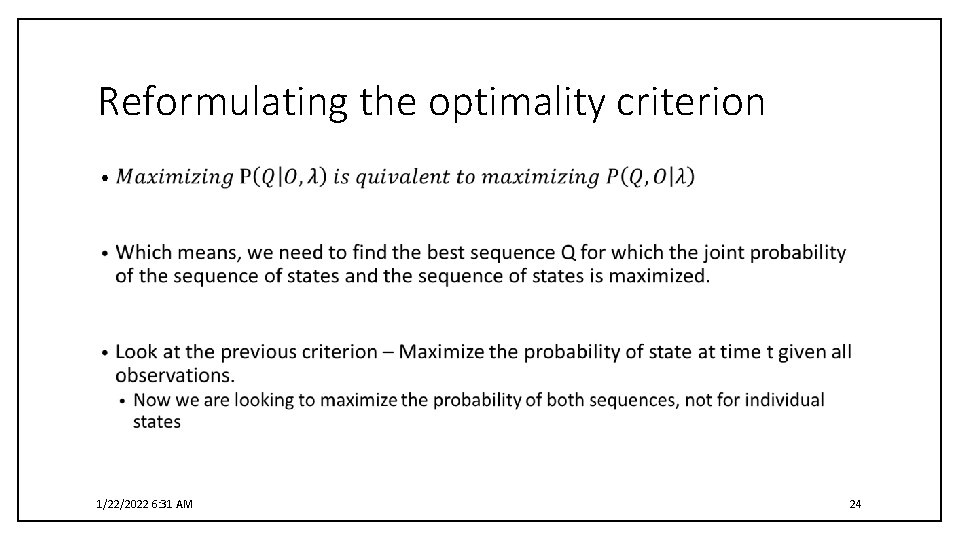

Reformulating the optimality criterion

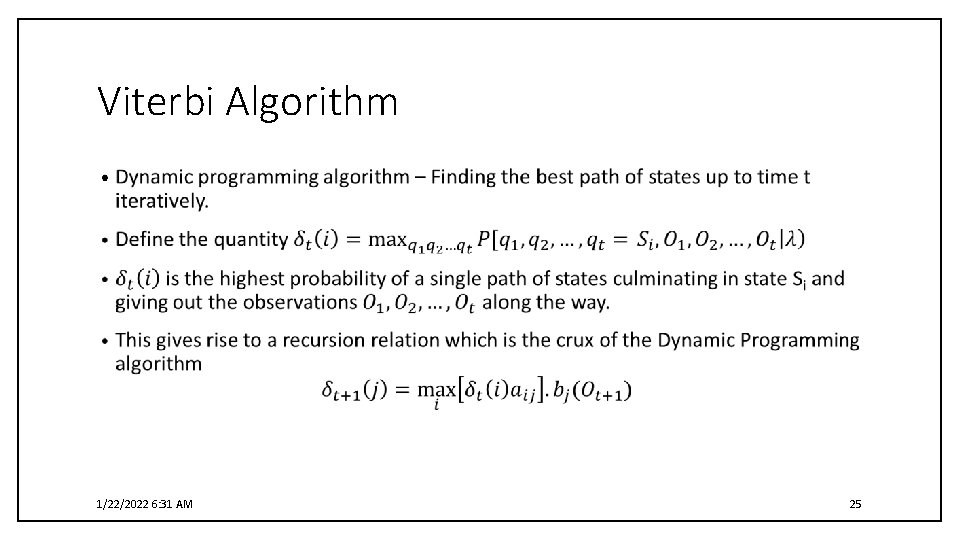

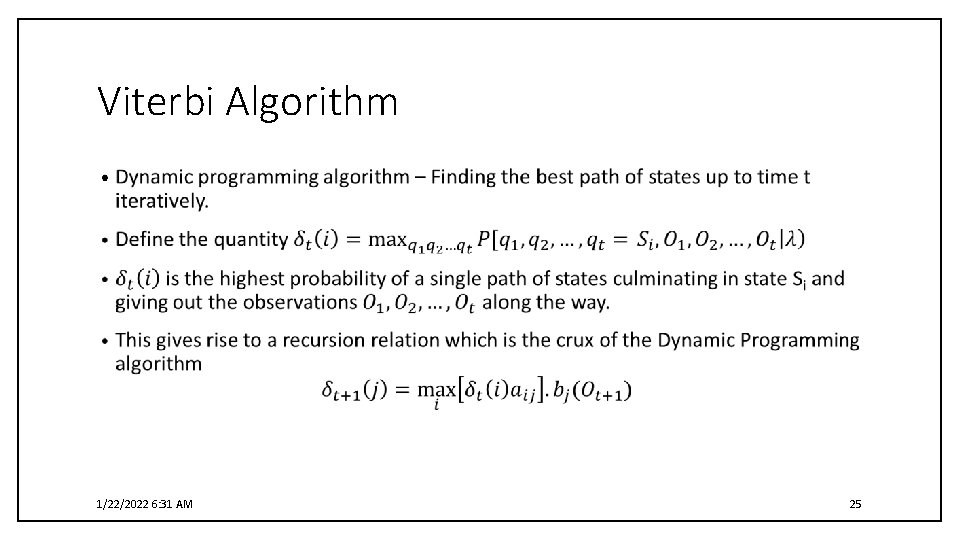

Viterbi Algorithm

Viterbi Algorithm (continued)

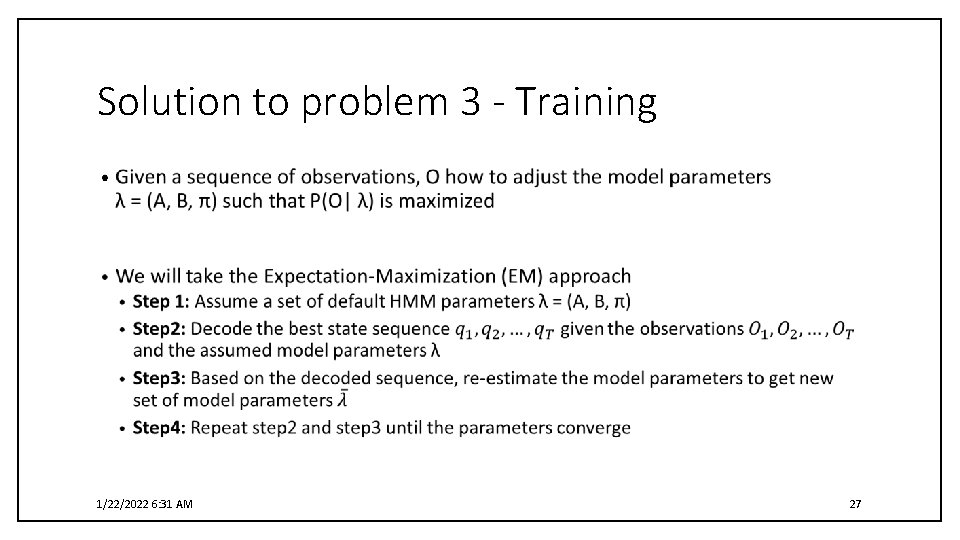
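A minimal sketch of the Viterbi algorithm for a discrete-observation HMM is given below. It assumes the standard formulation (best path score `delta` plus back-pointers `psi`), 0-based indices, and a hypothetical two-state model; real recognizers typically use log probabilities instead of raw products.

```python
def viterbi(pi, A, B, obs):
    """Return (best state path, probability of that path)."""
    N = len(pi)
    T = len(obs)
    # delta[t][j]: highest probability of any path ending in state j at time t
    delta = [[pi[i] * B[i][obs[0]] for i in range(N)]]
    # psi[t][j]: best predecessor of state j at time t
    psi = [[0] * N]
    for t in range(1, T):
        d_row, p_row = [], []
        for j in range(N):
            best_i = max(range(N), key=lambda i: delta[t - 1][i] * A[i][j])
            d_row.append(delta[t - 1][best_i] * A[best_i][j] * B[j][obs[t]])
            p_row.append(best_i)
        delta.append(d_row)
        psi.append(p_row)
    # Termination: pick the best final state, then backtrack.
    last = max(range(N), key=lambda i: delta[-1][i])
    path = [last]
    for t in range(T - 1, 0, -1):
        path.append(psi[t][path[-1]])
    path.reverse()
    return path, delta[-1][last]


# Hypothetical two-state, two-symbol model.
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
path, prob = viterbi(pi, A, B, [0, 1, 0])
print(path, prob)
```

The only structural difference from the forward procedure is that the sum over predecessor states is replaced by a maximum, with the argmax stored for backtracking.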

Solution to problem 3 - Training

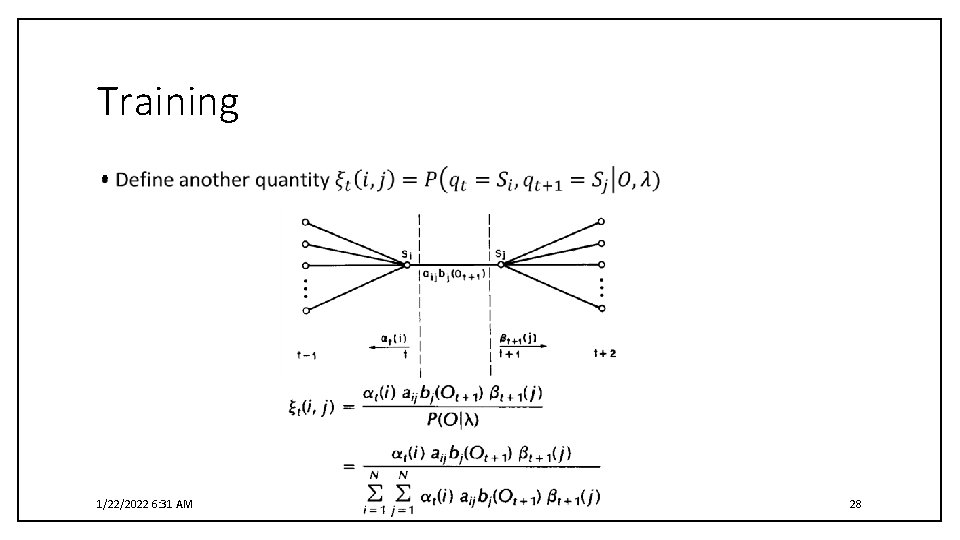

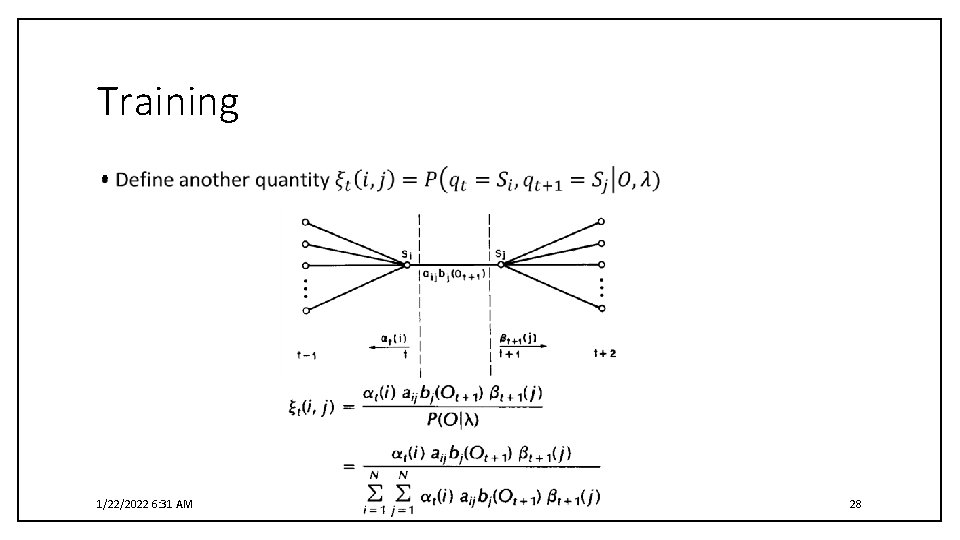

Training

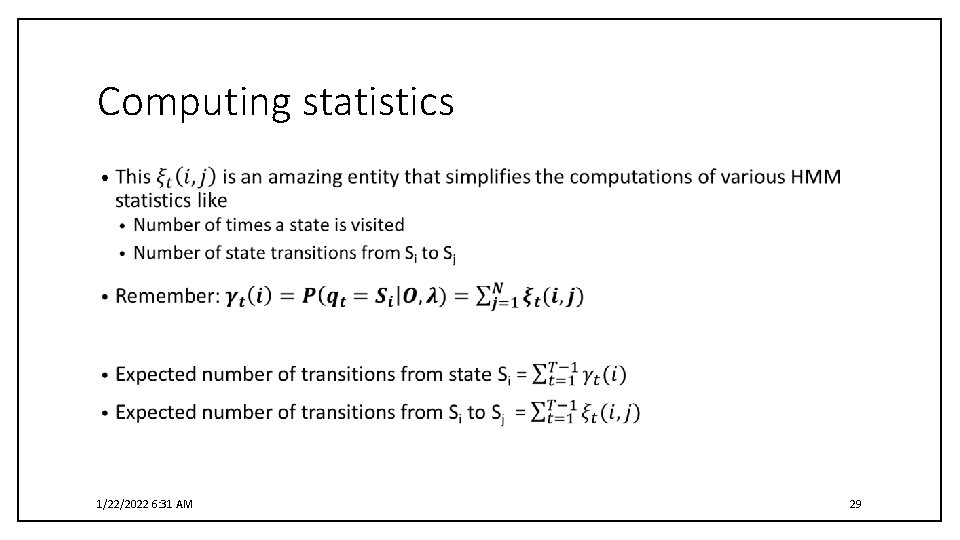

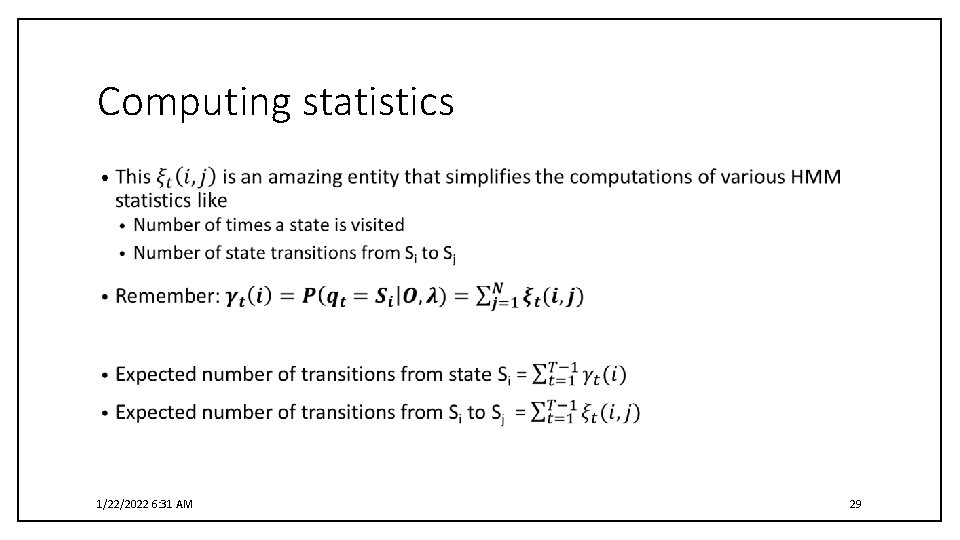

Computing statistics

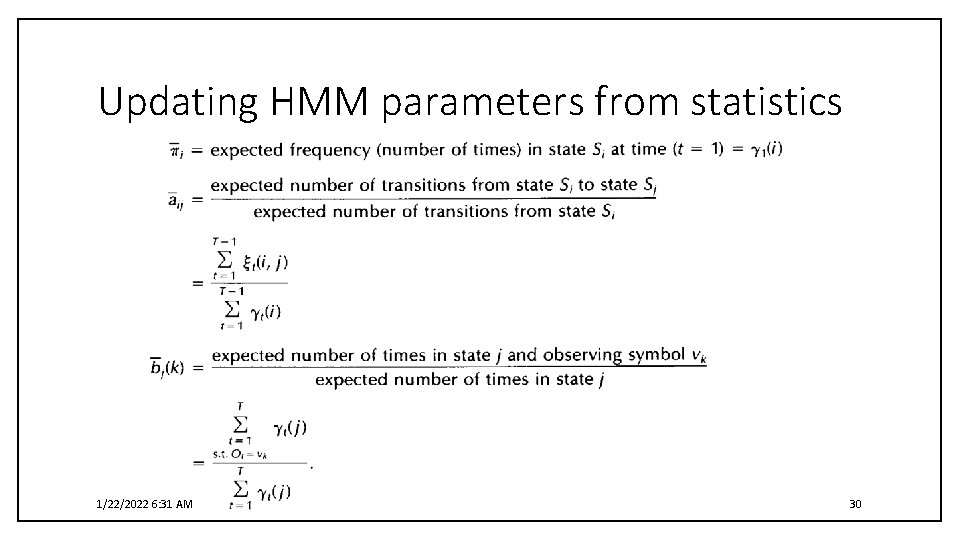

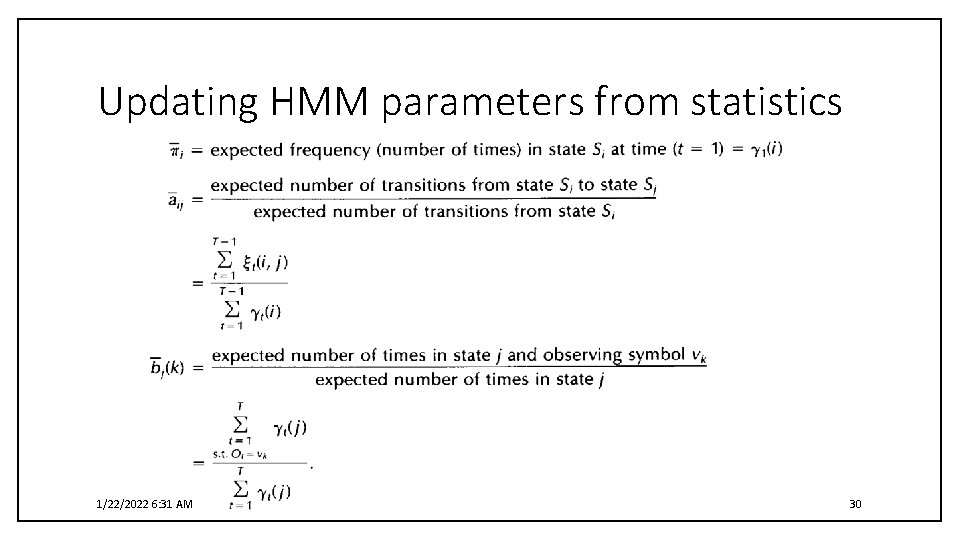

Updating HMM parameters from statistics

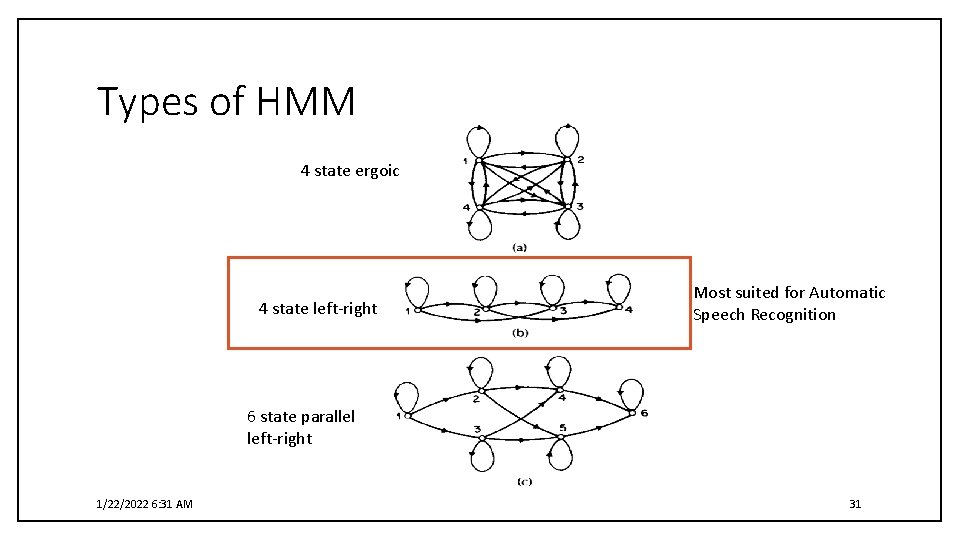

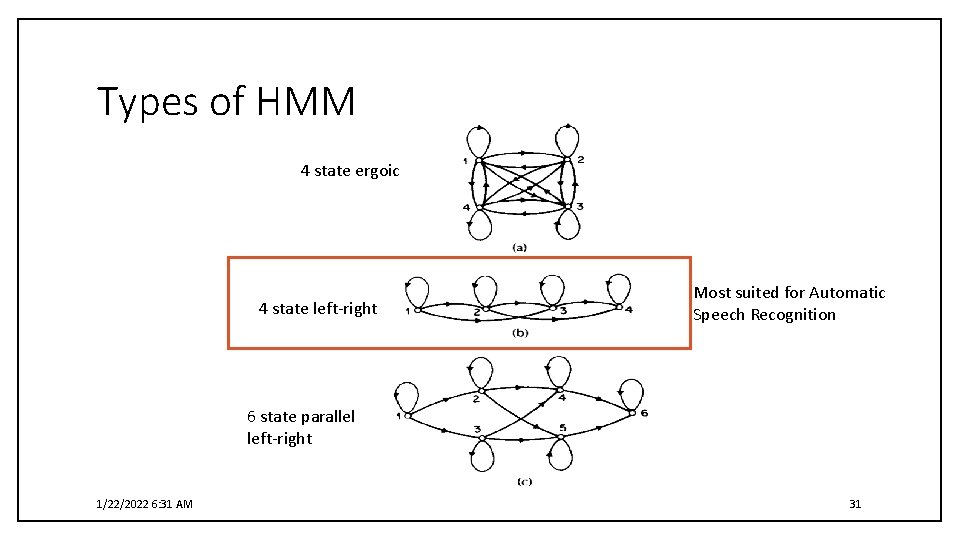
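The statistics and update formulas are shown only in the figures. As a sketch, assuming the standard Baum-Welch re-estimation for a discrete-observation HMM as given in Rabiner's tutorial, with $\alpha_t$ and $\beta_t$ the forward and backward variables (the symbols $\gamma$, $\xi$ and the barred parameters follow that convention):

$$
\gamma_t(i) = \frac{\alpha_t(i)\,\beta_t(i)}{P(O \mid \lambda)}, \qquad
\xi_t(i,j) = \frac{\alpha_t(i)\, a_{ij}\, b_j(O_{t+1})\, \beta_{t+1}(j)}{P(O \mid \lambda)}
$$

$$
\bar{\pi}_i = \gamma_1(i), \qquad
\bar{a}_{ij} = \frac{\sum_{t=1}^{T-1} \xi_t(i,j)}{\sum_{t=1}^{T-1} \gamma_t(i)}, \qquad
\bar{b}_j(k) = \frac{\sum_{t:\, O_t = v_k} \gamma_t(j)}{\sum_{t=1}^{T} \gamma_t(j)}
$$

Here $\gamma_t(i)$ is the probability of being in state $S_i$ at time t and $\xi_t(i,j)$ is the probability of the transition from $S_i$ to $S_j$ at time t, both given the observations. Iterating the updates does not decrease $P(O \mid \lambda)$.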

Types of HMM

- 4-state ergodic
- 4-state left-right (most suited for Automatic Speech Recognition)
- 6-state parallel left-right

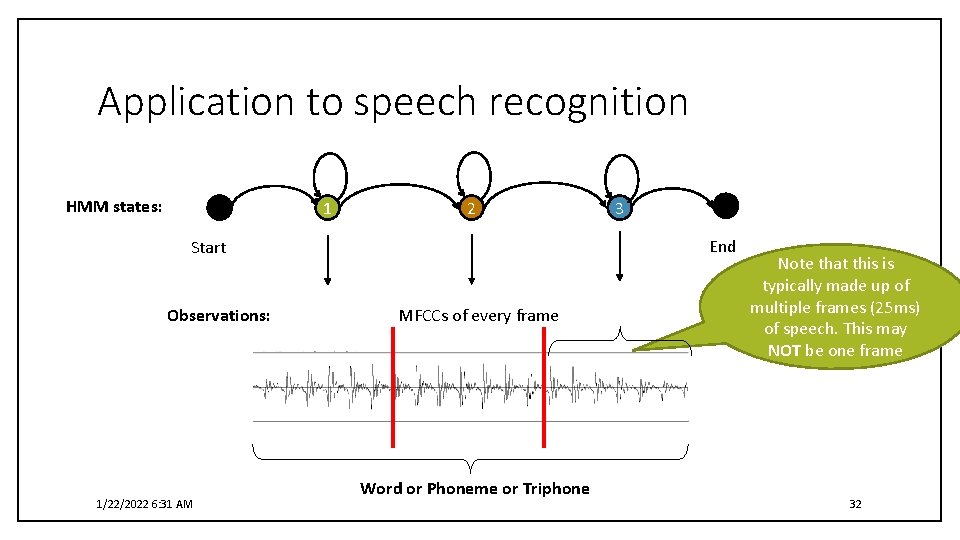

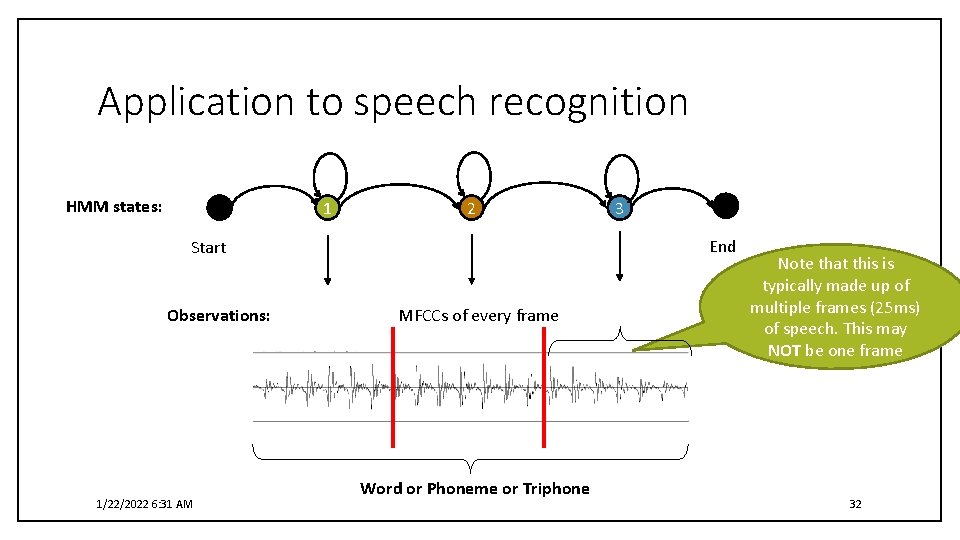

Application to speech recognition

- HMM states: Start → 1 → 2 → 3 → End, modelling a word, phoneme, or triphone
- Observations: MFCCs of every frame
- Note that the speech aligned to a state is typically made up of multiple frames (25 ms); it may NOT be a single frame.

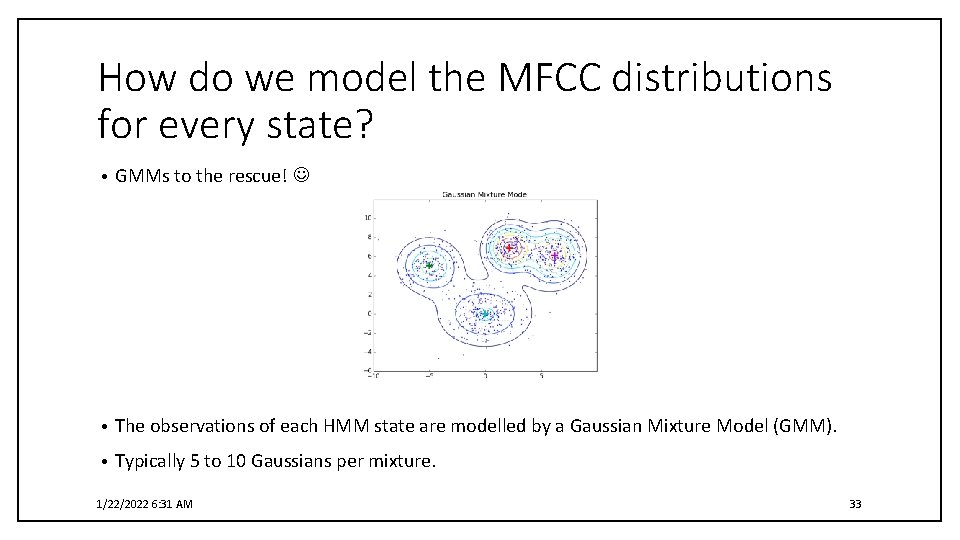

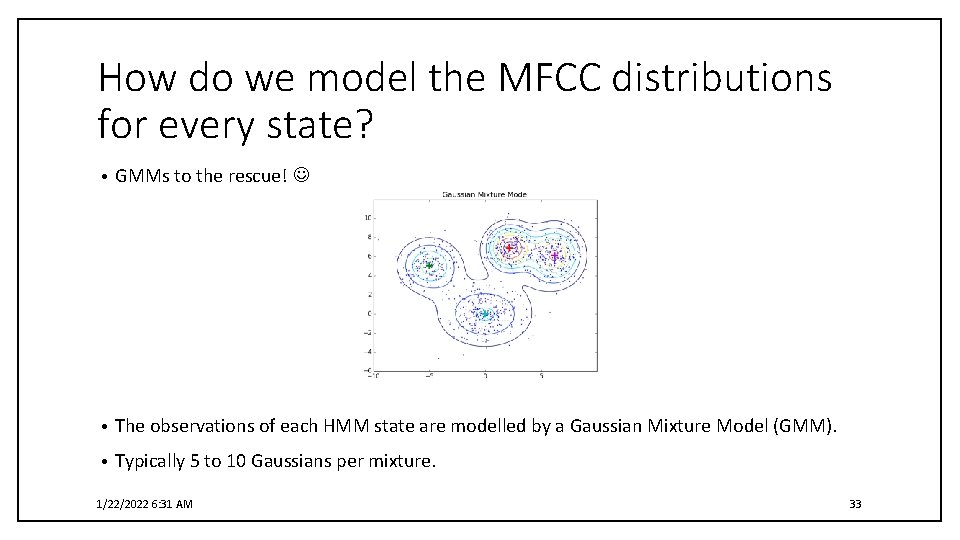

How do we model the MFCC distributions for every state?

- GMMs to the rescue!
- The observations of each HMM state are modelled by a Gaussian Mixture Model (GMM).
- Typically 5 to 10 Gaussians per mixture.

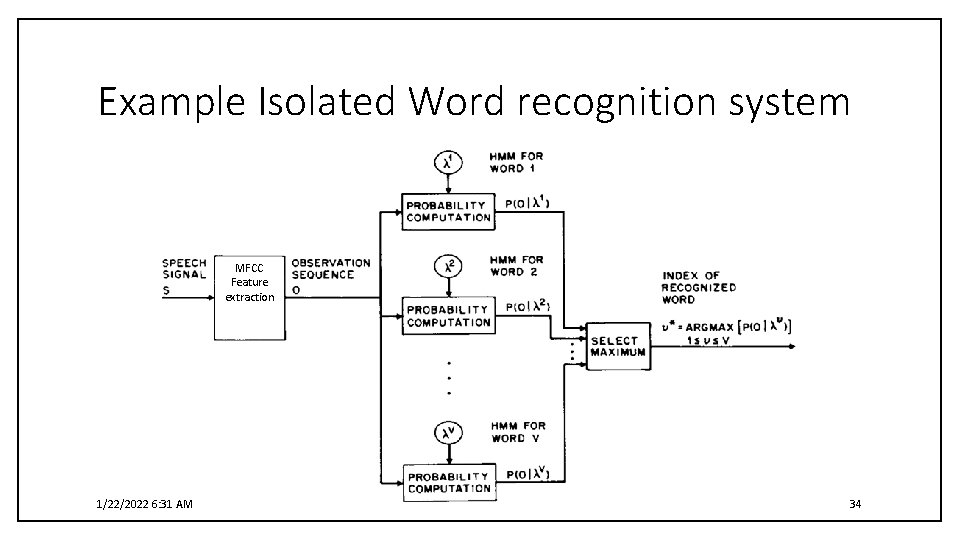

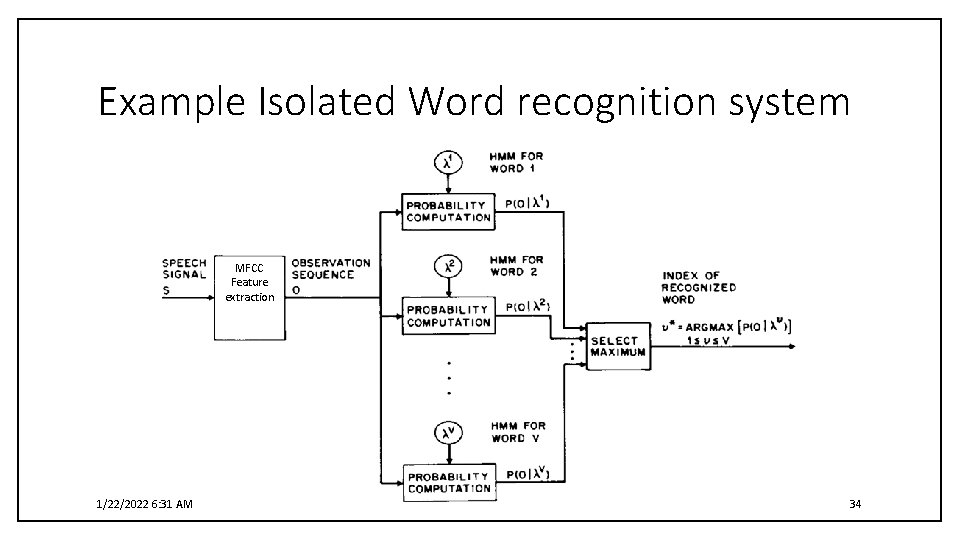
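The observation likelihood of one HMM state under a GMM can be sketched as follows. The sketch assumes diagonal covariance matrices (a common choice for MFCC features, but an assumption here, since the slide does not specify the covariance type); the function name `gmm_log_likelihood` and the example values are hypothetical.

```python
import math


def gmm_log_likelihood(x, weights, means, variances):
    """log b_j(x) for one state's diagonal-covariance GMM.

    x: feature vector (e.g. one MFCC frame)
    weights: mixture weights, summing to 1
    means, variances: one vector per mixture component
    """
    log_terms = []
    for w, mu, var in zip(weights, means, variances):
        # Log density of a diagonal Gaussian: sum over dimensions.
        log_gauss = sum(
            -0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
            for xi, m, v in zip(x, mu, var)
        )
        log_terms.append(math.log(w) + log_gauss)
    # Log-sum-exp over components for numerical stability.
    peak = max(log_terms)
    return peak + math.log(sum(math.exp(t - peak) for t in log_terms))


# Hypothetical 2-component GMM over 2-dimensional features.
weights = [0.5, 0.5]
means = [[0.0, 0.0], [3.0, 3.0]]
variances = [[1.0, 1.0], [1.0, 1.0]]
score = gmm_log_likelihood([0.1, -0.2], weights, means, variances)
print(score)
```

In the forward, backward and Viterbi recursions, this value plays the role of $\log b_j(O_t)$ for a continuous observation.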

Example Isolated Word recognition system

- MFCC feature extraction

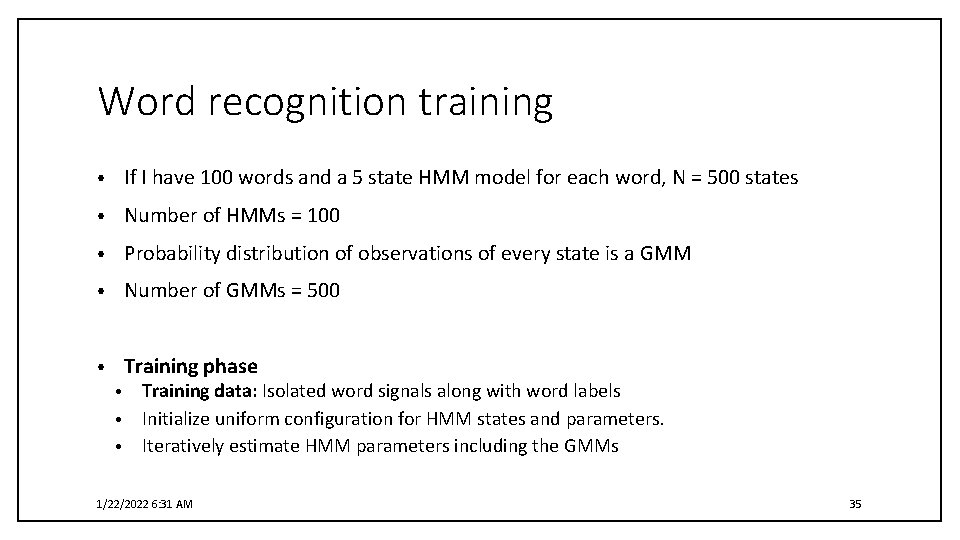

Word recognition training

- If I have 100 words and a 5-state HMM for each word, N = 500 states
- Number of HMMs = 100
- The probability distribution of the observations of every state is a GMM
- Number of GMMs = 500
- Training phase
  - Training data: isolated word signals along with word labels
  - Initialize a uniform configuration for the HMM states and parameters.
  - Iteratively estimate the HMM parameters, including the GMMs

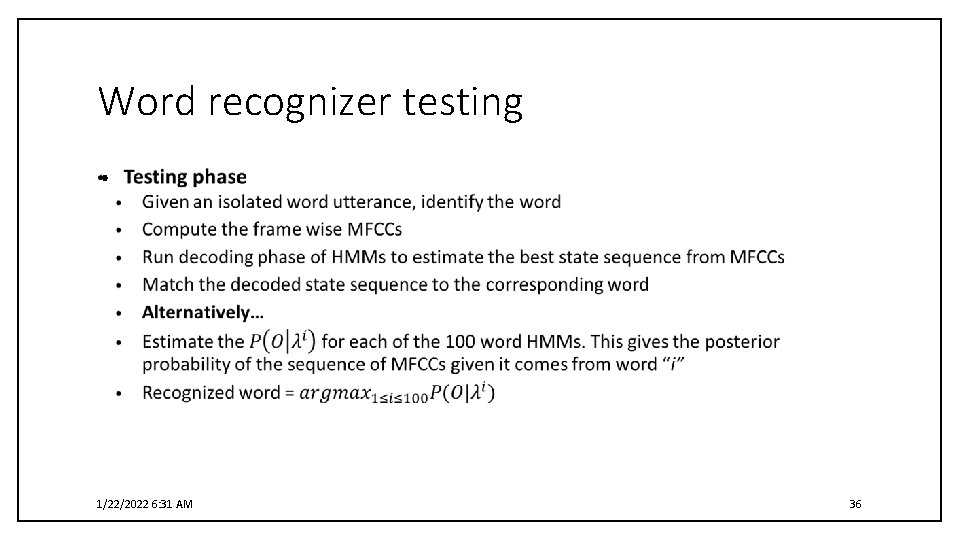

Word recognizer testing
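The content of this slide did not survive as text. A common way to test an isolated word recognizer, sketched here as an assumption rather than as the slide's exact method, is to score the test utterance against every word HMM and pick the word with the highest likelihood. For brevity the sketch uses discrete observation symbols instead of GMMs over MFCCs, and the word models "yes" and "no" are hypothetical.

```python
def likelihood(model, obs):
    """P(O | lambda) via the forward procedure for a discrete HMM."""
    pi, A, B = model
    N = len(pi)
    alpha = [pi[i] * B[i][obs[0]] for i in range(N)]
    for o in obs[1:]:
        alpha = [
            sum(alpha[i] * A[i][j] for i in range(N)) * B[j][o]
            for j in range(N)
        ]
    return sum(alpha)


def recognize(word_models, obs):
    """Return the word whose HMM gives the observations the highest likelihood."""
    return max(word_models, key=lambda w: likelihood(word_models[w], obs))


# Hypothetical 2-state left-right word models: (pi, A, B).
word_models = {
    "yes": ([1.0, 0.0], [[0.5, 0.5], [0.0, 1.0]], [[0.9, 0.1], [0.1, 0.9]]),
    "no": ([1.0, 0.0], [[0.5, 0.5], [0.0, 1.0]], [[0.1, 0.9], [0.9, 0.1]]),
}
print(recognize(word_models, [0, 0, 1, 1]))
print(recognize(word_models, [1, 1, 0, 0]))
```

With 100 word models, the same loop would run the forward procedure 100 times per test utterance.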

Next Lecture

- How does a continuous speech recognition system work?
- Drawbacks and limitations of HMMs and their GMM acoustic models
- Deep Neural Network as the knight in shining armor for ASR, replacing GMMs
- Recurrent neural networks for ASR
  - Long Short-Term Memory (LSTM) networks
  - Bidirectional LSTM networks
- Tools for developing ASR
  - Kaldi
  - Theano/TensorFlow

References

- L. R. Rabiner, "A tutorial on hidden Markov models and selected applications in speech recognition," Proceedings of the IEEE, vol. 77, no. 2, pp. 257-286, Feb. 1989.
- http://hmmlearn.readthedocs.io/en/latest/index.html