Heap Data Management for Limited Local Memory (LLM) Multicore Processors


- Slides: 22

Heap Data Management for Limited Local Memory (LLM) Multicore Processors

Ke Bai, Aviral Shrivastava

Compiler Micro-architecture Lab (MCL)

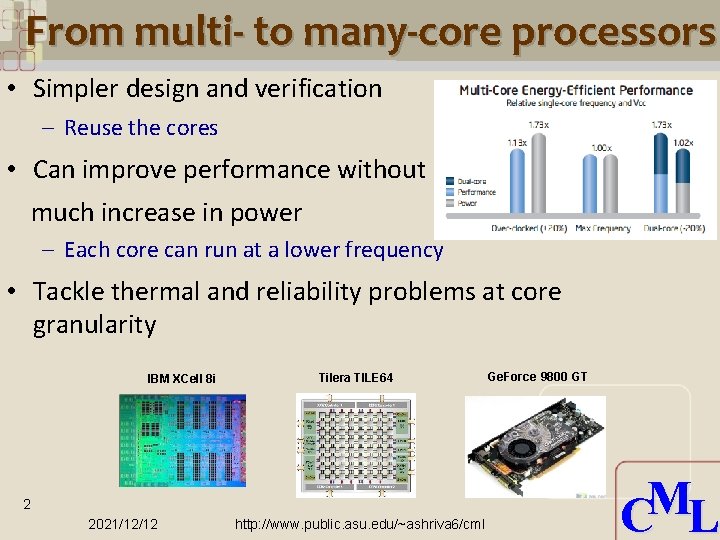

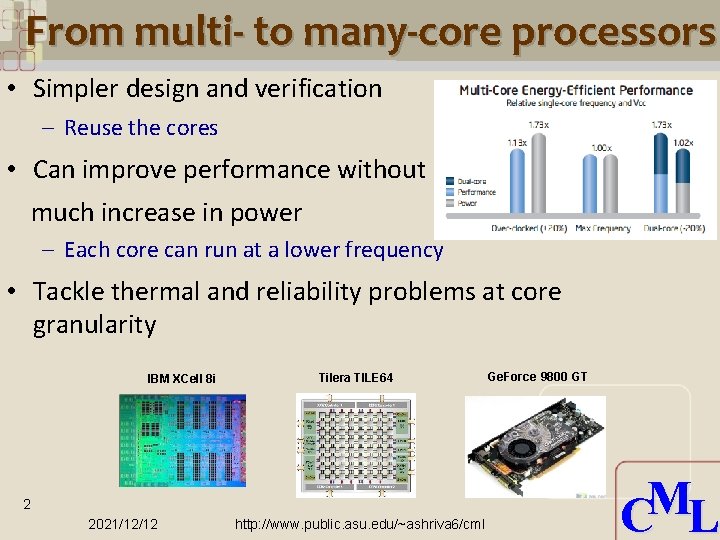

From multi- to many-core processors

- Simpler design and verification
  - Reuse the cores
- Can improve performance without much increase in power
  - Each core can run at a lower frequency
- Tackle thermal and reliability problems at core granularity

Examples pictured: IBM XCell 8i, Tilera TILE64, NVIDIA GeForce 9800 GT

2021/12/12, http://www.public.asu.edu/~ashriva6/cml

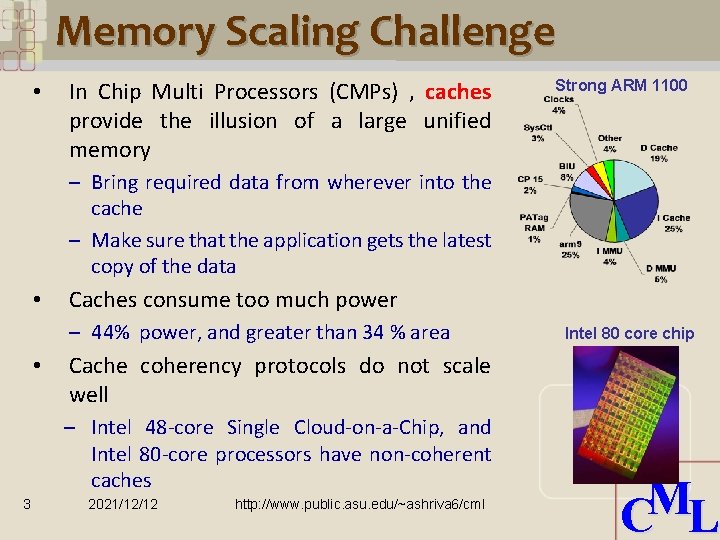

Memory Scaling Challenge

- In chip multiprocessors (CMPs), caches provide the illusion of a large unified memory
  - Bring the required data from wherever it resides into the cache
  - Make sure that the application gets the latest copy of the data
- Caches consume too much power
  - 44% of power and more than 34% of area (StrongARM 1100)
- Cache coherency protocols do not scale well
  - The Intel 48-core Single-chip Cloud Computer and the Intel 80-core processor have non-coherent caches

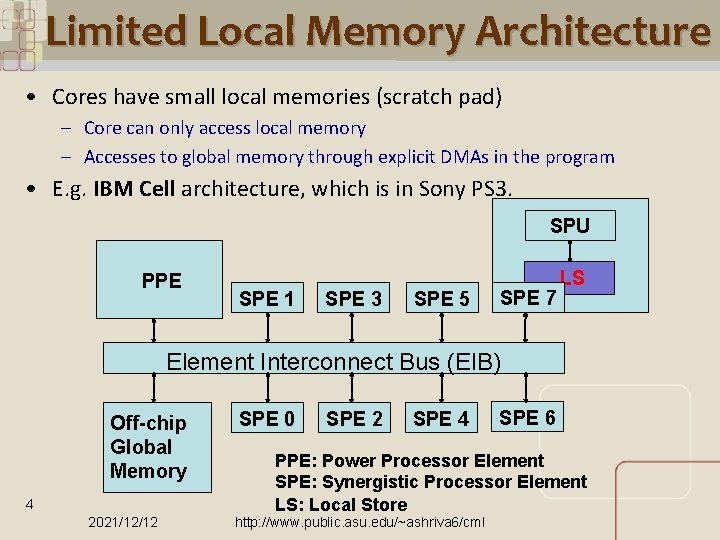

Limited Local Memory Architecture

- Cores have small local memories (scratchpads)
  - A core can only access its own local memory
  - Accesses to global memory happen through explicit DMAs in the program
- E.g., the IBM Cell architecture, used in the Sony PlayStation 3

[Figure: IBM Cell architecture. The PPE and eight SPEs (SPE 0 to SPE 7), each SPE pairing an SPU with its local store (LS), are connected by the Element Interconnect Bus (EIB) to off-chip global memory. PPE: Power Processor Element; SPE: Synergistic Processor Element; LS: Local Store]
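To make the "explicit DMA" model concrete, here is a minimal C sketch under stated assumptions: global memory and the local store are both simulated as ordinary arrays, and `dma_get()` is a hypothetical stand-in (a plain `memcpy`) for a real DMA command. It illustrates that the core computes only on data in its local buffer, so data larger than local memory must be streamed in chunk by chunk. The sizes are illustrative only.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical sizes for illustration (a real SPE local store is 256 KB). */
#define GLOBAL_WORDS 1000
#define LOCAL_WORDS  64

/* Simulated off-chip global memory: the core never computes on it directly. */
static int global_memory[GLOBAL_WORDS];

/* Simulated buffer in the core's local memory. */
static int local_buf[LOCAL_WORDS];

/* Hypothetical stand-in for a DMA get (global -> local); on the Cell this
   role is played by MFC commands such as mfc_get(). */
static void dma_get(int *local, size_t global_index, size_t count)
{
    memcpy(local, &global_memory[global_index], count * sizeof(int));
}

/* Plays the role of the main core filling global memory; n <= GLOBAL_WORDS. */
void fill_global(size_t n)
{
    for (size_t i = 0; i < n; i++)
        global_memory[i] = (int)i;
}

/* Sums n ints that live in global memory (n <= GLOBAL_WORDS) by streaming
   them through the small local buffer, one explicit transfer per chunk. */
long sum_global_array(size_t n)
{
    long sum = 0;
    for (size_t base = 0; base < n; base += LOCAL_WORDS) {
        size_t count = (n - base < LOCAL_WORDS) ? n - base : LOCAL_WORDS;
        dma_get(local_buf, base, count);   /* explicit transfer */
        for (size_t i = 0; i < count; i++)
            sum += local_buf[i];           /* compute on local data only */
    }
    return sum;
}
```

For example, after `fill_global(1000)`, `sum_global_array(1000)` touches the data through 16 transfers of at most 64 ints each.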

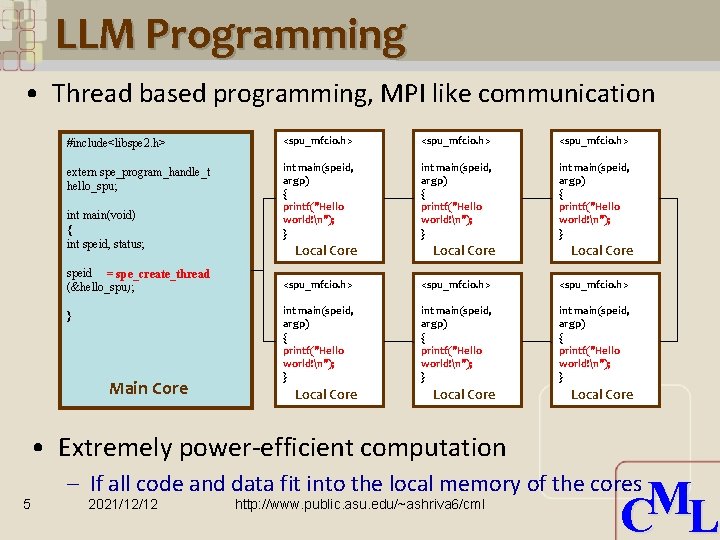

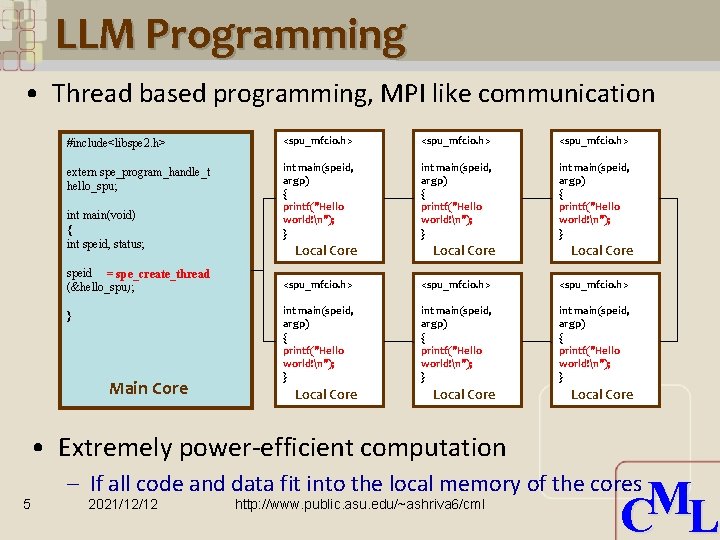

LLM Programming

- Thread-based programming, with MPI-like communication

Main core:

```c
#include <libspe.h>

/* SPU program image embedded into the main-core executable */
extern spe_program_handle_t hello_spu;

int main(void)
{
    speid_t speid;
    int status;

    /* libspe 1.x-style API: start hello_spu on any SPE, then wait for it */
    speid = spe_create_thread(0, &hello_spu, NULL, NULL, -1, 0);
    spe_wait(speid, &status, 0);
    return 0;
}
```

Local core:

```c
#include <stdio.h>
#include <spu_mfcio.h>

int main(unsigned long long speid, unsigned long long argp, unsigned long long envp)
{
    printf("Hello world!\n");
    return 0;
}
```

- Extremely power-efficient computation
  - If all code and data fit into the local memory of the cores

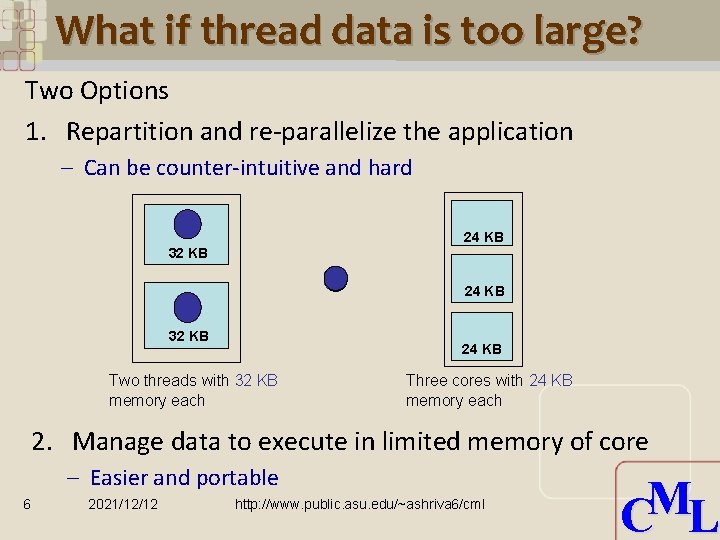

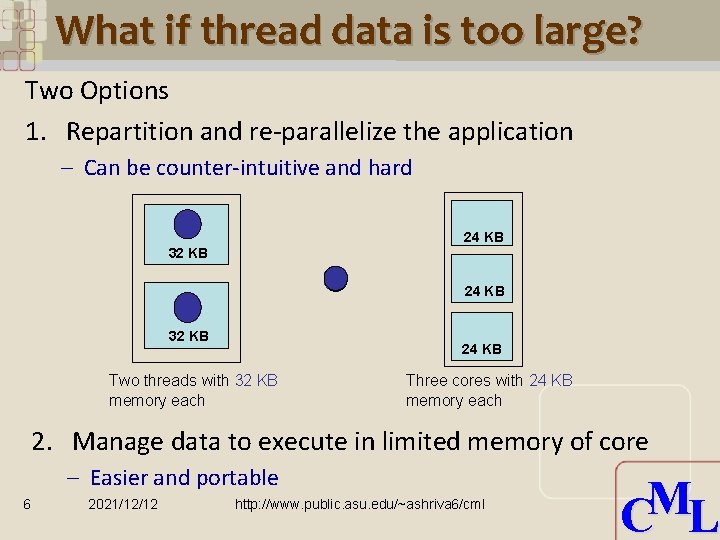

What if thread data is too large? Two options:

1. Repartition and re-parallelize the application
   - Can be counter-intuitive and hard
   - [Figure: "two threads with 32 KB memory each" vs. "three cores with 24 KB memory each"]
2. Manage data so that it executes in the limited memory of the core
   - Easier and portable

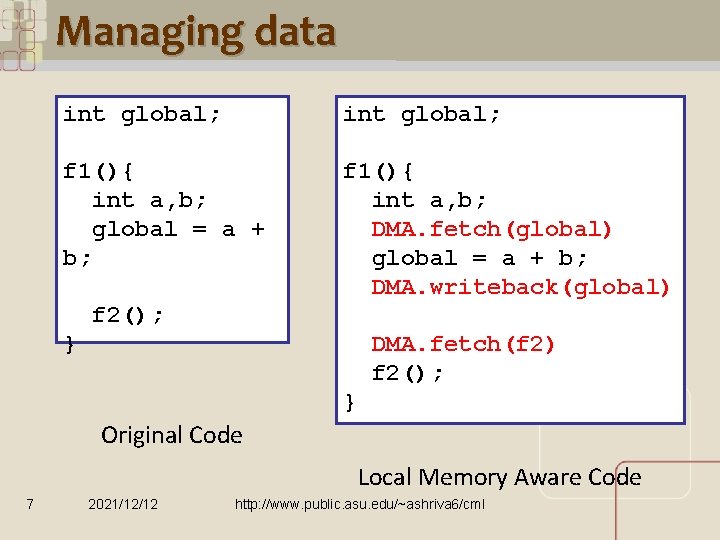

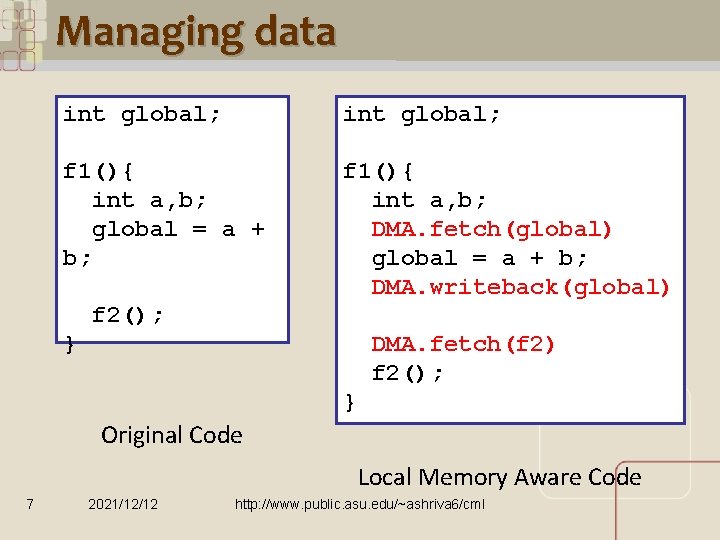

Managing data

Original code:

```
int global;

f1() {
    int a, b;
    global = a + b;
    f2();
}
```

Local memory aware code:

```
int global;

f1() {
    int a, b;
    DMA.fetch(global);
    global = a + b;
    DMA.writeback(global);
    DMA.fetch(f2);
    f2();
}
```
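The transformation above can be sketched in runnable C if global memory is simulated. This is an illustration, not the paper's code: `global_memory`, `GLOBAL_ADDR`, `dma_fetch()` and `dma_writeback()` are hypothetical names, the variable `global` lives at a fixed offset in simulated global memory, and `a` and `b` become parameters so the sketch has defined values. Fetching the code of `f2()` (`DMA.fetch(f2)`) is not modeled.

```c
#include <string.h>

/* Simulated global memory; `global` lives at a hypothetical fixed offset. */
static unsigned char global_memory[1024];
#define GLOBAL_ADDR 128

/* Hypothetical DMA helpers: copy between global memory and local storage. */
static void dma_fetch(void *local, size_t addr, size_t size)
{
    memcpy(local, &global_memory[addr], size);
}

static void dma_writeback(size_t addr, const void *local, size_t size)
{
    memcpy(&global_memory[addr], local, size);
}

/* Local-memory-aware f1(): work on a local copy of `global` between an
   explicit fetch and an explicit writeback. The fetch mirrors the slide; it
   is strictly required whenever f1() also reads `global`. */
void f1(int a, int b)
{
    int global_local;                                 /* copy in local memory */
    dma_fetch(&global_local, GLOBAL_ADDR, sizeof global_local);
    global_local = a + b;
    dma_writeback(GLOBAL_ADDR, &global_local, sizeof global_local);
}

/* Reads the current value of `global` straight from global memory. */
int read_global(void)
{
    int value;
    memcpy(&value, &global_memory[GLOBAL_ADDR], sizeof value);
    return value;
}
```

Without the writeback, the update to `global` would stay in local memory and be lost when that space is reused.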

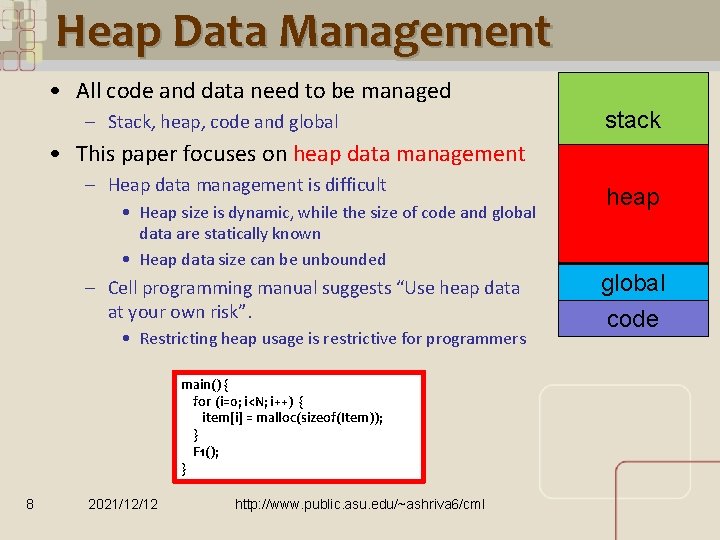

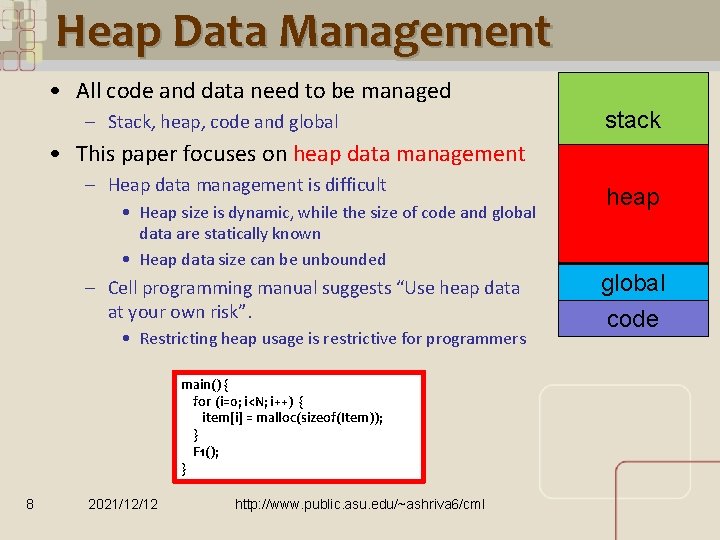

Heap Data Management

- All code and data need to be managed
  - Stack, heap, code, and global data
- This paper focuses on heap data management
- Heap data management is difficult
  - Heap size is dynamic, while the sizes of code and global data are statically known
  - Heap data size can be unbounded
  - The Cell programming manual suggests "Use heap data at your own risk"
- Restricting heap usage is restrictive for programmers

```c
main() {
    for (i = 0; i < N; i++) {
        item[i] = malloc(sizeof(Item));
    }
    F1();
}
```

[Figure: local memory layout with code, global, and heap regions]
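A minimal sketch of why heap data is hard to fit into a fixed local memory: a hypothetical bump allocator confined to a 256-byte local region (the region size and the 16-byte rounding, modeled on the Cell's preference for 16-byte-aligned DMA, are assumptions for illustration). The slide's loop succeeds only while N objects fit, and N is known only at run time.

```c
#include <stddef.h>

/* Hypothetical local heap budget, for illustration only. */
#define LOCAL_HEAP_BYTES 256

typedef struct { int id; float score; } Item;

/* Heap region inside local memory, aligned to 16 bytes (an assumption). */
static _Alignas(16) unsigned char local_heap[LOCAL_HEAP_BYTES];
static size_t heap_top = 0;

/* Bump allocator confined to local memory: NULL once the region is full. */
void *local_malloc(size_t size)
{
    size_t rounded = (size + 15) & ~(size_t)15;
    if (rounded > LOCAL_HEAP_BYTES - heap_top)
        return NULL;
    void *p = &local_heap[heap_top];
    heap_top += rounded;
    return p;
}

/* The slide's allocation loop; returns how many of the n items fit. */
int allocate_items(Item **item, int n)
{
    for (int i = 0; i < n; i++) {
        item[i] = local_malloc(sizeof(Item));
        if (item[i] == NULL)
            return i;   /* heap exhausted: the limit depends on run-time N */
    }
    return n;
}
```

With each `Item` rounded up to 16 bytes, only 16 items fit, so `allocate_items(items, 100)` stops at 16.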

Outline of the talk

- Motivation
- Related work on heap data management
- Our approach to heap data management
- Experiments

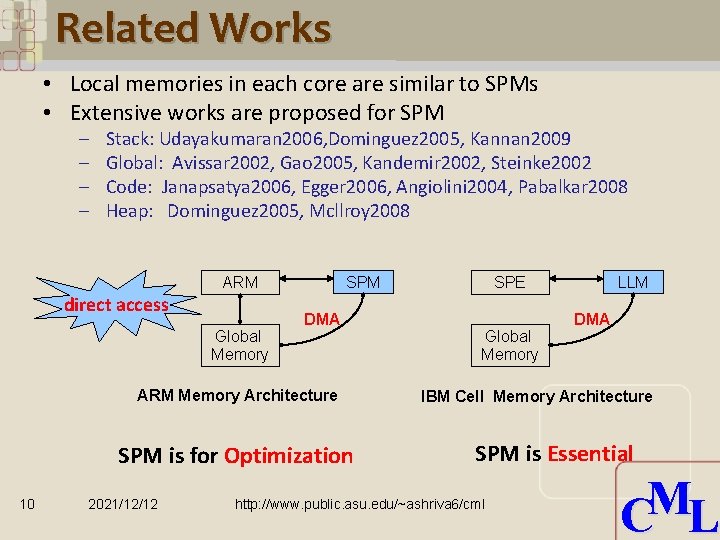

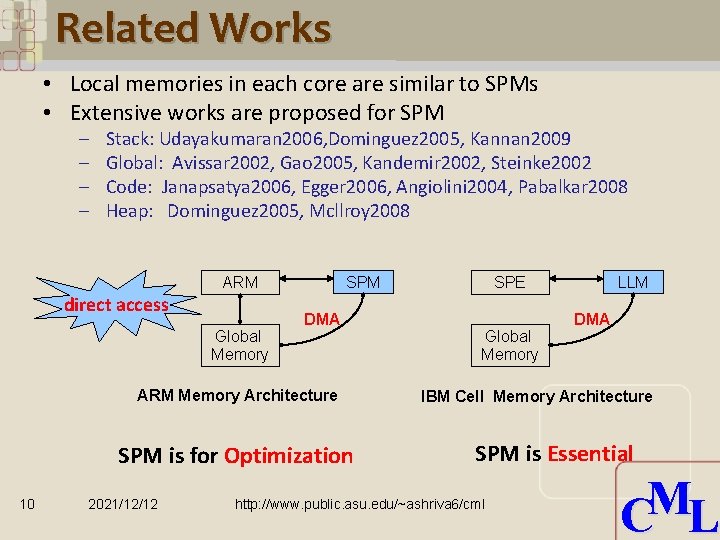

Related Work

- Local memories in each core are similar to scratchpad memories (SPMs)
- Extensive work has been proposed for SPM management
  - Stack: Udayakumaran 2006, Dominguez 2005, Kannan 2009
  - Global: Avissar 2002, Gao 2005, Kandemir 2002, Steinke 2002
  - Code: Janapsatya 2006, Egger 2006, Angiolini 2004, Pabalkar 2008
  - Heap: Dominguez 2005, McIlroy 2008

[Figure: in the ARM memory architecture, the core can access global memory directly and the SPM is filled by DMA, so the SPM is an optimization; in the IBM Cell memory architecture, an SPE reaches global memory only by DMA into its local memory (LLM), so the local memory is essential]

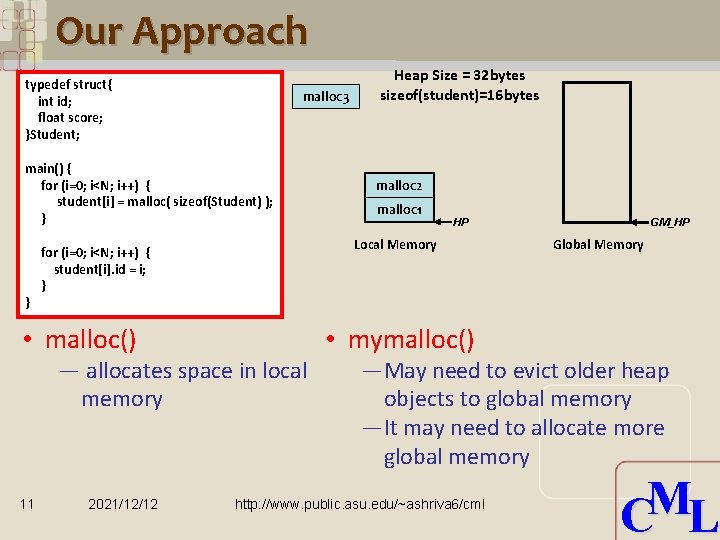

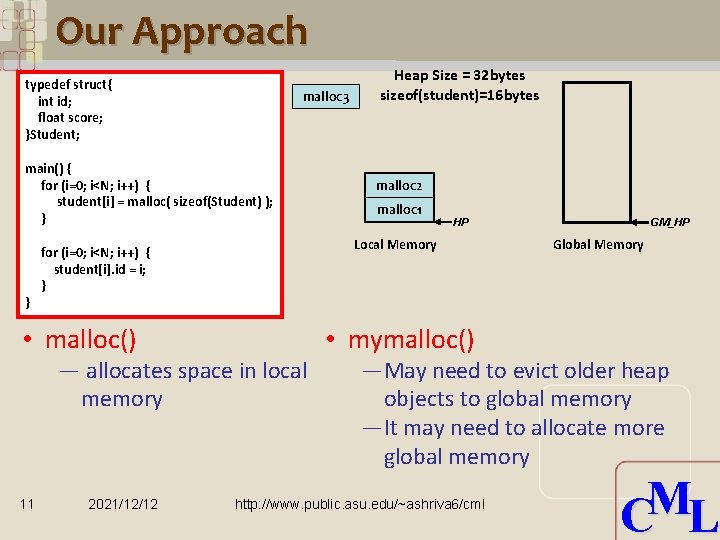

Our Approach

```c
typedef struct {
    int id;
    float score;
} Student;

main() {
    for (i = 0; i < N; i++) {
        student[i] = malloc(sizeof(Student));
    }
    for (i = 0; i < N; i++) {
        student[i]->id = i;
    }
}
```

- malloc() allocates space in local memory
- mymalloc()
  - May need to evict older heap objects to global memory
  - May need to allocate more global memory

[Figure: example with heap size = 32 bytes and sizeof(Student) = 16 bytes; malloc1 to malloc3 fill the local heap (heap pointer HP), and older objects are evicted to global memory (global heap pointer GM_HP)]
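The eviction behavior of `mymalloc()` can be sketched as follows. This is a hypothetical simplification, not the paper's implementation: objects have a fixed size of 16 bytes, the local heap holds two of them (the slide's 32-byte example), global memory is a simulated array whose next free address plays the role of GM_HP, and the oldest resident object is evicted first (FIFO, an assumed policy). Like the paper's malloc(), it returns a global address.

```c
#include <stddef.h>
#include <string.h>

/* Sizes from the slide's example: a 32-byte local heap and 16-byte objects. */
#define OBJ_SIZE     16
#define LOCAL_SLOTS  2              /* 32-byte local heap / 16-byte objects */
#define GLOBAL_BYTES 4096           /* hypothetical global heap size */

typedef struct { int id; float score; } Student;
_Static_assert(sizeof(Student) <= OBJ_SIZE, "Student must fit in one slot");

static unsigned char local_heap[LOCAL_SLOTS][OBJ_SIZE];
static unsigned char global_heap[GLOBAL_BYTES];
static size_t gm_hp = 0;                        /* next free global address */
static long slot_owner[LOCAL_SLOTS] = {-1, -1}; /* global address in each slot */
static int next_victim = 0;                     /* FIFO eviction pointer */
static int evictions = 0;

/* Hypothetical mymalloc(): reserves OBJ_SIZE bytes of global heap and places
   the new object in local memory, first evicting the oldest resident object
   to its global address if the local heap is full. Returns the global
   address, or -1 when the global heap is exhausted (the point where more
   global memory would have to be requested). */
long mymalloc(void)
{
    if (gm_hp + OBJ_SIZE > GLOBAL_BYTES)
        return -1;
    long gaddr = (long)gm_hp;
    gm_hp += OBJ_SIZE;

    int slot = next_victim;
    next_victim = (next_victim + 1) % LOCAL_SLOTS;
    if (slot_owner[slot] >= 0) {                /* evict (a DMA put on Cell) */
        memcpy(&global_heap[slot_owner[slot]], local_heap[slot], OBJ_SIZE);
        evictions++;
    }
    memset(local_heap[slot], 0, OBJ_SIZE);
    slot_owner[slot] = gaddr;
    return gaddr;
}

int eviction_count(void) { return evictions; }

/* Returns 1 if the object at global address gaddr is resident locally. */
int is_resident(long gaddr)
{
    for (int s = 0; s < LOCAL_SLOTS; s++)
        if (slot_owner[s] == gaddr)
            return 1;
    return 0;
}
```

Calling `mymalloc()` three times mirrors malloc1 to malloc3 in the figure: the third call evicts the first object to global memory.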

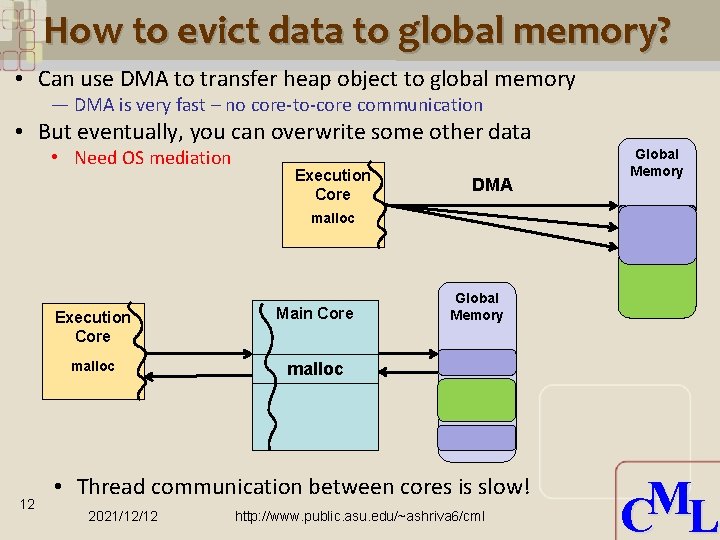

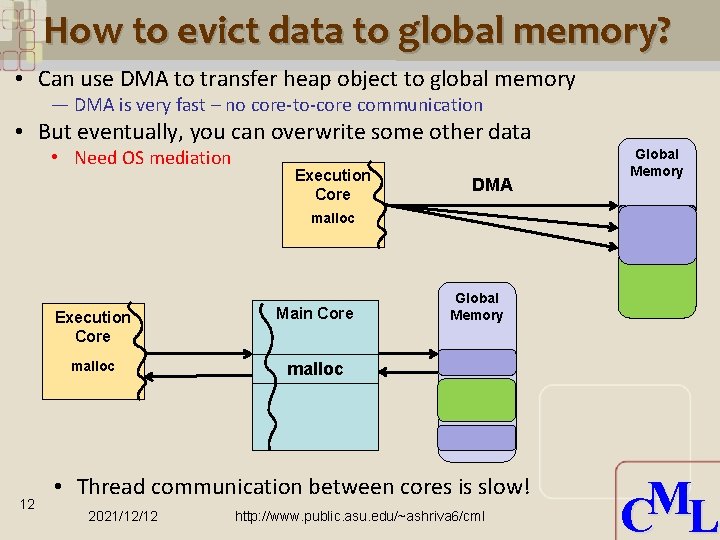

How to evict data to global memory?

- Option 1: use DMA to transfer the heap object to global memory
  - DMA is very fast: no core-to-core communication
  - But the execution core does not know which global memory is free, so eventually it may overwrite other data
  - Need OS mediation
- Option 2: send the malloc request to the main core, which allocates the global memory
  - Thread communication between cores is slow!

[Figure: left, the execution core writes the object directly to global memory by DMA; right, the execution core sends malloc to the main core, which allocates in global memory]

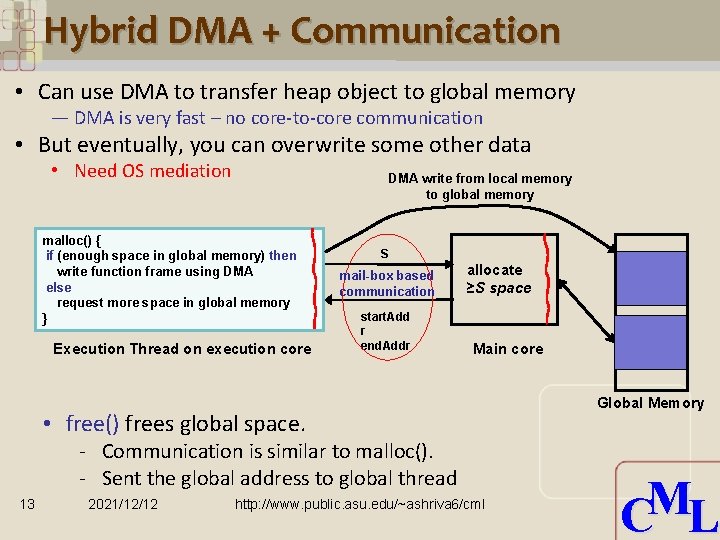

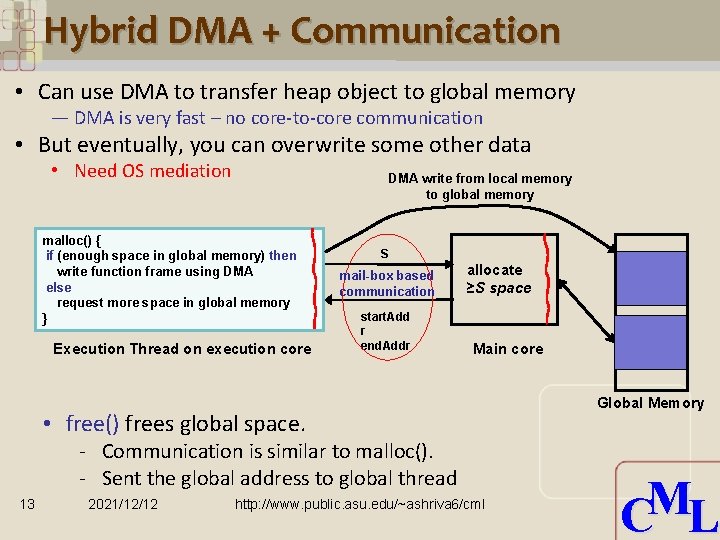

Hybrid DMA + Communication

- DMA write from local memory to global memory

```
malloc() {
    if (enough space in global memory)
        write the heap object using DMA
    else
        request more space in global memory
}
```

[Figure: the execution thread on the execution core sends the requested size S to the main core through mailbox-based communication; the main core allocates at least S bytes of global memory and returns startAddr and endAddr]

- free() frees global space
  - Communication is similar to malloc()
  - Send the global address to the thread on the main core
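The hybrid scheme pays for core-to-core communication only when the reserved global space runs out. A minimal single-threaded sketch, assuming the execution core reserves global memory from the main core in 64-byte chunks (a hypothetical size) and carves objects out of the current chunk locally. `main_core_reserve()` is a hypothetical function standing in for the mailbox request and reply; the DMA write itself and `free()` are not modeled.

```c
#include <stddef.h>

#define CHUNK_BYTES 64   /* hypothetical reservation size per request */

/* ---- Main core (simulated; on the Cell the request and the reply would
        travel through mailboxes) ---- */
static size_t main_core_next = 0;   /* next unreserved global address */
static int requests_served = 0;     /* core-to-core messages so far */

static size_t main_core_reserve(size_t bytes)
{
    size_t start = main_core_next;  /* startAddr returned to the core */
    main_core_next += bytes;
    requests_served++;
    return start;
}

/* ---- Execution core ---- */
static size_t chunk_start = 0;      /* free part of the reserved range */
static size_t chunk_end = 0;

/* Returns a global address for `size` bytes. Communication happens only when
   the reserved range cannot hold the object; any leftover is abandoned. */
size_t hybrid_malloc(size_t size)
{
    size_t need = (size + 15) & ~(size_t)15;   /* 16-byte rounding */
    if (chunk_end - chunk_start < need) {
        size_t req = need > CHUNK_BYTES ? need : CHUNK_BYTES;
        chunk_start = main_core_reserve(req);
        chunk_end = chunk_start + req;
    }
    size_t addr = chunk_start;
    chunk_start += need;
    /* The object would now be written to addr with a DMA put. */
    return addr;
}

int messages_sent(void) { return requests_served; }
```

Allocating ten 8-byte objects (each rounded to 16 bytes) needs only three requests, one per 64-byte chunk. A larger chunk would trade wasted global memory for even fewer messages.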

![Address Translation Functions](https://slidetodoc.com/presentation_image_h2/033bb0cb5e42ba3cfed9cb587bd630a5/image-14.jpg)

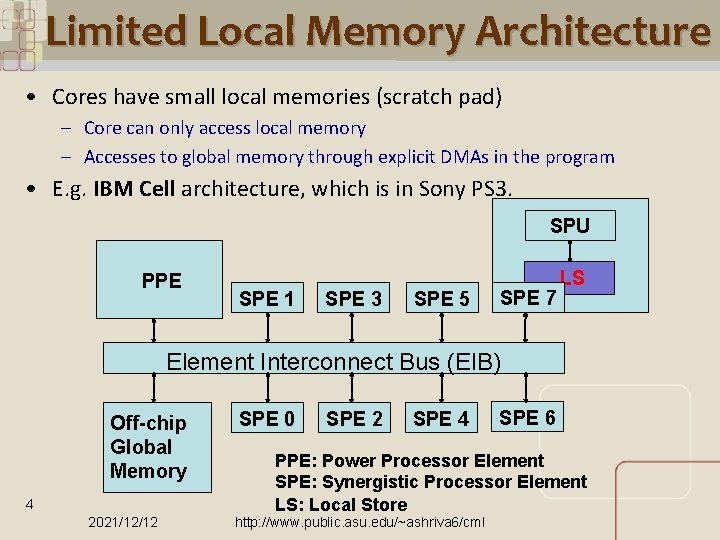

Address Translation Functions

```c
main() {
    for (i = 0; i < N; i++) {
        student[i] = malloc(sizeof(Student));
    }
    for (i = 0; i < N; i++) {
        student[i] = p2s(student[i]);
        student[i]->id = i;
        student[i] = s2p(student[i]);
    }
}
```

[Figure: heap size = 32 bytes, sizeof(Student) = 16 bytes; malloc1 to malloc3 with local heap pointer HP and global heap pointer GM_HP]

- The mapping from an SPU (local) address to a global address is one-to-many
  - The global address cannot easily be found from the SPU address
- Therefore all heap accesses must happen through global addresses
- p2s() translates a global address to an SPU address
  - It makes sure the heap object is in the local memory
- s2p() translates an SPU address back to a global address

More details in the paper.
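A minimal sketch of the two translation functions, under assumptions matching the slide's example: 16-byte objects, a two-slot (32-byte) local heap, FIFO replacement, and simulated global memory. The names beyond `p2s`/`s2p` and the replacement policy are hypothetical. `p2s()` makes the object resident and returns its local address; `s2p()` recovers the global address from a table recording which global object currently occupies each local slot, which is how it copes with the one-to-many mapping.

```c
#include <stddef.h>
#include <string.h>

#define OBJ_SIZE     16     /* from the slide's example: 16-byte objects */
#define LOCAL_SLOTS  2      /* 32-byte local heap */
#define GLOBAL_BYTES 1024   /* hypothetical global heap size */

typedef struct { int id; float score; } Student;

/* One local heap slot, viewable as a Student or as raw bytes for copies. */
typedef union {
    Student obj;
    unsigned char bytes[OBJ_SIZE];
} Slot;

static unsigned char global_heap[GLOBAL_BYTES];
static Slot local_heap[LOCAL_SLOTS];
static long slot_owner[LOCAL_SLOTS] = {-1, -1}; /* global address per slot */
static int next_victim = 0;                     /* FIFO replacement */

/* p2s: global address -> local (SPU) pointer. gaddr must be a valid object
   address. On a miss, the oldest slot is written back to its own global
   address and the requested object is fetched (DMAs on the Cell). */
Student *p2s(long gaddr)
{
    for (int s = 0; s < LOCAL_SLOTS; s++)
        if (slot_owner[s] == gaddr)
            return &local_heap[s].obj;          /* already resident */

    int victim = next_victim;
    next_victim = (next_victim + 1) % LOCAL_SLOTS;
    if (slot_owner[victim] >= 0)
        memcpy(&global_heap[slot_owner[victim]], local_heap[victim].bytes, OBJ_SIZE);
    memcpy(local_heap[victim].bytes, &global_heap[gaddr], OBJ_SIZE);
    slot_owner[victim] = gaddr;
    return &local_heap[victim].obj;
}

/* s2p: local pointer -> global address. The same local slot holds different
   objects over time, so the answer comes from the slot-ownership table. */
long s2p(const Student *local)
{
    for (int s = 0; s < LOCAL_SLOTS; s++)
        if (local == &local_heap[s].obj)
            return slot_owner[s];
    return -1;
}
```

A pointer returned by `p2s()` stays valid only until a later `p2s()` call evicts its slot, which is why the loop converts each pointer back with `s2p()` right after use.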

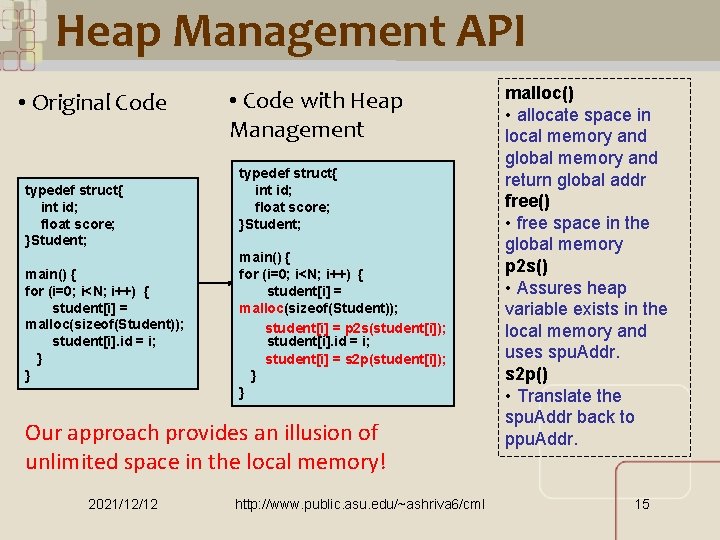

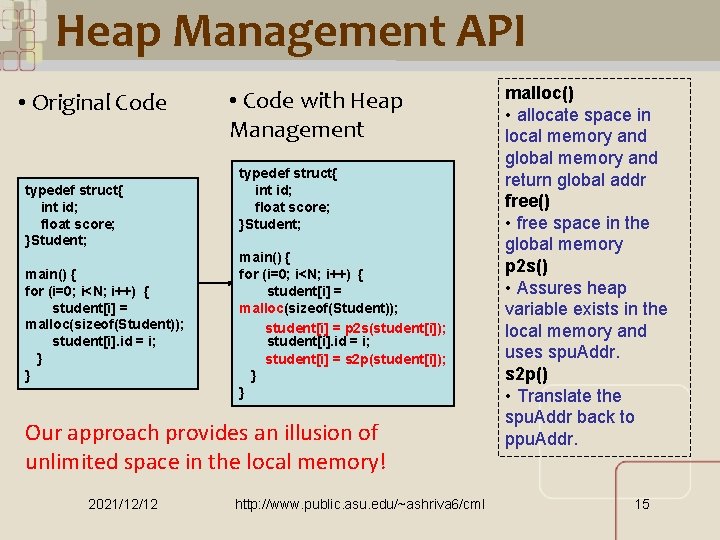

Heap Management API

Original code:

```c
typedef struct {
    int id;
    float score;
} Student;

main() {
    for (i = 0; i < N; i++) {
        student[i] = malloc(sizeof(Student));
        student[i]->id = i;
    }
}
```

Code with heap management:

```c
typedef struct {
    int id;
    float score;
} Student;

main() {
    for (i = 0; i < N; i++) {
        student[i] = malloc(sizeof(Student));
        student[i] = p2s(student[i]);
        student[i]->id = i;
        student[i] = s2p(student[i]);
    }
}
```

- malloc(): allocates space in local memory and global memory, and returns the global address
- free(): frees space in global memory
- p2s(): ensures the heap variable exists in local memory and returns its SPU address (spuAddr)
- s2p(): translates the spuAddr back to the global address (ppuAddr)

Our approach provides the illusion of unlimited space in the local memory!

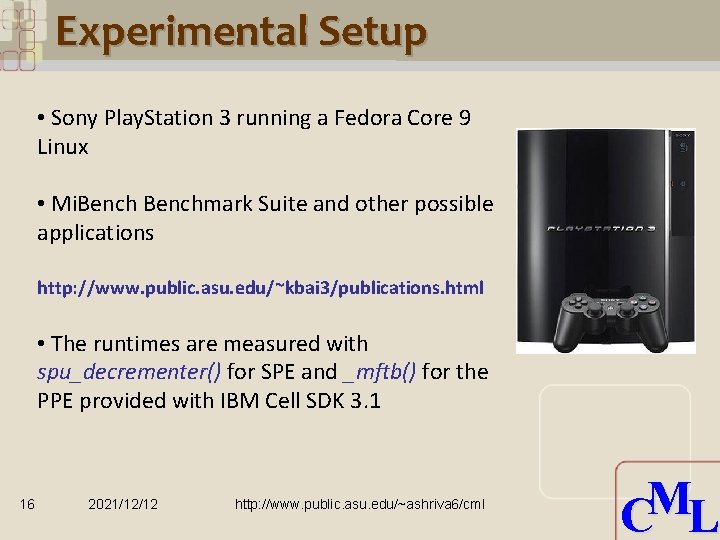

Experimental Setup

- Sony PlayStation 3 running Fedora Core 9 Linux
- MiBench benchmark suite and other possible applications
  - http://www.public.asu.edu/~kbai3/publications.html
- Runtimes are measured with spu_decrementer() on the SPE and _mftb() on the PPE, both provided with the IBM Cell SDK 3.1

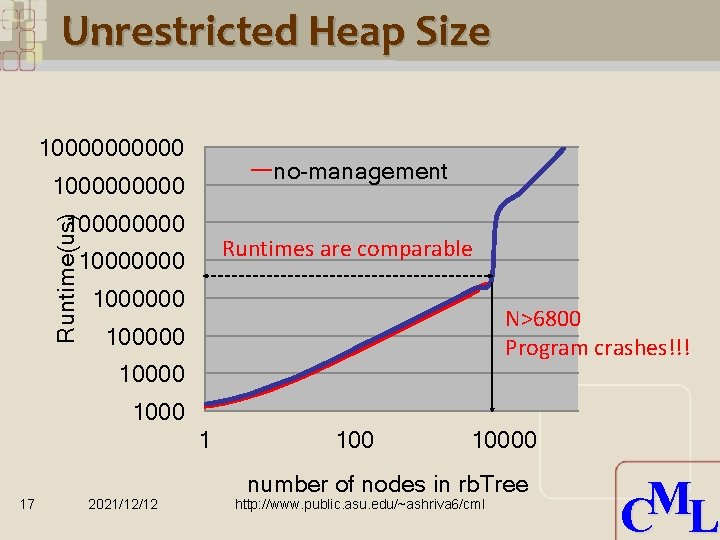

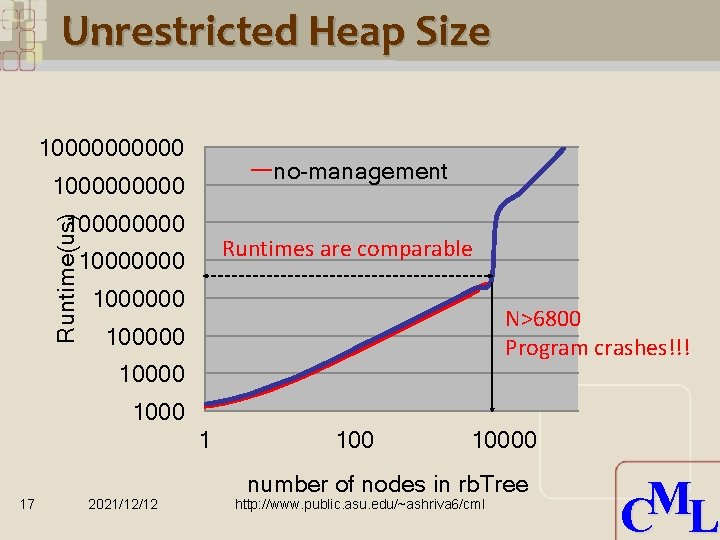

Unrestricted Heap Size

[Figure: runtime (µs, log scale) vs. number of nodes in rbTree, comparing no-management with heap management. Runtimes are comparable; without management, the program crashes for N > 6800.]

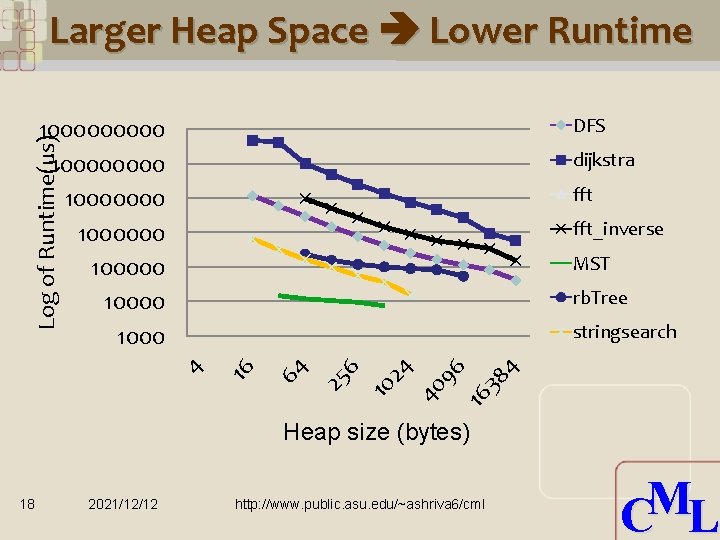

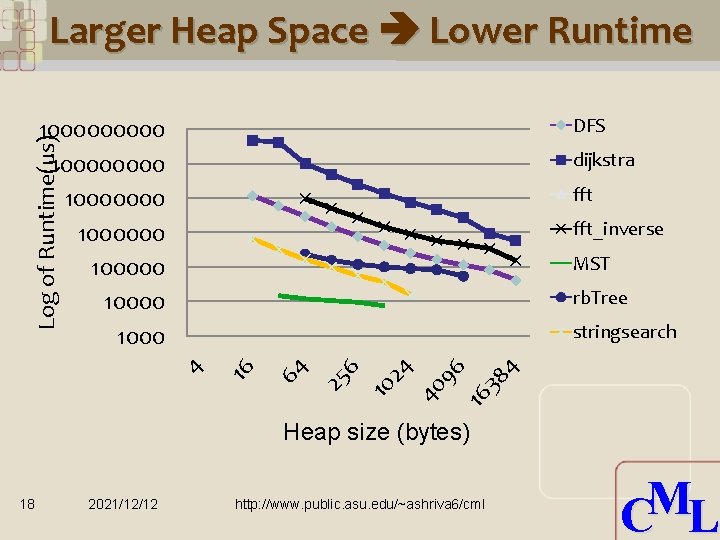

Larger Heap Space, Lower Runtime

[Figure: log of runtime (µs) vs. heap size (bytes) for DFS, dijkstra, fft_inverse, MST, rbTree, and stringsearch]

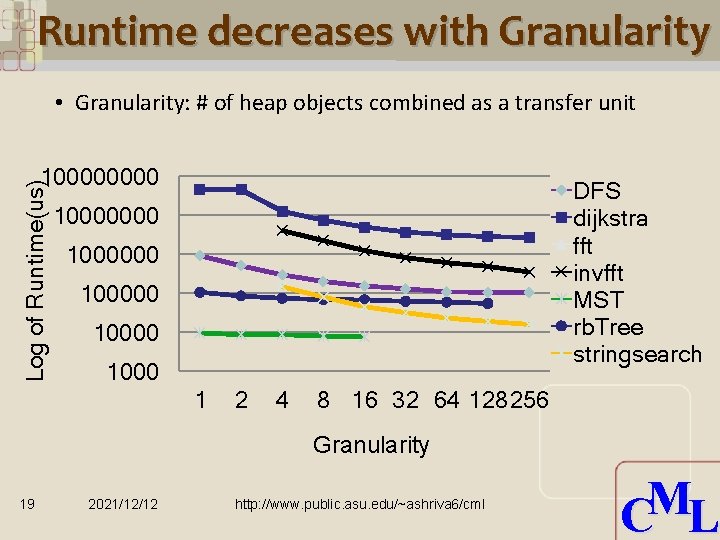

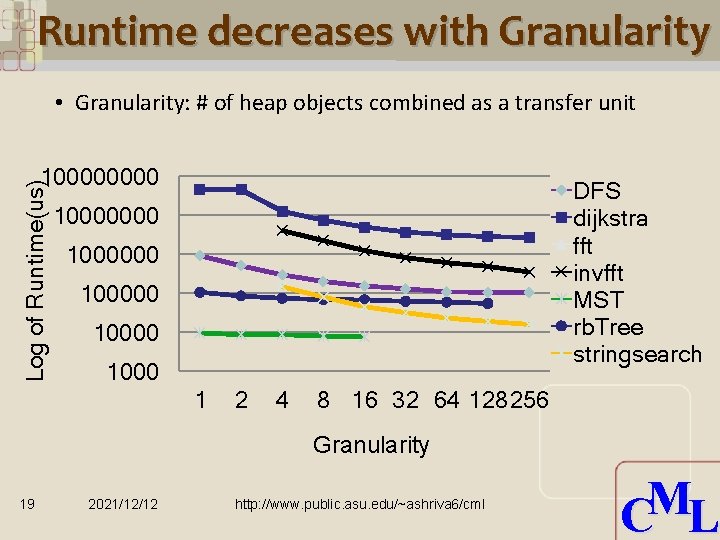

Runtime Decreases with Granularity

- Granularity: the number of heap objects combined into one transfer unit

[Figure: log of runtime (µs) vs. granularity (1, 2, 4, 8, 16, 32, 64, 128, 256) for DFS, dijkstra, fft, invfft, MST, rbTree, and stringsearch]
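Why a larger granularity helps can be seen by counting DMA commands. The sketch below is a hypothetical model, not the paper's measurement: object i belongs to transfer unit i / g, local memory holds a fixed number of units with FIFO replacement, and every miss costs one fetch. It deliberately ignores that each larger unit moves more bytes and that fewer units fit in a fixed local heap.

```c
#include <stddef.h>

#define MAX_UNITS 64   /* hypothetical upper bound on resident transfer units */

/* Counts DMA fetches for a sequence of heap-object indices when objects are
   moved in transfer units of g consecutive objects (object i lives in unit
   i / g) and local memory holds `units` transfer units, replaced FIFO. */
unsigned long count_fetches(const unsigned long *access, size_t n_access,
                            unsigned long g, size_t units)
{
    long resident[MAX_UNITS];
    size_t next = 0;
    unsigned long fetches = 0;

    if (g == 0) g = 1;
    if (units == 0) units = 1;
    if (units > MAX_UNITS) units = MAX_UNITS;
    for (size_t u = 0; u < units; u++)
        resident[u] = -1;                   /* nothing resident yet */

    for (size_t k = 0; k < n_access; k++) {
        long unit = (long)(access[k] / g);
        int hit = 0;
        for (size_t u = 0; u < units; u++)
            if (resident[u] == unit) { hit = 1; break; }
        if (!hit) {
            resident[next] = unit;          /* replace the oldest unit */
            next = (next + 1) % units;
            fetches++;
        }
    }
    return fetches;
}
```

For a sequential traversal of 256 objects with two resident units, raising g from 1 to 16 cuts the fetches from 256 to 16, and g = 256 needs a single fetch.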

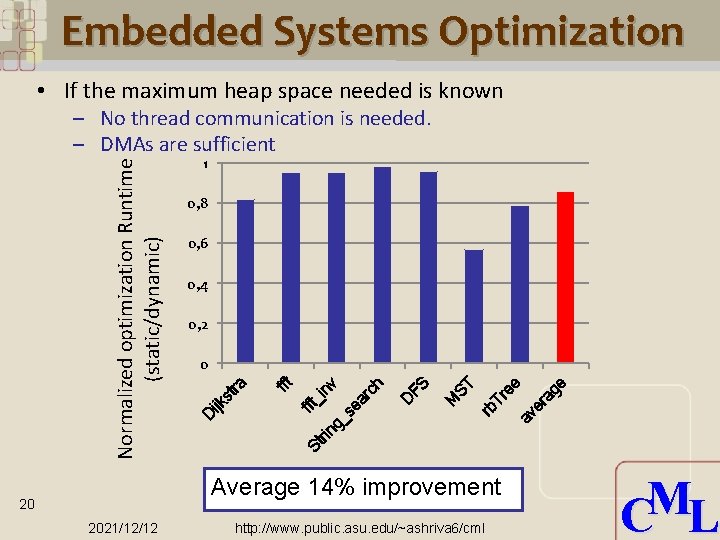

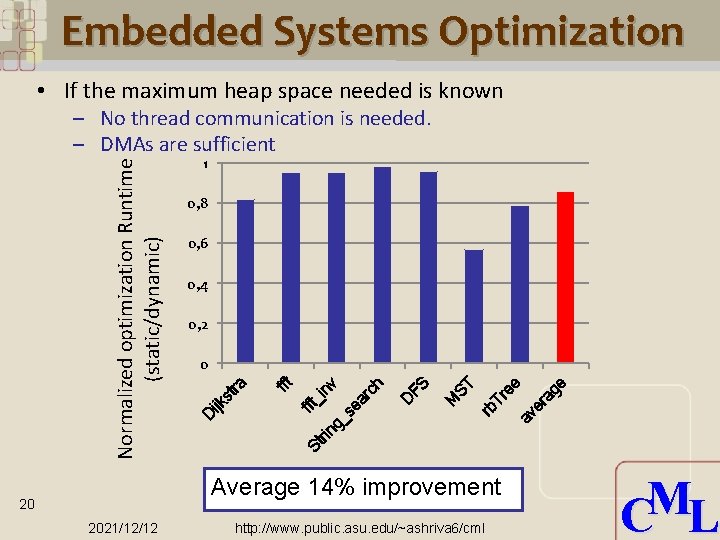

Embedded Systems Optimization

- If the maximum heap space needed is known
  - No thread communication is needed
  - DMAs are sufficient

[Figure: normalized runtime (static/dynamic) for dijkstra, fft_inv, string_search, DFS, MST, rbTree, and the average; average 14% improvement]

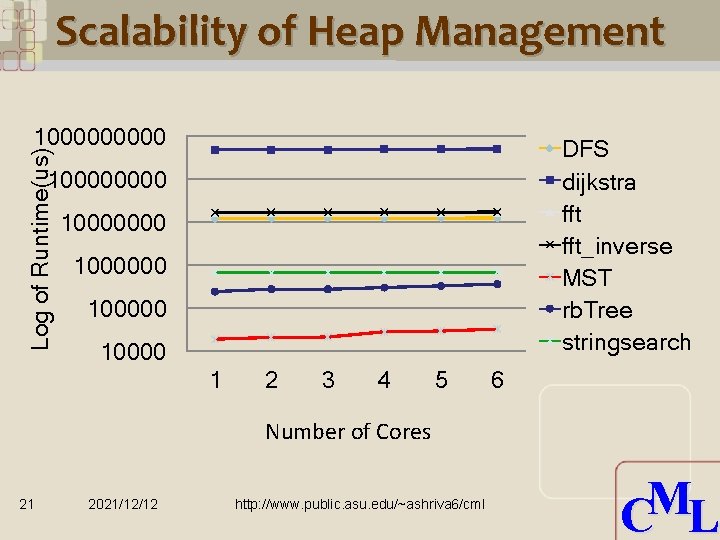

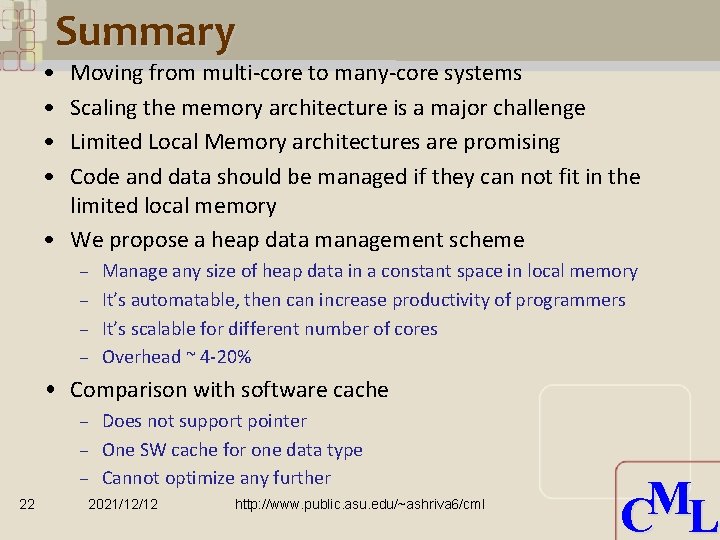

Scalability of Heap Management

[Figure: log of runtime (µs) vs. number of cores (1 to 6) for DFS, dijkstra, fft_inverse, MST, rbTree, and stringsearch]

Summary

- Moving from multi-core to many-core systems
- Scaling the memory architecture is a major challenge
- Limited Local Memory architectures are promising
- Code and data must be managed if they cannot fit in the limited local memory
- We propose a heap data management scheme
  - Manages heap data of any size in a constant amount of local memory
  - It is automatable, so it can increase programmer productivity
  - It scales to different numbers of cores
  - Overhead: about 4-20%
- Comparison with a software cache, which
  - Does not support pointers
  - Needs one software cache per data type
  - Cannot be optimized any further