Health Equity Analytics Solution: Team Powder Quants


Health Equity Analytics Solution, Team Powder Quants. Team lead: Ben Taylor; Analyst: Justin Powell (bentaylorche@gmail.com)

Outline
• Define the objective
• Data Formatting
• Data Clustering
• Predictive Analytics Model
• Solution
• ROI
• Looking Forward

Define the objective: Brief background
• Descriptive analytics – The most basic solution: nothing more than a graphical visualization resting on top of a database. If data visualization is all that is needed, there are many plug-and-play vendors such as Tableau, Domo, etc.
• Predictive analytics – Using the data from the descriptive analytics, can a model be built to predict account spend rate? This requires a background in modeling and proper success metrics to ensure overfitting is not an issue.
• Prescriptive analytics – Rather than just firing a prediction or threshold to react to the data, prescriptive analytics uses the model's insight to change the future outcome. An example would be targeting customers with chronic diabetes to reduce the risk of limb amputation.

Define the objective
• The objective is to develop a model that can predict account balance risk for preemptive notification.
  – Challenge
    • Focusing too much on the end goal can distract and confuse. [Diagram: Inputs → ? → Fund or Ok]
    • Simplifying the problem into tractable pieces reveals where the algorithm's focus should be: predicting the likely spend rate of each individual. After that, the rest of the math is simple. [Diagram: Inputs → ? → Predicted spend, which is combined with account balance and expected contribution → Fund or Ok]

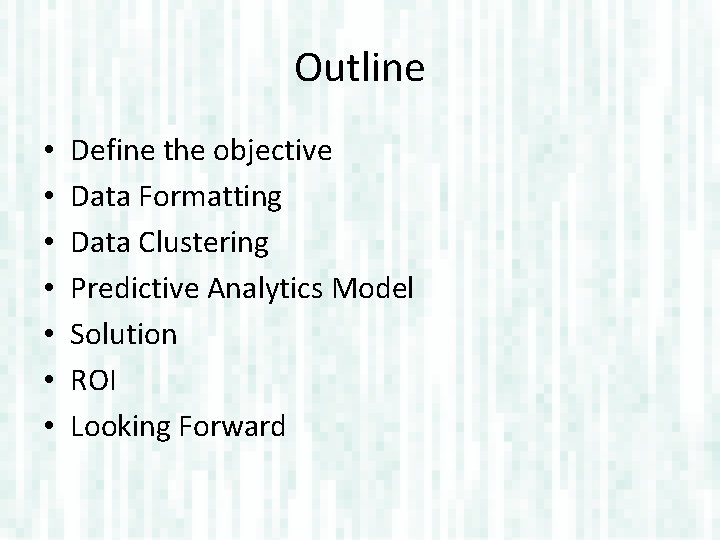

Define the objective
• Common pitfalls with predictive analytics [Diagram: Inputs → ? → Predicted spend rate]
  – Default objective is incorrect
    • Many novice users apply default algorithms without much thought about the algorithm's underlying objective. This causes problems when the objective is simply an overall error (R^2, RMSE, etc.), which is not robust to outliers or scaling issues and gives the end user no sense of model confidence. [Powerquants use 3 metrics for comparison]
  – Overfitting => solution confidence / quality
    • “Any solution without an associated confidence is no solution at all.” Given enough input variables, a model can reach an R^2 of 1 on the training set yet offer poor predictive power beyond it. Cross-validation and bootstrapping aid in assessing model confidence. [Powerquants provide robust confidence metrics]
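The R^2 = 1 pitfall is easy to reproduce. The sketch below is a minimal NumPy illustration on synthetic noise, not the competition data and not the team's three comparison metrics: the target is independent of every input, yet with as many input variables as training rows, ordinary least squares fits the training set perfectly while a holdout set exposes the lack of any real signal.

```python
import numpy as np


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return 1.0 - ss_res / ss_tot


rng = np.random.default_rng(0)
n_rows, n_features = 40, 40  # as many input variables as training rows

# Pure noise: the target is independent of every input, so there is no real signal.
X_train = rng.normal(size=(n_rows, n_features))
y_train = rng.normal(size=n_rows)
X_val = rng.normal(size=(n_rows, n_features))
y_val = rng.normal(size=n_rows)

# With a square, full-rank design matrix, least squares interpolates the training targets.
w, *_ = np.linalg.lstsq(X_train, y_train, rcond=None)

train_r2 = r_squared(y_train, X_train @ w)
val_r2 = r_squared(y_val, X_val @ w)
print(f"training R^2 = {train_r2:.4f}, validation R^2 = {val_r2:.2f}")
```

Only the holdout score reveals the problem; repeating it over bootstrap resamples would add the confidence estimate the slide calls for.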

Data Formatting
• Joining up the data
  – Unique [Member.ID.dependent × CPT claim] pairs
  – Combine all other data into a single table keyed off of either claim.ID or member.ID
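A minimal sketch of the join in plain Python, assuming hypothetical claim rows of the form (member_id, dependent, claim_id, cpt_code); the deck does not show its schema. We key member attributes on the combined "member.dependent" key, which is our assumption, since a dependent can differ from the primary member in age and gender.

```python
from collections import defaultdict

# Hypothetical claim rows: (member_id, dependent, claim_id, cpt_code).
claims = [
    ("M001", "01", "C1", "99213"),
    ("M001", "01", "C2", "99213"),  # repeat visit with the same CPT code
    ("M001", "02", "C3", "90460"),
    ("M002", "01", "C4", "99051"),
]

# Hypothetical member attributes, keyed by the same "member.dependent" key.
members = {
    "M001.01": {"age": 34, "gender": "F"},
    "M001.02": {"age": 6, "gender": "M"},
    "M002.01": {"age": 61, "gender": "M"},
}


def member_cpt_pairs(rows):
    """Collapse claims to unique (member.dependent, CPT) pairs with a claim count."""
    counts = defaultdict(int)
    for member_id, dependent, _claim_id, cpt in rows:
        counts[(f"{member_id}.{dependent}", cpt)] += 1
    return dict(counts)


def join_members(pairs, member_table):
    """Build one flat table: each pair plus the attributes of its member."""
    table = []
    for (member_key, cpt), n_claims in sorted(pairs.items()):
        row = {"member_key": member_key, "cpt": cpt, "n_claims": n_claims}
        row.update(member_table.get(member_key, {}))  # unknown members keep bare rows
        table.append(row)
    return table


for row in join_members(member_cpt_pairs(claims), members):
    print(row)
```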

Clustering Data
• In the sparse raw data (left), it is nearly impossible to see the value. Clustering with a self-organizing map (right) brings areas of interest to life: procedures with high use counts among members, along with their correlations to other procedures, are now readily visible along the diagonal.
[Figure: left, the sparse matrix of unique members × unique CPT codes ("Sparse!"); after "shuffle organize", right, the same matrix with members and CPT codes reordered]
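The reordering step can be sketched with a 1-D self-organizing map: each column (CPT code) or row (member) is assigned to its best-matching node, and sorting by node index puts similar vectors side by side. This is a minimal NumPy sketch, not the team's code; the map size, iteration count, and decay schedules are our assumptions, and the deck's map may well be 2-D. On the random synthetic matrix below there is no real block structure to recover, so it only demonstrates the mechanics.

```python
import numpy as np


def som_order(vectors, n_nodes=10, n_iter=2000, seed=0):
    """Order row vectors with a 1-D self-organizing map.

    Each vector is mapped to its best-matching unit (BMU); sorting by BMU index
    places vectors that land on the same or neighboring nodes next to each other.
    """
    rng = np.random.default_rng(seed)
    data = np.asarray(vectors, dtype=float)
    weights = rng.random((n_nodes, data.shape[1]))
    node_pos = np.arange(n_nodes)
    sigma0, lr0 = n_nodes / 2.0, 0.5
    for t in range(n_iter):
        frac = t / n_iter
        sigma = sigma0 * (0.01 / sigma0) ** frac  # neighborhood radius shrinks exponentially
        lr = lr0 * (1.0 - frac)  # learning rate decays linearly
        x = data[rng.integers(len(data))]
        bmu = np.argmin(((weights - x) ** 2).sum(axis=1))
        h = np.exp(-((node_pos - bmu) ** 2) / (2.0 * sigma**2))  # Gaussian neighborhood
        weights += lr * h[:, None] * (x - weights)
    dists = ((data[:, None, :] - weights[None, :, :]) ** 2).sum(axis=2)
    return np.argsort(dists.argmin(axis=1), kind="stable")


# Synthetic sparse member x CPT usage matrix (1 = member billed that code).
rng = np.random.default_rng(1)
usage = (rng.random((200, 30)) < 0.05).astype(float)

col_order = som_order(usage.T)  # group CPT codes used by similar members
row_order = som_order(usage)  # group members who use similar codes
reordered = usage[np.ix_(row_order, col_order)]
```

Ordering rows and columns separately is what pushes co-occurring procedures toward the diagonal when real structure exists.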

A closer look… cool.

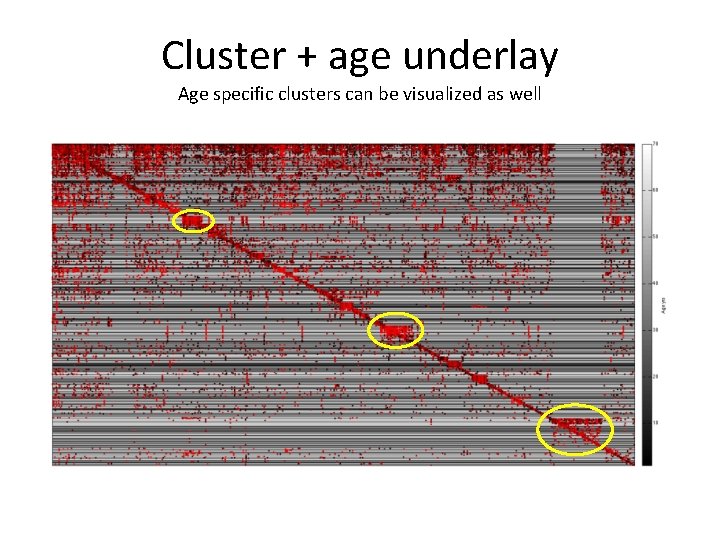

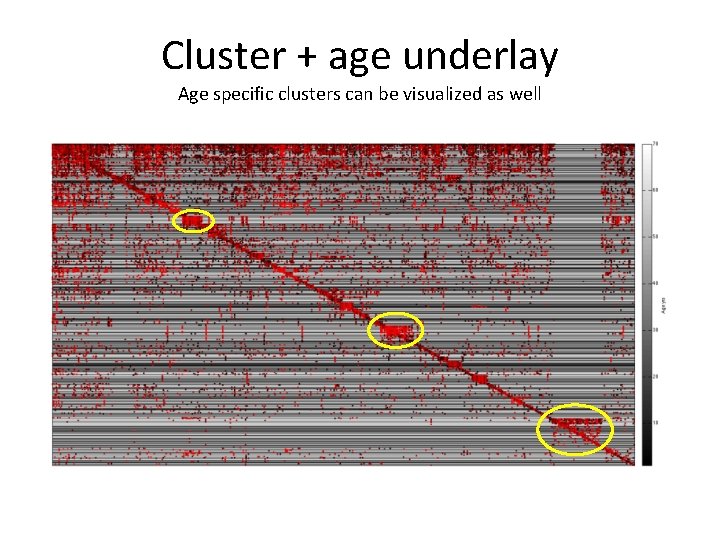

Cluster + age underlay: age-specific clusters can be visualized as well.

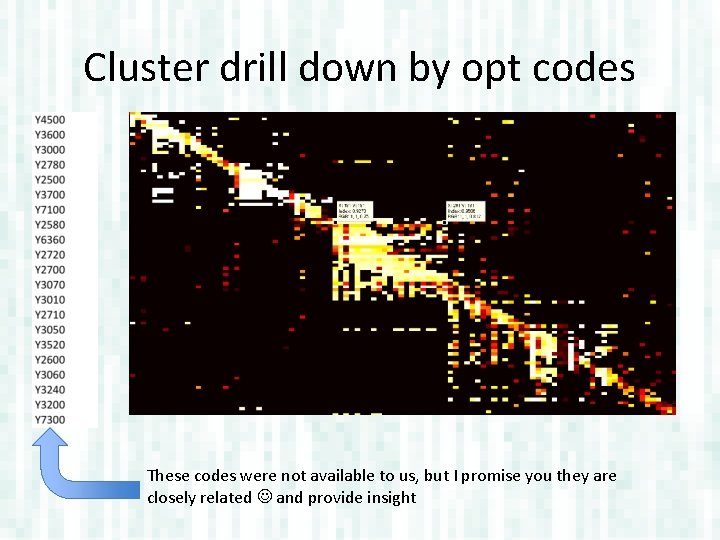

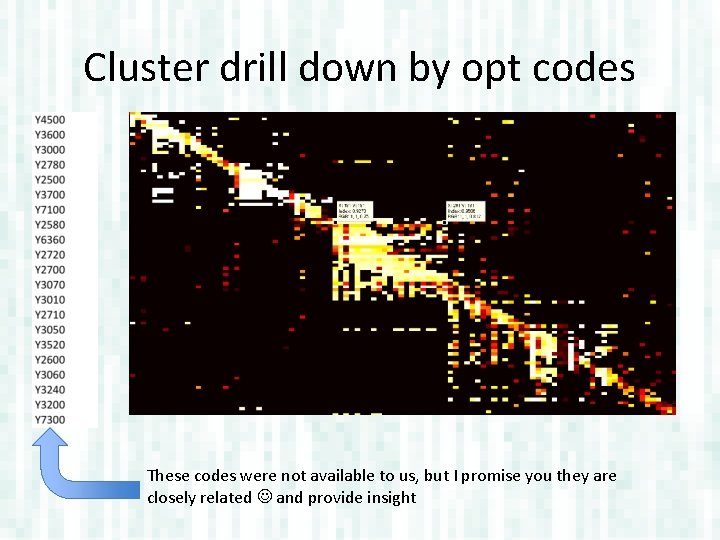

Cluster drill-down by CPT codes: these codes were not available to us, but I promise you they are closely related and provide insight into spending behavior.

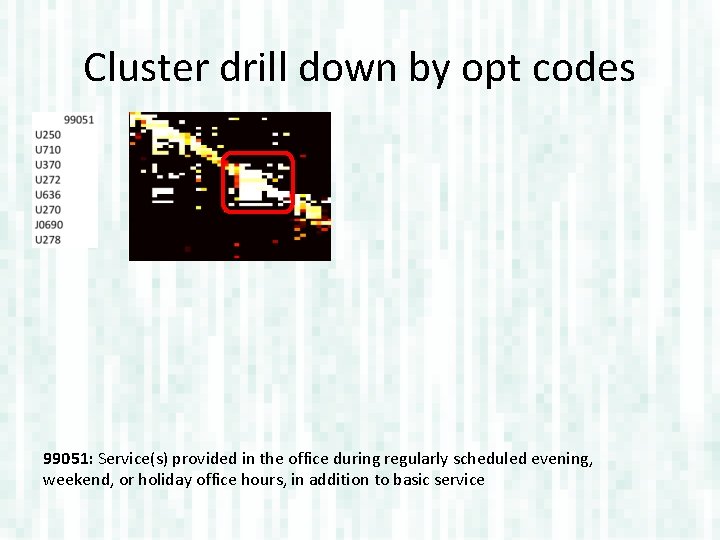

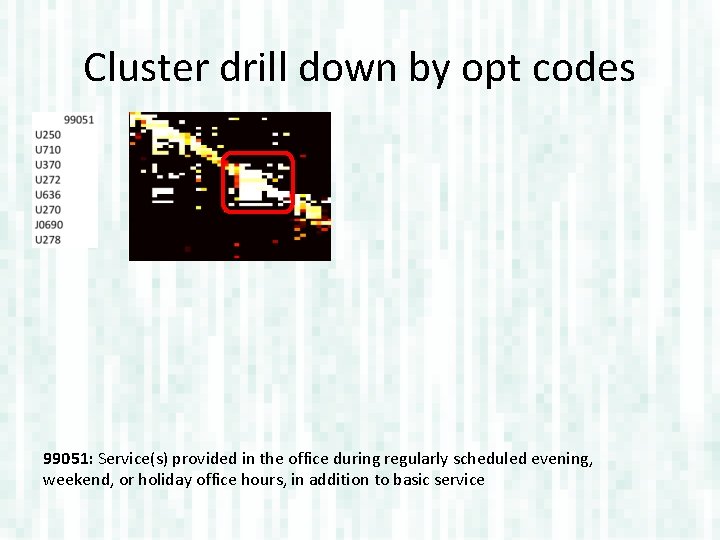

Cluster drill-down by CPT codes. 99051: Service(s) provided in the office during regularly scheduled evening, weekend, or holiday office hours, in addition to basic service

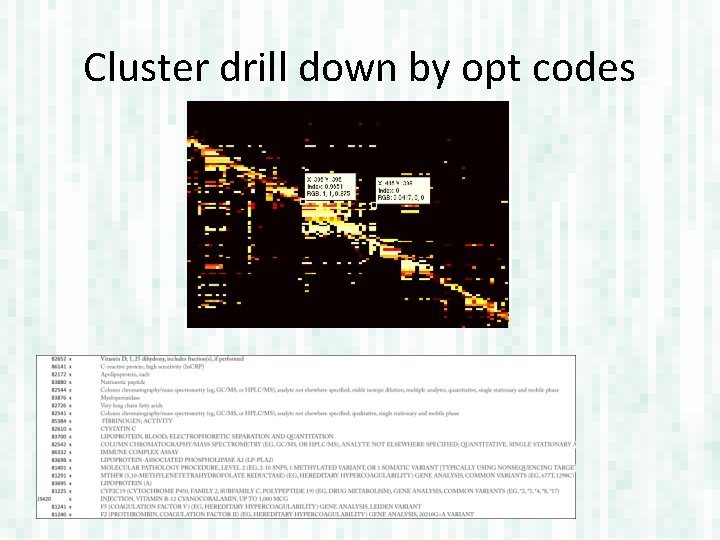

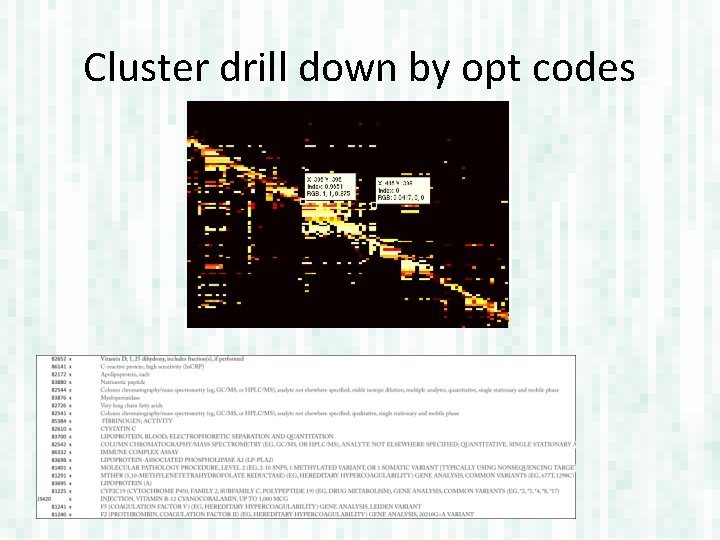

Cluster drill-down by CPT codes

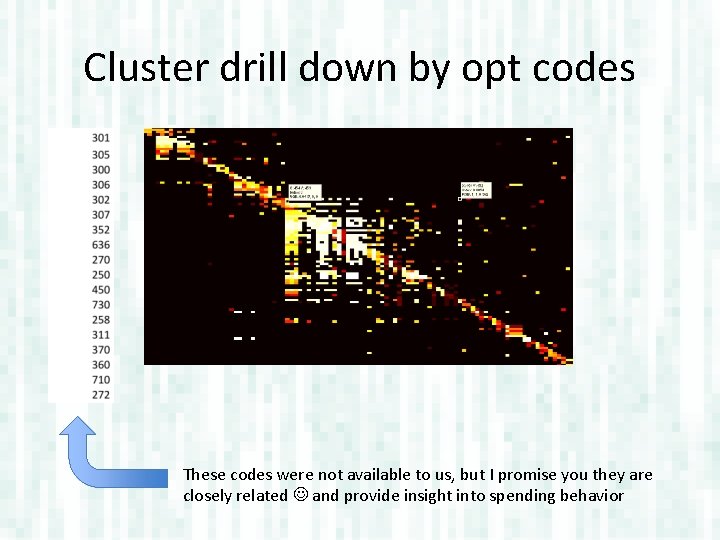

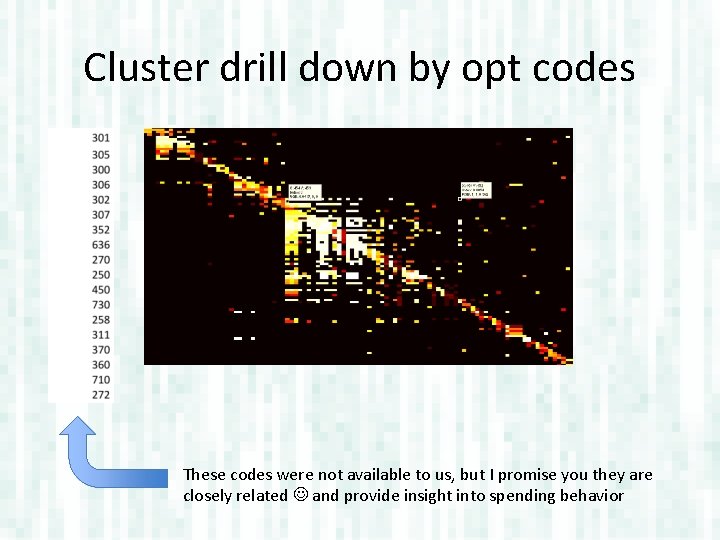

Cluster drill-down by CPT codes: these codes were not available to us, but I promise you they are closely related and provide insight into spending behavior.

Cluster drill-down by CPT codes

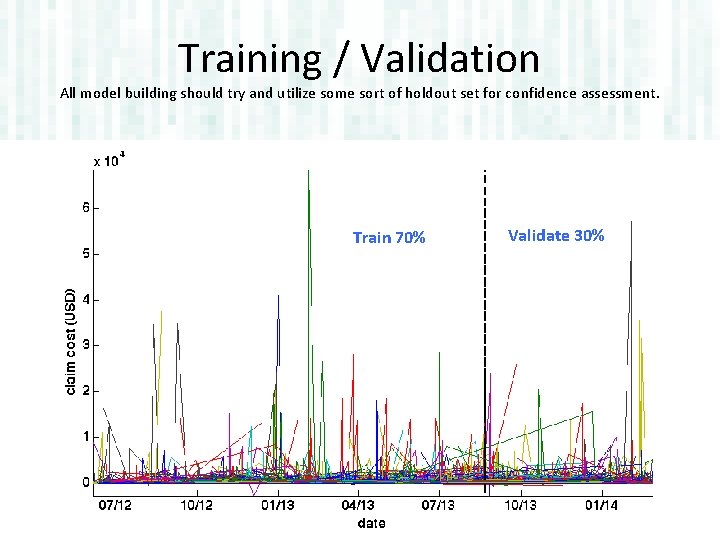

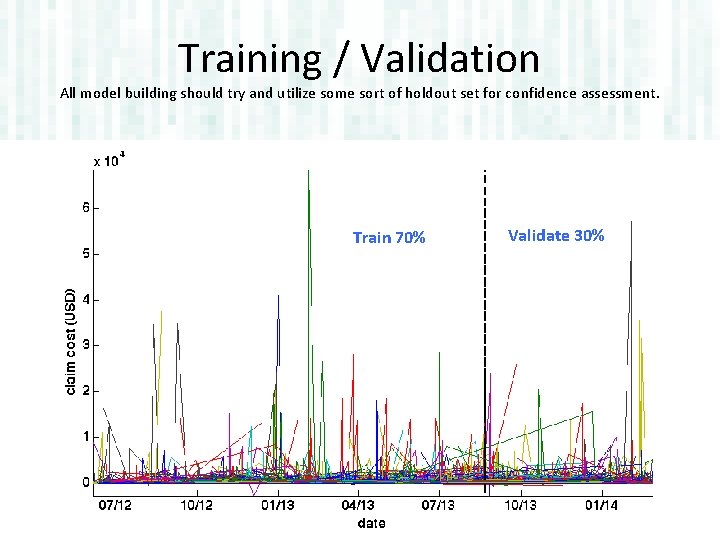

Training / Validation: All model building should use some sort of holdout set for confidence assessment. [Figure: train 70%, validate 30%]

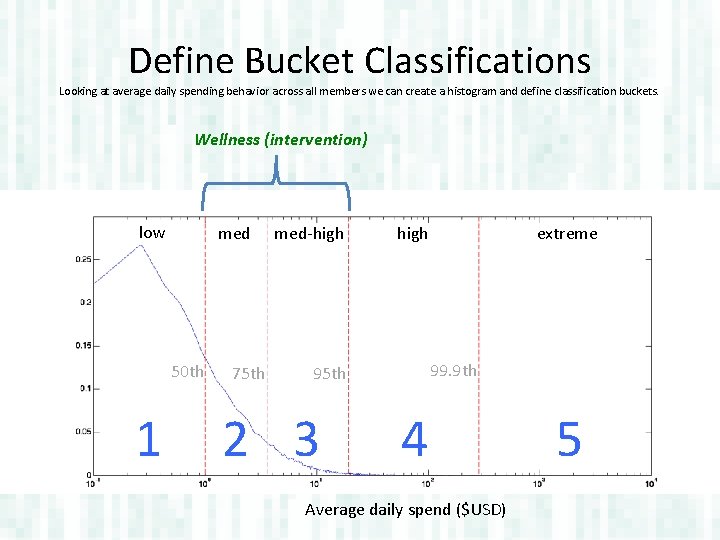

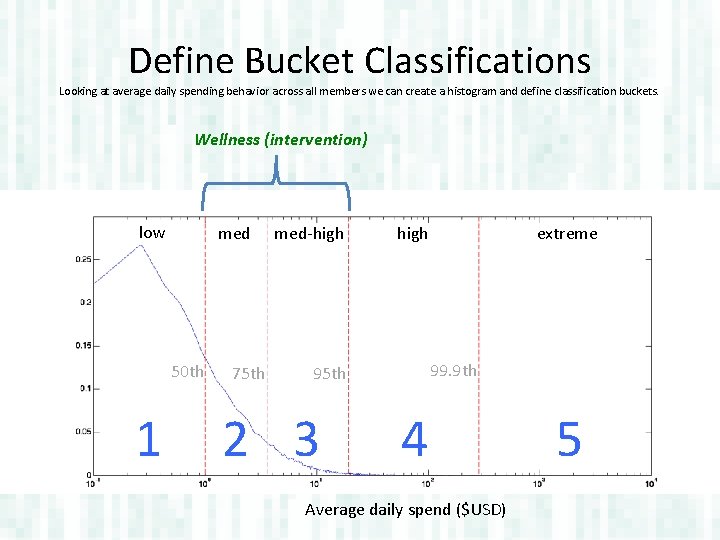
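The slide gives only the 70/30 ratio. A minimal sketch, assuming a chronological split (earliest 70% of claims for training); that is our reading of the persistence baseline later in the deck, which carries each member's training-period bucket forward into validation. The `service_date` field and the sample rows are hypothetical.

```python
from datetime import date, timedelta


def chronological_split(rows, train_frac=0.7, key="service_date"):
    """Earliest train_frac of rows (by date) for training, the rest for validation."""
    ordered = sorted(rows, key=lambda r: r[key])
    cut = round(train_frac * len(ordered))
    return ordered[:cut], ordered[cut:]


# Hypothetical claims, one per day for ten days.
start = date(2015, 1, 1)
rows = [{"claim_id": i, "service_date": start + timedelta(days=i)} for i in range(10)]
train, validate = chronological_split(rows)
print(len(train), len(validate))  # 7 3
```

A random split by member would also give a valid holdout, but it could not support a baseline that compares the same member across the two periods.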

Define Bucket Classifications: Looking at average daily spending behavior across all members, we can create a histogram and define classification buckets.
[Figure: histogram of average daily spend ($USD) with bucket thresholds at the 50th, 75th, 95th, and 99.9th percentiles; buckets numbered 1-5 and labeled low, med, med-high, and extreme; annotation: "Wellness (intervention)"]

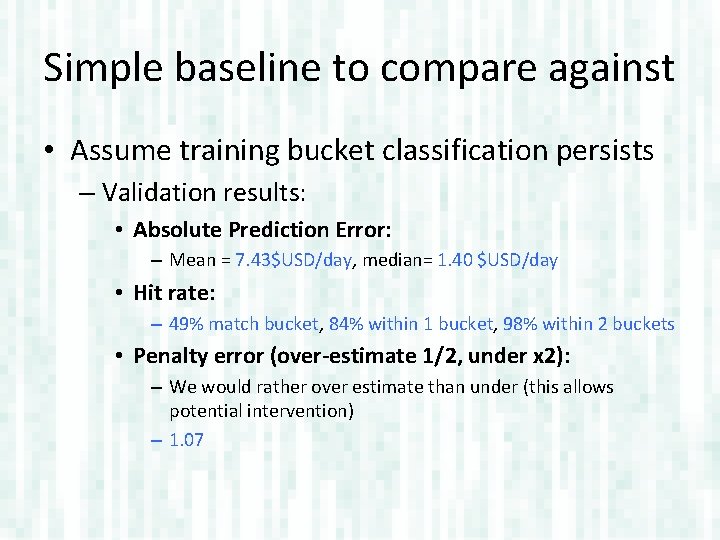

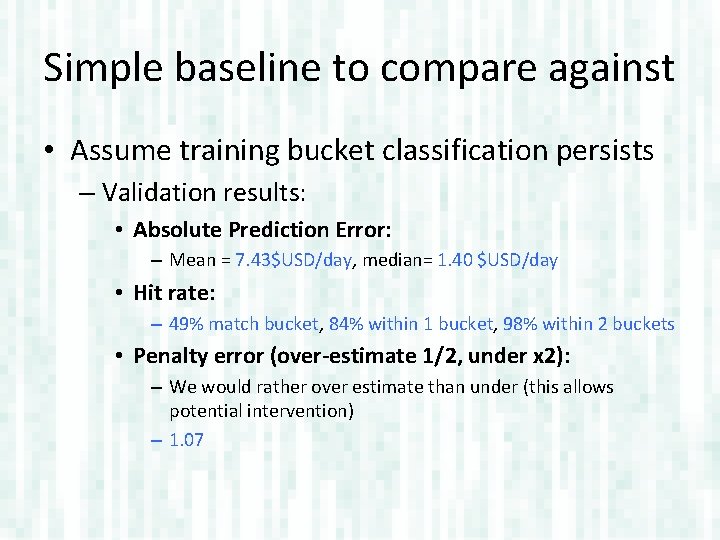
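Turning the histogram into buckets takes two steps: compute the spend value at each boundary percentile, then place each member by binary search. A minimal stdlib sketch; the linear-interpolation percentile rule (the same convention as NumPy's default) and the synthetic spend values are our choices, not taken from the deck.

```python
from bisect import bisect_right

PERCENTILES = (50, 75, 95, 99.9)  # bucket boundaries named on the slide


def percentile(sorted_vals, p):
    """Linear-interpolation percentile of an already sorted list."""
    rank = p / 100 * (len(sorted_vals) - 1)
    lo = int(rank)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (rank - lo) * (sorted_vals[hi] - sorted_vals[lo])


def bucket_thresholds(avg_daily_spend):
    """Spend values ($USD/day) at each boundary percentile."""
    vals = sorted(avg_daily_spend)
    return [percentile(vals, p) for p in PERCENTILES]


def classify(spend, thresholds):
    """0-based bucket: 0 below the 50th percentile, ..., 4 above the 99.9th."""
    return bisect_right(thresholds, spend)


# Synthetic spend values, $1 to $1000 per day.
thresholds = bucket_thresholds(range(1, 1001))
print([classify(s, thresholds) for s in (1, 600, 800, 960, 1000)])  # [0, 1, 2, 3, 4]
```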

Simple baseline to compare against
• Assume the training bucket classification persists
  – Validation results:
    • Absolute prediction error:
      – Mean = 7.43 USD/day, median = 1.40 USD/day
    • Hit rate:
      – 49% match bucket, 84% within 1 bucket, 98% within 2 buckets
    • Penalty error (over-estimates weighted ×1/2, under-estimates ×2):
      – We would rather over-estimate than under-estimate (this allows potential intervention)
      – 1.07

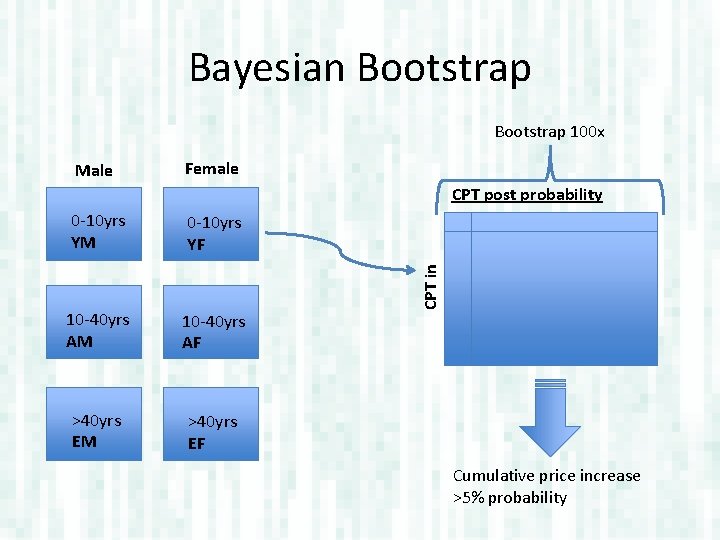

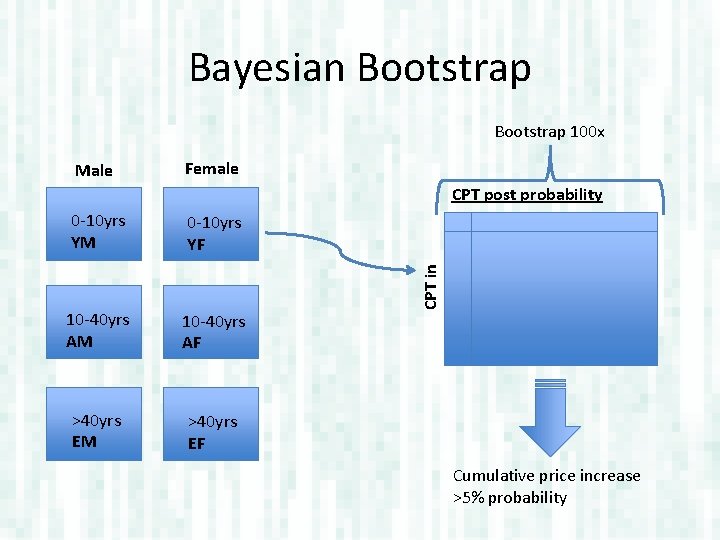
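The three baseline metrics can be sketched as below. The slide does not say whether the penalty is applied to dollar errors or bucket gaps, or how the 1.07 figure was normalized, so `penalty_error` is our assumption: a mean absolute error with over-estimates weighted by 1/2 and under-estimates by 2, applied to whatever values are passed in. The toy buckets are illustrative only, not the competition results.

```python
from statistics import mean, median


def abs_errors(actual, predicted):
    """Absolute prediction errors, e.g. in $USD/day."""
    return [abs(a - p) for a, p in zip(actual, predicted)]


def hit_rates(actual_bucket, predicted_bucket, max_gap=2):
    """Share of members whose predicted bucket is within k buckets, for k = 0..max_gap."""
    gaps = [abs(a - p) for a, p in zip(actual_bucket, predicted_bucket)]
    return [sum(g <= k for g in gaps) / len(gaps) for k in range(max_gap + 1)]


def penalty_error(actual, predicted, over=0.5, under=2.0):
    """Mean absolute error, weighting over-estimates by 1/2 and under-estimates by 2."""
    return mean((over if p > a else under) * abs(a - p) for a, p in zip(actual, predicted))


# Persistence baseline on toy data: predict each member's validation bucket
# to be the bucket they had in the training period.
train_bucket = [0, 1, 2, 3, 1]
val_bucket = [0, 2, 2, 1, 1]
print(hit_rates(val_bucket, train_bucket))  # [0.6, 0.8, 1.0]
print(penalty_error(val_bucket, train_bucket))  # 0.6
```

Because under-estimates cost four times as much as over-estimates, a model tuned on this metric leans toward flagging accounts early, which matches the stated preference for intervention.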

Bayesian Bootstrap (100×)
[Figure: posterior maps for six partitions, male/female × 0-10 yrs (YM, YF), 10-40 yrs (AM, AF), >40 yrs (EM, EF); axes "CPT in" and "CPT post", showing probability; second panel: probability of a cumulative price increase >5%]

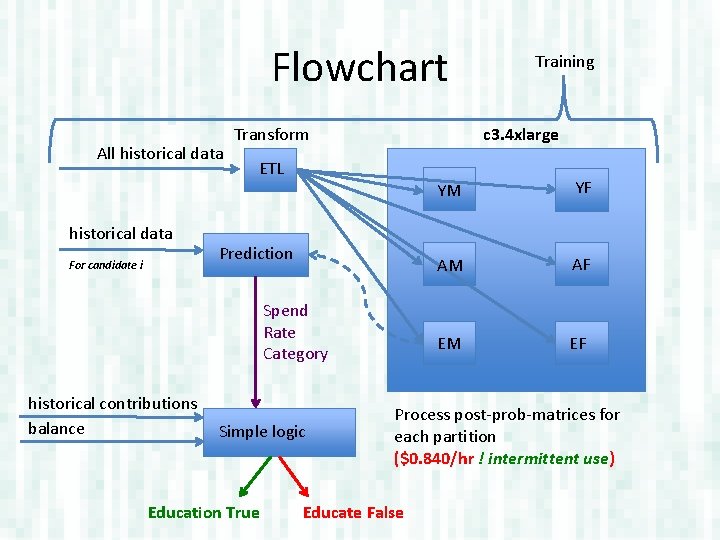

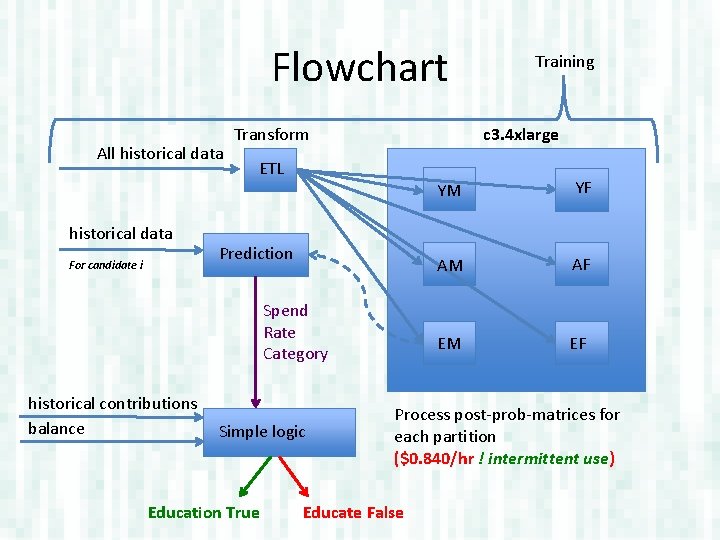
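A minimal sketch of one posterior map entry, under stated assumptions. We read "CPT in → CPT post probability" as the probability that a member in a partition who has CPT code *in* also has CPT code *post*, and "100×" as 100 bootstrap replicates. The technique shown is the classic Bayesian bootstrap (Dirichlet(1, ..., 1) weights over observations); the deck does not show its exact variant. The age-boundary handling in `partition_label` and the toy data are hypothetical.

```python
import random


def partition_label(age, gender):
    """YM/YF (0-10 yrs), AM/AF (10-40 yrs), EM/EF (>40 yrs); boundary ages assumed."""
    band = "Y" if age < 10 else ("A" if age <= 40 else "E")
    return band + gender  # gender is "M" or "F"


def bayesian_bootstrap_conditional(members, cpt_in, cpt_post, n_reps=100, seed=0):
    """Bayesian bootstrap draws of P(member has cpt_post | member has cpt_in).

    members: one set of CPT codes per member in a single partition.
    Each replicate reweights members with Dirichlet(1, ..., 1) weights. Those are
    normalized Exp(1) draws; the normalization cancels in the ratio, so it is skipped.
    """
    rng = random.Random(seed)
    draws = []
    for _ in range(n_reps):
        w = [rng.expovariate(1.0) for _ in members]
        den = sum(wi for wi, codes in zip(w, members) if cpt_in in codes)
        num = sum(wi for wi, codes in zip(w, members) if cpt_in in codes and cpt_post in codes)
        if den > 0:  # den is 0 only if no member in the partition has cpt_in
            draws.append(num / den)
    return draws


# Toy partition: CPT code sets for four members.
partition = [{"99213", "90460"}, {"99213"}, {"99213", "90460"}, {"99051"}]
draws = sorted(bayesian_bootstrap_conditional(partition, "99213", "90460"))
# Posterior mean and the 5th/95th sorted draws (a rough 90% interval).
print(partition_label(25, "F"), round(sum(draws) / len(draws), 3), draws[4], draws[94])
```

Repeating this for every (CPT in, CPT post) pair within each of the six partitions yields one probability matrix per partition, and the spread of the draws supplies the confidence the earlier slides ask for.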

Flowchart
• Training: all historical data → ETL on a c3.4xlarge instance ($0.840/hr, intermittent use) → process post-prob-matrices for each partition (YM, YF, AM, AF, EM, EF)
• For candidate i: transform → prediction (spend rate category) + historical contributions + balance → simple logic → Educate: True / False

LAUNCH AWS Demo • Here I will launch my AWS instance and run the demo, showing the distributed Bayesian bootstrap code running in memory on 16 cores, and compare that with the runtime on my local machine (~160 hrs).

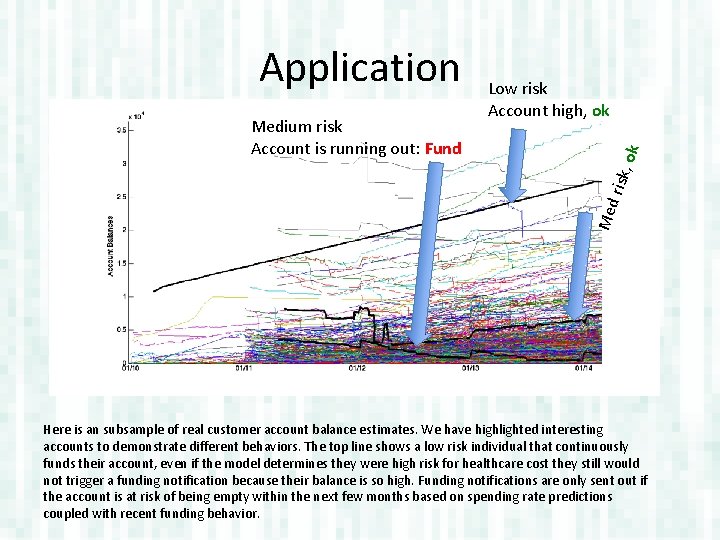

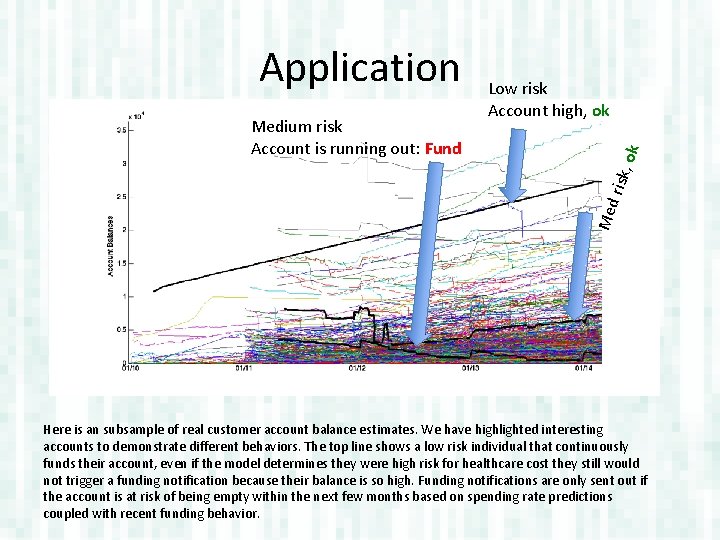

Application
Here is a subsample of real customer account balance estimates, with interesting accounts highlighted to demonstrate different behaviors. The top line shows a low-risk individual who continuously funds their account: even if the model determined they were at high risk for healthcare costs, they still would not trigger a funding notification because their balance is so high. Funding notifications are sent only if the account is at risk of being empty within the next few months, based on spending-rate predictions coupled with recent funding behavior.
[Figure annotations: "Low risk: account high, ok"; "Medium risk: account is running out, fund"]

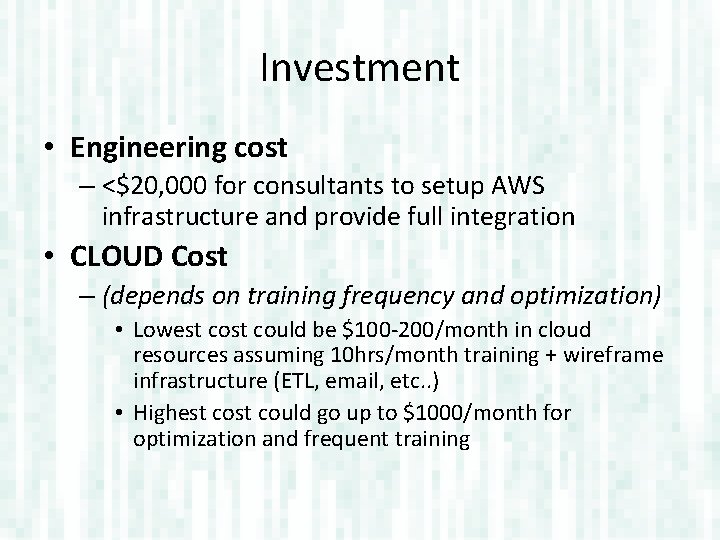
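The notification rule reduces to a runway calculation. A minimal sketch, assuming `daily_spend` comes from the member's predicted spend-rate category and `daily_contribution` from recent funding behavior (for example, average daily deposits); the function names and the 90-day horizon standing in for "the next few months" are hypothetical.

```python
def days_until_empty(balance, daily_spend, daily_contribution):
    """Days until the balance reaches zero, or None if contributions cover spending."""
    net_burn = daily_spend - daily_contribution
    if net_burn <= 0:
        return None
    return balance / net_burn


def should_notify(balance, daily_spend, daily_contribution, horizon_days=90):
    """Send a funding notification only if the account empties within the horizon."""
    days = days_until_empty(balance, daily_spend, daily_contribution)
    return days is not None and days < horizon_days


# High balance: even a high predicted spend rate does not trigger a notification.
print(should_notify(balance=20000, daily_spend=60, daily_contribution=5))  # False
# Low balance, spending outpaces contributions: 37.5 days of runway left.
print(should_notify(balance=300, daily_spend=10, daily_contribution=2))  # True
```

The first call mirrors the top line in the figure: a high-risk spend prediction alone does not trigger a notification when the balance is large.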

Investment
• Engineering cost
  – <$20,000 for consultants to set up the AWS infrastructure and provide full integration
• Cloud cost (depends on training frequency and optimization)
  – Lowest: $100-200/month in cloud resources, assuming 10 hrs/month of training plus wireframe infrastructure (ETL, email, etc.)
  – Highest: up to $1,000/month for optimization and frequent training

Return
• Assuming a 5% reduction in health care costs
  – The reduction will come from wellness awareness and insight into clustered medical spending (discovered risks). With Bayesian bootstrapping you are essentially giving your customers rich, tailored probability maps to use as they see fit (e.g., "I am going in for surgery X; what are the risks or complications, and what do those risks cost for my demographic?").
• Patient responsibility:
  – $21,979,894.32 × 0.05 = $1,098,994.72 savings
• Negotiated price:
  – $144,633,170.61 × 0.05 = $7,231,658.53 savings

Future Opportunities
• The operations involved make this problem a GPU candidate.
  – Running on GPUs can offer anywhere from a 10-100× speedup. This could be a cost-saving opportunity if frequent training is needed.
• Bucket thresholds can be optimized.
  – The 50th, 75th, etc. thresholds are arbitrary and can be refined for greater predictive power.
• More specific age/gender Bayesian maps can be created, including location, given enough data.
  – Increase resolution with more age groups and smarter age transitions. Including health assessment data would also improve this type of risk clustering.
• Clustering can be magnified for easier visualization, and automated cluster-thresholding data mining methods can be used to automate insight mining within the clusters.
  – These clusters provide a wealth of knowledge on common procedures and the largest pain points in the sector. Spending time to develop cluster evaluation techniques would be worthwhile.

Code location
• git clone https://bitbucket.org/bentaylorche/heqdatacomp.git
  – The code is partial; I will check in the AWS demo at the presentation.