Hardware-Software Codesign

Kermin Fleming, Computer Science & Artificial Intelligence Lab, Massachusetts Institute of Technology

Many slides produced by: Arvind, Myron King, Man Cheuk Ng, Angshuman Parashar

March 14, 2011, http://csg.csail.mit.edu/6.375

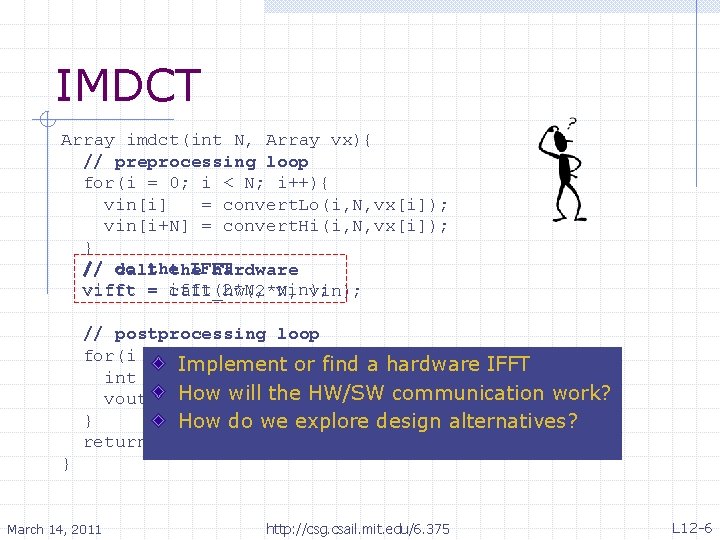

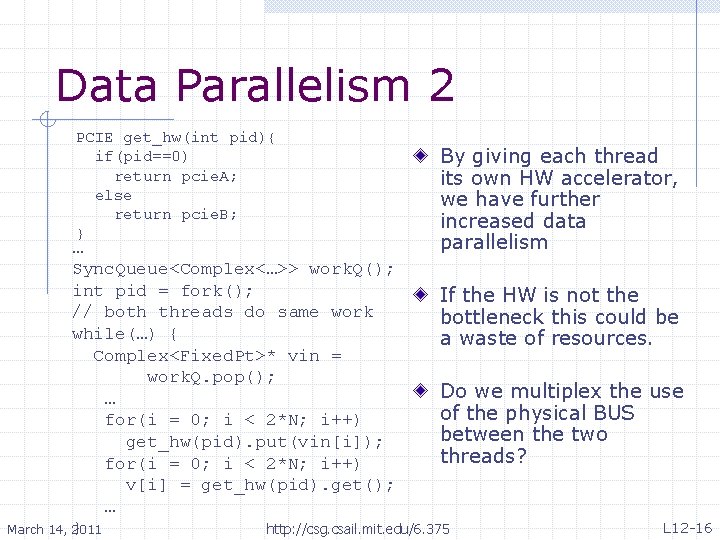

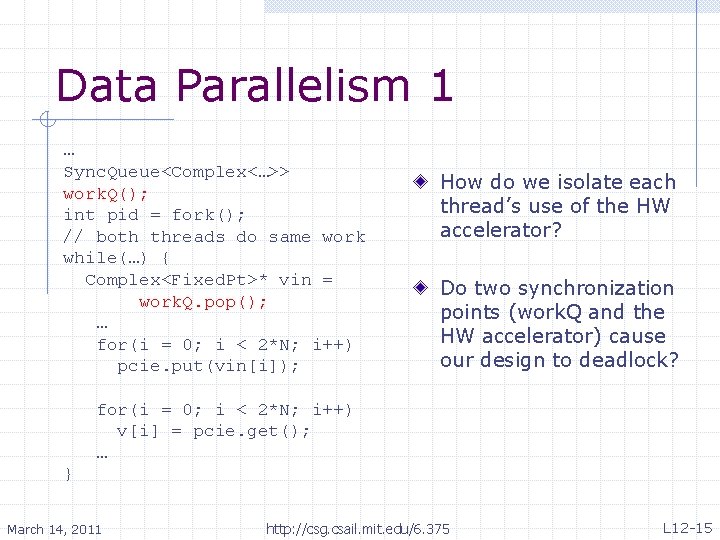

Hello, world!

    int main (int argc, char* argv[]) {
      int n = atoi(argv[1]);
      for (int i = 0; i < n; i++) {
        printf("Hello, world!\n");
      }
      return 0;
    }

    module mkHello#(TOP_LEVEL_WIRES wires);
      CHANNEL_IFC channel <- mkChannel(wires); // has a software counterpart
      Reg#(Bit#(8)) count <- mkReg(0);
      Reg#(Bit#(5)) state <- mkReg(0);

      rule init (count == 0);
        count <- channel.recv();
        state <= 0;
      endrule

      rule hello (count != 0);
        case (state)
          0: channel.send('H');
          1: channel.send('e');
          2: channel.send('l');
          3: channel.send('l');
          ...
          16: count <= count - 1;
        endcase
        if (state != 16) state <= state + 1;
        else state <= 0;
      endrule
    endmodule

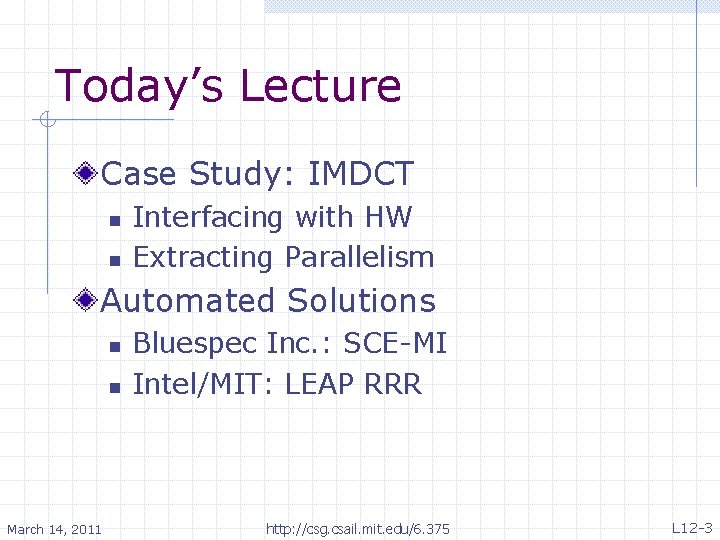

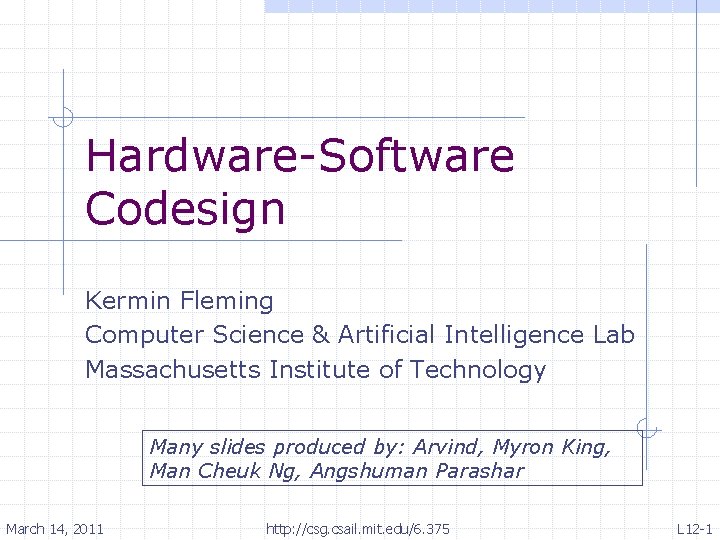

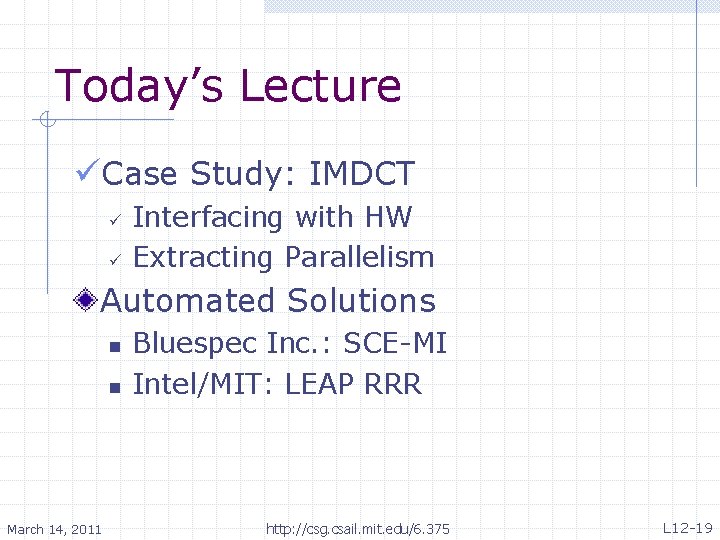

Today's Lecture

- Case Study: IMDCT
  - Interfacing with HW
  - Extracting Parallelism
- Automated Solutions
  - Bluespec Inc.: SCE-MI
  - Intel/MIT: LEAP RRR

Ogg Vorbis Pipeline

Ogg Vorbis is an audio compression format roughly comparable to other compression formats, e.g. MP3, AAC, WMA.

Pipeline stages: Bits → Stream Parser → Residue Decoder and Floor Decoder → IMDCT → Windowing → PCM Output

- Input is a stream of compressed bits
- Parsed into frame residues and floor "predictions"
- The summed frequency results are converted to time-valued sequences
- Final frames are windowed to smooth out irregularities
- IMDCT takes the most computation

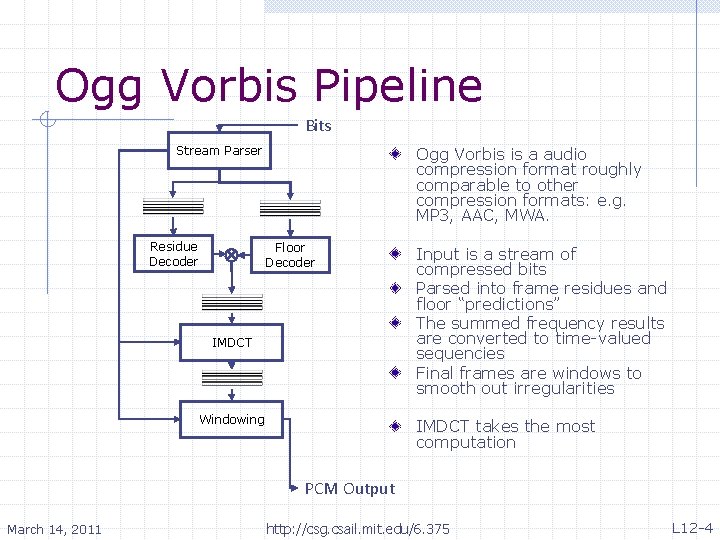

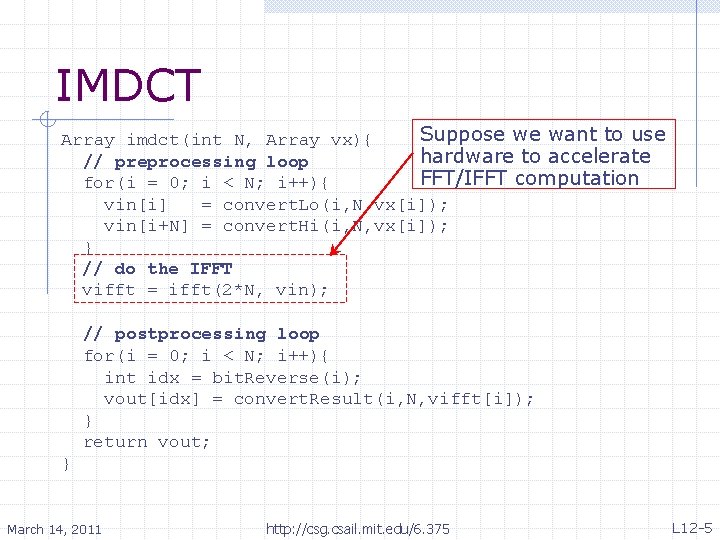

IMDCT

Suppose we want to use hardware to accelerate FFT/IFFT computation.

    Array imdct(int N, Array vx){
      // preprocessing loop
      for(i = 0; i < N; i++){
        vin[i]   = convertLo(i, N, vx[i]);
        vin[i+N] = convertHi(i, N, vx[i]);
      }
      // do the IFFT
      vifft = ifft(2*N, vin);
      // postprocessing loop
      for(i = 0; i < N; i++){
        int idx = bitReverse(i);
        vout[idx] = convertResult(i, N, vifft[i]);
      }
      return vout;
    }
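
The postprocessing loop depends on `bitReverse`, which the slide does not define. The following is a minimal sketch of one common form of that helper, assuming the frame length is a power of two and the index is reversed over log2(N) bits. The names `bit_reverse` and `index_bits` and the explicit width parameter are assumptions made for illustration, not the course's implementation.

```cpp
#include <cassert>
#include <cstdint>

// Reverse the low `bits` bits of `i`. Hypothetical helper: the slide's
// bitReverse takes only the index, so the width is assumed to follow from N.
std::uint32_t bit_reverse(std::uint32_t i, unsigned bits) {
    std::uint32_t r = 0;
    for (unsigned b = 0; b < bits; ++b) {
        r = (r << 1) | (i & 1u);  // append the current lowest bit of i to r
        i >>= 1;
    }
    return r;
}

// Number of index bits for a power-of-two frame length n.
unsigned index_bits(std::uint32_t n) {
    assert(n != 0 && (n & (n - 1)) == 0 && "n must be a power of two");
    unsigned bits = 0;
    while ((1u << bits) < n) ++bits;
    return bits;
}
```

For a frame of 8 elements, `index_bits(8)` is 3, and index 1 (binary 001) maps to 4 (binary 100).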

IMDCT

    Array imdct(int N, Array vx){
      // preprocessing loop
      for(i = 0; i < N; i++){
        vin[i]   = convertLo(i, N, vx[i]);
        vin[i+N] = convertHi(i, N, vx[i]);
      }
      // do the IFFT: call the hardware
      vifft = call_hw(2*N, vin);  // was: ifft(2*N, vin)
      // postprocessing loop
      for(i = 0; i < N; i++){
        int idx = bitReverse(i);
        vout[idx] = convertResult(i, N, vifft[i]);
      }
      return vout;
    }

- Implement or find a hardware IFFT
- How will the HW/SW communication work?
- How do we explore design alternatives?

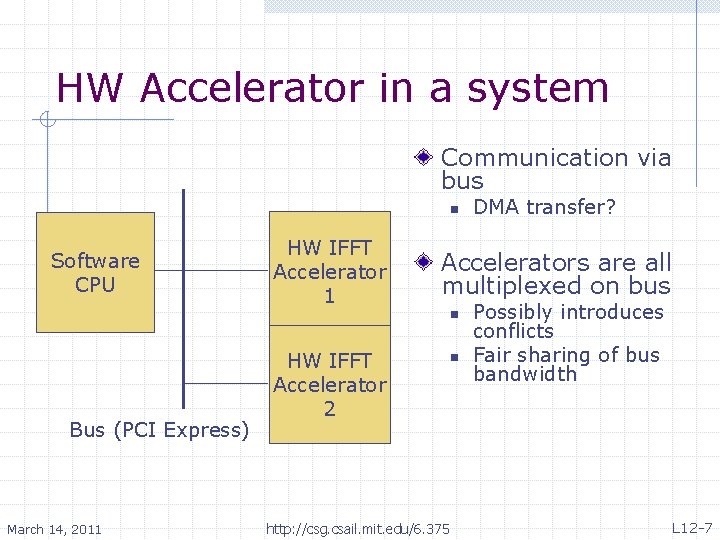

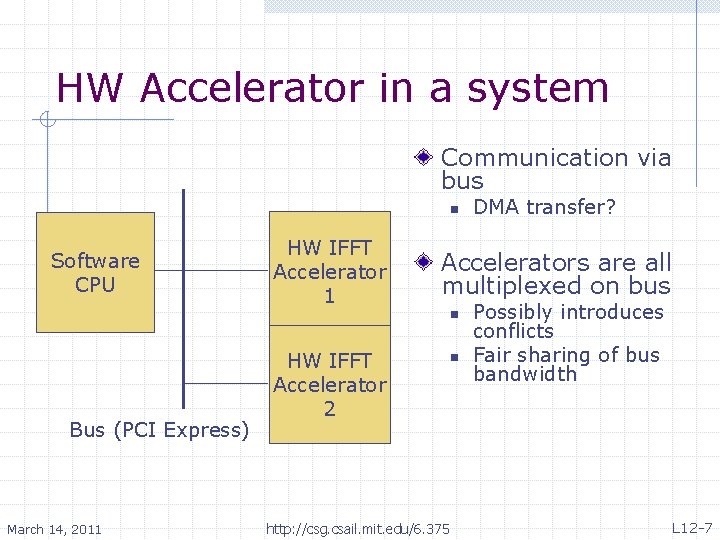

HW Accelerator in a system

(Diagram: software running on the CPU, with HW IFFT Accelerator 1 and HW IFFT Accelerator 2 attached over the bus (PCI Express).)

- Communication via bus
  - DMA transfer?
- Accelerators are all multiplexed on bus
  - Possibly introduces conflicts
  - Fair sharing of bus bandwidth

The HW Interface

- SW calls turn into a set of memory-mapped calls through the bus (PCI Express)
- Three communication tasks
  - setSize: set size of IFFT
  - inputData: enter data stream
  - outputData: take output out
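
The three communication tasks can be sketched as a thin software wrapper. This is a minimal illustration, assuming a stand-in device (`FakeIfftDevice`) that returns its input unchanged so the code runs without hardware. The class names and `run_frame` are hypothetical; on a real system each method would become a load or store to a memory-mapped bus address.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

// Stand-in for the accelerator so the sketch runs without hardware.
// It hands back the words it was given; a real device would return the IFFT.
struct FakeIfftDevice {
    std::uint32_t size = 0;
    std::deque<std::uint32_t> words;
};

// Hypothetical software-side wrapper exposing the three tasks from the slide.
class IfftInterface {
public:
    explicit IfftInterface(FakeIfftDevice& dev) : dev_(dev) {}

    void setSize(std::uint32_t n) { dev_.size = n; }               // set size of IFFT
    void inputData(std::uint32_t w) { dev_.words.push_back(w); }   // enter data stream
    std::uint32_t outputData() {                                   // take output out
        assert(!dev_.words.empty() && "no output available");
        std::uint32_t w = dev_.words.front();
        dev_.words.pop_front();
        return w;
    }

private:
    FakeIfftDevice& dev_;
};

// Push one frame through the interface and collect what comes back.
std::vector<std::uint32_t> run_frame(IfftInterface& hw,
                                     const std::vector<std::uint32_t>& in) {
    hw.setSize(static_cast<std::uint32_t>(in.size()));
    for (std::uint32_t w : in) hw.inputData(w);
    std::vector<std::uint32_t> out;
    for (std::size_t i = 0; i < in.size(); ++i) out.push_back(hw.outputData());
    return out;
}
```

Keeping the device behind a small interface like this also makes it possible to swap the fake for a real bus driver without touching the calling code.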

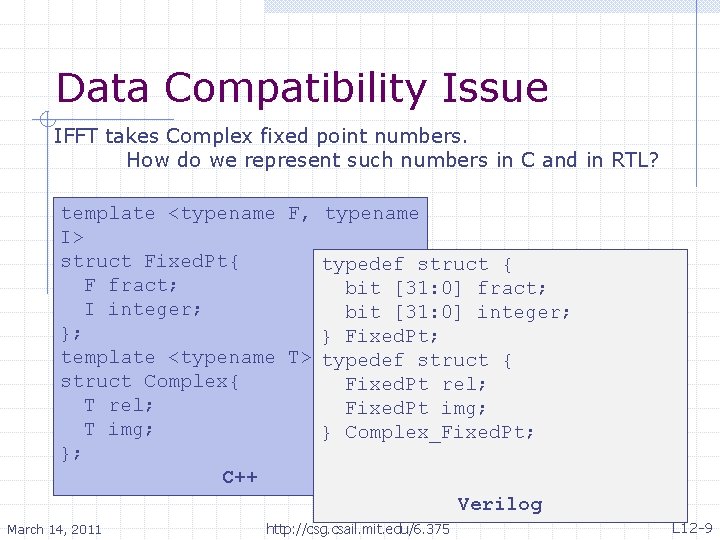

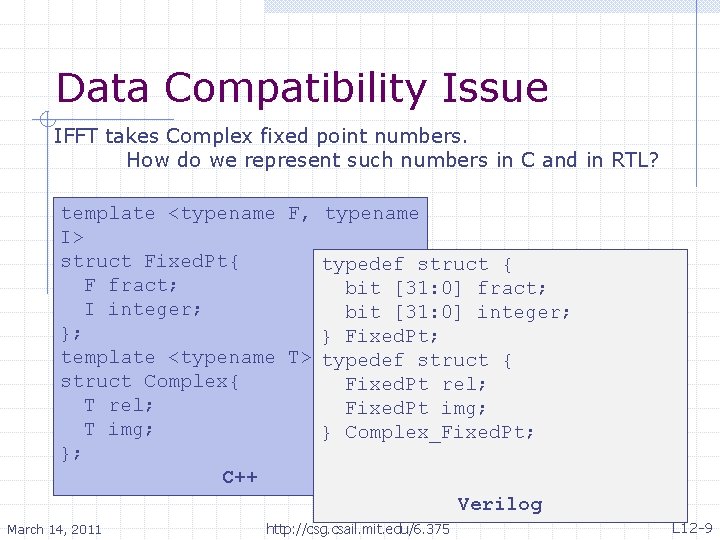

Data Compatibility Issue

IFFT takes Complex fixed point numbers. How do we represent such numbers in C and in RTL?

C++

    template <typename F, typename I>
    struct FixedPt {
      F fract;
      I integer;
    };

    template <typename T>
    struct Complex {
      T rel;
      T img;
    };

Verilog

    typedef struct {
      bit [31:0] fract;
      bit [31:0] integer;
    } FixedPt;

    typedef struct {
      FixedPt rel;
      FixedPt img;
    } Complex_FixedPt;

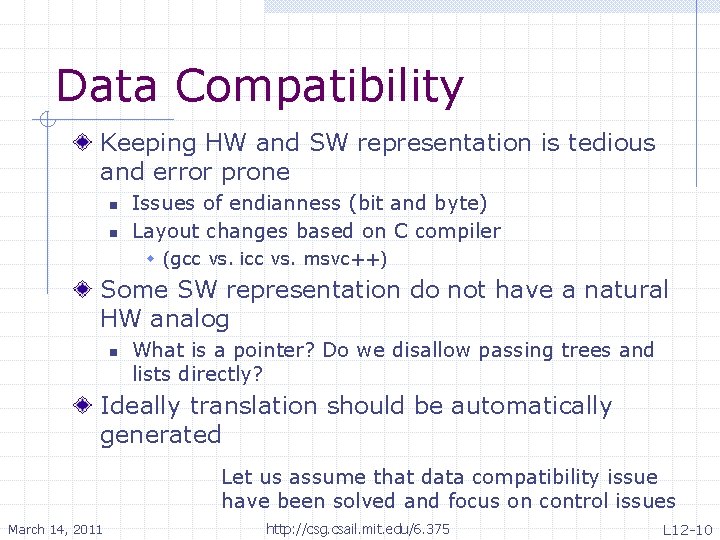

Data Compatibility

- Keeping HW and SW representations consistent is tedious and error prone
  - Issues of endianness (bit and byte)
  - Layout changes based on C compiler (gcc vs. icc vs. msvc++)
- Some SW representations do not have a natural HW analog
  - What is a pointer? Do we disallow passing trees and lists directly?
- Ideally translation should be automatically generated

Let us assume that the data compatibility issues have been solved and focus on control issues.
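
One way to sidestep compiler-dependent layout and host endianness is to serialize each field explicitly instead of copying the struct's memory. The sketch below assumes 32-bit fields, little-endian byte order on the wire, and the field order `rel.fract`, `rel.integer`, `img.fract`, `img.integer`. All of these are illustrative assumptions, and the hardware side would have to agree on the same order.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

struct FixedPt { std::int32_t fract; std::int32_t integer; };
struct ComplexFixedPt { FixedPt rel; FixedPt img; };

using Wire = std::array<std::uint8_t, 16>;  // assumed 128-bit wire format

// Write a 32-bit value least-significant byte first, whatever the host order.
void put_le32(std::uint8_t* out, std::uint32_t v) {
    for (int b = 0; b < 4; ++b) out[b] = static_cast<std::uint8_t>(v >> (8 * b));
}

// Read a 32-bit value that was written least-significant byte first.
std::uint32_t get_le32(const std::uint8_t* in) {
    std::uint32_t v = 0;
    for (int b = 0; b < 4; ++b) v |= static_cast<std::uint32_t>(in[b]) << (8 * b);
    return v;
}

// Field-by-field packing: no dependence on struct padding or alignment.
Wire pack(const ComplexFixedPt& c) {
    Wire w{};
    put_le32(w.data() + 0,  static_cast<std::uint32_t>(c.rel.fract));
    put_le32(w.data() + 4,  static_cast<std::uint32_t>(c.rel.integer));
    put_le32(w.data() + 8,  static_cast<std::uint32_t>(c.img.fract));
    put_le32(w.data() + 12, static_cast<std::uint32_t>(c.img.integer));
    return w;
}

ComplexFixedPt unpack(const Wire& w) {
    ComplexFixedPt c;
    c.rel.fract   = static_cast<std::int32_t>(get_le32(w.data() + 0));
    c.rel.integer = static_cast<std::int32_t>(get_le32(w.data() + 4));
    c.img.fract   = static_cast<std::int32_t>(get_le32(w.data() + 8));
    c.img.integer = static_cast<std::int32_t>(get_le32(w.data() + 12));
    return c;
}
```

Hand-written packers like this are exactly the tedious part the slide refers to, which is why generating them from a single type description is attractive.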

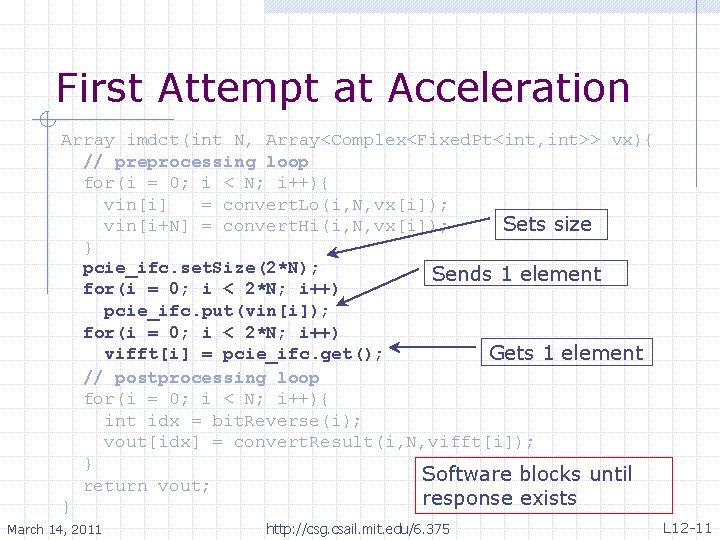

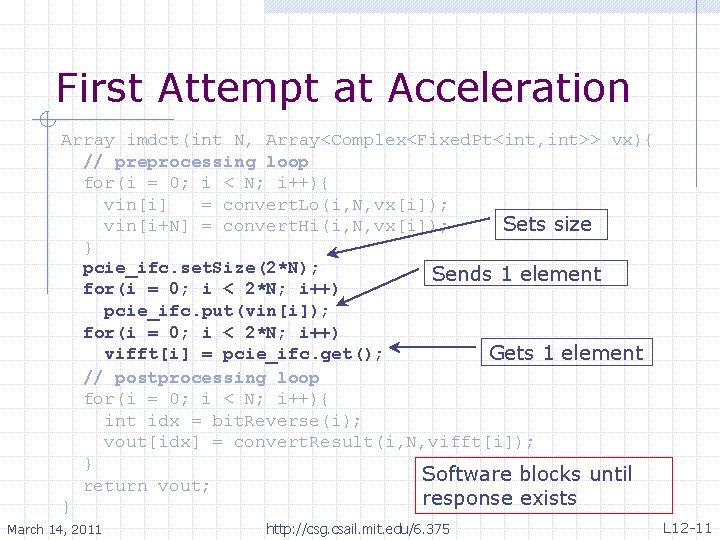

First Attempt at Acceleration

    Array imdct(int N, Array<Complex<FixedPt<int,int>>> vx){
      // preprocessing loop
      for(i = 0; i < N; i++){
        vin[i]   = convertLo(i, N, vx[i]);
        vin[i+N] = convertHi(i, N, vx[i]);
      }
      pcie_ifc.setSize(2*N);        // sets size
      for(i = 0; i < 2*N; i++)
        pcie_ifc.put(vin[i]);       // sends 1 element
      for(i = 0; i < 2*N; i++)
        vifft[i] = pcie_ifc.get();  // gets 1 element
      // postprocessing loop
      for(i = 0; i < N; i++){
        int idx = bitReverse(i);
        vout[idx] = convertResult(i, N, vifft[i]);
      }
      return vout;
    }

Software blocks until a response exists.

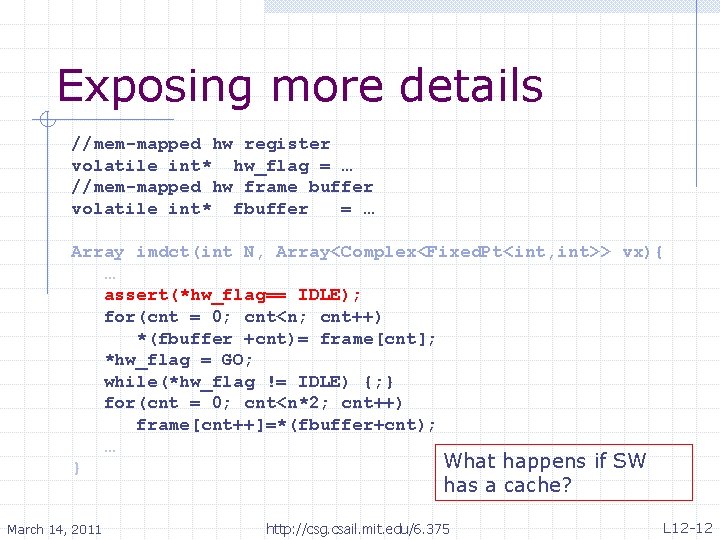

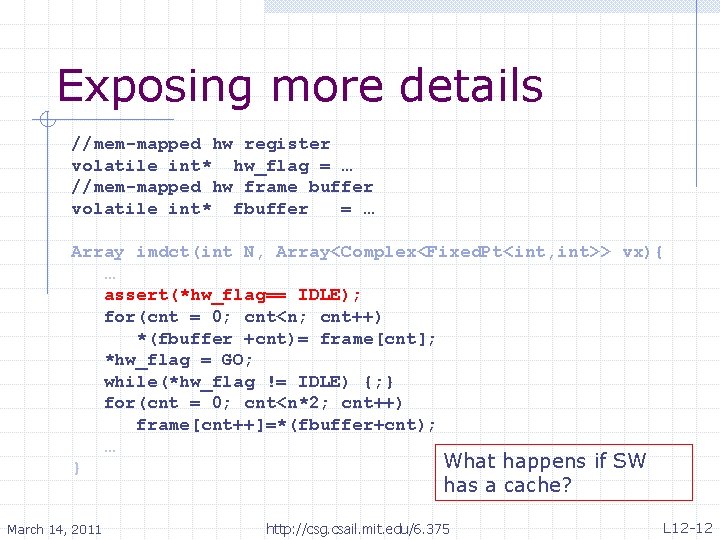

Exposing more details

    // mem-mapped hw register
    volatile int* hw_flag = …
    // mem-mapped hw frame buffer
    volatile int* fbuffer = …

    Array imdct(int N, Array<Complex<FixedPt<int,int>>> vx){
      …
      assert(*hw_flag == IDLE);
      for(cnt = 0; cnt < n; cnt++)
        *(fbuffer + cnt) = frame[cnt];
      *hw_flag = GO;
      while(*hw_flag != IDLE) {;}
      for(cnt = 0; cnt < n*2; cnt++)
        frame[cnt] = *(fbuffer + cnt);  // index advanced only by the loop header
      …
    }

What happens if SW has a cache?

Issues

- Are the internal hardware conditions exposed correctly by the hw_flag control register?
- Blocking SW is problematic:
  - Prevents the processor from doing anything while the accelerator is in use
  - Hard to pipeline the accelerator
  - Does not handle variation in timing well

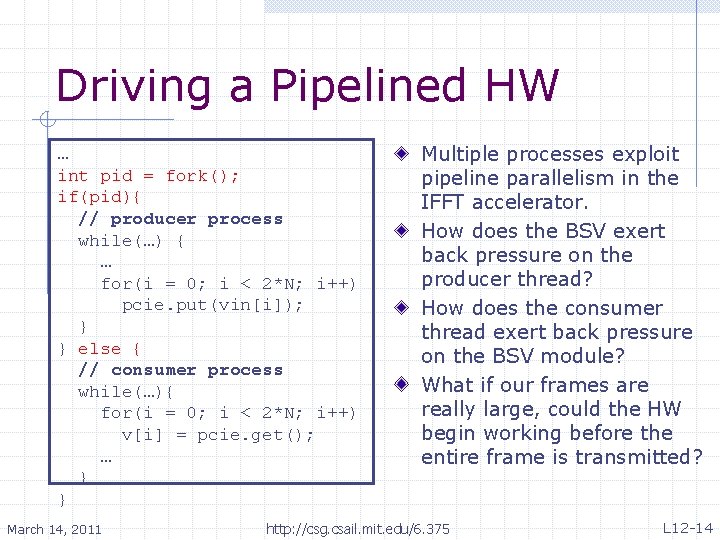

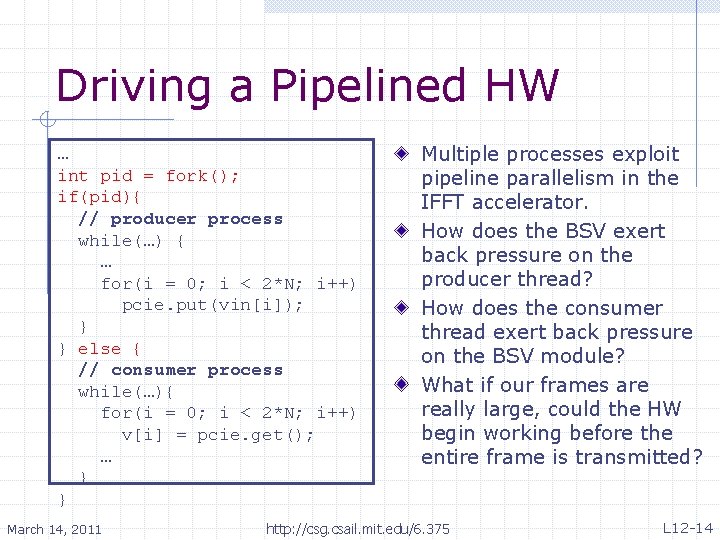

Driving a Pipelined HW

    …
    int pid = fork();
    if(pid){ // producer process
      while(…) {
        …
        for(i = 0; i < 2*N; i++)
          pcie.put(vin[i]);
      }
    } else { // consumer process
      while(…){
        for(i = 0; i < 2*N; i++)
          v[i] = pcie.get();
        …
      }
    }

- Multiple processes exploit pipeline parallelism in the IFFT accelerator.
- How does the BSV exert back pressure on the producer thread?
- How does the consumer thread exert back pressure on the BSV module?
- What if our frames are really large: could the HW begin working before the entire frame is transmitted?
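
Back pressure between a producer and a consumer can be illustrated in software alone with a bounded blocking FIFO. This is a sketch under stated assumptions: it uses `std::thread` rather than `fork()`, and the `BoundedFifo` class stands in for the channel to the accelerator. It is not how the BSV module or the PCIe driver implements flow control.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Bounded blocking FIFO: put() waits while full (back pressure on the
// producer), get() waits while empty (back pressure on the consumer).
template <typename T>
class BoundedFifo {
public:
    explicit BoundedFifo(std::size_t capacity) : capacity_(capacity) {}

    void put(T v) {
        std::unique_lock<std::mutex> lock(m_);
        not_full_.wait(lock, [this] { return q_.size() < capacity_; });
        q_.push(std::move(v));
        if (q_.size() > high_water_) high_water_ = q_.size();
        not_empty_.notify_one();
    }

    T get() {
        std::unique_lock<std::mutex> lock(m_);
        not_empty_.wait(lock, [this] { return !q_.empty(); });
        T v = std::move(q_.front());
        q_.pop();
        not_full_.notify_one();
        return v;
    }

    // Largest occupancy ever observed; never exceeds the capacity.
    std::size_t high_water() {
        std::lock_guard<std::mutex> lock(m_);
        return high_water_;
    }

private:
    std::size_t capacity_;
    std::size_t high_water_ = 0;
    std::queue<T> q_;
    std::mutex m_;
    std::condition_variable not_full_, not_empty_;
};

struct DemoResult { long sum; std::size_t high_water; };

// Producer sends 0..n-1, consumer sums them; the FIFO bounds how far the
// producer can run ahead of the consumer.
DemoResult run_pipeline_demo(int n, std::size_t capacity) {
    BoundedFifo<int> fifo(capacity);
    long sum = 0;
    std::thread producer([&] { for (int i = 0; i < n; ++i) fifo.put(i); });
    std::thread consumer([&] { for (int i = 0; i < n; ++i) sum += fifo.get(); });
    producer.join();
    consumer.join();
    return {sum, fifo.high_water()};
}
```

Because the consumer can start draining as soon as the first element arrives, the same structure also suggests how processing might begin before a whole frame has been transmitted.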

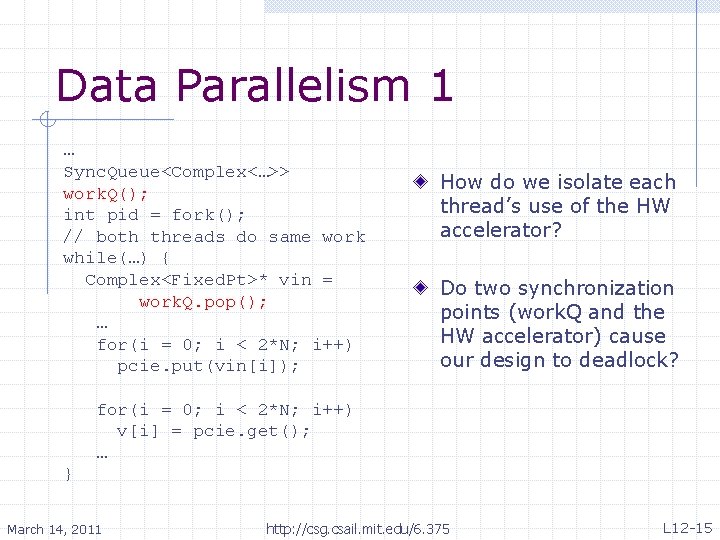

Data Parallelism 1

    …
    SyncQueue<Complex<…>> workQ();
    int pid = fork();
    // both threads do same work
    while(…) {
      Complex<FixedPt>* vin = workQ.pop();
      …
      for(i = 0; i < 2*N; i++)
        pcie.put(vin[i]);
      for(i = 0; i < 2*N; i++)
        v[i] = pcie.get();
      …
    }

- How do we isolate each thread's use of the HW accelerator?
- Do two synchronization points (workQ and the HW accelerator) cause our design to deadlock?
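
One possible answer to the isolation question is to hold a lock for the whole put-then-get transaction, so elements from different threads never interleave on the shared channel. The sketch below assumes a stand-in channel (`FakePcie`) that returns its input unchanged. `SharedAccelerator`, `process_frame`, and `run_two_thread_demo` are hypothetical names, and the approach trades away pipelining for simplicity.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <mutex>
#include <thread>
#include <vector>

// Stand-in for the single shared accelerator channel (returns inputs unchanged).
class FakePcie {
public:
    void put(int v) { q_.push_back(v); }
    int get() {
        assert(!q_.empty());
        int v = q_.front();
        q_.pop_front();
        return v;
    }
private:
    std::deque<int> q_;
};

struct SharedAccelerator {
    FakePcie pcie;
    std::mutex lock;  // guards one whole frame transaction
};

// Send a whole frame and read the whole result while holding the lock, so
// another thread's elements can never interleave with ours.
std::vector<int> process_frame(SharedAccelerator& hw, const std::vector<int>& vin) {
    std::lock_guard<std::mutex> guard(hw.lock);
    for (int v : vin) hw.pcie.put(v);
    std::vector<int> out;
    out.reserve(vin.size());
    for (std::size_t i = 0; i < vin.size(); ++i) out.push_back(hw.pcie.get());
    return out;
}

// Two threads each push many frames through the shared accelerator and
// check that every frame comes back intact.
bool run_two_thread_demo(int frames) {
    SharedAccelerator hw;
    bool ok[2] = {true, true};
    auto worker = [&](int id) {
        std::vector<int> frame(8, id);  // frame filled with this thread's id
        for (int f = 0; f < frames; ++f)
            if (process_frame(hw, frame) != frame) ok[id] = false;
    };
    std::thread a(worker, 0), b(worker, 1);
    a.join();
    b.join();
    return ok[0] && ok[1];
}
```

In this sketch the accelerator lock is taken only after the work item is in hand and is never held while waiting on another synchronization point, which is one way to keep the two synchronization points from forming a cycle.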

Data Parallelism 2

    PCIE get_hw(int pid){
      if(pid==0) return pcieA;
      else return pcieB;
    }
    …
    SyncQueue<Complex<…>> workQ();
    int pid = fork();
    // both threads do same work
    while(…) {
      Complex<FixedPt>* vin = workQ.pop();
      …
      for(i = 0; i < 2*N; i++)
        get_hw(pid).put(vin[i]);
      for(i = 0; i < 2*N; i++)
        v[i] = get_hw(pid).get();
      …
    }

- By giving each thread its own HW accelerator, we have further increased data parallelism.
- If the HW is not the bottleneck this could be a waste of resources.
- Do we multiplex the use of the physical bus between the two threads?

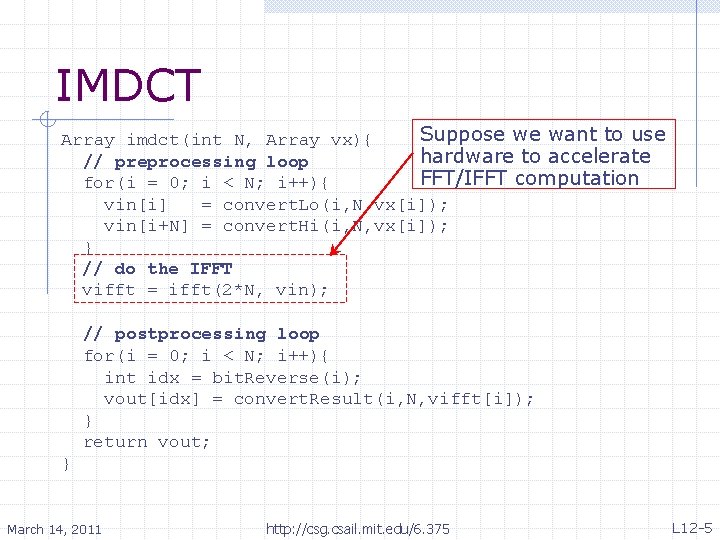

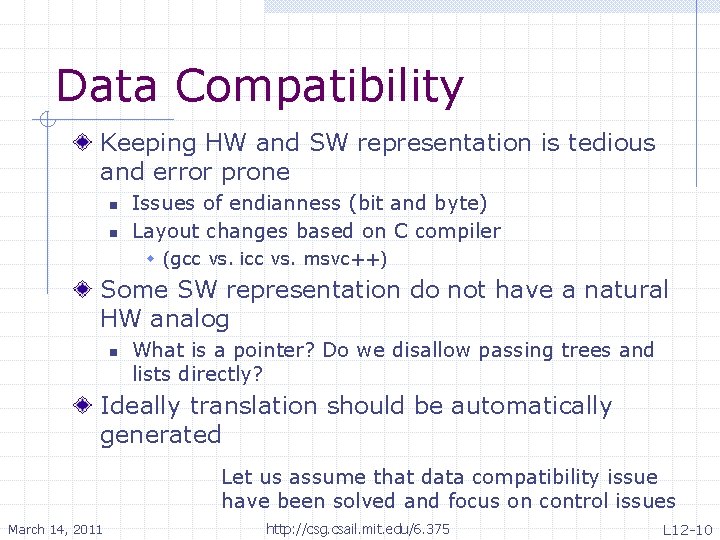

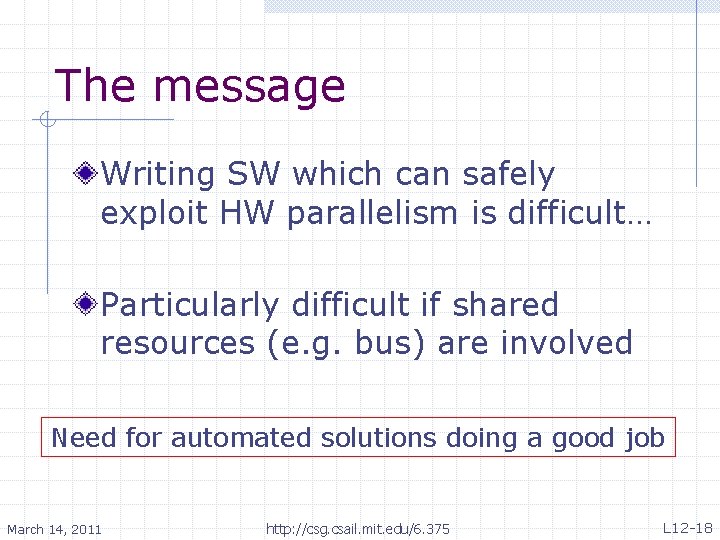

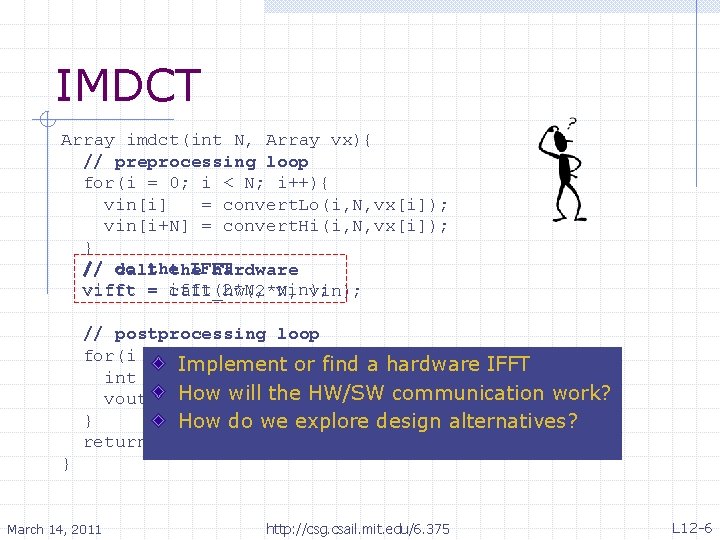

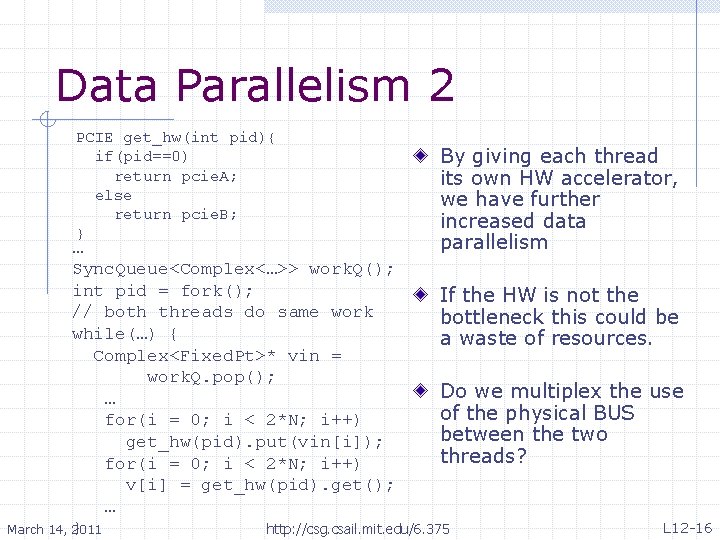

Multithreading without threads or processes

    int icnt = 0, ocnt = 0;
    Complex iframe[sz];
    Complex oframe[sz];
    …
    // IMDCT loop
    while(…){
      …
      // producer "thread"
      for(i = 0; i < 2 && icnt < n; i++)
        if(pcie.can_put())
          pcie.put(iframe[icnt++]);
      // consumer "thread"
      for(i = 0; i < 2 && ocnt < n*2; i++)
        if(pcie.can_get())
          oframe[ocnt++] = pcie.get();
      …
    }

- Embedded execution environments often have little or no OS support, so multithreading must be emulated in user code
- Getting the arbitration right is a complex task
- All existing issues are compounded with the complexity of the duplicated states for each "thread"
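
The polling pattern above can be exercised on its own with a small stand-in channel. This is a minimal sketch, assuming a channel (`PolledChannel`) with a fixed buffer depth that returns each value unchanged, and assuming the output frame has the same length as the input (the slide reads back `n*2` elements). `pump` and its `burst` parameter are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

// Stand-in channel with a small buffer; can_put/can_get mirror the slide's
// names. Each value put in comes out unchanged.
class PolledChannel {
public:
    explicit PolledChannel(std::size_t depth) : depth_(depth) { assert(depth > 0); }
    bool can_put() const { return in_flight_.size() < depth_; }
    bool can_get() const { return !in_flight_.empty(); }
    void put(int v) { assert(can_put()); in_flight_.push_back(v); }
    int get() {
        assert(can_get());
        int v = in_flight_.front();
        in_flight_.pop_front();
        return v;
    }
private:
    std::size_t depth_;
    std::deque<int> in_flight_;
};

// One loop interleaves a producer "thread" and a consumer "thread", each
// allowed at most `burst` transfers per iteration so neither starves the other.
std::vector<int> pump(PolledChannel& pcie, const std::vector<int>& iframe, int burst = 2) {
    std::vector<int> oframe;
    std::size_t icnt = 0;
    while (oframe.size() < iframe.size()) {
        for (int i = 0; i < burst && icnt < iframe.size(); ++i)
            if (pcie.can_put()) pcie.put(iframe[icnt++]);
        for (int i = 0; i < burst && oframe.size() < iframe.size(); ++i)
            if (pcie.can_get()) oframe.push_back(pcie.get());
    }
    return oframe;
}
```

The burst limit is the arbitration policy here: changing it shifts how the loop's time is divided between sending and receiving.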

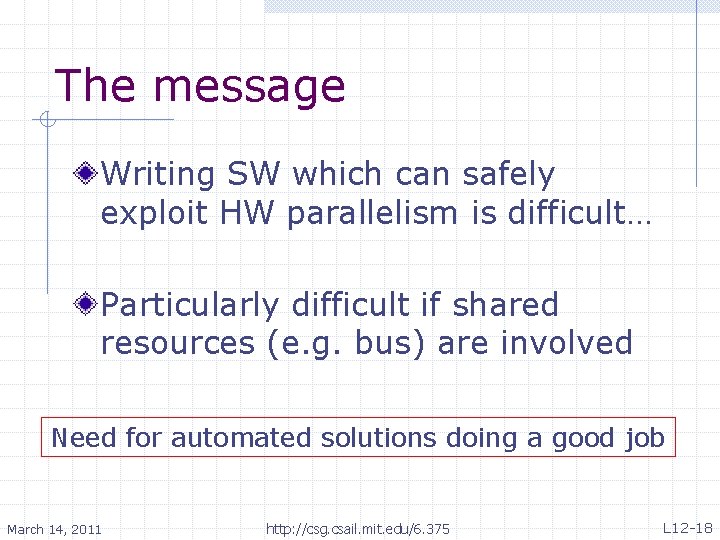

The message

- Writing SW which can safely exploit HW parallelism is difficult…
- Particularly difficult if shared resources (e.g. bus) are involved
- Need for automated solutions doing a good job

Today's Lecture

- ✓ Case Study: IMDCT
  - ✓ Interfacing with HW
  - ✓ Extracting Parallelism
- Automated Solutions
  - Bluespec Inc.: SCE-MI
  - Intel/MIT: LEAP RRR

Bluespec Co-design: SCE-MI

- Circuit verification is difficult
  - Billions of cycles of gate-level simulation

| Technology | Cycles/sec* |
| --- | --- |
| Bluesim | 4K |
| FPGA | 20M |
| ASIC | 60M+ |

*Target: WiFi Transceiver

- How do we retain cycle accuracy?
  - Use SCE-MI
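
To put the table's rates in perspective, the small sketch below converts a cycle count into wall-clock time. The one-billion-cycle workload is an assumed round number chosen for illustration, and `seconds_to_simulate` is a hypothetical helper.

```cpp
#include <cassert>

// Wall-clock seconds needed to run `cycles` at a rate of `cycles_per_sec`.
double seconds_to_simulate(double cycles, double cycles_per_sec) {
    assert(cycles_per_sec > 0.0);
    return cycles / cycles_per_sec;
}
```

For an assumed 10^9 cycles, `seconds_to_simulate(1e9, 4e3)` gives 250,000 seconds (almost three days) at the Bluesim rate, while `seconds_to_simulate(1e9, 20e6)` gives 50 seconds at the FPGA rate.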

SCE-MI

- Use gated clocks to preserve cycle accuracy
  - Circuit internals run at the "Model Clock"
  - The model clock ticks only when inputs and outputs to the circuit stabilize
  - Another co-design problem

Bluespec SCE-MI

- Used already in Lab
  - With a controlled clock on the FPGA
- Bluespec has a rich SCE-MI library
  - Get/Put transactors provided
  - User provides C++ and HW transactors for exotic interfaces

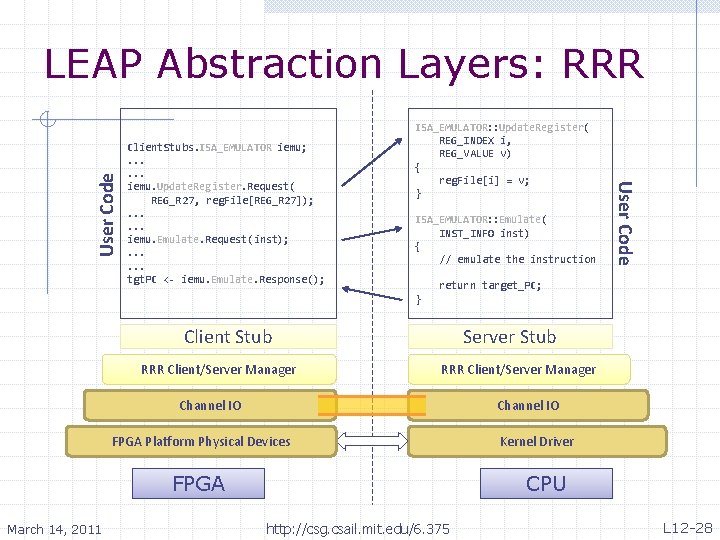

Intel/MIT: LEAP RRR

- Asynchronous Remote Request-Response stack for FPGA
  - Uses common Client/Server paradigm
- Similar in many respects to Bluespec SCE-MI
  - Constrained user interface
  - Open, many platforms supported

Client/Server interfaces

Get/Put pairs are very common, and duals of each other, so the library defines Client/Server interface types for this purpose.

    interface Server #(req_t, resp_t);
      interface Put#(req_t) request;
      interface Get#(resp_t) response;
    endinterface

    interface Client #(req_t, resp_t);
      interface Get#(req_t) request;
      interface Put#(resp_t) response;
    endinterface

(Diagram: a client's get and put ports wired to a server's put and get ports. Each port carries ready, enable, and data signals, with data of type req_t or resp_t.)
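
The duality between the two interface types can be mimicked in C++ to show how a client and a server plug together. This is a loose software analogy under stated assumptions, not the BSV library: `Get`, `Put`, `Fifo`, `connect_client_server`, and `client_server_demo` are hypothetical names, and the "server" here simply doubles each request.

```cpp
#include <cassert>
#include <deque>

// Software analogs of the Get and Put interfaces.
template <typename T>
struct Get {
    virtual ~Get() = default;
    virtual bool ready() const = 0;
    virtual T get() = 0;
};

template <typename T>
struct Put {
    virtual ~Put() = default;
    virtual void put(const T& v) = 0;
};

// A FIFO offers both ends: values put in on one side are got on the other.
template <typename T>
class Fifo : public Get<T>, public Put<T> {
public:
    bool ready() const override { return !q_.empty(); }
    T get() override {
        assert(!q_.empty());
        T v = q_.front();
        q_.pop_front();
        return v;
    }
    void put(const T& v) override { q_.push_back(v); }
private:
    std::deque<T> q_;
};

// A server accepts requests (Put) and yields responses (Get);
// a client is the dual: it yields requests and accepts responses.
template <typename Req, typename Resp>
struct Server { Put<Req>& request; Get<Resp>& response; };

template <typename Req, typename Resp>
struct Client { Get<Req>& request; Put<Resp>& response; };

// Move everything currently available in both directions.
template <typename Req, typename Resp>
void connect_client_server(Client<Req, Resp> c, Server<Req, Resp> s) {
    while (c.request.ready()) s.request.put(c.request.get());
    while (s.response.ready()) c.response.put(s.response.get());
}

int client_server_demo() {
    Fifo<int> client_req, client_resp;  // client-side buffers
    Fifo<int> server_req, server_resp;  // server-side buffers
    Client<int, int> client{client_req, client_resp};
    Server<int, int> server{server_req, server_resp};

    client_req.put(21);                      // client issues a request
    connect_client_server(client, server);   // request flows to the server
    while (server_req.ready())               // server body: double each request
        server_resp.put(2 * server_req.get());
    connect_client_server(client, server);   // response flows back
    return client_resp.get();
}
```

Because each side's `Get` lines up with the other side's `Put`, a single connect routine is enough to join any client to any server with matching request and response types.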

RRR Specification Language

    // ----------------------------------------
    // create a new service called ISA_EMULATOR
    // ----------------------------------------
    service ISA_EMULATOR
    {
        // ----------------------------------------
        // declare services provided by CPU
        // ----------------------------------------
        server CPU <- FPGA;
        {
            method UpdateRegister(in REG_INDEX, in REG_VALUE);
            method Emulate(in INST_INFO, out INST_ADDR);
        };

        // ----------------------------------------
        // declare services provided by FPGA
        // ----------------------------------------
        server FPGA <- CPU;
        {
            method SyncRegister(in REG_INDEX, in REG_VALUE);
        };
    };

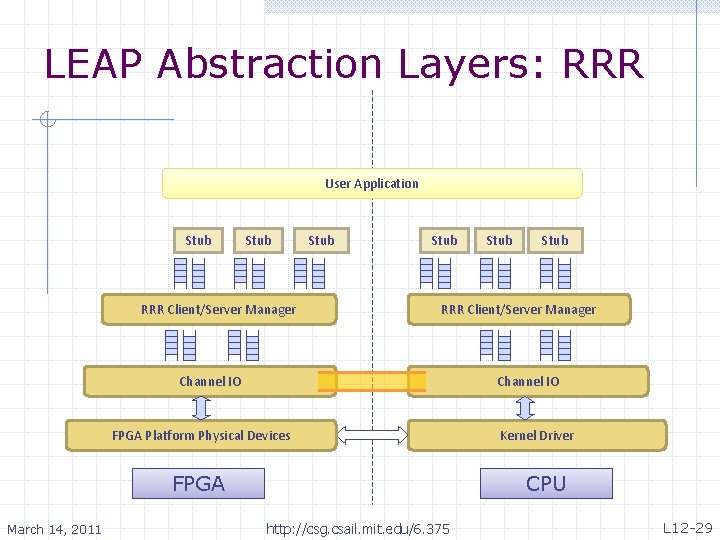

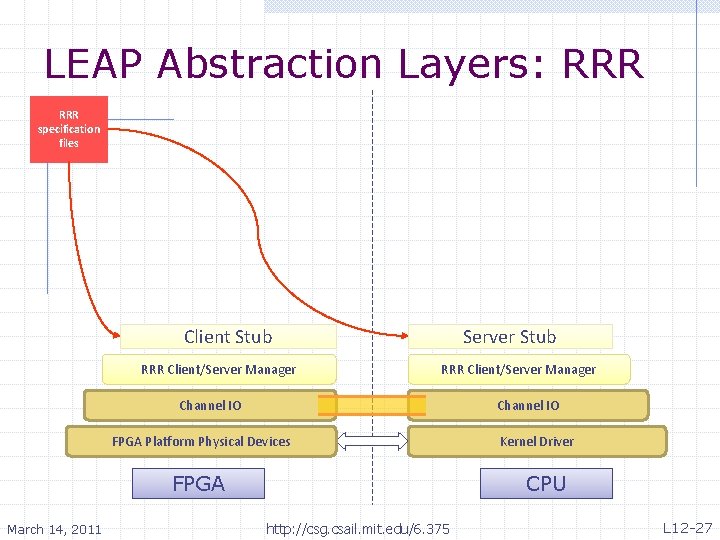

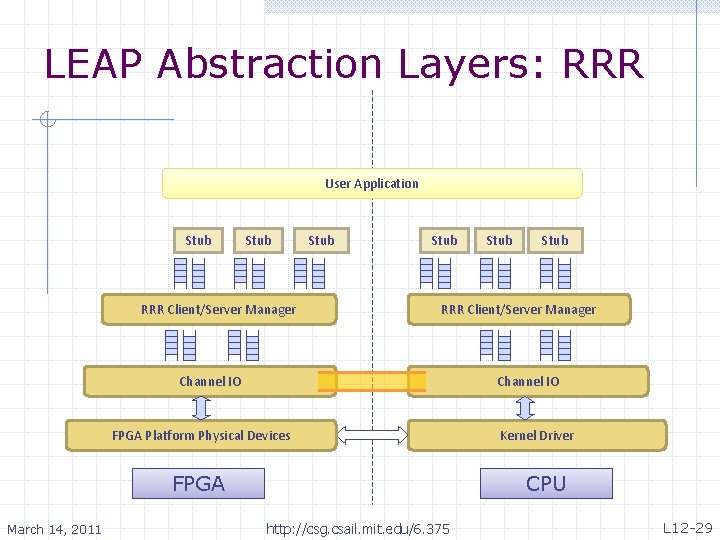

LEAP Abstraction Layers: RRR

(Diagram: layer stack spanning the FPGA and CPU sides. Labels: Channel IO, FPGA Platform Physical Devices, Kernel Driver.)

LEAP Abstraction Layers: RRR

(Diagram: the same stack with RRR layers added on top. Labels: RRR specification files, Client Stub, Server Stub, RRR Client/Server Manager on each side, Channel IO, FPGA Platform Physical Devices, Kernel Driver.)

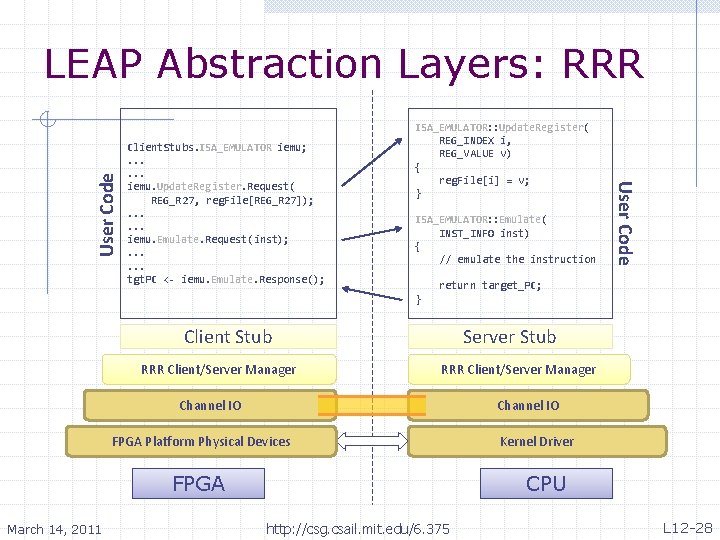

LEAP Abstraction Layers: RRR

User code on the FPGA side:

    ClientStubs.ISA_EMULATOR iemu;
    ...
    iemu.UpdateRegisterRequest(REG_R27, regFile[REG_R27]);
    ...
    iemu.EmulateRequest(inst);
    ...
    tgtPC <- iemu.EmulateResponse();

User code on the CPU side:

    ISA_EMULATOR::UpdateRegister(REG_INDEX i, REG_VALUE v)
    {
        regFile[i] = v;
    }

    ISA_EMULATOR::Emulate(INST_INFO inst)
    {
        // emulate the instruction
        return target_PC;
    }

(Diagram: User Code sits above the Client Stub and Server Stub, followed by the RRR Client/Server Manager on each side, Channel IO, FPGA Platform Physical Devices, and Kernel Driver.)

LEAP Abstraction Layers: RRR

(Diagram: a User Application spans several stubs. Labels: User Application, Stub (three instances), RRR Client/Server Manager on each side, Channel IO, FPGA Platform Physical Devices, Kernel Driver.)

Conclusion

- Writing SW which can safely exploit HW parallelism is difficult…
- Several automated tools are available
  - Development ongoing