Hadoop Installation and MapReduce Programming

By Dr. U. S. N. Raju, Asst. Professor, Dept. of CS&E, N. I. T. Warangal

Big Image Data Processing on Hadoop, 12/18/2021

Hadoop can be installed on your system in three different modes:

• Local Standalone mode
• Pseudo-distributed mode
• Fully distributed mode

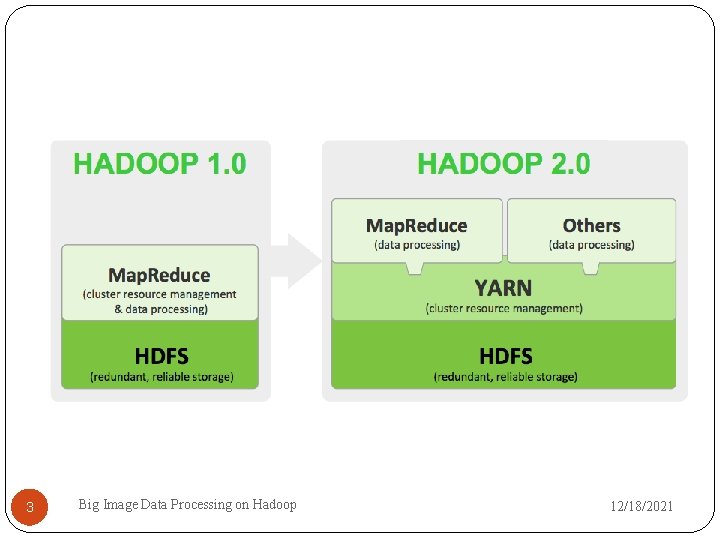


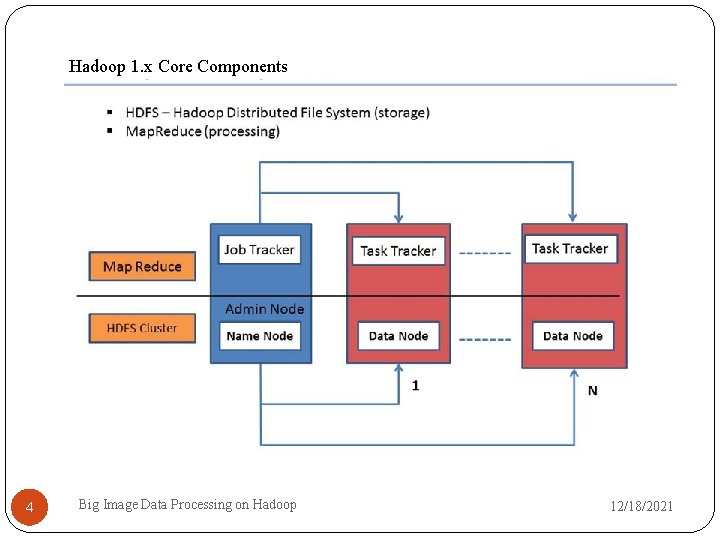

Hadoop 1.x Core Components

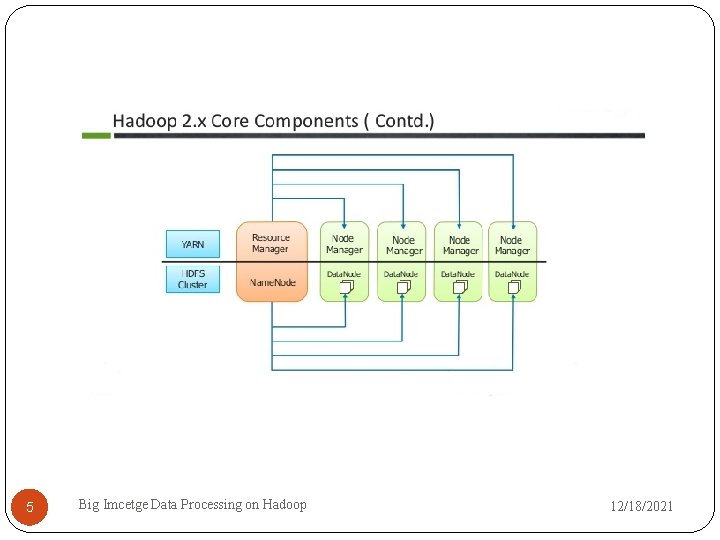


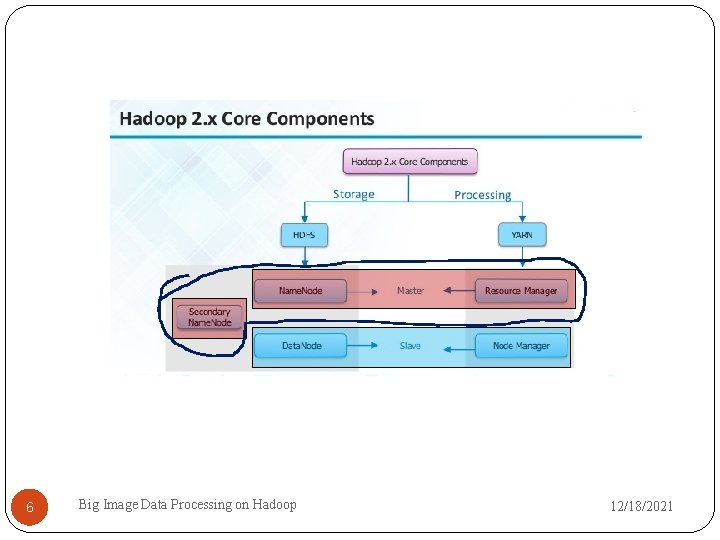


Local Standalone mode: This is the default mode. In this mode, all the components of Hadoop, such as NameNode, DataNode, JobTracker and TaskTracker, run in a single Java process.

Pseudo-distributed mode: In this mode, a separate JVM is spawned for each of the Hadoop components and they communicate across network sockets, effectively giving a fully functioning mini-cluster on a single host.

Fully Distributed mode: In this mode, Hadoop is spread across multiple machines, some of which are general-purpose workers while others are dedicated hosts for components such as NameNode and JobTracker.

Environment Setup: Hadoop is supported on the GNU/Linux platform and its flavors, such as Ubuntu. Therefore, we install an Ubuntu operating system to set up the Hadoop environment. If you have an OS other than Ubuntu, you can install VirtualBox on it and run Ubuntu inside VirtualBox.

I. Local Standalone mode

Step-1: Creating a User in Ubuntu: At the beginning, it is recommended to create a separate user for Hadoop to isolate the Hadoop file system from the Unix file system. In addition, since we need to prepare a cluster, first create a group and then a user in that group.

Follow the steps given below to create a group and a user in that group:

    $ clear
    $ sudo addgroup nitw_viper_group
    $ sudo adduser --ingroup nitw_viper_group nitw_viper_user

The password is ‘nitw’ in both cases.

    $ sudo gedit /etc/sudoers

Add the following line after the line %sudo ALL=(ALL:ALL) ALL:

    %nitw_viper_group ALL=(ALL:ALL) ALL

Change the user from the existing user to nitw_viper_user:

• Log out of the present user.
• Log in with the newly created user “nitw_viper_user”.

After this, if you open the terminal, you should see the username and hostname in the prompt:

    nitw_viper_user@selab5:~$

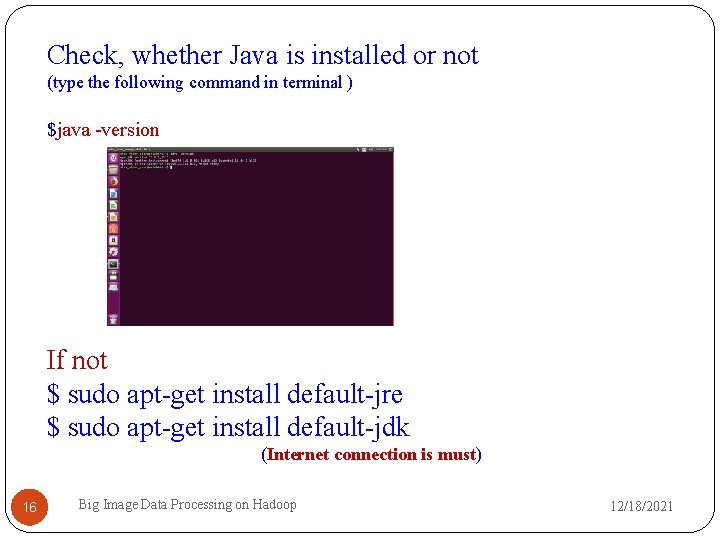

Check whether Java is installed (type the following command in the terminal):

    $ java -version

If it is not installed (an Internet connection is required):

    $ sudo apt-get install default-jre
    $ sudo apt-get install default-jdk

Step-2: SSH Setup and Key Generation in Ubuntu: SSH setup is required to perform different operations on a cluster, such as starting, stopping, and distributed daemon shell operations. To authenticate different users of Hadoop, it is required to provide a public/private key pair for a Hadoop user and share it with different users.

    $ ssh localhost

After typing this, you must be able to connect to localhost and see the following output. If it is working, type exit:

    $ exit

Otherwise, use the following commands (an Internet connection is required):

    $ sudo apt-get install openssh-server
    $ sudo apt-get install vsftpd
    $ ssh localhost
    $ exit

The following commands are used to:

• Generate a key pair using SSH.
• Copy the public key from id_rsa.pub to authorized_keys.
• Give the owner read and write permissions on the authorized_keys file.

    $ ssh-keygen -t rsa

Press Enter for every option during this step.

    $ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
    $ chmod 0600 ~/.ssh/authorized_keys
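Re-running the `cat ... >>` command appends the same key again each time. The following is a minimal sketch of an idempotent variant. The function name `authorize_key` and its two optional path arguments are hypothetical helpers introduced here for illustration; they are not part of SSH or Hadoop.

```shell
# authorize_key [PUBKEY_FILE] [AUTHORIZED_KEYS_FILE]
# Appends the public key to the authorized_keys file only if it is not
# already present, then restricts the file to owner read/write (0600).
# Defaults match the paths used in the commands above.
authorize_key() {
    ak_pub="${1:-$HOME/.ssh/id_rsa.pub}"
    ak_auth="${2:-$HOME/.ssh/authorized_keys}"

    if [ ! -f "$ak_pub" ]; then
        echo "missing public key: $ak_pub" >&2
        return 1
    fi

    mkdir -p "$(dirname "$ak_auth")"
    touch "$ak_auth"

    # -q quiet, -x whole-line match, -F fixed strings, -f patterns from file
    if ! grep -qxFf "$ak_pub" "$ak_auth"; then
        cat "$ak_pub" >> "$ak_auth"
    fi
    chmod 0600 "$ak_auth"
}
```

Called with no arguments, `authorize_key` falls back to `~/.ssh/id_rsa.pub` and `~/.ssh/authorized_keys`.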

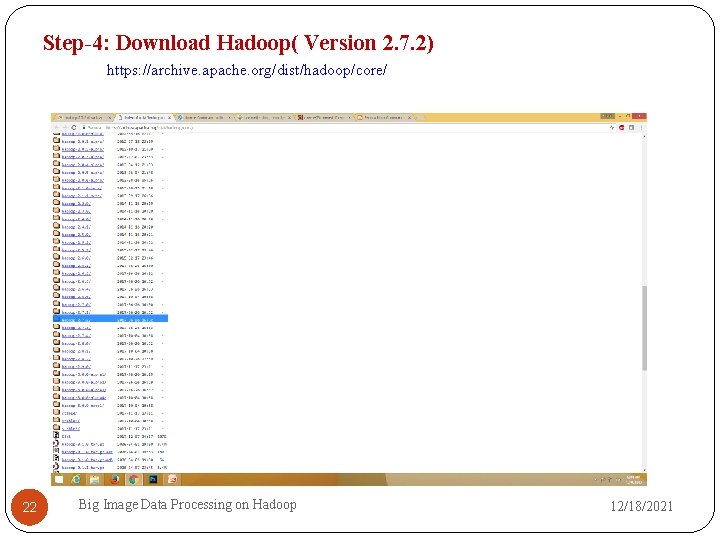

Step-4: Download Hadoop (Version 2.7.2) from https://archive.apache.org/dist/hadoop/core/

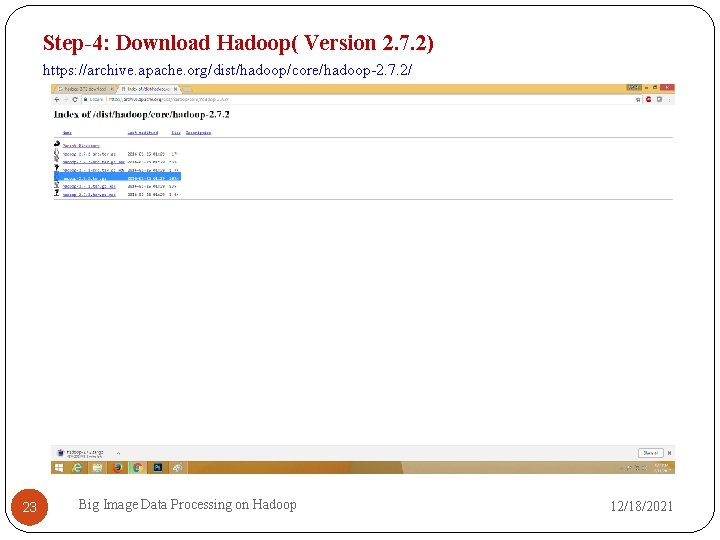

Step-4: Download Hadoop (Version 2.7.2) from https://archive.apache.org/dist/hadoop/core/hadoop-2.7.2/

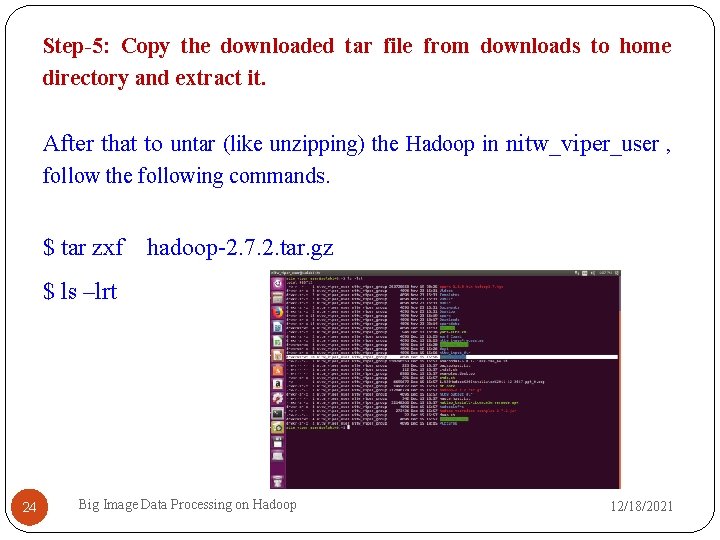

Step-5: Copy the downloaded tar file from Downloads to the home directory of nitw_viper_user, then untar it (like unzipping) with the following commands.

    $ tar zxf hadoop-2.7.2.tar.gz
    $ ls -lrt

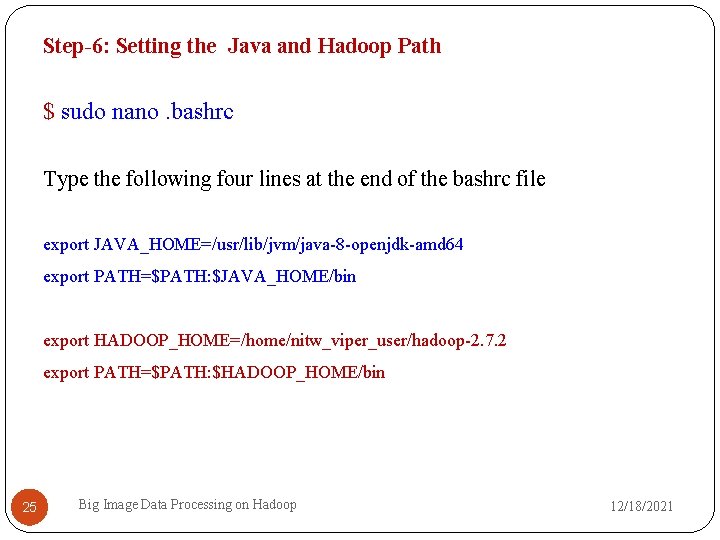

Step-6: Setting the Java and Hadoop Path

    $ nano ~/.bashrc

Type the following four lines at the end of the .bashrc file:

    export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64
    export PATH=$PATH:$JAVA_HOME/bin
    export HADOOP_HOME=/home/nitw_viper_user/hadoop-2.7.2
    export PATH=$PATH:$HADOOP_HOME/bin

Step-8: Now use the command

    $ source ~/.bashrc

(This command refreshes the terminal with the updated .bashrc.)

Step-9: Verify that the path settings are reflected:

    $ echo $JAVA_HOME
    $ echo $HADOOP_HOME
    $ echo $PATH

(The last command shows both the Java path and the Hadoop path.)
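Reading a long $PATH by eye is error-prone. As a rough sketch, the check can be automated with a small function that tests whether a directory is one of the colon-separated PATH entries. The name `path_has` and its optional second argument are hypothetical, introduced only for this illustration.

```shell
# path_has DIR [PATHSTRING]
# Succeeds (exit status 0) if DIR is one of the colon-separated entries
# of PATHSTRING; PATHSTRING defaults to the current $PATH.
path_has() {
    case ":${2:-$PATH}:" in
        *":$1:"*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Check the Hadoop directory added to .bashrc in Step-6.
# /nonexistent is only a placeholder used when HADOOP_HOME is unset.
if path_has "${HADOOP_HOME:-/nonexistent}/bin"; then
    echo "Hadoop bin directory is on PATH"
else
    echo "Hadoop bin directory is NOT on PATH"
fi
```

Wrapping the path string in colons before matching means an entry only matches as a whole, so `/opt/hadoop` is not mistaken for `/opt/hadoop/bin`.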

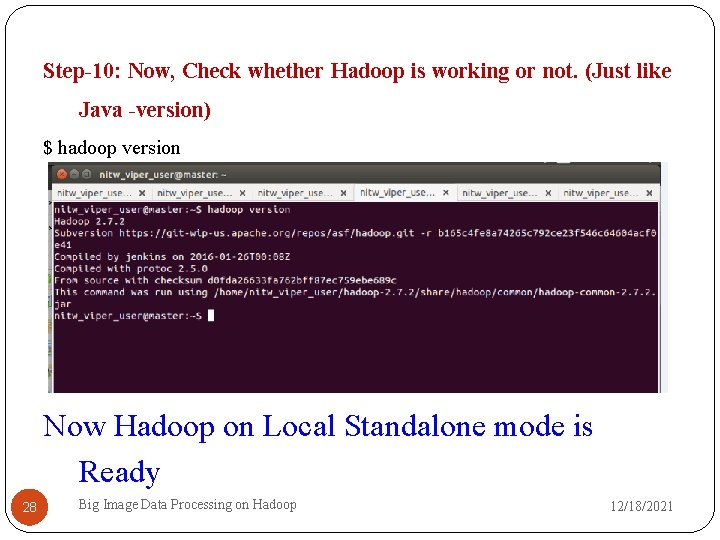

Step-10: Now check whether Hadoop is working (just like java -version):

    $ hadoop version

Hadoop in Local Standalone mode is now ready.

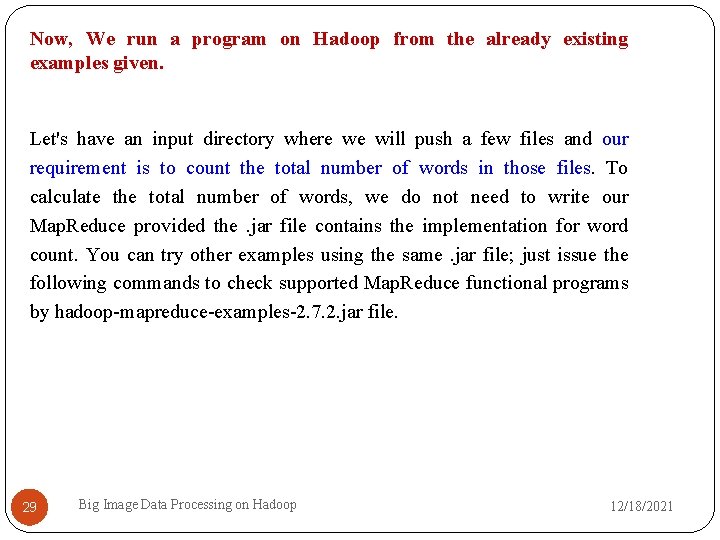

Now we run a program on Hadoop from the examples that ship with it. Let's have an input directory into which we push a few files; our requirement is to count the total number of words in those files. To calculate the total number of words, we do not need to write our own MapReduce program, provided the .jar file contains the implementation for word count. You can try other examples using the same .jar file; just issue the following commands to check the MapReduce programs supported by the hadoop-mapreduce-examples-2.7.2.jar file.

    $ cp $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.2.jar .

(The trailing dot is required: it means copy into the present working directory.) This command copies the examples into the present working directory, i.e. the home directory of nitw_viper_user.

    $ ls -lrt

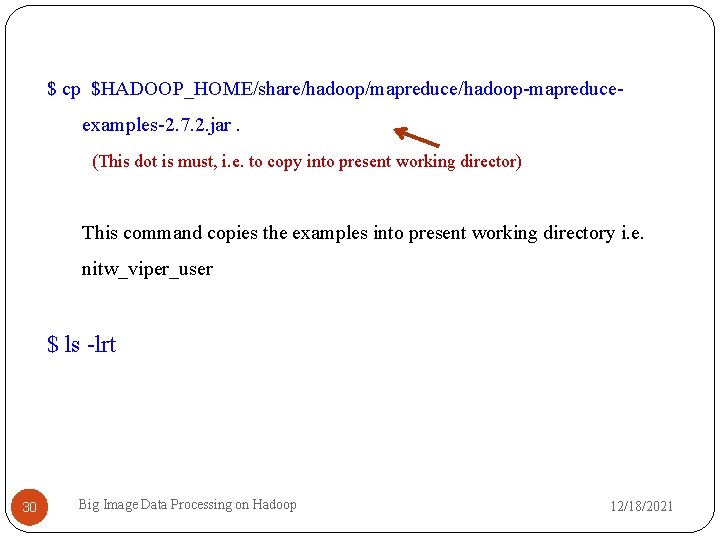

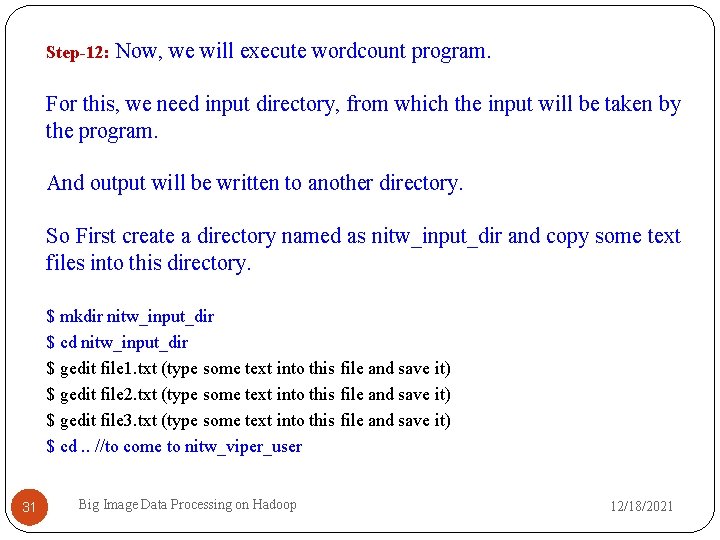

Step-12: Now we will execute the wordcount program. It needs an input directory, from which the program takes its input; the output is written to another directory. So first create a directory named nitw_input_dir and copy some text files into it.

    $ mkdir nitw_input_dir
    $ cd nitw_input_dir
    $ gedit file1.txt    (type some text into this file and save it)
    $ gedit file2.txt    (type some text into this file and save it)
    $ gedit file3.txt    (type some text into this file and save it)
    $ cd ..              (to come back to the home directory of nitw_viper_user)

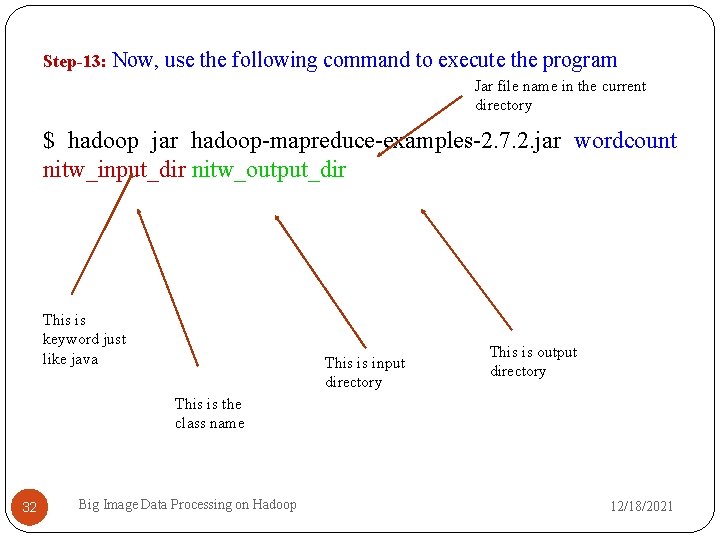

Step-13: Now use the following command to execute the program:

    $ hadoop jar hadoop-mapreduce-examples-2.7.2.jar wordcount nitw_input_dir nitw_output_dir

• hadoop jar: keyword, just like java
• hadoop-mapreduce-examples-2.7.2.jar: the JAR file name, in the current directory
• wordcount: the class name
• nitw_input_dir: the input directory
• nitw_output_dir: the output directory

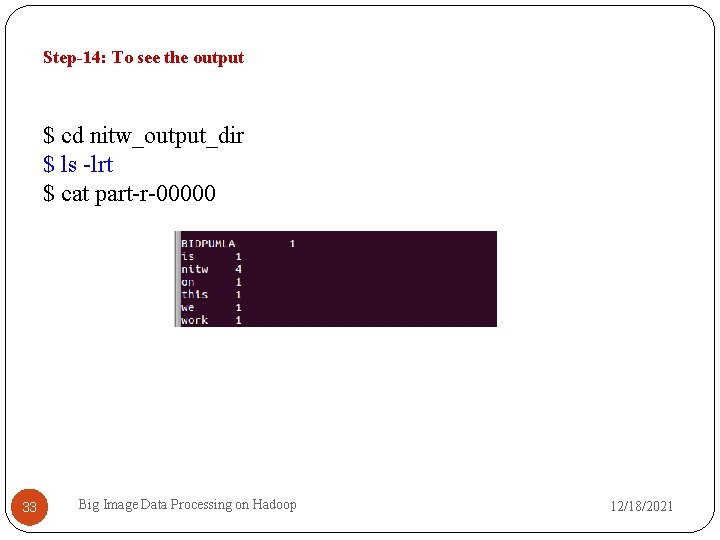

Step-14: To see the output

    $ cd nitw_output_dir
    $ ls -lrt
    $ cat part-r-00000
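The contents of part-r-00000 can be cross-checked without Hadoop. The sketch below is a plain shell pipeline, not the Hadoop implementation: it assumes words are separated by whitespace and prints one "word, TAB, count" line per distinct word, sorted in byte order. The function name `local_wordcount` is hypothetical.

```shell
# local_wordcount: read text on stdin, print "word<TAB>count" per distinct
# word, sorted by word in byte order (LC_ALL=C).
local_wordcount() {
    tr -s '[:space:]' '\n' |        # one word per line
        grep -v '^$' |              # drop empty lines
        LC_ALL=C sort |             # group identical words together
        LC_ALL=C uniq -c |          # prefix each word with its count
        awk '{ print $2 "\t" $1 }'  # reorder to word<TAB>count
}

# Small demonstration on inline text.
printf 'hadoop runs mapreduce\nmapreduce runs\n' | local_wordcount
```

For the three files created in Step-12, one way to use it is `cat nitw_input_dir/*.txt | local_wordcount`, then compare the result with the job output by eye or with `diff`.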

II. Pseudo-distributed mode

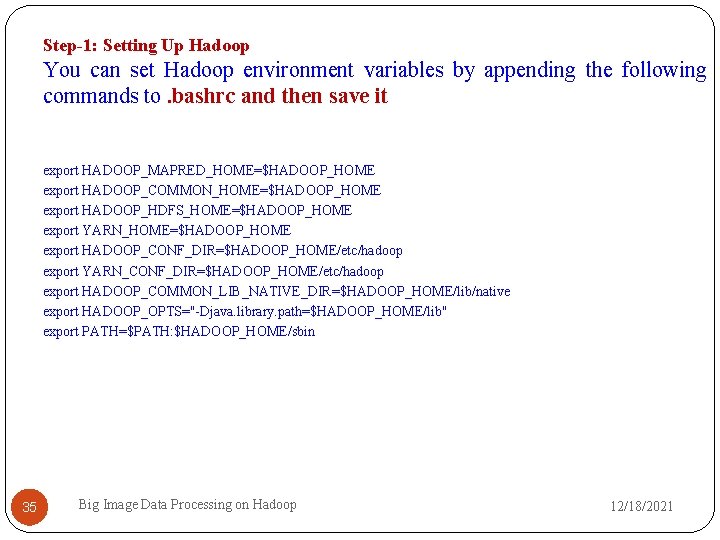

Step-1: Setting Up Hadoop

You can set the Hadoop environment variables by appending the following commands to .bashrc and then saving it:

    export HADOOP_MAPRED_HOME=$HADOOP_HOME
    export HADOOP_COMMON_HOME=$HADOOP_HOME
    export HADOOP_HDFS_HOME=$HADOOP_HOME
    export YARN_HOME=$HADOOP_HOME
    export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
    export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop
    export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
    export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"
    export PATH=$PATH:$HADOOP_HOME/sbin

Step-2: Now use the command

    $ source ~/.bashrc

(This command refreshes the terminal with the updated .bashrc.)

Step-3: Hadoop Configuration

You can find all the Hadoop configuration files in the location “$HADOOP_HOME/etc/hadoop”. You need to make changes in those configuration files according to your Hadoop infrastructure.

    $ cd $HADOOP_HOME/etc/hadoop
    $ pwd

In order to develop Hadoop programs in Java, you have to reset the Java environment variables in the hadoop-env.sh file by replacing the JAVA_HOME value with the location of Java on your system.

    $ nano hadoop-env.sh

Write this statement at the end of the file:

    export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

Now you also need to configure the following files:

• core-site.xml
• hdfs-site.xml
• yarn-site.xml
• mapred-site.xml

Step-4: Configuring core-site.xml

The core-site.xml file contains information such as the port number used for the Hadoop instance, memory allocated for the file system, memory limit for storing the data, and size of the Read/Write buffers. Open core-site.xml and add the following properties between the <configuration> and </configuration> tags.

    $ nano core-site.xml

    <configuration>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://localhost:9000</value>
      </property>
    </configuration>

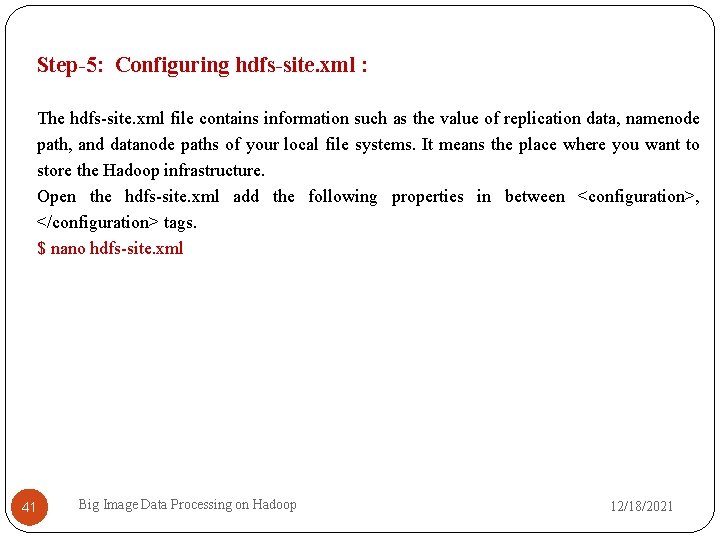

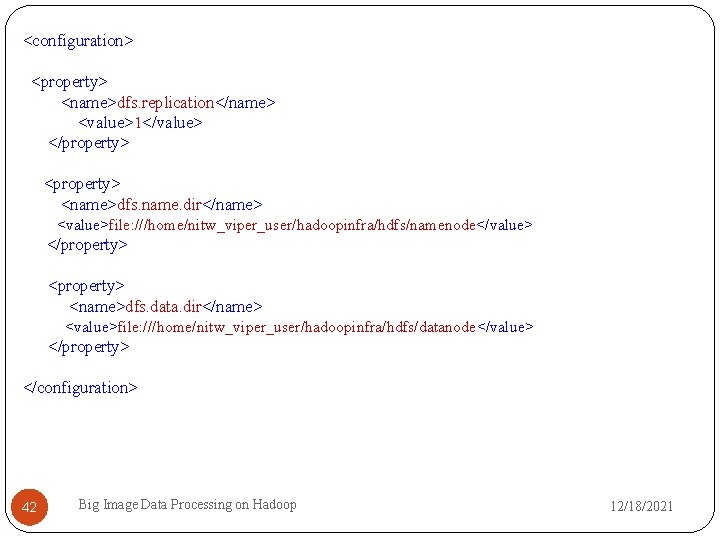

Step-5: Configuring hdfs-site.xml

The hdfs-site.xml file contains information such as the replication factor, the namenode path, and the datanode paths on your local file system, that is, the place where you want to store the Hadoop infrastructure. Open hdfs-site.xml and add the following properties between the <configuration> and </configuration> tags.

    $ nano hdfs-site.xml

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.name.dir</name>
        <value>file:///home/nitw_viper_user/hadoopinfra/hdfs/namenode</value>
      </property>
      <property>
        <name>dfs.data.dir</name>
        <value>file:///home/nitw_viper_user/hadoopinfra/hdfs/datanode</value>
      </property>
    </configuration>

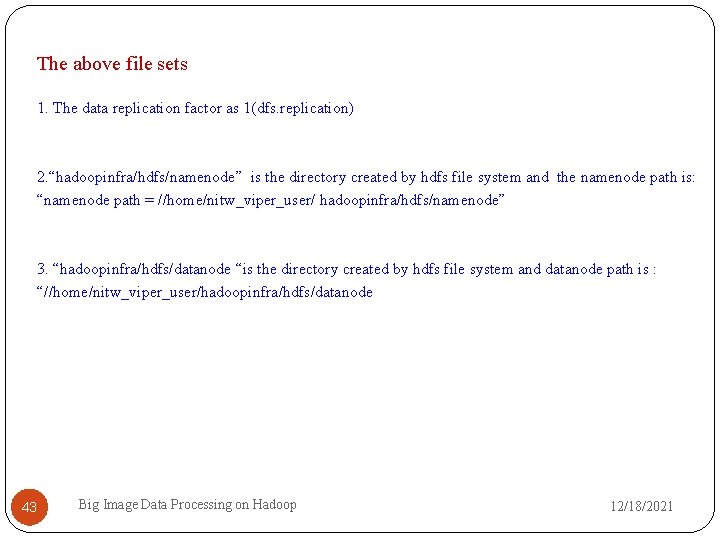

The above file sets:

1. The data replication factor to 1 (dfs.replication).
2. “hadoopinfra/hdfs/namenode” is the directory created by the HDFS file system, and the namenode path is “/home/nitw_viper_user/hadoopinfra/hdfs/namenode”.
3. “hadoopinfra/hdfs/datanode” is the directory created by the HDFS file system, and the datanode path is “/home/nitw_viper_user/hadoopinfra/hdfs/datanode”.

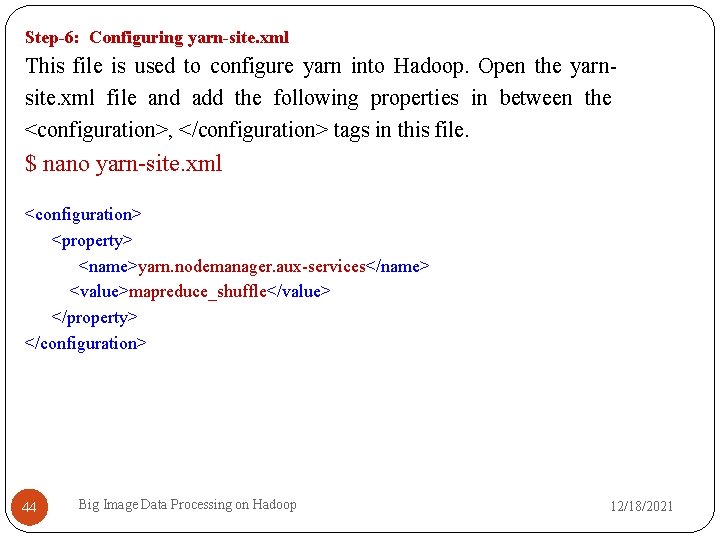

Step-6: Configuring yarn-site.xml

This file is used to configure YARN in Hadoop. Open the yarn-site.xml file and add the following properties between the <configuration> and </configuration> tags in this file.

    $ nano yarn-site.xml

    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
    </configuration>

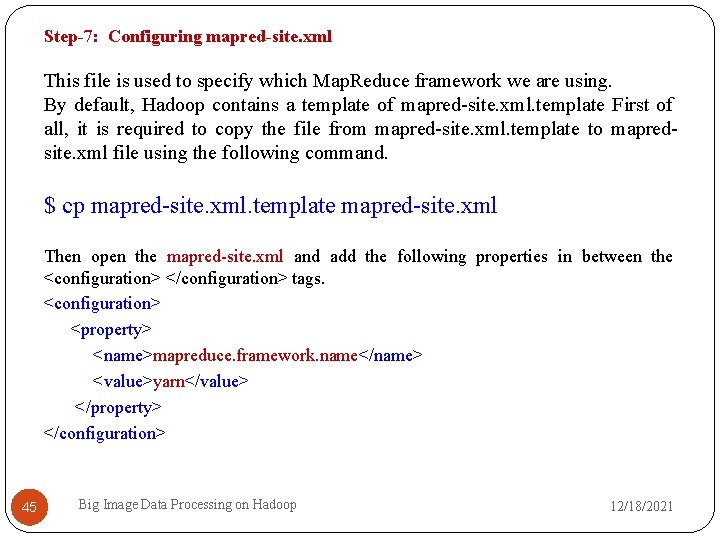

Step-7: Configuring mapred-site.xml

This file is used to specify which MapReduce framework we are using. By default, Hadoop contains only a template of this file, named mapred-site.xml.template. First, copy mapred-site.xml.template to mapred-site.xml using the following command.

    $ cp mapred-site.xml.template mapred-site.xml

Then open mapred-site.xml and add the following properties between the <configuration> and </configuration> tags.

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
    </configuration>
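All four *-site.xml files share one shape: a `<configuration>` element holding `<property>` entries, each with a `<name>` and a `<value>`. The sketch below prints such a file from name/value pairs, which helps avoid hand-editing mistakes such as a missing `<property>` tag. The function names `hadoop_property` and `hadoop_site_xml` are hypothetical, and the sketch assumes values contain no characters that need XML escaping (such as `&` or `<`).

```shell
# hadoop_property NAME VALUE
# Print one <property> element.
hadoop_property() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# hadoop_site_xml NAME VALUE [NAME VALUE ...]
# Print a complete site file for the given name/value pairs.
hadoop_site_xml() {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ "$#" -ge 2 ]; do
        hadoop_property "$1" "$2"
        shift 2
    done
    echo '</configuration>'
}

# Example: the mapred-site.xml content from Step-7, printed to stdout.
hadoop_site_xml mapreduce.framework.name yarn
```

Redirecting the output, for example `hadoop_site_xml mapreduce.framework.name yarn > mapred-site.xml`, would overwrite the target file, so review the printed result first.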

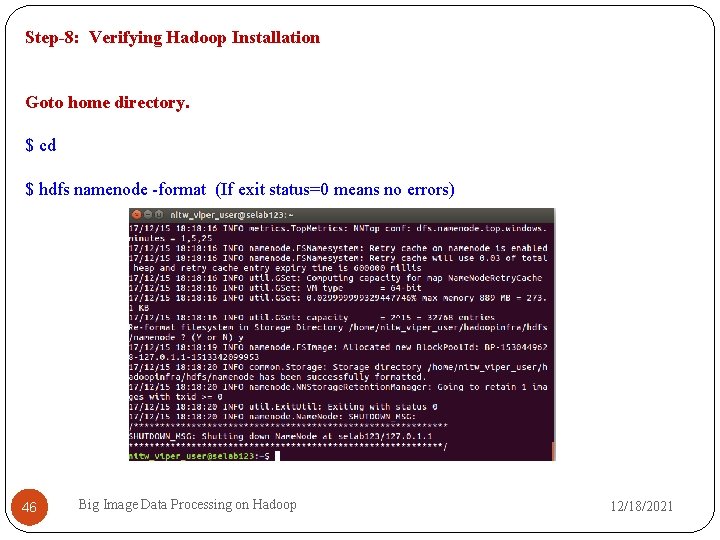

Step-8: Verifying Hadoop Installation

Go to the home directory and format the namenode:

    $ cd
    $ hdfs namenode -format

(An exit status of 0 means there were no errors.)

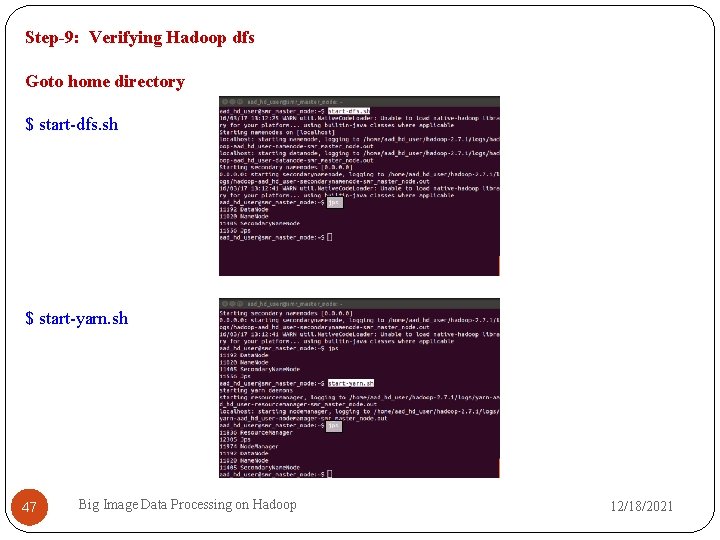

Step-9: Verifying Hadoop dfs

Go to the home directory, then start HDFS and YARN:

    $ start-dfs.sh
    $ start-yarn.sh

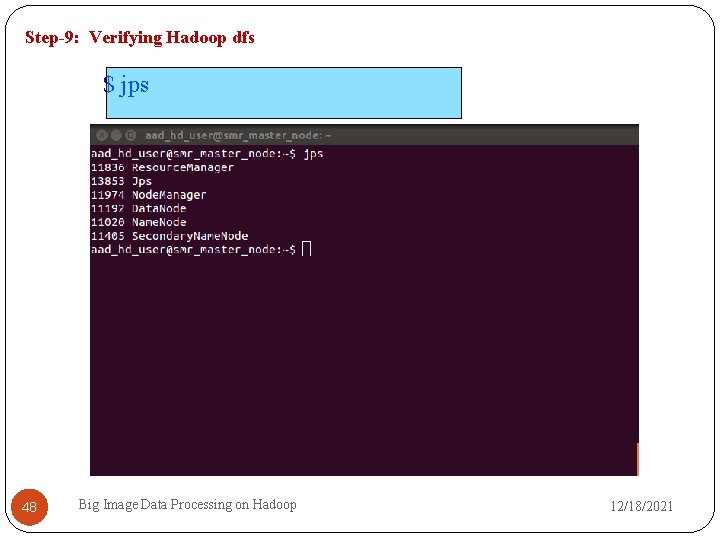

Step-9: Verifying Hadoop dfs

    $ jps
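After start-dfs.sh and start-yarn.sh on a single host, jps is expected to list NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager. A minimal sketch for spotting a daemon that failed to start follows. The function name `missing_daemons` is hypothetical, and it assumes the usual jps output of one "pid ClassName" pair per line.

```shell
# missing_daemons: read jps output on stdin and print the name of every
# expected Hadoop daemon that does not appear in it (one per line).
missing_daemons() {
    md_output="$(cat)"
    for md_name in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # Compare the second column exactly, so that "NameNode" is not
        # satisfied by a "SecondaryNameNode" line.
        if ! printf '%s\n' "$md_output" |
            awk -v d="$md_name" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$md_name"
        fi
    done
}

# Demonstration on canned output; on a real installation use: jps | missing_daemons
printf '2101 NameNode\n2250 DataNode\n2710 Jps\n' | missing_daemons
```

No output means all five daemons were found.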

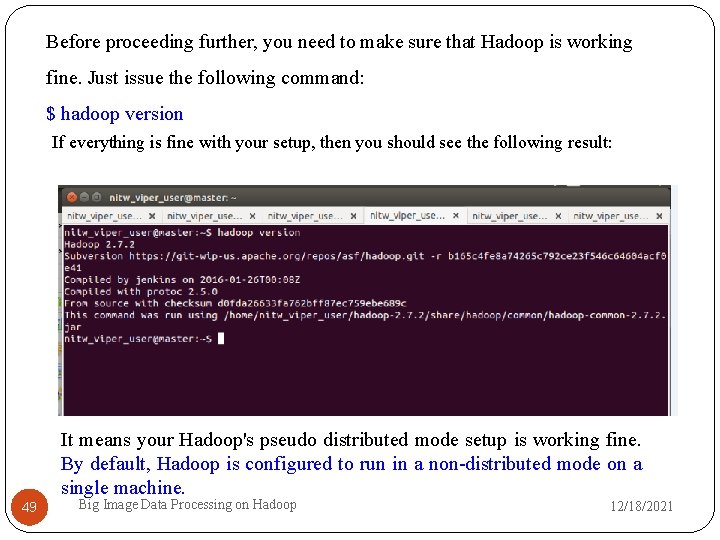

Before proceeding further, you need to make sure that Hadoop is working fine. Just issue the following command:

    $ hadoop version

If everything is fine with your setup, then you should see the following result. It means your Hadoop pseudo-distributed mode setup is working fine. By default, Hadoop is configured to run in a non-distributed mode on a single machine.

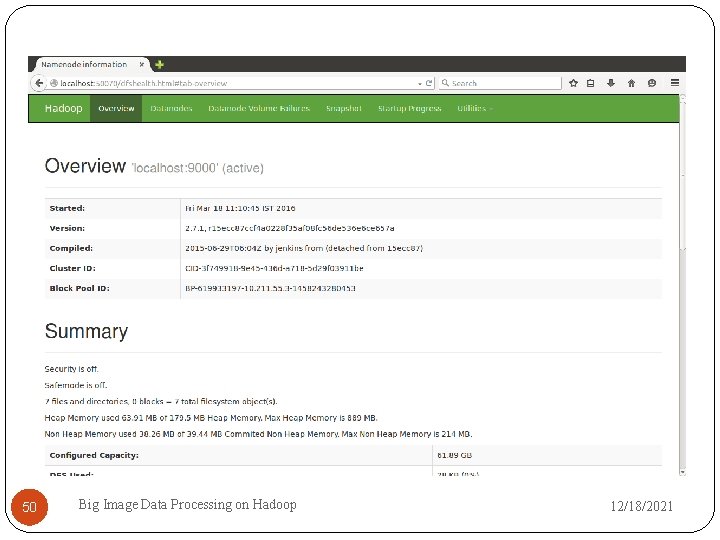


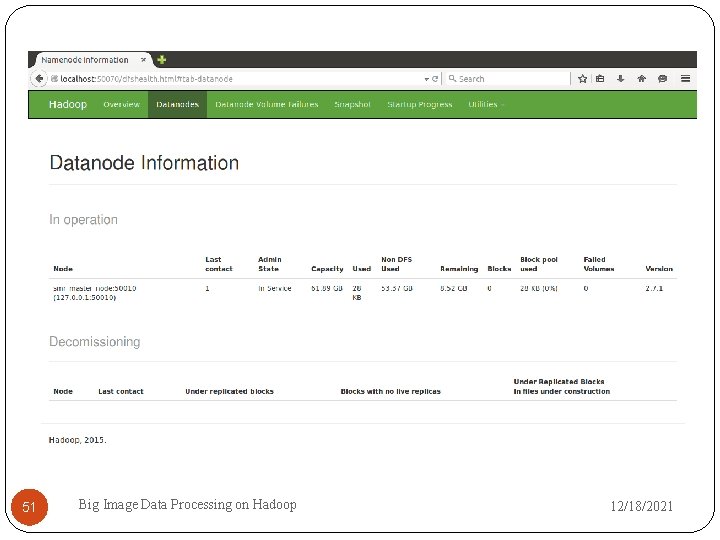


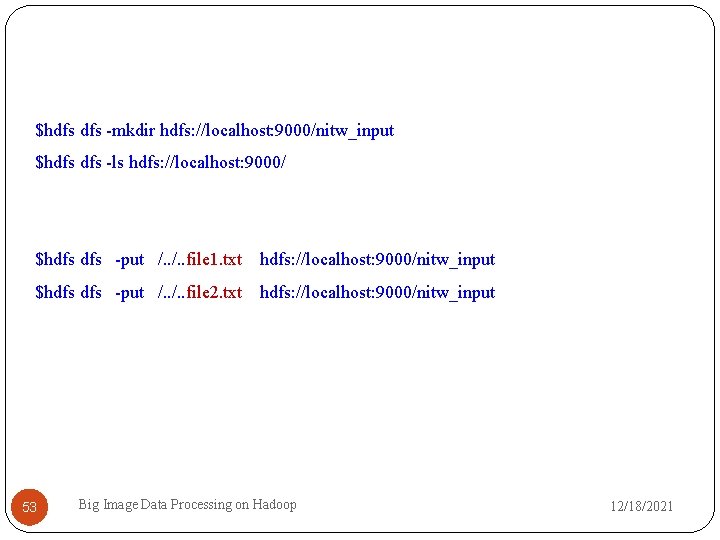

Word-count Program Execution in HDFS Environment

When you installed Hadoop in Standalone mode, the data used to run the program came from the local file system. Now, in Pseudo-distributed mode, we will see how to put data into HDFS and get data from HDFS, so that we get the feel of working on Hadoop storage (HDFS).

    $ hdfs dfs -mkdir hdfs://localhost:9000/nitw_input
    $ hdfs dfs -ls hdfs://localhost:9000/
    $ hdfs dfs -put /..file1.txt hdfs://localhost:9000/nitw_input
    $ hdfs dfs -put /..file2.txt hdfs://localhost:9000/nitw_input

(Here /.. abbreviates the local path of each file.)

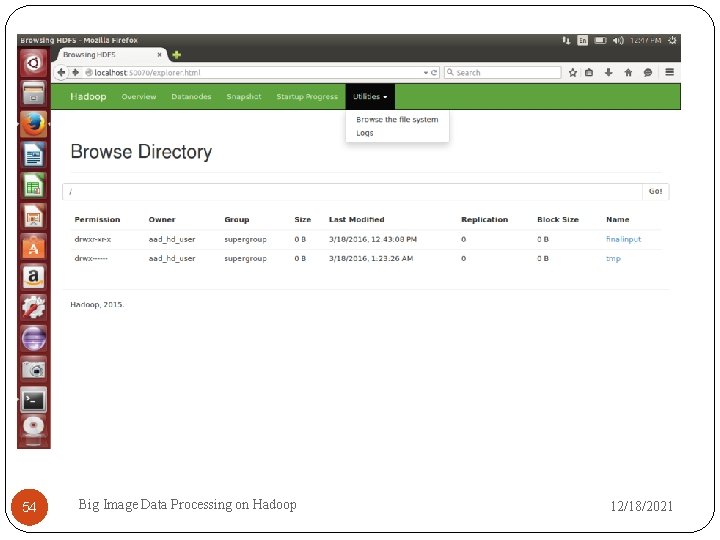


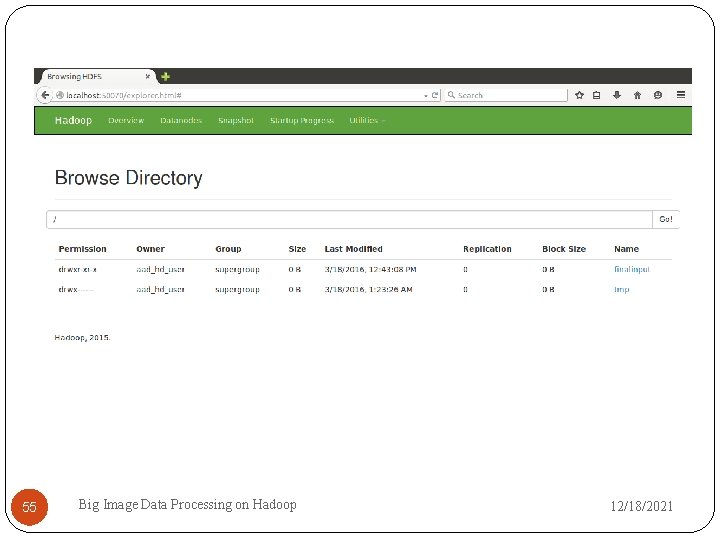


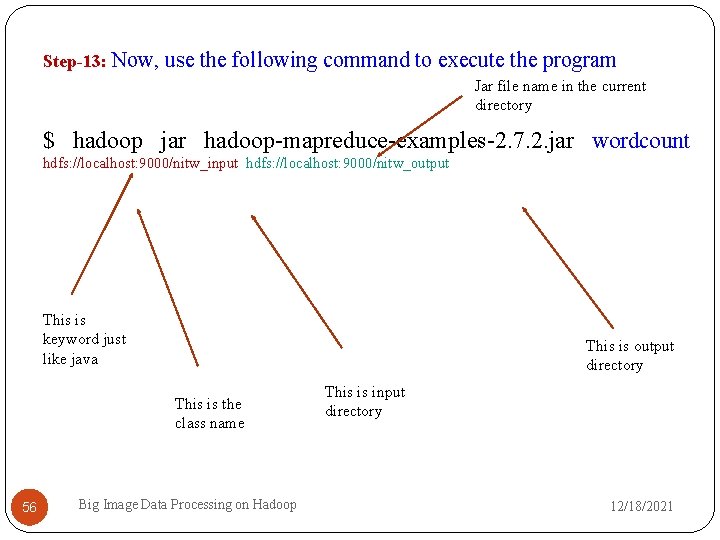

Step-13: Now use the following command to execute the program:

    $ hadoop jar hadoop-mapreduce-examples-2.7.2.jar wordcount hdfs://localhost:9000/nitw_input hdfs://localhost:9000/nitw_output

• hadoop jar: keyword, just like java
• hadoop-mapreduce-examples-2.7.2.jar: the JAR file name, in the current directory
• wordcount: the class name
• hdfs://localhost:9000/nitw_input: the input directory
• hdfs://localhost:9000/nitw_output: the output directory

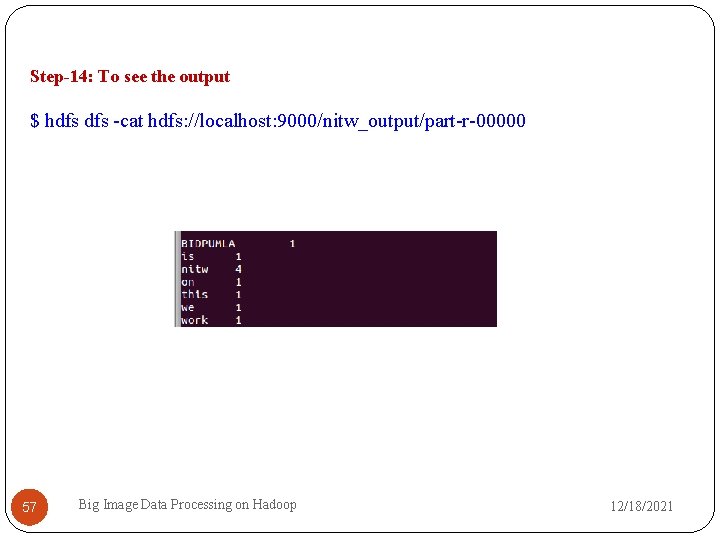

Step-14: To see the output

    $ hdfs dfs -cat hdfs://localhost:9000/nitw_output/part-r-00000

III. Fully-Distributed Mode

Prerequisites

Configure the Pseudo-distributed mode of Hadoop on every machine. (Here we have used FIVE systems with Hadoop installed in Pseudo-distributed mode.) Stop all the processes running on all five systems by using the command:

    $ stop-all.sh    (on all five systems)

ALL THE SLAVE NODES MUST REMAIN IDLE UNLESS SPECIFIED!

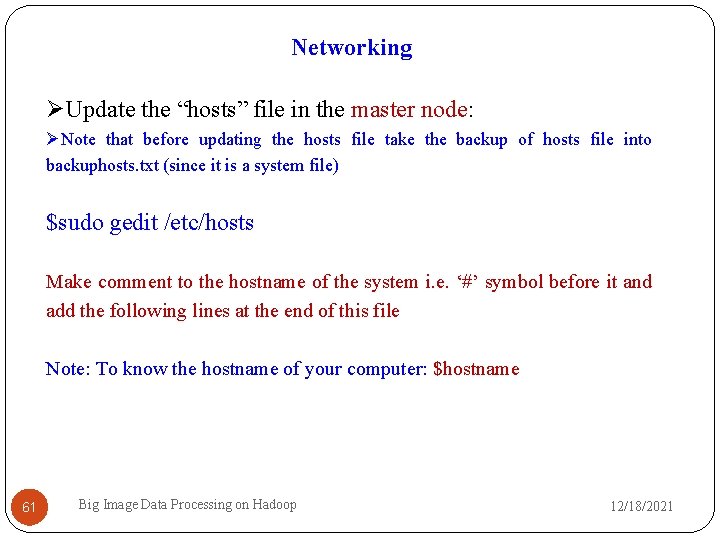

Networking

Update the “hosts” file on the master node. Before updating the hosts file, take a backup of it into backuphosts.txt (since it is a system file).

    $ sudo gedit /etc/hosts

Comment out the hostname of the system, i.e. put a ‘#’ symbol before it, and add the following lines at the end of this file.

Note: To know the hostname of your computer:

    $ hostname

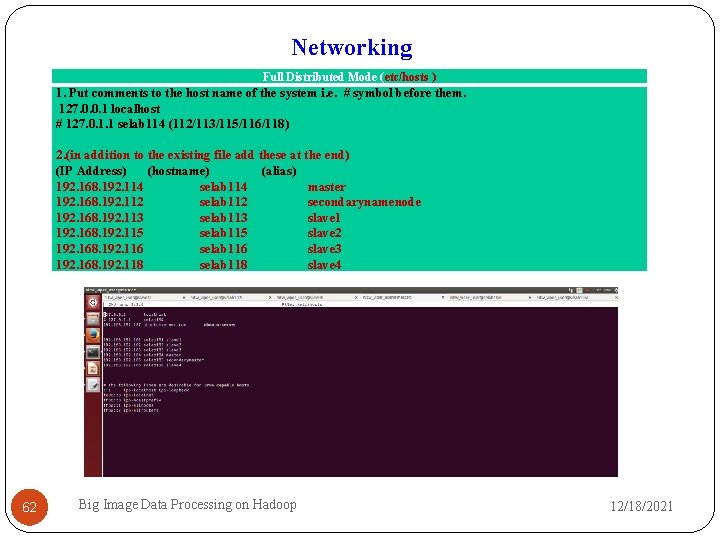

Networking: Full Distributed Mode (/etc/hosts)

1. Comment out the host name of the system, i.e. put a # symbol before it:

    127.0.0.1 localhost
    # 127.0.1.1 selab114 (112/113/115/116/118)

2. In addition to the existing contents, add these lines at the end of the file:

    (IP Address)     (hostname)  (alias)
    192.168.192.114  selab114    master
    192.168.192.112  selab112    secondarynamenode
    192.168.192.113  selab113    slave1
    192.168.192.115  selab115    slave2
    192.168.192.116  selab116    slave3
    192.168.192.118  selab118    slave4
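The usual reason given for commenting out the 127.0.1.1 line is that the machine's own hostname should resolve to its LAN address rather than to a loopback address. The sketch below looks a name up in a hosts-format file the way the table above is meant to be read: the first field is the IP, the remaining fields are names, and commented lines are ignored. The function name `host_ip` is hypothetical, and this is only a simplified reading of the file, not the system resolver.

```shell
# host_ip NAME [HOSTS_FILE]
# Print the IP address of the first non-comment line in HOSTS_FILE
# (default /etc/hosts) that lists NAME as a hostname or alias.
host_ip() {
    awk -v name="$1" '
        /^[[:space:]]*#/ { next }               # skip commented-out lines
        {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break             # rest of the line is a comment
                if ($i == name) { print $1; exit }
            }
        }
    ' "${2:-/etc/hosts}"
}

# Example lookup in the system hosts file; prints nothing if the
# name is not listed there.
host_ip master
```

With the entries above in place, `host_ip selab114` should print 192.168.192.114, because the 127.0.1.1 line is commented out and therefore skipped.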

Now we need to update the Hadoop configuration files:

• masters
• slaves
• core-site.xml
• hdfs-site.xml
• yarn-site.xml
• mapred-site.xml

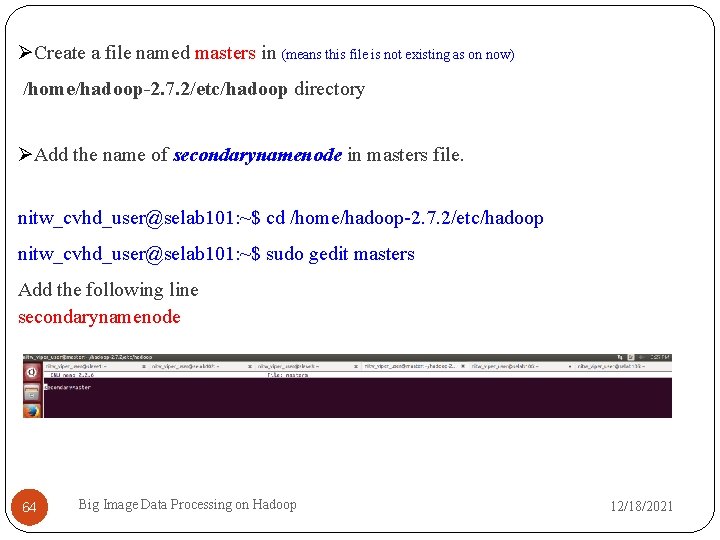

Create a file named masters (this file does not exist yet) in the /home/hadoop-2.7.2/etc/hadoop directory, and add the name of the secondarynamenode to it.

    nitw_cvhd_user@selab101:~$ cd /home/hadoop-2.7.2/etc/hadoop
    nitw_cvhd_user@selab101:~$ sudo gedit masters

Add the following line:

    secondarynamenode

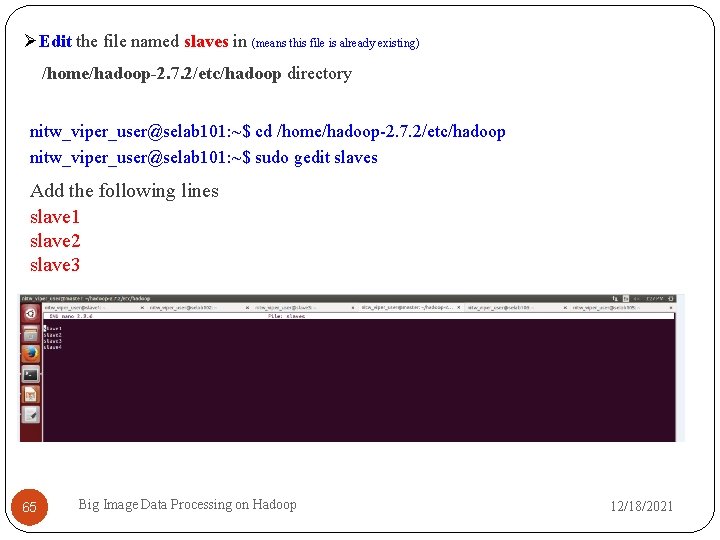

Edit the file named slaves (this file already exists) in the /home/hadoop-2.7.2/etc/hadoop directory.

    nitw_viper_user@selab101:~$ cd /home/hadoop-2.7.2/etc/hadoop
    nitw_viper_user@selab101:~$ sudo gedit slaves

Add the following lines:

    slave1
    slave2
    slave3

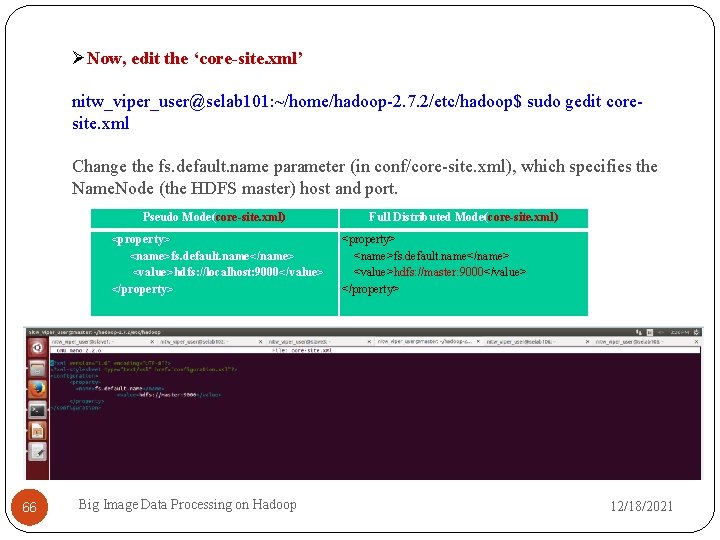

Now, edit ‘core-site.xml’

    nitw_viper_user@selab101:~/home/hadoop-2.7.2/etc/hadoop$ sudo gedit core-site.xml

Change the fs.default.name parameter (in conf/core-site.xml), which specifies the NameNode (the HDFS master) host and port.

Pseudo Mode (core-site.xml):

    <property>
      <name>fs.default.name</name>
      <value>hdfs://localhost:9000</value>
    </property>

Full Distributed Mode (core-site.xml):

    <property>
      <name>fs.default.name</name>
      <value>hdfs://master:9000</value>
    </property>

Now, edit ‘hdfs-site.xml’ (all machines)

    nitw_cvhd_user@selab101:~/home/hadoop-2.7.2/etc/hadoop$ sudo gedit hdfs-site.xml

Change the dfs.replication parameter (in conf/hdfs-site.xml), which specifies the default block replication. We have 3 data nodes available, so we set dfs.replication to 3. (You can use any number, but 3 is optimal.)

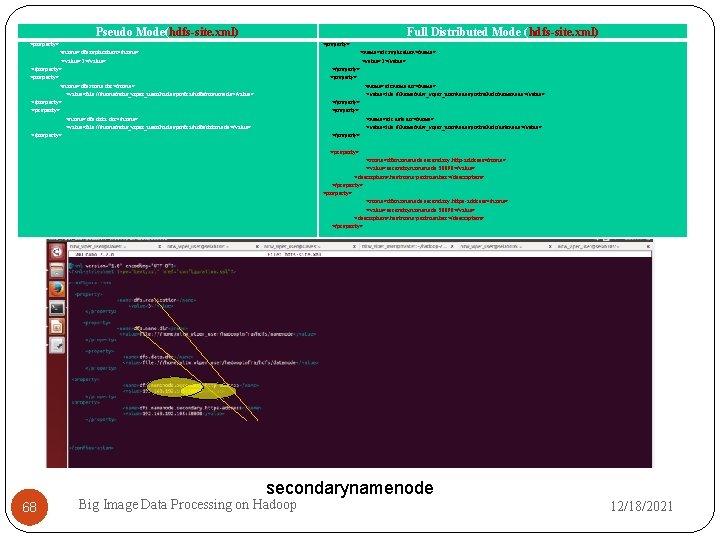

Pseudo Mode (hdfs-site.xml):

    <property>
      <name>dfs.replication</name>
      <value>1</value>
    </property>
    <property>
      <name>dfs.name.dir</name>
      <value>file:///home/nitw_viper_user/hadoopinfra/hdfs/namenode</value>
    </property>
    <property>
      <name>dfs.data.dir</name>
      <value>file:///home/nitw_viper_user/hadoopinfra/hdfs/datanode</value>
    </property>

Full Distributed Mode (hdfs-site.xml):

    <property>
      <name>dfs.replication</name>
      <value>3</value>
    </property>
    <property>
      <name>dfs.name.dir</name>
      <value>file:///home/nitw_viper_user/hadoopinfra/hdfs/namenode</value>
    </property>
    <property>
      <name>dfs.data.dir</name>
      <value>file:///home/nitw_viper_user/hadoopinfra/hdfs/datanode</value>
    </property>
    <property>
      <name>dfs.namenode.secondary.http-address</name>
      <value>secondarynamenode:50090</value>
      <description>hostname:portnumber</description>
    </property>
    <property>
      <name>dfs.namenode.secondary.https-address</name>
      <value>secondarynamenode:50090</value>
      <description>hostname:portnumber</description>
    </property>

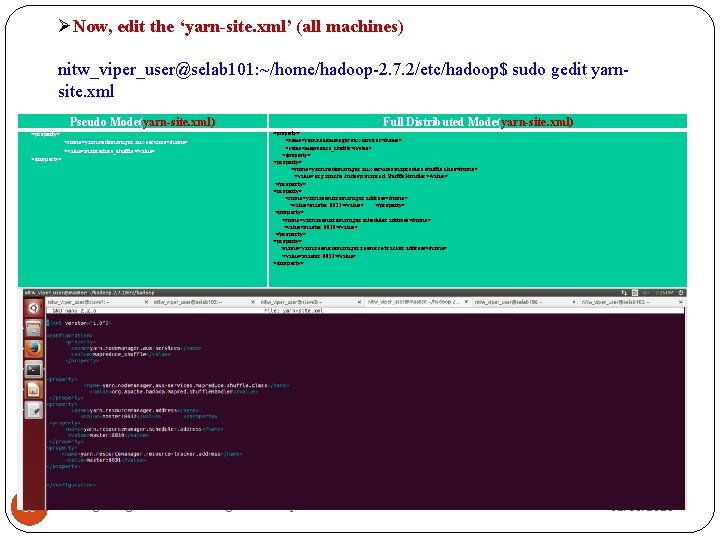

Now, edit ‘yarn-site.xml’ (all machines)

    nitw_viper_user@selab101:~/home/hadoop-2.7.2/etc/hadoop$ sudo gedit yarn-site.xml

Pseudo Mode (yarn-site.xml):

    <property>
      <name>yarn.nodemanager.aux-services</name>
      <value>mapreduce_shuffle</value>
    </property>

Full Distributed Mode (yarn-site.xml):

    <property>
      <name>yarn.nodemanager.aux-services</name>
      <value>mapreduce_shuffle</value>
    </property>
    <property>
      <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
      <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
    <property>
      <name>yarn.resourcemanager.address</name>
      <value>master:8032</value>
    </property>
    <property>
      <name>yarn.resourcemanager.scheduler.address</name>
      <value>master:8030</value>
    </property>
    <property>
      <name>yarn.resourcemanager.resource-tracker.address</name>
      <value>master:8031</value>
    </property>

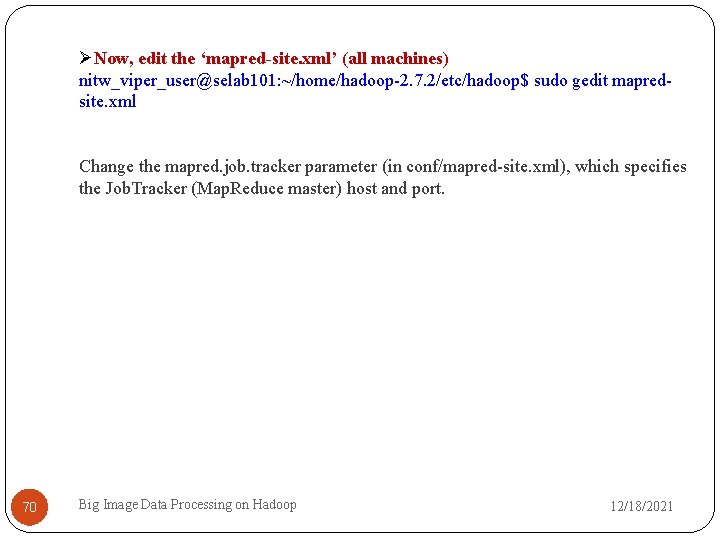

Now, edit ‘mapred-site.xml’ (all machines)

    nitw_viper_user@selab101:~/home/hadoop-2.7.2/etc/hadoop$ sudo gedit mapred-site.xml

Change the mapred.job.tracker parameter (in conf/mapred-site.xml), which specifies the JobTracker (MapReduce master) host and port.

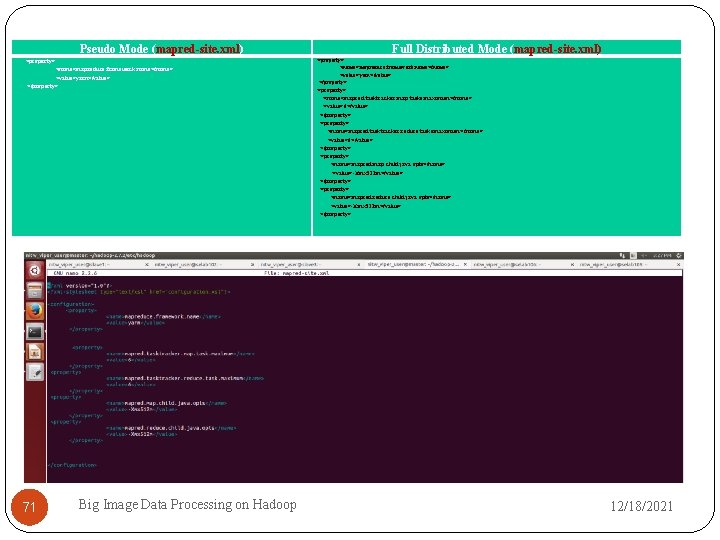

Pseudo Mode (mapred-site.xml):

    <property>
      <name>mapreduce.framework.name</name>
      <value>yarn</value>
    </property>

Full Distributed Mode (mapred-site.xml):

    <property>
      <name>mapreduce.framework.name</name>
      <value>yarn</value>
    </property>
    <property>
      <name>mapred.tasktracker.map.tasks.maximum</name>
      <value>6</value>
    </property>
    <property>
      <name>mapred.tasktracker.reduce.tasks.maximum</name>
      <value>6</value>
    </property>
    <property>
      <name>mapred.map.child.java.opts</name>
      <value>-Xmx512m</value>
    </property>
    <property>
      <name>mapred.reduce.child.java.opts</name>
      <value>-Xmx512m</value>
    </property>

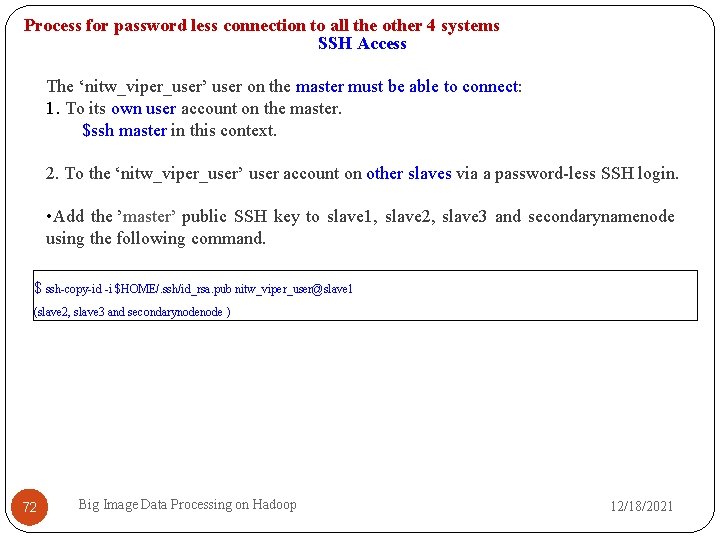

Process for password-less connection to all the other 4 systems

SSH Access

The ‘nitw_viper_user’ user on the master must be able to connect:

1. To its own user account on the master, i.e. $ ssh master in this context.
2. To the ‘nitw_viper_user’ user account on the slaves via a password-less SSH login.

• Add the master's public SSH key to slave1, slave2, slave3 and secondarynamenode using the following command:

    $ ssh-copy-id -i $HOME/.ssh/id_rsa.pub nitw_viper_user@slave1

  (Repeat for slave2, slave3 and secondarynamenode.)

Now update the Hadoop configuration files on all slaves and on the SecondaryNameNode:

• masters
• slaves
• core-site.xml
• hdfs-site.xml
• yarn-site.xml
• mapred-site.xml

Also copy the RSA key of the master to all slaves and to the SecondaryNameNode. For this whole process we have written a shell script, so that everything is updated from the master system only, without touching any other node of the cluster.

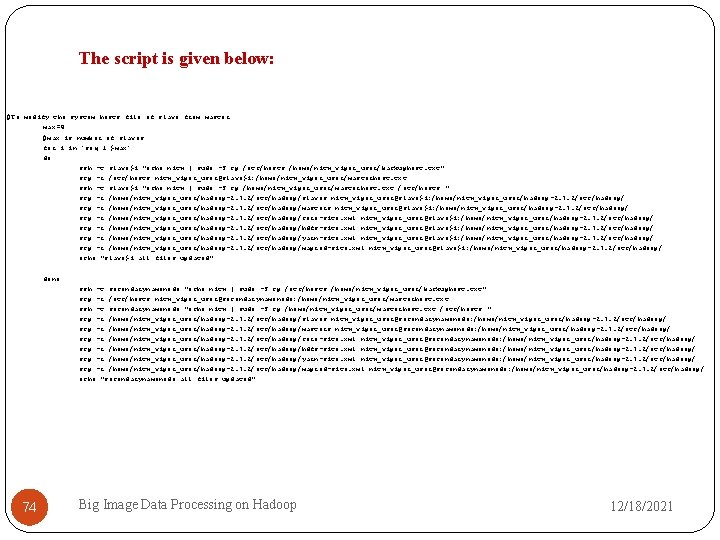

The script is given below:

    # To modify the system hosts file of each slave from the master
    max=4   # max is the number of slaves
    for i in `seq 1 $max`
    do
        ssh -t slave$i "echo nitw | sudo -S cp /etc/hosts /home/nitw_viper_user/backuphost.txt"
        scp -r /etc/hosts nitw_viper_user@slave$i:/home/nitw_viper_user/masterhost.txt
        ssh -t slave$i "echo nitw | sudo -S cp /home/nitw_viper_user/masterhost.txt /etc/hosts"
        scp -r /home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/slaves nitw_viper_user@slave$i:/home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/
        scp -r /home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/masters nitw_viper_user@slave$i:/home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/
        scp -r /home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/core-site.xml nitw_viper_user@slave$i:/home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/
        scp -r /home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/hdfs-site.xml nitw_viper_user@slave$i:/home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/
        scp -r /home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/yarn-site.xml nitw_viper_user@slave$i:/home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/
        scp -r /home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/mapred-site.xml nitw_viper_user@slave$i:/home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/
        echo "slave$i all files updated"
    done

    ssh -t secondarynamenode "echo nitw | sudo -S cp /etc/hosts /home/nitw_viper_user/backuphost.txt"
    scp -r /etc/hosts nitw_viper_user@secondarynamenode:/home/nitw_viper_user/masterhost.txt
    ssh -t secondarynamenode "echo nitw | sudo -S cp /home/nitw_viper_user/masterhost.txt /etc/hosts"
    scp -r /home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/slaves nitw_viper_user@secondarynamenode:/home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/
    scp -r /home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/masters nitw_viper_user@secondarynamenode:/home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/
    scp -r /home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/core-site.xml nitw_viper_user@secondarynamenode:/home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/
    scp -r /home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/hdfs-site.xml nitw_viper_user@secondarynamenode:/home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/
    scp -r /home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/yarn-site.xml nitw_viper_user@secondarynamenode:/home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/
    scp -r /home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/mapred-site.xml nitw_viper_user@secondarynamenode:/home/nitw_viper_user/hadoop-2.7.2/etc/hadoop/
    echo "secondarynamenode all files updated"
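The script above issues the same scp command for each of six configuration files on every node. As a sketch of a more compact form, the loop below only prints the copy commands so that the plan can be reviewed before anything is changed. It covers the Hadoop configuration files only, not the /etc/hosts update, and the names `plan_sync`, `HADOOP_CONF` and `CONF_FILES` are hypothetical.

```shell
# Directory and file names taken from the script above.
HADOOP_CONF=/home/nitw_viper_user/hadoop-2.7.2/etc/hadoop
CONF_FILES="slaves masters core-site.xml hdfs-site.xml yarn-site.xml mapred-site.xml"

# plan_sync NODE
# Print (do not run) one scp command per configuration file for NODE.
plan_sync() {
    ps_node="$1"
    for ps_file in $CONF_FILES; do
        echo "scp $HADOOP_CONF/$ps_file nitw_viper_user@$ps_node:$HADOOP_CONF/"
    done
}

# Print the full plan for the four slaves and the secondary namenode.
for node in slave1 slave2 slave3 slave4 secondarynamenode; do
    plan_sync "$node"
done
```

Once the printed commands look right, they could be executed by piping the output to `sh`. That step is left out here on purpose, since it modifies the remote machines.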

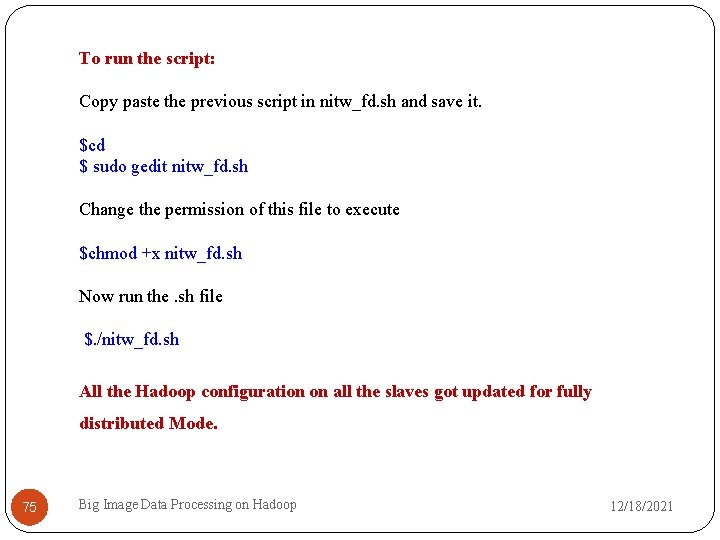

To run the script: copy and paste the previous script into nitw_fd.sh and save it.

    $ cd
    $ gedit nitw_fd.sh

Change the permission of this file to make it executable:

    $ chmod +x nitw_fd.sh

Now run the .sh file:

    $ ./nitw_fd.sh

The Hadoop configuration on all the slaves is now updated for Fully Distributed mode.

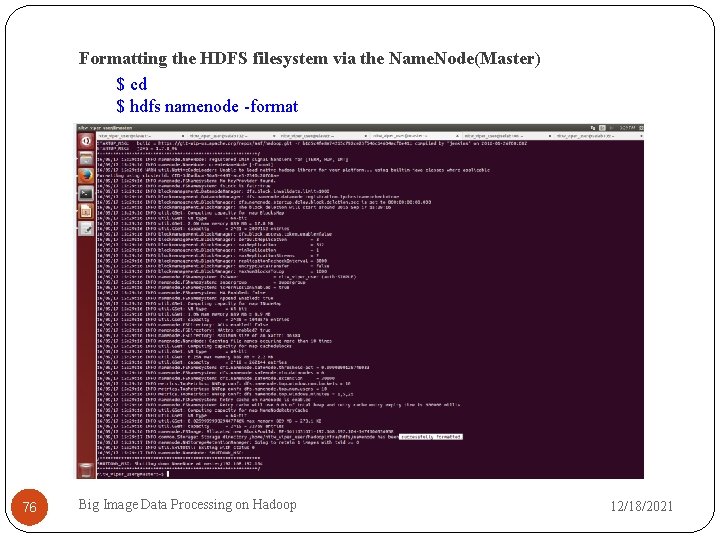

Formatting the HDFS filesystem via the NameNode (Master)

    $ cd
    $ hdfs namenode -format

Verifying Hadoop

Go to the home directory, then start HDFS and YARN:

    $ cd
    $ start-dfs.sh
    $ start-yarn.sh

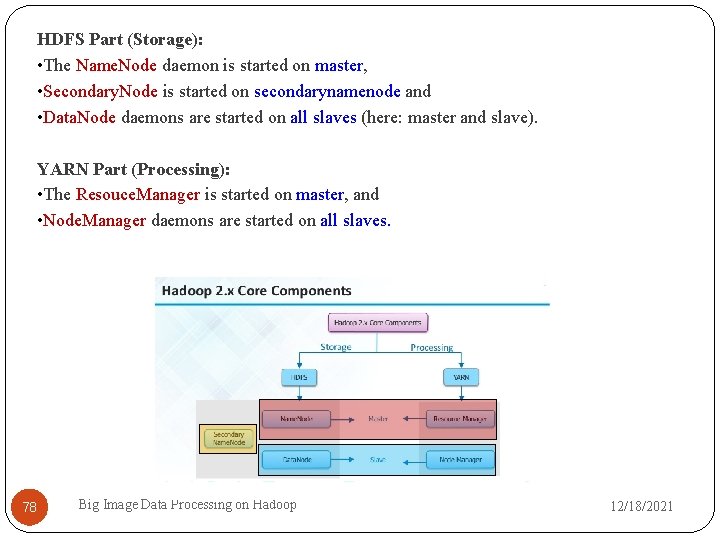

HDFS Part (Storage):

• The NameNode daemon is started on master,
• the SecondaryNameNode is started on secondarynamenode, and
• DataNode daemons are started on all slaves (here: master and slave).

YARN Part (Processing):

• The ResourceManager is started on master, and
• NodeManager daemons are started on all slaves.

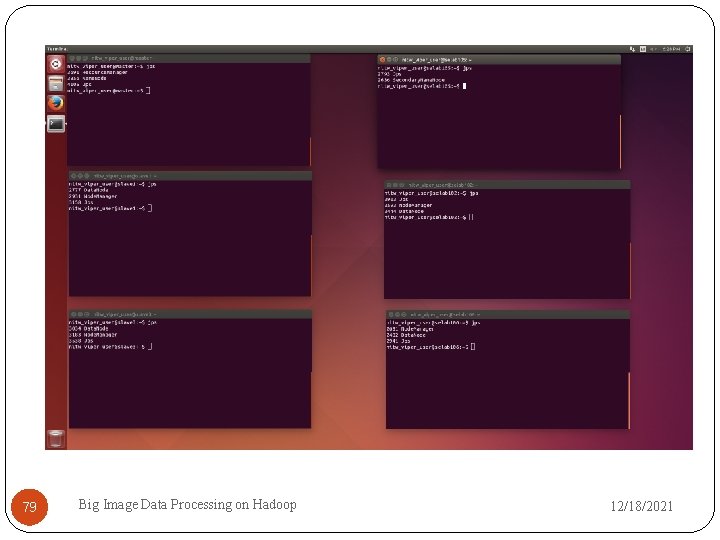


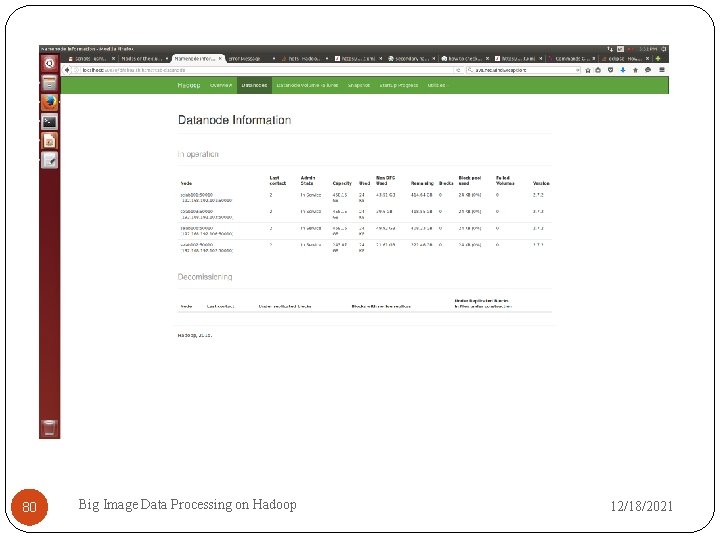


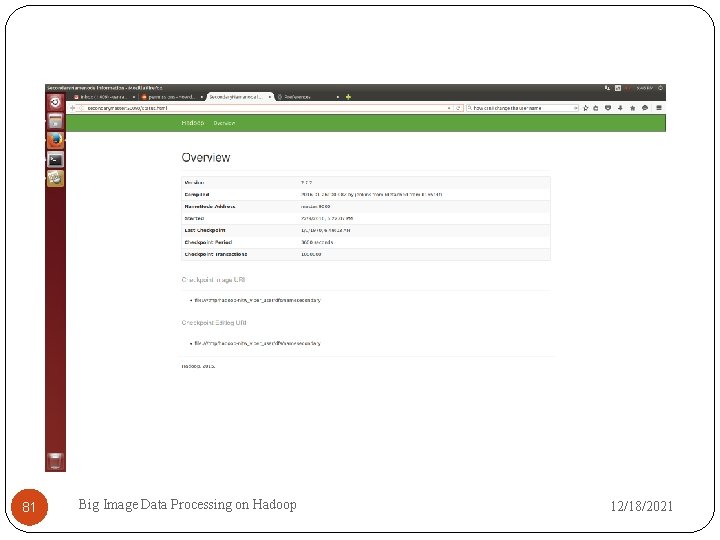


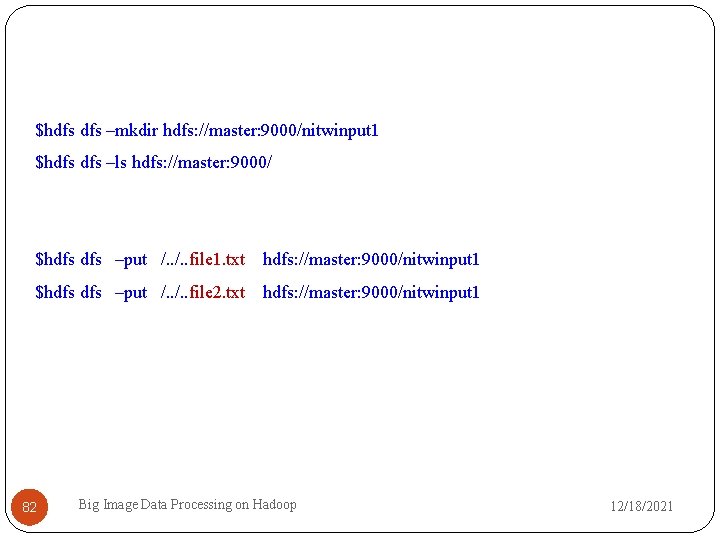

    $ hdfs dfs -mkdir hdfs://master:9000/nitwinput1
    $ hdfs dfs -ls hdfs://master:9000/
    $ hdfs dfs -put /..file1.txt hdfs://master:9000/nitwinput1
    $ hdfs dfs -put /..file2.txt hdfs://master:9000/nitwinput1

(Here /.. abbreviates the local path of each file.)

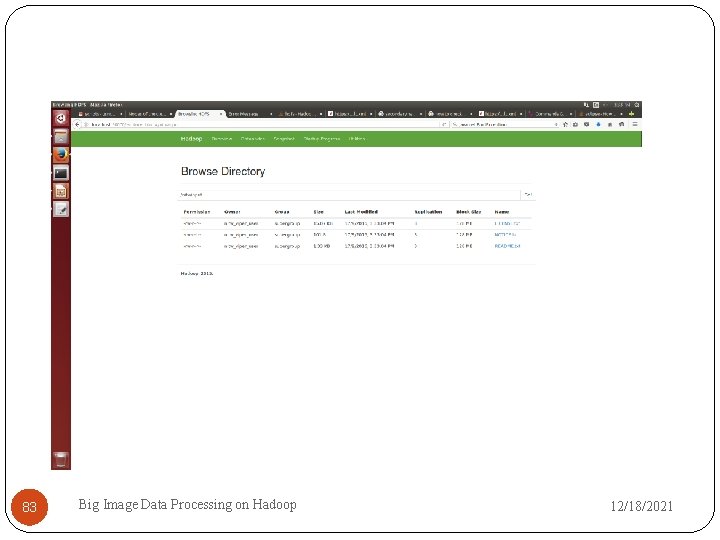


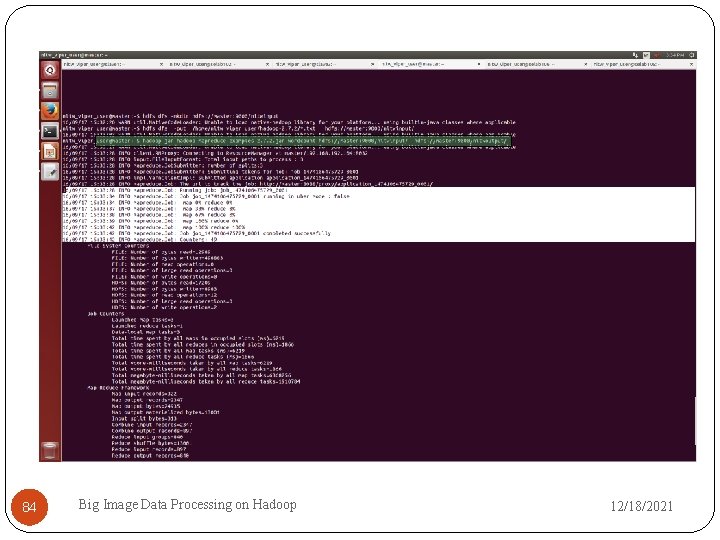


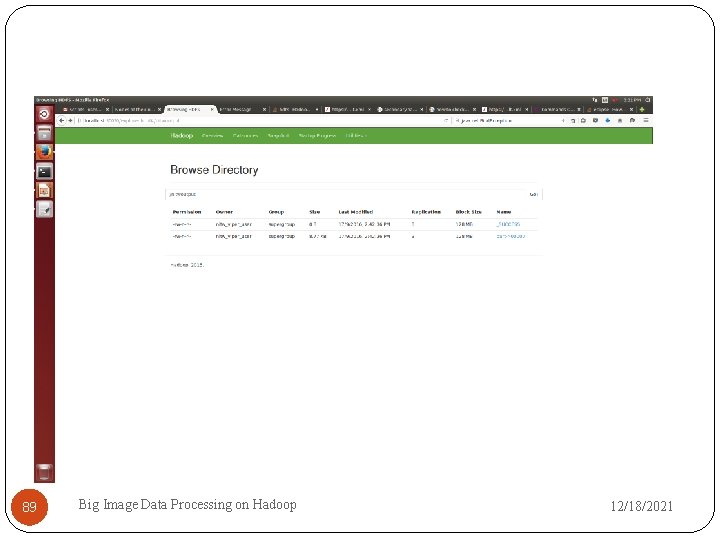


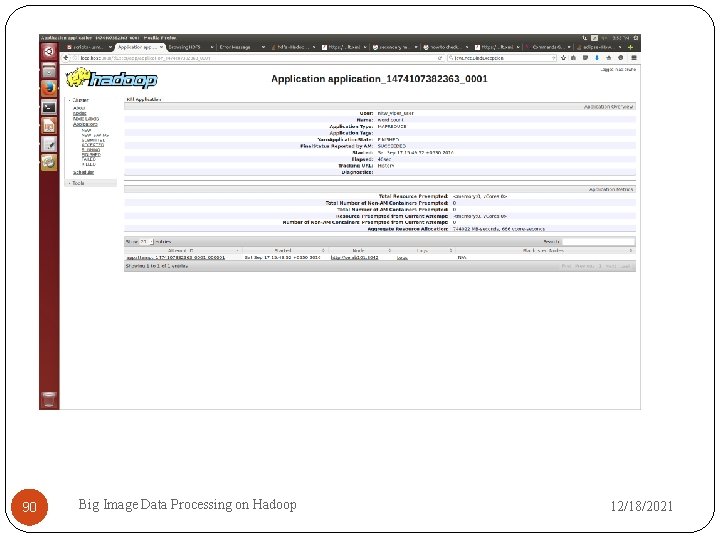


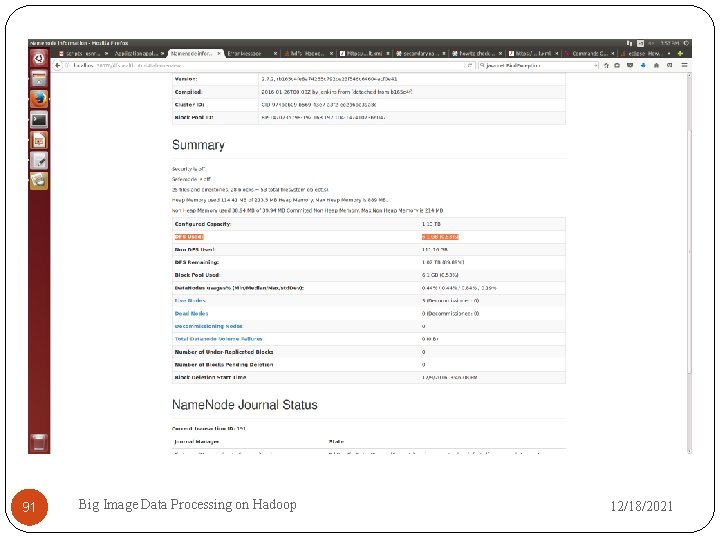


Thank you …
