Guided Local Search CP Meets OR Edward Tsang

- Slides: 23

Guided Local Search – CP Meets OR
Edward Tsang
CP-AI-OR ’02
Constraint Satisfaction and Optimisation Group, University of Essex
http://cswww.essex.ac.uk/CSP/
GLS: CP Meets OR

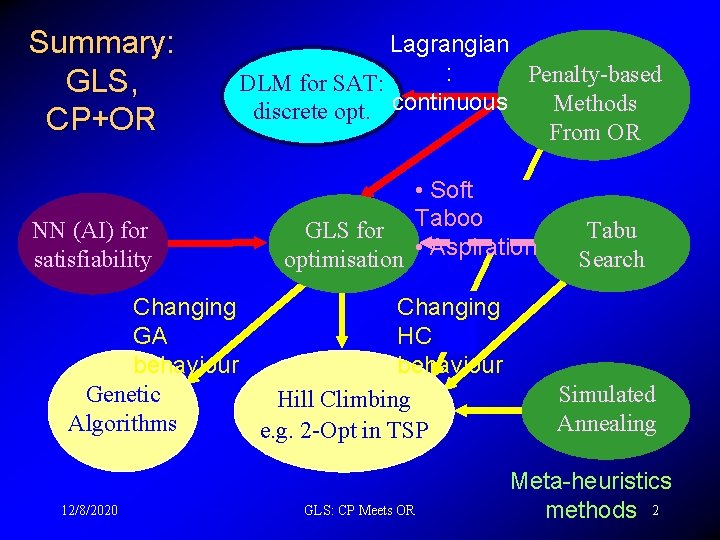

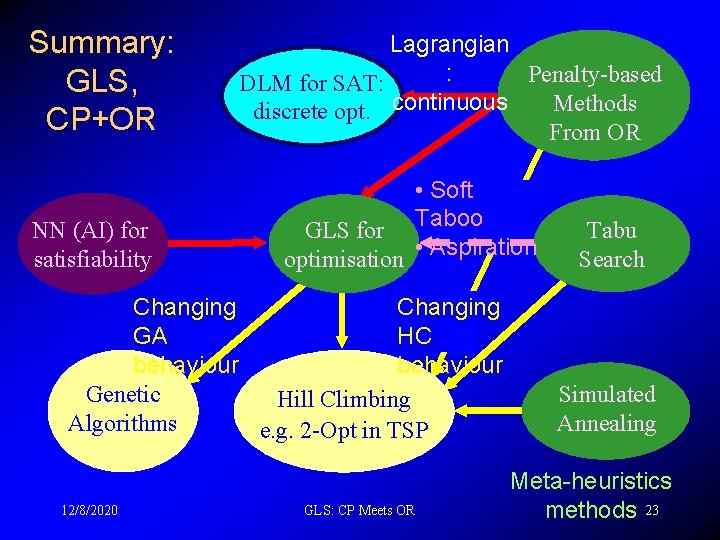

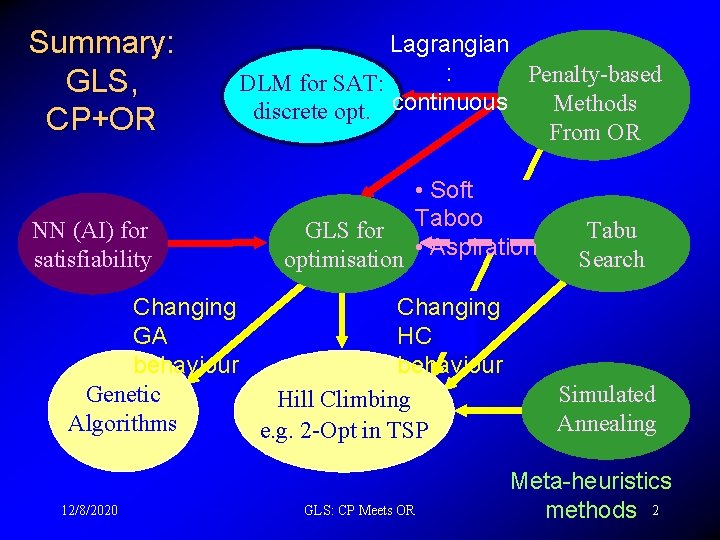

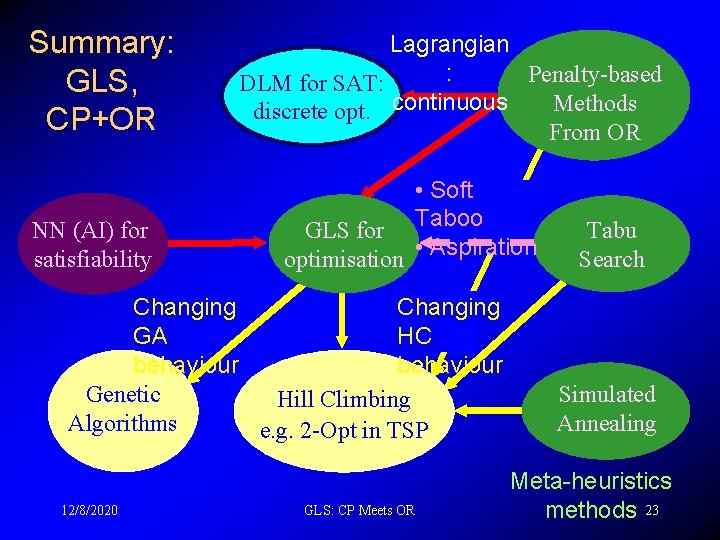

Summary: GLS, CP+OR

- NN (AI) for satisfiability
- Lagrangian Methods: continuous
- DLM for SAT: discrete opt.
- Penalty-based
- GLS for optimisation
- From OR: Soft Taboo, Aspiration
- GA: Genetic Algorithms
- Hill Climbing, e.g. 2-Opt in TSP
- Meta-heuristics methods (changing HC behaviour): Tabu Search, Simulated Annealing

12/8/2020 GLS: CP Meets OR

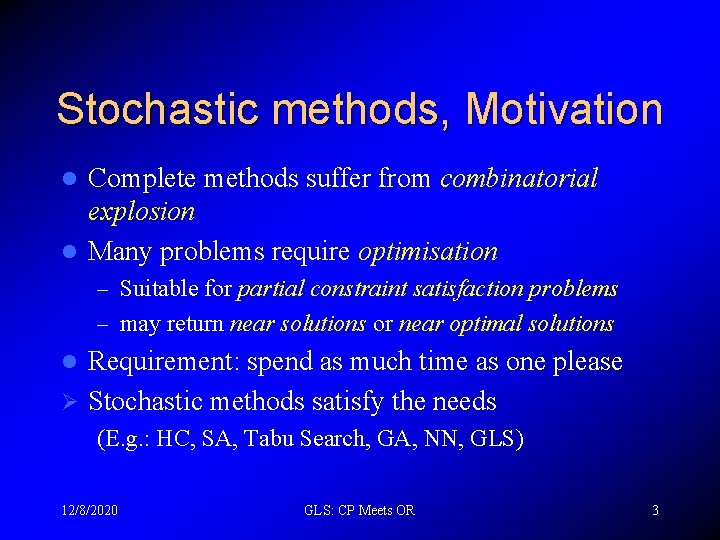

Stochastic methods: Motivation

- Complete methods suffer from combinatorial explosion
- Many problems require optimisation
  - Suitable for partial constraint satisfaction problems
  - May return near solutions or near-optimal solutions
- Requirement: spend as much time as one pleases
- Stochastic methods satisfy these needs (e.g. HC, SA, Tabu Search, GA, NN, GLS)

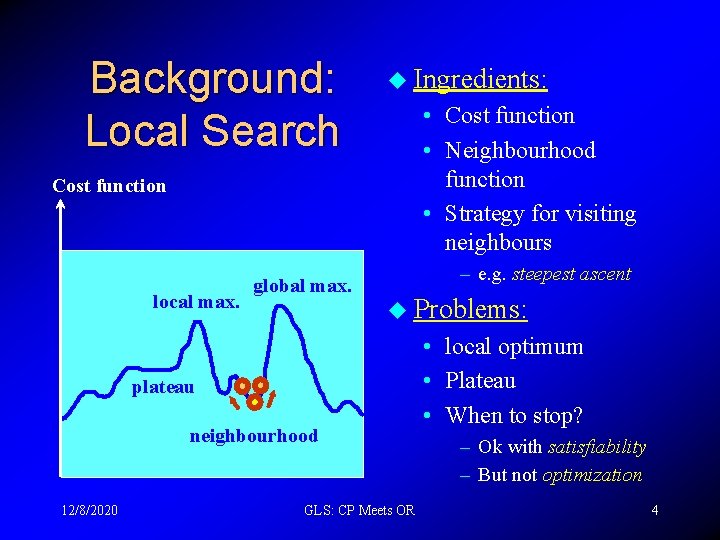

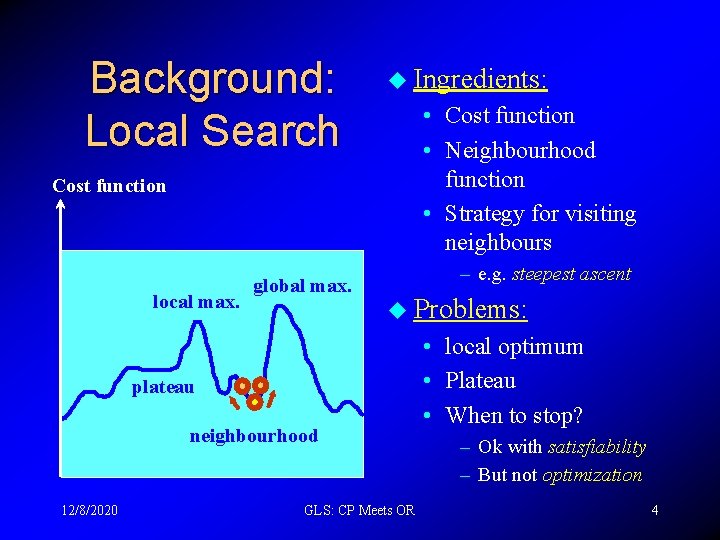

Background: Local Search

- Ingredients:
  - Cost function
  - Neighbourhood function
  - Strategy for visiting neighbours, e.g. steepest ascent
- Problems:
  - Local optimum
  - Plateau
  - When to stop? OK with satisfiability, but not with optimization
- Figure: cost function over the neighbourhood, showing a local max., a global max. and a plateau
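
The three ingredients above can be sketched as a steepest-ascent hill climber. This is a minimal illustration, not code from the talk: the names `steepest_ascent`, `landscape`, `cost` and `neighbours` are hypothetical, and the landscape is a made-up one-dimensional example with one local and one global maximum.

```python
def steepest_ascent(start, cost, neighbours, max_steps=1000):
    """Move to the best neighbour until none is strictly better."""
    current = start
    for _ in range(max_steps):
        best = max(neighbours(current), key=cost)
        if cost(best) <= cost(current):
            return current  # local optimum or plateau: the search stops here
        current = best
    return current


# Hypothetical landscape: local max. at index 2, global max. at index 6.
landscape = [1, 3, 5, 4, 2, 6, 9, 7]


def cost(i):
    return landscape[i]


def neighbours(i):
    return [j for j in (i - 1, i + 1) if 0 <= j < len(landscape)]


from_left = steepest_ascent(0, cost, neighbours)   # stops at the local max.
from_middle = steepest_ascent(4, cost, neighbours)  # reaches the global max.
```

Which optimum is reached depends only on the starting point, which is the weakness the rest of the talk addresses.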

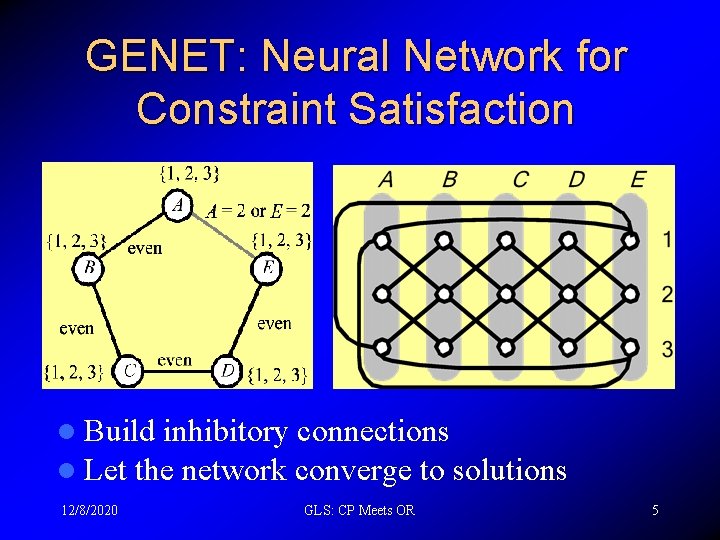

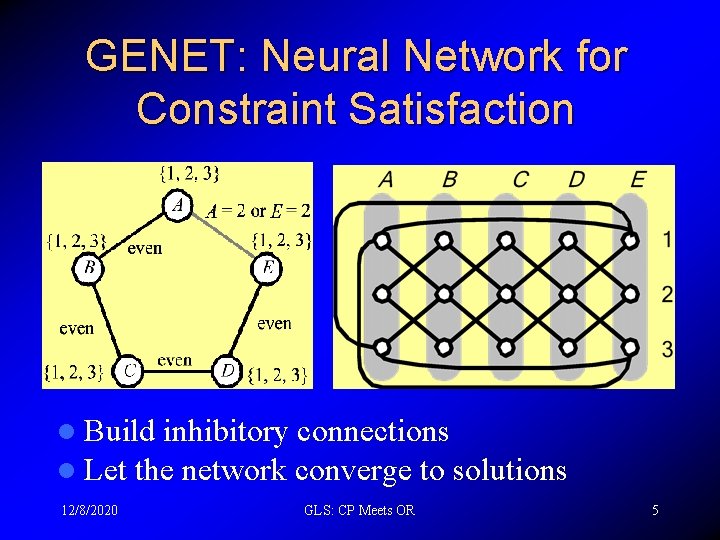

GENET: Neural Network for Constraint Satisfaction

- Build inhibitory connections
- Let the network converge to solutions

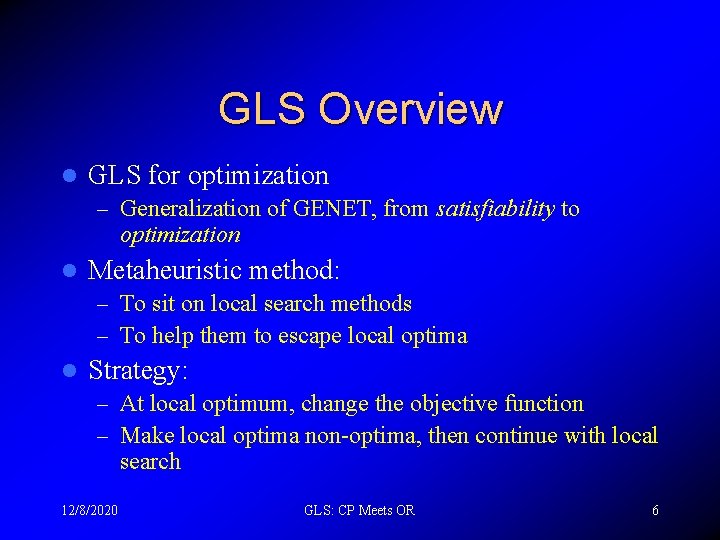

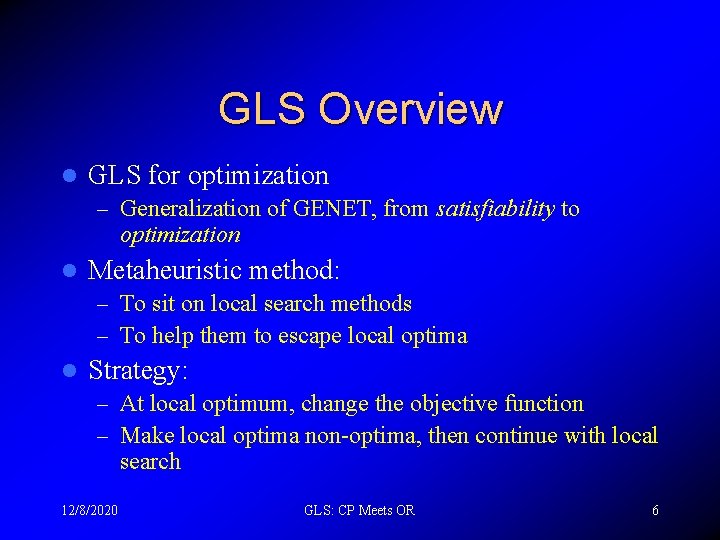

GLS Overview

- GLS for optimization
  - Generalization of GENET, from satisfiability to optimization
- Metaheuristic method:
  - Sits on top of local search methods
  - Helps them to escape local optima
- Strategy:
  - At a local optimum, change the objective function
  - Make local optima non-optima, then continue with local search

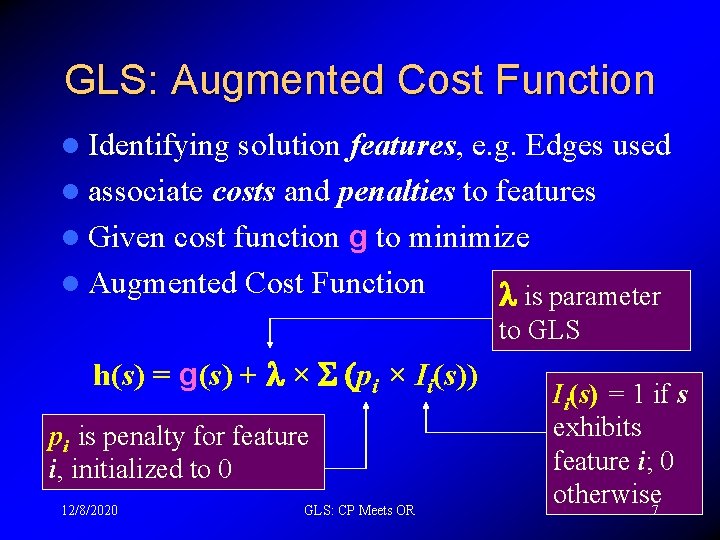

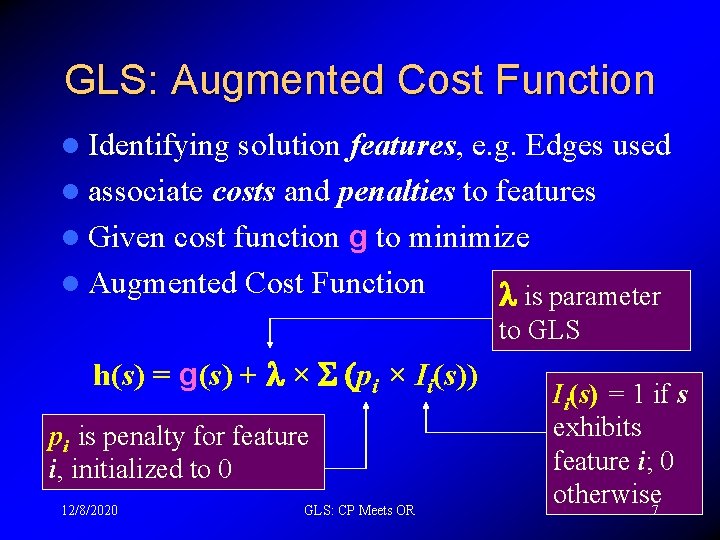

GLS: Augmented Cost Function

- Identify solution features, e.g. edges used
- Associate costs and penalties with features
- Given a cost function $g$ to minimize
- Augmented cost function: $h(s) = g(s) + \lambda \times \sum_i (p_i \times I_i(s))$
  - $\lambda$ is a parameter to GLS
  - $p_i$ is the penalty for feature $i$, initialized to 0
  - $I_i(s) = 1$ if $s$ exhibits feature $i$; 0 otherwise
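
A small sketch of the augmented cost function, assuming the GLS parameter is written `lam` (λ) and that features are given as indicator functions. The toy solution encoding (a tuple of bits, one feature per bit) and the `weights` are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def augmented_cost(s, g, indicators, penalties, lam):
    """h(s) = g(s) + lam * sum_i(p_i * I_i(s))."""
    return g(s) + lam * sum(p * ind(s) for p, ind in zip(penalties, indicators))


# Hypothetical toy problem: a solution is a tuple of bits,
# feature i is "bit i is set", and g sums the weights of the set bits.
weights = [4.0, 2.0, 7.0]


def g(s):
    return sum(w for w, bit in zip(weights, s) if bit)


indicators = [lambda s, i=i: 1 if s[i] else 0 for i in range(len(weights))]
penalties = [0, 0, 0]  # p_i initialised to 0

s = (1, 0, 1)
h_before = augmented_cost(s, g, indicators, penalties, lam=0.5)  # equals g(s)
penalties[2] += 1  # penalise feature 2, which s exhibits
h_after = augmented_cost(s, g, indicators, penalties, lam=0.5)
```

With all penalties at 0 the augmented cost equals the original cost; each penalty increment on an exhibited feature raises it by `lam`.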

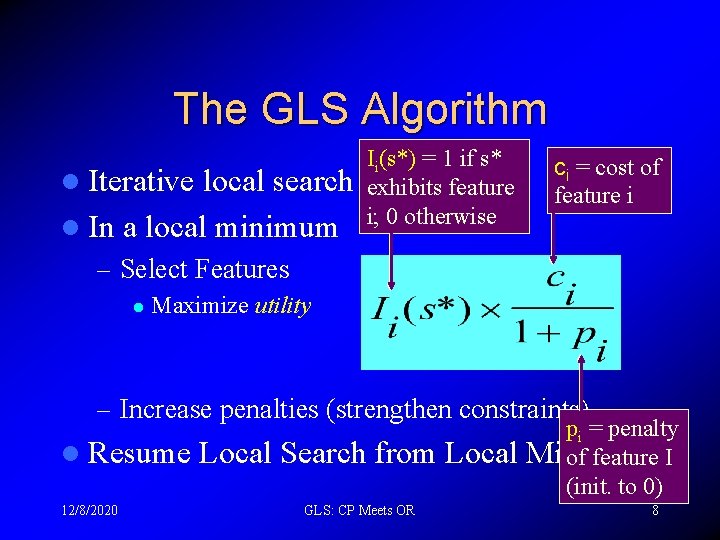

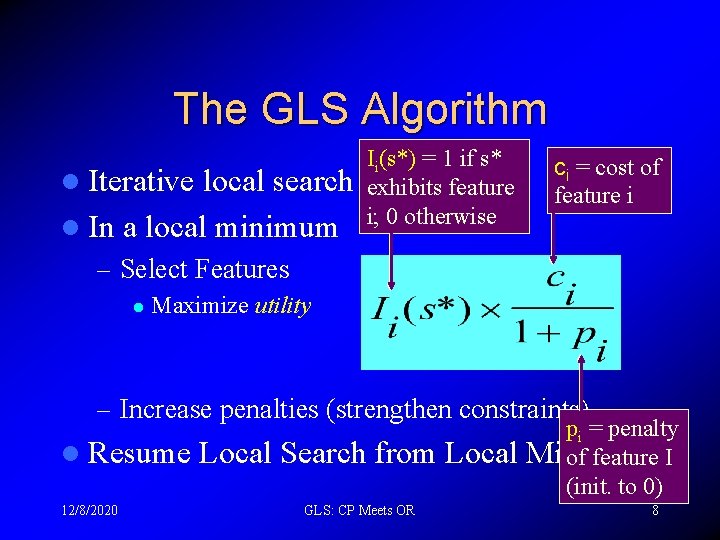

The GLS Algorithm

- Iterative local search
- In a local minimum $s^*$:
  - Select features: maximize utility
  - Increase penalties (strengthen constraints)
- Resume local search from the local minimum
- Notation:
  - $I_i(s^*) = 1$ if $s^*$ exhibits feature $i$; 0 otherwise
  - $c_i$ = cost of feature $i$
  - $p_i$ = penalty of feature $i$ (init. to 0)
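
The loop above can be sketched as follows. The utility formula does not survive in the slide text; the sketch assumes the form usually given in the GLS literature, util_i(s*) = I_i(s*) × c_i / (1 + p_i), and increments by 1 the penalty of every feature with maximal utility. The one-dimensional landscape, the feature choice ("being at position i") and all function names are hypothetical.

```python
def local_search(s, h, neighbours):
    """Steepest descent on h: stop when no neighbour is strictly better."""
    while True:
        best = min(neighbours(s), key=h)
        if h(best) >= h(s):
            return s
        s = best


def guided_local_search(start, g, neighbours, indicators, costs, lam, iterations):
    penalties = [0] * len(indicators)  # p_i, initialised to 0

    def h(s):  # augmented cost, reads the current penalties
        return g(s) + lam * sum(p * ind(s) for p, ind in zip(penalties, indicators))

    s = best = start
    for _ in range(iterations):
        s = local_search(s, h, neighbours)  # resume from the last local minimum
        if g(s) < g(best):                  # "best" is judged on the original cost
            best = s
        # Assumed utility: I_i(s) * c_i / (1 + p_i)
        utils = [ind(s) * c / (1 + p) for ind, c, p in zip(indicators, costs, penalties)]
        top = max(utils)
        if top > 0:
            for i, u in enumerate(utils):
                if u == top:
                    penalties[i] += 1
    return best


# Hypothetical landscape to minimise: local min. at index 1, global min. at index 5.
landscape = [5, 3, 4, 6, 2, 1, 4, 7]


def g(i):
    return landscape[i]


def neighbours(i):
    return [j for j in (i - 1, i + 1) if 0 <= j < len(landscape)]


indicators = [lambda s, i=i: 1 if s == i else 0 for i in range(len(landscape))]

plain = local_search(0, g, neighbours)  # gets stuck in the local minimum
guided = guided_local_search(0, g, neighbours, indicators,
                             costs=landscape, lam=1, iterations=50)
```

In this toy run, plain descent from index 0 stops at the local minimum, while the accumulated penalties eventually push the guided search over the ridge to the global minimum.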

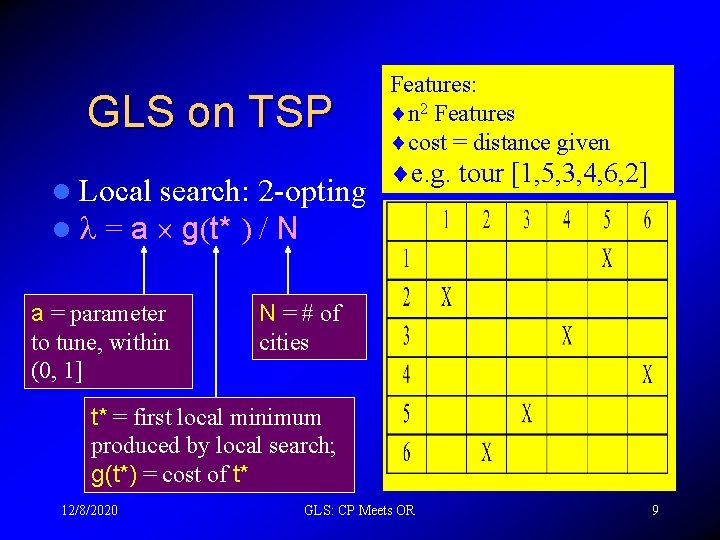

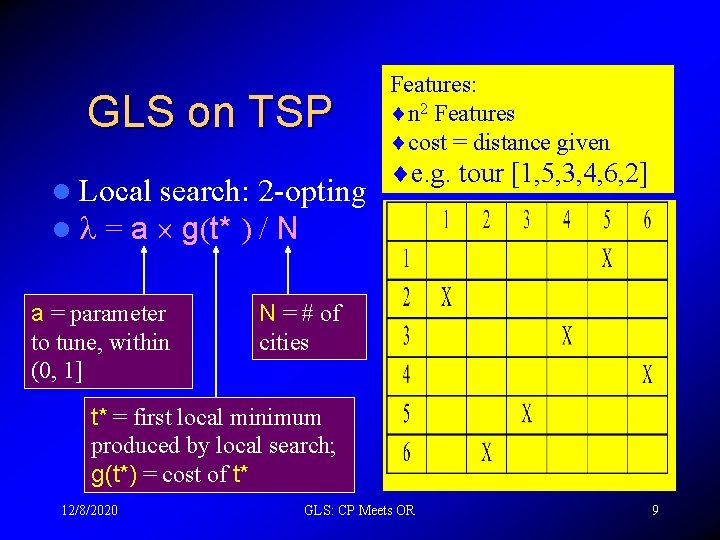

GLS on TSP

- Local search: 2-opting
- $\lambda = a \times g(t^*) / N$
  - $a$ = parameter to tune, within (0, 1]
  - $N$ = # of cities
  - $t^*$ = first local minimum produced by local search; $g(t^*)$ = cost of $t^*$
- Features:
  - $n^2$ features
  - cost = distance given
  - e.g. tour [1, 5, 3, 4, 6, 2]
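
A sketch of the TSP feature set and the λ formula, using the example tour from the slide. Treating edges as undirected pairs is an assumption made here for simplicity, and the local-minimum cost of 1200.0 and the value a = 0.5 are made-up numbers for illustration only.

```python
def tour_edges(tour):
    """Undirected edges used by a closed tour (assumption: direction is ignored)."""
    n = len(tour)
    return {frozenset((tour[k], tour[(k + 1) % n])) for k in range(n)}


def exhibits(tour, edge):
    """I_i(s): 1 if the tour uses the given edge, 0 otherwise."""
    return 1 if frozenset(edge) in tour_edges(tour) else 0


def gls_lambda(a, first_local_min_cost, n_cities):
    """lambda = a * g(t*) / N, with a in (0, 1]."""
    return a * first_local_min_cost / n_cities


tour = [1, 5, 3, 4, 6, 2]
edges = tour_edges(tour)

# Hypothetical numbers: a = 0.5 and g(t*) = 1200.0 are not from the talk.
lam = gls_lambda(a=0.5, first_local_min_cost=1200.0, n_cities=len(tour))
```

The tour uses six edges, including the closing edge between cities 2 and 1; the cost of each edge feature would be the given distance between its two cities.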

Components in GLS

- Local search strategy
  - Also needed in HC, SA, Tabu Search
- Features, costs
  - Sometimes come naturally from the cost function
- Main parameter: $\lambda$
  - Experimental results are sometimes sensitive to $\lambda$
  - Our practice: $\lambda = a \times g(\text{first local optimum})$
- Question: how to tune $a$ (the $\lambda$-coefficient)?

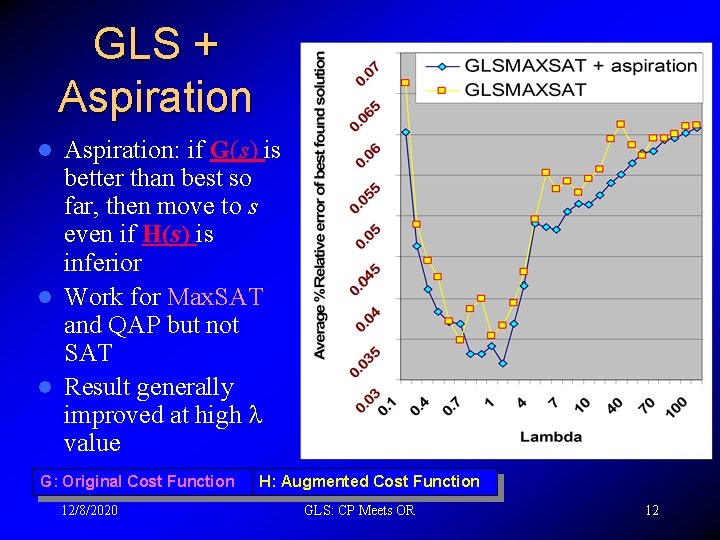

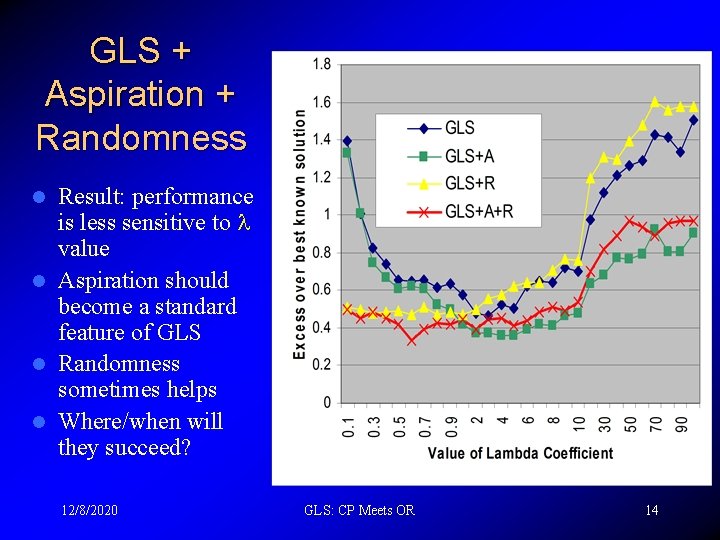

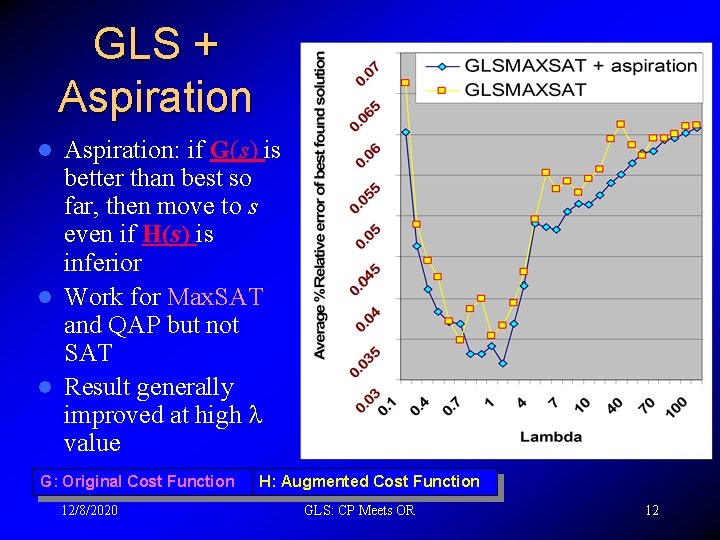

GLS + Aspiration

- Aspiration: if G(s) is better than the best so far, then move to s even if H(s) is inferior
- Works for MAXSAT and QAP but not SAT
- Results generally improved at high $\lambda$ values
- G: original cost function; H: augmented cost function
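
The aspiration rule can be sketched as a move-acceptance test, assuming minimisation. The function name `accept_move` and the two-state example are hypothetical; `g` plays the role of G (original cost) and `h` the role of H (augmented cost).

```python
def accept_move(candidate, current, best_g, g, h):
    """Accept if the augmented cost improves, or by aspiration on the original cost."""
    if g(candidate) < best_g:  # aspiration: better than the best so far on G
        return True
    return h(candidate) < h(current)


# Hypothetical two-state example: "t" is heavily penalised but cheap on G.
g_values = {"s": 5, "t": 3}
penalty_terms = {"s": 1, "t": 5}


def g(state):
    return g_values[state]


def h(state):
    return g_values[state] + penalty_terms[state]


with_aspiration = accept_move("t", "s", best_g=4, g=g, h=h)  # G(t)=3 beats best 4
without_benefit = accept_move("t", "s", best_g=2, g=g, h=h)  # G(t)=3 does not beat 2
```

In the first call the move is taken even though H(t) = 8 is worse than H(s) = 6; in the second call aspiration does not apply and the move is rejected on H.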

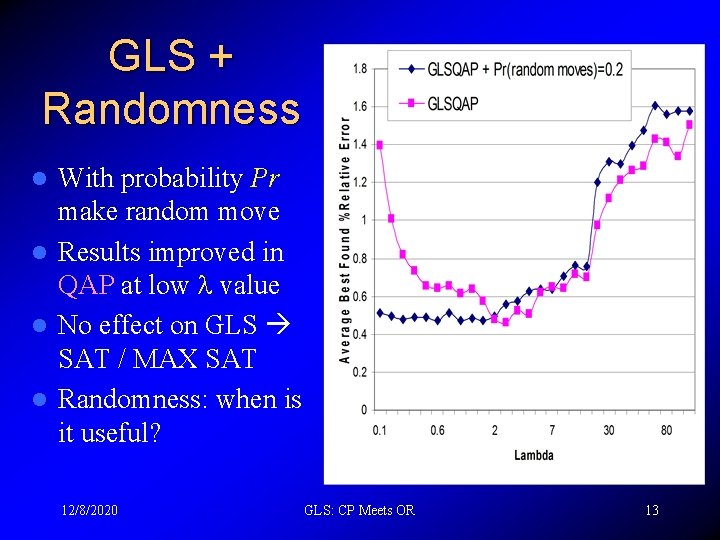

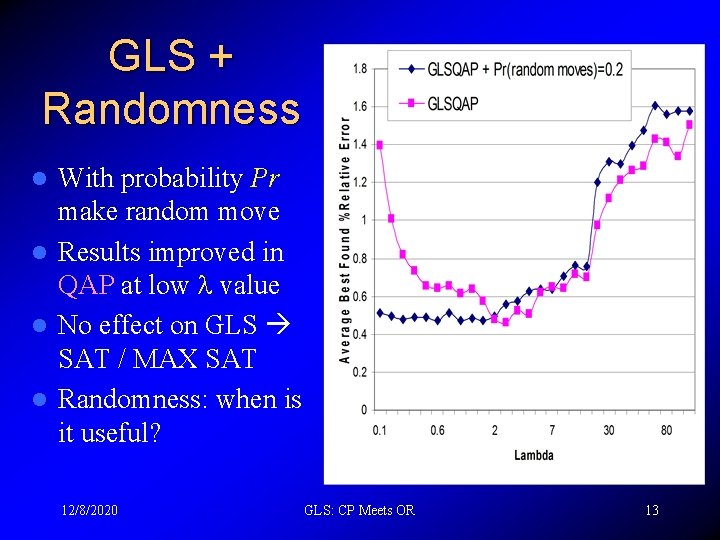

GLS + Randomness

- With probability Pr, make a random move
- Results improved in QAP at low $\lambda$ values
- No effect on GLS for SAT / MAXSAT
- Randomness: when is it useful?
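
One possible reading of the random-move rule is sketched below: with probability `pr` pick any neighbour, otherwise pick the neighbour with the lowest augmented cost. The function name `choose_move` and the small example are hypothetical; the talk does not specify how the random move is drawn.

```python
import random


def choose_move(current, neighbours, h, pr, rng):
    """With probability pr return a random neighbour, else the best one under h."""
    options = list(neighbours(current))
    if rng.random() < pr:
        return rng.choice(options)
    return min(options, key=h)


# Hypothetical example on positions 0..4 with augmented costs h_values.
h_values = [4, 2, 5, 1, 3]


def h(i):
    return h_values[i]


def neighbours(i):
    return [j for j in (i - 1, i + 1) if 0 <= j < len(h_values)]


rng = random.Random(0)
greedy = choose_move(2, neighbours, h, pr=0.0, rng=rng)     # always the best neighbour
randomised = choose_move(2, neighbours, h, pr=1.0, rng=rng)  # always a random neighbour
```

Setting `pr` to 0 recovers the purely greedy choice, so the same function covers GLS with and without randomness.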

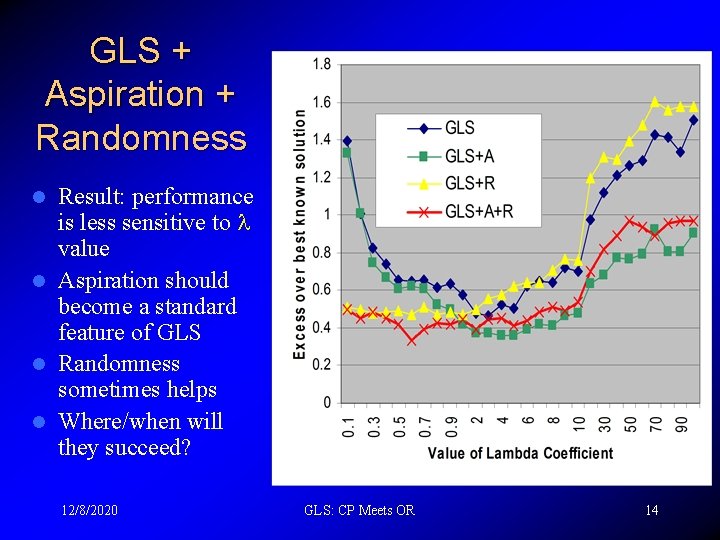

GLS + Aspiration + Randomness

- Result: performance is less sensitive to the $\lambda$ value
- Aspiration should become a standard feature of GLS
- Randomness sometimes helps
- Where/when will they succeed?

Some GLS Applications

- Radio Link Frequency Assignment
- BT’s workforce scheduling
- Quadratic assignment
- SAT / MAXSAT
- Vehicle Routing
- Logic Programming (Melbourne, Singapore, Hong Kong)
- Train scheduling (King’s College, London)
- Bus scheduling (Leeds)
- Bin Packing (University of Copenhagen)

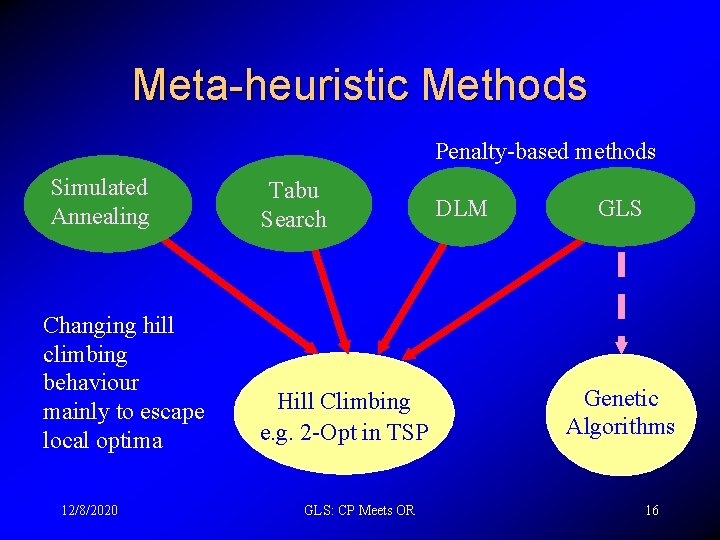

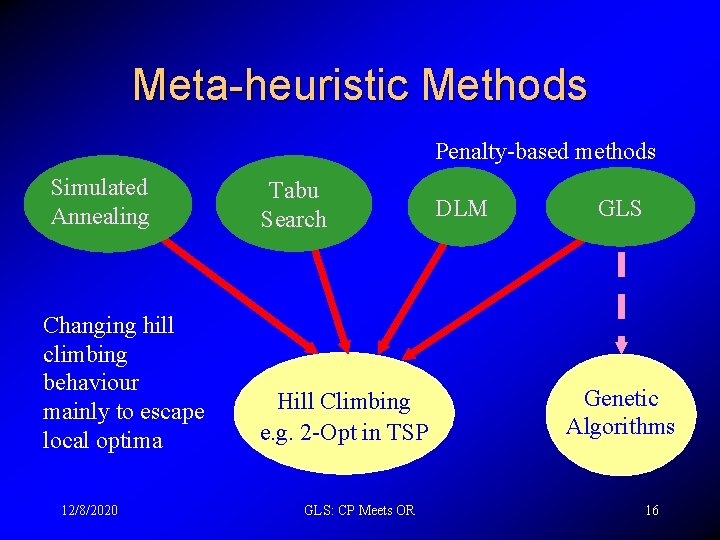

Meta-heuristic Methods

- Hill Climbing, e.g. 2-Opt in TSP
- Changing hill climbing behaviour, mainly to escape local optima
- Simulated Annealing
- Tabu Search
- Penalty-based methods: DLM, GLS
- Genetic Algorithms

GLS & Tabu Search

- TS is a class of algorithms
  - Various ways to manipulate the taboo list
  - GLS is a more specific algorithm
- Penalties in GLS are soft taboos
  - Taboos are normally hard constraints in TS
- GLS borrowed the taboo list from TS
- GLS+ borrowed the aspiration idea from TS
- Hybrid GLS+TS used in ILOG Dispatcher

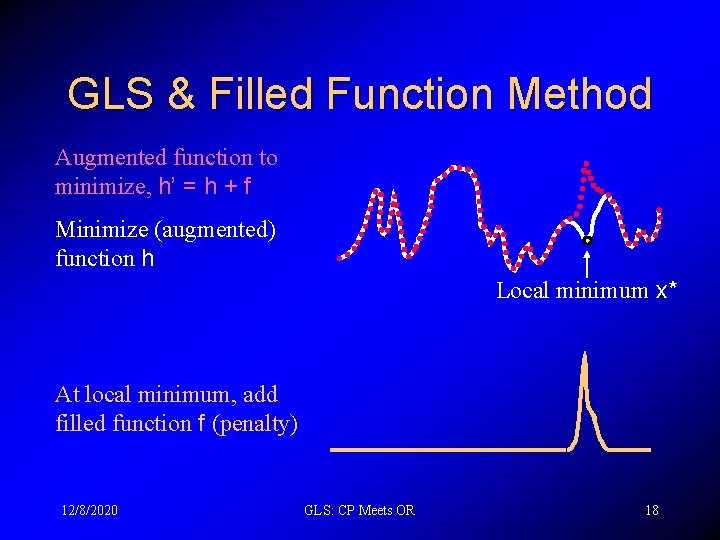

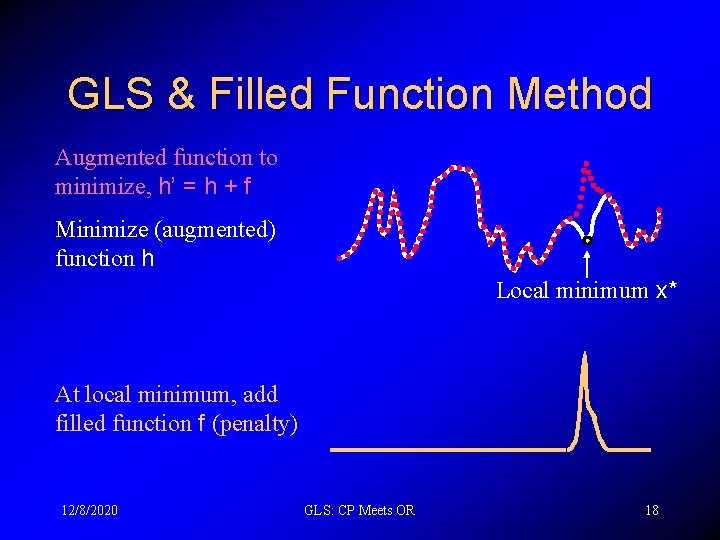

GLS & Filled Function Method

- Minimize the (augmented) function $h$
- At a local minimum $x^*$, add a filled function $f$ (penalty)
- Augmented function to minimize: $h' = h + f$

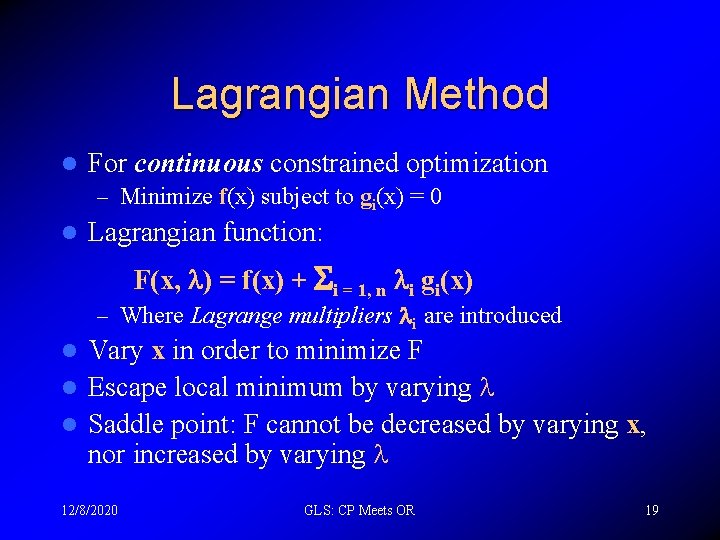

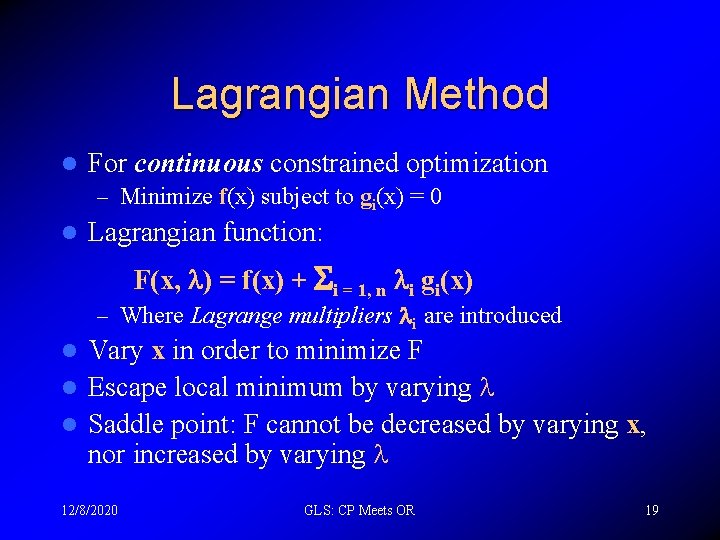

Lagrangian Method

- For continuous constrained optimization
  - Minimize $f(x)$ subject to $g_i(x) = 0$
- Lagrangian function: $F(x, \lambda) = f(x) + \sum_{i=1}^{n} \lambda_i \, g_i(x)$
  - where Lagrange multipliers $\lambda_i$ are introduced
- Vary $x$ in order to minimize $F$
- Escape local minima by varying $\lambda$
- Saddle point: $F$ cannot be decreased by varying $x$, nor increased by varying $\lambda$
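
The saddle-point idea can be illustrated on a small made-up problem: minimize f(x, y) = x² + y² subject to x + y − 1 = 0. The sketch below descends on the variables and ascends on the single multiplier using plain gradient steps; the problem, the step size `eta` and the function name `descent_ascent` are assumptions for illustration, not part of the talk.

```python
def descent_ascent(steps=500, eta=0.1):
    """Gradient descent on (x, y) and gradient ascent on lam for
    F(x, y, lam) = x**2 + y**2 + lam * (x + y - 1)."""
    x, y, lam = 0.0, 0.0, 0.0
    for _ in range(steps):
        dx = 2 * x + lam   # dF/dx
        dy = 2 * y + lam   # dF/dy
        dlam = x + y - 1   # dF/dlam, i.e. the constraint violation
        # Simultaneous update: decrease F in x and y, increase it in lam.
        x, y, lam = x - eta * dx, y - eta * dy, lam + eta * dlam
    return x, y, lam


x, y, lam = descent_ascent()
```

For this convex example the iteration settles at the saddle point x = y = 0.5 with multiplier −1, where the constraint holds and neither kind of step changes F any further.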

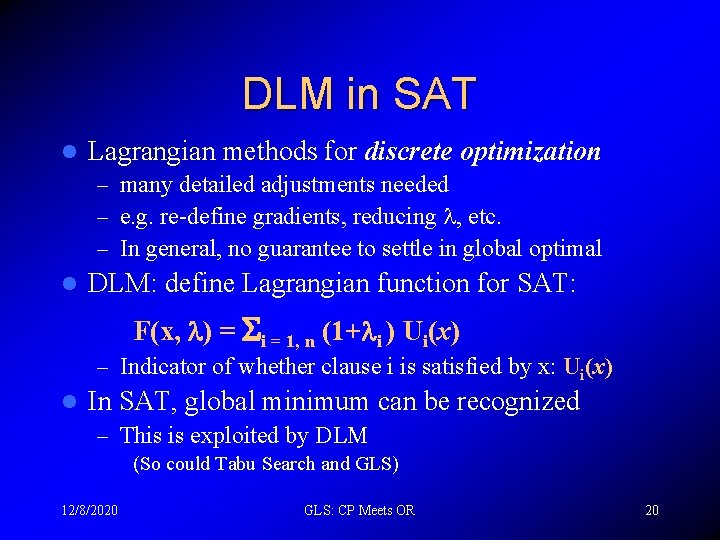

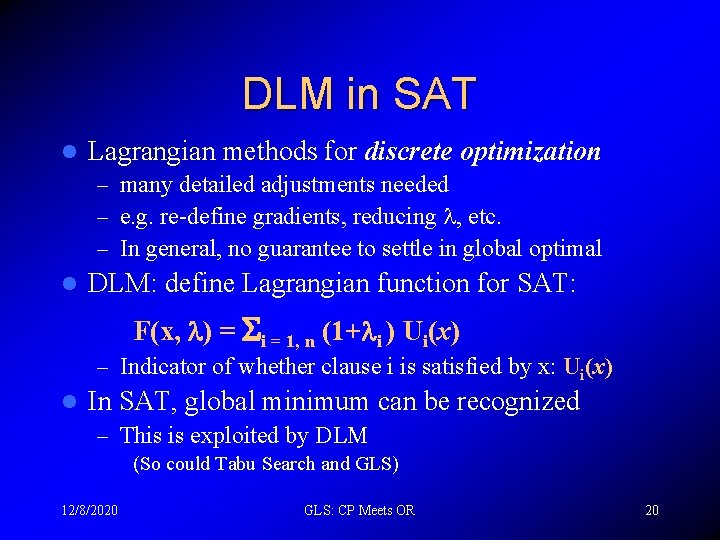

DLM in SAT

- Lagrangian methods for discrete optimization
  - Many detailed adjustments needed
  - e.g. re-define gradients, reducing $\lambda$, etc.
  - In general, no guarantee of settling in a global optimum
- DLM: define a Lagrangian function for SAT: $F(x, \lambda) = \sum_{i=1}^{n} (1 + \lambda_i) \, U_i(x)$
  - $U_i(x)$: indicator of whether clause $i$ is satisfied by $x$
- In SAT, a global minimum can be recognized
  - This is exploited by DLM (so could Tabu Search and GLS)
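
A heavily simplified sketch of the idea, not the published DLM procedure (which needs the detailed adjustments listed above). It assumes the convention that U_i(x) = 1 when clause i is unsatisfied and 0 otherwise, so that F = 0 exactly when every clause is satisfied and the global minimum can be recognized. Clauses are lists of signed integers; the function names and the test formula are hypothetical.

```python
def unsat(clause, x):
    """U_i(x) under the assumed convention: 1 if the clause is unsatisfied, else 0."""
    return 0 if any(x[abs(lit)] == (lit > 0) for lit in clause) else 1


def lagrangian(clauses, x, lam):
    """F(x, lam) = sum_i (1 + lam_i) * U_i(x)."""
    return sum((1 + lam[i]) * unsat(c, x) for i, c in enumerate(clauses))


def flipped(x, v):
    return {**x, v: not x[v]}


def dlm_sketch(clauses, n_vars, max_steps=1000):
    x = {v: False for v in range(1, n_vars + 1)}
    lam = [0] * len(clauses)
    for _ in range(max_steps):
        current = lagrangian(clauses, x, lam)
        if current == 0:  # global minimum recognized: all clauses satisfied
            return x
        best = min(x, key=lambda v: lagrangian(clauses, flipped(x, v), lam))
        if lagrangian(clauses, flipped(x, best), lam) < current:
            x = flipped(x, best)           # descend in x
        else:
            for i, c in enumerate(clauses):
                lam[i] += unsat(c, x)      # ascend in lam on unsatisfied clauses
    return None


# Hypothetical formula: (x1 or x2) and (not x1 or x3) and (not x2 or not x3) and (x2 or x3)
clauses = [[1, 2], [-1, 3], [-2, -3], [2, 3]]
solution = dlm_sketch(clauses, n_vars=3)
```

Raising the multipliers of unsatisfied clauses plays the same role as raising feature penalties in GLS: it reshapes the function being minimised until the current assignment is no longer a local minimum.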

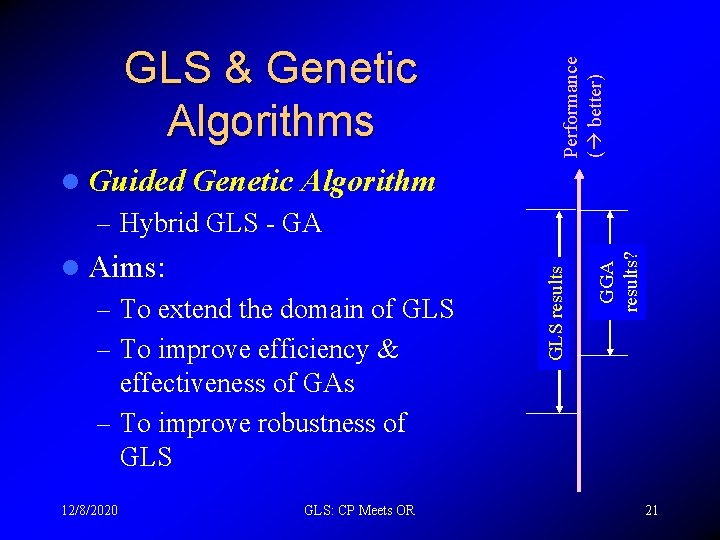

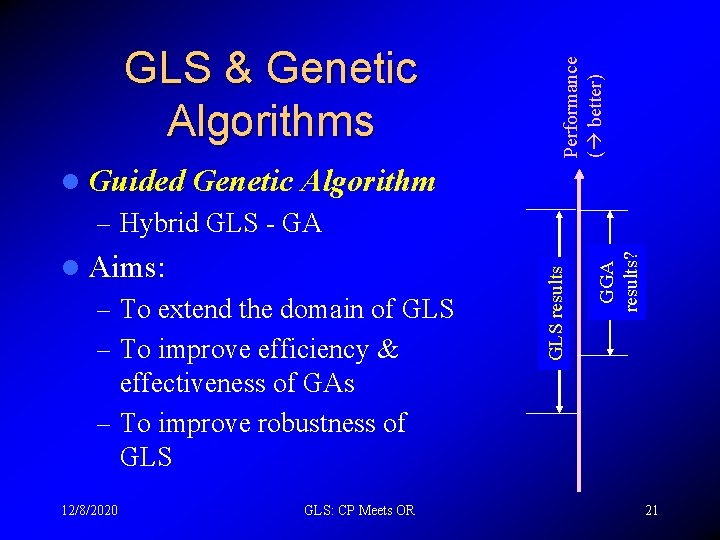

GLS & Genetic Algorithms

- Guided Genetic Algorithm (GGA): hybrid GLS-GA
- Aims:
  - To extend the domain of GLS
  - To improve efficiency & effectiveness of GAs
  - To improve robustness of GLS
- Figure: performance (higher is better), GLS results vs. GGA results?

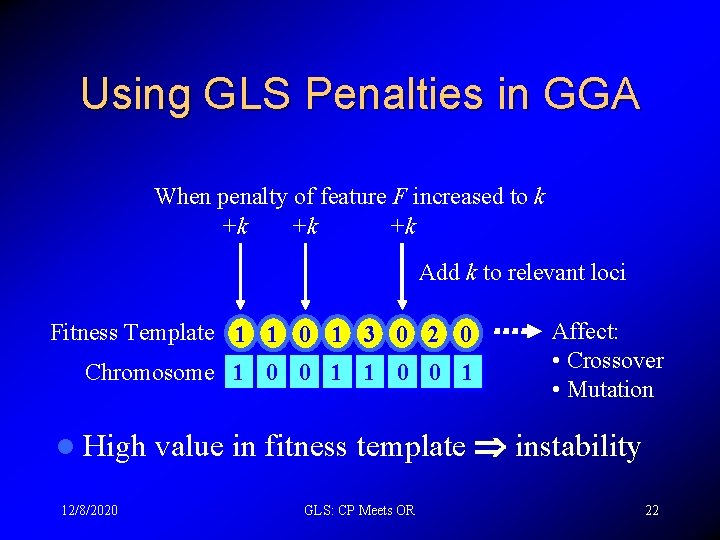

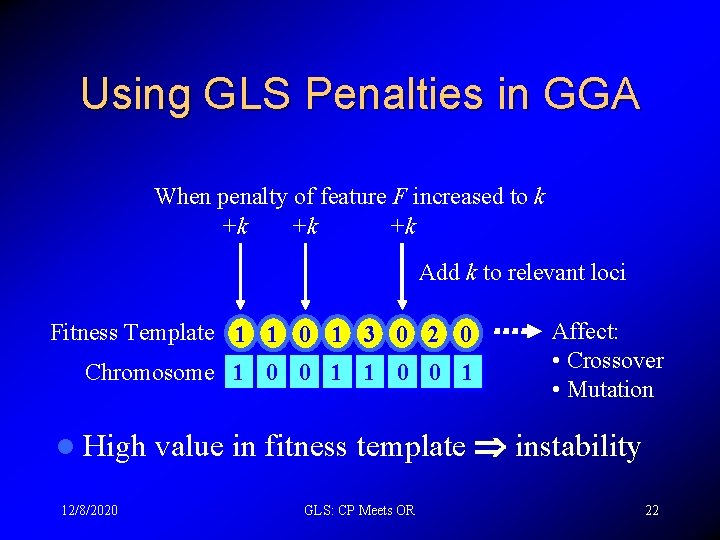

Using GLS Penalties in GGA

- When the penalty of feature F is increased to k, add k to the relevant loci (marked +k in the figure)
- Fitness template: 1 1 0 1 3 0 2 0
- Chromosome: 1 0 0 1
- High value in the fitness template: instability
- Affects:
  - Crossover
  - Mutation


The End

- http://cswww.essex.ac.uk/CSP
- ZDC GLS SAT, MAXSAT, QAP
- Edward Tsang, Chang Wang, Jim Doran, James Borrett, Andrew Davenport, Kangming Zhu, Chris Voudouris, Tung Leng Lau, John Ford, Patrick Mills, Richard Bradwell
- Funded by: University of Essex, EPSRC