GRID superscalar: a programming paradigm for GRID applications

- Slides: 65

GRID superscalar: a programming paradigm for GRID applications
CEPBA-IBM Research Institute
Rosa M. Badia, Jesús Labarta, Josep M. Pérez, Raül Sirvent
SC 2004, Pittsburgh, Nov. 6-12

Outline
• Objective
• The essence
• User’s interface
• Automatic code generation
• Run-time features
• Programming experiences
• Ongoing work
• Conclusions

Objective
• Ease the programming of GRID applications
• Basic idea:
[Figure labels: ns; seconds/minutes/hours; Grid]


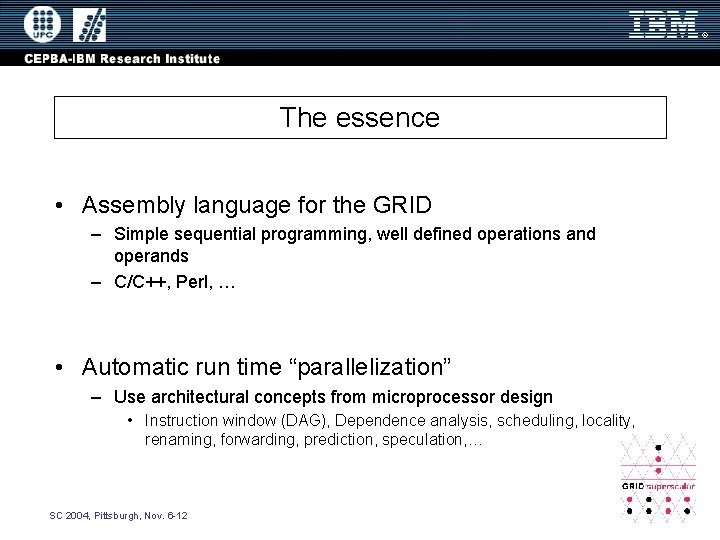

The essence
• Assembly language for the GRID
  – Simple sequential programming, well-defined operations and operands
  – C/C++, Perl, …
• Automatic run-time “parallelization”
  – Use architectural concepts from microprocessor design
    • Instruction window (DAG), dependence analysis, scheduling, locality, renaming, forwarding, prediction, speculation, …

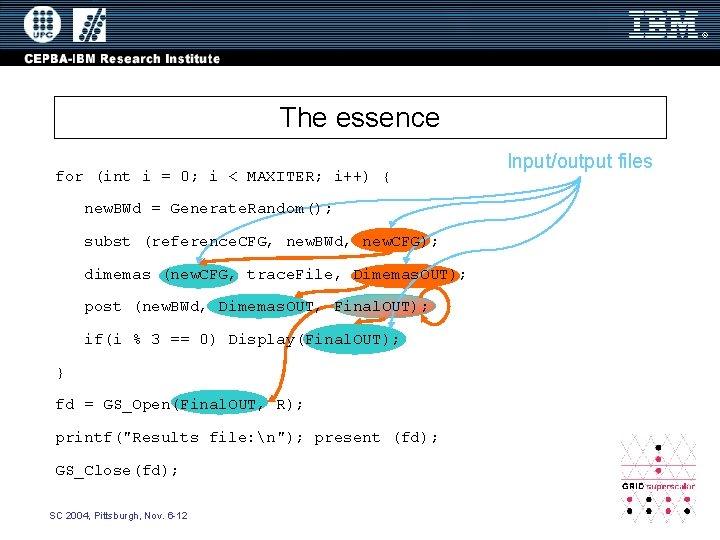

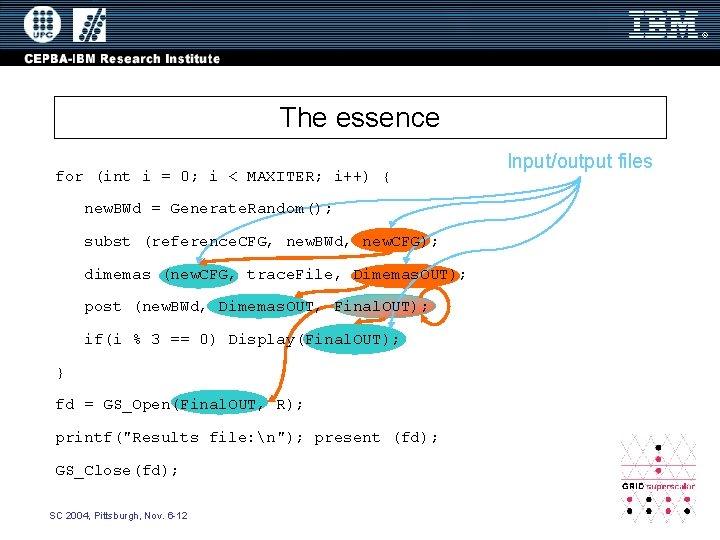

The essence

    for (int i = 0; i < MAXITER; i++) {
        newBWd = GenerateRandom();
        subst(referenceCFG, newBWd, newCFG);
        dimemas(newCFG, traceFile, DimemasOUT);
        post(newBWd, DimemasOUT, FinalOUT);
        if (i % 3 == 0) Display(FinalOUT);
    }
    fd = GS_Open(FinalOUT, R);
    printf("Results file:\n");
    present(fd);
    GS_Close(fd);

[Callout: input/output files]

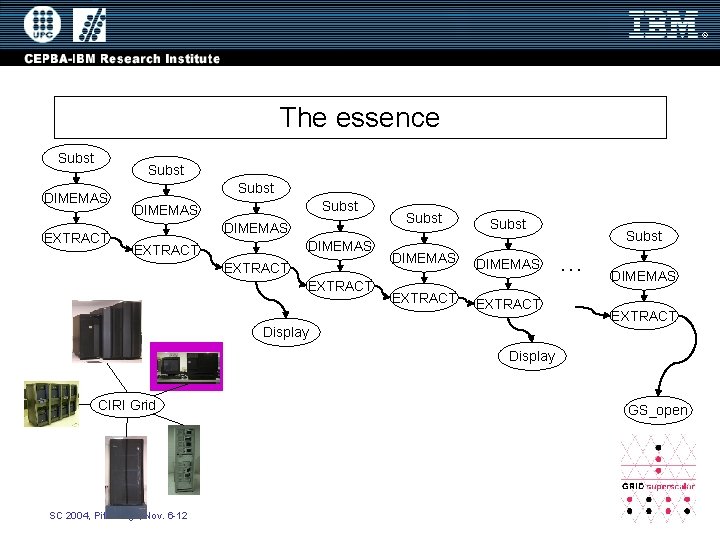

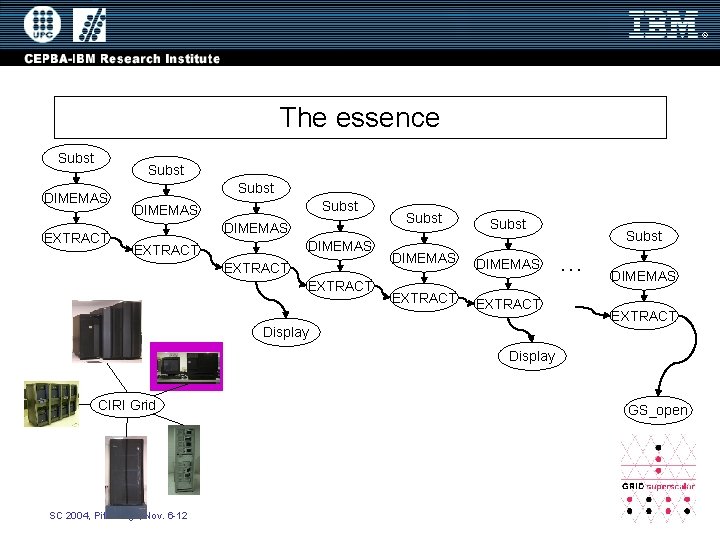

The essence
[Figure: task graph of repeated Subst, DIMEMAS and EXTRACT tasks, with Display and GS_open nodes, running on the CIRI Grid]



User’s interface
• Three components:
  – Main program
  – Subroutines/functions
  – Interface Definition Language (IDL) file
• Programming languages: C/C++, Perl

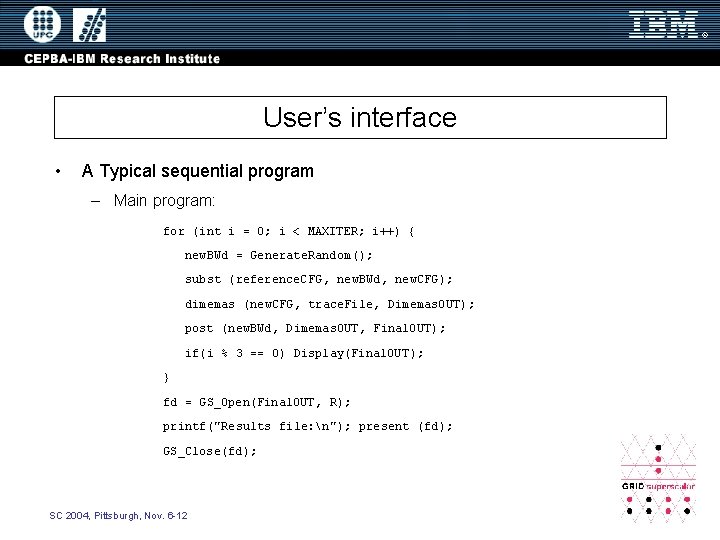

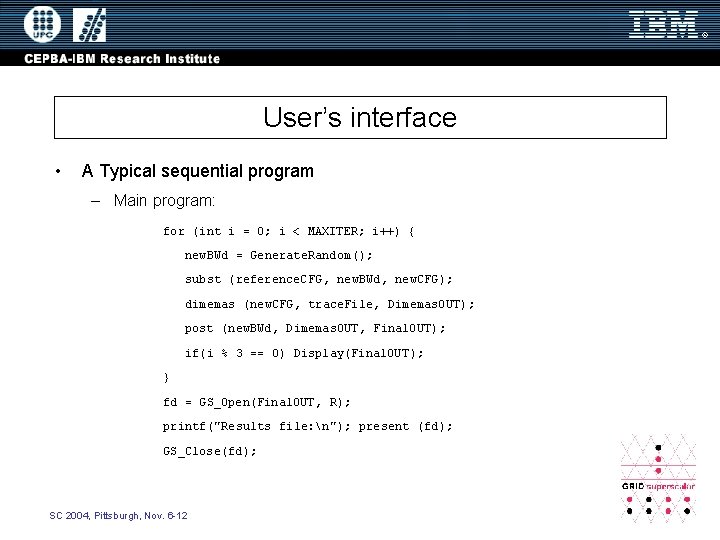

User’s interface
• A typical sequential program
  – Main program:

    for (int i = 0; i < MAXITER; i++) {
        newBWd = GenerateRandom();
        subst(referenceCFG, newBWd, newCFG);
        dimemas(newCFG, traceFile, DimemasOUT);
        post(newBWd, DimemasOUT, FinalOUT);
        if (i % 3 == 0) Display(FinalOUT);
    }
    fd = GS_Open(FinalOUT, R);
    printf("Results file:\n");
    present(fd);
    GS_Close(fd);

User’s interface
• A typical sequential program
  – Subroutines/functions

    void dimemas(in File newCFG, in File traceFile, out File DimemasOUT)
    {
        char command[500];
        putenv("DIMEMAS_HOME=/usr/local/cepba-tools");
        sprintf(command, "/usr/local/cepba-tools/bin/Dimemas -o %s %s", DimemasOUT, newCFG);
        GS_System(command);
    }

    void display(in File toplot)
    {
        char command[500];
        sprintf(command, "./display.sh %s", toplot);
        GS_System(command);
    }

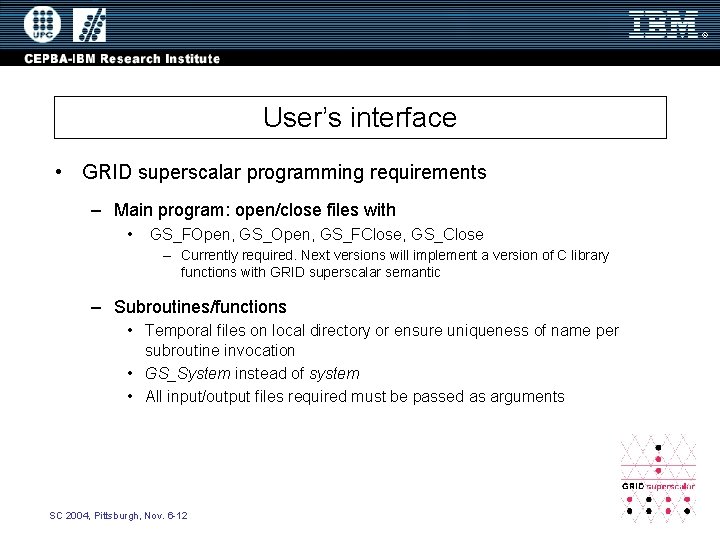

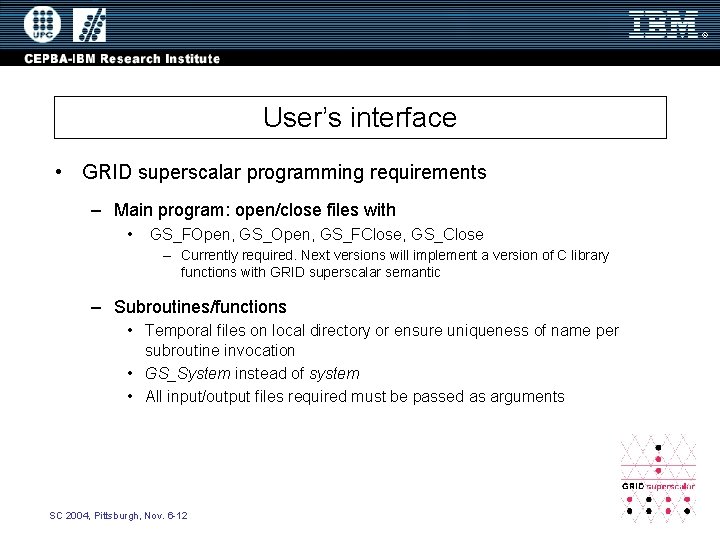

User’s interface
• GRID superscalar programming requirements
  – Main program: open/close files with
    • GS_FOpen, GS_FClose, GS_Open, GS_Close
    • Currently required. Future versions will provide versions of the C library functions with GRID superscalar semantics
  – Subroutines/functions
    • Temporary files must be created in the local directory, or their names must be unique per subroutine invocation
    • GS_System instead of system
    • All required input/output files must be passed as arguments

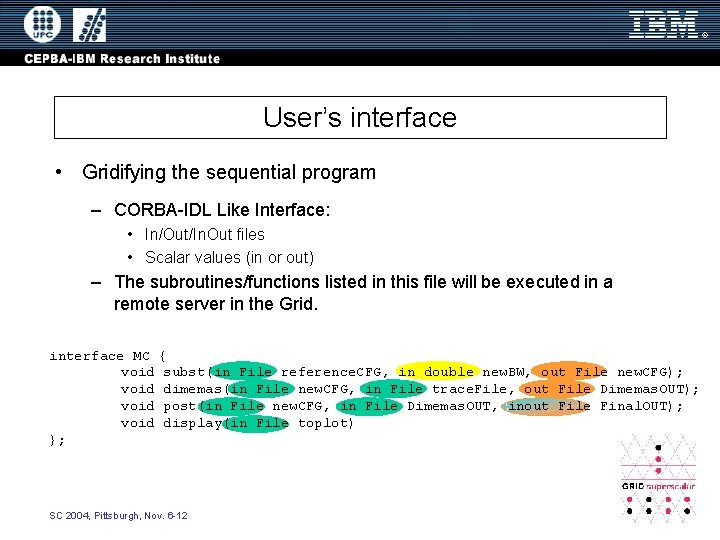

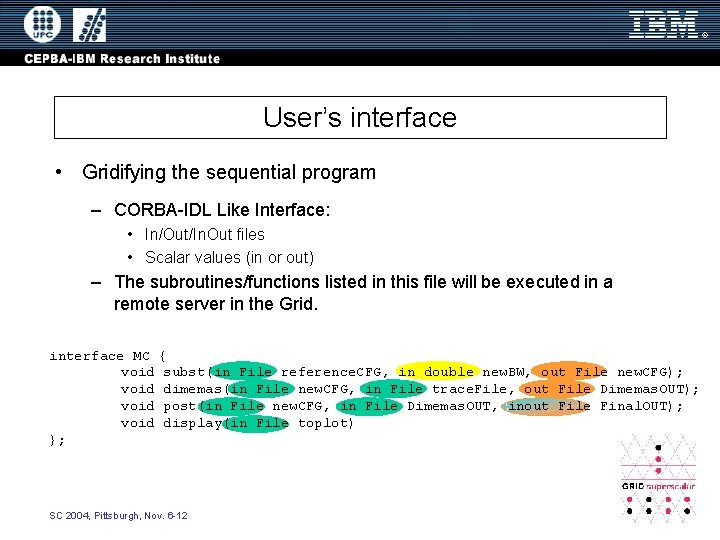

User’s interface
• Gridifying the sequential program
  – CORBA-IDL-like interface:
    • In/Out/InOut files
    • Scalar values (in or out)
  – The subroutines/functions listed in this file will be executed on a remote server in the Grid.

    interface MC {
        void subst(in File referenceCFG, in double newBW, out File newCFG);
        void dimemas(in File newCFG, in File traceFile, out File DimemasOUT);
        void post(in File newCFG, in File DimemasOUT, inout File FinalOUT);
        void display(in File toplot);
    };


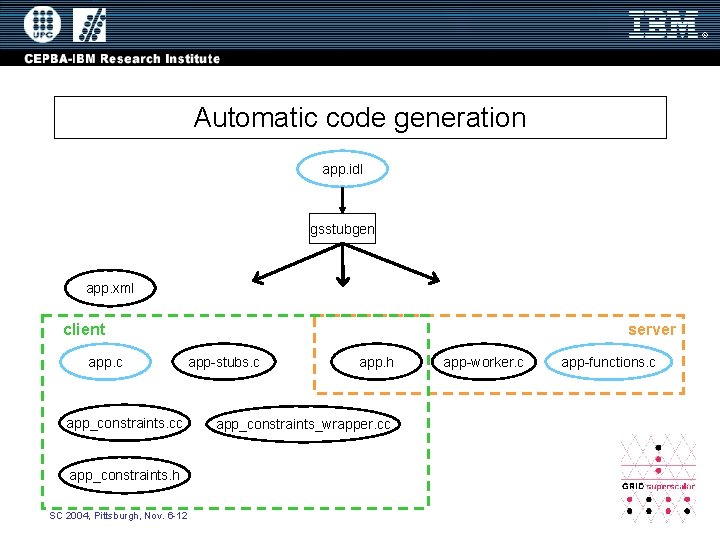

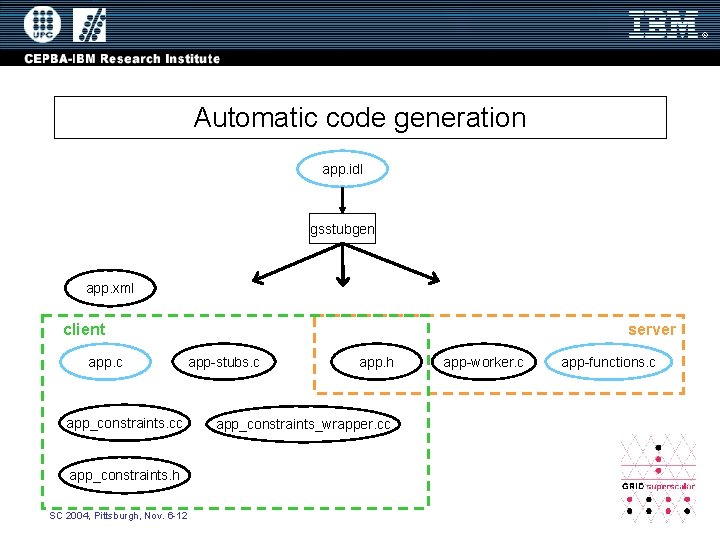

Automatic code generation
[Figure: gsstubgen processes app.idl. Labels shown: app.xml; client; server; app.c, app-stubs.c, app.h, app_constraints.cc, app_constraints.h, app_constraints_wrapper.cc, app-worker.c, app-functions.c]

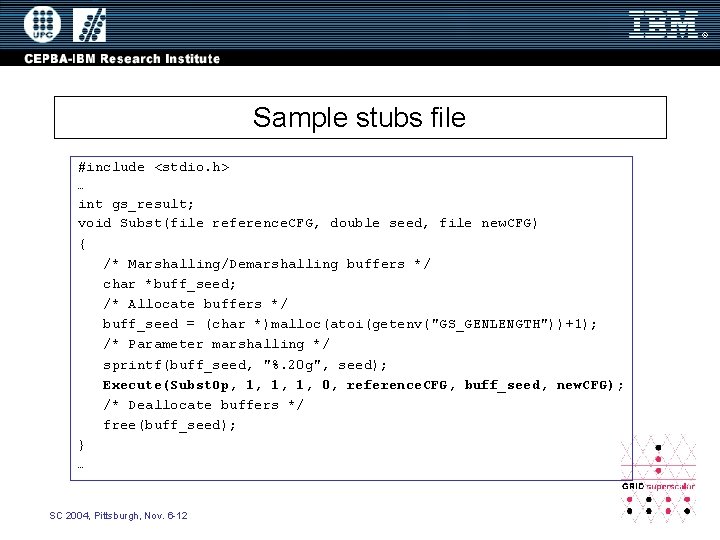

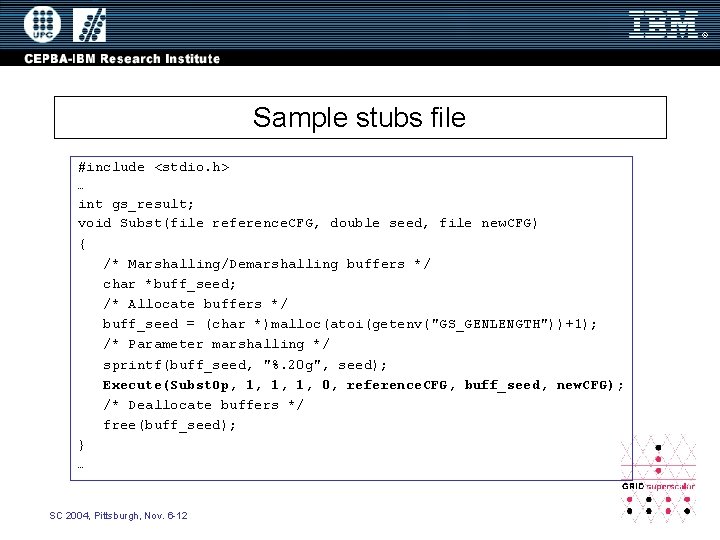

Sample stubs file

    #include <stdio.h>
    …
    int gs_result;

    void Subst(file referenceCFG, double seed, file newCFG)
    {
        /* Marshalling/Demarshalling buffers */
        char *buff_seed;

        /* Allocate buffers */
        buff_seed = (char *)malloc(atoi(getenv("GS_GENLENGTH")) + 1);

        /* Parameter marshalling */
        sprintf(buff_seed, "%.20g", seed);

        Execute(SubstOp, 1, 1, 1, 0, referenceCFG, buff_seed, newCFG);

        /* Deallocate buffers */
        free(buff_seed);
    }
    …

Sample worker main file

    #include <stdio.h>
    …
    int main(int argc, char **argv)
    {
        enum operationCode opCod = (enum operationCode)atoi(argv[2]);

        IniWorker(argc, argv);
        switch (opCod) {
        case SubstOp:
            {
                double seed;
                …
                seed = strtod(argv[4], NULL);
                Subst(argv[3], seed, argv[5]);
            }
            break;
        }
        EndWorker(gs_result, argc, argv);
        return 0;
    }

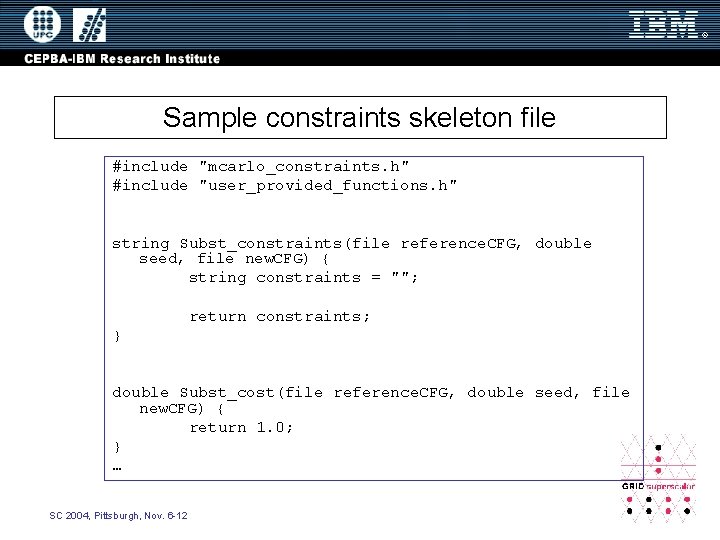

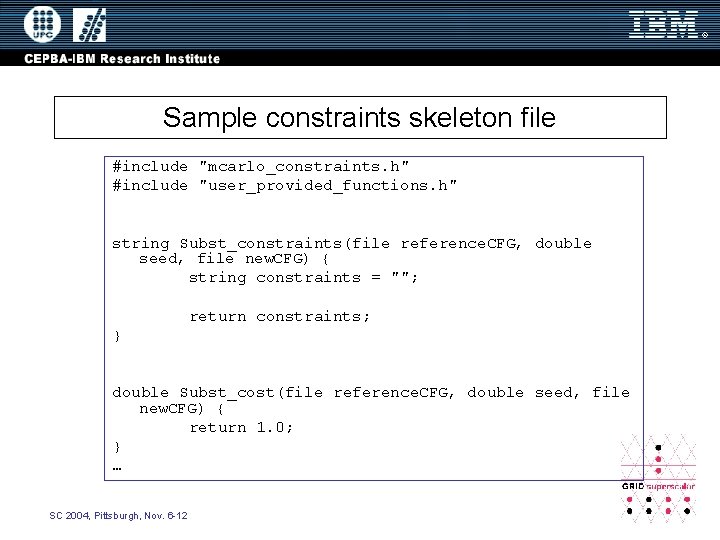

Sample constraints skeleton file

    #include "mcarlo_constraints.h"
    #include "user_provided_functions.h"

    string Subst_constraints(file referenceCFG, double seed, file newCFG)
    {
        string constraints = "";
        return constraints;
    }

    double Subst_cost(file referenceCFG, double seed, file newCFG)
    {
        return 1.0;
    }
    …

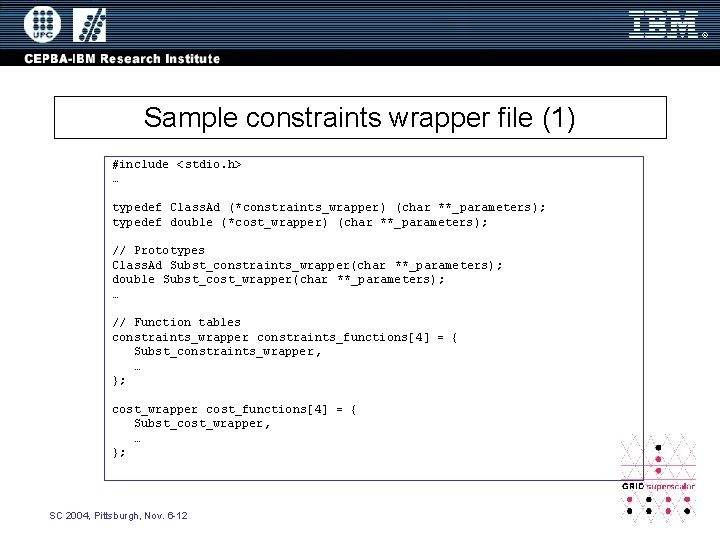

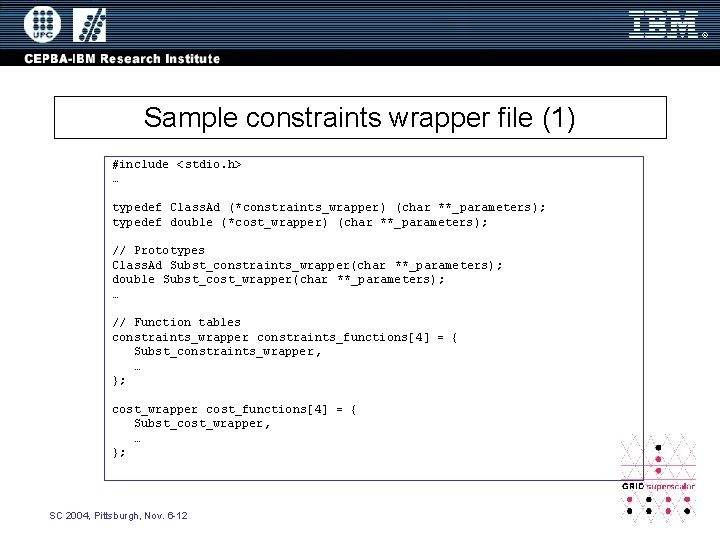

Sample constraints wrapper file (1)

    #include <stdio.h>
    …
    typedef ClassAd (*constraints_wrapper)(char **_parameters);
    typedef double (*cost_wrapper)(char **_parameters);

    // Prototypes
    ClassAd Subst_constraints_wrapper(char **_parameters);
    double Subst_cost_wrapper(char **_parameters);
    …
    // Function tables
    constraints_wrapper constraints_functions[4] = { Subst_constraints_wrapper, … };
    cost_wrapper cost_functions[4] = { Subst_cost_wrapper, … };

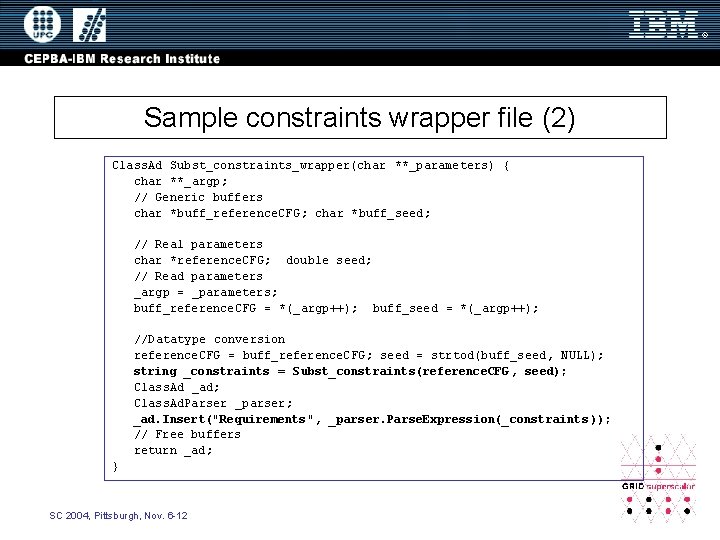

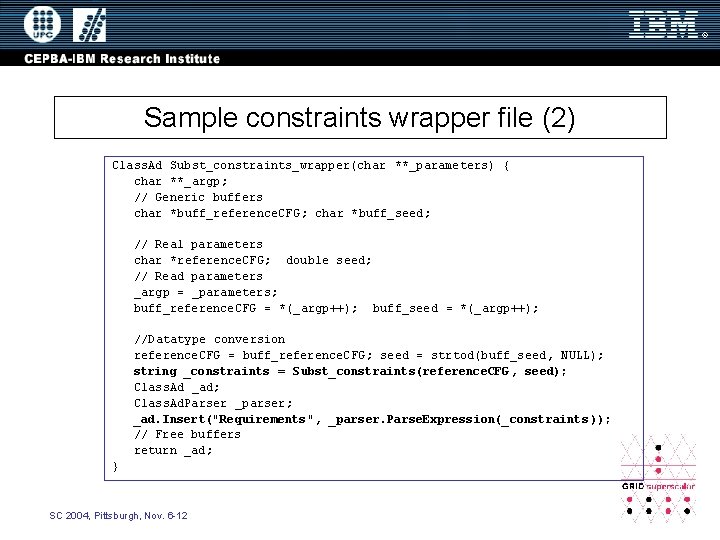

Sample constraints wrapper file (2)

    ClassAd Subst_constraints_wrapper(char **_parameters)
    {
        char **_argp;

        // Generic buffers
        char *buff_referenceCFG;
        char *buff_seed;

        // Real parameters
        char *referenceCFG;
        double seed;

        // Read parameters
        _argp = _parameters;
        buff_referenceCFG = *(_argp++);
        buff_seed = *(_argp++);

        // Datatype conversion
        referenceCFG = buff_referenceCFG;
        seed = strtod(buff_seed, NULL);

        string _constraints = Subst_constraints(referenceCFG, seed);
        ClassAd _ad;
        ClassAdParser _parser;
        _ad.Insert("Requirements", _parser.ParseExpression(_constraints));

        // Free buffers
        return _ad;
    }

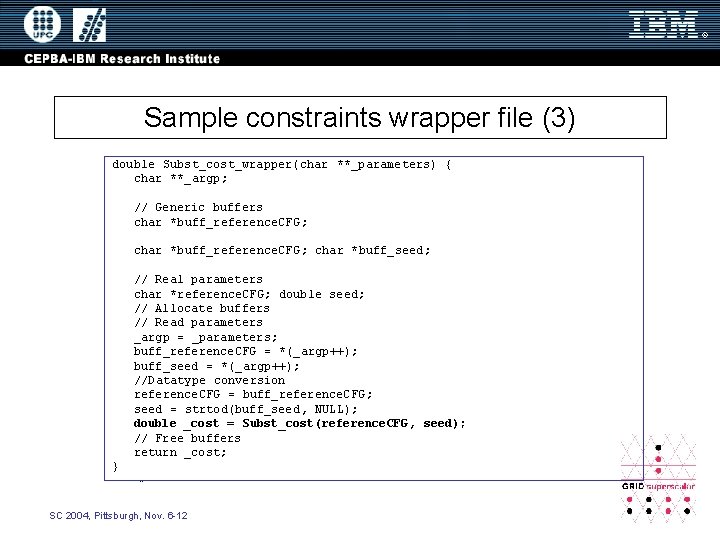

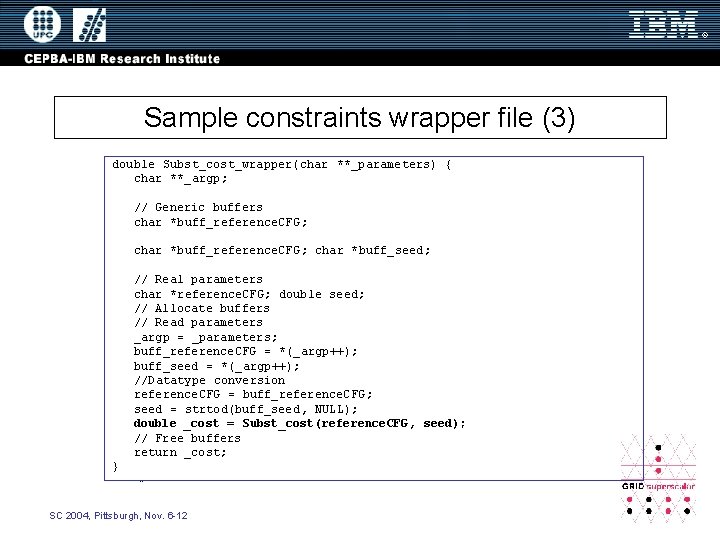

Sample constraints wrapper file (3)

    double Subst_cost_wrapper(char **_parameters)
    {
        char **_argp;

        // Generic buffers
        char *buff_referenceCFG;
        char *buff_seed;

        // Real parameters
        char *referenceCFG;
        double seed;

        // Allocate buffers
        // Read parameters
        _argp = _parameters;
        buff_referenceCFG = *(_argp++);
        buff_seed = *(_argp++);

        // Datatype conversion
        referenceCFG = buff_referenceCFG;
        seed = strtod(buff_seed, NULL);

        double _cost = Subst_cost(referenceCFG, seed);

        // Free buffers
        return _cost;
    }
    …

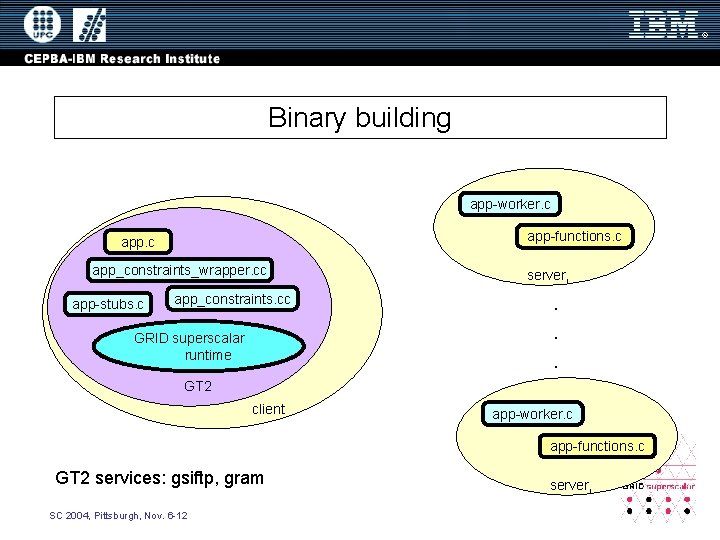

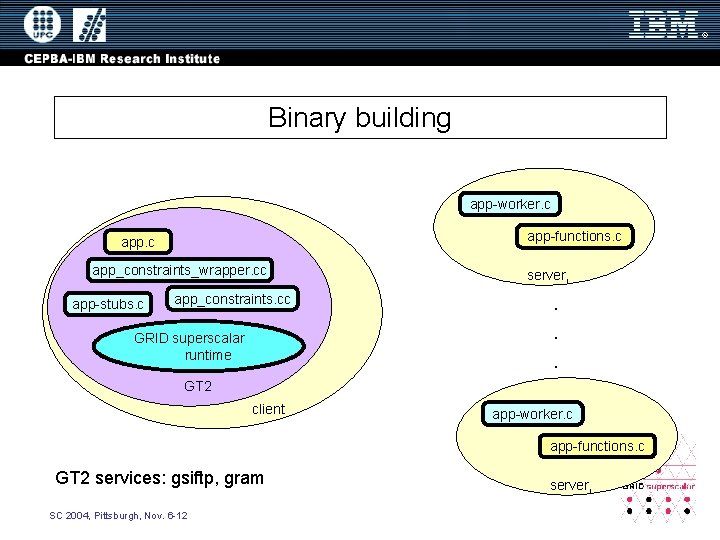

Binary building
[Figure labels: client: app-stubs.c, app_constraints.cc, app_constraints_wrapper.cc, GRID superscalar runtime, GT2; each server i: app-worker.c, app-functions.c; GT2 services: gsiftp, gram]

Call sequence without GRID superscalar
[Figure labels: LocalHost: app.c, app-functions.c]

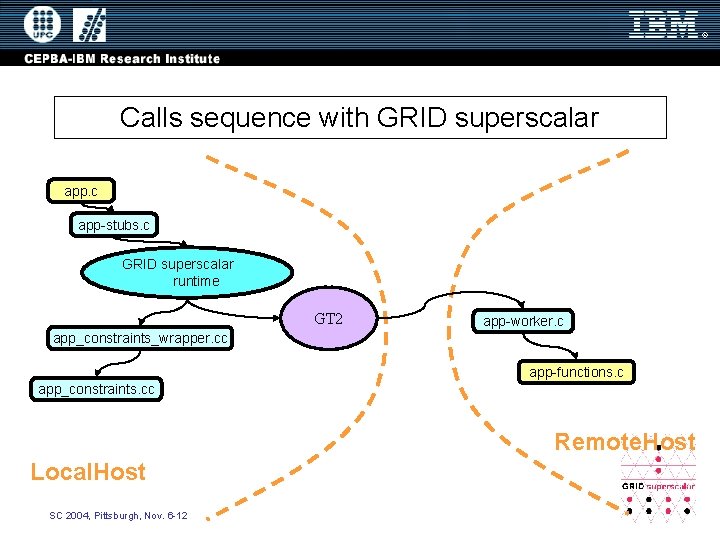

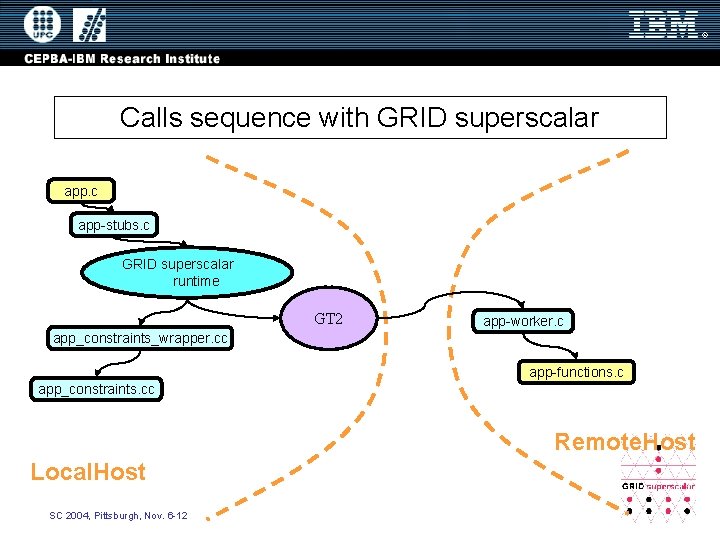

Call sequence with GRID superscalar
[Figure labels: LocalHost and RemoteHost; app.c, app-stubs.c, GRID superscalar runtime, GT2, app_constraints_wrapper.cc, app_constraints.cc, app-worker.c, app-functions.c]


Run-time features
• Previous prototype over Condor and MW
• Current prototype over Globus 2.x, using the API
• File transfer, security, … provided by Globus
• Run-time implemented primitives
  – GS_on, GS_off
  – Execute
  – GS_Open, GS_Close, GS_FOpen
  – GS_Barrier
  – Worker side: GS_System

Run-time features
• Data dependence analysis
• Renaming
• File forwarding
• Shared disks management and file transfer policy
• Resource brokering
• Task scheduling
• Task submission
• End of task notification
• Results collection
• Explicit task synchronization
• File management primitives
• Checkpointing at task level
• Deployer
• Exception handling
• Current prototype over Globus 2.x, using the API
• File transfer, security, … provided by Globus

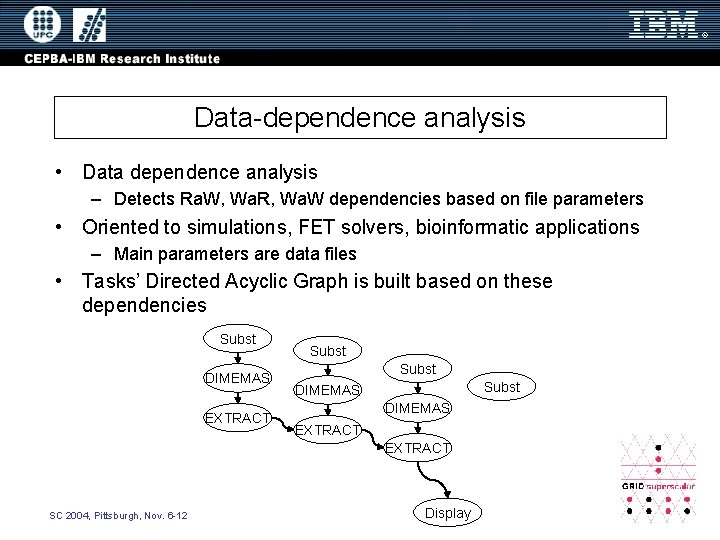

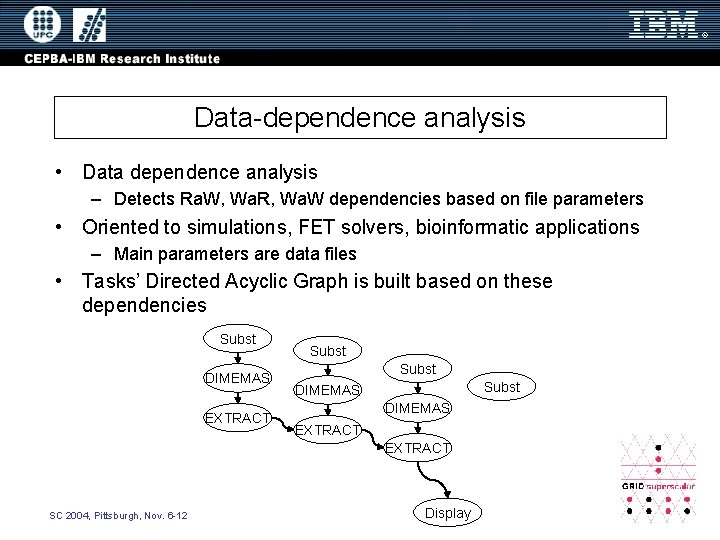

Data-dependence analysis
• Data dependence analysis
  – Detects RaW, WaR, WaW dependencies based on file parameters
• Oriented to simulations, FET solvers, bioinformatics applications
  – Main parameters are data files
• Tasks’ Directed Acyclic Graph is built based on these dependencies
[Figure: DAG of Subst, DIMEMAS and EXTRACT tasks with a Display task]
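The classification of dependencies from file parameters can be sketched in C as follows. This is only an illustration: the `Task` struct, the `DEP_*` flags and `classify_dependence` are hypothetical names, not the GRID superscalar run-time's own data structures, and files are compared by name only.

```c
#include <assert.h>
#include <string.h>

#define MAX_FILES 4

/* Hypothetical task descriptor: names of the files a task reads and writes. */
typedef struct {
    const char *inputs[MAX_FILES];
    int n_inputs;
    const char *outputs[MAX_FILES];
    int n_outputs;
} Task;

/* Bit flags, so that several dependence kinds can be reported at once. */
enum { DEP_NONE = 0, DEP_RAW = 1, DEP_WAR = 2, DEP_WAW = 4 };

/* Returns 1 if `name` appears among the first `n` entries of `files`. */
static int contains(const char *const *files, int n, const char *name)
{
    for (int i = 0; i < n; i++)
        if (strcmp(files[i], name) == 0)
            return 1;
    return 0;
}

/* Classifies how `later` depends on `earlier` (both in program order). */
int classify_dependence(const Task *earlier, const Task *later)
{
    assert(earlier != NULL && later != NULL);
    int deps = DEP_NONE;

    /* RaW: the later task reads a file the earlier task writes. */
    for (int i = 0; i < later->n_inputs; i++)
        if (contains(earlier->outputs, earlier->n_outputs, later->inputs[i]))
            deps |= DEP_RAW;

    for (int i = 0; i < later->n_outputs; i++) {
        /* WaR: the later task overwrites a file the earlier task reads. */
        if (contains(earlier->inputs, earlier->n_inputs, later->outputs[i]))
            deps |= DEP_WAR;
        /* WaW: both tasks write the same file. */
        if (contains(earlier->outputs, earlier->n_outputs, later->outputs[i]))
            deps |= DEP_WAW;
    }
    return deps;
}
```

An edge would be added to the task graph whenever the returned value is not `DEP_NONE`; only the RaW edges are true data dependencies.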

File-renaming
• WaW and WaR dependencies are avoidable with renaming

    while (!end_condition())
    {
        T1 (…, …, "f1");
        T2 ("f1", …, …);
        T3 (…, …, …);
    }

[Figure: task instances T1_1, T2_1, T3_1, T1_2, T2_2, T3_2, …, T1_N; the WaR and WaW dependencies on “f1” disappear when later instances write “f1_1”, “f1_2”, … instead of “f1”]
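A minimal C sketch of the renaming idea, assuming the naming scheme suggested by the slide (the first writer keeps “f1”, later writers get “f1_1”, “f1_2”, …). `RenameEntry` and the `rename_*` functions are hypothetical names introduced for illustration; the actual run-time bookkeeping is not described in the slides.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define MAX_NAME 64

/* Hypothetical per-file renaming state: logical name plus number of writers seen. */
typedef struct {
    char logical[MAX_NAME];
    int writes;
} RenameEntry;

/* Initialises the entry for one logical file name. */
void rename_init(RenameEntry *e, const char *logical)
{
    assert(e != NULL && logical != NULL && strlen(logical) < MAX_NAME);
    strcpy(e->logical, logical);
    e->writes = 0;
}

/* Builds the physical name of a given version (version 0 or less is the original name). */
static void version_name(const RenameEntry *e, int version, char *out, size_t out_size)
{
    if (version <= 0)
        snprintf(out, out_size, "%s", e->logical);
    else
        snprintf(out, out_size, "%s_%d", e->logical, version);
}

/* Name a writer must use: a fresh version, so pending readers and writers are not disturbed. */
void rename_for_write(RenameEntry *e, char *out, size_t out_size)
{
    version_name(e, e->writes, out, out_size);
    e->writes++;
}

/* Name a reader must use: the version produced by the most recent writer. */
void rename_for_read(const RenameEntry *e, char *out, size_t out_size)
{
    version_name(e, e->writes - 1, out, out_size);
}
```

Because every writer gets its own physical file, only the RaW dependency between a writer and its readers remains.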

File forwarding
• File forwarding reduces the impact of RaW data dependencies
[Figure: T1 produces f1 and T2 consumes it; with forwarding, f1 is passed from T1 to T2 by socket]

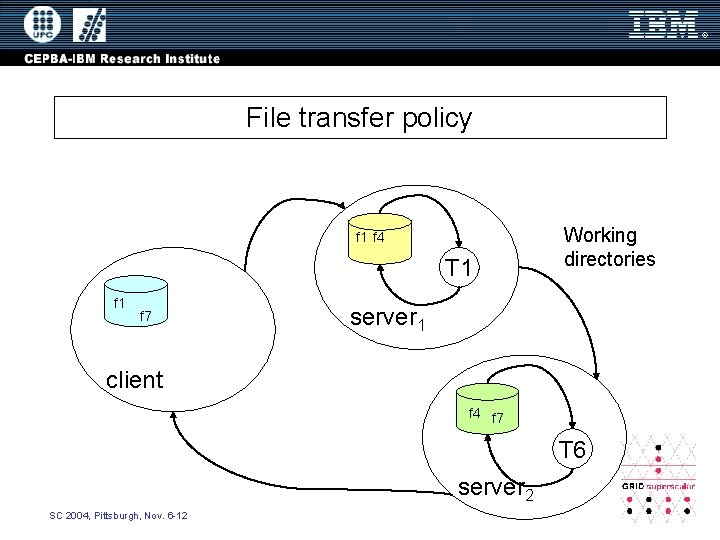

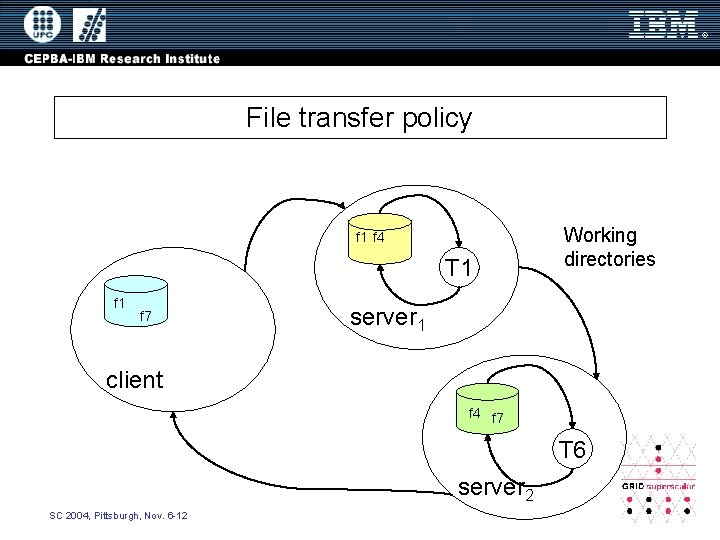

File transfer policy
[Figure: client, server 1 (task T1) and server 2 (task T6), each server with its working directory; files f1, f4 and f7 are transferred between them]

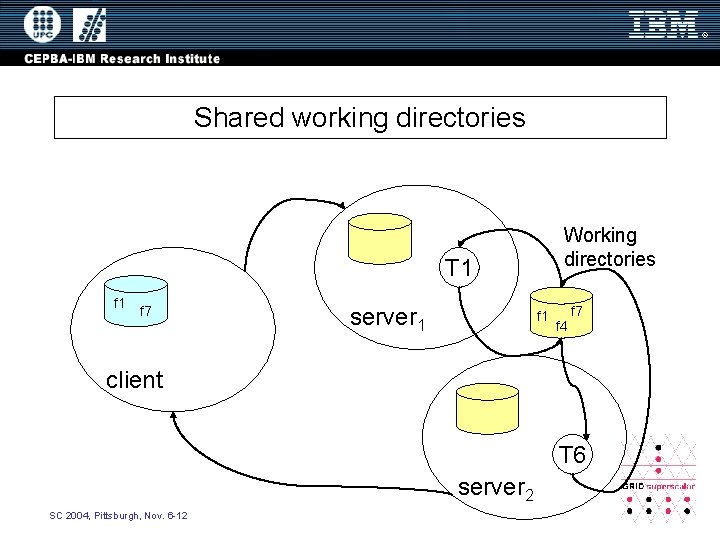

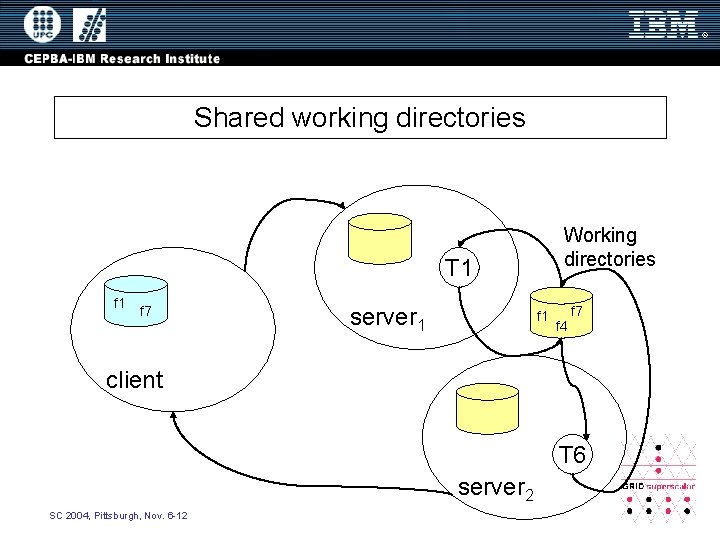

Shared working directories
[Figure: client, server 1 (task T1) and server 2 (task T6) with shared working directories; files f4 and f7]

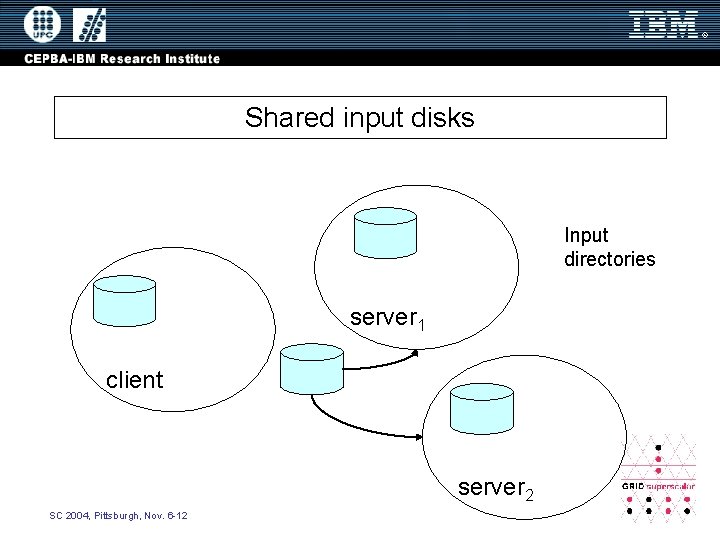

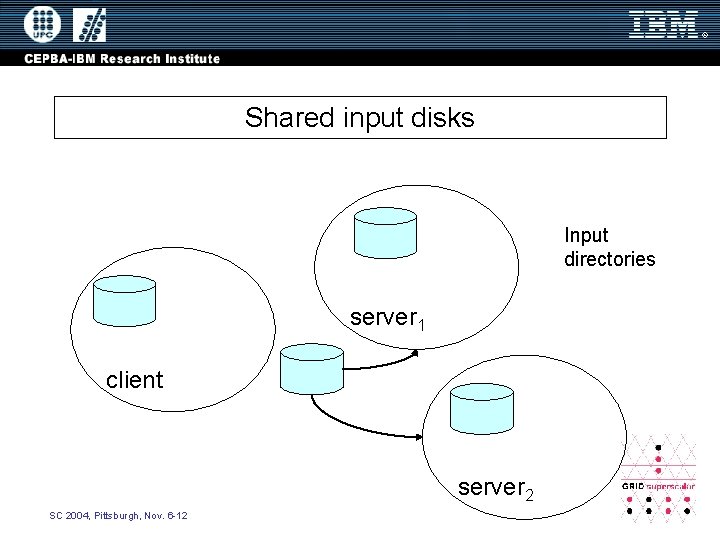

Shared input disks
[Figure: input directories shared among client, server 1 and server 2]

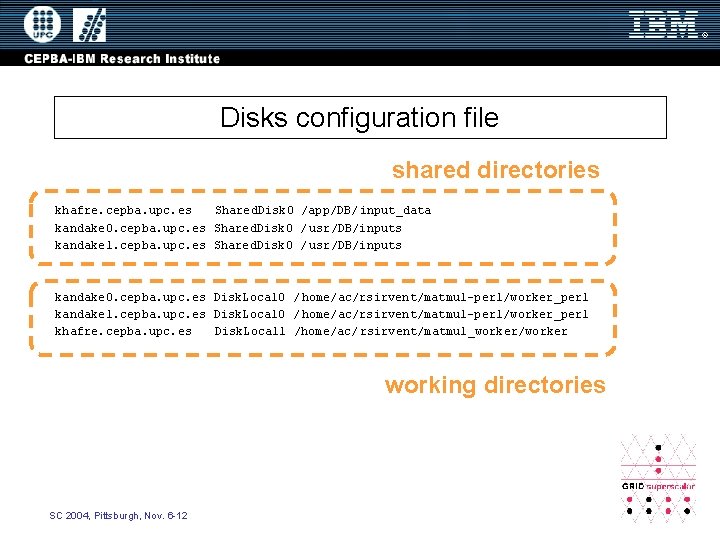

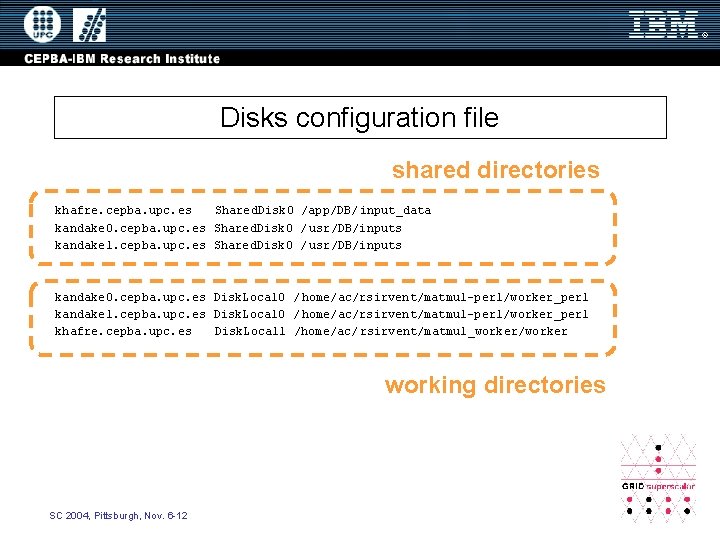

Disks configuration file

Shared directories:

    khafre.cepba.upc.es    SharedDisk0  /app/DB/input_data
    kandake0.cepba.upc.es  SharedDisk0  /usr/DB/inputs
    kandake1.cepba.upc.es  SharedDisk0  /usr/DB/inputs

Working directories:

    kandake0.cepba.upc.es  DiskLocal0   /home/ac/rsirvent/matmul-perl/worker_perl
    kandake1.cepba.upc.es  DiskLocal0   /home/ac/rsirvent/matmul-perl/worker_perl
    khafre.cepba.upc.es    DiskLocal1   /home/ac/rsirvent/matmul_worker/worker

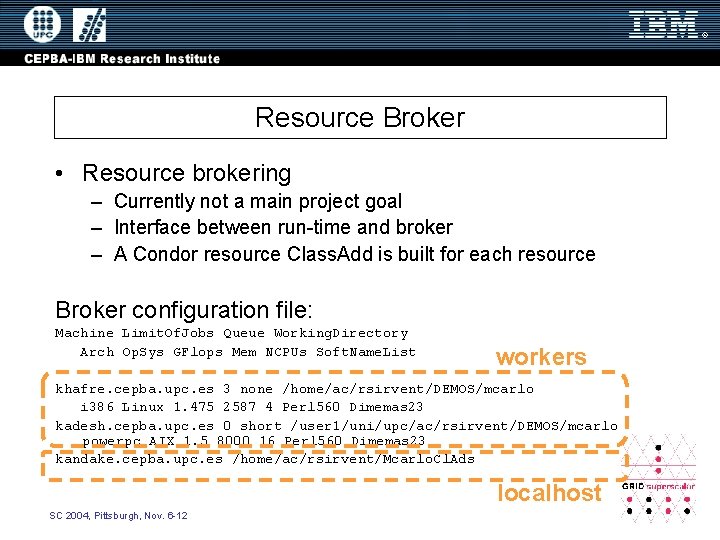

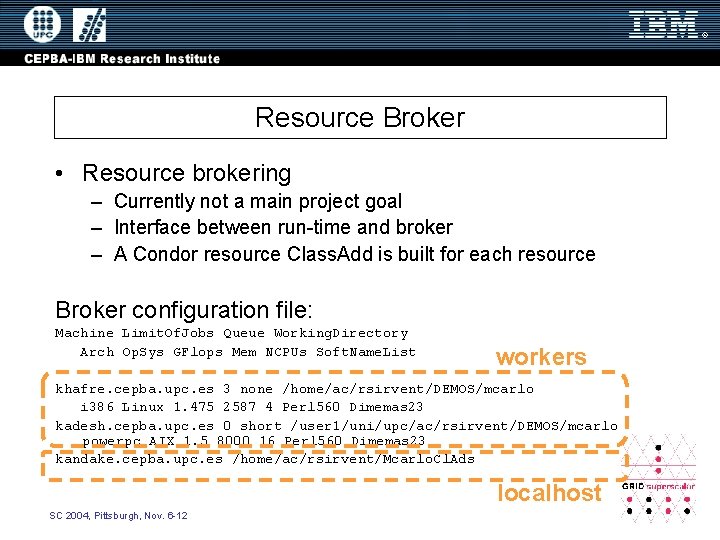

Resource Broker
• Resource brokering
  – Currently not a main project goal
  – Interface between run-time and broker
  – A Condor resource ClassAd is built for each resource

Broker configuration file:

    Machine               LimitOfJobs  Queue  WorkingDirectory                         Arch     OpSys  GFlops  Mem   NCPUs  SoftNameList
    (workers)
    khafre.cepba.upc.es   3            none   /home/ac/rsirvent/DEMOS/mcarlo           i386     Linux  1.475   2587  4      Perl560 Dimemas23
    kadesh.cepba.upc.es   0            short  /user1/uni/upc/ac/rsirvent/DEMOS/mcarlo  powerpc  AIX    1.5     8000  16     Perl560 Dimemas23
    (localhost)
    kandake.cepba.upc.es  /home/ac/rsirvent/McarloClAds

Resource selection (1)
• Cost and constraints specified by user and per IDL task
• Cost (time) of each task instance is estimated

    double Dimem_cost(file cfgFile, file traceFile)
    {
        double time;
        time = (GS_Filesize(traceFile)/1000000) * f(GS_GFlops());
        return(time);
    }

• A task ClassAd is built at run time for each task instance

    string Dimem_constraints(file cfgFile, file traceFile)
    {
        return "(member(\"Dimemas\", other.SoftNameList))";
    }

Resource selection (2)
• Broker receives requests from the run-time
  – ClassAd library used to match resource ClassAds with task ClassAds
  – If more than one resource matches, selects the resource that minimizes an estimate (formula not recovered from the slide) defined in terms of:
    • FT = File transfer time to resource r
    • ET = Execution time of task t on resource r (using the user-provided cost function)
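A minimal C sketch of this selection step. The minimized expression is missing from the slide text, so the sketch assumes it is the plain sum FT + ET; the `Candidate` struct and `select_resource` are hypothetical names, and the `matches` flag stands in for the result of the ClassAd matching.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical per-resource estimates for one task instance. */
typedef struct {
    const char *host;
    int matches;            /* 1 if the resource satisfies the task constraints */
    double transfer_time;   /* FT: file transfer time to this resource */
    double execution_time;  /* ET: estimated execution time on this resource */
} Candidate;

/* Returns the index of the matching candidate with the smallest FT + ET
   (assumed cost), or -1 if no candidate matches. */
int select_resource(const Candidate *c, size_t n)
{
    assert(c != NULL || n == 0);
    int best = -1;
    double best_cost = 0.0;

    for (size_t i = 0; i < n; i++) {
        if (!c[i].matches)
            continue;
        double cost = c[i].transfer_time + c[i].execution_time;
        if (best < 0 || cost < best_cost) {
            best = (int)i;
            best_cost = cost;
        }
    }
    return best;
}
```

With this cost, a slower machine that already holds the input files can win over a faster one that needs a long transfer.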

Task scheduling
• Distributed between the Execute call, the callback function and the GS_Barrier call
• Possibilities
  – The task can be submitted immediately after being created
  – Task waiting for resource
  – Task waiting for data dependency
• GS_Barrier primitive before ending the program that waits for all tasks
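The three possibilities listed above can be read as a decision taken each time a task is created or an event arrives. The C sketch below is one such reading; `Decision`, `schedule_decision` and its two counters are hypothetical and do not come from the run-time's source.

```c
#include <assert.h>

/* Hypothetical outcome of the scheduling check for one task. */
typedef enum {
    TASK_SUBMIT_NOW,        /* can be submitted immediately */
    TASK_WAIT_RESOURCE,     /* dependencies resolved, no free resource */
    TASK_WAIT_DEPENDENCY    /* at least one input file is not produced yet */
} Decision;

/* Decides what to do with a task, given its unresolved data dependencies
   and the number of free job slots among the matching resources. */
Decision schedule_decision(int pending_dependencies, int free_slots)
{
    assert(pending_dependencies >= 0 && free_slots >= 0);
    if (pending_dependencies > 0)
        return TASK_WAIT_DEPENDENCY;
    if (free_slots == 0)
        return TASK_WAIT_RESOURCE;
    return TASK_SUBMIT_NOW;
}
```

In this reading the check would run in the Execute call when a task is created and again in the callback whenever a task finishes, which is consistent with scheduling being distributed across those calls.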

Task submission
• Task submitted for execution as soon as its data dependencies are resolved, if resources are available
• Composed of
  – File transfer
  – Task submission
• All specified in RSL
• Temporary directory created in the server working directory for each task
• Calls to Globus:
  – globus_gram_client_job_request
  – globus_gram_client_callback_allow
  – globus_poll_blocking

End of task notification
• Asynchronous state-change callbacks monitoring system
  – globus_gram_client_callback_allow()
  – callback_function
• Data structures update in Execute function, GRID superscalar primitives and GS_Barrier

Results collection
• Collection of output parameters which are not files
  – Partial barrier synchronization (task generation from main code cannot continue until this scalar result value is available)
• Socket and file mechanisms provided

GS_Barrier
• Explicit task synchronization: GS_Barrier
  – Inserted in the user main program when required
  – Main program execution is blocked
  – globus_poll_blocking() called
  – Once all tasks are finished the program may resume

File management primitives
• GRID superscalar file management API primitives:
  – GS_FOpen
  – GS_FClose
  – GS_Open
  – GS_Close
• Mandatory for file management operations in main program
• Opening a file with write option
  – Data dependence analysis
  – Renaming is applied
• Opening a file with read option
  – Partial barrier until the task that is generating that file as output file finishes
• Internally file management functions are handled as local tasks
  – Task node inserted
  – Data-dependence analysis
  – Function locally executed
• Future work: offer a C library with GS semantics (source code with typical calls could be used)

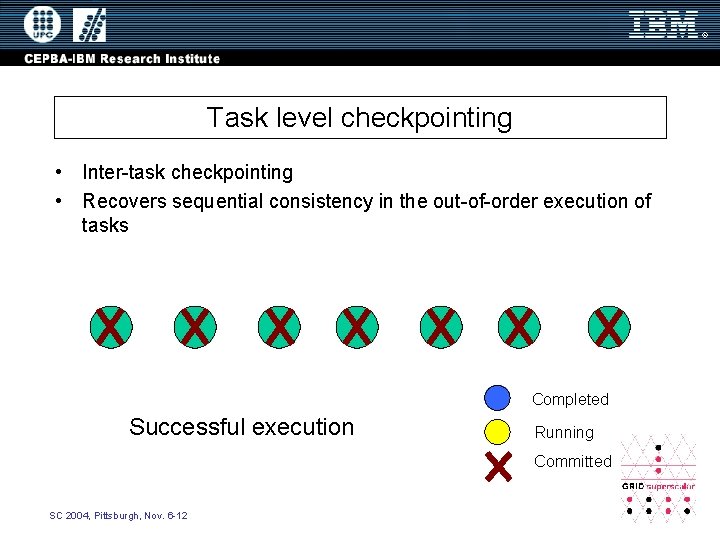

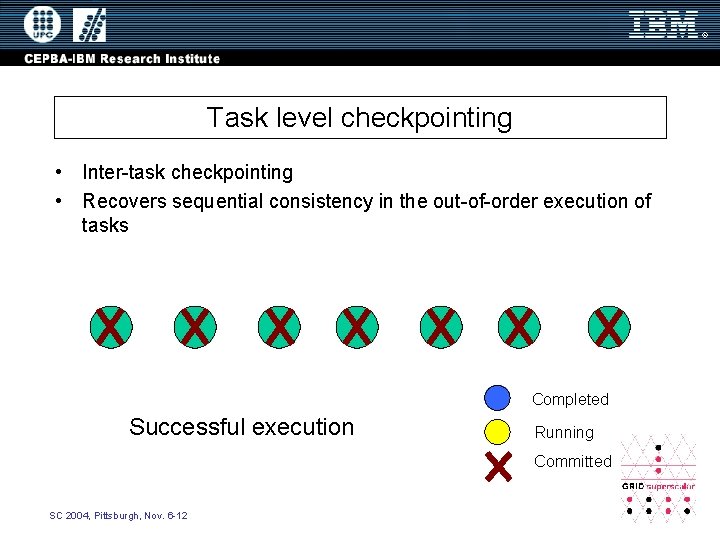

Task level checkpointing
• Inter-task checkpointing
• Recovers sequential consistency in the out-of-order execution of tasks
[Figure: tasks 0 to 6 during a successful execution; legend: Completed, Running, Committed]

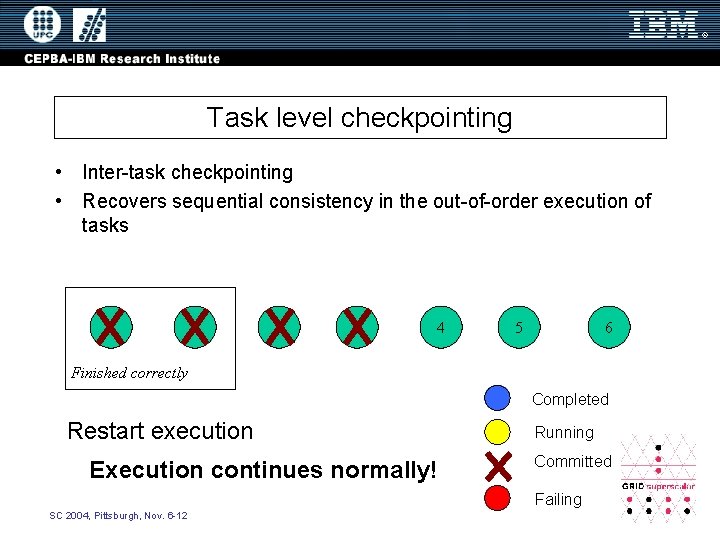

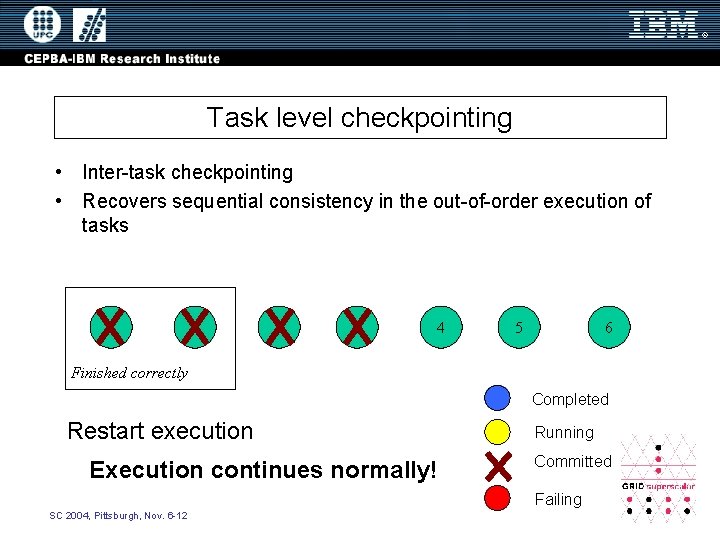

Task level checkpointing
[Figure: tasks 0 to 6 during a failing execution; legend: Finished correctly, Completed, Running, Committed, Failing; Cancel]

Task level checkpointing
[Figure: tasks 0 to 6 after restarting the execution, which then continues normally; legend: Finished correctly, Completed, Running, Committed, Failing]

Checkpointing
• On failure: from N versions of a file to one version (last committed version)
• Transparent to application developer
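The distinction between completed and committed tasks can be sketched in C. The sketch assumes, by analogy with in-order commit in a superscalar processor, that a task is committed once it and every task before it in sequential order have completed; the slides do not state the rule explicitly. `TaskState` and `committed_prefix` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical task states, in the spirit of the figure legends. */
typedef enum { TASK_RUNNING, TASK_COMPLETED, TASK_FAILED } TaskState;

/* Returns how many tasks are committed: the length of the longest prefix,
   in sequential program order, in which every task has completed.
   After a failure, execution would restart from this index (assumption). */
size_t committed_prefix(const TaskState *states, size_t n)
{
    assert(states != NULL || n == 0);
    size_t i = 0;
    while (i < n && states[i] == TASK_COMPLETED)
        i++;
    return i;
}
```

Tasks beyond the returned index that completed out of order would be re-executed on restart, which is why only the last committed version of each file needs to be kept.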

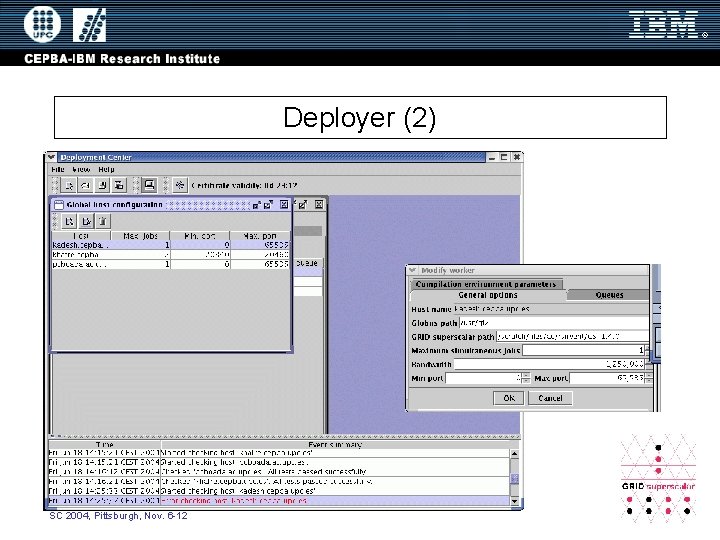

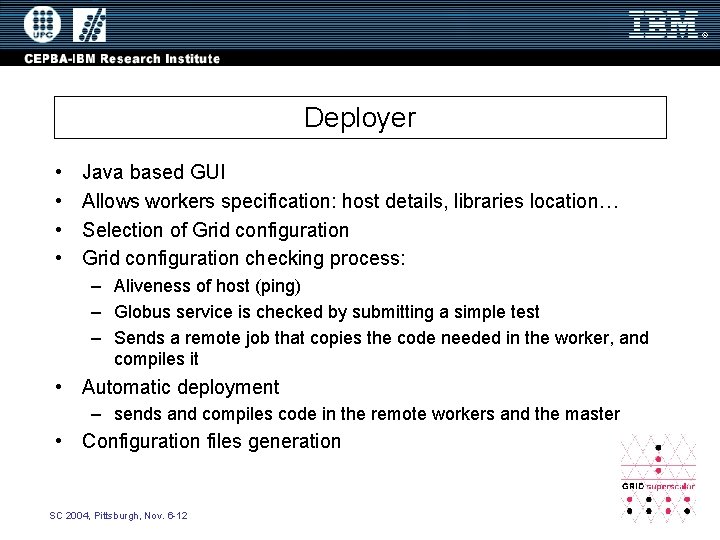

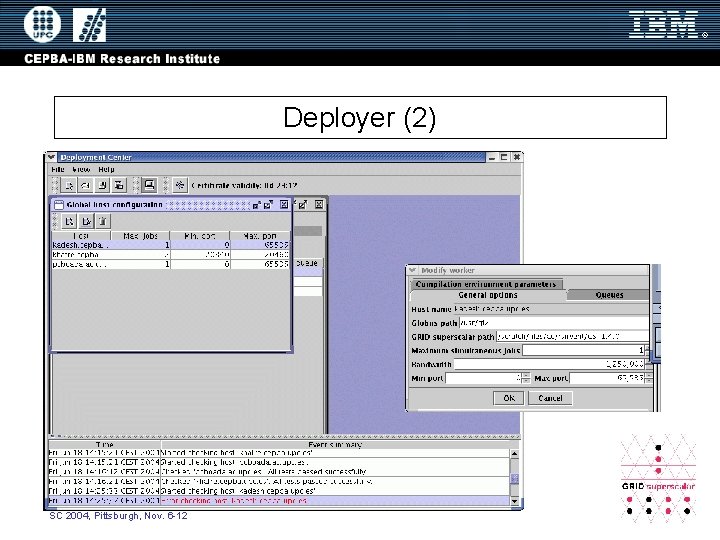

Deployer
• Java based GUI
• Allows workers specification: host details, libraries location…
• Selection of Grid configuration
• Checking process:
  – Aliveness of host (ping)
  – Globus service is checked by submitting a simple test
  – Sends a remote job that copies the code needed in the worker, and compiles it
• Automatic deployment
  – Sends and compiles code in the remote workers and the master
• Configuration files generation

Deployer (2)
• Automatic deployment

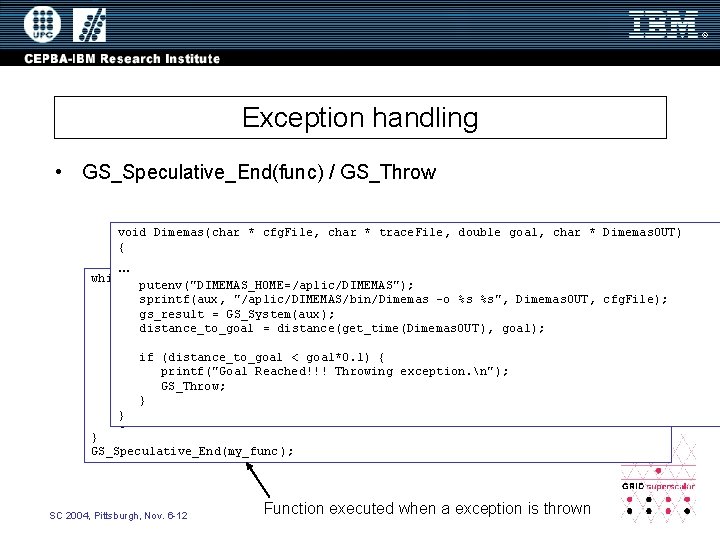

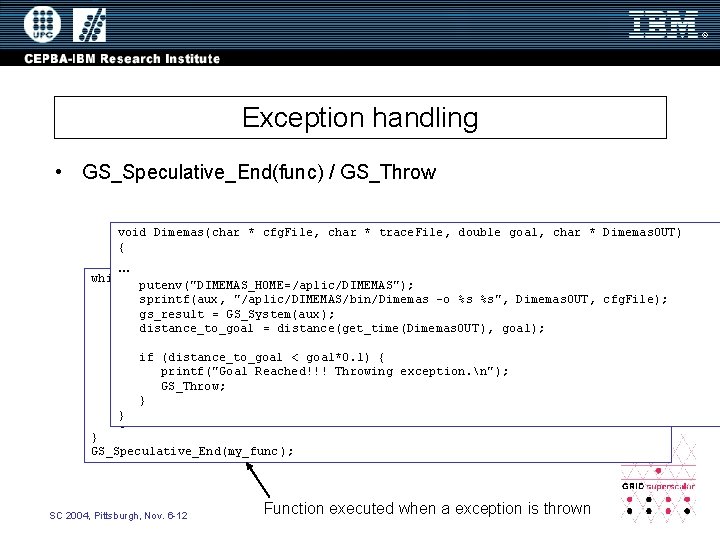

Exception handling
• GS_Speculative_End(func) / GS_Throw

Worker function:

    void Dimemas(char *cfgFile, char *traceFile, double goal, char *DimemasOUT)
    {
        …
        putenv("DIMEMAS_HOME=/aplic/DIMEMAS");
        sprintf(aux, "/aplic/DIMEMAS/bin/Dimemas -o %s %s", DimemasOUT, cfgFile);
        gs_result = GS_System(aux);
        distance_to_goal = distance(get_time(DimemasOUT), goal);
        if (distance_to_goal < goal*0.1) {
            printf("Goal Reached!!! Throwing exception.\n");
            GS_Throw;
        }
    }

Main program:

    while (j < MAX_ITERS) {
        getRanges(Lini, BWini, &Lmin, &Lmax, &BWmin, &BWmax);
        for (i = 0; i < ITERS; i++) {
            L[i] = gen_rand(Lmin, Lmax);
            BW[i] = gen_rand(BWmin, BWmax);
            Filter("nsend.cfg", L[i], BW[i], "tmp.cfg");
            Dimemas("tmp.cfg", "nsend_rec_nosm.trf", Elapsed_goal, "dim_out.txt");
            Extract("tmp.cfg", "dim_out.txt", "final_result.txt");
        }
        getNewIniRange("final_result.txt", &Lini, &BWini);
        j++;
    }
    GS_Speculative_End(my_func);

[Callout on my_func: function executed when an exception is thrown]

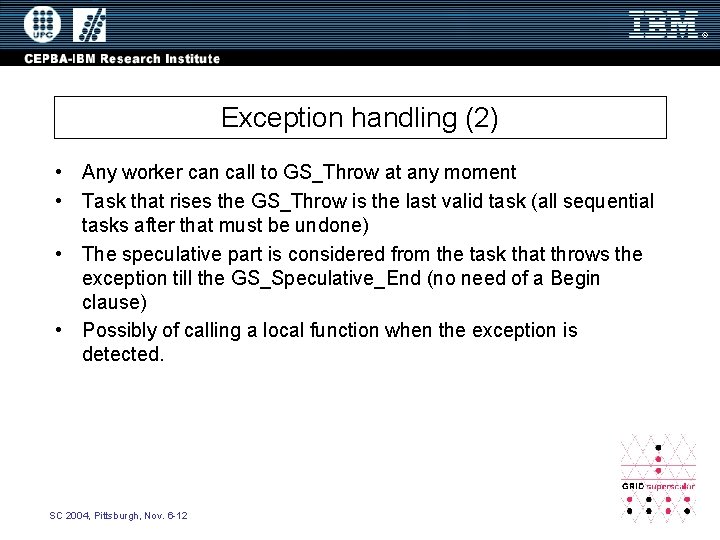

Exception handling (2)
• Any worker can call GS_Throw at any moment
• The task that raises the GS_Throw is the last valid task (all sequential tasks after it must be undone)
• The speculative part extends from the task that throws the exception to the GS_Speculative_End (no need for a Begin clause)
• Possibility of calling a local function when the exception is detected

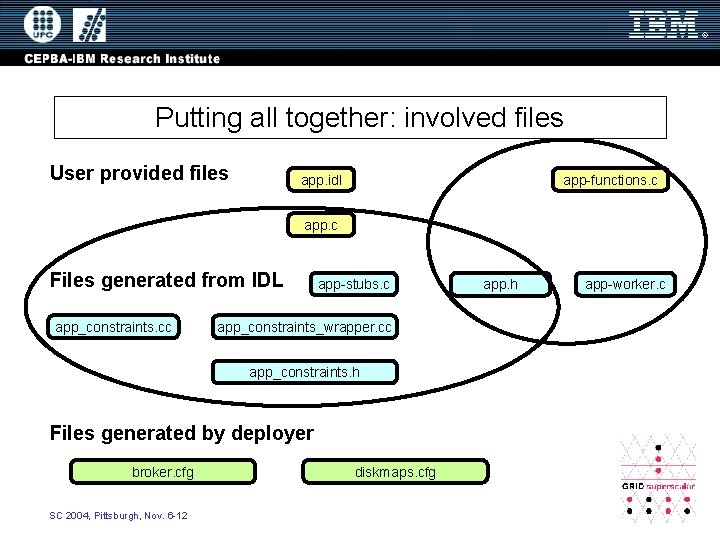

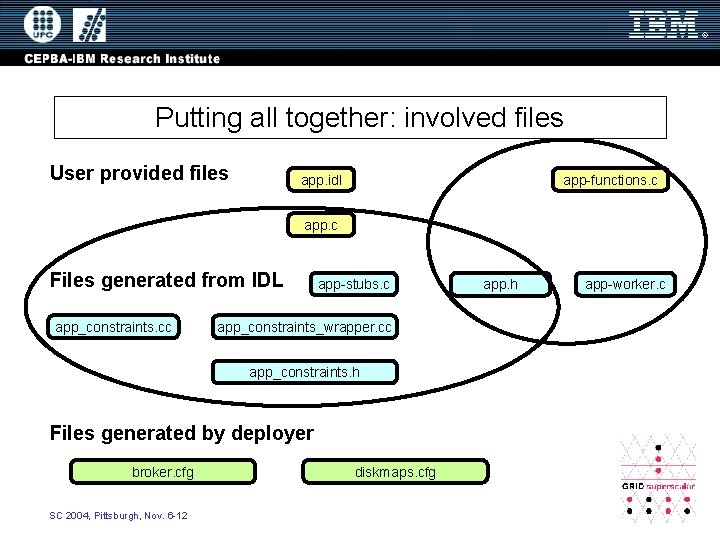

Putting all together: involved files
• User provided files: app.idl, app-functions.c, app.c
• Files generated from IDL: app-stubs.c, app.h, app-worker.c, app_constraints.cc, app_constraints.h, app_constraints_wrapper.cc
• Files generated by deployer: broker.cfg, diskmaps.cfg


Programming experiences
• Performance modelling (Dimemas, Paramedir)
  – Algorithm flexibility
• NAS Grid Benchmarks
  – Improved component programs flexibility
  – Reduced Grid level source code lines
• Bioinformatics application (production)
  – Improved portability (Globus vs just LoadLeveler)
  – Reduced Grid level source code lines
• Pblade solution for bioinformatics

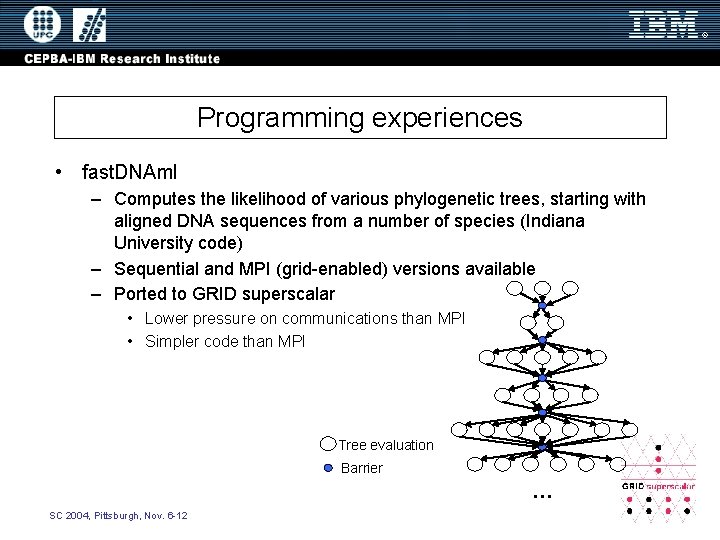

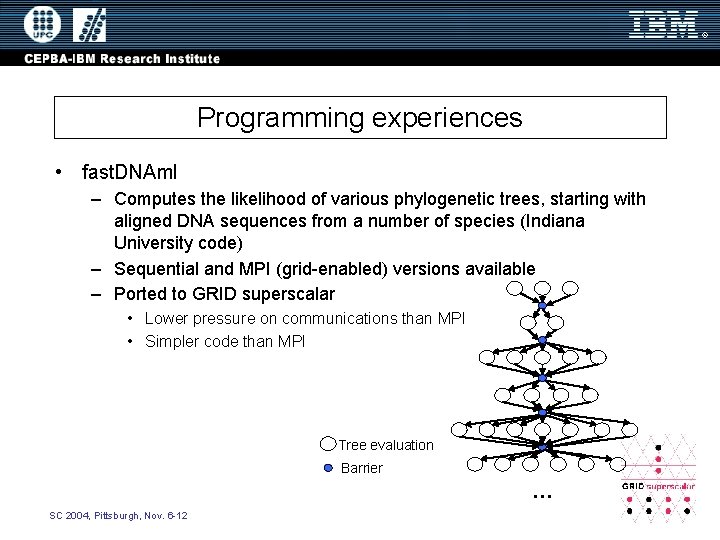

Programming experiences
• fastDNAml
  – Computes the likelihood of various phylogenetic trees, starting with aligned DNA sequences from a number of species (Indiana University code)
  – Sequential and MPI (grid-enabled) versions available
  – Ported to GRID superscalar
    • Lower pressure on communications than MPI
    • Simpler code than MPI
[Figure: tree evaluation stages separated by barriers]

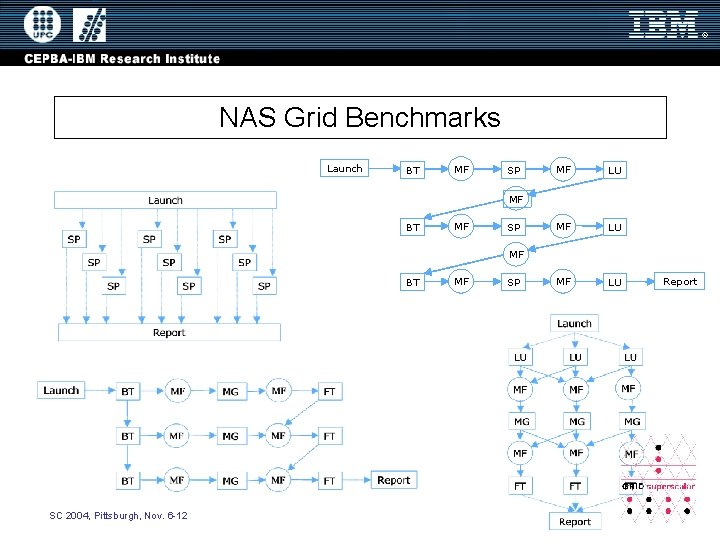

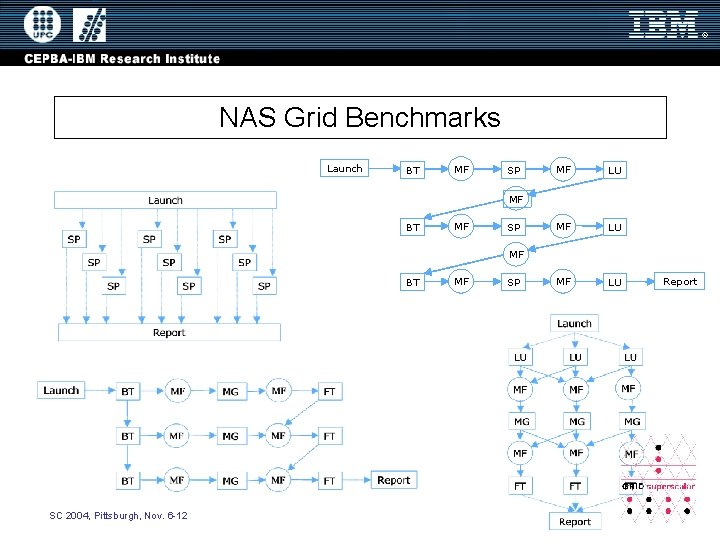

NAS Grid Benchmarks
[Figure: benchmark task graph from Launch to Report, with BT, SP and LU tasks connected through MF nodes]

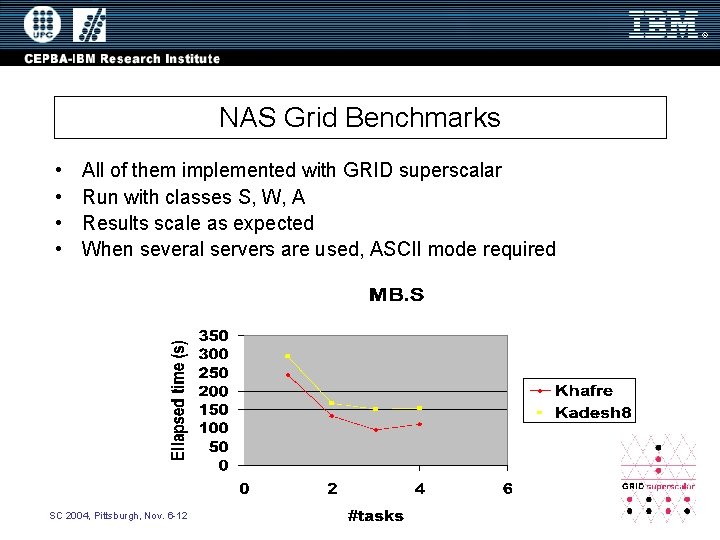

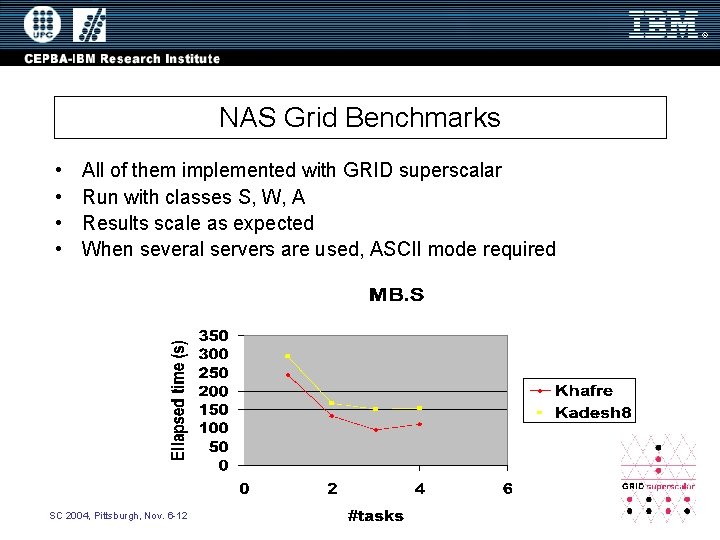

NAS Grid Benchmarks
• All of them implemented with GRID superscalar
• Run with classes S, W, A
• Results scale as expected
• When several servers are used, ASCII mode required

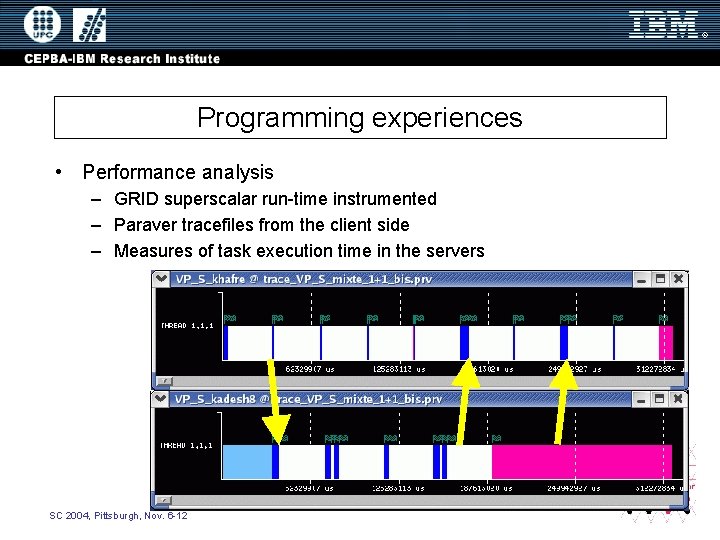

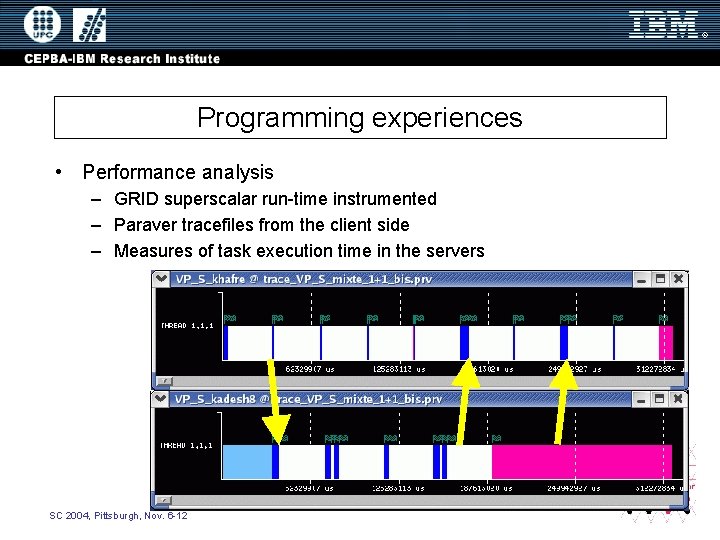

Programming experiences
• Performance analysis
  – GRID superscalar run-time instrumented
  – Paraver tracefiles from the client side
  – Measures of task execution time in the servers

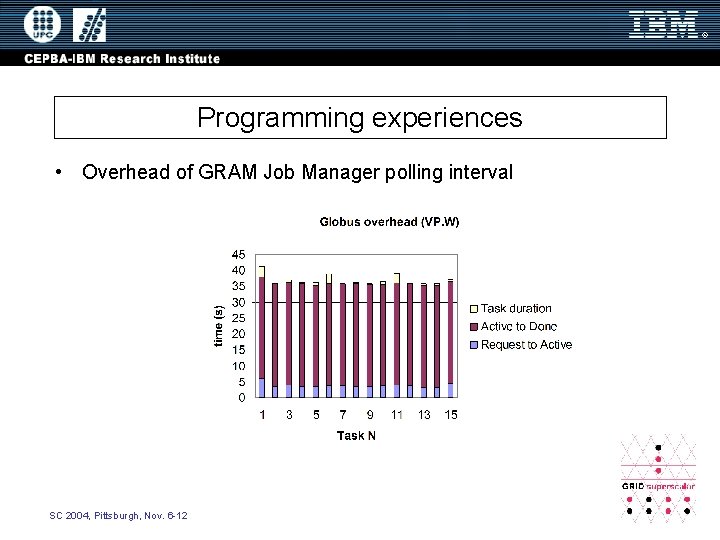

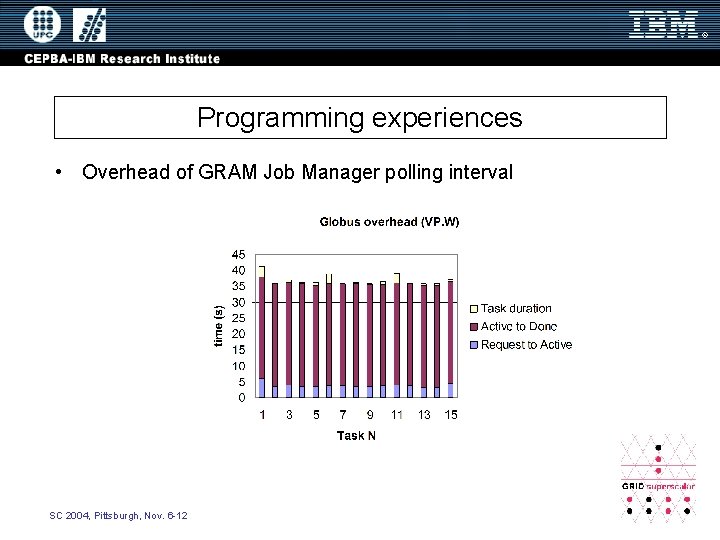

Programming experiences
• Overhead of GRAM Job Manager polling interval

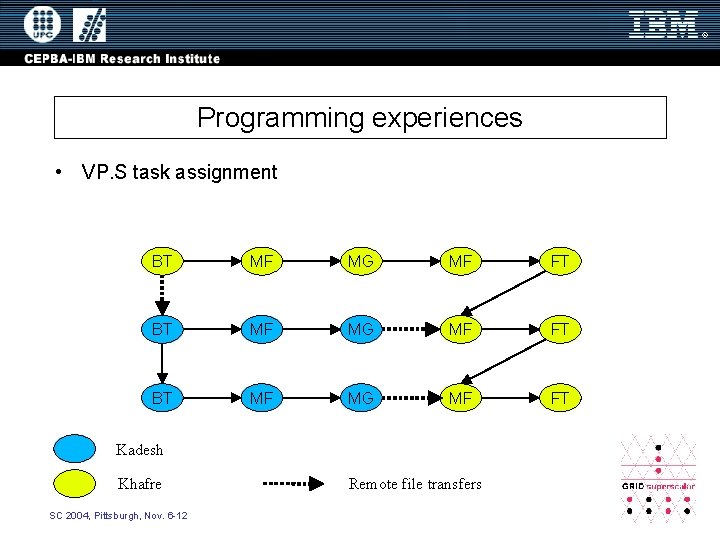

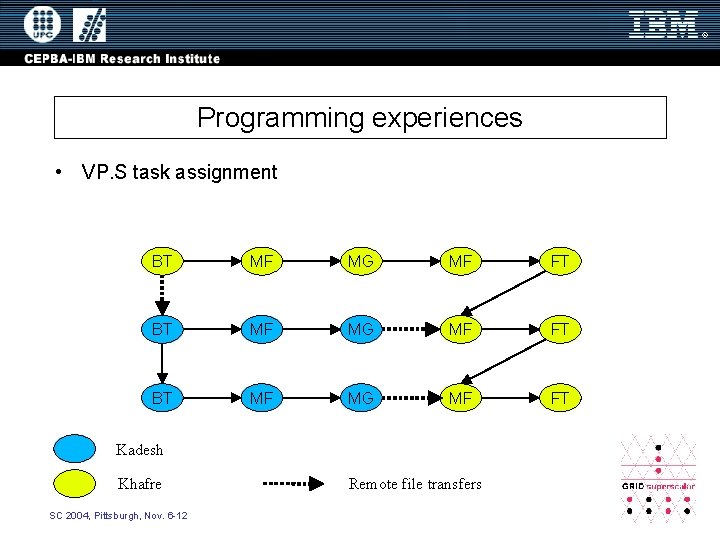

Programming experiences
• VP.S task assignment
[Figure: BT, MF, MG, MF and FT tasks assigned to Kadesh and Khafre, with remote file transfers marked]


Ongoing work
• OGSA oriented resource broker, based on Globus Toolkit 3.x
• Bindings to Ninf-G2
• Binding to ssh/rsh/scp
• New language bindings (shell script)
• And more future work:
  – Bindings to other basic middlewares
    • GAT, …
  – Enhancements in the run-time performance guided by the performance analysis

Conclusions
• Presentation of the ideas of GRID superscalar
• There is a viable way to ease the programming of Grid applications
• GRID superscalar run-time enables
  – Use of the resources in the Grid
  – Exploiting the existing parallelism

More information
• GRID superscalar home page: http://people.ac.upc.es/rosab/index_gs.htm
• Rosa M. Badia, Jesús Labarta, Raül Sirvent, Josep M. Pérez, José M. Cela, Rogeli Grima, “Programming Grid Applications with GRID Superscalar”, Journal of Grid Computing, Volume 1 (Number 2): 151-170 (2003).