GRID superscalar: a programming model for the Grid

- Slides: 67

GRID superscalar: a programming model for the Grid
Doctoral Thesis
Computer Architecture Department, Technical University of Catalonia
Raül Sirvent Pardell
Advisor: Rosa M. Badia Sala

Outline
1. Introduction
2. Programming interface
3. Runtime
4. Fault tolerance at the programming model level
5. Conclusions and future work

Outline
1. Introduction
   1.1 Motivation
   1.2 Related work
   1.3 Thesis objectives and contributions
2. Programming interface
3. Runtime
4. Fault tolerance at the programming model level
5. Conclusions and future work

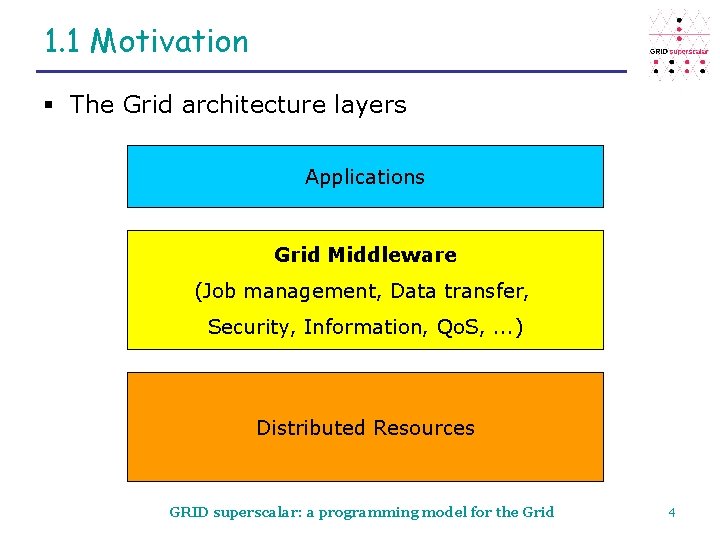

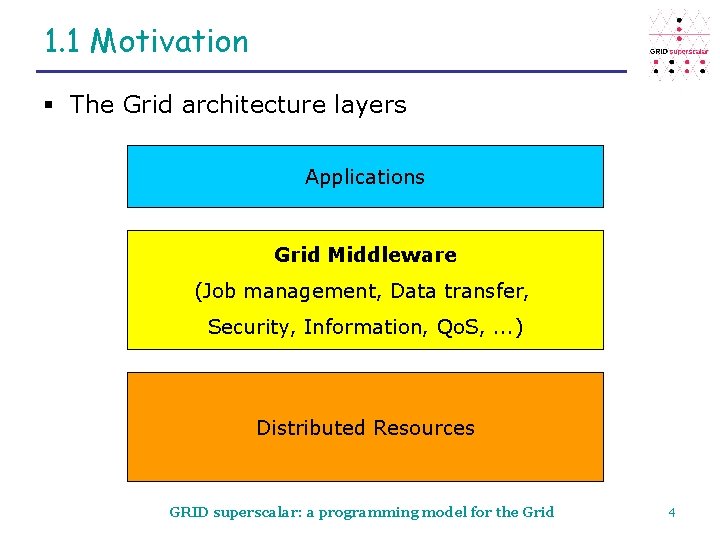

1.1 Motivation
§ The Grid architecture layers
– Applications
– Grid Middleware (Job management, Data transfer, Security, Information, QoS, ...)
– Distributed Resources

1.1 Motivation
§ What middleware should I use?

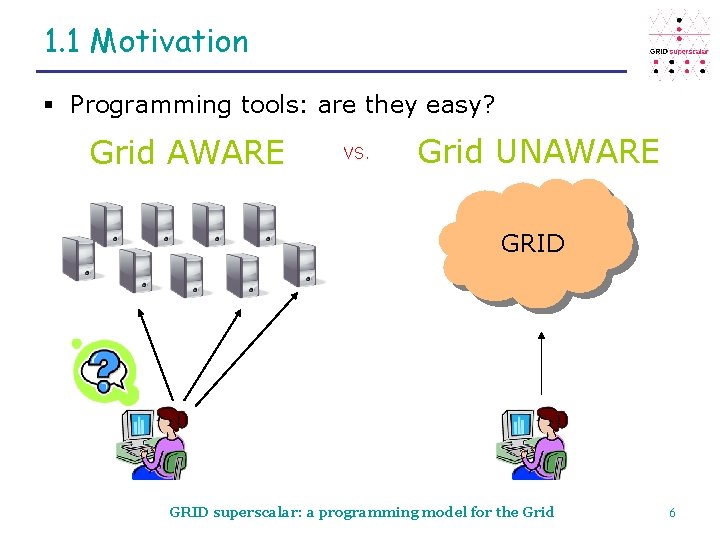

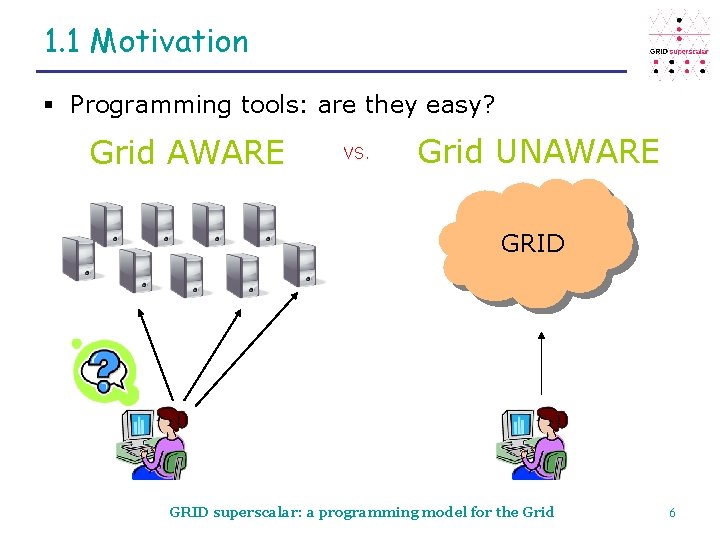

1.1 Motivation
§ Programming tools: are they easy?
– Grid AWARE vs. Grid UNAWARE

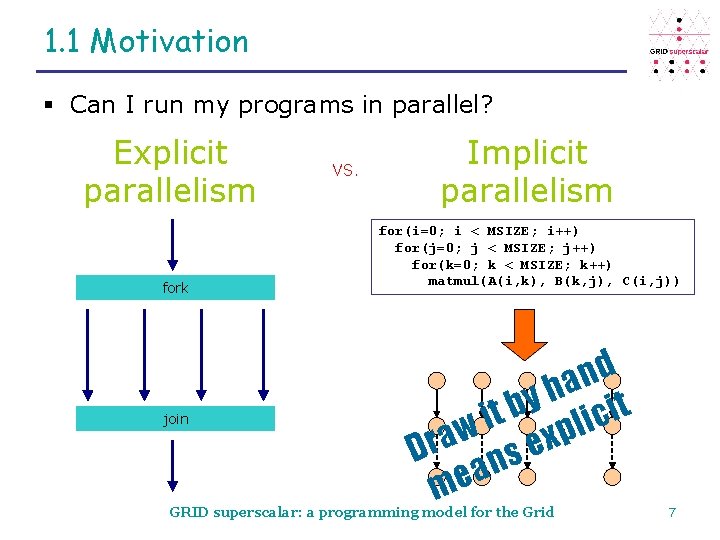

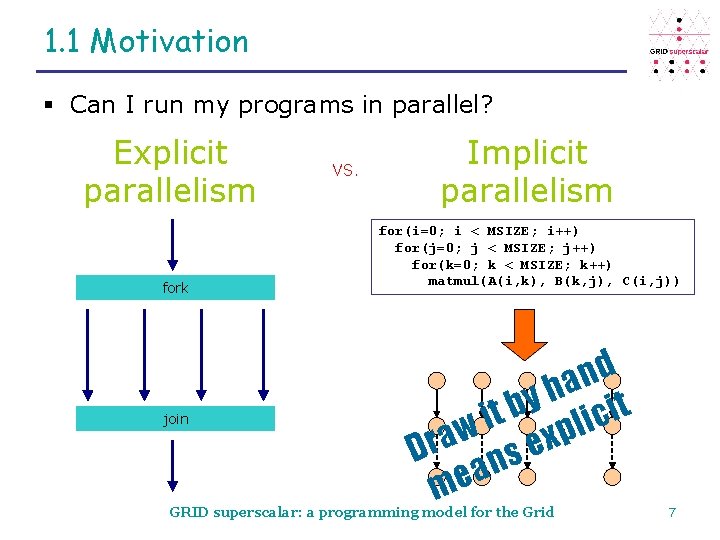

1.1 Motivation
§ Can I run my programs in parallel?
– Explicit parallelism (fork, join): "Draw it by hand means explicit…"
– Implicit parallelism:
    for(i=0; i < MSIZE; i++)
      for(j=0; j < MSIZE; j++)
        for(k=0; k < MSIZE; k++)
          matmul(A(i, k), B(k, j), C(i, j))

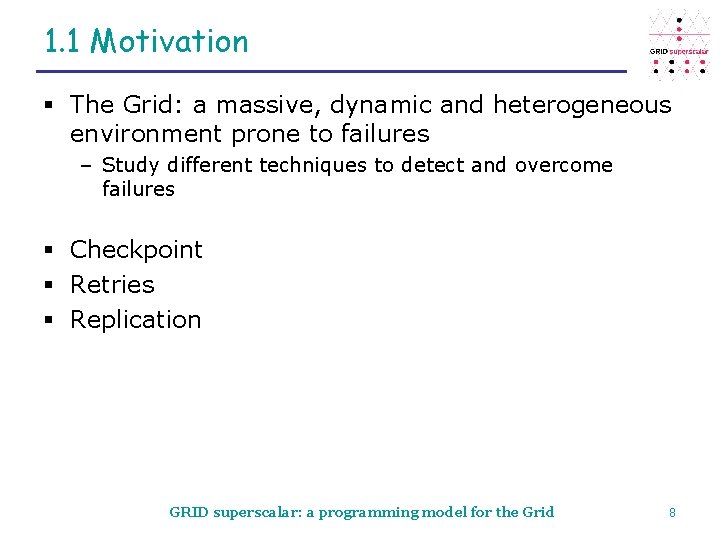

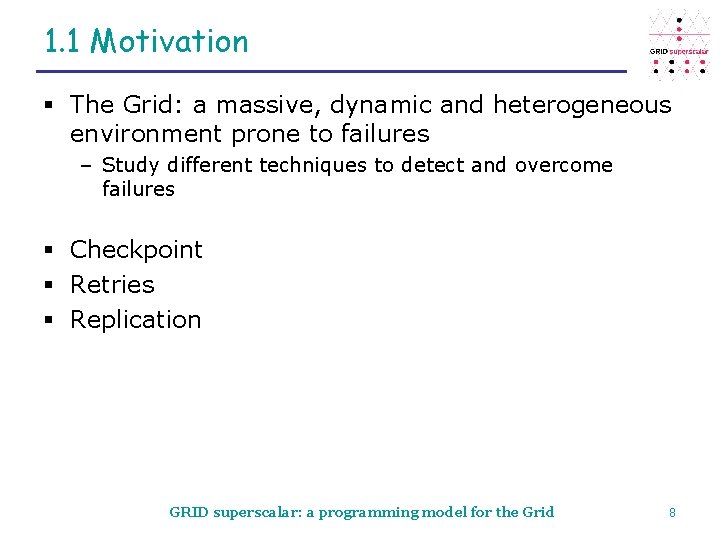

1.1 Motivation
§ The Grid: a massive, dynamic and heterogeneous environment prone to failures
– Study different techniques to detect and overcome failures
• Checkpoint
• Retries
• Replication

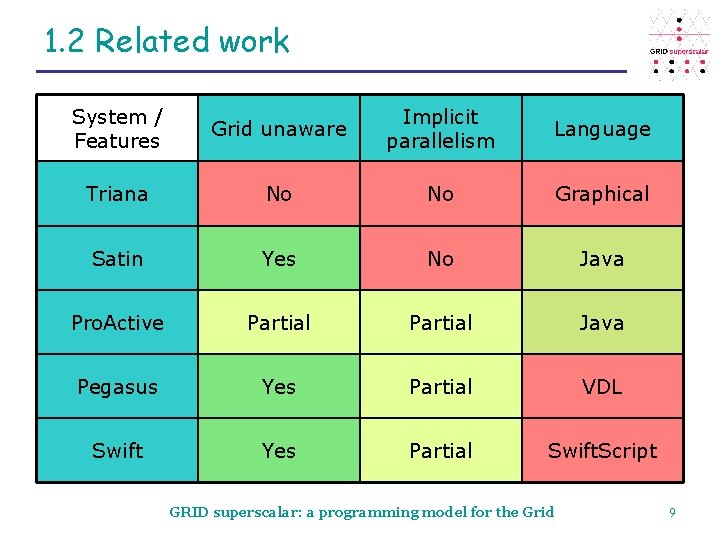

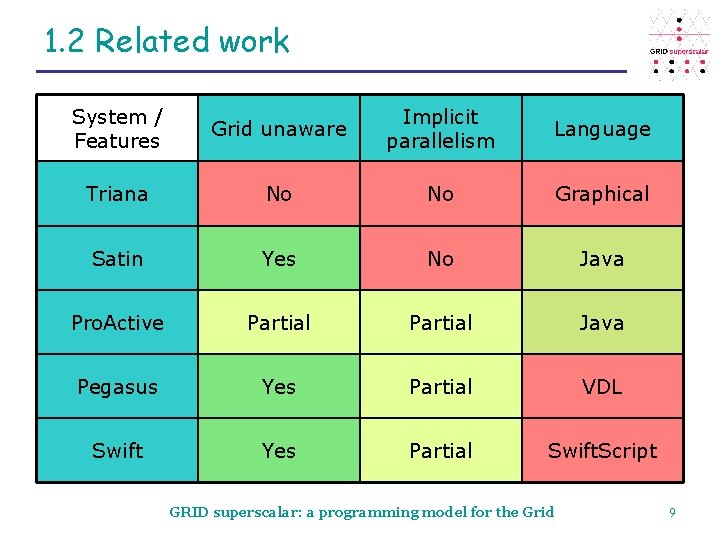

1.2 Related work
System: Grid unaware, Implicit parallelism, Language
– Triana: No, No, Graphical
– Satin: Yes, No, Java
– ProActive: Partial, Java
– Pegasus: Yes, Partial, VDL
– Swift: Yes, Partial, SwiftScript

1.3 Thesis objectives and contributions
§ Objective: create a programming model for the Grid
– Grid unaware
– Implicit parallelism
– Sequential programming
– Allows the use of well-known imperative languages
– Speed up applications
– Include fault detection and recovery

1.3 Thesis objectives and contributions
§ Contribution: GRID superscalar
– Programming interface
– Runtime environment
– Fault tolerance features

Outline
1. Introduction
2. Programming interface
   2.1 Design
   2.2 User interface
   2.3 Programming comparison
3. Runtime
4. Fault tolerance at the programming model level
5. Conclusions and future work

2.1 Design
§ Interface objectives
– Grid unaware
– Implicit parallelism
– Sequential programming
– Allows the use of well-known imperative languages

2.1 Design
§ Target applications
– Algorithms which may be easily split into tasks
• Branch and bound computations, divide and conquer algorithms, recursive algorithms, …
– Coarse grained tasks
– Independent tasks
• Scientific workflows, optimization algorithms, parameter sweep
– Main parameters: FILES
• External simulators, finite element solvers, BLAST, GAMESS

2.1 Design
§ Application's architecture: a master-worker paradigm
– Master-worker parallel paradigm fits with our objectives
– Main program: the master
– Functions: workers
• Function = Generic representation of a task
– Glue to transform a sequential application into a master-worker application: stubs and skeletons (RMI, RPC, …)
• Stub: call to the runtime interface
• Skeleton: binary which calls the user function

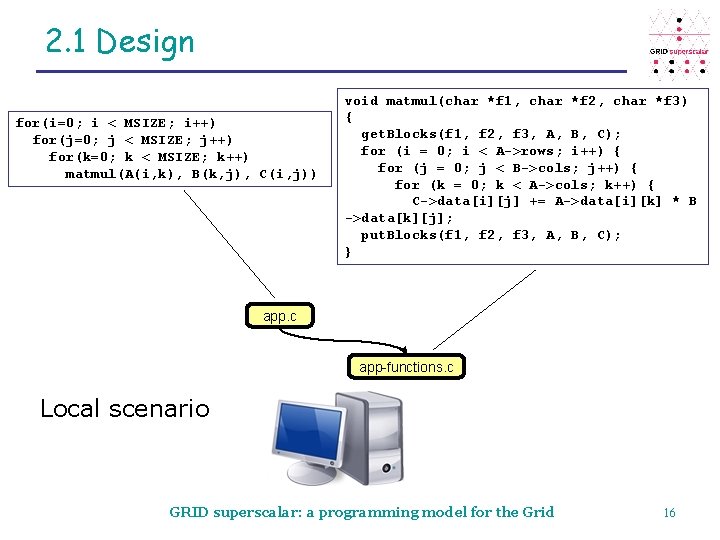

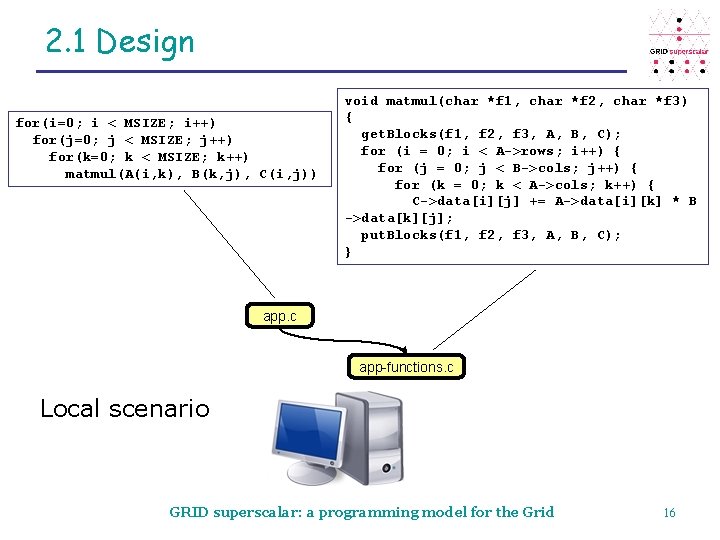

2.1 Design
Local scenario
– app.c:
    for(i=0; i < MSIZE; i++)
      for(j=0; j < MSIZE; j++)
        for(k=0; k < MSIZE; k++)
          matmul(A(i, k), B(k, j), C(i, j))
– app-functions.c:
    void matmul(char *f1, char *f2, char *f3)
    {
      getBlocks(f1, f2, f3, A, B, C);
      for (i = 0; i < A->rows; i++) {
        for (j = 0; j < B->cols; j++) {
          for (k = 0; k < A->cols; k++) {
            C->data[i][j] += A->data[i][k] * B->data[k][j];
          }
        }
      }
      putBlocks(f1, f2, f3, A, B, C);
    }

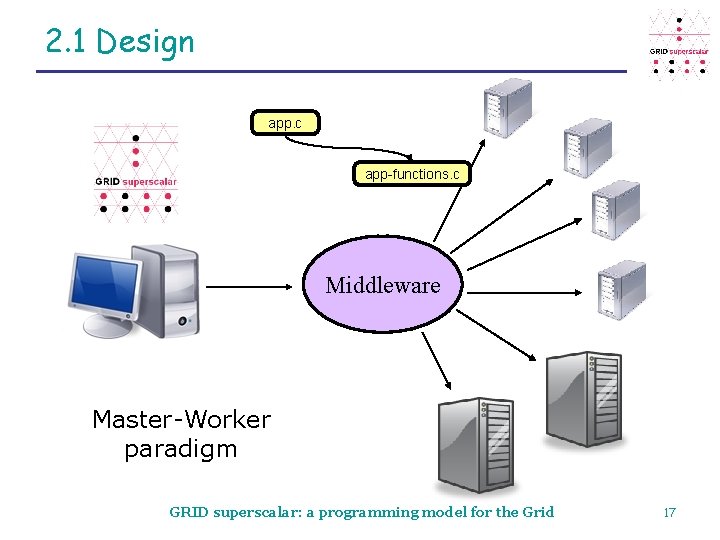

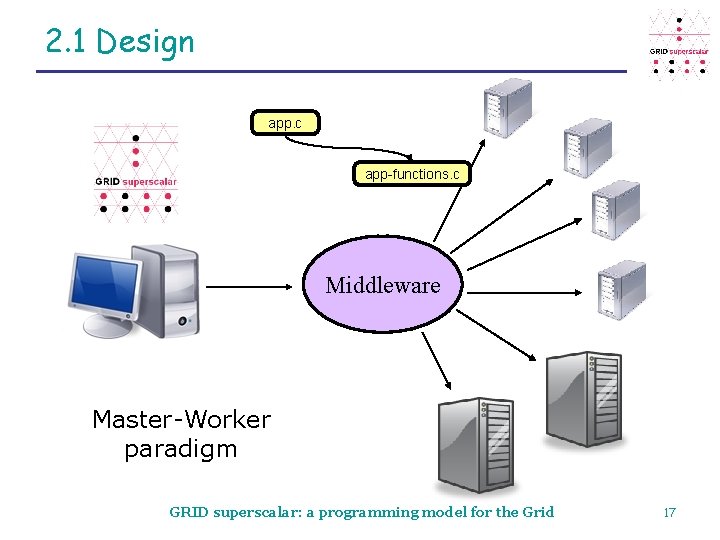

2.1 Design
Master-Worker paradigm (figure labels: app.c, app-functions.c, Middleware)

2.1 Design
§ Intermediate language concept: assembler code
– C, C++, … → Assembler → Processor execution
§ In GRIDSs
– C, C++, … → Workflow → Grid execution
§ The Execute generic interface
– Instruction set is defined by the user
– Single entry point to the runtime
– Allows easy building of programming language bindings (Java, Perl, Shell Script)
• Easier technology adoption

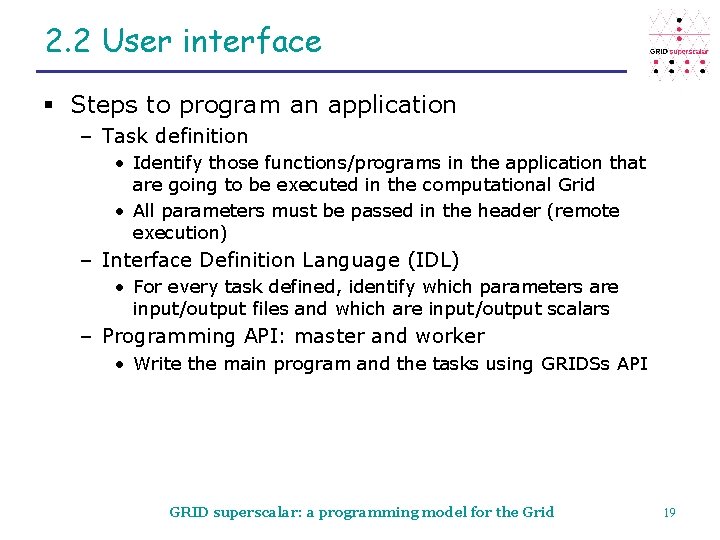

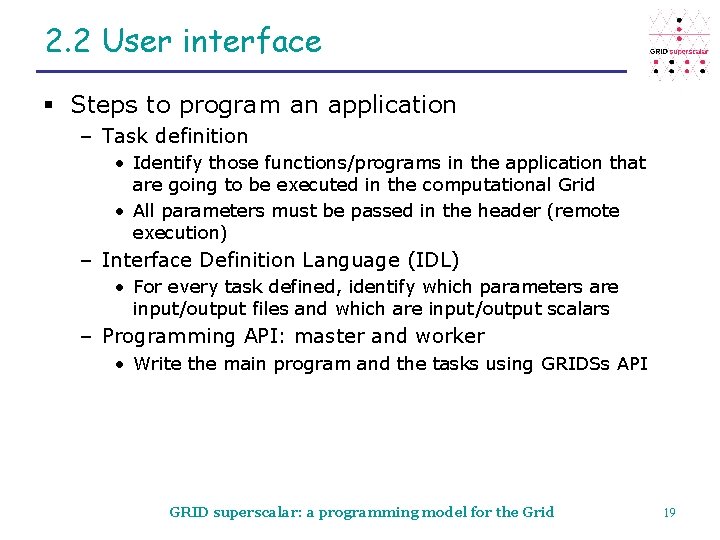

2.2 User interface
§ Steps to program an application
– Task definition
• Identify those functions/programs in the application that are going to be executed in the computational Grid
• All parameters must be passed in the header (remote execution)
– Interface Definition Language (IDL)
• For every task defined, identify which parameters are input/output files and which are input/output scalars
– Programming API: master and worker
• Write the main program and the tasks using the GRIDSs API

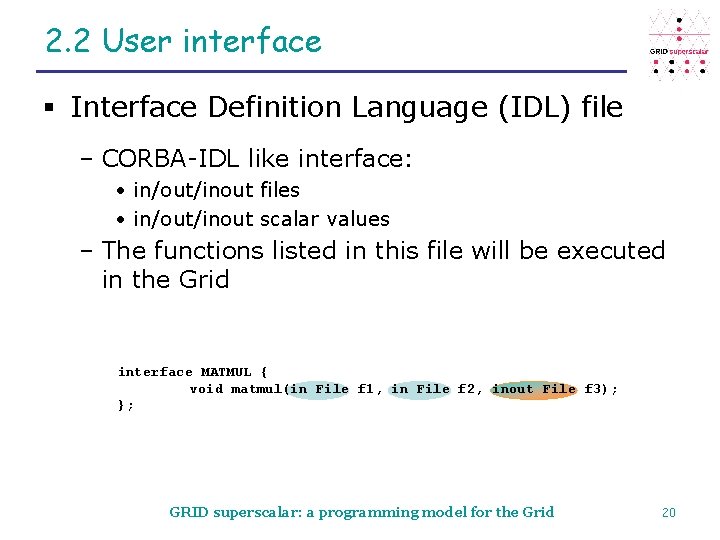

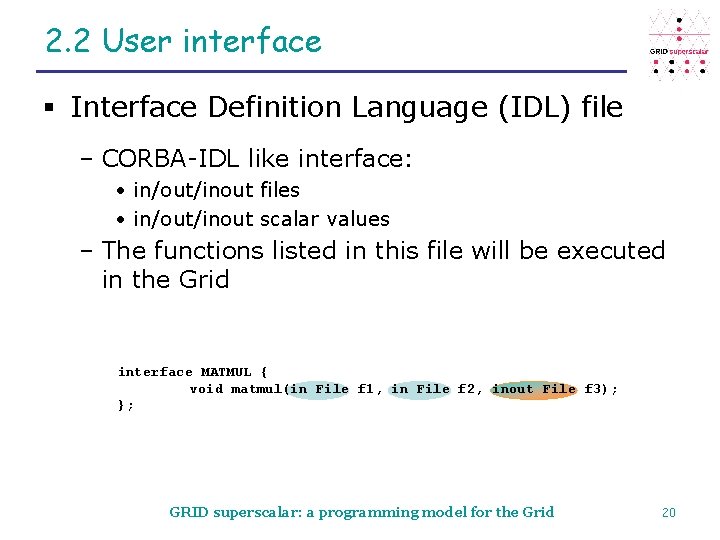

2.2 User interface
§ Interface Definition Language (IDL) file
– CORBA-IDL like interface:
• in/out/inout files
• in/out/inout scalar values
– The functions listed in this file will be executed in the Grid
    interface MATMUL {
      void matmul(in File f1, in File f2, inout File f3);
    };

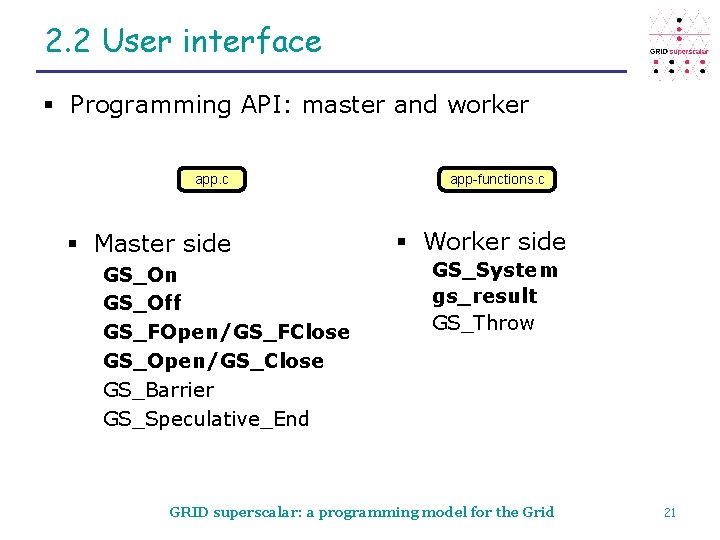

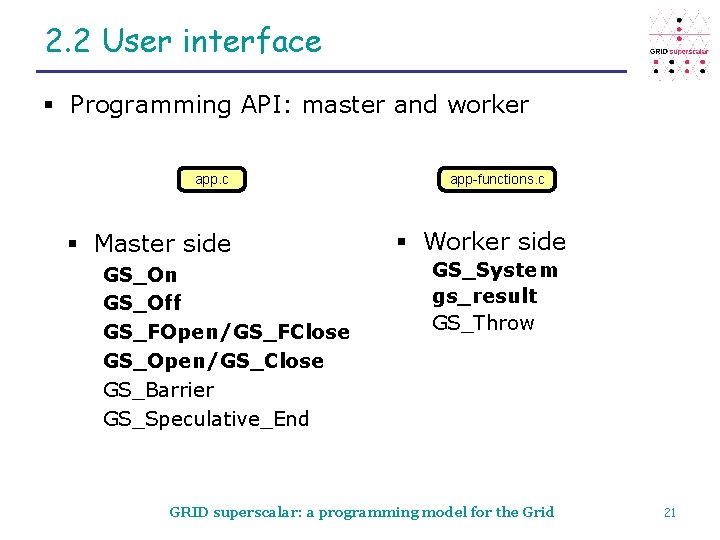

2.2 User interface
§ Programming API: master and worker
§ Master side (app.c)
– GS_On
– GS_Off
– GS_FOpen/GS_FClose
– GS_Open/GS_Close
– GS_Barrier
– GS_Speculative_End
§ Worker side (app-functions.c)
– GS_System
– gs_result
– GS_Throw

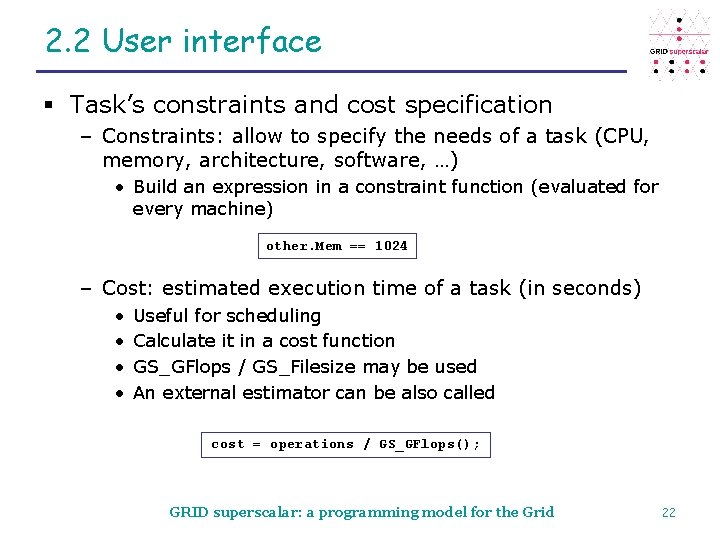

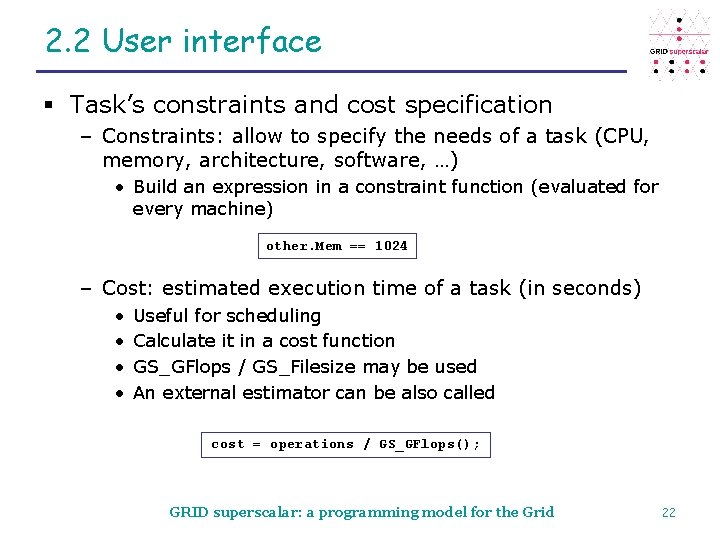

2.2 User interface
§ Task's constraints and cost specification
– Constraints: specify the needs of a task (CPU, memory, architecture, software, …)
• Build an expression in a constraint function (evaluated for every machine)
    other.Mem == 1024
– Cost: estimated execution time of a task (in seconds)
• Useful for scheduling
• Calculate it in a cost function
• GS_GFlops / GS_Filesize may be used
• An external estimator can also be called
    cost = operations / GS_GFlops();
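
Sketch (not part of the original slides): a cost function in the spirit of `cost = operations / GS_GFlops();` could look like the code below. `gs_gflops_stub` is a hypothetical stand-in for GS_GFlops(), whose real signature is not shown in the slides, and the 2·n³ operation count for an n x n block multiplication is an assumption of this sketch.

```c
#include <assert.h>

/* Hypothetical stand-in for GS_GFlops(): in GRIDSs the runtime would report
 * the GFlops rating of the machine being evaluated. The value is illustrative. */
static double gs_gflops_stub(void)
{
    return 5.985;
}

/* Estimated run time, in seconds, of an n x n block multiplication.
 * Assumes 2*n^3 floating point operations (an assumption of this sketch). */
static double matmul_cost(unsigned n)
{
    double gflops = gs_gflops_stub();
    assert(gflops > 0.0);
    double operations = 2.0 * (double)n * (double)n * (double)n;
    /* GFlops means 1e9 operations per second. */
    return operations / (gflops * 1e9);
}
```

Calling `matmul_cost(1000)` with the illustrative rating gives roughly a third of a second; a scheduler would compare such estimates across candidate machines.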

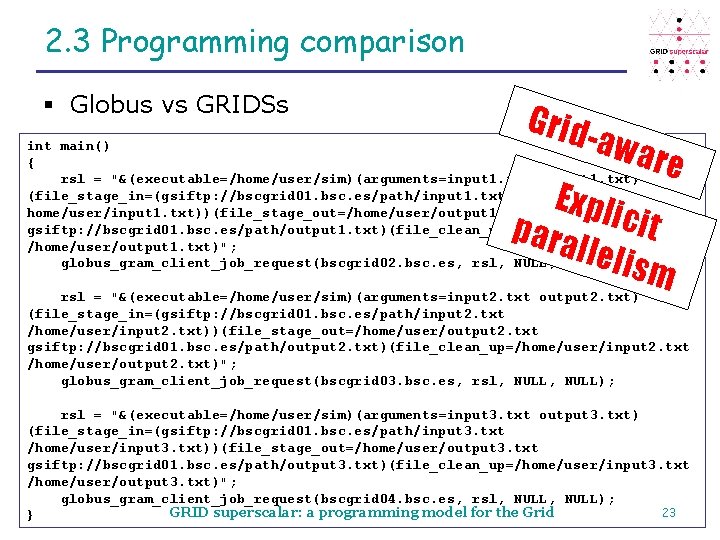

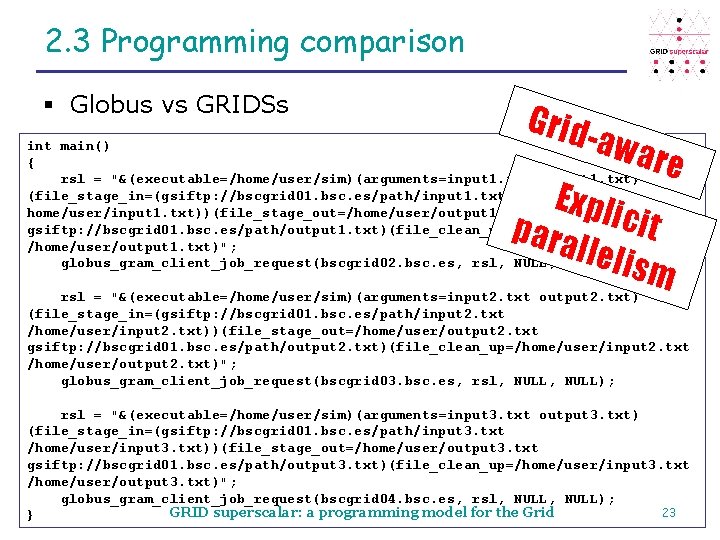

2.3 Programming comparison
§ Globus vs GRIDSs
– Globus: Grid-aware, explicit parallelism
    int main()
    {
      rsl = "&(executable=/home/user/sim)(arguments=input1.txt output1.txt)"
            "(file_stage_in=(gsiftp://bscgrid01.bsc.es/path/input1.txt /home/user/input1.txt))"
            "(file_stage_out=/home/user/output1.txt gsiftp://bscgrid01.bsc.es/path/output1.txt)"
            "(file_clean_up=/home/user/input1.txt /home/user/output1.txt)";
      globus_gram_client_job_request(bscgrid02.bsc.es, rsl, NULL);
      rsl = "&(executable=/home/user/sim)(arguments=input2.txt output2.txt)"
            "(file_stage_in=(gsiftp://bscgrid01.bsc.es/path/input2.txt /home/user/input2.txt))"
            "(file_stage_out=/home/user/output2.txt gsiftp://bscgrid01.bsc.es/path/output2.txt)"
            "(file_clean_up=/home/user/input2.txt /home/user/output2.txt)";
      globus_gram_client_job_request(bscgrid03.bsc.es, rsl, NULL);
      rsl = "&(executable=/home/user/sim)(arguments=input3.txt output3.txt)"
            "(file_stage_in=(gsiftp://bscgrid01.bsc.es/path/input3.txt /home/user/input3.txt))"
            "(file_stage_out=/home/user/output3.txt gsiftp://bscgrid01.bsc.es/path/output3.txt)"
            "(file_clean_up=/home/user/input3.txt /home/user/output3.txt)";
      globus_gram_client_job_request(bscgrid04.bsc.es, rsl, NULL);
    }

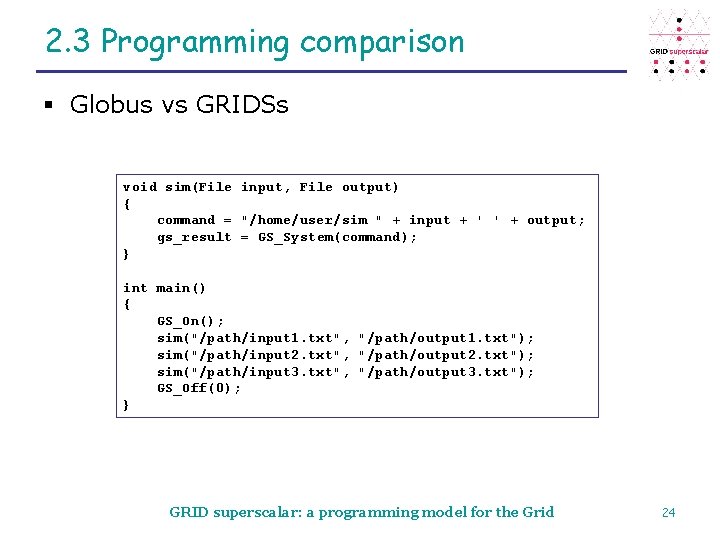

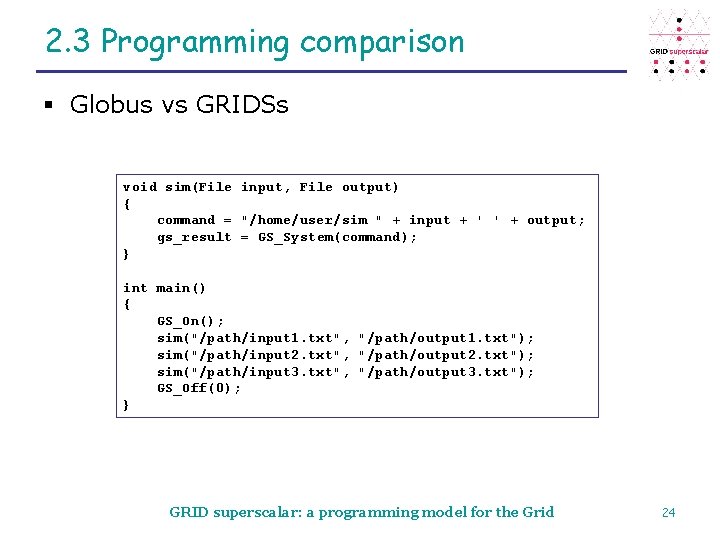

2.3 Programming comparison
§ Globus vs GRIDSs
– GRIDSs:
    void sim(File input, File output)
    {
      command = "/home/user/sim " + input + ' ' + output;
      gs_result = GS_System(command);
    }
    int main()
    {
      GS_On();
      sim("/path/input1.txt", "/path/output1.txt");
      sim("/path/input2.txt", "/path/output2.txt");
      sim("/path/input3.txt", "/path/output3.txt");
      GS_Off(0);
    }

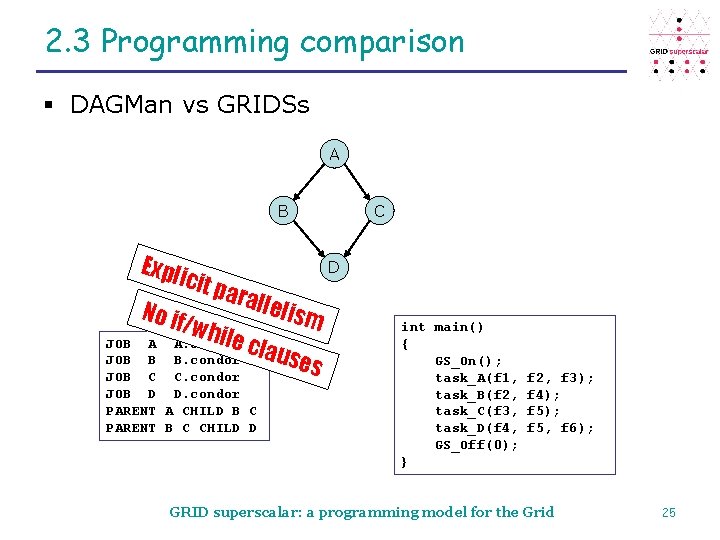

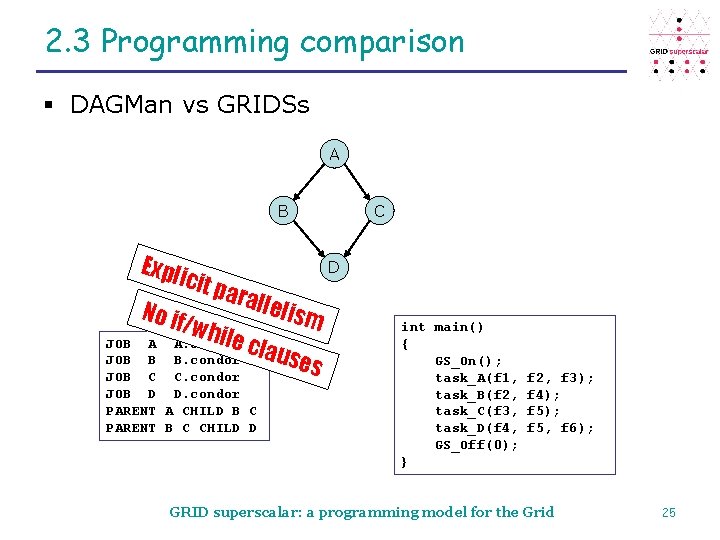

2.3 Programming comparison
§ DAGMan vs GRIDSs
– DAGMan: explicit parallelism, no if/while clauses (figure: A precedes B and C, which precede D)
    JOB A A.condor
    JOB B B.condor
    JOB C C.condor
    JOB D D.condor
    PARENT A CHILD B C
    PARENT B C CHILD D
– GRIDSs:
    int main()
    {
      GS_On();
      task_A(f1, f2, f3);
      task_B(f2, f4);
      task_C(f3, f5);
      task_D(f4, f5, f6);
      GS_Off(0);
    }

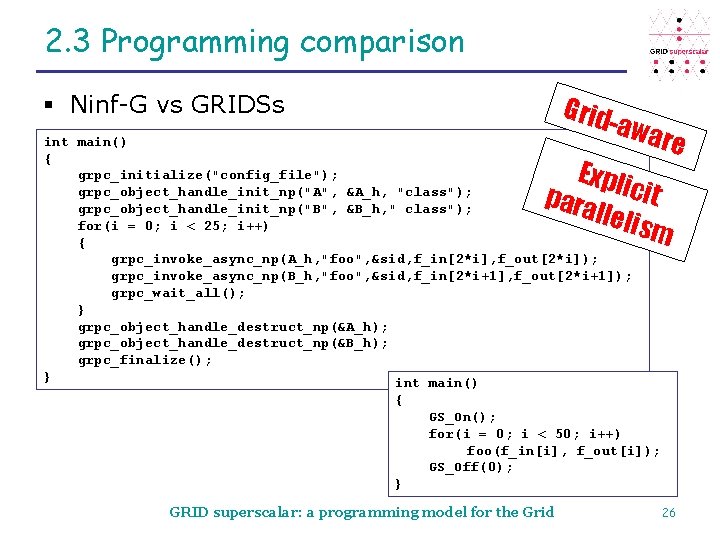

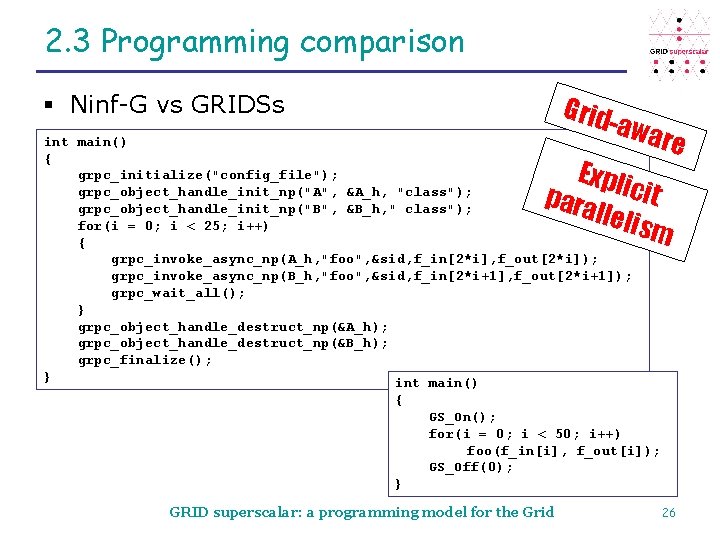

2.3 Programming comparison
§ Ninf-G vs GRIDSs
– Ninf-G: Grid-aware, explicit parallelism
    int main()
    {
      grpc_initialize("config_file");
      grpc_object_handle_init_np("A", &A_h, "class");
      grpc_object_handle_init_np("B", &B_h, "class");
      for(i = 0; i < 25; i++) {
        grpc_invoke_async_np(A_h, "foo", &sid, f_in[2*i], f_out[2*i]);
        grpc_invoke_async_np(B_h, "foo", &sid, f_in[2*i+1], f_out[2*i+1]);
        grpc_wait_all();
      }
      grpc_object_handle_destruct_np(&A_h);
      grpc_object_handle_destruct_np(&B_h);
      grpc_finalize();
    }
– GRIDSs:
    int main()
    {
      GS_On();
      for(i = 0; i < 50; i++)
        foo(f_in[i], f_out[i]);
      GS_Off(0);
    }

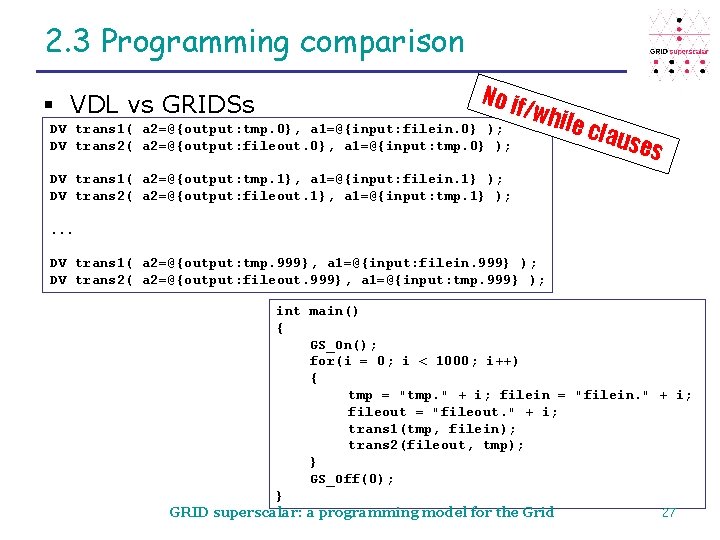

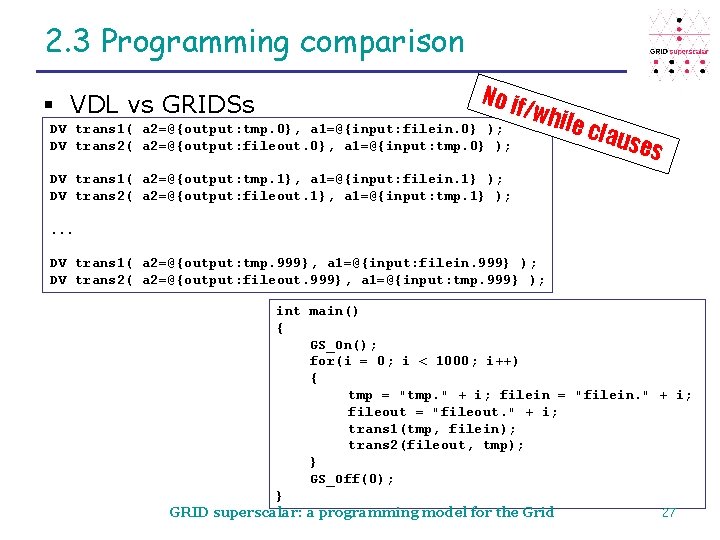

2.3 Programming comparison
§ VDL vs GRIDSs
– VDL: no if/while clauses
    DV trans1( a2=@{output:tmp.0}, a1=@{input:filein.0} );
    DV trans2( a2=@{output:fileout.0}, a1=@{input:tmp.0} );
    DV trans1( a2=@{output:tmp.1}, a1=@{input:filein.1} );
    DV trans2( a2=@{output:fileout.1}, a1=@{input:tmp.1} );
    ...
    DV trans1( a2=@{output:tmp.999}, a1=@{input:filein.999} );
    DV trans2( a2=@{output:fileout.999}, a1=@{input:tmp.999} );
– GRIDSs:
    int main()
    {
      GS_On();
      for(i = 0; i < 1000; i++) {
        tmp = "tmp." + i;
        filein = "filein." + i;
        fileout = "fileout." + i;
        trans1(tmp, filein);
        trans2(fileout, tmp);
      }
      GS_Off(0);
    }

Outline
1. Introduction
2. Programming interface
3. Runtime
   3.1 Scientific contributions
   3.2 Developments
   3.3 Evaluation tests
4. Fault tolerance at the programming model level
5. Conclusions and future work

3.1 Scientific contributions
§ Runtime objectives
– Extract implicit parallelism in sequential applications
– Speed up execution using the Grid
§ Main requirement: Grid middleware
– Job management
– Data transfer
– Security

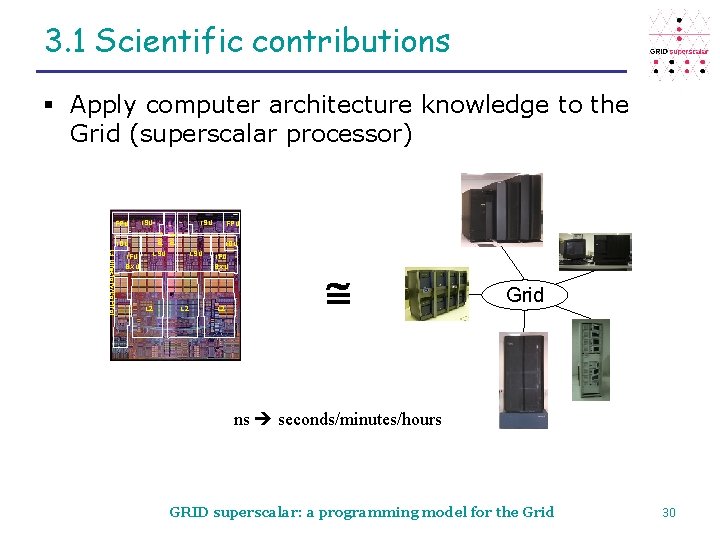

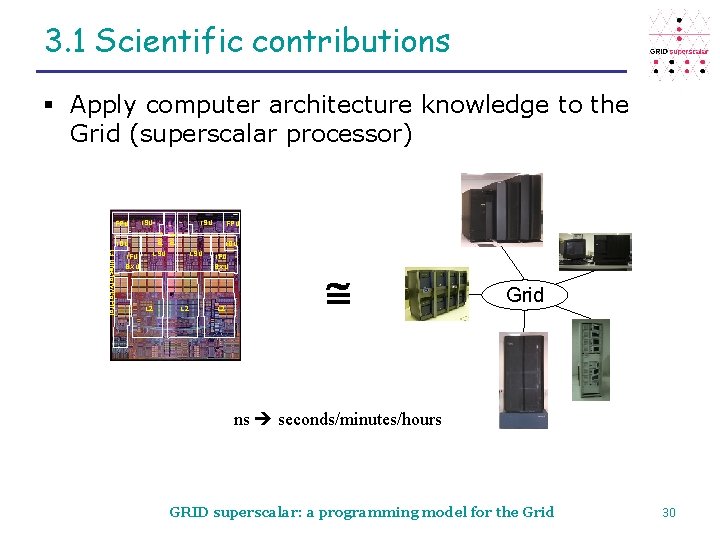

3.1 Scientific contributions
§ Apply computer architecture knowledge to the Grid (superscalar processor)
Figure: superscalar processor floorplan (FPU, FXU, ISU, IDU, IFU, BXU, LSU, L2, L3 Directory/Control), operating in ns, next to the Grid, operating in seconds/minutes/hours

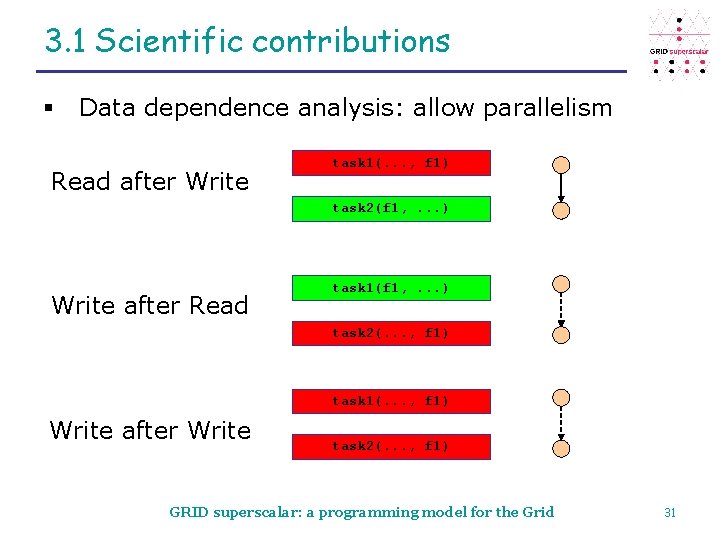

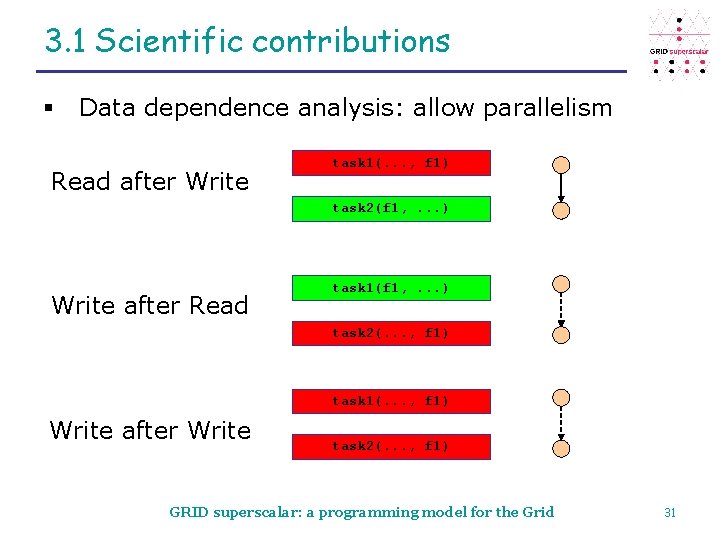

3.1 Scientific contributions
§ Data dependence analysis: allow parallelism
– Read after Write
    task1(..., f1)
    task2(f1, ...)
– Write after Read
    task1(f1, ...)
    task2(..., f1)
– Write after Write
    task1(..., f1)
    task2(..., f1)
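
Sketch (not part of the original slides): the three dependence types above can be detected by comparing, in program order, the direction of each file parameter of two tasks. The `Param`, `Direction` and `find_dependences` names are hypothetical and do not belong to the GRIDSs runtime.

```c
#include <assert.h>
#include <string.h>

typedef enum { DIR_IN, DIR_OUT, DIR_INOUT } Direction;

typedef struct {
    const char *file;   /* file name used as the parameter */
    Direction dir;      /* direction declared in the IDL file */
} Param;

typedef enum { DEP_NONE = 0, DEP_RAW = 1, DEP_WAR = 2, DEP_WAW = 4 } Dependence;

static int reads(Direction d)  { return d == DIR_IN  || d == DIR_INOUT; }
static int writes(Direction d) { return d == DIR_OUT || d == DIR_INOUT; }

/* Returns a bitmask of the dependences that a later task has on an earlier
 * task, looking only at parameters that name the same file. */
static int find_dependences(const Param *earlier, int n_earlier,
                            const Param *later, int n_later)
{
    int mask = DEP_NONE;
    assert(earlier != NULL && later != NULL);
    for (int i = 0; i < n_earlier; i++) {
        for (int j = 0; j < n_later; j++) {
            if (strcmp(earlier[i].file, later[j].file) != 0)
                continue;
            if (writes(earlier[i].dir) && reads(later[j].dir))
                mask |= DEP_RAW;   /* Read after Write */
            if (reads(earlier[i].dir) && writes(later[j].dir))
                mask |= DEP_WAR;   /* Write after Read */
            if (writes(earlier[i].dir) && writes(later[j].dir))
                mask |= DEP_WAW;   /* Write after Write */
        }
    }
    return mask;
}
```

Two tasks with an empty mask share no file in a conflicting way, so a runtime could run them in parallel.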

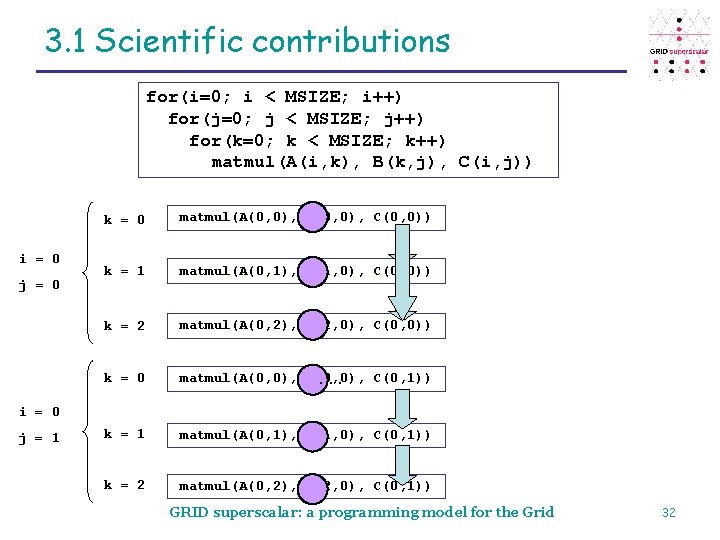

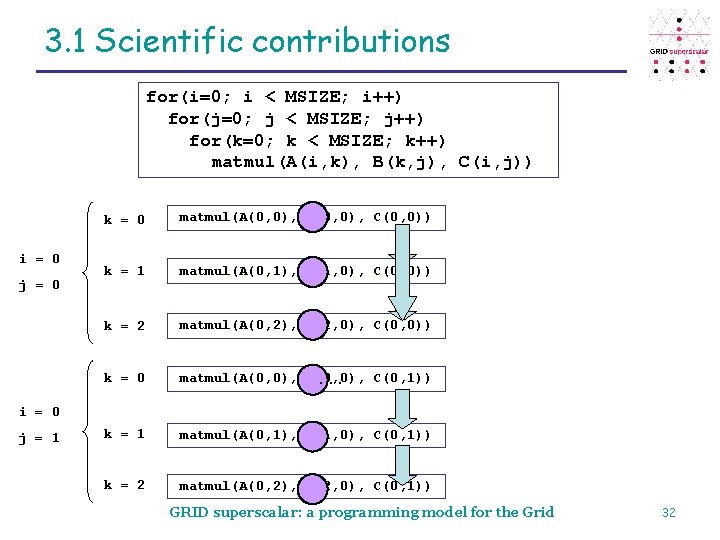

3.1 Scientific contributions
    for(i=0; i < MSIZE; i++)
      for(j=0; j < MSIZE; j++)
        for(k=0; k < MSIZE; k++)
          matmul(A(i, k), B(k, j), C(i, j))
Generated tasks:
– i = 0, j = 0
    k = 0: matmul(A(0, 0), B(0, 0), C(0, 0))
    k = 1: matmul(A(0, 1), B(1, 0), C(0, 0))
    k = 2: matmul(A(0, 2), B(2, 0), C(0, 0))
– i = 0, j = 1
    k = 0: matmul(A(0, 0), B(0, 1), C(0, 1))
    k = 1: matmul(A(0, 1), B(1, 1), C(0, 1))
    k = 2: matmul(A(0, 2), B(2, 1), C(0, 1))
– ...

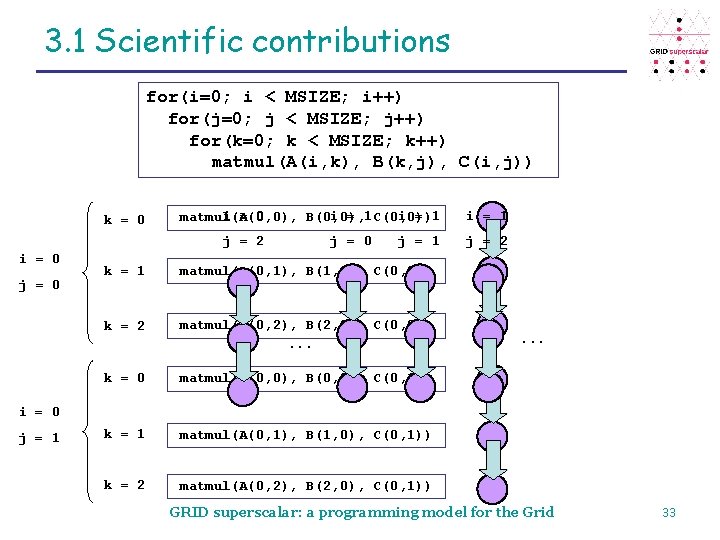

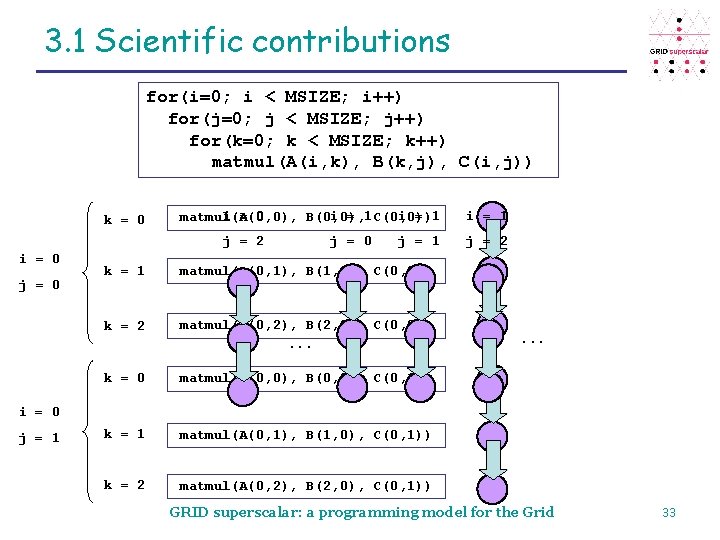

3.1 Scientific contributions
    for(i=0; i < MSIZE; i++)
      for(j=0; j < MSIZE; j++)
        for(k=0; k < MSIZE; k++)
          matmul(A(i, k), B(k, j), C(i, j))
Generated tasks, one chain per block C(i, j):
– i = 0, j = 0
    k = 0: matmul(A(0, 0), B(0, 0), C(0, 0))
    k = 1: matmul(A(0, 1), B(1, 0), C(0, 0))
    k = 2: matmul(A(0, 2), B(2, 0), C(0, 0))
– i = 0, j = 1
    k = 0: matmul(A(0, 0), B(0, 1), C(0, 1))
    k = 1: matmul(A(0, 1), B(1, 1), C(0, 1))
    k = 2: matmul(A(0, 2), B(2, 1), C(0, 1))
– ...
– i = 1, j = 2
    ...

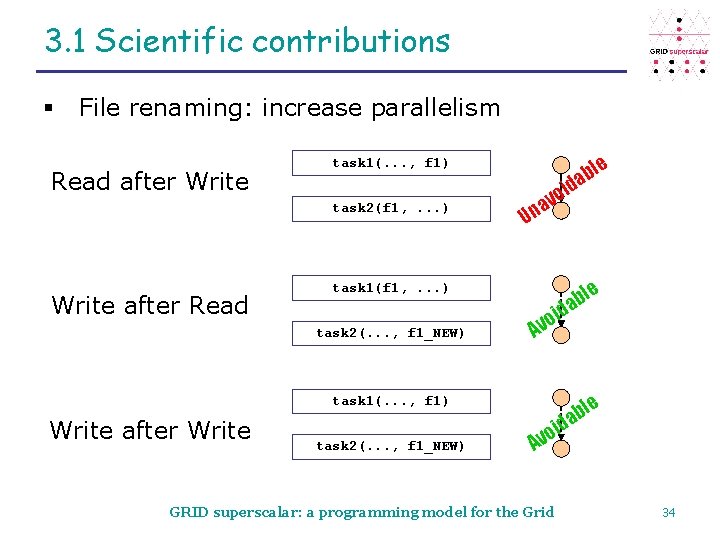

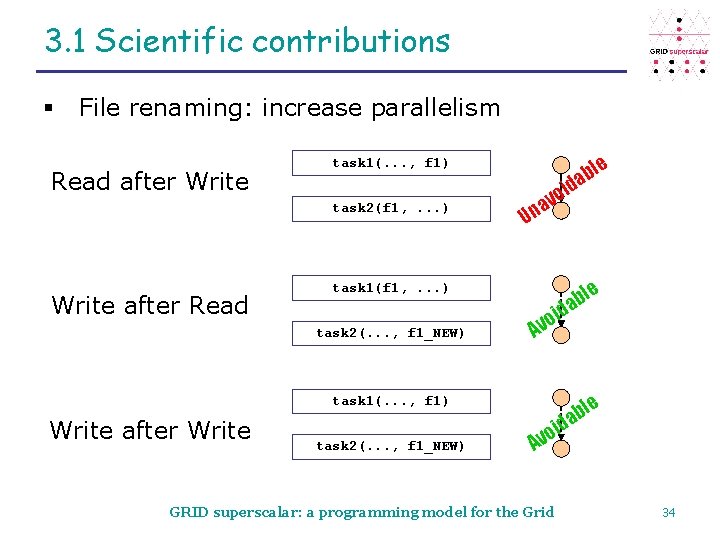

3.1 Scientific contributions
§ File renaming: increase parallelism
– Read after Write: unavoidable
    task1(..., f1)
    task2(f1, ...)
– Write after Read: avoidable
    task1(f1, ...)
    task2(..., f1_NEW)
– Write after Write: avoidable
    task1(..., f1)
    task2(..., f1_NEW)
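
Sketch (not part of the original slides): renaming can be pictured as a per-file version counter, where every writer gets a fresh name and every reader is pointed at the latest version. The `FileVersion` type and the `_vN` suffix are assumptions of this sketch; the slides only show the `f1_NEW` naming, and the actual runtime bookkeeping is not described.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical bookkeeping for one logical file. */
typedef struct {
    const char *name;
    int version;   /* 0 = original file, no renaming applied yet */
} FileVersion;

/* Name a reader should open: the most recently produced version. */
static void name_for_read(const FileVersion *f, char *buf, size_t len)
{
    assert(f != NULL && buf != NULL && len > 0);
    if (f->version == 0)
        snprintf(buf, len, "%s", f->name);
    else
        snprintf(buf, len, "%s_v%d", f->name, f->version);
}

/* Name a writer should create: a fresh version, so tasks still reading or
 * writing the previous version are not disturbed (removes WAR and WAW). */
static void name_for_write(FileVersion *f, char *buf, size_t len)
{
    assert(f != NULL && buf != NULL && len > 0);
    f->version++;
    snprintf(buf, len, "%s_v%d", f->name, f->version);
}
```

Read after Write remains: a reader that asks for the name after a write is handed the new version and therefore still has to wait for its producer.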

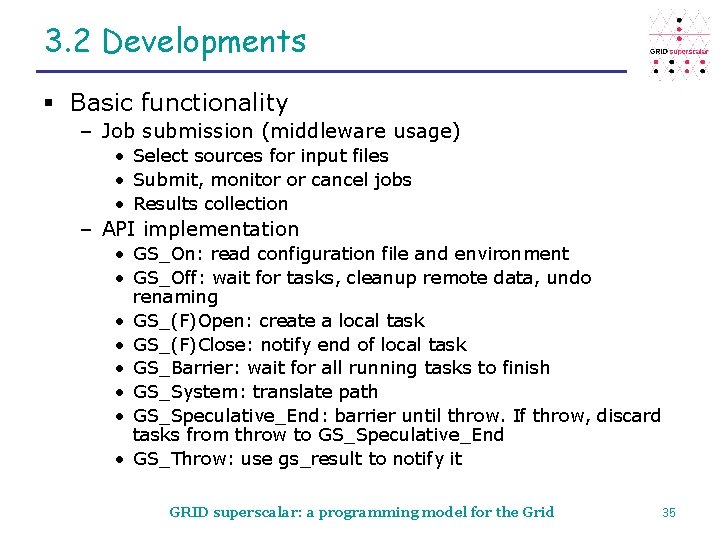

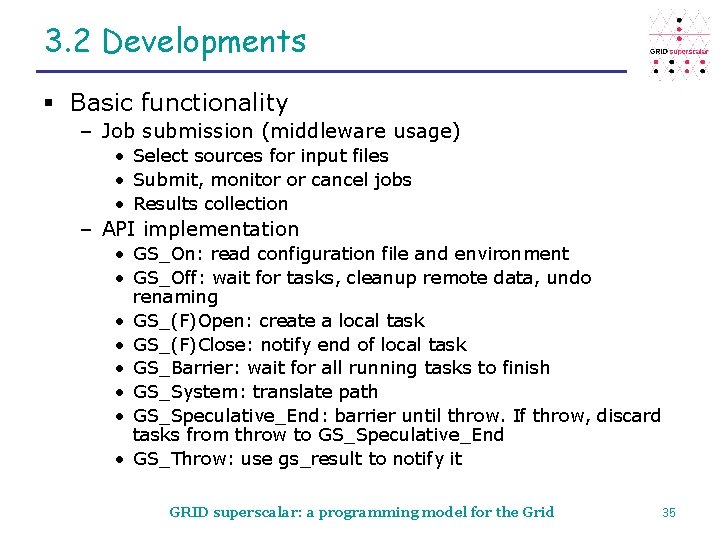

3.2 Developments
§ Basic functionality
– Job submission (middleware usage)
• Select sources for input files
• Submit, monitor or cancel jobs
• Results collection
– API implementation
• GS_On: read configuration file and environment
• GS_Off: wait for tasks, clean up remote data, undo renaming
• GS_(F)Open: create a local task
• GS_(F)Close: notify end of local task
• GS_Barrier: wait for all running tasks to finish
• GS_System: translate path
• GS_Speculative_End: barrier until throw. If there is a throw, discard tasks from the throw to GS_Speculative_End
• GS_Throw: use gs_result to notify it

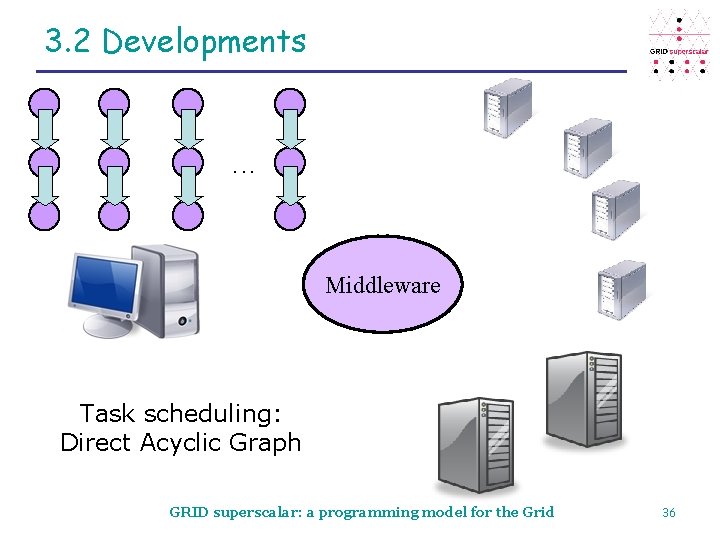

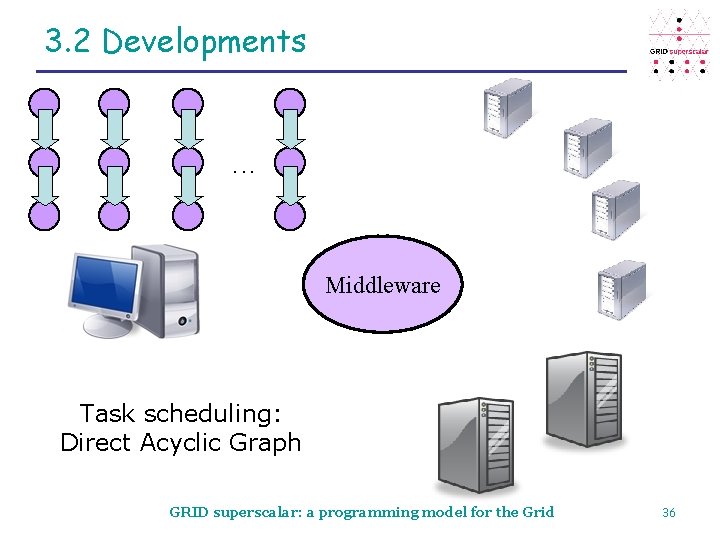

3.2 Developments
Task scheduling: Directed Acyclic Graph (figure label: Middleware)

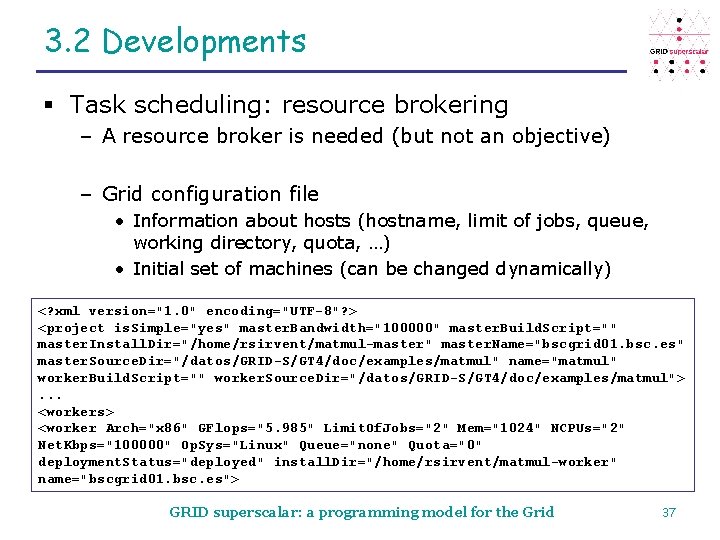

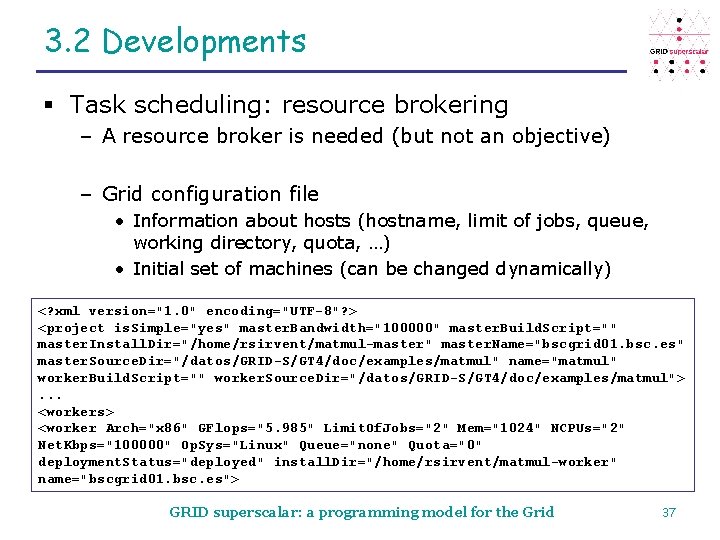

3.2 Developments
§ Task scheduling: resource brokering
– A resource broker is needed (but not an objective)
– Grid configuration file
• Information about hosts (hostname, limit of jobs, queue, working directory, quota, …)
• Initial set of machines (can be changed dynamically)
    <?xml version="1.0" encoding="UTF-8"?>
    <project isSimple="yes" masterBandwidth="100000" masterBuildScript=""
             masterInstallDir="/home/rsirvent/matmul-master" masterName="bscgrid01.bsc.es"
             masterSourceDir="/datos/GRID-S/GT4/doc/examples/matmul" name="matmul"
             workerBuildScript="" workerSourceDir="/datos/GRID-S/GT4/doc/examples/matmul">
    ...
    <workers>
      <worker Arch="x86" GFlops="5.985" LimitOfJobs="2" Mem="1024" NCPUs="2" NetKbps="100000"
              OpSys="Linux" Queue="none" Quota="0" deploymentStatus="deployed"
              installDir="/home/rsirvent/matmul-worker" name="bscgrid01.bsc.es">

3.2 Developments
§ Task scheduling: resource brokering
– Scheduling policy
• Estimation of total execution time of a single task
• FileTransferTime: time to transfer needed files to a resource (calculated with the hosts information and the location of files)
  – Select fastest source for a file
• ExecutionTime: estimation of the task's run time in a resource. An interface function (can be calculated, or estimated by an external entity)
  – Select fastest resource for execution
• Smallest estimation is selected
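
Sketch (not part of the original slides): the policy above amounts to picking the resource with the smallest FileTransferTime + ExecutionTime. The `Candidate` type and `select_resource` function are hypothetical names, and the two estimates are assumed to have been computed beforehand.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    const char *host;
    double file_transfer_time; /* seconds to bring the missing input files */
    double execution_time;     /* seconds, e.g. from the task's cost function */
} Candidate;

/* Returns the index of the candidate with the smallest estimated total time.
 * Ties are resolved in favour of the earliest candidate. */
static size_t select_resource(const Candidate *c, size_t n)
{
    assert(c != NULL && n > 0);
    size_t best = 0;
    for (size_t i = 1; i < n; i++) {
        double t_i = c[i].file_transfer_time + c[i].execution_time;
        double t_best = c[best].file_transfer_time + c[best].execution_time;
        if (t_i < t_best)
            best = i;
    }
    return best;
}
```

With estimates of (30 s, 100 s), (0 s, 120 s) and (5 s, 140 s), the second host wins: it is slower at computing but already holds the files, which is how file locality enters the decision.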

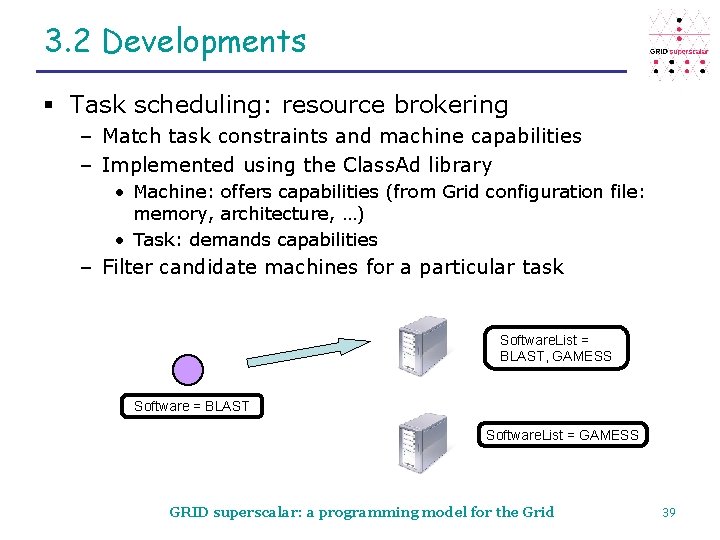

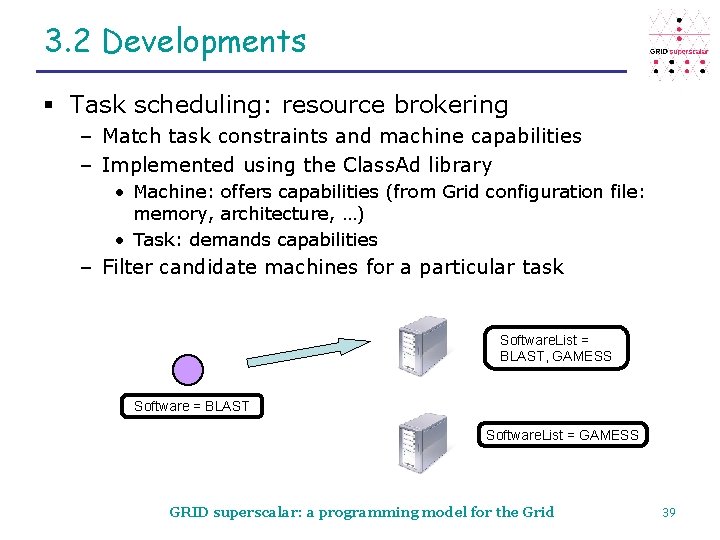

3.2 Developments
§ Task scheduling: resource brokering
– Match task constraints and machine capabilities
– Implemented using the ClassAd library
• Machine: offers capabilities (from Grid configuration file: memory, architecture, …)
• Task: demands capabilities
– Filter candidate machines for a particular task
Figure: a task with Software = BLAST is matched against one machine with SoftwareList = BLAST, GAMESS and another with SoftwareList = GAMESS
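
Sketch (not part of the original slides): the filtering step can be illustrated in plain C. This is a stand-in for the idea only and does not use the ClassAd library API; the `Machine` and `TaskConstraints` types and the memory threshold are hypothetical.

```c
#include <assert.h>
#include <string.h>

typedef struct {
    const char *name;
    int mem_mb;
    const char *software[4];   /* NULL-terminated list of installed software */
} Machine;

typedef struct {
    int min_mem_mb;
    const char *software;      /* required software, or NULL for none */
} TaskConstraints;

/* Returns 1 if the machine offers everything the task demands, 0 otherwise. */
static int machine_matches(const Machine *m, const TaskConstraints *t)
{
    assert(m != NULL && t != NULL);
    if (m->mem_mb < t->min_mem_mb)
        return 0;
    if (t->software == NULL)
        return 1;
    for (int i = 0; i < 4 && m->software[i] != NULL; i++) {
        if (strcmp(m->software[i], t->software) == 0)
            return 1;
    }
    return 0;
}
```

A task that requires BLAST matches the machine offering BLAST and GAMESS, and is filtered out for the machine offering only GAMESS.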

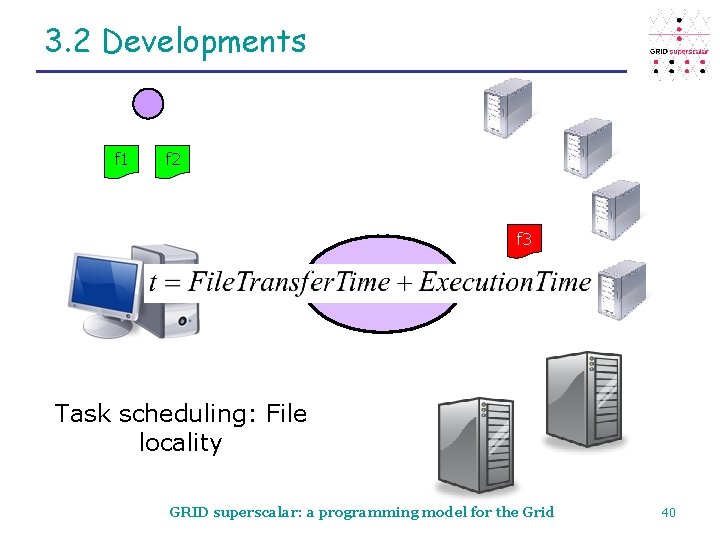

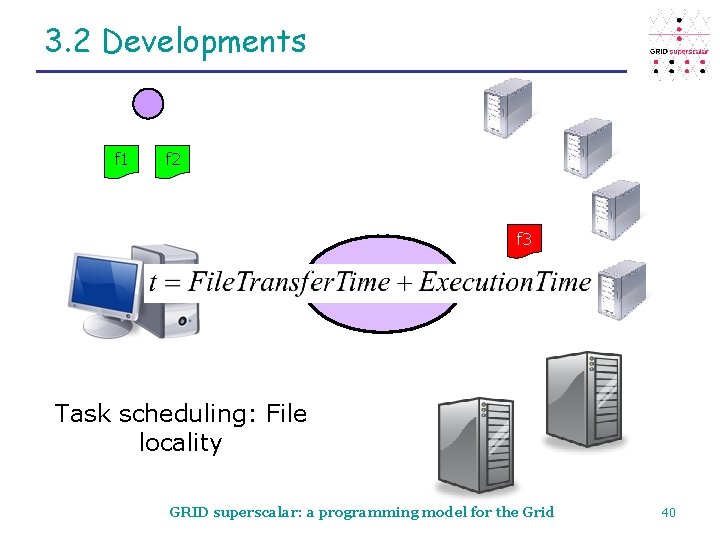

3.2 Developments
Task scheduling: File locality (figure labels: f1, f2, f3, Middleware)

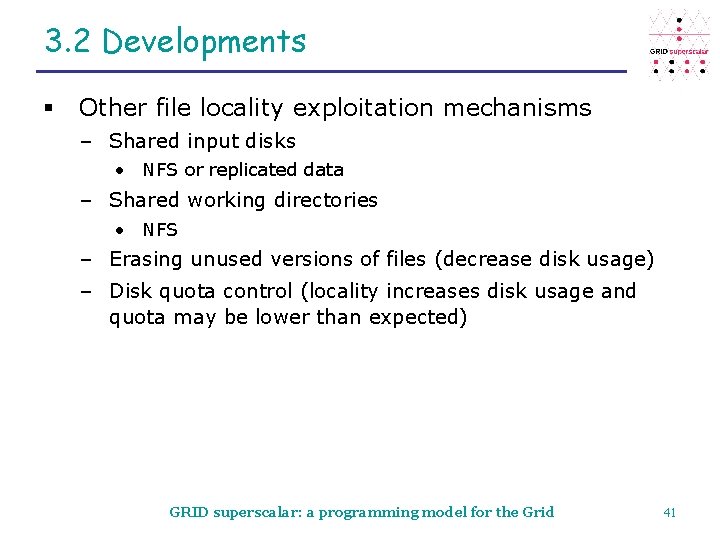

3.2 Developments
§ Other file locality exploitation mechanisms
– Shared input disks
• NFS or replicated data
– Shared working directories
• NFS
– Erasing unused versions of files (decrease disk usage)
– Disk quota control (locality increases disk usage and quota may be lower than expected)

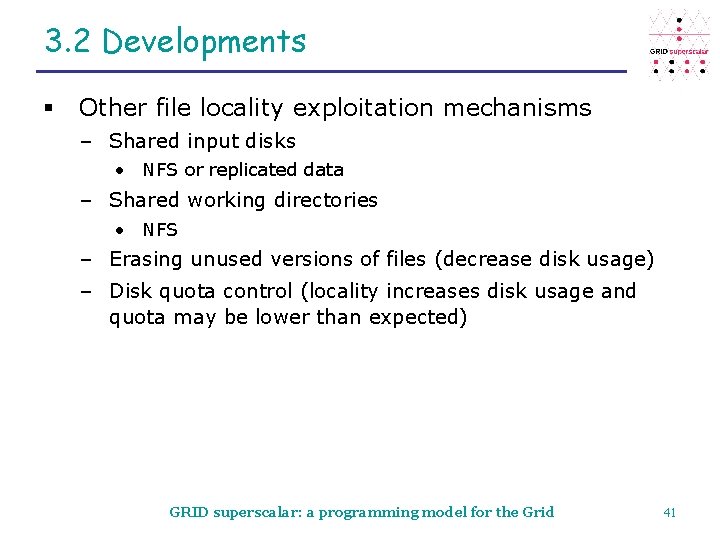

3.3 Evaluation
– NAS Grid Benchmarks: representative benchmark, includes different types of workflows which emulate a wide range of Grid applications
– Simple optimization example: representative of optimization algorithms, workflow with two-level synchronization
– New product and process development: production application, workflow with parallel chains of computation
– Potential energy hypersurface for acetone: massively parallel, long running application
– Protein comparison: production application, big computational challenge, massively parallel, high number of tasks
– fastDNAml: well-known application in the context of MPI for Grids, workflow with synchronization steps

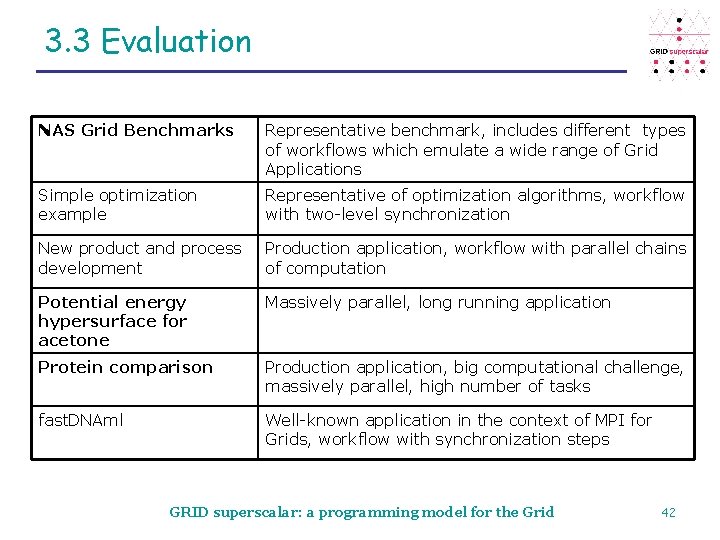

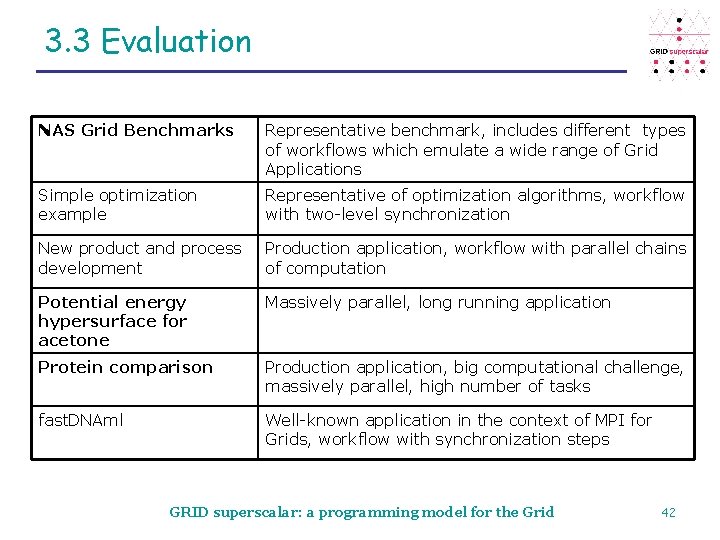

3.3 Evaluation
§ NAS Grid Benchmarks
Figure: benchmark data flow graph from Launch to Report, chaining BT, SP and LU tasks through MF filters; benchmark labels: ED, HC, MB, VP

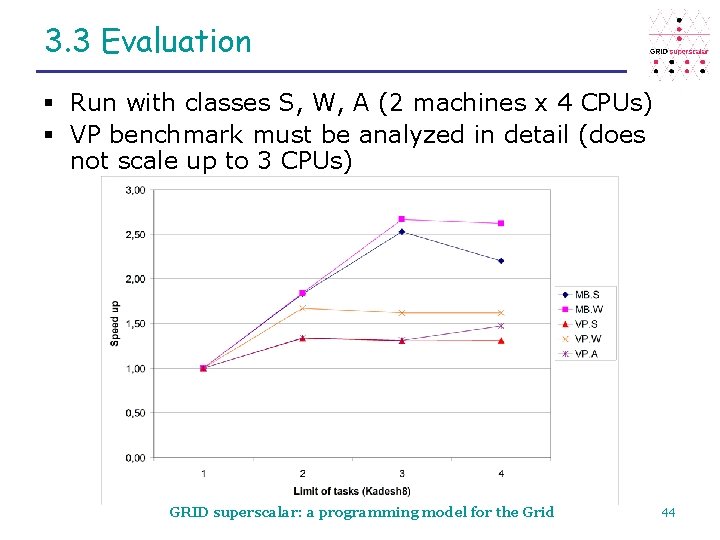

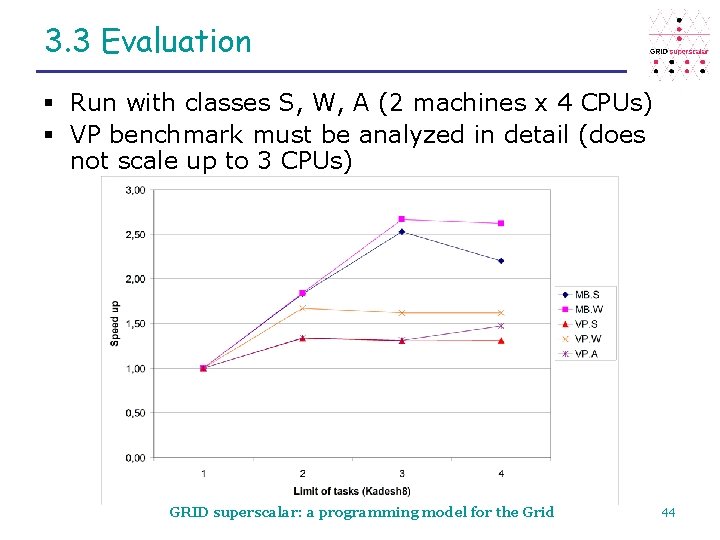

3.3 Evaluation
§ Run with classes S, W, A (2 machines x 4 CPUs)
§ VP benchmark must be analyzed in detail (does not scale up to 3 CPUs)

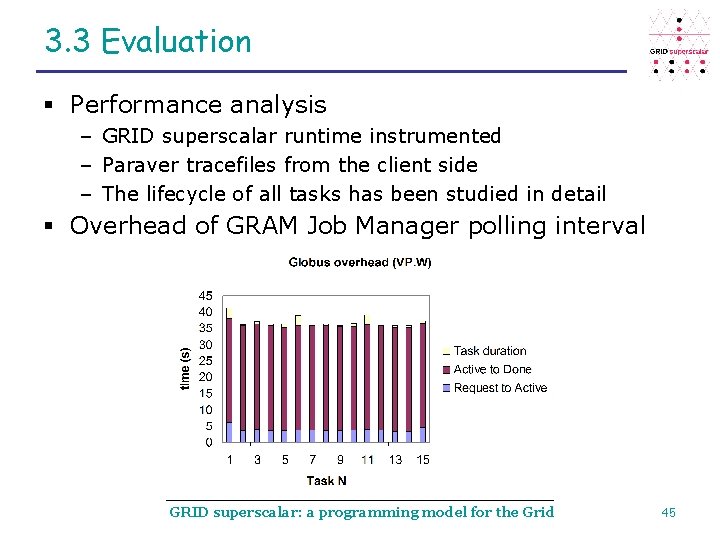

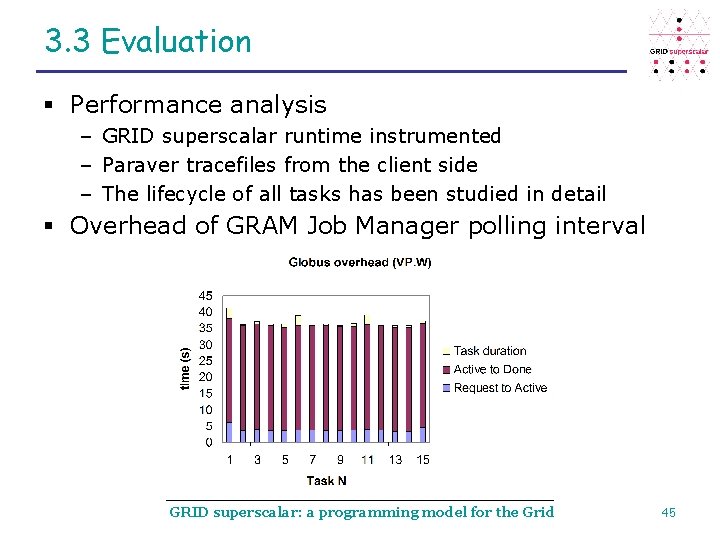

3.3 Evaluation
§ Performance analysis
– GRID superscalar runtime instrumented
– Paraver tracefiles from the client side
– The lifecycle of all tasks has been studied in detail
§ Overhead of GRAM Job Manager polling interval

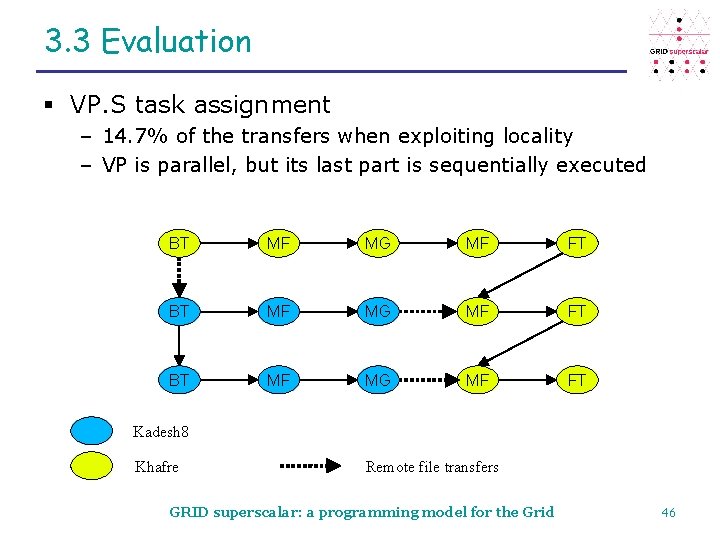

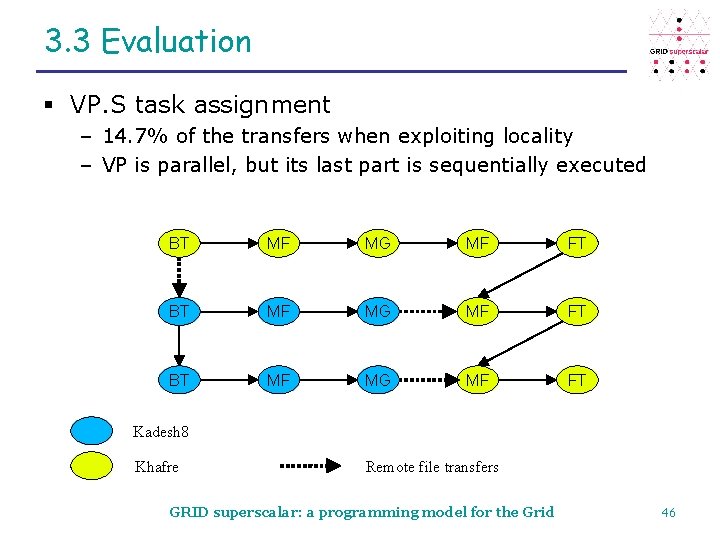

3.3 Evaluation
§ VP.S task assignment
– 14.7% of the transfers when exploiting locality
– VP is parallel, but its last part is sequentially executed
Figure: VP tasks (BT, MF, MG, MF, FT) assigned to the machines Kadesh8 and Khafre, with remote file transfers marked

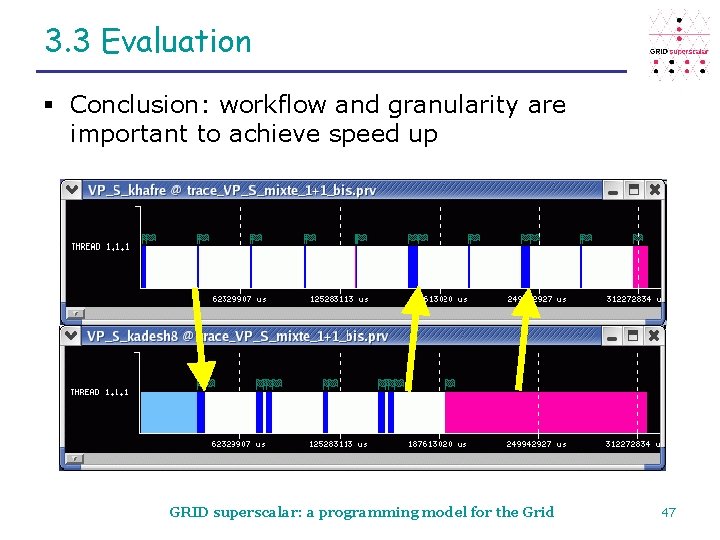

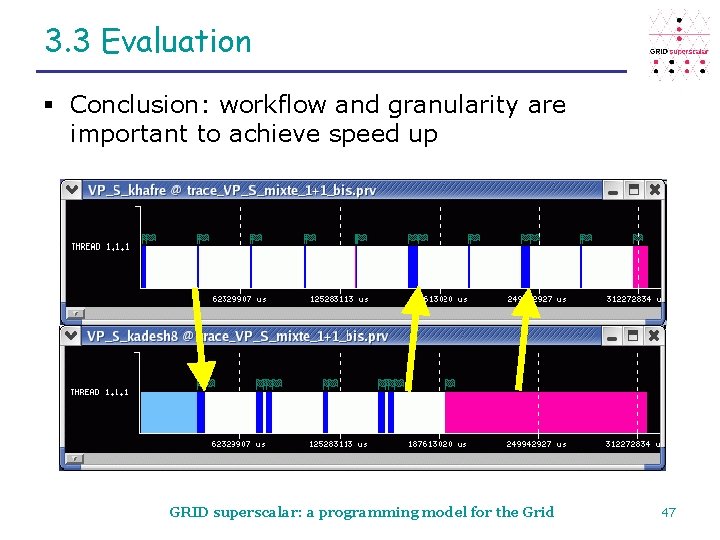

3.3 Evaluation
§ Conclusion: workflow and granularity are important to achieve speed up

3.3 Evaluation
Two-dimensional potential energy hypersurface for acetone as a function of the 1, and 2 angles

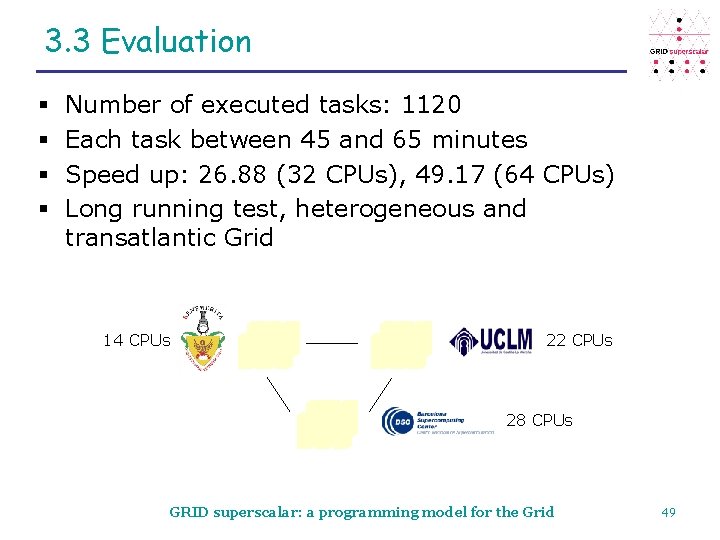

3.3 Evaluation
§ Number of executed tasks: 1120
§ Each task between 45 and 65 minutes
§ Speed up: 26.88 (32 CPUs), 49.17 (64 CPUs)
§ Long running test, heterogeneous and transatlantic Grid
Figure: three sites with 14 CPUs, 22 CPUs and 28 CPUs
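
Worked arithmetic (not part of the original slides): dividing each reported speed up by the number of CPUs gives the parallel efficiency, using the standard definition E = S / P.

```latex
\[
E = \frac{S}{P}, \qquad
E_{32} = \frac{26.88}{32} = 0.84, \qquad
E_{64} = \frac{49.17}{64} \approx 0.77
\]
```

So doubling the CPUs from 32 to 64 costs about seven points of efficiency in this test.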

3.3 Evaluation
§ 15 million protein sequences have been compared using BLAST and GRID superscalar
Figure labels: Genomes, 15 million Proteins

3.3 Evaluation
§ 100,000 tasks on 4,000 CPUs (= 1,000 exclusive nodes)
§ "Grid" of 1,000 machines with very low latency between them
– Stress test for the runtime
§ Spares the user from working with the queuing system
§ Saves the queuing system from handling a huge set of independent tasks

GRID superscalar: programming interface and runtime
§ Publications
– Raül Sirvent, Josep M. Pérez, Rosa M. Badia, Jesús Labarta, "Automatic Grid workflow based on imperative programming languages", Concurrency and Computation: Practice and Experience, John Wiley & Sons, vol. 18, no. 10, pp. 1169-1186, 2006.
– Rosa M. Badia, Raül Sirvent, Jesús Labarta, Josep M. Pérez, "Programming the GRID: An Imperative Language-based Approach", Engineering The Grid: Status and Perspective, Section 4, Chapter 12, American Scientific Publishers, January 2006.
– Rosa M. Badia, Jesús Labarta, Raül Sirvent, Josep M. Pérez, José M. Cela and Rogeli Grima, "Programming Grid Applications with GRID Superscalar", Journal of Grid Computing, Volume 1, Issue 2, 2003.

GRID superscalar: programming interface and runtime
§ Work related to standards
– R. M. Badia, D. Du, E. Huedo, A. Kokossis, I. M. Llorente, R. S. Montero, M. de Palol, R. Sirvent, and C. Vázquez, "Integration of GRID superscalar and GridWay Metascheduler with the DRMAA OGF Standard", Euro-Par, 2008.
– Raül Sirvent, Andre Merzky, Rosa M. Badia, Thilo Kielmann, "GRID superscalar and SAGA: forming a high-level and platform-independent Grid programming environment", CoreGRID Integration Workshop. Integrated Research in Grid Computing, Pisa (Italy), 2005.

Outline
1. Introduction
2. Programming interface
3. Runtime
4. Fault tolerance at the programming model level
   4.1 Checkpointing
   4.2 Retry mechanisms
   4.3 Task replication
5. Conclusions and future work

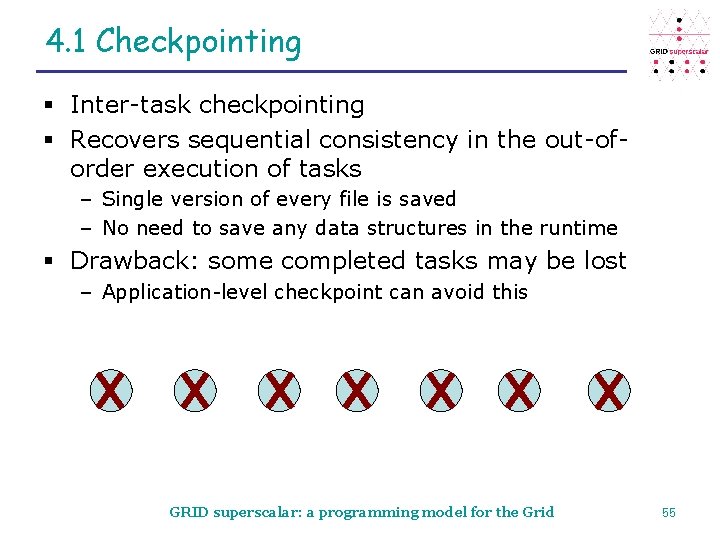

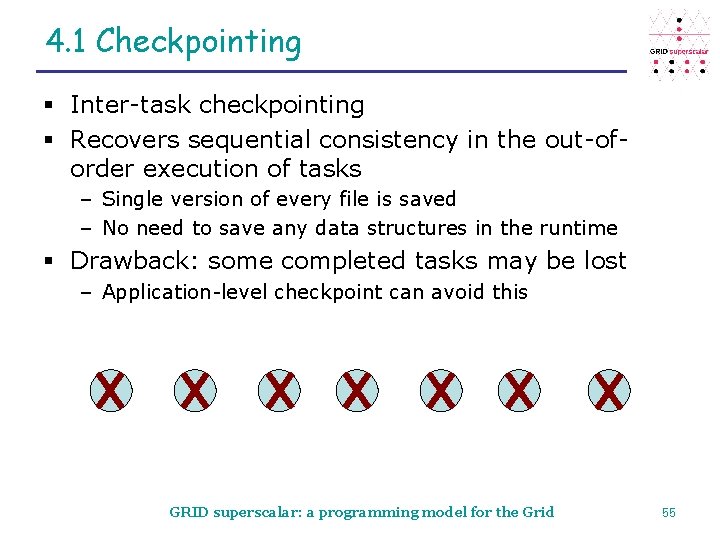

4.1 Checkpointing
§ Inter-task checkpointing
§ Recovers sequential consistency in the out-of-order execution of tasks
– Single version of every file is saved
– No need to save any data structures in the runtime
§ Drawback: some completed tasks may be lost
– Application-level checkpoint can avoid this
Figure: task graph with tasks 0 to 6
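
Sketch (not part of the original slides): one way to read "recovers sequential consistency" is that the checkpoint only advances over tasks that have finished in program order, so a task that finished out of order beyond the first unfinished one is lost on restart. This interpretation and the names below are assumptions of the sketch, not the documented runtime algorithm.

```c
#include <assert.h>
#include <stddef.h>

/* finished[i] is non-zero when task i (in program order) has completed.
 * Returns the number of leading tasks that can be skipped after a restart. */
static size_t checkpoint_position(const int *finished, size_t n)
{
    assert(finished != NULL || n == 0);
    size_t pos = 0;
    while (pos < n && finished[pos])
        pos++;
    return pos;
}

/* Counts completed tasks that lie beyond the checkpoint and would be
 * executed again after a restart (the drawback named on the slide). */
static size_t lost_tasks(const int *finished, size_t n)
{
    size_t lost = 0;
    for (size_t i = checkpoint_position(finished, n); i < n; i++) {
        if (finished[i])
            lost++;
    }
    return lost;
}
```

For seven tasks where 0, 1, 2, 4 and 5 have finished, the checkpoint stands at task 3 and the work of tasks 4 and 5 would be repeated.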

4.1 Checkpointing
§ Conclusions
– Low complexity in order to checkpoint a task
• ~1% overhead introduced
– Can deal with both application level errors and Grid level errors
• Most important when an unrecoverable error appears
– Transparent for end users

4.2 Retry mechanisms
Automatic drop of machines (figure labels: C, Middleware)

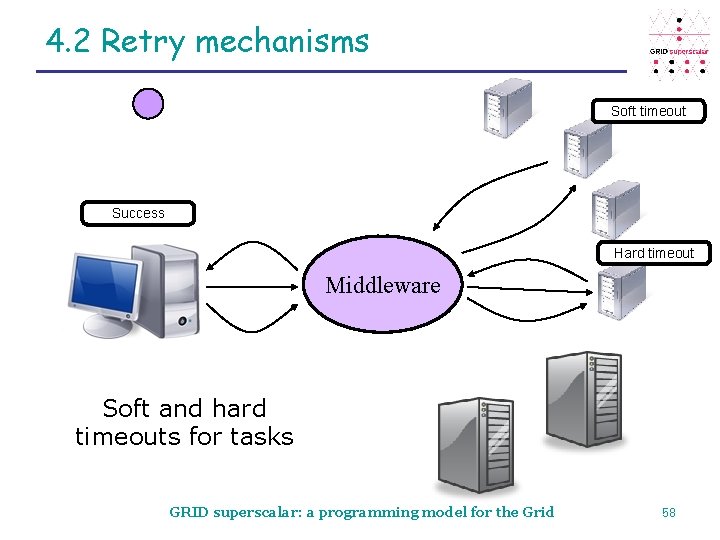

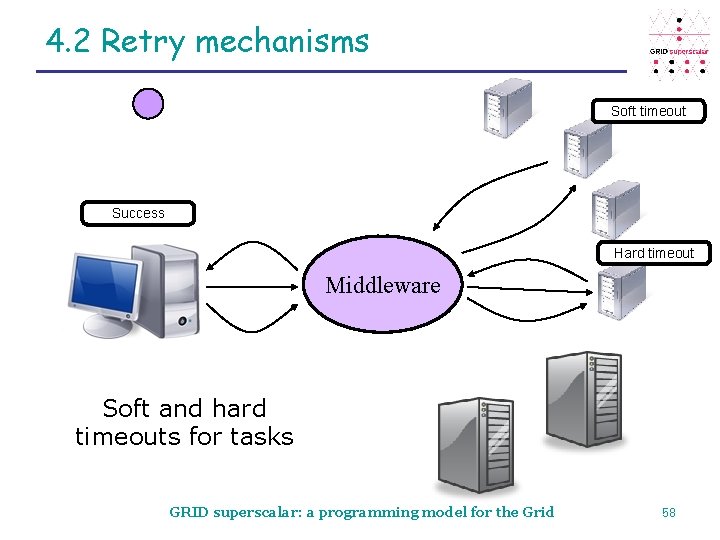

4.2 Retry mechanisms
Soft and hard timeouts for tasks (figure labels: Soft timeout, Hard timeout, Success, Failure, Middleware)
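
Sketch (not part of the original slides): the slide only names the two timeouts, so the behaviour below is an assumption. Here the soft timeout triggers a status query to the middleware, and the hard timeout gives up on the task and resubmits it. All names are hypothetical.

```c
#include <assert.h>

typedef enum { KEEP_WAITING, QUERY_STATUS, RESUBMIT } TimeoutAction;

/* Decides what to do with a running task given the seconds elapsed since
 * submission. Assumed semantics: soft timeout = ask the middleware whether
 * the task is still alive; hard timeout = cancel and resubmit. */
static TimeoutAction check_timeouts(double elapsed, double soft, double hard)
{
    assert(soft >= 0.0 && hard >= soft);
    if (elapsed >= hard)
        return RESUBMIT;
    if (elapsed >= soft)
        return QUERY_STATUS;
    return KEEP_WAITING;
}
```

With a soft timeout of 60 s and a hard timeout of 300 s, a task at 120 s would be queried and a task at 300 s would be resubmitted.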

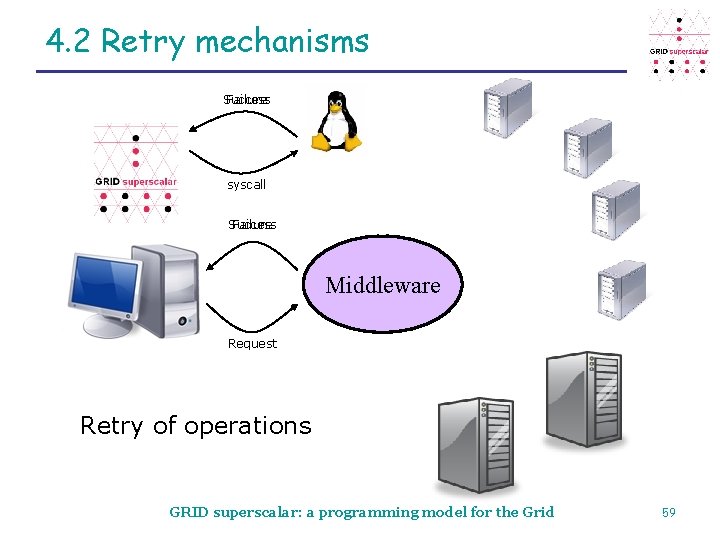

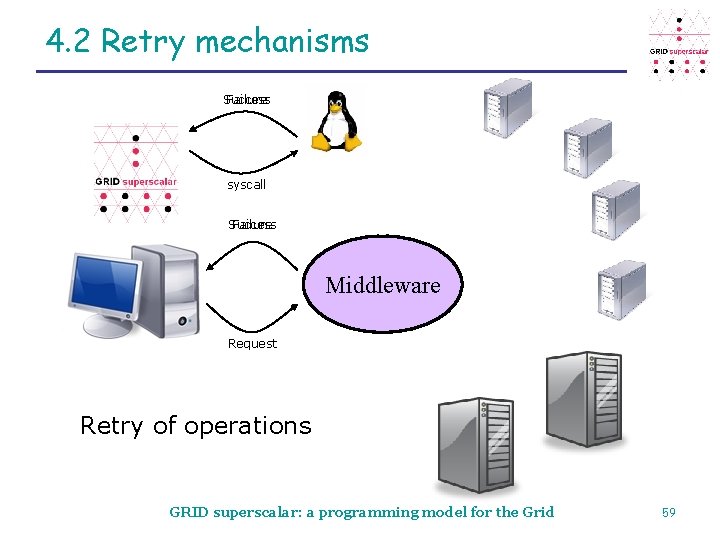

4.2 Retry mechanisms
Retry of operations (figure labels: Request, syscall, Success, Failure, Middleware)
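
Sketch (not part of the original slides): retrying a failed middleware request or system call can be written as a bounded loop around the operation. The `Operation` callback type, the attempt limit and the `flaky_request` example are hypothetical; the slides do not state how many retries the runtime performs.

```c
#include <assert.h>
#include <stddef.h>

/* An operation returns 0 on success and non-zero on failure. */
typedef int (*Operation)(void *ctx);

/* Calls op up to max_attempts times. Returns the number of attempts used
 * when the operation succeeds, or -1 if every attempt failed. */
static int retry_operation(Operation op, void *ctx, int max_attempts)
{
    assert(op != NULL && max_attempts > 0);
    for (int attempt = 1; attempt <= max_attempts; attempt++) {
        if (op(ctx) == 0)
            return attempt;
    }
    return -1;
}

/* Example operation: fails until the counter pointed to by ctx reaches zero. */
static int flaky_request(void *ctx)
{
    int *failures_left = ctx;
    if (*failures_left > 0) {
        (*failures_left)--;
        return 1;
    }
    return 0;
}
```

An operation that fails twice succeeds on the third attempt; one that keeps failing is reported after the limit so that a higher level can react, for example by dropping the machine.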

4.2 Retry mechanisms
§ Conclusions
– Keep running despite failures
– Dynamic: when and where to resubmit
– Detects performance degradations
– No overhead when no failures are detected
– Transparent for end users

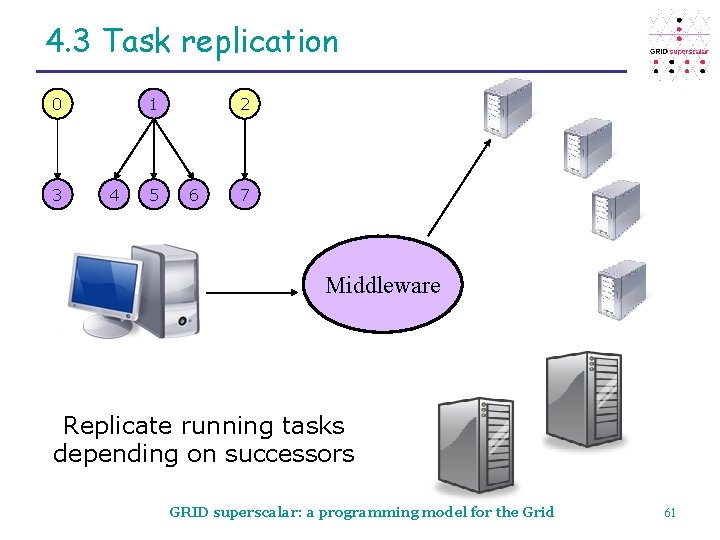

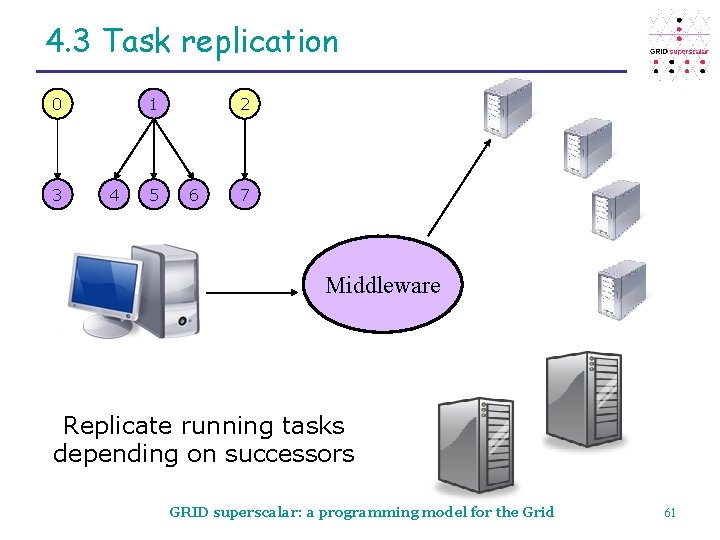

4.3 Task replication
Replicate running tasks depending on successors (figure: task graph with tasks 0 to 7 submitted through the middleware)
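
Sketch (not part of the original slides): "depending on successors" can be illustrated by counting how many tasks wait directly on a running task and replicating it when that count reaches a threshold. The edge list, the use of direct successors only, and the threshold are assumptions of this sketch, not the policy described in the thesis.

```c
#include <assert.h>
#include <stddef.h>

/* One workflow dependence: task `to` needs the output of task `from`. */
typedef struct {
    int from;
    int to;
} Edge;

/* Number of tasks that depend directly on `task`. */
static int count_successors(const Edge *edges, size_t n_edges, int task)
{
    assert(edges != NULL || n_edges == 0);
    int count = 0;
    for (size_t i = 0; i < n_edges; i++) {
        if (edges[i].from == task)
            count++;
    }
    return count;
}

/* Returns 1 when a running task has enough direct successors to justify
 * spending extra resources on a replica, 0 otherwise. */
static int should_replicate(const Edge *edges, size_t n_edges,
                            int task, int min_successors)
{
    return count_successors(edges, n_edges, task) >= min_successors;
}
```

In a graph where task 0 feeds tasks 3 and 4 while task 2 feeds only task 5, a threshold of two would replicate task 0 and leave task 2 alone.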

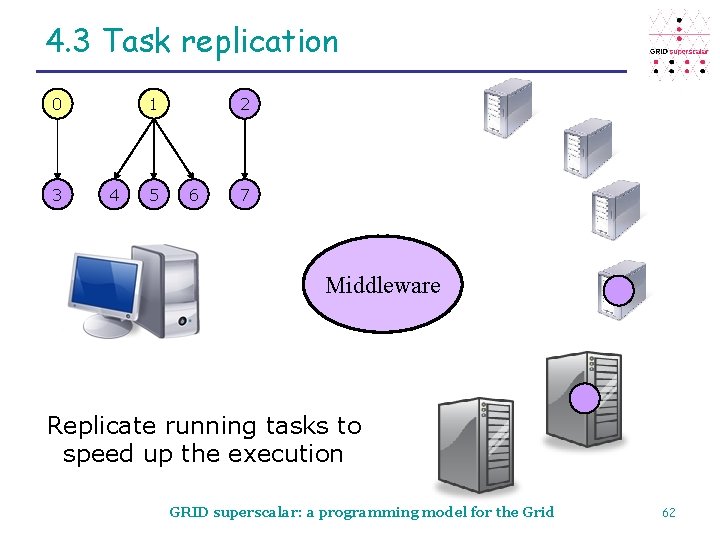

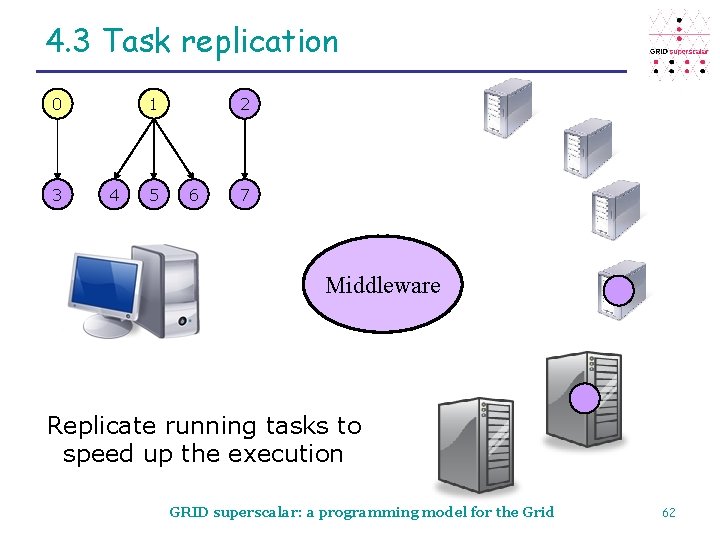

4.3 Task replication
Replicate running tasks to speed up the execution (figure: task graph with tasks 0 to 7 submitted through the middleware)

4.3 Task replication
§ Conclusions
– Dynamic replication: application level knowledge is used (the workflow)
– Replication can deal with failures, hiding the retry overhead
– Replication can speed up applications in heterogeneous Grids
– Transparent for end users
– Drawback: increased usage of resources

4. Fault tolerance features
§ Publications
– Vasilis Dialinos, Rosa M. Badia, Raül Sirvent, Josep M. Pérez and Jesús Labarta, "Implementing Phylogenetic Inference with GRID superscalar", Cluster Computing and Grid 2005 (CCGRID 2005), Cardiff, UK, 2005.
– Raül Sirvent, Rosa M. Badia and Jesús Labarta, "Graph-based task replication for workflow applications", Submitted, HPCC 2009.

Outline
1. Introduction
2. Programming interface
3. Runtime
4. Fault tolerance at the programming model level
5. Conclusions and future work

5. Conclusions and future work
§ Grid-unaware programming model
§ Transparent features for users, exploiting parallelism and failure treatment
§ Used in REAL systems and REAL applications
§ Some future research is already ONGOING (StarSs)

5. Conclusions and future work
§ Future work
– Grid of supercomputers (Red Española de Supercomputación)
– Higher scale tests (hundreds? thousands?)
– More complex brokering
• Resource discovery/monitoring
• New scheduling policies based on the workflow
• Automatic prediction of execution times
– New policies for task replication
– New architectures for StarSs