Graph Processing COS 518 Advanced Computer Systems Lecture

- Slides: 15

Graph Processing
COS 518: Advanced Computer Systems
Lecture 12
Mike Freedman
[Content adapted from K. Jamieson and J. Gonzalez]

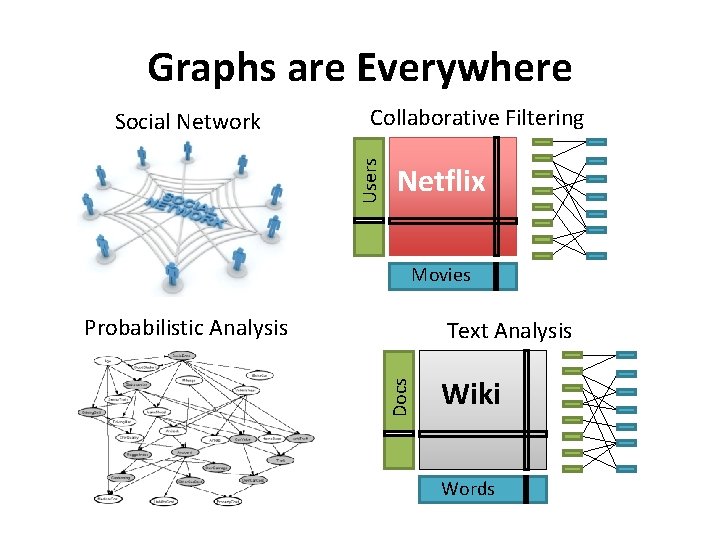

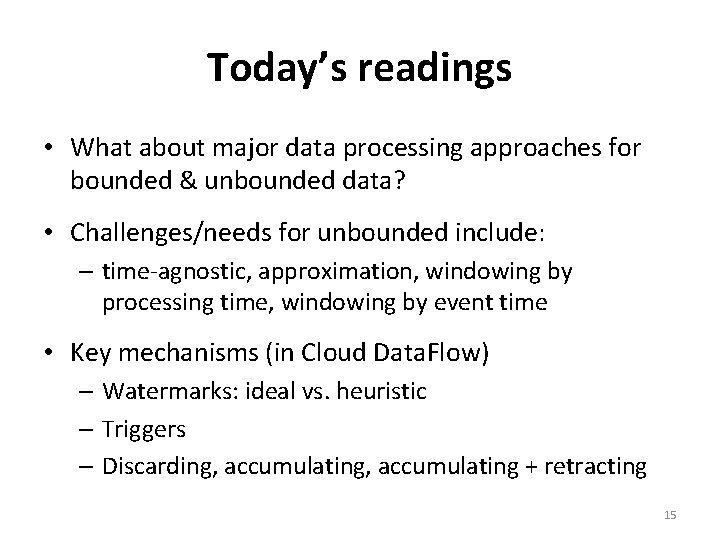

Graphs are Everywhere
• Social Network
• Collaborative Filtering (Netflix): Users, Movies
• Probabilistic Analysis
• Text Analysis (Wiki): Docs, Words

Concrete Examples
• Label Propagation
• PageRank

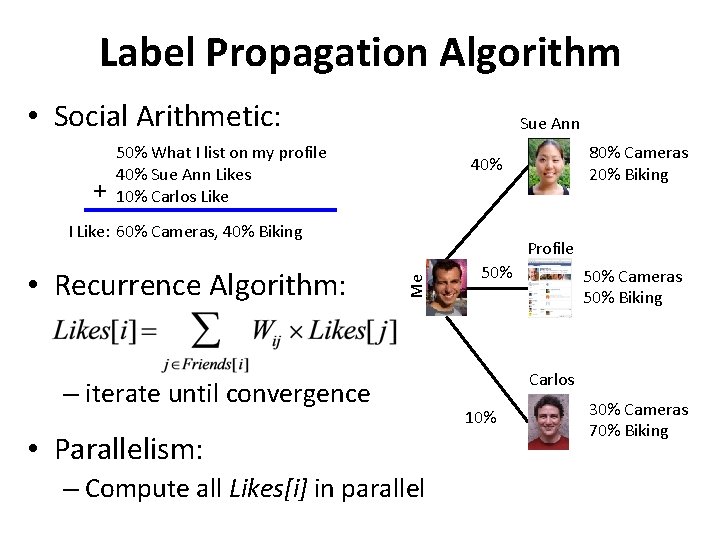

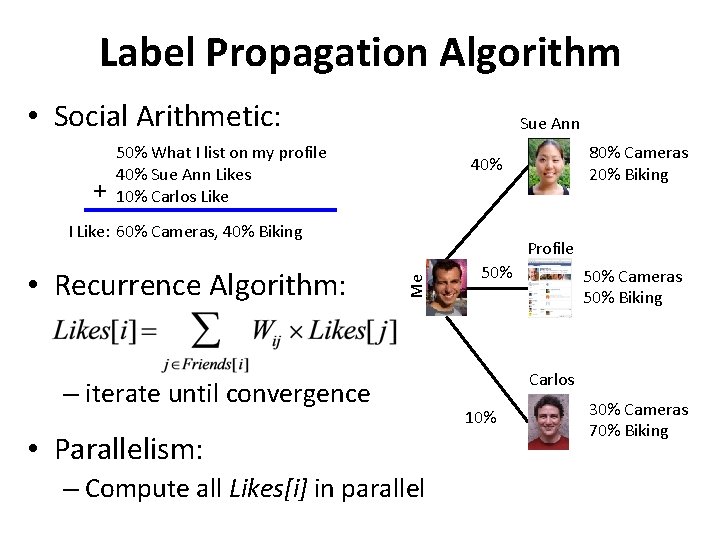

Label Propagation Algorithm
• Social Arithmetic: 50% What I list on my profile + 40% What Sue Ann likes + 10% What Carlos likes = I Like: 60% Cameras, 40% Biking
  – Profile: 50% Cameras, 50% Biking
  – Sue Ann: 80% Cameras, 20% Biking
  – Carlos: 30% Cameras, 70% Biking
• Recurrence Algorithm:
  – iterate until convergence
• Parallelism:
  – Compute all Likes[i] in parallel
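The social arithmetic on this slide can be checked with a short sketch. It assumes the recurrence is a weighted average of neighbors' interest distributions, which is what the 50% / 40% / 10% example suggests; the names `weighted_likes`, `propagate`, `W`, and the dictionary layout are hypothetical, and vertices whose interests are fixed are modeled here with a self-weight of 1.0.

```python
def weighted_likes(weights, likes):
    """Weighted average of neighbors' interest distributions for one vertex."""
    topics = {t for dist in likes.values() for t in dist}
    return {
        t: sum(w * likes[j].get(t, 0.0) for j, w in weights.items())
        for t in topics
    }


def propagate(W, likes, tol=1e-9, max_iters=100):
    """Recompute every vertex from the previous iterate until nothing changes.

    W[i][j] is the weight vertex i places on vertex j (assumed to sum to 1).
    Each vertex's update reads only the previous iterate, so all Likes[i]
    within one iteration could be computed in parallel.
    """
    for _ in range(max_iters):
        new = {i: weighted_likes(W[i], likes) for i in W}
        delta = max(
            abs(new[i][t] - likes[i].get(t, 0.0)) for i in new for t in new[i]
        )
        likes = new
        if delta < tol:
            break
    return likes


# Numbers from the slide; "me" starts with no known interests.
W = {
    "me": {"profile": 0.5, "sue_ann": 0.4, "carlos": 0.1},
    "profile": {"profile": 1.0},  # fixed vertices keep their own values
    "sue_ann": {"sue_ann": 1.0},
    "carlos": {"carlos": 1.0},
}
likes = {
    "me": {},
    "profile": {"cameras": 0.5, "biking": 0.5},
    "sue_ann": {"cameras": 0.8, "biking": 0.2},
    "carlos": {"cameras": 0.3, "biking": 0.7},
}
result = propagate(W, likes)
```

With these inputs, `result["me"]` works out to roughly 60% cameras and 40% biking, matching the slide.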

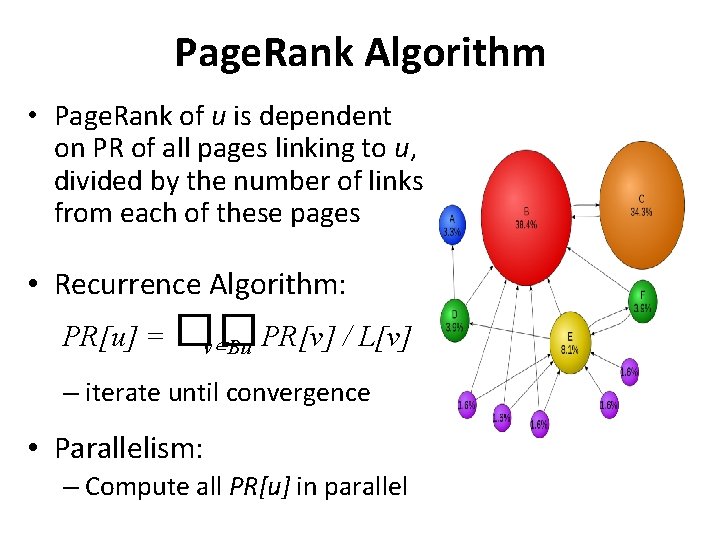

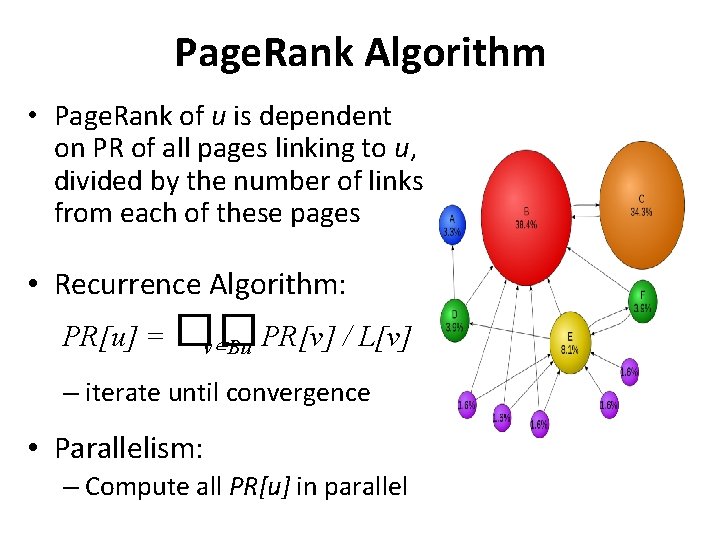

PageRank Algorithm
• The PageRank of u depends on the PR of every page v linking to u, each divided by the number of links L[v] from that page
• Recurrence Algorithm: $PR[u] = \sum_{v \in B_u} \frac{PR[v]}{L[v]}$, where $B_u$ is the set of pages linking to u
  – iterate until convergence
• Parallelism:
  – Compute all PR[u] in parallel
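A minimal sketch of this recurrence follows. It implements the simplified form on the slide (no damping factor), and assumes every page has at least one outgoing link; the function name `pagerank`, the `links` dictionary, and the three-page example graph are hypothetical.

```python
def pagerank(links, iters=100):
    """Iterate PR[u] = sum over v in B_u of PR[v] / L[v].

    links[v] lists the pages that v links to; every page is assumed to
    appear as a key and to have at least one outgoing link.
    """
    pages = list(links)
    pr = {u: 1.0 / len(pages) for u in pages}

    # B_u: the set of pages linking to u.
    backlinks = {u: [] for u in pages}
    for v, outs in links.items():
        for u in outs:
            backlinks[u].append(v)

    for _ in range(iters):
        # Each PR[u] reads only the previous iterate, so all pages
        # could be updated in parallel within one iteration.
        pr = {
            u: sum(pr[v] / len(links[v]) for v in backlinks[u])
            for u in pages
        }
    return pr


# Hypothetical graph: a -> b, a -> c, b -> c, c -> a
ranks = pagerank({"a": ["b", "c"], "b": ["c"], "c": ["a"]})
```

On this small graph the iteration settles at roughly a = 0.4, b = 0.2, c = 0.4, and the ranks keep summing to 1.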

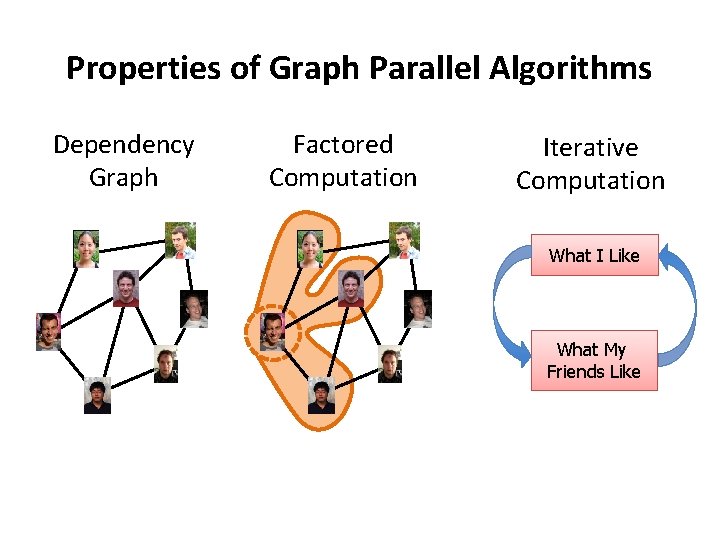

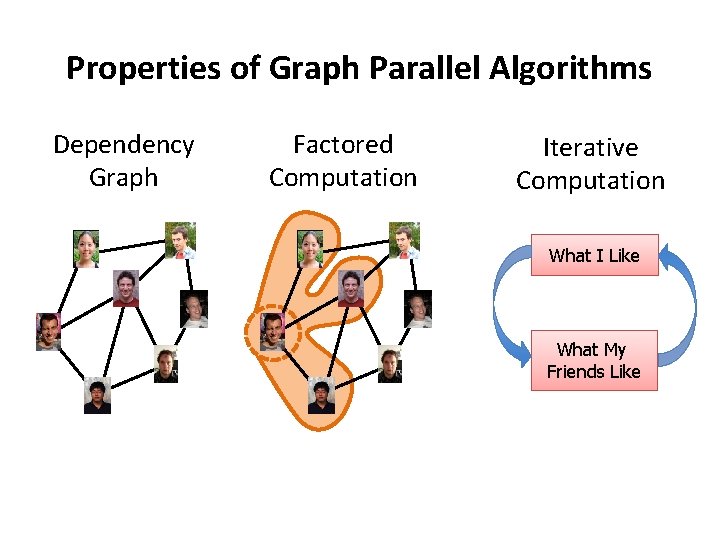

Properties of Graph Parallel Algorithms
• Dependency Graph
• Factored Computation
• Iterative Computation
[Figure labels: What I Like, What My Friends Like]

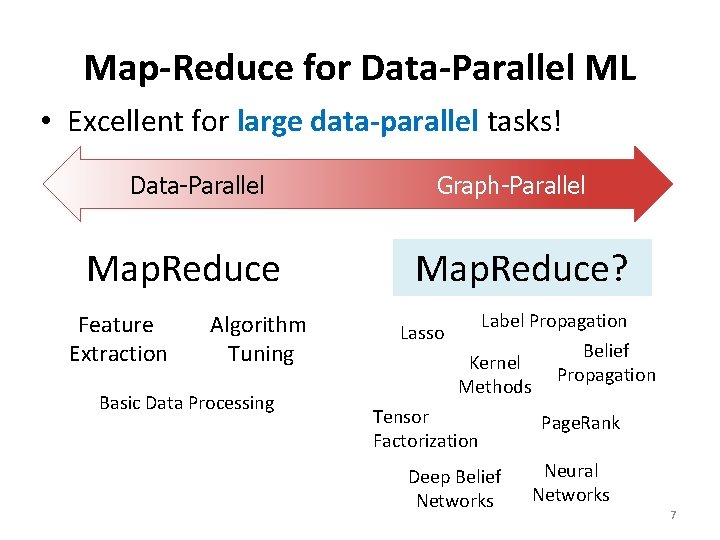

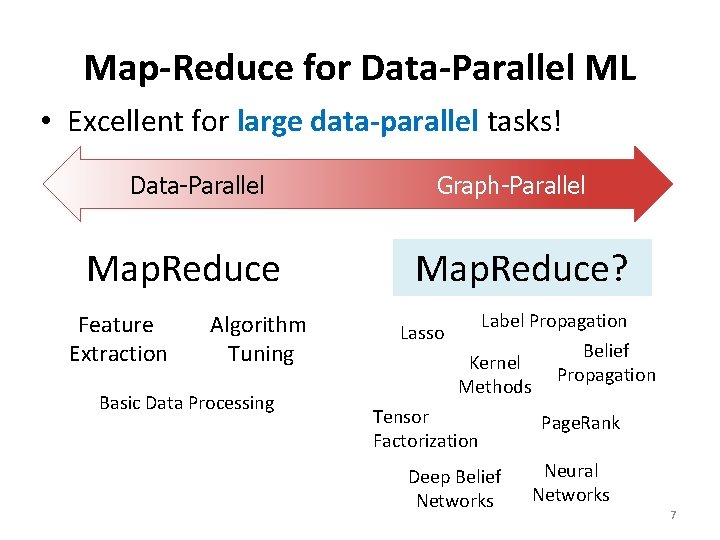

Map-Reduce for Data-Parallel ML
• Excellent for large data-parallel tasks!
• Data-Parallel (MapReduce): Feature Extraction, Algorithm Tuning, Basic Data Processing
• Graph-Parallel (MapReduce?): Label Propagation, Lasso, Kernel Methods, Tensor Factorization, Deep Belief Networks, Belief Propagation, PageRank, Neural Networks

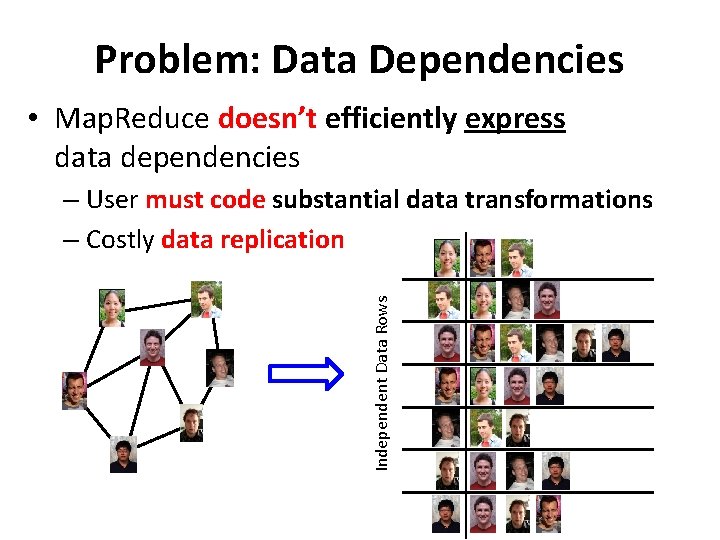

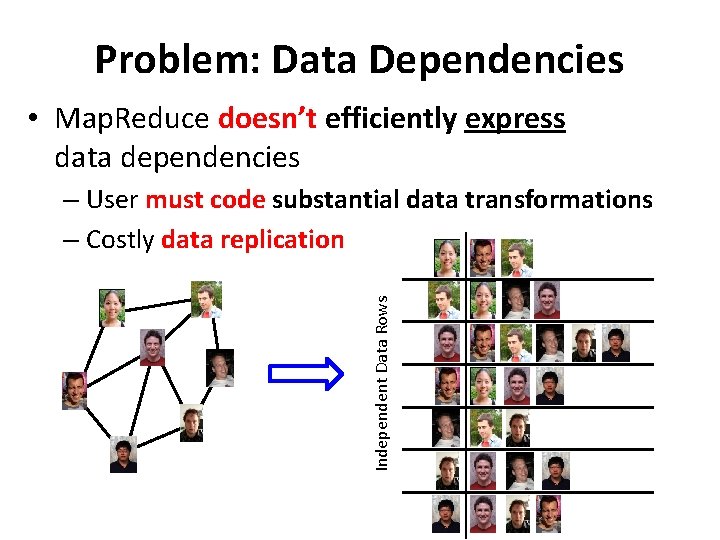

Problem: Data Dependencies
• MapReduce doesn’t efficiently express data dependencies
  – User must code substantial data transformations
  – Costly data replication
[Figure label: Independent Data Rows]
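To make the data-dependency problem concrete, here is a sketch of one PageRank iteration written in map/reduce style and simulated in plain Python. No real MapReduce framework API is used; the names `map_page`, `reduce_page`, and `mapreduce_iteration` and the tagged-tuple encoding are assumptions for illustration. Note that the mapper has to re-emit each page's adjacency list on every iteration so the graph structure survives the shuffle, which is one form of the transformation and replication cost listed above.

```python
from collections import defaultdict


def map_page(page, rank, outlinks):
    """Emit the graph structure plus one rank contribution per outlink."""
    # The adjacency list must be re-emitted so the reducer can rebuild
    # the page record for the next iteration.
    yield page, ("links", outlinks)
    for dest in outlinks:
        yield dest, ("rank", rank / len(outlinks))


def reduce_page(page, values):
    """Sum incoming contributions and recover the adjacency list."""
    outlinks, total = [], 0.0
    for kind, payload in values:
        if kind == "links":
            outlinks = payload
        else:
            total += payload
    return page, (total, outlinks)


def mapreduce_iteration(state):
    """Simulate map, shuffle (group by key), and reduce for one iteration."""
    shuffled = defaultdict(list)
    for page, (rank, outlinks) in state.items():
        for key, value in map_page(page, rank, outlinks):
            shuffled[key].append(value)
    return dict(reduce_page(page, values) for page, values in shuffled.items())


# Hypothetical three-page graph: a -> b, a -> c, b -> c, c -> a
state = {
    "a": (1 / 3, ["b", "c"]),
    "b": (1 / 3, ["c"]),
    "c": (1 / 3, ["a"]),
}
next_state = mapreduce_iteration(state)
```

Each vertex update here needs only its in-neighbors' ranks, yet the whole graph is shipped through the shuffle once per iteration.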

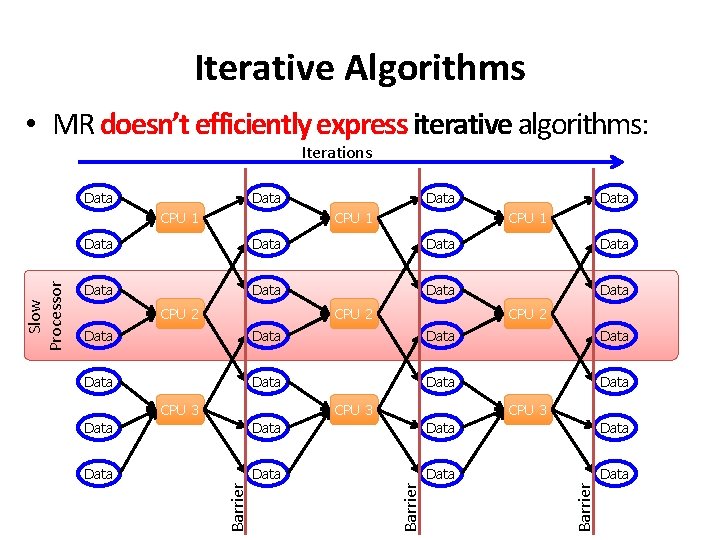

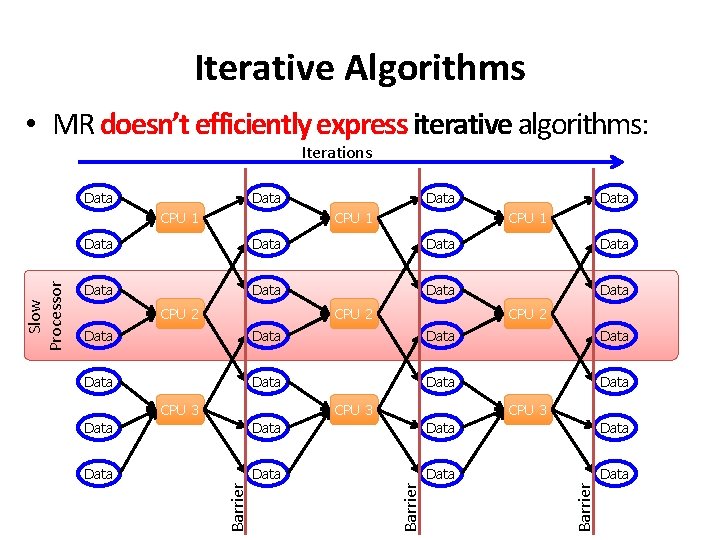

Iterative Algorithms
• MR doesn’t efficiently express iterative algorithms:
[Figure: data partitioned across CPU 1, CPU 2, and CPU 3 over several iterations, with a barrier between iterations and one CPU marked Slow Processor]
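The cost of a barrier with one slow processor can be illustrated with simple arithmetic. This sketch assumes each iteration ends only when the slowest worker finishes; the function name `barrier_iteration_cost` and the per-worker times are hypothetical, not measurements.

```python
def barrier_iteration_cost(worker_times):
    """Return (wall-clock time, total idle time) for one barrier-synchronized iteration."""
    wall = max(worker_times)  # everyone waits for the slowest worker
    idle = sum(wall - t for t in worker_times)
    return wall, idle


# Hypothetical per-worker compute times (seconds) for a single iteration.
times = {"CPU 1": 1.0, "CPU 2": 1.0, "CPU 3": 3.0}
wall, idle = barrier_iteration_cost(list(times.values()))
```

With these made-up numbers, the iteration takes as long as CPU 3, and the other two CPUs sit idle for most of it.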

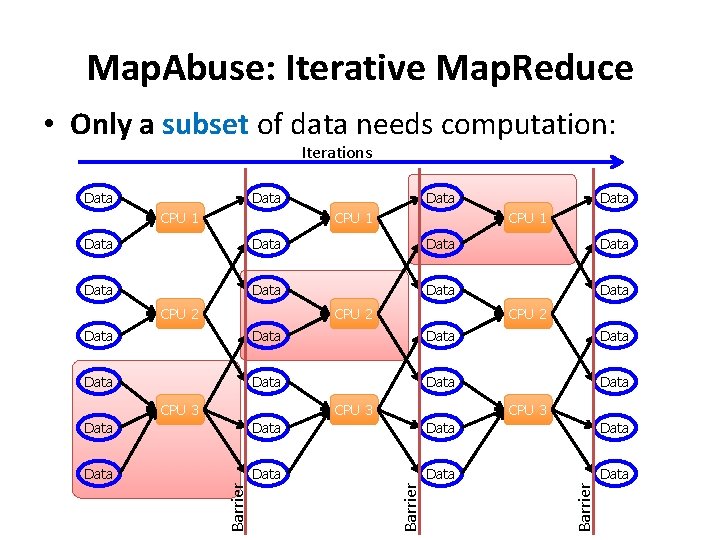

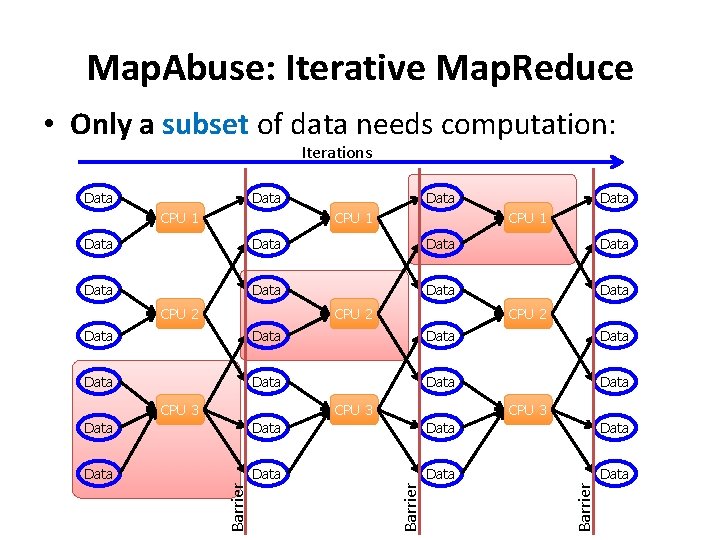

MapAbuse: Iterative MapReduce
• Only a subset of data needs computation:
[Figure: data partitioned across CPU 1, CPU 2, and CPU 3 over several iterations, with a barrier between iterations]

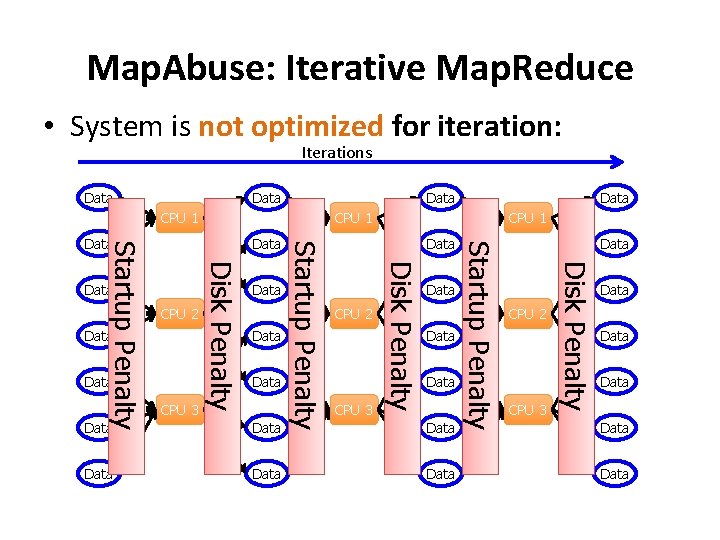

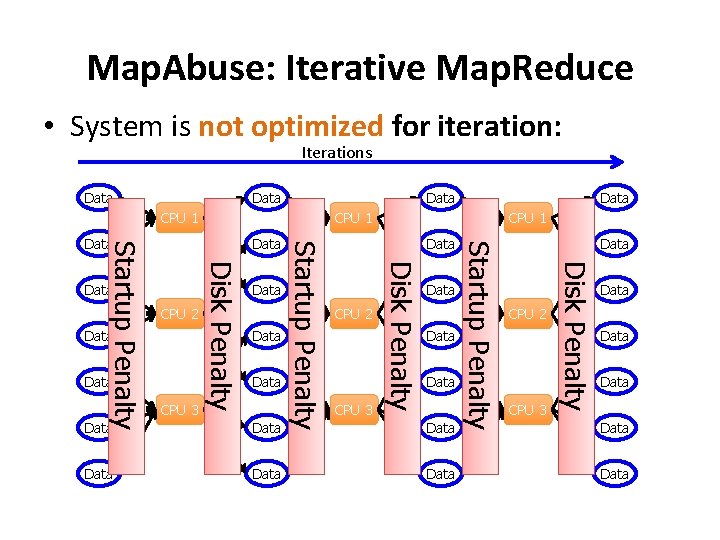

MapAbuse: Iterative MapReduce
• System is not optimized for iteration:
[Figure: data partitioned across CPU 1, CPU 2, and CPU 3 over several iterations, with each iteration marked by a Startup Penalty and a Disk Penalty]

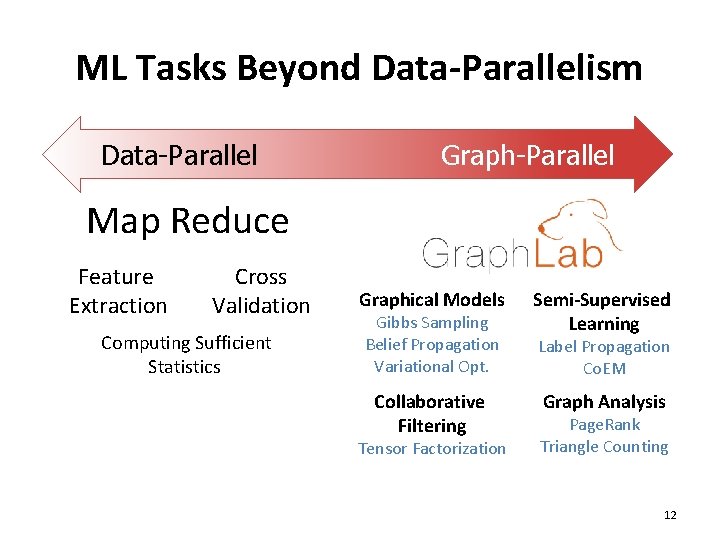

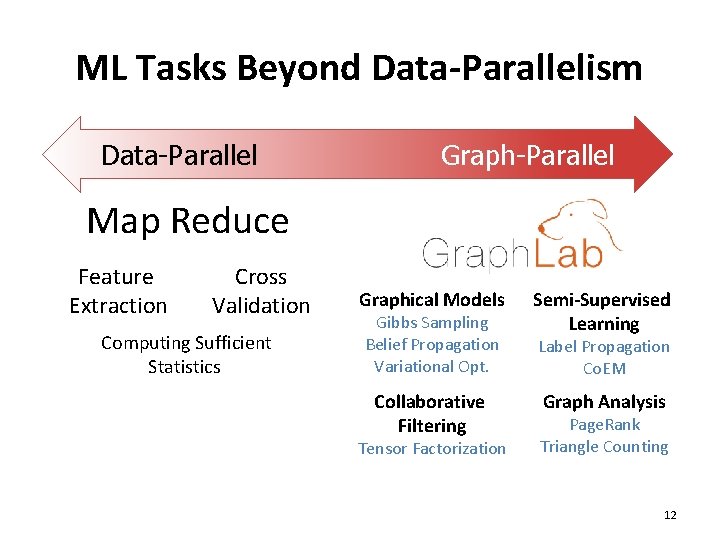

ML Tasks Beyond Data-Parallelism
• Data-Parallel (MapReduce): Feature Extraction, Cross Validation, Computing Sufficient Statistics
• Graph-Parallel:
  – Graphical Models: Gibbs Sampling, Belief Propagation, Variational Opt.
  – Collaborative Filtering: Tensor Factorization
  – Semi-Supervised Learning: Label Propagation, CoEM
  – Graph Analysis: PageRank, Triangle Counting

This week’s lectures
• Graph processing
  – Why the relationships, sampling, and iterations often used in graph processing are not a good fit for MapReduce
  – How to take a graph-centric processing perspective
• Machine learning
  – These systems address one type of ML algorithm
  – What other systems are needed, particularly given the heavy focus on iterative algorithms

Today’s readings
• Streaming is about unbounded data sets, not particular execution engines
• Streaming is in fact a strict superset of batch; the Lambda Architecture is destined for retirement
• Needs of good streaming systems: correctness and tools for reasoning about time
• Differences between event time and processing time, and the challenges they impose

Today’s readings
• What are the major data processing approaches for bounded and unbounded data?
• Challenges/needs for unbounded data include:
  – time-agnostic, approximation, windowing by processing time, windowing by event time
• Key mechanisms (in Cloud Dataflow)
  – Watermarks: ideal vs. heuristic
  – Triggers
  – Discarding, accumulating + retracting
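The difference between windowing by processing time and windowing by event time can be sketched in a few lines. This is plain Python, not the Cloud Dataflow API; the function `fixed_windows`, the event records, and the 60-second window size are all hypothetical.

```python
from collections import defaultdict


def fixed_windows(events, key, size):
    """Group event values into fixed windows of `size` seconds using timestamp `key`."""
    windows = defaultdict(list)
    for event in events:
        start = (event[key] // size) * size  # start of the window containing the timestamp
        windows[start].append(event["value"])
    return dict(windows)


# Hypothetical events: "b" occurred at t=58 but was only processed at t=61.
events = [
    {"event_time": 5, "processing_time": 12, "value": "a"},
    {"event_time": 58, "processing_time": 61, "value": "b"},
    {"event_time": 65, "processing_time": 66, "value": "c"},
]

by_event_time = fixed_windows(events, "event_time", 60)
by_processing_time = fixed_windows(events, "processing_time", 60)
```

Here the delayed event "b" lands in the first window when grouped by event time but in the second when grouped by processing time, which is the kind of skew that watermarks and triggers are meant to help reason about.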