Graduate attribute assessment as a COURSE INSTRUCTOR
http://bit.ly/KK6Rsc
Brian Frank and Jake Kaupp
CEEA Workshop W2-1B

WHY?

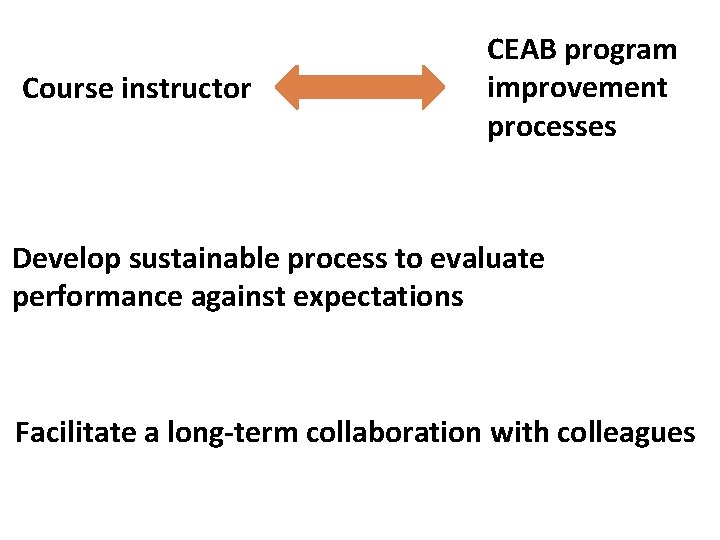

Course instructor
CEAB program improvement processes
- Develop sustainable process to evaluate performance against expectations
- Facilitate a long-term collaboration with colleagues

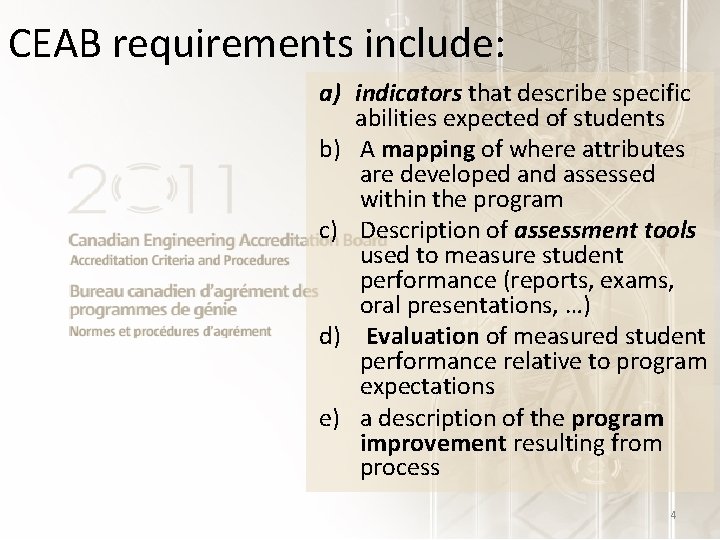

CEAB requirements include:
a) Indicators that describe specific abilities expected of students
b) A mapping of where attributes are developed and assessed within the program
c) Description of assessment tools used to measure student performance (reports, exams, oral presentations, …)
d) Evaluation of measured student performance relative to program expectations
e) A description of the program improvement resulting from process

Graduate attributes required
1. Knowledge base for engineering
2. Problem analysis
3. Investigation
4. Design
5. Use of engineering tools
6. Individual and team work
7. Communication skills
8. Professionalism
9. Impact on society and environment
10. Ethics and equity
11. Economics and project management
12. Lifelong learning

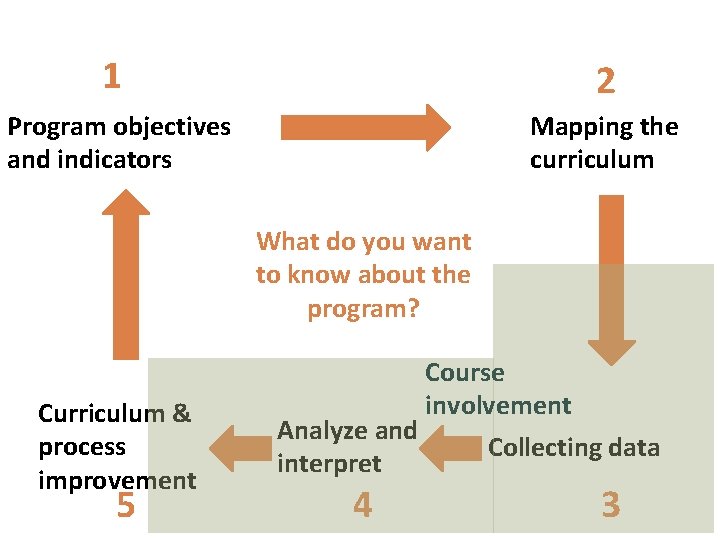

What do you want to know about the program?
1. Program objectives and indicators
2. Mapping the curriculum
3. Collecting data
4. Analyze and interpret
5. Curriculum & process improvement
Course involvement

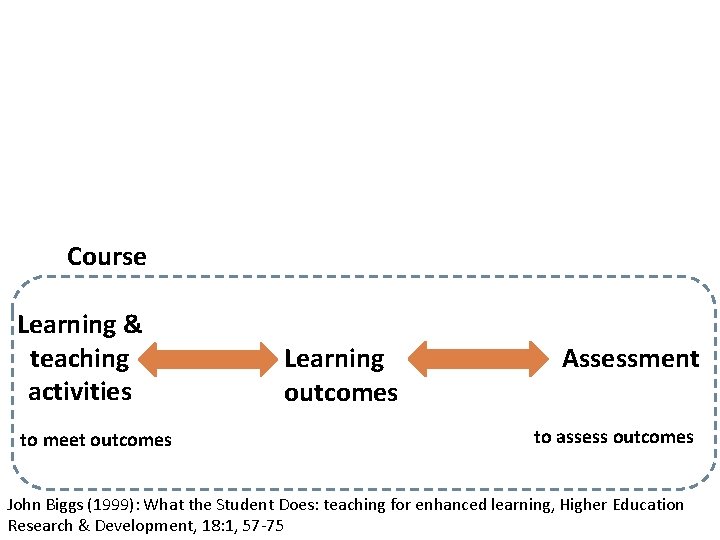

Course
- Learning outcomes
- Learning & teaching activities to meet outcomes
- Assessment to assess outcomes

John Biggs (1999): What the Student Does: teaching for enhanced learning, Higher Education Research & Development, 18:1, 57-75

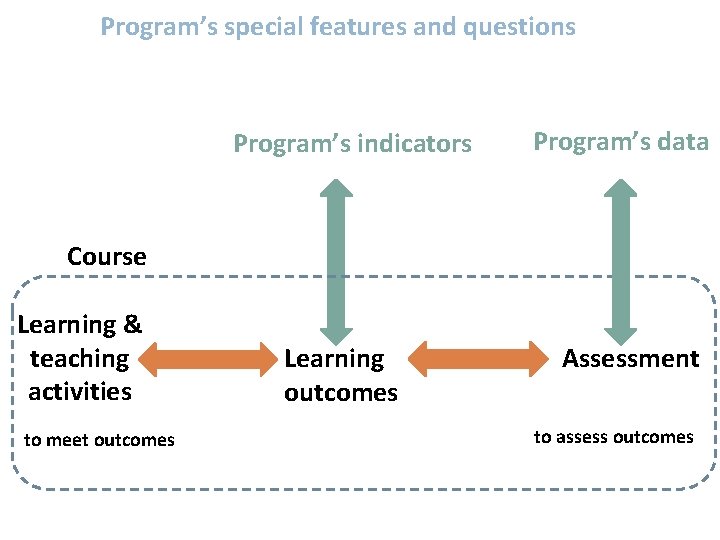

Program’s special features and questions
Program’s indicators
Program’s data
Course
- Learning outcomes
- Learning & teaching activities to meet outcomes
- Assessment to assess outcomes

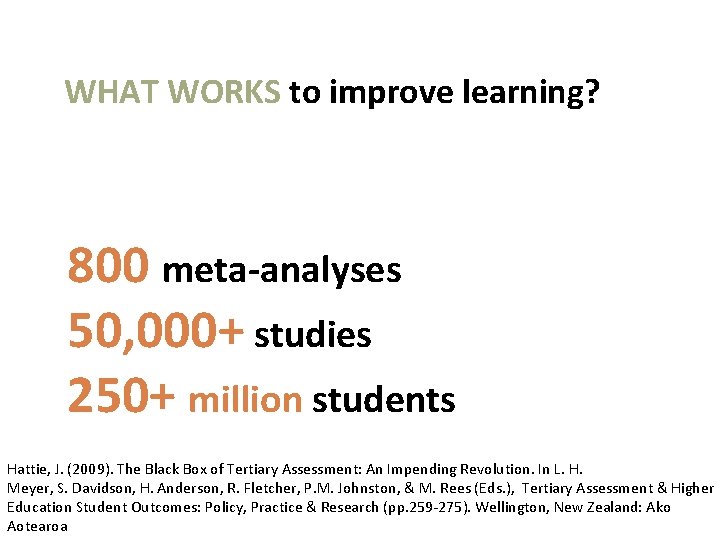

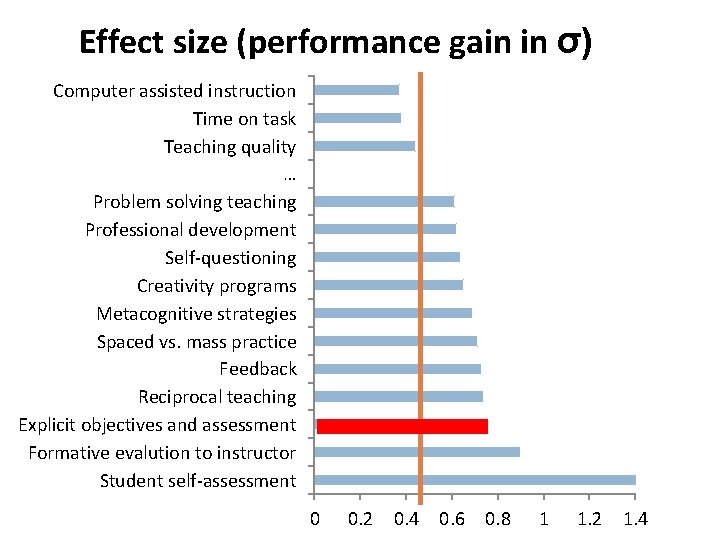

WHAT WORKS to improve learning?
800 meta-analyses, 50,000+ studies, 250+ million students
Hattie, J. (2009). The Black Box of Tertiary Assessment: An Impending Revolution. In L. H. Meyer, S. Davidson, H. Anderson, R. Fletcher, P. M. Johnston, & M. Rees (Eds.), Tertiary Assessment & Higher Education Student Outcomes: Policy, Practice & Research (pp. 259-275). Wellington, New Zealand: Ako Aotearoa

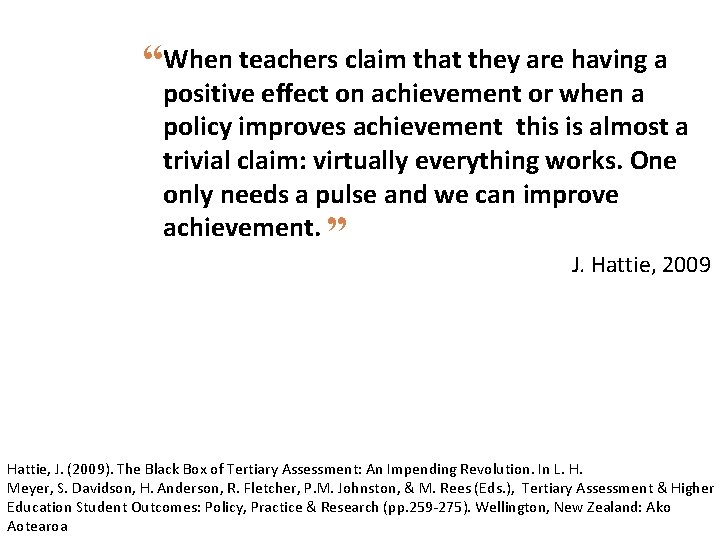

“When teachers claim that they are having a positive effect on achievement or when a policy improves achievement this is almost a trivial claim: virtually everything works. One only needs a pulse and we can improve achievement.” J. Hattie, 2009
Hattie, J. (2009). The Black Box of Tertiary Assessment: An Impending Revolution. In L. H. Meyer, S. Davidson, H. Anderson, R. Fletcher, P. M. Johnston, & M. Rees (Eds.), Tertiary Assessment & Higher Education Student Outcomes: Policy, Practice & Research (pp. 259-275). Wellington, New Zealand: Ako Aotearoa

Effect size (performance gain in σ), horizontal axis from 0 to 1.4
- Computer assisted instruction
- Time on task
- Teaching quality
- …
- Problem solving teaching
- Professional development
- Self-questioning
- Creativity programs
- Metacognitive strategies
- Spaced vs. mass practice
- Feedback
- Reciprocal teaching
- Explicit objectives and assessment
- Formative evaluation to instructor
- Student self-assessment
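The chart expresses each intervention as a gain measured in standard deviations. A minimal sketch of how such an effect size could be computed for two groups of scores, assuming Cohen's d with a pooled standard deviation; the function name and the sample scores are hypothetical and are not data from Hattie's synthesis.

```python
from math import sqrt
from statistics import mean, stdev


def effect_size(control, treatment):
    """Cohen's d: difference in means divided by the pooled standard deviation."""
    n_c, n_t = len(control), len(treatment)
    s_c, s_t = stdev(control), stdev(treatment)
    pooled = sqrt(((n_c - 1) * s_c**2 + (n_t - 1) * s_t**2) / (n_c + n_t - 2))
    return (mean(treatment) - mean(control)) / pooled


# Hypothetical exam scores (out of 100), for illustration only.
control = [60, 65, 70, 75, 80]
treatment = [66, 71, 76, 81, 86]

d = effect_size(control, treatment)
print(f"Effect size: {d:.2f} standard deviations")
```

A value near 0.76 here would mean the treatment group scored about three quarters of a standard deviation higher than the control group.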

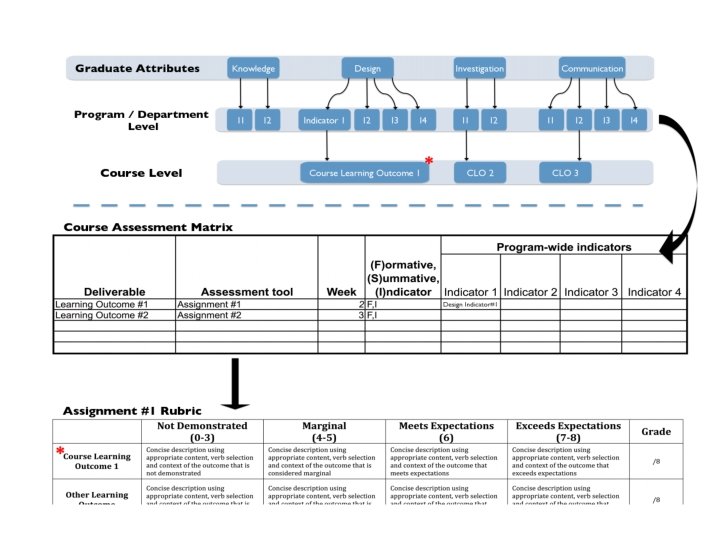

Mapping indicators to a course

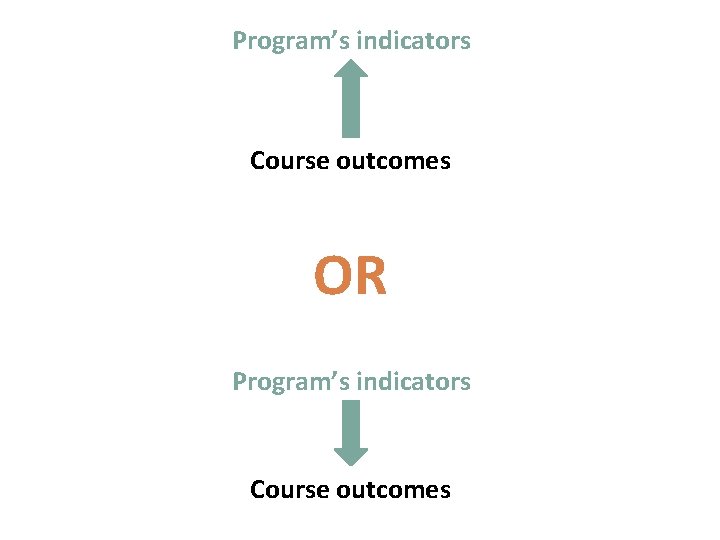

Program’s indicators Course outcomes OR Program’s indicators Course outcomes

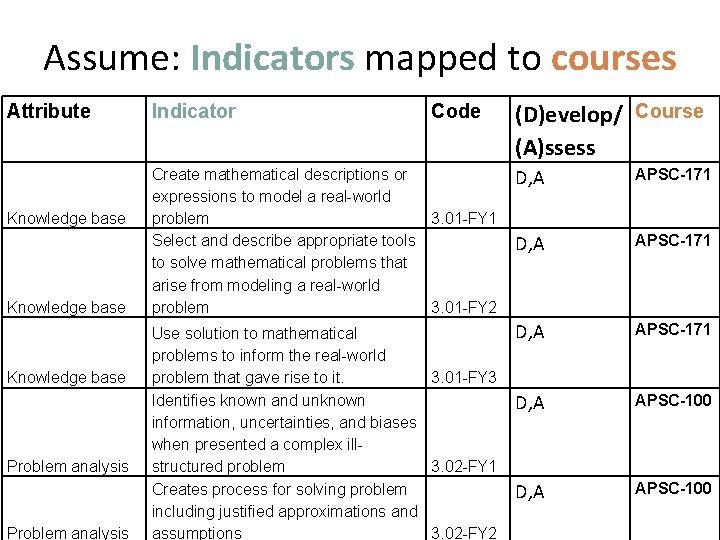

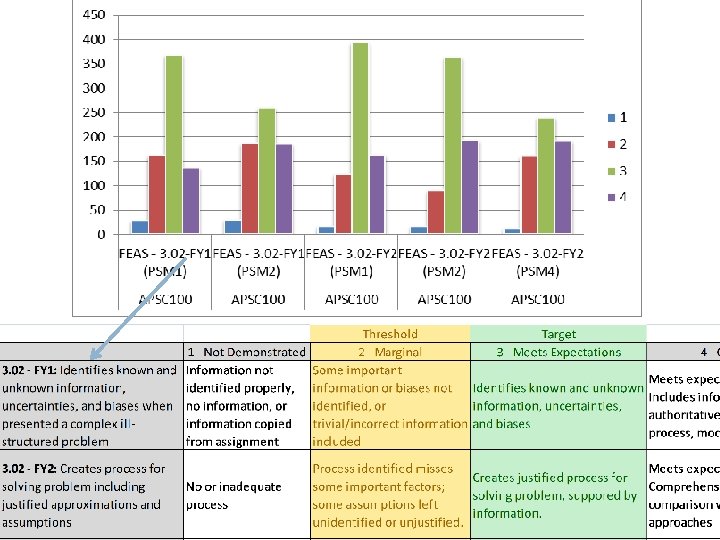

Assume: Indicators mapped to courses
Columns: Attribute, Indicator, Code, (D)evelop/(A)ssess, Course

Knowledge base
- Create mathematical descriptions or expressions to model a real-world problem (3.01-FY1)
- Select and describe appropriate tools to solve mathematical problems that arise from modeling a real-world problem (3.01-FY2)
- Use solution to mathematical problems to inform the real-world problem that gave rise to it (3.01-FY3)

Problem analysis
- Identifies known and unknown information, uncertainties, and biases when presented a complex ill-structured problem (3.02-FY1)
- Creates process for solving problem including justified approximations and assumptions (3.02-FY2)

(D)evelop/(A)ssess and course entries: D, A in APSC-171; D, A in APSC-171; D, A in APSC-100

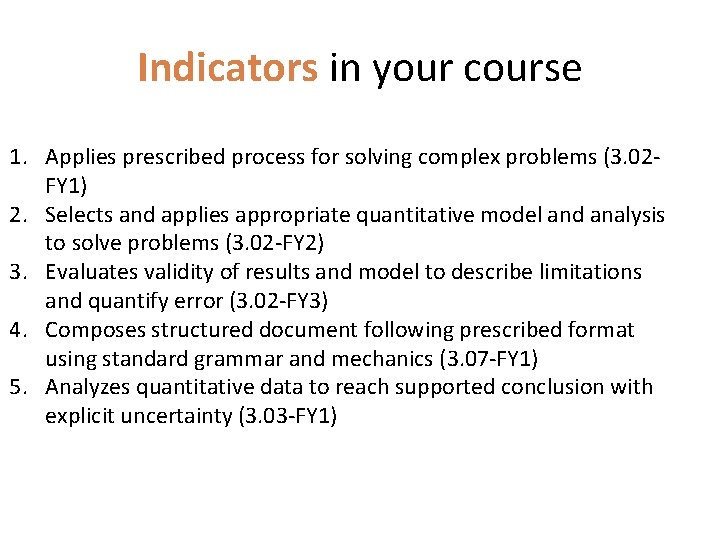

Indicators in your course
1. Applies prescribed process for solving complex problems (3.02-FY1)
2. Selects and applies appropriate quantitative model and analysis to solve problems (3.02-FY2)
3. Evaluates validity of results and model to describe limitations and quantify error (3.02-FY3)
4. Composes structured document following prescribed format using standard grammar and mechanics (3.07-FY1)
5. Analyzes quantitative data to reach supported conclusion with explicit uncertainty (3.03-FY1)

Develop and assess indicators to answer questions.

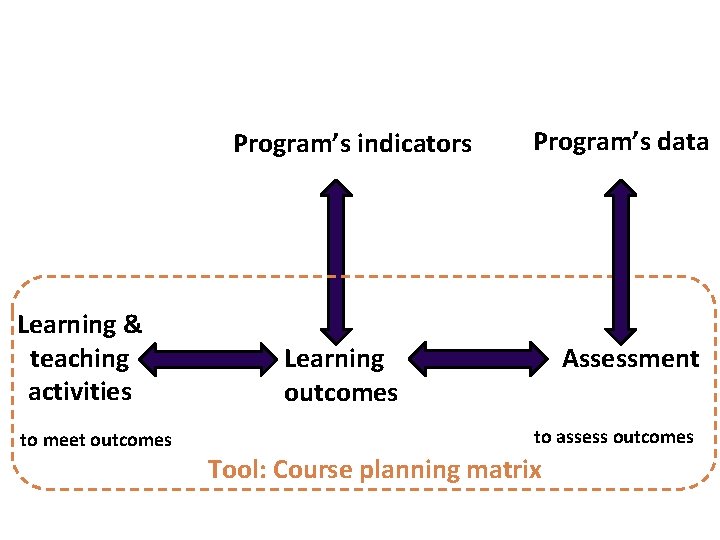

Program’s indicators
Program’s data
- Learning outcomes
- Learning & teaching activities to meet outcomes
- Assessment to assess outcomes
Tool: Course planning matrix

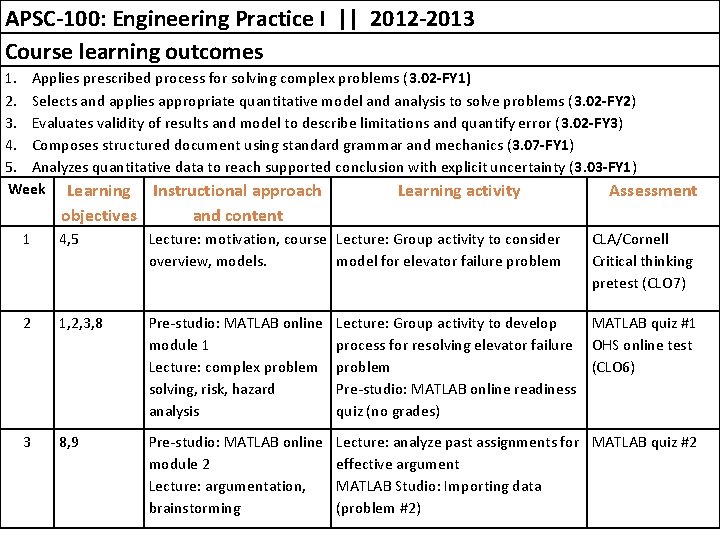

APSC-100: Engineering Practice I || 2012-2013

Course learning outcomes
1. Applies prescribed process for solving complex problems (3.02-FY1)
2. Selects and applies appropriate quantitative model and analysis to solve problems (3.02-FY2)
3. Evaluates validity of results and model to describe limitations and quantify error (3.02-FY3)
4. Composes structured document using standard grammar and mechanics (3.07-FY1)
5. Analyzes quantitative data to reach supported conclusion with explicit uncertainty (3.03-FY1)

Week 1 (learning objectives 4, 5)
- Instructional approach and content: Lecture: motivation, course overview, models.
- Learning activity: Lecture: Group activity to consider model for elevator failure problem
- Assessment: CLA/Cornell Critical thinking pretest (CLO 7)

Week 2 (learning objectives 1, 2, 3, 8)
- Instructional approach and content: Pre-studio: MATLAB online module 1. Lecture: complex problem solving, risk, hazard analysis
- Learning activity: Lecture: Group activity to develop process for resolving elevator failure problem. Pre-studio: MATLAB online readiness quiz (no grades)
- Assessment: MATLAB quiz #1. OHS online test (CLO 6)

Week 3 (learning objectives 8, 9)
- Instructional approach and content: Pre-studio: MATLAB online module 2. Lecture: argumentation, brainstorming
- Learning activity: Lecture: analyze past assignments for effective argument. MATLAB Studio: Importing data (problem #2)
- Assessment: MATLAB quiz #2

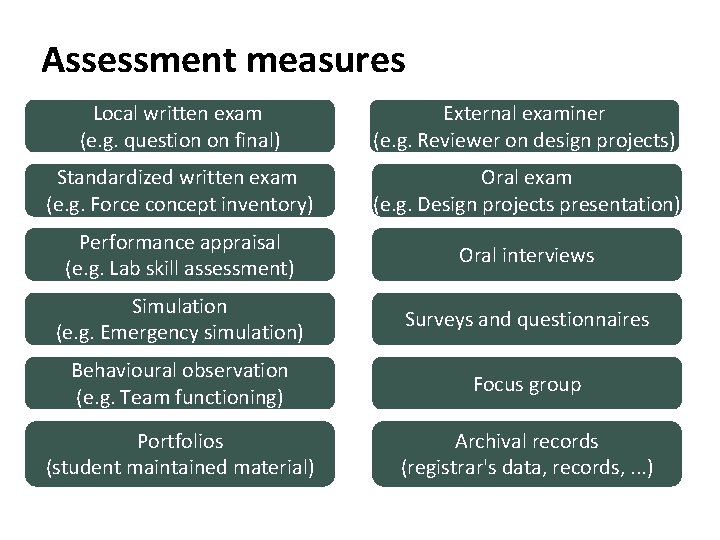

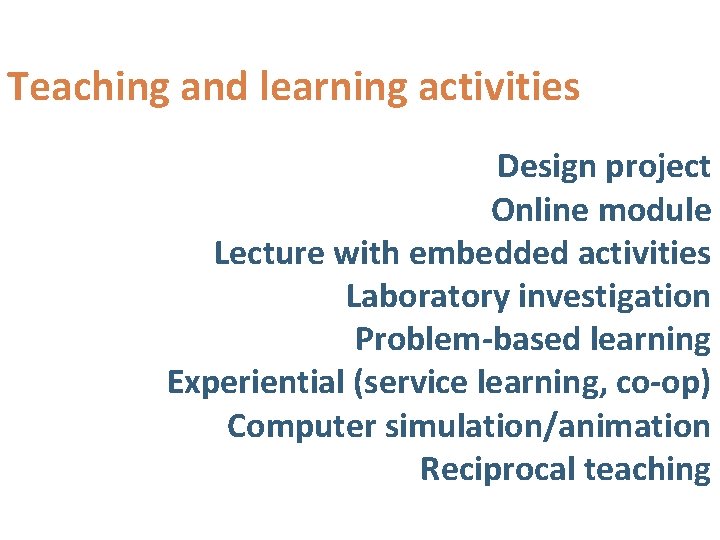

Assessment measures & Teaching and learning activities

Assessment measures
- Local written exam (e.g. question on final)
- External examiner (e.g. reviewer on design projects)
- Standardized written exam (e.g. Force Concept Inventory)
- Oral exam (e.g. design projects presentation)
- Performance appraisal (e.g. lab skill assessment)
- Oral interviews
- Simulation (e.g. emergency simulation)
- Surveys and questionnaires
- Behavioural observation (e.g. team functioning)
- Focus group
- Portfolios (student maintained material)
- Archival records (registrar's data, records, ...)

Teaching and learning activities
- Design project
- Online module
- Lecture with embedded activities
- Laboratory investigation
- Problem-based learning
- Experiential (service learning, co-op)
- Computer simulation/animation
- Reciprocal teaching

BREAKOUT 1
DEVELOP A COURSE PLAN
http://bit.ly/KK6Rsc
This presentation and sample indicators: http://bit.ly/LZi2wf

SCORING EFFICIENTLY AND RELIABLY

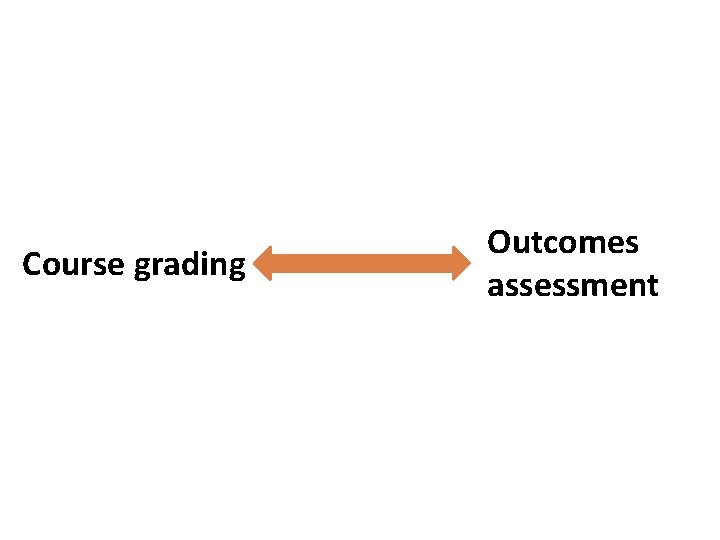

Course grading Outcomes assessment

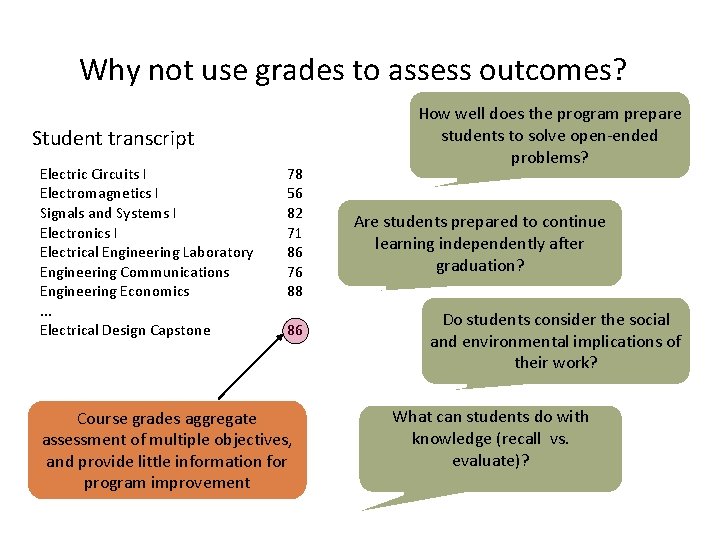

Why not use grades to assess outcomes?

Student transcript

| Course | Grade |
|---|---|
| Electric Circuits I | 78 |
| Electromagnetics I | 56 |
| Signals and Systems I | 82 |
| Electronics I | 71 |
| Electrical Engineering Laboratory | 86 |
| Engineering Communications | 76 |
| Engineering Economics | 88 |
| ... | |
| Electrical Design Capstone | 86 |

Course grades aggregate assessment of multiple objectives, and provide little information for program improvement
- How well does the program prepare students to solve open-ended problems?
- Are students prepared to continue learning independently after graduation?
- Do students consider the social and environmental implications of their work?
- What can students do with knowledge (recall vs. evaluate)?
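To illustrate why an aggregate grade says little about individual outcomes, the sketch below gives two hypothetical students the same course grade from very different indicator profiles. The student names, the 0-4 scores, and the equal weighting are assumptions made for illustration; only the indicator codes come from the slides.

```python
# Hypothetical rubric scores (0-4) per indicator for two students in one course.
scores = {
    "student_a": {"3.02-FY1": 4, "3.02-FY2": 4, "3.07-FY1": 1},
    "student_b": {"3.02-FY1": 3, "3.02-FY2": 3, "3.07-FY1": 3},
}


def course_grade(indicator_scores, max_score=4):
    """Collapse all indicator scores into one percentage, as a transcript does."""
    total = sum(indicator_scores.values())
    return round(100 * total / (max_score * len(indicator_scores)))


def indicator_means(all_scores):
    """Average each indicator across students, keeping indicators separate."""
    indicators = next(iter(all_scores.values())).keys()
    return {
        ind: sum(student[ind] for student in all_scores.values()) / len(all_scores)
        for ind in indicators
    }


grades = {name: course_grade(s) for name, s in scores.items()}
by_indicator = indicator_means(scores)

print(grades)        # both students receive the same aggregate grade
print(by_indicator)  # the per-indicator view shows where the cohort is weaker
```

Both students end up with the same percentage, while the per-indicator averages show that the written communication indicator lags the problem analysis indicators.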

When assessing students, the scoring needs to be:
- Valid: it measures what it is supposed to measure
- Reliable: the results would be consistent when repeated with the same subjects under the same conditions (but with different graders)
- Expectations are clear to students, colleagues, and external reviewers
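One common way to check consistency between different graders is an agreement statistic such as Cohen's kappa. The slides do not prescribe a particular measure, so the following is only a sketch under that assumption, with hypothetical rubric levels assigned by two graders to the same ten reports.

```python
from collections import Counter


def cohens_kappa(grader_1, grader_2):
    """Agreement between two graders, corrected for agreement expected by chance."""
    assert len(grader_1) == len(grader_2) and grader_1
    n = len(grader_1)
    observed = sum(a == b for a, b in zip(grader_1, grader_2)) / n
    counts_1, counts_2 = Counter(grader_1), Counter(grader_2)
    expected = sum(counts_1[level] * counts_2[level] for level in counts_1) / n**2
    if expected == 1:
        return 1.0  # both graders used one identical level throughout
    return (observed - expected) / (1 - expected)


# Hypothetical rubric levels (0-3) given by two graders to the same 10 reports.
grader_1 = [0, 1, 2, 2, 3, 1, 2, 3, 2, 1]
grader_2 = [0, 1, 2, 3, 3, 1, 2, 2, 2, 2]

kappa = cohens_kappa(grader_1, grader_2)
print(f"Cohen's kappa: {kappa:.2f}")
```

Values close to 1 indicate that graders apply the scale consistently; values near 0 indicate agreement no better than chance.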

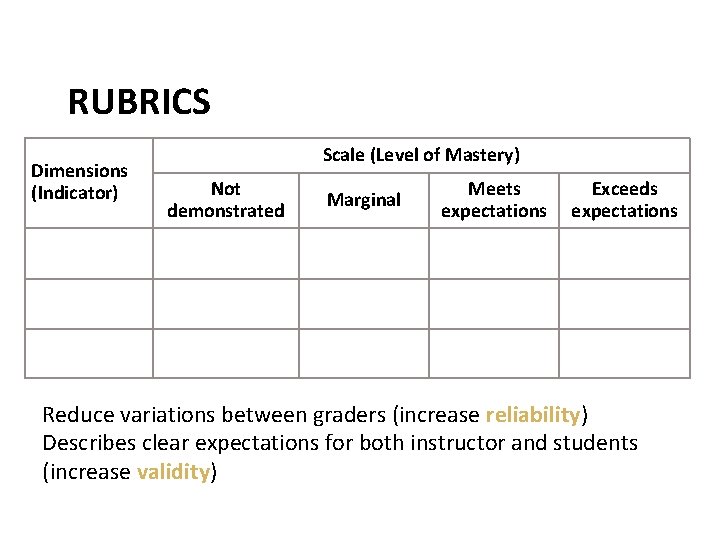

RUBRICS
Dimensions (Indicator)
Scale (Level of Mastery): Not demonstrated, Marginal, Meets expectations, Exceeds expectations
- Reduce variations between graders (increase reliability)
- Describe clear expectations for both instructor and students (increase validity)

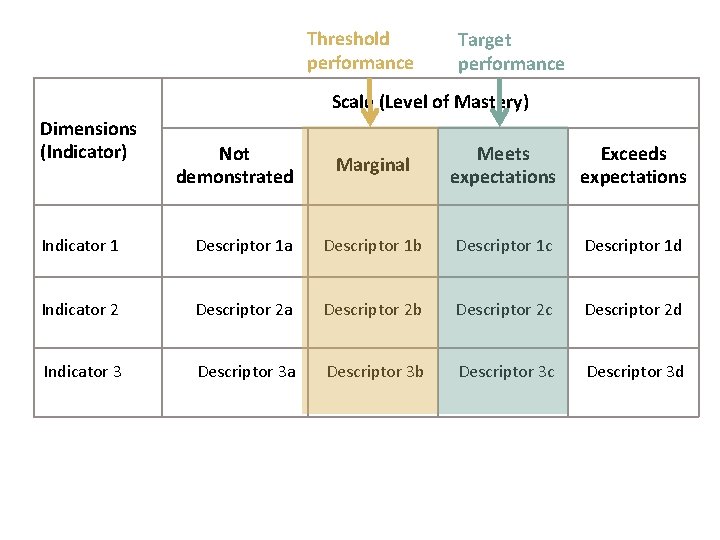

Threshold performance, Target performance
Scale (Level of Mastery)

| Dimensions (Indicator) | Not demonstrated | Marginal | Meets expectations | Exceeds expectations |
|---|---|---|---|---|
| Indicator 1 | Descriptor 1a | Descriptor 1b | Descriptor 1c | Descriptor 1d |
| Indicator 2 | Descriptor 2a | Descriptor 2b | Descriptor 2c | Descriptor 2d |
| Indicator 3 | Descriptor 3a | Descriptor 3b | Descriptor 3c | Descriptor 3d |
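The rubric template can be held as a small data structure so that each rating returns its descriptor and its position relative to threshold and target. This is a sketch only: it assumes the threshold sits at "Marginal" and the target at "Meets expectations", which the slide does not state explicitly, and the function names are hypothetical.

```python
LEVELS = ["Not demonstrated", "Marginal", "Meets expectations", "Exceeds expectations"]

# Assumption: threshold is "Marginal" and target is "Meets expectations".
THRESHOLD = LEVELS.index("Marginal")
TARGET = LEVELS.index("Meets expectations")

# Placeholder descriptors, mirroring the template on the slide.
RUBRIC = {
    "Indicator 1": ["Descriptor 1a", "Descriptor 1b", "Descriptor 1c", "Descriptor 1d"],
    "Indicator 2": ["Descriptor 2a", "Descriptor 2b", "Descriptor 2c", "Descriptor 2d"],
    "Indicator 3": ["Descriptor 3a", "Descriptor 3b", "Descriptor 3c", "Descriptor 3d"],
}


def classify(level_index):
    """Place a level relative to the assumed threshold and target."""
    if level_index < THRESHOLD:
        return "below threshold"
    if level_index < TARGET:
        return "below target"
    return "at or above target"


def report(ratings):
    """Map {indicator: level index} to (level name, descriptor, status)."""
    return {
        indicator: (LEVELS[i], RUBRIC[indicator][i], classify(i))
        for indicator, i in ratings.items()
    }


result = report({"Indicator 1": 0, "Indicator 2": 1, "Indicator 3": 3})
for indicator, row in result.items():
    print(indicator, row)
```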

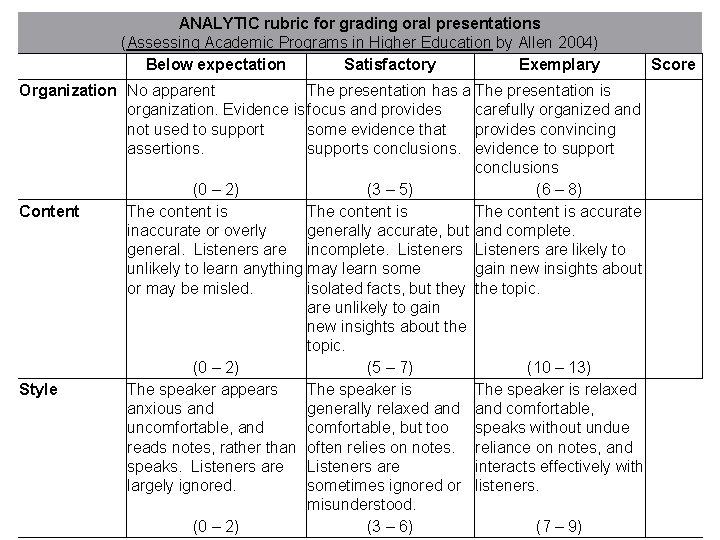

ANALYTIC rubric for grading oral presentations (Assessing Academic Programs in Higher Education by Allen 2004)

Organization
- Below expectation (0-2): No apparent organization. Evidence is not used to support assertions.
- Satisfactory (3-5): The presentation has a focus and provides some evidence that supports conclusions.
- Exemplary (6-8): The presentation is carefully organized and provides convincing evidence to support conclusions.

Content
- Below expectation (0-2): The content is inaccurate or overly general. Listeners are unlikely to learn anything or may be misled.
- Satisfactory (5-7): The content is generally accurate, but incomplete. Listeners may learn some isolated facts, but they are unlikely to gain new insights about the topic.
- Exemplary (10-13): The content is accurate and complete. Listeners are likely to gain new insights about the topic.

Style
- Below expectation (0-2): The speaker appears anxious and uncomfortable, and reads notes, rather than speaks. Listeners are largely ignored.
- Satisfactory (3-6): The speaker is generally relaxed and comfortable, but too often relies on notes. Listeners are sometimes ignored or misunderstood.
- Exemplary (7-9): The speaker is relaxed and comfortable, speaks without undue reliance on notes, and interacts effectively with listeners.

Score

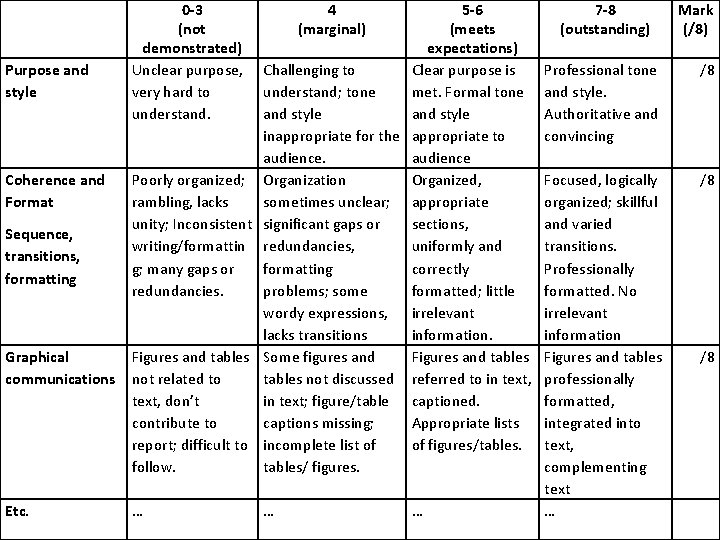

Scale: 0-3 (not demonstrated), 4 (marginal), 5-6 (meets expectations), 7-8 (outstanding); each row receives a Mark (/8)

Purpose and style
- 0-3 (not demonstrated): Unclear purpose, very hard to understand.
- 4 (marginal): Challenging to understand; tone and style inappropriate for the audience.
- 5-6 (meets expectations): Clear purpose is met. Formal tone appropriate to audience.
- 7-8 (outstanding): Professional tone and style. Authoritative and convincing.

Coherence and Format (sequence, transitions, formatting)
- 0-3 (not demonstrated): Poorly organized; rambling, lacks unity; inconsistent writing/formatting; many gaps or redundancies.
- 4 (marginal): Organization sometimes unclear; significant gaps or redundancies, formatting problems; some wordy expressions, lacks transitions.
- 5-6 (meets expectations): Organized, appropriate sections, uniformly and correctly formatted; little irrelevant information.
- 7-8 (outstanding): Focused, logically organized; skillful and varied transitions. Professionally formatted. No irrelevant information.

Graphical communications
- 0-3 (not demonstrated): Figures and tables not related to text, don’t contribute to report; difficult to follow.
- 4 (marginal): Some figures and tables not discussed in text; figure/table captions missing; incomplete list of tables/figures.
- 5-6 (meets expectations): Figures and tables referred to in text, captioned. Appropriate lists of figures/tables.
- 7-8 (outstanding): Figures and tables professionally formatted, integrated into text, complementing text.

Etc.
- …

OBSERVABLE STATEMENTS OF PERFORMANCE ARE IMPORTANT

BREAKOUT 2 CREATE ONE DELIVERABLE AND RUBRIC FOR YOUR COURSE

http://www.learningoutcomeassessment.org/Rubrics.htm#Samples
… AND CONFERENCE PRESENTATIONS

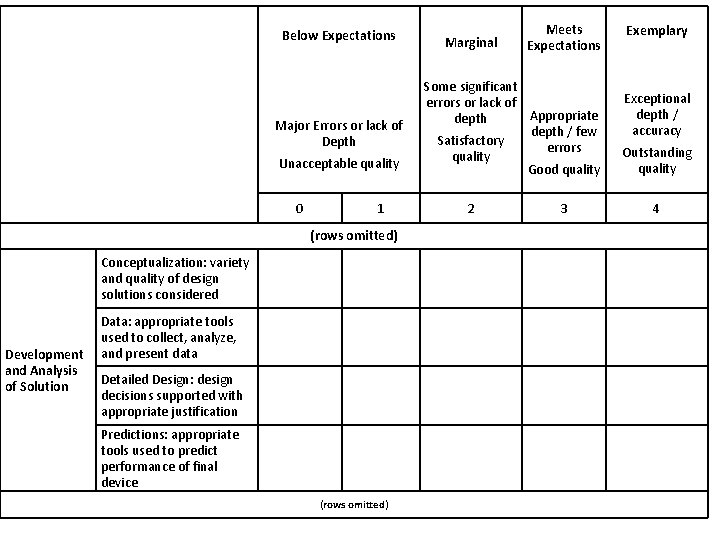

Scale (scores 0 to 4)
- Below Expectations: Major errors or lack of depth; unacceptable quality
- Marginal: Some significant errors or lack of depth; satisfactory quality
- Meets Expectations: Appropriate depth / few errors; good quality
- Exemplary: Exceptional depth / accuracy; outstanding quality

(rows omitted)

Development and Analysis of Solution
- Conceptualization: variety and quality of design solutions considered
- Data: appropriate tools used to collect, analyze, and present data
- Detailed Design: design decisions supported with appropriate justification
- Predictions: appropriate tools used to predict performance of final device

(rows omitted)

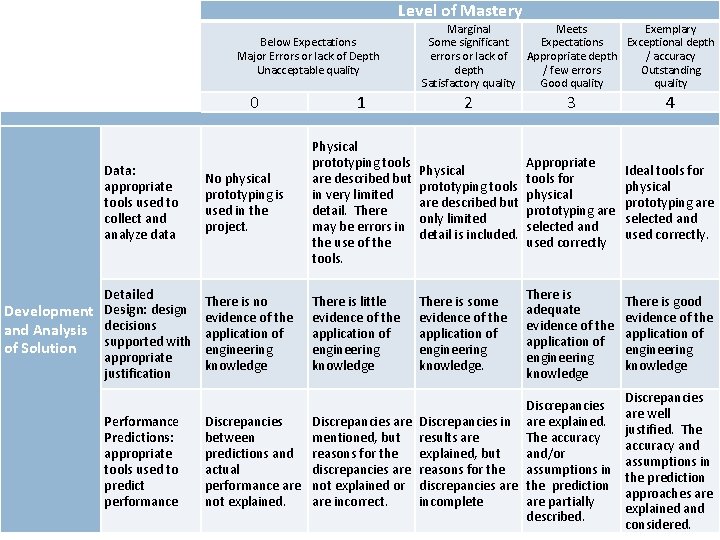

Level of Mastery (scores 0 to 4)
- Below Expectations: Major errors or lack of depth; unacceptable quality
- Marginal: Some significant errors or lack of depth; satisfactory quality
- Meets Expectations: Appropriate depth / few errors; good quality
- Exemplary: Exceptional depth / accuracy; outstanding quality

Development and Analysis of Solution

Data: appropriate tools used to collect and analyze data
- 0: No physical prototyping is used in the project.
- 1: Physical prototyping tools are described but in very limited detail. There may be errors in the use of the tools.
- 2: Physical prototyping tools are described but only limited detail is included.
- 3: Appropriate tools for physical prototyping are selected and used correctly.
- 4: Ideal tools for physical prototyping are selected and used correctly.

Detailed Design: design decisions supported with appropriate justification
- 0: There is no evidence of the application of engineering knowledge.
- 1: There is little evidence of the application of engineering knowledge.
- 2: There is some evidence of the application of engineering knowledge.
- 3: There is adequate evidence of the application of engineering knowledge.
- 4: There is good evidence of the application of engineering knowledge.

Performance Predictions: appropriate tools used to predict performance
- 0: Discrepancies between predictions and actual performance are not explained.
- 1: Discrepancies are mentioned, but reasons for the discrepancies are not explained or are incorrect.
- 2: Discrepancies in results are explained, but reasons for the discrepancies are incomplete.
- 3: Discrepancies are explained. The accuracy and/or assumptions in the prediction are partially described.
- 4: Discrepancies are well justified. The accuracy and assumptions in the prediction approaches are explained and considered.

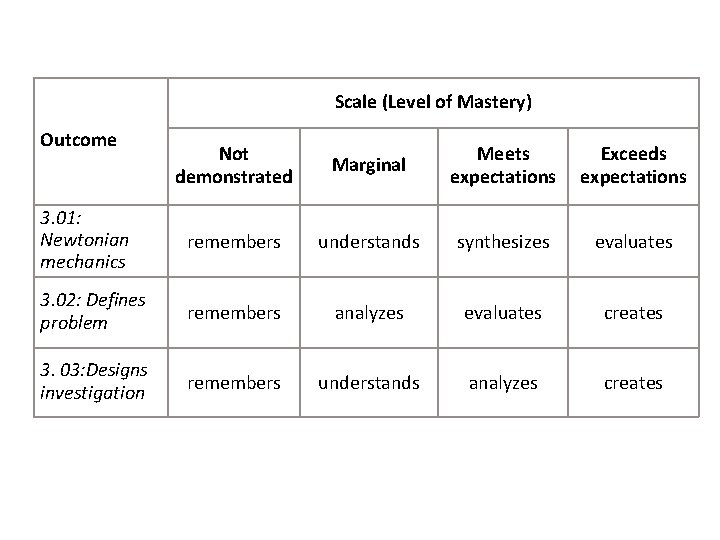

Scale (Level of Mastery)

| Outcome | Not demonstrated | Marginal | Meets expectations | Exceeds expectations |
|---|---|---|---|---|
| 3.01: Newtonian mechanics | remembers | understands | synthesizes | evaluates |
| 3.02: Defines problem | remembers | analyzes | evaluates | creates |
| 3.03: Designs investigation | remembers | understands | analyzes | creates |

CALIBRATION FOR GRADERS

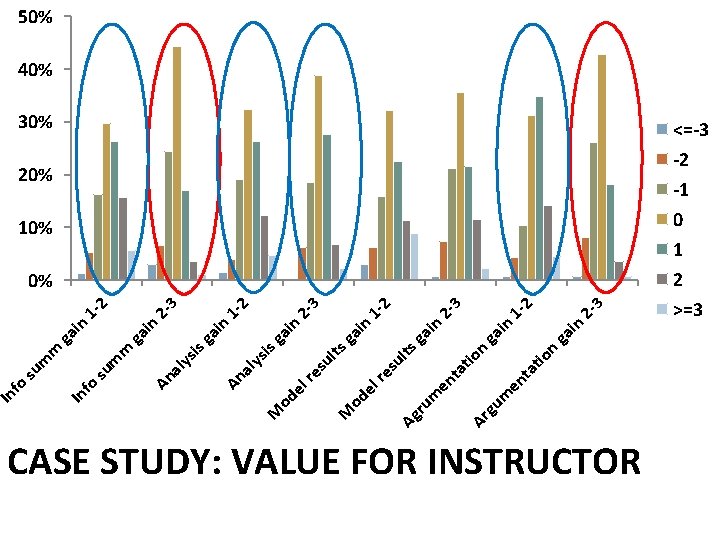

CASE STUDY: VALUE FOR INSTRUCTOR
[Chart: vertical axis 0% to 50%; horizontal bins <=-3, -2, -1, 0, 1, 2, >=3]

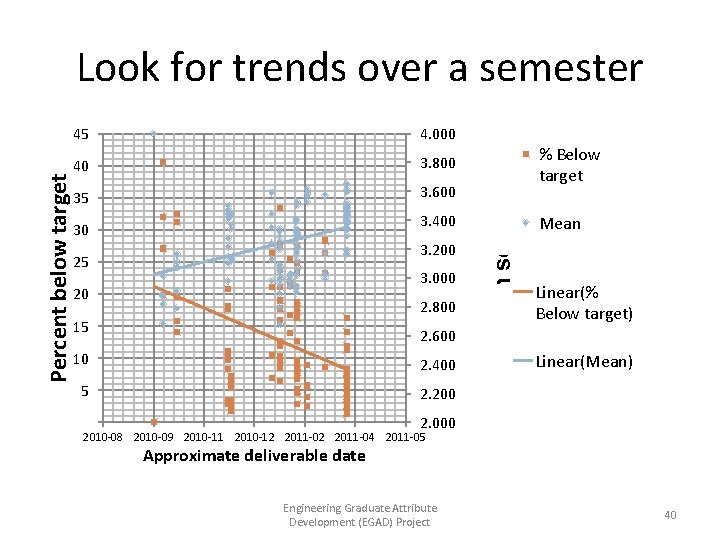

Look for trends over a semester
[Chart: % below target (left axis, 5 to 45) and mean score (right axis, 2.000 to 4.000) against approximate deliverable date (2010-08, 2010-09, 2010-11, 2010-12, 2011-04, 2011-05), each with a linear trend line]
Engineering Graduate Attribute Development (EGAD) Project
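The two series on this slide, mean score and percent below target, can be computed per deliverable and summarized with a straight-line trend. The sketch below assumes a 0-4 rubric scale with a target of 3 and uses hypothetical scores; it also treats deliverables as evenly spaced, which is a simplification of the date axis on the chart.

```python
from statistics import mean

TARGET = 3  # Assumption: scores below 3 on a 0-4 scale count as "below target".

# Hypothetical rubric scores per deliverable, keyed by approximate deliverable date.
deliverables = {
    "2010-09": [2, 2, 3, 3, 4, 2, 3, 1],
    "2010-11": [2, 3, 3, 3, 4, 3, 3, 2],
    "2011-04": [3, 3, 3, 4, 4, 3, 3, 2],
}


def summarize(scores, target=TARGET):
    """Return (mean score, percent of scores below target)."""
    below = sum(s < target for s in scores)
    return mean(scores), 100 * below / len(scores)


def linear_slope(ys):
    """Least-squares slope of ys against their position 0, 1, 2, ..."""
    xs = range(len(ys))
    x_bar, y_bar = mean(xs), mean(ys)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    denominator = sum((x - x_bar) ** 2 for x in xs)
    return numerator / denominator


summary = {date: summarize(s) for date, s in deliverables.items()}
mean_trend = linear_slope([m for m, _ in summary.values()])
below_trend = linear_slope([p for _, p in summary.values()])

for date, (avg, pct) in summary.items():
    print(f"{date}: mean {avg:.2f}, {pct:.1f}% below target")
print(f"Mean score changes by {mean_trend:+.3f} per deliverable")
print(f"Percent below target changes by {below_trend:+.2f} points per deliverable")
```

A rising mean together with a falling percent below target is the pattern an instructor would hope to see across a semester.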

Pitfalls to avoid:
- Johnny B. “Good”: what is “good” performance?
- Narrow: is description applicable to all submissions?
- Out of alignment: is descriptor aligned with objective?
- Bloomin’ Bloom’s: Bloom’s is not meant as a scale!

PROBLEMS YOU WILL FIND…

IT TAKES TIME

INITIALLY STUDENTS MAY NOT LOVE IT

SO… COLLABORATION IS IMPORTANT

CONTINUE COLLABORATION NETWORK AND SURVEY

MODELS FOR SUSTAINING CHANGE

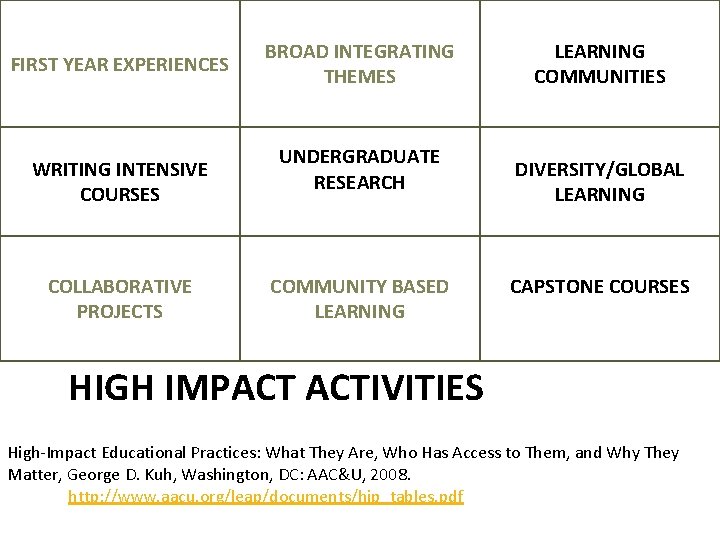

HIGH IMPACT ACTIVITIES
- FIRST YEAR EXPERIENCES
- WRITING INTENSIVE COURSES
- COLLABORATIVE PROJECTS
- BROAD INTEGRATING THEMES
- UNDERGRADUATE RESEARCH
- COMMUNITY BASED LEARNING
- LEARNING COMMUNITIES
- DIVERSITY/GLOBAL LEARNING
- CAPSTONE COURSES

High-Impact Educational Practices: What They Are, Who Has Access to Them, and Why They Matter, George D. Kuh, Washington, DC: AAC&U, 2008. http://www.aacu.org/leap/documents/hip_tables.pdf

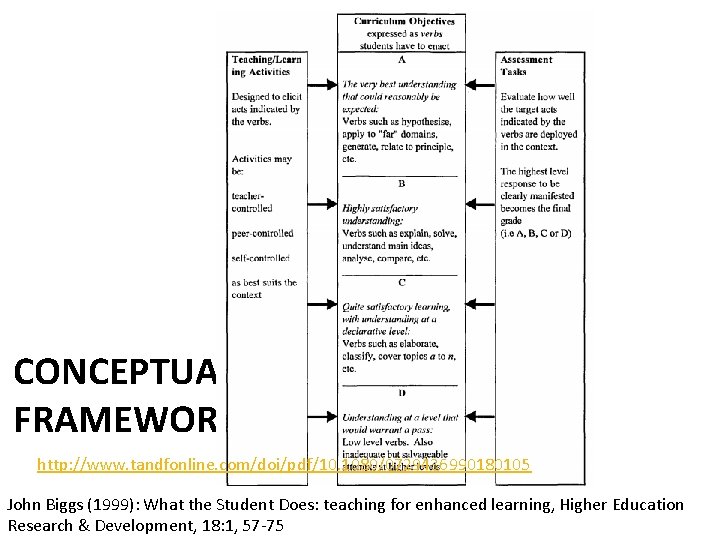

CONCEPTUAL FRAMEWORK
http://www.tandfonline.com/doi/pdf/10.1080/0729436990180105
John Biggs (1999): What the Student Does: teaching for enhanced learning, Higher Education Research & Development, 18:1, 57-75

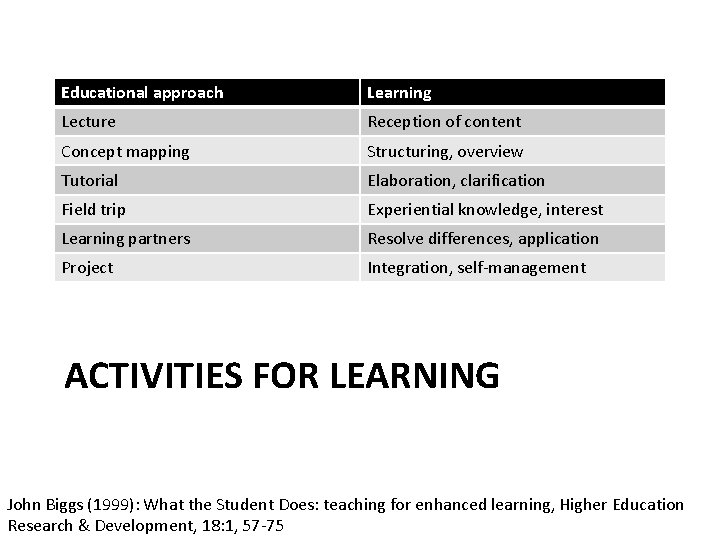

ACTIVITIES FOR LEARNING

| Educational approach | Learning |
|---|---|
| Lecture | Reception of content |
| Concept mapping | Structuring, overview |
| Tutorial | Elaboration, clarification |
| Field trip | Experiential knowledge, interest |
| Learning partners | Resolve differences, application |
| Project | Integration, self-management |

John Biggs (1999): What the Student Does: teaching for enhanced learning, Higher Education Research & Development, 18:1, 57-75

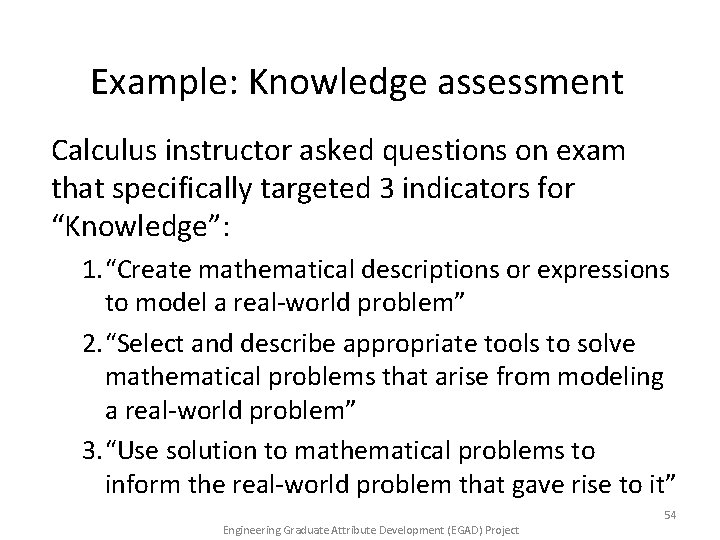

Example: Knowledge assessment
Calculus instructor asked questions on exam that specifically targeted 3 indicators for “Knowledge”:
1. “Create mathematical descriptions or expressions to model a real-world problem”
2. “Select and describe appropriate tools to solve mathematical problems that arise from modeling a real-world problem”
3. “Use solution to mathematical problems to inform the real-world problem that gave rise to it”

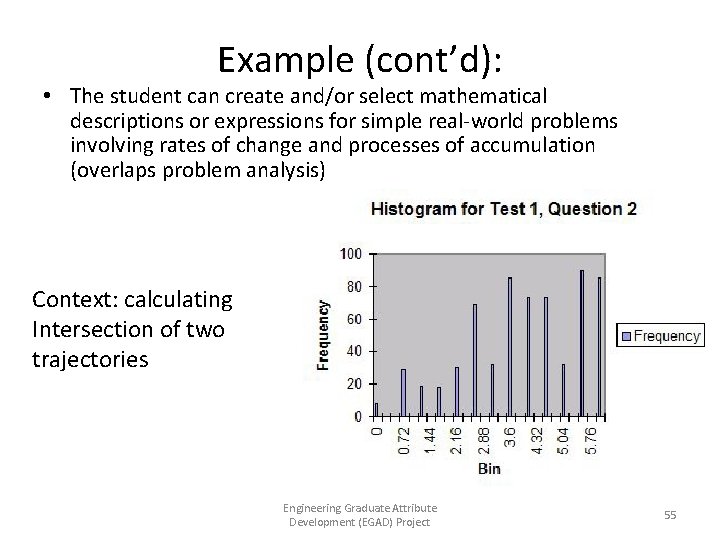

Example (cont’d):
- The student can create and/or select mathematical descriptions or expressions for simple real-world problems involving rates of change and processes of accumulation (overlaps problem analysis)
Context: calculating intersection of two trajectories

CHECKLIST FOR INDICATORS
