Gradient Enhanced Kriging / Gaussian Process Models


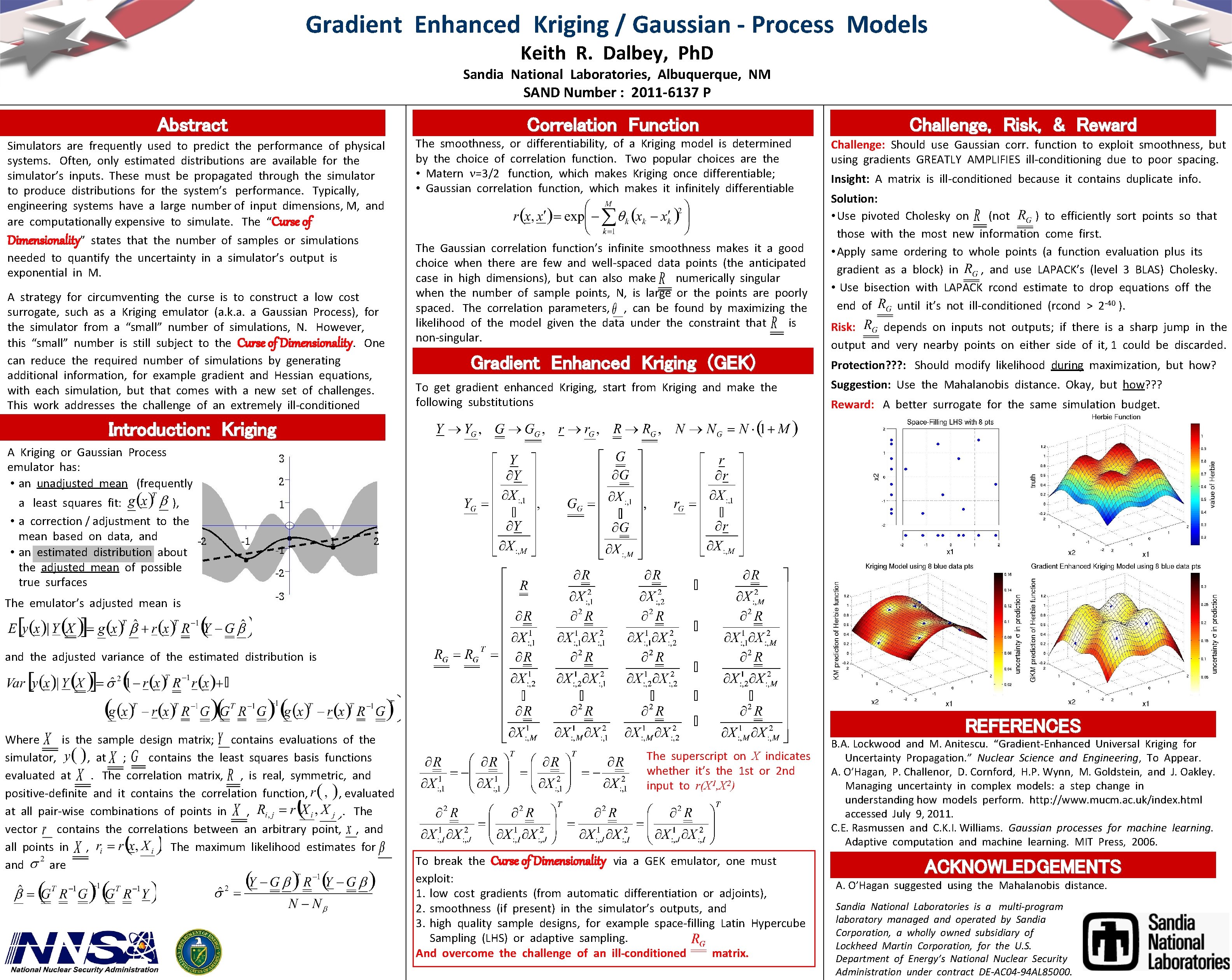

Keith R. Dalbey, Ph.D., Sandia National Laboratories, Albuquerque, NM (SAND Number: 2011-6137P)

Abstract

Simulators are frequently used to predict the performance of physical systems. Often, only estimated distributions are available for the simulator’s inputs. These must be propagated through the simulator to produce distributions for the system’s performance. Typically, engineering systems have a large number of input dimensions, $M$, and are computationally expensive to simulate. The “Curse of Dimensionality” states that the number of samples or simulations needed to quantify the uncertainty in a simulator’s output is exponential in $M$. A strategy for circumventing the curse is to construct a low-cost surrogate for the simulator, such as a Kriging emulator (a.k.a. a Gaussian Process), from a “small” number of simulations, $N$. However, this “small” number is still subject to the Curse of Dimensionality. One can reduce the required number of simulations by generating additional information with each simulation, for example gradient and Hessian equations, but that comes with a new set of challenges. This work addresses one of them: the extremely ill-conditioned correlation matrix of gradient enhanced Kriging.

Correlation Function

The smoothness, or differentiability, of a Kriging model is determined by the choice of correlation function. Two popular choices are:

- the Matérn $\nu = 3/2$ function, which makes Kriging once differentiable;
- the Gaussian correlation function, which makes it infinitely differentiable.

The Gaussian correlation function’s infinite smoothness makes it a good choice when there are few, well-spaced data points (the anticipated case in high dimensions), but it can also make the correlation matrix, $R$, numerically singular when the number of sample points, $N$, is large or the points are poorly spaced.
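To make the conditioning contrast above concrete, here is a minimal NumPy sketch. It assumes the common product-form Gaussian correlation $r(X^1, X^2) = \exp\left(-\sum_k \theta_k (X^1_k - X^2_k)^2\right)$ and the textbook Matérn $\nu = 3/2$ form with a single length scale $\ell$; both parameterizations, and all function names, are illustrative assumptions rather than the ones used in this work. The reciprocal condition number is computed exactly with an SVD (`np.linalg.cond`) as a stand-in for LAPACK’s rcond estimate, and compared against the $2^{-40}$ cutoff quoted on this poster.

```python
import numpy as np

def gaussian_corr(X1, X2, theta):
    """Gaussian correlation: r = exp(-sum_k theta_k * (x1_k - x2_k)**2).

    X1 is (n1, M), X2 is (n2, M), theta is (M,); returns an (n1, n2) matrix.
    """
    diff = X1[:, None, :] - X2[None, :, :]          # (n1, n2, M) pairwise differences
    return np.exp(-np.sum(theta * diff**2, axis=-1))

def matern32_corr(X1, X2, ell):
    """Matern nu=3/2 correlation (assumed form):
    r = (1 + sqrt(3) d / ell) * exp(-sqrt(3) d / ell), d = Euclidean distance."""
    diff = X1[:, None, :] - X2[None, :, :]
    s = np.sqrt(3.0) * np.linalg.norm(diff, axis=-1) / ell
    return (1.0 + s) * np.exp(-s)

def rcond(R):
    """Reciprocal 2-norm condition number (exact, via SVD); 0.0 if numerically singular."""
    return 1.0 / np.linalg.cond(R)

# 20 closely spaced 1-D sample points on [0, 1]
X = np.linspace(0.0, 1.0, 20)[:, None]
R_gauss = gaussian_corr(X, X, theta=np.array([1.0]))
R_matern = matern32_corr(X, X, ell=1.0)

threshold = 2.0**-40  # rcond cutoff quoted on this poster
print("Gaussian rcond:", rcond(R_gauss), "ill-conditioned:", rcond(R_gauss) <= threshold)
print("Matern   rcond:", rcond(R_matern), "ill-conditioned:", rcond(R_matern) <= threshold)
```

On the same design, the Gaussian matrix falls below the cutoff while the Matérn matrix stays well above it. Smaller `theta` (longer correlation range) or more points in the same interval generally push the Gaussian matrix further toward singularity, which is the regime the poster’s pivoted-Cholesky strategy targets.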
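The correlation parameters, $\boldsymbol{\theta}$, can be found by maximizing the likelihood of the model given the data, under the constraint that $R$ is non-singular.

Gradient Enhanced Kriging (GEK)

To get gradient enhanced Kriging, start from Kriging and make the following substitutions:

- $\mathbf{Y} \rightarrow \mathbf{Y}_\nabla$: for each sample point, the function value followed by the $M$ components of its gradient, for $N(M+1)$ entries in total;
- $G \rightarrow G_\nabla$: for each sample point, the row $\mathbf{g}(X_i)^T$ followed by $M$ rows holding its partial derivatives with respect to each input;
- $R \rightarrow R_\nabla$, built from $(M+1)\times(M+1)$ blocks
$$\left(R_\nabla\right)_{ij} = \begin{bmatrix} r & \dfrac{\partial r}{\partial X^2} \\[6pt] \dfrac{\partial r}{\partial X^1} & \dfrac{\partial^2 r}{\partial X^1\,\partial X^2} \end{bmatrix}_{X^1 = X_i,\; X^2 = X_j};$$
- $\mathbf{r}(\mathbf{x}) \rightarrow \mathbf{r}_\nabla(\mathbf{x})$: for each sample point, $r(\mathbf{x}, X_j)$ followed by $\partial r(\mathbf{x}, X^2)/\partial X^2$ evaluated at $X^2 = X_j$.

Challenge, Risk, & Reward

Challenge: The Gaussian correlation function should be used to exploit smoothness, but using gradients GREATLY AMPLIFIES the ill-conditioning caused by poor spacing.

Insight: A matrix is ill-conditioned because it contains duplicate information.

Solution:

- Use pivoted Cholesky on $R$ (not $R_\nabla$) to efficiently sort the points so that those with the most new information come first.
- Apply the same ordering to whole points (a function evaluation plus its gradient as a block) in $R_\nabla$, and use LAPACK’s (level 3 BLAS) Cholesky.
- Use bisection with LAPACK’s rcond estimate to drop equations off the end of $R_\nabla$ until it is no longer ill-conditioned (rcond $> 2^{-40}$).

Risk: $R$ depends on the inputs, not the outputs; if there is a sharp jump in the output and very nearby points on either side of it, one of those points could be discarded.

Protection???: The likelihood should be modified during maximization, but how? Suggestion: use the Mahalanobis distance. Okay, but how???

Reward: A better surrogate for the same simulation budget.

Introduction: Kriging

A Kriging or Gaussian Process emulator has:

- an unadjusted mean (frequently a least squares fit, $\mathbf{g}(\mathbf{x})^T\boldsymbol{\beta}$),
- a correction/adjustment to the mean based on data, and
- an estimated distribution, about the adjusted mean, of possible true surfaces.

The emulator’s adjusted mean is
$$\hat{y}(\mathbf{x}) = \mathbf{g}(\mathbf{x})^T\hat{\boldsymbol{\beta}} + \mathbf{r}(\mathbf{x})^T R^{-1}\left(\mathbf{Y} - G\hat{\boldsymbol{\beta}}\right)$$
and the adjusted variance of the estimated distribution is
$$\operatorname{Var}\left[\hat{y}(\mathbf{x})\right] = \hat{\sigma}^2\left(1 - \mathbf{r}(\mathbf{x})^T R^{-1}\mathbf{r}(\mathbf{x}) + \mathbf{h}(\mathbf{x})^T\left(G^T R^{-1} G\right)^{-1}\mathbf{h}(\mathbf{x})\right), \qquad \mathbf{h}(\mathbf{x}) = \mathbf{g}(\mathbf{x}) - G^T R^{-1}\mathbf{r}(\mathbf{x}),$$
where $X$ is the $N \times M$ sample design matrix; $\mathbf{Y}$ contains evaluations of the simulator, $f$, at $X$; and $G$ contains the least squares basis functions, $\mathbf{g}$, evaluated at $X$. The correlation matrix, $R$, is real, symmetric, and positive-definite; it contains the correlation function, $r$, evaluated at all pair-wise combinations of points in $X$, $R_{ij} = r(X_i, X_j)$. The vector $\mathbf{r}(\mathbf{x})$ contains the correlations between an arbitrary point, $\mathbf{x}$, and all points in $X$, $r_i(\mathbf{x}) = r(\mathbf{x}, X_i)$.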
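The three Solution steps listed under Challenge, Risk, & Reward can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the author’s implementation: it assumes the Gaussian correlation $r = \exp\left(-\sum_k \theta_k (X^1_k - X^2_k)^2\right)$, builds the GEK correlation matrix $R_\nabla$ with each point’s value followed by its gradient, uses a hand-written greedy pivoted Cholesky in place of LAPACK’s pivoted factorization, and uses an exact SVD-based reciprocal condition number in place of LAPACK’s rcond estimate. All function names are hypothetical.

```python
import numpy as np

def gaussian_corr_matrix(X, theta):
    """Value-only correlation matrix R, R_ij = exp(-sum_k theta_k (X_ik - X_jk)^2)."""
    diff = X[:, None, :] - X[None, :, :]
    return np.exp(-np.sum(theta * diff**2, axis=-1))

def gek_block(a, b, theta):
    """(M+1)x(M+1) block of R_grad for a = X^1 (1st input) and b = X^2 (2nd input)."""
    u = a - b
    r = np.exp(-np.sum(theta * u**2))
    blk = np.empty((a.size + 1, a.size + 1))
    blk[0, 0] = r                                   # corr(value at a, value at b)
    blk[0, 1:] = 2.0 * theta * u * r                # dr/dX^2: corr(value at a, gradient at b)
    blk[1:, 0] = -2.0 * theta * u * r               # dr/dX^1: corr(gradient at a, value at b)
    blk[1:, 1:] = r * (np.diag(2.0 * theta) - 4.0 * np.outer(theta * u, theta * u))  # d2r/dX^1 dX^2
    return blk

def gek_corr_matrix(X, theta):
    """R_grad in point-major order: [y_1, grad y_1, y_2, grad y_2, ...]."""
    N, M = X.shape
    B = M + 1
    R = np.empty((N * B, N * B))
    for i in range(N):
        for j in range(N):
            R[i*B:(i+1)*B, j*B:(j+1)*B] = gek_block(X[i], X[j], theta)
    return R

def pivoted_cholesky_order(R):
    """Greedy diagonal-pivoted Cholesky of R; returns the pivot order.
    Each pivot is the point with the largest remaining (Schur-complement) diagonal,
    i.e. the most information not explained by the points already chosen."""
    A = np.array(R, dtype=float)
    n = A.shape[0]
    perm = np.arange(n)
    for k in range(n):
        p = k + int(np.argmax(np.diag(A)[k:]))
        A[[k, p], :] = A[[p, k], :]                 # symmetric row/column swap
        A[:, [k, p]] = A[:, [p, k]]
        perm[[k, p]] = perm[[p, k]]
        if A[k, k] <= 0.0:                          # nothing new left (numerically)
            break
        A[k, k] = np.sqrt(A[k, k])
        A[k+1:, k] /= A[k, k]
        A[k+1:, k+1:] -= np.outer(A[k+1:, k], A[k+1:, k])
    return perm

def reorder_whole_points(R_grad, perm, B):
    """Apply the point ordering to R_grad, moving each (value + gradient) block together."""
    idx = (perm[:, None] * B + np.arange(B)).ravel()
    return R_grad[np.ix_(idx, idx)], idx

def equations_to_keep(R_sorted, min_rcond=2.0**-40):
    """Bisection for the largest n whose leading n x n block has rcond > min_rcond.
    Valid because, by eigenvalue interlacing, the 2-norm condition number of the
    leading blocks of an SPD matrix never decreases as n grows."""
    def ok(n):
        return 1.0 / np.linalg.cond(R_sorted[:n, :n]) > min_rcond
    lo, hi = 1, R_sorted.shape[0]
    if ok(hi):
        return hi
    while hi - lo > 1:                              # invariant: ok(lo) and not ok(hi)
        mid = (lo + hi) // 2
        if ok(mid):
            lo = mid
        else:
            hi = mid
    return lo

# Example: 30 random (hence poorly spaced) points in 2-D
rng = np.random.default_rng(0)
X = rng.random((30, 2))
theta = np.array([2.0, 2.0])
perm = pivoted_cholesky_order(gaussian_corr_matrix(X, theta))
R_sorted, idx = reorder_whole_points(gek_corr_matrix(X, theta), perm, B=X.shape[1] + 1)
n = equations_to_keep(R_sorted)
L = np.linalg.cholesky(R_sorted[:n, :n])          # LAPACK-backed Cholesky of retained equations
print(f"keeping {n} of {R_sorted.shape[0]} equations")
```

The pivoting runs on the $N \times N$ matrix $R$ rather than the $N(M+1) \times N(M+1)$ matrix $R_\nabla$, so the ordering is cheap, and the bisection needs only about $\log_2(N(M+1))$ condition-number evaluations. Because the ordering never looks at $\mathbf{Y}$, the trailing equations it drops are redundant only in input space, which is exactly the Risk the poster identifies.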
The maximum likelihood estimates for $\boldsymbol{\beta}$ and $\sigma^2$ are
$$\hat{\boldsymbol{\beta}} = \left(G^T R^{-1} G\right)^{-1} G^T R^{-1}\mathbf{Y}, \qquad \hat{\sigma}^2 = \frac{1}{N}\left(\mathbf{Y} - G\hat{\boldsymbol{\beta}}\right)^T R^{-1}\left(\mathbf{Y} - G\hat{\boldsymbol{\beta}}\right).$$

Note: in the GEK substitutions, the superscript on $X$ indicates whether it is the 1st or 2nd input to $r(X^1, X^2)$.

To break the Curse of Dimensionality via a GEK emulator, one must exploit:

1. low-cost gradients (from automatic differentiation or adjoints),
2. smoothness (if present) in the simulator’s outputs, and
3. high-quality sample designs, for example space-filling Latin Hypercube Sampling (LHS) or adaptive sampling,

and overcome the challenge of an ill-conditioned GEK correlation matrix, $R_\nabla$.

REFERENCES

- B. A. Lockwood and M. Anitescu. “Gradient-Enhanced Universal Kriging for Uncertainty Propagation.” Nuclear Science and Engineering, to appear.
- A. O’Hagan, P. Challenor, D. Cornford, H. P. Wynn, M. Goldstein, and J. Oakley. “Managing uncertainty in complex models: a step change in understanding how models perform.” http://www.mucm.ac.uk/index.html, accessed July 9, 2011.
- C. E. Rasmussen and C. K. I. Williams. Gaussian Processes for Machine Learning. Adaptive Computation and Machine Learning. MIT Press, 2006.

ACKNOWLEDGEMENTS

A. O’Hagan suggested using the Mahalanobis distance. Sandia National Laboratories is a multi-program laboratory managed and operated by Sandia Corporation, a wholly owned subsidiary of Lockheed Martin Corporation, for the U.S. Department of Energy’s National Nuclear Security Administration under contract DE-AC04-94AL85000.