GraFBoost: Using accelerated flash storage for external graph analytics

Sang-Woo Jun, Andy Wright, Sizhuo Zhang, Shuotao Xu and Arvind
MIT CSAIL

Funded by:

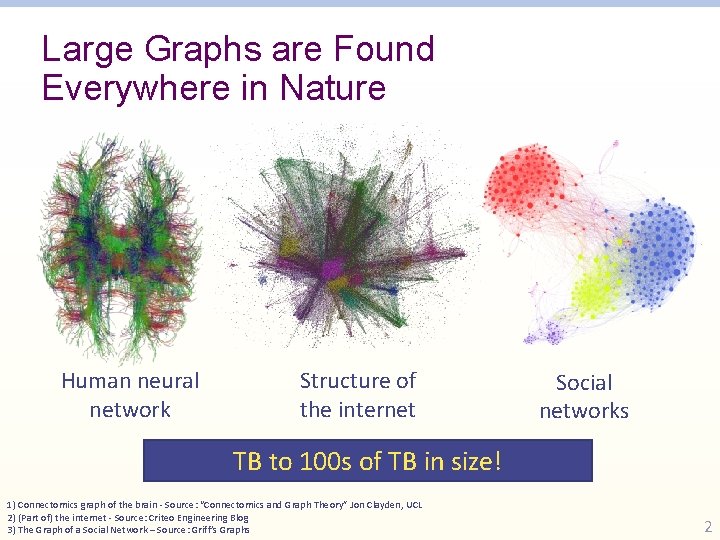

Large Graphs are Found Everywhere in Nature

• Human neural network
• Structure of the internet
• Social networks

TB to 100s of TB in size!

Image sources:
1) Connectomics graph of the brain. Source: "Connectomics and Graph Theory", Jon Clayden, UCL
2) (Part of) the internet. Source: Criteo Engineering Blog
3) The graph of a social network. Source: Griff's Graphs

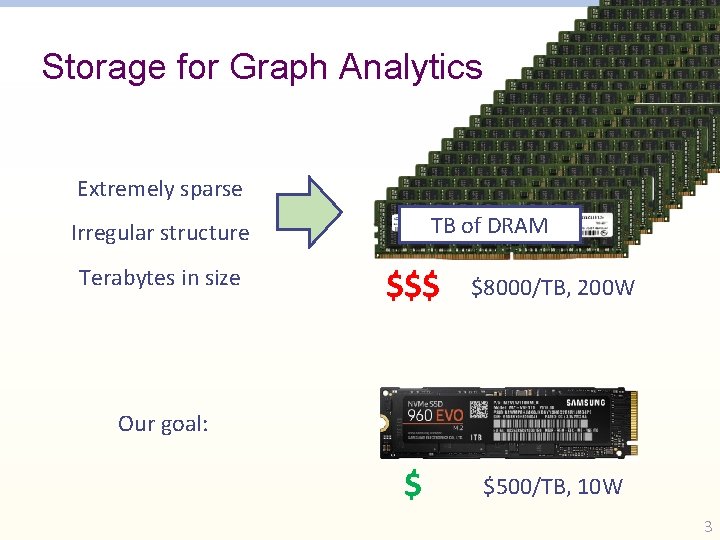

Storage for Graph Analytics

Graphs are:
• Extremely sparse
• Irregular in structure
• Terabytes in size

Storage options:
• TB of DRAM ($$$): $8000/TB, 200 W
• Our goal ($): $500/TB, 10 W

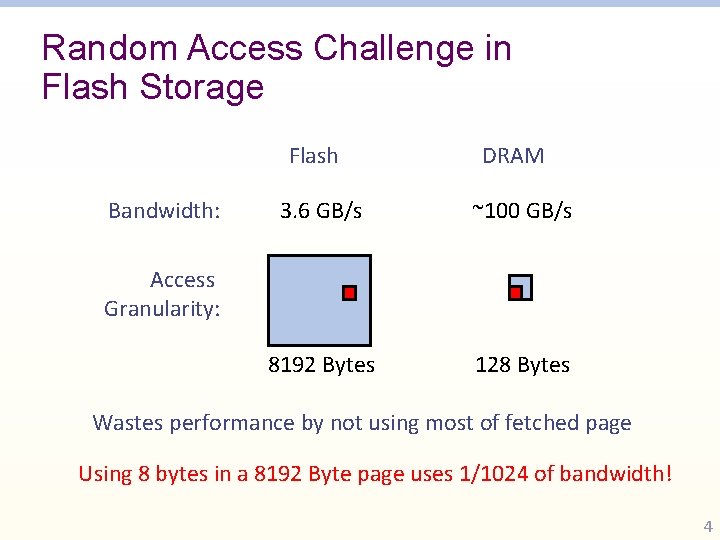

Random Access Challenge in Flash Storage

• Bandwidth: flash 3.6 GB/s, DRAM ~100 GB/s
• Access granularity: flash 8192 bytes, DRAM 128 bytes

Random access wastes performance by not using most of each fetched page. Using 8 bytes of an 8192-byte page uses 1/1024 of the bandwidth!
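The 1/1024 figure follows directly from the page size. The short calculation below is a sketch that reuses the slide's numbers; the function names are illustrative, and it assumes 1 GB = 1000 MB when converting units.

```python
PAGE_BYTES = 8192      # flash access granularity, from the slide
USED_BYTES = 8         # bytes actually needed per random access
FLASH_BW_GBPS = 3.6    # raw flash bandwidth, from the slide


def useful_fraction(used_bytes, page_bytes):
    """Fraction of each fetched page that the application actually uses."""
    return used_bytes / page_bytes


def effective_bandwidth_mbps(raw_gbps, used_bytes, page_bytes):
    """Useful bandwidth in MB/s, assuming 1 GB = 1000 MB."""
    return raw_gbps * 1000 * useful_fraction(used_bytes, page_bytes)


fraction = useful_fraction(USED_BYTES, PAGE_BYTES)
effective = effective_bandwidth_mbps(FLASH_BW_GBPS, USED_BYTES, PAGE_BYTES)
print(f"useful fraction of each page: 1/{round(1 / fraction)}")
print(f"effective bandwidth: {effective:.2f} MB/s")
```

Under these assumptions, 3.6 GB/s of raw bandwidth yields only about 3.5 MB/s of useful data for fine-grained random access.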

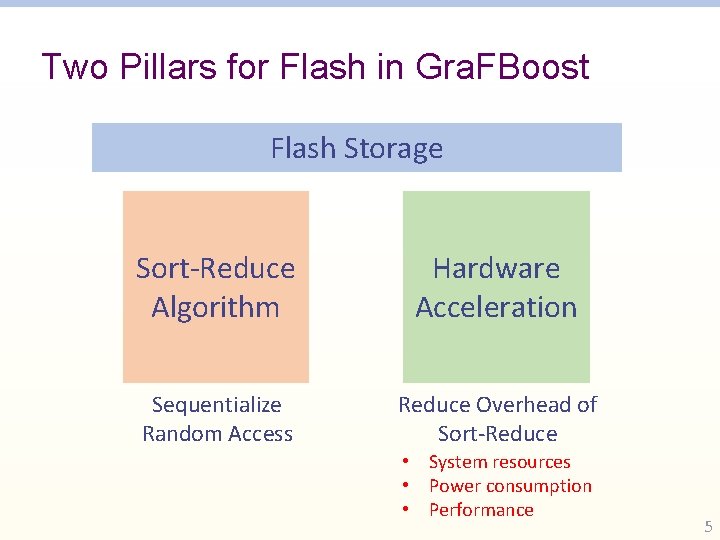

Two Pillars for Flash in GraFBoost

Flash Storage rests on:

Sort-Reduce Algorithm
• Sequentialize random access

Hardware Acceleration
Reduce the overhead of sort-reduce:
• System resources
• Power consumption
• Performance

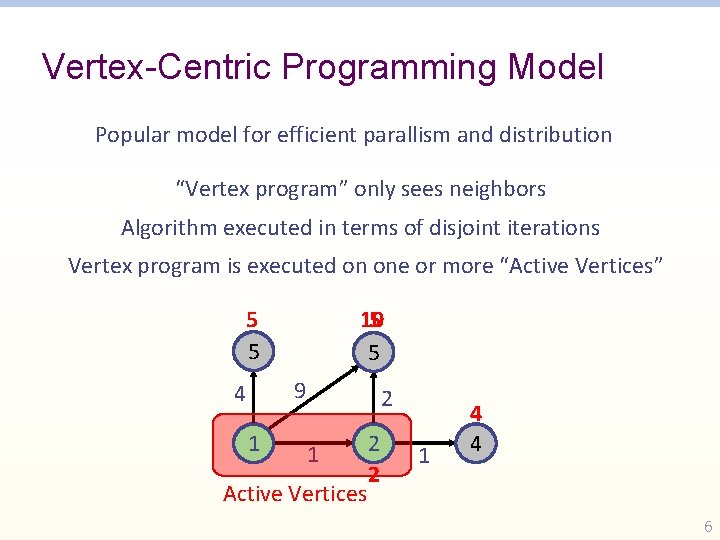

Vertex-Centric Programming Model

• Popular model for efficient parallelism and distribution
• "Vertex program" only sees neighbors
• Algorithm executed in terms of disjoint iterations
• Vertex program is executed on one or more "Active Vertices"

[Figure: example graph with per-vertex values and edge weights; active vertices highlighted]
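As an illustration of the model, here is a minimal in-memory sketch of single-source shortest paths written in vertex-centric style. It is not GraFBoost's API: the function name, the adjacency-dictionary layout, and the choice of shortest paths as the example are all assumptions made for illustration.

```python
import math


def sssp_vertex_centric(graph, source):
    """graph maps each vertex to a list of (neighbor, edge_weight) pairs."""
    dist = {v: math.inf for v in graph}
    dist[source] = 0
    active = {source}
    while active:                       # one pass of this loop = one iteration
        proposed = {}                   # updates generated during this iteration
        for v in active:                # the vertex program runs per active vertex
            for neighbor, weight in graph[v]:   # it only sees its own neighbors
                candidate = dist[v] + weight
                if candidate < proposed.get(neighbor, math.inf):
                    proposed[neighbor] = candidate
        # Apply updates only after every active vertex has run, so that
        # iterations stay disjoint.
        active = set()
        for v, value in proposed.items():
            if value < dist[v]:
                dist[v] = value
                active.add(v)           # changed vertices are active next time
    return dist


example = {"a": [("b", 1), ("c", 4)], "b": [("c", 2)], "c": []}
print(sssp_vertex_centric(example, "a"))
```

The algorithm stops when an iteration leaves no vertex active.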

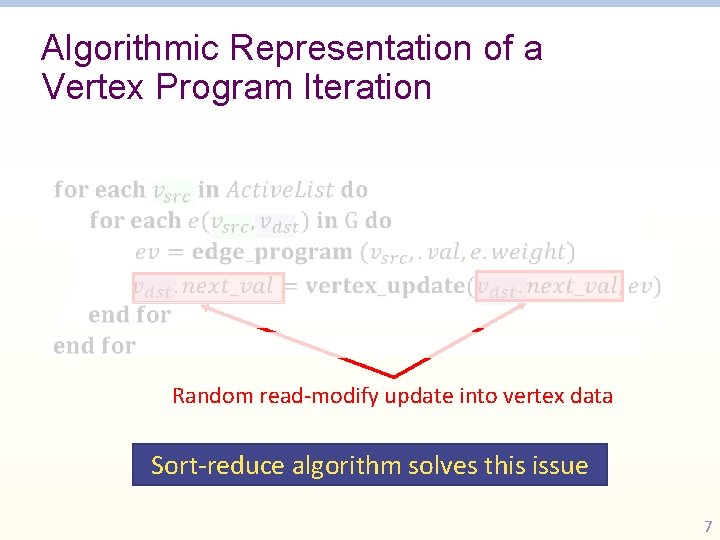

Algorithmic Representation of a Vertex Program Iteration

• Each iteration performs random read-modify updates into vertex data
• The sort-reduce algorithm solves this issue
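One way to picture a single iteration is as two phases: scan the edges of the active vertices to emit an update log, then apply that log to the vertex data. The sketch below assumes hypothetical `edge_program` and `vertex_update` callbacks supplied by the user; these names and the data layout are illustrative, not taken from the slides.

```python
import math


def run_iteration(vertex_values, edges, active, edge_program, vertex_update):
    """Run one iteration and return the vertices that are active next."""
    # Phase 1: walk the edges of each active vertex and emit update requests.
    update_log = []
    for src in active:
        for dst, edge_prop in edges[src]:
            update_log.append((dst, edge_program(vertex_values[src], edge_prop)))

    # Phase 2: read-modify-write into vertex data. The destinations arrive in
    # no particular order, so each access is effectively random.
    changed = set()
    for dst, value in update_log:
        new_value = vertex_update(vertex_values[dst], value)
        if new_value != vertex_values[dst]:
            vertex_values[dst] = new_value
            changed.add(dst)
    return sorted(changed)


# Breadth-first search: a vertex value is its hop count from the root.
values = {0: 0, 1: math.inf, 2: math.inf, 3: math.inf}
edges = {0: [(1, None), (2, None)], 1: [(3, None)], 2: [(3, None)], 3: []}
active = [0]
while active:
    active = run_iteration(values, edges, active, lambda v, _: v + 1, min)
print(values)
```

Phase 2 is the part that maps poorly onto flash storage, because every update may touch a different page.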

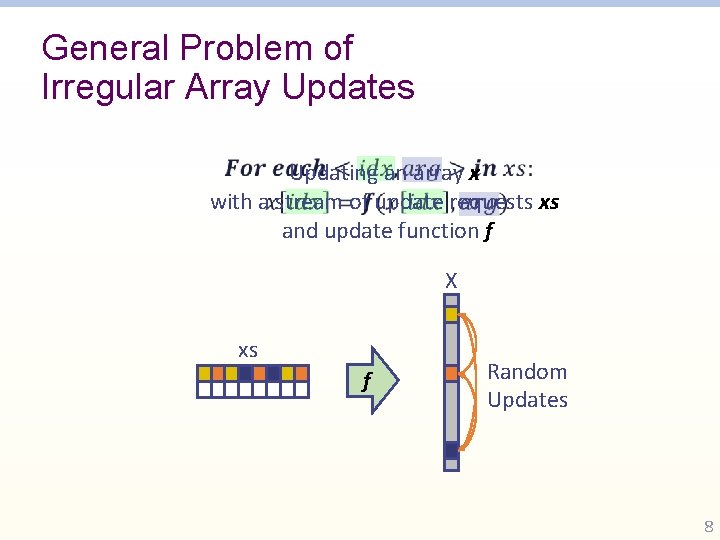

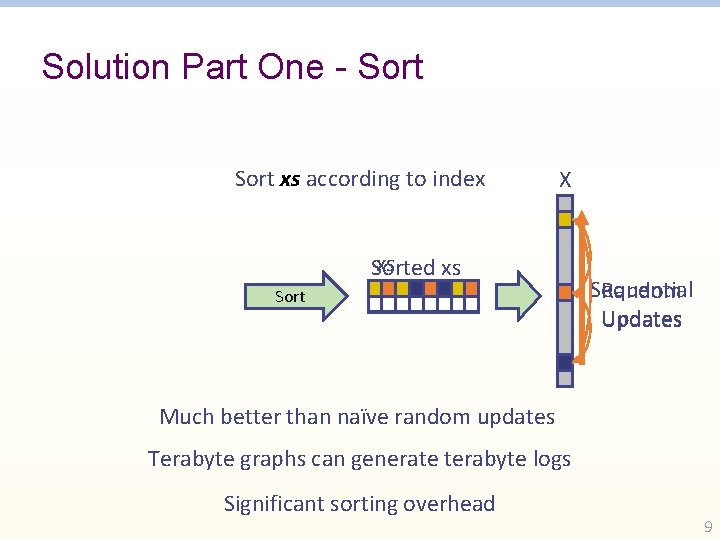

General Problem of Irregular Array Updates

Updating an array x with a stream of update requests xs and an update function f

[Figure: update requests from xs applied through f to random locations in x]
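The problem can be stated in a few lines. This sketch assumes each update request is an `(index, value)` pair; the function name is illustrative.

```python
import operator


def apply_updates(x, xs, f):
    """Apply each (index, value) request in arrival order: x[i] = f(x[i], v)."""
    for index, value in xs:
        x[index] = f(x[index], value)   # the index jumps around: a random access
    return x


x = [0, 0, 0, 0]
xs = [(3, 5), (0, 1), (3, 2), (1, 7), (0, 4)]
print(apply_updates(x, xs, operator.add))
```

When x lives in flash, every one of these accesses can cost a full page read and write.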

Solution Part One - Sort

Sort xs according to index, so that random updates become sequential updates

• Much better than naïve random updates
• Terabyte graphs can generate terabyte logs
• Significant sorting overhead

[Figure: xs is sorted, then applied to x sequentially]
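A minimal sketch of the sort-first approach, assuming `(index, value)` update requests. It uses Python's in-memory `sorted` as a stand-in; a terabyte-scale log would need an external sort, which is where the overhead mentioned above comes from.

```python
def apply_sorted_updates(x, xs, f):
    """Sort requests by index, then apply them in one sequential sweep over x."""
    # sorted() is stable, so requests to the same index keep their arrival order.
    ordered = sorted(xs, key=lambda request: request[0])
    for index, value in ordered:
        x[index] = f(x[index], value)   # indices are now non-decreasing
    return x


xs = [(3, 5), (0, 1), (3, 2), (1, 7), (0, 4)]
print(apply_sorted_updates([0, 0, 0, 0], xs, lambda a, b: a + b))
```

The result matches applying the requests in arrival order, but x is now touched in a single pass from low index to high.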

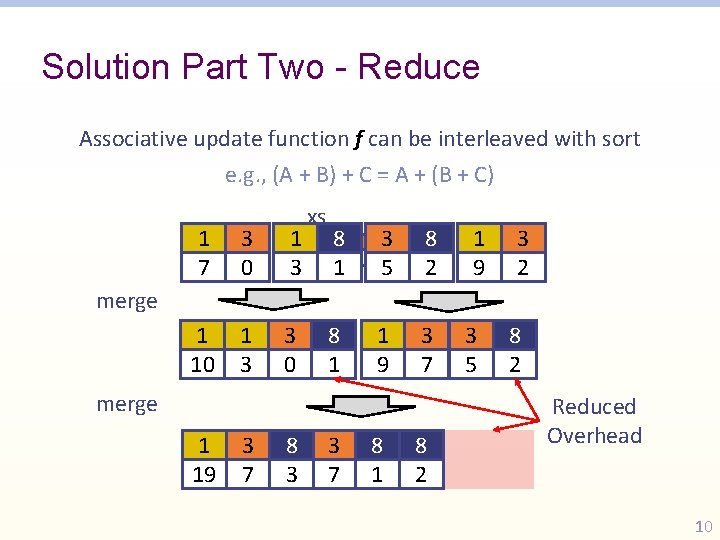

Solution Part Two - Reduce

An associative update function f can be interleaved with the sort
e.g., (A + B) + C = A + (B + C)

[Figure: sorted runs of xs are merged, and entries with the same index are reduced during each merge. Result: reduced overhead]
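The idea can be sketched as a merge sort whose merge step also combines entries that share an index. This is an in-memory illustration with assumed function names and `(index, value)` pairs, not the GraFBoost implementation, which works on data in flash.

```python
def merge_reduce(left, right, f):
    """Merge two index-sorted runs, combining entries that share an index."""
    merged = []
    i = j = 0
    while i < len(left) or j < len(right):
        # Take from the left run on ties so earlier requests are combined first.
        if j == len(right) or (i < len(left) and left[i][0] <= right[j][0]):
            item = left[i]
            i += 1
        else:
            item = right[j]
            j += 1
        if merged and merged[-1][0] == item[0]:
            merged[-1] = (item[0], f(merged[-1][1], item[1]))   # reduce in place
        else:
            merged.append(item)
    return merged


def sort_reduce(xs, f):
    """Merge sort over (index, value) pairs that reduces at every merge level."""
    if len(xs) <= 1:
        return list(xs)
    mid = len(xs) // 2
    return merge_reduce(sort_reduce(xs[:mid], f), sort_reduce(xs[mid:], f), f)


xs = [(3, 5), (0, 1), (3, 2), (1, 7), (0, 4)]
print(sort_reduce(xs, lambda a, b: a + b))
```

Because f is associative, grouping the reductions differently does not change the result, and each merge level can hand a shorter stream to the next.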

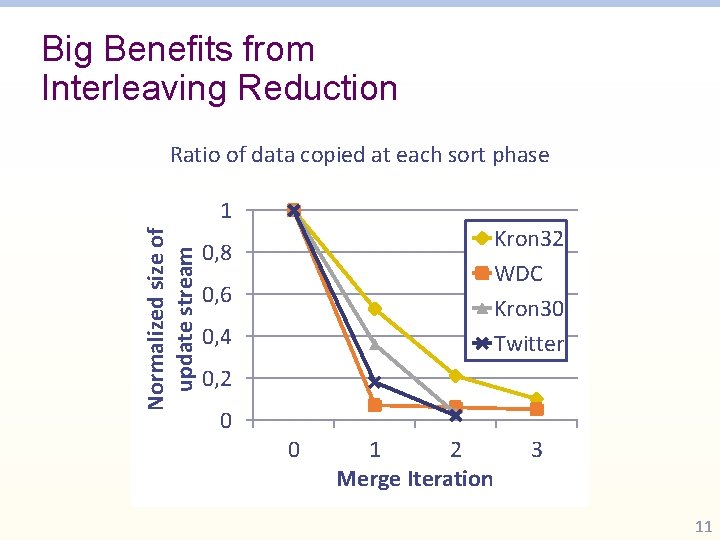

Big Benefits from Interleaving Reduction

[Figure: ratio of data copied at each sort phase. Y axis: normalized size of update stream (0 to 1). X axis: merge iteration (0 to 3). Series: Kron32, Kron30, WDC, Twitter. Annotation: 90%]

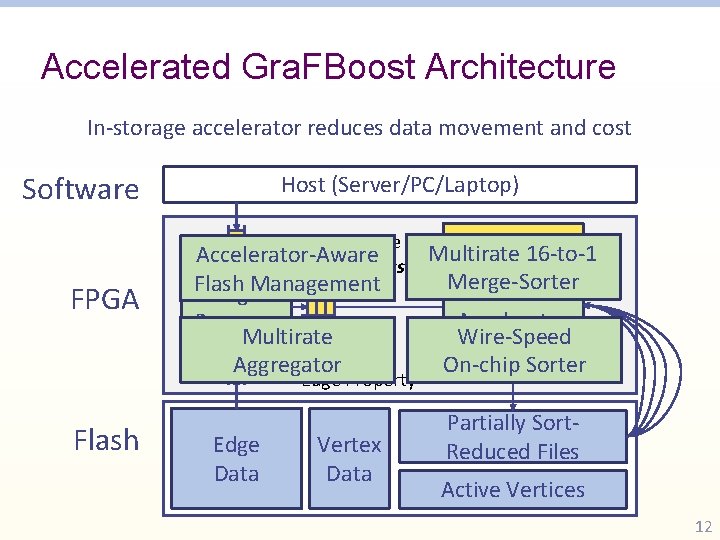

Accelerated GraFBoost Architecture

In-storage accelerator reduces data movement and cost

[Figure: block diagram with the following labels]
• Host (Server/PC/Laptop), software: Edge Program, Vertex Value, Accelerator-Aware Flash Management
• FPGA: Multirate Aggregator, Sort-Reduce Accelerator (Wire-Speed On-chip Sorter, Multirate 16-to-1 Merge-Sorter), 1 GB DRAM
• Flash: Edge Data, Edge Property, Vertex Data, Update Log (xs), Partially Sort-Reduced Files, Active Vertices
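As a software analogy for the 16-to-1 merge-sorter, the sketch below merges up to 16 index-sorted runs at a time and reduces equal indices as it goes. It only illustrates the dataflow; the function names are assumptions, and nothing here models the FPGA design or its multirate behavior.

```python
import heapq
from functools import reduce
from itertools import groupby


def kway_merge_reduce(runs, f, fan_in=16):
    """Merge at most fan_in index-sorted runs into one, reducing equal indices."""
    if len(runs) > fan_in:
        raise ValueError("too many runs for one merge pass")
    merged = heapq.merge(*runs, key=lambda entry: entry[0])
    return [
        (index, reduce(f, (value for _, value in group)))
        for index, group in groupby(merged, key=lambda entry: entry[0])
    ]


def merge_all(runs, f, fan_in=16):
    """Repeat fan_in-to-1 merge passes until a single run remains.

    Assumes every input run is already sorted by index.
    """
    runs = [list(run) for run in runs]
    while len(runs) > 1:
        runs = [
            kway_merge_reduce(runs[i:i + fan_in], f, fan_in)
            for i in range(0, len(runs), fan_in)
        ]
    return runs[0] if runs else []


runs = [[(0, 1), (3, 5)], [(0, 4), (1, 7), (3, 2)], [(2, 9)]]
print(merge_all(runs, lambda a, b: a + b))
```

A wider fan-in means fewer passes over the data, which matters when every pass reads and writes storage.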

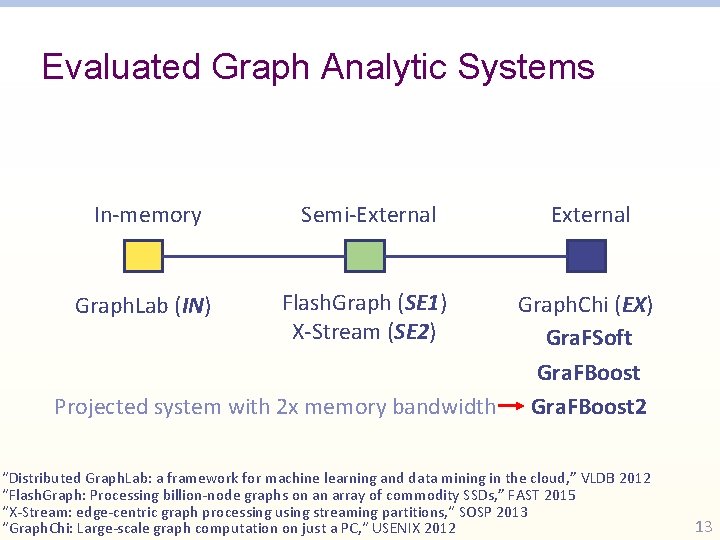

Evaluated Graph Analytic Systems

• In-memory: GraphLab (IN)
• Semi-External: FlashGraph (SE1), X-Stream (SE2)
• GraphChi (EX)
• GraFSoft
• GraFBoost
• GraFBoost2: projected system with 2x memory bandwidth

References:
• "Distributed GraphLab: a framework for machine learning and data mining in the cloud," VLDB 2012
• "FlashGraph: Processing billion-node graphs on an array of commodity SSDs," FAST 2015
• "X-Stream: edge-centric graph processing using streaming partitions," SOSP 2013
• "GraphChi: Large-scale graph computation on just a PC," USENIX 2012

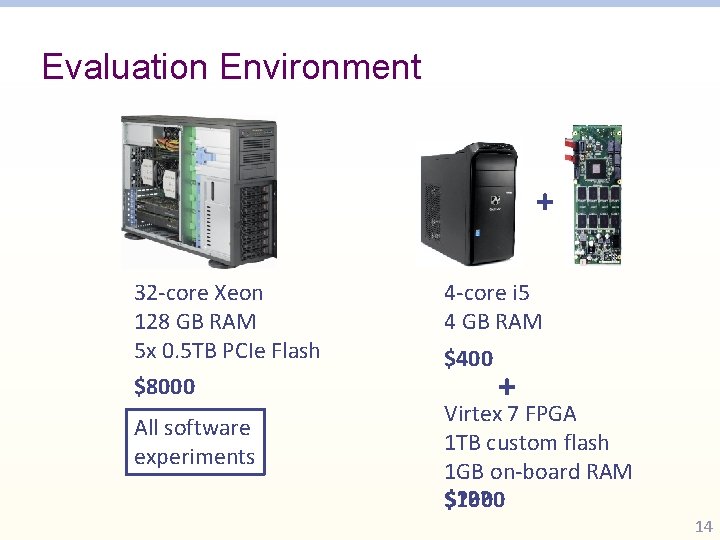

Evaluation Environment

All software experiments:
• 32-core Xeon, 128 GB RAM ($8000)
• + 5 x 0.5 TB PCIe flash

Accelerated system:
• 4-core i5, 4 GB RAM ($400)
• + Virtex 7 FPGA, 1 TB custom flash, 1 GB on-board RAM

Other price labels on the slide: $1000, $???

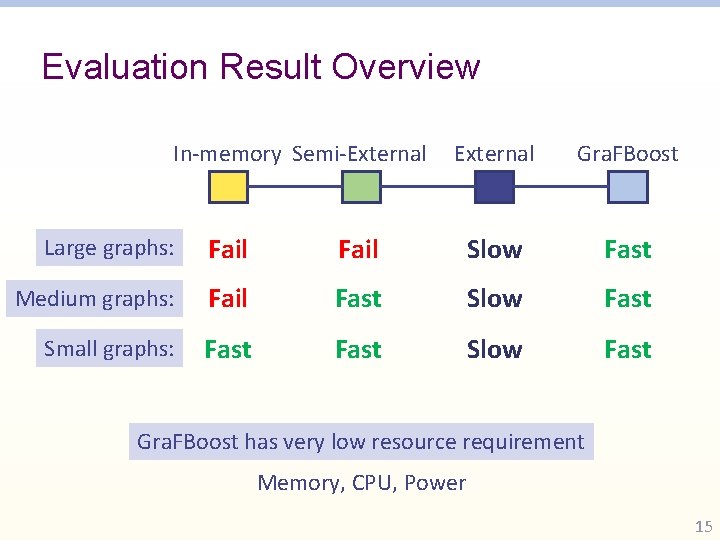

Evaluation Result Overview

Systems compared: In-memory, Semi-External, GraFBoost

• Large graphs: Fail, Slow, Fast
• Medium graphs: Fail, Fast, Slow, Fast
• Small graphs: Fast, Slow, Fast

GraFBoost has very low resource requirements: memory, CPU, power

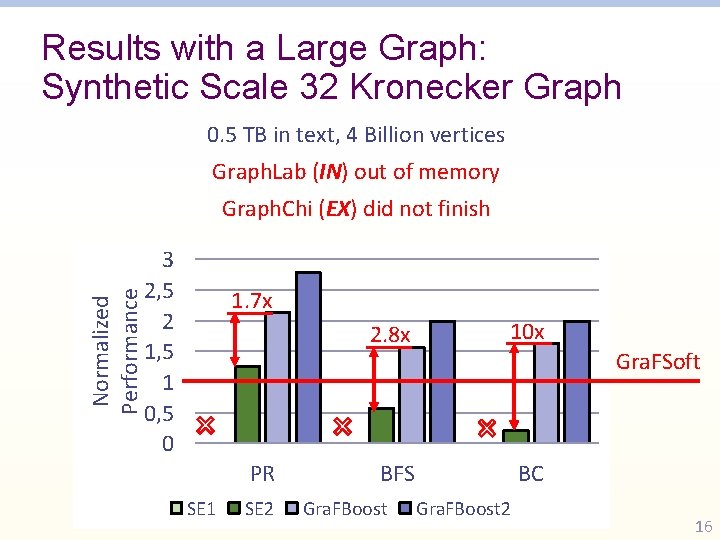

Results with a Large Graph: Synthetic Scale 32 Kronecker Graph

0.5 TB in text, 4 billion vertices

• GraphLab (IN): out of memory
• GraphChi (EX): did not finish

[Figure: normalized performance (0 to 3) on PR, BFS and BC for SE1, SE2, GraFBoost, GraFSoft and GraFBoost2. Annotations: 1.7x, 2.8x, 10x]

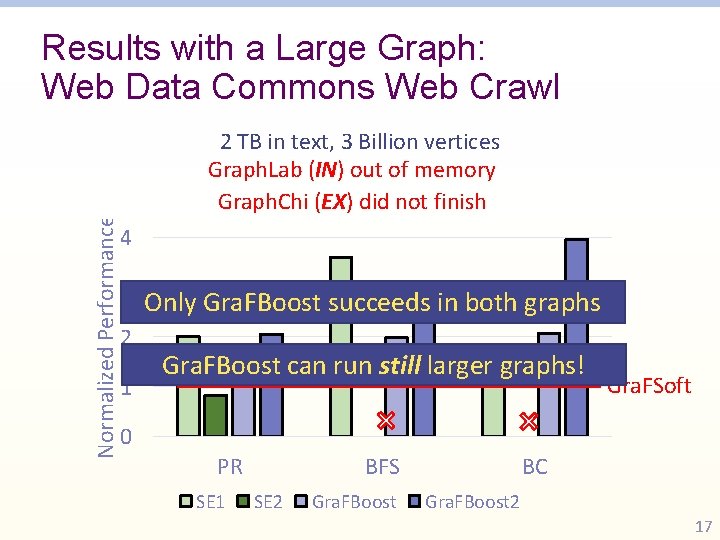

Results with a Large Graph: Web Data Commons Web Crawl

2 TB in text, 3 billion vertices

• GraphLab (IN): out of memory
• GraphChi (EX): did not finish
• Only GraFBoost succeeds in both graphs
• GraFBoost can run still larger graphs!

[Figure: normalized performance (0 to 4) on PR, BFS and BC for SE1, SE2, GraFSoft, GraFBoost and GraFBoost2]

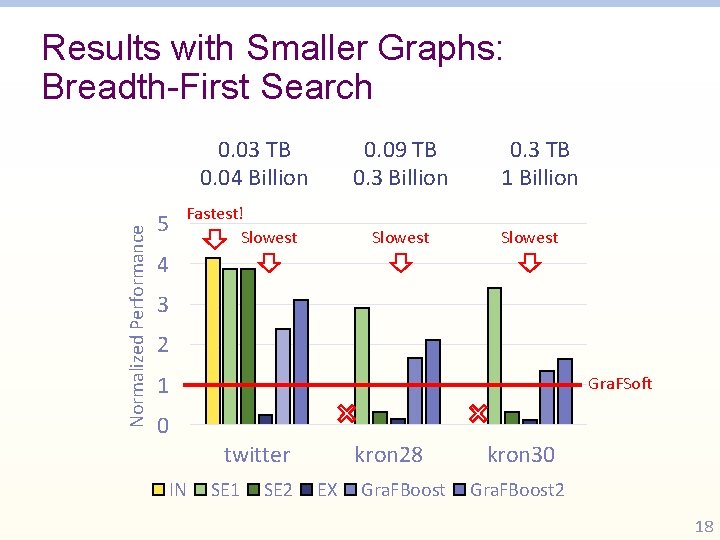

Results with Smaller Graphs: Breadth-First Search

• twitter: 0.03 TB, 0.04 billion vertices
• kron28: 0.09 TB, 0.3 billion vertices
• kron30: 0.3 TB, 1 billion vertices

[Figure: normalized performance (0 to 5) for IN, SE1, SE2, EX, GraFSoft, GraFBoost and GraFBoost2 on each graph. Annotations: "Fastest!", "Slowest", "Slowest"]

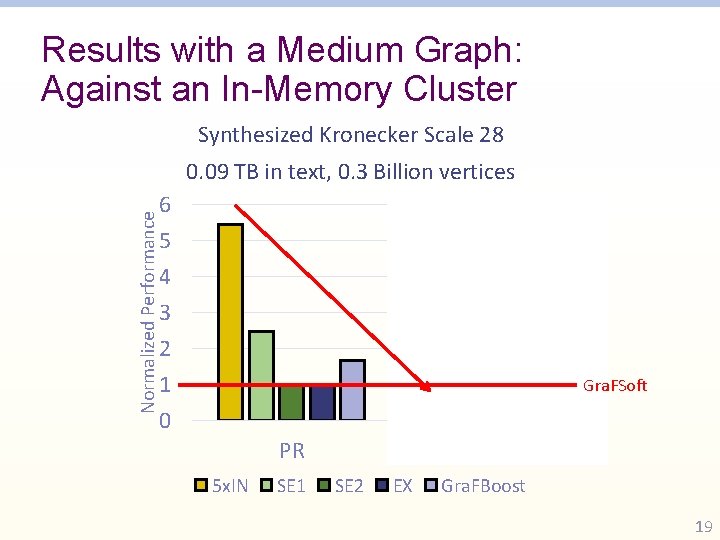

Results with a Medium Graph: Against an In-Memory Cluster

Synthesized Kronecker Scale 28: 0.09 TB in text, 0.3 billion vertices

[Figure: normalized performance (0 to 6) on PR and BFS for 5xIN, SE1, SE2, EX, GraFSoft and GraFBoost]

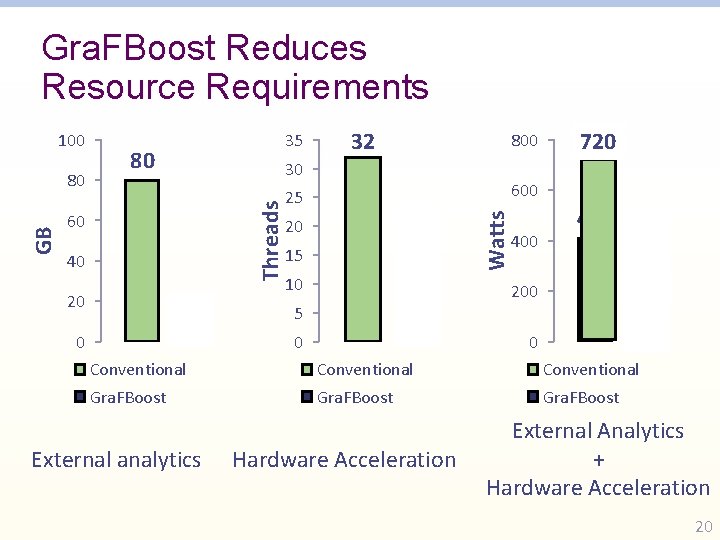

GraFBoost Reduces Resource Requirements

[Figure: bar charts of threads, memory (GB) and power (Watts), comparing a conventional system with GraFBoost]

• External analytics
• Hardware acceleration
• External analytics + hardware acceleration

Future Work

• Open-source GraFBoost: cleaning up code for users!
• Acceleration using Amazon F1
• Commercial accelerated storage: collaborating with Samsung
• More applications using sort-reduce: bioinformatics collaboration with Barcelona Supercomputing Center

Thank You

Flash Storage
• Sort-Reduce Algorithm
• Hardware Acceleration

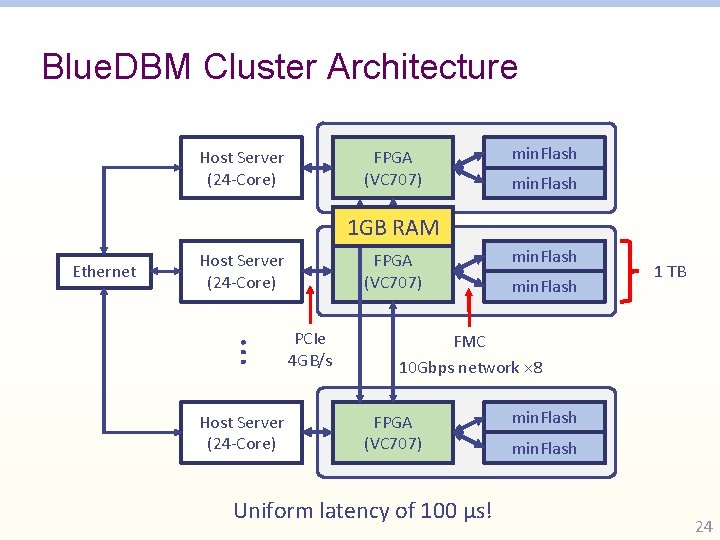

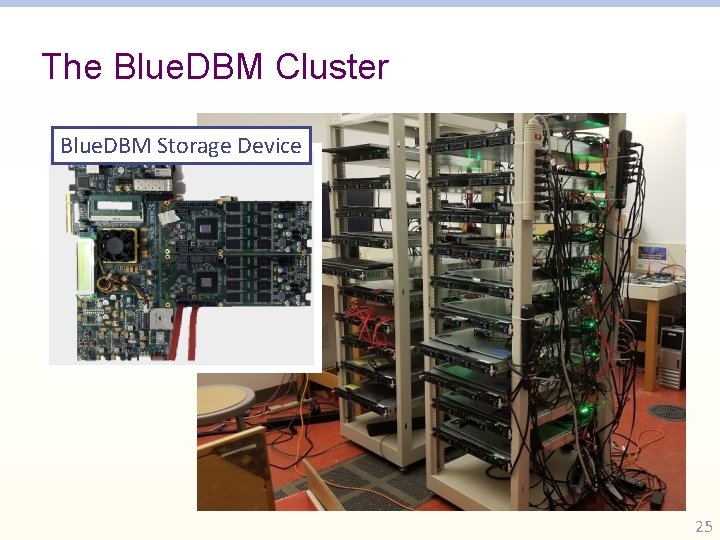

BlueDBM Cluster Architecture

[Figure: host servers (24-core) connected by Ethernet. Each host attaches over PCIe (4 GB/s) to an FPGA (VC707) with 1 GB RAM. Each FPGA connects over FMC to 1 TB of minFlash. The FPGAs are linked by a 10 Gbps network × 8]

Uniform latency of 100 µs!

The BlueDBM Cluster

BlueDBM Storage Device
