GPU-Accelerated Nick Local Image Thresholding Algorithm


GPU-Accelerated Nick Local Image Thresholding Algorithm

M. Hassan Najafi, Anirudh Murali, David J. Lilja, and John Sartori

{najaf011, mural014, lilja, jsartori}@umn.edu

ICPADS-2015

Overview

- Introduction
  - Why image thresholding
  - Different thresholding algorithms
- Nick image thresholding method
  - Algorithm, flow
- Goals and contributions
- Implementations
  - CPU sequential and CUDA GPU parallel implementations
  - GPU considerations and optimizations
- Methodology of experiments
- Experimental results
  - Effect of block size, image size, local window size
  - GPU execution overheads
- Summary and conclusion

2 / 27 GPU-Accelerated Nick Local Image Processing Algorithm

Introduction

- Why image thresholding?
  - Document binarization
  - Classifying pixels as background or foreground
- Looking for a threshold value
  - Pixel intensity > threshold: 1 (foreground)
  - Pixel intensity <= threshold: 0 (background)
- Thresholding algorithms
  - Global methods
  - Local methods
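
The thresholding rule above can be sketched in a few lines. This is only an illustration in plain Python: the function name `binarize`, the sample patch, and the threshold value 128 are assumptions, not taken from the slides.

```python
def binarize(pixels, threshold):
    """Map each intensity to 1 (foreground) if it exceeds the threshold, else 0."""
    return [[1 if p > threshold else 0 for p in row] for row in pixels]


# Hypothetical 2x3 grayscale patch (0..255) and a hand-picked threshold.
patch = [[12, 200, 128],
         [129, 90, 255]]
print(binarize(patch, 128))  # [[0, 1, 0], [1, 0, 1]]
```

A global method applies one `threshold` to the whole image, as here. A local method would compute a separate threshold for every pixel.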

Introduction

- Global methods
  - A single threshold for the whole image
  - Otsu, Kaputar, Abutaleb, Monte, Don
  - Often very fast
  - But weak performance when the illumination over the document is not uniform
- Local methods (adaptive methods)
  - A different threshold for each pixel
  - Nick, Bernsen, Niblack, Sauvola
  - Good results even on degraded documents
  - But too slow

![Introduction: looking for the best local thresholding algorithm (Gatos et al. 2006)](https://slidetodoc.com/presentation_image_h2/8fe3575125ba53aa0aca41acc1a2ee88/image-5.jpg)

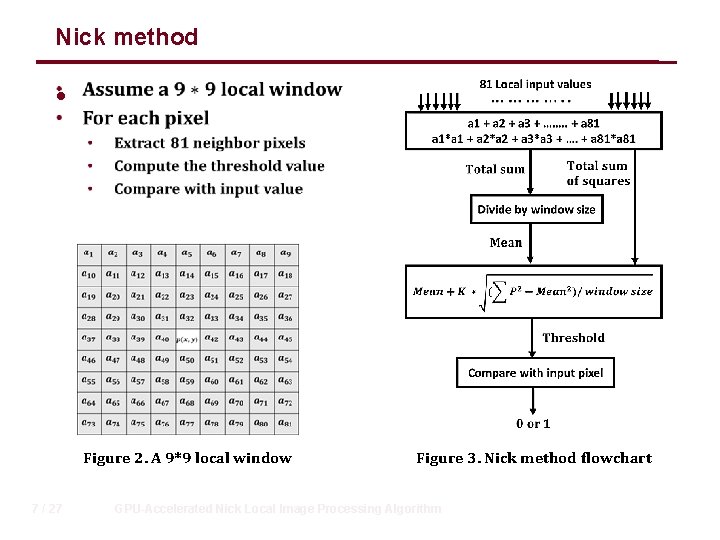

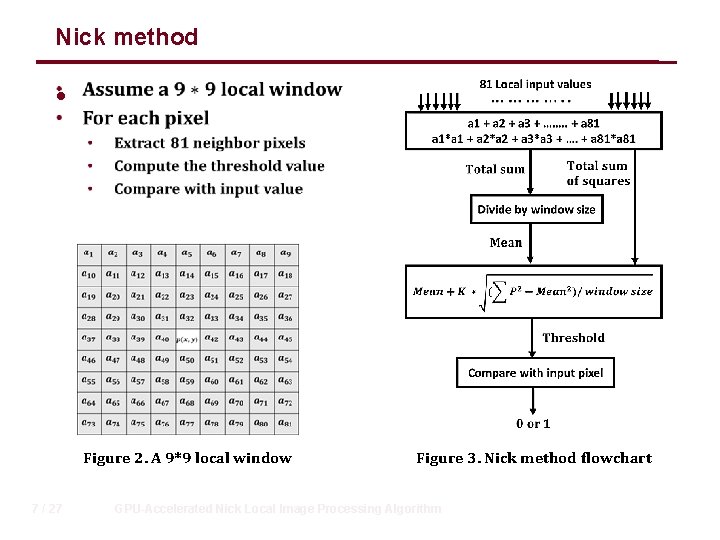

Introduction

- Looking for the best local thresholding algorithm [Gatos et al. 2006]
- Nick method
  - Good performance even on severely degraded document images

Figure 1. (Left) Original input images. (Right) Outputs of binarization using the Nick method.

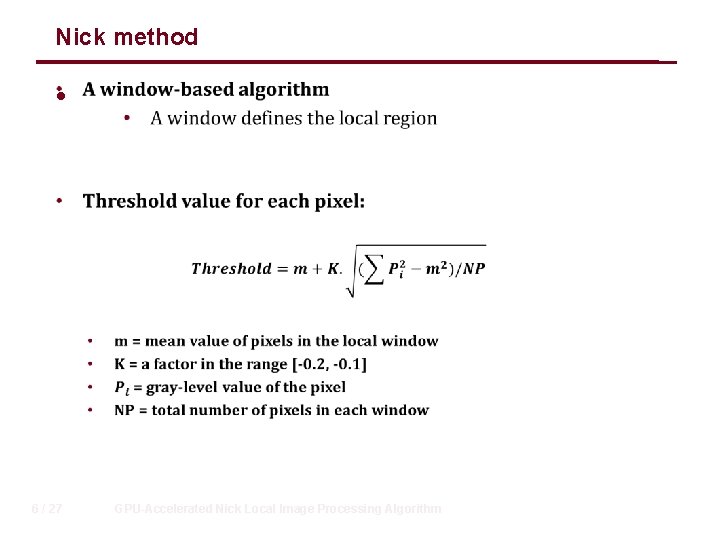

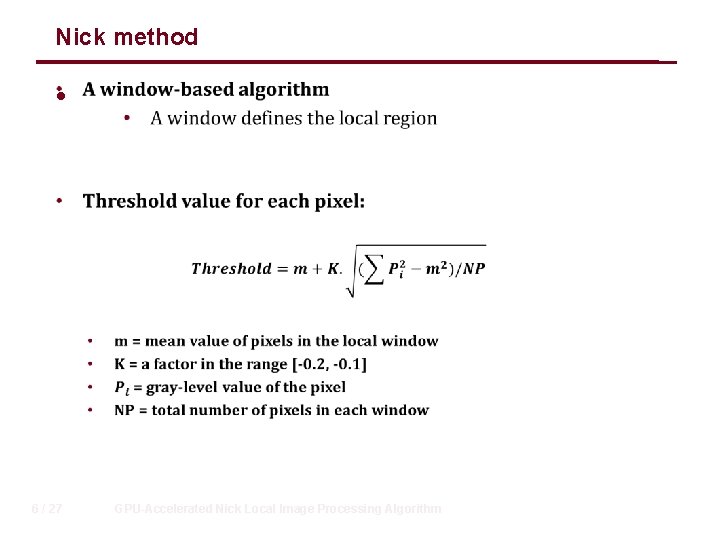

Nick method
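
For reference, the Nick threshold for a local window, as defined by Khurshid et al. [3], is commonly written as follows. The symbol names are those usually used in the literature, not taken from the slides: $m$ is the mean intensity in the window, $p_i$ are the pixel intensities in the window, $NP$ is the number of pixels in the window, and $k$ is a tunable factor (values between $-0.2$ and $-0.1$ are typically suggested).

$$
T = m + k \sqrt{\frac{\sum_{i=1}^{NP} p_i^{2} - m^{2}}{NP}}
$$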

Nick method

Figure 2. A 9 x 9 local window.

Figure 3. Nick method flowchart.

Goals and contributions

- Exploiting graphics processing units (GPUs)
  - Solve the long-latency problem of the Nick method
- We develop three work-efficient CUDA kernels
  - Difference: how they load and access image pixels
- How changing block size, window size, and image size affects the maximum achievable speedup
- Performance scalability of the developed CUDA kernels as the GPU architecture scales up
- Developing several linear regression models to predict the total binarization time

Implementation: CPU single-thread

- Need a reference
  - An optimized single-thread implementation of the Nick method
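
The slides do not say how the sequential reference was optimized. One well-known option for local thresholding is the integral-image technique of Shafait et al. [2], which makes each window sum a constant-time lookup. The sketch below assumes that approach purely for illustration. The function names, the clipping of windows at the image border, the default `k = -0.2`, and the "intensity > threshold is foreground" convention are all assumptions.

```python
import math


def integral(image, power=1):
    """Summed-area table of image**power with a zero border row and column."""
    height, width = len(image), len(image[0])
    table = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += image[y][x] ** power
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table


def window_sum(table, x0, y0, x1, y1):
    """Sum over the inclusive rectangle (x0, y0)-(x1, y1) in constant time."""
    return (table[y1 + 1][x1 + 1] - table[y0][x1 + 1]
            - table[y1 + 1][x0] + table[y0][x0])


def nick_binarize(image, window=9, k=-0.2):
    """Sequential Nick binarization of a 2-D list of integer intensities."""
    height, width = len(image), len(image[0])
    half = window // 2
    sums, squares = integral(image, 1), integral(image, 2)
    output = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Clip the local window to the image bounds (assumption).
            x0, x1 = max(0, x - half), min(width - 1, x + half)
            y0, y1 = max(0, y - half), min(height - 1, y + half)
            count = (x1 - x0 + 1) * (y1 - y0 + 1)
            mean = window_sum(sums, x0, y0, x1, y1) / count
            total_sq = window_sum(squares, x0, y0, x1, y1)
            # Nick threshold: T = m + k * sqrt((sum(p^2) - m^2) / NP).
            threshold = mean + k * math.sqrt((total_sq - mean * mean) / count)
            output[y][x] = 1 if image[y][x] > threshold else 0
    return output


# Hypothetical 3x3 image: one dark pixel on a bright background.
sample = [[200, 200, 200], [200, 10, 200], [200, 200, 200]]
print(nick_binarize(sample, window=3))
```

With the tables built once, the cost per pixel no longer depends on the window size. A naive version that rescans every window does not have this property.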

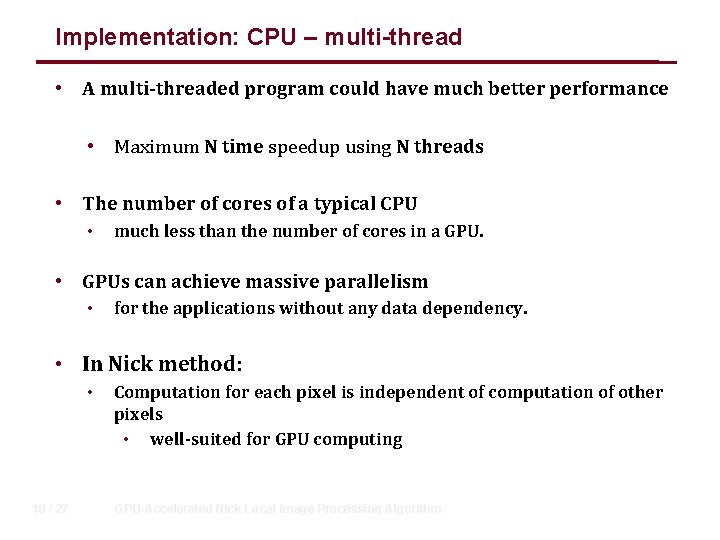

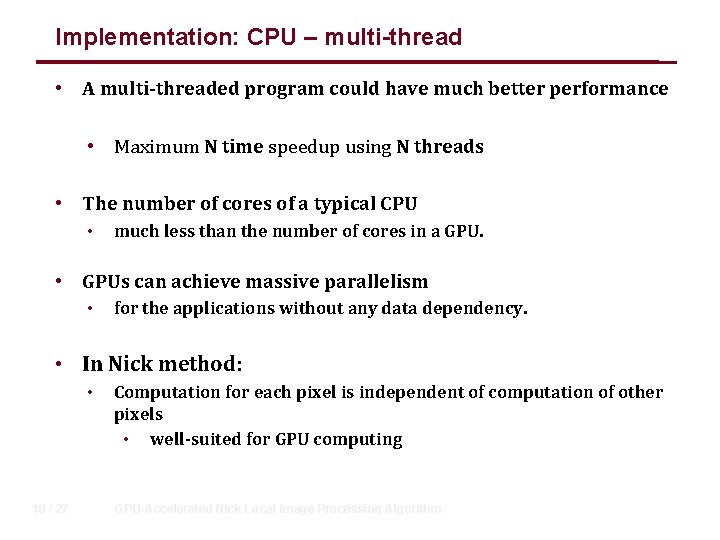

Implementation: CPU multi-thread

- A multi-threaded program could have much better performance
  - At most an N-times speedup using N threads
- The number of cores in a typical CPU
  - Much less than the number of cores in a GPU
- GPUs can achieve massive parallelism
  - For applications without data dependencies
- In the Nick method:
  - Computation for each pixel is independent of the computation for other pixels
  - Well suited for GPU computing

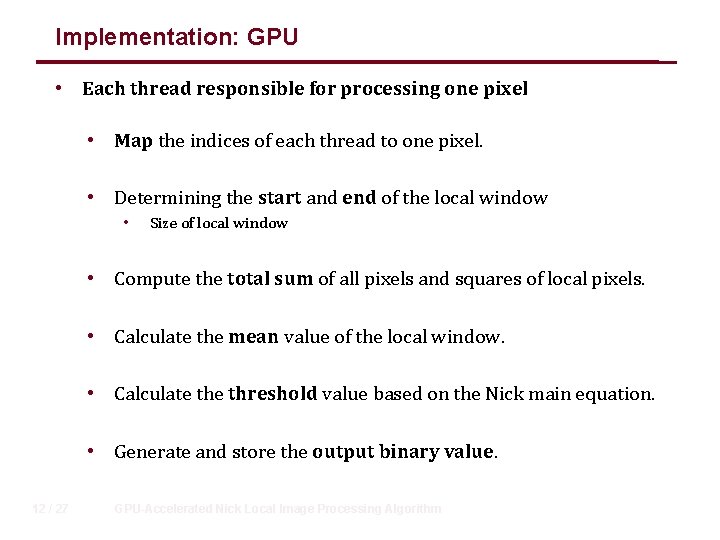

Implementation: GPU

- Three work-efficient CUDA kernels
  - Difference: the way they load and access image pixel intensities
- The first kernel (Global)
  - No shared memory; loads all pixels from global memory
  - Simple implementation
- The second kernel (Global-Shared)
  - Exploits both SM shared memory and global memory
  - Shared memory to reuse data
- The third kernel (Shared)
  - Relies only on shared memory
  - More data reuse
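
To see why shared memory enables data reuse, it helps to count pixel reads for one thread block. The sketch below is an idealized count, not a measurement from the slides. It assumes a square block, an odd window size, no caching, and no clipping at image borders. The function name `loads_per_block` is hypothetical.

```python
def loads_per_block(block, window):
    """Count pixel reads needed by one block x block thread block.

    Returns (global_only, tiled): reads when every thread fetches its whole
    window from global memory, versus reading the block's tile plus halo once.
    """
    global_only = block * block * window * window
    tile_side = block + window - 1  # block pixels plus a halo of window // 2 per side
    tiled = tile_side * tile_side
    return global_only, tiled


for window in (9, 15, 33):
    g, t = loads_per_block(16, window)
    print(f"window {window}x{window}: {g} vs {t} loads, reuse factor {g / t:.1f}")
```

Under these assumptions, a 16 x 16 block with a 9 x 9 window needs 20736 window reads but only a 24 x 24 tile of 576 distinct pixels. Staging that tile in shared memory is meant to exploit this gap.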

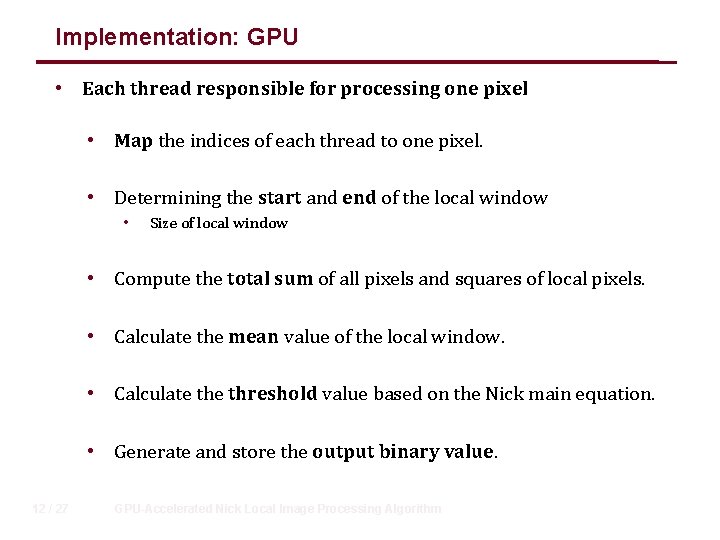

Implementation: GPU

- Each thread is responsible for processing one pixel
  - Map the indices of each thread to one pixel.
  - Determine the start and end of the local window.
    - Size of local window
  - Compute the sum of all pixels and the sum of squares of the pixels in the local window.
  - Calculate the mean value of the local window.
  - Calculate the threshold value based on the main Nick equation.
  - Generate and store the output binary value.
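
The per-thread steps listed above can be emulated on the host as follows. This is a plain-Python sketch, not the authors' CUDA kernel. The tuples `block_idx`, `thread_idx`, and `block_dim` stand in for CUDA's `blockIdx`, `threadIdx`, and `blockDim`. The border clipping and the default `k = -0.2` are assumptions.

```python
import math


def nick_thread(image, block_idx, thread_idx, block_dim, window=9, k=-0.2):
    """Emulate the work of one GPU thread; returns (x, y, bit) or None."""
    height, width = len(image), len(image[0])
    # 1. Map the thread indices to one pixel.
    x = block_idx[0] * block_dim[0] + thread_idx[0]
    y = block_idx[1] * block_dim[1] + thread_idx[1]
    if x >= width or y >= height:
        return None  # this thread falls outside the image
    # 2. Start and end of the local window, clipped to the image.
    half = window // 2
    x0, x1 = max(0, x - half), min(width - 1, x + half)
    y0, y1 = max(0, y - half), min(height - 1, y + half)
    # 3. Sum of the pixels and sum of the squared pixels in the window.
    values = [image[yy][xx] for yy in range(y0, y1 + 1) for xx in range(x0, x1 + 1)]
    total = sum(values)
    total_sq = sum(v * v for v in values)
    # 4. Mean of the local window.
    count = len(values)
    mean = total / count
    # 5. Nick threshold: T = m + k * sqrt((sum(p^2) - m^2) / NP).
    threshold = mean + k * math.sqrt((total_sq - mean * mean) / count)
    # 6. Output binary value for this pixel.
    return x, y, 1 if image[y][x] > threshold else 0


# Hypothetical 3x3 image processed with 2x2 thread blocks.
sample = [[200, 200, 200], [200, 10, 200], [200, 200, 200]]
print(nick_thread(sample, (0, 0), (1, 1), (2, 2), window=3))  # (1, 1, 0)
print(nick_thread(sample, (1, 1), (1, 1), (2, 2), window=3))  # None
```

No thread reads another thread's result, so the calls can run in any order or all at once.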

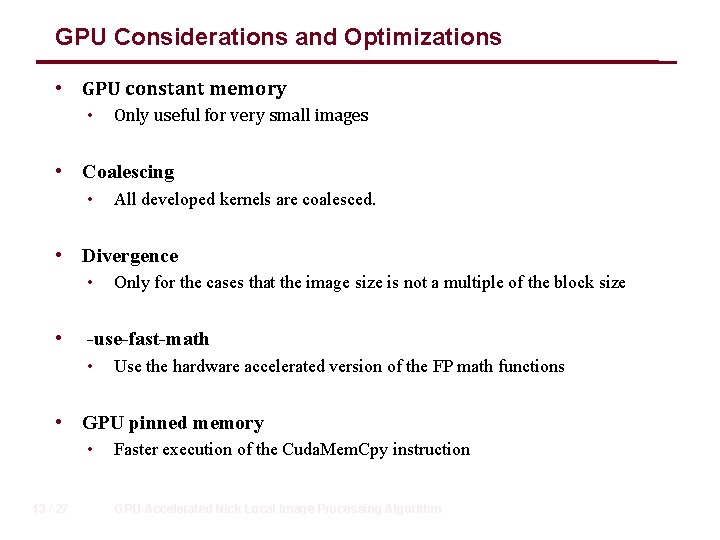

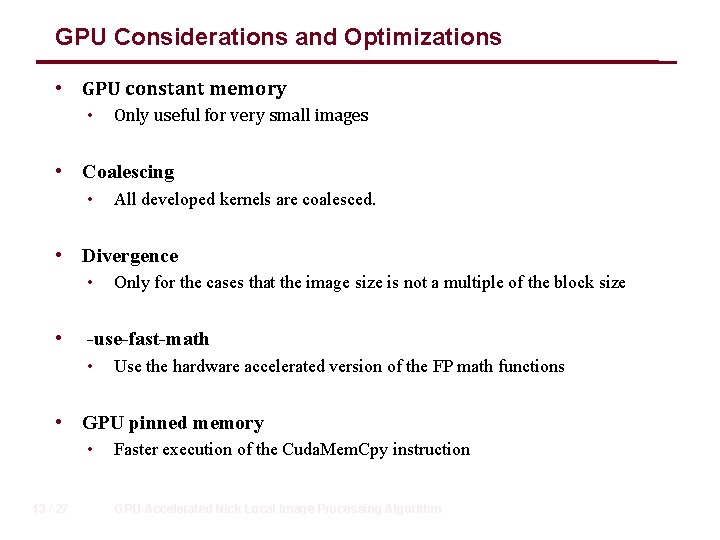

GPU Considerations and Optimizations

- GPU constant memory
  - Only useful for very small images
- Coalescing
  - All developed kernels use coalesced memory accesses
- Divergence
  - Only in cases where the image size is not a multiple of the block size
- -use_fast_math
  - Use the hardware-accelerated versions of the FP math functions
- GPU pinned memory
  - Faster execution of the cudaMemcpy calls
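
The divergence point can be made concrete by counting how many thread blocks hang over the image edge. The sketch below assumes square blocks, a grid sized by ceiling division, and that sizes such as 75 x 80 are given as width x height. The function name `grid_for` is hypothetical.

```python
def grid_for(width, height, block):
    """Return (blocks_x, blocks_y, partial_blocks) for a square thread block."""
    blocks_x = (width + block - 1) // block   # ceiling division
    blocks_y = (height + block - 1) // block
    full_x, full_y = width // block, height // block
    # Blocks that contain at least one thread outside the image.
    partial = blocks_x * blocks_y - full_x * full_y
    return blocks_x, blocks_y, partial


print(grid_for(75, 80, 16))      # (5, 5, 5)
print(grid_for(2500, 4000, 16))  # (157, 250, 250)
```

Only the partial blocks contain threads that fail the bounds check, so under these assumptions divergence from that check is limited to blocks along the image edge.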

Methodology

- Nine different real input images
  - Image sizes from 75 x 80 to 2500 x 4000
- Effect of increasing the size of the local window
  - 9 x 9, 15 x 15, and 33 x 33
- The number of threads in each block when launching GPU kernels
  - 8 x 8, 16 x 16, and 32 x 32
- CPU for sequential version

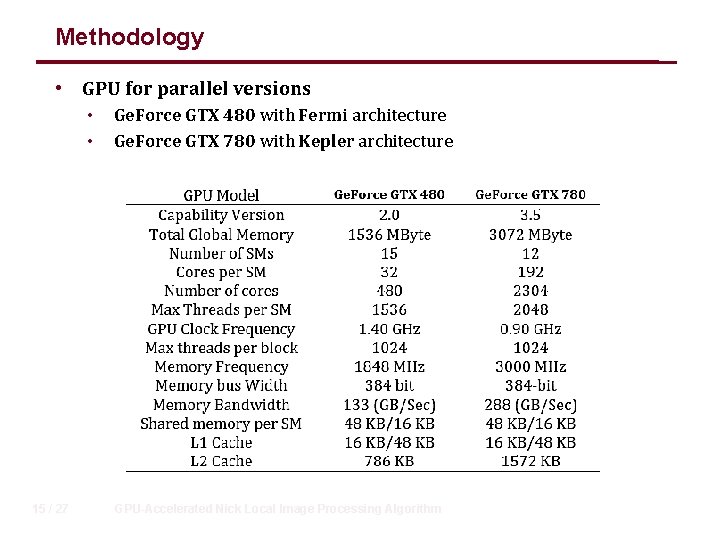

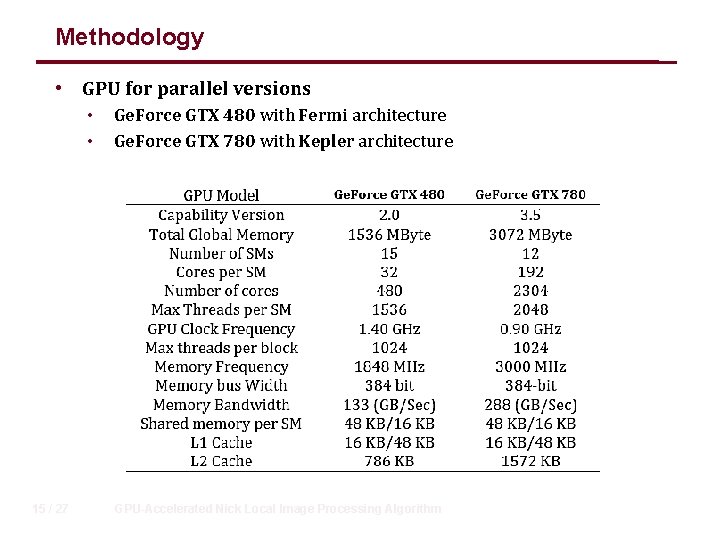

Methodology

- GPU for parallel versions
  - GeForce GTX 480 with Fermi architecture
  - GeForce GTX 780 with Kepler architecture

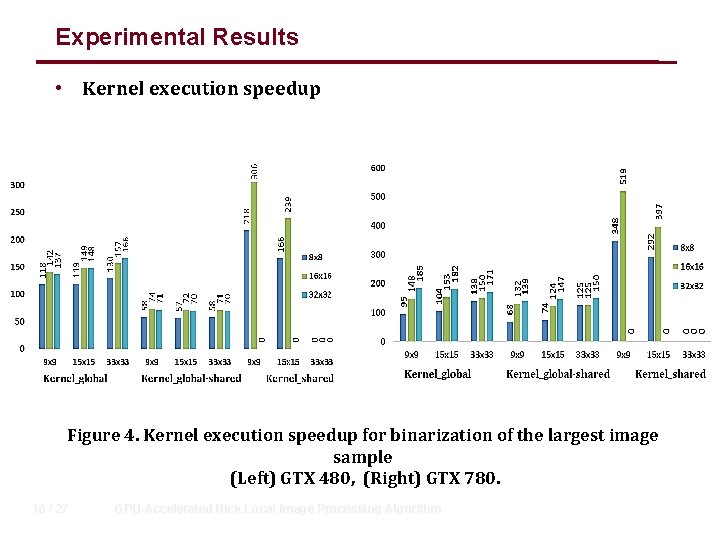

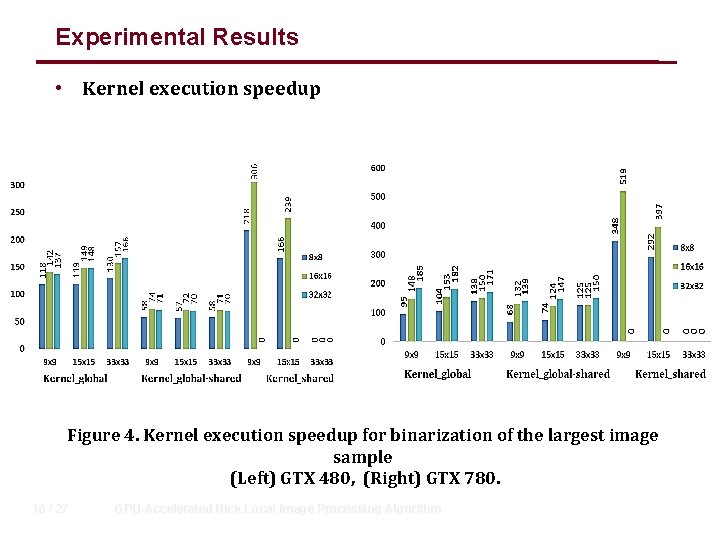

Experimental Results

- Kernel execution speedup

Figure 4. Kernel execution speedup for binarization of the largest image sample: (Left) GTX 480, (Right) GTX 780.

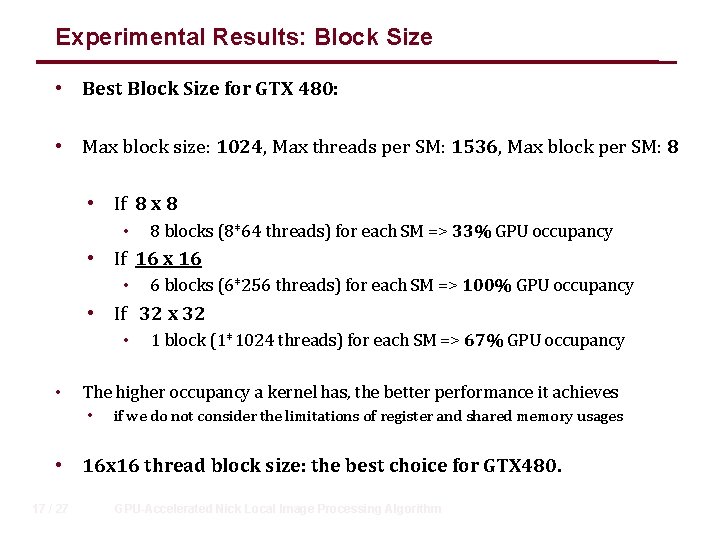

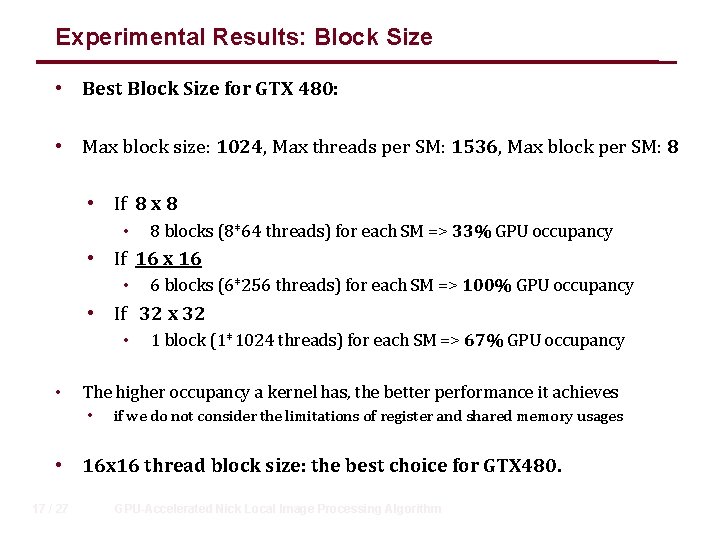

Experimental Results: Block Size

- Best block size for GTX 480:
  - Max block size: 1024, max threads per SM: 1536, max blocks per SM: 8
  - If 8 x 8
    - 8 blocks (8*64 threads) for each SM => 33% GPU occupancy
  - If 16 x 16
    - 6 blocks (6*256 threads) for each SM => 100% GPU occupancy
  - If 32 x 32
    - 1 block (1*1024 threads) for each SM => 67% GPU occupancy
- The higher the occupancy of a kernel, the better its performance
  - if we do not consider the limits on register and shared memory usage
- 16 x 16 thread block size: the best choice for GTX 480

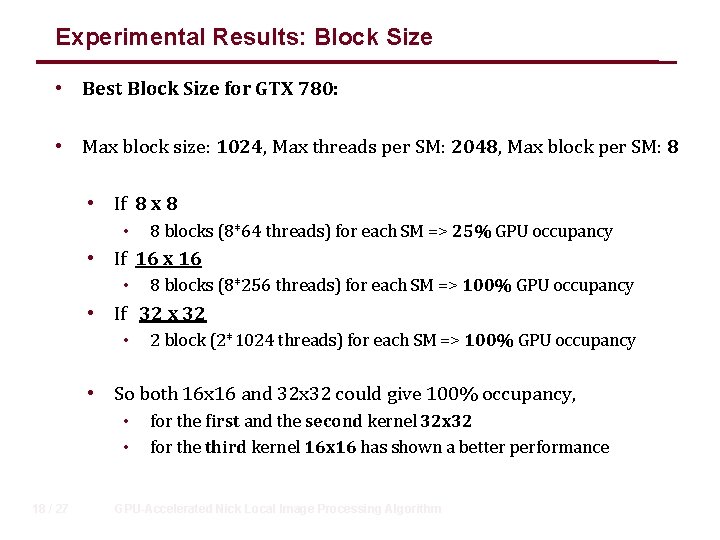

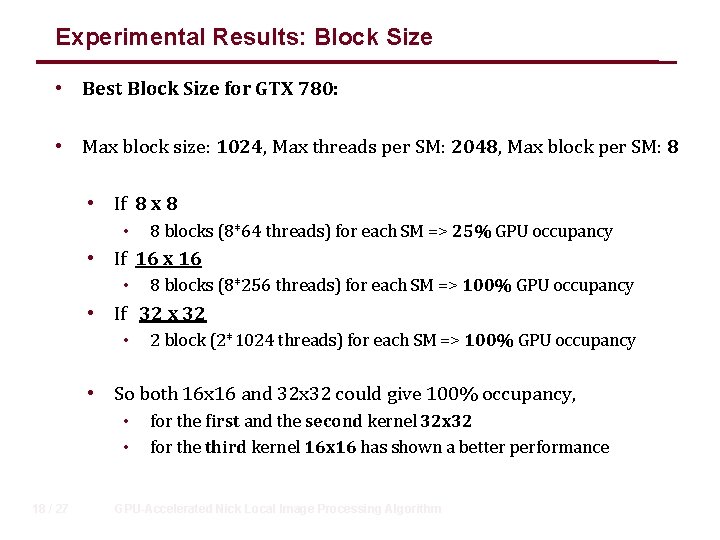

Experimental Results: Block Size

- Best block size for GTX 780:
  - Max block size: 1024, max threads per SM: 2048, max blocks per SM: 8
  - If 8 x 8
    - 8 blocks (8*64 threads) for each SM => 25% GPU occupancy
  - If 16 x 16
    - 8 blocks (8*256 threads) for each SM => 100% GPU occupancy
  - If 32 x 32
    - 2 blocks (2*1024 threads) for each SM => 100% GPU occupancy
- So both 16 x 16 and 32 x 32 could give 100% occupancy
  - For the first and the second kernel, 32 x 32 has shown better performance
  - For the third kernel, 16 x 16 has shown better performance
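
The occupancy figures on the two block-size slides follow from simple arithmetic, sketched below. The per-SM limits are passed in exactly as quoted on the slides and are not checked against vendor documentation. Register and shared memory limits are ignored, as the slides also note. The function name `occupancy` is hypothetical.

```python
def occupancy(block_side, max_threads_per_sm, max_blocks_per_sm,
              max_threads_per_block=1024):
    """Return (resident blocks per SM, occupancy as a fraction of max threads)."""
    threads = block_side * block_side
    if threads > max_threads_per_block:
        raise ValueError("block exceeds the maximum block size")
    # Resident blocks are capped by both the block limit and the thread limit.
    blocks = min(max_blocks_per_sm, max_threads_per_sm // threads)
    return blocks, blocks * threads / max_threads_per_sm


# Limits as quoted on the slides: (max threads per SM, max blocks per SM).
for name, max_threads in (("GTX 480", 1536), ("GTX 780", 2048)):
    for side in (8, 16, 32):
        blocks, occ = occupancy(side, max_threads, 8)
        print(f"{name} {side}x{side}: {blocks} blocks/SM, {occ:.0%} occupancy")
```

With these inputs the sketch gives 33%, 100%, and 67% for the GTX 480 and 25%, 100%, and 100% for the GTX 780, the same values as on the slides.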

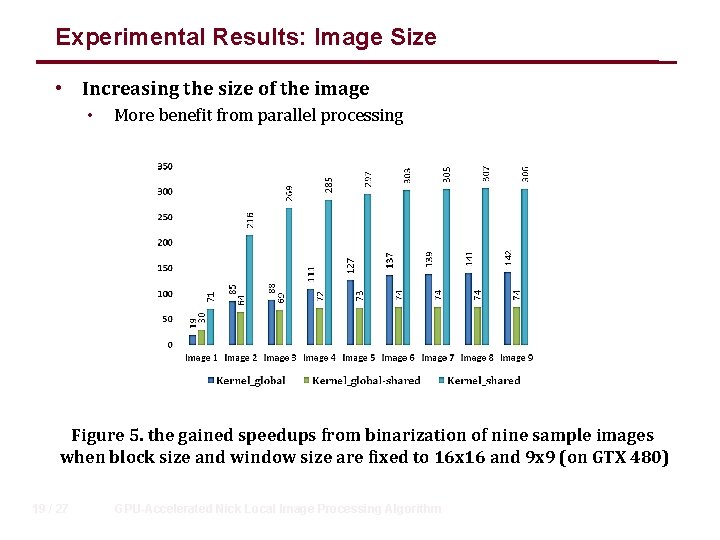

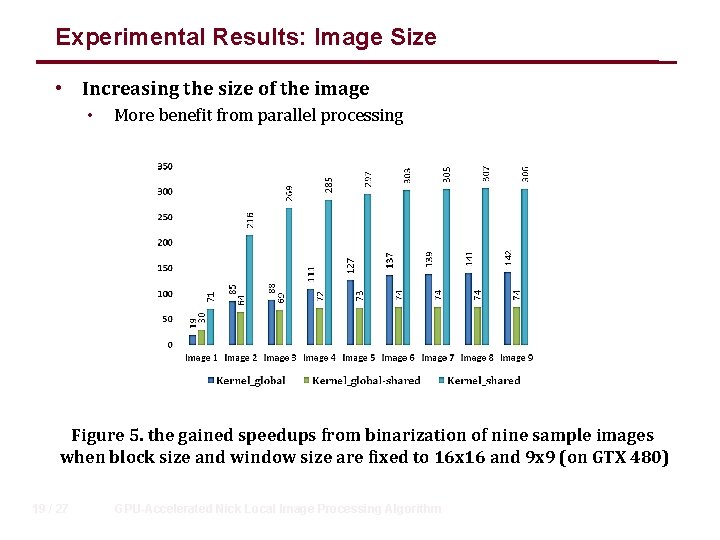

Experimental Results: Image Size

- Increasing the size of the image
  - More benefit from parallel processing

Figure 5. Speedups gained from binarization of the nine sample images when block size and window size are fixed to 16 x 16 and 9 x 9 (on GTX 480).

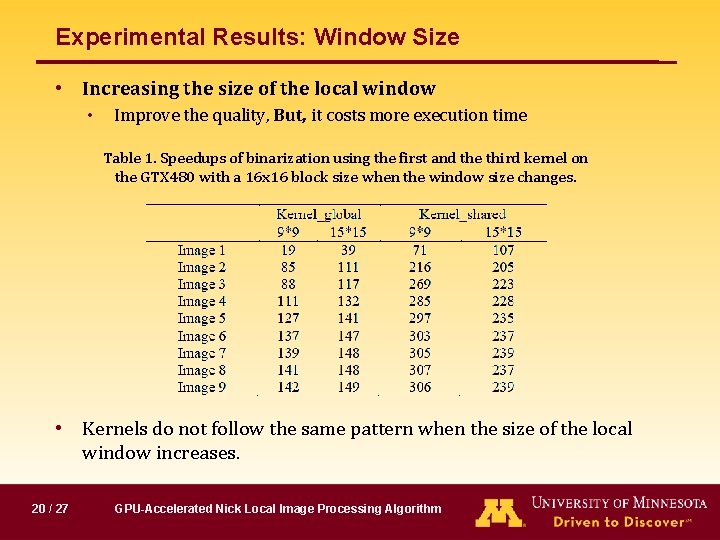

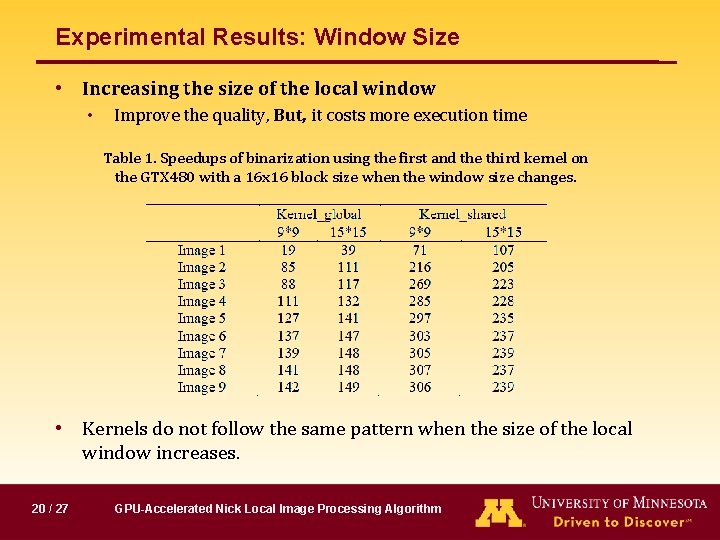

Experimental Results: Window Size

- Increasing the size of the local window
  - Improves the output quality, but costs more execution time

Table 1. Speedups of binarization using the first and the third kernel on the GTX 480 with a 16 x 16 block size when the window size changes.

- Kernels do not follow the same pattern when the size of the local window increases.

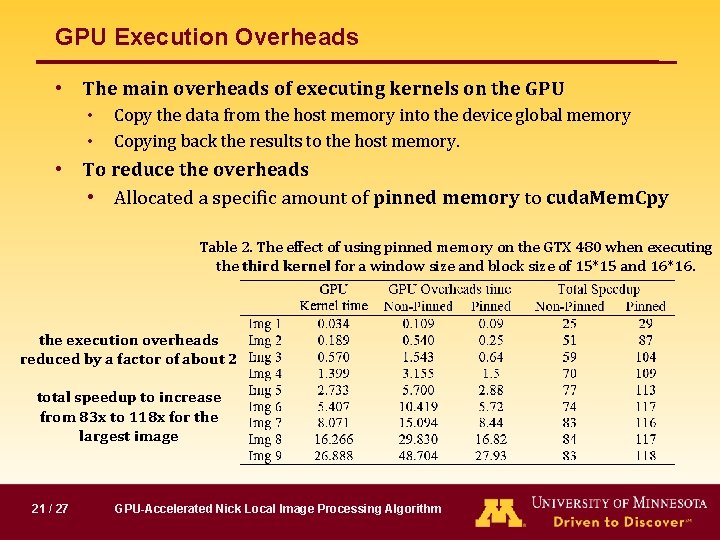

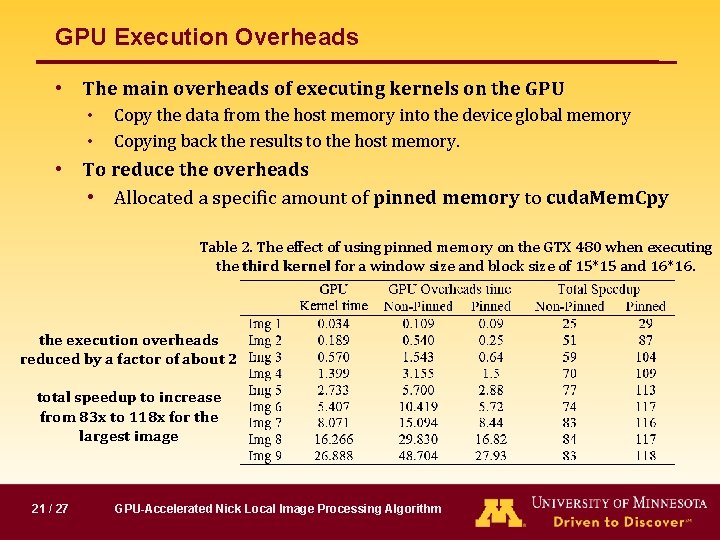

GPU Execution Overheads

- The main overheads of executing kernels on the GPU
  - Copying the data from host memory into device global memory
  - Copying the results back to host memory
- To reduce the overheads
  - Allocated pinned memory for the cudaMemcpy transfers

Table 2. The effect of using pinned memory on the GTX 480 when executing the third kernel with a 15 x 15 window size and a 16 x 16 block size.

- The execution overheads are reduced by a factor of about 2
- Total speedup increases from 83x to 118x for the largest image
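
The relation between transfer overhead and total speedup can be sketched as below. The timings are hypothetical values chosen only to illustrate the effect and are not measurements from Table 2. The function name `total_speedup` is an assumption.

```python
def total_speedup(cpu_time, kernel_time, transfer_time):
    """Speedup over the CPU when host-device copies are counted."""
    return cpu_time / (kernel_time + transfer_time)


# Hypothetical timings in milliseconds (illustration only).
cpu, kernel = 1000.0, 5.0
pageable, pinned = 8.0, 4.0  # transfer overhead halved by pinned memory
print(round(total_speedup(cpu, kernel, pageable), 1))  # 76.9
print(round(total_speedup(cpu, kernel, pinned), 1))    # 111.1
```

When the kernel itself is fast, the copies dominate the denominator, so halving them raises the total speedup substantially even though the kernel time is unchanged.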

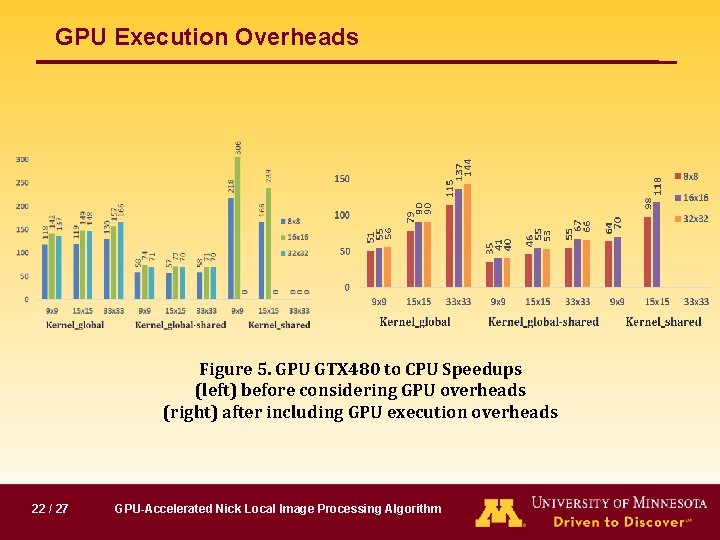

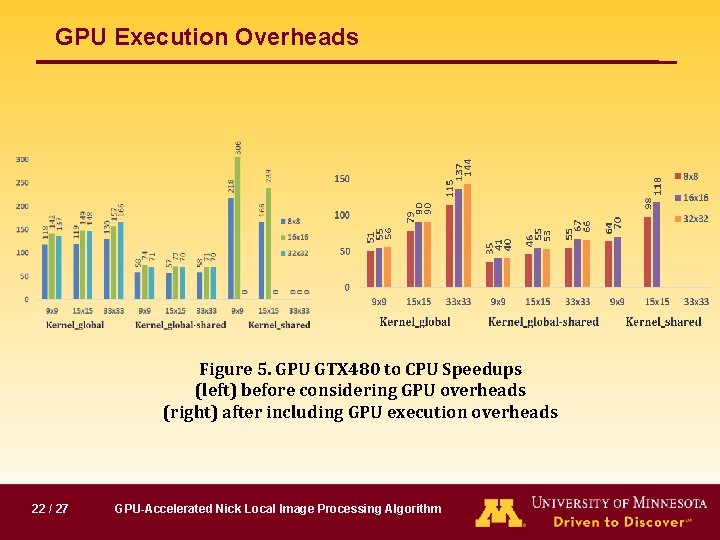

GPU Execution Overheads

Figure 5. GTX 480 GPU-to-CPU speedups (left) before considering GPU overheads and (right) after including GPU execution overheads.

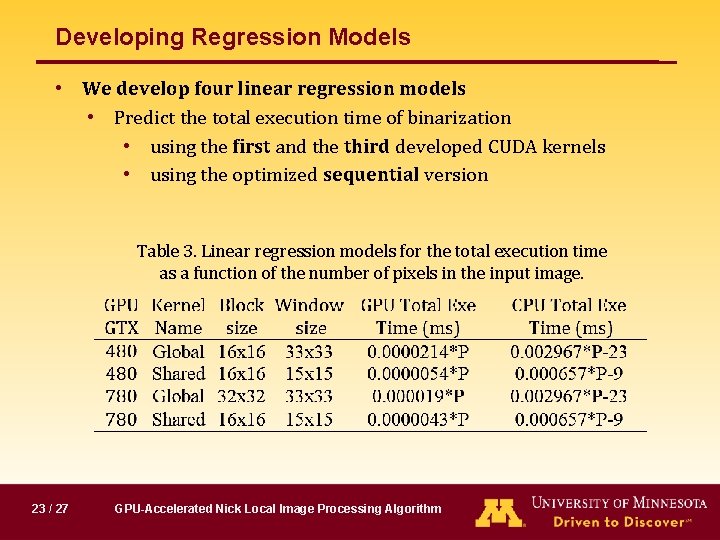

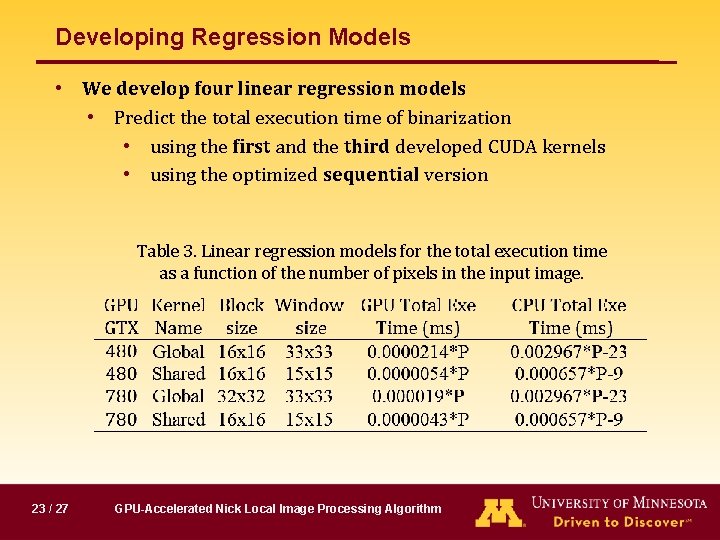

Developing Regression Models

- We develop four linear regression models
  - Predict the total execution time of binarization
    - using the first and the third developed CUDA kernels
    - using the optimized sequential version

Table 3. Linear regression models for the total execution time as a function of the number of pixels in the input image.
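
A model of this form, total time as a linear function of the pixel count, can be fitted with ordinary least squares. The sketch below uses made-up sample points, not the data behind Table 3. The function name `fit_line` is hypothetical, and the slides do not state which fitting procedure the authors used.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical measurements: number of pixels and total time in ms.
pixels = [6_000, 250_000, 1_000_000, 4_000_000, 10_000_000]
times = [0.4, 1.1, 3.2, 11.6, 28.4]
intercept, slope = fit_line(pixels, times)
print(f"time ~ {intercept:.3f} + {slope:.3e} * pixels")
```

In such a model the intercept captures the fixed cost per run (for example launch and setup overheads) and the slope captures the cost per pixel.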

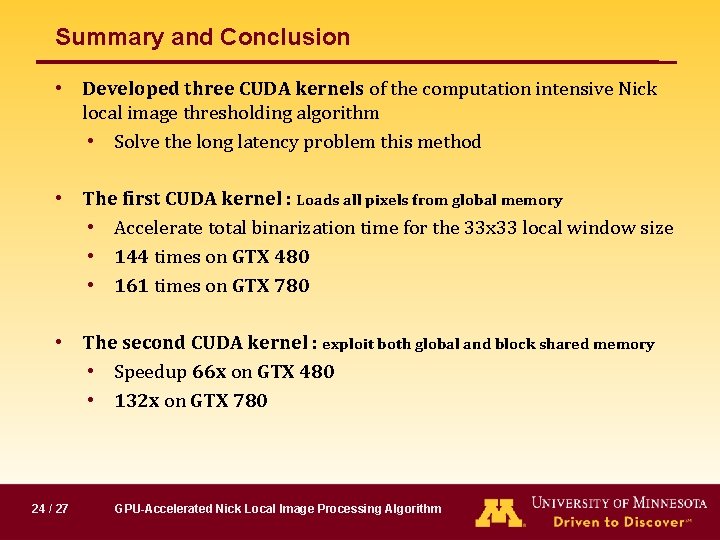

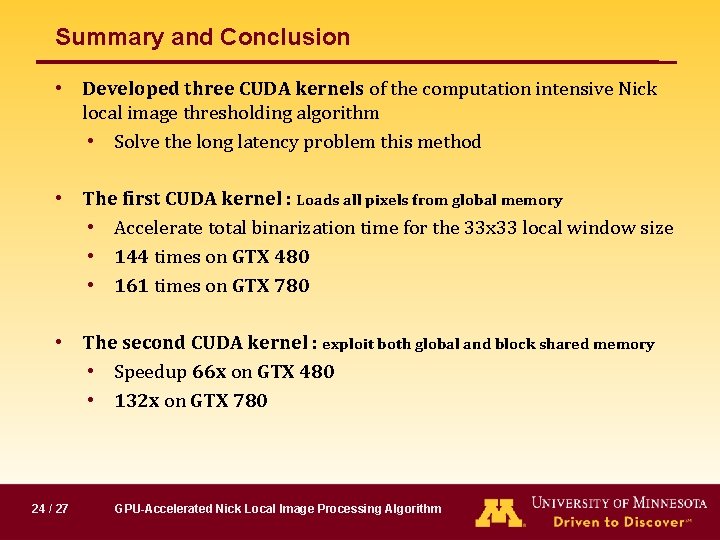

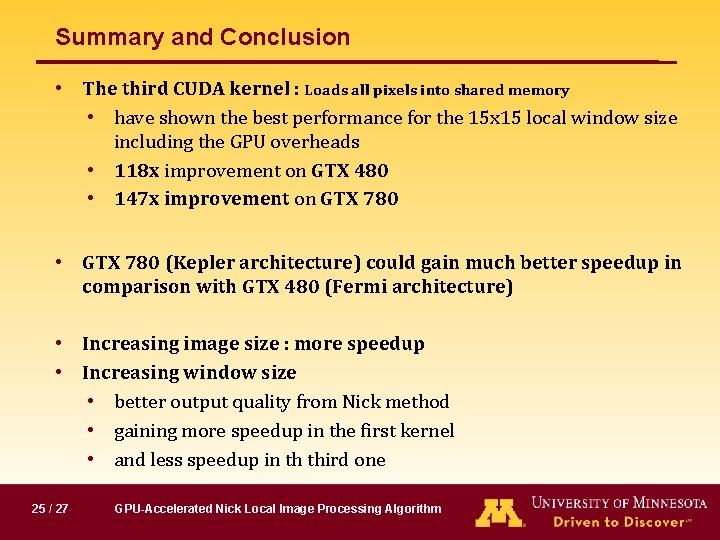

Summary and Conclusion

- Developed three CUDA kernels for the computation-intensive Nick local image thresholding algorithm
  - Solve the long-latency problem of this method
- The first CUDA kernel: loads all pixels from global memory
  - Accelerates total binarization time for the 33 x 33 local window size
    - 144 times on GTX 480
    - 161 times on GTX 780
- The second CUDA kernel: exploits both global and per-block shared memory
  - Speedup of 66x on GTX 480
  - 132x on GTX 780

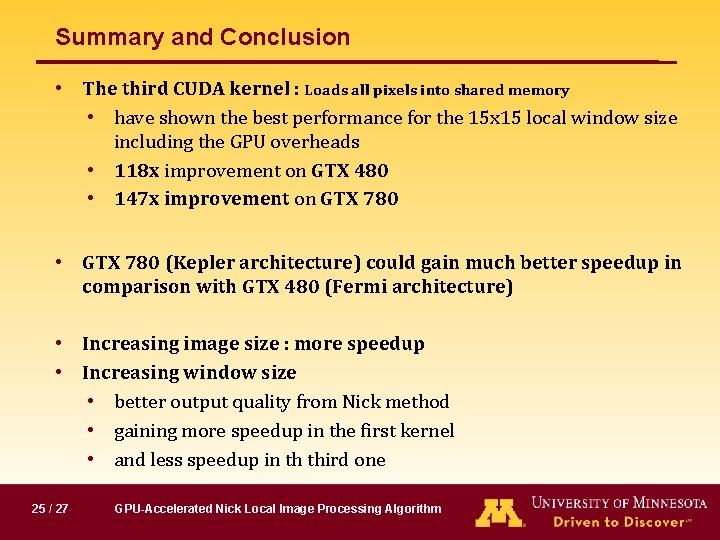

Summary and Conclusion

- The third CUDA kernel: loads all pixels into shared memory
  - Showed the best performance for the 15 x 15 local window size, including the GPU overheads
    - 118x improvement on GTX 480
    - 147x improvement on GTX 780
- GTX 780 (Kepler architecture) gains a much better speedup than GTX 480 (Fermi architecture)
- Increasing image size: more speedup
- Increasing window size
  - Better output quality from the Nick method
  - More speedup with the first kernel
  - Less speedup with the third one

![References slide](https://slidetodoc.com/presentation_image_h2/8fe3575125ba53aa0aca41acc1a2ee88/image-26.jpg)

References

- [1] B. Gatos, I. Pratikakis, and S. J. Perantonis, “Adaptive degraded document image binarization,” Pattern Recognit., vol. 39, no. 3, pp. 317–327, 2006.
- [2] F. Shafait, D. Keysers, and T. M. Breuel, “Efficient Implementation of Local Adaptive Thresholding Techniques Using Integral Images,” Doc. Recognit. Retr. XV, 2008.
- [3] K. Khurshid, I. Siddiqi, C. Faure, and N. Vincent, “Comparison of Niblack inspired binarization methods for ancient documents,” Proc. SPIE, vol. 7247, p. 72470U–9, 2009.
- [4] E. Zemouri, Y. Chibani, and Y. Brik, “Enhancement of Historical Document Images by Combining Global and Local Binarization Technique,” Int. J. Inf. Electron. Eng., vol. 4, no. 1, 2014.

Thank you

Questions?

najaf011@umn.edu